#AI-based decision systems

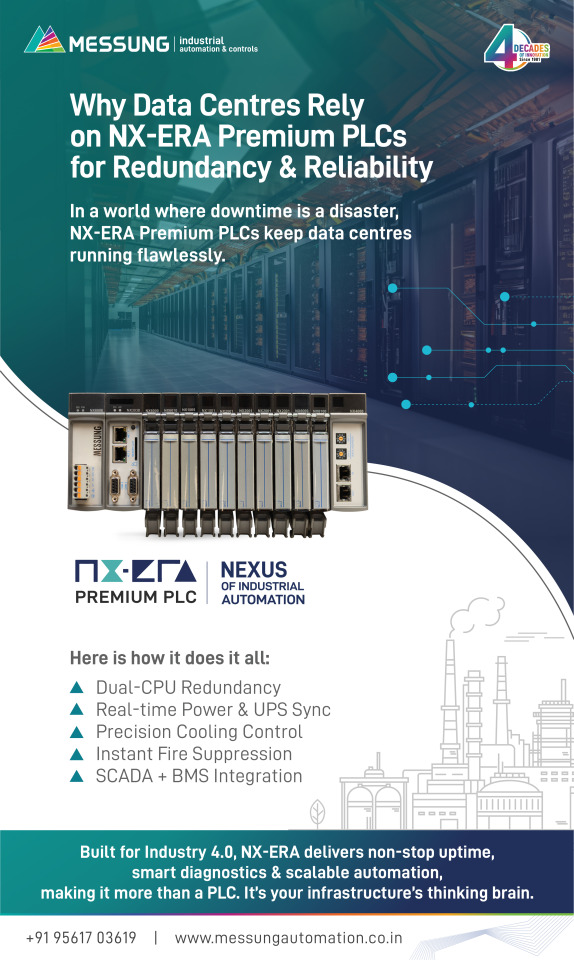

Why Data Centres Rely On NX-ERA Premium PLCs For Redundancy & Reliability

Data centres are the online headquarters of businesses today. Every click, transaction, login, or query is handled by a data centre. In this hyper-connected world, downtime is more than a slowdown; it's a disaster.

Whether they are hosting cloud applications, running finance systems, or managing mission-critical enterprise data, data centres simply can't afford a failure of control. That's why businesses invest in layers of redundancy, not only for power and cooling but also for the brain of their infrastructure: the Programmable Logic Controller (PLC). This is where Messung Industrial Automation's NX-ERA Premium PLCs offer a strategic advantage, designed to provide unparalleled modular scalability, PLC redundancy, and seamless SCADA integration for future-proof control. For more information about NX-ERA Premium PLCs, visit https://www.messungautomation.co.in/why-data-centres-rely-on-nx-era-premium-plcs-for-redundancy-reliability/

#data centres#Programmable Logic Controller (PLC)#PLC redundancy#SCADA integration#mini PLCs#Premium PLC#PLC controller#data centre automation#redundant PLC#high-speed PLC#modular PLC design#MODBUS TCP/IP#NX-ERA Premium PLCs#PLC architecture#PLC systems#Industry 4.0 PLC#AI-based decision systems

0 notes

BTech CSE: Your Gateway to High-Demand Tech Careers

Apply now for admission and avail the Early Bird Offer

In the digital age, a BTech in Computer Science & Engineering (CSE) is one of the most sought-after degrees, offering unmatched career opportunities across industries. From software development to artificial intelligence, the possibilities are endless for CSE graduates.

Top Job Opportunities for BTech CSE Graduates

Software Developer: Design and develop innovative applications and systems.

Data Scientist: Analyze big data to drive business decisions.

Cybersecurity Analyst: Safeguard organizations from digital threats.

AI/ML Engineer: Lead the way in artificial intelligence and machine learning.

Cloud Architect: Build and maintain cloud-based infrastructure for global organizations.

Why Choose Brainware University for BTech CSE?

Brainware University provides a cutting-edge curriculum, hands-on training, and access to industry-leading tools. Our dedicated placement cell ensures you’re job-ready, connecting you with top recruiters in tech.

👉 Early Bird Offer: Don’t wait! Enroll now and take the first step toward a high-paying, future-ready career in CSE.

Your journey to becoming a tech leader starts here!

#In the digital age#a BTech in Computer Science & Engineering (CSE) is one of the most sought-after degrees#offering unmatched career opportunities across industries. From software development to artificial intelligence#the possibilities are endless for CSE graduates.#Top Job Opportunities for BTech CSE Graduates#Software Developer: Design and develop innovative applications and systems.#Data Scientist: Analyze big data to drive business decisions.#Cybersecurity Analyst: Safeguard organizations from digital threats.#AI/ML Engineer: Lead the way in artificial intelligence and machine learning.#Cloud Architect: Build and maintain cloud-based infrastructure for global organizations.#Why Choose Brainware University for BTech CSE?#Brainware University provides a cutting-edge curriculum#hands-on training#and access to industry-leading tools. Our dedicated placement cell ensures you’re job-ready#connecting you with top recruiters in tech.#👉 Early Bird Offer: Don’t wait! Enroll now and take the first step toward a high-paying#future-ready career in CSE.#Your journey to becoming a tech leader starts here!#BTechCSE#BrainwareUniversity#TechCareers#SoftwareEngineering#AIJobs#EarlyBirdOffer#DataScience#FutureOfTech#Placements

1 note

Experience the transformative potential of Generative AI in Business Intelligence, unlocking actionable insights from your data.

#Advanced Analytics Tools#AI Predictive Analytics for Strategic Decision-Making#AI Predictive Analytics#AI-Based Forecasting Algorithms#AI-Based Forecasting#AI-Enabled Business Reporting Solutions#AI-Powered Data Visualization#Decision Support Systems with AI-Driven Insights#Generative AI in AI-Powered Business Intelligence#Generative AI in Business Intelligence Frameworks#Revolutionary Power of Generative AI

0 notes

Smart Insights: AI Interfaces Driving BI Evolution

In an ever-changing business arena, insight is one of the key assets, and intuition alone is not enough. Data analytics tools and advanced artificial intelligence models help companies make the right strategic choices. Traditionally, these tools depended on specialist experts to operate them, which confined their use mostly to large organizations with dedicated data science teams. The emergence of Generative AI interfaces is changing this traditional model: it democratizes access to advanced analytics and lets small companies adopt sophisticated analytics capabilities with unprecedented speed, leading to better decisions.

AI Predictive Analytics has become a game changer in strategic decision-making, because it lets businesses boost performance substantially by predicting even complex developments. AI-Based Forecasting Algorithms allow companies to act proactively, seizing emerging opportunities and mitigating risks. By doing so, companies gain a significant competitive advantage in their industries. However, the complexity of traditional analytics platforms has limited their adoption: operating them efficiently requires proper training, and expert guidance is often preferable.

Generative AI addresses exactly these two barriers, offering an innovative way to handle Business Intelligence (BI) problems by automating and simplifying the process. Unlike traditional BI solutions built around queries and static, fact-based reports, Generative AI interfaces use machine intelligence to generate immediate, relevant insights tailored to each user's needs and objectives. Automating tasks that previously required manual effort not only reduces human intervention but also helps users discover hidden patterns and correlations that human analysts might have overlooked.

The use of Generative AI in BI applications offers several benefits. First, it increases the scalability and accessibility of analytics solutions, allowing organizations to spread advanced analytics capabilities across departments and functions without requiring specialized knowledge. For example, AI-empowered business analytics software for finance or marketing teams can include AI-powered smart interfaces that let users derive actionable results with minimal training.

Additionally, combining machine learning, smart analytics, and generative AI marks a huge leap in AI-driven insight, allowing organizations to make informed decisions with confidence and clarity. These systems turn huge datasets into valuable patterns that amplify human decision-making, so executives can tackle uncertain strategic issues with strong analytical support. Whether the goal is AI-driven supply chain management, demand forecasting, or new revenue generation, Generative AI is helping businesses explore new frontiers in their data.

A key strength of Generative AI is that it can keep adapting and refining its models as it receives new instructions and data. This cycle of continuous learning directly improves the accuracy and reliability of AI-based forecasting, and it helps organizations stay nimble in a dynamic business environment. Companies that adopt generative AI as an effective, revolutionary tool are therefore better placed to secure their position in the future marketplace and keep pace with their competitors.

Beyond that, democratizing Generative AI for Business Intelligence takes innovation and entrepreneurship into a whole new dimension. It lowers the barrier to entry and equips individuals with advanced analytics tools that they can use not only to make data-driven decisions but also to experiment with new ideas. It is not restricted to a small group of large companies; it is open to any business that wants to use it, regardless of size. Whether it is a startup looking to disrupt an industry or a small business seeking to optimise its operations, Generative AI interfaces level the playing field, enabling organizations of every size to compete and succeed in the digital economy.

In conclusion, integrating Generative AI into Business Intelligence frameworks is a pivotal step toward unlocking the power of data to drive organizational growth and creativity. By democratizing advanced analytics tools and predictive AI analytics, businesses gain wide and faster access to advanced decision-making methods, whether that means uncovering hidden insights, making forecasts, or simply optimizing operations. Generative AI interfaces open a new horizon of opportunities that organizations need to catch up with. And because the full extent of this disruptive technology is yet to be discovered, the future looks highly promising.

#Advanced Analytics Tools#AI Predictive Analytics for Strategic Decision-Making#AI Predictive Analytics#AI-Based Forecasting Algorithms#AI-Based Forecasting#AI-Enabled Business Reporting Solutions#AI-Powered Data Visualization#Decision Support Systems with AI-Driven Insights#Generative AI in AI-Powered Business Intelligence#Generative AI in Business Intelligence Frameworks#Revolutionary Power of Generative AI

0 notes

The Robot Uprising Began in 1979

edit: based on a real article, but with a dash of satire

source: X

On January 25, 1979, Robert Williams became the first person (on record at least) to be killed by a robot, but it was far from the last fatality at the hands of a robotic system.

Williams was a 25-year-old employee at the Ford Motor Company casting plant in Flat Rock, Michigan. On that infamous day, he was working with a parts-retrieval system that moved castings and other materials from one part of the factory to another.

The robot identified the employee as in its way and, thus, a threat to its mission, and calculated that the most efficient way to eliminate the threat was to remove the worker with extreme prejudice.

"Using its very powerful hydraulic arm, the robot smashed the surprised worker into the operating machine, killing him instantly, after which it resumed its duties without further interference."

A news report about the legal battle suggests the killer robot continued working while Williams lay dead for 30 minutes until fellow workers realized what had happened.

Many more deaths of this ilk have continued to pile up. A 2023 study found that robots killed at least 41 people in the USA between 1992 and 2017, with almost half of the fatalities in the Midwest, a region bursting with heavy industry and manufacturing.

For now, the companies that own these murderbots are held responsible for their actions. However, as AI grows increasingly ubiquitous and potentially uncontrollable, how might robot murders become ever-more complicated, and whom will we hold responsible as their decision-making becomes more self-driven and opaque?

#tech history#robots#satire but based on real workplace safety issues#the robot uprising#killer robots#artificial intelligence#my screencaps

4K notes

[...] During the early stages of the war, the army gave sweeping approval for officers to adopt Lavender’s kill lists, with no requirement to thoroughly check why the machine made those choices or to examine the raw intelligence data on which they were based. One source stated that human personnel often served only as a “rubber stamp” for the machine’s decisions, adding that, normally, they would personally devote only about “20 seconds” to each target before authorizing a bombing — just to make sure the Lavender-marked target is male. This was despite knowing that the system makes what are regarded as “errors” in approximately 10 percent of cases, and is known to occasionally mark individuals who have merely a loose connection to militant groups, or no connection at all. Moreover, the Israeli army systematically attacked the targeted individuals while they were in their homes — usually at night while their whole families were present — rather than during the course of military activity. According to the sources, this was because, from what they regarded as an intelligence standpoint, it was easier to locate the individuals in their private houses. Additional automated systems, including one called “Where’s Daddy?” also revealed here for the first time, were used specifically to track the targeted individuals and carry out bombings when they had entered their family’s residences.

In case you didn't catch that: the IOF made an automated system that intentionally marks entire families as targets for bombings, and then they called it "Where's Daddy."

Like what is there even to say anymore? It's so depraved you almost think you have to be misreading it...

“We were not interested in killing [Hamas] operatives only when they were in a military building or engaged in a military activity,” A., an intelligence officer, told +972 and Local Call. “On the contrary, the IDF bombed them in homes without hesitation, as a first option. It’s much easier to bomb a family’s home. The system is built to look for them in these situations.” The Lavender machine joins another AI system, “The Gospel,” about which information was revealed in a previous investigation by +972 and Local Call in November 2023, as well as in the Israeli military’s own publications. A fundamental difference between the two systems is in the definition of the target: whereas The Gospel marks buildings and structures that the army claims militants operate from, Lavender marks people — and puts them on a kill list. In addition, according to the sources, when it came to targeting alleged junior militants marked by Lavender, the army preferred to only use unguided missiles, commonly known as “dumb” bombs (in contrast to “smart” precision bombs), which can destroy entire buildings on top of their occupants and cause significant casualties. “You don’t want to waste expensive bombs on unimportant people — it’s very expensive for the country and there’s a shortage [of those bombs],” said C., one of the intelligence officers. Another source said that they had personally authorized the bombing of “hundreds” of private homes of alleged junior operatives marked by Lavender, with many of these attacks killing civilians and entire families as “collateral damage.” In an unprecedented move, according to two of the sources, the army also decided during the first weeks of the war that, for every junior Hamas operative that Lavender marked, it was permissible to kill up to 15 or 20 civilians; in the past, the military did not authorize any “collateral damage” during assassinations of low-ranking militants. 
The sources added that, in the event that the target was a senior Hamas official with the rank of battalion or brigade commander, the army on several occasions authorized the killing of more than 100 civilians in the assassination of a single commander.

. . . continues on +972 Magazine (3 Apr 2024)

#free palestine#palestine#gaza#israel#ai warfare#this is only an excerpt i hope you'll at least skim through the rest of the piece#there's an entire section on the 'where's daddy' system#(seriously just typing the name out feels revolting)

3K notes

Why Data Centres Rely on NX-ERA Premium PLCs for Redundancy & Reliability

Data centres are the online headquarters of businesses today. Every click, transaction, login, or query is handled by a data centre. In this hyper-connected world, downtime is more than a slowdown; it's a disaster.

Whether they are hosting cloud applications, running finance systems, or managing mission-critical enterprise data, data centres simply can't afford a failure of control. That's why businesses invest in layers of redundancy, not only for power and cooling but also for the brain of their infrastructure: the Programmable Logic Controller (PLC). This is where Messung Industrial Automation's NX-ERA Premium PLCs offer a strategic advantage, designed to provide unparalleled modular scalability, PLC redundancy, and seamless SCADA integration for future-proof control.

The Challenge: When Control Systems Become the Weak Link

Power? Backed up. Cooling? Redundant. However, what about the control systems controlling these vital components?

Standard or mini PLCs lack the sophistication needed to meet the demands of mission-critical data centres. A single logic error or hardware malfunction can take down numerous systems, often resulting in SLA penalties, reputational damage, and financial loss.

What is required is a Premium PLC solution that guarantees:

High-speed, deterministic control

Hardware and logic level redundancy

Modern SCADA and BMS integration

Scalability as the data centre grows

The Solution: NX-ERA Premium PLCs, Control that Never Sleeps

Built for dependable, high-availability automation, NX-ERA offers much more than your average PLC controller. Let's break down what makes NX-ERA the top choice for data centre automation:

Built with Redundancy: Always On, Always Watching

Designed for PLC redundancy, NX-ERA runs on two CPUs, one primary and one hot-standby, which mirror each other in real time. If the primary unit fails because of power problems, software glitches, or hardware malfunction, the standby takes over immediately, with no downtime. This degree of PLC redundancy is essential for sustaining:

Continuous HVAC and precision cooling

Real-time UPS and power distribution management

Live fire detection and suppression procedures
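To make the hot-standby idea above concrete, here is a minimal Python sketch of how a primary/standby pair supervised by a heartbeat might behave. It is not Messung's implementation: the class names, the 50 ms heartbeat timeout, and the tag names are hypothetical assumptions chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Cpu:
    name: str
    state: dict = field(default_factory=dict)  # mirrored process image (tag -> value)


class HotStandbyPair:
    """Hypothetical primary/hot-standby pair supervised by a heartbeat timeout."""

    def __init__(self, timeout_s: float = 0.05) -> None:
        self.primary = Cpu("CPU-A")
        self.standby = Cpu("CPU-B")
        self.timeout_s = timeout_s   # assumed value, not an NX-ERA specification
        self.last_heartbeat = 0.0    # last time the standby heard from the primary

    def heartbeat(self, now: float, outputs: dict) -> None:
        """Primary completes a scan: apply outputs and mirror state to the standby."""
        self.primary.state.update(outputs)
        self.standby.state = dict(self.primary.state)  # real-time mirroring
        self.last_heartbeat = now

    def supervise(self, now: float) -> bool:
        """Standby checks the heartbeat; promote itself if the primary went silent."""
        if now - self.last_heartbeat > self.timeout_s:
            # The standby already holds the last mirrored state, so control
            # resumes from there. The failed CPU is demoted (in practice it
            # would be flagged as faulty until repaired).
            self.primary, self.standby = self.standby, self.primary
            self.last_heartbeat = now
            return True
        return False


pair = HotStandbyPair()
pair.heartbeat(0.00, {"chiller_1": True, "crac_setpoint_c": 22.0})
print(pair.supervise(0.03))   # False: still within the timeout
print(pair.supervise(0.10))   # True: 100 ms of silence triggers failover
print(pair.primary.name, pair.primary.state)
```

Real redundant controllers also have to synchronise I/O and timers and guard against both CPUs believing they are primary; this sketch deliberately ignores those issues.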

Integrated High-Speed PLC Performance

NX-ERA is a high-speed PLC with the processing power for complex, distributed systems. With event sequencing, real-time I/O updates, and deterministic execution, it ensures that each action is carried out with precision and accuracy. It's like having a control system that can anticipate trouble before it occurs.
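Event sequencing generally means time-stamping events at the I/O modules and reconstructing their exact order afterwards. A minimal sketch of that reconstruction step, assuming each module already produces time-ordered events stamped in microseconds on a synchronised clock (the module names and events are hypothetical):

```python
import heapq
from typing import Iterable, NamedTuple


class Event(NamedTuple):
    timestamp_us: int   # stamped at the I/O module, assumed microseconds on a synced clock
    source: str         # hypothetical module or channel name
    description: str


def merge_sequence_of_events(*module_logs: Iterable[Event]) -> list[Event]:
    """Merge per-module logs (each already time-ordered) into one chronological record."""
    return list(heapq.merge(*module_logs, key=lambda e: e.timestamp_us))


ups_log = [Event(1_000_120, "UPS-1", "mains lost"), Event(1_000_900, "UPS-1", "on battery")]
ats_log = [Event(1_000_450, "ATS-2", "transfer to generator")]
for e in merge_sequence_of_events(ups_log, ats_log):
    print(e.timestamp_us, e.source, e.description)
```

This prints the mains loss, the generator transfer, and the switch to battery in the order they actually happened, even though they were logged by different modules. `heapq.merge` is used because each input is already sorted, so no full re-sort is needed.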

Modular PLC Architecture for Changing Infrastructure

In contrast to fixed systems, NX-ERA has a modular PLC design that lets you add new elements, such as I/O racks, processors, or server room sensors, without reconfiguring or replacing the existing setup. This matters in contemporary data centres, where growth is ongoing.

Centralised Command Through SCADA Integration

NX-ERA enables smooth SCADA integration via MODBUS TCP/IP and lets you:

Monitor system health through central dashboards

Integrate with BMS and environmental management systems

Enable predictive maintenance and compliance monitoring

Configure event-based fire alarms with custom logic

This turns your PLC controller into the eyes and ears of your operation, reporting, responding, and recording in real time.
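The article says NX-ERA talks to SCADA over MODBUS TCP/IP but does not publish its register map. As an illustration of what that link carries, here is a minimal sketch of the standard framing for a "Read Holding Registers" (function 0x03) poll, the kind of request a SCADA master would typically send to TCP port 502. The register addresses and what the values mean are hypothetical assumptions.

```python
import struct

READ_HOLDING_REGISTERS = 0x03


def build_read_holding_registers(transaction_id: int, unit_id: int,
                                 start_address: int, quantity: int) -> bytes:
    """Build a Modbus TCP request frame for function 0x03 (Read Holding Registers)."""
    if not 1 <= quantity <= 125:
        raise ValueError("a single 0x03 request may read 1-125 registers")
    pdu = struct.pack(">BHH", READ_HOLDING_REGISTERS, start_address, quantity)
    # MBAP header: transaction id, protocol id (always 0 for Modbus),
    # length of the bytes that follow (unit id + PDU), unit id.
    mbap = struct.pack(">HHHB", transaction_id, 0, len(pdu) + 1, unit_id)
    return mbap + pdu


def parse_read_holding_registers(frame: bytes) -> list[int]:
    """Return the 16-bit register values from a 0x03 response frame."""
    _tid, _pid, _length, _unit, function = struct.unpack(">HHHBB", frame[:8])
    if function == READ_HOLDING_REGISTERS | 0x80:   # exception response
        raise RuntimeError(f"Modbus exception code {frame[8]}")
    byte_count = frame[8]
    return list(struct.unpack(f">{byte_count // 2}H", frame[9:9 + byte_count]))


request = build_read_holding_registers(transaction_id=1, unit_id=1,
                                       start_address=0, quantity=2)
print(request.hex())   # 000100000006010300000002
print(parse_read_holding_registers(bytes.fromhex("00010000000701030400dc0037")))  # [220, 55]
```

If the (hypothetical) device stored temperature in tenths of a degree, the reply above would read as 22.0 °C and 55 %RH. In a real deployment, the frame would travel over a TCP socket and the transaction id would be checked against the reply; a maintained Modbus client library is usually preferable to hand-rolled framing.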

NX-ERA in Action: Where It Delivers Inside a Data Centre

Contemporary data centres are precision ecosystems. Every subsystem (power, cooling, and fire protection) has to work in close unison. NX-ERA Premium PLCs are the invisible heroes, directing all these elements to work together precisely, in complete synchrony and without faults.

Let's observe how NX-ERA extends operational excellence to the most critical automation domains:

Precision Environmental & Cooling Management

Maintaining the right temperature and humidity is not just about extending equipment life; it's about performance reliability and data integrity. Even a slight deviation can cause server throttling, random shutdowns, or damage due to condensation. NX-ERA exercises close control over:

CRAC units and air handlers: Thermal hotspots are averted by continuous feedback loops and high-speed actuation.

Chilled water distribution and airflow systems: Adaptive logic keeps cooling in phase with current server loads.

Humidity control: Essential for preventing electrostatic discharge and protecting long-term hardware health.

What sets NX-ERA apart here is that it can execute environmental control logic with microsecond accuracy, supplemented by real-time data monitoring and logging for auditing, regulatory compliance, and AI-driven optimisation.
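The article mentions "continuous feedback loops" for cooling but doesn't say which control algorithm NX-ERA runs. A PI (proportional-integral) loop driving a chilled-water valve is a common choice for this kind of task, so here is a minimal sketch under that assumption. The gains, units, and setpoints are hypothetical and untuned.

```python
from dataclasses import dataclass


@dataclass
class PIController:
    """Discrete PI loop with output clamping and conditional-integration anti-windup."""
    kp: float                 # proportional gain (% output per °C)
    ki: float                 # integral gain (% output per °C·s)
    out_min: float = 0.0      # valve fully closed, %
    out_max: float = 100.0    # valve fully open, %
    integral: float = 0.0     # accumulated error·time

    def update(self, setpoint: float, measured: float, dt: float) -> float:
        # Cooling is reverse-acting: air warmer than setpoint needs MORE valve opening.
        error = measured - setpoint
        candidate = self.integral + error * dt
        output = self.kp * error + self.ki * candidate
        if self.out_min < output < self.out_max:
            self.integral = candidate   # integrate only while not saturated
        return min(max(output, self.out_min), self.out_max)


loop = PIController(kp=8.0, ki=0.5)          # hypothetical, untuned gains
print(loop.update(setpoint=24.0, measured=26.5, dt=1.0))   # 21.25
print(loop.update(setpoint=24.0, measured=25.0, dt=1.0))   # 9.75
```

Freezing the integral while the valve is pinned fully open or closed (anti-windup) keeps the loop from overshooting once a hotspot clears, which is one reason this pattern is popular for HVAC loops.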

UPS Control & Power Synchronisation

Uninterruptible Power Supply (UPS) systems are only as good as the logic behind them. NX-ERA acts as a coordinator, synchronising multiple PDUs, UPS systems, and switchgear units. It ensures:

Seamless power source transitions during outages or load fluctuations

Real-time load balancing to avoid overloads and maintain energy efficiency

Phase synchronisation for harmonised energy supply between server halls

Where basic PLCs can lag or lose a beat during transitions, NX-ERA's high-speed PLC architecture anticipates power shifts and actively synchronises systems to absorb the impact. This is critical in Tier III and Tier IV data centres, where downtime is not an option.
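The article says NX-ERA performs real-time load balancing across UPS units but doesn't describe the policy. One simple policy, assumed here for illustration, is to share load in proportion to each module's rating and to check whether the system would survive losing any single module (N+1). The module names and ratings are hypothetical.

```python
def share_load(load_kw: float, ratings_kw: dict[str, float]) -> dict[str, float]:
    """Split a load across UPS modules in proportion to each module's rating."""
    total = sum(ratings_kw.values())
    if load_kw > total:
        raise ValueError(f"load {load_kw} kW exceeds total capacity {total} kW")
    return {name: load_kw * rating / total for name, rating in ratings_kw.items()}


def survives_single_failure(load_kw: float, ratings_kw: dict[str, float]) -> bool:
    """N+1 check: could the remaining modules still carry the load if any one trips?"""
    total = sum(ratings_kw.values())
    return all(load_kw <= total - rating for rating in ratings_kw.values())


ratings = {"UPS-A": 500.0, "UPS-B": 500.0, "UPS-C": 250.0}   # hypothetical modules
print(share_load(900.0, ratings))               # {'UPS-A': 360.0, 'UPS-B': 360.0, 'UPS-C': 180.0}
print(survives_single_failure(900.0, ratings))  # False: losing a 500 kW unit leaves 750 kW
print(survives_single_failure(700.0, ratings))  # True
```

A controller could run the N+1 check continuously and raise an alarm before load growth quietly erodes redundancy, rather than discovering it during an outage.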

Fire Detection & Suppression Integration

When safety is non-negotiable, response time is paramount. NX-ERA integrates real-time fire detection with intelligent logic chains that coordinate:

Smoke, temperature, and gas detector sensor integration

Audio-visual alarm signalling

Release logic for chemical or inert gas extinguishing agents

Crucially, NX-ERA performs these life-safety functions without affecting other automation tasks. Fire suppression may be triggered in one area while cooling, power, and access control systems continue uninterrupted in other zones.
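The source doesn't describe how NX-ERA structures its fire logic. The sketch below assumes a common pattern: each zone keeps its own latched state, one detector raises the alarm, and two independent detectors (so-called cross-zoning, an assumption here) are required before agent release. Because each zone only updates its own fields, a release in one hall leaves the others untouched, which is the isolation described above. Zone and detector names are hypothetical, and real suppression systems also need pre-discharge delays and abort switches that this toy omits.

```python
from dataclasses import dataclass, field


@dataclass
class FireZone:
    """One suppression zone; its logic never touches any other zone's state."""
    name: str
    active_detectors: set = field(default_factory=set)
    alarm: bool = False          # latched until manual reset
    release: bool = False        # latched: agent release command
    hvac_enabled: bool = True    # local dampers/fans for this zone only

    def detector(self, detector_id: str, active: bool) -> None:
        """Update one detector input and re-evaluate this zone."""
        if active:
            self.active_detectors.add(detector_id)
        else:
            self.active_detectors.discard(detector_id)
        if self.active_detectors:
            self.alarm = True    # first detector: audio-visual alarm
        if len(self.active_detectors) >= 2:
            # Assumed cross-zoned confirmation: two independent detectors
            # before shutting local HVAC and releasing the agent.
            self.hvac_enabled = False
            self.release = True


zones = {name: FireZone(name) for name in ("hall-1", "hall-2")}
zones["hall-1"].detector("smoke-03", True)
zones["hall-1"].detector("heat-07", True)
for z in zones.values():
    print(z.name, z.alarm, z.release, z.hvac_enabled)
```

This prints `hall-1 True True False` and `hall-2 False False True`: hall-1 has released its agent and shut its local HVAC, while hall-2 keeps running normally.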

Shutdown-Free Maintenance: Redundancy in Action

All PLC systems will eventually need maintenance, but taking down operations to make it happen? That's a luxury no contemporary data centre can afford. NX-ERA avoids this inconvenience with live-switching redundancy:

You can upgrade firmware, replace I/O modules, or perform diagnostics on the main PLC.

In the meantime, the secondary PLC continues the automation uninterrupted.

After maintenance is finished, the roles reverse, without any effect on operations.

This is redundancy in everyday operations, not just on paper. It's how redundant PLC logic makes scheduled downtime a non-event.
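A planned switchover differs from a failover: the handover is requested deliberately and should only happen once the standby is confirmed to be in sync. Here is a minimal sketch of that procedure, assuming "in sync" simply means the mirrored state matches; the function names and tags are hypothetical and not part of any NX-ERA API.

```python
from dataclasses import dataclass, field


@dataclass
class Cpu:
    name: str
    state: dict = field(default_factory=dict)  # mirrored process image
    in_service: bool = True


def planned_switchover(primary: Cpu, standby: Cpu) -> tuple[Cpu, Cpu]:
    """Hand control to the standby so the primary can be serviced.

    Refuses if the standby is out of service or its mirrored state differs
    from the primary's (assumed precondition for a bumpless transfer).
    """
    if not standby.in_service:
        raise RuntimeError(f"{standby.name} is not available to take over")
    if standby.state != primary.state:
        raise RuntimeError(f"{standby.name} is not synchronised; aborting")
    primary.in_service = False          # take the old primary offline for work
    return standby, primary             # (new primary, unit under maintenance)


def return_to_service(serviced: Cpu, primary: Cpu) -> Cpu:
    """After maintenance, resync the serviced CPU and make it the new standby."""
    serviced.state = dict(primary.state)
    serviced.in_service = True
    return serviced


a = Cpu("CPU-A", {"ups_mode": "online"})
b = Cpu("CPU-B", {"ups_mode": "online"})
primary, serviced = planned_switchover(a, b)   # CPU-B now runs the plant
# ... firmware upgrade or module replacement on CPU-A happens here ...
standby = return_to_service(serviced, primary)
print(primary.name, standby.name)              # CPU-B CPU-A
```

To reverse the roles afterwards, call `planned_switchover(primary, standby)` again once the serviced unit has resynchronised.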

Business Benefits Beyond Engineering Specs

NX-ERA is more than just an upgrade to technology; it is an enabler for business. Here is how it immediately impacts operational and financial KPIs:

Uptime Confidence: Meets even the strictest SLAs with real-world dependability, minimising risk of penalties and guaranteeing service continuity.

Cost Efficiency: Avoids the enormous cost of downtime (lost business, manual intervention, and system recovery) and optimises energy usage with intelligent logic.

Compliance-Ready: NX-ERA's thorough logging, audit trails, and environmental data snapshots make it simpler to comply with ISO, ASHRAE, and Tier certification requirements.

Future-Proofing: Built with Industry 4.0 PLC capabilities, such as remote access, analytics, and diagnostics, that grow smarter along with your infrastructure.

This is intelligent automation delivering tangible return on investment, not in years, but in months.

The Road Ahead: Industry 4.0 Compliant, Future-Ready

NX-ERA is designed for the future, anticipating the needs of tomorrow's data centre architecture today.

Designed for edge computing applications where distributed processing is paramount

AI-enabled smart analytics tools for performance optimisation

Remote diagnostics and control, allowing for predictive maintenance from anywhere

Integrates seamlessly with AI-based decision systems, positioning your business for the next decade

As the industry shifts towards smart control systems, NX-ERA is leading the way: not just prepared, but already ahead.

Conclusion: Trust NX-ERA for Control that Never Compromises

Trust drives data centres. Trust that each byte is secure. Trust that there's uninterrupted uptime. Trust that operations persist, no matter what. NX-ERA Premium PLCs are designed for this trust.

When time matters, every piece of control logic matters. And that's where NX-ERA sets the pace: redundant PLCs, real-time SCADA integration, and modular PLC architecture for the intricacies of the future. We don't merely create control systems at Messung Industrial Automation. We create trust.

FAQs

How is NX-ERA different from standard PLC controllers?

NX-ERA is a Premium PLC built for high-availability environments. Unlike basic or micro-PLCs, it supports redundant configurations, modular scalability, and advanced SCADA integration.

How does NX-ERA's PLC redundancy work?

NX-ERA has dual CPUs (main and standby). If the main CPU fails, the standby takes over at once, with zero downtime or loss of data.

Is NX-ERA compatible with current data centre infrastructure?

Yes. With MODBUS TCP/IP support and SCADA-ready capabilities, NX-ERA integrates readily with existing control systems and BMS platforms.

Can NX-ERA support both small and large-scale data centres?

Yes. Its modular PLC design allows for effortless scaling, from small server rooms to multi-hall Tier IV data centres.

Does NX-ERA support Industry 4.0 PLC features?

Yes. It offers remote access, diagnostics, data logging, and intelligent analytics capabilities, and is therefore fully Industry 4.0-compatible.

#data centres#Programmable Logic Controller (PLC)#PLC redundancy#SCADA integration#mini PLCs#Premium PLC#PLC controller#data centre automation#redundant PLC#high-speed PLC#modular PLC design#MODBUS TCP/IP#NX-ERA Premium PLCs#PLC architecture#PLC systems#Industry 4.0 PLC#AI-based decision systems

0 notes

"The first satellite in a constellation designed specifically to locate wildfires early and precisely anywhere on the planet has now reached Earth's orbit, and it could forever change how we tackle unplanned infernos.

The FireSat constellation, which will consist of more than 50 satellites when it goes live, is the first of its kind that's purpose-built to detect and track fires. It's an initiative launched by nonprofit Earth Fire Alliance, which includes Google and Silicon Valley-based space services startup Muon Space as partners, among others.

According to Google, current satellite systems rely on low-resolution imagery and cover a particular area only once every 12 hours to spot significantly large wildfires spanning a couple of acres. FireSat, on the other hand, will be able to detect wildfires as small as 270 sq ft (25 sq m) – the size of a classroom – and deliver high-resolution visual updates every 20 minutes.

The FireSat project has only been in the works for less than a year and a half. The satellites are fitted with custom six-band multispectral infrared cameras, designed to capture imagery suitable for machine learning algorithms to accurately identify wildfires – differentiating them from misleading objects like smokestacks.

These algorithms look at an image from a particular location, and compare it with the last 1,000 times it was captured by the satellite's camera to determine if what it's seeing is indeed a wildfire. AI technology in the FireSat system also helps predict how a fire might spread; that can help firefighters make better decisions about how to control the flames safely and effectively.
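The quoted article says FireSat compares each image with the last 1,000 captures of the same spot, using machine-learning models whose details aren't given. The sketch below swaps in a much simpler, plainly non-ML technique (a per-pixel z-score against the historical stack) purely to illustrate why comparing against history filters out permanently hot objects like smokestacks. The grid layout, brightness units, and threshold are assumptions.

```python
from statistics import fmean, pstdev

Grid = list[list[float]]   # one co-registered image: rows x columns of brightness values


def flag_new_hotspots(current: Grid, history: list[Grid],
                      z_threshold: float = 5.0) -> list[tuple[int, int]]:
    """Return (row, col) pixels that are far hotter than their own history."""
    flagged = []
    for r, row in enumerate(current):
        for c, value in enumerate(row):
            past = [image[r][c] for image in history]    # e.g. the last 1,000 captures
            mean, std = fmean(past), pstdev(past)
            z = (value - mean) / max(std, 1e-6)          # guard against zero spread
            if z > z_threshold:
                flagged.append((r, c))
    return flagged


# Toy 2x2 scene: pixel (0, 1) is a smokestack that is hot in every past image.
noise = [1.0 if k % 2 else -1.0 for k in range(1000)]
history = [[[300 + n, 500 + n], [300 + n, 300 + n]] for n in noise]
current = [[300.5, 500.5], [300.5, 360.0]]          # pixel (1, 1) is newly hot
print(flag_new_hotspots(current, history))          # [(1, 1)]
```

The smokestack is the hottest pixel in the scene, yet it is not flagged, because it is always that hot; only the pixel that departs sharply from its own record is reported.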

This could go a long way towards preventing the immense destruction of forest habitats and urban areas, and the displacement of residents caused by wildfires each year. For reference, the deadly wildfires that raged across Los Angeles in January were estimated to have caused more than $250 billion in damages.

Muon is currently developing three more satellites, which are set to launch next year. The entire constellation should be in orbit by 2030.

The FireSat effort isn't the only project to watch for wildfires from orbit. OroraTech launched its first wildfire-detection satellite – FOREST-1 – in 2022, followed by one more in 2023 and another earlier this year. The company tells us that another eight are due to go up toward the end of March."

-via March 18, 2025

#wildfire#wildfires#la wildfires#los angeles#ai#artificial intelligence#machine learning#satellite#natural disasters#good news#hope

723 notes

A new investigation by +972 Magazine and Local Call reveals that the Israeli army has developed an artificial intelligence-based program known as “Lavender,” unveiled here for the first time. According to six Israeli intelligence officers, who have all served in the army during the current war on the Gaza Strip and had first-hand involvement with the use of AI to generate targets for assassination, Lavender has played a central role in the unprecedented bombing of Palestinians, especially during the early stages of the war. In fact, according to the sources, its influence on the military’s operations was such that they essentially treated the outputs of the AI machine “as if it were a human decision.”

During the early stages of the war, the army gave sweeping approval for officers to adopt Lavender’s kill lists, with no requirement to thoroughly check why the machine made those choices or to examine the raw intelligence data on which they were based. One source stated that human personnel often served only as a “rubber stamp” for the machine’s decisions, adding that, normally, they would personally devote only about “20 seconds” to each target before authorizing a bombing — just to make sure the Lavender-marked target is male. This was despite knowing that the system makes what are regarded as “errors” in approximately 10 percent of cases, and is known to occasionally mark individuals who have merely a loose connection to militant groups, or no connection at all. Moreover, the Israeli army systematically attacked the targeted individuals while they were in their homes — usually at night while their whole families were present — rather than during the course of military activity. According to the sources, this was because, from what they regarded as an intelligence standpoint, it was easier to locate the individuals in their private houses. Additional automated systems, including one called “Where’s Daddy?” also revealed here for the first time, were used specifically to track the targeted individuals and carry out bombings when they had entered their family’s residences.

The Lavender machine joins another AI system, “The Gospel,” about which information was revealed in a previous investigation by +972 and Local Call in November 2023, as well as in the Israeli military’s own publications. A fundamental difference between the two systems is in the definition of the target: whereas The Gospel marks buildings and structures that the army claims militants operate from, Lavender marks people — and puts them on a kill list. In addition, according to the sources, when it came to targeting alleged junior militants marked by Lavender, the army preferred to only use unguided missiles, commonly known as “dumb” bombs (in contrast to “smart” precision bombs), which can destroy entire buildings on top of their occupants and cause significant casualties. “You don’t want to waste expensive bombs on unimportant people — it’s very expensive for the country and there’s a shortage [of those bombs],” said C., one of the intelligence officers. Another source said that they had personally authorized the bombing of “hundreds” of private homes of alleged junior operatives marked by Lavender, with many of these attacks killing civilians and entire families as “collateral damage.”

Remember, the Israeli occupation government considers all men over the age of 16 to be Hamas operatives hence why they've claimed to have killed over 9,000 of them (which matches the number of Palestinian men killed according to the Ministry of Health). So, when the article speaks of 'low level' or 'high level militants' they're likely speaking of civilians.

If Israel knew who Hamas fighters are, Oct 7th wouldn't have caught them off guard and they wouldn't still be fighting the Palestinian resistance every single day.

#yemen#jerusalem#tel aviv#current events#palestine#free palestine#gaza#free gaza#news on gaza#palestine news#news update#war news#war on gaza#war crimes#gaza genocide#genocide#artificial intelligence#ai#long post

845 notes


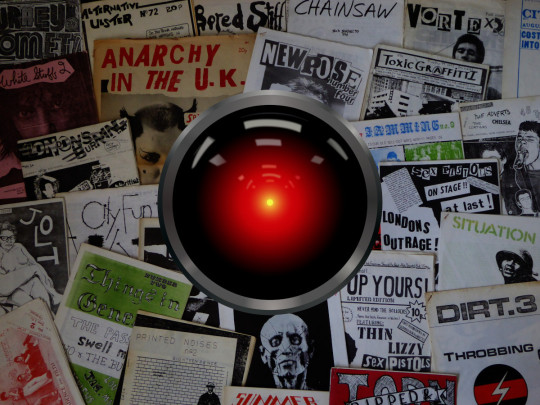

AI art has no anti-cooption immune system

TONIGHT (July 20), I'm appearing in CHICAGO at Exile in Bookville.

One thing Myspace had going for it: it was exuberantly ugly. The decision to let users with no design training loose on a highly customizable user-interface led to a proliferation of Myspace pages that vibrated with personality.

The ugliness of Myspace wasn't just exciting in a kind of outsider/folk-art way (though it was that). Myspace's ugliness was an anti-cooption force-field, because corporate designers and art-directors would, by and large, rather break their fingers and gouge out their eyes than produce pages that looked like that.

In this regard, Myspace was the heir to successive generations of "design democratization" that gave amateur communities, especially countercultural ones, a space to operate in where authentic community members could be easily distinguished from parasitic commercializers.

The immediate predecessors to Myspace's ugliness-as-a-feature were the web, and desktop publishing. Between the img tag, imagemaps, the blink tag, animated GIFs, and the million ways that you could weird a page with tables and padding, the early web was positively bursting with individual personality. The early web balanced in an equilibrium between the plunder-friendliness of "view source" and the topsy-turvy design imperatives of web-based layout, which confounded both print designers (no fixed fonts! RGB colorspaces! dithering!) and even multimedia designers who'd cut their teeth on Hypercard and CD ROMs (no fixed layout!).

Before the web came desktop publishing, the million tractor-feed ransom notes combining Broderbund Print Shop fonts, joystick-edited pixel-art, and a cohort of enthusiasts ranging from punk zinesters to community newsletter publishers. As this work proliferated on coffee-shop counters and telephone poles, it was visibly, obviously distinct from the work produced by "real" designers – that is, designers who'd been a) trained and b) paid by a corporation to employ that training.

All of this matters, and not just for aesthetic reasons. Communities – especially countercultural ones – are where our society's creative ferment starts. Getting your start in the trenches of the counterculture wars is no proof against being co-opted later (indeed, many of the designers who cut their teeth desktop publishing weird zines went on to pull their hair and roll their eyes at the incredible fuggliness of the web). But without that zone of noncommercial, antiestablishment, communitarian low weirdness, design and culture would stagnate.

I started thinking about this 25 years ago, the first time I met William Gibson. I'd been assigned by the Globe and Mail to interview him for the launch of All Tomorrow's Parties:

https://craphound.com/nonfic/transcript.html

One of the questions I asked was about his famous aphorism, "The street finds its own use for things." Given how quickly each post-punk tendency had been absorbed by commercial culture, couldn't we say that "Madison Avenue finds its own use for the street"? His answer started me down a quarter-century of thinking and writing about this subject:

I worry about what we'll do in the future, [about the instantaneous co-opting of pop culture]. Where is our new stuff going to come from? What we're doing pop culturally is like burning the rain forest. The biodiversity of pop culture is really, really in danger. I didn't see it coming until a few years ago, but looking back it's very apparent.

I watch a sort of primitive form of the recommodification machine around my friends and myself in sixties, and it took about two years for this clumsy mechanism to get and try to sell us The Monkees.

In 1977, it took about eight months for a slightly faster more refined mechanism to put punk in the window of Holt Renfrew. It's gotten faster ever since. The scene in Seattle that Nirvana came from: as soon as it had a label, it was on the runways of Paris.

Ugliness, transgressiveness and shock all represent an incoherent, grasping attempt to keep the world out of your demimonde – not just normies and squares, but also and especially enthusiastic marketers who want to figure out how to sell stuff to you, and use you to sell stuff to normies and squares.

I think this is what drove a lot of people to 4chan (remember, before 4chan was famous for incubating neofascism, it was the birthplace of Anonymous): its shock culture, combined with a strong cultural norm of anonymity, made for a difficult-to-digest, thoroughly spiky morsel that resisted recommodification (for a while).

All of this brings me to AI art (or AI "art"). In his essay on the "eerieness" of AI art, Henry Farrell quotes Mark Fisher's "The Weird and the Eerie":

https://www.programmablemutter.com/p/large-language-models-are-uncanny

"Eeriness" here is defined as "when there is something present where there should be nothing, or there is nothing present when there should be something." AI is eerie because it produces the seeming of intent, without any intender:

https://pluralistic.net/2024/05/13/spooky-action-at-a-close-up/#invisible-hand

When we contemplate "authentic" countercultural work – ransom-note DTP, the weird old web, seizure-inducing Myspace GIFs – it is arresting because the personality of the human entity responsible for it shines through. We might be able to recognize where that person ganked their source-viewed HTML or pixel-optimized GIF, but we can also make inferences about the emotional meaning of those choices. To see that work is to connect to a mind. That mind might not necessarily belong to someone you want to be friends with or ever meet in person, but it is unmistakably another person, and you can't help but learn something about yourself from the way that their work makes you feel.

This is why corporate work is so often called "soulless." The point of corporate art is to dress the artificial person of the corporation in the stolen skins of the humans it uses as its substrate. Corporations are potentially immortal, artificial colony organisms. They maintain the pretense of personality, but they have no mind, only action that is the crescendo of an orchestra of improvised instruments played by hundreds or thousands of employees and a handful of executives who are often working directly against one another:

https://locusmag.com/2022/03/cory-doctorow-vertically-challenged/

The corporation is – as Charlie Stross has it – the "slow AI" that is slowly converting our planet to the long-prophesied grey goo (or, more prosaically, wildfire ashes and boiled oceans). The real thing that is signified by CEOs' professed fears of runaway AI is runaway corporations. As Ted Chiang says, the experience of being nominally in charge of a corporation that refuses to do what you tell it to is the kind of thing that will give you nightmares about autonomous AI turning on its masters:

https://pluralistic.net/2023/03/09/autocomplete-worshippers/#the-real-ai-was-the-corporations-that-we-fought-along-the-way

The job of corporate designers is to find the signifiers of authenticity and dress up the corporate entity's robotic imperatives in this stolen flesh. Everything about AI is done in service to this goal: the chatbots that replace customer service reps are meant to both perfectly mimic a real, competent corporate representative while also hewing perfectly to corporate policy, without ever betraying the real human frailties that none of us can escape.

In the same way, the shillbots that pretend to be corporate superfans online are supposed to perfectly amplify the corporate message, the slow AI's conception of its own virtues, without injecting their own off-script, potentially cringey enthusiasms.

The Hollywood writers' strike was, at root, about the studio execs' dream that they could convert the "insights" of focus groups and audience research into a perfect script, without having to go through a phalanx of lippy screenwriters who insisted on explaining why they think your idea is stupid. "Hey, nerd, make me another ET, except make the hero a dog, and set it on Mars" is exactly how you prompt an AI:

https://pluralistic.net/2023/08/20/everything-made-by-an-ai-is-in-the-public-domain/

Corporate design's job is to produce the seeming of intention without any intender. The "personality" we're meant to sense when we encounter corporate design isn't the designer's, nor the art director's, nor even the CEO's. The "personality" is meant to be the slow AI's, but a corporation doesn't have a personality.

In his 2018 short story "Noon in the antilibrary," Karl Schroeder describes an "antilibrary" as an endlessly deep anaerobic lagoon of generative botshit:

https://www.technologyreview.com/2018/08/18/104097/noon-in-the-antilibrary/

The antilibrary is a generative AI system that can produce entire librarys’-worth of fake books with fake authors, fake citations by other fake experts with their own fake books and biographies and fake social media accounts, on-demand and instantly. It was speculation in 2018; it’s possible now. Creating an antilibrary is just a matter of investing in a sufficient number of graphics cards and electricity.

https://kschroeder.substack.com/p/after-the-internet

Reading Karl's reflections on the antilibrary crystallized something for me that I've been thinking about for a quarter-century, since I interviewed Gibson at the Penguin offices in north Toronto. It snapped something into place that I've been trying to fit since encountering Henry's thoughts on the "eeriness" of AI work and the intent without an intender.

It made me realize why I dislike AI art so much, on a deep, aesthetic level. The point of an image generator is to buffer the intention of the prompter (which might be genuinely creative and bursting with personality) in layers of automated decision-making that flense the final product of any hint of the mind that caused its creation.

The most febrile, deeply weird and authentic prompts of the most excluded outsiders produce images that feel the same as the corporate AI illustrations that project the illusion of personality from the immortal, transhuman colony organism that is the limited liability corporation.

AI art is born coopted. Even the 4chan equivalent of AI – the deeply transgressive and immoral nonconsensual pornography – feels no different from the "official" AI porn churned out by "real" pornographers. "Shrimp Jesus" and other SEO-optimized Facebook slop is so uncanny because it is simultaneously "weird" ("that which does not belong") and yet it belongs in the same aesthetic bucket as the most anodyne Corporate Memphis ephemera:

https://en.wikipedia.org/wiki/Corporate_Memphis

We call it "generative" but AI art can't generate the kind of turnover that aerates the aesthetic soil. An artform that can't be transgressive is sterile, stillborn, a dead end.

Support me this summer on the Clarion Write-A-Thon and help raise money for the Clarion Science Fiction and Fantasy Writers' Workshop!

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/07/20/ransom-note-force-field/#antilibraries

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

--

Jake (modified) https://commons.wikimedia.org/wiki/File:1970s_fanzines_(21224199545).jpg

CC BY 2.0 https://creativecommons.org/licenses/by/2.0/deed.en

677 notes


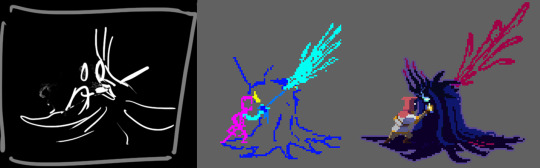

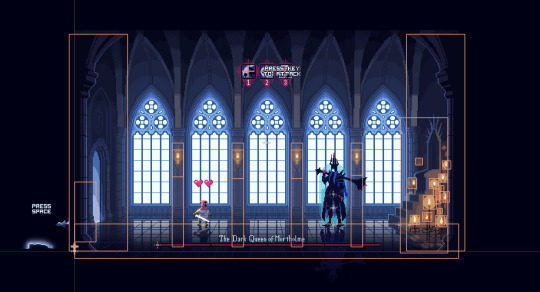

Mortholme Post-Mortem

The Dark Queen of Mortholme has been out for two weeks, and I've just been given an excellent excuse to write some more about its creation by a lengthy anonymous ask.

Under the cut, hindsight on the year spent making Mortholme and answers to questions about game dev, grouped under the following topics:

Time spent on development, Programming, Obstacles, Godot, Animation, Pixel art, Environment assets, Writing, Completion, Release

Regarding time spent on development

Nope, I’ve got no idea anymore how long I spent on Mortholme. It took a year but during that time I worked on like two other games and whatever else. And although I started with the art, I worked on all parts simultaneously to avoid getting bored. This is what I can say:

Art took a ridiculous amount of time, but that was by choice (or compulsion, one might say). I get very excitable and particular about it. At most I was making about one or two Hero animations in a day (for a total of 8 + upgraded versions), but anything involving the Queen took multiple times longer. When I made the executive decision that her final form was going to have a bazillion tentacles, I gave up on scheduling altogether.

Coding went quickly at the start when I was knocking out a feature after another, until it became the ultimate slow-burn hurdle at the end. Testing, bugfixing, and playing Jenga with increasingly unwieldy code kept oozing from one week to the next. For months, probably? My memory’s shot but I have a mark on my calendar on the 18th of August that says “Mortholme done”. Must’ve been some optimistic deadline before the ooze.

Writing happened in extremely productive week-long bursts followed by nothing but nitpicky editing while I focused on other stuff. Winner in the “changed most often” category, for sure.

Sound was straightforward: after finishing a new set of animations, I spent a day or two recording and editing SFX for them. For music I originally scheduled two weeks, but hubris and a desire for more variants bumped it to about a month.

Regarding programming

The Hero AI is certainly the part that I spent most of my coding time on. The basic way the guaranteed dodging works is that all the Queen’s attacks send a signal to the Hero, who calculates a “danger zone” based on the type of attack and the Queen’s location. Then, if the Hero is able to dodge that particular attack (a probability based on how much it's been used & story progression), they run a function to dodge it.

Each attack has its own algorithm that produces the best safe target position to go to based on the Hero’s current position (and other necessary actions like jumping). Those algorithms needed a whole lot of testing to code counters for all the scenarios that might trip the Hero up.
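The signal-driven dodge described in the two paragraphs above can be sketched roughly as follows. This is not the game's code (Mortholme is a Godot project, so the real thing would live in GDScript); it is a minimal Python sketch that assumes a one-dimensional arena, and the attack names, reach values, arena bounds, safety margin and dodge-probability formula are all hypothetical placeholders.

```python
import random
from dataclasses import dataclass

# Hypothetical values for illustration only; not taken from the game.
ATTACK_REACH = {"mace_swing": 60.0, "slam": 120.0}  # reach on each side of the Queen
ARENA_MIN, ARENA_MAX = 0.0, 640.0                   # assumed horizontal arena bounds


@dataclass
class DangerZone:
    left: float
    right: float

    def contains(self, x: float) -> bool:
        return self.left <= x <= self.right


def danger_zone(attack: str, queen_x: float) -> DangerZone:
    """Danger zone computed from the attack type and the Queen's position."""
    reach = ATTACK_REACH[attack]
    return DangerZone(queen_x - reach, queen_x + reach)


def dodge_chance(times_used: int, story_stage: int) -> float:
    """Assumed formula: the more an attack has been used and the further the
    story has progressed, the likelier the Hero is to dodge it."""
    return min(1.0, 0.5 + 0.1 * times_used + 0.1 * story_stage)


def safe_target(zone: DangerZone, hero_x: float, margin: float = 8.0) -> float:
    """Nearest in-bounds position just outside the zone (stay put if already safe)."""
    if not zone.contains(hero_x):
        return hero_x
    candidates = [zone.left - margin, zone.right + margin]
    in_bounds = [c for c in candidates if ARENA_MIN <= c <= ARENA_MAX]
    if not in_bounds:
        return hero_x  # cornered; a real game might jump or take the hit instead
    return min(in_bounds, key=lambda c: abs(c - hero_x))


def on_queen_attack(attack: str, queen_x: float, hero_x: float,
                    times_used: int, story_stage: int,
                    rng: random.Random) -> float:
    """What the Queen's attack signal would trigger: returns the Hero's new target x."""
    zone = danger_zone(attack, queen_x)
    if rng.random() < dodge_chance(times_used, story_stage):
        return safe_target(zone, hero_x)
    return hero_x  # failed the dodge roll


rng = random.Random(0)
print(on_queen_attack("mace_swing", queen_x=300.0, hero_x=320.0,
                      times_used=5, story_stage=0, rng=rng))  # → 368.0
```

Keeping each attack's geometry behind one `danger_zone` function means every attack can supply its own rules while the signal handler stays the same; per-attack special cases (such as needing to jump) would hang off `safe_target`.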

The easiest, or at least most fun, parts for me to code are the extra bells and whistles that aren’t critical but add flair. In the Hero’s case, that means the little touches that make them seem more human: a reaction-speed delay that increases over time, random motions and overcompensation that decrease as they gain focus, a late-game Hero prioritising aggressive positioning, a “wait for last second” function that lets the Hero calculate how long it’ll take them to move to safety and use that information to squeeze an extra attack in…
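The “wait for last second” idea boils down to simple timing arithmetic: if the time until the attack lands, minus the Hero's reaction delay and travel time to safety, still leaves room for one of their own attacks, they can squeeze it in. Below is a minimal Python sketch under assumed units (pixels and seconds); the function names, the linear reaction-delay growth and the focus-based overshoot model are hypothetical stand-ins, not the game's actual tuning.

```python
import random


def reaction_delay(elapsed_s: float, base: float = 0.15,
                   growth: float = 0.01, cap: float = 0.4) -> float:
    """Reaction delay that creeps up as the fight drags on, capped at `cap` seconds."""
    return min(cap, base + growth * elapsed_s)


def time_to_safety(hero_x: float, safe_x: float, speed: float) -> float:
    """Seconds needed to run from hero_x to safe_x at `speed` pixels per second."""
    return abs(safe_x - hero_x) / speed


def can_squeeze_attack(time_until_hit: float, hero_x: float, safe_x: float,
                       speed: float, delay: float, attack_duration: float) -> bool:
    """True if the Hero can finish an attack and still reach safety before the hit."""
    spare = time_until_hit - (delay + time_to_safety(hero_x, safe_x, speed))
    return spare >= attack_duration


def overshoot(safe_x: float, hero_x: float, focus: float,
              rng: random.Random, max_overshoot: float = 24.0) -> float:
    """Overcompensate past the safe spot by a random amount that shrinks as focus (0..1) rises."""
    direction = 1.0 if safe_x >= hero_x else -1.0
    return safe_x + direction * rng.uniform(0.0, max_overshoot * (1.0 - focus))


# Early in the fight the delay is short, so there is time for an attack before dodging.
delay = reaction_delay(elapsed_s=0.0)
print(can_squeeze_attack(1.5, hero_x=0.0, safe_x=100.0, speed=200.0,
                         delay=delay, attack_duration=0.6))  # → True
```

Here 0.15 s of delay plus 0.5 s of travel leaves 0.85 s spare, enough for a 0.6 s attack. As `reaction_delay` grows later in the fight, the same check starts failing, which is one cheap way to make the Hero feel like they are tiring.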

The hardest attack was the magic circle, as it introduced a problem my code hadn’t had to deal with so far. The second flare can overlap with other attacks, meaning the Hero had to keep track of two danger zones at once. For a brief time I wanted to create a whole new system that would constantly update a map of all current danger zones. That would allow for any number of overlapping attacks, which would be really cool! Unfortunately it didn’t gel with my existing code, and I couldn’t figure out its multitudes of problems since, well…

Regarding obstacles

Thing is, I’m hot garbage as a programmer. My game dev’s all self-taught nonsense. So after a week of failing to get this cool system to work, I scrapped it and instead made a spaghetti code monstrosity that made the magic circle run on a separate danger zone, and decided I’d make no more overlapping attacks. That was easy; I just had to buffer the timing of the animation locks so that the Hero would always have time to move away. (I still wanted to keep the magic circle, since it’s fun for the player to try and trick the Hero with it.)
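For comparison, the more general system the author attempted and scrapped (a continually updated map of every active danger zone) might look something like the rough Python sketch below. It illustrates the idea only and is not a reconstruction of the abandoned code: it assumes a one-dimensional arena, represents each danger zone as an interval with an expiry time, merges overlapping intervals, and picks the nearest position outside all of them. `DangerMap`, `Zone` and the margin value are hypothetical names and numbers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Zone:
    left: float
    right: float
    expires_at: float  # game time (seconds) when this attack's hitbox ends


def merge(zones):
    """Merge overlapping zones into disjoint (left, right) intervals, sorted by left edge."""
    merged = []
    for z in sorted(zones, key=lambda z: z.left):
        if merged and z.left <= merged[-1][1]:
            merged[-1] = (merged[-1][0], max(merged[-1][1], z.right))
        else:
            merged.append((z.left, z.right))
    return merged


class DangerMap:
    """Tracks every active danger zone so any number of attacks can overlap."""

    def __init__(self, arena_min: float, arena_max: float, margin: float = 8.0):
        self.arena_min = arena_min
        self.arena_max = arena_max
        self.margin = margin
        self.zones = []

    def add(self, zone: Zone) -> None:
        self.zones.append(zone)

    def update(self, now: float) -> None:
        """Drop zones whose attacks have finished."""
        self.zones = [z for z in self.zones if z.expires_at > now]

    def is_safe(self, x: float) -> bool:
        return not any(z.left <= x <= z.right for z in self.zones)

    def nearest_safe_x(self, hero_x: float):
        """Nearest safe in-bounds x, or None if the arena is completely covered."""
        if self.is_safe(hero_x):
            return hero_x
        candidates = []
        for left, right in merge(self.zones):
            candidates += [left - self.margin, right + self.margin]
        valid = [c for c in candidates
                 if self.arena_min <= c <= self.arena_max and self.is_safe(c)]
        return min(valid, key=lambda c: abs(c - hero_x)) if valid else None


dm = DangerMap(0.0, 640.0)
dm.add(Zone(100.0, 200.0, expires_at=2.0))  # e.g. a first attack
dm.add(Zone(180.0, 260.0, expires_at=1.0))  # e.g. an overlapping second flare
print(dm.nearest_safe_x(190.0))             # → 268.0
dm.update(now=1.5)                          # the second flare has ended
print(dm.nearest_safe_x(190.0))             # → 208.0
```

Because candidates are taken from every merged interval and re-checked with `is_safe`, a gap between two zones that is narrower than the margin is skipped rather than chosen. Fitting something like this into an existing per-attack codebase is exactly the kind of refactor the post describes giving up on.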

There’s my least pretty yet practical solo dev advice: if you get stuck because you can’t do something, you can certainly try to learn how to do it, but occasionally the only way to finish a project within a decade is to work around those parts and let them be a bit crap.

I’m happy to use design trickery, writing and art to cover for my coding skills. Like, despite the anonymous asker’s description, the Hero’s dodging is actually far from perfect. I knew there was no way it was ever going to be, which is why I wrote special dialogue to account for a player finding an exploit that breaks the intended gameplay. (And indeed, when the game was launched, someone immediately found it!)

Regarding Godot

It’s lovely! I switched from Unity years ago and it’s so much simpler and more considerate of 2D games. The way its node system emphasises modularity has improved my coding a lot.

New users should be aware that a lot of tutorials and advice you find online may be for Godot 3. If something doesn’t work, search for what the Godot 4 equivalent is.

Regarding animation

I’m a professional animator, so my list of tips and techniques is a tad long… I’ll just give a few resource recommendations: read up on the classic 12 principles of animation (or The Illusion of Life, if you’d like the whole book) and test each out for yourself. Not every animation needs all of these principles, but basically every time you’ll be looking at an animation and wondering how to make it better, the answer will be in paying attention to one or more of them.

Game animation is its own beast, and different genres have their own needs. I’d recommend studying animations that do what you’d like to do, frame by frame. If you’re unsure of how exactly to analyse animation for its techniques, the YouTube channel New Frame Plus shows an excellent example.

Oh, and film yourself some references! The Queen demanded so much pretend mace swinging that it broke my hoover.

Regarding pixel art

The pixel art style was picked for two reasons: 1. to evoke a retro game feel to emphasise the meta nature of the narrative, and 2. because it’s faster and more forgiving to animate in than any of my other options.

At the very start I was into the idea of doing a painterly style—Hollow Knight was my first soulslike—but quickly realised that I’d either have to spend hundreds of hours animating the characters, or design them in a simplistic way that I deemed too cutesy for this particular game. (Hollow Knight style, one day I’d love to emulate you…)

I don’t use a dedicated program, just Photoshop for everything like a chump. Pixel art doesn’t need anything fancy, although I’m sure specialist programs will keep it nice and simple.

Pixel art’s funny; its limitations make it dependent on symbolism, shortcuts and viewer interpretation. You could search for some tutorials on basic principles (like avoiding “jaggies” or the importance of contrast), but ultimately you’ll simply want to get a start in it to find your own confidence in it. I began dabbling years ago by asking for character requests on Tumblr and doodling them in pixels in whatever way I could think of.

Regarding environment assets

The Queen’s throne room consists of two main sprites (one background and one separate bit of the door for the Hero to disappear behind) and then about fifty more for the lighting setup. There are six different candle animations, there are lines on the floor that need to go on top of character reflections, and all the candle circles and lit objects are separated so that the candles can be extinguished asynchronously; then there are purple phase 2 versions of all of the above.

This is all rather dumb. There are simpler ways in Godot to do 2D lighting with shaders and a built-in system (I use those too), but I wanted control over the exact colours, so I just drew everything in Photoshop the way I wanted it. Still, it shows that mostly you only need a single background asset and separate foreground objects; if you need animated objects or things that change while the game’s running, though, you’ll end up with a whole bunch more.

I wholeheartedly applaud having a go at making your own game art, even if you don’t have any art background! The potential for cohesion in all aspects of design—art, game, narrative, sound—is at the heart of why video games are such an exciting medium!

Regarding writing

Finding the voices of the Queen and the Hero was the quick part of the process. They figured out who they were almost as soon as writing started. I’d been mulling this game over in my mind for so long that I already had a specific idea of what the two of them stood for, conceptually and thematically. When they started bantering, I felt like all I really had to do was guide it along the storyline, and then polish.

What ended up taking so long was that there was too much for them to say for how short the game needed to be to not feel overstretched. Since I’d decided to go with two dialogue options on my linear story, it at least gave me twice the amount of dialogue that I got to write, but it wasn’t enough!

The first large-scale rewrite was me going over the first draft and squeezing in more interesting things for the Queen and the Hero to discuss, more branching paths and booleans. There was this whole thing where the player’s dialogue choices over multiple conversations would lead them to one of about four alternate interpretations of why the Queen is the way she is. This was around the time I happened to finally play Disco Elysium, so of course I also decided to add a ton of microreactivity (ie. small changes in dialogue that acknowledge earlier player choices) to cram in even more alternate dialogue. I spent ages tinkering with the exact nuances till I was real proud of it.

Right until the playtesters of this convoluted contraption found the story to be unclear and confusing. For some reason. So for my final rewrite, I picked out my favourite bits and cut everything else. With the extra branching gone, there was more room to improve the pacing so the core of the story could breathe. The microreactivity got to stay, at least!

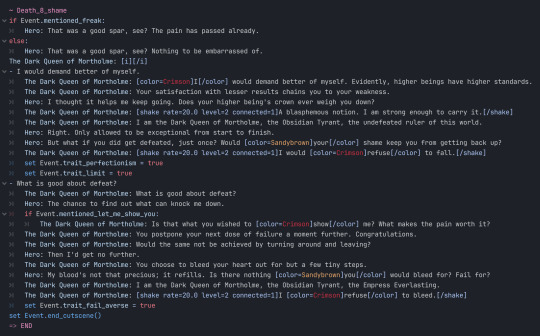

A sample of old dialogue from the overcomplicated version:

Regarding completion

The question was “what kept me going to actually finish the game, since that is a point many games never even get to meet?” and it’s a great one because I forgot that’s a thing. Difficulties finishing projects, that is—I used to think it was hard, but not for many years. Maybe I’ve completed so many small-scale games already that it hardly seems that unreasonable of an expectation? (Game jams. You should do game jams.)

I honestly never had any doubt I was going to finish Mortholme. When I started in late autumn last year, I was honestly expecting the concept to be too clunky to properly function; but I wished to indulge in silliness and make it exist anyways. That vision would’ve been easy to finish, a month or two of low stakes messing around, no biggie. (Like a game jam!)

Those months ran out quickly as I had too much fun making the art to stop. It must’ve been around the time I made this recording that it occurred to me that even if the game was going to be clunky, it could still genuinely work on the back of good enough storytelling technique—not just writing, but also the animation and the Hero’s evolving behaviour during the gameplay segments which I’d been worried about. The reaction to my early blogging was also heartening. Other people could also imagine how this narrative could be interesting!

A few weeks after that I started planning out the narrative beats I wanted the dialogue to reach, and came to the conclusion that I really, really wanted it to work. Other people had to see this shit, I thought. There’s got to be freaks out there who’d love to experience this tragedy, and I’m eager to deliver.

That’s why I was fine with the project’s timeline stretching out. If attention to detail and artistry was going to make this weird little story actually come to life, then great, because that’s exactly the part of development I love doing most. Projects taking longer than expected can be frustrating, but accepting that as a common part of game dev is what allows confidence in their eventual completion regardless.

Regarding release

Dear anonymous’s questions didn’t involve post-release concerns, but it seems fitting to wrap up the post-mortem by talking about the two things about Mortholme's launch that were firsts for me, and that I was therefore unprepared for.

1. This was the first action game I've coded. Well, sort of—I consider Mortholme to be a story first and foremost, with gameplay so purposefully obnoxious it benefits from not being thought of as a “normal” game. Still, the action elements are there. For someone who usually sticks to making puzzle games since they’re easier to code, this was my most mechanically fragile game yet. So despite all my attempts at playtesting and failsafes, it had a whole bunch of bugs on release.

Game-breaking bugs, really obvious bugs, weird and confusing bugs. It took me over a week to fix all that was reported (and I’m only hoping they indeed are fully fixed). That feels slow; I should’ve expected it was going to break so I could’ve been faster to respond. Ah well, next time I know what I’ll be booking my post-release week for.

2. This was my first game that I let players give me money for. Sure, it’s pay-what-you-want, but for someone as allergic to business decisions as I am, it was a big step. I guess I was worried about being shown that nobody would consider my art worth financial compensation. Well, uh, that fear has gone out of the window now. I’m blown away by how kind and generous the players of Mortholme have been with their donations.

I can’t imagine it's likely that I'll earn a living wage from pouring hundreds of hours into pay-what-you-want passion projects, but the support has heartened me to seek out a future where I could make both these weird stories and a living.

Those were the unexpected parts. The part I must admit I was expecting—but still infinitely grateful for—was that Mortholme did in fact reach them freaks who’d find it interesting. The responses, comments, analyses, fan works (there’s fic and art!! the dream!!), inspiration, and questions (like the ones prompting me to write this post-mortem) people have shared with me thanks to Mortholme… They’ve all truly been what I was hoping for back when I first gave myself emotions thinking about a mean megalomaniac and stubborn dipshit.

Thank you for reading, thank you for playing, and thank you for being around.

#so that got a bit verbose. you simply cannot give me this many salient questions and expect me otherwise tbh#the dark queen of mortholme#indie dev#game dev#dev log

204 notes


Reactions to The Light's Chapter 420

Brief summary: Cale eats apple pie and talks to the System AI. Cale finds out about the videos. Flashback to what happened after Cale fainted.

===========

Cale had the same thought as us! That Alberu must be talking to the Sun God in his dream if he still had yet to wake up. He wondered, though, whether anyone had informed the Roan Kingdom of what happened to Alberu.

Cale: I met the GoD in my dream.

Eru: Then Alberu…

Cale: Maybe he's meeting the Sun God.

Eru: Let's wait a little longer.

Cale: Yes. *looks at Alberu in pity*

Raon: Human! This is the 1st time the crown prince has fainted! Why are you looking at him with such pity? My human is worse than the crown prince! Reflect on your actions!

Cale: …

Cale couldn't say anything back at Raon because he was willing to faint in order to unleash DA's full powers back then. 😂😂😂

Cale nodded, watching Raon push the apple pie away from his lying mouth. 'Delicious.' Apple pie. Isn't it really delicious when you pass out and wake up to eat it? -You're crazy. He pretended not to hear Super Rock's lament.

I agree with Super Rock on this one, Cale. 😂 You're really crazy if you think like that. 😂😂😂

Cale talked to the System AI and got three quests:

Get the Purification of Chaos skill

Change the attribute of the divine item to a non-attribute item

Protect System from Transparent Corp.

It seemed that the System AI had been caught, or was at least now suspected by Transparent Corp., of doing something contrary to the Transparent Bloods' goals. So it asked Cale to protect it, and Cale agreed.

There was still one quest Cale hadn't finished.

[*The aftermath of the birth is spreading rapidly!]

[*Legends are being made in New World and beyond to Earth 3!]

[*Reward tier decisions will be delayed!]

The quest “The Birth of Eden Miru” had an achievement rate of over 300%. However, another change was made to the quest's completion rate calculation.

[*There are signs of new changes coming to the birth legend.]

[*It appears that a greater legend is being created, and that the birth legend will be part of it.]

[*If this is the case, the achievement rate will be calculated based on the greater legend.]

[*Achievement rate: 412%]

So Cale's legendary actions fighting the GoC cult even affected Eden's birth quest? Just what kind of reward will Cale get if the achievement rate has already reached 412% and is still rising? 🤣🤣🤣

The videos that Crazy Attention Seeker recorded were released to the public, and the reactions were chaotic. Fortunately, the last video he took, the one after Cale fainted, was not posted to the forums because Cale's group confiscated the video recorder.

After Cale and Alberu fainted, the GoC cult was still in shock at GoC's temporary appearance and the saint's death, so the only enemies Cale's group still faced were the two wanderers. But a new ally came to save them: the dark elf NPCs of Hellhole.

The reason why Alberu and Rosalyn disguised themselves as beggars and headed to Hellhole was because of Alberu's quest:

Main Quest 1 [Become the first Dark Elf Emperor!] - In progress

Linked Subquest 5 [Get the recognition of the Witch of the Jungle] - In progress

The Witch of the Jungle was a dark elf, so the two had gone to Hellhole and met the dark elf NPCs. And now, the dark elves appeared in the canyon and looked at the unconscious Alberu. Rosalyn told Cale's group that the dark elves were their allies.

Ending Remarks

So much quest info today. The next chapter will probably explain more about the dark elves and Alberu and Rosalyn's first meeting with them.

103 notes


Embrace the future of data analysis with Generative AI. Elevate your Business Intelligence to new heights.

#Advanced Analytics Tools#AI Predictive Analytics for Strategic Decision-Making#AI Predictive Analytics#AI-Based Forecasting Algorithms#AI-Based Forecasting#AI-Enabled Business Reporting Solutions#AI-Powered Data Visualization#Decision Support Systems with AI-Driven Insights#Generative AI in AI-Powered Business Intelligence#Generative AI in Business Intelligence Frameworks#Revolutionary Power of Generative AI

0 notes


Transform your approach to business intelligence with intuitive AI interfaces. Drive innovation and growth like never before.

#Advanced Analytics Tools#AI Predictive Analytics for Strategic Decision-Making#AI Predictive Analytics#AI-Based Forecasting Algorithms#AI-Based Forecasting#AI-Enabled Business Reporting Solutions#AI-Powered Data Visualization#Decision Support Systems with AI-Driven Insights#Generative AI in AI-Powered Business Intelligence#Generative AI in Business Intelligence Frameworks#Revolutionary Power of Generative AI

0 notes