#AMD EPYC server


High-Density Server for HPC & Edge – HexaData HD‑H242‑Z10

The HexaData HD‑H242‑Z10 Ver Gen001 is a 2U high-density server with 4 independent single-socket nodes powered by AMD EPYC™ 7003 CPUs. It offers up to 32 DDR4 DIMMs, NVMe storage, PCIe Gen4 support, and redundant 1200W PSUs. Designed for HPC, data analytics, 5G, and edge deployments, it delivers performance and efficiency in a compact footprint. For more details, visit the Hexadata HD-H242-Z10 Ver: Gen001 | High Density Server page.

#high density server#AMD EPYC server#2U server#data center hardware#edge computing server#5G infrastructure#HPC server#NVMe server#enterprise server#multi-node server#server with redundant PSU#DDR4 memory server#PCIe Gen4 server#rackmount server#remote management server


Contabo Web Hosting: Boosting Efficiency with AMD EPYC Servers

AMD EPYC Servers Are Best for Hosters Wanting Performance, Power Efficiency, and Value

Webhost Contabo

Why Contabo Picked AMD

Contabo offers bare-metal hosting and high-performance VPS on AMD EPYC servers. Contabo's Bare Metal offering now includes the AMD EPYC 9224 (codenamed “Genoa”) processor for performance, efficiency, and security.

A Contabo blog article explained why they chose AMD, noting its performance, efficiency, security, and innovation improvements.

AMD EPYC 9224 processors support DDR5 memory, include AMD Infinity Guard security features, and offer better energy efficiency.

In the competitive hosting sector, speed to market is key. Some hosts offer the latest hardware to attract customers before their competitors do; others specialise in configurations the market does not otherwise serve.

AMD's fast performance and advanced features help hosters stay ahead.

As computing evolves, hosters serve a specialised market, offering cloud, bare-metal, virtual private server, and managed hosting services in a way that sets them apart from hyperscalers such as AWS and Azure. The business is rewarding but difficult: clients expect competitive pricing, performance, and flexibility.

Hosters have traditionally created and delivered services using midrange to high-end server architecture. Despite their scalability and reliability, these servers may be prohibitively expensive for smaller clients or workloads. To stay competitive, several hosters have turned to entry-level server CPU platforms that offer performance, value, and energy efficiency.

Bare-Metal Hosting

In Europe, geographical limits and data protection legislation give hosts an advantage over hyperscalers in a fragmented market. Bare-metal hosting, where clients rent physical servers rather than virtual ones, dominates the European hosting business. Bare-metal hosting gives clients full control, root access, and customisation over a single piece of hardware, unlike cloud-based solutions that charge clients for a fixed amount of compute power from a provider-defined pool.

This type of business must offer affordable hardware configurations. Because of rising energy prices and workload demands, OVHcloud and other hosters want the latest AMD EPYC processors, especially the 4004 series, which balances cost, efficiency, and raw performance.

AMD EPYC 4000 series processors offer customisable versatility and reliable performance.

AMD Benefits Hosters

Hosters optimise platform architecture, cooling, and hardware use to maximise ROI on tight margins. Controlling TCO and quickly recouping hardware expenditures are crucial.

AMD understands hosters' business concerns and designs its products to address them. The value of AMD's products lets hosts recoup hardware costs without sacrificing quality. AMD EPYC stands out in these ways:

Unbeatable Price-Performance Ratio: Price sensitivity dominates the hosting sector, especially at the low end, where revenue comes from high volume but clients still don't want “yestertech.” AMD EPYC 4000 series CPUs are affordable, come in several core counts, and deliver strong single-threaded performance. Hosters can boost product appeal by renting out capable configurations at competitive prices.

Energy Efficiency: Power consumption is a major operating cost for hosts. Servers often sit idle yet still draw power, which adds to electricity expenses. AMD will provide 65-watt 12-core and 16-core CPUs for hosters that want to save energy without sacrificing performance.

Streamlined Portfolio: Because their clients' needs are diverse, hosts need a streamlined portfolio with strong performance options across a range of price points. AMD's well-defined product line lets hosters offer scalable solutions without managing too many SKUs, simplifying inventory and procurement while still offering many compute options.

Security & Reliability: AMD EPYC 4000 series CPUs support server operating systems, ECC memory, Secure Boot, and TSME (Transparent Secure Memory Encryption) to handle end users' daily processing needs.

AMD partners with Supermicro, Lenovo, ASRock Rack, MSI, Gigabyte, Tyan, and others to offer several platform choices.
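The point about power utilisation being a major operating cost can be made concrete with a back-of-envelope calculation. The sketch below is a minimal example under stated assumptions: the average wall draws and the price per kWh are hypothetical inputs, and a CPU's rated wattage is not the same as what a whole server draws.

```python
# Rough annual electricity use and cost for a server running around the clock.
# Assumptions: average_watts is the measured average draw at the wall (not a
# CPU TDP rating), and the price per kWh is hypothetical.

HOURS_PER_YEAR = 24 * 365  # 8,760 hours; leap years ignored


def annual_kwh(average_watts: float) -> float:
    """Energy used in one year at a constant average draw, in kWh."""
    return average_watts * HOURS_PER_YEAR / 1000


def annual_cost(average_watts: float, price_per_kwh: float) -> float:
    """Electricity cost for one year at a flat price per kWh."""
    return annual_kwh(average_watts) * price_per_kwh


if __name__ == "__main__":
    price = 0.25  # hypothetical price per kWh
    for watts in (65, 120, 170):  # example average draws, not specific SKUs
        print(f"{watts:>4} W: {annual_kwh(watts):7.1f} kWh/year, "
              f"{annual_cost(watts, price):7.2f} per year")
```

Multiplying the per-server figure by the number of servers in a rack or hall shows why small per-socket savings matter at hosting scale.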

Cloud vs. bare-metal

Bare-metal hosting could be cheaper than cloud computing for clients who demand full infrastructure control. Hyperscalers offer virtualised compute capability, but bare-metal alternatives let consumers rent whole physical servers for additional flexibility and performance consistency.
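Whether bare metal actually works out cheaper depends on how many hours a month the workload runs. A minimal sketch, assuming a flat monthly rental and a simple per-hour cloud rate (both prices hypothetical, and ignoring bandwidth, storage, and support fees):

```python
# Break-even between a flat monthly bare-metal rental and an hourly cloud
# instance. Both prices are hypothetical; real offers add bandwidth,
# storage, and support costs that this sketch ignores.

HOURS_PER_MONTH = 730  # average hours in a month (8,760 / 12)


def break_even_hours(monthly_bare_metal: float, hourly_cloud: float) -> float:
    """Monthly cloud hours that cost as much as the bare-metal rental."""
    return monthly_bare_metal / hourly_cloud


def cheaper_option(monthly_bare_metal: float, hourly_cloud: float,
                   hours_used: float) -> str:
    """Return which option costs less for the given monthly usage."""
    cloud_cost = hourly_cloud * hours_used
    return "bare metal" if monthly_bare_metal < cloud_cost else "cloud"


if __name__ == "__main__":
    rent, rate = 100.0, 0.20  # hypothetical prices in the same currency
    print(f"Break-even: {break_even_hours(rent, rate):.0f} h/month")
    print("Always-on workload:", cheaper_option(rent, rate, HOURS_PER_MONTH))
    print("Part-time (200 h):", cheaper_option(rent, rate, 200))
```

With these example prices, an always-on workload favours bare metal, while a part-time one favours the hourly instance.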

AMD leads this market thanks to its broad lineup of AMD EPYC 4004, 7003, 8004, 9004, and 9005 CPU families:

Excellent features and performance throughout price points.

Low power utilisation reduces overhead and enhances sustainability.

Virtualisation lets hosters offer dedicated and multi-tenant environments.

RAS features ranging from entry-level to enterprise grade.

Shadow Stack, Secure Encrypted Virtualisation, Transparent Secure Memory Encryption, and Secure Boot are AMD Infinity Guard security features.

AMD EPYC processors stand out in cloud solutions too. A wide range of AMD EPYC processors with high thread counts and efficiency can help cloud and VPS hosters accommodate more users per server while meeting strict cost and performance targets. The AMD EPYC 4004 is a good value, but this diverse market requires several solution options.

Why AMD EPYC is Best for Hosters

Cost, performance, and power efficiency make AMD the obvious choice for hosters. AMD EPYC CPUs help maximise revenue and reduce costs through scalability, performance, and energy efficiency. The AMD EPYC processor series gives hosts the resources they need to serve both high-performance and budget-conscious customers.


🚀 AMD Zen 5 & EPYC Turin: A New Era of Performance ⚡️

AMD is shaking up the tech world with its Zen 5 architecture! 💥 The new Ryzen 9000 series promises lightning-fast speeds ⚡️ and incredible energy efficiency 🔋 for gamers 🎮 and content creators 🎥. But the real star? The EPYC Turin processor 🖥️. With a staggering 192 cores 🧠 and 384 threads 🧵, these chips are a game-changer, delivering AI performance up to 5.4x faster than Intel’s best! 🤖✨

Whether you're into gaming, content creation, or running a data center, Zen 5 is built to elevate your performance 🚀 while cutting down on energy use 💡. Get ready for a whole new level of multitasking, speed, and AI magic! 🌟


Hetzner launches the AX162 server: Powerful performance for virtualization and demanding applications

Hetzner has just launched its all-new AX162 Dedicated Server, built on the AMD EPYC™ Genoa 9454P platform! It is the perfect choice for virtualization projects and other workloads that demand high performance. Key features: AMD EPYC™ Genoa 9454P processor: 48 cores / 96 threads; double the performance of the previous generation; Simultaneous Multithreading (SMT) technology; Zen core architecture…


Shifting AMD's server-grade processor line to TSMC's Arizona facility marks the first time the U.S. company will produce these chips domestically, reducing the supply chain risks associated with relying on TSMC's Taiwan-based fabs.

"We want to have a very resilient supply chain, so Taiwan continues to be a very important part of that supply chain, but the United States is also going to be important and we're expanding our work there, including our work with TSMC and other key supply chain partners," AMD CEO Lisa Su said.

News that AMD's fifth-generation EPYC will be produced in America comes one day after Nvidia unveiled new initiatives aimed at strengthening America's chip manufacturing sector:

Nvidia is localizing AI chip and supercomputer manufacturing in the U.S. for the first time, partnering with TSMC, Foxconn, Wistron, Amkor, and SPIL.

Over 1 million square feet of manufacturing space has been commissioned for Blackwell chips and AI supercomputers in Arizona and Texas.

Mass production of these chips is expected within 12–15 months.

Nvidia's U.S.-built AI infrastructure could total up to $500 billion over the next four years.

Restoring U.S. chipmaking capacity is critical for several reasons, but national security stands above all. China can easily disrupt chip supply chains in Taiwan—something that could send shockwaves around the world, impacting U.S. defense production of missiles, tanks, and other critical systems, many of which rely on chips fabricated overseas.

If the U.S. intends to compete—and win—in the 2030s, the ongoing expansion of domestic chip manufacturing is not just welcome news; it's essential for survival.


How AMD EPYC Is Transforming Cloud Hosting & AI Solutions

The modern world is witnessing a tremendous shift in technology, and AMD EPYC is at the core of it. From redefining cloud hosting standards to breaking speed records in visual effects (VFX) and artificial intelligence, AMD EPYC is shaping the next generation of technology. For organizations looking for high performance, particularly in managed cloud hosting plans, AMD EPYC is a complete game-changer. This blog explores how AMD EPYC processors boost cloud solutions and improve computing landscapes.

The Power Behind AMD EPYC Processors

AMD EPYC is built for heavy workloads, ensuring smooth performance across different applications. It excels in:

Energy Efficiency

Decreased energy usage reduces costs while supporting sustainability-related goals.

Scalability

Well suited to cloud hosting service providers, it offers scalable infrastructure for organizations with varying needs.

Speed

AMD EPYC processors have set a number of world records for computing speed, providing top-tier capabilities for demanding workloads.

All these features make AMD EPYC processors a perfect choice for cloud hosting services and organizations opting for top-tier cloud solutions.

AMD EPYC & Managed Cloud Hosting

Managed cloud hosting services depend on cutting-edge servers. AMD EPYC processors improve cloud hosting in several ways:

Flexibility for Diverse Workloads

Whether running VFX rendering, e-commerce platforms, or AI algorithms, AMD EPYC handles everything smoothly, making it an asset for every kind of workload.

Enhanced Efficiency

Hosting service providers can improve resource utilization with AMD EPYC’s powerful infrastructure. This is especially important for cloud server providers offering managed cloud hosting packages.

Increased Security

Security is now a top priority in managed cloud hosting services. AMD EPYC processors include advanced security features to protect sensitive data.

Breaking High Speed Records: A Complete Game-Changer for Cloud Hosting Solutions

AMD EPYC has consistently broken speed records across different computing scenarios. From AI/ML to fast big data processing, its ability to handle demanding computations at high speed makes it an essential component for leading cloud hosting service providers. Businesses that use AMD EPYC processors for cloud hosting can expect:

Quicker processing for data analytics.

Increased performance for high-level AI-based tasks.

Decreased latency in the case of cloud-based apps.

Revolutionizing VFX with AMD EPYC

Among creative industries, VFX demands robust computational power. AMD EPYC helps both VFX studios and artists by:

Rendering complex frames faster.

Supporting real-time collaboration across global teams through private cloud hosting service providers.

Offering the scalability required for high-level graphic rendering tasks.

With AMD EPYC processors, studios can shorten production timelines while delivering realistic output, a genuine game-changer for VFX.

Leveling Up AI Innovation with AMD EPYC Processors

AI is a key domain where AMD EPYC excels. The processors are well suited to training complex ML models, processing huge datasets, and deploying AI solutions.

Key Benefits

Quicker Training Times

Advanced AI models can be trained faster, fueling innovation.

Budget-Friendly Performance

A low total cost of ownership (TCO) makes AMD EPYC processors an excellent choice for private cloud hosting service providers offering AI capabilities.

Increased Reliability

Consistent performance keeps essential AI workloads running reliably.

Managed cloud hosting packages fueled by AMD EPYC processors enable organizations to level up their AI initiatives successfully.

AMD EPYC & Cloud Hosting Service Providers: An Ideal Match

Top cloud hosting service providers are increasingly adopting AMD EPYC to stay ahead of competitors. These processors not only increase performance but also help service providers offer scalable cloud hosting plans tailored to customer demands.

For those organizations that are opting for the top-notch cloud hosting services, here’s why AMD EPYC processors excel:

Cost Efficiency

Budget-friendly cloud server pricing without compromising performance.

Tailored Plans

Managed cloud hosting packages that adapt to essential business needs.

Worldwide Accessibility

Fast connectivity ensures smooth access to cloud assets from anywhere in the world.

Infinitive Host: Providing Cloud Hosting Excellence

Infinitive Host is a leader in providing reliable cloud hosting plans and solutions. As a top provider of managed cloud hosting solutions, Infinitive Host offers:

Customized Hosting Plans

Ranging from small businesses to big organizations, Infinitive Host’s managed cloud hosting plans are engineered to fulfill a variety of demands.

24/7 Customer Support

Expert guidance ensures uninterrupted service.

Cutting-Edge Servers

Advanced cloud servers deliver high performance.

With Infinitive Host, organizations can unlock the full power of cloud hosting to drive success.

Conclusion

AMD EPYC technology is shaping the technological landscape by breaking several world records, advancing visual effects (VFX) innovation, and boosting AI solutions. Its use in managed cloud solutions marks a new generation of high performance and scalability.

Infinitive Host offers the best cloud services, ensuring organizations have access to advanced services for their growth. Whether it’s developing AI-based solutions, enhancing cloud resources, or providing exceptional support, Infinitive Host’s servers are the main force behind it all.


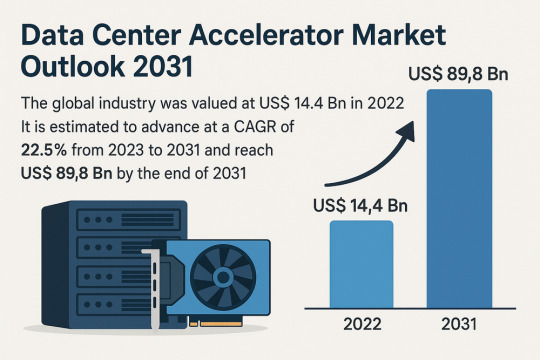

Data Center Accelerator Market Set to Transform AI Infrastructure Landscape by 2031

The global data center accelerator market is poised for exponential growth, projected to rise from USD 14.4 Bn in 2022 to a staggering USD 89.8 Bn by 2031, advancing at a CAGR of 22.5% during the forecast period from 2023 to 2031. Rapid adoption of Artificial Intelligence (AI), Machine Learning (ML), and High-Performance Computing (HPC) is the primary catalyst driving this expansion.
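The headline figures can be sanity-checked with the standard compound annual growth rate formula. This sketch assumes nine compounding years between the 2022 base and 2031; it recomputes the published numbers and is not part of the report's methodology:

```python
# Check the report's growth figures with the standard CAGR formula:
#   CAGR = (end_value / start_value) ** (1 / years) - 1
# Assumption: 9 compounding years between the 2022 base and 2031.


def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding start_value at rate for the given years."""
    return start_value * (1 + rate) ** years


if __name__ == "__main__":
    implied = cagr(14.4, 89.8, 2031 - 2022)
    print(f"Implied CAGR: {implied:.2%}")  # close to the stated 22.5%
    print(f"USD 14.4 Bn at 22.5% for 9 years: "
          f"USD {project(14.4, 0.225, 9):.1f} Bn")
```

The recomputed rate and end value land close to the published 22.5% and USD 89.8 Bn; the small differences are consistent with the published figures being rounded.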

Market Overview: Data center accelerators are specialized hardware components that improve computing performance by efficiently handling intensive workloads. These include Graphics Processing Units (GPUs), Tensor Processing Units (TPUs), Field Programmable Gate Arrays (FPGAs), and Application-Specific Integrated Circuits (ASICs), which complement CPUs by expediting data processing.

Accelerators enable data centers to process massive datasets more efficiently, reduce the number of servers required, and optimize costs, a significant advantage in a data-driven world.

Market Drivers & Trends

Rising Demand for High-performance Computing (HPC): The proliferation of data-intensive applications across industries such as healthcare, autonomous driving, financial modeling, and weather forecasting is fueling demand for robust computing resources.

Boom in AI and ML Technologies: The computational requirements of AI and ML are driving the need for accelerators that can handle parallel operations and manage extensive datasets efficiently.

Cloud Computing Expansion: Major players like AWS, Azure, and Google Cloud are investing in infrastructure that leverages accelerators to deliver faster AI-as-a-service platforms.

Latest Market Trends

GPU Dominance: GPUs continue to dominate the market, especially in AI training and inference workloads, due to their capability to handle parallel computations.

Custom Chip Development: Tech giants are increasingly developing custom chips (e.g., Meta’s MTIA and Google's TPUs) tailored to their specific AI processing needs.

Energy Efficiency Focus: Companies are prioritizing the design of accelerators that deliver high computational power with reduced energy consumption, aligning with green data center initiatives.

Key Players and Industry Leaders

Prominent companies shaping the data center accelerator landscape include:

NVIDIA Corporation – A global leader in GPUs powering AI, gaming, and cloud computing.

Intel Corporation – Investing heavily in FPGA and ASIC-based accelerators.

Advanced Micro Devices (AMD) – Recently expanded its EPYC CPU lineup for data centers.

Meta Platforms, Inc. – Introduced Meta Training and Inference Accelerator (MTIA) chips for internal AI applications.

Google (Alphabet Inc.) – Continues deploying TPUs across its cloud platforms.

Other notable players include Huawei Technologies, Cisco Systems, Dell Inc., Fujitsu, Enflame Technology, Graphcore, and SambaNova Systems.

Recent Developments

March 2023 – NVIDIA introduced a comprehensive Data Center Platform strategy at GTC 2023 to address diverse computational requirements.

June 2023 – AMD launched new EPYC CPUs designed to complement GPU-powered accelerator frameworks.

2023 – Meta Platforms, Inc. revealed the MTIA chip to improve performance for internal AI workloads.

2023 – Intel announced a four-year roadmap for data center innovation focused on Infrastructure Processing Units (IPUs).

Gain an understanding of key findings from our report in this sample: https://www.transparencymarketresearch.com/sample/sample.php?flag=S&rep_id=82760

Market Opportunities

Edge Data Center Integration: As computing shifts closer to the edge, opportunities arise for compact and energy-efficient accelerators in edge data centers for real-time analytics and decision-making.

AI in Healthcare and Automotive: As AI adoption grows in precision medicine and autonomous vehicles, demand for accelerators tuned for domain-specific processing will soar.

Emerging Markets: Rising digitization in emerging economies presents substantial opportunities for data center expansion and accelerator deployment.

Future Outlook

With AI, ML, and analytics forming the foundation of next-generation applications, the demand for enhanced computational capabilities will continue to climb. By 2031, the data center accelerator market will likely transform into a foundational element of global IT infrastructure.

Analysts anticipate increasing collaboration between hardware manufacturers and AI software developers to optimize performance across the board. As digital transformation accelerates, companies investing in custom accelerator architectures will gain significant competitive advantages.

Market Segmentation

By Type:

Central Processing Unit (CPU)

Graphics Processing Unit (GPU)

Application-Specific Integrated Circuit (ASIC)

Field-Programmable Gate Array (FPGA)

Others

By Application:

Advanced Data Analytics

AI/ML Training and Inference

Computing

Security and Encryption

Network Functions

Others

Regional Insights

Asia Pacific dominates the global market due to explosive digital content consumption and rapid infrastructure development in countries such as China, India, Japan, and South Korea.

North America holds a significant share due to the presence of major cloud providers, AI startups, and heavy investment in advanced infrastructure. The U.S. remains a critical hub for data center deployment and innovation.

Europe is steadily adopting AI and cloud computing technologies, contributing to increased demand for accelerators in enterprise data centers.

Why Buy This Report?

Comprehensive insights into market drivers, restraints, trends, and opportunities

In-depth analysis of the competitive landscape

Region-wise segmentation with revenue forecasts

Includes strategic developments and key product innovations

Covers historical data from 2017 and forecasts through 2031

Delivered in convenient PDF and Excel formats

Frequently Asked Questions (FAQs)

1. What was the size of the global data center accelerator market in 2022? The market was valued at US$ 14.4 Bn in 2022.

2. What is the projected market value by 2031? It is projected to reach US$ 89.8 Bn by the end of 2031.

3. What is the key factor driving market growth? The surge in demand for AI/ML processing and high-performance computing is the major driver.

4. Which region holds the largest market share? Asia Pacific is expected to dominate the global data center accelerator market from 2023 to 2031.

5. Who are the leading companies in the market? Top players include NVIDIA, Intel, AMD, Meta, Google, Huawei, Dell, and Cisco.

6. What type of accelerator dominates the market? GPUs currently dominate the market due to their parallel processing efficiency and widespread adoption in AI/ML applications.

7. What applications are fueling growth? Applications like AI/ML training, advanced analytics, and network security are major contributors to the market's growth.

Explore the latest research reports by Transparency Market Research:

Tactile Switches Market: https://www.transparencymarketresearch.com/tactile-switches-market.html

GaN Epitaxial Wafers Market: https://www.transparencymarketresearch.com/gan-epitaxial-wafers-market.html

Silicon Carbide MOSFETs Market: https://www.transparencymarketresearch.com/silicon-carbide-mosfets-market.html

Chip Metal Oxide Varistor (MOV) Market: https://www.transparencymarketresearch.com/chip-metal-oxide-varistor-mov-market.html

About Transparency Market Research

Transparency Market Research, a global market research company registered at Wilmington, Delaware, United States, provides custom research and consulting services. Our exclusive blend of quantitative forecasting and trends analysis provides forward-looking insights for thousands of decision makers. Our experienced team of analysts, researchers, and consultants uses proprietary data sources and various tools and techniques to gather and analyze information. Our data repository is continuously updated and revised by a team of research experts, so that it always reflects the latest trends and information. With a broad research and analysis capability, Transparency Market Research employs rigorous primary and secondary research techniques in developing distinctive data sets and research material for business reports.

Contact: Transparency Market Research Inc.
CORPORATE HEADQUARTER DOWNTOWN, 1000 N. West Street, Suite 1200, Wilmington, Delaware 19801 USA
Tel: +1-518-618-1030
USA - Canada Toll Free: 866-552-3453
Website: https://www.transparencymarketresearch.com
Email: [email protected]


High-Performance 2U 4-Node Server – Hexadata HD-H252-Z10

The Hexadata HD-H252-Z10 Ver: Gen001 is a cutting-edge 2U high-density server featuring 4 rear-access nodes, each powered by AMD EPYC™ 7003 series processors. Designed for HPC, AI, and data analytics workloads, it offers 8-channel DDR4 memory across 32 DIMMs, 24 hot-swappable NVMe/SATA SSD bays, and 8 M.2 PCIe Gen3 x4 slots. With advanced features such as OCP 3.0 readiness, Aspeed® AST2500 remote management, and redundant 2000W 80 PLUS Platinum PSUs, this server delivers strong performance and scalability for modern data centers. For more details, visit the Hexadata server page.

#High-density server#AMD EPYC Server#2U 4-Node Server#Data Center Solutions#HPC Server#AI Infrastructure#NVMe Storage Server#OCP 3.0 Ready#Remote Server Management#HexaData servers


Implementing AI: Step-by-step integration guide for hospitals: Specifications Breakdown, FAQs, and More

The healthcare industry is experiencing a transformative shift as artificial intelligence (AI) technologies become increasingly sophisticated and accessible. For hospitals looking to modernize their operations and improve patient outcomes, implementing AI systems represents both an unprecedented opportunity and a complex challenge that requires careful planning and execution.

This comprehensive guide provides healthcare administrators, IT directors, and medical professionals with the essential knowledge needed to successfully integrate AI technologies into hospital environments. From understanding technical specifications to navigating regulatory requirements, we’ll explore every aspect of AI implementation in healthcare settings.

Understanding AI in Healthcare: Core Applications and Benefits

Artificial intelligence in healthcare encompasses a broad range of technologies designed to augment human capabilities, streamline operations, and enhance patient care. Modern AI systems can analyze medical imaging with remarkable precision, predict patient deterioration before clinical symptoms appear, optimize staffing schedules, and automate routine administrative tasks that traditionally consume valuable staff time.

The most impactful AI applications in hospital settings include diagnostic imaging analysis, where machine learning algorithms can detect abnormalities in X-rays, CT scans, and MRIs with accuracy that, in some studies, matches or exceeds that of human radiologists. Predictive analytics systems monitor patient vital signs and electronic health records to identify early warning signs of sepsis, cardiac events, or other critical conditions. Natural language processing tools extract meaningful insights from unstructured clinical notes, while robotic process automation handles insurance verification, appointment scheduling, and billing processes.
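Production predictive-analytics systems learn from many signals in the EHR, but the basic idea of turning vital signs into an alert can be shown with a much simpler, transparent rule. The sketch below swaps in the published qSOFA bedside score (respiratory rate of 22/min or more, altered mentation, systolic blood pressure of 100 mmHg or less; 2 or more points flags a patient) rather than a machine learning model, and the bed IDs and readings are hypothetical:

```python
# Illustrative rule-based early-warning check using the qSOFA criteria
# (respiratory rate >= 22/min, altered mentation, systolic BP <= 100 mmHg).
# A score of 2 or more flags a patient for closer review. Illustration only.

from dataclasses import dataclass


@dataclass
class Vitals:
    respiratory_rate: int      # breaths per minute
    systolic_bp: int           # mmHg
    altered_mentation: bool    # e.g. Glasgow Coma Scale below 15


def qsofa_score(v: Vitals) -> int:
    """Count how many of the three qSOFA criteria are met."""
    return (int(v.respiratory_rate >= 22)
            + int(v.systolic_bp <= 100)
            + int(v.altered_mentation))


def needs_review(v: Vitals, threshold: int = 2) -> bool:
    """True when the score reaches the alert threshold."""
    return qsofa_score(v) >= threshold


if __name__ == "__main__":
    patients = {  # hypothetical readings
        "bed-12": Vitals(respiratory_rate=24, systolic_bp=95, altered_mentation=False),
        "bed-14": Vitals(respiratory_rate=16, systolic_bp=120, altered_mentation=False),
    }
    for bed, vitals in patients.items():
        print(bed, qsofa_score(vitals), "REVIEW" if needs_review(vitals) else "ok")
```

A learned model would replace `qsofa_score` with a trained predictor, but the surrounding pattern (read vitals, score, compare with a threshold, raise an alert) stays the same.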

Technical Specifications for Hospital AI Implementation

Infrastructure Requirements

Successful AI implementation demands robust technological infrastructure capable of handling intensive computational workloads. Hospital networks must support high-bandwidth data transfer, with minimum speeds of 1 Gbps for imaging applications and 100 Mbps for general clinical AI tools. Storage systems require scalable architecture with at least 50 TB initial capacity for medical imaging AI, expandable to petabyte-scale as usage grows.
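To see what those network and storage figures mean in practice, here is a rough sizing sketch. The study size and daily volume are hypothetical, and it assumes an ideal link with no protocol overhead and decimal units:

```python
# Back-of-envelope sizing for imaging network and storage figures.
# Assumptions (hypothetical): study size, daily study volume, and an ideal
# link with no protocol overhead; decimal units (1 GB = 1000 MB, 1 byte = 8 bits).


def transfer_seconds(size_mb: float, link_mbps: float) -> float:
    """Seconds to move size_mb megabytes over a link of link_mbps megabits/s."""
    return size_mb * 8 / link_mbps


def days_until_full(capacity_tb: float, studies_per_day: int,
                    study_size_mb: float) -> float:
    """Days before capacity_tb fills at a steady daily ingest of new studies."""
    daily_gb = studies_per_day * study_size_mb / 1000
    return capacity_tb * 1000 / daily_gb


if __name__ == "__main__":
    study_mb = 500  # hypothetical size of one imaging study
    for mbps in (1000, 100):  # 1 Gbps vs 100 Mbps
        print(f"{mbps:>5} Mbps: {transfer_seconds(study_mb, mbps):.0f} s per study")
    print(f"50 TB lasts about {days_until_full(50, 200, study_mb):.0f} days "
          "at 200 studies/day")
```

At these assumed values, a 500 MB study takes about 4 seconds at 1 Gbps versus 40 seconds at 100 Mbps, and 50 TB fills in roughly 500 days, which is why the storage tier needs a path to larger capacity.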

Server specifications vary by application type, but most AI systems require dedicated GPU resources for machine learning processing. NVIDIA Tesla V100 or A100 GPUs provide strong performance for medical imaging analysis, while CPU-intensive applications benefit from Intel Xeon or AMD EPYC processors with a minimum of 32 cores and 128 GB of RAM per server node.
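A simple way to apply those minimums when evaluating hardware is to check each candidate node against them. This is a minimal sketch; the node names and specifications are hypothetical, and the thresholds are the ones stated in the paragraph above:

```python
# Check candidate server nodes against stated minimums
# (32 CPU cores, 128 GB RAM, and a GPU for ML workloads).
# The node names and specs below are hypothetical.

from dataclasses import dataclass

MIN_CORES = 32
MIN_RAM_GB = 128


@dataclass
class Node:
    name: str
    cpu_cores: int
    ram_gb: int
    gpus: int


def shortfalls(node: Node, needs_gpu: bool = True) -> list[str]:
    """Return reasons a node misses the minimums (empty list if it passes)."""
    problems = []
    if node.cpu_cores < MIN_CORES:
        problems.append(f"{node.cpu_cores} cores < {MIN_CORES}")
    if node.ram_gb < MIN_RAM_GB:
        problems.append(f"{node.ram_gb} GB RAM < {MIN_RAM_GB} GB")
    if needs_gpu and node.gpus == 0:
        problems.append("no GPU for ML processing")
    return problems


if __name__ == "__main__":
    for node in (Node("imaging-01", 64, 512, 4), Node("legacy-07", 16, 64, 0)):
        issues = shortfalls(node)
        print(node.name, "OK" if not issues else "; ".join(issues))
```

Passing `needs_gpu=False` covers CPU-only roles such as the CPU-intensive applications the paragraph mentions.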

Data Integration and Interoperability

AI systems must seamlessly integrate with existing Electronic Health Record (EHR) platforms, Picture Archiving and Communication Systems (PACS), and Laboratory Information Systems (LIS). HL7 FHIR (Fast Healthcare Interoperability Resources) compliance ensures standardized data exchange between systems, while DICOM (Digital Imaging and Communications in Medicine) standards govern medical imaging data handling.
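For a sense of what FHIR-based exchange looks like, here is a minimal FHIR R4 Observation resource for a heart-rate reading, built with Python's standard library. The structure follows the FHIR Observation resource and uses LOINC code 8867-4 (heart rate); the patient ID, timestamp, and value are hypothetical, and no server call is made:

```python
# Minimal FHIR R4 Observation (heart rate) built as plain JSON.
# The patient reference and values are hypothetical; nothing is sent anywhere.

import json


def heart_rate_observation(patient_id: str, bpm: float, when_iso: str) -> dict:
    """Build a vital-signs Observation for one heart-rate measurement."""
    return {
        "resourceType": "Observation",
        "status": "final",
        "category": [{
            "coding": [{
                "system": "http://terminology.hl7.org/CodeSystem/observation-category",
                "code": "vital-signs",
                "display": "Vital Signs",
            }]
        }],
        "code": {
            "coding": [{
                "system": "http://loinc.org",
                "code": "8867-4",
                "display": "Heart rate",
            }]
        },
        "subject": {"reference": f"Patient/{patient_id}"},
        "effectiveDateTime": when_iso,
        "valueQuantity": {
            "value": bpm,
            "unit": "beats/minute",
            "system": "http://unitsofmeasure.org",
            "code": "/min",
        },
    }


if __name__ == "__main__":
    obs = heart_rate_observation("example-123", 88, "2024-05-01T08:30:00Z")
    print(json.dumps(obs, indent=2))
```

In a live integration this JSON would be sent to the EHR's FHIR endpoint with a `POST` to `[base]/Observation`; authentication and error handling are out of scope for this sketch.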

Database requirements include support for both structured and unstructured data formats, with MongoDB or PostgreSQL recommended for clinical data storage and Apache Kafka for real-time data streaming. Data lakes built on Hadoop or Apache Spark frameworks provide the flexibility needed for advanced analytics and machine learning model training.

Security and Compliance Specifications

Healthcare AI implementations must meet stringent security requirements including HIPAA compliance, SOC 2 Type II certification, and FDA approval where applicable. Encryption standards require AES-256 for data at rest and TLS 1.3 for data in transit. Multi-factor authentication, role-based access controls, and comprehensive audit logging are mandatory components.
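The in-transit requirement can be enforced in application code. The sketch below uses only Python's standard `ssl` module to build a client context that verifies certificates and refuses anything older than TLS 1.3; the endpoint name is hypothetical, and encryption at rest (AES-256) is normally handled by the storage layer or a dedicated library and is not shown:

```python
# Enforce TLS 1.3 for outbound connections from an AI service, using only
# Python's standard ssl module. The host name below is hypothetical.

import socket
import ssl


def tls13_client_context() -> ssl.SSLContext:
    """Client context that verifies certificates and refuses anything below TLS 1.3."""
    ctx = ssl.create_default_context()  # certificate and hostname checks enabled
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    return ctx


def negotiated_version(host: str, port: int = 443) -> str:
    """Connect to host and report the negotiated protocol version."""
    ctx = tls13_client_context()
    with socket.create_connection((host, port), timeout=5) as raw:
        with ctx.wrap_socket(raw, server_hostname=host) as tls:
            return tls.version()


if __name__ == "__main__":
    ctx = tls13_client_context()
    print(ctx.minimum_version, ctx.verify_mode, ctx.check_hostname)
    # negotiated_version("fhir.hospital.example")  # hypothetical endpoint
```

A handshake with a server that only supports older protocol versions fails with an `ssl.SSLError` instead of silently downgrading.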

Network segmentation isolates AI systems from general hospital networks using dedicated VLANs and firewall configurations. Regular penetration testing and vulnerability assessments maintain the security posture, while backup and disaster recovery systems help meet 99.99% uptime requirements.
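An availability target translates directly into a downtime budget: allowed downtime = (1 − availability) × period. A minimal sketch, assuming a 365-day year and no maintenance-window exclusions; at 99.99%, the budget is about 52.6 minutes per year:

```python
# Downtime allowed by an availability target over a period.
#   allowed_downtime = (1 - availability) * period
# Assumes a 365-day year and ignores planned-maintenance exclusions.

MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600


def allowed_downtime_minutes(availability: float,
                             period_minutes: int = MINUTES_PER_YEAR) -> float:
    """Minutes of downtime permitted in the period at the given availability."""
    return (1 - availability) * period_minutes


if __name__ == "__main__":
    for target in (0.999, 0.9999, 0.99999):
        yearly = allowed_downtime_minutes(target)
        monthly = allowed_downtime_minutes(target, 30 * 24 * 60)
        print(f"{target:.3%}: {yearly:7.2f} min/year, {monthly:6.2f} min per 30 days")
```

Each extra "nine" cuts the budget tenfold, which is why backup and failover design matter as much as hardware reliability.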

Step-by-Step Implementation Framework

Phase 1: Assessment and Planning (Months 1–3)

The implementation journey begins with a comprehensive assessment of current hospital infrastructure, workflow analysis, and stakeholder alignment. Form a cross-functional implementation team including IT leadership, clinical champions, department heads, and external AI consultants. Conduct a thorough evaluation of existing systems, identifying integration points and potential bottlenecks.

Develop detailed project timelines, budget allocations, and success metrics. Establish clear governance structures with defined roles and responsibilities for each team member. Create communication plans to keep all stakeholders informed throughout the implementation process.

Phase 2: Infrastructure Preparation (Months 2–4)

Upgrade network infrastructure to support AI workloads, including bandwidth expansion and latency optimization. Install required server hardware and configure GPU clusters for machine learning processing. Implement security measures including network segmentation, access controls, and monitoring systems.

Establish data integration pipelines connecting AI systems with existing EHR, PACS, and laboratory systems. Configure backup and disaster recovery solutions ensuring minimal downtime during transition periods. Test all infrastructure components thoroughly before proceeding to software deployment.

Phase 3: Software Deployment and Configuration (Months 4–6)

Deploy AI software platforms in staged environments, beginning with development and testing systems before production rollout. Configure algorithms and machine learning models for specific hospital use cases and patient populations. Integrate AI tools with clinical workflows, ensuring seamless user experiences for medical staff.

Conduct extensive testing including functionality verification, performance benchmarking, and security validation. Train IT support staff on system administration, troubleshooting procedures, and ongoing maintenance requirements. Establish monitoring and alerting systems to track system performance and identify potential issues.

Phase 4: Clinical Integration and Training (Months 5–7)

Develop comprehensive training programs for clinical staff, tailored to specific roles and responsibilities. Create user documentation, quick reference guides, and video tutorials covering common use cases and troubleshooting procedures. Implement change management strategies to encourage adoption and address resistance to new technologies.

Begin pilot programs with select departments or use cases, gradually expanding scope as confidence and competency grow. Establish feedback mechanisms allowing clinical staff to report issues, suggest improvements, and share success stories. Monitor usage patterns and user satisfaction metrics to guide optimization efforts.

Phase 5: Optimization and Scaling (Months 6–12)

Analyze performance data and user feedback to identify optimization opportunities. Fine-tune algorithms and workflows based on real-world usage patterns and clinical outcomes. Expand AI implementation to additional departments and use cases following proven success patterns.

Develop long-term maintenance and upgrade strategies ensuring continued system effectiveness. Establish partnerships with AI vendors for ongoing support, feature updates, and technology evolution. Create internal capabilities for algorithm customization and performance monitoring.

Regulatory Compliance and Quality Assurance

Healthcare AI implementations must navigate complex regulatory landscapes, including FDA clearance or approval processes for diagnostic AI tools, HIPAA compliance for patient data protection, and Joint Commission standards for patient safety. Establish quality management systems documenting all validation procedures, performance metrics, and clinical outcomes.

Implement robust testing protocols including algorithm validation on diverse patient populations, bias detection and mitigation strategies, and ongoing performance monitoring. Create audit trails documenting all AI decisions and recommendations for regulatory review and clinical accountability.
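One simple form of the bias check described above is comparing sensitivity across patient subgroups. This is a minimal sketch, assuming each validation record is a `(subgroup, y_true, y_pred)` tuple with 1 meaning the condition is present; the function names and the 5-point gap tolerance are hypothetical choices, and a real validation plan would add other metrics and confidence intervals.

```python
from collections import defaultdict


def subgroup_sensitivity(records):
    """Sensitivity (true positive rate) per subgroup from (subgroup, y_true, y_pred) tuples."""
    tp = defaultdict(int)
    fn = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:  # only patients who actually have the condition count toward sensitivity
            if y_pred == 1:
                tp[group] += 1
            else:
                fn[group] += 1
    groups = set(tp) | set(fn)
    return {g: tp[g] / (tp[g] + fn[g]) for g in groups}


def flag_gaps(sensitivity, max_gap=0.05):
    """Return subgroups whose sensitivity trails the best subgroup by more than max_gap."""
    best = max(sensitivity.values())
    return sorted(g for g, s in sensitivity.items() if best - s > max_gap)
```

Flagged subgroups are candidates for closer review or additional training data, not automatic proof of bias.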

Cost Analysis and Return on Investment

AI implementation costs vary significantly based on scope and complexity, with typical hospital projects ranging from $500,000 to $5 million for comprehensive deployments. Infrastructure costs including servers, storage, and networking typically represent 30–40% of total project budgets, while software licensing and professional services account for the remainder.

Expected returns include reduced diagnostic errors, improved operational efficiency, decreased length of stay, and enhanced staff productivity. Quantifiable benefits often justify implementation costs within 18–24 months, with long-term savings continuing to accumulate as AI capabilities expand and mature.
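The payback claim can be checked with simple arithmetic. This sketch assumes a flat monthly benefit and models annual maintenance as a fixed fraction of the initial cost (the FAQ below cites 15–25%). It ignores discounting and ramp-up, and every figure in the example is hypothetical.

```python
def payback_months(initial_cost, monthly_benefit, annual_maintenance_rate):
    """Simple (undiscounted) payback period in months.

    Maintenance is modeled as annual_maintenance_rate * initial_cost per year.
    Returns None if net monthly benefit is not positive.
    """
    monthly_maintenance = initial_cost * annual_maintenance_rate / 12
    net_monthly = monthly_benefit - monthly_maintenance
    if net_monthly <= 0:
        return None  # never pays back under these assumptions
    return initial_cost / net_monthly


if __name__ == "__main__":
    # Hypothetical: $2M project, $130k/month in benefits, 20% annual maintenance
    print(round(payback_months(2_000_000, 130_000, 0.20), 1))
```

With these hypothetical inputs, payback lands at about 20.7 months, inside the 18–24 month range. A benefit smaller than the maintenance burden never pays back.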


Frequently Asked Questions (FAQs)

1. How long does it typically take to implement AI systems in a hospital setting?

Complete AI implementation usually takes 12–18 months from initial planning to full deployment. This timeline includes infrastructure preparation, software configuration, staff training, and gradual rollout across departments. Smaller implementations focusing on specific use cases may complete in 6–9 months, while comprehensive enterprise-wide deployments can extend to 24 months or longer.

2. What are the minimum technical requirements for AI implementation in healthcare?

Minimum requirements include high-speed network connectivity (1 Gbps for imaging applications), dedicated server infrastructure with GPU support, secure data storage systems with 99.99% uptime, and integration capabilities with existing EHR and PACS systems. Most implementations require initial storage capacity of 10–50 TB and processing power equivalent to modern server-grade hardware with minimum 64 GB RAM per application.
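An uptime target such as 99.99% is easier to evaluate as a downtime budget. A minimal sketch of that arithmetic, ignoring leap years; the function name is illustrative.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes; ignores leap years


def allowed_downtime_minutes(availability: float, period_minutes: int = MINUTES_PER_YEAR) -> float:
    """Maximum downtime (in minutes) permitted over a period at a given availability."""
    return period_minutes * (1.0 - availability)


if __name__ == "__main__":
    print(allowed_downtime_minutes(0.9999))                # per year
    print(allowed_downtime_minutes(0.9999, 30 * 24 * 60))  # per 30-day month
```

At 99.99%, the budget is about 52.6 minutes per year, or about 4.3 minutes per 30-day month. Planned maintenance windows usually have to fit inside that budget unless the service agreement excludes them.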

3. How do hospitals ensure AI systems comply with HIPAA and other healthcare regulations?

Compliance requires comprehensive security measures including end-to-end encryption, access controls, audit logging, and regular security assessments. AI vendors must provide HIPAA-compliant hosting environments with signed Business Associate Agreements. Hospitals must implement data governance policies, staff training programs, and incident response procedures specifically addressing AI system risks and regulatory requirements.

4. What types of clinical staff training are necessary for AI implementation?

Training programs must address both technical system usage and clinical decision-making with AI assistance. Physicians require education on interpreting AI recommendations, understanding algorithm limitations, and maintaining clinical judgment. Nurses need training on workflow integration and alert management. IT staff require technical training on system administration, troubleshooting, and performance monitoring. Training typically requires 20–40 hours per staff member depending on their role and AI application complexity.

5. How accurate are AI diagnostic tools compared to human physicians?

AI diagnostic accuracy varies by application and clinical context. In medical imaging, AI systems often achieve accuracy rates of 85–95%, sometimes exceeding specialist performance for specific conditions, such as ophthalmologists screening for diabetic retinopathy or dermatologists detecting skin cancer. However, AI tools are designed to augment rather than replace clinical judgment, providing additional insights that physicians can incorporate into their diagnostic decision-making process.
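A single accuracy figure can hide poor detection when a condition is rare, which is why sensitivity and specificity are usually reported alongside it. This sketch computes all three from a confusion matrix. The counts in the example are hypothetical.

```python
def diagnostic_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Accuracy, sensitivity, and specificity from confusion-matrix counts."""
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,     # share of all cases classified correctly
        "sensitivity": tp / (tp + fn),     # share of true cases the tool catches
        "specificity": tn / (tn + fp),     # share of healthy cases correctly cleared
    }


if __name__ == "__main__":
    # Hypothetical screen of 1,000 patients, 50 of whom have the condition
    print(diagnostic_metrics(tp=25, fp=20, tn=930, fn=25))
```

In this hypothetical screen, the tool is 95.5% accurate yet catches only half of the true cases (sensitivity 0.5, specificity about 0.979). This is why an accuracy rate alone says little about clinical usefulness.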

6. What ongoing maintenance and support do AI systems require?

AI systems require continuous monitoring of performance metrics, regular algorithm updates, periodic retraining with new data, and ongoing technical support. Hospitals typically allocate 15–25% of initial implementation costs annually for maintenance, including software updates, hardware refresh cycles, staff training, and vendor support services. Internal IT teams need specialized training to manage AI infrastructure and troubleshoot common issues.

7. How do AI systems integrate with existing hospital IT infrastructure?

Modern AI platforms use standard healthcare interoperability protocols including HL7 FHIR and DICOM to integrate with EHR systems, PACS, and laboratory information systems. Integration typically requires API development, data mapping, and workflow configuration to ensure seamless information exchange. Most implementations use middleware solutions to manage data flow between AI systems and existing hospital applications.
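As an illustration of the data-mapping step, here is a minimal sketch that flattens an HL7 FHIR R4 `Observation` resource into a row an AI pipeline could consume. The field names (`resourceType`, `code.coding`, `subject.reference`, `valueQuantity`, `effectiveDateTime`) come from the FHIR Observation resource. The function name, the output keys, and the choice to keep only the first coding are assumptions for illustration.

```python
import json


def flatten_observation(resource: dict) -> dict:
    """Map a FHIR R4 Observation (parsed JSON) to a flat record (hypothetical output schema)."""
    if resource.get("resourceType") != "Observation":
        raise ValueError("expected a FHIR Observation resource")
    # Assumption: the first coding is the one the pipeline cares about
    coding = (resource.get("code", {}).get("coding") or [{}])[0]
    quantity = resource.get("valueQuantity", {})
    return {
        "patient": resource.get("subject", {}).get("reference"),
        "code_system": coding.get("system"),
        "code": coding.get("code"),
        "display": coding.get("display"),
        "value": quantity.get("value"),
        "unit": quantity.get("unit"),
        "effective": resource.get("effectiveDateTime"),
        "status": resource.get("status"),
    }


# Example payload shaped like a FHIR server response (values are illustrative)
SAMPLE = """{
  "resourceType": "Observation",
  "status": "final",
  "code": {"coding": [{"system": "http://loinc.org", "code": "2345-7",
                       "display": "Glucose [Mass/volume] in Serum or Plasma"}]},
  "subject": {"reference": "Patient/example"},
  "effectiveDateTime": "2024-01-15T08:30:00Z",
  "valueQuantity": {"value": 95, "unit": "mg/dL"}
}"""

if __name__ == "__main__":
    print(flatten_observation(json.loads(SAMPLE)))
```

In practice this mapping layer is what middleware typically handles, so that downstream AI components never depend on the raw shape of each source system.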

8. What are the potential risks and how can hospitals mitigate them?

Primary risks include algorithm bias, system failures, data security breaches, and over-reliance on AI recommendations. Mitigation strategies include diverse training data sets, robust testing procedures, comprehensive backup systems, cybersecurity measures, and continuous staff education on AI limitations. Hospitals should maintain clinical oversight protocols ensuring human physicians retain ultimate decision-making authority.

9. How do hospitals measure ROI and success of AI implementations?

Success metrics include clinical outcomes (reduced diagnostic errors, improved patient safety), operational efficiency (decreased processing time, staff productivity gains), and financial impact (cost savings, revenue enhancement). Hospitals typically track key performance indicators including diagnostic accuracy rates, workflow efficiency improvements, patient satisfaction scores, and quantifiable cost reductions. ROI calculations should include both direct cost savings and indirect benefits like improved staff satisfaction and reduced liability risks.

10. Can smaller hospitals implement AI, or is it only feasible for large health systems?

AI implementation is increasingly accessible to hospitals of all sizes through cloud-based solutions, software-as-a-service models, and vendor partnerships. Smaller hospitals can focus on specific high-impact applications like radiology AI or clinical decision support rather than comprehensive enterprise deployments. Cloud platforms reduce infrastructure requirements and upfront costs, making AI adoption feasible for hospitals with 100–300 beds. Many vendors offer scaled pricing models and implementation support specifically designed for smaller healthcare organizations.


Conclusion: Preparing for the Future of Healthcare

AI implementation in hospitals represents a strategic investment in improved patient care, operational efficiency, and competitive positioning. Success requires careful planning, adequate resources, and sustained commitment from leadership and clinical staff. Hospitals that approach AI implementation systematically, with proper attention to technical requirements, regulatory compliance, and change management, will realize significant benefits in patient outcomes and organizational performance.

The healthcare industry’s AI adoption will continue accelerating, making early implementation a competitive advantage. Hospitals beginning their AI journey today position themselves to leverage increasingly sophisticated technologies as they become available, building internal capabilities and organizational readiness for the future of healthcare delivery.

As AI technologies mature and regulatory frameworks evolve, hospitals with established AI programs will be better positioned to adapt and innovate. The investment in AI implementation today creates a foundation for continuous improvement and technological advancement that will benefit patients, staff, and healthcare organizations for years to come.


4th Gen AMD EPYC vs Competitors Cloud Performance

4th Gen AMD EPYC Processors

Amazon EC2 M7a instances support an even broader range of workloads on AWS and provide up to 50% more compute performance than M6a instances.

When compared to Amazon EC2 Hpc6a instances, 4th Gen AMD EPYC processor-powered Amazon EC2 Hpc7a instances offer up to 2.5x the performance.

AMD today announced the general availability of Amazon Elastic Compute Cloud (EC2) M7a and Amazon EC2 Hpc7a instances. They deliver next-generation performance and efficiency for, respectively, applications that benefit from high performance and high throughput, and tightly coupled HPC workloads.

“4th Gen EPYC processor-powered Amazon EC2 instances deliver a differentiating offering for customers with increasingly complex and compute-intensive workloads,” said David Brown, vice president of Amazon EC2 at AWS. “Combined with the strength of the AWS Nitro System, both M7a and Hpc7a instances enable fast, low-latency internode communications, enhancing what our customers can accomplish across our expanding family of Amazon EC2 instances.”

“4th Gen EPYC processors have unmatched performance leadership, and our collaboration with AWS is delivering the full 4th Gen EPYC performance per core in the new M7a and Hpc7a instances,” said Dan McNamara, senior vice president and general manager of AMD’s Server Business Unit. “As a result, our customers can optimize their businesses while doing more work with fewer resources across a variety of workloads.”

AMD and AWS expand EC2 with improved performance and new applications

The Amazon EC2 M7a instances, first showcased at this year’s AMD Data Center and AI Technology Premiere, support a variety of workloads and offer customers up to 50% more compute performance than Amazon EC2 M6a instances. They also introduce new processor capabilities such as AVX-512, VNNI, and BFloat16. Beyond strong general-purpose performance, M7a instances are excellent for applications that benefit from high performance and high throughput, such as financial applications, simulation modeling, gaming, and mid-sized data stores.

Amazon EC2 Hpc7a instances are intended for tightly coupled high performance computing workloads and deliver up to 2.5 times the performance of Amazon EC2 Hpc6a instances. They give AWS customers more compute, memory, and network performance to meet the demands of growing workload complexity. With 300 Gb/s of EFA network bandwidth, Hpc7a instances are well suited to compute-intensive, latency-sensitive workloads such as computational fluid dynamics, weather forecasting, molecular dynamics, and computational chemistry. They also include next-generation server technology such as DDR5 memory, which offers 50% more memory bandwidth than DDR4 for high-speed access to data in memory.
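The 50% memory-bandwidth figure matches a per-channel comparison of peak theoretical bandwidth. This sketch assumes DDR5-4800 versus DDR4-3200, the top supported speeds of 4th and 3rd Gen EPYC respectively, and a 64-bit (8-byte) data path per channel with ECC bits excluded. It computes peak theoretical bandwidth, not measured throughput.

```python
BYTES_PER_TRANSFER = 8  # 64-bit data path per DIMM channel; ECC bits excluded


def peak_channel_bandwidth_gbs(mega_transfers_per_s: int) -> float:
    """Peak theoretical bandwidth of one memory channel in GB/s (decimal units)."""
    return mega_transfers_per_s * BYTES_PER_TRANSFER / 1000


if __name__ == "__main__":
    ddr5 = peak_channel_bandwidth_gbs(4800)  # DDR5-4800
    ddr4 = peak_channel_bandwidth_gbs(3200)  # DDR4-3200
    print(ddr5, ddr4, ddr5 / ddr4)
```

This gives 38.4 GB/s versus 25.6 GB/s per channel, a 1.5x ratio. 4th Gen EPYC also has more memory channels per socket than its predecessor, so per-socket figures differ from this per-channel comparison.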

“DTN deploys a suite of weather data and models that deliver sophisticated, high-resolution outputs and require continual processing of vast amounts of data from inputs across the globe,” said Lars Ewe, Chief Product and Technology Officer, DTN. “These models deliver high-level operational intelligence for weather-dependent industries, and thanks to our partnership with AWS, we are able to provide our customers with the most recent weather data, which powers those analytical engines. We are eager to learn how the upcoming Amazon EC2 Hpc7a instances may help us fulfill our goal of giving customers the insights they require at the precise moment they need them.”

AMD and AWS began collaborating in 2018, and AWS now offers more than 100 AMD EPYC processor-based instances for general-purpose, compute-optimized, memory-optimized, and high-performance computing workloads. Customers such as DTN, Sprinklr, and TrueCar have achieved significant cost and cloud-utilization optimizations with AMD-based Amazon EC2 instances. The new instances let AWS users continue to benefit from the performance, scalability, and efficiency of AMD EPYC processors.


Are AMD’s latest AI advancements about to shake up the industry and challenge NVIDIA’s dominance? With next-gen Instinct MI500 accelerators and EPYC “Verano” CPUs, AMD is preparing to land a powerful punch in rack-scale AI. At the recent Advancing AI event, AMD unveiled plans for an architecture that targets performance parity with NVIDIA’s Vera Rubin lineup. Built on TSMC's N2P process with advanced packaging such as CoWoS-L, these accelerators promise a significant leap in AI compute capacity. AMD's push into rack-scale AI, including its new Helios racks, signals a direct challenge to NVIDIA’s market leadership. For data centers and enterprise AI deployments, this could be a game-changer, with an emphasis on energy efficiency and high-end processing. AMD’s commitment to an annual product cadence gives businesses looking to expand their AI capabilities a predictable roadmap. Are you ready for AMD’s next big leap in AI hardware? Will they be able to match or surpass NVIDIA’s performance? Explore more about these innovative products at GroovyComputers.ca, your trusted partner for custom computer builds. What do you think? Will AMD’s fresh approach redefine the AI hardware race? Share your thoughts below! 🚀 #AIHardware #DataCenterSolutions #NvidiaVsAMD #AIInnovation #TechNews #CustomComputers #GroovyComputers #NextGenAI #RackScaleAI #InstinctMI500 #EPYCVerano #AIProcessing


Text

AMD EPYC 9654 Dedicated Server: Step-by-Step Guide

Selecting the right server hardware can make or break your operational efficiency. Enter the AMD EPYC 9654, a top-tier, enterprise-grade processor designed to deliver exceptional performance, scalability, and energy efficiency. An EPYC 9654 dedicated server is a strong fit for companies handling demanding workloads such as cloud computing, virtualization, AI/ML, or large-scale databases.…
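A common first step after provisioning a dedicated server is confirming that you actually received the CPU you are paying for. A minimal sketch for Linux, assuming `/proc/cpuinfo` is available; the helper name is hypothetical. On a single-socket EPYC 9654 (96 cores) with SMT enabled, you would expect 192 logical CPUs.

```python
import os


def summarize_cpuinfo(text: str) -> dict:
    """Count logical CPUs and collect distinct model names from /proc/cpuinfo text."""
    models = set()
    logical = 0
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if not sep:
            continue  # blank separator lines between processor blocks
        key = key.strip()
        if key == "processor":
            logical += 1
        elif key == "model name":
            models.add(value.strip())
    return {"logical_cpus": logical, "models": sorted(models)}


if __name__ == "__main__" and os.path.exists("/proc/cpuinfo"):
    with open("/proc/cpuinfo") as f:
        print(summarize_cpuinfo(f.read()))
```

A dual-socket system would report twice as many logical CPUs. SMT being disabled in firmware would halve the count.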


HPE Servers' Performance in Data Centers

HPE servers are widely regarded as high-performing, reliable, and well-suited for enterprise data center environments, consistently ranking among the top vendors globally. Here’s a breakdown of their performance across key dimensions:

1. Reliability & Stability (RAS Features)

Mission-Critical Uptime: HPE ProLiant (Gen10/Gen11), Synergy, and Integrity servers incorporate robust RAS (Reliability, Availability, Serviceability) features:

iLO (Integrated Lights-Out): Advanced remote management for monitoring, diagnostics, and repairs.

Smart Array Controllers: Hardware RAID with cache protection against power loss.

Silicon Root of Trust: Hardware-enforced security against firmware tampering.

Predictive analytics via HPE InfoSight for preemptive failure detection.

Result: High MTBF (Mean Time Between Failures) and minimal unplanned downtime.
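iLO exposes the DMTF Redfish REST API, so health data can also be pulled programmatically. The sketch below assumes the system resource lives at `/redfish/v1/Systems/1` (common on iLO, but enumerate `/redfish/v1/Systems` to confirm) and that HTTP Basic authentication is enabled. The properties it reads (`Status.Health`, `PowerState`, `ProcessorSummary.Count`, `MemorySummary.TotalSystemMemoryGiB`) are standard Redfish `ComputerSystem` fields. The helper names are hypothetical.

```python
import base64
import json
import urllib.request


def fetch_system(host: str, user: str, password: str, context=None, path: str = "/redfish/v1/Systems/1") -> dict:
    """GET a Redfish ComputerSystem resource from a BMC such as iLO.

    iLO often ships with a self-signed certificate; pass an ssl.SSLContext
    that trusts it rather than disabling verification.
    """
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    req = urllib.request.Request(
        f"https://{host}{path}",
        headers={"Authorization": f"Basic {token}", "Accept": "application/json"},
    )
    with urllib.request.urlopen(req, context=context, timeout=10) as resp:
        return json.load(resp)


def summarize_health(system: dict) -> dict:
    """Pick out the fields an operator usually checks first."""
    status = system.get("Status", {})
    return {
        "model": system.get("Model"),
        "power": system.get("PowerState"),
        "health": status.get("Health"),
        "cpus": system.get("ProcessorSummary", {}).get("Count"),
        "memory_gib": system.get("MemorySummary", {}).get("TotalSystemMemoryGiB"),
        # Redfish Health is "OK", "Warning", or "Critical"; anything but OK deserves a look
        "needs_attention": status.get("Health") != "OK",
    }
```

Usage would look like `summarize_health(fetch_system("ilo.example.internal", "monitor", "secret"))`, with a hypothetical hostname and credentials. Splitting fetching from parsing keeps the parsing logic testable without a live BMC.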

2. Performance & Scalability

Latest Hardware: Support for the newest Intel Xeon Scalable & AMD EPYC CPUs, DDR5 memory, PCIe 5.0, and high-speed NVMe storage.

Workload-Optimized:

ProLiant DL/ML: Versatile for virtualization, databases, and HCI.

Synergy: Composable infrastructure for dynamic resource pooling.

Apollo: High-density compute for HPC/AI.

Scalability: Modular designs (e.g., Synergy frames) allow scaling compute/storage independently.

3. Management & Automation

HPE OneView: Unified infrastructure management for servers, storage, and networking (automates provisioning, updates, and compliance).

Cloud Integration: Native tools for hybrid cloud (e.g., HPE GreenLake) and APIs for Terraform/Ansible.

HPE InfoSight: AI-driven analytics for optimizing performance and predicting issues.

4. Energy Efficiency & Cooling

Silent Smart Cooling: Dynamic fan control tuned for variable workloads.

Thermal Design: Optimized airflow (e.g., HPE Apollo 4000 supports direct liquid cooling).

Energy Star Certifications: ProLiant servers often exceed efficiency standards, reducing power/cooling costs.

5. Security

Firmware Integrity: Silicon Root of Trust ensures secure boot.

Cyber Resilience: Runtime intrusion detection, encrypted memory (AMD SEV-SNP, Intel SGX), and secure erase.

Zero Trust Architecture: Integrated with HPE Aruba networking for end-to-end security.

6. Hybrid Cloud & Edge Integration

HPE GreenLake: Consumption-based "as-a-service" model for on-premises data centers.

Edge Solutions: Compact servers (e.g., Edgeline EL8000) for rugged/remote deployments.

7. Support & Services

HPE Pointnext: Proactive 24/7 support, certified spare parts, and global service coverage.

Firmware/Driver Ecosystem: Regular updates with long-term lifecycle support.

Ideal Use Cases

Enterprise Virtualization: VMware/Hyper-V clusters on ProLiant.

Hybrid Cloud: GreenLake-managed private/hybrid environments.

AI/HPC: Apollo systems for GPU-heavy workloads.

SAP/Oracle: Mission-critical applications on Superdome Flex.

Considerations & Challenges

Cost: Premium pricing vs. white-box/ODM alternatives.

Complexity: Advanced features (e.g., Synergy/OneView) require training.

Ecosystem Lock-in: Best with HPE storage/networking for full integration.

Competitive Positioning

vs Dell PowerEdge: Comparable performance; HPE leads in composable infrastructure (Synergy) and AI-driven ops (InfoSight).

vs Cisco UCS: UCS excels in unified networking; HPE offers broader edge-to-cloud portfolio.

vs Lenovo ThinkSystem: Similar RAS; HPE has stronger hybrid cloud services (GreenLake).

Summary: HPE Server Strengths in Data Centers

Reliability: Industry-leading RAS + iLO management.

Automation: AI-driven ops (InfoSight) + composability (Synergy).

Efficiency: Energy-optimized designs + liquid cooling support.

Security: End-to-end Zero Trust + firmware hardening.

Hybrid Cloud: GreenLake consumption model + consistent API-driven management.

Bottom Line: HPE servers excel in demanding, large-scale data centers prioritizing stability, automation, and hybrid cloud flexibility. While priced at a premium, their RAS capabilities, management ecosystem, and global support justify the investment for enterprises with critical workloads. For SMBs or hyperscale web-tier deployments, cost may drive consideration of alternatives.


Cheap Dedicated Server in Germany: The Best Hosting Offers for 2025

For businesses and individuals seeking a robust online presence, reliable and affordable dedicated servers are crucial. Germany, known for its high-quality infrastructure and data centers, is an ideal location for hosting services.

The demand for cheap dedicated server solutions has increased as users look for cost-effective ways to enhance their online capabilities without compromising on performance.

This article will explore the best dedicated server hosting offers available in Germany for 2025, providing insights into the benefits and options that can cater to various needs.

By examining the top hosting providers, readers will gain a comprehensive understanding of what to expect from these services and make informed decisions.

Why Choose a Dedicated Server in Germany

Germany has emerged as a hub for dedicated server hosting, offering a unique blend of strategic location, robust infrastructure, and stringent data protection laws. This combination makes it an attractive option for businesses worldwide, particularly those in the US, looking to expand their online presence.

Strategic Location and Network Infrastructure

Germany's central location in Europe provides low latency and high connectivity, making it an ideal hub for businesses targeting the European market. The country's advanced network infrastructure ensures reliable and fast data transfer, enhancing overall performance. With major internet exchange points such as DE-CIX in Frankfurt and a well-developed internet backbone, Germany offers a robust connectivity framework.

German Data Protection and Security Standards

Germany is renowned for its stringent data protection laws, providing a secure environment for businesses to host their data. The country's commitment to data privacy and security is reflected in its rigorous regulations, ensuring that businesses operating in Germany adhere to high standards of data handling and storage.

Performance Benefits for US-Based Businesses

For US-based businesses, hosting a dedicated server in Germany can significantly enhance their online presence in the European market. The proximity to major European cities reduces latency, improving user experience and potentially boosting conversion rates. Moreover, the robust infrastructure ensures high uptime and reliability, critical factors for businesses aiming to maintain a competitive edge.
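Physical distance puts a hard floor under latency, which is why a server in Germany helps European users. This sketch estimates the minimum round-trip time over fiber, assuming light travels at roughly 200 km per millisecond in glass (about two-thirds of c) and a straight great-circle path. Real routes are longer and add switching delay, so measured RTTs will be higher. City coordinates are approximate.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0
FIBER_KM_PER_MS = 200.0  # assumption: ~2/3 of the speed of light in vacuum


def great_circle_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Haversine distance between two points given in decimal degrees."""
    phi1, phi2 = radians(lat1), radians(lat2)
    dphi = phi2 - phi1
    dlmb = radians(lon2 - lon1)
    a = sin(dphi / 2) ** 2 + cos(phi1) * cos(phi2) * sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


def min_rtt_ms(distance_km: float) -> float:
    """Lower bound on round-trip time: out and back at fiber propagation speed."""
    return 2 * distance_km / FIBER_KM_PER_MS


if __name__ == "__main__":
    frankfurt, paris, new_york = (50.11, 8.68), (48.86, 2.35), (40.71, -74.01)
    for name, city in (("Paris", paris), ("New York", new_york)):
        d = great_circle_km(*frankfurt, *city)
        print(f"Frankfurt-{name}: {d:.0f} km, RTT >= {min_rtt_ms(d):.1f} ms")
```

This puts Frankfurt to Paris at roughly 480 km (RTT floor near 5 ms) and Frankfurt to New York at roughly 6,200 km (RTT floor near 62 ms). A European visitor served from the US pays that transatlantic floor on every round trip.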

Best Cheap Dedicated Server Hosting Offers in Germany for 2025

For businesses seeking a cheap dedicated server in Germany, several top-tier hosting providers stand out in 2025. Germany's strategic location in Europe, coupled with its robust infrastructure, makes it an ideal hub for dedicated server hosting.

Hetzner Online GmbH

Hetzner Online GmbH is a renowned German hosting provider known for its competitive pricing and high-performance dedicated servers. With a strong focus on customer satisfaction, Hetzner offers a range of plans tailored to different business needs.

Pricing and Specifications

Hetzner's dedicated server plans start at competitive prices, with options for Intel Xeon and AMD EPYC processors, up to 128 GB of RAM, and various storage configurations including SSD and NVMe.

Performance Highlights

Hetzner's infrastructure is optimized for high performance, with low latency and high availability. Their dedicated servers are housed in secure, ISO-certified data centers in Germany.

Contabo

Contabo is another prominent player in the German hosting market, offering a range of dedicated server solutions at affordable prices. Their plans are designed to cater to businesses of all sizes.

Pricing and Specifications

Contabo's dedicated servers are priced competitively, with configurations that include the latest processors, generous RAM options, and a variety of storage solutions.

Performance Highlights

Contabo's dedicated servers are known for their reliability and scalability, making them suitable for businesses with growing demands.

netcup GmbH

netcup GmbH is a German hosting provider that has gained a reputation for its affordable and high-quality dedicated server hosting services. They offer a range of plans with flexible configurations.

Pricing and Specifications

netcup's dedicated server plans are competitively priced, with options for various processors, RAM sizes, and storage types, including SSD storage for faster performance.

Performance Highlights

netcup's infrastructure is designed for high performance and security, with dedicated servers located in Germany.

OVHcloud Germany

OVHcloud Germany, part of the global OVHcloud group, offers a comprehensive range of dedicated server hosting solutions. Their services are designed to meet the needs of businesses worldwide.

Pricing and Specifications

OVHcloud Germany's dedicated server plans are priced competitively, with a variety of configurations available, including different processors, RAM, and storage options.

Performance Highlights

OVHcloud Germany's dedicated servers are known for their robust security features and high availability, making them a reliable choice for businesses.

When choosing a cheap dedicated server in Germany, it's essential to consider not just the price, but also the performance, security, and support offered by the hosting provider. The providers listed above are among the top dedicated server hosting offers in Germany for 2025, each with their unique strengths and offerings.
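One way to weigh price against performance, network, support, and security is a simple weighted score per euro. This is a sketch, not a recommended methodology. The weights, the 0–10 scores, and the plan names and prices are hypothetical placeholders you would replace with your own benchmarks and quotes.

```python
from dataclasses import dataclass, field

# Hypothetical weights; they sum to 1.0 so quality stays on the 0-10 scale
WEIGHTS = {"performance": 0.4, "network": 0.25, "support": 0.2, "security": 0.15}


@dataclass
class Offer:
    name: str
    monthly_price_eur: float
    scores: dict = field(default_factory=dict)  # criterion -> score from 0 to 10


def value_score(offer: Offer, weights: dict = WEIGHTS) -> float:
    """Weighted quality score divided by monthly price (higher is better value)."""
    quality = sum(weight * offer.scores[criterion] for criterion, weight in weights.items())
    return quality / offer.monthly_price_eur


def rank_offers(offers: list, weights: dict = WEIGHTS) -> list:
    """Offer names sorted from best to worst value."""
    return [o.name for o in sorted(offers, key=lambda o: value_score(o, weights), reverse=True)]


if __name__ == "__main__":
    offers = [
        Offer("Plan A", 40.0, {"performance": 7, "network": 7, "support": 7, "security": 7}),
        Offer("Plan B", 60.0, {"performance": 9, "network": 9, "support": 9, "security": 9}),
    ]
    print(rank_offers(offers))
```

Dividing by price favors the cheaper plan here even though Plan B scores higher on every criterion. If a minimum quality bar matters more than value, filter on quality first and only then compare prices.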

Conclusion

Germany's strategic location, robust network infrastructure, and stringent data protection laws make it an ideal location for businesses seeking reliable dedicated server hosting offers. As discussed, top providers like Hetzner Online GmbH, Contabo, netcup GmbH, and OVHcloud Germany offer competitive pricing and high-performance services.

When selecting a dedicated server hosting provider, consider factors such as server configuration, network quality, and customer support. By choosing a reputable provider, businesses can ensure optimal performance, security, and scalability for their online operations.

By leveraging Germany's dedicated server hosting offers, US-based businesses can enhance their online presence in the European market, improve latency, and comply with EU data protection regulations. Carefully evaluating the available options will help businesses make an informed decision and achieve their goals.

FAQ

What are the benefits of using a cheap dedicated server in Germany?

Using a cheap dedicated server in Germany offers several benefits, including a strategic location with low latency and high connectivity, robust data protection and security standards, and enhanced performance for businesses, particularly those based in the US.

How do German data protection and security standards compare to others?

German data protection and security standards are among the most stringent in the world, ensuring the safety and privacy of data. This is due to the country's robust data protection laws and regulations, which are designed to safeguard sensitive information.

What are the key factors to consider when choosing a dedicated server hosting provider in Germany?

When choosing a dedicated server hosting provider in Germany, key factors to consider include pricing, specifications, performance, and the provider's reputation. It's essential to evaluate these factors to ensure that the chosen provider meets your business needs.

What are some of the best cheap dedicated server hosting offers in Germany for 2025?

Some of the best cheap dedicated server hosting offers in Germany for 2025 are provided by Hetzner Online GmbH, Contabo, netcup GmbH, and OVHcloud Germany. These providers offer competitive pricing, robust specifications, and excellent performance.

How can a dedicated server in Germany improve the performance of my online business?

A dedicated server in Germany can improve the performance of your online business by providing a fast, reliable, and secure hosting environment. This can lead to improved website loading times, enhanced user experience, and increased search engine rankings.

Are cheap dedicated servers in Germany suitable for US-based businesses?

Yes, cheap dedicated servers in Germany can be suitable for US-based businesses. Germany's central location in Europe provides low latency and high connectivity, making it an ideal location for businesses looking to expand their online presence in the European market.
