#Annotated Datasets

Explore tagged Tumblr posts

Text

How Video Transcription Services Improve AI Training Through Annotated Datasets

Video transcription services play a crucial role in AI training by converting raw video data into structured, annotated datasets, enhancing the accuracy and performance of machine learning models.

#video transcription services#aitraining#Annotated Datasets#machine learning#ultimate sex machine#Data Collection for AI#AI Data Solutions#Video Data Annotation#Improving AI Accuracy

0 notes

Text

Image Annotation Services: Powering AI with Precision

Image annotation services play a critical role in training computer vision models by adding metadata to images. This process involves labeling objects, boundaries, and other visual elements within images, enabling machines to recognize and interpret visual data accurately. From bounding boxes and semantic segmentation to landmark and polygon annotations, these services lay the groundwork for developing AI systems used in self-driving cars, facial recognition, retail automation, and more.

High-quality image annotation requires a blend of skilled human annotators and advanced tools to ensure accuracy, consistency, and scalability. Industries such as healthcare, agriculture, and e-commerce increasingly rely on annotated image datasets to power applications like disease detection, crop monitoring, and product categorization.

At Macgence, our image annotation services combine precision, scalability, and customization. We support a wide range of annotation types tailored to specific use cases, ensuring that your AI models are trained on high-quality, well-structured data. With a commitment to quality assurance and data security, we help businesses accelerate their AI initiatives with confidence.

Whether you're building object detection algorithms or fine-tuning machine learning models, image annotation is the foundation that drives performance and accuracy—making it a vital step in any AI development pipeline.

0 notes

Text

https://justpaste.it/cg903

0 notes

Text

How to Choose the Right OCR Dataset for Your Project

Introduction:

In the realm of Artificial Intelligence and Machine Learning, Optical Character Recognition (OCR) technology is pivotal for the digitization and extraction of textual data from images, scanned documents, and various visual formats. Choosing an appropriate OCR dataset is vital to guarantee precise, efficient, and dependable text recognition for your project. Below are guidelines for selecting the most suitable OCR dataset to meet your specific requirements.

Establish Your Project Specifications

Prior to selecting an OCR Dataset, it is imperative to clearly outline the scope and goals of your project. Consider the following aspects:

What types of documents or images will be processed?

Which languages and scripts must be recognized?

What degree of accuracy and precision is necessary?

Is there a requirement for support of handwritten, printed, or mixed text formats?

What particular industries or applications (such as finance, healthcare, or logistics) does your OCR system aim to serve?

A comprehensive understanding of these specifications will assist in refining your search for the optimal dataset.

Verify Dataset Diversity

A high-quality OCR dataset should encompass a variety of samples that represent real-world discrepancies. Seek datasets that feature:

A range of fonts, sizes, and styles

Diverse document layouts and formats

Various image qualities (including noisy, blurred, and scanned documents)

Combinations of handwritten and printed text

Multi-language and multilingual datasets

Data diversity is crucial for ensuring that your OCR model generalizes effectively and maintains accuracy across various applications.

Assess Labeling Accuracy and Quality

A well-annotated dataset is critical for training a successful OCR model. Confirm that the dataset you select includes:

Accurately labeled text with bounding boxes

High fidelity in transcription and annotation

Well-organized metadata for seamless integration into your machine-learning workflow

Inadequately labeled datasets can result in inaccuracies and inefficiencies in text recognition.

Assess the Size and Scalability of the Dataset

The dimensions of the dataset are pivotal in the training of models. Although larger datasets typically produce superior outcomes, they also demand greater computational resources. Consider the following:

Whether the dataset's size is compatible with your available computational resources

If it is feasible to generate additional labeled data if necessary

The potential for future expansion of the dataset to incorporate new data variations

Striking a balance between dataset size and quality is essential for achieving optimal performance while minimizing unnecessary resource consumption.

Analyze Dataset Licensing and Costs

OCR datasets are subject to various licensing agreements—some are open-source, while others necessitate commercial licenses. Take into account:

Whether the dataset is available at no cost or requires a financial investment

Licensing limitations that could impact the deployment of your project

The cost-effectiveness of acquiring a high-quality dataset compared to developing a custom-labeled dataset

Adhering to licensing agreements is vital to prevent legal issues in the future.

Conduct Tests with Sample Data

Prior to fully committing to an OCR dataset, it is prudent to evaluate it using a small sample of your project’s data. This evaluation assists in determining:

The dataset’s applicability to your specific requirements

The effectiveness of OCR models trained with the dataset

Any potential deficiencies that may necessitate further data augmentation or preprocessing

Conducting pilot tests aids in refining dataset selections before large-scale implementation.

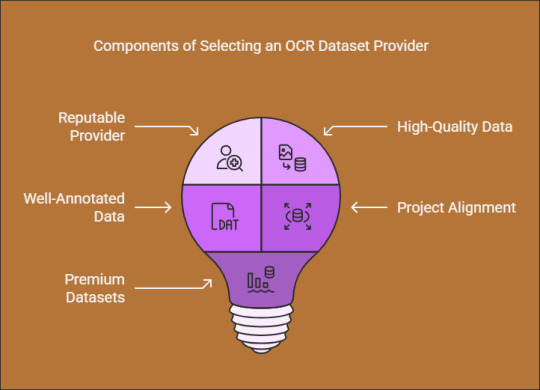

Select a Trustworthy OCR Dataset Provider

Choosing a reputable dataset provider guarantees access to high-quality, well-annotated data that aligns with your project objectives. One such provider. which offers premium OCR datasets tailored for accurate data extraction and AI model training. Explore their OCR dataset solutions for more information.

Conclusion

Selecting an appropriate OCR dataset is essential for developing a precise and effective text recognition model. By assessing the requirements of your project, ensuring a diverse dataset, verifying the accuracy of labels, and considering licensing agreements, you can identify the most fitting dataset for Globose Technology Solutions AI application. Prioritizing high-quality datasets from trusted sources will significantly improve the reliability and performance of your OCR system.

0 notes

Text

The Backbone of Machine Learning: Image Datasets Explained

Introduction

In the realm of artificial intelligence (AI) and image datasets for machine learning (ML) are the unsung heroes that power intelligent systems. These datasets, comprising labeled images, are foundational to training ML models to understand, interpret, and generate insights from visual data. Let's discuss the critical role image datasets play and why they are indispensable for AI success.

What Are Image Datasets?

Image datasets are the collections of images curated for the training, testing, and validation of machine learning models. Many of these datasets come with associated annotations or metadata that provide the context, for example, in the form of object labels, bounding boxes, or segmentation masks. This contextual information is used in supervised learning in which the ultimate goal is teaching a model how to make predictions using labeled examples.

Why Are Image Datasets Important for Machine Learning?

Training Models to Identify PatternsMachine learning models, especially deep learning models such as convolutional neural networks (CNNs), rely on large volumes of data to identify patterns and features in images. A diverse and well-annotated dataset ensures that the model can generalize effectively to new, unseen data.

Fueling Computer Vision Applications From autonomous vehicles to facial recognition systems, computer vision applications rely on large, robust image datasets. Such datasets empower machines to do tasks like object detection, image classification, and semantic segmentation.

Improving Accuracy and Reducing BiasHigh-quality datasets with diverse samples help reduce bias in machine learning models. For example, an inclusive dataset representing various demographics can improve the fairness and accuracy of facial recognition systems.

Types of Image Datasets

General Image Datasets : These are datasets of images spread across various classes. An example is ImageNet, the most significant object classification and detection benchmark.

Domain-specific datasets : These datasets are specifically designed for particular applications. Examples include: medical imagery, like ChestX-ray8, or satellite imagery, like SpaceNet.

Synthetic Datasets : Dynamically generated through either simulations or computer graphics, synthetic datasets can complement or even sometimes replace real data. This is particularly useful in niche applications where data is scarce.

Challenges in Creating Image Datasets

Data Collection : Obtaining sufficient quantities of good-quality images can be time-consuming and resource-intensive.

Annotation Complexity : Annotating images with detailed labels, bounding boxes, or masks is time-consuming and typically requires human expertise or advanced annotation tools.

Achieving Diversity : Diversity in scenarios, environments, and conditions should be achieved to ensure model robustness, but this is a challenging task.

Best Practices for Building Image Datasets

Define Clear Objectives : Understand the specific use case and requirements of your ML model to guide dataset creation.

Prioritize Quality Over Quantity : While large datasets are important, the quality and relevance of the data should take precedence.

Leverage Annotation Tools : Tools like GTS.ai’s Image and Video Annotation Services streamline the annotation process, ensuring precision and efficiency.

Regularly Update the Dataset : Continuously add new samples and annotations to improve model performance over time.

Conclusion

Image datasets have been the very backbone of machine learning, training models to perceive and learn complex visual tasks. As such, the increase in demand for intelligent systems implies that high-quality, annotated datasets are of importance. Businesses and researchers can take advantage of these tools and services, such as those offered by GTS.ai, to construct robust datasets which power next-generation AI solutions. To learn more about how GTS.ai can help with image and video annotation, visit our services page.

0 notes

Text

#off-the-shelf datasets#dataset provider#ai training data#data collection#data annotation#dataset for AI

0 notes

Text

#off-the-shelf datasets#dataset provider#ai training data#data collection#data annotation#dataset for AI

0 notes

Text

0 notes

Text

Unlock the potential of your AI projects with high-quality data. Our comprehensive data solutions provide the accuracy, relevance, and depth needed to propel your AI forward. Access meticulously curated datasets, real-time data streams, and advanced analytics to enhance machine learning models and drive innovation. Whether you’re developing cutting-edge applications or refining existing systems, our data empowers you to achieve superior results. Stay ahead of the competition with the insights and precision that only top-tier data can offer. Transform your AI initiatives and unlock new possibilities with our premium data annotation services. Propel your AI to new heights today.

0 notes

Text

Unlock the potential of your AI models with accurate video transcription services. From precise annotations to seamless data preparation, transcription is essential for scalable AI training.

#video transcription services#video transcription#video data transcription#AI Training#Data Annotation#Accurate Transcription#Dataset Quality#AI Data Preparation#Machine Learning Training#Scalable AI Solutions

0 notes

Text

Medical Datasets represent the cornerstone of healthcare innovation in the AI era. Through careful analysis and interpretation, these datasets empower healthcare professionals to deliver more accurate diagnoses, personalized treatments, and proactive interventions. At Globose Technology Solutions, we are committed to harnessing the transformative power of medical datasets, pushing the boundaries of healthcare excellence, and ushering in a future where every patient will receive the care they deserve.

#Medical Datasets#Healthcare datasets#Healthcare AI Data Collection#Data Collection in Machine Learning#data collection company#datasets#data collection#globose technology solutions#ai#technology#data annotation for ml

0 notes

Text

Annotated Text-to-Speech Datasets for Deep Learning Applications

Introduction:

Text To Speech Dataset technology has undergone significant advancements due to developments in deep learning, allowing machines to produce speech that closely resembles human voice with impressive precision. The success of any TTS system is fundamentally dependent on high-quality, annotated datasets that train models to comprehend and replicate natural-sounding speech. This article delves into the significance of annotated TTS datasets, their various applications, and how organizations can utilize them to create innovative AI solutions.

The Importance of Annotated Datasets in TTS

Annotated TTS datasets are composed of text transcripts aligned with corresponding audio recordings, along with supplementary metadata such as phonetic transcriptions, speaker identities, and prosodic information. These datasets form the essential framework for deep learning models by supplying structured, labeled data that enhances the training process. The quality and variety of these annotations play a crucial role in the model’s capability to produce realistic speech.

Essential Elements of an Annotated TTS Dataset

Text Transcriptions – Precise, time-synchronized text that corresponds to the speech audio.

Phonetic Labels – Annotations at the phoneme level to enhance pronunciation accuracy.

Speaker Information – Identifiers for datasets with multiple speakers to improve voice variety.

Prosody Features – Indicators of pitch, intonation, and stress to enhance expressiveness.

Background Noise Labels – Annotations for both clean and noisy audio samples to ensure robust model training.

Uses of Annotated TTS Datasets

The influence of annotated TTS datasets spans multiple sectors:

Virtual Assistants: AI-powered voice assistants such as Siri, Google Assistant, and Alexa depend on high-quality TTS datasets for seamless interactions.

Audiobooks & Content Narration: Automated voice synthesis is utilized in e-learning platforms and digital storytelling.

Accessibility Solutions: Screen readers designed for visually impaired users benefit from well-annotated datasets.

Customer Support Automation: AI-driven chatbots and IVR systems employ TTS to improve user experience.

Localization and Multilingual Speech Synthesis: Annotated datasets in various languages facilitate the development of global text-to-speech (TTS) applications.

Challenges in TTS Dataset Annotation

Although annotated datasets are essential, the creation of high-quality TTS datasets presents several challenges:

Data Quality and Consistency: Maintaining high standards for recordings and ensuring accurate annotations throughout extensive datasets.

Speaker Diversity: Incorporating a broad spectrum of voices, accents, and speaking styles.

Alignment and Synchronization: Accurately aligning text transcriptions with corresponding speech audio.

Scalability: Effectively annotating large datasets to support deep learning initiatives.

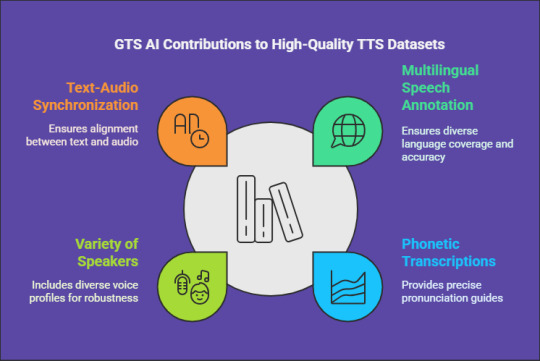

How GTS Can Assist with High-Quality Text Data Collection

For organizations and researchers in need of dependable TTS datasets, GTS AI provides extensive text data collection services. With a focus on multilingual speech annotation, GTS delivers high-quality, well-organized datasets specifically designed for deep learning applications. Their offerings guarantee precise phonetic transcriptions, a variety of speakers, and flawless synchronization between text and audio.

Conclusion

Annotated text-to-speech datasets are vital for the advancement of high-performance speech synthesis models. As deep learning Globose Technology Solutions progresses, the availability of high-quality, diverse, and meticulously annotated datasets will propel the next wave of AI-driven voice applications. Organizations and developers can utilize professional annotation services, such as those provided by GTS, to expedite their AI initiatives and enhance their TTS solutions.

0 notes

Text

#machine learning#annotation#Stock Market Datasets#Market Predictions#Risk Management#Historical Stock Market Dataset#News and Stock Data

0 notes

Text

The Way We Can Decrease Waste in the Food Industry Using Video Annotation

Global concern about waste management in the food business is significant. Waste Annotation is the process of knowing how waste is managed to a bare minimum with video and image annotations as we go further into this article. The food and beverage packaging business generates a lot of waste plastic. Waste Annotation distinguishes various food sector wastes using video and image annotations. Single-use plastic and cans are included. Once recognized, it is simple to segregate the different waste types for the various functions they can perform.

Some Stages Related To Waste Management In The Food Sector

An Image Annotation

It classifies a picture in a training model to train the computer models. The manual annotation stage of the image annotation process involves labeling the photos. They undergo additional processing through a machine learning model.

Annotation For Video

It involves using frame-by-frame labeled lines to capture a variety of visuals in a Video Annotation Service. It makes the machines’ recognition of moving things easy. Except for these, different language annotations in other countries, like Arabic Content Annotation, are used in managing the whole system.

Waste Management In The Food Sector

The main goal of this system is to reduce food waste and its effects on the environment. Food waste can be either solid or liquid, or it can be packaged.

Donation Of Food

To reach out to individuals and families in desperate need of food assistance, several humanitarian organizations and food banks employ image and video annotations to plan vehicle routes. Instead of occasionally stopping to reach these folks, GPS signals like HAIVO Annotation Data Collection for AI can be used to locate convenient distribution spots. Additionally, specific areas where there is a food crisis, or residents have trouble getting adequate food are captured in satellite photos.

Composting

It is a process where organic waste, including food, gets broken down into smaller forms by microorganisms like fungi and bacteria. Additionally, they can be used as fertilizer in the soil to promote plant growth. Convolutional neural networks are a Video Annotation Tool used in waste classification to create models for classifying trash-related images. After trash photographs have been annotated for the training models, mobile apps have been made to assist in garbage identification. The composting process has been observed and tracked using monitors and sensors.

Animal Feed Manufacturing

Using machine learning models and annotated photos, robots sort and grade nutritious leftover food or crops for animals to eat. To determine how long crops will be available to feed cattle, they find any existing areas for improvement in the yields. Robots now use video and picture annotations to classify various plant elements, such as stems. They identify the plant variety that is suitable for a given cattle diet.

Conclusion

Plastic bottles have been used to make pencil bags, backpacks, umbrellas, and nurse seedlings. Even the construction of roads and bricks uses them occasionally. On the other side, some rural communities use food cans as piggy banks and for bulk water storage, among other things.

0 notes