#Automation vs Orchestration

What Is Orchestration in DevOps? Understanding Its Role in Automating Workflows - OpsNexa!

Learn what orchestration in DevOps is and how it helps automate and manage complex workflows. Discover the benefits of orchestration for improving efficiency, reducing errors, and accelerating software delivery, along with the tools and strategies commonly used in modern DevOps pipelines.
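To make the automation-versus-orchestration distinction concrete: automation makes a single task repeatable, while orchestration decides which automated tasks run, in what order, and what happens when one fails. The sketch below is a hypothetical, minimal orchestrator built on Python's standard-library `graphlib` (Python 3.9+); the task names and dependency map are illustrative assumptions, not part of any specific DevOps tool.

```python
from graphlib import TopologicalSorter

# Hypothetical automated tasks. In a real pipeline each would call a build tool,
# a test runner, a security scanner, or a deployment script.
TASKS = {
    "build": lambda: None,
    "unit_tests": lambda: None,
    "security_scan": lambda: None,
    "deploy": lambda: None,
}

# Each task maps to the set of tasks that must finish before it may start.
DEPENDENCIES = {
    "unit_tests": {"build"},
    "security_scan": {"build"},
    "deploy": {"unit_tests", "security_scan"},
}


def run_pipeline(tasks, dependencies):
    """Run tasks in dependency order and stop at the first failure.

    Returns (completed_task_names, error_message_or_None).
    """
    completed = []
    for name in TopologicalSorter(dependencies).static_order():
        try:
            tasks[name]()
        except Exception as exc:  # the orchestrator decides what a failure means
            return completed, f"{name} failed: {exc}"
        completed.append(name)
    return completed, None


completed, error = run_pipeline(TASKS, DEPENDENCIES)
print(completed, error)
```

Because `deploy` depends on both checks, a failing `security_scan` stops the run before anything is deployed. Production orchestrators layer parallelism, retries, and persistent run state on top of this basic ordering.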

#DevOps Orchestration#Orchestration in DevOps#Automation vs Orchestration#CI/CD Orchestration#Infrastructure Orchestration#Workflow Orchestration DevOps

Collaborative Intelligence: How Human-AI Teams Are Redefining Enterprise Success

In today’s rapidly evolving digital economy, success is no longer defined by human ingenuity alone. It now hinges on collaborative intelligence—the synergistic partnership between humans and artificial intelligence (AI). This fusion is not about replacement; it’s about augmentation. Enterprises that are thriving in 2025 are those that have embraced this shift, harnessing the strengths of both human intuition and machine precision.

The Rise of Collaborative Intelligence

Collaborative intelligence refers to systems and workflows where AI and humans work together to achieve goals that neither could accomplish alone. While humans bring creativity, empathy, and ethical judgment, AI contributes speed, data processing power, and predictive accuracy. When orchestrated correctly, this combination unlocks unprecedented value for organizations—especially in complex, data-heavy environments.

Whether it's in finance, healthcare, supply chain, or marketing, enterprises are discovering that AI enhances decision-making but still relies on human oversight for context and nuance. From automating repetitive tasks to analyzing real-time data for insights, AI frees up employees to focus on strategic thinking and innovation.

Real-Time Decisions, Human Context

The future of enterprise success lies in making decisions faster and smarter. AI systems can parse massive volumes of data in milliseconds. But interpreting the implications of those data points—especially within a business or cultural context—is where human leaders step in. For instance, AI may forecast a market downturn, but it’s the human executive who decides whether to pivot strategy, launch a new product, or maintain course.

Tools like Decision Pulse exemplify this synergy. By delivering AI-powered dashboards, predictive analytics, and natural language queries, it equips leaders with real-time insights while still empowering them to make final calls. It doesn’t replace C-suite decision-makers; it sharpens their focus and extends their cognitive reach.

Driving Innovation with AI-Human Partnerships

When AI is integrated into cross-functional teams, it acts as a digital collaborator. For example, in product development, AI can analyze customer feedback, competitor moves, and market trends, while humans apply creativity to build emotionally resonant and market-ready solutions. The result? Reduced time to market, more precise targeting, and higher success rates.

AI also enhances learning within teams. By analyzing workflows and performance data, it can offer training suggestions and efficiency recommendations tailored to individuals. This creates a feedback loop where both the system and the team continuously improve.

Creating a Culture of Trust

To make collaborative intelligence work, companies must build a culture that promotes transparency, digital literacy, and ethical AI use. Employees need to understand how AI supports their roles—not threatens them. Organizations must also ensure that data is handled responsibly and that algorithms are explainable and fair.

Conclusion

The future of enterprise success is not man vs. machine—it’s man with machine. Collaborative intelligence is reshaping what’s possible in today’s businesses, offering a blueprint for speed, innovation, and resilience. At OfficeSolution, we believe that empowering human-AI teams is not just a strategy—it’s the next frontier of enterprise evolution.

👉 Learn how your business can thrive with collaborative intelligence: Visit https://innovationalofficesolution.com/

Adobe Campaign Classic vs Standard: Which Platform Suits Your Marketing Strategy in 2025?

Introduction

If you're evaluating Adobe Campaign Classic vs Standard for your business in 2025, you're not alone. With today’s marketing teams facing rising customer expectations, fragmented touchpoints, and increasing pressure for personalization at scale, choosing the right campaign platform has become a strategic necessity—not just a technical decision.

In this blog, we’ll break down the key differences between Adobe Campaign Classic and Adobe Campaign Standard, what each one is best suited for, and how to align your choice with your marketing strategy in 2025. Whether you’re a data-heavy enterprise or a fast-moving digital team, understanding which platform meets your operational and strategic goals is critical.

Adobe Campaign Classic: Power for Data-Driven Enterprises

Adobe Campaign Classic (ACC) is the preferred choice for enterprises with complex data architectures, legacy systems, and highly customized workflows.

Key Features:

Advanced data modeling and scripting

On-premises or hybrid hosting

Granular control over workflows, segmentation, and campaign orchestration

Seamless integration with legacy CRMs and ERPs

Extensive SQL and API capabilities

Best For:

Regulated industries (finance, healthcare)

Organizations with a dedicated IT or martech team

Businesses needing deep personalization and data access

Companies managing multibrand or multi-market campaigns

Considerations:

Longer implementation time

Higher total cost of ownership (TCO)

Requires strong technical expertise for maintenance

Bottom Line: Choose Adobe Campaign Classic if your business relies on robust backend systems, and your marketing strategy demands granular control over every campaign dimension.

Adobe Campaign Standard: Simplicity and Speed for Agile Marketing

Adobe Campaign Standard (ACS) is built for modern, cloud-first marketing teams looking to launch fast, scale easily, and reduce operational complexity.

Key Features:

Cloud-native and hosted by Adobe

Intuitive drag-and-drop UI

Built-in workflows for email, SMS, push, and web campaigns

Out-of-the-box integrations with Adobe Experience Cloud

Easy onboarding and faster time-to-value

Best For:

Mid-size to large companies scaling quickly

Digital-first brands needing speed over deep customization

Teams focused on fast experimentation and omnichannel reach

Organizations with lean IT support

Considerations:

Limited advanced scripting and data modeling

Less customizable than Classic

Relies on Adobe’s infrastructure and updates

Bottom Line: Go with Adobe Campaign Standard if you need rapid deployment, lower complexity, and strong campaign execution across channels without getting buried in technical depth.

Strategic Considerations: It’s More Than Just a Tool

Choosing Adobe Campaign Classic vs Standard is about more than comparing features—it’s about aligning your platform with your business vision.

Ask yourself:

How complex are your current marketing workflows?

How integrated is your data stack?

Are you prioritizing speed, scale, or control?

Do you need deep segmentation or rapid time-to-market?

What’s your internal team’s technical capacity?

Remember, the tool itself is only as good as how it's implemented and optimized. Without proper change management, training, and process redesign, even the most powerful platform will fall short.

How Xerago Helps You Make the Right Choice

At Xerago, we’ve helped enterprises across industries implement and scale both Adobe Campaign Classic and Standard—driving measurable ROI through smarter campaign architecture and seamless integrations.

With Xerago, you get:

Strategic evaluation of your current martech stack

Platform selection and licensing guidance

Full implementation and custom integration support

Campaign automation strategy and setup

Ongoing optimization, reporting, and training

Whether you're just starting or transitioning from another platform, we ensure that your marketing technology directly supports your business outcomes—not the other way around.

Conclusion

In 2025, the debate between Adobe Campaign Classic vs Standard comes down to what your marketing strategy demands. If your enterprise needs deep data integration and campaign complexity, Classic is your power tool. But if you value agility, fast execution, and ease of use, Standard delivers.

The right platform is the one that fuels your business—not slows it down.

Ready to choose confidently and implement successfully? Talk to Xerago. Let’s transform your campaign execution into a competitive advantage.

The Perfect ERP Stack for Logistics & Distribution Companies

In today’s global economy, logistics and distribution are no longer just about moving goods; they’re about orchestrating efficiency, minimizing waste, and maximizing visibility across complex, multi-layered supply chains.

Warehouses must talk to order management. Inventory should update in real-time. Transport delays must trigger instant rerouting. Customers now expect tracking links, not excuses. And all of it must be done faster, smarter, and cheaper.

That’s where an intelligent ERP stack steps in.

The perfect ERP stack for logistics and distribution isn’t a single piece of software; it’s an ecosystem of seamlessly integrated modules designed to deliver end-to-end control, from first mile to last mile.

In this definitive guide, we break down exactly what your ERP stack needs in order to deliver a competitive edge, real-time intelligence, and customer delight at scale.

The Growing Complexity of Logistics & Distribution

From hyperlocal delivery startups to international freight giants, every logistics company now deals with:

Multichannel order flows

Volatile demand

Fragmented supply chains

Rising customer expectations

Tight delivery SLAs (Service Level Agreements)

Cost pressures and razor-thin margins

And the post-pandemic world hasn’t helped. Disruptions are now a constant. Visibility gaps are costly. Spreadsheets? Obsolete.

Logistics ERP systems need to power:

Real-time coordination

Proactive decision-making

Predictive insights

Seamless collaboration

Why Traditional Systems Are Failing the Industry

Many logistics companies still rely on:

Legacy software with siloed data

Manual coordination across departments

Inflexible tools that can’t scale with new routes or partners

Fragmented warehouse management

Poor integration between customer portals and backend systems

This leads to:

Delays in fulfilment

Inventory mismatches

Routing inefficiencies

Increased operational costs

Unhappy customers

Modern ERP for logistics is the cure.

What Makes an ERP Stack “Perfect” for Logistics

A perfect ERP stack is:

Modular: Adaptable to the size and complexity of your operations

Integrated: Every module shares data — no silos, no sync delays

Scalable: From 1 warehouse to 100, without rebuilding everything

Real-Time: Live updates across inventory, transport, and demand

Cloud-Native: Accessible, flexible, and secure

AI-Powered: Smarter predictions, faster routing, automated alerts

Core Modules Every Logistics ERP Must Include

1. Warehouse Management System (WMS)

Bin-level inventory tracking

Putaway optimization

Cycle counting automation

Barcode & RFID integration

2. Transportation Management System (TMS)

Route optimization

Carrier management

Freight audit

Real-time GPS tracking

3. Order Management

Omnichannel order orchestration

Returns handling

Order lifecycle visibility

4. Inventory Management

Stock forecasting

Dead stock alerts

Auto-replenishment

5. Finance & Billing

Automated invoicing

Freight cost reconciliation

Tax compliance

6. Customer Relationship Management (CRM)

Client order history

SLA monitoring

Issue tracking and resolution
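The auto-replenishment and stock-forecasting items above usually rest on a reorder-point rule. The sketch below is a textbook version, assuming roughly normal, independent daily demand and a fixed supplier lead time; the function names and figures are hypothetical and do not describe how any particular ERP product computes replenishment.

```python
import math


def safety_stock(z, daily_demand_std, lead_time_days):
    """Buffer stock: z * (std dev of daily demand) * sqrt(lead time in days)."""
    return z * daily_demand_std * math.sqrt(lead_time_days)


def reorder_point(avg_daily_demand, lead_time_days, buffer):
    """Expected demand during the lead time plus the safety buffer."""
    return avg_daily_demand * lead_time_days + buffer


def needs_replenishment(on_hand, on_order, rop):
    """Raise a purchase order when inventory position falls to the reorder point."""
    return on_hand + on_order <= rop


# Example: 40 units/day on average, std dev 10, 4-day lead time, z = 2.
buffer = safety_stock(z=2.0, daily_demand_std=10.0, lead_time_days=4)
rop = reorder_point(avg_daily_demand=40.0, lead_time_days=4, buffer=buffer)
print(buffer, rop)                        # 40.0 200.0
print(needs_replenishment(150, 30, rop))  # True
```

Under the normal-demand assumption, z = 2 corresponds to roughly a 97.7% cycle service level; a higher z means fewer stockouts at the cost of carrying more inventory.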

Advanced Features to Look For in 2025

AI-based Demand Forecasting

Digital Twins for Warehouses

Autonomous Mobile Robots (AMRs) Integration

Blockchain-enabled Shipment Verification

Voice-enabled Pick-Pack Commands

Cold Chain Monitoring (IoT Sensors)

Carbon Footprint Tracking & Reporting

The future of logistics ERP is intelligent, adaptive, and sustainable.

How Real-Time Data Changes Everything

In logistics, lag equals loss. Real-time ERP platforms provide:

Live inventory updates

ETA recalculations with traffic/weather data

Dynamic re-routing of shipments

Instant alerts on exceptions

Dashboard views of every touchpoint from supplier to customer
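ETA recalculation and exception alerts can be sketched as a simple rule: recompute the arrival time from the remaining distance and current speed, add any known delay, and raise an alert when the new ETA passes the SLA deadline. The `Shipment` fields and the alert format below are hypothetical; a production system would derive speed and delay estimates from GPS, traffic, and weather feeds.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Shipment:
    shipment_id: str
    remaining_km: float
    avg_speed_kmh: float   # current average speed, e.g. from GPS/telematics
    promised_by: datetime  # delivery deadline committed in the SLA


def recalculate_eta(shipment, now, extra_delay=timedelta(0)):
    """Naive ETA: time to cover the remaining distance plus any known delay."""
    if shipment.avg_speed_kmh <= 0:
        raise ValueError("speed must be positive to estimate an ETA")
    hours_left = shipment.remaining_km / shipment.avg_speed_kmh
    return now + timedelta(hours=hours_left) + extra_delay


def exception_alert(shipment, now, extra_delay=timedelta(0)):
    """Return an alert message if the new ETA misses the SLA, else None."""
    eta = recalculate_eta(shipment, now, extra_delay)
    if eta > shipment.promised_by:
        late_by = eta - shipment.promised_by
        return f"ALERT {shipment.shipment_id}: ETA {eta:%H:%M}, late by {late_by}"
    return None


truck = Shipment("SHP-001", remaining_km=120, avg_speed_kmh=60,
                 promised_by=datetime(2025, 1, 6, 10, 30))
now = datetime(2025, 1, 6, 8, 0)
print(exception_alert(truck, now))                         # None
print(exception_alert(truck, now, timedelta(minutes=45)))  # ALERT SHP-001: ETA 10:45, late by 0:15:00
```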

Cloud ERP vs. On-Premises: What Works Best?

Cloud ERP is winning, and for good reason:

Lower upfront cost

Faster implementation

Scalable infrastructure

Anywhere access (critical for distributed teams)

Built-in disaster recovery

Seamless updates and AI enhancements

While on-prem may still serve legacy-heavy firms, cloud-native ERP is the future.

Integration with IoT, GPS, and Telematics

Modern ERP stacks thrive on data from connected devices:

IoT sensors track container temperature, humidity, or tilt

GPS integration feeds real-time location into dashboards

Vehicle telematics inform route efficiency and driver behaviour

Smart pallets and tags enhance warehouse throughput

This enables:

Predictive maintenance

Intelligent asset tracking

Shipment anomaly detection

Increased compliance
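Shipment anomaly detection from IoT temperature sensors often starts with a rule-based excursion check before any machine learning is involved. The sketch below flags runs of readings outside an allowed band; the 2 to 8 °C band (a common range for refrigerated pharmaceuticals) and the three-reading minimum, which filters out single-sample sensor noise, are illustrative assumptions.

```python
def find_excursions(readings, low=2.0, high=8.0, min_consecutive=3):
    """Return (start, end) index pairs where readings stayed outside [low, high]
    for at least `min_consecutive` consecutive samples."""
    excursions = []
    start = None
    for i, temp in enumerate(readings):
        out_of_range = temp < low or temp > high
        if out_of_range and start is None:
            start = i  # a possible excursion begins here
        elif not out_of_range and start is not None:
            if i - start >= min_consecutive:
                excursions.append((start, i - 1))
            start = None  # back in range; reset
    # Handle an excursion that is still ongoing at the last reading.
    if start is not None and len(readings) - start >= min_consecutive:
        excursions.append((start, len(readings) - 1))
    return excursions


readings = [4.0, 5.1, 8.6, 9.2, 9.0, 6.0, 8.5, 5.0, 1.5, 1.2, 1.0, 0.8]
print(find_excursions(readings))  # [(2, 4), (8, 11)]
```

The lone 8.5 °C reading at index 6 is treated as noise and not reported.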

Warehouse Automation: When ERP Meets Robotics

Smart ERP platforms integrate with:

Conveyor belts and pick robots

AGVs (Automated Guided Vehicles)

AMRs for zone picking

Vision systems for damage detection

The result: faster fulfillment, lower labour dependency, improved accuracy, and 24/7 operations.

ERPONE, for instance, supports full robotic orchestration via API-ready architecture.

KPIs to Track with a Smart ERP Stack

OTIF (On-Time, In-Full)

Average Warehouse Throughput

Order Fulfilment Cycle Time

Transport Cost per Mile

Inventory Turnover Rate

Customer SLA Adherence

Returns Ratio

Carbon Emissions per Shipment

ERP dashboards let you track these in real time, empowering instant optimization.
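Several of these KPIs reduce to simple ratios. The sketch below uses common textbook definitions (OTIF as the share of orders delivered both on time and in full, inventory turnover as cost of goods sold over average inventory). Companies often define them differently, for example measuring OTIF per order line or with a tolerance window, so treat the `Order` fields and formulas as assumptions.

```python
from dataclasses import dataclass


@dataclass
class Order:
    on_time: bool       # delivered within the promised window
    qty_ordered: int
    qty_delivered: int


def otif_rate(orders):
    """Fraction of orders that were both on time and complete."""
    if not orders:
        return 0.0
    hits = sum(1 for o in orders if o.on_time and o.qty_delivered >= o.qty_ordered)
    return hits / len(orders)


def inventory_turnover(cogs, opening_inventory, closing_inventory):
    """Cost of goods sold divided by average inventory value over the period."""
    average_inventory = (opening_inventory + closing_inventory) / 2
    return cogs / average_inventory


def transport_cost_per_mile(total_cost, total_miles):
    return total_cost / total_miles


orders = [Order(True, 10, 10), Order(True, 10, 8), Order(False, 5, 5), Order(True, 3, 3)]
print(otif_rate(orders))                                # 0.5
print(inventory_turnover(1_200_000, 250_000, 350_000))  # 4.0
print(transport_cost_per_mile(5_000, 2_000))            # 2.5
```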

Final Thoughts

The logistics sector is under pressure from consumers, competitors, and climate realities. The winners will be those who:

See what’s happening in real time

Automate what slows them down

Anticipate disruptions

Serve smarter and faster than anyone else

That’s the power of a perfect ERP stack.

It’s not about having more software; it’s about having the right ecosystem: cloud-native, AI-infused, fully integrated, and future-ready.

If your ERP can’t scale with your routes, connect with your assets, and learn from your data, you’re not ready for what’s next.

But with the right stack? You’ll deliver delight at every touchpoint, every time.

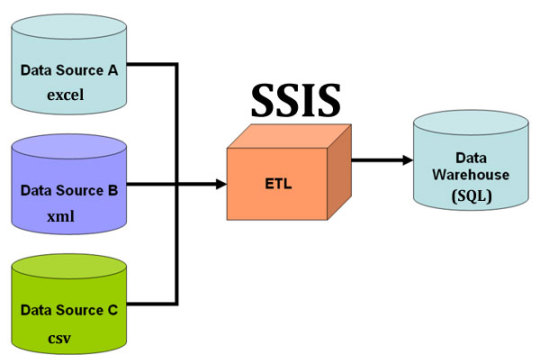

ETL at Scale: Using SSIS for Big Data Workflows

In today's data-driven world, managing and processing large volumes of data efficiently is crucial for businesses. Enter ETL (Extract, Transform, Load) processes, which play a vital role in consolidating data from various sources, transforming it into actionable insights, and loading it into target systems. This blog explores how SQL Server Integration Services (SSIS) can serve as a powerful tool for managing ETL workflows, especially when dealing with big data.

Introduction to ETL (Extract, Transform, Load)

ETL is a fundamental data processing task that involves three key steps:

Extract: Retrieving raw data from various sources, such as databases, flat files, or cloud services.

Transform: Cleaning, standardizing, and enriching the data to fit business needs.

Load: Ingesting the transformed data into target databases or data warehouses for analytics.

These steps are essential for ensuring data integrity, consistency, and usability across an organization.
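SSIS builds these steps graphically, but the three stages themselves are easy to see in a few lines of code. The sketch below is not SSIS; it is a plain Python stand-in using only the standard library (`csv` for extraction, `sqlite3` as the load target), with a hypothetical sales file and table.

```python
import csv
import io
import sqlite3

# Hypothetical raw extract: inconsistent country codes and one bad amount.
RAW_CSV = """customer_id,country,amount
1, us ,19.99
2,DE,5
3,uk,not_a_number
"""


def extract(text):
    """Extract: read raw rows from a CSV source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: standardize fields and drop rows that cannot be converted."""
    clean = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # a real pipeline would route this row to an error table
        clean.append((int(row["customer_id"]), row["country"].strip().upper(), amount))
    return clean


def load(rows, conn):
    """Load: write the cleaned rows into the target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS sales (customer_id INTEGER, country TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT * FROM sales ORDER BY customer_id").fetchall())
# [(1, 'US', 19.99), (2, 'DE', 5.0)]
```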

Overview of SQL Server Integration Services (SSIS)

SSIS is a robust data integration platform from Microsoft, designed to facilitate the creation of high-performance data transformation solutions. It offers a comprehensive suite of tools for building and managing ETL workflows. Key features of SSIS include:

Graphical Interface: SSIS provides a user-friendly design interface for building complex data workflows without extensive coding.

Scalability: It efficiently handles large volumes of data, making it suitable for big data applications.

Extensibility: Users can integrate custom scripts and components to extend the functionality of SSIS packages.

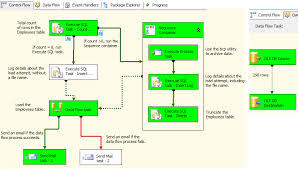

Data Flow vs. Control Flow

Understanding the distinction between data flow and control flow is crucial for leveraging SSIS effectively:

Data Flow: This component manages the movement and transformation of data from sources to destinations. It involves tasks like data extraction, transformation, and loading into target systems.

Control Flow: This manages the execution workflow of ETL tasks. It includes defining the sequence of tasks, setting precedence constraints, and handling events during package execution.

SSIS allows users to orchestrate these flows to create seamless and efficient ETL processes.
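The difference can be sketched outside SSIS: the control flow below decides which task runs next based on the previous task's outcome (mirroring SSIS's Success, Failure, and Completion precedence constraints), while the `data_flow` task is the only step that actually moves rows. This is a simplified, hypothetical model in Python, not the SSIS object model, and it assumes the constraints contain no cycles.

```python
from dataclasses import dataclass


@dataclass
class Constraint:
    """Run `target` after `source` finishes with outcome `on`."""
    source: str
    target: str
    on: str  # "success", "failure", or "completion" (either outcome)


staging = []


def truncate_staging():
    staging.clear()


def data_flow():
    # Data flow: extract rows, transform them in memory, load them into staging.
    source_rows = [" alice ", "BOB"]
    staging.extend(name.strip().title() for name in source_rows)


def notify_failure():
    print("load failed, notifying on-call")


def write_audit_row():
    print("audit row written")


TASKS = {
    "truncate_staging": truncate_staging,
    "data_flow": data_flow,
    "notify_failure": notify_failure,
    "write_audit_row": write_audit_row,
}

CONSTRAINTS = [
    Constraint("truncate_staging", "data_flow", "success"),
    Constraint("data_flow", "notify_failure", "failure"),
    Constraint("data_flow", "write_audit_row", "completion"),
]


def run_control_flow(tasks, constraints, start):
    """Control flow: execute tasks, following constraints that match each outcome."""
    executed = []
    queue = [start]
    while queue:
        name = queue.pop(0)
        try:
            tasks[name]()
            outcome = "success"
        except Exception:
            outcome = "failure"
        executed.append((name, outcome))
        for c in constraints:
            if c.source == name and c.on in (outcome, "completion"):
                queue.append(c.target)
    return executed


print(run_control_flow(TASKS, CONSTRAINTS, "truncate_staging"))
print(staging)  # ['Alice', 'Bob']
```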

Integrating Data from Flat Files, Excel, and Cloud Sources

One of the strengths of SSIS is its ability to integrate data from a variety of sources. Whether you're working with flat files, Excel spreadsheets, or cloud-based data, SSIS provides connectors and adapters to streamline data integration.

Flat Files: Importing data from CSV or text files is straightforward with built-in SSIS components.

Excel: SSIS supports Excel as a data source, facilitating the extraction of data from spreadsheets for further processing.

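Cloud Sources: With the rise of cloud-based services, SSIS can also pull data from cloud platforms. Microsoft’s Azure Feature Pack for Integration Services adds components for Azure services, and other platforms such as AWS are typically reached through ODBC drivers or third-party connectors.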

Scheduling and Automation of ETL Tasks

Automation is key to maintaining efficient ETL processes, especially when dealing with big data. SSIS provides robust scheduling and automation capabilities through SQL Server Agent. Users can define schedules, set up alerts, and automate the execution of ETL packages, ensuring timely and consistent data processing.

By leveraging these features, organizations can minimize manual intervention, reduce errors, and ensure data is readily available for decision-making.

Frequently Asked Questions

1. What is the primary benefit of using SSIS for ETL?

SSIS provides a powerful and scalable platform for managing data integration tasks. Its graphical interface and extensive toolset make it accessible for users to build complex ETL solutions efficiently.

2. Can SSIS handle real-time data processing?

While SSIS is primarily designed for batch processing, it can integrate with real-time data sources using additional components and configurations. However, it might require advanced setup to achieve true real-time processing.

3. How does SSIS facilitate error handling in ETL processes?

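SSIS offers several error-handling mechanisms: event handlers (such as OnError) at the package or task level, error outputs that redirect failing rows in the data flow, precedence constraints that branch on task failure, and built-in logging. These tools help identify and manage errors during ETL execution and protect data integrity.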
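The row-redirect idea can be illustrated without SSIS itself. The Python sketch below mimics a data flow component configured to redirect failing rows: bad rows are collected with an error description instead of stopping the load, and a hypothetical `max_errors` budget decides when the batch as a whole should fail. The field names and the threshold are assumptions.

```python
def convert_rows(rows):
    """Convert raw string rows; send rows that fail conversion to an error list."""
    good, errors = [], []
    for row in rows:
        try:
            good.append({"order_id": int(row["order_id"]), "amount": float(row["amount"])})
        except (KeyError, ValueError) as exc:
            # Keep the original row plus a reason, like an error output would.
            errors.append({**row, "error": f"{type(exc).__name__}: {exc}"})
    return good, errors


def enforce_error_budget(errors, max_errors):
    """Fail the whole batch only if too many rows were redirected."""
    if len(errors) > max_errors:
        raise RuntimeError(f"{len(errors)} bad rows exceed the limit of {max_errors}")


raw = [
    {"order_id": "1", "amount": "9.50"},
    {"order_id": "x", "amount": "1.00"},  # bad id
    {"order_id": "3"},                    # missing amount
]
good, errors = convert_rows(raw)
enforce_error_budget(errors, max_errors=5)
print(good)  # [{'order_id': 1, 'amount': 9.5}]
print([e["error"] for e in errors])
```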

4. Is SSIS suitable for cloud-based data sources?

Yes, SSIS supports integration with various cloud platforms, such as Azure and AWS, through dedicated connectors. This makes it suitable for cloud-based data processing tasks.

5. What are some best practices for optimizing SSIS performance?

To optimize SSIS performance, consider parallel processing, using SQL queries for data filtering, minimizing transformations in the data flow, and optimizing memory usage. Regular monitoring and tuning can also enhance performance.

By implementing these best practices, organizations can ensure their ETL processes are efficient and capable of handling large-scale data operations.

#SSIS#DataIntegration#ETL#BigDataPipeline#SQLServerTools#DataWorkflow#Automation#SunshineDigitalServices#DataMigration#BusinessIntelligence#Instagram#Youtube

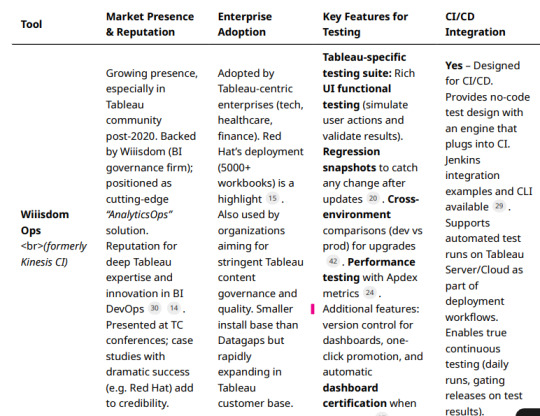

Leading Tableau Test Automation Tools — Comparison and Market Leadership

Automated testing for Tableau dashboards and analytics content is a growing niche, with a few specialized commercial tools vying for leadership.

We compare the top solutions — Datagaps BI Validator, Wiiisdom Ops (formerly Kinesis CI), QuerySurge, and others — based on their marketing presence, enterprise adoption, feature set (especially for functional/regression testing), CI/CD integration, and industry reputation.

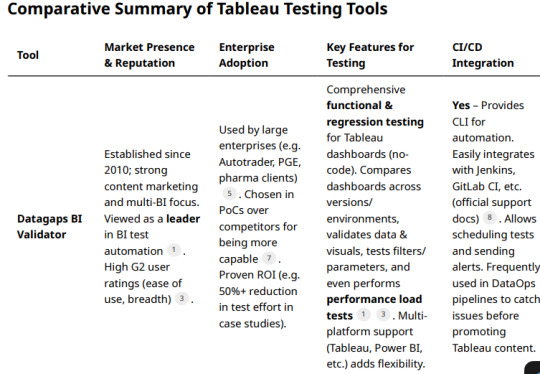

Datagaps BI Validator (DataOps Suite)

Industry sources describe BI Validator as a “leading no-code BI testing tool.” It is also recognized on review platforms; for example, G2 reviewers give the overall Datagaps suite a solid rating (around 4.0–4.6/5) and specifically praise the Tableau-focused module.

Customer Adoption: BI Validator appears to have broad enterprise adoption. Featured customers include Autotrader, Portland General Electric, and University of California, Davis.

A case study mentions a “Pharma Giant” cutting Tableau upgrade testing time by 55% using BI Validator.

Users on forums often recommend Datagaps; one BI professional who evaluated both Datagaps and Kinesis-CI reported that Datagaps was “more capable” and ultimately their choice. Such feedback indicates a strong reputation for reliability in complex enterprise scenarios.

Feature Set: BI Validator offers end-to-end testing for Tableau covering:

Functional regression testing: It can automatically compare workbook data, visuals, and metadata between versions or environments (e.g., before vs. after a Tableau upgrade). One user notes that it enabled automated regression testing of newly developed Tableau content as well as verification of dashboard outputs during database migrations. It checks dashboards, reports, filters, parameters, and even PDF exports for changes.

Data validation: It can retrieve data from Tableau reports and validate it against databases. One review specifically highlights using BI Validator to check Tableau report data against the source DB efficiently. The tool supports virtually any data source (“you name the datasource, Datagaps has support for it”).

UI and layout testing: The platform can compare UI elements and catalog/metadata across environments to catch broken visuals or missing fields post-migration.

Performance testing: Notably, BI Validator can simulate concurrent user loads on Tableau Server to test performance and robustness. This allows stress testing of dashboards under multi-user scenarios, complementing functional tests. (This is analogous to Tableau’s TabJolt, but integrated into one suite.) Users have used its performance/stress testing feature to benchmark Tableau with different databases.

Datagaps provides a well-rounded test suite (data accuracy, regression, UI regression, performance) tailored for BI platforms. It is designed to be easy to use (no coding; clean UI) — as one enterprise user noted, the client/server toolset is straightforward to install and navigate.
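Conceptually, data-level regression testing of the kind described above means exporting the same view from two environments and diffing the rows. The sketch below is a generic Python illustration, not BI Validator's implementation or API; it assumes each export is a list of row dictionaries with a unique key column, and it compares numbers with a small tolerance so harmless floating-point noise is not reported as a regression.

```python
import math


def rows_equal(a, b, rel_tol=1e-9):
    """Field-by-field comparison; numeric fields may differ by a tiny relative amount."""
    if a.keys() != b.keys():
        return False
    for field in a:
        x, y = a[field], b[field]
        if isinstance(x, (int, float)) and isinstance(y, (int, float)):
            if not math.isclose(x, y, rel_tol=rel_tol):
                return False
        elif x != y:
            return False
    return True


def compare_snapshots(before, after, key):
    """Report rows missing from, added to, or changed in the `after` export."""
    old = {row[key]: row for row in before}
    new = {row[key]: row for row in after}
    return {
        "missing": sorted(old.keys() - new.keys()),
        "extra": sorted(new.keys() - old.keys()),
        "changed": sorted(k for k in old.keys() & new.keys() if not rows_equal(old[k], new[k])),
    }


before = [{"region": "East", "sales": 100.0}, {"region": "West", "sales": 80.0},
          {"region": "North", "sales": 50.0}]
after = [{"region": "East", "sales": 100.0000000001}, {"region": "West", "sales": 75.0},
         {"region": "South", "sales": 20.0}]
print(compare_snapshots(before, after, key="region"))
# {'missing': ['North'], 'extra': ['South'], 'changed': ['West']}
```

The East row is not flagged because its difference falls within the tolerance.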

CI/CD Integration: BI Validator is built with DataOps in mind and integrates with CI/CD pipelines. It offers a command-line interface (CLI) and has documented integration with Jenkins and GitLab CI, enabling automated test execution as part of release pipelines. Test plans can be scheduled and triggered automatically, with email notifications on results. This allows teams to include Tableau report validation in continuous integration flows (for example, running a battery of regression tests whenever a data source or workbook is updated). Because it can run from the CLI, it can work with any CI orchestrator (Jenkins, Azure DevOps, etc.), and users have leveraged this to incorporate automated Tableau testing in their DevOps processes.
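Whatever test tool is used, CI integration through a command line typically comes down to the process exit code: CI servers such as Jenkins, GitLab CI, and Azure DevOps mark a step as failed when a command exits with a nonzero status. The hypothetical wrapper below shows that contract in Python; how individual results are obtained from BI Validator or any other product is deliberately left out, since that depends on the vendor's own CLI.

```python
def summarize(results):
    """results: iterable of (test_name, passed) pairs produced by some test runner."""
    results = list(results)
    failures = [name for name, passed in results if not passed]
    return len(results) - len(failures), failures


def ci_exit_code(results):
    """Print a short report and return the exit code a CI server should see."""
    passed, failures = summarize(results)
    print(f"{passed} passed, {len(failures)} failed")
    for name in failures:
        print(f"FAILED: {name}")
    return 1 if failures else 0


code = ci_exit_code([("sales_totals_match_warehouse", True), ("region_filter_values", False)])
print(code)  # 1
```

In a pipeline script, the return value would be passed to `sys.exit(...)` so that a single failed check blocks the release.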

Wiiisdom Ops (formerly Kinesis CI)

Wiiisdom (known for its 360Suite in the SAP BusinessObjects world), which acquired Kinesis CI, has since rebranded it as Wiiisdom Ops for Tableau and heavily markets it as a cornerstone of “AnalyticsOps” (bringing DevOps practices to analytics).

The product is positioned as a solution to help enterprises “trust their Tableau dashboards” by automating testing and certification. Wiiisdom has been active in promoting this tool via webinars, Tableau Conference sessions, e-books, and case studies, indicating a growing marketing presence, especially in the Tableau user community. Wiiisdom Ops/Kinesis has a solid (if more niche) reputation. Its exclusive focus on Tableau is often seen as a strength because it allows deeper coverage.

The acquiring company’s CEO noted that Kinesis-CI was a “best-in-breed” technology and a “game-changer” in how it applied CI/CD concepts to BI testing. While not as widely reviewed on generic software sites, its reputation is bolstered by public success stories: for instance, Red Hat implemented Wiiisdom Ops for Tableau and managed to reduce their dashboard testing time “from days to minutes,” while handling thousands of workbooks and data sources. Such testimonials from large enterprises (Red Hat, and also the Gustave Roussy Institute in healthcare) enhance Wiiisdom Ops’ credibility in the industry.

Customer Adoption: Wiiisdom Ops is used by Tableau-centric organizations that require rigorous testing. The Red Hat case study is a flagship example, showing adoption at scale (5,000+ Tableau workbooks). Other known users include certain financial institutions and healthcare organizations (some case studies are mentioned on Wiiisdom’s site). Given Wiiisdom’s long history with BI governance, many of its existing customers (in the Fortune 500, especially those using Tableau alongside other BI tools) are likely evaluating or adopting Wiiisdom Ops as they extend governance to Tableau.

While overall market share is hard to gauge, the tool is gaining traction specifically among Tableau enterprise customers who need automated testing integrated with their development lifecycle. The acquisition by Wiiisdom also lends it a broader sales network and support infrastructure, likely increasing its adoption since 2021.

Feature Set: Wiiisdom Ops (Kinesis CI) provides a comprehensive test framework for Tableau with a focus on functional, regression, and performance testing of Tableau content.

QuerySurge (BI Tester for Tableau)

Marketing & Reputation: QuerySurge is a well-known data testing automation platform, primarily used for ETL and data warehouse testing. While not Tableau-specific, it offers a module called BI Tester that connects to BI tools (including Tableau) to validate the data in reports. QuerySurge is widely used in the data quality and ETL testing space (with many Fortune 500 users) and is often mentioned as a top solution for data/ETL testing.

Its marketing emphasizes ensuring data accuracy in the “last mile” of BI. However, QuerySurge’s brand is stronger in the data engineering community than specifically in Tableau circles, since it does not perform UI or functional testing of dashboards (it focuses on data correctness beneath the BI layer).

Customer Adoption: QuerySurge has a broad user base across industries for data testing, and some of those users leverage it for Tableau report validation. Organizations using multiple BI tools (Tableau, Cognos, Power BI, etc.) may use QuerySurge centrally to validate that the data shown in reports matches the data in the warehouse. The vendor mentions “dozens of teams” using its BI integrations to visualize test results in tools like Tableau and Power BI, suggesting an ecosystem where QuerySurge ensures data quality and BI tools consume those results.

Notable QuerySurge clients include large financial institutions, insurance companies, and tech firms (as per their case studies), though specific Tableau-centric references are not heavily publicized. As a general-purpose tool, its adoption overlaps with, but is not exclusive to, Tableau projects.

Feature Set: For Tableau testing, QuerySurge’s BI Tester provides a distinct but important capability: data-level regression testing for Tableau reports. Key features include.

Conclusion:

The Market Leader in Tableau Testing

Considering the above, Datagaps BI Validator currently stands out as the best all-around commercial Tableau testing tool and the market leader. It edges out the others in breadth of features and proven adoption.

Enterprises appreciate its ability to handle everything from data validation to UI regression and performance testing in one package.

Its multi-BI versatility also means a wider user base and community knowledge pool. Many practitioners point to BI Validator as the most “efficient testing tool for Tableau” in practice. That said, the “best” choice can depend on an organization’s specific needs. Wiiisdom Ops (Kinesis) is a very close competitor, especially for organizations focusing solely on Tableau and wanting seamless CI/CD pipeline integration and governance extras.

Wiiisdom Ops has a strong future outlook given its Tableau-focused innovation and success stories like Red Hat (testing time cut from days to minutes). It might be considered the leader in the Tableau-only segment, and it’s rapidly gaining recognition as Tableau customers pursue DevOps for analytics.

QuerySurge, while not a one-stop solution for Tableau, is the leader for data quality assurance in BI and remains indispensable for teams that prioritize data correctness in reports. It often complements either Datagaps or Wiiisdom by covering the data validation aspect more deeply. In terms of marketing presence and industry buzz, Datagaps and Wiiisdom are both very active:

Datagaps publishes thought leadership on BI testing and touts AI-driven DataOps, whereas Wiiisdom evangelizes AnalyticsOps and often partners with Tableau ecosystem events. Industry analysts have started to note the importance of such tools as BI environments mature.

In conclusion, Datagaps BI Validator is arguably the market leader in Tableau test automation today, with the strongest combination of features, enterprise adoption, and cross-platform support. Wiiisdom Ops (Kinesis CI) is a close runner-up, leading on Tableau-centric continuous testing and rapidly expanding its footprint. Organizations with heavy data pipeline testing needs also recognize QuerySurge as an invaluable tool for ensuring Tableau outputs are trustworthy.

All these tools contribute to higher confidence in Tableau dashboards by catching errors and regressions early. As analytics continues to be mission-critical, adopting one of these leading solutions is becoming a best practice for enterprises to safeguard BI quality and enable faster, error-free Tableau deployments.

#datagaps#dataops#bivalidator#dataquality#tableau testing#automation#wiiisdom#querysurge#etl testing#bi testing

Beyond Models: Building AI That Works in the Real World

Artificial Intelligence has moved beyond the research lab. It's writing emails, powering customer support, generating code, automating logistics, and even diagnosing disease. But the gap between what AI models can do in theory and what they should do in practice is still wide.

Building AI that works in the real world isn’t just about creating smarter models. It’s about engineering robust, reliable, and responsible systems that perform under pressure, adapt to change, and operate at scale.

This article explores what it really takes to turn raw AI models into real-world products—and how developers, product leaders, and researchers are redefining what it means to “build AI.”

1. The Myth of the Model

When AI makes headlines, it’s often about models—how big they are, how many parameters they have, or how well they perform on benchmarks. But real-world success depends on much more than model architecture.

The Reality:

Great models fail when paired with bad data.

Perfect accuracy doesn’t matter if users don’t trust the output.

Impressive demos often break in the wild.

As a rough rule of thumb, real AI systems are 20% models and 80% engineering, data, infrastructure, and design.

2. From Benchmarks to Behavior

AI development has traditionally focused on benchmarks: static datasets used to evaluate model performance. But once deployed, models must deal with unpredictable inputs, edge cases, and user behavior.

The Shift:

From accuracy to reliability

From static evaluation to dynamic feedback

From performance in isolation to value in context

Benchmarks are useful. But behavior in production is what matters.

3. The AI System Stack: More Than Just a Model

To make AI useful, it must be embedded into systems—ones that can collect data, handle errors, interact with users, and evolve.

Key Layers of the AI System Stack:

a. Data Layer

Continuous data collection and labeling

Data validation, cleansing, augmentation

Synthetic data generation for rare cases
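Validation in the data layer can be sketched as record-level checks that quarantine bad rows, with a reason, instead of passing them on to training. This is a minimal illustration. The field names (`text`, `label`) and the allowed label set are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical schema: each training record needs non-empty text and a known label.
ALLOWED_LABELS = {"positive", "negative", "neutral"}

@dataclass
class ValidationReport:
    valid: list = field(default_factory=list)
    rejected: list = field(default_factory=list)  # (record, reason) pairs

def validate_records(records):
    """Split records into cleaned valid ones and rejected ones with a reason."""
    report = ValidationReport()
    seen_texts = set()
    for rec in records:
        text = (rec.get("text") or "").strip()
        label = rec.get("label")
        if not text:
            report.rejected.append((rec, "empty text"))
        elif label not in ALLOWED_LABELS:
            report.rejected.append((rec, f"unknown label: {label!r}"))
        elif text in seen_texts:
            report.rejected.append((rec, "duplicate text"))
        else:
            seen_texts.add(text)
            report.valid.append({"text": text, "label": label})
    return report

batch = [
    {"text": "Great product", "label": "positive"},
    {"text": "  ", "label": "negative"},
    {"text": "Great product", "label": "positive"},
    {"text": "Arrived late", "label": "angry"},
]
report = validate_records(batch)
print(len(report.valid), [reason for _, reason in report.rejected])
```

Rejected rows keep their reason, which makes it easy to count failure types over time and feed them back into labeling.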

b. Model Layer

Training and fine-tuning

Experimentation and evaluation

Model versioning and reproducibility
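Reproducibility usually starts with two habits: fixing random seeds, and recording exactly which configuration produced a model. Here is a minimal standard-library sketch. The config keys and the `run_id` naming scheme are illustrative assumptions. A real project would also seed its ML framework, such as PyTorch or JAX.

```python
import hashlib
import json
import random

def config_fingerprint(config: dict) -> str:
    """Stable short hash of a training config (key order does not matter)."""
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]

def sample_training_subset(data, k, seed):
    """Draw a subset with a dedicated RNG so reruns pick the same rows."""
    rng = random.Random(seed)
    return rng.sample(data, k)

config = {"model": "baseline-v1", "lr": 3e-4, "epochs": 5, "seed": 42}  # hypothetical
run_id = f"{config['model']}-{config_fingerprint(config)}"
subset_a = sample_training_subset(list(range(100)), 10, config["seed"])
subset_b = sample_training_subset(list(range(100)), 10, config["seed"])
print(run_id, subset_a == subset_b)
```

Tagging model artifacts with `run_id` ties every saved model back to the exact config that produced it.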

c. Serving Layer

Scalable APIs for inference

Real-time vs batch deployment

Latency and cost optimization

d. Orchestration Layer

Multi-step workflows (e.g., agent systems)

Memory, planning, tool use

Retrieval-Augmented Generation (RAG)

e. Monitoring & Feedback Layer

Drift detection, anomaly tracking

User feedback collection

Automated retraining triggers
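Drift detection often compares a feature's distribution in production against the distribution seen at training time. One common statistic for this is the Population Stability Index (PSI), which the sketch below computes over fixed bins. The bin edges, the smoothing constant, and the 0.2 alert threshold are conventional rules of thumb, not values from this article.

```python
import math
from bisect import bisect_right

def bin_proportions(values, edges, eps=1e-6):
    """Share of values falling in each bin defined by sorted inner edges."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    total = len(values)
    # eps avoids log(0) when a bin is empty
    return [max(c / total, eps) for c in counts]

def psi(expected_values, actual_values, edges):
    """Population Stability Index between a reference sample and a live sample."""
    e = bin_proportions(expected_values, edges)
    a = bin_proportions(actual_values, edges)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))

edges = [0.25, 0.5, 0.75]                 # hypothetical bins for a score in [0, 1]
training = [i / 100 for i in range(100)]  # roughly uniform reference sample
live_same = [i / 100 + 0.005 for i in range(100)]
live_shifted = [0.6 + i / 250 for i in range(100)]  # values concentrated in [0.6, 1.0)

for name, live in [("same", live_same), ("shifted", live_shifted)]:
    score = psi(training, live, edges)
    print(name, round(score, 3), "ALERT" if score > 0.2 else "ok")
```

An alert like this is a natural input for the automated retraining triggers listed above.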

4. Human-Centered AI: Trust, UX, and Feedback

An AI system is only as useful as its interface. Whether it’s a chatbot, a recommendation engine, or a decision assistant, user trust and usability are critical.

Best Practices:

Show confidence scores and explanations

Offer user overrides and corrections

Provide feedback channels to learn from real use

Great AI design means thinking beyond answers—it means designing interactions.
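Confidence scores and user overrides can be wired together with a simple policy. The system answers directly when the model is confident and flags the answer for review otherwise. A user correction takes precedence and is logged as feedback. The 0.8 threshold and the field names are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Prediction:
    label: str
    confidence: float  # model's probability for `label`, in [0, 1]

def present(pred: Prediction, threshold: float = 0.8) -> str:
    """Render a prediction with its confidence; low-confidence ones ask for review."""
    pct = f"{pred.confidence:.0%}"
    if pred.confidence >= threshold:
        return f"{pred.label} ({pct} confident)"
    return f"Possibly {pred.label} ({pct} confident), please review"

feedback_log = []  # corrections collected for later retraining

def resolve(pred: Prediction, user_override: Optional[str] = None) -> str:
    """A user override wins over the model and is recorded as feedback."""
    if user_override is not None and user_override != pred.label:
        feedback_log.append({"predicted": pred.label, "corrected": user_override})
        return user_override
    return pred.label

print(present(Prediction("invoice", 0.93)))
print(present(Prediction("receipt", 0.55)))
print(resolve(Prediction("receipt", 0.55), user_override="invoice"), feedback_log)
```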

5. AI Infrastructure: Scaling from Prototype to Product

AI prototypes often run well on a laptop or in a Colab notebook. But scaling to production takes planning.

Infrastructure Priorities:

Reproducibility: Can results be recreated and audited?

Resilience: Can the system handle spikes, downtime, or malformed input?

Observability: Are failures, drifts, and bottlenecks visible in real time?

Popular Tools:

Training: PyTorch, Hugging Face, JAX

Experiment tracking: MLflow, Weights & Biases

Serving: Kubernetes, Triton, Ray Serve

Monitoring: Arize, Fiddler, Prometheus

6. Retrieval-Augmented Generation (RAG): Smarter Outputs

LLMs like GPT-4 are powerful—but they hallucinate. RAG is a strategy that combines an LLM with a retrieval engine to ground responses in real documents.

How It Works:

A user asks a question.

The system searches internal documents for relevant content.

That content is passed to the LLM to inform its answer.
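The three steps can be sketched end to end without external services. Here, retrieval is a simple word-overlap score standing in for the embedding search a production system would typically use. The LLM call itself is left out, so the sketch stops at building the grounded prompt. The documents and the prompt wording are hypothetical.

```python
import re

DOCS = {  # hypothetical internal knowledge base
    "refunds.md": "Refunds are issued within 14 days of a return being received.",
    "shipping.md": "Standard shipping takes 3 to 5 business days within the EU.",
    "warranty.md": "Hardware carries a two year limited warranty.",
}

def tokenize(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, docs, k=2):
    """Step 2: rank documents by how many question words they share."""
    q = tokenize(question)
    scored = sorted(docs.items(), key=lambda kv: len(q & tokenize(kv[1])), reverse=True)
    return [(name, text) for name, text in scored[:k] if q & tokenize(text)]

def build_prompt(question, passages):
    """Step 3: pass retrieved content to the LLM and ask it to stay grounded."""
    context = "\n".join(f"[{name}] {text}" for name, text in passages)
    return (
        "Answer using only the sources below. If they do not contain the answer, say so.\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )

question = "How many days until refunds are issued?"  # Step 1: the user asks
passages = retrieve(question, DOCS)
prompt = build_prompt(question, passages)
print(prompt)
```

Labelling each passage with its source name lets the model cite where an answer came from, which also helps users check it.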

Benefits:

Improved factual accuracy

Lower risk of hallucination

Dynamic adaptation to private or evolving data

RAG is becoming a default approach for enterprise AI assistants, copilots, and document intelligence systems.

7. Agents: From Text Completion to Action

The next step in AI development is agency—building systems that don’t just complete text but can take action, call APIs, use tools, and reason over time.

What Makes an Agent:

Memory: Stores previous interactions and state

Planning: Determines steps needed to reach a goal

Tool Use: Calls calculators, web search, databases, etc.

Autonomy: Makes decisions and adapts to context

Frameworks like LangChain, AutoGen, and CrewAI are making it easier to build such systems.

Agents transform AI from a passive responder into an active problem solver.
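Stripped of any framework, an agent is a loop: plan the next step, call a tool, store the result in memory, and stop when the goal is met. The sketch below uses a hard-coded planner in place of an LLM so that it runs deterministically. The tool names and the task are hypothetical. Frameworks such as LangChain, AutoGen, or CrewAI wrap this kind of loop in their own APIs.

```python
import operator

def calculator(expr: str) -> float:
    """Tool: evaluate 'a op b' for +, -, *, / without using eval()."""
    ops = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}
    a, op, b = expr.split()
    return ops[op](float(a), float(b))

def lookup_price(item: str) -> float:
    """Tool: hypothetical price database."""
    return {"widget": 2.5, "gadget": 4.0}[item]

TOOLS = {"calculator": calculator, "lookup_price": lookup_price}

def plan_next_step(goal, memory):
    """Stand-in for an LLM planner: choose the next tool call from what is known so far."""
    if "price" not in memory:
        return ("lookup_price", goal["item"])
    if "total" not in memory:
        return ("calculator", f"{memory['price']} * {goal['quantity']}")
    return None  # goal reached

def run_agent(goal, max_steps=5):
    memory = {}  # state carried across steps
    for _ in range(max_steps):
        step = plan_next_step(goal, memory)
        if step is None:
            break
        tool, arg = step
        result = TOOLS[tool](arg)
        memory["price" if tool == "lookup_price" else "total"] = result
    return memory

print(run_agent({"item": "widget", "quantity": 4}))
```

The `max_steps` cap is a simple guard against an agent that never reaches its goal.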

8. Challenges of Real-World AI Deployment

Despite progress, several obstacles remain:

Hallucination & Misinformation

Solution: RAG, fact-checking, prompt engineering

Data Privacy & Security

Solution: On-premise models, encryption, anonymization

Bias & Fairness

Solution: Audits, synthetic counterbalancing, human review

Cost & Latency

Solution: Distilled models, quantization, model routing

Building AI is as much about risk management as it is about optimization.
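Quantization, one of the cost and latency remedies above, trades a little precision for lower memory use and cost. The arithmetic behind symmetric int8 quantization is small enough to show directly: divide each weight by a scale, round it, and clip it to [-127, 127]. This is a per-tensor sketch in plain Python. Real toolchains operate on tensors and often use per-channel scales.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w ~= scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Map int8 codes back to approximate float weights."""
    return [scale * v for v in q]

weights = [0.42, -1.27, 0.003, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(w - r) for w, r in zip(weights, restored))
print(q, round(scale, 4), round(max_err, 4))
```

Rounding bounds the reconstruction error of each weight by half the scale, so small weights such as 0.003 can collapse to zero.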

9. Case Study: AI-Powered Customer Support

Let’s consider a real-world AI system in production: a support copilot for a global SaaS company.

Objectives:

Auto-answer tickets

Summarize long threads

Recommend actions to support agents

Stack Used:

LLM backend (Claude/GPT-4)

RAG over internal KB + docs

Feedback loop for agent thumbs-up/down

Cost-aware routing: small model for basic queries, big model for escalations
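Cost-aware routing amounts to a small decision step in front of two models. The case study does not describe its actual routing rule, so this minimal sketch makes several assumptions: hypothetical model names, a keyword-and-length heuristic that separates basic queries from escalations, and made-up relative costs.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Route:
    model: str
    relative_cost: float  # illustrative numbers, not real pricing

SMALL = Route("small-model", 1.0)  # hypothetical model names
LARGE = Route("large-model", 15.0)

ESCALATION_TERMS = ("refund", "outage", "legal", "cancel", "security")

def choose_route(ticket_text: str, customer_tier: str = "standard") -> Route:
    """Send routine tickets to the cheap model; escalate risky or complex ones."""
    text = ticket_text.lower()
    if customer_tier == "enterprise":
        return LARGE
    if any(term in text for term in ESCALATION_TERMS):
        return LARGE
    if len(text.split()) > 150:  # long threads tend to need more reasoning
        return LARGE
    return SMALL

tickets = ["How do I reset my password?", "We have an outage in production", "Where is the invoice page?"]
routes = [choose_route(t) for t in tickets]
print([r.model for r in routes], sum(r.relative_cost for r in routes))
```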

Results:

60% faster response time

30% reduction in escalations

Constant improvement via real usage data

This wasn’t just a model—it was a system engineered to work within a business workflow.

10. The Future of AI Development

The frontier of AI development lies in modularity, autonomy, and context-awareness.

Trends to Watch:

Multimodal AI: Models that combine vision, audio, and text (e.g., GPT-4o)

Agentic AI: AI systems that plan and act over time

On-device AI: Privacy-first, low-latency inference

LLMOps: Managing the lifecycle of large models in production

Hybrid Systems: AI + rules + human oversight

The next generation of AI won’t just talk—it will listen, learn, act, and adapt.

Conclusion: Building AI That Lasts

Creating real-world AI is more than tuning a model. It’s about crafting an ecosystem—of data, infrastructure, interfaces, and people—that can support intelligent behavior at scale.

To build AI that works in the real world, teams must:

Think in systems, not scripts

Optimize for outcomes, not just metrics

Design for feedback, not just deployment

Prioritize trust, not just performance

As the field matures, successful AI developers will be those who combine cutting-edge models with solid engineering, clear ethics, and human-first design.

Because in the end, intelligence isn’t just about output—it’s about impact.


In the rapidly evolving landscape of modern business, competitive pressure has made digital transformation more urgent than ever. Central to this transformation is the strategic use of data, which underpins insight generation and predictive analysis. Data has effectively become the modern equivalent of gold, attracting substantial investment and driving widespread adoption of data analytics among businesses worldwide.

This shift is not without its challenges. Building end-to-end applications that turn data into core insights and actionable findings can be time-consuming and costly, depending on how the data pipelines are constructed. These comprehensive analytics applications, often called data products, demand careful design and implementation.

This article explores data products, data quality, and data governance, and explains why each matters in contemporary data systems. It also compares data quality with data governance to clarify how each contributes to the success of data-driven initiatives in today's competitive landscape.

What are Data Products?

Within data analytics, processes typically fall into three phases: data engineering, reporting, and machine learning. Data engineering ingests raw data from diverse sources into a centralized repository such as a data lake or data warehouse. This phase runs ETL (extract, transform, and load) operations to refine the raw data, then loads the processed data into analytical databases for the downstream reporting and machine learning phases.
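The extract, transform, and load steps can be illustrated with the Python standard library alone. This sketch makes several assumptions. It reads hypothetical raw order records from CSV text standing in for a source system. It cleans and aggregates them, then loads the result into an in-memory SQLite table that stands in for an analytical database. The column names are also illustrative.

```python
import csv
import io
import sqlite3

RAW_CSV = """order_id,region,amount
1,north,120.50
2,South,80
3,north,
4,south,40.25
"""

def extract(text):
    """Read raw rows from the source (here, CSV text)."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Normalize region names, drop rows without an amount, total per region."""
    totals = {}
    for row in rows:
        if not row["amount"]:
            continue
        region = row["region"].strip().lower()
        totals[region] = totals.get(region, 0.0) + float(row["amount"])
    return sorted(totals.items())

def load(conn, aggregates):
    """Write aggregates into the analytical store."""
    conn.execute("CREATE TABLE IF NOT EXISTS sales_by_region (region TEXT PRIMARY KEY, total REAL)")
    conn.executemany("INSERT OR REPLACE INTO sales_by_region VALUES (?, ?)", aggregates)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))
print(conn.execute("SELECT region, total FROM sales_by_region ORDER BY region").fetchall())
```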
In the reporting phase, the focus shifts to visualizing the aggregated data with business intelligence tools. This visualization is crucial for uncovering key insights and supporting data-driven decision-making. When data is presented clearly and intuitively, stakeholders can use it to inform strategic initiatives and operational optimizations.

The machine learning phase, by contrast, uses the aggregated data to build predictive models and derive actionable insights. It involves feature extraction, hypothesis formulation, model development, deployment to production environments, and ongoing monitoring to ensure data quality and workflow integrity.

Any software service or tool that orchestrates this end-to-end pipeline, from data ingestion and visualization to machine learning, is commonly called a data product. Data products are a pivotal component of modern data-driven enterprises. They streamline and automate the entire process, making it more manageable and saving considerable time. They also offer a range of outputs, including raw data, processed and aggregated data, data as a machine learning service, and actionable insights.

What is Data Quality?

Data quality refers to the reliability, accuracy, consistency, and completeness of data within a dataset or system. It also covers correctness, timeliness, relevance, and usability. In simpler terms, data quality reflects how well the data represents the real-world entities or phenomena it is meant to describe. High-quality data is free from errors, inconsistencies, and biases, which makes it suitable for analysis, decision-making, and other purposes.
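Several of these dimensions can be measured directly. This minimal sketch computes completeness, validity, and uniqueness scores for a small table of hypothetical customer records. The field names, the email pattern, and the definition of "valid" are all illustrative assumptions.

```python
import re

EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[a-z]{2,}$", re.IGNORECASE)

def quality_report(rows, required=("id", "email", "country")):
    """Return simple data quality scores between 0 and 1."""
    n = len(rows)
    filled = sum(all(r.get(f) not in (None, "") for f in required) for r in rows)
    valid_email = sum(bool(EMAIL_RE.match(r.get("email") or "")) for r in rows)
    unique_ids = len({r.get("id") for r in rows})
    return {
        "completeness": filled / n,    # rows with every required field present
        "validity": valid_email / n,   # rows whose email matches the pattern
        "uniqueness": unique_ids / n,  # 1.0 means no duplicate ids
    }

customers = [
    {"id": 1, "email": "ana@example.com", "country": "PT"},
    {"id": 2, "email": "bob@example", "country": "US"},
    {"id": 2, "email": "bob@example.org", "country": ""},
    {"id": 4, "email": "dee@example.net", "country": "DE"},
]
print(quality_report(customers))
```

Tracking scores like these per load makes a drop in quality visible before it reaches reports or models.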
The Mission of Data Quality in Data Products

In the realm of data products, where decisions are often made based on insights derived from data, ensuring high data quality is paramount. The mission of data quality in data products is multifaceted.

First and foremost, data quality is the foundation for all subsequent analyses, predictions, and decisions. Reliable data fosters trust among users and stakeholders and encourages adoption of data products. It also drives innovation, optimization, and compliance efforts. High-quality data enables seamless integration, collaboration, and interoperability across systems and platforms, maximizing the value derived from data assets.

What is Data Governance?

Data governance is the framework of policies, procedures, and practices that organizations implement to ensure the proper management, usage, quality, security, and compliance of their data assets. It involves defining roles, responsibilities, and decision-making processes for data management. It also establishes standards and guidelines for data collection, storage, processing, and sharing. Data governance aims to maximize the value of data assets while minimizing risk and ensuring alignment with organizational objectives and regulatory requirements.

The Mission of Data Governance in Data Products

In data products, data governance ensures accountability, transparency, and reliability in data management. It maintains data quality and integrity, fostering trust among users. It also facilitates regulatory compliance, strengthens data security, and promotes efficient data use, supporting informed decision-making and collaboration.

By establishing clear roles, responsibilities, and standards, data governance provides a structured framework for managing data throughout its lifecycle. This framework reduces errors and inconsistencies, keeping data accurate and usable for analysis. Data governance also guards against data breaches and unauthorized access. At the same time, it enables integration and sharing of data across systems, so that data serves organizational objectives.
Data Quality vs. Data Governance: A Brief Comparison

Data quality focuses on the accuracy, completeness, and reliability of data, ensuring it meets intended use requirements. It guarantees that data is error-free and suitable for analysis and decision-making. Data governance, meanwhile, establishes the framework, policies, and procedures for managing data effectively. It ensures data is managed securely, complies with regulations, and aligns with organizational goals. In essence, data quality ensures the reliability of data, while data governance provides the structure and oversight to manage data effectively. Both are crucial for informed decision-making and organizational success.

Conclusion

In summary, data quality and data governance play distinct yet complementary roles in the realm of data products. While data quality ensures the reliability and accuracy of data, data governance provides the necessary framework and oversight for effective data management. Together, they form the foundation for informed decision-making, regulatory compliance, and organizational success in the data-driven era.


Developing and Deploying AI/ML Applications: From Idea to Production

In the rapidly evolving world of artificial intelligence (AI) and machine learning (ML), developing and deploying intelligent applications is no longer a futuristic concept — it's a competitive necessity. Whether it's predictive analytics, recommendation engines, or computer vision systems, AI/ML applications are transforming industries at scale.

This article breaks down the key phases and considerations for developing and deploying AI/ML applications in modern environments — without diving into complex coding.

💡 Phase 1: Problem Definition and Use Case Design

Before writing a single line of code or selecting a framework, organizations must start with clear business goals:

What problem are you solving?

What kind of prediction or automation is expected?

Is AI/ML the right solution?

Examples:

🔹 Forecasting sales

🔹 Classifying customer feedback

🔹 Detecting fraudulent transactions

📊 Phase 2: Data Collection and Preparation

Data is the foundation of AI. High-quality, relevant data fuels accurate models.

Steps include:

Gathering structured or unstructured data (logs, images, text, etc.)

Cleaning and preprocessing to remove noise

Feature selection and engineering to extract meaningful inputs

Tools often used: Jupyter Notebooks, Apache Spark, or cloud-native services like AWS Glue or Azure Data Factory.
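Cleaning and feature engineering often start with two steps: filling missing values and putting numeric features on a common scale. This dependency-free sketch assumes median imputation and z-score standardization. The key detail is that the statistics are learned from training data only and then reused on new data. The feature itself is hypothetical.

```python
import statistics

def fit_preprocessor(column):
    """Learn imputation and scaling statistics from training values (None = missing)."""
    present = [v for v in column if v is not None]
    median = statistics.median(present)
    filled = [median if v is None else v for v in column]
    mean = statistics.fmean(filled)
    std = statistics.pstdev(filled) or 1.0  # guard against constant columns
    return {"median": median, "mean": mean, "std": std}

def transform(column, params):
    """Apply the learned statistics to any split (train, validation, or production)."""
    return [((params["median"] if v is None else v) - params["mean"]) / params["std"] for v in column]

train_income = [30.0, None, 50.0, 70.0]  # hypothetical feature, in thousands
params = fit_preprocessor(train_income)
print(params, [round(x, 3) for x in transform(train_income, params)])
print([round(x, 3) for x in transform([None, 90.0], params)])
```

Reusing `params` on unseen data, rather than refitting, keeps validation and production inputs on the same scale as training and avoids leaking information from them.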

Phase 3: Model Development and Training

Once data is prepared, ML engineers select algorithms and train models. Common types include:

Classification (e.g., spam detection)

Regression (e.g., predicting prices)

Clustering (e.g., customer segmentation)

Deep Learning (e.g., image or speech recognition)

Key concepts:

Training vs. validation datasets

Model tuning (hyperparameters)

Accuracy, precision, and recall

Cloud platforms like SageMaker, Vertex AI, or OpenShift AI simplify this process with scalable compute and managed tools.
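Accuracy, precision, and recall come straight from counting outcomes on the validation set. Here is a small sketch for a binary classifier, using spam detection with hypothetical labels:

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, and recall for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    correct = sum(t == p for t, p in pairs)
    return {
        "accuracy": correct / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,  # of flagged items, share truly positive
        "recall": tp / (tp + fn) if tp + fn else 0.0,     # of actual positives, share caught
    }

y_true = [1, 0, 1, 1, 0, 0, 1, 0]  # 1 = spam (validation labels)
y_pred = [1, 0, 0, 1, 1, 1, 1, 0]
print(classification_metrics(y_true, y_pred))
```

Precision and recall pull in different directions. Tuning a decision threshold usually raises one at the expense of the other, so which matters more depends on the use case.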

Phase 4: Model Evaluation and Testing

Before deploying a model, it’s critical to validate its performance on unseen data.

Steps:

Measure performance against benchmarks

Avoid overfitting or bias

Ensure the model behaves well in real-world edge cases

This helps in building trustworthy, explainable AI systems.
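A simple benchmark worth measuring against is a trivial baseline. If a model cannot beat "always predict the most common class" on held-out data, its headline accuracy means little. Here is a sketch with hypothetical fraud labels in which positives are rare:

```python
from collections import Counter

def majority_baseline_accuracy(y_train, y_test):
    """Accuracy of always predicting the most frequent training label."""
    majority = Counter(y_train).most_common(1)[0][0]
    return sum(y == majority for y in y_test) / len(y_test)

def model_accuracy(y_test, y_pred):
    return sum(t == p for t, p in zip(y_test, y_pred)) / len(y_test)

y_train = [0] * 95 + [1] * 5  # 5% fraud in training data
y_test = [0] * 18 + [1] * 2   # unseen data
y_pred = [0] * 20             # a model that never flags fraud

baseline = majority_baseline_accuracy(y_train, y_test)
model = model_accuracy(y_test, y_pred)
print(f"model={model:.2f} baseline={baseline:.2f} beats_baseline={model > baseline}")
```

Here, 90% accuracy looks strong but only ties the baseline. This is why precision and recall on the rare class also belong in the evaluation.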

🚀 Phase 5: Deployment and Inference

Deployment involves integrating the model into a production environment where it can serve real users.

Approaches include:

Batch Inference (run periodically on data sets)

Real-time Inference (API-based predictions on-demand)

Edge Deployment (models deployed on devices, IoT, etc.)

Tools used for deployment:

Kubernetes or OpenShift for container orchestration

MLflow or Seldon for model tracking and versioning

APIs for front-end or app integration
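Batch and real-time inference can share the same model code; what differs is how requests arrive. This minimal sketch uses a hypothetical scoring function in place of a trained model. The batch path scores a whole dataset on a schedule. The real-time path validates one JSON request and returns a JSON response, as an API handler would.

```python
import json

def score(features: dict) -> float:
    """Hypothetical stand-in for a trained model: a fixed linear score."""
    return round(0.3 * features["amount"] / 100 + 0.5 * features["is_new_customer"], 3)

def batch_inference(records):
    """Batch: score every record, e.g. in a nightly job writing to a table."""
    return [{**r, "score": score(r)} for r in records]

def handle_request(body: str) -> str:
    """Real-time: one request in, one JSON response out (what an API endpoint would wrap)."""
    try:
        features = json.loads(body)
        return json.dumps({"score": score(features)})
    except (ValueError, KeyError, TypeError) as exc:
        return json.dumps({"error": f"bad request: {exc.__class__.__name__}"})

nightly = batch_inference([{"amount": 200, "is_new_customer": 1}, {"amount": 50, "is_new_customer": 0}])
print(nightly)
print(handle_request('{"amount": 200, "is_new_customer": 1}'))
print(handle_request('{"amount": 200}'))
```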

🔄 Phase 6: Monitoring and Continuous Learning

Once deployed, the job isn’t done. AI/ML models need to be monitored and retrained over time to stay relevant.

Focus on:

Performance monitoring (accuracy over time)

Data drift detection

Automated retraining pipelines

MLOps (Machine Learning Operations) helps automate and manage this lifecycle, ensuring scalability and reliability.
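Monitoring and automated retraining can be connected by a simple rule. The system tracks accuracy over a rolling window of labelled production outcomes and triggers retraining when it falls below an agreed floor. Here is a sketch with a hypothetical window size, threshold, and callback:

```python
from collections import deque

class AccuracyMonitor:
    """Rolling accuracy over the last `window` labelled predictions."""

    def __init__(self, window=100, floor=0.85, on_degraded=None):
        self.outcomes = deque(maxlen=window)
        self.floor = floor
        self.on_degraded = on_degraded or (lambda acc: None)
        self.triggered = False

    def record(self, predicted, actual):
        self.outcomes.append(predicted == actual)
        full = len(self.outcomes) == self.outcomes.maxlen
        acc = sum(self.outcomes) / len(self.outcomes)
        # Only judge once the window is full, and trigger once per degradation.
        if full and acc < self.floor and not self.triggered:
            self.triggered = True
            self.on_degraded(acc)
        return acc

events = []  # the callback would normally start a retraining pipeline
monitor = AccuracyMonitor(window=10, floor=0.8, on_degraded=lambda acc: events.append(f"retrain (acc={acc:.2f})"))
for _ in range(10):
    monitor.record(1, 1)  # healthy period: all correct
for _ in range(3):
    monitor.record(1, 0)  # drift: predictions start failing
print(events)
```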

Best Practices for AI/ML Application Development

✅ Start with business outcomes, not just algorithms

✅ Use version control for both code and data

✅ Prioritize data ethics, fairness, and security

✅ Automate with CI/CD and MLOps workflows

✅ Involve cross-functional teams: data scientists, engineers, and business users

🌐 Real-World Examples

Retail: AI recommendation systems that boost sales

Healthcare: ML models predicting patient risk

Finance: Real-time fraud detection algorithms

Manufacturing: Predictive maintenance using sensor data

Final Thoughts

Building AI/ML applications goes beyond model training — it’s about designing an end-to-end system that continuously learns, adapts, and delivers real value. With the right tools, teams, and practices, organizations can move from experimentation to enterprise-grade deployments with confidence.

Visit our website for more details - www.hawkstack.com


DevOps vs SRE vs Platform Engineering — Where Does BuildPiper

BuildPiper is an enterprise-grade central DevSecOps platform that enables modern engineering teams to deliver secure, scalable applications across cloud-native environments with high velocity. It provides a single pane of glass to manage infrastructure orchestration, CI/CD pipeline automation, security enforcement, and observability — all within one seamless, production-ready platform.

With native, one-click integrations across 50+ leading tools — including GitHub, GitLab, Jenkins, ArgoCD, Kubernetes, Prometheus, Datadog, AWS, Azure, and more — BuildPiper fits effortlessly into modern enterprise ecosystems. It supports Day 0 Kubernetes operations, multi/hybrid-cloud setups, cloud cost governance, and self-service environments to drive developer efficiency without operational sprawl.

BuildPiper helps teams improve DORA metrics like deployment frequency, lead time for changes, and MTTR, while also enabling audit readiness, developer insights, and real-time visibility. Enterprises using BuildPiper typically report 30–40% cost savings, faster release velocity, and stronger platform resilience across environments.
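For reference, three of the DORA metrics named here can be computed from ordinary deployment and incident records. This is a tool-agnostic sketch with hypothetical data, not a description of how BuildPiper computes them. Definitions also vary between teams, for example whether lead time starts at commit or at merge.

```python
from datetime import datetime, timedelta
from statistics import median

deployments = [  # (commit time, deploy time), hypothetical
    (datetime(2025, 3, 3, 9), datetime(2025, 3, 3, 15)),
    (datetime(2025, 3, 4, 10), datetime(2025, 3, 5, 10)),
    (datetime(2025, 3, 6, 8), datetime(2025, 3, 6, 12)),
]
incidents = [  # (detected, restored), hypothetical
    (datetime(2025, 3, 5, 11), datetime(2025, 3, 5, 12, 30)),
    (datetime(2025, 3, 7, 9), datetime(2025, 3, 7, 9, 30)),
]

def deployment_frequency(deploys, period_days):
    return len(deploys) / period_days  # deployments per day

def median_lead_time(deploys):
    return median(deploy - commit for commit, deploy in deploys)

def mttr(incs):
    total = sum((restored - detected for detected, restored in incs), timedelta())
    return total / len(incs)

print(f"{deployment_frequency(deployments, 7):.2f}/day", median_lead_time(deployments), mttr(incidents))
```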

Trusted by brands like Airtel, Lenskart, McKesson, WM, and several Fortune 100 enterprises, BuildPiper brings the standardization, velocity, control, and precision needed to engineer modern software delivery — securely, efficiently, and at scale.


The Future of AI in Manufacturing: Trends to Watch in 2025

Artificial Intelligence (AI) is no longer a futuristic concept in manufacturing—it’s a present-day powerhouse reshaping every corner of the industry. As we move through 2025, the integration of AI technologies is accelerating, driving smarter production lines, optimizing operations, and redefining workforce dynamics. Here’s a deep dive into the most transformative AI trends set to shape the manufacturing landscape in 2025 and beyond.

1. Hyperautomation and Autonomous Factories

In 2025, manufacturing is leaning heavily into hyperautomation—the orchestration of advanced technologies like AI, machine learning (ML), robotics, and the Industrial Internet of Things (IIoT) to automate complex processes.

Autonomous factories, where machinery self-monitors, self-adjusts, and even self-repairs with minimal human intervention, are becoming a reality. These smart factories rely on real-time data, predictive analytics, and AI-driven systems to make instant decisions, optimize throughput, and reduce downtime.

Key Example: Tesla and Siemens are pushing the envelope with AI-powered factories that adapt on the fly, resulting in faster production cycles and improved product quality.

2. Predictive and Prescriptive Maintenance

Maintenance is no longer reactive. In 2025, manufacturers are using AI to forecast when equipment will fail—before it happens.

Predictive maintenance uses historical data, sensor input, and AI models to detect anomalies. Prescriptive maintenance goes a step further by recommending specific actions to avoid failures.

Benefits include:

Reduced unplanned downtime

Lower repair costs

Prolonged equipment life

Stat Insight: According to Deloitte, predictive maintenance can reduce breakdowns by 70% and maintenance costs by 25%.
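Anomaly detection for predictive maintenance can be as simple as flagging sensor readings that deviate sharply from their recent history. This minimal sketch applies a rolling z-score to hypothetical vibration readings. The window length and the three-standard-deviation threshold are illustrative. Production systems typically use models trained on historical failure data.

```python
from collections import deque
from statistics import fmean, pstdev

def detect_anomalies(readings, window=5, threshold=3.0):
    """Return indices whose value is more than `threshold` std devs from the rolling mean."""
    history = deque(maxlen=window)
    anomalies = []
    for i, x in enumerate(readings):
        if len(history) == window:
            mu, sigma = fmean(history), pstdev(history)
            if sigma > 0 and abs(x - mu) / sigma > threshold:
                anomalies.append(i)
                continue  # keep the spike out of the baseline
        history.append(x)
    return anomalies

vibration_mm_s = [2.1, 2.0, 2.2, 2.1, 2.0, 2.1, 2.2, 6.8, 2.1, 2.0, 2.2]
print(detect_anomalies(vibration_mm_s))
```

A prescriptive layer would map each flagged reading to an action, such as scheduling an inspection of that machine.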

3. AI-Enhanced Quality Control

AI vision systems are revolutionizing quality assurance. In 2025, computer vision combined with deep learning can identify even microscopic defects in real time—far beyond the accuracy and speed of human inspectors.

Trends in 2025:

Real-time defect detection

AI models trained on vast image datasets

Adaptive quality control systems that learn and evolve

Industries like automotive and semiconductor manufacturing are already deploying these systems for better precision and consistency.

4. Generative Design and Digital Twins

Generative design, powered by AI, allows engineers to input goals and constraints, and the software suggests thousands of design variations optimized for performance and manufacturability.

Digital twins—virtual replicas of physical assets—are now powered by AI to simulate, predict, and optimize production performance. These twins learn from real-world data and provide insights into:

Product development

Production efficiency

Predictive simulations

By 2025, the synergy between digital twins and AI will allow real-time optimization of complex manufacturing ecosystems.

5. AI in Supply Chain Optimization

In a post-pandemic world, supply chain resilience is critical. AI is key to making supply chains smarter, more responsive, and adaptable to disruptions.

2025 Trends:

Real-time inventory tracking and demand forecasting

AI-driven supplier risk assessments

Automated logistics route optimization

By crunching vast datasets, AI ensures materials arrive just in time, reducing costs and delays.
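Demand forecasting methods range from classical statistics to deep learning. As a small illustration of the forecasting step, the sketch applies simple exponential smoothing, one of the oldest baseline methods, to hypothetical weekly demand. It does not describe any particular vendor's AI. The smoothing factor alpha = 0.5 is an arbitrary choice. Each level is alpha times the newest observation plus (1 - alpha) times the previous level.

```python
def exponential_smoothing(series, alpha=0.5):
    """Return smoothed levels; the last level is the one-step-ahead forecast."""
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = series[0]
    levels = [level]
    for x in series[1:]:
        level = alpha * x + (1 - alpha) * level  # blend newest observation with history
        levels.append(level)
    return levels

weekly_units = [100, 120, 110, 130]
levels = exponential_smoothing(weekly_units, alpha=0.5)
print(levels, "next week forecast:", levels[-1])
```

A higher alpha reacts faster to recent changes but is noisier. Seasonal data usually calls for extensions such as Holt-Winters.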

6. Human-AI Collaboration and Upskilling

The narrative isn’t AI vs. humans—it’s AI with humans. In 2025, manufacturers are investing heavily in reskilling and upskilling their workforce to work alongside AI tools.

Examples include:

AR/VR interfaces for AI-assisted training

Human-in-the-loop systems for decision validation

AI-powered cobots (collaborative robots) working side-by-side with technicians

Companies are rethinking workforce models, blending human creativity with AI precision to create agile, future-ready teams.

7. Sustainability Through AI

Sustainability is no longer optional—it’s a strategic imperative. Manufacturers are leveraging AI to minimize waste, reduce energy consumption, and ensure greener operations.

How AI is enabling sustainability in 2025:

Smart energy grid management in factories

Optimization of raw material use

Real-time emissions monitoring

Sustainable manufacturing not only meets regulatory demands but also aligns with growing consumer and investor expectations.

Conclusion: AI as the Backbone of Smart Manufacturing

2025 marks a pivotal year in the evolution of AI in manufacturing. From autonomous operations to sustainable production, AI is not just a tool—it’s becoming the backbone of modern manufacturing. The companies that lead this transformation will be the ones that embrace AI’s potential, adapt quickly, and invest in both technology and talent.

As innovation continues to evolve, one thing is clear: the factories of the future are intelligent, efficient, and deeply interconnected—with AI at the core.


Inside Agentic Workflows: How AI Agents Can Drive Cross-System Decision-Making

85% of enterprise leaders say AI will revolutionize business processes—yet many struggle to integrate AI into cross-system decision-making. How can AI agents bridge this gap?

Artificial intelligence (AI) is redefining business operations, particularly in cross-system decision-making. AI-driven workflows, powered by intelligent agents, are transforming how organizations integrate and optimize processes across multiple platforms. This blog post explores agentic workflows, their key features, and how they enhance business outcomes.

Designed for enterprise architects, CTOs, AI solution leads, innovation officers, and data systems designers, this guide provides a comprehensive understanding of AI-driven workflows and their role in orchestrating cross-tool logic and actions.

Understanding Agents in This Context

An AI agent is an intelligent system that combines decision logic, action, and memory to autonomously execute tasks. These agents perceive information from multiple sources, analyze data, make decisions, and seamlessly execute actions across different systems.

Unlike traditional automation systems, which follow rigid, rule-based processes, AI agents adapt dynamically, optimizing processes in real time based on contextual reasoning and stored knowledge.

Automations vs. Workflows Powered by Agents

Understanding the distinction between traditional automations and agentic workflows is crucial:

Automations: These are pre-programmed processes designed to execute specific tasks following a set of predefined rules. They work well for repetitive, predictable tasks such as sending email notifications, updating records in a database, or executing scheduled jobs. However, they lack the flexibility to adjust to unforeseen changes or evolving conditions.

Agentic Workflows: Unlike traditional automation, agentic workflows integrate AI-driven decision-making into the process. AI agents can:

Assess real-time data instead of relying on static rules.

Adjust workflow paths dynamically based on contextual reasoning.

Handle unexpected conditions by modifying their actions or escalating issues.

Learn and improve over time, refining decision logic based on past outcomes.

For example, in a customer support workflow:

A traditional automation might route all refund requests over a certain amount to a human agent.

An AI agent-driven workflow could analyze historical refund data, customer sentiment, and fraud detection models to determine whether the refund can be automatically processed, needs additional verification, or should be escalated to a manager.
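The contrast can be sketched in code. The first function is the fixed rule. The second combines several signals into a three-way decision. The signal names, weights, and cut-offs are hypothetical. In a real agentic workflow, the fraud and sentiment inputs would come from the models mentioned above rather than being passed in directly.

```python
from dataclasses import dataclass

@dataclass
class RefundRequest:
    amount: float
    fraud_score: float      # 0..1, assumed output of a fraud-detection model
    sentiment: float        # -1..1, assumed output of a sentiment model
    prior_refunds_90d: int  # from historical refund data

def rule_based_route(req: RefundRequest, limit: float = 100.0) -> str:
    """Traditional automation: a single static threshold on the amount."""
    return "human_agent" if req.amount > limit else "auto_approve"

def agentic_route(req: RefundRequest) -> str:
    """Combine several signals into auto-approve, verify, or escalate."""
    if req.fraud_score > 0.7:
        return "escalate_to_manager"  # a strong fraud signal overrides everything else
    risk = req.fraud_score + 0.1 * req.prior_refunds_90d + req.amount / 1000
    if risk < 0.4:
        return "auto_approve"
    # Moderate risk: an unhappy customer goes to a manager, others get a verification step.
    return "escalate_to_manager" if req.sentiment < -0.5 else "request_verification"

cases = {
    "loyal, large refund": RefundRequest(150, 0.05, 0.2, 0),
    "small but suspicious": RefundRequest(60, 0.85, 0.0, 4),
    "large, moderate risk": RefundRequest(300, 0.2, 0.1, 1),
}
for name, req in cases.items():
    print(f"{name}: rule={rule_based_route(req)}, agent={agentic_route(req)}")
```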

Key Features of Agentic Workflows

Agentic workflows stand out due to several key capabilities:

1. Memory

AI agents store and recall information from past interactions, enabling better decision-making based on historical patterns and learned behaviors.

2. Dynamic Input Handling

Unlike static workflows, AI-driven systems process and respond to real-time data, adjusting actions dynamically to reflect new conditions.

3. Contextual Reasoning

AI agents consider the broader context of data, making informed decisions that go beyond simple rule-based automation.

“Advances in AI are making it possible to do more with less, and that’s going to improve the quality of life for billions of people.” — Mark Zuckerberg, CEO of Meta

Improving Outcomes vs. Chained Logic Rules

Traditional chained logic rules rely on predefined if-then sequences. While effective in structured scenarios, they lack adaptability when conditions change. These rules often struggle to handle complexity because they require explicit programming for every possible scenario and lack the ability to learn from previous outcomes.

AI-powered agentic workflows enhance business outcomes by:

Identifying patterns across systems and adjusting strategies accordingly.

Managing exceptions without human intervention.

Escalating decisions intelligently when necessary.

Optimizing processes dynamically based on real-time insights.

A Deeper Look: Why AI Agents Outperform Chained Logic Rules

Context Awareness: AI agents take into account the larger operational context rather than just following linear rule sets.

Adaptive Learning: Machine learning-powered agents can refine their decision-making over time, learning from new data and improving workflow efficiency.

Scalability: Unlike rigid rule-based workflows, AI-driven workflows can scale efficiently across systems, handling increasing complexity and larger datasets.

Reduced Maintenance Burden: Traditional rule-based systems require frequent updates to accommodate new conditions, whereas AI-driven systems self-adjust based on new insights.

Proactive Decision-Making: Instead of waiting for predefined triggers, AI agents can anticipate issues and take proactive actions before a problem arises.

Real-World Example: AI Agents in Supply Chain Management

In a supply chain scenario, a company may rely on multiple systems to manage inventory, demand forecasting, and order fulfillment. A chained logic rule-based system might dictate that:

If inventory drops below a set threshold, an order is automatically placed.

If demand is high, additional orders are scheduled.

However, this approach fails to account for real-world complexities such as:

Seasonal fluctuations.

Supplier delays.

Unexpected demand spikes.

An AI-driven agentic workflow would continuously assess:

Live inventory data.

Supplier availability.

Customer demand patterns.

Market trends.

The AI agent could then:

Adjust orders dynamically.

Predict and mitigate stock shortages.

Optimize supplier selection based on cost, reliability, and shipping times.

By shifting from a rigid, rules-based approach to an adaptive, AI-driven system, businesses can achieve greater accuracy, efficiency, and resilience in their operations.
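Here is a deliberately simplified sketch of the difference: a fixed-threshold rule next to a reorder decision recomputed from live signals. The adaptive version uses a standard inventory heuristic rather than a learned model. It sets the reorder point at expected demand during the supplier's lead time plus safety stock. The supplier data and parameter values are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Supplier:
    name: str
    unit_cost: float
    lead_time_days: float
    on_time_rate: float  # share of past orders delivered on time

def static_rule(stock: int, threshold: int = 100, order_qty: int = 200) -> int:
    """Chained-rule style: fixed threshold, fixed quantity."""
    return order_qty if stock < threshold else 0

def pick_supplier(suppliers):
    """Prefer reliable suppliers; break ties on cost, then speed."""
    return min(suppliers, key=lambda s: (s.on_time_rate < 0.9, s.unit_cost, s.lead_time_days))

def adaptive_order(stock, daily_demand_forecast, suppliers, safety_days=3, cover_days=14):
    """Reorder when stock will not cover demand during lead time plus a safety buffer."""
    supplier = pick_supplier(suppliers)
    reorder_point = daily_demand_forecast * (supplier.lead_time_days + safety_days)
    if stock >= reorder_point:
        return supplier.name, 0
    target = daily_demand_forecast * (supplier.lead_time_days + safety_days + cover_days)
    return supplier.name, round(target - stock)

suppliers = [
    Supplier("A", unit_cost=4.0, lead_time_days=10, on_time_rate=0.70),
    Supplier("B", unit_cost=4.5, lead_time_days=5, on_time_rate=0.95),
]
# Seasonal spike: forecast jumps from 8 to 20 units/day while stock sits at 150.
print(static_rule(150), adaptive_order(150, 8, suppliers), adaptive_order(150, 20, suppliers))
```

The fixed rule sees 150 units as "enough" and orders nothing. The adaptive version reorders once the forecast spike means stock will not last through the supplier's lead time.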

Example of an Agentic Workflow

Scenario: AI-Driven Purchase Order Approval

Consider a procurement workflow where an AI agent automates purchase order (PO) approvals:

Receives a PO request from the enterprise resource planning (ERP) system.

Analyzes inventory levels and demand forecasts.

Cross-checks budget constraints in the finance system.

Approves the order if forecasts are positive and inventory is low.

Escalates to a manager if conditions are unclear or financial risks exist.

This cross-system orchestration enhances efficiency and reduces human decision fatigue.
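These steps can be expressed as a single decision function over data gathered from the three systems. In this sketch, plain parameters replace the ERP, inventory, and finance lookups. The cut-offs (14 days of stock, positive demand growth, a 50,000 auto-approval limit) are hypothetical. A production agent would fetch these values through each system's API and log its reasoning.

```python
from dataclasses import dataclass

@dataclass
class PurchaseOrder:
    po_id: str
    sku: str
    amount: float

@dataclass
class Context:
    stock_days_remaining: float  # from the inventory system
    demand_growth: float         # forecast change vs last period, e.g. 0.1 = +10%
    budget_remaining: float      # from the finance system

def decide(po: PurchaseOrder, ctx: Context, max_auto_amount: float = 50_000.0):
    """Return (decision, reasons): approve only when every check passes."""
    reasons = []
    inventory_low = ctx.stock_days_remaining < 14
    forecast_positive = ctx.demand_growth > 0
    if po.amount > ctx.budget_remaining:
        reasons.append("exceeds remaining budget")
    if po.amount > max_auto_amount:
        reasons.append("amount above auto-approval limit")
    if not (inventory_low and forecast_positive):
        reasons.append("inventory/forecast do not clearly justify order")
    return ("approve" if not reasons else "escalate_to_manager"), reasons

print(decide(PurchaseOrder("PO-1001", "SKU-7", 12_000), Context(5, 0.12, 80_000)))
print(decide(PurchaseOrder("PO-1002", "SKU-7", 90_000), Context(5, 0.12, 80_000)))
```

Returning the reasons alongside the decision gives the manager the context for each escalation.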

Key Takeaways

Agentic Workflows: AI agents perceive, reason, and act autonomously across multiple platforms.

Cross-System Decisions: AI agents can approve POs, assign leads, or optimize supply chains dynamically.

Exception Handling: AI agents adapt to new information, escalate issues, and manage deviations effectively.

AI Logic Chaining: AI-driven workflows integrate multiple tools for maximum efficiency and decision-making power.

As businesses navigate increasingly complex landscapes, AI-driven agentic workflows present a game-changing opportunity. In order to stay ahead, begin mapping out decision pathways that span multiple systems and explore how AI-driven workflows can revolutionize your operations. By leveraging AI, organizations have an opportunity to create more adaptive and resilient processes, aligning their capabilities with the dynamic demands of today's environment. The key lies in taking a proactive approach—integrating AI thoughtfully and strategically to drive meaningful, long-term impact.


#datapeak#factr#saas#technology#agentic ai#artificial intelligence#machine learning#ai#ai-driven business solutions#machine learning for workflow#ai business tools#aiinnovation#ai solutions for data driven decision making#digitaltools#digital trends#digital technology#datadrivendecisions#dataanalytics#data driven decision making


Top Healthcare RCM Companies in USA: How to Choose the Best Revenue Cycle Management Partner for Your Practice

Introduction to Healthcare RCM Companies in USA

Managing finances in the healthcare world isn't just about billing patients. It's about orchestrating a finely tuned system that begins when a patient schedules an appointment and ends when every dollar owed is collected. That’s Revenue Cycle Management (RCM) in a nutshell—and Healthcare RCM Companies in the USA are the unsung heroes keeping this engine running smoothly.

What is Revenue Cycle Management (RCM)?

RCM is the process of tracking the revenue from patient care, from the initial appointment to final payment. It includes everything from verifying insurance to submitting claims and managing denials. Think of it as the financial bloodstream of your healthcare practice.

Why RCM is Crucial for Healthcare Providers

Without a strong RCM system, even the best care providers can suffer financially. Delayed payments, denied claims, and coding errors can strangle cash flow and drain resources.

The Role of Healthcare RCM Companies

What Services Do They Provide?

Eligibility verification

Medical coding

Billing and claims submission

Payment posting

Denial management

Financial reporting

How RCM Companies Improve Financial Health

They automate and optimize every step, allowing providers to collect faster, reduce errors, and improve patient satisfaction.

Components of Revenue Cycle Management

Patient Scheduling and Registration

Capturing accurate patient data upfront prevents headaches down the line.

Insurance Eligibility Verification

Verifying coverage in advance avoids rejected claims and surprises for patients.

Charge Capture and Coding

Proper documentation and coding are critical to maximizing reimbursement.

Claims Submission and Follow-Up

Submitting clean claims quickly means faster payments.

Payment Posting and Denial Management

Accurate posting and aggressive denial follow-ups keep the revenue cycle flowing.
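The components above form a sequence that every claim moves through. A minimal Python sketch can model that sequence as a state machine, so that a claim cannot, for example, be submitted before eligibility is verified. The stage names and allowed transitions are simplified, hypothetical assumptions for illustration; real payer workflows include more states (such as partial payments and secondary claims).

```python
from enum import Enum, auto

class ClaimStage(Enum):
    REGISTERED = auto()            # patient scheduled and registered
    ELIGIBILITY_VERIFIED = auto()  # insurance coverage confirmed
    CODED = auto()                 # charges captured and coded
    SUBMITTED = auto()             # claim sent to the payer
    DENIED = auto()                # payer rejected the claim
    APPEALED = auto()              # denial formally contested
    PAID = auto()                  # payment received and posted

# Allowed moves between stages (a simplified, hypothetical workflow).
TRANSITIONS = {
    ClaimStage.REGISTERED: {ClaimStage.ELIGIBILITY_VERIFIED},
    ClaimStage.ELIGIBILITY_VERIFIED: {ClaimStage.CODED},
    ClaimStage.CODED: {ClaimStage.SUBMITTED},
    ClaimStage.SUBMITTED: {ClaimStage.PAID, ClaimStage.DENIED},
    # Denial management: fix and resubmit the claim, or appeal the decision.
    ClaimStage.DENIED: {ClaimStage.SUBMITTED, ClaimStage.APPEALED},
    ClaimStage.APPEALED: {ClaimStage.PAID, ClaimStage.DENIED},
    ClaimStage.PAID: set(),        # terminal stage
}

class Claim:
    def __init__(self, claim_id: str) -> None:
        self.claim_id = claim_id
        self.stage = ClaimStage.REGISTERED
        self.history = [self.stage]  # audit trail of every stage reached

    def advance(self, new_stage: ClaimStage) -> None:
        """Move to new_stage, refusing any transition the workflow does not allow."""
        if new_stage not in TRANSITIONS[self.stage]:
            raise ValueError(
                f"{self.claim_id}: cannot go from {self.stage.name} to {new_stage.name}"
            )
        self.stage = new_stage
        self.history.append(new_stage)
```

A claim that is denied, corrected, and resubmitted would pass through DENIED, SUBMITTED, and then PAID, and the `history` list keeps that trail for reporting. Trying to jump straight from REGISTERED to SUBMITTED raises `ValueError`, the kind of guardrail that keeps unverified claims out of the submission queue.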

Benefits of Hiring Healthcare RCM Companies in USA

Increased Revenue and Profitability

Outsourcing RCM can increase collections by up to 30%.

Improved Efficiency and Reduced Errors

Trained experts spot issues before they become financial sinkholes.

Enhanced Compliance and Reporting

Specialist partners keep up with changing federal and state regulations, making compliance easier to maintain.

Focus on Patient Care

Free your staff to concentrate on what matters most: the patients.

Top Qualities to Look for in an RCM Company

Experience in Your Specialty

Choose a partner who understands your unique billing needs.

Technological Capabilities

Integration with your EMR and automation tools is a must.

Transparent Pricing and Communication

No one likes surprise fees or radio silence from vendors.

HIPAA Compliance and Data Security

Protecting patient data isn't optional—it’s mandatory.

Common Challenges Solved by RCM Companies

High Denial Rates

RCM experts dig into the causes and fix them fast.

Slow Reimbursements

Streamlined processes speed up the entire revenue cycle.

Incomplete Documentation

Professional coders ensure everything’s properly recorded.

Staff Overload

Outsourcing reduces the burden on your in-house team.

In-House vs Outsourced RCM: Which is Better?

Pros and Cons of In-House RCM

In-house RCM gives you direct control and visibility, but it consumes staff time and budget.

Pros and Cons of Outsourcing RCM

External vendors offer scalability, expertise, and potential cost savings, at the price of less direct control over day-to-day billing.

Making the Right Choice for Your Practice

Evaluate your budget, goals, and current team bandwidth.

Spotlight on My Billing Provider

Services Offered

From insurance verification to full-cycle billing and denial management.

Why They Stand Out Among Healthcare RCM Companies in the USA

U.S.-based team

24/7 support

Custom solutions for every practice size

Real Client Success Stories

One clinic saw a 40% drop in denials within 60 days of switching.

Technology Trends in Healthcare RCM

AI and Automation

Smart tools catch coding errors and suggest billing improvements.

Predictive Analytics

Forecast future trends based on historical billing data.

Cloud-Based RCM Platforms

Access your financial data anytime, anywhere.

Patient Portals and Engagement Tools

Give patients more control and boost satisfaction.

Cost Structure of Healthcare RCM Companies

Flat Rate vs Percentage-Based Pricing

Some charge a fixed monthly fee; others take a cut of collected revenue.
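Which pricing model costs less depends on your monthly collections. The Python sketch below compares the two and computes the break-even point, the collections level at which both cost the same (flat fee divided by the percentage rate). The function names and any fee or rate you plug in are hypothetical placeholders, not typical market prices.

```python
import math

def compare_pricing(monthly_collections: float, flat_fee: float, rate: float) -> dict:
    """Monthly cost under each model, plus which one is cheaper."""
    flat_cost = flat_fee
    percentage_cost = monthly_collections * rate
    if math.isclose(flat_cost, percentage_cost):
        cheaper = "equal"
    else:
        cheaper = "flat" if flat_cost < percentage_cost else "percentage"
    return {"flat": flat_cost, "percentage": percentage_cost, "cheaper": cheaper}

def break_even_collections(flat_fee: float, rate: float) -> float:
    """Monthly collections at which both models cost the same: flat_fee / rate."""
    if rate <= 0:
        raise ValueError("rate must be positive")
    return flat_fee / rate
```

For example, with a hypothetical $3,000 flat fee against a 5% rate, the break-even point is $60,000 in monthly collections: below that the percentage model is cheaper, above it the flat fee is.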

Hidden Fees to Watch Out For

Always ask about setup charges, tech fees, or cancellation clauses.

Understanding ROI from RCM Services

A good RCM partner should pay for itself, and then some.
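One way to check whether a partner pays for itself is a simple return-on-investment calculation: the extra collections plus any in-house costs you no longer carry, minus the partner's fees, divided by those fees. The function below is a minimal Python sketch of that arithmetic; the parameter names and the example figures are hypothetical.

```python
def rcm_roi(baseline_collections: float, collections_with_partner: float,
            partner_fees: float, internal_costs_saved: float = 0.0) -> float:
    """ROI as a fraction: (extra collections + internal savings - fees) / fees."""
    if partner_fees <= 0:
        raise ValueError("partner_fees must be positive")
    gain = (collections_with_partner - baseline_collections) + internal_costs_saved
    return (gain - partner_fees) / partner_fees

if __name__ == "__main__":
    # Hypothetical annual figures, for illustration only.
    roi = rcm_roi(500_000, 560_000, 40_000, internal_costs_saved=20_000)
    print(f"ROI: {roi:.0%}")  # prints "ROI: 100%"
```

Any value at or above zero means the partner covers its own fees; a negative value means it is costing more than it brings in.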

Legal and Compliance Considerations

HIPAA and HITECH Act

Strict rules govern how patient data is handled.

Audit Readiness

Good RCM companies keep you ready for an audit 24/7.

Accurate Medical Coding Standards

Reputable RCM companies use certified coders trained in ICD-10, CPT, and HCPCS.

Choosing the Best Healthcare RCM Company

Questions to Ask During the Vetting Process

What specialties do you serve?

How do you handle denials?

What’s your average collection time?
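Answers to questions about denials and collection time are easier to compare across vendors when expressed as standard metrics. Two widely used ones are days in accounts receivable (outstanding A/R divided by average daily gross charges) and denial rate (denied claims divided by submitted claims). Exact definitions vary between organizations, so treat this Python sketch as an illustration of those common formulas and confirm how each vendor calculates its own figures. The function names and the 90-day default period are assumptions.

```python
def days_in_ar(total_ar: float, gross_charges: float, period_days: int = 90) -> float:
    """Days in A/R = outstanding receivables / average daily gross charges."""
    if gross_charges <= 0 or period_days <= 0:
        raise ValueError("gross_charges and period_days must be positive")
    average_daily_charges = gross_charges / period_days
    return total_ar / average_daily_charges

def denial_rate(denied_claims: int, submitted_claims: int) -> float:
    """Share of submitted claims that the payer denied (0.0 to 1.0)."""
    if submitted_claims <= 0:
        raise ValueError("submitted_claims must be positive")
    if not 0 <= denied_claims <= submitted_claims:
        raise ValueError("denied_claims must be between 0 and submitted_claims")
    return denied_claims / submitted_claims

if __name__ == "__main__":
    print(f"Days in A/R: {days_in_ar(180_000, 270_000, 90):.1f}")
    print(f"Denial rate: {denial_rate(45, 500):.1%}")
```

With these hypothetical inputs, $180,000 in outstanding A/R against $270,000 in gross charges over 90 days gives 60 days in A/R, and 45 denials out of 500 submitted claims is a 9% denial rate.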

Red Flags to Avoid

Vague contracts, poor communication, or lack of references.

How to Transition Smoothly

Plan ahead, assign a team lead, and request a phased onboarding.

Industry Trends Shaping the Future of RCM

Value-Based Care

The shift from volume-based to value-based care requires smarter billing strategies.

Interoperability Standards

Better data sharing between systems supports better patient outcomes and more accurate payments.

Growing Demand for Outsourced RCM

More practices are ditching in-house billing for expert partners.

RCM for Different Practice Sizes and Specialties

Solo Practitioners

Need streamlined, low-cost solutions.

Large Hospital Systems

Require advanced integration and scalable teams.

Specialty Practices (Dental, Behavioral Health, etc.)

Benefit from niche-specific coding and billing expertise.

Conclusion

RCM isn’t just a back-office function; it’s the lifeline of your practice. Choosing the right healthcare RCM company in the USA, such as My Billing Provider, can strengthen your financial health, reduce stress, and let you focus more on patient outcomes. With the right partner, managing your revenue cycle doesn’t have to feel like a second job.


Hybrid Testing: Combining Manual and Automated Testing

In the ever-evolving landscape of software development, ensuring product quality requires a balanced approach to testing. Hybrid Testing has emerged as a strategic method that combines the best of manual and automated testing, creating a flexible and effective framework that adapts to varying project needs. This approach empowers QA teams to leverage human insight alongside automation speed, enhancing both test coverage and efficiency.

What is Hybrid Testing?

Hybrid Testing is a quality assurance methodology that blends manual and automated testing practices. Rather than relying solely on one approach, hybrid testing allows teams to execute tests based on the complexity, frequency, and criticality of application features.

For example, exploratory and usability testing are often better performed manually, while repetitive regression tests are ideal candidates for automation. The hybrid model orchestrates these efforts into a unified testing strategy.
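The criteria in this section (complexity, frequency, and criticality) can be expressed as a simple routing rule. The Python sketch below is one hypothetical way to do it: the `CaseProfile` fields, the frequency threshold, and the order in which the rules apply are assumptions for illustration, not a standard, and "needs human judgment" stands in as a rough proxy for complexity. Anything needing human judgment stays manual; stable tests that run often or guard critical features are automated; everything else stays manual until the feature settles, which mirrors the sequential hybrid model.

```python
from dataclasses import dataclass

@dataclass
class CaseProfile:
    name: str
    runs_per_release: int       # how many times the test is executed per release
    is_stable: bool             # feature is unlikely to change soon
    is_critical: bool           # failure would block a release or affect revenue
    needs_human_judgment: bool  # usability, visual design, exploratory work

def choose_approach(case: CaseProfile, frequency_threshold: int = 3) -> str:
    """Return 'manual' or 'automated' using a hypothetical rule set."""
    if case.needs_human_judgment:
        return "manual"
    if case.is_stable and (case.runs_per_release >= frequency_threshold or case.is_critical):
        return "automated"
    # Unstable or rarely run: automation is unlikely to pay off yet.
    return "manual"

if __name__ == "__main__":
    cases = [
        CaseProfile("onboarding usability review", 1, True, False, True),
        CaseProfile("payment API regression", 10, True, True, False),
        CaseProfile("beta feature smoke check", 5, False, False, False),
    ]
    for case in cases:
        print(case.name, "->", choose_approach(case))
```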

Why Hybrid Testing?

No single testing approach can address all the challenges posed by modern software systems. Manual testing provides the human intuition needed for understanding UX and edge cases, while automated testing offers speed, repeatability, and scalability.

Hybrid testing:

Optimizes testing resources and time.

Enhances test coverage across both UI and backend layers.

Provides faster feedback cycles with strategic automation.

Reduces human error in repetitive tasks.

Supports continuous integration and agile development models.

Types of Hybrid Models in Software Testing

Several hybrid models have been adopted depending on the team’s testing goals and product maturity:

Sequential Hybrid Model: Manual testing is performed first, followed by automation for stable test cases.

Parallel Hybrid Model: Manual and automated testing are executed simultaneously to maximize efficiency.

Layered Hybrid Model: Different layers of the application (UI, API, DB) are tested using appropriate techniques: manual testing for UI/UX, automation for APIs or backend logic.

Continuous Hybrid Model: Integrated within CI/CD pipelines, this model automates stable tests and uses manual testing for critical or new features in every sprint.

When to Use Manual Testing

Manual testing is most valuable in the following scenarios:

Exploratory Testing: Investigating unknown functionality and behaviors.

Usability Testing: Evaluating user experience and interface design.

Ad Hoc Testing: Performing unscripted testing based on intuition and domain knowledge.

UI/UX Validation: Testing visual and interactive elements of the application.

Short-term Projects: When automation may not yield ROI due to tight timelines.

When to Use Automated Testing

Automated testing is ideal for:

Regression Testing: Repeatedly validating that existing features remain unaffected.

Performance Testing: Simulating high loads to assess system responsiveness.

Smoke and Sanity Testing: Quickly verifying core functionality after builds.

Data-Driven Testing: Running tests with multiple input combinations efficiently.

Long-Term Projects: Projects that require ongoing releases and updates.
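Data-driven testing runs the same test logic over a table of inputs and expected outputs. A minimal sketch with Python's standard `unittest` module uses `subTest` so that each row is reported separately when it fails. The `apply_discount` function under test and its rounding behavior are hypothetical.

```python
import unittest

def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: apply a percentage discount."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)

class ApplyDiscountDataDrivenTest(unittest.TestCase):
    # Each row: (price, percent, expected result)
    CASES = [
        (100.0, 0, 100.0),
        (100.0, 25, 75.0),
        (19.99, 10, 17.99),
        (50.0, 100, 0.0),
    ]

    def test_discount_table(self):
        for price, percent, expected in self.CASES:
            # subTest reports each failing row individually instead of stopping at the first.
            with self.subTest(price=price, percent=percent):
                self.assertEqual(apply_discount(price, percent), expected)

    def test_rejects_out_of_range_percent(self):
        for percent in (-5, 150):
            with self.subTest(percent=percent):
                with self.assertRaises(ValueError):
                    apply_discount(10.0, percent)
```

The class can be run with the standard `python -m unittest` runner. Adding a regression case is a one-line change to `CASES`, which is what makes this style cheap to extend as a product grows.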

Key Benefits of Hybrid Testing

Improved Test Coverage: Both broad (automation) and deep (manual) testing.

Faster Time-to-Market: Reduces delays with automated feedback loops.

Cost-Effective QA: Strategic automation reduces manual workload and resources.

Increased Flexibility: Adaptable to changing requirements and project phases.

Higher Quality Deliverables: Combines human oversight with automation precision.

Strategies for Implementing Hybrid Testing

Define Test Objectives: Clearly differentiate what should be tested manually vs. automated.

Prioritize Test Cases: Automate high-risk, repetitive, and stable test scenarios first.

Collaborate Across Teams: Ensure developers, testers, and stakeholders are aligned.

Use AI-Driven Platforms: Tools such as genqe.ai can recommend which tests to automate, optimize test flows, and support hybrid workflows.

Monitor and Review: Regularly assess test effectiveness and update scripts/test plans as necessary.
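Prioritizing an automation backlog can be as simple as scoring each candidate and sorting. The weights in this Python sketch are hypothetical and would need tuning for a real project, and the `AutomationCandidate` type is invented for illustration. The idea it shows is that risk and frequency raise priority, while instability lowers it because unstable features add script maintenance overhead.

```python
from dataclasses import dataclass

@dataclass
class AutomationCandidate:
    name: str
    risk: int            # 1 (low) to 5 (high): business impact if this breaks
    runs_per_month: int  # how often the scenario is executed
    stable: bool         # False if the feature is still changing

def automation_priority(c: AutomationCandidate) -> float:
    """Hypothetical score: higher means automate sooner."""
    # Cap the frequency contribution so run count alone cannot dominate the score.
    score = c.risk * 2 + min(c.runs_per_month, 20) / 4
    # Halve the score for unstable features: their scripts would need frequent rework.
    return score if c.stable else score / 2

def rank_for_automation(candidates: list[AutomationCandidate]) -> list[AutomationCandidate]:
    return sorted(candidates, key=automation_priority, reverse=True)

if __name__ == "__main__":
    backlog = [
        AutomationCandidate("profile photo upload", risk=1, runs_per_month=40, stable=True),
        AutomationCandidate("new checkout flow", risk=5, runs_per_month=20, stable=False),
        AutomationCandidate("login", risk=5, runs_per_month=20, stable=True),
        AutomationCandidate("password reset", risk=3, runs_per_month=8, stable=True),
    ]
    for c in rank_for_automation(backlog):
        print(f"{automation_priority(c):5.1f}  {c.name}")
```

Re-running the ranking during each review cycle keeps the backlog aligned with the "Monitor and Review" step as features stabilize.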

Challenges in Hybrid Testing

Resource Allocation: Balancing between manual testers and automation engineers.

Maintenance Overhead: Keeping automated tests updated with application changes.

Integration Complexity: Ensuring both types of tests work within CI/CD pipelines.

Skillset Gap: Testers need familiarity with both manual testing techniques and test scripting.

Conclusion

Hybrid testing is not just a compromise; it is a strategic blend of intuition and innovation. By combining manual testing’s depth with automation’s speed, organizations can achieve reliable, scalable quality assurance. AI-powered platforms such as genqe.ai can further support hybrid strategies by helping teams make data-driven decisions about where to spend testing effort. As software systems grow in complexity, hybrid testing helps QA stay agile, thorough, and aligned with business goals.


Multi-Cloud vs. Hybrid Cloud: Which Strategy Fits Your Organization?

As cloud computing matures, the choice between multi-cloud and hybrid cloud strategies has become a pivotal decision for IT leaders. While both models offer flexibility, cost optimization, and scalability, they serve different business needs and technical purposes. Understanding the nuances between the two can make or break your digital transformation initiative.

Understanding the Basics

What is Hybrid Cloud?