#Workflow Orchestration DevOps


What Is Orchestration in DevOps? Understanding Its Role in Automating Workflows - OpsNexa!

Learn what orchestration in DevOps is and how it helps automate and manage complex workflows. Discover the benefits of orchestration for improving efficiency, reducing errors, and accelerating software delivery, along with the tools and strategies commonly used in modern DevOps pipelines.
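Automation handles a single task. Orchestration coordinates many automated tasks so they run in the right order. The minimal Python sketch below illustrates the idea. It assumes a hypothetical pipeline whose task names are made up for illustration, and it uses the standard library's graphlib (Python 3.9+) to run each step only after its dependencies have finished.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
PIPELINE = {
    "build": set(),
    "unit_tests": {"build"},
    "security_scan": {"build"},
    "deploy_staging": {"unit_tests", "security_scan"},
    "deploy_production": {"deploy_staging"},
}


def run_order(graph):
    """Return an order in which every task comes after all of its dependencies."""
    # static_order() raises graphlib.CycleError if the dependencies form a cycle.
    return list(TopologicalSorter(graph).static_order())


def orchestrate(graph, actions):
    """Invoke each task's automated action in dependency order; return the sequence run."""
    executed = []
    for task in run_order(graph):
        actions[task]()          # the individual automated step
        executed.append(task)    # the orchestrator tracks overall progress
    return executed


if __name__ == "__main__":
    steps = {name: (lambda n=name: print(f"running {n}")) for name in PIPELINE}
    orchestrate(PIPELINE, steps)
```

A real orchestrator adds retries, parallel execution and persistent state on top of this. The sketch shows only the dependency ordering at the heart of it.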

#DevOps Orchestration#Orchestration in DevOps#Automation vs Orchestration#CI/CD Orchestration#Infrastructure Orchestration#Workflow Orchestration DevOps


A Comprehensive Guide to Deploy Azure Kubernetes Service with Azure Pipelines

In the continuously evolving landscape of cloud-native technologies, Azure Kubernetes Service (AKS) offers a powerful orchestration solution for containerized applications. Pair it with Azure Pipelines for consistent CI/CD workflows that accelerate the DevOps process. This guide takes a close look at deploying to Azure Kubernetes Service with Azure Pipelines and shares tips that help engineers build container deployments that work. It also discusses how DevOps consulting services can help you automate this process.

Understanding the Foundations

Kubernetes is now the preferred tool for deploying and running containerized apps in today’s fast-paced software development environment. With AKS, you can scale, monitor, and orchestrate containerized workloads with high performance. Before going further, let’s review the fundamentals.

Azure Kubernetes Service: A managed Kubernetes platform that simplifies container orchestration. It takes on the hassles of Kubernetes cluster management so that developers can focus on building applications instead of infrastructure. By leveraging AKS, organizations can:

Deploy and scale containerized applications on demand

Implement robust infrastructure management

Reduce operational overhead

Ensure high availability and fault tolerance

Azure Pipelines: The CI/CD Backbone

Azure Pipelines is the tool for automated code building, testing, and deployment. Combined with Azure Kubernetes Service, it helps teams build high-end deployment pipelines in line with the modern DevOps mindset. It integrates easily with repositories such as GitHub and Azure Repos, so every change can flow automatically from commit to deployment.

Spiral Mantra DevOps Consulting Services

If you’re new to DevOps or want to scale your organization’s capabilities, DevOps consulting services from Spiral Mantra can be a game changer. Our skilled professionals can help businesses implement CI/CD pipelines and offer guidance on containerization and cloud-native development.

Now let’s move on to creating a deployment pipeline for Azure Kubernetes Service.

Prerequisites

Before you begin, make sure you meet the following prerequisites:

Azure Subscription: You need an Azure subscription to run an AKS cluster. Create one if you don’t already have one.

Azure CLI: The Azure CLI lets you manage resources such as AKS clusters from the command line.

A Professional Team: You need a team with the technical knowledge to set up the pipeline. Hire DevOps developers from us if you don’t have one yet.

Kubernetes Cluster: Deploy an AKS cluster with the Azure Portal, the Azure CLI, or an ARM template. This is the cluster your pipeline deploys to.

Docker: Because you’re deploying containers, you need Docker installed locally to build container images and push them to a registry.

Step-by-Step Deployment Process

Step 1: Create an AKS Cluster

Start by setting up an AKS cluster with the Azure CLI or the Azure Portal. Once the cluster is ready, containerize your application by creating a Dockerfile that specifies your application’s runtime environment. This step ensures the same code runs consistently across different environments.
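The cluster setup in Step 1 can be scripted. The sketch below assembles the standard Azure CLI commands (az group create, az aks create, az aks get-credentials) in Python. The resource group, cluster name, region and node count are hypothetical placeholders. By default the script only prints the commands. If you set dry_run to False, it executes them with subprocess and stops at the first failure.

```python
import shlex
import subprocess

# Hypothetical names: replace with your own resource group, cluster, and region.
RESOURCE_GROUP = "my-aks-rg"
CLUSTER_NAME = "my-aks-cluster"
LOCATION = "eastus"


def aks_setup_commands(resource_group, cluster, location, node_count=2):
    """Build the Azure CLI commands that create a resource group and an AKS cluster."""
    return [
        ["az", "group", "create", "--name", resource_group, "--location", location],
        [
            "az", "aks", "create",
            "--resource-group", resource_group,
            "--name", cluster,
            "--node-count", str(node_count),
            "--generate-ssh-keys",
        ],
        # Merge the cluster's credentials into ~/.kube/config so kubectl can reach it.
        ["az", "aks", "get-credentials", "--resource-group", resource_group, "--name", cluster],
    ]


def run(commands, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # raises on the first failing command


if __name__ == "__main__":
    run(aks_setup_commands(RESOURCE_GROUP, CLUSTER_NAME, LOCATION))
```

A real run requires the Azure CLI to be installed and signed in (az login).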

Step 2: Set Up Your Pipeline

You can set up the pipeline in a new project or in an existing one, as described below.

Create a New Project

Sign in to your Azure DevOps account and, from the screen that appears, select the drop-down icon.

Select Create New Project, or open an existing project instead.

Finally, add the repositories that contain your application code (from either GitHub or Azure Repos).

For an Existing Project

In your existing project, go to Pipelines and select Create Pipeline.

On the next screen, select the repository that contains your application code.

Choose either a YAML pipeline or the starter pipeline. (Note: YAML pipelines are flexible and are recommended for advanced workflows.)

Then define the pipeline configuration by editing your YAML file in Azure DevOps.
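A YAML pipeline for this walkthrough typically has two steps: build and push the image, then apply the Kubernetes manifests. The sketch below expresses that structure as a Python dict so it is easy to inspect. The service connection names, registry login server and image repository are hypothetical. The task inputs follow the Docker@2 and KubernetesManifest@0 tasks as they are commonly configured. Treat it as a starting point and check the task reference for your Azure DevOps version.

```python
import json

# Hypothetical placeholders: replace with your own service connections and names.
REGISTRY_CONNECTION = "my-acr-connection"      # Docker registry service connection
K8S_CONNECTION = "my-aks-connection"           # Kubernetes service connection
REGISTRY_LOGIN_SERVER = "myregistry.azurecr.io"
IMAGE_REPOSITORY = "myapp"


def pipeline_definition():
    """Return the structure of an azure-pipelines.yml file as a Python dict."""
    return {
        "trigger": ["main"],                       # run on every push to main
        "pool": {"vmImage": "ubuntu-latest"},      # Microsoft-hosted Linux agent
        "variables": {"tag": "$(Build.BuildId)"},  # unique image tag per run
        "steps": [
            {
                "task": "Docker@2",
                "displayName": "Build and push image",
                "inputs": {
                    "command": "buildAndPush",
                    "containerRegistry": REGISTRY_CONNECTION,
                    "repository": IMAGE_REPOSITORY,
                    "dockerfile": "Dockerfile",
                    "tags": "$(tag)",
                },
            },
            {
                "task": "KubernetesManifest@0",
                "displayName": "Deploy to AKS",
                "inputs": {
                    "action": "deploy",
                    "kubernetesServiceConnection": K8S_CONNECTION,
                    "manifests": "deployment.yaml",
                    # Substitutes the freshly built tag into the manifest's image reference.
                    "containers": f"{REGISTRY_LOGIN_SERVER}/{IMAGE_REPOSITORY}:$(tag)",
                },
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(pipeline_definition(), indent=2))
```

Azure Pipelines reads the file as YAML. Convert the printed structure to block-style YAML before committing it as azure-pipelines.yml, for example with the third-party PyYAML package's yaml.safe_dump.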

Step 3: Set Up Automatic Continuous Deployment (CD)

Next, automate the deployment process to speed up your CI/CD workflow. The simplest and most common approach is to write a Kubernetes manifest file, such as deployment.yaml, that defines the main Kubernetes resources, including Deployments, pods, and Services.

Once the manifest is in place, the pipeline triggers the Kubernetes deployment automatically whenever code is pushed.
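A deployment.yaml file like the one described above usually contains a Deployment and a Service. The sketch below builds both manifests in Python, assuming a hypothetical app name, image reference and ports. The key detail is that three things must use the same label: the Deployment's selector, its pod template labels and the Service's selector. kubectl accepts JSON manifests as well as YAML, so you could save the printed output and apply it with kubectl apply -f.

```python
import json

APP = "myapp"                                  # hypothetical app name
IMAGE = "myregistry.azurecr.io/myapp:1.0.0"    # hypothetical image reference


def deployment_manifest(app, image, replicas=2, port=8080):
    """Deployment that keeps `replicas` pods of the given image running."""
    labels = {"app": app}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": app, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template's labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": app, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }


def service_manifest(app, port=80, target_port=8080):
    """Service that exposes the Deployment's pods behind a single address."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": app},
        "spec": {
            "type": "LoadBalancer",     # on AKS this provisions an Azure load balancer
            "selector": {"app": app},   # routes traffic to pods carrying this label
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


if __name__ == "__main__":
    manifests = {
        "apiVersion": "v1",
        "kind": "List",
        "items": [deployment_manifest(APP, IMAGE), service_manifest(APP)],
    }
    print(json.dumps(manifests, indent=2))
```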

Step 4: Automate the CI/CD Workflow

In this final step, you ensure the pipeline runs smoothly every time new code is pushed. With proper CI/CD integration, the workflow continuously builds, tests, and deploys your code, keeping the application up to date in every AKS environment.

Best Practices for AKS and Azure Pipelines Integration

1. Infrastructure as Code (IaC)

- Utilize Terraform or Azure Resource Manager templates

- Version control infrastructure configurations

- Ensure consistent and reproducible deployments

2. Security Considerations

- Implement container scanning

- Use private container registries

- Regular security patch management

- Network policy configuration

3. Performance Optimization

- Implement horizontal pod autoscaling

- Configure resource quotas

- Use node pool strategies

- Optimize container image sizes
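Horizontal pod autoscaling follows a documented rule: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). No scaling happens while the ratio stays within a tolerance, which is 0.1 by default. The function below is a simplified sketch of that rule. Its min_replicas and max_replicas stand in for the HorizontalPodAutoscaler's minReplicas and maxReplicas. The real controller also accounts for pod readiness, missing metrics and stabilization windows.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Replica count per the HPA scaling rule, clamped to [min_replicas, max_replicas]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: leave the replica count unchanged.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, 3 replicas averaging 90% CPU against a 60% target gives ceil(3 × 1.5) = 5 replicas. CPU utilization is measured against each container's resource requests, so you need to set requests before utilization-based autoscaling can work.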

Common Challenges and Solutions

Network Complexity

Utilize Azure CNI for advanced networking

Implement network policies

Configure service mesh for complex microservices
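Network policies restrict which pods may talk to each other. The minimal sketch below assumes hypothetical app labels frontend and backend. It builds a NetworkPolicy that admits traffic to backend pods only from frontend pods on TCP port 8080. Policies take effect only if the cluster runs a network policy engine. On AKS you choose the engine when the cluster is created, for example Azure Network Policy Manager, Calico or Cilium.

```python
import json


def allow_from_policy(name, target_app, allowed_app, port):
    """NetworkPolicy admitting ingress to target_app pods only from allowed_app pods on one TCP port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name},
        "spec": {
            # Pods selected here deny all ingress not matched by a rule below.
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [{"podSelector": {"matchLabels": {"app": allowed_app}}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical labels: only "frontend" pods may reach "backend" on port 8080.
    policy = allow_from_policy("backend-allow-frontend", "backend", "frontend", 8080)
    print(json.dumps(policy, indent=2))
```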

Persistent Storage

Use Azure Disks or Azure Files

Configure persistent volume claims

Implement storage classes for dynamic provisioning
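With dynamic provisioning, a PersistentVolumeClaim names a StorageClass, and that class creates the underlying disk or file share. This sketch assumes the built-in AKS storage class names managed-csi (Azure Disks) and azurefile-csi (Azure Files). These names depend on your cluster version, so confirm them with kubectl get storageclass. The claim names and size are hypothetical.

```python
import json


def pvc_manifest(name, size="5Gi", storage_class="managed-csi", access_mode="ReadWriteOnce"):
    """PersistentVolumeClaim that asks a StorageClass to provision a volume dynamically."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": [access_mode],
            "storageClassName": storage_class,   # assumed AKS built-in class name
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    # Hypothetical claims: a single-node disk and a shared file share.
    claims = [
        pvc_manifest("app-data"),
        pvc_manifest("shared-content", storage_class="azurefile-csi", access_mode="ReadWriteMany"),
    ]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": claims}, indent=2))
```

An Azure Disk attaches to one node at a time (ReadWriteOnce), while Azure Files supports shared access (ReadWriteMany). That difference is usually what decides between them.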

Conclusion

Deploying to Azure Kubernetes Service with effective pipelines gives you a clear, repeatable path to application delivery. By embracing these practices, DevOps consulting companies like Spiral Mantra offer transformative solutions that foster agile and scalable approaches. Our expert DevOps consulting services modernize technological infrastructure with comprehensive cloud strategies, Kubernetes containerization, and advanced CI/CD integration.

Let’s connect and talk about your cloud migration needs


🚀 Red Hat Services Management and Automation: Simplifying Enterprise IT

As enterprise IT ecosystems grow in complexity, managing services efficiently and automating routine tasks has become more than a necessity—it's a competitive advantage. Red Hat, a leader in open-source solutions, offers robust tools to streamline service management and enable automation across hybrid cloud environments.

In this blog, we’ll explore what Red Hat Services Management and Automation is, why it matters, and how professionals can harness it to improve operational efficiency, security, and scalability.

🔧 What Is Red Hat Services Management?

Red Hat Services Management refers to the tools and practices provided by Red Hat to manage system services—such as processes, daemons, and scheduled tasks—across Linux-based infrastructures.

Key components include:

systemd: The default init system on RHEL, used to start, stop, and manage services.

Red Hat Satellite: For managing system lifecycles, patching, and configuration.

Red Hat Ansible Automation Platform: A powerful tool for infrastructure and service automation.

Cockpit: A web-based interface to manage Linux systems easily.
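systemd exposes each service's state through systemctl. The small Python health check below relies on one documented behavior: systemctl is-active --quiet exits with status 0 only when the unit is active. The list of unit names is hypothetical. The run parameter defaults to subprocess.run, and you can swap in a fake runner for testing.

```python
import subprocess


def service_states(units, run=subprocess.run):
    """Map each systemd unit name to True if `systemctl is-active` reports it active."""
    states = {}
    for unit in units:
        # `--quiet` suppresses output; only the exit status (0 = active) matters.
        result = run(["systemctl", "is-active", "--quiet", unit], check=False)
        states[unit] = result.returncode == 0
    return states


def inactive(units, run=subprocess.run):
    """Return the units that are not currently active."""
    return [unit for unit, active in service_states(units, run).items() if not active]
```

For example, inactive(["sshd", "chronyd"]) returns the units on the local host that need attention. To apply the same check across many hosts, teams typically use Ansible.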

🤖 What Is Red Hat Automation?

Automation in the Red Hat ecosystem primarily revolves around Ansible, Red Hat’s open-source IT automation tool. With automation, you can:

Eliminate repetitive manual tasks

Achieve consistent configurations

Enable Infrastructure as Code (IaC)

Accelerate deployments and updates

From provisioning servers to configuring complex applications, Red Hat automation tools reduce human error and increase scalability.

🔍 Key Use Cases

1. Service Lifecycle Management

Start, stop, enable, and monitor services with simple systemctl commands on a single host, or with Ansible playbooks across thousands of servers.

2. Automated Patch Management

Use Red Hat Satellite and Ansible to automate updates, ensuring compliance and reducing security risks.

3. Infrastructure Provisioning

Provision cloud and on-prem infrastructure with repeatable Ansible roles, reducing time-to-deploy for dev/test/staging environments.

4. Multi-node Orchestration

Manage workflows across multiple servers and services in a unified, centralized fashion.
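Multi-node orchestration with Ansible means describing the desired state once and applying it to every host in an inventory group. The sketch below builds such a playbook in Python, using the name, state and enabled options of the ansible.builtin.systemd module. The host group and service names are hypothetical. Because JSON syntax is valid YAML in most cases, Ansible should be able to load the printed output. Most teams still keep playbooks in block-style YAML.

```python
import json


def service_playbook(hosts, services):
    """Return a playbook (a list of plays) that starts and enables each systemd service."""
    return [
        {
            "name": "Ensure core services are running",
            "hosts": hosts,    # inventory group; the play runs on every host in it
            "become": True,    # escalate privileges to manage system services
            "tasks": [
                {
                    "name": f"Start and enable {service}",
                    "ansible.builtin.systemd": {
                        "name": service,
                        "state": "started",
                        "enabled": True,   # also start the service at boot
                    },
                }
                for service in services
            ],
        }
    ]


if __name__ == "__main__":
    # Hypothetical inventory group and service names.
    print(json.dumps(service_playbook("webservers", ["httpd", "chronyd"]), indent=2))
```

Saved as, say, services.yml, the output would run with ansible-playbook -i inventory services.yml.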

🌐 Why It Matters

⏱️ Efficiency: Save countless admin hours by automating routine tasks.

🛡️ Security: Enforce security policies and configurations consistently across systems.

📈 Scalability: Manage hundreds or thousands of systems with the same effort as managing one.

🤝 Collaboration: Teams can collaborate better with playbooks that document infrastructure steps clearly.

🎓 How to Get Started

Learn Linux Service Management: Understand systemctl, logs, units, and journaling.

Explore Ansible Basics: Learn to write playbooks and roles, and use Ansible Tower (now automation controller in Red Hat Ansible Automation Platform).

Take a Red Hat Course: Prepare for the Red Hat Certified Engineer (RHCE) certification to get hands-on training in automation.

Use RHLS: Get access to labs, practice exams, and expert content through the Red Hat Learning Subscription (RHLS).

✅ Final Thoughts

Red Hat Services Management and Automation isn’t just about managing Linux servers—it’s about building a modern IT foundation that’s scalable, secure, and future-ready. Whether you're a sysadmin, DevOps engineer, or IT manager, mastering these tools can help you lead your team toward more agile, efficient operations.

📌 Ready to master Red Hat automation? Explore our Red Hat training programs and take your career to the next level!

📘 Learn. Automate. Succeed. Begin your journey today! Kindly follow: www.hawkstack.com


DevOps Training in Marathahalli Bangalore – Build a Future-Proof Tech Career

Are you looking to transition into a high-growth IT role or strengthen your skills in modern software development practices? If you're in Bangalore, particularly near Marathahalli, you're in one of the best places to do that. eMexo Technologies offers a detailed and industry-ready DevOps Training in Marathahalli Bangalore designed for beginners, professionals, and career changers alike.

Explore DevOps Course in Marathahalli Bangalore – Key Skills You’ll Gain

This DevOps Course in Marathahalli Bangalore is structured to give you both theoretical knowledge and hands-on experience. Here’s what the curriculum typically covers:

Core Modules:

Step into the world of DevOps at the DevOps Training Center in Marathahalli Bangalore, where you'll discover how this powerful approach bridges the gap between development and operations to streamline processes and boost efficiency in today’s tech-driven environment.

✅ Linux Basics: Learn command-line fundamentals essential for DevOps engineers.

✅ Version Control with Git & GitHub: Master source code management.

✅ Continuous Integration with Jenkins: Automate your software build and deployment workflows seamlessly.

✅ Configuration Management with Ansible: Manage infrastructure more efficiently.

✅ Containerization using Docker: Package apps with all their dependencies.

✅ Orchestration with Kubernetes: Manage containers at scale.

✅ Cloud Computing with AWS: Get started with DevOps in cloud environments.

✅ Monitoring & Logging with Prometheus and Grafana: Gain insights into application performance.

✅ CI/CD Pipeline Implementation: Build end-to-end automation flows.

✅ Everything is taught with real-time examples, project work, and lab exercises.

Get Recognized with a DevOps Certification Course in Marathahalli Bangalore – Boost Your Industry Credibility

Upon completing the course, you'll be awarded a DevOps certification issued by eMexo Technologies. This certification validates your expertise and improves your credibility with hiring managers. It’s especially useful for roles like:

✅ DevOps Engineer

✅ Site Reliability Engineer (SRE)

✅ Build & Release Engineer

✅ Automation Engineer

✅ System Administrator with DevOps skills

Top-Rated Best DevOps Training in Marathahalli Bangalore – Why eMexo Technologies Stands Out from the Rest

When it comes to finding the Best DevOps Training in Marathahalli Bangalore, eMexo Technologies ticks all the boxes:

✅ Up-to-Date Curriculum: Constantly refreshed to include the latest industry tools and practices.

✅ Experienced Trainers: Learn from industry professionals with 8–15 years of real-world experience.

✅ Real Projects: Work on case studies and scenarios inspired by actual DevOps environments.

✅ Flexible Timings: Choose from weekday, weekend, and online batches.

✅ Affordable Pricing: With the current 40% discount, this course is great value for money.

✅ Lifetime Access to Materials: Revise and revisit anytime.

DevOps Training Institute in Marathahalli Bangalore – Why Location Matters

Located in one of Bangalore’s biggest IT hubs, eMexo Technologies is a top-rated DevOps Training Institute in Marathahalli Bangalore. Proximity to major tech parks and MNCs means better networking opportunities and access to local job openings right after your course.

DevOps Training Center in Marathahalli Bangalore – Hands-On, Tech-Ready Lab Environment

At the DevOps Training Center in Marathahalli Bangalore, students don’t just watch tutorials—they do the work. You’ll have access to a fully equipped lab environment where you’ll implement DevOps pipelines, configure cloud environments, and monitor system performance.

DevOps Training and Placement in Marathahalli Bangalore – Start Working Faster

Job support is one of the biggest advantages here. The DevOps Training and Placement in Marathahalli Bangalore equips you with everything you need to launch a successful career in DevOps, including:

✅ 1:1 career counseling

✅ Resume building support

✅ Mock interviews with real-time feedback

✅ Interview scheduling and referrals through their hiring partners

✅ Post-course job alerts and updates

Many past students have successfully secured roles at top companies like Infosys, Wipro, TCS, Mindtree, and Accenture.


Don’t Miss This Opportunity!

If you're serious about breaking into tech or advancing in your current role, now is the perfect time to act. With expert-led training, hands-on labs, and full placement support, eMexo Technologies' DevOps Training in Marathahalli Bangalore has everything you need to succeed.

👉 Enroll now and grab an exclusive 30% discount on our DevOps training – limited seats available!

🔗 Click here to view course details and enroll

#DevOps#DevOpsTraining#DevOpsCourse#LearnDevOps#DevOpsEngineer#DevOpsSkills#DevOpsCulture#Git#Jenkins#Docker#Kubernetes#Ansible#Terraform#Prometheus#Grafana#CI/CD#Automation#ITTraining#CloudComputing#SoftwareDevelopment#CareerGrowth#DevOpsTrainingcenterinBangalore#DevOpsTrainingInMarathahalliBangalore#DevOpsCourseInMarathahalliBangalore#DevOpsTrainingInstitutesInMarathahalliBangalore#DevOpsClassesInMarathahalliBangalore#BestDevOpsTrainingInMarathahalliBangalore#DevOpsTrainingandPlacementinMarathahalliBangalore#Youtube


Cloud-Native Development in the USA: A Comprehensive Guide

Introduction

Cloud-native development is transforming how businesses in the USA build, deploy, and scale applications. By leveraging cloud infrastructure, microservices, containers, and DevOps, organizations can enhance agility, improve scalability, and drive innovation.

As cloud computing adoption grows, cloud-native development has become a crucial strategy for enterprises looking to optimize performance and reduce infrastructure costs. In this guide, we’ll explore the fundamentals, benefits, key technologies, best practices, top service providers, industry impact, and future trends of cloud-native development in the USA.

What is Cloud-Native Development?

Cloud-native development refers to designing, building, and deploying applications optimized for cloud environments. Unlike traditional monolithic applications, cloud-native solutions utilize a microservices architecture, containerization, and continuous integration/continuous deployment (CI/CD) pipelines for faster and more efficient software delivery.

Key Benefits of Cloud-Native Development

1. Scalability

Cloud-native applications can dynamically scale based on demand, ensuring optimal performance without unnecessary resource consumption.

2. Agility & Faster Deployment

By leveraging DevOps and CI/CD pipelines, cloud-native development accelerates application releases, reducing time-to-market.

3. Cost Efficiency

Organizations pay only for the cloud resources they use, reducing the need for expensive on-premises infrastructure.

4. Resilience & High Availability

Cloud-native applications are designed for fault tolerance, ensuring minimal downtime and automatic recovery.

5. Improved Security

Built-in cloud security features, automated compliance checks, and container isolation enhance application security.

Key Technologies in Cloud-Native Development

1. Microservices Architecture

Microservices break applications into smaller, independent services that communicate via APIs, improving maintainability and scalability.

2. Containers & Kubernetes

Docker packages applications into containers, and Kubernetes orchestrates those containers efficiently, making application deployment seamless across cloud environments.

3. Serverless Computing

Platforms like AWS Lambda, Azure Functions, and Google Cloud Functions eliminate the need for managing infrastructure by running code in response to events.

4. DevOps & CI/CD

Automated build, test, and deployment processes streamline software development, ensuring rapid and reliable releases.

5. API-First Development

APIs enable seamless integration between services, facilitating interoperability across cloud environments.
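Serverless platforms run a function in response to each event. On AWS Lambda's Python runtime, the handler receives an event and a context object. This minimal sketch assumes an API Gateway proxy integration. Under that integration, query parameters arrive in queryStringParameters, and the response must include statusCode and body. The greeting logic is purely illustrative.

```python
import json


def lambda_handler(event, context):
    """Handler the Lambda Python runtime calls once per event."""
    # With an API Gateway proxy integration, "queryStringParameters" is None
    # when the request has no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local smoke test with a hand-written event; context is unused here.
    print(lambda_handler({"queryStringParameters": {"name": "cloud"}}, None))
```

The platform handles provisioning and scaling. The code itself only maps an event to a response.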

Best Practices for Cloud-Native Development

1. Adopt a DevOps Culture

Encourage collaboration between development and operations teams to ensure efficient workflows.

2. Implement Infrastructure as Code (IaC)

Tools like Terraform and AWS CloudFormation help automate infrastructure provisioning and management.

3. Use Observability & Monitoring

Employ logging, monitoring, and tracing solutions like Prometheus, Grafana, and ELK Stack to gain insights into application performance.

4. Optimize for Security

Embed security best practices in the development lifecycle, using tools like Snyk, Aqua Security, and Prisma Cloud.

5. Focus on Automation

Automate testing, deployments, and scaling to improve efficiency and reduce human error.
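Prometheus, mentioned above for observability, collects metrics by scraping a plain-text HTTP endpoint. To show what that endpoint returns, the sketch below renders a counter in the Prometheus text exposition format by hand. The metric name and labels are hypothetical. In a real service, the official prometheus_client library would normally produce this output for you.

```python
def _escape(value):
    """Escape a label value as the text format requires (backslash, quote, newline)."""
    return str(value).replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_counter(name, help_text, samples):
    """Render one counter in the Prometheus text exposition format.

    samples maps a tuple of (label_name, label_value) pairs to the counter's value.
    """
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} counter"]
    for labels, value in samples.items():
        if labels:
            label_str = ",".join(f'{key}="{_escape(val)}"' for key, val in labels)
            lines.append(f"{name}{{{label_str}}} {value}")
        else:
            lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical metric: requests served, broken down by method and status code.
    print(render_counter(
        "http_requests_total",
        "Total HTTP requests.",
        {(("method", "GET"), ("code", "200")): 1027, (("method", "POST"), ("code", "500")): 3},
    ), end="")
```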

Top Cloud-Native Development Service Providers in the USA

1. AWS Cloud-Native Services

Amazon Web Services offers a comprehensive suite of cloud-native tools, including AWS Lambda, ECS, EKS, and API Gateway.

2. Microsoft Azure

Azure’s cloud-native services include Azure Kubernetes Service (AKS), Azure Functions, and DevOps tools.

3. Google Cloud Platform (GCP)

GCP provides Google Kubernetes Engine (GKE), Cloud Run, and Anthos for cloud-native development.

4. IBM Cloud & Red Hat OpenShift

IBM Cloud and OpenShift focus on hybrid cloud-native solutions for enterprises.

5. Accenture Cloud-First

Accenture helps businesses adopt cloud-native strategies with AI-driven automation.

6. ThoughtWorks

ThoughtWorks specializes in agile cloud-native transformation and DevOps consulting.

Industry Impact of Cloud-Native Development in the USA

1. Financial Services

Banks and fintech companies use cloud-native applications to enhance security, compliance, and real-time data processing.

2. Healthcare

Cloud-native solutions improve patient data accessibility, enable telemedicine, and support AI-driven diagnostics.

3. E-commerce & Retail

Retailers leverage cloud-native technologies to optimize supply chain management and enhance customer experiences.

4. Media & Entertainment

Streaming services utilize cloud-native development for scalable content delivery and personalization.

Future Trends in Cloud-Native Development

1. Multi-Cloud & Hybrid Cloud Adoption

Businesses will increasingly adopt multi-cloud and hybrid cloud strategies for flexibility and risk mitigation.

2. AI & Machine Learning Integration

AI-driven automation will enhance DevOps workflows and predictive analytics in cloud-native applications.

3. Edge Computing

Processing data closer to the source will improve performance and reduce latency for cloud-native applications.

4. Enhanced Security Measures

Zero-trust security models and AI-driven threat detection will become integral to cloud-native architectures.

Conclusion

Cloud-native development is reshaping how businesses in the USA innovate, scale, and optimize operations. By leveraging microservices, containers, DevOps, and automation, organizations can achieve agility, cost-efficiency, and resilience. As the cloud-native ecosystem continues to evolve, staying ahead of trends and adopting best practices will be essential for businesses aiming to thrive in the digital era.


Navigating the DevOps Landscape: Opportunities and Roles

DevOps has become a game-changer in the quick-moving world of technology. This dynamic approach, whose name combines "Development" and "Operations," is revolutionising the way software is created, tested, and deployed. DevOps is not merely a set of practices but a cultural shift that encourages cooperation, automation, and integration between development and IT operations teams. The outcome? Greater speed, dependability, and effectiveness in software delivery.

In this comprehensive guide, we'll delve into the essence of DevOps, explore the key technologies that underpin its success, and uncover the vast array of job opportunities it offers. Whether you're an aspiring IT professional looking to enter the world of DevOps or an experienced practitioner seeking to enhance your skills, this blog will serve as your roadmap to mastering DevOps. So, let's embark on this enlightening journey into the realm of DevOps.

Key Technologies for DevOps:

Version Control Systems: DevOps teams rely heavily on robust version control systems such as Git and SVN. These systems are instrumental in managing and tracking changes in code and configurations, promoting collaboration and ensuring the integrity of the software development process.

Continuous Integration/Continuous Deployment (CI/CD): The heart of DevOps, CI/CD tools like Jenkins, Travis CI, and CircleCI drive the automation of critical processes. They orchestrate the building, testing, and deployment of code changes, enabling rapid, reliable, and consistent software releases.

Configuration Management: Tools like Ansible, Puppet, and Chef are the architects of automation in the DevOps landscape. They facilitate the automated provisioning and management of infrastructure and application configurations, ensuring consistency and efficiency.

Containerization: Docker, the cornerstone of containerization, and Kubernetes, which manages containers at scale, are pivotal in the DevOps toolkit. They empower the creation, deployment, and management of containers that encapsulate applications and their dependencies, simplifying deployment and scaling.

Orchestration: Docker Swarm and Amazon ECS take center stage in orchestrating and managing containerized applications at scale. They provide the control and coordination required to maintain the efficiency and reliability of containerized systems.

Monitoring and Logging: The observability of applications and systems is essential in the DevOps workflow. Monitoring and logging tools like the ELK Stack (Elasticsearch, Logstash, Kibana) and Prometheus are the eyes and ears of DevOps professionals, tracking performance, identifying issues, and optimizing system behavior.

Cloud Computing Platforms: AWS, Azure, and Google Cloud are the foundational pillars of cloud infrastructure in DevOps. They offer the infrastructure and services essential for creating and scaling cloud-based applications, facilitating the agility and flexibility required in modern software development.

Scripting and Coding: Proficiency in scripting languages such as Shell, Python, and Ruby, along with general coding skills, is an invaluable asset for DevOps professionals. It empowers the creation of automation scripts and tools, enabling customization and extensibility in the DevOps pipeline.

Collaboration and Communication Tools: Collaboration tools like Slack and Microsoft Teams enhance the communication and coordination among DevOps team members. They foster efficient collaboration and facilitate the exchange of ideas and information.

Infrastructure as Code (IaC): The concept of Infrastructure as Code, represented by tools like Terraform and AWS CloudFormation, is a pivotal practice in DevOps. It allows the definition and management of infrastructure using code, ensuring consistency and reproducibility, and enabling the rapid provisioning of resources.
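At its core, the CI/CD automation described above is a sequence of stages in which a failure stops everything downstream. The Python sketch below shows that behavior in a few lines, with hypothetical stages standing in for real build, test and deploy scripts. Tools like Jenkins, Travis CI and CircleCI add triggers, build agents, logs and parallelism on top of the same idea.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a function that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order; stop at the first failure so later stages never run."""
    completed = []
    for name, step in stages:
        if not step():
            print(f"stage '{name}' failed; skipping remaining stages")
            return False, completed
        completed.append(name)
    return True, completed


if __name__ == "__main__":
    # Hypothetical stages: a failing test blocks deployment.
    ok, done = run_pipeline([
        ("build", lambda: True),
        ("test", lambda: False),
        ("deploy", lambda: True),
    ])
    print(ok, done)
```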

Job Opportunities in DevOps:

DevOps Engineer: DevOps engineers are the architects of continuous integration and continuous deployment (CI/CD) pipelines. They meticulously design and maintain these pipelines to automate the deployment process, ensuring the rapid, reliable, and consistent release of software. Their responsibilities extend to optimizing the system's reliability, making them the backbone of seamless software delivery.

Release Manager: Release managers play a pivotal role in orchestrating the software release process. They carefully plan and schedule software releases, coordinating activities between development and IT teams. Their keen oversight ensures the smooth transition of software from development to production, enabling timely and successful releases.

Automation Architect: Automation architects are the visionaries behind the design and development of automation frameworks. These frameworks streamline deployment and monitoring processes, leveraging automation to enhance efficiency and reliability. They are the engineers of innovation, transforming manual tasks into automated wonders.

Cloud Engineer: Cloud engineers are the custodians of cloud infrastructure. They adeptly manage cloud resources, optimizing their performance and ensuring scalability. Their expertise lies in harnessing the power of cloud platforms like AWS, Azure, or Google Cloud to provide robust, flexible, and cost-effective solutions.

Site Reliability Engineer (SRE): SREs are the sentinels of system reliability. They focus on maintaining the system's resilience through efficient practices, continuous monitoring, and rapid incident response. Their vigilance ensures that applications and systems remain stable and performant, even in the face of challenges.

Security Engineer: Security engineers are the guardians of the DevOps pipeline. They integrate security measures seamlessly into the software development process, safeguarding it from potential threats and vulnerabilities. Their role is crucial in an era where security is paramount, ensuring that DevOps practices are fortified against breaches.

As DevOps continues to redefine the landscape of software development and deployment, gaining expertise in its core principles and technologies is a strategic career move. ACTE Technologies offers comprehensive DevOps training programs, led by industry experts who provide invaluable insights, real-world examples, and hands-on guidance. ACTE Technologies' DevOps training covers a wide range of essential concepts, practical exercises, and real-world applications. With a strong focus on certification preparation, ACTE Technologies ensures that you're well-prepared to excel in the world of DevOps. With their guidance, you can gain mastery over DevOps practices, enhance your skill set, and propel your career to new heights.


Why Python Development Services Are Powering the Future of Digital Innovation


Python has become one of the most trusted programming languages in the world, and its impact continues to grow across industries. From early-stage startups building MVPs to large enterprises creating data pipelines, the demand for reliable, high-quality python development services has never been higher.

What makes Python so powerful is not just its simplicity, but its adaptability. It powers everything from lightweight web applications to complex AI engines. As the digital economy accelerates, companies that leverage expert python development services can bring products to market faster, reduce infrastructure costs, and innovate with confidence.

In this article, we explore why Python is the foundation for modern software development, what to expect from a professional development partner, and how CloudAstra is helping companies worldwide scale with purpose-built Python solutions.

The Rise of Python in Software Development

Python is widely used in multiple domains for a reason. Its syntax is easy to understand, its ecosystem is vast, and its performance scales well with the right architectural practices. In the last decade, Python has dominated development in areas such as:

Web development using frameworks like Django, Flask, and FastAPI

Automation and scripting for internal tooling and DevOps

Data analysis, visualization, and reporting

AI, machine learning, and deep learning with libraries like TensorFlow and PyTorch

API development for SaaS and mobile backends

Enterprise-grade software and microservices architectures

This wide applicability makes python development services a key offering for businesses aiming to move fast and build smarter.

Who Needs Python Development Services

Python is not limited to any specific sector. Whether you are a startup founder, CTO of a SaaS company, or a product manager at a large enterprise, you can benefit from specialized python development services in several scenarios:

You need to develop an MVP quickly and cost-effectively

You’re building a backend system or API for your web/mobile product

You’re launching a machine learning feature or data-driven dashboard

You need to refactor or scale an existing legacy Python application

You want to integrate your product with third-party APIs or automate operations

CloudAstra’s Python development services are designed to support businesses in all of the above stages—from rapid prototyping to long-term scalability.

Core Features of High-Quality Python Development Services

Choosing the right development team is crucial. A strong provider of Python development services should bring the following to the table:

Deep Technical Expertise

Python may be beginner-friendly, but enterprise development requires deep architectural knowledge. Your partner should be proficient in:

Backend frameworks like Django, Flask, and FastAPI

Database optimization using PostgreSQL, MongoDB, Redis, or MySQL

Containerization using Docker and orchestration using Kubernetes

Asynchronous programming and performance tuning

CI/CD pipelines and version control workflows

CloudAstra has experience in all of these technologies, ensuring your Python application is built for reliability and growth.

Full Lifecycle Support

A reliable Python development services partner will not just write code; they will help you plan, build, test, deploy, and maintain your application.

At CloudAstra, services include product scoping, wireframing, sprint-based development, testing automation, and post-launch DevOps monitoring.

Agile Delivery

Agile methodologies allow teams to build iteratively, adapt to feedback, and reduce time to market. Each sprint adds real value, and project managers ensure transparency through demos and progress updates.

CloudAstra’s team operates in weekly or bi-weekly sprints, with continuous delivery pipelines for rapid deployment.

DevOps and Cloud-Native Integration

Python delivers its full value only when paired with modern DevOps practices. Expect your Python development services provider to use:

Terraform or Pulumi for infrastructure as code

CI/CD with GitHub Actions or GitLab pipelines

Cloud hosting via AWS, Azure, or Google Cloud

Monitoring with Prometheus, Grafana, and Sentry

CloudAstra includes these services as part of every Python project, making deployment secure, reliable, and repeatable.

Post-Launch Optimization

Launching is not the end. You’ll need to monitor usage, fix bugs, and roll out features based on user data. An ideal Python team will help you:

Implement analytics and tracking tools

Optimize performance bottlenecks

Extend modules or integrate with other systems

Automate backups and environment rollbacks

With CloudAstra’s post-launch support, you can confidently scale and evolve your application.

Use Cases Where CloudAstra Adds Value

CloudAstra offers Python solutions tailored to specific business needs:

MVP Development: Launch your startup’s core features in weeks, not months

Custom Web Portals: Build dashboards, CRMs, and customer-facing portals

SaaS Platforms: Architect and build subscription-based apps with user roles, billing, and analytics

APIs and Microservices: Design lightweight, maintainable APIs for mobile, web, or third-party use

Data Processing Systems: Create backend systems for ingesting, analyzing, and reporting large datasets

Machine Learning Integration: Embed AI functionality directly into your app workflows

CloudAstra works closely with product teams to ensure Python is implemented in the most effective and scalable way possible.

Why Businesses Choose CloudAstra

CloudAstra’s Python development services are trusted by startups, SMEs, and global brands for:

Expertise in both backend engineering and product strategy

Flexible engagement models: hourly, fixed-price, or dedicated team

Onshore/offshore hybrid teams for cost efficiency and timezone alignment

White-label and NDA-friendly solutions for agencies and consultancies

Fast response times, agile delivery, and long-term partnership approach

CloudAstra isn’t just a development team—it’s a strategic partner that helps you launch smarter, scale faster, and build better.

Final Thoughts

In an increasingly digital world, speed, quality, and scalability are non-negotiable. Investing in professional Python development services allows you to meet user expectations, reduce engineering overhead, and innovate continuously.

If you're planning to build a web platform, automate your workflows, or integrate data science features into your application, Python is the ideal foundation—and CloudAstra is the team to deliver it.

Reach out to CloudAstra today and discover how Python can power your next big product.

⚙️ YAML in DevOps: The Silent Power Behind Containers 🚢🧾

From single-service apps to multi-cloud microservices, YAML is the unsung hero powering container orchestration with simplicity and precision.

💡 Whether you're using:

🐳 Docker Compose for local development

☸️ Kubernetes for production-grade scaling

🧪 Helm & Kustomize for templating and config layering

→ YAML is your blueprint.

Here’s why YAML mastery matters:

✅ Declarative = predictable infrastructure

🧠 Easy to version control with GitOps

🔐 Supports secrets, probes, and security contexts

🔄 Plugs into your CI/CD pipeline, from commit to deploy
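Why does "declarative" mean "predictable"? A YAML manifest states the end result, and a controller works out the steps needed to reach it. The sketch below is a toy model of that reconcile idea, not Kubernetes' actual controller code. Plain dicts stand in for parsed manifests, and `plan_changes` and `apply_changes` are hypothetical names. It shows the key property: once the cluster matches the file, applying the same file again produces no further changes.

```python
from typing import Dict, List, Tuple

# Deployment name -> replica count; stands in for a parsed YAML manifest.
State = Dict[str, int]
Action = Tuple[str, str, int]


def plan_changes(desired: State, actual: State) -> List[Action]:
    """Compute the (action, name, replicas) steps that move actual toward desired."""
    actions: List[Action] = []
    for name, replicas in sorted(desired.items()):
        if name not in actual:
            actions.append(("create", name, replicas))
        elif actual[name] != replicas:
            actions.append(("scale", name, replicas))
    for name in sorted(actual):
        if name not in desired:
            actions.append(("delete", name, 0))
    return actions


def apply_changes(actual: State, actions: List[Action]) -> State:
    """Apply planned actions to a copy of the current state."""
    new_state = dict(actual)
    for action, name, replicas in actions:
        if action == "delete":
            new_state.pop(name, None)
        else:
            new_state[name] = replicas
    return new_state


if __name__ == "__main__":
    desired = {"web": 3, "worker": 2}
    actual = {"web": 1, "legacy-cron": 1}
    plan = plan_changes(desired, actual)
    print(plan)
    # Re-planning against the converged state yields no actions (idempotent).
    print(plan_changes(desired, apply_changes(actual, plan)))
```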

🔍 One misplaced indent? Disaster. One clean YAML file? Peace.

🛠️ YAML is more than syntax; it’s the DNA of modern DevOps. Want to strengthen your workflow? Combine clean YAML with smart test automation using Keploy.

How to Start a Successful DevOps Career in Dubai?

The UAE, and especially Dubai, has rapidly transformed into a global technology hub over the past decade. With its ambitious “Smart Dubai” initiative and a surge in cloud adoption across industries, the demand for DevOps professionals has skyrocketed. Companies in finance, healthcare, logistics, and e-commerce are reimagining their operations with automation, making DevOps an essential part of their IT strategy.

For those aspiring to work in the Dubai tech market, mastering DevOps practices is no longer optional; it’s a gateway to some of the most rewarding careers in the Middle East. Let’s explore how you can build a successful DevOps career in Dubai, the skills required, and why this field is one of the fastest-growing in the region.

Why Dubai Is a Hotspot for DevOps Careers

Dubai’s vision to become a global digital economy leader has accelerated the adoption of cloud computing and DevOps culture. Top enterprises and government initiatives are driving transformation projects that rely heavily on continuous integration, automation, and scalability.

The regional expansion of major cloud providers such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud has created a strong ecosystem in which DevOps professionals are highly sought after. Organizations are looking for engineers who can streamline workflows, improve software delivery, and manage complex cloud infrastructure.

Salary Insights in Dubai:

Entry-level DevOps Engineers: AED 12,000-18,000 per month

Mid-level Engineers: AED 20,000-28,000 per month

Senior DevOps Specialists: AED 30,000+ per month

The tax-free salary structure in Dubai adds to the attractiveness of these roles.

Essential DevOps Skills to Succeed in Dubai

To establish yourself in Dubai’s competitive market, it’s crucial to develop expertise in tools and practices that are in high demand:

Version Control Systems: Git for efficient collaboration.

Continuous Integration/Continuous Deployment (CI/CD): Jenkins for pipelines, alongside build tools such as Maven.

Configuration Management: Ansible to automate server configuration and workflows.

Containerization: Docker to package applications and Kubernetes for orchestration.

Cloud Infrastructure: AWS, Azure, and GCP services.

Infrastructure as Code (IaC): Terraform for building scalable environments.

Monitoring and Logging: Prometheus, Grafana, and Nagios.

Employers also value professionals who understand security, compliance, and multi-cloud strategies.

Dubai’s Growing Demand for DevOps Professionals

Across Dubai, companies are hiring DevOps experts to bridge the gap between software development and IT operations. Roles include:

DevOps Engineer

Cloud DevOps Architect

Site Reliability Engineer (SRE)

Automation Engineer

Cloud Infrastructure Engineer

Industries like aviation, finance, real estate, and retail are actively seeking certified professionals to manage cloud-native applications and drive digital transformation.

Steps to Build a Successful DevOps Career in Dubai

1. Gain In-Demand Certifications

Certification is highly valued in Dubai’s job market. Enrolling in an industry-recognized program like the DevOps Certification Program at Eduleem ensures you’re learning the right skills and tools employers require.

2. Build Practical Experience

Practical knowledge is critical. Work on real-world projects, internships, or freelance assignments to build a portfolio that stands out.

3. Understand Dubai’s Tech Culture

Networking in Dubai’s IT community and attending cloud or DevOps meetups (like AWS Dubai events) can help you stay ahead.

4. Focus on Continuous Learning

DevOps is an evolving field. Stay updated on the latest tools and practices to remain competitive.

Why I Chose Eduleem for My DevOps Journey

As an aspiring DevOps professional, I enrolled in the DevOps Certification Program at Eduleem. The program provided:

Hands-on training with tools like Git, Jenkins, Docker, Kubernetes, Terraform, and Ansible.

Mock interview preparation and resume assistance to prepare for Dubai’s competitive market.

24/7 support for questions and project guidance.

Affordable fees designed for working professionals.

100% placement support, helping me land my first DevOps role in Dubai.

With expert-led sessions and real-time projects, I built a strong portfolio that opened doors in leading Dubai-based organizations.

Begin Your DevOps Career in Dubai Today

Dubai’s tech landscape offers immense possibilities for DevOps engineers ready to embrace automation and innovation. Start your journey with the right guidance and training.

Explore DevOps training in Dubai with Eduleem and take the first step toward a high-growth career in one of the world’s leading technology hubs.

How AI is Transforming Cloud Computing in 2025

As digital transformation accelerates, two technologies continue to lead the charge: Artificial Intelligence (AI) and Cloud Computing. Individually, they offer massive benefits. But together? They’re revolutionizing how businesses operate, scale, and innovate.

AI in cloud computing is no longer a futuristic concept—it’s a present-day reality that’s enhancing data processing, optimizing resource use, and reshaping industries.

In this article, we’ll explore how AI and cloud computing work hand-in-hand, the key benefits, and emerging use cases you should know about in 2025.

What Is AI in Cloud Computing?

At its core, AI in cloud computing refers to the integration of intelligent algorithms and machine learning models within cloud platforms. It allows systems to automate tasks, analyze vast amounts of data in real time, and make predictive decisions without relying on traditional on-premises infrastructure.

This fusion brings AI’s analytical capabilities to the cloud’s scalability, creating smarter, more agile systems across various industries.

Key Benefits of AI in Cloud Computing

1. Scalability with Intelligence

Cloud platforms give organizations on-demand access to storage and compute power. Adding AI allows for intelligent auto-scaling, predictive resource allocation, and real-time optimization—making cloud environments more efficient and cost-effective.
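To make "predictive resource allocation" concrete, here is a minimal sketch. It assumes load is sampled at even intervals and that each replica handles a known, fixed request rate. It extrapolates the next interval with a least-squares trend line and sizes replicas for that forecast plus a safety margin. Real cloud predictive-scaling services use their own, more sophisticated models. The function names, the 1.2 headroom factor, and the replica limits are illustrative assumptions.

```python
import math
from typing import Sequence


def forecast_next(samples: Sequence[float]) -> float:
    """Extrapolate the next sample from an ordinary least-squares line fit."""
    n = len(samples)
    if n == 0:
        raise ValueError("need at least one sample")
    if n == 1:
        return float(samples[0])
    mean_x = (n - 1) / 2
    mean_y = sum(samples) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(samples))
    var = sum((x - mean_x) ** 2 for x in range(n))
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return intercept + slope * n  # fitted value one step past the last sample


def desired_replicas(
    samples: Sequence[float],
    capacity_per_replica: float,
    headroom: float = 1.2,
    min_replicas: int = 1,
    max_replicas: int = 20,
) -> int:
    """Size the replica count for the forecast load plus a safety margin."""
    predicted = max(forecast_next(samples), 0.0)  # load cannot go negative
    needed = math.ceil(predicted * headroom / capacity_per_replica)
    return max(min_replicas, min(max_replicas, needed))


# Requests per second over the last four intervals (illustrative numbers).
print(desired_replicas([100, 120, 140, 160], capacity_per_replica=50))  # 5
```

The forecast here is 180 requests per second, not the last observed 160. That difference is what lets the system scale ahead of demand instead of reacting after the fact.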

2. Advanced Data Analytics

AI can process and analyze massive datasets hosted on the cloud, enabling real-time insights, trend analysis, and anomaly detection. This is critical for industries like finance, healthcare, and retail, where data drives decisions.
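Anomaly detection can start far simpler than deep learning. The sketch below flags a point when it sits more than three standard deviations from the mean of the points just before it, using a rolling z-score. The window size and threshold are assumptions to tune. Production systems usually prefer robust statistics or learned models, because a single spike inflates the window it later becomes part of.

```python
from statistics import mean, stdev
from typing import List, Sequence


def zscore_anomalies(
    values: Sequence[float], window: int = 5, threshold: float = 3.0
) -> List[int]:
    """Flag indices more than `threshold` standard deviations away from
    the mean of the preceding `window` points."""
    if window < 2:
        raise ValueError("window must be at least 2 to estimate a deviation")
    anomalies: List[int] = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # A perfectly flat history: any change at all is unusual.
            if values[i] != mu:
                anomalies.append(i)
        elif abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


latency_ms = [100, 102, 98, 101, 99, 100, 250, 101]
print(zscore_anomalies(latency_ms))  # [6]
```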

3. Automation of Routine Tasks

From automated customer support to intelligent workflow orchestration, AI enables cloud-based systems to reduce manual effort. Virtual agents, smart monitoring tools, and AI-driven DevOps pipelines are just the beginning.

4. Enhanced Security and Threat Detection

Cloud-based AI models can continuously scan networks for suspicious behavior, detect threats faster, and automatically respond to security incidents—reducing risk and improving compliance.

5. Cost Optimization

AI helps businesses optimize cloud usage by predicting workloads and recommending the right instance types or configurations, helping teams avoid over-provisioning and reduce waste.
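The "recommend the right instance type" step often comes down to a constrained minimum: take the predicted peak, add headroom, and pick the cheapest type that covers it. The sketch below assumes a hypothetical instance catalog. The names, sizes, and prices are made up for illustration and are not any provider's real offerings. In practice, the peak figures would come from a workload forecast.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpus: int
    memory_gib: int
    hourly_price: float


# Hypothetical catalog for illustration only; not real provider pricing.
HYPOTHETICAL_CATALOG = [
    InstanceType("small", 2, 4, 0.05),
    InstanceType("medium", 4, 8, 0.10),
    InstanceType("compute", 8, 16, 0.25),
    InstanceType("large", 8, 32, 0.30),
]


def recommend(
    catalog: Sequence[InstanceType],
    peak_vcpus: float,
    peak_memory_gib: float,
    headroom: float = 1.25,
) -> Optional[InstanceType]:
    """Cheapest instance whose CPU and memory cover the predicted peak plus headroom."""
    need_cpu = peak_vcpus * headroom
    need_mem = peak_memory_gib * headroom
    fitting = [t for t in catalog if t.vcpus >= need_cpu and t.memory_gib >= need_mem]
    return min(fitting, key=lambda t: t.hourly_price, default=None)


print(recommend(HYPOTHETICAL_CATALOG, peak_vcpus=2.8, peak_memory_gib=5).name)  # medium
```

Returning `None` when nothing fits is deliberate. It signals that the workload should be split across instances rather than silently over-provisioned.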

Real-World Use Cases

Healthcare: AI models on the cloud process medical imaging, predict patient outcomes, and personalize treatment plans.

Finance: Banks use AI in the cloud for fraud detection, risk analysis, and real-time financial forecasting.

Retail: AI-powered recommendation engines analyze consumer-behavior data hosted on cloud platforms to deliver personalized shopping experiences.

Manufacturing: Predictive maintenance and quality control are driven by AI models running on cloud-based IoT data.

Challenges to Consider

While the benefits are clear, there are still challenges to address:

Data Privacy: Ensuring sensitive data is protected during processing and storage.

Model Training Costs: Training complex models can be resource-intensive and expensive.

Skill Gaps: Organizations need teams skilled in both cloud architecture and AI development.

That’s why strategic implementation—guided by best practices and the right cloud provider—is critical to long-term success.

Final Thoughts

The convergence of AI and cloud computing is not just enhancing how we build and deploy applications; it is fundamentally changing the speed, scale, and intelligence with which businesses operate. Whether you're leveraging cloud-based AI for automation, analytics, or enhanced customer experiences, this combination is becoming essential to staying competitive in a digital-first world.

To dive deeper into this evolving tech landscape, read this full guide on AI in Cloud Computing for additional insights, challenges, and strategic use cases.

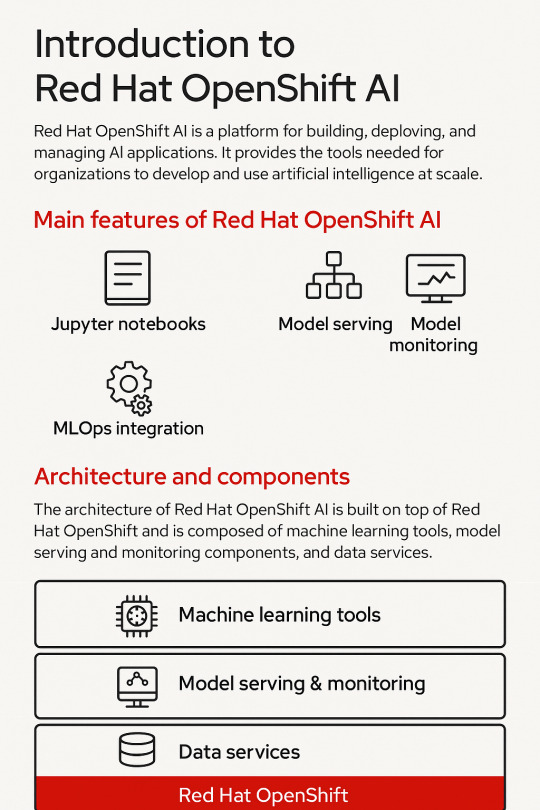

Introduction to Red Hat OpenShift AI: Features, Architecture & Components

In today’s data-driven world, organizations need a scalable, secure, and flexible platform to build, deploy, and manage artificial intelligence (AI) and machine learning (ML) models. Red Hat OpenShift AI is built precisely for that. It provides a consistent, Kubernetes-native platform for MLOps, integrating open-source tools, enterprise-grade support, and cloud-native flexibility.

Let’s break down the key features, architecture, and components that make OpenShift AI a powerful platform for AI innovation.

🔍 What is Red Hat OpenShift AI?

Red Hat OpenShift AI (formerly known as OpenShift Data Science) is a fully supported, enterprise-ready platform that brings together tools for data scientists, ML engineers, and DevOps teams. It enables rapid model development, training, and deployment on the Red Hat OpenShift Container Platform.

🚀 Key Features of OpenShift AI

1. Built for MLOps

OpenShift AI supports the entire ML lifecycle—from experimentation to deployment—within a consistent, containerized environment.

2. Integrated Jupyter Notebooks

Data scientists can use Jupyter notebooks pre-integrated into the platform, allowing quick experimentation with data and models.

3. Model Training and Serving

Use Kubernetes to scale model training jobs and deploy inference services using tools like KServe and Seldon Core.
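Deploying an inference service with KServe comes down to creating an `InferenceService` resource (API group `serving.kserve.io/v1beta1`). The sketch below builds a minimal manifest as a Python dict and prints it as JSON, which Kubernetes and OpenShift also accept as a manifest. The model name, the `sklearn` format, and the bucket URI are placeholder assumptions. OpenShift AI deployments usually pair this with a ServingRuntime and may expect extra fields or annotations, so check what your cluster's runtime requires.

```python
import json
from typing import Any, Dict


def inference_service(
    name: str,
    model_format: str,
    storage_uri: str,
    min_replicas: int = 1,
    max_replicas: int = 3,
) -> Dict[str, Any]:
    """Build a minimal KServe v1beta1 InferenceService manifest as a dict."""
    if min_replicas > max_replicas:
        raise ValueError("min_replicas must not exceed max_replicas")
    return {
        "apiVersion": "serving.kserve.io/v1beta1",
        "kind": "InferenceService",
        "metadata": {"name": name},
        "spec": {
            "predictor": {
                # Autoscaling bounds for the predictor pods.
                "minReplicas": min_replicas,
                "maxReplicas": max_replicas,
                "model": {
                    "modelFormat": {"name": model_format},
                    "storageUri": storage_uri,  # where the trained model artifact lives
                },
            }
        },
    }


if __name__ == "__main__":
    manifest = inference_service(
        "fraud-detector", "sklearn", "s3://example-bucket/models/fraud-detector/"
    )
    # Pipe this output to `oc apply -f -` to create the resource.
    print(json.dumps(manifest, indent=2))
```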

4. Security and Governance

OpenShift AI integrates enterprise-grade security, role-based access controls (RBAC), and policy enforcement using OpenShift’s built-in features.

5. Support for Open Source Tools

Seamless integration with open-source frameworks like TensorFlow, PyTorch, Scikit-learn, and ONNX for maximum flexibility.

6. Hybrid and Multicloud Ready

You can run OpenShift AI on any supported OpenShift cluster, whether on-premises or on cloud providers such as AWS, Azure, and GCP.

🧠 OpenShift AI Architecture Overview

Red Hat OpenShift AI builds upon OpenShift’s robust Kubernetes platform, adding specific components to support the AI/ML workflows. The architecture broadly consists of:

1. User Interface Layer

JupyterHub: Multi-user Jupyter notebook support.

Dashboard: UI for managing projects, models, and pipelines.

2. Model Development Layer

Notebooks: Containerized environments with GPU/CPU options.

Data Connectors: Access to S3, Ceph, or other object storage for datasets.

3. Training and Pipeline Layer

Open Data Hub and Kubeflow Pipelines: Automate ML workflows.

Ray, MPI, and Horovod: For distributed training jobs.

4. Inference Layer

KServe/Seldon: Model serving at scale with REST and gRPC endpoints.

Model Monitoring: Metrics and performance tracking for live models.

5. Storage and Resource Management

Ceph / OpenShift Data Foundation: Persistent storage for model artifacts and datasets.

GPU Scheduling and Node Management: Leverages OpenShift for optimized hardware utilization.

🧩 Core Components of OpenShift AI

| Component | Description |
|---|---|
| JupyterHub | Web-based development interface for notebooks |
| KServe/Seldon | Inference serving engines with auto-scaling |
| Open Data Hub | ML platform tools including Kafka, Spark, and more |
| Kubeflow Pipelines | Workflow orchestration for training pipelines |
| ModelMesh | Scalable, multi-model serving |
| Prometheus + Grafana | Monitoring and dashboarding for models and infrastructure |
| OpenShift Pipelines | CI/CD for ML workflows using Tekton |

🌎 Use Cases

Financial Services: Fraud detection using real-time ML models

Healthcare: Predictive diagnostics and patient risk models

Retail: Personalized recommendations powered by AI

Manufacturing: Predictive maintenance and quality control

🏁 Final Thoughts

Red Hat OpenShift AI brings together the best of Kubernetes, open-source innovation, and enterprise-level security to enable real-world AI at scale. Whether you’re building a simple classifier or deploying a complex deep learning pipeline, OpenShift AI provides a unified, scalable, and production-grade platform.

For more information, follow Hawkstack Technologies.

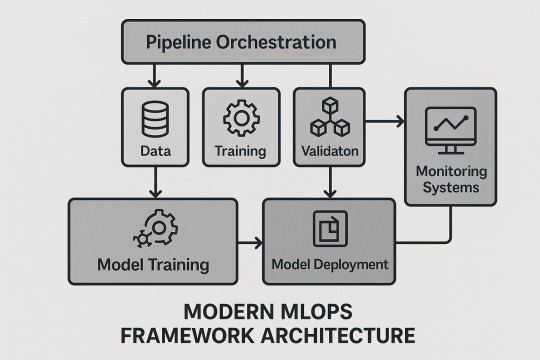

Scaling Machine Learning Operations with Modern MLOps Frameworks

The rise of business-critical AI demands sophisticated operational frameworks. Modern end-to-end machine learning pipeline frameworks combine ML best practices with DevOps, enabling scalable, reliable, and collaborative operations.

MLOps Framework Architecture

Experiment management and artifact tracking

Model registry and approval workflows

Pipeline orchestration and workflow management

Advanced Automation Strategies

Continuous integration and testing for ML

Automated retraining and rollback capabilities

Multi-stage validation and environment consistency
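Approval workflows, automated promotion, and rollback all rest on one small idea: a registry that keeps every model version and a history of which version is in production. The toy in-memory sketch below illustrates that idea. It is not the API of MLflow or any other real registry. The `auc` metric and the "must beat production" gate are assumptions standing in for a real validation stage.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ModelRegistry:
    """Toy registry: versioned metrics plus a history of production versions."""
    versions: Dict[int, Dict[str, float]] = field(default_factory=dict)
    production_history: List[int] = field(default_factory=list)

    def register(self, metrics: Dict[str, float]) -> int:
        version = len(self.versions) + 1
        self.versions[version] = metrics
        return version

    @property
    def production(self) -> Optional[int]:
        return self.production_history[-1] if self.production_history else None

    def promote_if_better(self, version: int, metric: str = "auc", min_gain: float = 0.0) -> bool:
        """Validation gate: promote only if the candidate beats current production."""
        candidate = self.versions[version][metric]
        current = self.production
        if current is None or candidate > self.versions[current][metric] + min_gain:
            self.production_history.append(version)
            return True
        return False

    def rollback(self) -> Optional[int]:
        """Revert production to the previously promoted version, if any."""
        if len(self.production_history) > 1:
            self.production_history.pop()
        return self.production


registry = ModelRegistry()
registry.promote_if_better(registry.register({"auc": 0.80}))   # v1 goes live
registry.promote_if_better(registry.register({"auc": 0.78}))   # v2 rejected
registry.promote_if_better(registry.register({"auc": 0.85}))   # v3 goes live
print(registry.production, registry.rollback())  # 3 1
```

Keeping a promotion history, rather than a single "current" pointer, is what makes rollback a one-step operation.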

Enterprise-Scale Infrastructure

Kubernetes-based and serverless ML platforms

Distributed training and inference systems

Multi-cloud and hybrid cloud orchestration

Monitoring and Observability

Multi-dimensional monitoring and predictive alerting

Root cause analysis and distributed tracing

Advanced drift and business impact analytics
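One widely used drift signal is the Population Stability Index (PSI). PSI compares how a feature's values fall into bins at training time versus in live traffic, summing (a − e) · ln(a / e) across the bins. The sketch below assumes fixed bin edges chosen from the training data. It clips out-of-range values into the end bins and floors empty bins so the logarithm stays finite. The 0.1 and 0.25 cut-offs are a common rule of thumb, not a formal standard.

```python
import math
from bisect import bisect_right
from typing import List, Sequence


def bin_proportions(
    values: Sequence[float], edges: Sequence[float], eps: float = 1e-4
) -> List[float]:
    """Share of values per bin; out-of-range values are clipped into the end
    bins, and empty bins get a small floor so the log term stays finite."""
    if not values:
        raise ValueError("values must not be empty")
    n_bins = len(edges) - 1
    counts = [0] * n_bins
    for v in values:
        idx = min(max(bisect_right(edges, v) - 1, 0), n_bins - 1)
        counts[idx] += 1
    return [max(c / len(values), eps) for c in counts]


def psi(expected: Sequence[float], actual: Sequence[float], edges: Sequence[float]) -> float:
    """Population Stability Index: sum of (a - e) * ln(a / e) over bins."""
    e = bin_proportions(expected, edges)
    a = bin_proportions(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


def drift_level(value: float) -> str:
    # Commonly quoted rule-of-thumb cut-offs, not a formal standard.
    if value < 0.1:
        return "none"
    if value < 0.25:
        return "moderate"
    return "significant"


training = list(range(100))       # feature values seen at training time
live = list(range(50, 100)) * 2   # live traffic skewed toward high values
print(drift_level(psi(training, live, [0, 25, 50, 75, 100])))  # significant
```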

Collaboration and Governance

Role-based collaboration and cross-functional workflows

Automated compliance and audit trails

Policy enforcement and risk management

Technology Stack Integration

Kubeflow, MLflow, Weights & Biases, Apache Airflow

API-first and microservices architectures

AutoML, edge computing, federated learning

Conclusion

Comprehensive end-to-end machine learning pipeline frameworks are the foundation for sustainable, scalable AI. Investing in MLOps capabilities ensures your organization can innovate, deploy, and scale machine learning with confidence and agility.

The Growing Role of DevOps in Cloud-Native Development

In today’s fast-paced digital ecosystem, businesses are rapidly shifting towards cloud-native architectures to enhance scalability, resilience, and agility. At the heart of this transformation lies a game-changer: DevOps. At VGD Technologies, we believe that integrating DevOps into cloud-native development is not just a trend—it's a competitive necessity.

What is Cloud-Native Development?

Cloud-native is more than just a buzzword. It's an approach to building and running applications that fully exploit the benefits of the cloud computing model. It focuses on:

Microservices architecture

Containerization and orchestration (e.g., Docker and Kubernetes)

Scalability and resilience

Automated CI/CD pipelines

But without DevOps, cloud-native is incomplete.

DevOps + Cloud-Native = Continuous Innovation

DevOps, the synergy of development and operations, plays a pivotal role in automating workflows, fostering collaboration, and reducing time-to-market. When paired with cloud-native practices—like microservices, containers, and serverless computing—it becomes the engine of continuous delivery and innovation. The integration of DevOps practices in cloud-native environments empowers teams to:

Automate deployments and reduce manual errors

Speed up release cycles using CI/CD pipelines

Ensure reliability and uptime through monitoring and feedback loops

Enable seamless collaboration between development and operations

Together, they create a self-sustaining ecosystem that accelerates innovation and minimizes downtime.

Why It Matters More Than Ever

With the rise of platforms like Kubernetes, Docker, and multi-cloud strategies, enterprises are prioritizing infrastructure as code (IaC), automated CI/CD pipelines, and real-time observability. DevOps ensures seamless integration of these tools into your cloud-native stack, eliminating bottlenecks and improving reliability. Key trends include:

AI-powered DevOps is on the rise

Infrastructure as Code (IaC) is the norm

Security automation is embedded from Day 1

Serverless computing is reshaping how we deploy logic

Observability is now a must-have, not a nice-to-have

At VGD Technologies, we harness these trends to deliver cloud-native solutions that scale, secure, and simplify business operations across industries.

Real-World Impact

Companies adopting DevOps in their cloud-native journey report:

30–50% faster time-to-market

Significant cost reduction in operations

Improved user experience & satisfaction

From startups to enterprise-level businesses, this approach is transforming the way software delivers value.

VGD Technologies’ Cloud-Native DevOps Expertise

At VGD Technologies, we help enterprises build cloud-native applications powered by DevOps best practices. Our solutions are designed to:

Deliver faster releases

Automate infrastructure provisioning

Enable zero-downtime deployments

Implement proactive monitoring and alerts

Enhance scalability through container orchestration

Strengthen security posture

Reduce operational overhead

From startups to large-scale enterprises, our clients trust us to deliver robust, scalable, and future-ready applications that accelerate digital transformation.

What’s Next?

As businesses continue to adopt AI/ML, IoT, and edge computing, the fusion of DevOps and cloud-native development will become even more vital. Investing in DevOps today means you're building a foundation for tomorrow’s innovation.

Let’s Talk DevOps-Driven Digital Transformation

Looking to future-proof your applications with a cloud-native DevOps strategy?

Discover how we can help your business grow at: www.vgdtechnologies.com

#DevOps#CloudNative#DigitalTransformation#Kubernetes#Microservices#Serverless#CloudComputing#CICD#InfrastructureAsCode#TechInnovation#VGDTechnologies#FutureOfTech#EnterpriseIT#DevOpsCulture#SoftwareEngineering#ModernDevelopment#AgileDevelopment#AutomationInTech#FullStackDev#CloudSolutions

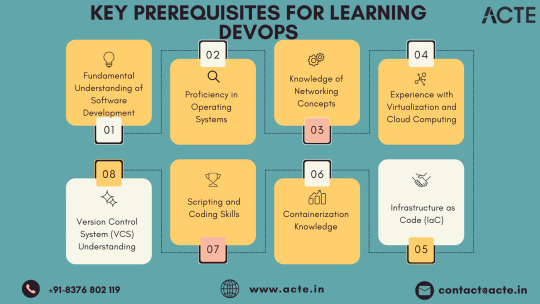

DevOps Landscape: Building Blocks for a Seamless Transition

In the fast-moving realm where software development intersects with operations, the role of a DevOps professional has become instrumental. For individuals aspiring to move into this field, understanding the key building blocks can set the stage for a successful transition. While there are no rigid prerequisites, acquiring foundational skills and knowledge areas becomes pivotal for thriving in a DevOps role.

1. Embracing the Essence of Software Development: At the core of DevOps lies collaboration, making it essential for individuals to have a fundamental understanding of software development processes. Proficiency in coding practices, version control, and the collaborative nature of development projects is paramount. Additionally, a solid grasp of programming languages and scripting adds a valuable dimension to one's skill set.

2. Navigating System Administration Fundamentals: DevOps success is intricately linked to a foundational understanding of system administration. This encompasses knowledge of operating systems, networks, and infrastructure components. Such familiarity empowers DevOps professionals to adeptly manage and optimize the underlying infrastructure supporting applications.

3. Mastery of Version Control Systems: Proficiency in version control systems, with Git taking a prominent role, is indispensable. Version control serves as the linchpin for efficient code collaboration, allowing teams to track changes, manage codebases, and seamlessly integrate contributions from multiple developers.

4. Scripting and Automation Proficiency: Automation is a central tenet of DevOps, emphasizing the need for scripting skills in languages like Python, Shell, or Ruby. This skill set enables individuals to automate repetitive tasks, fostering more efficient workflows within the DevOps pipeline.

5. Embracing Containerization Technologies: The widespread adoption of containerization technologies such as Docker, and of orchestration tools like Kubernetes, calls for a solid understanding of both. Mastery of these technologies is pivotal for creating consistent and reproducible environments and for managing scalable applications.

6. Unveiling CI/CD Practices: Continuous Integration and Continuous Deployment (CI/CD) practices form the beating heart of DevOps. Acquiring knowledge of CI/CD tools such as Jenkins, GitLab CI, or Travis CI is essential. This proficiency ensures the automated execution of code testing, integration, and deployment processes, streamlining development pipelines.

7. Harnessing Infrastructure as Code (IaC): Proficiency in Infrastructure as Code (IaC) tools, including Terraform or Ansible, constitutes a fundamental aspect of DevOps. IaC facilitates the codification of infrastructure, enabling the automated provisioning and management of resources while ensuring consistency across diverse environments.

8. Fostering a Collaborative Mindset: Effective communication and collaboration skills are non-negotiable in the DevOps sphere. The ability to seamlessly collaborate with cross-functional teams, spanning development, operations, and various stakeholders, lays the groundwork for a culture of collaboration essential to DevOps success.

9. Navigating Monitoring and Logging Realms: Proficiency in monitoring tools such as Prometheus and log analysis tools like the ELK stack is indispensable for maintaining application health. Proactive monitoring equips teams to identify issues in real time and troubleshoot effectively.

10. Embracing a Continuous Learning Journey: DevOps is characterized by its dynamic nature, with new tools and practices continually emerging. A commitment to continuous learning and adaptability to emerging technologies is a fundamental trait for success in the ever-evolving field of DevOps.
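Points 4 and 9 meet in everyday work: small scripts that watch logs and fail loudly. The sketch below counts log levels in a file and exits with a non-zero code when the error rate crosses a threshold, so a CI job or cron wrapper can flag the run as failed. It assumes each line carries a level word such as `ERROR` or `INFO`. The 5% threshold and the `check_logs.py` file name are illustrative assumptions.

```python
import re
import sys
from collections import Counter
from typing import Iterable

# Assumes the level appears as a standalone word somewhere in each line.
LEVEL_RE = re.compile(r"\b(DEBUG|INFO|WARNING|ERROR|CRITICAL)\b")


def count_levels(lines: Iterable[str]) -> Counter:
    """Tally the first log level found on each line."""
    counts: Counter = Counter()
    for line in lines:
        match = LEVEL_RE.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


def error_rate(counts: Counter) -> float:
    """Fraction of leveled lines that are ERROR or CRITICAL."""
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return (counts["ERROR"] + counts["CRITICAL"]) / total


def main(path: str, max_error_rate: float = 0.05) -> int:
    with open(path, encoding="utf-8", errors="replace") as handle:
        counts = count_levels(handle)
    rate = error_rate(counts)
    print(f"levels={dict(counts)} error_rate={rate:.2%}")
    # Non-zero exit code signals failure to whatever scheduled the script.
    return 1 if rate > max_error_rate else 0


if __name__ == "__main__":
    if len(sys.argv) == 2:
        sys.exit(main(sys.argv[1]))
    print("usage: check_logs.py LOGFILE", file=sys.stderr)
```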

In summary, while the transition to a DevOps role may not have rigid prerequisites, the acquisition of these foundational skills and knowledge areas becomes the bedrock for a successful journey. DevOps transcends being a mere set of practices; it embodies a cultural shift driven by collaboration, automation, and an unwavering commitment to continuous improvement. By embracing these essential building blocks, individuals can navigate their DevOps journey with confidence and competence.

Why AIOps Platform Development Is Critical for Modern IT Operations?

In today's rapidly evolving digital world, modern IT operations are more complex than ever. With the proliferation of cloud-native applications, distributed systems, and hybrid infrastructure models, the traditional ways of managing IT systems are proving insufficient. Enter AIOps — Artificial Intelligence for IT Operations — a transformative approach that leverages machine learning, big data, and analytics to automate and enhance IT operations.

In this blog, we'll explore why AIOps platform development is not just beneficial but critical for modern IT operations, how it transforms incident management, and what organizations should consider when building or adopting such platforms.

The Evolution of IT Operations

Traditional IT operations relied heavily on manual intervention, rule-based monitoring, and reactive problem-solving. As systems grew in complexity and scale, IT teams found themselves overwhelmed by alerts, slow in diagnosing root causes, and inefficient in resolving incidents.

Today’s IT environments include:

Hybrid cloud infrastructure

Microservices and containerized applications

Real-time data pipelines

Continuous integration and deployment (CI/CD)

This complexity has led to:

Alert fatigue due to an overwhelming volume of monitoring signals

Delayed incident resolution from lack of visibility and contextual insights

Increased downtime and degraded customer experience

This is where AIOps platforms come into play.

What Is AIOps?

AIOps (Artificial Intelligence for IT Operations) is a methodology that applies artificial intelligence (AI) and machine learning (ML) to enhance and automate IT operational processes.

An AIOps platform typically offers:

Real-time monitoring and analytics

Anomaly detection

Root cause analysis

Predictive insights

Automated remediation and orchestration

By ingesting vast amounts of structured and unstructured data from multiple sources (logs, metrics, events, traces, etc.), AIOps platforms can provide holistic visibility, reduce noise, and empower IT teams to focus on strategic initiatives rather than reactive firefighting.

Why AIOps Platform Development Is Critical

1. Managing Scale and Complexity

Modern IT infrastructures are dynamic, with components spinning up and down in real time. Traditional monitoring tools can't cope with this level of volatility. AIOps platforms are designed to ingest and process large-scale data in real time, adapting to changing environments with minimal manual input.

2. Reducing Alert Fatigue

AIOps uses intelligent noise reduction techniques such as event correlation and clustering to cut through the noise. Instead of bombarding IT teams with thousands of alerts, an AIOps system can prioritize and group related incidents, reducing false positives and highlighting what's truly important.
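Event correlation starts with a simple premise: alerts that share a source and arrive close together probably describe one incident. The sketch below groups alerts by service and merges each new alert into that service's open group when it arrives within a time window of the group's latest alert. Real AIOps platforms also use topology, text similarity, and learned clustering. The five-minute window and the `Alert` fields are assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Alert:
    timestamp: float  # seconds since some reference point
    service: str
    message: str


def correlate(alerts: List[Alert], window_seconds: float = 300.0) -> List[List[Alert]]:
    """Group same-service alerts whose gap to the previous alert is within the window."""
    groups: List[List[Alert]] = []
    open_group: Dict[str, List[Alert]] = {}
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        group = open_group.get(alert.service)
        if group is not None and alert.timestamp - group[-1].timestamp <= window_seconds:
            group.append(alert)
        else:
            # Start a new incident group for this service.
            group = [alert]
            groups.append(group)
            open_group[alert.service] = group
    return groups


alerts = [
    Alert(0, "checkout", "latency high"),
    Alert(60, "checkout", "5xx spike"),
    Alert(90, "db", "slow queries"),
    Alert(120, "checkout", "pod restart"),
    Alert(1000, "checkout", "latency high"),
]
print([len(g) for g in correlate(alerts)])  # [3, 1, 1]
```

Five raw alerts collapse into three groups. On a busy system, the same approach can turn thousands of alerts into a short list of incidents.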

3. Accelerating Root Cause Analysis

With ML algorithms, AIOps platforms can automatically trace issues to their root cause, analyzing patterns and anomalies across multiple data sources. This reduces Mean Time to Detect (MTTD) and Mean Time to Resolve (MTTR), which are key performance indicators for IT operations.
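MTTD and MTTR are plain averages over incident timestamps, so they are easy to compute once incidents are recorded consistently. The sketch below assumes each incident logs when the fault started, when it was detected, and when it was resolved. It measures MTTR from detection to resolution. Some teams measure from the start of the fault instead, so agree on one definition before comparing numbers.

```python
from datetime import datetime, timedelta
from statistics import mean
from typing import NamedTuple, Sequence


class Incident(NamedTuple):
    started: datetime   # when the fault actually began
    detected: datetime  # when an alert or a person noticed it
    resolved: datetime  # when service was restored


def mttd(incidents: Sequence[Incident]) -> timedelta:
    """Mean Time to Detect: average of detected - started."""
    return timedelta(seconds=mean((i.detected - i.started).total_seconds() for i in incidents))


def mttr(incidents: Sequence[Incident]) -> timedelta:
    """Mean Time to Resolve, measured here from detection to resolution."""
    return timedelta(seconds=mean((i.resolved - i.detected).total_seconds() for i in incidents))


day = datetime(2024, 1, 1)
incidents = [
    Incident(day.replace(hour=10), day.replace(hour=10, minute=10), day.replace(hour=10, minute=40)),
    Incident(day.replace(hour=12), day.replace(hour=12, minute=20), day.replace(hour=13, minute=20)),
]
print(mttd(incidents), mttr(incidents))  # 0:15:00 0:45:00
```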

4. Predicting and Preventing Incidents

One of the key strengths of AIOps is its predictive capability. By identifying patterns that precede failures, AIOps can proactively warn teams before issues impact end-users. Predictive analytics can also forecast capacity issues and performance degradation, enabling proactive optimization.
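Capacity forecasting can begin with a trend line. The sketch below fits a least-squares line to evenly spaced disk-usage samples and estimates how long until usage reaches a limit. That estimate is enough to raise a warning hours before a volume fills up. It assumes roughly linear growth, which real workloads often violate, so treat the output as an early-warning hint rather than a guarantee. The hourly interval and 100% limit are assumptions.

```python
from typing import Optional, Sequence


def hours_until_full(
    usage_pct: Sequence[float], interval_hours: float = 1.0, limit_pct: float = 100.0
) -> Optional[float]:
    """Estimate hours until usage reaches limit_pct from a least-squares trend.
    Returns None when usage is flat or shrinking."""
    n = len(usage_pct)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = (n - 1) / 2
    mean_y = sum(usage_pct) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(usage_pct))
    var = sum((x - mean_x) ** 2 for x in range(n))
    slope = cov / var  # percentage points gained per sample interval
    if slope <= 0:
        return None
    # Project forward from the latest observation; already-full counts as zero.
    remaining = max(limit_pct - usage_pct[-1], 0.0)
    return remaining / slope * interval_hours


print(hours_until_full([70, 72, 74, 76]))  # 12.0
```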

5. Driving Automation and Remediation

AIOps platforms don’t just detect problems; they can also resolve them autonomously. By integrating with automation and orchestration tools such as Ansible, Puppet, or Kubernetes, an AIOps solution can trigger self-healing workflows or automated scripts, reducing human intervention and improving response times.

6. Supporting DevOps and SRE Practices

As organizations adopt DevOps and Site Reliability Engineering (SRE), AIOps provides the real-time insights and automation required to manage CI/CD pipelines, ensure system reliability, and enable faster deployments without compromising stability.

7. Enhancing Observability

Observability — the ability to understand what's happening inside a system based on outputs like logs, metrics, and traces — is foundational to modern IT. AIOps platforms extend observability by correlating disparate data, applying context, and providing intelligent visualizations that guide better decision-making.

Key Capabilities of a Robust AIOps Platform

When developing or evaluating an AIOps platform, organizations should prioritize the following features:

Data Integration: Ability to ingest data from monitoring tools, cloud platforms, log aggregators, and custom sources.

Real-time Analytics: Stream processing and in-memory analytics to provide immediate insights.

Machine Learning: Supervised and unsupervised learning to detect anomalies, predict issues, and learn from operational history.

Event Correlation: Grouping and contextualizing events from across the stack.

Visualization Dashboards: Unified views with drill-down capabilities for root cause exploration.

Workflow Automation: Integration with ITSM tools and automation platforms for closed-loop remediation.

Scalability: Cloud-native architecture that can scale horizontally as the environment grows.
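
As a baseline for the anomaly-detection capability above, many teams start with a purely statistical check before introducing trained ML models. The sketch flags a point when it lies more than `threshold` standard deviations from the mean of the preceding `window` points. The window size and threshold are assumptions to tune per metric. This is a rolling z-score rule, a stand-in for the supervised and unsupervised learning mentioned above, not an implementation of it.

```python
from statistics import mean, pstdev
from typing import List, Sequence


def zscore_anomalies(values: Sequence[float], window: int = 5,
                     threshold: float = 3.0) -> List[int]:
    """Return indices whose value deviates from the mean of the preceding
    `window` points by more than `threshold` standard deviations."""
    anomalies: List[int] = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = pstdev(history)
        if sigma == 0:
            # Perfectly flat history: any change at all counts as anomalous.
            if values[i] != mu:
                anomalies.append(i)
            continue
        if abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


latency_ms = [100, 102, 98, 101, 99, 100, 250, 101]
print(zscore_anomalies(latency_ms))  # [6]
```

Note that the spike itself inflates the baseline for the next few points, which is one reason real platforms prefer robust statistics or learned models.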

AIOps in Action: Real-World Use Cases

Let’s look at how companies across industries are leveraging AIOps to improve their operations:

E-commerce: A major retailer uses AIOps to monitor application health across multiple regions. The platform predicts traffic spikes, balances load, and automatically scales resources — all in real time.

Financial Services: A global bank uses AIOps to reduce fraud detection time by correlating transactional logs with infrastructure anomalies.

Healthcare: A hospital network deploys AIOps to ensure uptime for mission-critical systems like electronic medical records (EMRs), detecting anomalies before patient care is affected.

Future of AIOps: What Lies Ahead?

As AIOps matures, we can expect deeper integration with adjacent technologies:

Generative AI for Incident Resolution: Intelligent agents that recommend fixes, draft playbooks, or even explain anomalies in plain language.

Edge AI for Distributed Systems: Bringing AI-driven observability to edge devices and IoT environments.

Conversational AIOps: Integrating with collaboration tools like Slack, Microsoft Teams, or voice assistants to simplify access to insights.

Continuous Learning Systems: AIOps platforms that evolve autonomously, refining their models as they process more data.

The synergy between AI, automation, and human expertise will define the next generation of resilient, scalable, and intelligent IT operations.

Conclusion

The shift toward AIOps is not just a trend; it's a necessity for businesses aiming to remain competitive and resilient in an increasingly digital-first world. As IT infrastructures become more dynamic, distributed, and data-intensive, the ability to respond in real time, detect issues before they escalate, and automate responses is mission-critical.

Developing an AIOps platform isn’t about replacing humans with machines — it’s about amplifying human capabilities with intelligent, data-driven automation. Organizations that invest in AIOps today will be better equipped to handle the challenges of tomorrow’s IT landscape, ensuring performance, reliability, and innovation at scale.

Governance Without Boundaries - CP4D and Red Hat Integration

The rising complexity of hybrid and multi-cloud environments calls for stronger and more unified data governance. When systems operate in isolation, they introduce risks, make compliance harder, and slow down decision-making. As digital ecosystems expand, consistent governance across infrastructure becomes more than a goal; it becomes a necessity. A cohesive strategy helps maintain control as platforms and regions scale together.

IBM Cloud Pak for Data (CP4D), working alongside Red Hat OpenShift, offers a container-based platform that addresses these challenges head-on. That setup makes it easier to scale governance consistently, no matter the environment. With container orchestration in place, governance rules stay enforced regardless of where the data lives. This alignment helps prevent policy drift and supports data integrity in high-compliance sectors.

Watson Knowledge Catalog (WKC) sits at the heart of CP4D’s governance tools, offering features for data discovery, classification, and controlled access. WKC lets teams organize assets, apply consistent metadata, and manage permissions across hybrid or multi-cloud systems. Centralized oversight reduces complexity and brings transparency to how data is used. It also facilitates collaboration by giving teams a shared framework for managing data responsibilities.

Red Hat OpenShift brings added flexibility by letting services like data lineage, cataloging, and enforcement run in modular, scalable containers. These components adjust to different workloads and grow as demand increases. That level of adaptability is key for teams managing dynamic operations across multiple functions. This flexibility ensures governance processes can evolve alongside changing application architectures.

Kubernetes, which powers OpenShift’s orchestration, supports governance services through automated workload scheduling and efficient resource allocation. This automation helps keep governance workloads performing steadily while they enforce privacy and audit requirements. By handling deployment and scaling behind the scenes, it reduces the burden on IT teams. With fewer manual tasks, organizations can focus more on long-term strategy.

A global business responding to data subject access requests (DSARs) across different jurisdictions can use CP4D to streamline the entire process. CP4D’s built-in governance tools support compliant responses under GDPR, CCPA, and other regulatory frameworks. Faster identification and retrieval of the relevant data reduces the risk of penalties while improving public trust.
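
At its core, answering a DSAR means finding every record tied to one person across many systems. The sketch below shows that retrieval step in isolation. The store names, the identifying field names and `find_subject_records` are hypothetical, and it is not how CP4D implements DSAR handling. Matching is case-insensitive on email-like identifiers, an assumption that real systems usually extend with identity resolution.

```python
from typing import Dict, List, Sequence

Record = Dict[str, str]


def find_subject_records(stores: Dict[str, List[Record]], subject_id: str,
                         id_fields: Sequence[str] = ("email", "customer_email"),
                         ) -> Dict[str, List[Record]]:
    """Collect, per store, every record whose identifying fields match the
    data subject. Stores with no matches are omitted from the result."""
    wanted = subject_id.strip().lower()
    results: Dict[str, List[Record]] = {}
    for store_name, records in stores.items():
        matches = [r for r in records
                   if any(r.get(f, "").strip().lower() == wanted for f in id_fields)]
        if matches:
            results[store_name] = matches
    return results


stores = {
    "crm": [{"email": "Ana@Example.com", "name": "Ana"},
            {"email": "bo@example.com", "name": "Bo"}],
    "billing": [{"customer_email": "ana@example.com", "invoice": "INV-1"}],
    "support": [{"email": "cy@example.com", "ticket": "T-9"}],
}
report = find_subject_records(stores, "ana@example.com")
```

Here `report` contains Ana's CRM entry and her invoice, and nothing from the support system. The hard part in practice is knowing which stores and fields to search, which is exactly what a governed catalog provides.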

CP4D’s tools for discovering and classifying data work across formats, from real-time streams to long-term storage. They help organizations identify sensitive content, apply safeguards, and stay aligned with privacy rules. Automation cuts down on human error and reinforces sound data handling practices. As data volumes grow, these automated capabilities help maintain performance and consistency.
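
Automated classification typically starts by testing sample values in each column against known patterns. The sketch below illustrates that idea with two deliberately simple regular expressions and a match-ratio threshold. The patterns, the 0.8 ratio and the function names are assumptions for illustration only. They are not Watson Knowledge Catalog's data classes or API, which use far richer rules and validation.

```python
import re
from typing import Dict, List, Set

# Hypothetical, intentionally simple patterns for two sensitive data classes.
PATTERNS: Dict[str, "re.Pattern[str]"] = {
    "email": re.compile(r"^[\w.+-]+@[\w-]+(\.[\w-]+)+$"),
    "us_ssn": re.compile(r"^\d{3}-\d{2}-\d{4}$"),
}


def classify_column(values: List[str], min_match_ratio: float = 0.8) -> Set[str]:
    """Return the data classes matched by at least min_match_ratio of the
    column's non-empty values."""
    non_empty = [v.strip() for v in values if v and v.strip()]
    if not non_empty:
        return set()
    labels: Set[str] = set()
    for label, pattern in PATTERNS.items():
        hits = sum(1 for v in non_empty if pattern.match(v))
        if hits / len(non_empty) >= min_match_ratio:
            labels.add(label)
    return labels


def classify_table(table: Dict[str, List[str]]) -> Dict[str, Set[str]]:
    """Classify every column of a table given as {column_name: values}."""
    return {column: classify_column(values) for column, values in table.items()}


sample = {
    "contact": ["a@example.com", "b.c@example.org", ""],
    "tax_id": ["123-45-6789", "987-65-4321"],
    "notes": ["call back", "n/a"],
}
labels = classify_table(sample)
```

The ratio threshold tolerates a few malformed or blank values, so a column is still tagged when most, but not all, of its contents look sensitive.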

Lineage tracking offers a clear view of how data moves through DevOps workflows and analytics pipelines. By following its origin, transformation, and application, teams can trace issues, confirm quality, and document compliance. CP4D’s built-in tools make it easier to maintain trust in how data is handled across environments.
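
Under the hood, lineage is a directed graph: each transformation records which inputs produced which output, and "where did this come from?" becomes a graph traversal. The sketch below is a hypothetical minimal model, not CP4D's lineage service. `LineageGraph` and the dataset names are invented for illustration, and it uses a breadth-first search over parent links.

```python
from collections import defaultdict, deque
from typing import Dict, List, Set


class LineageGraph:
    """Record which datasets each dataset was derived from and answer
    upstream (provenance) queries."""

    def __init__(self) -> None:
        self.parents: Dict[str, Set[str]] = defaultdict(set)

    def record(self, output: str, inputs: List[str]) -> None:
        # One transformation step: `output` was produced from `inputs`.
        self.parents[output].update(inputs)

    def upstream(self, dataset: str) -> Set[str]:
        """All datasets that `dataset` depends on, directly or indirectly."""
        seen: Set[str] = set()
        queue = deque([dataset])
        while queue:
            current = queue.popleft()
            # .get avoids creating empty entries in the defaultdict.
            for parent in self.parents.get(current, set()):
                if parent not in seen:
                    seen.add(parent)
                    queue.append(parent)
        return seen


g = LineageGraph()
g.record("orders_clean", ["orders_raw"])
g.record("customers_clean", ["crm_export"])
g.record("revenue_report", ["orders_clean", "customers_clean"])
```

Asking for the upstream of `revenue_report` returns both cleaned tables and both raw sources. That is the chain an auditor or an engineer chasing a data-quality issue would need to inspect.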

Tight integration with enterprise identity and access management (IAM) systems strengthens governance through precise controls. It ensures only the right people have access to sensitive data, aligning with internal security frameworks. Centralized identity systems also simplify onboarding, access changes, and audit trails.
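
The precise controls described above usually reduce to a role-based check plus an audit record for every decision. The sketch below shows that pairing in a deny-by-default form. The role names, classification labels and functions are hypothetical. It does not model how CP4D integrates with any specific IAM product.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple

# Hypothetical mapping: which data classifications each role may read.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "analyst": {"public", "internal"},
    "data_steward": {"public", "internal", "confidential"},
    "privacy_officer": {"public", "internal", "confidential", "pii"},
}


@dataclass
class User:
    name: str
    roles: Set[str] = field(default_factory=set)


def can_read(user: User, classification: str) -> bool:
    """Allow access if any of the user's roles permits the classification.
    Unknown roles grant nothing (deny by default)."""
    return any(classification in ROLE_PERMISSIONS.get(role, set())
               for role in user.roles)


def check_access(user: User, classification: str,
                 audit_log: List[Tuple[str, str, bool]]) -> bool:
    """Decide and record the decision, so every access attempt is auditable."""
    allowed = can_read(user, classification)
    audit_log.append((user.name, classification, allowed))
    return allowed
```

Logging denials as well as grants matters: the audit trail is often more useful for spotting misuse attempts than for confirming routine access.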

When governance tools are built into the data lifecycle from the beginning, compliance becomes part of the system rather than something added later. This helps avoid retroactive fixes and supports responsible practices from day one. Governance shifts from a task to a foundation of how data is managed.

As regulations multiply and workloads shift, scalable governance is no longer a luxury. It is a requirement. Open, container-driven architectures give organizations the flexibility to meet evolving standards, secure their data, and adapt quickly.
