#Azure Data Catalog

Explore tagged Tumblr posts

Text

Databricks Unity Catalog - Data Governance | Learn Azure Databricks

Learn more at Best place to learn Data engineering, Bigdata, Apache Spark, Databricks, Apache … source

0 notes

Text

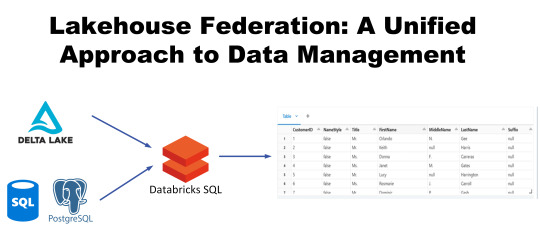

Lakehouse Federation Best Practices

Step into the future of data management with the revolutionary Lakehouse Federation. Envision a world where data lakes and data warehouses merge, creating a formidable powerhouse for data handling. In today’s digital age, where data pours in from every corner, relying on traditional methods can leave you in the lurch. Enter Lakehouse Federation, a game-changer that harnesses the best of both…

#Azure Databricks#Databricks Delta Sharing#Databricks Lakehouse#Databricks Lakehouse Federation#Databricks Unity catalog#Query Federation#unified data management#Unity catalog External Tables

0 notes

Text

A Complete Guide to Mastering Microsoft Azure for Tech Enthusiasts

As technology advances rapidly, businesses around the world are shifting to cloud computing to enhance their operations and stay ahead of the competition. Microsoft Azure, a powerful cloud computing platform, offers a wide range of services and solutions for various industries. This comprehensive guide aims to provide tech enthusiasts with an in-depth understanding of Microsoft Azure, its features, and how to leverage its capabilities to drive innovation and success.

Understanding Microsoft Azure

Azure is the cloud computing platform and set of services offered by Microsoft. It provides reliable and scalable solutions for businesses to build, deploy, and manage applications and services through Microsoft-managed data centers. Azure offers a vast array of services, including virtual machines, storage, databases, networking, and more, enabling businesses to optimize their IT infrastructure and accelerate their digital transformation.

Cloud Computing and its Significance

Cloud computing has revolutionized the IT industry by providing on-demand access to a shared pool of computing resources over the internet. It eliminates the need for businesses to maintain physical hardware and infrastructure, reducing costs and improving scalability. Microsoft Azure embraces cloud computing principles to enable businesses to focus on innovation rather than infrastructure management.

Key Features and Benefits of Microsoft Azure

Scalability: Azure provides the flexibility to scale resources up or down based on workload demands, ensuring optimal performance and cost efficiency.

Vertical Scaling: Increase or decrease the size of resources (e.g., virtual machines) within Azure.

Horizontal Scaling: Expand or reduce the number of instances across Azure services to meet changing workload requirements.

Reliability and Availability: Microsoft Azure ensures high availability through its globally distributed data centers, redundant infrastructure, and automatic failover capabilities.

Service Level Agreements (SLAs): Guarantees high availability, with SLAs covering different services.

Availability Zones: Distributes resources across multiple data centers within a region to ensure fault tolerance.

Security and Compliance: Azure incorporates robust security measures, including encryption, identity and access management, threat detection, and regulatory compliance adherence.

Azure Security Center (since renamed Microsoft Defender for Cloud): Provides centralized security monitoring, threat detection, and compliance management.

Compliance Certifications: Azure complies with various industry-specific security standards and regulations.

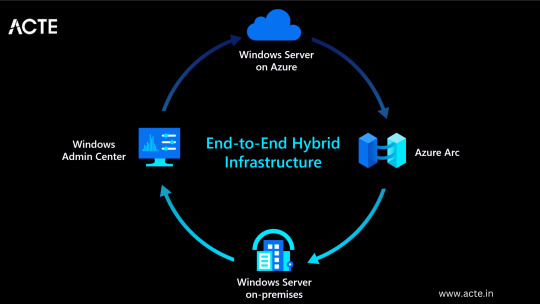

Hybrid Capability: Azure seamlessly integrates with on-premises infrastructure, allowing businesses to extend their existing investments and create hybrid cloud environments.

Azure Stack: Enables organizations to build and run Azure services on-premises, in their own data centers.

Virtual Network Connectivity: Establish secure connections between on-premises infrastructure and Azure services.

Cost Optimization: Azure provides cost-effective solutions, offering pricing models based on consumption, reserved instances, and cost management tools.

Azure Cost Management: Helps businesses track and optimize their cloud spending, providing insights and recommendations.

Azure Reserved Instances: Allows for significant cost savings by committing to long-term usage of specific Azure services.

Extensive Service Catalog: Azure offers a wide range of services and tools, including app services, AI and machine learning, Internet of Things (IoT), analytics, and more, empowering businesses to innovate and transform digitally.
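To make the cost optimization point concrete, here is a minimal sketch comparing pay-as-you-go and reserved pricing. The hourly rates are hypothetical numbers chosen for illustration, not Azure prices, and the function names are assumptions of this example. It assumes the reservation is billed for every hour of the month, while pay-as-you-go is billed only for hours the resource actually runs.

```python
HOURS_PER_MONTH = 730  # common approximation used for monthly estimates


def monthly_cost(hourly_rate: float, hours: float = HOURS_PER_MONTH) -> float:
    """Cost of running one resource for `hours` at `hourly_rate`."""
    return round(hourly_rate * hours, 2)


def reserved_savings_percent(payg_rate: float, reserved_rate: float) -> float:
    """Savings of the reserved rate relative to pay-as-you-go, at full utilization."""
    return round(100 * (1 - reserved_rate / payg_rate), 1)


def break_even_utilization(payg_rate: float, reserved_rate: float) -> float:
    """Fraction of the month a resource must run before reserving is cheaper."""
    return round(reserved_rate / payg_rate, 3)


# Hypothetical rates, for illustration only.
PAYG_RATE = 0.20
RESERVED_RATE = 0.12

print(monthly_cost(PAYG_RATE), monthly_cost(RESERVED_RATE))
print(reserved_savings_percent(PAYG_RATE, RESERVED_RATE))
print(break_even_utilization(PAYG_RATE, RESERVED_RATE))
```

With these made-up rates, a reservation only pays off if the resource runs for more than about 60% of the month, which is why reservations suit steady workloads rather than bursty ones.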

Learning Path for Microsoft Azure

To master Microsoft Azure, tech enthusiasts can follow a structured learning path that covers the fundamental concepts, hands-on experience, and specialized skills required to work with Azure effectively. I advise looking at the ACTE Institute, which offers a comprehensive Microsoft Azure Course.

Foundational Knowledge

Familiarize yourself with cloud computing concepts, including Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS).

Understand the core components of Azure, such as Azure Resource Manager, Azure Virtual Machines, Azure Storage, and Azure Networking.

Explore Azure architecture and the various deployment models available.

Hands-on Experience

Create a free Azure account to access the Azure portal and start experimenting with the platform.

Practice creating and managing virtual machines, storage accounts, and networking resources within the Azure portal.

Deploy sample applications and services using Azure App Service, Azure Functions, and Azure container services such as Azure Container Instances.

Certification and Specializations

Pursue Azure certifications to validate your expertise in Azure technologies. Microsoft offers role-based certifications, including Azure Administrator, Azure Developer, and Azure Solutions Architect.

Gain specialization in specific Azure services or domains, such as Azure AI Engineer, Azure Data Engineer, or Azure Security Engineer. These specializations demonstrate a deeper understanding of specific technologies and scenarios.

Best Practices for Azure Deployment and Management

Deploying and managing resources effectively in Microsoft Azure requires adherence to best practices to ensure optimal performance, security, and cost efficiency. Consider the following guidelines:

Resource Group and Azure Subscription Organization

Organize resources within logical resource groups to manage and govern them efficiently.

Leverage Azure Management Groups to establish hierarchical structures for managing multiple subscriptions.

Security and Compliance Considerations

Implement robust identity and access management mechanisms, such as Azure Active Directory (now Microsoft Entra ID).

Enable encryption at rest and in transit to protect data stored in Azure services.

Regularly monitor and audit Azure resources for security vulnerabilities.

Ensure compliance with industry-specific standards, such as ISO 27001, HIPAA, or GDPR.

Scalability and Performance Optimization

Design applications to take advantage of Azure’s scalability features, such as autoscaling and load balancing.

Leverage Azure CDN (Content Delivery Network) for efficient content delivery and improved performance worldwide.

Optimize resource configurations based on workload patterns and requirements.
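The autoscaling idea mentioned above can be sketched as a simple target-tracking rule. This is an illustration of the concept only: `AutoscalePolicy` and `desired_instances` are hypothetical names, not Azure API objects, and real autoscale settings also include cooldown periods and multiple metrics.

```python
import math
from dataclasses import dataclass


@dataclass
class AutoscalePolicy:
    """Hypothetical scale-out/scale-in rule; not an Azure API object."""
    min_instances: int = 2
    max_instances: int = 10
    target_cpu_percent: float = 60.0


def desired_instances(policy: AutoscalePolicy, current: int, avg_cpu_percent: float) -> int:
    """Return the instance count that would bring average CPU near the target."""
    if current <= 0:
        return policy.min_instances
    # Total load (instances x CPU%) spread over enough instances to hit the target.
    needed = math.ceil(current * avg_cpu_percent / policy.target_cpu_percent)
    # Clamp to the configured bounds so the rule never scales to zero or runs away.
    return max(policy.min_instances, min(policy.max_instances, needed))


policy = AutoscalePolicy()
print(desired_instances(policy, current=4, avg_cpu_percent=90.0))  # scales out
print(desired_instances(policy, current=4, avg_cpu_percent=15.0))  # scales in to the minimum
```

A load balancer in front of the instances is what makes this horizontal scaling transparent to users, since requests are spread across however many instances the rule currently asks for.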

Monitoring and Alerting

Utilize Azure Monitor and Azure Log Analytics to gain insights into the performance and health of Azure resources.

Configure alert rules to notify you about critical events or performance thresholds.
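As a rough illustration of what a threshold alert rule does, the sketch below averages recent metric samples and fires only when the average stays above a limit. The `AlertRule` shape and the metric name are assumptions made for this example; real Azure Monitor alert rules carry more fields (severity, action groups, evaluation frequency).

```python
from dataclasses import dataclass
from statistics import mean
from typing import Sequence


@dataclass(frozen=True)
class AlertRule:
    """Hypothetical rule shape used only for this illustration."""
    metric: str
    threshold: float
    window: int  # number of most recent samples to aggregate


def should_fire(rule: AlertRule, samples: Sequence[float]) -> bool:
    """Fire when the average of the last `window` samples exceeds the threshold."""
    if len(samples) < rule.window:
        return False  # not enough data to evaluate yet
    return mean(samples[-rule.window:]) > rule.threshold


rule = AlertRule(metric="cpu_percent", threshold=80.0, window=3)
print(should_fire(rule, [40, 95, 50, 60]))  # a single spike does not fire
print(should_fire(rule, [40, 85, 90, 95]))  # sustained load fires
```

Aggregating over a window rather than alerting on each sample is a common way to avoid noisy notifications from short spikes.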

Backup and Disaster Recovery

Implement appropriate backup strategies and disaster recovery plans for essential data and applications.

Leverage Azure Site Recovery to replicate and recover workloads in case of outages.

Mastering Microsoft Azure empowers tech enthusiasts to harness the full potential of cloud computing and revolutionize their organizations. By understanding the core concepts, leveraging hands-on practice, and adopting best practices for deployment and management, individuals become equipped to drive innovation, enhance security, and optimize costs in a rapidly evolving digital landscape. Microsoft Azure’s comprehensive service catalog ensures businesses have the tools they need to stay ahead and thrive in the digital era. So, embrace the power of Azure and embark on a journey toward success in the ever-expanding world of information technology.

#microsoft azure#cloud computing#cloud services#data storage#tech#information technology#information security

6 notes

Text

Data Engineering Tip of the Day: Day 16

🔹 Tip: Integrate data lineage tools like OpenLineage, Marquez, Microsoft Purview (formerly Azure Purview), or Databricks Unity Catalog.

🔸 Why?: Lineage helps trace errors, manage impact of changes, and improves governance. Essential for compliance and debugging.
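To show why lineage helps with impact analysis, here is a minimal sketch that walks a lineage graph to find everything downstream of a dataset. The dataset names and the `LINEAGE` mapping are made up for illustration; the tools named in the tip collect and store this graph for you rather than requiring a hand-written dictionary.

```python
from collections import deque

# Hypothetical lineage edges: upstream dataset -> datasets derived from it.
LINEAGE = {
    "raw.orders": ["staging.orders"],
    "raw.customers": ["staging.customers"],
    "staging.orders": ["marts.revenue", "marts.customer_ltv"],
    "staging.customers": ["marts.customer_ltv"],
    "marts.revenue": ["dashboards.finance"],
}


def downstream(dataset: str, edges: dict[str, list[str]]) -> list[str]:
    """Breadth-first walk returning every dataset affected by a change to `dataset`."""
    seen: set[str] = set()
    order: list[str] = []
    queue = deque(edges.get(dataset, []))
    while queue:
        node = queue.popleft()
        if node in seen:
            continue  # already reached through another path
        seen.add(node)
        order.append(node)
        queue.extend(edges.get(node, []))
    return order


# Everything that needs re-checking if raw.orders changes its schema.
print(downstream("raw.orders", LINEAGE))
```

Running the same walk on reversed edges gives the upstream sources of a broken table, which is the debugging direction.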

#datadoseoftheday #DataEngineering

0 notes

Text

Discover the Key Benefits of Implementing Data Mesh Architecture

As data continues to grow at an exponential rate, enterprises are finding traditional centralized data architectures inadequate for scaling. That’s where Data Mesh Architecture steps in, bringing a decentralized, domain-oriented approach to managing and scaling data in modern enterprises. We empower businesses by implementing robust data mesh architectures tailored to leading cloud platforms like Azure, Snowflake, and GCP, ensuring scalable, secure, and domain-driven data strategies.

Key Benefits of Implementing Data Mesh Architecture

Scalability Across Domains - By decentralizing data ownership to domain teams, data mesh architecture enables scalable data product creation and faster delivery of insights. Teams become responsible for their own data pipelines, ensuring agility and accountability.

Improved Data Quality and Governance - Data Mesh encourages domain teams to treat data as a product, which improves data quality, accessibility, and documentation. Governance frameworks built into platforms such as Azure provide policy enforcement and observability.

Faster Time-to-Insights - Unlike traditional centralized models, data mesh allows domain teams to directly consume and share trusted data products—dramatically reducing time-to-insight for analytics and machine learning initiatives.

Cloud-Native Flexibility - Whether you implement data mesh on Snowflake, Azure, or GCP, the architecture builds on modern cloud-native infrastructure, which supports high performance, elasticity, and cost optimization.

Domain-Driven Ownership and Collaboration - By aligning data responsibilities with business domains, enterprises improve cross-functional collaboration. With GCP or Snowflake integration, domain teams can build, deploy, and iterate on data products independently.

What Is Data Mesh Architecture in Azure?

Data Mesh Architecture in Azure decentralizes data ownership by allowing domain teams to manage, produce, and consume data as a product. Using services like Azure Synapse, Purview, and Data Factory, it supports scalable analytics and governance. With Dataplatr, enterprises can implement a modern, domain-driven data strategy using Azure’s cloud-native capabilities to boost agility and reduce data bottlenecks.

What Is the Data Architecture in Snowflake?

Data architecture in Snowflake is built on separating storage from compute. It allows instant scalability, secure data sharing, and real-time insights, along with features such as zero-copy cloning and Time Travel. At Dataplatr, we use Snowflake to implement data mesh architecture that supports distributed data products, making data accessible and reliable across all business domains.

What Is the Architecture of GCP?

The architecture of GCP (Google Cloud Platform) offers a modular and serverless ecosystem ideal for analytics, AI, and large-scale data workloads. Using tools like BigQuery, Dataflow, Looker, and Data Catalog, GCP supports real-time processing and decentralized data governance. It enables enterprises to build flexible, domain-led data mesh architectures on GCP, combining innovation with security and compliance.

Ready to Modernize Your Data Strategy?

Achieve the full potential of decentralized analytics with data mesh architecture built for scale. Partner with Dataplatr to design, implement, and optimize your data mesh across Azure, Snowflake, and GCP.

Read more at dataplatr.com

0 notes

Text

Driving Business Success with the Power of Azure Machine Learning

Azure Machine Learning is changing how businesses drive success through data. By transforming raw data into actionable insights, Azure ML helps you uncover hidden trends, anticipate customer needs, and make proactive, data-driven decisions that fuel growth and give you a sense of confidence and control.

With capabilities that streamline prompt engineering and accelerate the building of machine learning models, Azure ML provides the agility and scale to stay ahead in a competitive market. You can adapt and grow, unlocking new avenues for innovation, efficiency, and long-term success.

Azure Machine Learning empowers you with advanced analytics and simplifies complex AI processes, making predictive modeling more accessible than ever. By automating critical aspects of model creation and deployment, Azure ML helps you rapidly iterate and scale your machine learning initiatives.

You can focus on strategic insights, allowing you to respond faster to market demands, optimize resource allocation, and precisely refine customer experiences. With Azure ML, businesses gain a reliable foundation for agile decision-making and a robust pathway to achieving measurable, data-driven success.

Azure Machine Learning Capabilities for AI and ML Development

1-Build Language Model–Based Applications

Azure Machine Learning offers a vast library of pre-trained foundation models from industry leaders like Microsoft, OpenAI, Hugging Face, and Meta within its unified model catalog. This expansive access to language models enables developers to build applications tailored to natural language processing (NLP), sentiment analysis, chatbots, and more. With these ready-to-deploy models, organizations can leverage the latest advancements in language AI, significantly reducing development time and resources while ensuring their applications are built on robust, cutting-edge technology.

2-Build Your Models

With Azure's no-code interface, businesses can create and customize machine learning models quickly and efficiently, even without extensive coding expertise. This user-friendly approach democratizes AI, allowing team members from various backgrounds to develop data-driven solutions that meet specific business needs. The drag-and-drop tools make it possible to explore, train, and fine-tune models effortlessly, accelerating the journey from concept to deployment and empowering companies to innovate faster than ever.

3-Built-in Security and Compliance

Azure Machine Learning is designed with robust, enterprise-grade security and compliance standards that ensure data privacy, protection, and adherence to global regulatory requirements. Whether handling sensitive customer data or proprietary business insights, organizations can trust Azure ML's built-in security protocols to safeguard their assets. This commitment to security minimizes risks and instills confidence, allowing businesses to focus on AI innovation without compromising compliance.

4-Streamline Machine Learning Tasks

Azure's automated machine learning capabilities simplify identifying the best classification models for various tasks, freeing teams from manually testing multiple algorithms. With its intelligent automation, Azure ML evaluates numerous model configurations, identifying the most effective options for specific use cases, whether for image recognition, customer segmentation, or predictive analytics. This streamlining of tasks allows businesses to harness AI-driven insights faster, boosting productivity and accelerating time-to-market for AI solutions.

5-Implement Responsible AI

Azure Machine Learning prioritizes transparency and accountability with its Responsible AI dashboard, which supports users in making informed, data-driven decisions. This powerful tool enables teams to assess model performance, evaluate fairness, and ensure AI outputs align with ethical standards and organizational values. By embedding Responsible AI practices, Azure empowers businesses to achieve their strategic goals, build trust, and uphold integrity, ensuring their AI solutions positively contribute to business and society.

Why Web Synergies?

Web Synergies stands out as a trusted partner in harnessing the full potential of Azure Machine Learning, empowering businesses to quickly turn complex data into valuable insights. With our deep domain expertise in AI and machine learning, we deliver tailored solutions that align with your unique business needs, helping you stay competitive in today's data-driven landscape.

Our commitment to responsible, sustainable, and secure AI practices means you can trust us to implement solutions that are not only powerful but also ethical and compliant. Partnering with Web Synergies means choosing a team dedicated to your long-term success, ensuring you maximize your investment in AI for measurable, impactful results.

0 notes

Text

What Is a Data Mesh Architecture?

Data mesh is a decentralized approach to managing and accessing data across large organizations. Unlike traditional data lakes or data warehouses, which centralize data into one location, data mesh distributes data ownership across different business domains.

Each domain, such as marketing, finance, or sales, owns and manages its own data as a product. Teams are responsible not only for producing data but also for making it available in a usable, reliable, and secure format for others.

This domain-oriented model promotes scalability by minimizing bottlenecks that occur when data is funneled through a centralized team.

What Is Data Mesh Architecture in Azure?

Data mesh architecture in Azure refers to a decentralized, domain-oriented approach to managing and accessing data within an organization's data platform, particularly in the context of large, complex ecosystems. It emphasizes distributed data ownership across business domains, creating "data products" that are easily discoverable and accessible by other domains. This contrasts with traditional, centralized data architectures where a single team manages all data.

Microsoft Azure doesn’t have a single tool called “data mesh,” but it gives you the parts you need to build one. You can use different Azure services to manage and work with data. For example, Azure Synapse Analytics helps process large amounts of data, and Azure Data Factory helps move and organize it.

Microsoft Purview (formerly Azure Purview) keeps track of where data comes from and helps with data rules and policies. Azure Data Lake Storage stores big data safely, and tools like Azure Databricks or Microsoft Fabric help with deeper data analysis or machine learning.

With the right setup, different teams in a company can manage their own data while still following company-wide rules. Azure also allows secure access control and keeps data organized using catalogs, so teams can work independently without creating confusion or delays.

What is the data architecture of Snowflake?

Snowflake is a cloud-based data platform that helps companies store, process, and share large amounts of data. It allows different teams to use the same system while still managing their own data separately.

Snowflake is built to handle big workloads and many users at the same time. It uses a multi-cluster system, which means teams can run their data jobs without slowing each other down. It also supports secure data sharing, so teams can easily give others access to their data when needed.

Each team can control its own data, including who can see it and how it’s used. Snowflake has tools for data governance, which help teams set rules for access, security, and compliance. It supports different types of data, like traditional tables or semi-structured data such as JSON. While everything is stored in one place, responsibility is shared: teams manage their own data as if it’s their own product.

What is the architecture of GCP?

Google Cloud Platform is a cloud service that gives companies the tools they need to manage and analyze large amounts of data. It’s often used to build a data mesh, where different teams control their own data but still follow shared rules.

One of the main tools in GCP is BigQuery, which helps teams quickly analyze big data sets. Dataplex is used to keep data organized and make sure teams follow the same data rules. Pub/Sub and Dataflow help move and update data in real time, which is useful when information needs to stay current.

Another useful tool is Looker, which lets teams build their own dashboards and reports. A data mesh architecture on GCP allows teams to connect these tools in ways that fit their work. Among them, Dataplex stands out because it helps keep things consistent across all teams, even when they work separately.

We work closely with our technology partners Microsoft Azure, Snowflake, and Google Cloud Platform to support organizations in making this shift. Together, we combine platform capabilities with our domain expertise to help build decentralized, domain-oriented data ecosystems that are scalable, secure, and aligned with real business needs. Whether it’s setting up the infrastructure, enabling data governance, or guiding teams to treat data as a product, our joint approach ensures that data mesh is not just a concept but a working solution customized for your organization. Also see how our Data Analytics Consulting services can help you move toward a data mesh model that’s easier to manage and fits your business goals.

What are the 4 principles of data mesh?

Data mesh is a modern approach to managing data at scale by decentralizing ownership. Instead of relying on a central team, it empowers individual domains to manage, share, and govern their own data. Let’s explore the four data mesh principles that make this model both scalable and effective for modern enterprises.

1. Domain-Oriented Data Ownership

Each team is responsible for its own data. This means they take care of collecting, updating, and keeping their data accurate. Instead of relying on one central data team, every business area handles its own information. This helps reduce bottlenecks and gives teams more control.

2. Data as a Product

Teams should treat their data like a product that others will use. That means it should be clean, reliable, well-documented, and easy to find. Someone from the team should be clearly responsible for making sure the data is useful and up to date. This helps other teams trust and use that data without confusion.

3. Self-Serve Data Platform

Teams need access to the tools and systems that let them work with data on their own. A self-serve platform gives them everything they need, like storage, processing tools, and security settings, without always depending on the central IT team. This speeds up work and makes teams more independent.

4. Federated Governance

Even though teams manage their own data, there still need to be shared rules. Federated governance means there are common standards for privacy, security, and data quality that all teams must follow. This keeps things safe and consistent, while still allowing teams to work the way that suits them best.
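The principles above, especially data as a product and federated governance, can be sketched in a few lines: each domain publishes a descriptor for its data product, and one shared check applies the same rules to every domain. The `DataProduct` fields and the three rules are hypothetical choices for this illustration, not a standard schema.

```python
from dataclasses import dataclass


@dataclass
class DataProduct:
    """Hypothetical descriptor a domain team publishes for its data product."""
    name: str
    domain: str
    owner: str = ""
    description: str = ""
    contains_pii: bool = False
    pii_columns_masked: bool = False


def governance_violations(product: DataProduct) -> list[str]:
    """Check one product against shared, organization-wide rules."""
    problems = []
    if not product.owner:
        problems.append("missing owner")
    if not product.description:
        problems.append("missing description")
    if product.contains_pii and not product.pii_columns_masked:
        problems.append("PII columns are not masked")
    return problems


products = [
    DataProduct("orders", "sales", owner="sales-data@example.com",
                description="One row per confirmed order."),
    DataProduct("leads", "marketing", contains_pii=True),
]

for product in products:
    print(product.name, governance_violations(product) or "ok")
```

The domains stay free to build their products however they like; only the shared check is centrally defined, which is the "federated" part.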

What are the downsides of data mesh?

Data mesh gives teams more control over their own data, but it also comes with some challenges. While it can make data management faster and more flexible, it only works well when teams are ready to take on that responsibility. If the people managing the data aren’t trained or don’t have the right tools, things can go wrong quickly.

One of the main issues is that data mesh needs strong data skills within each team. Teams must know how to manage, clean, and protect their data. This can lead to higher costs, as each team might need its own tools, training, and support. It also becomes harder to keep everything consistent. Different teams may follow different standards unless clear rules are set.

Another problem is cultural resistance. Many companies are used to having one central team in charge of all data. Shifting control to separate teams can be uncomfortable and hard to manage. Without strong leadership and clear communication, this kind of change can cause confusion and slow down progress.

Why Should Organizations Consider Data Mesh Architecture?

Many companies are finding that the traditional way of managing data through one big central team is starting to show its limits. When all data has to go through one place, it can slow things down. Teams may have to wait a long time for the data they need, and it’s not always clear who’s responsible when problems come up.

Data mesh offers a new approach. It gives each team ownership of its own data, which means faster access, better quality, and clearer accountability. Instead of everything being handled by one group, every team plays a part in keeping data useful and up to date. This is especially helpful for large companies with many departments or fast-changing data needs.

Shifting to a data mesh model is a bold step forward. It's not a quick fix, but a smart move for companies ready to modernize how they handle data.

Conclusion

Data mesh architecture represents a shift in how organizations approach data at scale. By decentralizing ownership and treating data as a product, it addresses some of the most pressing limitations of traditional models. While it is not a one-size-fits-all solution, for many modern enterprises, data mesh offers a path toward building more responsive, scalable, and sustainable data systems.

0 notes

Text

Best Software Development Company in Chennai: Delivering Scalable, Cutting-Edge Solutions

Chennai has emerged as a leading hub for technology and innovation in India. If you’re searching for the best software development company in Chennai, you’ve come to the right place. In this comprehensive article, we’ll explore what sets top software development companies in Chennai apart, the services they offer, and how to choose the perfect partner to drive your digital transformation.

Why Chennai Is Your Ideal Development Destination

Tech Talent Pool: Chennai is home to world-class engineering institutes and a large community of experienced developers. Whether you need experts in Java, .NET, Python, or emerging technologies like AI and blockchain, a software development company in Chennai can assemble skilled teams tailored to your project.

Cost-Effective Excellence: Competitive operating costs combined with high quality standards make Chennai an attractive choice for businesses of all sizes. The best software development companies here deliver enterprise-grade solutions at a fraction of the price of Western markets, without compromising on quality.

Robust IT Ecosystem: From established IT parks to innovation labs and co-working spaces, Chennai’s infrastructure supports rapid scaling. A local software development company in Chennai benefits from this ecosystem, ensuring seamless collaboration and access to the latest tools.

Core Services Offered

A top-tier software development company in Chennai typically offers:

Custom Software Development: End-to-end app design and development, from requirement analysis through deployment, for web, mobile, and desktop platforms.

Enterprise Solutions: ERP, CRM, and supply-chain management systems that integrate with your existing processes and boost operational efficiency.

E-commerce Platforms: Scalable online stores with secure payment gateways, intuitive admin panels, and analytics dashboards to grow your digital revenue.

Cloud & DevOps: Migration to AWS, Azure, or Google Cloud; containerization with Docker/Kubernetes; and CI/CD pipelines for faster, more reliable releases.

AI & Data Analytics: Machine learning models, natural language processing, and business-intelligence dashboards that turn raw data into actionable insights.

QA & Testing: Comprehensive manual and automated testing services, so your software is reliable, secure, and performs well under load.

What Makes the “Best” Software Development Company in Chennai?

Proven Track Record: Look for companies with a portfolio of successful projects across industries such as healthcare, finance, and retail. Case studies demonstrating on-time delivery and measurable ROI are key indicators of reliability.

Agile Methodologies: The best software development companies in Chennai embrace Agile and Scrum, enabling rapid iterations, clear communication, and seamless adaptation to changing requirements.

Transparent Communication: Regular sprint reviews, dedicated project managers, and 24/7 support channels ensure you’re always in the loop. Transparency breeds trust and keeps your project on track.

Strong Security Practices: From GDPR-compliant data handling to robust encryption standards and regular vulnerability assessments, top Chennai-based firms prioritize security at every stage of development.

Post-Launch Support & Maintenance: A true partner doesn’t disappear after launch. Maintenance agreements, feature enhancements, and performance monitoring are essential services offered by the best software development company in Chennai.

How to Choose the Right Partner

Define Your Requirements: Before you begin your search, catalog your project’s scope, budget, timeline, and technical stack. Clear requirements lead to accurate proposals and estimates.

Evaluate Expertise: Review each vendor’s technical skills, domain experience, and team size. Don’t hesitate to ask for technical references or to interview proposed team members.

Assess Cultural Fit: Effective collaboration hinges on aligned values and communication styles. Look for partners who demonstrate empathy, proactivity, and a genuine interest in your business goals.

Compare Proposals: While cost is a factor, the lowest bid isn’t always the best choice. Weigh technical depth, delivery timelines, and aftercare services alongside pricing.

Start Small: Consider beginning with a pilot or MVP (Minimum Viable Product) to validate the partnership. Successful pilots often lead to larger, long-term engagements.

Conclusion & Next Steps

Choosing the best software development company in Chennai can transform your digital ambitions into reality. Whether you need a full-scale enterprise system or a sleek consumer app, Chennai’s top firms combine technical prowess, cost-effectiveness, and unwavering commitment to quality.

Ready to get started? Reach out today for a free consultation and audit of your project requirements. Let Chennai’s leading software experts power your next innovation!

0 notes

Text

Level Up Your Career with Machine Learning Courses from Ascendient Learning

Machine learning is no longer just a buzzword — it is the engine behind modern innovation. From powering search engines and personalized recommendations to driving fraud detection and predictive maintenance, machine learning (ML) is transforming every industry. For IT professionals, mastering ML is not optional — it is essential. Ascendient Learning offers the training that equips you with the right skills, the right tools, and the right certifications to lead in a data-driven world.

Why Machine Learning Is the Skill You Can’t Ignore

Machine learning is at the core of automation, artificial intelligence, and advanced analytics. As organizations gather more data than ever before, they need professionals who can make sense of it, build models, and deploy intelligent systems that learn and adapt. The demand for skilled ML practitioners is growing rapidly, and so is the opportunity for those who are trained and certified.

Companies across sectors are hiring ML engineers, data scientists, AI developers, and cloud architects with strong machine learning knowledge. Whether you want to build recommendation engines, automate decision-making, or enhance user experience through predictive modeling, machine learning gives you the tools to make it happen.

Ascendient Learning: Your Machine Learning Partner for Every Stage

Ascendient Learning provides one of the industry’s most comprehensive selections of machine learning courses, developed in collaboration with top technology vendors like AWS, Microsoft, IBM, Google Cloud, Oracle, and Databricks. Whether you are just beginning your ML journey or advancing to specialized applications like generative AI or MLOps, our programs are built to support real-world success.

Courses cover a wide spectrum, including:

AWS SageMaker and Generative AI Applications

Microsoft Azure ML and AI Fundamentals

Google Cloud ML with Vertex AI

Cloudera Machine Learning with Spark

Databricks Scalable ML with Apache Spark

IBM SPSS Modeler and Watson Studio

Oracle ML for R and Python

Each course is taught by certified instructors with deep technical backgrounds. You will gain academic knowledge and hands-on experience through labs, case studies, and real-world use cases that prepare you for the workplace.

Certifications That Move Your Career Forward

Every course is aligned with a certification path from trusted vendors. Whether you are pursuing an AWS Machine Learning Specialty, Google Cloud ML Engineer, or Microsoft Certified Azure AI Engineer Associate, Ascendient helps you prepare with exam-ready content and practice.

Certified professionals often see significant career gains. In many cases, ML certification leads to higher salaries, greater job security, and eligibility for leadership roles in AI strategy and digital transformation. In today’s market, certifications are more than credentials — they are signals of trust, competence, and readiness.

Take the Next Step Toward Machine Learning Mastery

Machine learning is reshaping industries — and creating unmatched opportunities for skilled professionals. Whether you are building your foundation or ready to lead enterprise AI projects, Ascendient Learning is your partner in achieving machine learning excellence.

Explore our full catalog of ML courses at https://www.ascendientlearning.com/it-training/topics/ai-and-machine-learning and begin your transformation today. The future runs on machine learning. Make sure you do, too.

0 notes

Text

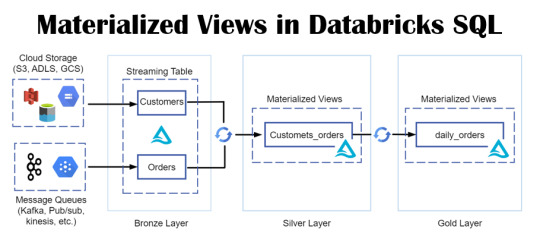

Empower Data Analysis with Materialized Views in Databricks SQL

Envision a realm where your data is always ready for querying, with intricate queries stored in a format primed for swift retrieval and analysis. Picture a world where time is no longer a constraint, where data handling is both rapid and efficient.

View On WordPress

#Azure#Azure SQL Database#data#Database#Database Management#Databricks#Databricks CLI#Databricks Delta Live Table#Databricks SQL#Databricks Unity catalog#Delta Live#Materialized Views#Microsoft#microsoft azure#Optimization#Performance Optimization#queries#SQL#SQL database#Streaming tables#tables#Unity Catalog#views

0 notes

Text

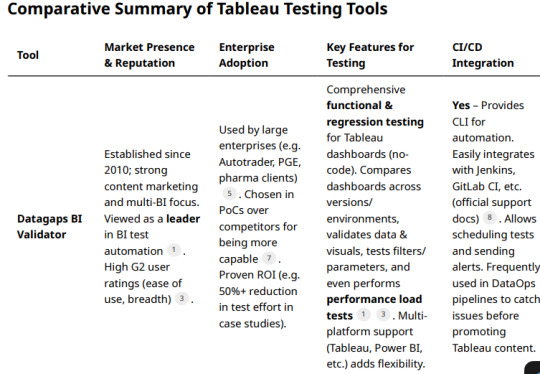

Leading Tableau Test Automation Tools — Comparison and Market Leadership

Automated testing for Tableau dashboards and analytics content is a growing niche, with a few specialized commercial tools vying for leadership.

We compare the top solutions — Datagaps BI Validator, Wiiisdom Ops (formerly Kinesis CI), QuerySurge, and others — based on their marketing presence, enterprise adoption, feature set (especially for functional/regression testing), CI/CD integration, and industry reputation.

Datagaps BI Validator (DataOps Suite)

Industry sources describe BI Validator as a “leading no-code BI testing tool”. It has recognition on review platforms; for example, G2 reviewers give the overall Datagaps suite a solid rating (around 4.0–4.6/5) and specifically praise the Tableau-focused module.

Customer Adoption: BI Validator appears to have broad enterprise adoption. Featured customers include Autotrader, Portland General Electric, and University of California, Davis.

A case study mentions a “Pharma Giant” cutting Tableau upgrade testing time by 55% using BI Validator.

Users on forums often recommend Datagaps; one BI professional who evaluated both Datagaps and Kinesis-CI reported that Datagaps was “more capable” and ultimately their choice. Such feedback indicates a strong reputation for reliability in complex enterprise scenarios.

Feature Set: BI Validator offers end-to-end testing for Tableau covering:

Functional regression testing: It can automatically compare workbook data, visuals, and metadata between versions or environments (e.g. before vs. after a Tableau upgrade). A user notes it enabled automated regression testing of newly developed Tableau content as well as verifying dashboard outputs during database migrations. It tests dashboards, reports, filters, parameters, and even PDF exports for changes.

Data validation: It can retrieve data from Tableau reports and validate it against databases. One review specifically highlights using BI Validator to check Tableau report data against the source DB efficiently. The tool supports virtually any data source (“you name the datasource, Datagaps has support for it”).

UI and layout testing: The platform can compare UI elements and catalog/metadata across environments to catch broken visuals or missing fields post-migration.

Performance testing: Uniquely, BI Validator can simulate concurrent user loads on Tableau Server to test performance and robustness. This allows stress testing of dashboards under multi-user scenarios, complementing functional tests. (This is analogous to Tableau’s TabJolt, but integrated into one suite.) Users have utilized its performance/stress testing feature to benchmark Tableau with different databases.

Datagaps provides a well-rounded test suite (data accuracy, regression, UI regression, performance) tailored for BI platforms. It is designed to be easy to use (no coding; clean UI) — as one enterprise user noted, the client/server toolset is straightforward to install and navigate.
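To make the regression-testing idea above concrete, here is a minimal Python sketch of comparing two data exports of the same dashboard view (for example, before and after an upgrade). It is not how BI Validator or any other vendor tool works internally; the function name `diff_exports`, the row format, and the sample data are all assumptions made for illustration.

```python
from typing import Dict, List

Row = Dict[str, str]


def diff_exports(before: List[Row], after: List[Row], key: str) -> Dict[str, list]:
    """Compare two exports of the same view, matching rows on `key`.

    Hypothetical helper: rows are plain dicts, e.g. parsed from CSV exports.
    """
    before_by_key = {row[key]: row for row in before}
    after_by_key = {row[key]: row for row in after}

    # Rows that disappeared or appeared between the two exports.
    missing = sorted(k for k in before_by_key if k not in after_by_key)
    added = sorted(k for k in after_by_key if k not in before_by_key)

    # Cell-level differences for rows present in both exports.
    changed = []
    for k in sorted(before_by_key.keys() & after_by_key.keys()):
        old, new = before_by_key[k], after_by_key[k]
        for column in sorted(old.keys() | new.keys()):
            if old.get(column) != new.get(column):
                changed.append((k, column, old.get(column), new.get(column)))

    return {"missing": missing, "added": added, "changed": changed}


# Illustrative data only.
before = [{"region": "East", "sales": "100"}, {"region": "West", "sales": "250"}]
after = [
    {"region": "East", "sales": "100"},
    {"region": "West", "sales": "275"},
    {"region": "North", "sales": "40"},
]
report = diff_exports(before, after, key="region")
```

In a release pipeline, a non-empty `missing` or `changed` list would typically be treated as a failed regression check, while `added` rows might only warrant a review.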

CI/CD Integration: BI Validator is built with DataOps in mind and integrates with CI/CD pipelines. It offers a command-line interface (CLI) and has documented integration with Jenkins and GitLab CI, enabling automated test execution as part of release pipelines. Test plans can be scheduled and triggered automatically, with email notifications on results. This allows teams to include Tableau report validation in continuous integration flows (for example, running a battery of regression tests whenever a data source or workbook is updated). The ability to run via CLI means it can work with any CI orchestrator (Jenkins, Azure DevOps, etc.), and users have leveraged this to incorporate automated Tableau testing in their DevOps processes.

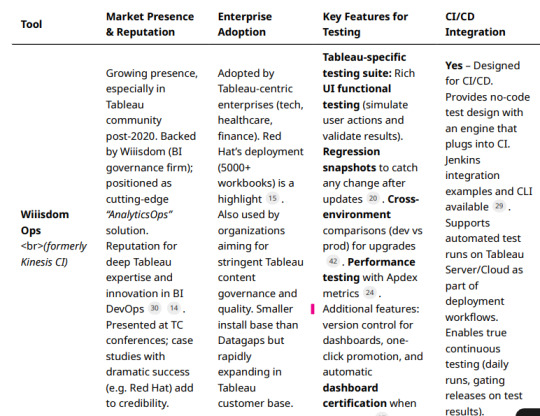

Wiiisdom Ops (formerly Kinesis CI)

Wiiisdom (known for its 360Suite in the SAP BusinessObjects world) acquired Kinesis CI and has since rebranded it as Wiiisdom Ops for Tableau, which it markets heavily as a cornerstone of “AnalyticsOps” (bringing DevOps practices to analytics).

The product is positioned as a solution to help enterprises “trust their Tableau dashboards” by automating testing and certification. Wiiisdom has been active in promoting this tool via webinars, Tableau Conference sessions, e-books, and case studies, indicating a growing marketing presence, especially in the Tableau user community. Wiiisdom Ops/Kinesis has a solid (if more niche) reputation. Its Tableau-exclusive focus is often viewed as a strength.

The acquiring company’s CEO noted that Kinesis-CI was a “best-in-breed” technology and a “game-changer” in how it applied CI/CD concepts to BI testing. While not as widely reviewed on generic software sites, its reputation is bolstered by public success stories: for instance, Red Hat implemented Wiiisdom Ops for Tableau and managed to reduce their dashboard testing time “from days to minutes,” while handling thousands of workbooks and data sources. Such testimonials from large enterprises (Red Hat, and also Gustave Roussy Institute in healthcare) enhance Wiiisdom Ops’ credibility in the industry.

Customer Adoption: Wiiisdom Ops is used by Tableau-centric organizations that require rigorous testing. The Red Hat case study is a flagship example, showing adoption at scale (5,000+ Tableau workbooks). Other known users include certain financial institutions and healthcare organizations (some case studies are mentioned on Wiiisdom’s site). Given Wiiisdom’s long history with BI governance, many of its existing customers (in the Fortune 500, especially those using Tableau alongside other BI tools) are likely evaluating or adopting Wiiisdom Ops as they extend governance to Tableau.

While overall market share is hard to gauge, the tool is gaining traction specifically among Tableau enterprise customers who need automated testing integrated with their development lifecycle. The acquisition by Wiiisdom also lends it a broader sales network and support infrastructure, likely increasing its adoption since 2021.

Feature Set: Wiiisdom Ops (Kinesis CI) provides a comprehensive test framework for Tableau with a focus on functional, regression, and performance testing of Tableau content.

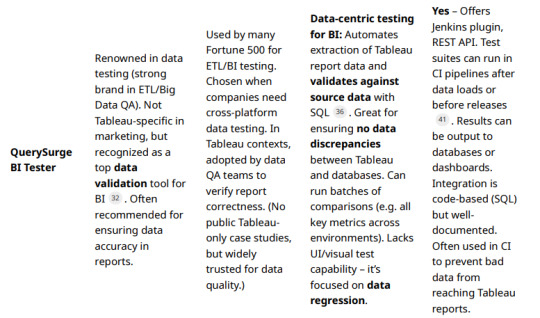

QuerySurge (BI Tester for Tableau)

Marketing & Reputation: QuerySurge is a well-known data testing automation platform, primarily used for ETL and data warehouse testing. While not Tableau-specific, it offers a module called BI Tester that connects to BI tools (including Tableau) to validate the data in reports. QuerySurge is widely used in the data quality and ETL testing space (with many Fortune 500 users) and is often mentioned as a top solution for data/ETL testing.

Its marketing emphasizes ensuring data accuracy in the “last mile” of BI. However, QuerySurge’s brand is stronger in the data engineering community than specifically in Tableau circles, since it does not perform UI or functional testing of dashboards (it focuses on data correctness beneath the BI layer).

Customer Adoption: QuerySurge has a broad user base across industries for data testing, and some of those users leverage it for Tableau report validation. It’s known that organizations using multiple BI tools (Tableau, Cognos, Power BI, etc.) might use QuerySurge centrally to validate that data shown in reports matches the data in the warehouse. The vendor mentions “dozens of teams” using its BI integrations to visualize test results in tools like Tableau and Power BI, suggesting an ecosystem where QuerySurge ensures data quality and BI tools consume those results.

Notable QuerySurge clients include large financial institutions, insurance companies, and tech firms (as per their case studies), though specific Tableau-centric references are not heavily publicized. As a generic tool, its adoption overlaps with but is not exclusive to Tableau projects.

Feature Set: For Tableau testing, QuerySurge’s BI Tester provides a distinct but important capability: data-level regression testing for Tableau reports. Key features include.

Conclusion: The Market Leader in Tableau Testing

Considering the above, Datagaps BI Validator currently stands out as the best all-around commercial Tableau testing tool on the market. It edges out others in terms of breadth of features and proven adoption.

Enterprises appreciate its ability to handle everything from data validation to UI regression and performance testing in one package.

Its multi-BI versatility also means a wider user base and community knowledge pool. Many practitioners point to BI Validator as the most “efficient testing tool for Tableau” in practice. That said, the “best” choice can depend on an organization’s specific needs. Wiiisdom Ops (Kinesis) is a very close competitor, especially for organizations focusing solely on Tableau and wanting seamless CI/CD pipeline integration and governance extras.

Wiiisdom Ops has a strong future outlook given its Tableau-focused innovation and success stories like Red Hat (testing time cut from days to minutes). It might be considered the leader in the Tableau-only segment, and it’s rapidly gaining recognition as Tableau customers pursue DevOps for analytics.

QuerySurge, while not a one-stop solution for Tableau, is the leader for data quality assurance in BI and remains indispensable for teams that prioritize data correctness in reports. It often complements either Datagaps or Wiiisdom by covering the data validation aspect more deeply. In terms of marketing presence and industry buzz, Datagaps and Wiiisdom are both very active:

Datagaps publishes thought leadership on BI testing and touts AI-driven DataOps, whereas Wiiisdom evangelizes AnalyticsOps and often partners with Tableau ecosystem events. Industry analysts have started to note the importance of such tools as BI environments mature.

In conclusion, Datagaps BI Validator is arguably the market leader in Tableau test automation today, with the strongest combination of features, enterprise adoption, and cross-platform support. Wiiisdom Ops (Kinesis CI) is a close runner-up, leading on Tableau-centric continuous testing and rapidly improving its footprint. Organizations with heavy data pipeline testing needs also recognize QuerySurge as an invaluable tool for ensuring Tableau outputs are trustworthy.

All these tools contribute to higher confidence in Tableau dashboards by catching errors and regressions early. As analytics continues to be mission-critical, adopting one of these leading solutions is becoming a best practice for enterprises to safeguard BI quality and enable faster, error-free Tableau deployments.

#datagaps#dataops#bivalidator#dataquality#tableau testing#automation#wiiisdom#querysurge#etl testing#bi testing

0 notes

Text

Build scalable, enterprise-grade generative AI solutions on Azure

Azure AI Foundry enables organizations to design, customize, and manage the next generation of AI apps and agents at scale. This unit introduces how you can use Azure AI Foundry, Azure AI Agent Service, and related tools and frameworks to build enterprise-grade AI agent solutions.

Overview of Azure AI Foundry

Azure AI Foundry provides a comprehensive platform for designing, customizing, and managing generative AI applications and custom agent solutions. It supports a wide range of models, orchestration frameworks, and integration with Azure AI Agent Service for advanced agent capabilities.

Azure AI Foundry brings together Azure AI models, tooling, safety, and monitoring solutions to help you efficiently and cost-effectively design and scale your AI applications, including Azure AI Agent Service.

Azure AI Agent Service is a flexible, use-case-agnostic platform for building, deploying, and managing AI agents. These agents can operate autonomously with human oversight, leveraging contextual data to perform tasks and achieve specified goals. The service integrates cutting-edge models and tools from Microsoft, OpenAI, and partners like Meta, Mistral, and Cohere, providing an unparalleled platform for AI-driven automation.

Through the Azure AI Foundry SDK and the Azure AI Foundry portal experience, developers can quickly create powerful agents while benefiting from Azure’s enterprise-grade security and performance guarantees. Azure AI Foundry also includes Azure AI Content Safety service, making it easy for you to test and evaluate your solutions for safety and responsibility.

Key features

Rapid development and automation: Azure AI Agent Service offers a developer-friendly interface and a comprehensive toolkit, enabling rapid development of AI agents. It integrates seamlessly with enterprise systems using Azure Logic Apps, OpenAPI 3.0-based tools, and Azure Functions to support synchronous, asynchronous, event-driven, and long-running agent actions. It also supports orchestration frameworks such as Semantic Kernel and AutoGen.

Extensive data connections: The service supports integrations with a variety of data sources, such as Microsoft Bing, Azure Blob Storage, Azure AI Search, local files, and licensed data from data providers.

Flexible model selection: Developers can use a wide range of models from the Azure AI Foundry model catalog, which includes OpenAI and Azure OpenAI models, plus additional models available through Models as a Service (MaaS) serverless API. Multi-modal support and fine-tuning are also provided.

Enterprise readiness: Azure AI Agent Service is built to meet rigorous enterprise requirements, with features that ensure organizations can protect sensitive information while adhering to regulatory standards.

When to use Azure AI Foundry

Choose Azure AI Foundry when you need to:

Deploy scalable, production-grade AI services across web, mobile, and enterprise channels.

Integrate with custom data sources, APIs, and advanced orchestration logic.

Leverage Azure cloud services for hosting, scaling, and lifecycle management.

Leverage Azure AI services such as Azure AI Search, Azure AI Content Safety, and Azure AI Speech to enhance your applications with advanced search, safety, and conversational capabilities.

Example scenarios

Customer-facing AI assistants embedded in web or mobile apps.

Multi-agent solutions for healthcare, finance, or manufacturing.

Domain-specific generative AI applications with custom workflows and integrations.

Azure AI Foundry, together with Semantic Kernel, GitHub Copilot, Visual Studio, and Azure AI Agent Service, enables organizations to deliver advanced, secure, and scalable generative AI solutions.

Customer story

A global online fashion retailer leveraged Azure AI Foundry to revolutionize its customer experience by creating an AI-powered virtual stylist that could engage with customers and help them discover new trends.

In this customer case, Azure AI Foundry enabled the company to rapidly develop and deploy their intelligent apps, integrating natural language processing and computer vision capabilities. This solution takes advantage of Azure’s ability to support cutting-edge AI applications in the retail sector, driving business growth and customer satisfaction.

0 notes

Text

Build Your Data Strategy with Data Mesh Architecture in Azure, Snowflake & GCP

Businesses are moving away from traditional centralized systems to more agile and scalable data solutions. Data mesh architecture offers a modern, domain-oriented approach to data management that empowers teams to own and manage their data as a product.

At Dataplatr, we help businesses implement data mesh architecture using powerful cloud platforms like Azure, Snowflake, and Google Cloud Platform (GCP) to create a robust and scalable data strategy.

Data Mesh Architecture in Azure

Data mesh architecture on Azure leverages services like Azure Synapse Analytics, Azure Data Lake, and Azure Purview to enable decentralized data governance, federated computational capabilities, and seamless integration across business domains.

Data Mesh Architecture in Snowflake

With data mesh architecture on Snowflake, organizations benefit from Snowflake’s unique architecture, including secure data sharing, data collaboration, and native support for multi-domain access, all while maintaining performance and compliance.

Data Mesh Architecture in GCP

Data mesh architecture on GCP combines the scalability of BigQuery with tools like Data Catalog, Dataplex, and Looker. GCP enables federated data management and self-service analytics across distributed teams.

Why Choose Data Mesh Architecture?

Domain-Driven Ownership: Decentralize your data to allow different business units to manage their own pipelines and datasets.

Improved Scalability: Easily manage large, complex datasets across multi-cloud environments.

Faster Time to Insight: Empower teams to access high-quality, trusted data without delays or bottlenecks.

Why Dataplatr?

Dataplatr partners with top cloud providers like Azure, Snowflake, and GCP to bring you a future-ready data architecture. Our experts help you design, implement, and optimize your data mesh strategy, driving better business outcomes through decentralized data ownership, enhanced collaboration, and faster decision-making.

0 notes

Text

Architecting for AI- Effective Data Management Strategies in the Cloud

What good is AI if the data feeding it is disorganized, outdated, or locked away in silos?

How can businesses unlock the full potential of AI in the cloud without first mastering the way they handle data?

And for professionals, how can developing Cloud AI skills shape a successful AI cloud career path?

These are some real questions organizations and tech professionals ask every day. As the push toward automation and intelligent systems grows, the spotlight shifts to where it all begins, data. If you’re aiming to become an AI cloud expert, mastering data management in the cloud is non-negotiable.

In this blog, we will explore human-friendly yet powerful strategies for managing data in cloud environments. These are perfect for businesses implementing AI in the cloud and individuals pursuing AI Cloud Certification.

1. Centralize Your Data, But Don’t Sacrifice Control

The first step to architecting effective AI systems is ensuring your data is all in one place, but with rules in place. Cloud AI skills come into play when configuring secure, centralized data lakes using platforms like AWS S3, Azure Data Lake, or Google Cloud Storage.

For instance, Airbnb streamlined its AI pipelines by unifying data into Amazon S3 while applying strict governance with AWS Lake Formation. This helped their teams quickly build and train models for pricing and fraud detection, without dealing with messy, inconsistent data.

Pro Tip-

Centralize your data, but always pair it with metadata tagging, cataloging, and access controls. This is a must-learn in any solid AI cloud automation training program.
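As a rough illustration of pairing centralized data with tagging, cataloging, and access controls, the sketch below keeps a tiny in-memory catalog in Python. It is a toy model, not the API of AWS Lake Formation or any other catalog service; the class names, role names, and storage path are assumptions made up for the example.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class DatasetEntry:
    """One catalog record: where the data lives, how it is tagged, who may read it."""
    name: str
    location: str
    tags: Set[str] = field(default_factory=set)
    allowed_roles: Set[str] = field(default_factory=set)


class DataCatalog:
    """Hypothetical in-memory catalog used only to illustrate the idea."""

    def __init__(self) -> None:
        self._entries: Dict[str, DatasetEntry] = {}

    def register(self, entry: DatasetEntry) -> None:
        self._entries[entry.name] = entry

    def find_by_tag(self, tag: str, role: str) -> List[str]:
        """Return names of datasets carrying `tag` that `role` is allowed to read."""
        return sorted(
            entry.name
            for entry in self._entries.values()
            if tag in entry.tags and role in entry.allowed_roles
        )


catalog = DataCatalog()
catalog.register(DatasetEntry(
    name="bookings",
    location="s3://example-bucket/bookings/",  # placeholder path
    tags={"pricing", "pii"},
    allowed_roles={"ml-engineer"},
))
catalog.register(DatasetEntry(
    name="listings",
    location="s3://example-bucket/listings/",  # placeholder path
    tags={"pricing"},
    allowed_roles={"ml-engineer", "analyst"},
))
```

Here an analyst searching for `pricing` data sees only `listings`, because `bookings` is tagged as containing personal data and restricted to a narrower role.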

2. Design For Scale: Elasticity Over Capacity

AI workloads are not static—they scale unpredictably. Cloud platforms shine when it comes to elasticity, enabling dynamic resource allocation as your needs grow. Knowing how to build scalable pipelines is a core part of AI cloud architecture certification programs.

One such example is Netflix. It handles petabytes of viewing data daily and processes it through Apache Spark on Amazon EMR. With this setup, they dynamically scale compute power depending on the workload, powering AI-based recommendations and content optimization.

Human Insight-

Scalability is not just about performance. It’s about not overspending. Smart scaling = cost-effective AI.
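One way to picture elasticity is as a small rule that turns current workload into a worker count, bounded so costs cannot run away. The Python sketch below assumes a queue backlog and a fixed per-worker throughput; the function name, parameters, and default limits are illustrative assumptions, not settings from Amazon EMR or any specific autoscaler.

```python
import math


def desired_workers(backlog: int, per_worker: int,
                    min_workers: int = 1, max_workers: int = 20) -> int:
    """Return how many workers to run for the current backlog.

    Assumes each worker can process `per_worker` items per scaling interval.
    The result is clamped to [min_workers, max_workers] to cap spending.
    """
    if per_worker <= 0:
        raise ValueError("per_worker must be positive")
    needed = math.ceil(backlog / per_worker)
    return max(min_workers, min(max_workers, needed))
```

With the defaults, an empty backlog keeps one worker running, a backlog of 950 items at 100 items per worker asks for 10 workers, and a very large backlog stops at the ceiling of 20. That upper bound is where "smart scaling" and cost control meet.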

3. Don’t Just Store—Catalog Everything

You can’t trust what you can’t trace. A reliable data catalog and lineage system ensures AI models are trained on trustworthy data. Tools like AWS Glue or Apache Atlas help track data origin, movement, and transformation—a key concept for anyone serious about AI in the cloud.

To give you an example, Capital One uses data lineage tools to manage regulatory compliance for its AI models in credit risk and fraud detection. Every data point can be traced, ensuring trust in both model outputs and audits.

Why it matters-

Lineage builds confidence. Whether you’re a company building AI or a professional on an AI cloud career path, transparency is essential.
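Lineage can be thought of as a graph in which each dataset points to the datasets it was derived from. The Python sketch below walks that graph to list everything a model's training table ultimately depends on. The dataset names and the dictionary format are assumptions for illustration; tools such as AWS Glue or Apache Atlas store and expose lineage in their own ways.

```python
from typing import Dict, List, Set


def upstream_sources(lineage: Dict[str, List[str]], dataset: str) -> Set[str]:
    """Return every dataset that `dataset` depends on, directly or indirectly.

    `lineage` maps a dataset name to the names of its direct inputs.
    """
    seen: Set[str] = set()
    stack = list(lineage.get(dataset, []))
    while stack:
        current = stack.pop()
        if current in seen:
            continue  # already visited; also guards against cycles
        seen.add(current)
        stack.extend(lineage.get(current, []))
    return seen


# Illustrative lineage for a hypothetical fraud-detection feature table.
lineage = {
    "fraud_features": ["clean_transactions", "customer_profiles"],
    "clean_transactions": ["raw_transactions"],
    "customer_profiles": ["raw_crm"],
}
sources = upstream_sources(lineage, "fraud_features")
```

An auditor asking "what fed this model?" gets the full set of four upstream datasets, including the raw sources two hops away.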

4. Build for Real-Time Intelligence

The future of AI is real-time. Whether it’s fraud detection, customer personalization, or predictive maintenance, organizations need pipelines that handle data as it flows in. Streaming platforms like Apache Kafka and AWS Kinesis are core technologies for this.

For example, Uber’s Michelangelo platform processes real-time location and demand data to adjust pricing and ETA predictions dynamically. Their cloud-native streaming architecture supports instant decision-making at scale.

Career Tip-

Mastering stream processing is key if you want to become an AI cloud expert. It’s the difference between reactive and proactive AI.
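A core building block of stream processing is the sliding window: keeping a running answer over only the most recent events. The Python sketch below counts events in the last N seconds as they arrive. It is a single-process toy that assumes timestamps arrive in order; it does not use Apache Kafka or AWS Kinesis, and the class name is made up for the example.

```python
from collections import deque
from typing import Deque


class SlidingWindowCounter:
    """Count events that occurred within the last `window_seconds`."""

    def __init__(self, window_seconds: float) -> None:
        self.window_seconds = window_seconds
        self._timestamps: Deque[float] = deque()

    def add(self, timestamp: float) -> int:
        """Record an event and return the number of events still in the window.

        Assumes timestamps are non-decreasing (events arrive in order).
        """
        self._timestamps.append(timestamp)
        cutoff = timestamp - self.window_seconds
        # Drop events that are at or before the cutoff.
        while self._timestamps and self._timestamps[0] <= cutoff:
            self._timestamps.popleft()
        return len(self._timestamps)


counter = SlidingWindowCounter(window_seconds=60)
counts = [counter.add(t) for t in (0, 10, 30, 65, 120)]
```

A fraud rule could then fire when the count for one card exceeds a threshold inside the window, which is the "proactive" behavior that batch jobs running hours later cannot offer.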

5. Bake Security and Privacy into Your Data Strategy

When you’re working with personal data, security isn’t optional—it’s foundational. AI architectures in the cloud must comply with GDPR, HIPAA, and other regulations, while also protecting sensitive information using encryption, masking, and access controls.

Salesforce, with its AI-powered Einstein platform, ensures sensitive customer data is encrypted and tightly controlled using AWS Key Management and IAM policies.

Best Practice-

Think “privacy by design.” This is a hot topic covered in depth during any reputable AI Cloud certification.
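To show what masking can look like in practice, here is a short Python sketch using only the standard library. It covers two techniques: keyed pseudonymization (so records can still be joined without exposing the raw identifier) and display masking of an email address. Both function names are assumptions for illustration, and this is not a substitute for encryption with managed keys; in a real system the secret key would come from a key management service rather than source code.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 digest (HMAC).

    The same value and key always give the same token, so joins still work,
    but the original value cannot be read back from the token.
    """
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_email(email: str) -> str:
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    if not domain:
        return "***"  # not a recognizable email; hide it entirely
    return local[:1] + "***@" + domain


demo_key = b"demo-only-key"  # placeholder; never hard-code real keys
token = pseudonymize("customer-1842", demo_key)
masked = mask_email("jane.doe@example.com")
```

Applying steps like these before data reaches a training pipeline is one practical reading of "privacy by design": models see tokens and masked values rather than raw personal data.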

6. Use Tiered Storage to Optimize Cost and Speed

Not every byte of data is mission-critical. Some data is hot (frequently used), some cold (archived). An effective AI cloud architecture balances cost and speed with a multi-tiered storage strategy.

For instance, Pinterest uses Amazon S3 for high-access data, Glacier for archival, and caching layers for low-latency AI-powered recommendations. This approach keeps costs down while delivering fast, accurate results.

Learning Tip-

This is exactly the kind of cost-smart design covered in AI cloud automation training courses.
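The hot-versus-cold idea can be reduced to a simple rule based on how recently data was accessed. The Python sketch below assigns a tier from the last access date. The tier names, the extra "warm" tier, and the 30-day and 180-day thresholds are assumptions chosen for the example; cloud providers express similar rules through their own lifecycle policies.

```python
from datetime import date


def choose_tier(last_accessed: date, today: date,
                hot_days: int = 30, warm_days: int = 180) -> str:
    """Pick a storage tier from the number of days since last access.

    Thresholds are illustrative defaults, not provider recommendations.
    """
    age_days = (today - last_accessed).days
    if age_days <= hot_days:
        return "hot"    # frequently used: fast, more expensive storage
    if age_days <= warm_days:
        return "warm"   # occasionally used: cheaper, slightly slower
    return "cold"       # rarely used: archival storage


today = date(2025, 1, 1)
recent_tier = choose_tier(date(2024, 12, 20), today)
older_tier = choose_tier(date(2024, 9, 1), today)
archive_tier = choose_tier(date(2023, 1, 1), today)
```

Running a rule like this on a schedule lets training data that models read daily stay on fast storage while old logs drift toward cheaper tiers.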

7. Support Cross-Cloud and Hybrid Access

Modern enterprises often operate across multiple cloud environments, and data can’t live in isolation. Cloud data architectures should support hybrid and multi-cloud scenarios to avoid vendor lock-in and enable agility.

Johnson & Johnson uses BigQuery Omni to analyze data across AWS and Azure without moving it. This federated approach supports AI use cases in healthcare, ensuring data residency and compliance.

Why it matters-

The future of AI is multi-cloud. Want to stand out? Pursue an AI cloud architecture certification that teaches integration, not just implementation.

Wrapping Up- Your Data Is the AI Foundation

Without well-architected data strategies, AI can’t perform at its best. If you’re leading cloud strategy as a CTO or just starting your journey to become an AI cloud expert, one thing becomes clear early on—solid data management isn’t optional. It’s the foundation that supports everything from smarter models to reliable performance. Without it, even the best AI tools fall short.

Here’s what to focus on-

Centralize data with control

Scale infrastructure on demand

Track data lineage and quality

Enable real-time processing

Secure data end-to-end

Store wisely with tiered solutions

Build for hybrid, cross-cloud access

Ready To Take the Next Step?

If you want to build smarter systems or advance your career, now is the time to invest in the future. Consider pursuing an AI Cloud Certification or an AI Cloud Architecture Certification. These credentials not only boost your knowledge but also unlock new opportunities on your AI cloud career path.

Consider checking out the AI CERTs AI+ Cloud Certification to gain in-demand Cloud AI skills, fast-track your AI cloud career path, and become an AI cloud expert trusted by leading organizations. With the right Cloud AI skills, you won’t just adapt to the future—you’ll shape it.

Enroll today!

0 notes