#Azure Databricks


From Firewall to Encryption: The Full Spectrum of Data Security Solutions

In today’s digitally driven world, data is one of the most valuable assets any business owns. From customer information to proprietary corporate strategies, the protection of data is crucial not only for maintaining competitive advantage but also for ensuring regulatory compliance and customer trust. As cyber threats grow more sophisticated, companies must deploy a full spectrum of data security solutions — from traditional firewalls to advanced encryption technologies — to safeguard their sensitive information.

This article explores the comprehensive range of data security solutions available today and explains how they work together to create a robust defense against cyber risks.

Why Data Security Matters More Than Ever

Before diving into the tools and technologies, it’s essential to understand why data security is a top priority for organizations worldwide.

The Growing Threat Landscape

Cyberattacks have become increasingly complex and frequent. Data breaches can come from many angles: ransomware that locks down entire systems until a payment is made, phishing campaigns targeting employees, and insider threats from negligent or malicious actors. According to recent studies, millions of data records are exposed daily, costing businesses billions in damages, legal penalties, and lost customer trust.

Regulatory and Compliance Demands

Governments and regulatory bodies worldwide have enacted stringent laws to protect personal and sensitive data. Regulations such as GDPR (General Data Protection Regulation), HIPAA (Health Insurance Portability and Accountability Act), and CCPA (California Consumer Privacy Act) enforce strict rules on how companies must safeguard data. Failure to comply can result in hefty fines and reputational damage.

Protecting Brand Reputation and Customer Trust

A breach can irreparably damage a brand’s reputation. Customers and partners expect businesses to handle their data responsibly. Data security is not just a technical requirement but a critical component of customer relationship management.

The Data Security Spectrum: Key Solutions Explained

Data security is not a single tool or tactic but a layered approach. The best defense employs multiple technologies working together — often referred to as a “defense-in-depth” strategy. Below are the essential components of the full spectrum of data security solutions.

1. Firewalls: The First Line of Defense

A firewall acts like a security gatekeeper between a trusted internal network and untrusted external networks such as the Internet. It monitors incoming and outgoing traffic based on pre-established security rules and blocks unauthorized access.

Types of Firewalls:

Network firewalls monitor data packets traveling between networks.

Host-based firewalls operate on individual devices.

Next-generation firewalls (NGFW) integrate traditional firewall features with deep packet inspection, intrusion prevention, and application awareness.

Firewalls are fundamental for preventing unauthorized access and blocking malicious traffic before it reaches critical systems.
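To make the idea of "pre-established security rules" concrete, here is a minimal Python sketch of rule-based packet filtering. It is an illustration only, not how any particular firewall product works: the `Rule` class, the `evaluate` function, and the address ranges are all hypothetical, and the sketch assumes a first-match-wins policy with a default deny.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Optional


@dataclass(frozen=True)
class Rule:
    action: str            # "allow" or "deny"
    source: str            # CIDR block the packet must come from
    port: Optional[int]    # destination port, or None for any port


def evaluate(rules, src_ip, dst_port):
    """Return the action of the first matching rule; deny by default."""
    for rule in rules:
        in_network = ip_address(src_ip) in ip_network(rule.source)
        port_matches = rule.port is None or rule.port == dst_port
        if in_network and port_matches:
            return rule.action
    return "deny"


# Hypothetical rule set, evaluated top to bottom.
RULES = [
    Rule("deny", "203.0.113.0/24", None),  # block a known-bad range entirely
    Rule("allow", "0.0.0.0/0", 443),       # HTTPS from anywhere
    Rule("allow", "10.0.0.0/8", 22),       # SSH from the internal network only
]
```

Because rules are checked in order, the blocked range is denied even on port 443, and anything that matches no rule falls through to the default deny.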

2. Intrusion Detection and Prevention Systems (IDS/IPS)

While firewalls filter traffic, IDS and IPS detect and respond to suspicious activities.

Intrusion Detection Systems (IDS) monitor network or system activities for malicious actions and send alerts.

Intrusion Prevention Systems (IPS) not only detect but also block or mitigate threats in real-time.

Together, IDS and IPS add an extra layer of vigilance, helping security teams quickly identify and neutralize potential breaches.

3. Endpoint Security: Protecting Devices

Every device connected to a network represents a potential entry point for attackers. Endpoint security solutions protect laptops, mobile devices, desktops, and servers.

Antivirus and Anti-malware: Detect and remove malicious software.

Endpoint Detection and Response (EDR): Provides continuous monitoring and automated response capabilities.

Device Control: Manages USBs and peripherals to prevent data leaks.

Comprehensive endpoint security ensures threats don’t infiltrate through vulnerable devices.

4. Data Encryption: Securing Data at Rest and in Transit

Encryption is a critical pillar of data security, making data unreadable to unauthorized users by converting it into ciphertext that can only be reversed with the correct key.

Encryption at Rest: Protects stored data on servers, databases, and storage devices.

Encryption in Transit: Safeguards data traveling across networks using protocols like TLS (the successor to the now-deprecated SSL).

End-to-End Encryption: Ensures data remains encrypted from the sender to the recipient without exposure in between.

By using strong encryption algorithms, even if data is intercepted or stolen, it remains useless without the decryption key.
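As a small illustration of encryption in transit, the sketch below builds a client-side TLS configuration with Python's standard `ssl` module. The function name `make_client_context` is hypothetical, and the choice of TLS 1.2 as the minimum version is an assumption for the example rather than a recommendation from the article.

```python
import ssl


def make_client_context():
    """Build a TLS client context that verifies certificates and hostnames."""
    # create_default_context() enables certificate and hostname verification.
    ctx = ssl.create_default_context()
    # Refuse legacy protocol versions (assumed policy for this sketch).
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


context = make_client_context()
```

A context like this can be passed to standard-library clients (for example `http.client.HTTPSConnection(host, context=context)`) so that connections fail instead of silently downgrading.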

5. Identity and Access Management (IAM)

Controlling who has access to data and systems is vital.

Authentication: Verifying user identities through passwords, biometrics, or multi-factor authentication (MFA).

Authorization: Granting permissions based on roles and responsibilities.

Single Sign-On (SSO): Simplifies user access while maintaining security.

IAM solutions ensure that only authorized personnel can access sensitive information, reducing insider threats and accidental breaches.
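The authorization step described above can be sketched as a role-based check. This is a minimal illustration under assumed names: the roles, the permission strings, and the `is_authorized` helper are hypothetical and not tied to any specific IAM product.

```python
# Hypothetical mapping from role to the permissions it grants.
ROLE_PERMISSIONS = {
    "analyst": {"reports:read"},
    "engineer": {"reports:read", "pipelines:write"},
    "admin": {"reports:read", "pipelines:write", "users:manage"},
}


def is_authorized(user_roles, permission):
    """Return True if any of the user's roles grants the permission."""
    return any(
        permission in ROLE_PERMISSIONS.get(role, set())
        for role in user_roles
    )
```

Unknown roles grant nothing, so a typo in a role name fails closed rather than open.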

6. Data Loss Prevention (DLP)

DLP technologies monitor and control data transfers to prevent sensitive information from leaving the organization.

Content Inspection: Identifies sensitive data in emails, file transfers, and uploads.

Policy Enforcement: Blocks unauthorized transmission of protected data.

Endpoint DLP: Controls data movement on endpoint devices.

DLP helps maintain data privacy and regulatory compliance by preventing accidental or malicious data leaks.
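Content inspection and policy enforcement can be sketched in a few lines of Python. The example below is a simplified illustration, assuming the only sensitive data of interest is payment card numbers: it finds digit runs with a regular expression and confirms them with the Luhn checksum. The function names are hypothetical, and real DLP products use far richer detection.

```python
import re

# 13 to 16 digits, optionally separated by spaces or hyphens.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){13,16}\b")


def luhn_valid(number):
    """Return True if the digit string passes the Luhn checksum."""
    digits = [int(c) for c in number if c.isdigit()]
    checksum = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:      # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        checksum += d
    return checksum % 10 == 0


def find_card_numbers(text):
    """Content inspection: return card-like numbers found in the text."""
    hits = []
    for match in CARD_PATTERN.finditer(text):
        candidate = re.sub(r"\D", "", match.group())
        if 13 <= len(candidate) <= 16 and luhn_valid(candidate):
            hits.append(candidate)
    return hits


def should_block(message):
    """Policy enforcement: block any message containing a card number."""
    return bool(find_card_numbers(message))
```

The checksum step cuts down on false positives, since most random 16-digit strings (order numbers, tracking IDs) fail it.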

7. Cloud Security Solutions

With increasing cloud adoption, protecting data in cloud environments is paramount.

Cloud Access Security Brokers (CASB): Provide visibility and control over cloud application usage.

Cloud Encryption and Key Management: Secures data stored in public or hybrid clouds.

Secure Configuration and Monitoring: Ensures cloud services are configured securely and continuously monitored.

Cloud security tools help organizations safely leverage cloud benefits without exposing data to risk.

8. Backup and Disaster Recovery

Even with the best preventive controls, breaches and data loss can occur. Reliable backup and disaster recovery plans ensure business continuity.

Regular Backups: Scheduled copies of critical data stored securely.

Recovery Testing: Regular drills to validate recovery procedures.

Ransomware Protection: Immutable backups protect against tampering.

Robust backup solutions ensure data can be restored quickly, minimizing downtime and damage.

9. Security Information and Event Management (SIEM)

SIEM systems collect and analyze security event data in real time from multiple sources to detect threats.

Centralized Monitoring: Aggregates logs and alerts.

Correlation and Analysis: Identifies patterns that indicate security incidents.

Automated Responses: Enables swift threat mitigation.

SIEM provides comprehensive visibility into the security posture, allowing proactive threat management.
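The correlation step can be illustrated with a toy rule: raise an alert when one source produces several failed logins within a short window. This is a sketch under assumed inputs. The event tuple layout `(timestamp_seconds, source, outcome)`, the threshold, and the function name are all hypothetical.

```python
from collections import defaultdict, deque


def detect_bruteforce(events, threshold=3, window_seconds=60):
    """Return (source, timestamp) alerts for bursts of failed logins."""
    recent = defaultdict(deque)   # source -> timestamps of recent failures
    alerts = []
    for ts, source, outcome in sorted(events):
        if outcome != "failure":
            continue
        window = recent[source]
        window.append(ts)
        # Drop failures that fell out of the sliding window.
        while window and ts - window[0] > window_seconds:
            window.popleft()
        if len(window) >= threshold:
            alerts.append((source, ts))
    return alerts


EVENTS = [
    (0, "10.0.0.5", "failure"),
    (20, "10.0.0.5", "failure"),
    (45, "10.0.0.5", "failure"),
    (50, "10.0.0.9", "failure"),
    (300, "10.0.0.5", "failure"),
]
```

With these sample events, only the third failure from `10.0.0.5` crosses the threshold; the isolated failure at 300 seconds does not, because the earlier ones have aged out of the window.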

10. User Education and Awareness

Technology alone can’t stop every attack. Human error remains one of the biggest vulnerabilities.

Phishing Simulations: Train users to recognize suspicious emails.

Security Best Practices: Ongoing training on password hygiene, device security, and data handling.

Incident Reporting: Encourage quick reporting of suspected threats.

Educated employees act as a crucial line of defense against social engineering and insider threats.

Integrating Solutions for Maximum Protection

No single data security solution is sufficient to protect against today’s cyber threats. The most effective strategy combines multiple layers:

Firewalls and IDS/IPS to prevent and detect intrusions.

Endpoint security and IAM to safeguard devices and control access.

Encryption to protect data confidentiality.

DLP and cloud security to prevent leaks.

Backup and SIEM to ensure resilience and rapid response.

Continuous user training to reduce risk from human error.

By integrating these tools into a cohesive security framework, businesses can build a resilient defense posture.

Choosing the Right Data Security Solutions for Your Business

Selecting the right mix of solutions depends on your organization's unique risks, compliance requirements, and IT environment.

Risk Assessment: Identify critical data assets and potential threats.

Regulatory Compliance: Understand applicable data protection laws.

Budget and Resources: Balance costs with expected benefits.

Scalability and Flexibility: Ensure solutions grow with your business.

Vendor Reputation and Support: Choose trusted partners with proven expertise.

Working with experienced data security consultants or managed security service providers (MSSPs) can help tailor and implement an effective strategy.

The Future of Data Security: Emerging Trends

As cyber threats evolve, data security technologies continue to advance.

Zero Trust Architecture: Assumes no implicit trust and continuously verifies every access request.

Artificial Intelligence and Machine Learning: Automated threat detection and response.

Post-Quantum Cryptography: Next-generation algorithms designed to resist attacks from quantum computers.

Behavioral Analytics: Identifying anomalies in user behavior for early threat detection.

Staying ahead means continuously evaluating and adopting innovative solutions aligned with evolving risks.

Conclusion

From the traditional firewall guarding your network perimeter to sophisticated encryption safeguarding data confidentiality, the full spectrum of data security solutions forms an essential bulwark against cyber threats. In a world where data breaches can cripple businesses overnight, deploying a layered, integrated approach is not optional — it is a business imperative.

Investing in comprehensive data security protects your assets, ensures compliance, and most importantly, builds trust with customers and partners. Whether you are a small business or a large enterprise, understanding and embracing this full spectrum of data protection measures is the key to thriving securely in the digital age.

#azure data science#azure data scientist#microsoft azure data science#microsoft certified azure data scientist#azure databricks#azure cognitive services#azure synapse analytics#data integration services#cloud based ai services#mlops solution#mlops services#data governance. data security services#Azure Databricks services


Unlock the Future of ML with Azure Databricks – Here's Why You Should Care


Scaling Your Data Mesh Architecture for maximum efficiency and interoperability


#Azure Databricks#Big Data#Business Intelligence#Cloud Data Management#Collaborative Data Solutions#Data Analytics#Data Architecture#Data Compliance#Data Governance#Data management#Data Mesh#Data Operations#Data Security#Data Sharing Protocols#Databricks Lakehouse#Delta Sharing#Interoperability#Open Protocol#Real-time Data Sharing#Scalable Data Solutions


AZURE DATA ENGINEER


How to print an Azure Key Vault secret value in a Databricks notebook? Print shows REDACTED.

To ensure security, Databricks does not print sensitive information directly in notebooks. Sometimes this useful feature gets in the developer's way. For example, if you want to verify a value with a code snippet because you lack direct access to the vault, the direct output will show REDACTED. To overcome this problem, we can use a simple code snippet which just…


#Azure Databricks#how to print keyvault secret value in databricks#print redacted content in plain text#show the actual value of a hidden string in databricks#show value of redacted


[Fabric] Reading and writing storage with Databricks

Many releases and tools live inside a single platform that brings together both technical users (data engineers, data scientists, or data analysts) and end users. Fabric united all of these stakeholders in one space. That said, it does not mean we have to use every single tool it offers.

If we already have an excellent data cleaning, transformation, or processing workflow built on the widely used Databricks, we can keep using it.

In previous posts we discussed how Fabric brings next-generation lake storage with an open data format. This means we can store data in the most popular file formats, and its file system works with conventional open source structures. In other words, we can connect to our storage from any tool that can read it. We also showed a bit of Fabric notebooks and how they ease the development experience.

In this simple tip we will see how to read from and write to our Fabric Lakehouse from Databricks.

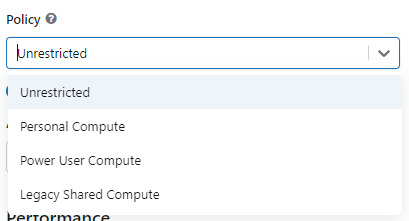

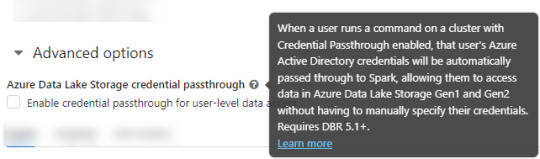

For Databricks and Fabric to communicate, the first step is to create an Azure Databricks Premium Tier resource. The second is to make sure of two things on our cluster:

1. Use an "unrestricted" or "power user compute" policy.

2. Make sure Databricks can pass our credentials through Spark. We can enable this in the advanced options.

NOTE: I won't go into further detail on cluster creation. I leave the remaining compute options for you to explore, or I assume you already know them if you are reading this post.

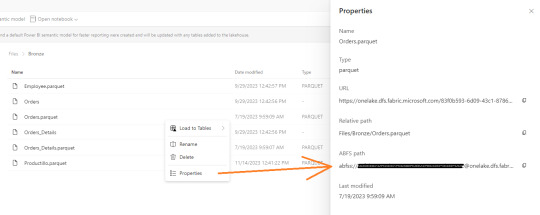

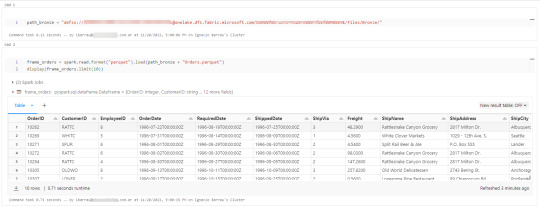

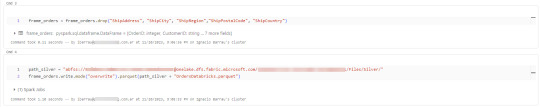

With our cluster created, let's create a notebook and start reading data in Fabric. We will do this with ABFS (Azure Blob File System), an open-format address scheme whose driver is included in Azure Databricks.

The address should look similar to the following string:

oneLakePath = 'abfss://[email protected]/myLakehouse.lakehouse/Files/'
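As a rough sketch, a path of this shape can be assembled from its parts. The helper name `onelake_files_path` and the workspace and lakehouse names used with it are hypothetical, and the host `onelake.dfs.fabric.microsoft.com` is an assumption based on the standard OneLake endpoint rather than something stated in this post.

```python
# Assumed OneLake DFS endpoint; not taken from the post.
ONELAKE_HOST = "onelake.dfs.fabric.microsoft.com"


def onelake_files_path(workspace, lakehouse, relative_path=""):
    """Build an abfss:// URI pointing into a Lakehouse Files area."""
    base = f"abfss://{workspace}@{ONELAKE_HOST}/{lakehouse}.lakehouse/Files/"
    return base + relative_path.lstrip("/")
```

For example, `onelake_files_path("myWorkspace", "myLakehouse", "raw/sales.parquet")` yields a URI ending in `/myLakehouse.lakehouse/Files/raw/sales.parquet`.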

Knowing that address, we can start working as usual. Let's look at a simple notebook that reads a Parquet file from a Fabric Lakehouse.

Thanks to the cluster configuration, the process is as simple as spark.read.

Writing is just as simple.

Starting with a cleanup of unnecessary columns and a simple [frame].write, we will have the clean table in silver.
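The read, clean, and write steps described here might look roughly like the sketch below. It is not the author's notebook code: the function name `clean_to_silver`, its parameters, and the choice of Delta format with overwrite mode are assumptions. In a Databricks notebook, `spark` would be the session object the runtime provides.

```python
def clean_to_silver(spark, source_path, target_path, columns_to_drop):
    """Read a Parquet file, drop unneeded columns, and write the result."""
    # Read the raw file from the Lakehouse Files area.
    df = spark.read.parquet(source_path)
    # Remove the columns we do not need downstream.
    cleaned = df.drop(*columns_to_drop)
    # Assumed output settings: Delta format, replacing any existing data.
    cleaned.write.format("delta").mode("overwrite").save(target_path)
    return cleaned
```

Both paths would be `abfss://` addresses of the kind shown earlier, with the target pointing at the silver location in the Lakehouse.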

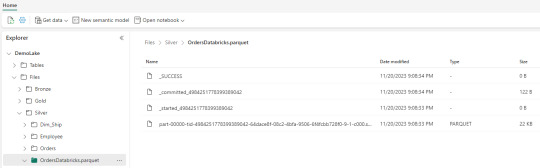

We go to Fabric and can find it in our Lakehouse.

That concludes our Databricks processing on the Fabric Lakehouse, but not the article. We have not yet covered the other type of storage on the blog, but we will mention what is relevant to this read.

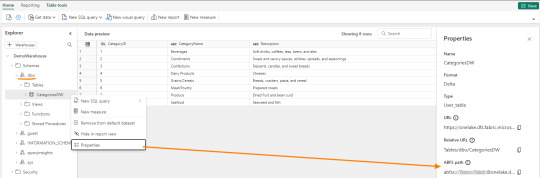

Warehouses in Fabric are also built on a traditional next-generation lake structure. Their main difference is that they offer a 100% SQL-based user experience, as if we were working in a database. Behind the scenes, however, we find Delta, as in a Spark catalog or metastore.

The path should look similar to this:

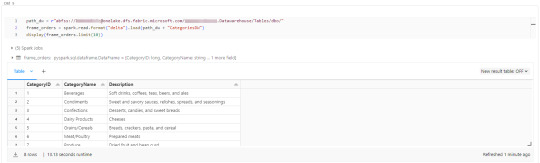

path_dw = "abfss://[email protected]/WarehouseName.Datawarehouse/Tables/dbo/"

Keeping in mind that Fabric aims to hold Delta content in both its Lakehouse Spark catalog (Tables) and its Warehouse, we read it as the following example shows.

Now our article truly concludes, having shown how we can use Databricks to work with Fabric storage.

#fabric#microsoftfabric#fabric cordoba#fabric jujuy#fabric argentina#fabric tips#fabric tutorial#fabric training#fabric databricks#databricks#azure databricks#pyspark


Top 10 Predictive Analytics Tools to Thrive in 2024

Predictive analytics has become a crucial tool for businesses, thanks to its ability to forecast key metrics like customer retention, ROI growth, and sales performance. The adoption of predictive analytics tools is growing rapidly as businesses recognize their value in driving strategic decisions. According to Statista, the global market for predictive analytics tools is projected to reach $41.52 billion by 2028, highlighting its increasing importance.

What Are Predictive Analytics Tools?

Predictive analytics tools are essential for managing supply chains, understanding consumer behavior, and optimizing business operations. They help organizations assess their current position and make informed decisions for future growth. Tools like Tableau, KNIME, and Databricks offer businesses a competitive advantage by transforming raw data into actionable insights. By identifying patterns within historical data, these tools enable companies to forecast trends and implement effective growth strategies. For example, many retail companies use predictive analytics to improve inventory management and enhance customer experiences.

Top 10 Predictive Analytics Tools

SAP: Known for its capabilities in supply chain, logistics, and inventory management, SAP offers an intuitive interface for creating interactive visuals and dashboards.

Alteryx: This platform excels in building data models and offers a low-code environment, making it accessible to users with limited coding experience.

Tableau: Tableau is favored for its data processing speed and user-friendly interface, which allows for the creation of easy-to-understand visuals.

Amazon QuickSight: A cloud-based service, QuickSight offers a low-code environment for automating tasks and creating interactive dashboards.

Altair AI Studio: Altair provides robust data mining and predictive modeling capabilities, making it a versatile tool for business intelligence.

IBM SPSS: Widely used in academia and market research, SPSS offers a range of tools for statistical analysis with a user-friendly interface.

KNIME: This open-source tool is ideal for data mining and processing tasks, and it supports machine learning and statistical analysis.

Microsoft Azure: Azure offers a comprehensive cloud computing platform with robust security features and seamless integration with Microsoft products.

Databricks: Built on Apache Spark, Databricks provides a collaborative workspace for data processing and machine learning tasks.

Oracle Data Science: This cloud-based platform supports a wide range of programming languages and frameworks, offering a collaborative environment for data scientists.

Conclusion

As businesses continue to embrace digital transformation, predictive analytics tools are becoming increasingly vital. Companies looking to stay competitive should carefully select the right tools to harness the full potential of predictive analytics in today’s business la

#databricks#oracle data science#sap#alteryx#microsoft#microsoft azure#knime#ibm spss#altair studio#amazon quick sight


#dataengineer#onlinetraining#freedemo#cloudlearning#azuredatlake#Databricks#azuresynapse#AzureDataFactory#Azure#SQL#MySQL#NewTechnolgies#software#softwaredevelopment#visualpathedu#onlinecoaching#ADE#DataLake#datalakehouse#AzureDataEngineering


Navigating the Data Landscape: A Deep Dive into ScholarNest's Corporate Training

In the ever-evolving realm of data, mastering the intricacies of data engineering and PySpark is paramount for professionals seeking a competitive edge. ScholarNest's Corporate Training offers an immersive experience, providing a deep dive into the dynamic world of data engineering and PySpark.

Unlocking Data Engineering Excellence

Embark on a journey to become a proficient data engineer with ScholarNest's specialized courses. Our Data Engineering Certification program is meticulously crafted to equip you with the skills needed to design, build, and maintain scalable data systems. From understanding data architecture to implementing robust solutions, our curriculum covers the entire spectrum of data engineering.

Pioneering PySpark Proficiency

Navigate the complexities of data processing with PySpark, the Python API for Apache Spark. ScholarNest's PySpark course, hailed as one of the best online, caters to both beginners and advanced learners. Explore the full potential of PySpark through hands-on projects, gaining practical insights that can be applied directly in real-world scenarios.

Azure Databricks Mastery

As part of our commitment to offering the best, our courses delve into Azure Databricks learning. Azure Databricks, seamlessly integrated with Azure services, is a pivotal tool in the modern data landscape. ScholarNest ensures that you not only understand its functionalities but also leverage it effectively to solve complex data challenges.

Tailored for Corporate Success

ScholarNest's Corporate Training goes beyond generic courses. We tailor our programs to meet the specific needs of corporate environments, ensuring that the skills acquired align with industry demands. Whether you are aiming for data engineering excellence or mastering PySpark, our courses provide a roadmap for success.

Why Choose ScholarNest?

Best PySpark Course Online: Our PySpark courses are recognized for their quality and depth.

Expert Instructors: Learn from industry professionals with hands-on experience.

Comprehensive Curriculum: Covering everything from fundamentals to advanced techniques.

Real-world Application: Practical projects and case studies for hands-on experience.

Flexibility: Choose courses that suit your level, from beginner to advanced.

Navigate the data landscape with confidence through ScholarNest's Corporate Training. Enrol now to embark on a learning journey that not only enhances your skills but also propels your career forward in the rapidly evolving field of data engineering and PySpark.

#data engineering#pyspark#databricks#azure data engineer training#apache spark#databricks cloud#big data#dataanalytics#data engineer#pyspark course#databricks course training#pyspark training


Microsoft Azure Data Science in Brisbane

Unlock insights with Azure AI Data Science solutions in Brisbane. Expert Azure Data Scientists deliver scalable, AI-driven analytics for your business growth.

#azure data science#azure data scientist#microsoft azure data science#microsoft certified azure data scientist#azure databricks#azure cognitive services#azure synapse analytics#data integration services#cloud based ai services#mlops solution#mlops services#data governance. data security services#Azure Databricks services


Roadmap to Becoming an AI Guru in 2025

Becoming an “AI Guru” in 2025 transcends basic comprehension; it demands profound technical expertise, continuous adaptation, and practical application of advanced concepts. This comprehensive roadmap outlines the critical areas of knowledge, hands-on…

#Algorithms#apache#Autonomous#AWS#Azure#Career#cloud#Databricks#embeddings#gcp#Generative AI#gpu#image#LLM#LLMs#monitoring#nosql#Optimization#performance#Platform#Platforms#programming#python#pytorch#Spark#sql#vector#Vertex AI


Unlock Data Governance: Revolutionary Table-Level Access in Modern Platforms

Dive into our latest blog on mastering data governance with Microsoft Fabric & Databricks. Discover key strategies for robust table-level access control and secure your enterprise's data. A must-read for IT pros! #DataGovernance #Security


#Access Control#Azure Databricks#Big data analytics#Cloud Data Services#Data Access Patterns#Data Compliance#Data Governance#Data Lake Storage#Data Management Best Practices#Data Privacy#Data Security#Enterprise Data Management#Lakehouse Architecture#Microsoft Fabric#pyspark#Role-Based Access Control#Sensitive Data Protection#SQL Data Access#Table-Level Security


Streaming Analytics with Azure Databricks, Event Hub, and Delta Lake: A Step-by-Step Demo

In this video, we’ll show you how to build a real-time data pipeline for advanced analytics using Azure Databricks, Event Hub, and …


How Azure Supports Big Data and Real-Time Data Processing

The explosion of digital data in recent years has pushed organizations to look for platforms that can handle massive datasets and real-time data streams efficiently. Microsoft Azure has emerged as a front-runner in this domain, offering robust services for big data analytics and real-time processing. Professionals looking to master this platform often pursue the Azure Data Engineering Certification, which helps them understand and implement data solutions that are both scalable and secure.

Azure not only offers storage and computing solutions but also integrates tools for ingestion, transformation, analytics, and visualization—making it a comprehensive platform for big data and real-time use cases.

Azure’s Approach to Big Data

Big data refers to extremely large datasets that cannot be processed using traditional data processing tools. Azure offers multiple services to manage, process, and analyze big data in a cost-effective and scalable manner.

1. Azure Data Lake Storage

Azure Data Lake Storage (ADLS) is designed specifically to handle massive amounts of structured and unstructured data. It supports high throughput and can manage petabytes of data efficiently. ADLS works seamlessly with analytics tools like Azure Synapse and Azure Databricks, making it a central storage hub for big data projects.

2. Azure Synapse Analytics

Azure Synapse combines big data and data warehousing capabilities into a single unified experience. It allows users to run complex SQL queries on large datasets and integrates with Apache Spark for more advanced analytics and machine learning workflows.

3. Azure Databricks

Built on Apache Spark, Azure Databricks provides a collaborative environment for data engineers and data scientists. It’s optimized for big data pipelines, allowing users to ingest, clean, and analyze data at scale.

Real-Time Data Processing on Azure

Real-time data processing allows businesses to make decisions instantly based on current data. Azure supports real-time analytics through a range of powerful services:

1. Azure Stream Analytics

This fully managed service processes real-time data streams from devices, sensors, applications, and social media. You can write SQL-like queries to analyze the data in real time and push results to dashboards or storage solutions.
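To show what a windowed query over a stream computes, here is a plain Python sketch of a tumbling-window count per device. It is not Stream Analytics query syntax. The event layout `(timestamp_seconds, device_id)` and the function name `tumbling_window_counts` are hypothetical, chosen only to illustrate the idea.

```python
from collections import Counter


def tumbling_window_counts(events, window_seconds):
    """Count events per (window_start, device_id) in fixed, non-overlapping windows."""
    counts = Counter()
    for timestamp, device_id in events:
        # Each event belongs to exactly one window, identified by its start time.
        window_start = (timestamp // window_seconds) * window_seconds
        counts[(window_start, device_id)] += 1
    return dict(counts)
```

A streaming engine produces the same kind of result incrementally as events arrive, instead of over a finished list.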

2. Azure Event Hubs

Event Hubs can ingest millions of events per second, making it ideal for real-time analytics pipelines. It acts as a front door for event streaming, integrates with Stream Analytics and Azure Functions, and exposes an Apache Kafka-compatible endpoint.

3. Azure IoT Hub

For businesses working with IoT devices, Azure IoT Hub enables the secure transmission and real-time analysis of data from edge devices to the cloud. It supports bi-directional communication and can trigger workflows based on event data.

Integration and Automation Tools

Azure ensures seamless integration between services for both batch and real-time processing. Tools like Azure Data Factory and Logic Apps help automate the flow of data across the platform.

Azure Data Factory: Ideal for building ETL (Extract, Transform, Load) pipelines. It moves data from sources like SQL, Blob Storage, or even on-prem systems into processing tools like Synapse or Databricks.

Logic Apps: Allows you to automate workflows across Azure services and third-party platforms. You can create triggers based on real-time events, reducing manual intervention.

Security and Compliance in Big Data Handling

Handling big data and real-time processing comes with its share of risks, especially concerning data privacy and compliance. Azure addresses this by providing:

Data encryption at rest and in transit

Role-based access control (RBAC)

Private endpoints and network security

Compliance with standards like GDPR, HIPAA, and ISO

These features ensure that organizations can maintain the integrity and confidentiality of their data, no matter the scale.

Career Opportunities in Azure Data Engineering

With Azure’s growing dominance in cloud computing and big data, the demand for skilled professionals is at an all-time high. Those holding an Azure Data Engineering Certification are well-positioned to take advantage of job roles such as:

Azure Data Engineer

Cloud Solutions Architect

Big Data Analyst

Real-Time Data Engineer

IoT Data Specialist

The certification equips individuals with knowledge of Azure services, big data tools, and data pipeline architecture—all essential for modern data roles.

Final Thoughts

Azure offers an end-to-end ecosystem for both big data analytics and real-time data processing. Whether it’s massive historical datasets or fast-moving event streams, Azure provides scalable, secure, and integrated tools to manage them all.

Pursuing an Azure Data Engineering Certification is a great step for anyone looking to work with cutting-edge cloud technologies in today’s data-driven world. By mastering Azure’s powerful toolset, professionals can design data solutions that are future-ready and impactful.

#Azure#BigData#RealTimeAnalytics#AzureDataEngineer#DataLake#StreamAnalytics#CloudComputing#AzureSynapse#IoTHub#Databricks#CloudZone#AzureCertification#DataPipeline#DataEngineering


What’s New In Databricks? February 2025 Updates & Features Explained!


Are you ready for the latest Databricks updates in February 2025? 🚀 This month brings game-changing features like SAP integration, Lakehouse Federation for Teradata, Databricks Clean Rooms, SQL Pipe, Serverless on Google Cloud, Predictive Optimization, and more!

✨ Explore Databricks AI insights and workflows—read more: / databrickster

🔔 Don't forget to subscribe to my channel for more updates. / @hubert_dudek

🔗 Support Me Here! ☕Buy me a coffee: https://ko-fi.com/hubertdudek

🔗 Stay Connected With Me. Medium: / databrickster


#databricks#bigdata#dataengineering#machinelearning#sql#cloudcomputing#dataanalytics#ai#azure#googlecloud#aws#etl#python#data#database#datawarehouse#Youtube


🚀 Azure Data Engineer Online Training – Build Your Cloud Data Career with VisualPath!

Step confidently into one of the most in-demand IT roles with VisualPath’s Azure Data Engineer Course Online. Whether you’re a fresher, a working professional, or an enterprise team seeking corporate upskilling, this practical program will help you master the skills to design, develop, and manage scalable data solutions on Microsoft Azure.

💡 Key Skills You’ll Gain:

🔹 Azure Data Factory – Create and automate robust data pipelines

🔹 Azure Databricks – Handle big data and deliver real-time analytics

🔹 Power BI – Build interactive dashboards and data visualizations

📞 Reserve Your FREE Demo Spot Today – Limited Seats Available!

📲 WhatsApp Now: https://wa.me/c/917032290546

🔗 Visit: https://www.visualpath.in/online-azure-data-engineer-course.html

📖 Blog: https://visualpathblogs.com/category/azure-data-engineering/

#visualpathedu#Azure#AzureDataEngineer#MicrosoftAzure#AzureCloud#DataEngineering#CloudComputing#AzureTraining#AzureCertification#BigData#ETL#SQL#PowerBI#Databricks#AzureDataFactory#DataAnalytics#CloudEngineer#MachineLearning#AI#BusinessIntelligence#Snowflake#AzureDataScience
