#Cloud native

Orchestrating Modular AI Workflows with Cloud-Native Foundations

Microservices and serverless functions act as the core engines of AI modularity, enabling loosely coupled, domain-specific intelligence. Event-driven triggers initiate real-time workflows, while AI Fabric connects distributed agents, models, and datasets across platforms. Combined with DevSecOps automation and continuous optimization, enterprises unlock adaptive intelligence that scales horizontally and evolves in sync with dynamic business conditions.
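As a rough illustration of how event-driven triggers decouple components, the sketch below shows a minimal in-process event bus in Python. `EventBus`, the `order.created` event, and both handlers are hypothetical names chosen for this example. A production system would typically use a managed broker or event service rather than an in-memory dictionary.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List

Handler = Callable[[Dict[str, Any]], Any]


class EventBus:
    """Hypothetical in-process event bus: handlers subscribe to an event type
    and run whenever an event of that type is published."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: Dict[str, Any]) -> List[Any]:
        # Every subscribed handler runs independently; results are collected
        # so the caller can see what the event triggered.
        return [handler(payload) for handler in self._handlers[event_type]]


bus = EventBus()
# Two loosely coupled "agents" react to the same event without knowing about each other.
bus.subscribe("order.created", lambda e: f"score-fraud:{e['order_id']}")
bus.subscribe("order.created", lambda e: f"recommend:{e['customer']}")

results = bus.publish("order.created", {"order_id": 42, "customer": "c-7"})
print(results)  # ['score-fraud:42', 'recommend:c-7']
```

Adding a third agent means adding one more `subscribe` call; the publisher does not change.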

Discover, in this blog, the core principles of cloud-native architecture that drive scalable, resilient, and efficient apps. Build modern cloud solutions today. https://www.rishabhsoft.com/blog/cloud-native-architecture-principles-and-best-practices

How Modern Data Engineering Powers Scalable, Real-Time Decision-Making

In today's technology-driven world, businesses are no longer content to analyze only historical data. E-commerce websites serve real-time recommendations, and banks verify transactions in under a second. What made this shift possible? Modern data engineering, which combines software development, data architecture, and scalable cloud infrastructure, empowers organizations to convert massive, fast-moving data streams into real-time insights.

From Batch to Real-Time: A Shift in Data Mindset

Traditional data systems relied on batch processing, in which data was collected and analyzed at fixed intervals. In a fast-paced world, this meant insights were often outdated, and less reliable, by the time they arrived. Streaming technologies such as Apache Kafka, Apache Flink, and Spark Streaming now let engineers build pipelines that ingest, clean, and deliver insights within seconds. This shift away from periodic batch jobs is crucial for fast-moving companies in logistics, e-commerce, and fintech.
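To make the batch-versus-streaming contrast concrete, here is a minimal Python sketch of a tumbling-window aggregation that emits each window's total as soon as that window closes, instead of waiting for a scheduled batch job. The function name and the `(timestamp, amount)` event format are assumptions for illustration; this is not the API of Kafka, Flink, or Spark Streaming.

```python
from typing import Iterable, Iterator, Optional, Tuple

Event = Tuple[int, float]  # (timestamp in seconds, amount); hypothetical format


def tumbling_window_sums(events: Iterable[Event], window_s: int) -> Iterator[Tuple[int, float]]:
    """Yield (window_start, total) as soon as each fixed-size window closes.

    Assumes events arrive in timestamp order; real stream processors such as
    Flink handle out-of-order data with watermarks, which this sketch omits.
    """
    current_start: Optional[int] = None
    total = 0.0
    for ts, amount in events:
        start = ts - ts % window_s  # align the timestamp to its window
        if current_start is None:
            current_start = start
        elif start != current_start:
            # A newer window has begun, so the previous one is complete.
            yield current_start, total
            current_start, total = start, 0.0
        total += amount
    if current_start is not None:
        yield current_start, total  # flush the last open window


stream = [(0, 10.0), (3, 5.0), (7, 2.5), (12, 1.0)]
print(list(tumbling_window_sums(stream, window_s=5)))
# [(0, 15.0), (5, 2.5), (10, 1.0)]
```

Because the function is a generator, downstream consumers receive the first window's result before later events have even arrived, which is the essential difference from a batch job.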

Building Resilient, Scalable Data Pipelines

Modern data engineering focuses on building thoroughly monitored, fault-tolerant data pipelines. These pipelines scale to higher data volumes and are built to tolerate schema changes, data anomalies, and unexpected traffic spikes. Cloud-native tools such as AWS Glue and Google Cloud Dataflow, together with Snowflake Data Sharing, make it easier to share and integrate data at scale across platforms. These tools make it possible to create unified data flows that feed dashboards, alerts, and machine learning models in near real time.
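One way to picture this fault tolerance is a validation step that routes bad records to a dead-letter list instead of failing the whole run, while tolerating fields that newer producers add. The schema, field names, and `process_batch` function below are hypothetical; this is a concept sketch in Python, not how AWS Glue or Dataflow implement it.

```python
from typing import Any, Dict, List, Tuple

Record = Dict[str, Any]

# Hypothetical schema for illustration.
REQUIRED: Dict[str, Any] = {"id": int, "amount": (int, float)}
OPTIONAL_DEFAULTS: Record = {"currency": "USD"}  # newer field, defaulted for old producers


def process_batch(records: List[Record]) -> Tuple[List[Record], List[Tuple[Record, str]]]:
    """Split records into clean output and a dead-letter list instead of failing the run."""
    clean: List[Record] = []
    dead_letter: List[Tuple[Record, str]] = []
    for rec in records:
        problem = None
        for field, typ in REQUIRED.items():
            if field not in rec:
                problem = f"missing {field}"
            elif not isinstance(rec[field], typ):
                problem = f"bad type for {field}"
            if problem:
                break
        if problem:
            dead_letter.append((rec, problem))  # keep the bad record for later inspection
            continue
        # Fill defaults for fields older producers don't send; unknown extra fields pass through.
        clean.append({**OPTIONAL_DEFAULTS, **rec})
    return clean, dead_letter


records = [
    {"id": 1, "amount": 9.5},
    {"id": 2, "amount": 3, "currency": "EUR", "channel": "web"},  # extra field tolerated
    {"id": 3},                                                    # missing amount
    {"id": "4", "amount": 1.0},                                   # wrong type
]
clean, dead = process_batch(records)
print(len(clean), [reason for _, reason in dead])
# 2 ['missing amount', 'bad type for id']
```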

Role of Data Engineering in Real-Time Analytics

This is where data engineering services make a difference. Companies that provide these services bring considerable technical expertise and can help an organization design modern data architectures aligned with its business objectives. From building real-time ETL pipelines to managing infrastructure, these services help keep your data stack efficient, flexible, and cost-effective. Companies can then focus on new ideas and creativity rather than on the endless cycle of routine data management.

Data Quality, Observability, and Trust

Real-time decision-making is only as good as the data behind it. Modern data engineering integrates practices such as data observability, automated anomaly detection, and lineage tracking, which keep the data in these systems clean, consistent, and traceable. With tools like Great Expectations, Monte Carlo, and dbt, engineers can set up proactive alerts and validations that catch issues before they affect business outcomes. This trust in data quality enables timely, precise, and reliable decisions.
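The kind of proactive validation described above can be sketched as a list of small, declarative checks run against each batch before it is consumed. `not_null`, `between`, `unique`, and `validate` are hypothetical helpers written for this example; they imitate the general style of tools like Great Expectations but are not those tools' APIs.

```python
from typing import Any, Callable, Dict, List

Row = Dict[str, Any]
Check = Callable[[List[Row]], List[str]]


def not_null(col: str) -> Check:
    def check(rows: List[Row]) -> List[str]:
        return [f"row {i}: {col} is null" for i, r in enumerate(rows) if r.get(col) is None]
    return check


def between(col: str, lo: float, hi: float) -> Check:
    def check(rows: List[Row]) -> List[str]:
        # Nulls are skipped here; not_null reports them separately.
        return [f"row {i}: {col}={r[col]} outside [{lo}, {hi}]"
                for i, r in enumerate(rows)
                if r.get(col) is not None and not lo <= r[col] <= hi]
    return check


def unique(col: str) -> Check:
    def check(rows: List[Row]) -> List[str]:
        seen, failures = set(), []
        for i, r in enumerate(rows):
            if r.get(col) in seen:
                failures.append(f"row {i}: duplicate {col}={r[col]}")
            seen.add(r.get(col))
        return failures
    return check


def validate(rows: List[Row], checks: List[Check]) -> List[str]:
    """Run every check and collect failures so an alert can fire before data is consumed."""
    return [msg for check in checks for msg in check(rows)]


rows = [
    {"txn_id": "a1", "amount": 120.0},
    {"txn_id": "a2", "amount": None},
    {"txn_id": "a1", "amount": -5.0},
]
failures = validate(rows, [not_null("amount"), between("amount", 0, 10_000), unique("txn_id")])
for msg in failures:
    print(msg)
```

An empty `failures` list would let the batch proceed; a non-empty one is what would trigger the alert.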

The Power of Cloud-Native Architecture

Modern data engineering builds on cloud platforms such as AWS, Azure, and Google Cloud. They provide serverless processing, autoscaling, real-time analytics tools, and other services that reduce infrastructure expenditure. Cloud-native services let companies process and query very large datasets quickly. For example, AWS Lambda functions can transform data as it arrives, and BigQuery can analyze it in near real time. This enables rapid innovation, faster implementation, and significant long-term cost savings.

Strategic Impact: Driving Business Growth

Real-time data systems give organizations tangible benefits such as stronger customer engagement, greater operational efficiency, better risk mitigation, and faster innovation cycles. To achieve these objectives, many enterprises now turn to data strategy consulting, which aligns their data initiatives with broader business objectives. Consulting firms help organizations define the right KPIs, select appropriate tools, and develop a long-term roadmap toward the desired level of data maturity. As a result, organizations can make smarter, faster, and more confident decisions.

Conclusion

Investing in modern data engineering is more than a technology upgrade; it is a strategic shift toward greater business agility. By adopting scalable architectures, stream processing, and expert services, organizations can realize the true value of their data. Whether the goal is tracking customer behavior, optimizing operations, or predicting trends, data engineering helps you anticipate change instead of merely reacting to it.

Top Platform Engineering Practices for Scalable Applications

In today’s digital world, scalability is a crucial attribute for any platform. As businesses grow and demands change, building a platform that can adapt and expand is essential. Platform engineering practices focus on creating robust, flexible systems that not only perform under load but also evolve with emerging technologies. Here are some top practices to ensure your applications remain scalable and efficient.

1. Adopt a Microservices Architecture

A microservices architecture breaks down a monolithic application into smaller, independent services that work together. This approach offers numerous benefits for scalability:

Independent Scaling: Each service can be scaled separately based on demand. This ensures efficient resource utilization.

Resilience: Isolated failures in one service do not bring down the entire application. This improves overall system stability.

Flexibility: Services can be developed, deployed, and maintained using different technologies. This allows teams to choose the best tools for each job.

2. Embrace Containerization and Orchestration

Containerization, with tools like Docker, has become a staple of modern platform engineering. Containers package applications with all their dependencies, which ensures consistency across development, testing, and production environments. Orchestration platforms like Kubernetes further enhance scalability by automating the deployment, scaling, and management of containerized applications. This combination allows for rapid, reliable scaling and also helps maintain high availability.
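To show what "automating scaling" means in practice, Kubernetes' Horizontal Pod Autoscaler documents its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The Python function below is a simplified sketch of that rule with a tolerance band and min/max clamping; the function name and default bounds are assumptions, and the real controller also applies stabilization windows and pod-readiness handling, which are omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA-style scaling decision.

    desired = ceil(current_replicas * current_metric / target_metric),
    skipped when the ratio is within `tolerance` of 1.0, then clamped to [min, max].
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: avoid flapping
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 pods averaging 90% CPU against a 60% target -> ceil(4 * 1.5) = 6 pods.
print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
```

The tolerance band is what keeps a service hovering near its target from being scaled up and down on every evaluation.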

3. Leverage Cloud-Native Technologies

Cloud-native solutions are designed to exploit the full benefits of cloud computing. This includes using Infrastructure as Code (IaC) tools such as Terraform or CloudFormation to automate infrastructure provisioning. Cloud platforms offer dynamic scaling, robust security features, and managed services that reduce operational complexity. Transitioning to cloud-native technologies lets teams focus on development while the underlying infrastructure adapts to workload changes.
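The central idea behind IaC tools, comparing declared desired state with what actually exists and deriving the actions needed, can be sketched in a few lines of Python. The `plan` function, the state format, and the resource names are hypothetical; this illustrates the concept and is not how Terraform or CloudFormation compute changes internally.

```python
from typing import Dict, List, Tuple

State = Dict[str, Dict[str, str]]  # resource name -> attributes (hypothetical format)


def plan(desired: State, actual: State) -> List[Tuple[str, str]]:
    """Compare declared infrastructure with what exists and list the actions needed."""
    actions = []
    for name in sorted(desired.keys() | actual.keys()):
        if name not in actual:
            actions.append(("create", name))
        elif name not in desired:
            actions.append(("delete", name))
        elif desired[name] != actual[name]:
            actions.append(("update", name))
    return actions


desired = {"vm-web": {"size": "large"}, "bucket-logs": {"region": "eu"}}
actual = {"vm-web": {"size": "small"}, "vm-old": {"size": "small"}}
print(plan(desired, actual))
# [('create', 'bucket-logs'), ('delete', 'vm-old'), ('update', 'vm-web')]
```

Once the actions are applied, running `plan` again against the new state returns an empty list, which is the idempotence that makes declarative provisioning safe to repeat.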

4. Implement Continuous Integration/Continuous Deployment (CI/CD)

A robust CI/CD pipeline is essential for maintaining a scalable platform. Continuous integration ensures that new code changes are automatically tested and merged. This reduces the risk of integration issues. Continuous deployment, on the other hand, enables rapid, reliable releases of new features and improvements. By automating testing and deployment processes, organizations can quickly iterate on their products. They can also respond to user demands without sacrificing quality or stability.

5. Monitor, Analyze, and Optimize

Scalability isn’t a one-time setup—it requires continuous monitoring and optimization. Implementing comprehensive monitoring tools and logging frameworks is crucial for:

tracking application performance,

spotting bottlenecks, and

identifying potential failures.

Metrics such as response times, error rates, and resource utilization provide insights that drive informed decisions on scaling strategies. Regular performance reviews and proactive adjustments ensure that the platform remains robust under varying loads.
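The metrics named above can be derived directly from request logs. This minimal Python sketch assumes each request is recorded as a (latency in milliseconds, HTTP status) pair, counts 5xx responses as errors, and uses nearest-rank percentiles; monitoring systems compute these for you, often with different percentile interpolation.

```python
import math
from typing import Dict, List, Tuple

Request = Tuple[float, int]  # (latency in ms, HTTP status code); assumed log format


def percentile(values: List[float], p: float) -> float:
    """Nearest-rank percentile: smallest value with at least p% of samples at or below it."""
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize(requests: List[Request]) -> Dict[str, float]:
    """Latency percentiles and error rate for a non-empty list of requests."""
    latencies = [lat for lat, _ in requests]
    errors = sum(1 for _, status in requests if status >= 500)
    return {
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
        "error_rate": errors / len(requests),
    }


requests = [(float(ms), 200) for ms in range(10, 101, 10)]  # 10 requests, 10 ms to 100 ms
requests[3] = (40.0, 503)
requests[9] = (100.0, 500)
summary = summarize(requests)
print(summary)  # {'p50_ms': 50.0, 'p95_ms': 100.0, 'error_rate': 0.2}
```

Tail percentiles such as p95 tend to reveal bottlenecks earlier than averages, because a few slow requests barely move the mean.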

6. Focus on Security and Compliance

As platforms scale, security and compliance become increasingly complex yet critical. Integrating security practices into every stage of the development and deployment process—often referred to as DevSecOps—helps identify and mitigate vulnerabilities early. Automated security testing and regular audits ensure that the platform not only scales efficiently but also maintains data integrity and compliance with industry standards.

Scalable applications require thoughtful platform engineering practices that balance flexibility, efficiency, and security. By adopting a microservices architecture, embracing containerization and cloud-native technologies, and implementing continuous integration and monitoring, organizations can build platforms capable of handling growing user demands. These practices streamline development and deployment, and they help ensure that your applications are prepared for the future.

Read more about how platform engineering powers efficiency and enhances business value.

#cicd#cloud native#software development#software services#software engineering#it technology#future it technologies

Simplifying Hybrid Cloud Management with Red Hat Advanced Cluster Management for Kubernetes

In today's dynamic IT landscape, organizations are increasingly adopting hybrid and multi-cloud strategies to achieve greater flexibility, scalability, and resilience. However, managing Kubernetes clusters across diverse environments—on-premises data centers, public clouds, and edge locations—presents significant challenges. This complexity can lead to operational overhead, security vulnerabilities, and inconsistent application deployments. This is where Red Hat Advanced Cluster Management for Kubernetes (ACM) steps in, offering a centralized and streamlined approach to hybrid cloud management.

The Challenges of Hybrid Cloud Kubernetes Management:

Managing multiple Kubernetes clusters in a hybrid cloud environment can quickly become overwhelming. Some key challenges include:

Inconsistent Configurations: Maintaining consistent configurations across different clusters can be difficult, leading to inconsistencies in application deployments and potential security risks.

Visibility and Control: Gaining a unified view of all clusters and their health can be challenging, hindering effective monitoring and troubleshooting.

Security and Compliance: Enforcing consistent security policies and ensuring compliance across all environments can be complex and time-consuming.

Application Lifecycle Management: Deploying, updating, and managing applications across multiple clusters can be cumbersome and error-prone.

How Red Hat Advanced Cluster Management Simplifies Hybrid Cloud Management:

Red Hat ACM provides a comprehensive solution for managing the entire lifecycle of Kubernetes clusters across hybrid and multi-cloud environments. It offers several key features that simplify management and improve operational efficiency:

Centralized Management: ACM provides a single console for managing all your Kubernetes clusters, regardless of where they are deployed. This centralized view simplifies operations and provides consistent control.

Policy-Based Governance: ACM allows you to define and enforce consistent policies across all your clusters, ensuring compliance with security and regulatory requirements.

Application Lifecycle Management: ACM simplifies the deployment, updating, and management of applications across multiple clusters, streamlining the application lifecycle.

Cluster Lifecycle Management: ACM streamlines the creation, scaling, and deletion of Kubernetes clusters, simplifying infrastructure management.

Observability and Monitoring: ACM provides comprehensive monitoring and observability capabilities, giving you insights into the health and performance of your clusters and applications.

GitOps Integration: ACM integrates with GitOps workflows, enabling declarative infrastructure and application management for improved automation and consistency.
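The policy-based governance idea can be sketched as evaluating a set of rules against an inventory of clusters and reporting violations per cluster. The policy names, cluster fields, and `compliance_report` function below are hypothetical. Red Hat ACM defines policies declaratively as Kubernetes resources rather than as Python code, so this only illustrates the evaluation concept.

```python
from typing import Any, Callable, Dict, List

Cluster = Dict[str, Any]
Policy = Callable[[Cluster], bool]

# Hypothetical policies for illustration only.
POLICIES: Dict[str, Policy] = {
    "min-k8s-version-1.27": lambda c: tuple(map(int, c["version"].split("."))) >= (1, 27),
    "audit-logging-enabled": lambda c: c.get("audit_logging", False),
    # Unknown settings are treated as non-compliant (conservative default).
    "no-privileged-pods": lambda c: not c.get("allows_privileged", True),
}


def compliance_report(clusters: List[Cluster]) -> Dict[str, List[str]]:
    """Evaluate every policy against every cluster and list the violations per cluster."""
    return {
        c["name"]: [name for name, check in POLICIES.items() if not check(c)]
        for c in clusters
    }


clusters = [
    {"name": "onprem-dc1", "version": "1.28", "audit_logging": True, "allows_privileged": False},
    {"name": "aws-east", "version": "1.26", "audit_logging": True, "allows_privileged": False},
    {"name": "edge-store-12", "version": "1.27", "audit_logging": False},
]
report = compliance_report(clusters)
for cluster, violations in report.items():
    print(cluster, violations or "compliant")
```

Because the same rule set is applied to on-premises, cloud, and edge clusters alike, drift in any one environment shows up in a single report.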

Key Benefits of Using Red Hat Advanced Cluster Management:

Reduced Operational Overhead: By centralizing management and automating key tasks, ACM reduces the operational burden on IT teams.

Improved Security and Compliance: Policy-based governance ensures consistent security and compliance across all environments.

Faster Application Deployments: Streamlined application lifecycle management accelerates time to market for new applications.

Increased Agility and Flexibility: ACM enables organizations to quickly adapt to changing business needs by easily managing and scaling their Kubernetes infrastructure.

Enhanced Visibility and Control: Centralized monitoring and observability provide a clear view of the health and performance of all clusters and applications.

Use Cases for Red Hat Advanced Cluster Management:

Hybrid Cloud Deployments: Managing Kubernetes clusters across on-premises data centers and public clouds.

Multi-Cloud Deployments: Managing Kubernetes clusters across multiple public cloud providers.

Edge Computing: Managing Kubernetes clusters deployed at the edge of the network.

DevOps and CI/CD: Automating the deployment and management of applications in a CI/CD pipeline.

Conclusion:

Red Hat Advanced Cluster Management for Kubernetes is a powerful tool for simplifying the complexities of hybrid cloud Kubernetes management. By providing centralized management, policy-based governance, and automated workflows, ACM empowers organizations to effectively manage their Kubernetes infrastructure and accelerate their cloud-native journey. If you're struggling to manage your Kubernetes clusters across multiple environments, Red Hat ACM is a solution worth exploring.

Ready to simplify your hybrid cloud Kubernetes management?

Contact Hawkstack Technologies today to learn more about Red Hat Advanced Cluster Management and how we can help you implement it in your environment. www.hawkstack.com

Moving From Cloud-Based to Cloud-Native: Unlocking The Full Potential Of Cloud Computing

In today’s fast-paced digital landscape, businesses are no longer satisfied with simply “being in the cloud.” While cloud-based applications (those lifted and shifted to the cloud from traditional environments) have brought many benefits, organizations increasingly look to go further by adopting cloud-native architectures. This shift enables them to truly harness the power of the cloud: scalability, resilience, flexibility, and faster innovation.

In this blog, we’ll explore the journey from cloud-based to cloud-native, the key differences, and the benefits this transformation brings to your business.

What is Cloud-Based vs. Cloud-Native?

Before diving into the transition, it’s important to distinguish between cloud-based and cloud-native approaches.

Cloud-Based: This refers to applications that were traditionally built for on-premises environments but have been moved to the cloud (e.g., via lift-and-shift) without significant changes to their architecture. These applications may run on virtual machines (VMs) in cloud environments like AWS, Azure, or Google Cloud but don't leverage the full potential of cloud services.

Cloud-Native: Cloud-native refers to designing, building, and running applications that fully exploit the advantages of cloud computing. These applications are often based on microservices architecture, are containerized, and leverage Kubernetes, serverless computing, and other cloud-native technologies. They are built to scale automatically, recover from failures, and be rapidly updated.

Why Move from Cloud-Based to Cloud-Native?

While cloud-based applications offer advantages such as reduced capital expenses and easier scaling compared to on-premises systems, they often fall short in flexibility, efficiency, and speed. Here's why moving to cloud-native is worth considering:

Scalability & Elasticity: Cloud-native applications are inherently scalable. By using microservices and containerization, you can scale individual components of your application independently based on demand, allowing for more granular control and cost optimization.

Faster Development & Deployment: Cloud-native applications embrace DevOps and CI/CD (Continuous Integration/Continuous Deployment) practices. This means faster release cycles, enabling your teams to innovate quickly, respond to market changes, and reduce time to market for new features.

Resilience & Fault Tolerance: Cloud-native applications are designed to be resilient. Using principles like microservices, your application can continue running even if one component fails. Built-in fault tolerance ensures minimal downtime, enhancing user experience.

Cost Optimization: Since cloud-native applications can be dynamically scaled, you only pay for the resources you use. This leads to more efficient resource utilization and reduces operational costs compared to running large monolithic applications on dedicated infrastructure.

Cloud Provider Independence: Cloud-native applications are often built using open standards (such as Kubernetes), making it easier to deploy them across multiple cloud providers (AWS, Azure, GCP). This flexibility avoids vendor lock-in and opens options for multi-cloud strategies.
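One common way to achieve the isolation described under Resilience & Fault Tolerance is the circuit breaker pattern, which is not named in the original text. The Python sketch below is a minimal version; the class name, thresholds, injectable clock, and fallback behaviour are illustrative assumptions. Service meshes and resilience libraries provide hardened implementations.

```python
import time
from typing import Any, Callable, Optional


class CircuitBreaker:
    """After `max_failures` consecutive failures the circuit opens and calls fail fast
    until `reset_after` seconds pass; one trial call is then allowed (half-open),
    and a success closes the circuit again."""

    def __init__(self, max_failures: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], Any], fallback: Any = None) -> Any:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                return fallback  # open: skip the failing dependency entirely
            self.opened_at = None  # half-open: allow one trial call
            self.failures = self.max_failures - 1  # a single further failure re-opens
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            return fallback
        self.failures = 0  # success closes the circuit
        return result


now = [0.0]  # fake clock so the example is deterministic
breaker = CircuitBreaker(max_failures=2, reset_after=10, clock=lambda: now[0])
attempts = []


def recommendations():
    attempts.append(1)
    raise ConnectionError("recommendation service unavailable")


results = [breaker.call(recommendations, fallback=[]) for _ in range(3)]
print(results, len(attempts))  # [[], [], []] 2  (the third call failed fast)
now[0] = 11.0  # past reset_after: the next call is a trial
print(breaker.call(lambda: ["item-1"], fallback=[]))  # ['item-1']
```

The page keeps rendering with an empty recommendation list while the dependency is down, instead of every request waiting on a failing service.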

Steps to Move from Cloud-Based to Cloud-Native

Transitioning from a cloud-based architecture to a cloud-native approach requires a deliberate and phased strategy. Here’s a roadmap to guide your journey.

1. Assess Current Architecture

Evaluate your existing cloud-based applications to understand their architecture, performance bottlenecks, and operational pain points.

Identify dependencies between services, databases, and other components that might impact the migration.

2. Define a Cloud-Native Strategy

Establish clear business and technical goals for your cloud-native transformation. This could include reducing costs, improving development speed, enhancing user experience, or increasing resilience.

3. Adopt a Microservices Architecture

Break down your monolithic application into smaller, independent microservices. Each microservice should handle a specific business function and can be deployed, scaled, and updated independently.

4. Leverage Containers & Kubernetes

Containerization (e.g., using Docker) is a key enabler of cloud-native architectures. Containers allow your applications to be packaged with all their dependencies, ensuring consistency across environments.

Adopt Kubernetes to manage and orchestrate containers, enabling automatic scaling, load balancing, and failover.

5. Embrace DevOps and CI/CD

Build CI/CD pipelines to automate testing and deployment processes, allowing for frequent, reliable updates to your applications.

6. Use Serverless Technologies (Where Appropriate)

For specific use cases like event-driven tasks, consider adopting serverless platforms (e.g., AWS Lambda, Azure Functions). This eliminates the need to manage the underlying infrastructure.

7. Ensure Observability and Monitoring

Implement cloud-native observability tools to monitor your applications and infrastructure.

8. Test and Iterate

Before a full-scale migration, test the cloud-native components in a sandbox or staging environment.

9. Migrate and Scale

Gradually move services to the cloud-native environment, starting with less critical components and scaling up as you gain confidence in the new architecture.
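The gradual migration in step 9 is often implemented with the strangler fig pattern: a router sends already-migrated paths to new services and everything else to the legacy application. The routing table, URLs, and `route` function below are hypothetical; in practice this routing usually lives in an API gateway, ingress, or load balancer configuration rather than in application code.

```python
from typing import Dict

# Hypothetical routing table: path prefixes already migrated to cloud-native services.
MIGRATED: Dict[str, str] = {
    "/notifications": "http://notifications-svc",  # low-risk component moved first
    "/catalog": "http://catalog-svc",
}
LEGACY_BACKEND = "http://legacy-monolith"


def route(path: str) -> str:
    """Send a request to the new service if its prefix has been migrated, else to the monolith.

    The longest matching prefix wins, so more specific routes can be migrated later.
    """
    matches = [p for p in MIGRATED if path == p or path.startswith(p + "/")]
    if not matches:
        return LEGACY_BACKEND
    return MIGRATED[max(matches, key=len)]


print(route("/catalog/items/42"))  # http://catalog-svc
print(route("/checkout/pay"))      # http://legacy-monolith
```

Each migration step then becomes one new entry in the table, and rolling back means removing it.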

Challenges to Expect During the Transition

As with any large-scale transformation, moving to a cloud-native architecture presents some challenges:

Complexity: Decomposing a monolithic application into microservices requires significant effort and planning.

Cultural Shift: Embracing cloud-native often involves a shift in mindset towards DevOps, continuous deployment, and collaboration between development and operations teams.

Skill Gaps: Teams may need to upskill to work with technologies like containers, Kubernetes, and CI/CD pipelines.

Security: Securing a cloud-native environment can be more complex due to the distributed nature of microservices, containers, and serverless functions.

Benefits of Cloud-Native

Once the migration to cloud-native is complete, the benefits become evident:

Greater Agility: Your teams can develop, test, and deploy new features faster, giving you an edge in the market.

Cost Efficiency: Pay only for the resources you use with automatic scaling and container orchestration.

Operational Resilience: Cloud-native applications are built with fault tolerance in mind, ensuring that failures in one area don’t impact the entire system.

Faster Time-to-Market: CI/CD pipelines, combined with microservices, allow you to release features and updates much more frequently.

Conclusion

Moving from a cloud-based to a cloud-native architecture is not just a technological shift but also a strategic one that enables your business to stay competitive in today’s dynamic digital environment. By adopting cloud-native practices such as microservices, containers, DevOps, and serverless computing, you unlock the true potential of the cloud—scalability, resilience, flexibility, and speed.

The journey may be challenging, but the long-term rewards in agility, cost savings, and innovation are worth it. If your organization is considering the leap to cloud-native, the key is to plan carefully, iterate gradually, and invest in the right skills and tools.

Covalense Digital leverages its expertise to guide and optimize this journey.

Visit: Covalense Digital LinkedIn

#Cloud native#Cloud based applications#multi cloud strategies#microservices architecture#CI/CD Pipelines#AWS#google cloud

The Serverless Development Dilemma: Local Testing in a Cloud-Native World

Picture this: You’re an AWS developer, sitting in your favorite coffee shop, sipping on your third espresso of the day. You’re working on a cutting-edge serverless application that’s going to revolutionize… well, something. But as you try to test your latest feature, you realize you’re caught in a classic “cloud” vs “localhost” conundrum. Welcome to the serverless development dilemma! The…

#AWS DevOps#AWS Lambda#CI/CD#Cloud Native#Developer Productivity#GitLab CI#Microservices#serverless#Terraform State

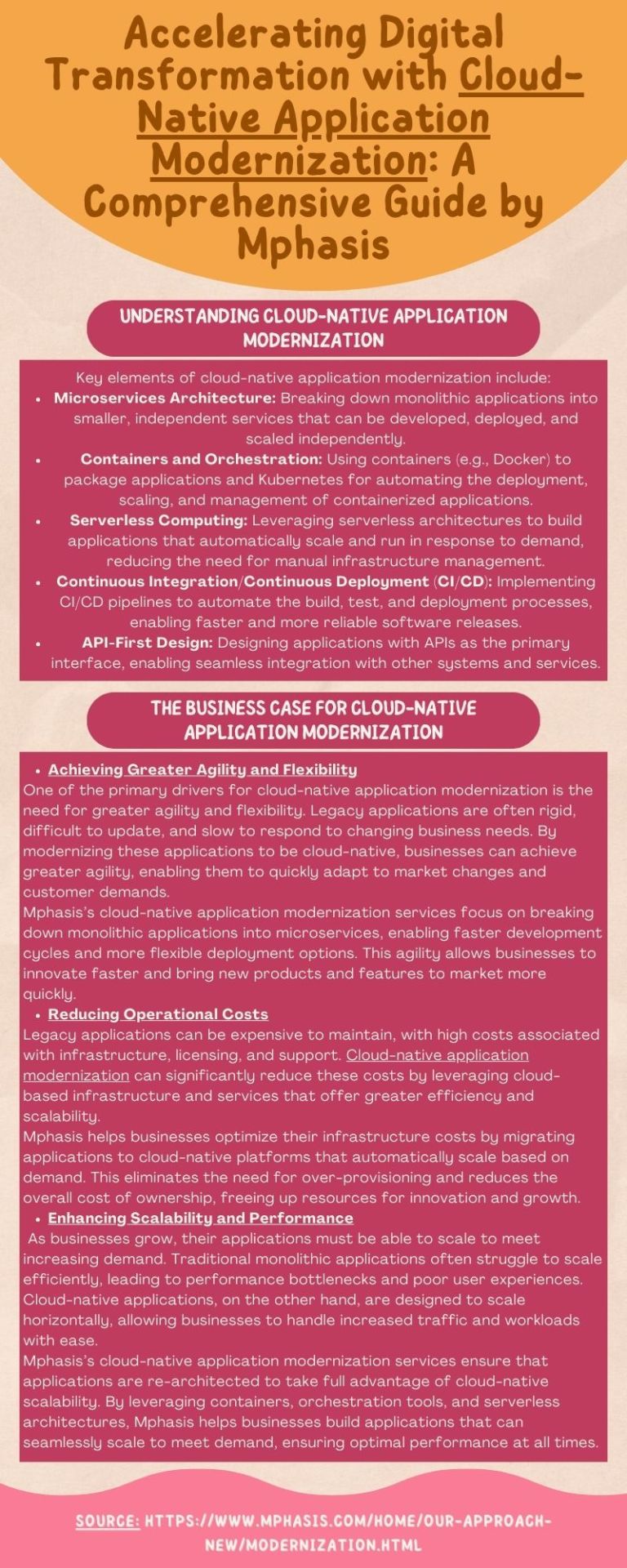

Mphasis, a global leader in digital transformation, offers comprehensive cloud-native application modernization services that help businesses transform their legacy systems into modern, cloud-native applications. By adopting cloud-native principles, businesses can accelerate their digital transformation journey, reduce operational costs, and deliver superior customer experiences.

Microsoft Cloud PKI for Intune SCEP URL

Earlier this year, Microsoft announced Cloud PKI for Intune, a cloud service for issuing and managing digital certificates for Intune-managed endpoints. With Cloud PKI for Intune, administrators no longer need to deploy on-premises infrastructure to use certificates for user and device-based authentication for workloads such as Wi-Fi and VPN. Cloud PKI for Intune can be used standalone (cloud…

#AD CS#ADCS#authentication#Azure#certificate#cloud#cloud native#Cloud PKI#Cloud PKI for Intune#Entra ID#InTune#Microsoft#PKI#SCEP#SCEP URL#Simple Certificate Enrollment Protocol#Wi-Fi#Windows

Cloud Native Architecture book

It's a busy time for books at the moment. I am excited and pleased to hear that Fernando Harris' first book project has been published. It can be found on amazon.com and amazon.co.uk, among other sites. Having been fortunate enough to be a reviewer of the book, I can say that what makes this book different from others that examine cloud-native architecture is its holistic approach to the challenge.…

As businesses evolve, traditional monolithic applications become extremely difficult to scale, maintain, and deploy.

Changing even a small part of the application demands rebuilding and redeploying the entire application, resulting in longer development cycles, operational bottlenecks, and system downtime.

By embracing a modern microservices architecture, you can break free from these constraints and unlock new possibilities. By splitting the application into small, independent services that communicate via APIs, you can:

Scale individual services independently

Deploy new features & updates quickly

Isolate bugs and errors effectively

Improve team productivity

#CloudComputing #MicroservicesAdvantages #ScalabilityInTheCloud #EfficiencyAndFlexibility #CostEffectiveSolutions #CloudNativeArchitecture #DigitalTransformation #InnovationInTechnology #ModernizingInfrastructure #BusinessAgility #benefitsofmicroservices

#microservices#benefits of microservices#cloud native#cloud native technologies#containers and kubernetes

Cloud Native is an approach to software development that applies cloud computing principles to build and operate scalable applications. It focuses on deploying apps in flexible environments such as public, private, and hybrid clouds. Cloud Native applications are designed for efficiency, resilience, and easy scaling.

#cloud engineering services#cloud engineering#internet of things#cloud platform#software for services#cloud services#cloud app#cloud application#cloud native#ascendion#Nitor infotech

Cloud native deals with building, deploying, and managing modern applications in cloud computing environments to fully benefit from the scalability, flexibility, and efficiency provided by the cloud.

#Cloud Native Applications Market#Cloud Native Applications#Cloud Native#Native Applications Market#Applications Market
