#DataLabelling


What is the difference between tagging and annotation?

Tagging and annotation are both ways of labeling data, but they have different goals and work at different levels of detail. Companies like EnFuse Solutions specialize in document tagging and annotation at scale — delivering 99.1% accuracy across millions of documents annually while ensuring compliance with regulations like GDPR, HIPAA, and CCPA. Get in touch today!

#TaggingServices#DocumentTagging#TaggingAndAnnotation#DocumentTaggingAndAnnotation#DocumentTaggingServices#AnnotationRedactionTagging#AnnotationServices#EntityTagging#EntityAnnotation#DocumentClassification#OrientedBoundingBox#DataLabelling#DataAugmentation#ClaimForms#ObjectDetection#ExceptionHandling#DocumentLabeling#LabelingServices#TaggingServicesIndia#EnFuseSolutions


What Is Document Tagging & Annotation? Why It’s Critical for AI Pipelines?

Document tagging and annotation are not just a step in the AI pipeline — they are the foundation upon which the entire pipeline’s success rests. EnFuse Solutions combines human expertise with AI‑assisted platforms to deliver enterprise‑grade data labeling, document tagging, and annotation services. Scale smarter. Tag faster. Deploy with confidence. Get in touch with EnFuse Solutions today!

#TaggingServices#DocumentTagging#TaggingAndAnnotation#DocumentTaggingAndAnnotation#DocumentTaggingServices#AnnotationServices#EntityTagging#EntityAnnotation#DocumentClassification#OrientedBoundingBox#DataLabelling#DataAugmentation#ClaimForms#ObjectDetection#ExceptionHandling#DocumentLabeling#LabelingServices#TaggingServicesIndia#EnFuseSolutions



Global Data Annotation and Labelling Market: A Comprehensive Analysis

Introduction

The Global Data Annotation and Labelling Market is poised for substantial growth, driven by the increasing adoption of AI and machine learning technologies across diverse industries. Data annotation is critical for training AI models, enabling applications such as natural language processing, image recognition, and sentiment analysis. This article delves into the market dynamics, growth factors, regional insights, and competitive landscape of the global data annotation and labelling market.

Market Overview

The Global Data Annotation and Labelling Market is projected to reach USD 2,072.2 million in 2024 and is expected to grow to USD 29,584.2 million by 2033, a CAGR of 34.4%. This growth is fueled by the demand for high-quality annotated data to enhance AI model accuracy across various applications.
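As a quick arithmetic check, the growth rate implied by the two quoted market sizes can be recomputed. This sketch assumes the report compounds over nine annual periods (2024 to 2033); the two values are the ones quoted in the report.

```python
# Market sizes quoted in the report, in USD million.
start_value = 2_072.2   # 2024
end_value = 29_584.2    # 2033
years = 2033 - 2024     # assumed: 9 compounding periods

# Compound annual growth rate: (end / start) ** (1 / years) - 1
cagr = (end_value / start_value) ** (1 / years) - 1
print(f"Implied CAGR: {cagr:.1%}")  # Implied CAGR: 34.4%
```

The result matches the stated 34.4%, so the three figures are at least internally consistent.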

Data Annotation Process

Data annotation involves labeling data for AI model training, facilitating tasks such as image and text recognition, sentiment analysis, and more. The market is segmented by component, data type, deployment type, organization size, annotation type, vertical, and application.

Request a free PDF sample copy of this report: https://dimensionmarketresearch.com/report/data-annotation-and-labelling-market/request-sample

Key Takeaways

Key Factors Driving Market Growth

Targeted Audience

Market Dynamics

Trends Driving Market Growth

AI Integration

The integration of AI in data annotation tools is revolutionizing the market by automating and improving annotation processes. AI-driven tools enhance accuracy and efficiency, speeding up the data annotation lifecycle.

Cloud Adoption

Cloud-based data annotation solutions are gaining traction due to their scalability, flexibility, and cost-effectiveness. These solutions enable organizations to manage large datasets efficiently and facilitate remote collaboration on annotation projects.

Growth Drivers

Proliferation of Big Data

The exponential growth of big data across industries necessitates advanced data annotation solutions to derive meaningful insights and train AI models effectively. Industries such as healthcare, finance, and retail are leveraging annotated data for operational efficiency and innovation.

Advancements in AI and ML

Technological advancements in AI and machine learning are driving the demand for annotated data. Improved AI models require high-quality annotated datasets for training, enhancing their accuracy and performance in real-world applications.

Growth Opportunities

Healthcare Sector

The healthcare industry is a key growth opportunity for data annotation services, particularly in medical imaging, patient data analysis, and drug discovery. Accurate annotations are crucial for developing AI-driven diagnostic tools and personalized medicine solutions.

Automotive Industry

In the automotive sector, annotated data is essential for developing autonomous driving technologies. Applications such as object detection, traffic analysis, and real-time decision-making rely on annotated datasets to ensure safety and efficiency.

Market Restraints

Data Privacy Concerns

Concerns over data privacy and security pose challenges to the adoption of cloud-based annotation solutions. Industries dealing with sensitive data, such as healthcare and finance, often prefer on-premises solutions to mitigate privacy risks.

High Costs

The high costs associated with manual data annotation methods hinder market growth, particularly for large-scale projects. Although AI-powered solutions offer cost efficiencies, initial implementation costs remain a barrier for some organizations.

Buy this exclusive report here: https://dimensionmarketresearch.com/checkout/data-annotation-and-labelling-market/

Regional Analysis

North America

North America dominates the global data annotation and labelling market, accounting for 48.1% of market share in 2024. The region's leadership is attributed to its robust technological infrastructure, significant investments in AI research, and widespread adoption of digital technologies across industries.

Europe

Europe is another key region in the data annotation market, driven by advancements in AI technologies and stringent data protection regulations. Countries like Germany, the UK, and France are at the forefront of AI innovation, fostering market growth.

Asia-Pacific

The Asia-Pacific region is witnessing rapid growth in the data annotation market, fueled by expanding IT sectors in countries like China, Japan, and India. Rising investments in AI and machine learning technologies contribute to the region's market expansion.

Recent Developments

Competitive Landscape

The global data annotation and labelling market is fragmented, with key players including Appen, Lionbridge, and Scale AI leading the market. These companies offer a wide range of annotation services across text, image, video, and audio data, catering to diverse industry needs.

About Us

Dimension Market Research (DMR) is a one-stop solution for all your research needs. DMR is an India- and US-based company headquartered in New York, USA, with offices in the Asia-Pacific region. It is designed to provide the most relevant syndicated, customized, and tailor-made market research to suit your specific business needs.

#DataAnnotation#AI#MachineLearning#DataLabelling#ArtificialIntelligence#BigData#TechTrends#MarketResearch#DataScience#DigitalTransformation#IndustryInsights#BusinessIntelligence


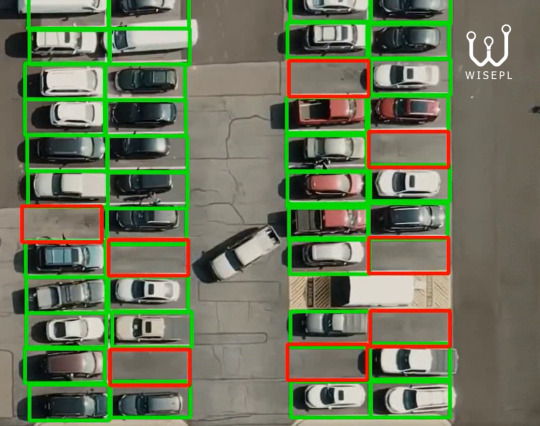

Revolutionize Smart Parking with Precision-Labeled Data

Is your AI model stuck in traffic?

Let Wisepl clear the path. We provide pixel-perfect data annotation for car parking detection systems - from bounding empty slots to tracking vehicle movement in real time.

Whether you're building:

Smart city parking analytics

Autonomous valet solutions

Real-time congestion monitoring

We fuel your model with high-quality, context-aware labeled data.

Our annotation experts don’t just draw boxes—they understand what your algorithm needs.

Multiple vehicles in cluttered lots?

Shadow & lighting issues?

Confusing overlaps?

We handle it all, so your system doesn’t miss a slot.

Bounding boxes & segmentation for parking spaces

Vehicle detection across day/night conditions

Occlusion-aware labeling

Fast turnaround & QA-checked results
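One common way labeled slot boxes and vehicle detections come together is an overlap test. The sketch below is a hypothetical illustration, not Wisepl's pipeline: it assumes slots are annotated as axis-aligned boxes, and the slot IDs and the 0.3 intersection-over-union (IoU) threshold are made up for the example.

```python
from dataclasses import dataclass

@dataclass
class Box:
    """Axis-aligned bounding box in pixel coordinates."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        # Negative width or height (no overlap) counts as zero area.
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)

def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes (0.0 when they do not overlap)."""
    inter = Box(max(a.x_min, b.x_min), max(a.y_min, b.y_min),
                min(a.x_max, b.x_max), min(a.y_max, b.y_max)).area()
    union = a.area() + b.area() - inter
    return inter / union if union > 0 else 0.0

def occupied_slots(slots: dict[str, Box], vehicles: list[Box], threshold: float = 0.3) -> set[str]:
    """A labeled slot counts as occupied if any vehicle box overlaps it at or above the threshold."""
    return {slot_id for slot_id, slot in slots.items()
            if any(iou(slot, v) >= threshold for v in vehicles)}

# Hypothetical annotated slots and one detected vehicle.
slots = {"A1": Box(0, 0, 100, 200), "A2": Box(110, 0, 210, 200)}
vehicles = [Box(5, 10, 95, 190)]
print(occupied_slots(slots, vehicles))  # {'A1'}
```

In practice the threshold is tuned against labeled examples of partially visible or badly parked cars, which is where occlusion-aware labels matter.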

Ready to park your AI worries with us? Get a free annotation sample today. Let's talk: https://www.wisepl.com

#DataAnnotation#CarParkingAI#SmartParking#MachineLearningData#ComputerVision#ParkingDetection#AITrainingData#AutonomousParking#ImageLabeling#AIInfrastructure#WiseplAI#AnnotationExperts#AIReadyData#SmartCitySolutions#Wisepl#DataLabeling


Engineering Words: A Practical Guide to Optimizing LLM Architecture for Production

Shipping a large language model into production feels a bit like launching a rocket: one misconfigured thruster and the whole mission veers off course. In the AI world, that thruster is your model’s architecture. The glamour of billion-parameter scale often overshadows the gritty optimization work required to translate research prototypes into dependable user experiences. This guide demystifies that process by spotlighting practical levers—tokenization strategy, attention head tuning, activation placement, and inference caching—that directly influence cost, latency, and quality.

Tokenization is the foundation. Many teams simply inherit the default byte-pair encoding from open-source checkpoints, unaware that a domain-specific vocabulary can shrink sequence length by as much as 30 percent. Fewer tokens cascade into cheaper attention, lower GPU memory use, and reduced hosting cost. For legal or medical domains, creating a bespoke tokenizer is often cheaper than scaling compute, although changing the vocabulary means retraining or re-initializing the embedding layer.
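The mechanism behind that saving can be shown with a toy byte-pair-encoding trainer: merges learned on domain text fuse frequent domain terms into single tokens. This sketch works on characters rather than bytes, omits end-of-word markers, and uses a hypothetical clinical corpus, so it illustrates the idea only and says nothing about the 30 percent figure.

```python
from collections import Counter

def merge_pair(symbols, pair):
    """Fuse every occurrence of the adjacent `pair` inside one word's symbol tuple."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)

def train_bpe(corpus, num_merges):
    """Learn merge rules by repeatedly fusing the most frequent adjacent symbol pair."""
    # Start from single characters of whitespace-split words (toy simplification).
    words = Counter(tuple(word) for text in corpus for word in text.split())
    merges = []
    for _ in range(num_merges):
        pairs = Counter()
        for symbols, freq in words.items():
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += freq
        if not pairs:
            break  # every word is already a single symbol
        best = max(pairs, key=pairs.get)
        merges.append(best)
        merged = Counter()
        for symbols, freq in words.items():
            merged[merge_pair(symbols, best)] += freq
        words = merged
    return merges

def tokenize(text, merges):
    """Apply learned merges, in training order, to each whitespace-separated word."""
    tokens = []
    for word in text.split():
        symbols = tuple(word)
        for pair in merges:
            symbols = merge_pair(symbols, pair)
        tokens.extend(symbols)
    return tokens

# Hypothetical clinical-notes corpus standing in for real domain text.
domain_corpus = ["acute myocardial infarction", "myocardial infarction ruled out"] * 50
merges = train_bpe(domain_corpus, num_merges=30)
sample = "suspected myocardial infarction"
print(len(tokenize(sample, [])), "->", len(tokenize(sample, merges)))
```

Production tokenizers work on bytes and train on far larger corpora, but the effect is the same: frequent domain terms stop being split into many pieces.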

Next comes attention head tuning. Trained transformers often contain attention heads that contribute little to the outputs that matter in production. Pruning these redundant heads, guided by saliency (importance) analysis, yields leaner models with little loss in quality. Combined with FlashAttention kernels, the trimmed model can cut inference latency substantially, in some cases by as much as half, on commodity hardware.
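Once importance scores exist, choosing which heads to drop is mostly bookkeeping. This sketch assumes per-layer, per-head scores have already been computed by some saliency method (the numbers below are invented). It keeps a global top fraction of heads and never empties a layer. The returned `{layer: [heads]}` shape mirrors the argument accepted by Hugging Face Transformers' `prune_heads` method on models that implement it; check your architecture before relying on that.

```python
def heads_to_prune(importance, keep_ratio):
    """Pick low-importance heads to remove.

    importance[layer][head] is assumed to come from an offline saliency analysis.
    Returns {layer: [head, ...]} listing the heads to drop.
    """
    ranked = sorted(
        ((score, layer, head)
         for layer, row in enumerate(importance)
         for head, score in enumerate(row)),
        reverse=True,
    )
    n_keep = max(1, round(keep_ratio * len(ranked)))
    keep = {(layer, head) for _, layer, head in ranked[:n_keep]}
    # Never empty a layer entirely: always retain each layer's most important head.
    for layer, row in enumerate(importance):
        keep.add((layer, max(range(len(row)), key=row.__getitem__)))
    prune = {}
    for layer, row in enumerate(importance):
        dropped = [head for head in range(len(row)) if (layer, head) not in keep]
        if dropped:
            prune[layer] = dropped
    return prune

scores = [[0.9, 0.05, 0.4, 0.01],
          [0.02, 0.03, 0.7, 0.6]]
print(heads_to_prune(scores, keep_ratio=0.5))  # {0: [1, 3], 1: [0, 1]}
```

After pruning, re-run the evaluation suite: saliency scores are estimates, and removing heads together can hurt more than removing them one at a time.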

Layer normalization placement is another hidden dial. Empirically, pre-normalization (for example, RMSNorm applied to each sublayer's input) stabilizes training for deep stacks, whereas post-LN (LayerNorm applied after the residual addition) can eke out marginal accuracy gains in smaller models. Striking the right balance often requires small-scale ablation studies to chart loss landscapes before launching full training runs. A single weekend of experimentation frequently saves weeks of wasted compute on suboptimal setups.
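The difference between the two placements is just the order of operations around the residual connection. This plain-Python sketch uses a toy `sublayer` (a hypothetical stand-in for attention or the MLP) and unit gains; it shows the structure, not a training recipe.

```python
import math

def rms_norm(x, gain, eps=1e-6):
    """RMSNorm: divide by the root-mean-square (no mean subtraction), then scale by a learned gain."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [g * v / rms for g, v in zip(gain, x)]

def layer_norm(x, gain, bias, eps=1e-5):
    """LayerNorm: subtract the mean, divide by the standard deviation, then scale and shift."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [g * (v - mean) / math.sqrt(var + eps) + b for g, b, v in zip(gain, bias, x)]

def pre_norm_block(x, sublayer, gain):
    """Pre-norm: normalize only the sublayer's input; the residual stream stays unnormalized."""
    return [a + b for a, b in zip(x, sublayer(rms_norm(x, gain)))]

def post_norm_block(x, sublayer, gain, bias):
    """Post-LN (original Transformer layout): add the residual first, then normalize the sum."""
    return layer_norm([a + b for a, b in zip(x, sublayer(x))], gain, bias)

def toy_sublayer(v):
    """Hypothetical stand-in for attention or the MLP."""
    return [2.0 * t for t in v]

x = [1.0, 2.0, 3.0]
print(pre_norm_block(x, toy_sublayer, [1.0] * 3))
print(post_norm_block(x, toy_sublayer, [1.0] * 3, [0.0] * 3))
```

In the pre-norm block the input passes through the residual path untouched, which is the property usually credited with more stable gradients in deep stacks; the post-LN block renormalizes the whole residual stream after every sublayer.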

Inference caching underpins snappy user interfaces. By storing the key and value tensors computed for previous tokens (the KV cache), your deployment only needs to compute attention for each new token against the cached entries instead of reprocessing the whole prefix. The speed boost is dramatic, delivering near-instant autocompletion that delights end users. Implementing cache invalidation rules, especially when mixing in retrieval-augmented context, takes discipline, but the payoff is worth every line of code.
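To make the caching idea concrete, here is a minimal single-head sketch in plain Python with no batching and arbitrary toy weights. It checks that decoding one token at a time against a key/value cache reproduces a full causal recompute, while each token's key and value are projected only once. The `truncate` method is a hypothetical stand-in for real invalidation rules.

```python
import math

def matvec(x, w):
    """Row vector x (length n) times matrix w (n rows of m columns)."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]

def attend(q, keys, values):
    """Scaled dot-product attention of one query over the given keys and values."""
    scale = math.sqrt(len(q))
    scores = [sum(a * b for a, b in zip(q, k)) / scale for k in keys]
    top = max(scores)  # subtract the max before exp for numerical stability
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    return [sum(w * v[j] for w, v in zip(weights, values)) / total
            for j in range(len(values[0]))]

class KVCache:
    """Keys and values for tokens already processed by one attention head."""

    def __init__(self):
        self.keys, self.values = [], []

    def step(self, x, wq, wk, wv):
        """Decode one new token: project it once, append its K/V, attend over the cache."""
        self.keys.append(matvec(x, wk))
        self.values.append(matvec(x, wv))
        return attend(matvec(x, wq), self.keys, self.values)

    def truncate(self, length):
        """Hypothetical invalidation hook: drop cached entries from position `length` on."""
        del self.keys[length:]
        del self.values[length:]

def full_recompute(xs, wq, wk, wv):
    """Reference without a cache: re-project the whole causal prefix at every step."""
    outputs = []
    for t in range(len(xs)):
        keys = [matvec(x, wk) for x in xs[: t + 1]]
        values = [matvec(x, wv) for x in xs[: t + 1]]
        outputs.append(attend(matvec(xs[t], wq), keys, values))
    return outputs

# Arbitrary 2-dimensional toy weights and token embeddings.
wq = [[1.0, 0.0], [0.0, 1.0]]
wk = [[0.5, 0.1], [0.2, 0.3]]
wv = [[1.0, 2.0], [0.0, 1.0]]
xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

cache = KVCache()
cached = [cache.step(x, wq, wk, wv) for x in xs]
print(cached == full_recompute(xs, wq, wk, wv))  # True
```

The cached path does one key/value projection per token, while the reference redoes all of them at every step; that quadratic-to-linear difference in projection work is where the latency win comes from.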

Finally, never ignore monitoring. Drift detection dashboards tracking perplexity, profanity spikes, or style divergence alert you when the model’s language output strays from acceptable norms. Root-cause analysis often circles back to earlier architectural tweaks, such as a truncated positional-embedding table that silently capped the context window mid-flight. Continuous visibility closes that loop before end users notice hiccups.

By systematically optimizing from tokenizer to cache, you forge a robust pipeline that scales gracefully as your company’s language demands multiply, a testament to the power of a disciplined, production-first approach to LLM architecture.
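For the perplexity signal, here is a sketch under two assumptions: the serving stack can return the natural-log probability of each scored token, and a baseline perplexity was recorded at launch. The 20 percent tolerance is a hypothetical alerting threshold, not a recommended value.

```python
import math

def perplexity(token_logprobs):
    """exp of the negative mean natural-log probability assigned to the observed tokens."""
    return math.exp(-sum(token_logprobs) / len(token_logprobs))

def drift_alert(baseline_ppl, window_logprobs, tolerance=0.2):
    """Return (alert, current_ppl); alert when the live window's perplexity exceeds
    the launch baseline by more than `tolerance` (a relative fraction)."""
    current = perplexity(window_logprobs)
    return current > baseline_ppl * (1 + tolerance), current

# Hypothetical per-token log-probabilities from a monitoring window.
window = [math.log(0.25)] * 4   # every token assigned probability 0.25
alert, ppl = drift_alert(baseline_ppl=2.0, window_logprobs=window)
print(alert, round(ppl, 6))  # True 4.0
```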

#artificialintelligence#aiapplications#aitools#datalabeling#aitrainingdata#dataannotation#ai tools#machinelearning#annotations#aiinnovation


Choosing the Right Data Labeling Partner?

Start Here.

Avoid costly mistakes and boost your AI/ML performance with this 6-point checklist:

Domain Expertise: Choose teams who understand your industry. Context = better labeling.

Quality Controls: Multi-step reviews, audits, and QA workflows = cleaner, more consistent data.

Scalability: Need to scale fast? Your partner should ramp up without slowing you down.

Tool Integration: Seamless plug-ins with tools like CVAT, Labelbox, or your own stack.

Data Security & Compliance: Your data deserves real protection at the GDPR, HIPAA, and SOC 2 level.

Transparent Pricing: No hidden charges. Just faster training, better results, and clear ROI.

✅ Springbord checks every box. 📩 Reach out to build your AI on a stronger, smarter foundation. 🔗 https://www.springbord.com/services-we-offer/data-labeling-services.html

#AI#MachineLearning#DataLabeling#ComputerVision#MLTraining#AIAccuracy#DataAnnotation#EthicalAI#AItools#Springbord


What Is Document Tagging & Annotation? Why It’s Critical for AI Pipelines?

In today’s data-driven world, document tagging and annotation are no longer “nice-to-have” extras; they are the foundation of every successful Machine Learning and Natural Language Processing (NLP) project. By converting raw text, images, audio, and video into richly labeled, machine-readable datasets, organizations unlock the power to automate decisions, protect PII (Personally Identifiable Information), accelerate innovation, and gain a competitive edge.

What is Document Tagging and Annotation?

Document Tagging – attaches predefined metadata or keywords (tags) to sections of a file, instantly improving document classification and searchability.

Annotation – adds deeper markup: identifying entities, sentiments, intent, relationships, and compliance flags (e.g., policy documents that reference regulated terms).

Overall, tagging and annotation give meaning to datasets, whether text, images, or video, so that machine learning models can interpret them.

For example, in a legal document, tagging might categorize content under “contracts,” “NDAs,” or “compliance,” while annotation could label named entities like “client,” “date,” and “jurisdiction” for AI training.
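The contrast between the two can be sketched as a data structure: tags attach to the whole document, while annotations point at character spans inside it. The class and field names here are hypothetical, and the sample sentence is invented to mirror the legal example.

```python
from dataclasses import dataclass, field

@dataclass
class EntitySpan:
    start: int  # character offset, inclusive
    end: int    # character offset, exclusive
    label: str

@dataclass
class LabeledDocument:
    text: str
    tags: set = field(default_factory=set)        # document-level tags
    entities: list = field(default_factory=list)  # span-level annotations

    def annotate(self, surface, label):
        """Record an entity span for the first occurrence of `surface` in the text."""
        start = self.text.index(surface)  # raises ValueError if the phrase is absent
        self.entities.append(EntitySpan(start, start + len(surface), label))

    def spans(self):
        """Return (surface text, label) pairs recovered from the stored offsets."""
        return [(self.text[e.start:e.end], e.label) for e in self.entities]

doc = LabeledDocument("Acme Corp signed this NDA on 1 March 2024; it is governed by the laws of Delaware.")
doc.tags |= {"contracts", "NDAs"}          # tagging: what the document is about
doc.annotate("Acme Corp", "client")        # annotation: what specific spans mean
doc.annotate("1 March 2024", "date")
doc.annotate("Delaware", "jurisdiction")
print(sorted(doc.tags), doc.spans())
```

Storing offsets rather than copied strings keeps annotations tied to their exact position, which matters when the same phrase appears more than once.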

Together, they convert unstructured or semi-structured documents into machine-readable datasets, allowing AI systems to extract insights, learn patterns, and perform intelligent tasks with higher accuracy.

Where Tagging & Annotation Sit Inside an AI Pipeline

The AI pipeline represents the comprehensive process flow for designing, building, and running machine learning models efficiently and effectively. It typically includes stages like:

Data collection

Data cleaning & preprocessing

Data labeling/annotation - the quality gate!

Model training

Evaluation & tuning

Deployment, monitoring & continuous learning

Well‑labeled data shortens every subsequent step, reducing rework and speeding time‑to‑value.

Why Document Tagging & Annotation Matter for AI Pipelines

In short, document tagging and annotation are not just a step in the AI pipeline — they are the foundation upon which the entire pipeline’s success rests.

Use Cases Across Industries

1. Healthcare

Annotating radiology reports, discharge summaries, and EMRs to train clinical NLP systems that aid in diagnosis and treatment planning.

2. Legal & Compliance

Classifying clauses in contracts and policy documents, for example to support due diligence checks.

3. Retail & eCommerce

Annotating customer reviews, product descriptions, and catalog data to drive recommendation engines and improve search relevance.

4. Banking & Finance

Labeling transaction records, credit documents, and customer communications to support fraud detection, sentiment analysis, and risk modeling.

Key Types of Document Annotations

Named Entity Recognition (NER): Recognizes and categorizes entities such as people, places, companies, and other specific terms.

Sentiment Annotation: Detects sentiment within text, playing a key role in feedback interpretation and optimizing customer interactions.

Text/Document Classification: Categorizes entire documents or sections (e.g., spam vs. not spam).

Intent Annotation: Labels user goals in conversational interfaces or support tickets.

Semantic Role Labeling: Determines how each word contributes to the overall structure and intent of a sentence.

Sensitive-Data Tagging: Flags PII such as emails, account numbers, or medical IDs.
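Sensitive-data tagging is often bootstrapped with pattern rules before human review. This is a minimal sketch that assumes regex patterns are acceptable as a first pass. The email pattern is a common simplification, while the account-number and `MRN-` medical-ID formats are hypothetical and would need to match your actual data.

```python
import re

# Illustrative patterns only. The email regex is a common simplification; the
# account-number and "MRN-" medical-ID formats are hypothetical.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "ACCOUNT_NUMBER": re.compile(r"\b\d{8,12}\b"),
    "MEDICAL_ID": re.compile(r"\bMRN-\d{6}\b"),
}

def tag_pii(text):
    """Return (start, end, label, matched_text) for every pattern hit, in text order."""
    hits = [(m.start(), m.end(), label, m.group())
            for label, pattern in PII_PATTERNS.items()
            for m in pattern.finditer(text)]
    return sorted(hits)

def redact(text):
    """Replace tagged spans with their labels, right to left so earlier offsets stay valid.

    Assumes the hits do not overlap.
    """
    for start, end, label, _ in reversed(tag_pii(text)):
        text = text[:start] + f"[{label}]" + text[end:]
    return text

note = "Contact jane.doe@example.com about account 12345678 and record MRN-004512."
print(redact(note))
# Contact [EMAIL] about account [ACCOUNT_NUMBER] and record [MEDICAL_ID].
```

Rules like these catch well-formed identifiers cheaply; human annotators are still needed for names, free-text addresses, and formats the patterns miss.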

Challenges in Document Annotation

Despite its importance, document annotation is resource-intensive:

Requires domain expertise to ensure accuracy

Prone to human errors and inconsistencies

Time-consuming and difficult to scale manually

Needs ongoing updates as new data flows in

To mitigate these, enterprises are increasingly turning to AI-assisted annotation tools and professional annotation services to maintain speed, scalability, and quality.
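A standard way to measure the inconsistency problem listed above is inter-annotator agreement. This sketch computes Cohen's kappa for two annotators who labeled the same items. The example labels are invented, and any pass/fail threshold you gate on (for instance, re-reviewing a batch below 0.7) is a project-specific assumption.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("expected two non-empty label lists of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Agreement expected by chance if each annotator labeled at random with their own label frequencies.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label for every item
    return (observed - expected) / (1 - expected)

annotator_1 = ["pos", "pos", "neg", "neg", "pos", "neg"]
annotator_2 = ["pos", "neg", "neg", "neg", "pos", "neg"]
print(f"{cohens_kappa(annotator_1, annotator_2):.3f}")  # 0.667
```

Raw agreement here is 5 out of 6, but kappa is lower because half of that agreement would be expected by chance alone.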

Spotlight on EnFuse Solutions

EnFuse Solutions – AI & ML Enablement combines human expertise with AI‑assisted platforms to deliver enterprise‑grade data labeling, document tagging, and annotation services.

EnFuse Service Metrics

Millions of data points processed across text, image, audio & video in 300+ languages (service overview)

99% review accuracy & a 20% productivity lift for a U.S. retailer’s image-tagging program, delivering 40% Opex savings (case study)

Why Clients Choose EnFuse

End‑to‑end workflows: collection → tagging → QA / QC → secure delivery

Domain‑trained annotators for healthcare, finance, retail, legal, and more

Robust PII handling and ISO‑certified data‑security processes

Rapid scale‑up with flexible engagement models.

Conclusion

Document tagging and annotation may sound technical, but their role in enabling AI to “understand” human language, classify content, and automate decisions is indispensable. As the complexity and volume of unstructured data grow, so does the need for high-quality annotations to keep AI models relevant, intelligent, and impactful.

If you’re building AI-powered systems and want to ensure your models are trained on accurate, annotated datasets, now is the time to invest in expert solutions.

Ready to scale your AI with smarter document annotation?

Partner with EnFuse Solutions to power up your next project with precision annotation, secure PII handling, and measurable ROI. Explore our AI & ML Enablement services and see the results in our latest image‑tagging case study.

Scale smarter. Tag faster. Deploy with confidence.

Get in touch with EnFuse Solutions today!

#DocumentTagging#DocumentAnnotation#AnnotationServices#DataLabeling#PolicyDocuments#NaturalLanguageProcessing#NLP#SentimentAnnotation#DocumentClassification#EnFuseDataAnnotation#EnFuseDocumentTagging#DocumentTaggingServices#EnFuseSolutions#EnFuseSolutionsIndia


Boost Your ROI with Intelligent Image Annotation Outsourcing!

Outsourcing image annotation helps AI companies scale efficiently while ensuring accuracy and cost-effectiveness. From autonomous vehicles to medical imaging, leveraging expert annotation teams can accelerate project timelines and enhance model performance.

Discover how intelligent outsourcing can maximize your AI success:

#ImageAnnotation#AITraining#MachineLearning#DataAnnotation#ArtificialIntelligence#DataLabeling#Outsourcing


Empower Your AI Models: Ensure Precision with EnFuse Solutions’ Customized Data Labeling Services!

Boost your AI models with EnFuse Solutions’ precise data labeling services. They handle complex datasets involving images, text, audio, and video. EnFuse’s experienced team ensures reliable, consistent annotations to support machine learning applications across industries like retail, healthcare, and finance.

Visit here to explore how EnFuse Solutions delivers precision through customized data labeling services: https://www.enfuse-solutions.com/services/ai-ml-enablement/labeling-curation/

#DataLabeling#DataLabelingServices#DataCurationServices#ImageLabeling#AudioLabeling#VideoLabeling#TextLabeling#DataLabelingCompaniesIndia#DataLabelingAndAnnotation#AnnotationServices#EnFuseSolutions#EnFuseSolutionsIndia


Unlock the Potential of AI with EnFuse Solutions' Labeling Services

Supercharge your AI applications with EnFuse Solutions' professional labeling services. They annotate image, audio, video, and text data to build smarter algorithms. Whether it’s autonomous driving or voice recognition, EnFuse’s quality assurance ensures optimal AI training results.

Enhance your AI initiatives today with EnFuse Solutions’ expert data labeling services: https://www.enfuse-solutions.com/services/ai-ml-enablement/labeling-curation/

#DataLabeling#DataLabelingServices#DataCurationServices#ImageLabeling#AudioLabeling#VideoLabeling#TextLabeling#DataLabelingCompaniesIndia#DataLabelingAndAnnotation#AnnotationServices#EnFuseSolutions#EnFuseSolutionsIndia


Accelerate AI Training with Quality Data

Speed up your AI development with the perfect training data. Our data labeling services are designed to meet the needs of your machine learning models—boosting performance and ensuring reliability. Trust us to provide the data that fuels your AI.


What is text annotation in machine learning? Explain with examples

Text annotation in machine learning refers to the process of labeling or tagging textual data to make it understandable and useful for AI models. It is essential for various AI applications, such as natural language processing (NLP), chatbots, sentiment analysis, and machine translation. With cutting-edge tools and skilled professionals, EnFuse Solutions has the expertise to drive impactful AI solutions for your business.
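As one concrete example of text annotation, named-entity labels are often stored as character spans and converted to per-token BIO tags (B = beginning of an entity, I = inside, O = outside) for training. This is a minimal sketch that assumes whitespace tokenization; tokens that only partly overlap a span are labeled `O`, which a real pipeline would need to handle.

```python
def spans_to_bio(text, spans):
    """Convert (start, end, entity) character spans into per-token BIO labels.

    Tokens are whitespace-separated; a token is labeled only if it lies fully
    inside a span.
    """
    result = []
    offset = 0
    for token in text.split():
        start = text.index(token, offset)  # locate this token's character offset
        end = start + len(token)
        offset = end
        label = "O"
        for s, e, entity in spans:
            if start >= s and end <= e:
                label = ("B-" if start == s else "I-") + entity
                break
        result.append((token, label))
    return result

text = "Ship to Maria Lopez in Madrid"
spans = [(8, 19, "PER"), (23, 29, "LOC")]  # hypothetical annotator output
print(spans_to_bio(text, spans))
```

Each tuple pairs a token with its label, for example `("Maria", "B-PER")` and `("Lopez", "I-PER")`, which is the shape most sequence-labeling trainers expect.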

#TextAnnotation#MachineLearning#NLPAnnotation#DataLabeling#MLTrainingData#AnnotatedText#NaturalLanguageProcessing#SupervisedLearning#AIModelTraining#TextDataPreparation#MLDataAnnotation#AIAnnotationCompanies#DataAnnotationServices#EnFuseSolutions#EnFuseSolutionsIndia


What is data labeling?

Data labeling is a fundamental step in the machine learning pipeline, enabling algorithms to learn and make accurate predictions. EnFuse Solutions, a leading data labeling company, provides high-quality services with expert annotators who ensure accurate, consistent labeling for training robust and reliable machine learning and AI models. Contact today to learn more!

#DataLabeling#DataLabelingServices#DataAnnotation#DataTagging#LabeledData#SupervisedLearningData#AITrainingData#MachineLearningDataPrep#AnnotationSolutions#DataLabelingServicesIndia#DataLabelingCompanies#EnFuseSolutions#EnFuseSolutionsIndia


How Data Annotation Services Power Artificial Intelligence

Introduction to Data Annotation Services

In a world increasingly driven by artificial intelligence, there's one crucial element that fuels its capabilities: data. But not just any data—it's the meticulously annotated data that breathes life into AI systems. This is where Data Annotation Services come into play, transforming raw information into structured insights that machine learning algorithms can understand and learn from. As businesses across various industries harness the power of AI to improve efficiency and decision-making, understanding the role of data annotation becomes paramount. Let’s dive into how these services not only enhance AI performance but also shape the future of technology itself.

The Importance of Accurate and High-Quality Data Annotation

Accurate and high-quality data annotation is critical in the realm of artificial intelligence. It acts as the foundation upon which AI models are built. When labeled correctly, it allows machine learning algorithms to learn effectively from the data provided.

Poorly annotated data can lead to inaccurate predictions and unreliable outcomes. This not only hampers performance but also undermines trust in AI technologies.

High-quality annotations ensure that machines interpret information accurately, mimicking human understanding more closely. This precision enhances the overall quality of AI applications across various industries.

Investing time and resources into meticulous annotation processes pays dividends down the line. Organizations benefit from improved model efficiency and better decision-making capabilities driven by their AI systems.

Types of Data Annotation Techniques for AI Training

Data annotation techniques vary widely, each tailored to specific AI training needs. Image annotation is among the most common methods, involving labeling objects within images for computer vision tasks. This helps machines recognize and classify visuals accurately.

Text annotation plays a critical role as well. It includes tagging parts of speech or identifying entities within text. This technique is essential for natural language processing models that require an understanding of context and meaning.

For audio data, transcription and segmentation are key techniques used in voice recognition systems. By breaking down spoken words into manageable segments, these annotations enable better comprehension by AI algorithms.

Video annotation combines both image and time-based analysis. It tracks movements or actions across frames—vital for applications like autonomous driving where real-time decision-making hinges on understanding dynamic environments. Each method contributes uniquely to enhancing machine learning capabilities through precise data interpretation.
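For video, annotation tools commonly let a human draw boxes around an object on a few keyframes and interpolate the frames in between, which the annotator then corrects. This is a minimal sketch of linear interpolation, assuming `(x_min, y_min, x_max, y_max)` boxes keyed by frame index; the keyframe values are invented.

```python
def lerp_box(box_a, box_b, t):
    """Linearly interpolate two (x_min, y_min, x_max, y_max) boxes, with t in [0, 1]."""
    return tuple(a + (b - a) * t for a, b in zip(box_a, box_b))

def interpolate_track(keyframes):
    """Fill in a box for every frame between human-annotated keyframes.

    keyframes: {frame_index: box}. Returns {frame_index: box} covering every
    frame from the first keyframe to the last.
    """
    if not keyframes:
        return {}
    frames = sorted(keyframes)
    track = {}
    for f0, f1 in zip(frames, frames[1:]):
        for f in range(f0, f1):
            track[f] = lerp_box(keyframes[f0], keyframes[f1], (f - f0) / (f1 - f0))
    track[frames[-1]] = tuple(keyframes[frames[-1]])
    return track

# Hypothetical keyframes: a car box drawn on frames 0 and 4.
track = interpolate_track({0: (0, 0, 10, 10), 4: (8, 0, 18, 10)})
print(track[2])  # (4.0, 0.0, 14.0, 10.0)
```

Linear motion is only an approximation, so objects that turn, accelerate, or become occluded still need extra keyframes from the annotator.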

Human vs Automated Annotation: Pros and Cons

Human annotation excels in understanding context and nuances. Humans can interpret subtleties in language or image details that machines might overlook. This capability often leads to richer, more accurate data sets.

However, human annotators can be slow and prone to fatigue. As workloads increase, the possibility of errors also rises. They require training and ongoing management, which adds complexity to projects.

On the flip side, automated tools offer speed and scalability. These systems can process vast amounts of data quickly with consistent output. Yet they may struggle with ambiguity or less common scenarios that require deeper comprehension.

Combining both methods offers a balanced approach. Human oversight on automated outputs helps catch inaccuracies while retaining efficiency for large-scale projects. The ideal solution may depend on specific project needs and goals.
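The hybrid approach can be sketched as confidence-based routing. A model pre-labels everything, high-confidence items are accepted, and the rest go to human annotators, along with a random spot-check sample of the accepted ones. The 0.9 threshold and 5 percent audit rate are hypothetical defaults, and the `PreLabel` record is an assumed shape, not any particular tool's format.

```python
import random
from dataclasses import dataclass

@dataclass
class PreLabel:
    item_id: str
    label: str
    confidence: float  # model's probability for its predicted label

def route(prelabels, threshold=0.9, audit_rate=0.05, seed=0):
    """Split pre-labels into auto-accepted items and a human review queue."""
    rng = random.Random(seed)  # seeded so the audit sample is reproducible
    accepted, review = [], []
    for p in prelabels:
        # Low-confidence items always go to humans; a small random share of
        # confident items is also sent for spot-checking.
        if p.confidence < threshold or rng.random() < audit_rate:
            review.append(p)
        else:
            accepted.append(p)
    return accepted, review

batch = [PreLabel("a", "car", 0.97), PreLabel("b", "truck", 0.55), PreLabel("c", "car", 0.93)]
accepted, review = route(batch, audit_rate=0.0)
print([p.item_id for p in accepted], [p.item_id for p in review])  # ['a', 'c'] ['b']
```

The audit sample is what keeps the threshold honest: if reviewers keep correcting "confident" items, the model's confidence is miscalibrated and the threshold should rise.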

Real-World Applications of Data Annotation in AI

Data annotation plays a pivotal role in various sectors, transforming how we interact with technology. For instance, in healthcare, annotated medical images help AI systems detect diseases early. This precision can save lives.

In the automotive industry, self-driving cars rely heavily on data annotation for recognizing objects like pedestrians and traffic signs. Accurate annotations ensure these vehicles navigate safely through complex environments.

E-commerce platforms utilize data annotation to enhance product recommendations. By categorizing items and tagging features, AI algorithms deliver personalized shopping experiences that boost sales and customer satisfaction.

Moreover, social media companies employ data annotation to filter content effectively. This process allows them to identify harmful material while promoting user safety.

From finance to entertainment, the impact of data annotation is profound and far-reaching. Each application highlights its necessity in creating intelligent systems that understand our world better.

The Impact of Data Bias on AI and How Annotation Can Help Mitigate It

Data bias poses a significant challenge in artificial intelligence. It can skew results and lead to unfair outcomes, impacting users across various sectors. When AI systems are trained on biased datasets, they often perpetuate stereotypes or overlook minority perspectives.

Data annotation services play a crucial role in tackling this issue. By ensuring that training data is diverse and representative, these services help create more balanced datasets. Annotators carefully identify and label different attributes within the data, enhancing its quality and usability.

Moreover, human annotators can recognize subtle nuances that automated tools might miss. This awareness fosters greater inclusivity when developing AI models. Properly annotated data can pave the way for fairer algorithms that reflect real-world diversity.

In turn, organizations benefit from improved decision-making processes powered by unbiased AI systems. The careful attention to detail during annotation significantly reduces the risk of propagating existing biases within artificial intelligence applications.

Future of Data Annotation in Advancing Artificial Intelligence

The future of data annotation services is poised to be transformative. As artificial intelligence continues to evolve, the demand for accurate and efficient annotation will surge. Innovative techniques are emerging that integrate machine learning with human expertise.

Crowdsourcing may become a more prevalent strategy. By harnessing diverse perspectives, we can achieve richer datasets that capture nuances often overlooked by automated systems. This collaborative approach could lead to higher quality annotations at scale.
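Crowdsourced labels are usually aggregated before training. The simplest scheme is a per-item majority vote with an agreement floor, sketched below. The 0.6 floor is a hypothetical choice, and items that fall below it would typically go to an expert adjudicator. More elaborate aggregation models exist, but this shows the basic idea.

```python
from collections import Counter

def aggregate_votes(votes, min_agreement=0.6):
    """Majority-vote each item's crowd labels.

    votes: {item_id: [label, ...]} with at least one label per item.
    Returns {item_id: (label or None, agreement_share)}; label is None when the
    winning label's share of votes is below `min_agreement`.
    """
    results = {}
    for item_id, labels in votes.items():
        label, count = Counter(labels).most_common(1)[0]
        share = count / len(labels)
        results[item_id] = (label if share >= min_agreement else None, share)
    return results

votes = {"img1": ["cat", "cat", "dog"], "img2": ["cat", "dog"]}
print(aggregate_votes(votes))
```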

Moreover, advancements in AI tools are enabling faster processing times while maintaining accuracy. These intelligent systems learn from previous annotations, reducing human error significantly.

As ethical considerations grow paramount in AI development, annotated data will play a critical role in ensuring fairness and accountability. The alignment between technology and ethics hinges on robust annotation practices that address biases effectively.

With these developments on the horizon, the landscape of data annotation services will not only support AI but also shape its ethical framework for years to come.

Conclusion

Data annotation services are the backbone of artificial intelligence development. They provide the essential groundwork that allows AI systems to learn and evolve. As the demand for machine learning models continues to grow, so does the need for accurate and high-quality data annotation.

Understanding different types of data annotation techniques is crucial for businesses looking to leverage AI effectively. Whether it’s image labeling, text classification, or audio transcription, each technique plays a vital role in training algorithms that drive innovation across various sectors.

The ongoing debate between human and automated annotation will likely continue as technology advances. Each method has its benefits and challenges; finding a balance between efficiency and accuracy is key.

Real-world applications demonstrate how critical data annotation is across industries—from healthcare diagnostics to autonomous vehicles—showing us just how far we've come with AI capabilities driven by quality annotations.

Moreover, addressing issues like data bias through careful annotation practices not only enhances model performance but also promotes fairness in AI outcomes. This ethical dimension reinforces why meticulous attention during this phase can make a substantial difference.

As we look ahead, advancements in tools and processes for data annotation services promise even greater possibilities in pushing artificial intelligence forward. The future holds exciting potential as these foundational elements become more refined.

Taken together, these aspects underscore the indispensable role of data annotation services in shaping an intelligent world where machines understand it better than ever before.

#dataannotationservices#datalabelingservices#natural language processing#dataannotation#datalabeling


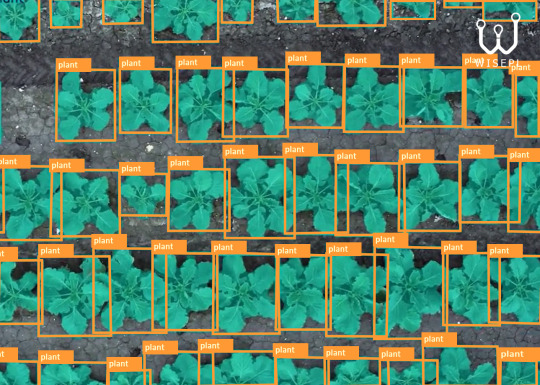

Teaching Machines to See the Harvest Before It Grows

In the age of smart farming, machines need more than sensors - they need vision. We empower agricultural AI with precision-labeled data built specifically for crop detection.

From drone images to satellite scans, our expert annotators craft pixel-perfect polygons, segmentations, and object tags that help models:

-> Detect crop types

-> Monitor growth stages

-> Analyze field health

-> Predict yield patterns

Just clean, human-verified data that turns raw footage into actionable insight for next-gen agri-tech.

Build smarter agri-AI with us. Let’s annotate the future of farming - one pixel at a time. DM us or visit www.wisepl.com to get started.

#cropdetection#smartfarming#AgriTech#AIinAgriculture#WiseplAI#dataannotation#precisionfarming#satelliteimagery#machinelearning#geospatialAI#MLTrainingData#AgriAI#datalabeling#Wisepl
