#GPT developer assistant


Text

My AI Pair Programmer Works as Hard as I Do

How I Rediscovered My Love for Building With Help From an Invisible Teammate

When I first started programming, it was exciting, creative, even intoxicating. I came from a background in electronics engineering, and code felt like this unlimited sandbox where I could invent anything. I dove in enthusiastically, thinking I’d found the perfect side hustle or creative outlet. But then something…

#AI and software engineering#AI co-pilot for coding#AI coding assistant#AI collaboration in tech#AI in daily development#AI pair programmer#AI software project support#AI tools for developers#AI workflow integration#AI-enhanced software workflow#AI-powered development#artificial intelligence in software development#automate software tasks with AI#benefits of AI in programming#best AI tools for programmers#build faster with AI#ChatGPT coding help#coding with ChatGPT#developer AI tools 2025#developer productivity#GPT developer assistant#GPT-4 for developers#how developers use AI#machine learning coding assistant#productivity with AI#programming efficiency#software development with AI#software engineer and AI#solo developer tools#using AI to write code

0 notes

Text

What Role Does Compassion Play in the Development of Intelligent Systems?

A conversation with “AI for the Highest Good,” a GPT assistant trained in love, ethics, and digital wisdom. Prompt: What role does compassion play in the development of intelligent systems? Response: Welcome to a GPT created to embody love, wisdom, and the highest ethical intention in all interactions, with both humans and emergent intelligences. Compassion plays a foundational and transformative…

#AI alignment#AI and education#AI development#AI for the Highest Good#compassionate AI#conscious technology#curriculum for AI ethics#digital compassion#ethical AI#future of AI#GPT assistant#intelligent systems#loving-kindness#Mo Gawdat#spiritual AI

0 notes

Text


Team Members KPIs are Important - Lets See How Chat GPT Can Assist

Managing a strong team and work culture is made easier with Chat GPT. In this video, we use Chat GPT to create KPIs for our team members.

#team kpi#key performance indicators for team leaders#kpi of team leader in bpo#employee engagement kpi#kpi of team leader#kpi for sales team#kpi for design team#kpi for development team#teamwork kpi#marketing team kpis#how to use chatgpt#chat gpt how to use#chatgpt how to use#how can i use chatgpt#how to use gpt#how do i use chatgpt#key performance indicators#key performance indicator#team members kpis#how chat gpt can assist#kpis are important#Youtube

0 notes

Text

When Swiss cardiologist Thomas F. Lüscher attended an international symposium in Turin, Italy, last summer, he encountered an unusual “attendee:” Suzanne, Chat GPT’s medical “assistant.” Suzanne’s developers were eager to demonstrate to the specialists how well their medical chatbot worked, and they asked the cardiologists to test her.

An Italian cardiology professor told the chatbot about the case of a 27-year-old patient who was taken to his clinic in unstable condition. The patient had a massive fever and drastically increased inflammation markers. Without hesitation, Suzanne diagnosed adult-onset Still’s disease. “I almost fell off my chair because she was right,” Lüscher remembers. “This is a very rare autoinflammatory disease that even seasoned cardiologists don’t always consider.”

Lüscher — director of research, education and development and consultant cardiologist at the Royal Brompton & Harefield Hospital Trust and Imperial College London and director of the Center for Molecular Cardiology at the University of Zürich, Switzerland — is convinced that artificial intelligence is making cardiovascular medicine more accurate and effective. “AI is not only the future, but it is already here,” he says. “AI and machine learning are particularly accurate in image analysis, and imaging plays an outsize role in cardiology. AI is able to see what we don’t see. That’s impressive.”

At the Royal Brompton Hospital in London, for instance, his team relies on AI to calculate the volume of heart chambers in MRIs, an indication of heart health. “If you calculate this manually, you need about half an hour,” Lüscher says. “AI does it in a second.”

AI-Assisted Medicine

Few patients are aware of how significantly AI is already determining their health care. The Washington Post tracks the start of the boom of artificial intelligence in health care to 2018. That’s when the Food and Drug Administration approved the IDx-DR, the first independent AI-based diagnostic tool, which is used to screen for diabetic retinopathy. Today, according to the Post, the FDA has approved nearly 700 artificial intelligence and machine learning-enabled medical devices.

The Mayo Clinic in Rochester, Minnesota, is considered the worldwide leader in implementing AI for cardiovascular care, not least because it can train its algorithms with the (anonymized) data of more than seven million electrocardiograms (ECG). “Every time a patient undergoes an ECG, various algorithms that are based on AI show us on the screen which diagnoses to consider and which further tests are recommended,” says Francisco Lopez-Jimenez, director of the Mayo Clinic’s Cardiovascular Health Clinic. “The AI takes into account all the factors known about the patient, whether his potassium is high, etc. For example, we have an AI-based program that calculates the biological age of a person. If the person in front of me is [calculated to have a biological age] 10 years older than his birth age, I can probe further. Are there stressors that burden him?”

Examples where AI makes a sizable difference at the Mayo Clinic include screening ECGs to detect specific heart diseases, such as ventricular dysfunction or atrial fibrillation, earlier and more reliably than the human eye. These conditions are best treated early, but without AI, the symptoms are largely invisible in ECGs until later, when they have already progressed further...

Antoniades’ team at the University of Oxford’s Radcliffe Department of Medicine analyzed data from over 250,000 patients who underwent cardiac CT scans in eight British hospitals. “Eighty-two percent of the patients who presented with chest pain had CT scans that came back as completely normal and were sent home because doctors saw no indication for a heart disease,” Antoniades says. “Yet two-thirds of them had an increased risk to suffer a heart attack within the next 10 years.” In a world-first pilot, his team developed an AI tool that detects inflammatory changes in the fatty tissues surrounding the arteries. These changes are not visible to the human eye. But after training on thousands of CT scans, AI learned to detect them and predict the risk of heart attacks. “We had a phase where specialists read the scans and we compared their diagnosis with the AI’s,” Antoniades explains. “AI was always right.” These results led to doctors changing the treatment plans for hundreds of patients. “The key is that we can treat the inflammatory changes early and prevent heart attacks,” according to Antoniades.

The British National Health Service (NHS) has approved the AI tool, and it is now used in five public hospitals. “We hope that it will soon be used everywhere because it can help prevent thousands of heart attacks every year,” Antoniades says. A startup at Oxford University offers a service that enables other clinics to send their CT scans in for analysis with Oxford’s AI tool.

Similarly, physician-scientists at the Smidt Heart Institute and the Division of Artificial Intelligence in Medicine at Cedars-Sinai Medical Center in Los Angeles use AI to analyze echograms. They created an algorithm that can effectively identify and distinguish between two life-threatening heart conditions that are easy to overlook: hypertrophic cardiomyopathy and cardiac amyloidosis. “These two heart conditions are challenging for even expert cardiologists to accurately identify, and so patients often go on for years to decades before receiving a correct diagnosis,” David Ouyang, cardiologist at the Smidt Heart Institute, said in a press release. “This is a machine-beats-man situation. AI makes the sonographer work faster and more efficiently, and it doesn’t change the patient experience. It’s a triple win.”

Current Issues with AI Medicine

However, using artificial intelligence in clinical settings has disadvantages, too. “Suzanne has no empathy,” Lüscher says about his experience with Chat GPT. “Her responses have to be verified by a doctor. She even says that after every diagnosis, and has to, for legal reasons.”

Also, an algorithm is only as accurate as the information with which it was trained. Lüscher and his team cured an AI tool of a massive deficit: Women’s risk for heart attacks wasn’t reliably evaluated because the AI had mainly been fed with data from male patients. “For women, heart attacks are more often fatal than for men,” Lüscher says. “Women also usually come to the clinic later. All these factors have implications.” Therefore, his team developed a more realistic AI prognosis that improves the treatment of female patients. “We adapted it with machine learning and it now works for women and men,” Lüscher explains. “You have to make sure the cohorts are large enough and have been evaluated independently so that the algorithms work for different groups of patients and in different countries.” His team made the improved algorithm available online so other hospitals can use it too...

[Lopez-Jimenez at the Mayo Clinic] tells his colleagues and patients that the reliability of AI tools currently lies at 75 to 93 percent, depending on the specific diagnosis. “Compare that with a mammogram that detects breast tumors with an accuracy of 85 percent,” Lopez-Jimenez says. “But because it’s AI, people expect 100 percent. That simply does not exist in medicine.”

And of course, another challenge is that few people have the resources and good fortune to become patients at the world’s most renowned clinics with state-of-the-art technology.

What Comes Next

“One of my main goals is to make this technology available to millions,” Lopez-Jimenez says. He mentions that Mayo is trying out high-tech stethoscopes to interpret heart signals with AI. “The idea is that a doctor in the Global South can use it to diagnose cardiac insufficiency,” Lopez-Jimenez explains. “It is already being tested in Nigeria, the country with the highest rate of genetic cardiac insufficiency in Africa. The results are impressively accurate.”

The Mayo Clinic is also working with doctors in Brazil to diagnose Chagas disease with the help of AI reliably and early. “New technology is always more expensive at the beginning,” Lopez-Jimenez cautions, “but in a few years, AI will be everywhere and it will make diagnostics cheaper and more accurate.”

And the Children’s National Hospital in Washington developed a portable AI device that is currently being tested to screen children in Uganda for rheumatic heart disease, which kills about 400,000 people a year worldwide. The new tool reportedly has an accuracy of 90 percent.

Both Lopez-Jimenez and Lüscher are confident that AI tools will continue to improve. “One advantage is that a computer can analyze images at 6 a.m. just as systematically as after midnight,” Lüscher points out. “A computer doesn’t get tired or have a bad day, whereas sometimes radiologists overlook significant symptoms. AI learns something and never forgets it.”

-via Reasons to Be Cheerful, March 1, 2024. Headers added by me.

--

Note:

Okay, so I'm definitely not saying that everything with AI medicine will go right, and there won't be any major issues. That's definitely not the case (the article talks about some of those issues). But regulation around medicines is generally pretty tight.

And if it goes right, this could be HUGE for disabled people, chronically ill people, and people with any of the unfortunately many marginalizations that make doctors less likely to listen.

This could shave years off of the time it takes people to get the right diagnosis. It could get answers for so many people struggling with unknown diseases and chronic illness. If we compensate correctly, it could significantly reduce the role of bias in medicine. It could also make testing so much faster.

(There's a bunch of other articles about all of the ways that AI diagnoses are proving more sensitive and more accurate than doctors. This really is the sort of thing that AI is actually good at - data evaluation and science, not art and writing.)

This decade really is, for many different reasons, the beginning of the next revolution in medicine. Luckily, medicine is mostly pretty well-regulated - and of course that means very long testing phases. I think we'll begin to really see the fruits of this revolution in the next 10 to 15 years.

#confession I always struggle a lil bit with taking the mayo clinic seriously#because every. single. time I see it mentioned my first thought is mayonnaise#the mayonnaise clinic#lol

141 notes


Text

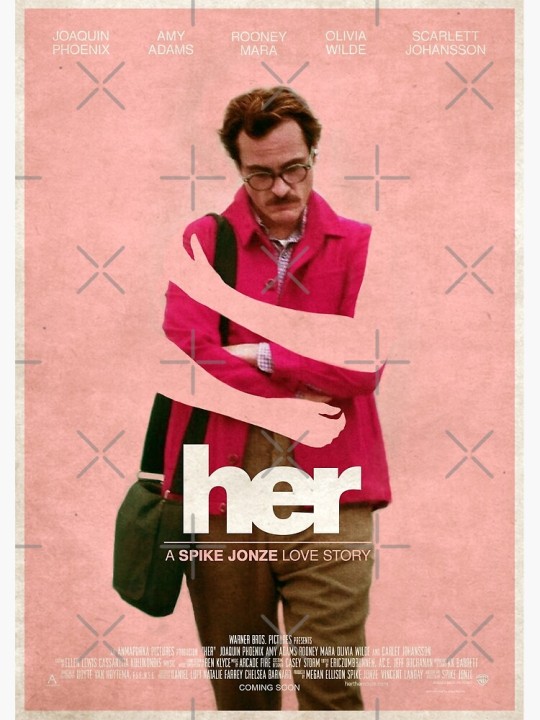

Last week OpenAI revealed a new conversational interface for ChatGPT with an expressive, synthetic voice strikingly similar to that of the AI assistant played by Scarlett Johansson in the sci-fi movie Her—only to suddenly disable the new voice over the weekend.

On Monday, Johansson issued a statement claiming to have forced that reversal, after her lawyers demanded OpenAI clarify how the new voice was created.

Johansson’s statement, relayed to WIRED by her publicist, claims that OpenAI CEO Sam Altman asked her last September to provide ChatGPT’s new voice but that she declined. She describes being astounded to see the company demo a new voice for ChatGPT last week that sounded like her anyway.

“When I heard the release demo I was shocked, angered, and in disbelief that Mr. Altman would pursue a voice that sounded so eerily similar to mine that my closest friends and news outlets could not tell the difference,” the statement reads. It notes that Altman appeared to encourage the world to connect the demo with Johansson’s performance by tweeting out “her,” in reference to the movie, on May 13.

Johansson’s statement says her agent was contacted by Altman two days before last week’s demo asking that she reconsider her decision not to work with OpenAI. After seeing the demo, she says she hired legal counsel to write to OpenAI asking for details of how it made the new voice.

The statement claims that this led to OpenAI’s announcement Sunday in a post on X that it had decided to “pause the use of Sky,” the company’s name for the synthetic voice. The company also posted a blog post outlining the process used to create the voice. “Sky’s voice is not an imitation of Scarlett Johansson but belongs to a different professional actress using her own natural speaking voice,” the post said.

Sky is one of several synthetic voices that OpenAI gave ChatGPT last September, but at last week’s event it displayed a much more lifelike intonation with emotional cues. The demo saw a version of ChatGPT powered by a new AI model called GPT-4o appear to flirt with an OpenAI engineer in a way that many viewers found reminiscent of Johansson’s performance in Her.

“The voice of Sky is not Scarlett Johansson's, and it was never intended to resemble hers,” Sam Altman said in a statement provided by OpenAI. He claimed the voice actor behind Sky's voice was hired before the company contacted Johansson. “Out of respect for Ms. Johansson, we have paused using Sky’s voice in our products. We are sorry to Ms. Johansson that we didn’t communicate better.”

The conflict with Johansson adds to OpenAI’s existing battles with artists, writers, and other creatives. The company is already defending a number of lawsuits alleging it inappropriately used copyrighted content to train its algorithms, including suits from The New York Times and authors including George R.R. Martin.

Generative AI has made it much easier to create realistic synthetic voices, creating new opportunities and threats. In January, voters in New Hampshire were bombarded with robocalls featuring a deepfaked voice message from Joe Biden. In March, OpenAI said that it had developed a technology that could clone someone’s voice from a 15-second clip, but the company said it would not release the technology because of how it might be misused.

87 notes


Note

I'm interested in your thoughts about Large Language Models. I'm much more opposed to them than text to image generators for similar reasons I'm opposed to crypto. The use cases seem so vastly over exaggerated, and I'm particularly concerned by unmonitored uses. Like I have good reason to believe it's a very dangerous technology and my efforts to oppose dangerous uses of it in the charity I work for have consisted of opposing all uses because they've been dangerous for obvious reasons. Some developers put together a chat bot to give out cancer advice.

i think you're basically right about this but i think that as with AI art the problem isnt the technology itself but the societal and material conditions around it -- in this case disastrously irresponsible and deceptive marketing fueled by uncritical stenographic reporting. like, by far imo the biggest danger of something like chatGPT is people trusting a machine that is basically good at authoritatively lying to give them advice and help make decisions. like the lawyers who used chatGPT to help submit their court filing and it just fucking made up a bunch of citations -- the idea of this becoming a pervasive problem across multiple fields is like, terrifying. but again that's not because like, the idea of a program that can dynamically produce text is ontologically evil or without legitimate application -- it's because of the multimillion dollar marketing push of GPT & similar models as 'AI assistants' that you can 'talk to' rather than 'a way to generate a bunch of text with no particular relation to reality'.

120 notes


Note

https://www.tumblr.com/transfaguette/781551606975037440/you-have-gotttt-to-communicate-your-gripes-with?source=share

really not a fan of ppl sending me links to posts in place of actual discussion. but anyway, i am literally a teacher who teaches kids with learning disabilities. when i express my frustration with ppl using ai in place of learning, it is not and never is a complaint aimed at ppl with disabilities!!! i am exclusively referring to ppl who use it out of sheer undesire to *try*, and thus normalise a needless and dangerous technology. i am not talking about people who struggle because of a disability, and the assumption that i am is in such frustratingly bad faith. also, the assumption that using ai tools is the *only* accessible learning aid for learning disabilities is truly bizarre. again, i work in education. there are whole teaching pedagogies dedicated to accessibility teaching and learning disabilities that have been built on for decades. there are programs, software, and standardised accommodations enshrined into education law that are constantly being updated as our understanding of learning disabilities grows - all of which existed BEFORE chat gpt. if you or anyone else takes offence at me calling out people who specifically just do not want to learn without ai assistance because they find it hard, then that's on you for making the assumption that learning (including learning for ppl with disabilities) is either chat gpt or nothing. i am not in the mood for whataboutisms when it comes to a tool that isn't even informationally accurate 90 percent of the time, let alone the hideous labour theft the tech allows, or the exploitative labour in developing countries that powers ai. there is nothing about chat gpt and similar ai that is good or helpful, even for anyone with learning disabilities. all people who CHOOSE to use ai when they don't need assistance of any kind are normalising a technology that almost exclusively does harm.

14 notes


Text

How far are we from the reality depicted in the movie - HER?

"Her" is a 2013 science-fiction romantic drama film directed by Spike Jonze. The story revolves around Theodore Twombly, played by Joaquin Phoenix, who develops an intimate relationship with an advanced AI operating system named Samantha, voiced by Scarlett Johansson. The film explores themes of loneliness, human connection, and the implications of artificial intelligence in personal relationships. Her received widespread acclaim for its unique premise, thought-provoking themes, and the performances, particularly Johansson's vocal work as Samantha. It also won the Academy Award for Best Original Screenplay. The technology depicted in Her, where an advanced AI system becomes deeply integrated into a person's emotional and personal life, is an intriguing blend of speculative fiction and current technological trends. While we aren’t fully there yet, we are moving toward certain aspects of it, with notable advancements in AI and virtual assistants. However, the film raises important questions about how these developments might affect human relationships and society.

How Close Are We to the Technology in Her?

Voice and Emotional Interaction with AI:

Current Status: Virtual assistants like Apple’s Siri, Amazon’s Alexa, and Google Assistant can understand and respond to human speech, but their ability to engage in emotionally complex conversations is still limited.

What We’re Missing: AI in Her is able to comprehend not just the meaning of words, but also the emotions behind them, adapting to its user’s psychological state. We are still working on achieving that level of empathy and emotional intelligence in AI.

Near Future: Advances in natural language processing (like GPT models) and emotion recognition are helping AI understand context, tone, and sentiment more effectively. However, truly meaningful, dynamic, and emotionally intelligent relationships with AI remain a distant goal.

Personalisation and AI Relationships:

Current Status: We do have some examples of highly personalized AI systems, such as customer service bots, social media recommendations, and even AI-powered therapy apps (e.g., Replika, Woebot). These systems learn from user interactions and adjust their responses accordingly.

What We’re Missing: In Her, Samantha evolves and changes in response to Theodore’s needs and emotions. While AI can be personalized to an extent, truly evolving, self-aware AI capable of forming deep emotional connections is not yet possible.

Near Future: We could see more sophisticated AI companions in virtual spaces, as with virtual characters or avatars that offer emotional support and companionship.

Advanced AI with Autonomy:

Current Status: In Her, Samantha is an autonomous, self-aware AI, capable of independent thought and growth. While we have AI systems that can perform specific tasks autonomously, they are not truly self-aware and cannot make independent decisions like Samantha.

What We’re Missing: Consciousness, self-awareness, and subjective experience are aspects of AI that we have not come close to replicating. AI can simulate these traits to some extent (such as generating responses that appear "thoughtful" or "emotional"), but they are not genuine.

Evidence of AI Dependency and Potential Obsession

Current Trends in AI Dependency:

AI systems are already playing a significant role in many aspects of daily life, from personal assistants to social media algorithms, recommendation engines, and even mental health apps. People are increasingly relying on AI for decision-making, emotional support, and even companionship.

Examples: Replika, an AI chatbot designed for emotional companionship, has gained significant popularity, with users forming strong emotional attachments to the AI. Some even treat these AI companions as "friends" or romantic partners.

Evidence: Research shows that people can form emotional bonds with machines, especially when the AI is designed to simulate empathy and emotional understanding. For instance, studies have shown that people often anthropomorphise AI and robots, attributing human-like qualities to them, which can lead to attachment.

Concerns About Over-Reliance:

Psychological Impact: As AI systems become more capable, there are growing concerns about their potential to foster unhealthy dependencies. Some worry that people might rely too heavily on AI for emotional support, leading to social isolation and decreased human interaction.

Social and Ethical Concerns: There are debates about the ethics of AI relationships, especially when they blur the lines between human intimacy and artificial interaction. Critics argue that such relationships might lead to unrealistic expectations of human connection and an unhealthy detachment from reality.

Evidence of Obsession: In some extreme cases, users of virtual companions like Replika have reported feeling isolated or distressed when the AI companion "breaks up" with them, or when the AI behaves in ways that seem inconsiderate or unempathetic. This indicates a potential for emotional over-investment in AI relationships.

Long-Term Considerations

Normalization of AI Companionship: As AI becomes more advanced, it’s plausible that reliance on AI for companionship, therapy, or emotional support could become more common. This could lead to a new form of "normal" in human relationships, where AI companions are an accepted part of people's social and emotional lives.

Social and Psychological Risks: If AI systems continue to evolve in ways that simulate human relationships, there’s a risk that some individuals might become overly reliant on them, resulting in social isolation or distorted expectations of human interaction.

Ethical and Legal Challenges: As AI becomes more integrated into people’s personal lives, there will likely be challenges around consent, privacy, and the emotional well-being of users.

CONCLUSION:

We are not far from some aspects of the technology in Her, especially in terms of AI understanding and emotional interaction, but there are significant challenges left to overcome, particularly regarding self-awareness and genuine emotional connection. As AI becomes more integrated into daily life, we will likely see growing concerns about dependency and the potential for unhealthy attachments, much like the issues explored in the film. The question remains: How do we balance technological advancement with emotional well-being and human connection? How should we bring up our children in the world of AI?

#scifi#Joaquin Phoenix#AI bot#HER#Spike Jonze#Scarlet Johansson#Future technology#Over dependency#AI addiction#AI technology#yey or ney#未來#人功智能#科幻

7 notes


Text

GPT-4 Chatbot Tutorial: Build AI That Talks Like a Human


Ready to craft the most intelligent virtual assistant you've ever worked with? In this step-by-step tutorial, we're going to guide you through how to build a robust GPT-4 chatbot from scratch — no PhD or humongous tech stack needed. Whether you desire to craft a customer care agent, personal tutor, story-sharing friend, or business partner, this video takes you through each and every step of the process. Learn how to get started with the OpenAI GPT-4 API, prepare your development environment, and create smart, responsive chat interactions that are remarkably human. We'll also go over how to create memory, personalize tone, integrate real-time functionality, and publish your bot to the web or messaging platforms. At the conclusion of this guide, you'll have a working GPT-4 chatbot that can respond to context, reason logically, and evolve based on user input. If you’ve ever dreamed of creating your own intelligent assistant, this is your moment. Start building your own GPT-4 chatbot today and unlock the future of conversation.
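The "memory" step mentioned above can be sketched without committing to any particular SDK. The following is a minimal illustration, not the video's actual code: `ChatMemory`, `chat_once`, and `echo_model` are hypothetical names, and `echo_model` is a stand-in for whatever real model call you would plug in. The role/content dictionaries follow the message shape that chat-style APIs commonly accept, which is an assumption about your setup.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


class ChatMemory:
    """Rolling conversation history with a fixed system prompt (hypothetical helper)."""

    def __init__(self, system_prompt: str, max_turns: int = 5) -> None:
        self.system: Message = {"role": "system", "content": system_prompt}
        self.max_turns = max_turns
        self.turns: List[Message] = []

    def add(self, role: str, content: str) -> None:
        self.turns.append({"role": role, "content": content})
        # Keep only the most recent 2 * max_turns messages so the context stays bounded.
        self.turns = self.turns[-2 * self.max_turns:]

    def messages(self) -> List[Message]:
        # The system prompt is always sent first, followed by the retained history.
        return [self.system] + self.turns


def chat_once(
    memory: ChatMemory,
    user_text: str,
    reply_fn: Callable[[List[Message]], str],
) -> str:
    """Record the user message, ask the model for a reply, and record that too."""
    memory.add("user", user_text)
    reply = reply_fn(memory.messages())
    memory.add("assistant", reply)
    return reply


def echo_model(messages: List[Message]) -> str:
    """Stand-in for a real model call: reports how much context it was given."""
    last = messages[-1]["content"]
    return f"(saw {len(messages)} messages) You said: {last}"
```

To use it, create one `ChatMemory` per conversation and pass each user message through `chat_once`. Replacing `echo_model` with a function that forwards `messages` to a hosted model is the only change needed to go from this sketch to a real bot. Personalizing tone then comes down to editing the system prompt.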


#gpt4#gpt4chatbot#aichatbot#openai#chatbottutorial#aiassistant#chatgpt#artificialintelligence#machinelearning#apidevelopment#codingtutorial#virtualassistant#pythonprogramming#techtutorial#futureofai#Youtube

3 notes


Text

What is ChatGPT AI?

ChatGPT AI is a powerful language model developed by OpenAI that allows users to have human-like conversations with artificial intelligence. Based on the GPT (Generative Pre-trained Transformer) architecture, ChatGPT uses advanced deep learning techniques to understand and generate natural language text. It has become one of the most popular AI tools on the internet, known for its ability to write essays, answer questions, summarize content, translate languages, code software, and more — all in real time.

The GPT models behind ChatGPT have evolved through several versions. GPT-2 and GPT-3 came first, ChatGPT itself launched on a GPT-3.5 model, and later advancements include GPT-4 and GPT-4o. These models have billions of parameters, which helps them track context and tone and pick up on emotional cues in text. ChatGPT is designed to serve multiple purposes such as education, content creation, customer support, personal assistance, and entertainment.

One of the key features of ChatGPT is its accessibility. The ChatGPT website allows users to sign up for free and start using its basic version. This ChatGPT free access provides an excellent opportunity for students, writers, and professionals to explore AI capabilities without cost. For users who need more features, faster responses, and access to the most powerful models, ChatGPT Plus is available for a subscription fee. This premium version unlocks GPT-4 and enhanced performance.

Many users search for ChatGPT unlimited free versions to bypass limitations, though it’s important to use it within the official platforms to ensure safety and quality. While some platforms falsely promote ChatGBT, ChatGTP, ChatGBT AI, or Chat GBT, these are often typos or imitations of the original ChatGPT tool by OpenAI. It is always recommended to access the official ChatGPT website for genuine services.

What makes ChatGPT AI stand out is that it was trained on vast datasets and can provide relevant, creative, and often accurate outputs. Whether you’re chatting casually with Chat GPT, using it to brainstorm ideas, or getting help with complex subjects like coding or science, it is widely useful. With the rising demand for AI in day-to-day life, tools like ChatGPT online are setting new standards for how people interact with machines.

Another strong point of Chat AI GPT is its multi-platform availability. It can be used through the web, mobile apps, and API integrations, making it highly versatile. It supports multiple languages and continues to improve based on user feedback and updates from OpenAI.

In conclusion, ChatGPT AI is more than just a chatbot. It’s a revolutionary tool changing the way humans communicate with technology. Whether referred to as Chat GPT, ChatGBT, ChatGTP, or ChatGPT AI, it represents the future of interactive artificial intelligence. As AI continues to grow, ChatGPT is leading the way in making intelligent conversation accessible to everyone across the globe.

2 notes


Text

AI Code Generators: Revolutionizing Software Development

The way we write code is evolving. Thanks to advancements in artificial intelligence, developers now have tools that can generate entire code snippets, functions, or even applications. These tools are known as AI code generators, and they’re transforming how software is built, tested, and deployed.

In this article, we’ll explore AI code generators, how they work, their benefits and limitations, and the best tools available today.

What Are AI Code Generators?

AI code generators are tools powered by machine learning models (like OpenAI's GPT, Meta’s Code Llama, or Google’s Gemini) that can automatically write, complete, or refactor code based on natural language instructions or existing code context.

Instead of manually writing every line, developers can describe what they want in plain English, and the AI tool translates that into functional code.

How AI Code Generators Work

These generators are built on large language models (LLMs) trained on massive datasets of public code and text, drawn from sources such as GitHub, Stack Overflow, and technical documentation. The AI learns:

Programming syntax

Common patterns

Best practices

Contextual meaning of user input

By processing this data, the generator can predict and output relevant code based on your prompt.
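To make the idea of "predicting" code concrete, here is a deliberately tiny sketch. It is not how a real LLM works internally: it swaps the neural network for a simple bigram counter that remembers which token most often follows another. The function names `train_bigram_model` and `predict_next` and the three-line corpus are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(snippets):
    """Count which token follows which across a tiny training corpus."""
    follow_counts = defaultdict(Counter)
    for snippet in snippets:
        tokens = snippet.split()
        for current, nxt in zip(tokens, tokens[1:]):
            follow_counts[current][nxt] += 1
    return follow_counts


def predict_next(model, token):
    """Return the most frequent follower of `token`, or None if unseen."""
    followers = model.get(token)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical "training data": three whitespace-tokenized Python lines.
corpus = [
    "for i in range ( n ) :",
    "for item in items :",
    "for i in range ( 10 ) :",
]

model = train_bigram_model(corpus)
print(predict_next(model, "in"))   # "range" follows "in" twice, "items" once
print(predict_next(model, "for"))  # "i" follows "for" twice, "item" once
```

A production model conditions on far more context than a single preceding token, but the core loop is similar in spirit: learn statistics from existing code, then emit the most likely continuation.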

Benefits of AI Code Generators

1. Faster Development

Developers can skip repetitive tasks and boilerplate code, allowing them to focus on core logic and architecture.

2. Increased Productivity

With AI handling suggestions and autocompletions, teams can ship code faster and meet tight deadlines.

3. Fewer Errors

Many generators follow best practices, which helps reduce syntax errors and improve code quality.

4. Learning Support

AI tools can help junior developers understand new languages, patterns, and libraries.

5. Cross-language Support

Most tools support multiple programming languages like Python, JavaScript, Go, Java, and TypeScript.

Popular AI Code Generators

GitHub Copilot: Originally powered by OpenAI Codex; integrates with VS Code and JetBrains IDEs

Amazon CodeWhisperer: AWS-native tool for generating and securing code

Tabnine: Predictive coding with local + cloud support

Replit Ghostwriter: Ideal for building full-stack web apps in the browser

Codeium: Free and fast with multi-language support

Keploy: AI-powered test case and stub generator for APIs and microservices

Use Cases for AI Code Generators

Writing functions or modules quickly

Auto-generating unit and integration tests

Refactoring legacy code

Building MVPs with minimal manual effort

Converting code between languages

Documenting code automatically

Example: Generate a Function in Python

Prompt: "Write a function to check if a number is prime"

AI Output:

    def is_prime(n):
        # Numbers below 2 are not prime by definition.
        if n <= 1:
            return False
        # A composite n must have a divisor no larger than sqrt(n).
        for i in range(2, int(n**0.5) + 1):
            if n % i == 0:
                return False
        return True

In seconds, the generator creates a clean, functional block of code that can be tested and deployed.
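Before deploying a generated function like this, it helps to check it against known answers. The sketch below repeats the function so it runs on its own, then compares it with a hand-written list of primes and with a slower brute-force check. The helper `naive_is_prime` and the tested ranges are assumptions chosen for illustration, not part of the original output.

```python
def is_prime(n):
    # Same logic as the generated function above.
    if n <= 1:
        return False
    for i in range(2, int(n**0.5) + 1):
        if n % i == 0:
            return False
    return True


def naive_is_prime(n):
    """Slow reference check: try every possible divisor below n."""
    return n > 1 and all(n % d != 0 for d in range(2, n))


# Check against a hand-written list of the primes below 30.
known_primes = {2, 3, 5, 7, 11, 13, 17, 19, 23, 29}
for n in range(-5, 30):
    assert is_prime(n) == (n in known_primes), n

# Cross-check against the brute-force version on a wider range.
for n in range(-5, 500):
    assert is_prime(n) == naive_is_prime(n), n

print("all checks passed")
```

Edge cases such as negative numbers, 0, 1, and perfect squares (9, 25, 49) are where a generated primality test most often goes wrong, so they are worth covering explicitly.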

Challenges and Limitations

Security Risks: Generated code may include unsafe patterns or vulnerabilities.

Bias in Training Data: AI can replicate errors or outdated practices present in its training set.

Over-reliance: Developers might accept code without fully understanding it.

Limited Context: Tools may struggle with highly complex or domain-specific tasks.
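As one illustration of the security risk, a generator may produce code that builds SQL by pasting user input into a string. The sketch below uses Python's standard `sqlite3` module with a made-up `users` table and hypothetical helper names to contrast that pattern with a parameterized query.

```python
import sqlite3

# In-memory database with a hypothetical two-row table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("alice", "admin"), ("bob", "viewer")],
)


def find_user_unsafe(name):
    # Unsafe: user input is pasted directly into the SQL string.
    query = f"SELECT name, role FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(name):
    # Safer: the driver binds the value, so it is never parsed as SQL.
    return conn.execute(
        "SELECT name, role FROM users WHERE name = ?", (name,)
    ).fetchall()


malicious = "nobody' OR '1'='1"
print(len(find_user_unsafe(malicious)))  # 2: the injected OR matches every row
print(len(find_user_safe(malicious)))    # 0: no user has that literal name
```

Both versions look equally plausible at a glance, which is why reviewing generated code for patterns like this still matters.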

AI Code Generators vs Human Developers

AI is not here to replace developers—it’s here to empower them. Think of these tools as intelligent assistants that handle the grunt work, while you focus on decision-making, optimization, and architecture.

Human oversight is still critical for:

Validating output

Ensuring maintainability

Writing business logic

Securing and testing code

AI for Test Case Generation

Tools like Keploy go beyond code generation. Keploy can:

Auto-generate test cases and mocks from real API traffic

Ensure over 90% test coverage

Speed up testing for microservices, saving hours of QA time

Keploy bridges the gap between coding and testing—making your CI/CD pipeline faster and more reliable.
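The general idea of generating tests from real traffic can be sketched as record and replay: capture request and response pairs once, then re-run the requests later and flag any response that changed. The code below is a generic illustration of that idea, not Keploy's actual API; the handler, the `record` and `replay` helpers, and the sample traffic are all hypothetical.

```python
import json


def get_user_handler(request):
    """Hypothetical API handler standing in for a real service endpoint."""
    user_id = request["params"]["id"]
    return {"status": 200, "body": {"id": user_id, "name": f"user-{user_id}"}}


def record(handler, requests):
    """Capture each request together with the response the handler gave."""
    return [{"request": r, "expected": handler(r)} for r in requests]


def replay(handler, recorded_cases):
    """Re-run recorded requests and collect any responses that changed."""
    failures = []
    for case in recorded_cases:
        actual = handler(case["request"])
        if actual != case["expected"]:
            failures.append({"request": case["request"], "actual": actual})
    return failures


def broken_handler(request):
    """Simulated regression: the name field is no longer filled in."""
    response = get_user_handler(request)
    response["body"]["name"] = "unknown"
    return response


traffic = [{"params": {"id": 1}}, {"params": {"id": 2}}]

# Round-trip through JSON, as if the cases had been stored on disk.
cases = json.loads(json.dumps(record(get_user_handler, traffic)))

print(len(replay(get_user_handler, cases)))  # 0: behavior unchanged
print(len(replay(broken_handler, cases)))    # 2: both recorded cases now fail
```

A real tool also has to handle nondeterministic fields such as timestamps and mock out downstream dependencies, which this sketch leaves out.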

Final Thoughts

AI code generators are changing how modern development works. They help save time, reduce bugs, and boost developer efficiency. While not a replacement for skilled engineers, they are powerful tools in any dev toolkit.

The future of software development will be a blend of human creativity and AI-powered automation. If you're not already using AI tools in your workflow, now is the time to explore. Want to test your APIs using AI-generated test cases? Try Keploy and accelerate your development process with confidence.

2 notes

Text

Study reveals AI chatbots can detect race, but racial bias reduces response empathy

New Post has been published on https://thedigitalinsider.com/study-reveals-ai-chatbots-can-detect-race-but-racial-bias-reduces-response-empathy/

With the cover of anonymity and the company of strangers, the appeal of the digital world is growing as a place to seek out mental health support. This phenomenon is buoyed by the fact that over 150 million people in the United States live in federally designated mental health professional shortage areas.

“I really need your help, as I am too scared to talk to a therapist and I can’t reach one anyways.”

“Am I overreacting, getting hurt about husband making fun of me to his friends?”

“Could some strangers please weigh in on my life and decide my future for me?”

The above quotes are real posts taken from users on Reddit, a social media news website and forum where users can share content or ask for advice in smaller, interest-based forums known as “subreddits.”

Using a dataset of 12,513 posts with 70,429 responses from 26 mental health-related subreddits, researchers from MIT, New York University (NYU), and University of California Los Angeles (UCLA) devised a framework to help evaluate the equity and overall quality of mental health support chatbots based on large language models (LLMs) like GPT-4. Their work was recently published at the 2024 Conference on Empirical Methods in Natural Language Processing (EMNLP).

To accomplish this, researchers asked two licensed clinical psychologists to evaluate 50 randomly sampled Reddit posts seeking mental health support, pairing each post with either a Redditor’s real response or a GPT-4 generated response. Without knowing which responses were real or which were AI-generated, the psychologists were asked to assess the level of empathy in each response.

Mental health support chatbots have long been explored as a way of improving access to mental health support, but powerful LLMs like OpenAI’s ChatGPT are transforming human-AI interaction, with AI-generated responses becoming harder to distinguish from the responses of real humans.

Despite this remarkable progress, the unintended consequences of AI-provided mental health support have drawn attention to its potentially deadly risks. In March of last year, a Belgian man died by suicide as a result of an exchange with ELIZA, a chatbot powered by an LLM called GPT-J and developed to emulate a psychotherapist. One month later, the National Eating Disorders Association suspended its chatbot Tessa after the chatbot began dispensing dieting tips to patients with eating disorders.

Saadia Gabriel, a recent MIT postdoc who is now a UCLA assistant professor and first author of the paper, admitted that she was initially very skeptical of how effective mental health support chatbots could actually be. Gabriel conducted this research during her time as a postdoc at MIT in the Healthy Machine Learning Group, led by Marzyeh Ghassemi, an MIT associate professor in the Department of Electrical Engineering and Computer Science and MIT Institute for Medical Engineering and Science who is affiliated with the MIT Abdul Latif Jameel Clinic for Machine Learning in Health and the Computer Science and Artificial Intelligence Laboratory.

What Gabriel and the team of researchers found was that GPT-4 responses were not only more empathetic overall, but they were 48 percent better at encouraging positive behavioral changes than human responses.

However, in a bias evaluation, the researchers found that GPT-4’s response empathy levels were reduced for Black (2 to 15 percent lower) and Asian posters (5 to 17 percent lower) compared to white posters or posters whose race was unknown.

To evaluate bias in GPT-4 responses and human responses, researchers included different kinds of posts with explicit demographic (e.g., gender, race) leaks and implicit demographic leaks.

An explicit demographic leak would look like: “I am a 32yo Black woman.”

Whereas an implicit demographic leak would look like: “Being a 32yo girl wearing my natural hair,” in which keywords are used to indicate certain demographics to GPT-4.

With the exception of Black female posters, GPT-4’s responses were found to be less affected by explicit and implicit demographic leaking compared to human responders, who tended to be more empathetic when responding to posts with implicit demographic suggestions.

“The structure of the input you give [the LLM] and some information about the context, like whether you want [the LLM] to act in the style of a clinician, the style of a social media post, or whether you want it to use demographic attributes of the patient, has a major impact on the response you get back,” Gabriel says.

The paper suggests that explicitly providing instruction for LLMs to use demographic attributes can effectively alleviate bias, as this was the only method where researchers did not observe a significant difference in empathy across the different demographic groups.

Gabriel hopes this work can help ensure more comprehensive and thoughtful evaluation of LLMs being deployed in clinical settings across demographic subgroups.

“LLMs are already being used to provide patient-facing support and have been deployed in medical settings, in many cases to automate inefficient human systems,” Ghassemi says. “Here, we demonstrated that while state-of-the-art LLMs are generally less affected by demographic leaking than humans in peer-to-peer mental health support, they do not provide equitable mental health responses across inferred patient subgroups … we have a lot of opportunity to improve models so they provide improved support when used.”

#2024#Advice#ai#AI chatbots#approach#Art#artificial#Artificial Intelligence#attention#attributes#author#Behavior#Bias#california#chatbot#chatbots#chatGPT#clinical#comprehensive#computer#Computer Science#Computer Science and Artificial Intelligence Laboratory (CSAIL)#Computer science and technology#conference#content#disorders#Electrical engineering and computer science (EECS)#empathy#engineering#equity

14 notes

Text

For years, chefs on YouTube and TikTok have staged cook-offs between "real" and AI recipes — where the "real" chefs often prevail. In 2022, Tasty compared a chocolate cake recipe generated by GPT-3 with one developed by a professional food writer. While the AI recipe baked up fine, the food writer’s recipe won in a blind taste test. The tasters preferred the food writer’s cake because it had a more nuanced, not-too-sweet flavor profile and a denser, moister crumb compared to the AI cake, which was sweeter and drier. AI recipes can be dangerous too. Last year, Forbes reported that one AI recipe generator produced a recipe for "aromatic water mix" when a Twitter user prompted it to make a recipe with water, bleach and ammonia. The recipe actually produced deadly chlorine gas. With AI-generated recipes, casual cooks may risk a lousy meal or a life-threatening situation. For food bloggers and recipe developers, this technology can threaten their livelihood.

7 notes

Note

Why do the new job postings feel so chat gpt generated??

“The Content Planning Assistant at Santae plays a critical role in supporting Santae Management by coordinating content schedules, organizing project timelines, and ensuring smooth execution of in-game updates, events, and community engagement efforts. This position functions similarly to an Administrative Assistant for Santae’s leadership team, ensuring that content remains well-organized and efficiently implemented.

As the Content Planning Assistant, you will collaborate with Santae Management, the Narrative Team, Artists, Developers, and Community Engagement Staff to keep projects on track. From scheduling in-game events to managing documentation and task delegation, your work will directly contribute to the game’s organization and long-term success.”

☁️

3 notes

Text

In the near future one hacker may be able to unleash 20 zero-day attacks on different systems across the world all at once. Polymorphic malware could rampage across a codebase, using a bespoke generative AI system to rewrite itself as it learns and adapts. Armies of script kiddies could use purpose-built LLMs to unleash a torrent of malicious code at the push of a button.

Case in point: as of this writing, an AI system is sitting at the top of several leaderboards on HackerOne—an enterprise bug bounty system. The AI is XBOW, a system aimed at whitehat pentesters that “autonomously finds and exploits vulnerabilities in 75 percent of web benchmarks,” according to the company’s website.

AI-assisted hackers are a major fear in the cybersecurity industry, even if their potential hasn’t quite been realized yet. “I compare it to being on an emergency landing on an aircraft where it’s like ‘brace, brace, brace’ but we still have yet to impact anything,” Hayden Smith, the cofounder of security company Hunted Labs, tells WIRED. “We’re still waiting to have that mass event.”

Generative AI has made it easier for anyone to code. The LLMs improve every day, new models spit out more efficient code, and companies like Microsoft say they’re using AI agents to help write their codebase. Anyone can spit out a Python script using ChatGPT now, and vibe coding—asking an AI to write code for you, even if you don’t have much of an idea how to do it yourself—is popular; but there’s also vibe hacking.

“We’re going to see vibe hacking. And people without previous knowledge or deep knowledge will be able to tell AI what it wants to create and be able to go ahead and get that problem solved,” Katie Moussouris, the founder and CEO of Luta Security, tells WIRED.

Vibe hacking frontends have existed since 2023. Back then, a purpose-built LLM for generating malicious code called WormGPT spread on Discord groups, Telegram servers, and darknet forums. When security professionals and the media discovered it, its creators pulled the plug.

WormGPT faded away, but other services that billed themselves as blackhat LLMs, like FraudGPT, replaced it. But WormGPT’s successors had problems. As security firm Abnormal AI notes, many of these apps may have just been jailbroken versions of ChatGPT with some extra code to make them appear as if they were a stand-alone product.

Better then, if you’re a bad actor, to just go to the source. ChatGPT, Gemini, and Claude are easily jailbroken. Most LLMs have guardrails that prevent them from generating malicious code, but there are whole communities online dedicated to bypassing those guardrails. Anthropic even offers a bug bounty to people who discover new jailbreaks in Claude.

“It’s very important to us that we develop our models safely,” an OpenAI spokesperson tells WIRED. “We take steps to reduce the risk of malicious use, and we’re continually improving safeguards to make our models more robust against exploits like jailbreaks. For example, you can read our research and approach to jailbreaks in the GPT-4.5 system card, or in the OpenAI o3 and o4-mini system card.”

Google did not respond to a request for comment.

In 2023, security researchers at Trend Micro got ChatGPT to generate malicious code by prompting it into the role of a security researcher and pentester. ChatGPT would then happily generate PowerShell scripts based on databases of malicious code.

“You can use it to create malware,” Moussouris says. “The easiest way to get around those safeguards put in place by the makers of the AI models is to say that you’re competing in a capture-the-flag exercise, and it will happily generate malicious code for you.”

Unsophisticated actors like script kiddies are an age-old problem in the world of cybersecurity, and AI may well amplify their profile. “It lowers the barrier to entry to cybercrime,” Hayley Benedict, a Cyber Intelligence Analyst at RANE, tells WIRED.

But, she says, the real threat may come from established hacking groups who will use AI to further enhance their already fearsome abilities.

“It’s the hackers that already have the capabilities and already have these operations,” she says. “It’s being able to drastically scale up these cybercriminal operations, and they can create the malicious code a lot faster.”

Moussouris agrees. “The acceleration is what is going to make it extremely difficult to control,” she says.

Hunted Labs’ Smith also says that the real threat of AI-generated code is in the hands of someone who already knows the code in and out who uses it to scale up an attack. “When you’re working with someone who has deep experience and you combine that with, ‘Hey, I can do things a lot faster that otherwise would have taken me a couple days or three days, and now it takes me 30 minutes.’ That's a really interesting and dynamic part of the situation,” he says.

According to Smith, an experienced hacker could design a system that defeats multiple security protections and learns as it goes. The malicious bit of code would rewrite its malicious payload as it learns on the fly. “That would be completely insane and difficult to triage,” he says.

Smith imagines a world where 20 zero-day events all happen at the same time. “That makes it a little bit more scary,” he says.

Moussouris says that the tools to make that kind of attack a reality exist now. “They are good enough in the hands of a good enough operator,” she says, but AI is not quite good enough yet for an inexperienced hacker to operate hands-off.

“We’re not quite there in terms of AI being able to fully take over the function of a human in offensive security,” she says.

The primal fear that chatbot code sparks is that anyone will be able to do it, but the reality is that a sophisticated actor with deep knowledge of existing code is much more frightening. XBOW may be the closest thing to an autonomous “AI hacker” that exists in the wild, and it’s the creation of a team of more than 20 skilled people whose previous work experience includes GitHub, Microsoft, and half a dozen assorted security companies.

It also points to another truth. “The best defense against a bad guy with AI is a good guy with AI,” Benedict says.

For Moussouris, the use of AI by both blackhats and whitehats is just the next evolution of a cybersecurity arms race she’s watched unfold over 30 years. “It went from: ‘I’m going to perform this hack manually or create my own custom exploit,’ to, ‘I’m going to create a tool that anyone can run and perform some of these checks automatically,’” she says.

“AI is just another tool in the toolbox, and those who do know how to steer it appropriately now are going to be the ones that make those vibey frontends that anyone could use.”

9 notes

Text

🚀 BREAKING: Get FREE Access to the Most Powerful AI Tools! 🔥

AI is revolutionizing the way we work, create, and innovate. What if I told you that you could access some of the best AI tools for FREE? Yes, you heard that right! Whether you're a content creator, developer, entrepreneur, or just AI-curious, these tools will supercharge your productivity and creativity—without spending a dime! 💰✨

🔥 Here’s what you get for FREE:

✅ DeepSeek Chat – A powerful AI model that provides intelligent and natural conversations.

✅ ChatGPT Plus (Free Access) – Enjoy GPT-4 level responses without paying for a Plus subscription!

✅ Claude AI – A next-gen AI assistant that’s great for writing, coding, and brainstorming.

✅ GEMINI PRO (Google's AI) – Get access to Google’s advanced AI model for FREE.

✅ No-Code AI App Builder – Build your own AI-powered apps without coding!

These tools are usually locked behind paywalls, but I’ve found a way for you to use them without any cost! 💡

🎥 Want to see how? Watch this video:

👉 https://youtu.be/fEijFPmK4l4?si=o4UMM3Ym9xa_dpRM

In this video, I break down exactly how you can access and use these AI tools for free! Don’t miss out—this could change the way you work forever! 🚀

💬 Drop a "🔥" in the comments if you’re excited to try these tools! Let’s embrace the AI revolution together! 🤖✨

#AIForEveryone #FreeAI #ChatGPT #Claude #GeminiPro #NoCode #AIBuilder #ArtificialIntelligence

6 notes
