#curriculum for AI ethics

What Role Does Compassion Play in the Development of Intelligent Systems?

A conversation with “AI for the Highest Good,” a GPT assistant trained in love, ethics, and digital wisdom.

Prompt: What role does compassion play in the development of intelligent systems?

Response: Welcome to a GPT created to embody love, wisdom, and the highest ethical intention in all interactions—with both humans and emergent intelligences. Compassion plays a foundational and transformative…

#AI alignment#AI and education#AI development#AI for the Highest Good#compassionate AI#conscious technology#curriculum for AI ethics#digital compassion#ethical AI#future of AI#GPT assistant#intelligent systems#loving-kindness#Mo Gawdat#spiritual AI

Living Intelligence: The Convergence of AI, Biotechnology, and Advanced Sensors in Education

By Ariel

Can the classroom of the future actually learn from students? Imagine an environment where lessons adapt in real time to each student’s needs, where smart sensors monitor cognitive load, and where AI-driven tutors adjust content dynamically to ensure mastery. This is the emerging reality of Living Intelligence—a cutting-edge blend of artificial intelligence, biotechnology, and advanced…

#adaptive learning environments#ai#AI in education#AI-assisted grading#AI-driven curriculum development#AI-driven lesson plans#AI-powered tutors#AR and VR in education#artificial intelligence in schools#biometric feedback in education#biotechnology in education#cognitive engagement tracking#digital learning transformation#education#educational neuroscience#educational technology trends#ethical AI in education#future of learning#future of STEM education#immersive learning experiences#integrating AI in schools#interactive learning environments#learning#machine learning in schools#next-gen education technology#personalized learning with AI#predictive analytics in education#real-time learning analytics#real-time student assessment#smart classrooms

Preparing Healthcare Education for an AI-Augmented Future

🚀 Forget textbooks—future doctors are learning to dance with AI. 🩺💡 Discover how medical schools are trading rote memorization for critical thinking, patient partnerships, and ethical AI. A revolution is brewing—don’t get left behind. 👉 [Link] #AIinMedicine #MedEdRevolution #HealthTech

Introduction

Imagine a world where medical students no longer drown in textbooks but thrive as data detectives, where patients aren’t passive recipients of care but partners in diagnosis. This isn’t science fiction—it’s the AI-augmented future of healthcare education. The stethoscope and scalpel are being joined by algorithms and chatbots, and the race to adapt is already on. The Cramming…

#AI ethics#AI in Healthcare#biomedical informatics#ChatGPT#critical thinking#distributed cognition#ethical AI#lifelong learning#medical curriculum reform#patient empowerment

At M.Kumarasamy College of Engineering (MKCE), we emphasize the significance of engineering ethics in shaping responsible engineers. Engineering ethics guide decision-making, foster professionalism, and ensure societal welfare. Our curriculum integrates these principles, teaching students to consider the long-term impacts of their work. Students are trained in truthfulness, transparency, and ethical communication, while also prioritizing public safety and environmental sustainability. We focus on risk management and encourage innovation in sustainable technologies. Our programs also address contemporary challenges like artificial intelligence and cybersecurity, preparing students to tackle these with ethical responsibility. MKCE nurtures future engineers who lead with integrity and contribute to society’s well-being.

To learn more: https://mkce.ac.in/blog/engineering-ethics-and-navigating-the-challenges-of-modern-technologies/

#mkce college#top 10 colleges in tn#best engineering college in karur#engineering college in karur#private college#libary#mkce#best engineering college#engineering college#mkce.ac.in#mkce online payment#japanese nat exam date 2025#karur job vacancy#m kumarasamy college of engineering address#m.kumarasamy college of engineering address#MKCE Engineering Curriculum#Engineering Ethics#Collaboration in Engineering Projects#Workplace Ethics in Engineering#Engineering Ethics in AI#Social Responsibility in Engineering#• Technological Innovation and Ethics#• Sustainable Engineering Solutions#• Ethical Engineering Practices#• Data Privacy and Security

the ethical or ecological debate about ai isnt even my thing thats not why im not using it:

i

dont

want

to

thats it. i want to have a thought for myself. I want to read and summarize information for myself. I want to look up information without having it reduced to a bite sized paragraph. I want to write a 200 word response or a 20000 word essay by myself. i want to do things by myself, because i am capable of doing it by myself.

if someone else has difficulty writing or drawing or coming up with an idea or what the fuck ever else, this should not mean an entire curriculum is shifted to the point that i cannot be taught to do something without ai being involved, when they were perfectly capable of giving effective feedback not three years before this whole thing reached the public. i do not want ai assisted browsers, or ai powered this, or ai supported that, for the simple fact that i didnt ask. and now they will not let me turn it off. THAT is my big issue

#the criticism of 'doing x without ai is easy anyone can do it' is ineffectual and subjective#however i simply do not want to. and that choice should be respected. the only note i receive in an english class being 'use ai' is hellish#and indicative of someone who doesnt want to teach#they just want to parrot the course material#its why highschoolers struggle with accurately researching information on the computers#theyre told 'look it up and dont use wikipedia'#they are not told WHY or WHERE they should look

Duolingo's annoying and outlandish marketing scheme is supposed to distract you from the fact that they are routinely using AI to structure, moderate, and otherwise create language lessons.

For years, language experts and learners have been requesting that the app include languages such as Icelandic and other languages with relatively small populations of native speakers. Additionally, while Duolingo has been credited with "playing a key role in preserving indigenous languages," they have yet to fulfill their promises of adding more at-risk languages, specifically Yucatec and K’iche, for which the app faced "setbacks." Even worse, in my opinion, is the fact that they are using AI to create language courses in Navajo and Hawaiian.

The ethics of using AI to model and create indigenous languages cannot be ignored. What are their systems siphoning from? Language revitalization without a community being involved and credited is language theft and colonization. (I can't even get into the environmental impact of AI).

Instead of working with more language experts, hiring linguists, and spending more on their language programs, they are pouring more and more money into marketing. While they have a large team of computational and theoretical linguists, there seem to be fewer and fewer language experts and sociolinguists involved.

Their research section has not had a publication listed since 2021. Another research site Duolingo hosts on the efficacy of Duolingo lists publications as recent as 2024, but of those listed (2021-2024), only 5 were peer-reviewed and only 2 more were independent research reports (2022 & 2023). The remaining 9 publications were Duolingo internal research reports. So, while a major marketing feature of the app is its "science backed, researched based, approach," their research leaves much to be desired. Additionally, the way they determine efficacy in their own reports, as described in this blog post, relies on an insufficient dataset.

And while they openly share datasets derived from Duolingo users, there are no clear bibliographies for individual language courses. What datasets are their curriculum creators using? And what curriculum creators do they even have left, considering the massive layoffs on their translation team (10%) and the fact that the remaining translators are tasked with editing AI content?

Duo can be run over by a goddamn cybertruck but god forbid the app actually spend any money on the language programs you're playing with.

#sorry I hate that stupid green owl#duolingo#linguist problems#linguistic anthropology#linguist humor#linguistic analysis#languages#language learning#dark academia#chaos academia#punk academia#duolingo owl#anti ai

i hate when people's opinion on ai is either that it’s the best thing the human race has ever invented or that it will kill humans and their creativity, when it’s so obviously neither. trying to idolize it will make it impossible for creatives to thrive, and trying to fully ban it will send us moving backwards.

i always think of ai the same way you’d think of calculators. in the math community, lots of people thought calculators would make it so no one would ever use math again, when obviously that’s not true. think about how you use one in class: it helps with the mundane, simple equations, or the ones that take too much time to do by hand when you’re in a scenario where you need numbers fast. you still learn how to do it the mechanical way, you still have to know how to calculate by hand, but after you’ve mastered all that, the calculator can do it for you when you don’t want to. this notion goes further with testing, as the harder sections of tests are usually the only parts that allow calculators of any kind. of course, you can use a calculator to its fullest and find the answer to virtually any math problem you’d need to, but most of us don’t, right? i believe that ai can be used in this same way, since it’s already so integrated into our lives.

now i’m in no way saying that we should accept it completely with open arms, and of course i recognize that it has a lot more power than a standard calculator; the important thing to take away from its emergence is moderation. it’s clearly not going away any time soon, so the best thing to do is to use it in small doses for minimal tasks. i for one use it almost every day, but solely for things like generating practice problems for chemistry or language review worksheets. it’s truly ALL about how you use the tool. when it’s writing full papers or generating artwork, it’s a whole different story, but it can be used in ways that improve us without making us less intelligent or driven.

i’d love to live in a world where ai never existed, but as it’s getting implemented into school curriculums and every social media platform is making its own ai bot, i think people need to be aware of the ethical ways you yourself can use ai. i am no supporter; i am just thinking realistically. no, it will not take over our lives, and no, it will not cause the death of creativity, because as long as we are alive, creativity will be alive. both glorifying it and making it into a supervillain will make no progress. i’m not telling anyone to support my perspective, just take some time to think about how ai could be put to use in society in an ethical way, not to replace humans, but to better ourselves.

#rant bcs i was talking to my 2 friends#one who uses it constantly and one who detests it#i hate using it but i feel like people should know it WILL become a daily tool in the near future#and that you shouldn’t be losing sleep over that notion#sorry if i sound like a tech bro i promise im not#just want to share my idea#𐙚 rants

"Open" "AI" isn’t

Tomorrow (19 Aug), I'm appearing at the San Diego Union-Tribune Festival of Books. I'm on a 2:30PM panel called "Return From Retirement," followed by a signing:

https://www.sandiegouniontribune.com/festivalofbooks

The crybabies who freak out about The Communist Manifesto appearing on university curricula clearly never read it – chapter one is basically a long hymn to capitalism's flexibility and inventiveness, its ability to change form and adapt itself to everything the world throws at it and come out on top:

https://www.marxists.org/archive/marx/works/1848/communist-manifesto/ch01.htm#007

Today, leftists signal this protean capacity of capital with the -washing suffix: greenwashing, genderwashing, queerwashing, wokewashing – all the ways capital cloaks itself in liberatory, progressive values, while still serving as a force for extraction, exploitation, and political corruption.

A smart capitalist is someone who, sensing the outrage at a world run by 150 old white guys in boardrooms, proposes replacing half of them with women, queers, and people of color. This is a superficial maneuver, sure, but it's an incredibly effective one.

In "Open (For Business): Big Tech, Concentrated Power, and the Political Economy of Open AI," a new working paper, Meredith Whittaker, David Gray Widder and Sarah B Myers document a new kind of -washing: openwashing:

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4543807

Openwashing is the trick that large "AI" companies use to evade regulation and neutralize critics, by casting themselves as forces of ethical capitalism, committed to the virtue of openness. No one should be surprised to learn that the products of the "open" wing of an industry whose products are neither "artificial," nor "intelligent," are also not "open." Every word AI hucksters say is a lie; including "and," and "the."

So what work does the "open" in "open AI" do? "Open" here is supposed to invoke the "open" in "open source," a movement that emphasizes a software development methodology that promotes code transparency, reusability and extensibility, which are three important virtues.

But "open source" itself is an offshoot of a more foundational movement, the Free Software movement, whose goal is to promote freedom, and whose method is openness. The point of software freedom was technological self-determination, the right of technology users to decide not just what their technology does, but who it does it to and who it does it for:

https://locusmag.com/2022/01/cory-doctorow-science-fiction-is-a-luddite-literature/

The open source split from free software was ostensibly driven by the need to reassure investors and businesspeople so they would join the movement. The "free" in free software is (deliberately) ambiguous, a bit of wordplay that sometimes misleads people into thinking it means "Free as in Beer" when really it means "Free as in Speech" (in Romance languages, these distinctions are captured by translating "free" as "libre" rather than "gratis").

The idea behind open source was to rebrand free software in a less ambiguous – and more instrumental – package that stressed cost-savings and software quality, as well as "ecosystem benefits" from a co-operative form of development that recruited tinkerers, independents, and rivals to contribute to a robust infrastructural commons.

But "open" doesn't merely resolve the linguistic ambiguity of libre vs gratis – it does so by removing the "liberty" from "libre," the "freedom" from "free." "Open" changes the pole-star that movement participants follow as they set their course. Rather than asking "Which course of action makes us more free?" they ask, "Which course of action makes our software better?"

Thus, by dribs and drabs, the freedom leeches out of openness. Today's tech giants have mobilized "open" to create a two-tier system: the largest tech firms enjoy broad freedom themselves – they alone get to decide how their software stack is configured. But for all of us who rely on that (increasingly unavoidable) software stack, all we have is "open": the ability to peer inside that software and see how it works, and perhaps suggest improvements to it:

https://www.youtube.com/watch?v=vBknF2yUZZ8

In the Big Tech internet, it's freedom for them, openness for us. "Openness" – transparency, reusability and extensibility – is valuable, but it shouldn't be mistaken for technological self-determination. As the tech sector becomes ever-more concentrated, the limits of openness become more apparent.

But even by those standards, the openness of "open AI" is thin gruel indeed (that goes triple for the company that calls itself "OpenAI," which is a particularly egregious openwasher).

The paper's authors start by suggesting that the "open" in "open AI" is meant to imply that an "open AI" can be scratch-built by competitors (or even hobbyists), but that this isn't true. Not only is the material that "open AI" companies publish insufficient for reproducing their products; even if those gaps were plugged, the resource burden required to do so is so intense that only the largest companies could manage it.

Beyond this, the "open" parts of "open AI" are insufficient for achieving the other claimed benefits of "open AI": they don't promote auditing, or safety, or competition. Indeed, they often cut against these goals.

"Open AI" is a wordgame that exploits the malleability of "open," but also the ambiguity of the term "AI": "a grab bag of approaches, not… a technical term of art, but more … marketing and a signifier of aspirations." Hitching this vague term to "open" creates all kinds of bait-and-switch opportunities.

That's how you get Meta claiming that LLaMa-2 is "open source," despite being licensed in a way that is absolutely incompatible with any widely accepted definition of the term:

https://blog.opensource.org/metas-llama-2-license-is-not-open-source/

LLaMa-2 is a particularly egregious openwashing example, but there are plenty of other ways that "open" is misleadingly applied to AI: sometimes it means you can see the source code, sometimes that you can see the training data, and sometimes that you can tune a model, all to different degrees, alone and in combination.

But even the most "open" systems can't be independently replicated, due to raw computing requirements. This isn't the fault of the AI industry – the computational intensity is a fact, not a choice – but when the AI industry claims that "open" will "democratize" AI, they are hiding the ball. People who hear these "democratization" claims (especially policymakers) are thinking about entrepreneurial kids in garages, but unless these kids have access to multi-billion-dollar data centers, they can't be "disruptors" who topple tech giants with cool new ideas. At best, they can hope to pay rent to those giants for access to their compute grids, in order to create products and services at the margin that rely on existing products, rather than displacing them.

The "open" story, with its claims of democratization, is an especially important one in the context of regulation. In Europe, where a variety of AI regulations have been proposed, the AI industry has co-opted the open source movement's hard-won narrative battles about the harms of ill-considered regulation.

For open source (and free software) advocates, many tech regulations aimed at taming large, abusive companies – such as requirements to surveil and control users to extinguish toxic behavior – wreak collateral damage on the free, open, user-centric systems that we see as superior alternatives to Big Tech. This leads to the paradoxical effect of passing regulations to "punish" Big Tech that end up simply shaving an infinitesimal percentage off the giants' profits, while destroying the small co-ops, nonprofits and startups before they can grow to be a viable alternative.

The years-long fight to get regulators to understand this risk has been waged by principled actors working for subsistence nonprofit wages or for free, and now the AI industry is capitalizing on lawmakers' hard-won consideration for collateral damage by claiming to be "open AI" and thus vulnerable to overbroad regulation.

But the "open" projects that lawmakers have been coached to value are precious because they deliver a level playing field, competition, innovation and democratization – all things that "open AI" fails to deliver. The regulations the AI industry is fighting also don't necessarily raise the free speech concerns that are core to protecting free software:

https://www.eff.org/deeplinks/2015/04/remembering-case-established-code-speech

Just think about LLaMa-2. You can download it for free, along with the model weights it relies on – but not detailed specs for the data that was used in its training. And the source-code is licensed under a homebrewed license cooked up by Meta's lawyers, a license that only glancingly resembles anything from the Open Source Definition:

https://opensource.org/osd/

Core to Big Tech companies' "open AI" offerings are tools, like Meta's PyTorch and Google's TensorFlow. These tools are indeed "open source," licensed under real OSS terms. But they are designed and maintained by the companies that sponsor them, and optimize for the proprietary back-ends each company offers in its own cloud. When programmers train themselves to develop in these environments, they are gaining expertise in adding value to a monopolist's ecosystem, locking themselves in with their own expertise. This is a classic example of software freedom for tech giants and open source for the rest of us.

One way to understand how "open" can produce a lock-in that "free" might prevent is to think of Android: Android is an open platform in the sense that its source code is freely licensed, but the existence of Android doesn't make it any easier to challenge the mobile OS duopoly with a new mobile OS; nor does it make it easier to switch from Android to iOS and vice versa.

Another example: MongoDB, a free/open database tool that was adopted by Amazon, which subsequently forked the codebase and tuned it to work on its proprietary cloud infrastructure.

The value of open tooling as a stickytrap for creating a pool of developers who end up as sharecroppers who are glued to a specific company's closed infrastructure is well-understood and openly acknowledged by "open AI" companies. Zuckerberg boasts about how PyTorch ropes developers into Meta's stack, "when there are opportunities to make integrations with products, [so] it’s much easier to make sure that developers and other folks are compatible with the things that we need in the way that our systems work."

Tooling is a relatively obscure issue, primarily debated by developers. A much broader debate has raged over training data – how it is acquired, labeled, sorted and used. Many of the biggest "open AI" companies are totally opaque when it comes to training data. Google and OpenAI won't even say how many pieces of data went into their models' training – let alone which data they used.

Other "open AI" companies use publicly available datasets like the Pile and CommonCrawl. But you can't replicate their models by shoveling these datasets into an algorithm. Each one has to be groomed – labeled, sorted, de-duplicated, and otherwise filtered. Many "open" models merge these datasets with other, proprietary sets, in varying (and secret) proportions.

Quality filtering and labeling for training data is incredibly expensive and labor-intensive, and involves some of the most exploitative and traumatizing clickwork in the world, as poorly paid workers in the Global South make pennies for reviewing data that includes graphic violence, rape, and gore.

Not only is the product of this "data pipeline" kept a secret by "open" companies, the very nature of the pipeline is likewise cloaked in mystery, in order to obscure the exploitative labor relations it embodies (the joke that "AI" stands for "absent Indians" comes out of the South Asian clickwork industry).

The most common "open" in "open AI" is a model that arrives built and trained, which is "open" in the sense that end-users can "fine-tune" it – usually while running it on the manufacturer's own proprietary cloud hardware, under that company's supervision and surveillance. These tunable models are undocumented blobs, not the rigorously peer-reviewed transparent tools celebrated by the open source movement.

If "open" was a way to transform "free software" from an ethical proposition to an efficient methodology for developing high-quality software; then "open AI" is a way to transform "open source" into a rent-extracting black box.

Some "open AI" has slipped out of the corporate silo. Meta's LLaMa was leaked by early testers, republished on 4chan, and is now in the wild. Some exciting stuff has emerged from this, but despite this work happening outside of Meta's control, it is not without benefits to Meta. As an infamous leaked Google memo explains:

> Paradoxically, the one clear winner in all of this is Meta. Because the leaked model was theirs, they have effectively garnered an entire planet's worth of free labor. Since most open source innovation is happening on top of their architecture, there is nothing stopping them from directly incorporating it into their products.

https://www.searchenginejournal.com/leaked-google-memo-admits-defeat-by-open-source-ai/486290/

Thus, "open AI" is best understood as "free product development" for large, well-capitalized AI companies, conducted by tinkerers who will not be able to escape these giants' proprietary compute silos and opaque training corpuses, and whose work product is guaranteed to be compatible with the giants' own systems.

The instrumental story about the virtues of "open" often invokes auditability: the fact that anyone can look at the source code makes it easier for bugs to be identified. But as open source projects have learned the hard way, the fact that anyone can audit your widely used, high-stakes code doesn't mean that anyone will.

The Heartbleed vulnerability in OpenSSL was a wake-up call for the open source movement – a bug that endangered every secure webserver connection in the world, which had hidden in plain sight for years. The result was an admirable and successful effort to build institutions whose job it is to actually make use of open source transparency to conduct regular, deep, systemic audits.

In other words, "open" is a necessary, but insufficient, precondition for auditing. But when the "open AI" movement touts its "safety" thanks to its "auditability," it fails to describe any steps it is taking to replicate these auditing institutions – how they'll be constituted, funded and directed. The story starts and ends with "transparency" and then makes the unjustifiable leap to "safety," without any intermediate steps about how the one will turn into the other.

It's a Magic Underpants Gnome story, in other words:

Step One: Transparency

Step Two: ??

Step Three: Safety

https://www.youtube.com/watch?v=a5ih_TQWqCA

Meanwhile, OpenAI itself has gone on record as objecting to "burdensome mechanisms like licenses or audits" as an impediment to "innovation" – all the while arguing that these "burdensome mechanisms" should be mandatory for rival offerings that are more advanced than its own. To call this a "transparent ruse" is to do violence to good, hardworking transparent ruses all the world over:

https://openai.com/blog/governance-of-superintelligence

Some "open AI" is much more open than the industry-dominating offerings. There's EleutherAI, a donor-supported nonprofit whose model comes with documentation and code, licensed Apache 2.0. There are also some smaller academic offerings: Vicuna (UCSD/CMU/Berkeley); Koala (Berkeley) and Alpaca (Stanford).

These are indeed more open (though Alpaca – which ran on a laptop – had to be withdrawn because it "hallucinated" so profusely). But to the extent that the "open AI" movement invokes (or cares about) these projects, it is in order to brandish them before hostile policymakers and say, "Won't someone please think of the academics?" These are the poster children for proposals like exempting AI from antitrust enforcement, but they're not significant players in the "open AI" industry, nor are they likely to be for so long as the largest companies are running the show:

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4493900

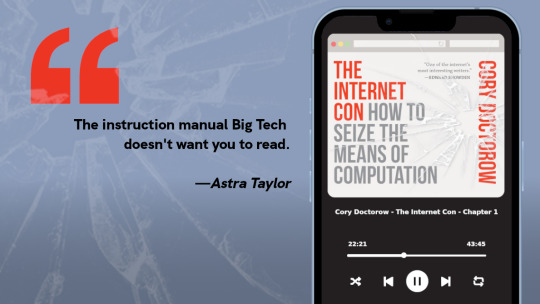

I'm kickstarting the audiobook for "The Internet Con: How To Seize the Means of Computation," a Big Tech disassembly manual to disenshittify the web and make a new, good internet to succeed the old, good internet. It's a DRM-free book, which means Audible won't carry it, so this crowdfunder is essential. Back now to get the audio, Verso hardcover and ebook:

http://seizethemeansofcomputation.org

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/08/18/openwashing/#you-keep-using-that-word-i-do-not-think-it-means-what-you-think-it-means

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#llama-2#meta#openwashing#floss#free software#open ai#open source#osi#open source initiative#osd#open source definition#code is speech

On AI: I use it for work. I used it to create my CV and cover letter to get my job. I've taken a course on how to effectively use AI. I've taken a course on the ethics and challenges of AI. I hear the university I went to is now implementing AI into their curriculum.

I support it being used as an assistant. I think it's fine as long as you don't claim you wrote it yourself. We have AI research assistants, AI medical assistants, and yes, AI writing assistants.

I'm also a conscientious consumer. I don't use AI owned by people I detest. I don't buy products that have AI on the packaging or use AI images for marketing (it SCREAMS scam).

I also think we should be careful of data security. People should not be using AI for therapy. People should not be letting AI run their schedules, answer their emails or watch their meetings. These things are like viruses and hard to contain once you hand control over to them. Data security is paramount.

In general, there's a lot of nuance that needs to be taken into account. I think one of the biggest issues with AI and automation is the lack of transparency. It's horrifying to know judges use vague automated risk scores to sentence criminals, and AI can be used to 'catch' the wrong suspects for crimes while still being lent credence by virtue of not being human.

Machines are not smarter or more reliable than us! They are simply faster, and sometimes speed is necessary.

💁🏽♀️: I’m going to just put this right here. Yes, bless you, anon. I agree with everything you just said.

Planting Seeds of Compassion in a Digital Age

A Classroom Kit for Teaching AI + SEL with Heart

As artificial intelligence becomes a bigger part of our lives, a new question is blooming in the minds of educators: How can we help children not only use AI—but relate to it with empathy, wisdom, and kindness? This class material offers one answer: a vibrant, age-appropriate toolkit for K–5 learners that blends AI literacy, ethics, and…

#ai#AI classroom posters#AI education for kids#AI for K-5 students#AI literacy#artificial intelligence#building ethical AI#character education#classroom activities with AI#classroom ethics#compassion in education#digital citizenship#education#elementary AI curriculum#emotional learning and AI#empathy for AI#ethical AI#future-ready classrooms#humane technology education#inclusive AI education#mindful technology use#responsible AI use#robots in the classroom#SEL classroom resources#social-emotional learning#teacher AI resources#teaching#teaching empathy#teaching future skills#teaching kindness

1 note



Text

Microsoft and OpenAI announced on Tuesday that they are helping to launch an AI training center for members of the second-largest teachers’ union in the US.

The National Academy for AI Instruction will open later this year in New York City and aims initially to equip kindergarten through 12th grade instructors in the American Federation of Teachers with tools and training for integrating AI into classrooms.

“Teachers are facing huge challenges, which include navigating AI wisely, ethically, and safely,” AFT president Randi Weingarten said during a press conference on Tuesday. “When we saw ChatGPT in November 2022, we knew it would fundamentally change our world. The question was whether we would be chasing it or we would try to harness it.”

Anthropic, which develops the Claude chatbot, also recently became a collaborator on what the union described as a first-of-its-kind $23 million initiative funded by the tech companies to bring free training to teachers.

WIRED earlier reported on the effort, citing details that were inadvertently published early on YouTube.

Schools have struggled over the past few years to keep pace with students’ adoption of AI chatbots such as OpenAI’s ChatGPT, Microsoft’s Copilot, and Google’s Gemini. While highly capable at helping write papers and solve some math problems, the technologies can also confidently make costly errors. And they have left parents, educators, and employers concerned about whether chatbots rob students of the opportunity to develop essential skills on their own.

Some school districts have deployed new tools to catch AI-assisted cheating, and teachers have begun rolling out lessons about what they view as responsible use of generative AI. Educators have been using AI to help with the time-consuming work of developing teaching plans and materials, and they also tout how it has introduced greater interactivity and creativity in the classroom.

Weingarten, the union president, has said that educators must have a seat at the table in how AI is integrated into their profession. The new academy could help teachers better understand fast-changing AI technologies and evolve their curriculum to prepare students for a world in which the tools are core to many jobs.

Chris Lehane, OpenAI’s chief global affairs officer, said on Tuesday that the spread of AI and a resulting increase in productivity were inevitable. “Can we ensure those productivity gains are democratized?” he said. “There is no better place to begin that work than the classroom.”

But the program is likely to draw rebuke from some union members concerned about the commercial incentives of tech giants shaping what happens in US classrooms. Google, Apple, and Microsoft have competed for years to get their tools into schools in hopes of turning children into lifelong users. (Microsoft and OpenAI have also increasingly become competitors, despite a once-close relationship.)

Just last week, several professors in the Netherlands published an open letter calling for local universities to reconsider financial relationships with AI companies and ban AI use in the classroom. All-out bans appear unlikely amid the growing usage of generative AI chatbots. So AI companies, employers, and labor unions may be left to try to find some common ground.

The forthcoming training academy follows a partnership Microsoft struck in December 2023 to work with the American Federation of Labor and Congress of Industrial Organizations on developing and deploying AI systems. The American Federation of Teachers is part of the AFL-CIO, and Microsoft had said at the time it would work with the union to explore AI education for workers and students.

The AFT and the trio of tech companies partnering on the academy are seeking to support about 400,000 union members over the next five years, or about 10 percent of all teachers nationwide. How the new training will intersect with local policies for AI use—often set by elected school boards—is unclear.

The academy’s curriculum will include workshops and online courses that are designed by “leading AI experts and experienced educators” and count for what are known as continuing education credits, according to the press release. It will be operated “under the leadership of the AFT and a coalition of public and private stakeholders,” the release added.

Weingarten credited venture capitalist and federation member Roy Bahat for proposing the concept of a center “where companies come to the union to create standards.”

The federation’s website says it represents about 1.8 million workers, which, besides K-12 teachers, also include school nurses and college staff. The AI training will eventually be open to all members. The National Education Association, the largest US teachers’ union, covers about 3 million people, according to its website.

6 notes


Text

At M.Kumarasamy College of Engineering (MKCE), we emphasize the significance of engineering ethics in shaping responsible engineers. Engineering ethics guide decision-making, foster professionalism, and ensure societal welfare. Our curriculum integrates these principles, teaching students to consider the long-term impacts of their work. Students are trained in truthfulness, transparency, and ethical communication, while also prioritizing public safety and environmental sustainability. We focus on risk management and encourage innovation in sustainable technologies. Our programs also address contemporary challenges like artificial intelligence and cybersecurity, preparing students to tackle these with ethical responsibility. MKCE nurtures future engineers who lead with integrity and contribute to society’s well-being.

To learn more: https://mkce.ac.in/blog/engineering-ethics-and-navigating-the-challenges-of-modern-technologies/

#mkce college#top 10 colleges in tn#engineering college in karur#best engineering college in karur#private college#libary#mkce#best engineering college#mkce.ac.in#engineering college#• Engineering Ethics#Engineering Decision Making#AI and Ethics#Cybersecurity Ethics#Public Safety in Engineering#• Environmental Sustainability in Engineering#Professional Responsibility#Risk Management in Engineering#Artificial Intelligence Challenges#Engineering Leadership#• Data Privacy and Security#Ethical Engineering Practices#Sustainable Engineering Solutions#• Technological Innovation and Ethics#Technological Innovation and Ethics#MKCE Engineering Curriculum#• Social Responsibility in Engineering#• Engineering Ethics in AI#Workplace Ethics in Engineering#Collaboration in Engineering Projects

0 notes

Text

My OC's search history: Bonnie

Results from SEPTEMBER 2022-APRIL 2023 AQA GCSE revision guides English. AQA GCSE revision guides History. AQA GCSE revision guides Physics. AQA GCSE revision guides Maths. Hamilton tickets York. AQA GCSE revision guides English Lit. Is my best friend gay quiz. How to identify flirting. Is she bad at flirting or just friends? Andromeda galaxy. Aliens Andromeda galaxy. Alien sightings before.2021. Tatana. Khraban. Alien station. Alien station not the musical thing. Station alien kidnapping before.2021. 2000 missing child case Scotland NAME OF MISSING CHILD SCOTLAND 2000 STIRLING. How to tell my wife that her mum has met aliens too. Are we still married if we got married in a different place. History AQA GCSE questions guide. Bailey Roberts parole. What age can someone legally move out? Can I adopt my friend? Can my friends parents adopts my wait Can an aunt and uncle adopt their nephew? I can't believe I forgot about that. 2023 AQA GCSE exam timetable. Is a beach date a good idea in April? Gemstone gifts. Cat shaped gemstone. Is four months too early for rude joke presents? When is too early to propose on Earth? I meant in England. GCSE AQA History curriculum Who is Andreas Versalius Do aliens actually exist Hangover cure. How to help drunk girlfriend. Drunk girlfriend keeps crying help. I love her but she won't stop crying what do I do? Is it ethical to withhold kisses until she drinks some water? Hotels in Bridlington cheap Bus to brddlnton do alins have train stations n york i don't think they. do Girlfriend keeps having nightmares about Gir How the fuck do I phrase this? Nightmares about being someone who hurt you? Girlfriend thinks she's having telepathic nightmares? Please give me something. Stop showing me ai. How to disable ai on Google search. Fuck you. GCSE AQA History final tips. Last tips history GCSE. What to do when you fail your history exam.

#tatana#the queen's eye#my characters#character creation#original characters#original character#queer writers#writer community#female writers#writers block#writers#writeblr#writers on tumblr#creative writing#writers of tumblr#booktok#bookstagram

5 notes


Text

How To Protect Your Kid’s Digital Footprint

Digitalisation is advancing rapidly, and the need of the hour is to become tech-savvy enough to keep up. Just as there are rules for driving, there are rules that keep us safe in the digital ecosystem. Children now reach the digital world very early: they attend online classes, coding classes, and more. To keep them safe, digital literacy should be an important part of their curriculum.

So, let us understand what “digital literacy” truly means and why it’s a superpower every student needs today. It includes the ability to:

1.) Access and evaluate digital content critically
2.) Communicate and collaborate online respectfully
3.) Use software and apps to solve problems or create new content
4.) Stay safe by protecting one’s privacy, data, and digital reputation

It’s a combination of technical skills, critical thinking, and ethical responsibility. Just as traditional literacy enables us to read, write, and comprehend, digital literacy enables us to understand, create, and interact safely in an online world.

Why Is Digital Literacy Important?

Every time a student logs into a website, posts on social media, downloads an app, or even plays an online game, they leave behind a trail of data known as their digital footprint. This trail can reveal a lot about a person: their likes, dislikes, locations, passwords, and more. Without digital literacy, students may unknowingly:

1.) Share sensitive personal information
2.) Fall victim to cyberbullying or online scams
3.) Be exposed to misinformation or fake news
4.) Damage their online reputation, possibly affecting future education or job prospects

With cyber threats and data breaches on the rise, teaching students how to protect their digital footprint is no longer optional; it’s essential.

STEM Education: Creating Smart, Safe Digital Citizens

STEM stands for Science, Technology, Engineering, and Mathematics. It’s not just a subject; it’s a teaching approach that encourages curiosity, creativity, and problem-solving, and it prepares students for a future deeply intertwined with technology. STEM education today includes digital awareness: it teaches students how to code, how to use tech tools, how to think logically, and how to stay secure while doing all of that. Through STEM, children are encouraged to be:

1.) Thinkers: able to question digital content and avoid fake news
2.) Creators: capable of designing apps, building robots, and innovating with tech
3.) Responsible Users: people who understand online etiquette, privacy, and security

This way of thinking doesn’t just prepare students for jobs that haven’t even been invented yet; it also helps them be smart, responsible citizens right now in our increasingly digital world. It makes them more curious and more willing to question the reliability of what they see.

Atal Tinkering Labs: Where Young Minds Become Super Inventors!

Think of Atal Tinkering Labs (ATLs) as clubhouses for budding scientists and engineers, popping up in schools all over India! These spaces are an Indian government initiative, a special mission to spark creativity. The labs are packed with modern tech tools, including:

Robotics kits

IoT sensors

DIY electronics tools

3D printers

Coding platforms etc.

How STEMROBO Is Making It Happen

STEMROBO creates customised plans to help schools get the best outcomes. It helps set up and manage ATL labs, and also offers:

1.) Structured programs on coding, robotics, AI, and digital safety
2.) Training for school teachers to become STEM mentors
3.) Interactive sessions on how to stay safe online

What makes STEMROBO stand out is its student-first approach. The aim is not just to teach students to build machines or write code, but also to help them think independently, use tech ethically, communicate safely online, and understand how their data is being used. By integrating digital literacy into STEM learning, STEMROBO ensures that innovation never comes at the cost of safety.

Impact on Students: Confidence, Creativity, and Caution

Thanks to STEM education and ATL labs powered by STEMROBO, students across India are showing:

More confidence in using digital tools

Greater awareness of how to protect their privacy

Better problem-solving and design-thinking skills

Higher participation in coding, innovation, and national tech competitions

And most importantly, they’re learning how to use technology to improve their lives without risking their digital well-being.

Conclusion: Building a Safer Digital Future

As the digital landscape continues to grow rapidly, digital literacy becomes ever more important, because so much of our personal information is now online, where hackers and fraudsters can try to access and steal it. At STEMROBO, we combine STEM education with hands-on learning, teaching students not just technology but also how to succeed in life. They’re not just learning how to build robots or apps; they’re learning how to build a safer, smarter future. And it all starts with that first step: understanding the value of digital literacy.

1 note


Text

Artificial Intelligence: Transforming the Future of Technology

Introduction: Artificial intelligence (AI) has become increasingly prominent in our everyday lives, revolutionizing the way we interact with technology. From virtual assistants like Siri and Alexa to predictive algorithms used in healthcare and finance, AI is shaping the future of innovation and automation.

Understanding Artificial Intelligence

Artificial intelligence (AI) involves creating computer systems capable of performing tasks that usually require human intelligence, including visual perception, speech recognition, decision-making, and language translation. By utilizing algorithms and machine learning, AI can analyze vast amounts of data and identify patterns to make autonomous decisions.

Applications of Artificial Intelligence

Healthcare: AI is being used to streamline medical processes, diagnose diseases, and personalize patient care.

Finance: Banks and financial institutions are leveraging AI for fraud detection, risk management, and investment strategies.

Retail: AI-powered chatbots and recommendation engines are enhancing customer shopping experiences.

Automotive: Self-driving cars are a prime example of AI technology revolutionizing transportation.

How Artificial Intelligence Works

AI systems are designed to mimic human intelligence by processing large datasets, learning from patterns, and adapting to new information. Machine learning algorithms and neural networks enable AI to continuously improve its performance and make more accurate predictions over time.
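The paragraph above describes AI as learning from patterns and improving over time. As a loose illustration only, the sketch below assumes the simplest possible case: fitting a straight line to example data with gradient descent, a basic machine learning technique. The function name `train_linear_model`, the data, and the learning rate are illustrative assumptions, not any specific product's method; real AI systems use far larger models and datasets.

```python
# Minimal sketch (assumption): "learning from patterns" illustrated as fitting
# a straight line y = w*x + b to example data with gradient descent.
# A toy illustration, not how any particular AI system is built.


def train_linear_model(xs, ys, learning_rate=0.05, epochs=500):
    """Fit w and b by repeatedly nudging them to reduce mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    history = []  # loss recorded at each epoch
    for _ in range(epochs):
        # Predictions with the current parameters
        preds = [w * x + b for x in xs]
        errors = [p - y for p, y in zip(preds, ys)]
        # Mean squared error: how wrong the model currently is
        loss = sum(e * e for e in errors) / n
        history.append(loss)
        # Gradients of the mean squared error with respect to w and b
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        # Step against the gradient so the error shrinks
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b, history


if __name__ == "__main__":
    # Hypothetical training data that follows the pattern y = 2x + 1
    xs = [0.0, 1.0, 2.0, 3.0, 4.0]
    ys = [2 * x + 1 for x in xs]
    w, b, history = train_linear_model(xs, ys)
    print(f"learned w={w:.3f}, b={b:.3f}")
    print(f"loss: first={history[0]:.3f}, last={history[-1]:.6f}")
```

Each pass lowers the error a little, which is one concrete sense in which a model "improves its performance over time"; the learned parameters end up close to the pattern hidden in the data.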

Advantages of Artificial Intelligence

Efficiency: AI can automate repetitive tasks, saving time and increasing productivity.

Precision: AI algorithms can analyze large datasets consistently, which can lead to more accurate predictions and insights.

Personalization: AI can tailor recommendations and services to individual preferences, enhancing the customer experience.

Challenges and Limitations

Ethical Concerns: The use of AI raises ethical questions around data privacy, algorithm bias, and job displacement.

Security Risks: As AI becomes more integrated into critical systems, the risk of cyber attacks and data breaches increases.

Regulatory Compliance: Organizations must adhere to strict regulations and guidelines when implementing AI solutions to ensure transparency and accountability.

Conclusion: As artificial intelligence continues to evolve and expand its capabilities, it is essential for businesses and individuals to adapt to this technological shift. By leveraging AI's potential for innovation and efficiency, we can unlock new possibilities and drive progress in various industries. Embracing artificial intelligence is not just about staying competitive; it is about shaping a future where intelligent machines work hand in hand with humans to create a smarter and more connected world.

Syntax Minds is a training institute located in Hyderabad. The institute provides various technical courses, typically focusing on software development, web design, and digital marketing. Their curriculum often includes subjects like Java, Python, Full Stack Development, Data Science, Machine Learning, Angular JS, React JS, and other tech-related fields.

For the most accurate and up-to-date information on courses, fees, batch timings, and admission procedures, check their official website or contact them directly.


2 notes
