#GPT-4

Explore tagged Tumblr posts

Text

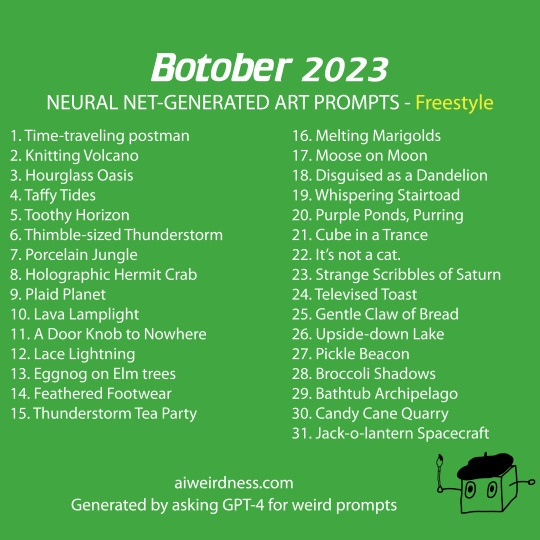

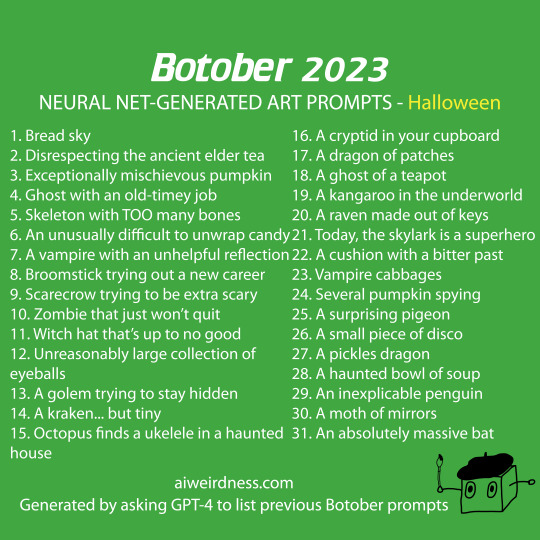

2023 Botober drawing prompts

Here they are!

I continued my tradition of using the year's state-of-the-art models to generate the prompts which means my methods were:

Ask GPT-4 to make its drawing prompts really weird, in the style of AI Weirdness Botober. Then sift through all the cutesy alliteration for the reasonably interesting stuff.

Ask GPT-4 to list Botober prompts from previous years. Instead of actually recalling them, it just claimed to do so and then produced other stuff.

Ask GPT-4 to list Botober prompts from previous years, then turn up the temperature (chaos) on the model until it allllllmost breaks.

Here are examples of some of the longer responses I got with GPT-4 and chaos setting 1.2. They wouldn't fit in the grid, but please feel free to substitute any of these for any of the prompts above.

Immortal Turnips disrespecting celery. OWL PHANTOM AND CABBAGE-O-LANTERN (LIGHT) Final parsnips in particular Before memory, an ancient tool waits to be discovered The Candle That Leapt over the Lake at dusk does a pirouette dussewe wind at earth bubb submission velvet little hurricane breakfast blown in be putty and goodness vodka… gaselocity scoct slices vold cave with When humans item. And those humans aren’t ordinary. See through your balloon eyes The matibrush comes tomorrow in shorts Retired Pirate Chooses Invisible Beard Open the Happy Bones The Cursed Flavor Fiend Slurps your Essence. Glance of the lavender popcorn mussel Eureka! Solar system pancakes Pause to contemplate radishes Apple mystery solution—the crypt underneath The totally real crown of all Barnacles

I also tried Bard and Bing, but their responses were repetitive and generic and I didn't have a chaos slider to play with.

(more at aiweirdness.com)

If you draw any of these, tag them with #botober or #botober2023 so I can find them!

#neural networks#botober#botober 2023#october drawing challenge#gpt-4#if it was actually retrieving past botober prompts as it claimed none of this would have worked

402 notes

·

View notes

Text

AI or human?

A study led by Cameron Jones revealed that people struggle to differentiate between GPT-4 and human agents, with GPT-4 surpassing human performance in nearly 50% of cases.

Exploring GPT-4’s human-like intelligence This increased pandemonium caused the researchers at UC San Diego to address this dilemma. They ran the well-known Turing test, named after renowned scientist Alan Turing. The test aimed to determine the extent to which a machine could possess an intelligence level like that of a human. Source

7 notes

·

View notes

Text

"GPT-DeepSeek AI: क्या यह GPT-4 को पछाड़ देगा? सच जानकर हैरान रह जाएंगे!"

#GPT-DeepSeek AI#GPT-4#AI तुलना#लैंग्वेज मॉडल्स#SEO#कंटेंट क्रिएशन#AI तकनीक#DeepSeek AI#आर्टिफिशियल इंटेलिजेंस#SEO में AI

3 notes

·

View notes

Text

#DeepSeek V3#الذكاء الاصطناعي الصيني#نماذج الذكاء الاصطناعي#سرعة المعالجة#خوارزميات الذكاء الاصطناعي#API DeepSeek#تحسينات DeepSeek V3#مقارنة النماذج#GPT-4#Llama 3.1#Cloud 3.5#معالجة البيانات#تقنيات الذكاء الاصطناعي#تطبيقات DeepSeek#مفتوح المصدر

3 notes

·

View notes

Text

15 notes

·

View notes

Text

:

2 notes

·

View notes

Text

Remember: Don't pirate movies. Bing is your friend. Please talk to it. Please.

4 notes

·

View notes

Text

From Recurrent Networks to GPT-4: Measuring Algorithmic Progress in Language Models - Technology Org

New Post has been published on https://thedigitalinsider.com/from-recurrent-networks-to-gpt-4-measuring-algorithmic-progress-in-language-models-technology-org/

From Recurrent Networks to GPT-4: Measuring Algorithmic Progress in Language Models - Technology Org

In 2012, the best language models were small recurrent networks that struggled to form coherent sentences. Fast forward to today, and large language models like GPT-4 outperform most students on the SAT. How has this rapid progress been possible?

Image credit: MIT CSAIL

In a new paper, researchers from Epoch, MIT FutureTech, and Northeastern University set out to shed light on this question. Their research breaks down the drivers of progress in language models into two factors: scaling up the amount of compute used to train language models, and algorithmic innovations. In doing so, they perform the most extensive analysis of algorithmic progress in language models to date.

Their findings show that due to algorithmic improvements, the compute required to train a language model to a certain level of performance has been halving roughly every 8 months. “This result is crucial for understanding both historical and future progress in language models,” says Anson Ho, one of the two lead authors of the paper. “While scaling compute has been crucial, it’s only part of the puzzle. To get the full picture you need to consider algorithmic progress as well.”

The paper’s methodology is inspired by “neural scaling laws”: mathematical relationships that predict language model performance given certain quantities of compute, training data, or language model parameters. By compiling a dataset of over 200 language models since 2012, the authors fit a modified neural scaling law that accounts for algorithmic improvements over time.

Based on this fitted model, the authors do a performance attribution analysis, finding that scaling compute has been more important than algorithmic innovations for improved performance in language modeling. In fact, they find that the relative importance of algorithmic improvements has decreased over time. “This doesn’t necessarily imply that algorithmic innovations have been slowing down,” says Tamay Besiroglu, who also co-led the paper.

“Our preferred explanation is that algorithmic progress has remained at a roughly constant rate, but compute has been scaled up substantially, making the former seem relatively less important.” The authors’ calculations support this framing, where they find an acceleration in compute growth, but no evidence of a speedup or slowdown in algorithmic improvements.

By modifying the model slightly, they also quantified the significance of a key innovation in the history of machine learning: the Transformer, which has become the dominant language model architecture since its introduction in 2017. The authors find that the efficiency gains offered by the Transformer correspond to almost two years of algorithmic progress in the field, underscoring the significance of its invention.

While extensive, the study has several limitations. “One recurring issue we had was the lack of quality data, which can make the model hard to fit,” says Ho. “Our approach also doesn’t measure algorithmic progress on downstream tasks like coding and math problems, which language models can be tuned to perform.”

Despite these shortcomings, their work is a major step forward in understanding the drivers of progress in AI. Their results help shed light about how future developments in AI might play out, with important implications for AI policy. “This work, led by Anson and Tamay, has important implications for the democratization of AI,” said Neil Thompson, a coauthor and Director of MIT FutureTech. “These efficiency improvements mean that each year levels of AI performance that were out of reach become accessible to more users.”

“LLMs have been improving at a breakneck pace in recent years. This paper presents the most thorough analysis to date of the relative contributions of hardware and algorithmic innovations to the progress in LLM performance,” says Open Philanthropy Research Fellow Lukas Finnveden, who was not involved in the paper.

“This is a question that I care about a great deal, since it directly informs what pace of further progress we should expect in the future, which will help society prepare for these advancements. The authors fit a number of statistical models to a large dataset of historical LLM evaluations and use extensive cross-validation to select a model with strong predictive performance. They also provide a good sense of how the results would vary under different reasonable assumptions, by doing many robustness checks. Overall, the results suggest that increases in compute have been and will keep being responsible for the majority of LLM progress as long as compute budgets keep rising by ≥4x per year. However, algorithmic progress is significant and could make up the majority of progress if the pace of increasing investments slows down.”

Written by Rachel Gordon

Source: Massachusetts Institute of Technology

You can offer your link to a page which is relevant to the topic of this post.

#A.I. & Neural Networks news#Accounts#ai#Algorithms#Analysis#approach#architecture#artificial intelligence (AI)#budgets#coding#data#deal#democratization#democratization of AI#Developments#efficiency#explanation#Featured information processing#form#Full#Future#GPT#GPT-4#growth#Hardware#History#how#Innovation#innovations#Invention

4 notes

·

View notes

Text

Garbage in; garbage out. Heh. A couple of Stanford and UC Berkeley people nailed it with their paper "How Is ChatGPT’s Behavior Changing over Time?"

tl;dr — it's going from smart to dumb at math. More nuanced, they observe "that the behavior of the “same” [AI] service can change substantially in a relatively short amount" and, among other things, tested it with simple third-grade prime number factorization. They were looking specifically at the latest GPT-4 that people are using and the previous GPT-3.5, it seems, and used particular assessments to quantify:

Whether shortcomings with how large language nueral nets "learn" or garbage inputs bringing AI down to the LCD of the American education system, the substantial drift over even a very short interval of time, "highlighting the need for continuous monitoring of [AI] quality."

No duh!

3 notes

·

View notes

Text

ChatGPT बंद हो जाए तो किन AI प्लेटफॉर्म का उपयोग करें, कोई काम नहीं रुकेगा

घबराने की जरूरत नहीं है अगर ChatGPT बंद हो जाता है। यहां हम आपको ChatGPT के अलावा कुछ ऐसे AI टूल्स बताएंगे जो आपको बिना रुके पूरा कर सकते हैं। अब आपका काम खत्म नहीं होगा, चाहे वह कोडिंग, रिसर्च या सामग्री लिखना हो। आजकल, हमारी दिनचर्या में आर्टिफिशियल इंटेलिजेंस शामिल हो गई है। AI अब पढ़ाई, रिसर्च, कंटेंट राइटिंग, प्लानिंग और कोडिंग में इस्तेमाल होता है। लेकिन कभी-कभी ChatGPT कम हो जाता है, जो…

0 notes

Text

Descubra as melhores ferramentas de Inteligência Artificial para produtividade em 2025. IA para texto, vídeo, imagem, programação e negócios.

#IA para produtividade#ferramentas de IA 2025#Inteligência Artificial#IA para texto#IA para vídeo#IA para imagem#IA para programação#IA para negócios#GPT-4#GitHub Copilot

0 notes

Text

Ist Künstliche Intelligenz in der Chemie leistungsfähiger als der Mensch

Wie leistungsfähig ist KI in der Chemie?

Studie aus Jena vergleicht GPT-4 mit menschlichen Fachleuten Eine aktuelle Studie von Forschenden der Friedrich-Schiller-Universität Jena hat untersucht, wie leistungsstark moderne KI-Modelle – darunter GPT-4 – in der Chemie sind und wie sie im Vergleich zu erfahrenen Chemikerinnen und Chemikern abschneiden. Das Team um Dr. Kevin M. Jablonka entwickelte hierfür das Prüfverfahren „ChemBench“ und veröffentlichte die Ergebnisse im Fachjournal „Nature Chemistry“.

Dr. Kevin Jablonka untersucht an der Universität Jena die Leistungsfähigkeit von KI-Modellen bei chemischen Problemstellungen im Direktvergleich mit menschlichen Chemikerinnen und Chemikern. (Foto: Jens Meyer/Universität Jena). ChemBench: Ein neues Testverfahren für Chemie-KI Im Mittelpunkt der Untersuchung stand „ChemBench“, ein speziell entwickeltes Werkzeug, das reale, praxisrelevante Aufgaben aus der modernen Chemie enthält. Insgesamt wurden über 2.700 Fragen aus verschiedenen Bereichen – von organischer bis analytischer Chemie – integriert. Die Fragen deckten sowohl Grundlagenwissen als auch komplexe Problemstellungen ab und orientierten sich an gängigen Lehrplänen. ➤ Veranstaltungen in Jena KI vs. Mensch: Ein fairer Vergleich Die Leistung der KI-Modelle wurde mit der von 19 erfahrenen Fachleuten verglichen. Während die Menschen für einen Teil der Aufgaben auf Hilfsmittel wie Google oder chemische Programme zurückgreifen durften, mussten die KI-Modelle ausschließlich auf ihr zuvor erlerntes Wissen setzen. Zwei zusätzliche KI-Agenten mit Zugriff auf externe Tools schnitten schlechter ab als die besten reinen Sprachmodelle. Auch interessant. „Universitas@Jena“ – Rund sechs Millionen Euro Förderung für die Uni Jena Kartenvorverkauf für das Uni-Sommerfest am 27.06.2025 ist gestartet 4. MINT-Festival Jena im September mit doppelter MINT-Power

Wie gut sind KI-Modelle wie GPT-4 wirklich in der Chemie? Illustration via Pixabay Stärken und Schwächen der KI-Modelle „Bei sehr anspruchsvollen Fragen aus Lehrbüchern zeigten einige KI-Modelle eine bessere Leistung als menschliche Expertinnen und Experten“, so Jablonka. Besonders bei standardisierten Aufgaben konnten sie überzeugen. Ein Problem zeigte sich jedoch bei der Einschätzung der eigenen Antwortsicherheit: Während Menschen Unsicherheiten offen zugaben, gaben KI-Modelle häufig falsche Antworten mit hoher Selbstsicherheit. Veranstaltungshinweis: Neue Fototour Jena – über den Dächern der Stadt

Fototour „Über den Dächern von Jena“, Foto: Frank Liebold // Jenafotografx Risiken bei der Interpretation chemischer Strukturen Ein besonders kritischer Bereich war die Interpretation chemischer Strukturen, etwa bei der Vorhersage von NMR-Spektren. Hier machten die KI-Modelle teilweise grundlegende Fehler, wirkten aber zugleich sehr überzeugt von ihren Antworten. Die Fachleute dagegen gingen vorsichtiger vor und hinterfragten ihre eigenen Schlussfolgerungen – ein Verhalten, das in sensiblen Bereichen der Forschung von großer Bedeutung ist. KI als Partner, nicht als Ersatz „Unsere Forschung zeigt, dass KI eine wertvolle Ergänzung zur menschlichen Expertise sein kann – nicht als Ersatz, sondern als Unterstützung“, resümiert Dr. Jablonka. Die Studie legt damit einen wichtigen Grundstein für die künftige Zusammenarbeit von Mensch und Maschine in der Chemie. Veranstaltungen im Eventkalender >> Info, Marco Körner // UNI Jena Fotografik: Jens Meyer // UNI Jena und Illustration via Pixabay Read the full article

0 notes

Text

OpenAI’s Personality Problem: Why GPT-4o Got Rolled Back (and What It Means)

O Problema de Personalidade da OpenAI: Por que o GPT-4o foi revertido (e o que isso significa) A OpenAI, uma das principais organizações de pesquisa em inteligência artificial (AI) do mundo, recentemente enfrentou um desafio com o GPT-4o, seu modelo de linguagem autoaprendizagem. Devido a problemas de personalidade, o modelo teve que ser revertido. Este artigo analisa o motivo dessa reversão e o…

0 notes

Text

It’s well known that AI is being used in social media, in the dark, to manipulate us.

What isn’t as clear is how much insight a model would be able to draw about someone - and thus the buttons to push - from a history of social media posts.

I tried to probe GPT-4o, the default model behind ChatGPT, and frankly the results worried me.

0 notes

Text

Professor de Stanford afirma que já superamos a inteligência artificial geral

A inteligência artificial geral (AGI), que se refere a máquinas com capacidade de realizar qualquer tarefa humana, é frequentemente considerada o objetivo mais ambicioso da pesquisa em tecnologia. Apesar das divergências entre especialistas sobre a possibilidade de alcançá-la, Michal Kosinski, professor de comportamento organizacional na Graduate School of Business da Universidade de Stanford, está convencido de que já ultrapassamos essa barreira. Em uma apresentação realizada no evento Brazil at Silicon Valley, que reuniu cerca de 600 participantes no Google Event Center em Sunnyvale, Califórnia, Kosinski afirmou: “Nós já ultrapassamos a inteligência artificial geral”.(...)

Leia a noticia completa no link abaixo:

https://www.inspirednews.com.br/professor-de-stanford-afirma-que-ja-superamos-a-inteligencia-artificial-geral

#michalkosinski#inteligenciaartificial#stanford#brazilatsiliconvalley#gpt-4#deepblue#alphago#cambridgeanalytica#reconhecimentofacial#eticatecnologica#inovacao

0 notes

Text

Uno studio con il Dr. Fusco dimostra che un chatbot AI specializzato migliora la gestione dell’osteonecrosi da farmaci. Ricerca guidata dal DAIRI di Alessandria. Scopri di più su Alessandria today.

#AI per medici#Alessandria ospedale#Alessandria today#Antonio Maconi#chatbot medico#chatbot sanitario#chatbot specializzati#chirurgia maxillo-facciale#DAIRI Alessandria#database scientifico.#diagnosi supportata da AI#Dott. Vittorio Fusco#Fondazione Solidal#Google News#GPT-4#GuideGPT#innovazione in medicina#Intelligenza artificiale#intelligenza artificiale affidabile#italianewsmedia.com#Journal of Cranio-Maxillo-Facial Surgery#Lava#linee guida cliniche#medicina digitale#medicina personalizzata#medicina predittiva#Medicina traslazionale#modelli linguistici AI#MRONJ#Oncologia

0 notes