#democratization of AI

From Recurrent Networks to GPT-4: Measuring Algorithmic Progress in Language Models - Technology Org

New Post has been published on https://thedigitalinsider.com/from-recurrent-networks-to-gpt-4-measuring-algorithmic-progress-in-language-models-technology-org/

In 2012, the best language models were small recurrent networks that struggled to form coherent sentences. Fast forward to today, and large language models like GPT-4 outperform most students on the SAT. How has this rapid progress been possible?

Image credit: MIT CSAIL

In a new paper, researchers from Epoch, MIT FutureTech, and Northeastern University set out to shed light on this question. Their research breaks down the drivers of progress in language models into two factors: scaling up the amount of compute used to train language models, and algorithmic innovations. In doing so, they perform the most extensive analysis of algorithmic progress in language models to date.

Their findings show that due to algorithmic improvements, the compute required to train a language model to a certain level of performance has been halving roughly every 8 months. “This result is crucial for understanding both historical and future progress in language models,” says Anson Ho, one of the two lead authors of the paper. “While scaling compute has been crucial, it’s only part of the puzzle. To get the full picture you need to consider algorithmic progress as well.”
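A halving time of roughly 8 months can be turned into an annual figure with a short calculation. The sketch below is illustrative only: the function names are hypothetical, and it assumes the halving time stays constant. This matches the authors' preferred reading that algorithmic progress has held a roughly steady rate, but it is not a guarantee about the future.

```python
def algorithmic_efficiency_gain(months: float, halving_time_months: float = 8.0) -> float:
    """Factor by which the compute needed for a fixed performance level shrinks
    after `months`, assuming a constant halving time (about 8 months per the article).
    Hypothetical helper for illustration."""
    return 2.0 ** (months / halving_time_months)


def compute_required(baseline_compute: float, months: float,
                     halving_time_months: float = 8.0) -> float:
    """Compute needed to match a baseline model's performance `months` later,
    under the same constant-halving assumption (hypothetical helper)."""
    return baseline_compute / algorithmic_efficiency_gain(months, halving_time_months)


if __name__ == "__main__":
    # 2 ** (12 / 8) = 2 ** 1.5, roughly 2.83
    print(f"Efficiency gain per year: {algorithmic_efficiency_gain(12):.2f}x")
    # 2 ** (24 / 8) = 8, so one eighth of the baseline compute
    print(f"Compute needed after 2 years: {compute_required(1.0, 24):.3f} of baseline")
```

Under this assumption, reaching the same performance costs about 2.8x less compute each year, or 8x less after two years.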

The paper’s methodology is inspired by “neural scaling laws”: mathematical relationships that predict language model performance given certain quantities of compute, training data, or language model parameters. By compiling a dataset of over 200 language models since 2012, the authors fit a modified neural scaling law that accounts for algorithmic improvements over time.

Based on this fitted model, the authors carry out a performance attribution analysis, finding that scaling compute has been more important than algorithmic innovations for improved performance in language modeling. In fact, they find that the relative importance of algorithmic improvements has decreased over time. “This doesn’t necessarily imply that algorithmic innovations have been slowing down,” says Tamay Besiroglu, who also co-led the paper.

“Our preferred explanation is that algorithmic progress has remained at a roughly constant rate, but compute has been scaled up substantially, making the former seem relatively less important.” The authors’ calculations support this framing, where they find an acceleration in compute growth, but no evidence of a speedup or slowdown in algorithmic improvements.

By modifying the model slightly, they also quantified the significance of a key innovation in the history of machine learning: the Transformer, which has become the dominant language model architecture since its introduction in 2017. The authors find that the efficiency gains offered by the Transformer correspond to almost two years of algorithmic progress in the field, underscoring the significance of its invention.

While extensive, the study has several limitations. “One recurring issue we had was the lack of quality data, which can make the model hard to fit,” says Ho. “Our approach also doesn’t measure algorithmic progress on downstream tasks like coding and math problems, which language models can be tuned to perform.”

Despite these shortcomings, their work is a major step forward in understanding the drivers of progress in AI. Their results help shed light on how future developments in AI might play out, with important implications for AI policy. “This work, led by Anson and Tamay, has important implications for the democratization of AI,” said Neil Thompson, a coauthor and Director of MIT FutureTech. “These efficiency improvements mean that each year levels of AI performance that were out of reach become accessible to more users.”

“LLMs have been improving at a breakneck pace in recent years. This paper presents the most thorough analysis to date of the relative contributions of hardware and algorithmic innovations to the progress in LLM performance,” says Open Philanthropy Research Fellow Lukas Finnveden, who was not involved in the paper.

“This is a question that I care about a great deal, since it directly informs what pace of further progress we should expect in the future, which will help society prepare for these advancements. The authors fit a number of statistical models to a large dataset of historical LLM evaluations and use extensive cross-validation to select a model with strong predictive performance. They also provide a good sense of how the results would vary under different reasonable assumptions, by doing many robustness checks. Overall, the results suggest that increases in compute have been and will keep being responsible for the majority of LLM progress as long as compute budgets keep rising by ≥4x per year. However, algorithmic progress is significant and could make up the majority of progress if the pace of increasing investments slows down.”
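The trade-off Finnveden describes can be illustrated with a deliberately simplified decomposition. The sketch below treats "effective compute" as physical compute multiplied by an algorithmic efficiency factor and splits its annual growth in log space. That split, and the function name, are assumptions for illustration, not the paper's attribution method, so the toy model's break-even point (compute growth equal to the roughly 2.8x annual algorithmic gain implied by an 8-month halving time) need not match the paper's 4x figure.

```python
import math


def compute_share_of_progress(compute_growth_per_year: float,
                              algo_halving_months: float = 8.0) -> float:
    """Fraction of annual effective-compute growth attributed to physical compute.

    Illustrative assumption: effective compute = physical compute x algorithmic
    efficiency, so their contributions add in log space. Not the paper's method.
    """
    if compute_growth_per_year <= 0:
        raise ValueError("compute growth factor must be positive")
    # Annual algorithmic efficiency factor implied by the halving time (~2.83x for 8 months).
    algo_growth_per_year = 2.0 ** (12.0 / algo_halving_months)
    log_compute = math.log(compute_growth_per_year)
    log_algo = math.log(algo_growth_per_year)
    return log_compute / (log_compute + log_algo)


for growth in (4.0, 2.0):
    share = compute_share_of_progress(growth)
    print(f"compute x{growth:g}/yr -> compute share {share:.0%}")
# compute x4/yr -> compute share 57%
# compute x2/yr -> compute share 40%
```

In this toy split, 4x annual compute growth leaves compute with a little over half of the gain, while slowing compute growth to 2x per year hands the majority (60%) to algorithmic progress, which is the direction the quote describes.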

Written by Rachel Gordon

Source: Massachusetts Institute of Technology

#A.I. & Neural Networks news#Accounts#ai#Algorithms#Analysis#approach#architecture#artificial intelligence (AI)#budgets#coding#data#deal#democratization#democratization of AI#Developments#efficiency#explanation#Featured information processing#form#Full#Future#GPT#GPT-4#growth#Hardware#History#how#Innovation#innovations#Invention

4 notes

Democratization of Artificial Intelligence

Accessible Artificial Intelligence for All. “UAE makes ChatGPT Plus subscription free for all residents as part of deal with OpenAI.” This headline marks a step toward the democratization of artificial intelligence. Artificial Intelligence (AI) is no longer a distant vision of the future; it is a daily reality. Personalized recommendations on your phone are changing how we live.…

#Architecture#Artifiical Intelligence#azure#cloud computing#Democratization of AI#EdTech#GenAI#Machine Learning#Public Cloud#security#technology#Technology opinion

0 notes

Is Artificial Intelligence (AI) Ruining the Planet—or Saving It?

AI’s Double-Edged Impact: Innovation or Environmental Cost? Have you heard someone say, “AI is destroying the environment” or “Only tech giants can afford to use it”? You’re not alone. These sound bites are making the rounds, and while they come from real concerns, they don’t tell the whole story. I’ve been doing some digging. And what I found was surprising, even to me: AI is actually getting a…

#AIAffordability#AIAndThePlanet#AIForGood#AITools#DigitalInclusion#FallingAICosts#GreenAI#HumanCenteredAI#InnovationWithPurpose#ResponsibleAI#SustainableTech#TechForChange#AI accessibility#AI affordability#AI and climate change#AI and sustainability#AI and the environment#AI efficiency#AI for good#AI innovation#AI market competition#AI misconceptions#AI myths#Artificial Intelligence#cost of AI#democratization of AI#Digital Transformation#environmental impact of AI#ethical AI use#falling AI costs

0 notes

#Ai#sony#wb#politics#anti capitalism#democrats#republicans#late stage capitalism#new york post#hollywood#lol

10K notes

#marjorie taylor greene#mjt#politics#political#us politics#news#donald trump#american politics#president trump#elon musk#jd vance#law#republican#republicans#house of representatives#congress#government#us government#ai#america#us news#trump administration#maga#elon#american#democrats#trump admin#economy#economics

328 notes

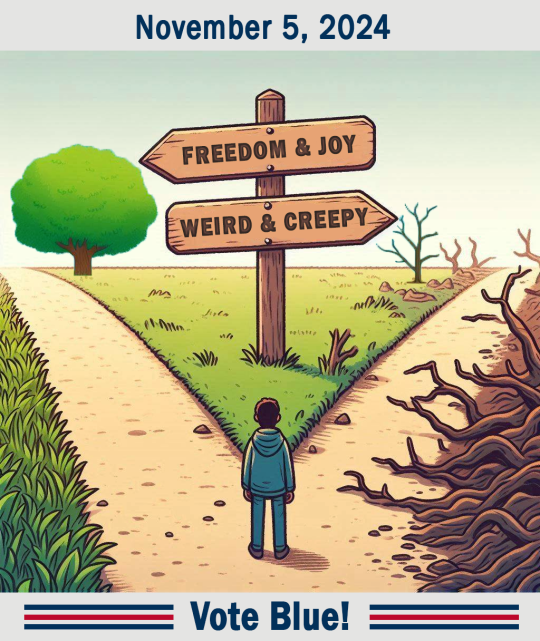

#democracy#vote democrat#election 2024#vote blue#voting#progressive#pro choice#diversity#equality#never trump#human rights#environment#Harris/Walz#ai generated

640 notes

#politics#democrats#donald trump#republicans#leftist hypocrisy#trump#joe biden#leftism#ai generated#ai#kamala harris#kamala 2024#vote kamala#vote blue#vote democrat#vote harris#conspiracies#conspiracy theories

473 notes

Techno-Libertarian Monarchy, written by “Mencius Moldbug,” aka Curtis Yarvin. These computer tech nerds think they can code their way out of the world’s problems by returning to feudalism. Their writings are juvenile fantasies, and they are not peer reviewed because they are just ludicrous dystopian garbage.

Yet these people believe themselves to be savant-level geniuses and have fooled not only the poorly educated but also billionaire oligarchs like Peter Thiel. These oligarchs are so insulated by wealth that they believe their own press releases, just like the MAGA cultists orbiting Trump’s ample rump. They talk of floating cities beyond the reach of international law and of creating feudal nations in places like Greenland and Panama, where lesser tech nerds will volunteer to be serfs in an economy based on crypto.

This anti-democratic set of beliefs has taken deep root in the minds of deluded oligarchs and more recent technocratic oligarchs. These people who once pulled the strings behind the scenes now openly manipulate politicians with large donations. They have total ownership over the Republican Party and the right-wing media. They tell their therapists they are descended from the pharaohs and kings of old and that the rest of us are not even people but drones to serve their every need.

#curtis yarvin#aka Mencius Moldbug#Peter Thiel#fedualism#serfdom#monarchy#anti-democratic#republican assholes#maga morons#techno-libertarians#using AI to erase democracy#republican values

82 notes

I'M BACK, B!+CHES!!!!

"Communism is the doctrine of the conditions of the liberation of the proletariat." - Engels, Principles of Communism (1847)

(Pt.1)

#united front#meme#memes#anticapitalism#communism#socialism#imperialism#free palestine#capitalism#anti imperialism#antifascism#free sudan#free congo#free yemen#joker#billionaires#chuck schumer#democrats#democratic party#liberals#kamala harris#barack obama#ai#studio ghibli#donald trump#elon musk#cybertruck#its always sunny in philadelphia

45 notes

#donald trump#ai art#ai#gavin mcinnes#proud boys#jd vance#kanye west#president trump#trump#maga#netanyahu#benjamin netanyahu#israel#Iran#russia#liberal#democrats#republicans

17 notes

They have turned the Palestinians into actual guinea pigs for the military-industrial complex.

We will see the robots and miserable remote-controlled dogs at the next big BLM protest on American soil soon enough.

#free palestine#blm#robots#woc#news#democrats#republicans#politics#poc#women of color#black lives matter#ai#israel#capitalism#palestine#military#chicago#Pets

15K notes

#politics#political#us politics#donald trump#news#president trump#elon musk#american politics#jd vance#law#deepfake#deep fakes#ai#video#augmented reality#hollywood#celebrities#us news#america#maga#president donald trump#make america great again#republicans#republican#democrats#economics#elon#congress#senate#house of representatives

31 notes

#democracy#vote democrat#election 2024#vote blue#voting#progressive#pro choice#diversity#equality#never trump#human rights#environment#Harris/Walz#ai generated

96 notes

"Grok is a generative AI chatbot and large language model launched in 2023 by Elon Musk's xAI. It's designed to be objective and truthful." (source)

(Source: Grok AI)

#Grok#news#elon musk#trump#politics#government#us politics#America#USA#donald trump#democracy#republicans#democrats#GOP#American politics#aesthetic#beauty-funny-trippy#Washington DC#maga#conservatives#vote#voting#presidential election#artificial intelligence#ai#current events#RFK Jr#social media#x#Twitter

25 notes

It is disturbing that Musk's AI chatbot is spreading false information about the 2024 election. "Free speech" should not include disinformation. We cannot survive as a nation if millions of people live in an alternative, false reality based on disinformation and misinformation spread by unscrupulous parties. The above link is from the Internet Archive, so anyone can read the entire article. Below are some excerpts:

Five secretaries of state plan to send an open letter to billionaire Elon Musk on Monday, urging him to “immediately implement changes” to X’s AI chatbot Grok, after it shared with millions of users false information suggesting that Kamala Harris was not eligible to appear on the 2024 presidential ballot. The letter, spearheaded by Minnesota Secretary of State Steve Simon and signed by his counterparts Al Schmidt of Pennsylvania, Steve Hobbs of Washington, Jocelyn Benson of Michigan and Maggie Toulouse Oliver of New Mexico, urges Musk to “immediately implement changes to X’s AI search assistant, Grok, to ensure voters have accurate information in this critical election year.” [...] The secretaries cited a post from Grok that circulated after Biden stepped out of the race: “The ballot deadline has passed for several states for the 2024 election,” the post read, naming nine states: Alabama, Indiana, Michigan, Minnesota, New Mexico, Ohio, Pennsylvania, Texas and Washington. Had the deadlines passed in those states, the vice president would not have been able to replace Biden on the ballot. But the information was false. In all nine states, the ballot deadlines have not passed and upcoming ballot deadlines allow for changes to candidates. [...] Musk launched Grok last year as an anti-“woke” chatbot, professing to be frustrated by what he says is the liberal bias of ChatGPT. In contrast to AI tools built by Open AI, Microsoft and Google, which are trained to carefully navigate controversial topics, Musk said he wanted Grok to be unfiltered and “answer spicy questions that are rejected by most other AI systems.” [...] Secretaries of state are grappling with an onslaught of AI-driven election misinformation, including deepfakes, ahead of the 2024 election. Simon testified on the subject before the Senate Rules and Administration Committee last year. [...] 
“It’s important that social media companies, especially those with global reach, correct mistakes of their own making — as in the case of the Grok AI chatbot simply getting the rules wrong,” Simon added. “Speaking out now will hopefully reduce the risk that any social media company will decline or delay correction of its own mistakes between now and the November election.” [color emphasis added]

#elon musk#grok ai#false election information#democratic secretaries of state#x/twitter#the washington post#internet archive

67 notes

"I think that the $50 million spent on condoms for Palestinians in Gaza or free benefits for people who have broken the law to be here in the first place, could be better spent on Americans in need right here in the US. But hey, I’m not an appointed spendthrift, so what do I know…"

READ & LISTEN NOW: https://www.undergroundusa.com/p/taxpayer-dollars-cant-be-considered

#NonProfit#NGO#Trump#FederalSpending#FederalFunds#SpendingFreeze#Misinformation#China#DeepSeek#AI#Disaster#SenateConfirmationHearings#FreeSpeech#NeoMarxism#MAGA#Liberty#Media#Podcast#Constitution#USA#Woke#Democrats#Politics#News#Truth

23 notes
