# Java programming logic


Text

What Is Object-Oriented Programming in Java and Why Does It Matter?

Java is super popular in the programming world, and one of the main reasons for that is its use of object-oriented programming (OOP). So, what exactly is OOP in Java, and why should you care? OOP is a way to organize your code by grouping related data, and the methods that work on that data, into objects. This makes Java code easier to work with, more organized, and simpler to update.

Key Ideas Behind Object-Oriented Programming

To get a grip on OOP in Java, you need to know four main ideas: Encapsulation, Inheritance, Polymorphism, and Abstraction. These concepts help you write clean and reusable code, which is pretty important in software development today.

Encapsulation: Keeping Data Safe

Encapsulation means protecting the internal data of an object from being accessed directly. You do this by making fields private and exposing public methods that control how the data is read or changed. When you take a Java course in Coimbatore, you’ll learn how this keeps an object's data consistent and safeguards it from accidental changes.
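A minimal sketch of that idea in Java. The `BankAccount` class is a hypothetical example, not taken from any course material: its balance is a private field, so outside code can only change it through public methods that check the input first.

```java
// Hypothetical example class: the balance can only change through its methods.
class BankAccount {
    private long balanceCents;  // hidden state: not reachable from outside the class

    // Public method that validates input before touching the private field.
    public void deposit(long amountCents) {
        if (amountCents <= 0) {
            throw new IllegalArgumentException("deposit must be positive");
        }
        balanceCents += amountCents;
    }

    // Refuses withdrawals that are not positive or would make the balance negative.
    public boolean withdraw(long amountCents) {
        if (amountCents <= 0 || amountCents > balanceCents) {
            return false;
        }
        balanceCents -= amountCents;
        return true;
    }

    // Read-only access: callers can see the balance but cannot assign to it.
    public long getBalanceCents() {
        return balanceCents;
    }
}
```

Code in another class can call `deposit` and `getBalanceCents`, but a line like `account.balanceCents = -100;` will not compile, which is the protection described above.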

Inheritance: Building on Existing Code

Inheritance lets a new class take on the properties of an existing class, which cuts down on code repetition and encourages reuse. Grasping this idea is key if you want to dive deeper into Java development, especially in a Java Full Stack Developer course in Coimbatore.
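Here is a small, hypothetical example of the same idea. `Car` and `Truck` extend `Vehicle`, so they reuse its fields, constructor logic, and `describe()` method instead of copying them; `Truck` then adds something of its own.

```java
// Hypothetical classes: Car and Truck reuse the fields and methods of Vehicle.
class Vehicle {
    protected final String name;
    protected final int wheels;

    Vehicle(String name, int wheels) {
        this.name = name;
        this.wheels = wheels;
    }

    // Written once here, inherited by every subclass.
    String describe() {
        return name + " with " + wheels + " wheels";
    }
}

class Car extends Vehicle {
    Car(String name) {
        super(name, 4);  // reuse the parent constructor
    }
}

class Truck extends Vehicle {
    private final int payloadKg;

    Truck(String name, int wheels, int payloadKg) {
        super(name, wheels);
        this.payloadKg = payloadKg;
    }

    // New behavior added on top of what Vehicle already provides.
    int getPayloadKg() {
        return payloadKg;
    }
}
```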

Polymorphism: Flexibility in Code

Polymorphism lets you work with an object through its parent type (a superclass or an interface) rather than its specific class, while Java still runs the subclass's own version of any overridden method. This means you can write one piece of code that works across many different subclasses. You’ll definitely encounter this in Java training in Coimbatore, and it’s essential for creating scalable applications.
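A short sketch with hypothetical `Shape` classes: `totalArea` is written once against the parent type `Shape`, yet each call to `area()` runs the override belonging to the actual object (a `Circle` or a `Rectangle`).

```java
import java.util.List;

// Hypothetical hierarchy: each subclass overrides area().
class Shape {
    double area() {
        return 0.0;
    }
}

class Circle extends Shape {
    private final double radius;

    Circle(double radius) {
        this.radius = radius;
    }

    @Override
    double area() {
        return Math.PI * radius * radius;
    }
}

class Rectangle extends Shape {
    private final double width, height;

    Rectangle(double width, double height) {
        this.width = width;
        this.height = height;
    }

    @Override
    double area() {
        return width * height;
    }
}

class AreaCalculator {
    // Written once against the parent type; works for any current or future subclass.
    static double totalArea(List<Shape> shapes) {
        double total = 0.0;
        for (Shape s : shapes) {
            total += s.area();  // the subclass's override is chosen at run time
        }
        return total;
    }
}
```

Adding a `Triangle` subclass later would not require any change to `totalArea`, which is where the flexibility comes from.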

Abstraction: Simplifying Complexity

Abstraction is all about hiding the messy details and only showing what’s necessary. For example, when you use your smartphone, you don’t need to understand how everything works inside it. Java uses abstract classes and interfaces to make things simpler, which you’ll notice in any solid Java course in Coimbatore.
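To show both tools the paragraph mentions, the hypothetical sketch below uses an interface (`Notifier`) to say what a notifier does, and an abstract class (`BaseNotifier`) to share a common step while leaving the details to subclasses. Callers only ever see `send`.

```java
// Hypothetical interface: callers see what a notifier can do, not how it does it.
interface Notifier {
    String send(String recipient, String message);
}

// Abstract class: shares the formatting step and leaves the delivery details abstract.
abstract class BaseNotifier implements Notifier {
    @Override
    public String send(String recipient, String message) {
        return deliver(recipient, "[" + channel() + "] " + message);
    }

    protected abstract String channel();

    protected abstract String deliver(String recipient, String formatted);
}

class EmailNotifier extends BaseNotifier {
    @Override
    protected String channel() {
        return "email";
    }

    @Override
    protected String deliver(String recipient, String formatted) {
        // A real implementation would talk to a mail server here.
        return "to " + recipient + ": " + formatted;
    }
}
```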

Why It Matters in Real Life

So, circling back: What is OOP in Java and why is it important? Its real strength comes from making big software projects easier to manage. OOP allows multiple developers to work on their parts without stepping on each other’s toes.

OOP and Full Stack Development

Full stack developers cover both the frontend and backend. Understanding OOP in Java can make your backend logic much better. That’s why a Java Full Stack Developer Course in Coimbatore focuses on OOP right from the start.

Java and Your Career Path

If you're looking to become a software developer, getting a good handle on OOP is a must. Companies love using Java for building big applications because of its OOP focus. Joining a Java training program in Coimbatore can help you get the practical experience you need to be job-ready.

Wrapping Up: Start Your Java Journey with Xplore IT Corp

So, what is object-oriented programming in Java and why is it important? It’s a great way to create secure and reusable applications. Whether you’re interested in a Java Course in Coimbatore, a Full Stack Developer course, or overall Java training, Xplore IT Corp has programs to help you kick off your career.

FAQs

1. What are the main ideas of OOP in Java?

The key ideas are Encapsulation, Inheritance, Polymorphism, and Abstraction.

2. Why should I learn OOP in Java?

Because it’s the foundation of Java and helps you create modular and efficient code.

3. Is it tough to learn OOP for beginners?

Not really! With good guidance from a quality Java training program in Coimbatore, it becomes easy and fun.

4. Do I need to know OOP for full stack development?

Definitely! Most backend work in full stack development is based on OOP, which is covered in a Java Full Stack Developer Course in Coimbatore.

5. Where can I find good Java courses in Coimbatore?

You should check out Xplore IT Corp; they’re known for offering the best Java courses around.

#Java programming#object-oriented concepts#Java syntax#Java classes#Java objects#Java methods#Java interface#Java inheritance#Java encapsulation#Java abstraction#Java polymorphism#Java virtual machine#Java development tools#Java backend development#Java software development#Java programming basics#advanced Java programming#Java application development#Java code examples#Java programming logic

0 notes

Text

Hash Tables in Programming: The Ubiquitous Utility for Efficient Data Lookup

Hash tables are fundamental to modern software development, powering everything from database indexing to web caches and compiler implementations. Despite their simplicity, they solve surprisingly complex problems across different fields of computer science.

Introduction: Hash Tables – The Unsung Heroes of Programming

When you open a well-organized filing cabinet, you can quickly find what you’re looking for without flipping through every folder. In programming, hash tables serve a similar purpose: they allow us to store and retrieve data with incredible speed and efficiency. Hash tables are fundamental to modern software development, powering…
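The post is truncated here, so this is not its code. As a rough illustration of where a hash table's speed comes from, here is a minimal sketch assuming separate chaining: the key's `hashCode()` picks a bucket, so a lookup only scans the few entries in that bucket instead of every stored item. The class name is hypothetical; real Java code would use `java.util.HashMap`.

```java
import java.util.LinkedList;

// Minimal hash table with separate chaining (illustration only).
class ChainedHashTable<K, V> {
    private static final class Entry<K, V> {
        final K key;
        V value;

        Entry(K key, V value) {
            this.key = key;
            this.value = value;
        }
    }

    private final LinkedList<Entry<K, V>>[] buckets;
    private int size;

    @SuppressWarnings("unchecked")
    ChainedHashTable(int capacity) {
        if (capacity < 1) {
            throw new IllegalArgumentException("capacity must be positive");
        }
        buckets = new LinkedList[capacity];
    }

    // Map a key to a bucket index; floorMod keeps the index non-negative.
    private int indexFor(K key) {
        return Math.floorMod(key.hashCode(), buckets.length);
    }

    void put(K key, V value) {
        int i = indexFor(key);
        if (buckets[i] == null) {
            buckets[i] = new LinkedList<>();
        }
        for (Entry<K, V> e : buckets[i]) {
            if (e.key.equals(key)) {  // key already present: update in place
                e.value = value;
                return;
            }
        }
        buckets[i].add(new Entry<>(key, value));
        size++;
    }

    V get(K key) {
        LinkedList<Entry<K, V>> bucket = buckets[indexFor(key)];
        if (bucket == null) {
            return null;
        }
        for (Entry<K, V> e : bucket) {
            if (e.key.equals(key)) {
                return e.value;
            }
        }
        return null;
    }

    int size() {
        return size;
    }
}
```

This sketch never resizes and does not accept null keys. With too few buckets the chains grow long and lookups slow down, which is why production tables enlarge their bucket array as they fill.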

#application-developement#c-sharp#embedded-development#hash-tables#java#javascript#learn-application-development#micropython#mobile-development#programming#programming-logic#programming-tables#python#software-developement#software-development#web-development

0 notes

Text

Spring Boot Interview Questions for Experienced Developers

Imagine a service that fetches data from a remote API. Under ideal circumstances, you make an HTTP request, and the data comes back. But in reality, issues might arise. The remote server might be under heavy load, your own service might be experiencing network latency, or any number of other transient problems could occur. If your application doesn’t handle these scenarios well, you end up…
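The excerpt stops before the post's own answer, so the following is not its code. It is a plain-Java sketch of one common way to handle transient failures: retry with exponential backoff. A Spring Boot project would more likely use a library for this (the post's tags mention retry logic and circuit breakers), and the names `RetryDemo` and `withRetry` are hypothetical.

```java
import java.util.concurrent.Callable;

class RetryDemo {
    // Calls the task up to maxAttempts times, doubling the wait after each failure.
    static <T> T withRetry(Callable<T> task, int maxAttempts, long initialDelayMs)
            throws Exception {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        long delay = initialDelayMs;
        Exception last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return task.call();
            } catch (Exception e) {
                last = e;                 // remember the most recent failure
                if (attempt < maxAttempts) {
                    Thread.sleep(delay);  // back off before trying again
                    delay *= 2;           // exponential backoff: 1x, 2x, 4x, ...
                }
            }
        }
        throw last;                       // every attempt failed
    }
}
```

In practice you would retry only errors that are likely to be transient (timeouts, 503 responses), add random jitter to the delay, and stop calling a dependency that keeps failing, which is the job of a circuit breaker.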


#Best Practices#Circuit Breaker#interview#Interview Success Tips#Java#Microservices#programming#REtry Logic#Senior Developer#Software Architects#Spring Boot

0 notes

Text

Web Designing VS. Web Development: What's the Difference?

Ever wondered how websites work? Well, there are two main parts to it! Let's break it down for you!

1) Focus:

Web Designing: Emphasizes the visual aspect, creating an appealing user interface with layout, colors, and typography.

Web Development: Ensures smooth functionality, enabling seamless user navigation and interactions.

2) Scripting:

Web Designing: Utilizes client-side scripts like JavaScript for interactive elements and user experience enhancement.

Web Development: Involves both client-side and server-side scripting. Client-side manages user interactions, while server-side handles databases and dynamic content generation.

3) Development:

Web Designing: Front-end development deals with user interface and experience.

Web Development: Includes both front-end and back-end. Front-end focuses on user interface, while back-end manages server-side processes and data.

4) Skill set:

Web Designing: Requires creativity and innovation to design visually appealing interfaces.

Web Development: Demands technical and logical skills to manage server operations and ensure functionality.

5) Programming Languages:

Web Designing: Uses HTML, CSS, and JavaScript to structure, style, and add interactivity to visual elements.

Web Development: Employs languages and platforms such as Python, SQL, Java, C++, Ruby, .NET, and PHP for back-end processes.

6) Tools:

Web Designing: Utilizes tools like Photoshop, Sketch, and Adobe Dreamweaver for interface design.

Web Development: Relies on tools like GitHub for collaboration and Chrome developer tools for debugging.

In a nutshell, Web Designing focuses on making websites look fantastic and user-friendly, while Web Development works behind the scenes to ensure everything works like magic! Both are crucial for creating awesome websites!

So, which side do you find more exciting? Web Designing or Web Development? Let us know in the comments!

#webdesigning#webdevelopment#userinterface#userexperience#innovation#imagination#creativity#technical#logical#programming#html#css#javascript#dotnet#php#sql#python#java#github#photoshop#wordpress#dotsnkey#dotsnkeytechnologies

1 note


Text

The so-called Department of Government Efficiency (DOGE) is starting to put together a team to migrate the Social Security Administration’s (SSA) computer systems entirely off one of its oldest programming languages in a matter of months, potentially putting the integrity of the system—and the benefits on which tens of millions of Americans rely—at risk.

The project is being organized by Elon Musk lieutenant Steve Davis, multiple sources who were not given permission to talk to the media tell WIRED, and aims to migrate all SSA systems off COBOL, one of the first common business-oriented programming languages, and onto a more modern replacement like Java within a tight timeframe of a few months.

Under any circumstances, a migration of this size and scale would be a massive undertaking, experts tell WIRED, but the expedited deadline runs the risk of obstructing payments to the more than 65 million people in the US currently receiving Social Security benefits.

“Of course, one of the big risks is not underpayment or overpayment per se; [it’s also] not paying someone at all and not knowing about it. The invisible errors and omissions,” an SSA technologist tells WIRED.

The Social Security Administration did not immediately reply to WIRED’s request for comment.

SSA has been under increasing scrutiny from president Donald Trump’s administration. In February, Musk took aim at SSA, falsely claiming that the agency was rife with fraud. Specifically, Musk pointed to data he allegedly pulled from the system that showed 150-year-olds in the US were receiving benefits, something that isn’t actually happening. Over the last few weeks, following significant cuts to the agency by DOGE, SSA has suffered frequent website crashes and long wait times over the phone, The Washington Post reported this week.

This proposed migration isn’t the first time SSA has tried to move away from COBOL: In 2017, SSA announced a plan to receive hundreds of millions in funding to replace its core systems. The agency predicted that it would take around five years to modernize these systems. Because of the coronavirus pandemic in 2020, the agency pivoted away from this work to focus on more public-facing projects.

Like many legacy government IT systems, SSA systems contain code written in COBOL, a programming language created in part in the 1950s by computing pioneer Grace Hopper. The Defense Department essentially pressured private industry to use COBOL soon after its creation, spurring widespread adoption and making it one of the most widely used languages for mainframes, or computer systems that process and store large amounts of data quickly, by the 1970s. (At least one DOD-related website praising Hopper's accomplishments is no longer active, likely following the Trump administration’s DEI purge of military acknowledgements.)

As recently as 2016, SSA’s infrastructure contained more than 60 million lines of code written in COBOL, with millions more written in other legacy coding languages, the agency’s Office of the Inspector General found. In fact, SSA’s core programmatic systems and architecture haven’t been “substantially” updated since the 1980s when the agency developed its own database system called MADAM, or the Master Data Access Method, which was written in COBOL and Assembler, according to SSA’s 2017 modernization plan.

SSA’s core “logic” is also written largely in COBOL. This is the code that issues social security numbers, manages payments, and even calculates the total amount beneficiaries should receive for different services, a former senior SSA technologist who worked in the office of the chief information officer says. Even minor changes could result in cascading failures across programs.

“If you weren't worried about a whole bunch of people not getting benefits or getting the wrong benefits, or getting the wrong entitlements, or having to wait ages, then sure go ahead,” says Dan Hon, principal of Very Little Gravitas, a technology strategy consultancy that helps government modernize services, about completing such a migration in a short timeframe.

It’s unclear when exactly the code migration would start. A recent document circulated amongst SSA staff laying out the agency’s priorities through May does not mention it, instead naming other priorities like terminating “non-essential contracts” and adopting artificial intelligence to “augment” administrative and technical writing.

Earlier this month, WIRED reported that at least 10 DOGE operatives were currently working within SSA, including a number of young and inexperienced engineers like Luke Farritor and Ethan Shaotran. At the time, sources told WIRED that the DOGE operatives would focus on how people identify themselves to access their benefits online.

Sources within SSA expect the project to begin in earnest once DOGE identifies and marks remaining beneficiaries as deceased and connects disparate agency databases. In a Thursday morning court filing, an affidavit from SSA acting administrator Leland Dudek said that at least two DOGE operatives are currently working on a project formally called the “Are You Alive Project,” targeting what these operatives believe to be improper payments and fraud within the agency’s system by calling individual beneficiaries. The agency is currently battling for sweeping access to SSA’s systems in court to finish this work. (Again, 150-year-olds are not collecting social security benefits. That specific age was likely a quirk of COBOL. It doesn’t include a date type, so dates are often coded to a specific reference point: May 20, 1875, the date of an international standards-setting conference held in Paris, known as the Convention du Mètre.)
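The article's own explanation is hedged ("likely"), and the sketch below does not claim to show how SSA's systems actually store dates. It only illustrates the general failure mode: if a record with no birth date quietly falls back to a fixed reference date, any age computed from it looks absurd. The class and method names are hypothetical; `java.time` is used purely for the date arithmetic.

```java
import java.time.LocalDate;
import java.time.Period;

// Hypothetical record handling: nothing here comes from SSA's code.
class DefaultDateAge {
    // Reference date named in the article (the Convention du Mètre, May 20, 1875).
    static final LocalDate REFERENCE_DATE = LocalDate.of(1875, 5, 20);

    // A missing birth date silently falls back to the reference date.
    static LocalDate birthDateOrDefault(LocalDate recorded) {
        return recorded != null ? recorded : REFERENCE_DATE;
    }

    // Whole years between the birth date and the "as of" date.
    static int ageOn(LocalDate birthDate, LocalDate asOf) {
        return Period.between(birthDate, asOf).getYears();
    }
}
```

With a missing birth date, `ageOn(birthDateOrDefault(null), LocalDate.of(2025, 5, 20))` returns 150, even though no real person in the record is that old.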

In order to migrate all COBOL code into a more modern language within a few months, DOGE would likely need to employ some form of generative artificial intelligence to help translate the millions of lines of code, sources tell WIRED. “DOGE thinks if they can say they got rid of all the COBOL in months, then their way is the right way, and we all just suck for not breaking shit,” says the SSA technologist.

DOGE would also need to develop tests to ensure the new system’s outputs match the previous one. It would be difficult to resolve all of the possible edge cases over the course of several years, let alone months, adds the SSA technologist.
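Checking that the new system's outputs match the previous one is often done with differential (parallel-run) testing: feed identical inputs to both implementations and flag every disagreement. The sketch below is hypothetical throughout, and the two formulas are not SSA's benefit rules. It shows how a rewrite that switches from truncating decimal arithmetic (the behavior of a COBOL `COMPUTE` without the `ROUNDED` phrase) to rounded floating point can drift by a cent without ever crashing, the kind of invisible error the SSA technologist describes.

```java
import java.math.BigDecimal;
import java.math.RoundingMode;
import java.util.ArrayList;
import java.util.List;

// Hypothetical formulas: neither is SSA's real benefit calculation.
class ParallelRunCheck {
    // "Legacy" behavior: decimal fixed-point math that truncates to cents,
    // like a COBOL COMPUTE statement written without ROUNDED.
    static BigDecimal legacyAmount(BigDecimal base, BigDecimal rate) {
        return base.multiply(rate).setScale(2, RoundingMode.DOWN);
    }

    // "Rewritten" behavior: binary floating point, rounded to the nearest cent.
    static BigDecimal rewrittenAmount(BigDecimal base, BigDecimal rate) {
        long cents = Math.round(base.doubleValue() * rate.doubleValue() * 100.0);
        return BigDecimal.valueOf(cents, 2);
    }

    // Feed identical inputs to both versions and report every disagreement.
    static List<String> mismatches(List<BigDecimal> bases, BigDecimal rate) {
        List<String> out = new ArrayList<>();
        for (BigDecimal base : bases) {
            BigDecimal oldValue = legacyAmount(base, rate);
            BigDecimal newValue = rewrittenAmount(base, rate);
            if (oldValue.compareTo(newValue) != 0) {
                out.add(base + ": legacy=" + oldValue + " modern=" + newValue);
            }
        }
        return out;
    }
}
```

With a rate of 0.333, a base of 1000.00 agrees in both versions, but 1000.05 gives 333.01 on the legacy path and 333.02 in the rewrite. Finding such cases requires running enormous numbers of realistic inputs through both systems, which is why the edge cases take so long to resolve.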

“This is an environment that is held together with bail wire and duct tape,” the former senior SSA technologist working in the office of the chief information officer tells WIRED. “The leaders need to understand that they’re dealing with a house of cards or Jenga. If they start pulling pieces out, which they’ve already stated they’re doing, things can break.”

260 notes


Note

Hey, I just got an idea: what if there was a Chrome extension where Markiplier would randomly come to the upper left corner of your screen and react to what you’re doing? Idc if you make it, I just needed to tell this to a software developer

Just thinking of the logic behind coding each and every possible reaction is giving me a headache lmao, but if what you want is a character on screen you could always make a shimeji desktop pet.

The Shimeji desktop mascot is a configurable, open-source program that allows users to create character-specific desktop buddies. The Shimeji desktop mascot is available on Windows and can be installed as a browser extension on Chrome.

I used to make them back when they worked with Java; not sure how the extension works now, but it shouldn’t be that difficult. You can always look it up on YouTube!

30 notes


Text

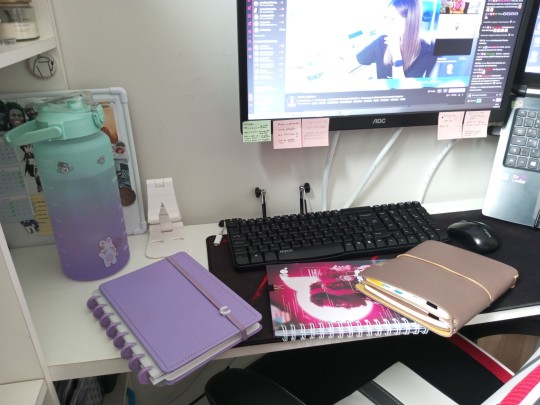

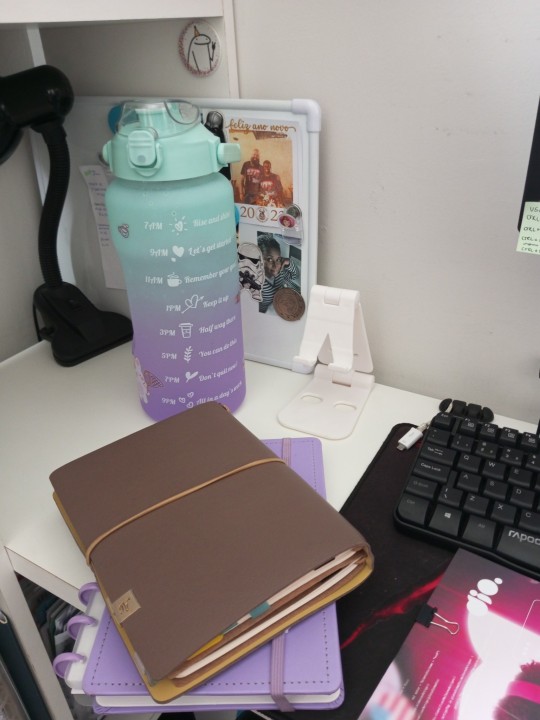

19 July 2023

I am trying my best to drink more water, and this big water bottle with motivational quotes is helping me a lot! It's strange, but it feels great to look at it and know I'm keeping pace.

As for programming: since I have to speed up my studies to keep up with the new architecture squad, I am trying to figure out the best notebook to write in, as I learn better by writing.

I am using the purple one for Java, programming logic, SQL, and Git/GitHub; the colorful one for TypeScript and Angular; and I am thinking of using the brown one for Spring Framework (as it is a huge topic).

Yes, for someone who was working with JSF, JSP, and jQuery (it is more accurate to say I was struggling with jQuery... hated it), turning my mindset to this modern stack is a big change, and I will deal less with legacy code.

I am accepting all possible tips regarding Angular and Spring Framework, if anyone here is working with them ❤️

That's it! Have a great Wednesday, everyone 😘

#studyblr#study#study blog#daily life#dailymotivation#study motivation#studying#study space#productivity#study desk#programming struggles#programming#must lean java#spring framework#coding#coding community#programming community#bottle#water bottle#notebook#stationary#purple#i love purple

66 notes


Text

Top B.Tech Courses in Maharashtra – CSE, AI, IT, and ECE Compared

B.Tech courses continue to attract students across India, and Maharashtra remains one of the most preferred states for higher technical education. From metro cities to emerging academic hubs like Solapur, students get access to diverse courses and skilled faculty. Among all available options, four major branches stand out: Computer Science and Engineering (CSE), Artificial Intelligence (AI), Information Technology (IT), and Electronics and Communication Engineering (ECE).

Each of these streams offers a different learning path. B.Tech in Computer Science and Engineering focuses on coding, algorithms, and system design. Students learn Python, Java, data structures, software engineering, and database systems. These skills are relevant for software companies, startups, and IT consulting.

B.Tech in Artificial Intelligence covers deep learning, neural networks, data processing, and computer vision. Students work on real-world problems using AI models. They also learn about ethical AI practices and automation systems. Companies hiring AI talent are in healthcare, retail, fintech, and manufacturing.

B.Tech in IT trains students in systems administration, networking, cloud computing, and application services. Graduates often work in system support, IT infrastructure, and data management. IT blends technical and management skills for enterprise use.

B.Tech ECE is for students who enjoy working with circuits, embedded systems, mobile communication, robotics, and signal processing. This stream is useful for telecom companies, consumer electronics, and control systems in industries.

Key Differences Between These B.Tech Programs:

CSE is programming-intensive. IT includes applications and system-level operations.

AI goes deeper into data modeling and pattern recognition.

ECE focuses more on hardware, communication, and embedded tech.

AI and CSE overlap, but AI involves more research-based learning.

How to Choose the Right B.Tech Specialization:

Ask yourself what excites you: coding, logic, data, devices, or systems.

Look for colleges with labs, project-based learning, and internship support.

Talk to seniors or alumni to understand real-life learning and placements.

Explore industry demand and long-term growth in each field.

MIT Vishwaprayag University, Solapur, offers all four B.Tech programs with updated syllabi, modern infrastructure, and practical training. Students work on live projects, participate in competitions, and build career skills through soft skills training. The university also encourages innovation and startup thinking.

Choosing the right course depends on interest and learning style. CSE and AI suit tech lovers who like coding and research. ECE is great for those who enjoy building real-world devices. IT fits students who want to blend business with technology.

Take time to explore the subjects and talk to faculty before selecting a stream. Your B.Tech journey shapes your future, so make an informed choice.

#B.Tech in Computer Science and Engineering#B.Tech in Artificial Intelligence#B.Tech in IT#B.Tech ECE#B.Tech Specialization

2 notes


Text

Consistency and Reducibility: Which is the theorem and which is the lemma?

Here's an example from programming language theory which I think is an interesting case study about how "stories" work in mathematics. Even if a given theorem is unambiguously defined and certainly true, the ways people contextualize it can still differ.

To set the scene, there is an idea that typed programming languages correspond to logics, so that a proof of an implication A→B corresponds to a function of type A→B. For example, the typing rules for simply-typed lambda calculus are exactly the same as the proof rules for minimal propositional logic, adding an empty type Void makes it intuitionistic propositional logic, by adding "dependent" types you get a kind of predicate logic, and really a lot of different programming language features also make sense as logic rules. The question is: if we propose a new programming language feature, what theorem should we prove in order to show that it also makes sense logically?

The story I first heard goes like this. In order to prove that a type system is a good logic we should prove that it is consistent, i.e. that not every type is inhabited, or equivalently that there is no program of type Void. (This approach is classical in both senses of the word: it goes back to Hilbert's program, and it is justified by Gödel's completeness theorem/model existence theorem, which basically says that every consistent theory describes something.)

Usually it is obvious that no values can be given type Void, the only issue is with non-value expressions. So it suffices to prove that the language is normalizing, that is to say every program eventually computes to a value, as opposed to going into an infinite loop. So we want to prove:

If e is an expression with some type A, then e evaluates to some value v.

Naively, you may try to prove this by structural induction on e. (That is, you assume as an induction hypothesis that all subexpressions of e normalize, and prove that e does.) However, this proof attempt gets stuck in the case of a function call like (λx.e₁) e₂. Here we have some function (λx.e₁) : A→B and a function argument e₂ : A. The induction hypothesis just says that (λx.e₁) normalizes, which is trivially true since it's already a value, but what we actually need is an induction hypothesis that says what will happen when we call the function.

In 1967 William Tait had a good idea. We should instead prove:

If e is an expression with some type A, then e is reducible at type A.

"Reducible at type A" is a predicate defined on the structure of A. For base types, it just means normalizable, while for function types we define

e is reducible at type A→B ⇔ for all expressions e₁, if e₁ is reducible at A then (e e₁) is reducible at B.

For example, a function is reducible at type Bool→Bool→Bool if whenever you call it with two normalizing boolean arguments, it returns a boolean value (rather than looping forever).

This really is a very good idea, and it can be generalized to prove lots of useful theorems about programming languages beyond just termination. But the way I (and I think most other people, e.g. Benjamin Pierce in Types and Programming Languages) have told the story, it is strictly a technical device: we prove consistency via normalization via reducibility.

❧

The story works less well when you consider programs that aren't normalizing, which is certainly not an uncommon situation: nothing in Java or Haskell forbids you from writing infinite loops. So there has been some interest in how dependent types work if you make termination-checking optional, with some famous projects along these lines being Idris and Dependent Haskell. The idea here is that if you write a program that does terminate it should be possible to interpret it as a proof, but even if a program is not obviously terminating you can still run it.

At this point, with the "consistency through normalization" story in mind, you may have a bad idea: "we can just let the typechecker try to evaluate a given expression at typechecking-time, and if it computes a value, then we can use it as a proof!" Indeed, if you do so then the typechecker will reject all attempts to "prove" Void, so you actually create a consistent logic.

If you think about it a little longer, you notice that it's a useless logic. For example, a statement like ∀n.(n² = 3) is provable: it's inhabited by the value (λn. infinite_loop()). That function is a perfectly fine value, even though it will diverge as soon as you call it. In fact, all ∀-statements and implications are inhabited by function values, and proving universally quantified statements is the entire point of using logical proof at all.
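Java is not a proof language (it does no termination checking, and `null` inhabits every reference type), and it cannot even state ∀n.(n² = 3). Still, the same trick shows up in ordinary generics: the hypothetical method below produces a value of every function type A → B. A "typecheck by evaluating" rule would accept it, because constructing the lambda finishes at once; the loop only runs if someone calls it.

```java
import java.util.function.Function;

// Illustration: a function value of any type A -> B whose body never returns.
class DivergentProof {
    // The return type T can be anything, because this method never returns.
    static <T> T diverge() {
        while (true) {
            // loop forever
        }
    }

    // Building this function terminates immediately; only calling it diverges.
    static <A, B> Function<A, B> anything() {
        return a -> diverge();
    }
}
```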

❧

So what theorem should you prove, to ensure that the logic makes sense? You want to say both that Void is unprovable, and also that if a type A→B is inhabited, then A really implies B, and so on recursively for any arrow types inside A or B. If you think a bit about this, you want to prove that if e:A, then e is reducible at type A... And in fact, Kleene had already proposed basically this (under the name realizability) as a semantics for Intuitionistic Logic, back in the 1940s.

So in the end, you end up proving the same thing anyway, and none of this discussion really becomes visible in the formal sequence of theorems and lemmas. The false starts need to be passed along in the asides in the text, or in tumblr posts.

8 notes


Text

why be an academic

be an academic because curiosity is the fuel to passion. because curiosity is the stepping stone to gaining knowledge. because knowledge is the most powerful weapon an individual can possess

because no one can take away your knowledge from you. because knowledge is what you need to lead strong arguments. because life has no meaning without it. because knowledge is what you need to live - to flourish - to evolve - to discover - to explore - to love - to complain - to enjoy little things in life. gaining more knowledge gives you the knack for appreciation of the little things in life. it makes you experience unexpressed emotions because you start exploring their depths. you understand why stars exist. you understand the purpose of your existence. you can suddenly converse in languages you never imagined conversing in.

that is the beauty of knowledge: understanding things others don't because you studied them by sitting at your desk late the previous night. poring over your books with your pen in one hand and highlighter in the other. solving myriad physics numericals and deriving equations that scholars worked on ages ago. studying various chemical equations so that if anyone asks you about one, you wouldn't have to think twice before answering it. reading shakespeare with your ankles crossed, brows knit, forehead wrinkled, the smell of coffee in the air, knowing that you would think of the scene in the book for a long time after. typing away code scripts for java programs trying to figure out their logic. a calmness engraved inside your mind, knowing that all of this will help you in the end.

in the end, when everyone will be scrambling and looking around for notes, you will be revising them the third time as if it's normal to do so. when everyone's faces will hold fear, yours will hold confidence. when others will sob, you will grin proudly. which is why knowledge is power. and if you have the resources to gain this immense power, do not let them go to waste.

#reading#motivation#my writing#100 days of productivity#notes#knowledge#power#light academia#dark academia#chaotic academia#classic academia

42 notes


Note

Any tips on learning python? I already know Java, C++, and JavaScript.

Hiya! 💗

Since you already know those other languages, Python will be literally a piece of cake. It'll be easy for you, in my opinion.

Tips? I would say:

Start with the Basics: Begin by understanding the fundamental syntax and concepts of Python. After learning those, you can basically apply the logic you already use in the languages you know to Python code, and you'll be done. You can use online tutorials on YouTube or free online PDF books to get a good grasp of the basics.

Leverage Your Programming Experience: Like I mentioned, Python shares similarities with many languages, so relate Python concepts to what you already know. For example, understand Python data types and structures in comparison to those in Java, C++, or JavaScript.

Projects and Practice: I sing this on my blog, but practice is crucial. Start small projects or challenges to apply your Python knowledge. It depends on what you want to build, e.g. console apps, games, websites, etc. Just build something small every so often!
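Following the second tip, one way to relate Python's built-in types to what you already know is a side-by-side map. The sketch below is Java with the Python equivalent in each comment (the class and method names are hypothetical). The pairings are approximate analogies, not exact equivalences: for example, a Python list can hold objects of any type, while a Java `List<Integer>` cannot.

```java
import java.math.BigInteger;
import java.util.ArrayList;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Rough Java counterparts of Python built-in types (Python shown in comments).
class PythonEquivalents {
    // Python: nums = [3, 1, 2]; nums.append(4)   (list: a growable array)
    static List<Integer> list() {
        List<Integer> nums = new ArrayList<>(List.of(3, 1, 2));
        nums.add(4);
        return nums;
    }

    // Python: ages = {"ana": 30, "raj": 25}
    // dicts keep insertion order (guaranteed since Python 3.7), like LinkedHashMap.
    static Map<String, Integer> dict() {
        Map<String, Integer> ages = new LinkedHashMap<>();
        ages.put("ana", 30);
        ages.put("raj", 25);
        return ages;
    }

    // Python: seen = {1, 2, 2}   (duplicates collapse, as in a HashSet)
    static Set<Integer> set() {
        return new HashSet<>(List.of(1, 2, 2));
    }

    // Python: point = (1, 2)   (tuple: fixed and immutable, like an unmodifiable List.of)
    static List<Integer> tuple() {
        return List.of(1, 2);
    }

    // Python: 2 ** 100   (Python ints never overflow; Java needs BigInteger)
    static BigInteger bigPower() {
        return BigInteger.TWO.pow(100);
    }
}
```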

Hope this helps! More tips I made: ask 1 | project ideas | random resources


#my asks#codeblr#coding#progblr#programming#studying#studyblr#learn to code#comp sci#tech#programmer#python#resources#python resources

29 notes


Text

It's Not Just An Operator...It's a Ternary Operator!


What is the ternary operator? Why is it such a beloved feature across so many programming languages? If you’ve ever wished you could make your code cleaner, faster, and more elegant, this article is for you. Join us as we dive into the fascinating world of the ternary operator—exploring its syntax, uses, pitfalls, and philosophical lessons—all while sprinkling in humor and examples from different…
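The post's body is truncated, so this is not its code. Here is a minimal Java sketch (class and method names are hypothetical) of the syntax `condition ? valueIfTrue : valueIfFalse`, a chained version, and one Java-specific pitfall: when one branch is a primitive `int` and the other an `Integer`, the result type is `int`, so a null `Integer` is unboxed and throws a `NullPointerException`.

```java
class TernaryDemo {
    // condition ? valueIfTrue : valueIfFalse
    static String parity(int n) {
        return (n % 2 == 0) ? "even" : "odd";
    }

    // Chained ternaries work, but they get hard to read quickly.
    static String sign(int n) {
        return n > 0 ? "positive" : n < 0 ? "negative" : "zero";
    }

    // Pitfall: int and Integer branches make the expression an int,
    // so a null Integer is unboxed and throws NullPointerException.
    static Integer pick(boolean useDefault, Integer maybe) {
        return useDefault ? 0 : maybe;
    }

    // Fix: make both branches Integer so no unboxing happens.
    static Integer pickSafely(boolean useDefault, Integer maybe) {
        return useDefault ? Integer.valueOf(0) : maybe;
    }
}
```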

#application-developement#c-sharp#coding#coding-logic#java#javascript#learn-application-development#micropython#programming#programming-logic#programming-operators#python#software-developement#software-development

0 notes

Text

ByteByteGo | Newsletter/Blog

From the newsletter:

Imperative Programming

Imperative programming describes a sequence of steps that change the program’s state. Languages like C, C++, Java, Python (to an extent), and many others support imperative programming styles.

Declarative Programming

Declarative programming emphasizes expressing logic and functionalities without describing the control flow explicitly. Functional programming is a popular form of declarative programming.
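To contrast the first two paradigms, here is the same task (square the even numbers in a list) written both ways in Java. The class and method names are hypothetical; the declarative version uses the standard Stream API.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.stream.Collectors;

class Paradigms {
    // Imperative: spell out each step and mutate state (the result list) as you go.
    static List<Integer> squaresOfEvensImperative(List<Integer> nums) {
        List<Integer> result = new ArrayList<>();
        for (int n : nums) {
            if (n % 2 == 0) {
                result.add(n * n);
            }
        }
        return result;
    }

    // Declarative: describe what you want; the stream handles the looping.
    static List<Integer> squaresOfEvensDeclarative(List<Integer> nums) {
        return nums.stream()
                .filter(n -> n % 2 == 0)
                .map(n -> n * n)
                .collect(Collectors.toList());
    }
}
```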

Object-Oriented Programming (OOP)

Object-oriented programming (OOP) revolves around the concept of objects, which encapsulate data (attributes) and behavior (methods or functions). Common object-oriented programming languages include Java, C++, Python, Ruby, and C#.

Aspect-Oriented Programming (AOP)

Aspect-oriented programming (AOP) aims to modularize concerns that cut across multiple parts of a software system. AspectJ is one of the most well-known AOP frameworks that extends Java with AOP capabilities.
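AspectJ needs its own compiler or weaver, so as a dependency-free illustration of the same idea, the sketch below swaps in the JDK's `java.lang.reflect.Proxy`: a cross-cutting concern (recording every call) is written once and wrapped around an object rather than copied into each method. This is not AspectJ's API, and `Calculator` and `LoggingAspect` are hypothetical names.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Proxy;
import java.util.List;

interface Calculator {
    int add(int a, int b);

    int multiply(int a, int b);
}

class SimpleCalculator implements Calculator {
    public int add(int a, int b) { return a + b; }       // business logic only,
    public int multiply(int a, int b) { return a * b; }  // no logging code here
}

class LoggingAspect {
    // Wraps any implementation of `type` so every method call is recorded in `log`.
    static <T> T withLogging(T target, Class<T> type, List<String> log) {
        InvocationHandler handler = (self, method, args) -> {
            log.add("calling " + method.getName());  // the cross-cutting "advice"
            try {
                return method.invoke(target, args);
            } catch (InvocationTargetException e) {
                throw e.getCause();  // rethrow the target's own exception
            }
        };
        Object wrapped = Proxy.newProxyInstance(
                type.getClassLoader(), new Class<?>[] {type}, handler);
        return type.cast(wrapped);
    }
}
```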

Functional Programming

Functional Programming (FP) treats computation as the evaluation of mathematical functions and emphasizes the use of immutable data and declarative expressions. Languages like Haskell, Lisp, Erlang, and some features in languages like JavaScript, Python, and Scala support functional programming paradigms.
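A small Java sketch of these ideas (records need Java 16 or later; all names and numbers are hypothetical): an immutable record, a pure function that returns a new value instead of mutating its input, and function composition with `Function.andThen`.

```java
import java.util.List;
import java.util.function.Function;

class FunctionalStyle {
    // Immutable data: a record's fields cannot be reassigned after construction.
    record Item(String name, double price) {}

    // Pure function: the output depends only on the inputs, and the input is not modified.
    static Item withDiscount(Item item, double rate) {
        return new Item(item.name(), item.price() * (1 - rate));
    }

    // Functions as values: a new function built by composing two existing ones.
    static final Function<Double, Double> addTax = p -> p * 1.10;
    static final Function<Double, Double> roundToCents = p -> Math.round(p * 100) / 100.0;
    static final Function<Double, Double> finalPrice = addTax.andThen(roundToCents);

    // Combine values with a stream instead of a mutable accumulator variable.
    static double total(List<Item> items) {
        return items.stream().mapToDouble(Item::price).sum();
    }
}
```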

Reactive Programming

Reactive Programming deals with asynchronous data streams and the propagation of changes. Event-driven applications and streaming data processing applications benefit from reactive programming.
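As a minimal sketch of reactive-style processing using only the JDK, the example below uses `java.util.concurrent.SubmissionPublisher`, an implementation of the Flow API added in Java 9. Readings are pushed into the publisher and a subscriber reacts to each one asynchronously; the class name, the temperature data, and the 30-degree threshold are hypothetical.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.SubmissionPublisher;

class ReactiveDemo {
    // Pushes readings through a Flow publisher and returns the alerts the subscriber raised.
    static List<String> run(List<Integer> temperatures) {
        // Thread-safe list: the subscriber runs on a different thread than the caller.
        List<String> alerts = new CopyOnWriteArrayList<>();
        SubmissionPublisher<Integer> publisher = new SubmissionPublisher<>();

        // Subscribe a consumer that reacts to each reading as it arrives.
        CompletableFuture<Void> done = publisher.consume(t -> {
            if (t > 30) {
                alerts.add("hot: " + t);
            }
        });

        temperatures.forEach(publisher::submit);  // emit the stream of events
        publisher.close();                        // signal completion to the subscriber
        done.join();                              // wait until every item is handled
        return alerts;
    }
}
```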

Generic Programming

Generic Programming aims at creating reusable, flexible, and type-independent code by allowing algorithms and data structures to be written without specifying the types they will operate on. Generic programming is extensively used in libraries and frameworks to create data structures like lists, stacks, and queues, and algorithms like sorting and searching.
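A short sketch of both uses the paragraph mentions, with hypothetical names: a stack data structure written once for any element type `T`, and a `max` algorithm that works for any type whose values can be compared.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.NoSuchElementException;

// One stack implementation that works for any element type T.
class GenericStack<T> {
    private final List<T> items = new ArrayList<>();

    void push(T item) {
        items.add(item);
    }

    T pop() {
        if (items.isEmpty()) {
            throw new NoSuchElementException("stack is empty");
        }
        return items.remove(items.size() - 1);
    }

    boolean isEmpty() {
        return items.isEmpty();
    }

    // A generic algorithm: works for any type whose values can be compared.
    static <E extends Comparable<E>> E max(List<E> values) {
        if (values.isEmpty()) {
            throw new NoSuchElementException("no values");
        }
        E best = values.get(0);
        for (E v : values) {
            if (v.compareTo(best) > 0) {
                best = v;
            }
        }
        return best;
    }
}
```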

Concurrent Programming

Concurrent programming deals with the execution of multiple tasks or processes simultaneously, improving performance and resource utilization. Concurrent programming is utilized in various applications, including multi-threaded servers, parallel processing, concurrent web servers, and high-performance computing.
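This minimal Java sketch uses an ExecutorService from java.util.concurrent. The range 1..n is split into chunks, each chunk is summed as a separate task on a fixed pool of threads, and the partial results are then combined. The method name and chunking scheme are assumptions chosen for illustration. For a sum this small, the threading overhead outweighs any gain.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class ConcurrentSum {
    // Sums 1..n by splitting the range into chunks that run concurrently on a thread pool.
    static long parallelSum(int n, int threads) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            List<Future<Long>> parts = new ArrayList<>();
            int chunk = Math.max(1, (n + threads - 1) / threads); // ceiling division
            for (int start = 1; start <= n; start += chunk) {
                final int lo = start;
                final int hi = Math.min(n, start + chunk - 1);
                parts.add(pool.submit(() -> { // each chunk is an independent task
                    long sum = 0;
                    for (int i = lo; i <= hi; i++) {
                        sum += i;
                    }
                    return sum;
                }));
            }
            long total = 0;
            for (Future<Long> part : parts) {
                total += part.get(); // blocks until that task has finished
            }
            return total;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(parallelSum(1_000_000, 4)); // prints 500000500000
    }
}
```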

#bytebytego#resource#programming#concurrent#generic#reactive#funtional#aspect#oriented#aop#fp#object#oop#declarative#imperative

8 notes


Text

I'm fairly certain this is just some kind of fork bomb.

or a weaponized logic error based on how ~ATH is described. I mean, it seems extremely characteristic of the language for whoever made it to force it to compile regardless of whether the code has an error.

seriously, look at this and tell me that forcing a logic error isn't the easiest way to make it do anything.

as for the curse thing I have no idea. neither THIS nor NULL is ever defined. in Java, null is a reference that points to no object at all, but that might not be true in ~ATH. Karkat uses it in his program too but he doesn't explain if it's supposed to do anything. he says he's just horsing around with terminating loops, so the assumption would be that it's a placeholder value, but Sollux's is complete. it's really unclear what part of the code is supposed to affect the user themself, but it might just be that whatever hellish technology Alternian computers run on is just able to do that and the logic error targets that.
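For comparison only (this says nothing about how ~ATH works, since it's fictional), here's roughly how null behaves in actual Java. A variable can hold null and you can check for it, but calling a method through it throws a NullPointerException at runtime. NullDemo is a made-up class name.

```java
class NullDemo {
    // A null-safe helper: treat "no string at all" as length 0.
    static int safeLength(String s) {
        return (s == null) ? 0 : s.length();
    }

    // Calling a method through a null reference fails at runtime, not at compile time.
    static boolean throwsOnNull() {
        String s = null; // s refers to no object at all
        try {
            s.length();
            return false;
        } catch (NullPointerException e) {
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println(safeLength(null)); // prints 0
        System.out.println(throwsOnNull());   // prints true
    }
}
```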

2 notes


Text

Close to being done with my programming class and to be honest, I don't know how much I learned. I mean, I guess I now know the syntax of Java, but only the basics, and a lot of that is the same or similar to C++. Java just has a lot more thing.otherThing, which is kind of annoying. C++ has a weird way of doing input and output, but I like it. Loops and ifs are basically identical for both. Function creation and calling is the same. Basically all of the logic and operations are the same.

It makes sense, since that consistency helps with learning more programming languages, but it didn't feel like I was learning much.

I still don't like printf, even though I have a massive appreciation for what it does. I just hate the way it works.
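For anyone else wrestling with it, here's a small sketch of Java's printf-style formatting. The receipt and table helpers are made up for the example. %s, %d, %.2f, %-8s, %5d, and %n are standard java.util.Formatter conversions, and passing Locale.ROOT keeps the decimal point from turning into a comma on some systems.

```java
import java.util.Locale;

class PrintfDemo {
    // %s = string, %d = integer, %.2f = floating point with 2 decimal places.
    static String receiptLine(String item, int qty, double unitPrice) {
        return String.format(Locale.ROOT, "%s x%d costs $%.2f", item, qty, qty * unitPrice);
    }

    // %-8s = left-align in 8 columns, %5d = right-align in 5 columns.
    static String tableRow(String item, int qty) {
        return String.format(Locale.ROOT, "%-8s|%5d|", item, qty);
    }

    public static void main(String[] args) {
        // printf = format + print; %n is the platform's line separator
        System.out.printf(Locale.ROOT, "%s%n", receiptLine("coffee", 3, 2.5)); // coffee x3 costs $7.50
        System.out.println(tableRow("coffee", 3));                             // coffee  |    3|
    }
}
```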

8 notes


Text

Normally I just post about movies but I'm a software engineer by trade so I've got opinions on programming too.

Apparently it's a month of code or something because my dash is filled with people trying to learn Python. And that's great, because Python is a good language with a lot of support and job opportunities. I've just got some scattered thoughts that I thought I'd write down.

Python abstracts away a number of useful concepts. That makes it easier to use, but it also means that if you don't understand the concepts then things might go wrong in ways you didn't expect. Memory management and pointer logic are so damn annoying, but you need to understand them. I learned these concepts by learning C++; hopefully there's an easier way these days.
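Java hides raw pointers too, but variables that hold objects are still references, and that's where the "things go wrong in ways you didn't expect" part tends to show up. Here's a small hypothetical sketch of aliasing (Python lists behave the same way):

```java
import java.util.ArrayList;
import java.util.List;

class AliasingDemo {
    // Assigning a reference copies the pointer, not the object: both names see one list.
    static List<String> aliasThenAppend() {
        List<String> original = new ArrayList<>(List.of("a"));
        List<String> alias = original; // same object, second name
        alias.add("b");
        return original;               // [a, b]: the change shows up through "original" too
    }

    // Making an explicit copy gives an independent object.
    static List<String> copyThenAppend() {
        List<String> original = new ArrayList<>(List.of("a"));
        List<String> copy = new ArrayList<>(original); // new list with the same elements
        copy.add("b");
        return original;               // [a]: unaffected
    }

    public static void main(String[] args) {
        System.out.println(aliasThenAppend()); // prints [a, b]
        System.out.println(copyThenAppend());  // prints [a]
    }
}
```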

Data structures and algorithms are the bread and butter of any real work (and they're pretty much all that come up in interviews) and they're language agnostic. If you don't know how to traverse a linked list, how to use recursion, what a hash map is for, etc. then you don't really know how to program. You'll pretty much never need to implement any of them from scratch, but you should know when to use them; think of them like building blocks in a Lego set.
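Here's a compact Java sketch of three of those building blocks: traversing a singly linked list, the same sum written recursively, and a HashMap used to count words. Node and the method names are hypothetical. In practice you'd mostly use the java.util collections rather than rolling your own.

```java
import java.util.HashMap;
import java.util.Map;

class BuildingBlocks {
    // A minimal singly linked list node (immutable for simplicity).
    static final class Node {
        final int value;
        final Node next;

        Node(int value, Node next) {
            this.value = value;
            this.next = next;
        }
    }

    // Traversal: follow the next references until we fall off the end (null).
    static int sumIterative(Node head) {
        int total = 0;
        for (Node n = head; n != null; n = n.next) {
            total += n.value;
        }
        return total;
    }

    // Recursion: the sum of a list is its first value plus the sum of the rest.
    static int sumRecursive(Node head) {
        return (head == null) ? 0 : head.value + sumRecursive(head.next);
    }

    // Hash map: near constant-time lookups make counting occurrences easy.
    static Map<String, Integer> countWords(String text) {
        Map<String, Integer> counts = new HashMap<>();
        for (String word : text.split("\\s+")) {
            if (!word.isEmpty()) {
                counts.merge(word, 1, Integer::sum); // insert 1 or add 1 to the existing count
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        Node list = new Node(1, new Node(2, new Node(3, null)));
        System.out.println(sumIterative(list) + " " + sumRecursive(list)); // prints "6 6"
        System.out.println(countWords("to be or not to be").get("to"));    // prints 2
    }
}
```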

Learning a new language is a hell of a lot easier after your first one. Going from Python to Java is mostly just syntax differences. Even "harder" languages like C++ mostly just mean more boilerplate while doing the same things. Learning a new spoken language is hard, but learning a new programming language is generally closer to learning some new slang or a new accent. Lists in Python are called Vectors in C++, just like how french fries are called chips in London. If you know all the underlying concepts that are common to most programming languages then it's not a huge jump to a new one, at least if you're only doing all the most common stuff. (You will get tripped up by some of the minor differences, though. Popping an item off of a stack in Python returns the element, but in C++ it returns nothing; you have to read it with top() first. Definitely had a program fail due to that issue.)
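For reference, Java's pop does return the removed element. It's C++'s std::stack::pop() that returns void and has to be paired with top(). Here's a quick Java sketch using ArrayDeque, which the Java docs recommend over the older Stack class:

```java
import java.util.ArrayDeque;
import java.util.Deque;

class StackPopDemo {
    static String pushPeekPop() {
        Deque<String> stack = new ArrayDeque<>(); // Deque is the recommended stack type in Java
        stack.push("first");
        stack.push("second");
        String top = stack.peek();   // read the top without removing it (like C++ top())
        String popped = stack.pop(); // removes AND returns the top element
        return top + "," + popped + "," + stack.peek();
    }

    public static void main(String[] args) {
        System.out.println(pushPeekPop()); // prints "second,second,first"
    }
}
```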

The above is not true for new paradigms. Python, C++ and Java are all imperative languages. You move to something functional like Haskell and you need a completely different way of thinking. JavaScript (not in any way related to Java) has callbacks and I still don't quite have a good handle on them. Hardware languages like VHDL are all synchronous; every line of code in a program runs at the same time! That's a new way of thinking.
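A callback is just a function you hand to some other code so it can call you back later. Java doesn't have JavaScript's event loop, but the idea can be sketched with CompletableFuture (Java 8+), where the callback is a Consumer that runs once the value is ready. fetchGreeting is a hypothetical stand-in for a slow network call.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.function.Consumer;

class CallbackDemo {
    // Hypothetical async "fetch": instead of returning the greeting, it hands it to onDone later.
    static CompletableFuture<Void> fetchGreeting(String name, Consumer<String> onDone) {
        return CompletableFuture
                .supplyAsync(() -> "Hello, " + name) // runs on a background thread
                .thenAccept(onDone);                  // callback fires once the value is ready
    }

    public static void main(String[] args) {
        List<String> received = new CopyOnWriteArrayList<>();
        CompletableFuture<Void> pending = fetchGreeting("Ada", received::add);
        System.out.println("this line may print before the callback runs");
        pending.join();               // wait so the program doesn't exit before the callback fires
        System.out.println(received); // prints [Hello, Ada]
    }
}
```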

Python is stereotyped as a scripting language good only for glue programming or prototypes. It's excellent at those, but I've worked at a number of (successful) startups that all ran Python on the backend. Python is robust enough and fast enough to be used for basically anything at this point, except maybe embedded programming. If you do need the fastest speed possible then you can still drop in some raw C++ for the places you need it (one place I worked at had one very important piece of code in C++ because even milliseconds mattered there, but everything else was Python). The speed differences between Python and C++ are so much smaller these days that you only need them at the scale of the really big companies. It makes sense for Google to use C++ (and they use their own version of it to boot), but any company with fewer than 100 engineers is probably better off with Python in almost all cases. Honestly though, the best programming language is the one you like, and the one that you're good at.

Design patterns mostly don't matter. They really were only created to make up for language failures of C++; Peter Norvig famously argued that 16 of the 23 patterns in the original design patterns book are invisible or much simpler in dynamic languages like Lisp. C++ was just really popular while also being kinda bad, so they were necessary. I don't think I've ever once thought about consciously using a design pattern since even before I graduated. Object-oriented design is mostly in the same place. You'll use classes because they're a useful way to structure things, but multiple inheritance and polymorphism and all the other terms you've learned really don't come into play too often, and when they do you use the simplest possible form of them. Code should be simple and easy to understand, so make it as simple as possible. As far as inheritance goes, the most I'm willing to do is to have a class with abstract functions (i.e. classes where some functions are empty but are expected to be filled out by the child class), but even then there are usually good alternatives to this.
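Here's a minimal Java sketch of the one form of inheritance described above, a parent class with abstract methods that a child fills in. Next to it is one of the alternatives: passing the varying part in as a function. All class names here are hypothetical.

```java
import java.util.function.Supplier;

class ReportDemo {
    public static void main(String[] args) {
        System.out.println(new SalesReport().render());                   // prints "[Sales] 42 units"
        System.out.println(ReportAlt.render("Sales", () -> "42 units")); // same output, no inheritance
    }
}

// Parent class: render() is shared; title() and body() are left for children to fill in.
abstract class Report {
    final String render() {
        return "[" + title() + "] " + body();
    }

    abstract String title(); // no body here; every concrete subclass must supply one
    abstract String body();
}

class SalesReport extends Report {
    @Override
    String title() { return "Sales"; }

    @Override
    String body() { return "42 units"; }
}

// One alternative: pass the varying piece in as a function instead of subclassing.
class ReportAlt {
    static String render(String title, Supplier<String> body) {
        return "[" + title + "] " + body.get();
    }
}
```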

Related to the above: simple is best. Simple is elegant. If you solve a problem with 4000 lines of code using a bunch of esoteric data structures and language quirks, but someone else did it in 10, then I'll pick the 10. On the other hand, a one-liner function that requires a lot of unpacking, like a Python function with a bunch of nested lambdas, might be easier to read if you split it up a bit more. Time to read and understand the code is the most important metric, more important than runtime or memory use. You can optimize for the other two later if you have to, but simplicity has to prevail on the first pass; otherwise it's going to be hard for other people to understand. In fact, it'll be hard for you to understand too when you come back to it 3 months later without any context.
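Here's a Java version of the same trade-off, since Python's nested lambdas have a close cousin in long stream pipelines. Both hypothetical methods below produce the same result. The second one just gives each step its own line and name. String.isBlank needs Java 11+.

```java
import java.util.List;
import java.util.stream.Collectors;

class ReadabilityDemo {
    // Dense version: correct, but the reader has to unpack everything in one go.
    static String dense(List<String> names) {
        return names.stream().filter(n -> !n.isBlank()).map(n -> n.trim().substring(0, 1).toUpperCase() + n.trim().substring(1).toLowerCase()).sorted().collect(Collectors.joining(", "));
    }

    // Same behavior, split into named steps that can each be read (and tested) on their own.
    static String readable(List<String> names) {
        return names.stream()
                .filter(name -> !name.isBlank()) // drop empty entries
                .map(ReadabilityDemo::capitalize)
                .sorted()
                .collect(Collectors.joining(", "));
    }

    // "  aLiCe " -> "Alice"; assumes the name is not blank.
    private static String capitalize(String name) {
        String trimmed = name.trim();
        return trimmed.substring(0, 1).toUpperCase() + trimmed.substring(1).toLowerCase();
    }

    public static void main(String[] args) {
        List<String> names = List.of("bob", "  ALICE ", "", "carol");
        System.out.println(readable(names)); // prints "Alice, Bob, Carol"
    }
}
```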

Note that I've cut a few things for simplicity. For example: VHDL doesn't quite require every line to run at the same time, but it's still a major paradigm of the language that isn't present in most other languages.

Ok that was a lot to read. I guess I have more to say about programming than I thought. But the core ideas are: Python is pretty good, other languages don't need to be scary, learn your data structures and algorithms and above all keep your code simple and clean.

#programming#python#software engineering#java#java programming#c++#javascript#haskell#VHDL#hardware programming#embedded programming#month of code#design patterns#common lisp#google#data structures#algorithms#hash table#recursion#array#lists#vectors#vector#list#arrays#object oriented programming#functional programming#iterative programming#callbacks

20 notes
