#NVIDIAGPU

Text

Linux GPU Server Hosting: Power Your AI & High-Performance Workloads

In the era of AI, machine learning, deep learning, and high-performance computing (HPC), having a powerful Linux GPU Server is essential. Whether you're training neural networks, rendering 3D graphics, or running complex simulations, a GPU-accelerated server delivers unmatched performance.

At CloudMinister Technologies, we provide high-performance Linux GPU Server Hosting with top-tier NVIDIA GPUs, optimized for speed and efficiency.

What is a Linux GPU Server?

A Linux GPU Server is a dedicated or virtual private server equipped with Graphics Processing Units (GPUs) to accelerate parallel computing tasks. Unlike traditional CPUs, GPUs excel at handling multiple computations simultaneously, making them ideal for:

- AI & Machine Learning (TensorFlow, PyTorch)
- Deep Learning & Neural Networks
- Big Data Analytics
- 3D Rendering & Video Processing
- Scientific Simulations & HPC
- Blockchain & Cryptocurrency Mining

Why Choose a Linux GPU Server?

1. Unmatched Computational Power

Modern GPUs contain thousands of cores, which can drastically reduce processing time for highly parallel workloads compared to CPUs.

2. Cost-Effective for AI & Deep Learning

Training AI models on CPUs can take days or weeks. With a Linux GPU Server, many training tasks run 10-100x faster, depending on the model and hardware, saving both time and costs.

3. Optimized for Parallel Processing

Tasks like image recognition, natural language processing (NLP), and financial modeling benefit from GPU acceleration.

4. Full Control & Customization

With root access, you can install CUDA, cuDNN, and other GPU-optimized libraries to fine-tune performance.
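After installing the driver and CUDA libraries, it helps to confirm that the GPU is actually visible before tuning anything else. The sketch below is a minimal Python example, assuming the NVIDIA driver (which provides the `nvidia-smi` tool) is installed; the choice of queried fields is ours, not CloudMinister's.

```python
import shutil
import subprocess

# Fields requested from nvidia-smi's query interface (an illustrative choice).
QUERY_FIELDS = ["name", "driver_version", "memory.total"]


def parse_gpu_csv(text: str) -> list[dict]:
    """Parse `--format=csv,noheader,nounits` output into one dict per GPU."""
    gpus = []
    for line in text.strip().splitlines():
        if not line.strip():
            continue
        values = [v.strip() for v in line.split(",")]
        gpu = dict(zip(QUERY_FIELDS, values))
        gpu["memory.total"] = int(gpu["memory.total"])  # MiB, because nounits is set
        gpus.append(gpu)
    return gpus


def query_gpus() -> list[dict]:
    """Return installed NVIDIA GPUs, or an empty list if nvidia-smi is absent."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={','.join(QUERY_FIELDS)}",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_csv(result.stdout)


if __name__ == "__main__":
    gpus = query_gpus()
    if not gpus:
        print("nvidia-smi not found: check that the NVIDIA driver is installed.")
    for gpu in gpus:
        print(f"{gpu['name']} | driver {gpu['driver_version']} | {gpu['memory.total']} MiB")
```

Parsing is kept separate from the subprocess call so the same function can be reused on saved `nvidia-smi` output, for example in a provisioning script.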

5. Scalability & Flexibility

CloudMinister offers scalable GPU servers, allowing you to upgrade resources as your computational needs grow.

Who Needs a Linux GPU Server?

- AI/ML Researchers & Data Scientists – Faster model training & inference.
- Game Developers & 3D Artists – Real-time rendering & simulations.
- Blockchain Developers – Efficient mining & transaction processing.
- Universities & Research Labs – Running complex scientific computations.
- Video Production Studios – High-speed video encoding/decoding.

Why Choose CloudMinister for Linux GPU Server Hosting?

- NVIDIA GPU-Powered Servers (Tesla, A100, RTX)
- High-Speed NVMe SSD Storage
- 24/7 Technical Support
- Customizable Configurations (Single/Multi-GPU setups)
- High-Bandwidth Network (Low-latency connectivity)

Get Started with Linux GPU Servers Today!

Unlock the full potential of GPU-accelerated computing with CloudMinister’s Linux GPU Servers.

Text

Build Your Affordable Home AI Lab: The Ultimate Setup Blueprint

Ever dreamed of having your very own AI lab right at home? Well, now you can! This video is your ultimate blueprint to building an affordable home AI lab for artificial intelligence and machine learning projects. No need to be a super tech whiz – we'll walk you through everything step-by-step.

We'll cover the important computer parts you need, like good graphics cards (GPU), powerful processors (CPU), memory (RAM), and even how to keep things cool. Then, we'll talk about the software to make it all work, like Ubuntu, PyTorch, and tools for building cool stuff like chatbots and seeing things with computer vision.

Why build your own AI lab? Because cloud computing can get super expensive! With your own setup, you get total control, keep your projects private, and save a lot of money in the long run. Whether you're just starting, a student, or a seasoned pro, this guide has tips and real-life stories to help you succeed.

The AI revolution is here, and you can be a part of it from your own house! Get ready to train your own models, play with large language models (LLMs), and create amazing AI projects at home.
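Whether an LLM will run on a given graphics card depends mostly on VRAM. As a rough rule of thumb (our assumption, not from the video), the weights alone need about parameter count × bytes per parameter. The sketch below applies that rule to a hypothetical 7-billion-parameter model.

```python
# Approximate bytes needed to store one parameter at common precisions
# (int4 ignores the small overhead of quantization scales).
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weights_gb(num_params: float, precision: str = "fp16") -> float:
    """Memory for model weights alone, in decimal gigabytes (1 GB = 1e9 bytes).

    Excludes the KV cache, activations, and framework overhead, so real
    usage will be higher.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # A hypothetical 7-billion-parameter model at several precisions.
    for precision in ("fp16", "int8", "int4"):
        print(f"7B @ {precision}: ~{weights_gb(7e9, precision):.1f} GB")
```

Actual usage runs higher because of the KV cache and runtime overhead, so treat these numbers as a floor when comparing GPUs.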

#AILab#HomeAILab#ArtificialIntelligence#MachineLearning#DeepLearning#GPU#AIRevolution#DataScience#BuildAI#DIYAI#TechSetup#BuildAILab#AIAtHome#AIStartUp#NvidiaGpu#AIDevelopment#GenerativeAI#LLMTraining#DockerAI#OpenAI#HuggingFace#MLProjects#AITech#AILabSetup#Coding#Python#PyTorch#TensorFlow#LLMs#ComputerVision

Text

Home AI Lab 2025 – Create Your Own Machine Learning Lab #shorts

Ready to bring AI into your own home? In this ultimate guide to creating a home AI lab, we demystify everything you'll need to build your own robust, scalable, and affordable AI workspace, right out of your living room or garage. Whether you are an inquisitive new user, a student, or an experienced developer, this video walks you through the key hardware pieces (such as powerful GPUs, CPUs, RAM, and cooling) and the optimal software stack (Ubuntu, PyTorch, Hugging Face, Docker, etc.) to easily run deep learning models. Learn how to train your own models, fine-tune LLMs, delve into computer vision, and even create your own chatbots. With cloud computing prices skyrocketing, having a home AI lab gives you autonomy, confidentiality, and mastery over your projects and experiments. Along the way, you'll hear real-world stories and pro advice to take you from novice to expert. The home AI lab revolution has arrived. Are you in? Subscribe now and get creating.
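Fine-tuning needs far more memory than inference. A commonly cited rule of thumb for full fine-tuning with the Adam optimizer in mixed precision is about 16 bytes per parameter before activations. The sketch below assumes that breakdown and applies it to a hypothetical 7B model. It is an estimate, not a measurement.

```python
# Per-parameter bytes for full fine-tuning with Adam in mixed precision
# (a common rule of thumb; activations and temporary buffers are NOT included).
MIXED_PRECISION_ADAM_BYTES = {
    "fp16 weights": 2,
    "fp16 gradients": 2,
    "fp32 master weights": 4,
    "Adam first moment (fp32)": 4,
    "Adam second moment (fp32)": 4,
}


def full_finetune_gb(num_params: float) -> float:
    """Lower-bound memory in decimal GB for weights, gradients, and optimizer state."""
    bytes_per_param = sum(MIXED_PRECISION_ADAM_BYTES.values())  # 16 bytes
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    # A hypothetical 7B model, before counting activations.
    print(f"~{full_finetune_gb(7e9):.0f} GB")
```

Even this lower bound exceeds the VRAM of a single consumer GPU, which is worth knowing before planning a home fine-tuning setup.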

#homeailab#buildailab#aiathome#deeplearning#machinelearning#aistartup#pytorch#tensorflow#nvidiagpu#aidevelopment#generativeai#computerVision#llmtraining#dockerai#openai#huggingface#mlprojects#artificialintelligence#aitech#ailabsetup#Youtube

Text

#AAEON#AIInference#EdgeComputing#DualGPU#IntelCore#NVIDIAGPU#MAXER5100#EmbeddedSystems#Innovation#powerelectronics#powermanagement#powersemiconductor

Text

AMD vs NVIDIA GPU: Which is a better buy, AMD or Nvidia?

AMD vs. NVIDIA GPUs

In 2025, AMD Radeon and Nvidia GeForce continue to compete on breakthrough GPU technology for professionals, gamers, and creators. Which one is the "better" choice depends on features, performance, affordability, and efficiency.

AMD's Radeon RX 7000 series has boosted competition, but Nvidia still leads. Nvidia dominates the high-end market with AI-powered features and software ecosystems, while AMD offers strong mid-range pricing.

Gaming Performance

Entry-level cards such as the AMD RX 7600 and Nvidia RTX 4060 can exceed 60 fps at 1080p.

Most top mid-range cards reach 60 fps at 1440p. In Cyberpunk 2077 at high settings, the AMD RX 7700 XT reached 79 fps, outperforming the Nvidia RTX 4070 at 72 fps. Overall, 1080p and 1440p gaming performance is comparable between AMD and Nvidia.

Nvidia shines at 4K gaming and ray tracing. The RTX 4090 is the best 4K card, while AMD's RX 7900 XTX is also strong: at 4K extreme settings, Cyberpunk 2077 ran at 71 fps on the RX 7900 XTX and 67 fps on the RTX 4080 Super. The gap shows once ray tracing is enabled at 4K.

Nvidia leads in ray tracing. Its GPUs use dedicated RT cores, while AMD's Ray Accelerators handle intersection tests in hardware but leave more of the work, such as BVH traversal, to the shader cores. Nvidia's approach is faster, while AMD's delivers ray tracing at a lower cost. Nvidia's drivers also tend to perform better for ray tracing, so buyers who prioritise it usually choose Nvidia.

Nvidia's Deep Learning Super Sampling (DLSS) uses AI to upscale images and raise frame rates. DLSS predates AMD's equivalent and reconstructs images more accurately, although FSR may raise frame rates more. DLSS is especially valuable at 4K, where native rendering is most demanding. DLSS 3.5 adds Ray Reconstruction, an AI-based denoiser that improves the image quality of ray-traced effects.

AMD FidelityFX Super Resolution (FSR) renders at a lower resolution, then upscales and sharpens the image. FSR is open-source and runs on more devices, including non-AMD GPUs. Although FSR is improving, sources indicate it does not match DLSS in performance or image quality, especially at lower resolutions.
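Both DLSS and FSR gain speed by shading fewer pixels and reconstructing the output at full resolution. The sketch below shows that arithmetic. The preset scale factors are approximations we assume here, not values stated in this post, and exact factors differ slightly between DLSS and FSR versions.

```python
# Typical per-axis scale factors for upscaler quality presets (approximate).
PRESET_SCALE = {
    "quality": 1.5,
    "balanced": 1.7,
    "performance": 2.0,
    "ultra_performance": 3.0,
}


def render_resolution(out_w: int, out_h: int, preset: str) -> tuple[int, int]:
    """Internal resolution the game renders at before the upscaler runs."""
    scale = PRESET_SCALE[preset]
    return round(out_w / scale), round(out_h / scale)


def pixel_fraction(preset: str) -> float:
    """Share of output pixels actually shaded each frame (the scale applies per axis)."""
    return 1 / PRESET_SCALE[preset] ** 2


if __name__ == "__main__":
    for preset in PRESET_SCALE:
        w, h = render_resolution(3840, 2160, preset)
        print(f"4K {preset}: renders {w}x{h} ({pixel_fraction(preset):.0%} of pixels)")
```

Because the scale applies to both axes, "performance" mode at 4K renders roughly a quarter of the pixels. This is why upscaling helps most at high output resolutions.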

Nvidia's Frame Generation technology can raise FPS but may also increase input lag and introduce artefacts, especially at lower resolutions. AMD's counterpart is the frame generation in FSR 3, along with driver-level AMD Fluid Motion Frames (AFMF).

Both vendors offer adaptive sync, which matches the display's refresh rate to the GPU's frame rate to prevent stuttering and screen tearing. G-Sync monitors cost more than FreeSync ones, and FreeSync works with more monitors, but G-Sync may perform better and be more stable. Many modern TVs and monitors work with both, even when not officially certified.

Compatibility and Software:

AMD's Adrenalin Software puts its settings in a single overlay menu, which some users find convenient. Nvidia's new control panel consolidates some settings, but others remain split across separate apps. Because Nvidia has the larger user base, software developers tend to optimise for its cards.

GeForce Experience makes driver updates and game optimisation smooth. AMD cards occasionally perform poorly or have compatibility issues in particular apps, though rarely in games.

Nvidia dominates video production, AI, and 3D modelling (3ds Max, Maya). Nvidia cards offer hardware-accelerated media encoding and decoding as well as CUDA, which speeds up AI/ML workloads and creative applications. AMD cards also include hardware media engines and remain capable, but their encoding quality, performance, and stability in professional apps generally trail Nvidia's. Professional 3D software supports Nvidia cards better than AMD cards.

AMD consistently leads in value: if frames per dollar matter more to you than ray tracing, AMD is the better buy. Most AMD GPUs are cheaper than their Nvidia counterparts; despite costing $130 less, the RX 7700 XT delivered slightly higher FPS than the RTX 4070. AMD's high-end GPUs are also cheaper than Nvidia's, and the RX 6650 XT and RX 6600 are good budget options.
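The value comparison above comes down to simple arithmetic. The minimal sketch below uses the Cyberpunk 2077 figures from this post. The prices are hypothetical placeholders, not quoted prices, so substitute current ones before drawing conclusions.

```python
def percent_faster(fps_a: float, fps_b: float) -> float:
    """How much faster card A is than card B, in percent."""
    return (fps_a / fps_b - 1) * 100


def frames_per_dollar(fps: float, price_usd: float) -> float:
    """Average frames per second delivered per dollar spent."""
    return fps / price_usd


if __name__ == "__main__":
    # Cyberpunk 2077 at high settings: RX 7700 XT 79 fps, RTX 4070 72 fps.
    print(f"RX 7700 XT is ~{percent_faster(79, 72):.1f}% faster")
    # HYPOTHETICAL prices for illustration only; replace with current prices.
    amd_price, nvidia_price = 420.0, 550.0
    print(f"RX 7700 XT: {frames_per_dollar(79, amd_price):.3f} fps per $")
    print(f"RTX 4070:   {frames_per_dollar(72, nvidia_price):.3f} fps per $")
```

Frames per dollar depends on one benchmark, so averaging over several games gives a fairer picture.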

Pros and Cons:

AMD advantages: a user-friendly interface, better performance per dollar, more VRAM than comparable cards, and lower prices.

AMD drawbacks: higher power draw, hotter operation, weaker ray tracing than Nvidia, and an upscaler (FSR) that is less advanced than DLSS.

Nvidia advantages: a large software ecosystem (CUDA, professional app support), excellent ray tracing and DLSS, frequent driver updates timed with game releases, and cooler operation.

Nvidia drawbacks: resource-intensive drivers, higher prices, and performance concerns at lower resolutions.

In conclusion,

AMD and Nvidia both make great graphics cards, and the differences between them are relatively small. Customers who value professional apps, streaming, ray tracing, and video production often choose Nvidia.

For customers who prioritise frame rates and savings, AMD GPUs are the better and cheaper choice. The decision comes down to finding the right blend of features, performance, and cost for specific needs.

#NVIDIAGPU#AMD#AMDRadeon#NvidiaGeForce#AMDRX7600#DeepLearning#DLSS#AMDGPUs#AMDcards#News#Technews#Technology#Technologynews#govindhtech

Text

#LogicMonitor#EnvisionPlatform#EdwinAI#AIOps#ITOperations#NvidiaGPU#AmazonQBusiness#Kubernetes#GenerativeAI#AIAdoption

Link

#acceleratedcomputing#agenticAI#AI#artificialintelligence#Futurride#GeneralMotors#GTC#Nvidia#NvidiaBlackwell#NvidiaCosmos#NvidiaDriveAGX#NvidiaGPU#NvidiaGTC#NvidiaHalos#NvidiaNIMs#NvidiaOmniverse#physicalAI#sustainablemobility#Toyota

Text

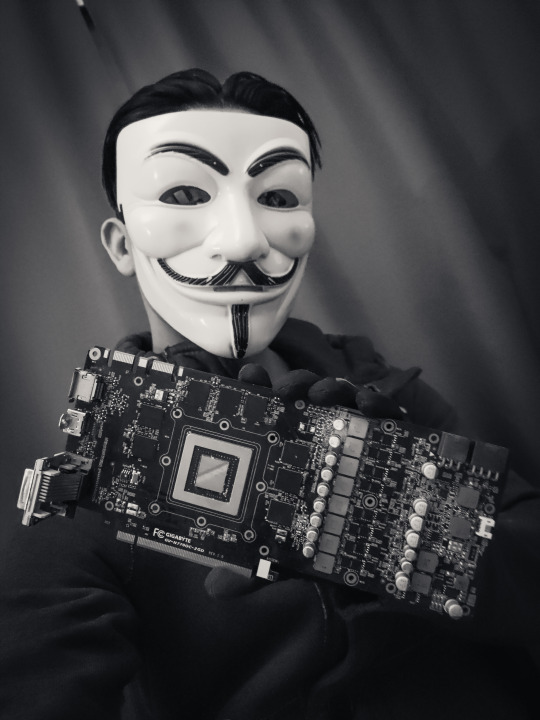

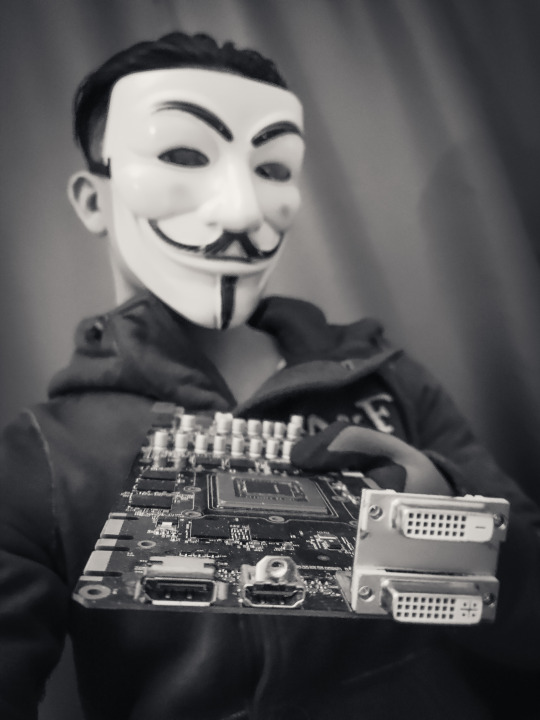

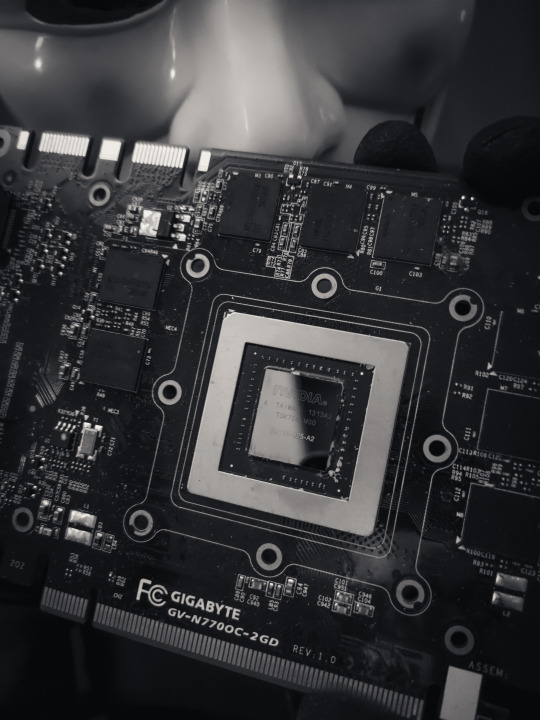

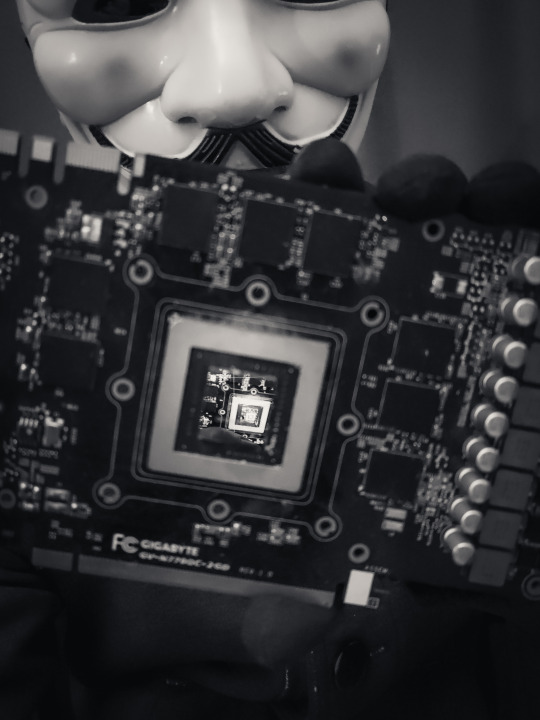

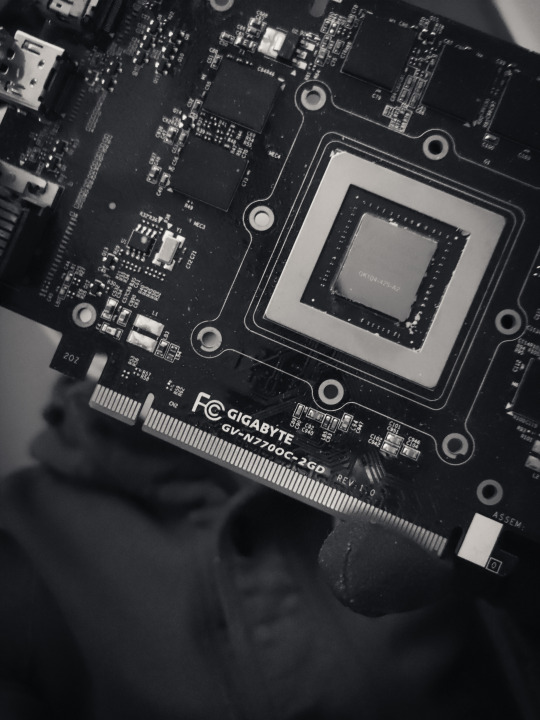

I, @Lusin333, have a 2GB Nvidia Gigabyte GeForce GTX 770 GPU (GV-N770OC-2GD).

I will use this for crypto mining.

Thanks to Gigabyte & Nvidia for giving this graphics card to me FOR FREE.

#gigabyte#gigabytegaming#gigabytegraphicscard#gigabytegpu#nvidia#nvidiageforce#nvidiagaming#nvidiagpu#nvidiagraphics#nvidiagraphicscard#gtx770#ultimategamer#ultimategangster#techgang#techgangster#Lusin333#nvidiartx#nvidiageforcertx#gpu#gpus#graphicscard#graphicscards#gamer#gaming#tech#techstuff#blackandwhite#linustechtips#techmeme#techmemes

Photo

Did you know underground repair shops in China are now servicing Nvidia GPUs banned under export restrictions? They are reportedly fixing up to 500 high-end AI accelerators each month, including A100 and H100 units. Despite export bans, these companies have set up secret facilities to repair and revive these powerful GPUs, which are vital for AI research and supercomputing. This growing underground industry highlights the high demand for, and profitability of, repairing this restricted hardware, even outside official channels. If you're interested in building your own gaming or AI PC, consider custom solutions with trusted hardware. Visit GroovyComputers.ca for custom computer builds tailored to your needs. Our team ensures quality and transparency in every build! Are you surprised that such a vibrant underground repair scene exists for these premium GPUs? Share your thoughts below! #NvidiaGPU #AIhardware #CustomPC #GamingComputer #ComputerBuilds #HighPerformance #AI #GPUs #DataCenter #PCGaming #TechInnovation #GroovyComputers #MadeInCanada

Photo

Did you know that AI expansion plans could require unprecedented amounts of electricity? President Trump recently expressed surprise at the scale needed, saying, "You're going to need more electricity than ever before." With Nvidia's new AI GPUs, such as the Blackwell series, drawing up to 1200 W each, powering these data centers demands enormous amounts of energy. If you're dreaming of building a high-performance gaming or AI-computing setup, consider a custom build that maximizes efficiency without overloading your power supply. GroovyComputers.ca specializes in custom computer builds tailored to your needs, whether for gaming, AI, or data crunching. Are you prepared for the energy demands of next-gen hardware? Tap into the future with a custom PC that balances power and efficiency. Explore your options now! Visit GroovyComputers.ca for expert guidance on custom builds and stay ahead in gaming and AI tech. #AIGaming #CustomPC #NvidiaGPU #HighPerformance #GamingBuilds #AIHardware #TechInnovation #GamingCommunity #HardwareExperts #FutureTech #PCBuilder #CustomComputer #PowerEfficiency
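To put the 1200 W figure in perspective, here is a back-of-envelope sketch. The cluster size is a hypothetical assumption. Only GPU power is counted, so CPUs, networking, and cooling would add more on top.

```python
def cluster_power_mw(num_gpus: int, watts_per_gpu: float) -> float:
    """GPU-only power draw in megawatts (excludes CPUs, networking, cooling)."""
    return num_gpus * watts_per_gpu / 1e6


def daily_energy_mwh(power_mw: float, hours: float = 24.0) -> float:
    """Energy used at a constant draw over the given number of hours, in MWh."""
    return power_mw * hours


if __name__ == "__main__":
    # Hypothetical cluster of 10,000 GPUs at the 1200 W per-GPU figure.
    mw = cluster_power_mw(10_000, 1200)
    print(f"{mw:.1f} MW, {daily_energy_mwh(mw):.0f} MWh per day at full load")
```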

Text

Home AI Lab 2025 – Create Your Own Machine Learning Lab #shorts, https://www.youtube.com/shorts/cj2DYbYGL9c via Tech AI Vision (https://www.youtube.com/channel/UCgvOxOf6TcKuCx5gZcuTyVg), July 03, 2025

#ai#aitechnology#innovation#generativeai#aiinengineering#aiandrobots#automation#futureoftech#echaivision#Youtube

Text

Local LLM Model in Private AI server in WSL

Local LLM Model in Private AI server in WSL - learn how to set up a local AI server with WSL, Ollama, and llama3 #ai #localllm #localai #privateaiserver #wsl #linuxai #nvidiagpu #homelab #homeserver #privateserver #selfhosting #selfhosted

We are in the age of AI and machine learning. It seems like everyone is using it. However, is the only real way to use AI tied to public services like OpenAI? No. We can run an LLM locally, which has many great benefits, such as keeping the data local to your environment, either in the home network or home lab environment. Let’s see how we can run a local LLM model to host our own private local…
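Once Ollama is running inside WSL with the llama3 model pulled, other programs can talk to it over its local REST API. The minimal Python sketch below uses only the standard library. It assumes Ollama's default port 11434 and its `/api/generate` endpoint. The prompt is illustrative.

```python
import json
import urllib.request

# Ollama's default local endpoint; change it if you configured a different host/port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "llama3") -> urllib.request.Request:
    """Build a non-streaming generate request for a locally pulled model."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_response(body: bytes) -> str:
    """Extract the generated text from Ollama's JSON reply."""
    return json.loads(body)["response"]


if __name__ == "__main__":
    # Requires `ollama serve` to be running and `ollama pull llama3` done beforehand.
    req = build_request("Explain what a GPU does in one sentence.")
    with urllib.request.urlopen(req, timeout=120) as resp:
        print(parse_response(resp.read()))
```

Setting `"stream": False` returns one JSON object instead of a stream of partial chunks, which keeps the parsing trivial at the cost of waiting for the full answer.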

Link

"How To Add Games In Nvidia GeForce Experience" Is LIVE NOW LIKE, COMMENT, SHARE & SUBSCRIBE Click ☝️ Here To Subscribe Direct Link To My Channel

#iammrhelper#mrhelper#Windows#Nvidia#GeForce#GTX#RTX#NvidiaGTX#NvidiaRTX#NvidiaGTXSeries#NvidiaRTXSeries#GPU#NvidiaGPU

Text

NVIDIA DLSS 3 is already in 17 games, with another 33 games and apps coming soon. GeForce RTX users can now enable the AI-powered performance boost of DLSS in over 250 released games and creative apps.

NVIDIA DLSS uses AI and GeForce RTX Tensor Cores to boost frame rates while maintaining great image quality.

Text

NVIDIA RTX 6000 Ada Generation 48GB Graphics Card

The Best Graphics and AI Performance for Desktop Computers

The NVIDIA RTX 6000 Ada Generation is built to handle AI-driven workflows. Based on the NVIDIA Ada Lovelace GPU architecture, the RTX 6000 delivers unmatched rendering, AI, graphics, and compute performance with third-generation RT Cores, fourth-generation Tensor Cores, next-generation CUDA Cores, and 48GB of graphics memory. Workstations powered by the NVIDIA RTX 6000 excel in demanding enterprise environments.

Highlights

Industry-Leading Performance

Single-precision performance: 91.1 TFLOPS

RT Core performance: 210.6 TFLOPS

Tensor performance: 1,457 AI TOPS

Features

Powered by the NVIDIA Ada Lovelace Architecture

NVIDIA Ada Lovelace Architecture-Based CUDA Cores

With two times the speed of the previous generation for single-precision floating-point (FP32) operations, NVIDIA RTX 6000 Ada Lovelace Architecture-Based CUDA Cores offer notable performance gains for desktop graphics and simulation workflows, including intricate 3D computer-aided design (CAD) and computer-aided engineering (CAE).

Third-Generation RT Cores

Third-generation RT Cores offer enormous speedups for workloads such as photorealistic rendering of movie content, architectural design evaluations, and virtual prototyping of product designs, with up to 2X the throughput compared to the previous generation. Additionally, this approach speeds up and improves the visual correctness of ray-traced motion blur rendering.

Fourth-Generation Tensor Cores

Fourth-generation Tensor Cores support the FP8 data format and deliver more than twice the AI performance of the previous generation, enabling rapid model training and inference on RTX-powered AI workstations.

NVIDIA RTX 6000 Ada 48GB GDDR6

With 48GB of GDDR6 memory, the NVIDIA RTX 6000 Ada lets data scientists, engineers, and creative professionals work with massive datasets and workloads such as simulation, data science, and rendering.

AV1 Encoders

The eighth-generation dedicated hardware encoder (NVENC) supports AV1 encoding, giving broadcasters, streamers, and video conferencing more options. AV1 is 40% more efficient than H.264, so streamers can move from 1080p to 1440p at the same bitrate without losing quality.

Virtualization-Ready

A personal workstation can be transformed into several high-performance virtual workstation instances with support for NVIDIA RTX Virtual Workstation (vWS) software, enabling remote users to pool resources to power demanding design, artificial intelligence, and compute workloads.

Performance

Performance for Endless Possibilities

For modern professional workflows, the RTX 6000 delivers unrivaled rendering, AI, graphics, and computing performance. RTX 6000 delivers up to 10X more performance than the previous generation, helping you succeed at work.

NVIDIA RTX 6000 Ada Price

The NVIDIA RTX 6000 Ada Generation is a high-end workstation GPU primarily designed for professional tasks such as 3D rendering, architectural visualization, and engineering simulations. Its price varies based on the retailer and region but generally starts around $6,800 USD and can exceed $7,000 USD depending on configurations and add-ons.

Benefits

The NVIDIA RTX 6000 Ada is a top graphics card for complicated computing and visualization. Some of its key benefits:

Exceptional Performance

The Ada Lovelace-based RTX 6000 Ada excels at video editing, 3D rendering, and AI development. Its advanced RT cores and high core count make rendering and simulations faster and more accurate.

Abundant Memory Space

Large datasets, intricate 3D models, and high-resolution textures can all be handled by the RTX 6000 Ada thanks to its 48 GB of GDDR6 ECC memory. This makes it well suited to fields like scientific research, filmmaking, and architecture.

Improvements to AI and Ray Tracing

The card offers enhanced performance and realistic images thanks to its advanced ray-tracing capabilities and DLSS technology. This is particularly advantageous for VFX production, animation, and game development.

Efficiency in Energy Use

The RTX 6000 Ada’s efficient Ada Lovelace architecture delivers more performance per watt than its predecessors, which lowers operating expenses for enterprises.

Improved Communication

It has four DisplayPort 1.4a outputs and supports up to 8K resolution, making it ideal for multi-display configurations and sophisticated visualization.

Read more on Govindhtech.com

#NVIDIARTX#RTX6000#RTX6000Ada#NVIDIAGPU#GraphicsCard#48GBGPU#LovelaceGPU#News#Technews#Technology#Technologynews#Technologytrends#Govindhtech
