#pytorch


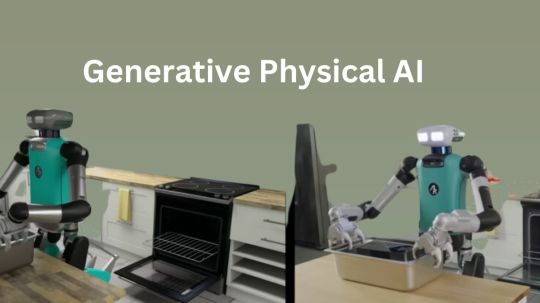

What Is Generative Physical AI? Why It Is Important?

What is Physical AI?

Physical AI enables autonomous machines such as robots to perceive, understand, and carry out complex tasks in the real (physical) world. Because it can generate insights and actions to execute, it is also sometimes called “generative physical AI.”

How Does Physical AI Work?

Large language models such as GPT and Llama are generative AI models trained on massive volumes of text and image data, mostly from the Internet. Although these models are very good at producing human language and abstract ideas, their understanding of the physical world and its rules is still limited.

Generative physical AI extends current generative AI with an understanding of the spatial relationships and physical behavior of the three-dimensional world we all inhabit. This is achieved by supplying additional data during training that describes the spatial relationships and physical rules of the real world.

The 3D training data is generated from highly realistic computer simulations, which serve as both a data source and an AI training ground.

Physically based data generation starts with a digital twin of a space, such as a factory. Sensors and autonomous machines, such as robots, are added to this virtual space. Simulations that mimic real-world scenarios are then run, and the sensors capture the resulting interactions, such as rigid-body dynamics (movement and collisions) or how light behaves in the environment.

What Is the Role of Reinforcement Learning in Physical AI?

Reinforcement learning teaches autonomous machines skills in a simulated environment so that they can perform in the real world. Through hundreds or even millions of trial-and-error attempts, it lets them acquire those skills safely and quickly.

This learning technique rewards a physical AI model for completing desired actions in the simulation, so the model continually adapts and improves. With repeated reinforcement learning, autonomous machines gradually learn to respond appropriately to new situations and unforeseen obstacles, which prepares them for real-world operation.

Over time, an autonomous machine can develop the sophisticated fine motor skills needed for real-world tasks such as packing boxes neatly, helping to build vehicles, or navigating environments unassisted.
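The reward-driven loop described above can be sketched with tabular Q-learning, one of the simplest reinforcement learning methods. Everything here is an illustrative assumption rather than anything from the source or from NVIDIA's tooling: the "simulation" is a hypothetical six-cell track, and the learning rate, discount, and exploration rate are arbitrary choices.

```python
import random

# Hypothetical toy "simulation": a robot on a 1-D track of 6 cells.
# It starts in cell 0 and is rewarded only for reaching the last cell.
N_STATES = 6
ACTIONS = (-1, +1)  # step left, step right
GOAL = N_STATES - 1


def step(state, action):
    """Advance the toy simulation by one move; return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), GOAL)
    done = next_state == GOAL
    return next_state, (1.0 if done else 0.0), done


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning: learn action values purely from trial and error."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore occasionally; otherwise take the best-known action.
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, ACTIONS[a])
            # Move the estimate toward reward + discounted future value.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


q_table = train()
# Greedy policy for every non-goal cell: which direction the robot now prefers.
policy = [ACTIONS[0 if row[0] > row[1] else 1] for row in q_table[:GOAL]]
print(policy)
```

The robot is never told that "right" is correct; it only receives a reward at the goal, and the value of that reward propagates backward through the table over repeated episodes. Real physical AI training replaces the table with a neural network and the track with a physics simulation, but the reward-and-update structure is the same idea.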

Why is Physical AI Important?

Previously, autonomous machines could not perceive or make sense of their surroundings. Generative physical AI makes it possible to build and train robots that interact naturally with, and adapt to, their real-world environment.

To develop physical AI, teams need powerful, physics-based simulations that provide a safe, controlled environment for training autonomous machines. Besides making robots more efficient and accurate at complex tasks, this enables more natural interaction between people and machines, which improves accessibility and usefulness in real-world applications.

Generative physical AI opens up new capabilities that will transform every industry. For instance:

Robots: With physical AI, robots show notable improvements in their operating skills in a range of environments.

Using direct input from onboard sensors, autonomous mobile robots (AMRs) in warehouses are able to traverse complicated settings and avoid impediments, including people.

Depending on how an item is positioned on a conveyor belt, manipulators may modify their grabbing position and strength, demonstrating both fine and gross motor abilities according to the object type.

Surgical robots benefit from this technology when learning intricate tasks such as threading needles and stitching, which shows the precision and versatility of generative physical AI in teaching robots specific tasks.

Autonomous Vehicles (AVs): AVs use sensors to perceive and understand their surroundings, which lets them make informed decisions in settings ranging from open highways to dense city streets. Training AVs with physical AI helps them detect pedestrians more reliably, respond to traffic or weather, and change lanes on their own, adapting to a wide range of unexpected situations.

Smart Spaces: Physical AI is making large indoor spaces such as factories and warehouses, where daily operations involve a constant flow of people, vehicles, and robots, safer and more functional. Using fixed cameras and advanced computer vision models, teams can track multiple objects and activities within these spaces to improve dynamic route planning and operational efficiency. These systems also perceive and understand large, complex environments effectively, with human safety as the priority.

How Can You Get Started With Physical AI?

Building the next generation of autonomous machines with generative physical AI involves a coordinated process across several specialized computers:

Construct a virtual 3D environment: A high-fidelity, physically based virtual environment is needed to represent the real world and to generate the synthetic data required for training physical AI. To build these 3D worlds, developers can integrate RTX rendering and Universal Scene Description (OpenUSD) into their existing software tools and simulation workflows using the NVIDIA Omniverse platform of APIs, SDKs, and services.

NVIDIA OVX systems power this environment. Large-scale scenes or data needed for simulation or model training are also captured during this step. A significant technical advance that enables efficient AI model training and inference on rich 3D datasets is fVDB, an extension of PyTorch that supports deep learning operations on large-scale 3D data and represents features efficiently.

Create synthetic data: Custom synthetic data generation (SDG) pipelines may be constructed using the Omniverse Replicator SDK. Domain randomization is one of Replicator’s built-in features that lets you change a lot of the physical aspects of a 3D simulation, including lighting, position, size, texture, materials, and much more. The resulting pictures may also be further enhanced by using diffusion models with ControlNet.
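Domain randomization, as described above, amounts to drawing each scene's physical parameters from chosen ranges before rendering. The sketch below shows only that sampling step in plain Python. It does not use the Omniverse Replicator API; the `SceneParams` fields, value ranges, and texture names are all hypothetical placeholders.

```python
import random
from dataclasses import dataclass


@dataclass
class SceneParams:
    """Hypothetical per-frame scene settings; these are not Replicator types."""
    light_intensity: float  # arbitrary units
    object_xy: tuple        # position on a table, in metres
    object_scale: float     # relative size
    texture: str            # name of a texture asset


TEXTURES = ("cardboard", "metal", "plastic")  # placeholder asset names


def sample_scene(rng):
    """Draw one randomized scene configuration from fixed ranges."""
    return SceneParams(
        light_intensity=rng.uniform(200.0, 2000.0),
        object_xy=(rng.uniform(-0.5, 0.5), rng.uniform(-0.5, 0.5)),
        object_scale=rng.uniform(0.8, 1.2),
        texture=rng.choice(TEXTURES),
    )


rng = random.Random(42)  # fixed seed so the same dataset can be regenerated
scenes = [sample_scene(rng) for _ in range(1000)]
print(scenes[0])
```

Each sampled configuration would then be handed to a renderer to produce one labelled image. Varying lighting, pose, scale, and texture this way is meant to keep a model from latching onto incidental details of any single scene.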

Train and validate: In addition to pretrained computer vision models available on NVIDIA NGC, the NVIDIA DGX platform, a fully integrated hardware and software AI platform, may be utilized with physically based data to train or fine-tune AI models using frameworks like TensorFlow, PyTorch, or NVIDIA TAO. After training, reference apps such as NVIDIA Isaac Sim may be used to test the model and its software stack in simulation. Additionally, developers may use open-source frameworks like Isaac Lab to use reinforcement learning to improve the robot’s abilities.

Finally, to power a physical autonomous machine, such as a humanoid robot or an industrial automation system, the optimized stack can be deployed on NVIDIA Jetson Orin and, eventually, on the next-generation Jetson Thor robotics supercomputer.

Read more on govindhtech.com

#GenerativePhysicalAI#generativeAI#languagemodels#PyTorch#NVIDIAOmniverse#AImodel#artificialintelligence#NVIDIADGX#TensorFlow#AI#technology#technews#news#govindhtech


PyTorch Workstation Hardware Recommendations for 2025

If you work on deep learning, AI, or large-scale model training, you have most likely already seen what PyTorch can do. However, even the best software framework is limited by the hardware it runs on. Whether you are an AI engineer, data scientist, or researcher, choosing the right workstation can make the difference between hours or days of painful waiting and productive iteration.

Why Hardware Matters in PyTorch Workflows

PyTorch is known for its dynamic computation graphs, ease of debugging, and GPU acceleration. But all of that potential can go to waste if you’re working on underpowered hardware. When you're training large models on big datasets, hardware becomes the silent partner driving speed, accuracy, and reliability.

Whether you're using a personal workstation or enterprise servers, the right hardware setup ensures faster training, reduced model convergence time, and the flexibility to scale.

Key Components for an Ideal PyTorch Workstation

Let’s break down the critical components you’ll need to consider when building a PyTorch-focused machine:

1. GPU (Graphics Processing Unit) — The Heart of Deep Learning

PyTorch thrives on GPUs. A strong GPU enables large-batch training and faster matrix computations. Here's what we recommend:

Recommended GPUs for 2025:

NVIDIA RTX 4090 or 4080 – Ideal for most AI professionals.

NVIDIA RTX A6000 – For high-end research and enterprise needs.

NVIDIA L40 / L4 Tensor Core GPUs – Perfect for multi-GPU server environments.

Tip: Look for CUDA compatibility and high VRAM (24GB or more for large models).
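To see why VRAM matters, here is a rough back-of-the-envelope estimate. The 16 bytes per parameter figure is an assumption that matches a common mixed-precision Adam setup (fp16 weights and gradients, plus fp32 master weights and two optimizer moments). Activations and framework overhead are left out, so real usage will be higher.

```python
def training_memory_gb(n_params, bytes_per_param=16):
    """Rough memory for weights, gradients, and Adam state, in GB (1e9 bytes).

    16 bytes/param assumes mixed-precision Adam: fp16 weights (2) and
    gradients (2), plus fp32 master weights, momentum, and variance (4 each).
    Activations and framework overhead are NOT included.
    """
    return n_params * bytes_per_param / 1e9


def inference_memory_gb(n_params, bytes_per_param=2):
    """Rough memory for fp16 weights only, in GB."""
    return n_params * bytes_per_param / 1e9


for name, n in [("125M", 125e6), ("1.3B", 1.3e9), ("7B", 7e9)]:
    print(f"{name}: train ~{training_memory_gb(n):.0f} GB, "
          f"infer ~{inference_memory_gb(n):.1f} GB")
```

Under these assumptions, the fp16 weights of a 7-billion-parameter model fit on a 24GB card for inference, but full fine-tuning of the same model with Adam does not fit on a single 24GB GPU.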

2. CPU (Central Processing Unit)

While most computations happen on the GPU, a strong CPU ensures the system remains responsive and can handle data preprocessing efficiently.

Recommended CPUs:

AMD Threadripper PRO 7000 Series

Intel Xeon W-Series

AMD Ryzen 9 7950X (for mid-level workstations)

Choose multi-core CPUs with high clock speeds for best results.

3. RAM (Memory)

Training on large datasets can consume significant RAM, especially during data loading and augmentation.

Recommended RAM:

64GB (ideal starting point)

128GB–256GB for enterprise or large dataset projects

At Global Nettech, we recommend ECC (Error-Correcting Code) RAM for server-grade stability.

4. Storage

Fast storage ensures smooth data loading and checkpointing.

Best Setup:

1TB NVMe SSD (Primary) – For OS, Python environment, and current projects

2TB+ SATA SSD or HDD (Secondary) – For datasets and backup

For server environments, RAID configurations are recommended to prevent data loss and enhance speed.

5. Motherboard & Expandability

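If you're planning multi-GPU training, make sure the motherboard has multiple PCIe Gen 4.0 or 5.0 x16 slots with enough spacing for the cards. NVLink is a feature of the GPUs themselves rather than of the motherboard, and GeForce RTX 40-series cards do not support it, so check your GPU's specifications before counting on it. Always future-proof your build with ample room for upgrades.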

6. Cooling & Power Supply

Training models can put your components under extreme load. Heat buildup isn’t just bad for performance—it shortens hardware lifespan.

Use liquid cooling for GPUs/CPUs in intensive environments.

Opt for 1000W+ Platinum-rated PSUs for multi-GPU setups.

Recommended PyTorch Workstation Builds by Global Nettech

We’ve handpicked three performance tiers to suit different user profiles:

Entry-Level Workstation (For Learners & Hobbyists)

GPU: NVIDIA RTX 4070

CPU: AMD Ryzen 9 7900X

RAM: 64GB DDR5

Storage: 1TB NVMe SSD

Use Case: Ideal for training small to medium models, Kaggle competitions, and prototype development.

Professional Workstation (For AI Teams & Startups)

GPU: NVIDIA RTX 4090

CPU: AMD Threadripper PRO 7955WX

RAM: 128GB ECC

Storage: 1TB NVMe + 4TB SSD

Use Case: Supports multi-model training, transformer-based architectures, and deployment pipelines.

Enterprise Server (For Research Labs & Data Centers)

GPU: 4x NVIDIA A100 or L40 GPUs

CPU: Dual Intel Xeon Gold 6416H

RAM: 512GB ECC

Storage: 2TB NVMe + RAID 10 SSD Array

Use Case: Multi-user workloads, large-scale language models, and model parallelism training.

Why Choose Global Nettech for PyTorch Workstations?

At Global Nettech, we specialize in providing workstations and servers that are tailor-made for AI workloads like PyTorch. Here’s what sets us apart:

✅ Tested for Deep Learning – Every build is benchmarked on real AI models.

✅ Custom Configurations – Choose specs based on your unique workflow.

✅ Ready-to-Use Setup – Comes preloaded with PyTorch, CUDA, cuDNN, and required drivers.

✅ Post-Sales Support – Dedicated tech support team for any future upgrades or troubleshooting.

Whether you’re setting up a research lab or building your first AI project, we have the right tools to help you succeed.

Conclusion: Build Smarter, Train Faster

Your PyTorch code is only as fast as the hardware it runs on. Investing in the right workstation or server isn’t just about speed; it’s about removing bottlenecks so you can focus on building better models.

Need a custom build? Let Global Nettech help you choose the perfect configuration from our range of PyTorch workstation hardware recommendations, because every second counts when you're innovating with AI.


AI Engineer Career Roadmap for Beginners

In an era where artificial intelligence (AI) has become the engine of innovation across many sectors, a new profession has emerged and grown ever more vital: the AI Engineer. Often equated with a Data Scientist or Machine Learning Engineer, the AI Engineer role actually has its own specialization and responsibilities. AI Engineers are the bridge between research and production, the architects who design,…

#ai engineer#data scientist#fresh graduate#industri ai#machine-learning#peta jalan karier#portofolio proyek#python#pytorch#tensorflow


Roadmap to Becoming an AI Guru in 2025

Becoming an “AI Guru” in 2025 transcends basic comprehension; it demands profound technical expertise, continuous adaptation, and practical application of advanced concepts. This comprehensive roadmap outlines the critical areas of knowledge, hands-on…

#Algorithms#apache#Autonomous#AWS#Azure#Career#cloud#Databricks#embeddings#gcp#Generative AI#gpu#image#LLM#LLMs#monitoring#nosql#Optimization#performance#Platform#Platforms#programming#python#pytorch#Spark#sql#vector#Vertex AI


A first PyTorch program to build a simple pattern recognizer.

What is it called if you use AI to build an AI? That’s what I’ve done to understand a bit of how PyTorch works, building a little program to train a simple network to attempt to recognize Fibonacci-like sequences. Fibonacci recap: recall that the Fibonacci series is defined by a recurrence relation where the next term is the sum of the previous two…
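The post's own PyTorch code is not shown in this excerpt, so the following is only a sketch of the recurrence and of how labelled examples for such a recognizer might be generated. The function names, sequence length, seed range, and corruption scheme are all assumptions made for illustration.

```python
import random


def fib_like(a, b, length):
    """Sequence where each term is the sum of the previous two."""
    seq = [a, b]
    while len(seq) < length:
        seq.append(seq[-1] + seq[-2])
    return seq[:length]


def is_fib_like(seq):
    """True if every term from the third on equals the sum of the prior two."""
    return all(seq[i] == seq[i - 1] + seq[i - 2] for i in range(2, len(seq)))


def make_dataset(n, length=6, seed=0):
    """Labelled examples: 1 = Fibonacci-like, 0 = one term corrupted."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        seq = fib_like(rng.randint(1, 9), rng.randint(1, 9), length)
        if rng.random() < 0.5:
            i = rng.randrange(2, length)  # corrupt a term after the two seeds
            seq[i] += rng.randint(1, 5)
        data.append((seq, int(is_fib_like(seq))))
    return data


print(fib_like(1, 1, 8))  # [1, 1, 2, 3, 5, 8, 13, 21]
for seq, label in make_dataset(4):
    print(label, seq)
```

Pairs like these could be converted to tensors and fed to a small classifier. The exact check in `is_fib_like` gives a ground truth against which the trained network's guesses can be compared.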


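How to Quickly Deploy an LLM to a GPU Server: From Environment Setup to Service Launch

GPU servers – As large language models (LLMs) such as ChatGPT, LLaMA, and DeepSeek see widespread use, more and more companies and developers want to host LLMs themselves, either locally or on dedicated GPU servers. Doing so gives them greater control over their data and avoids leaking private information. It can also lower long-term costs and sidestep the rate, usage, and feature limits of commercial APIs, along with their security concerns.

However, the first hurdle in deploying an LLM is usually environment setup. Matching CUDA driver versions, installing PyTorch, managing the HuggingFace model cache, and choosing an inference engine are all technical details that need consideration. Without clear guidance, it is easy to spend a lot of time fumbling through the early stages.

This article takes an approachable route. Starting with how to choose a GPU server, it walks step by step through environment setup, the deployment workflow, bringing the model online, API integration, containerized management, and follow-up operations advice, to help you deploy an LLM on a dedicated server and quickly build your own local AI inference platform.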

#AI Server#AI 主機#AI 伺服器#AI主機租用#DeepSeek#GPT#GPU Server#GPU 主機#GPU 主機租用#GPU 伺服器#LLaMA#OpenAI#PyTorch#實體主機


Build Your Affordable Home AI Lab: The Ultimate Setup Blueprint

Ever dreamed of having your very own AI lab right at home? Well, now you can! This video is your ultimate blueprint to building an affordable home AI lab for artificial intelligence and machine learning projects. No need to be a super tech wiz – we'll walk you through everything step-by-step.

We'll cover the important computer parts you need, like good graphics cards (GPU), powerful processors (CPU), memory (RAM), and even how to keep things cool. Then, we'll talk about the software to make it all work, like Ubuntu, PyTorch, and tools for building cool stuff like chatbots and seeing things with computer vision.

Why build your own AI lab? Because cloud computing can get super expensive! With your own setup, you get total control, keep your projects private, and save a lot of money in the long run. Whether you're just starting, a student, or a seasoned pro, this guide has tips and real-life stories to help you succeed.

The AI revolution is here, and you can be a part of it from your own house! Get ready to train your own models, play with large language models (LLMs), and create amazing AI projects at home.

#AILab#HomeAILab#ArtificialIntelligence#MachineLearning#DeepLearning#GPU#AIRevolution#DataScience#BuildAI#DIYAI#TechSetup#BuildAILab#AIAtHome#AIStartUp#NvidiaGpu#AIDevelopment#GenerativeAI#LLMTraining#DockerAI#OpenAI#HuggingFace#MLProjects#AITech#AILabSetup#Coding#Python#PyTorch#TensorFlow#LLMs#ComputerVision


Home AI Lab 2025 – Create Your Own Machine Learning Lab #shorts

Ready to bring AI into your own home? This guide to creating a home AI lab covers everything you need to build a robust, scalable, and affordable AI workspace, right in your living room or garage. Whether you are a curious newcomer, a student, or an experienced developer, this video walks you through the key hardware (powerful GPUs, CPUs, RAM, and cooling) and a suitable software stack (Ubuntu, PyTorch, Hugging Face, Docker, etc.) for running deep learning models. Learn how to train your own models, fine-tune LLMs, explore computer vision, and even create your own chatbots. With cloud computing prices skyrocketing, a home AI lab gives you autonomy, privacy, and full control over your projects and experiments. You will also hear real-world stories and pro advice to take you from novice to expert. The home AI lab revolution has arrived. Are you in? Subscribe now and get creating.

#homeailab#buildailab#aiathome#deeplearning#machinelearning#aistartup#pytorch#tensorflow#nvidiagpu#aidevelopment#generativeai#computerVision#llmtraining#dockerai#openai#huggingface#mlprojects#artificialintelligence#aitech#ailabsetup#Youtube


AI Frameworks Help Data Scientists For GenAI Survival

AI Frameworks: Crucial to the Success of GenAI

Develop Your AI Capabilities Now

You play a crucial part in the quickly growing field of generative artificial intelligence (GenAI) as a data scientist. Your proficiency in data analysis, modeling, and interpretation is still essential, even though platforms like Hugging Face and LangChain are at the forefront of AI research.

Although GenAI systems can produce remarkable results, they still depend heavily on clean, well-organized data and insightful interpretation, areas in which data scientists are highly skilled. By applying your in-depth knowledge of data and statistical techniques, you can steer GenAI models toward more accurate, useful predictions. Your role as a data scientist is crucial to ensuring that GenAI systems rest on strong, data-driven foundations and can realize their full potential. Here’s how to take the lead:

Data Quality Is Crucial

Even the most sophisticated GenAI models are only as effective as the data they use. AI tools like Pandas and Modin let you clean, preprocess, and manipulate large datasets so that the data is relevant and of high quality.

Exploratory Data Analysis and Interpretation

It is essential to understand the features and trends in the data before building models. Data science frameworks such as Matplotlib and Seaborn visualize data and model outputs, which helps developers understand the data, select features, and interpret the models.

Model Optimization and Evaluation

A variety of algorithms for model construction are offered by AI frameworks like scikit-learn, PyTorch, and TensorFlow. To improve models and their performance, they provide a range of techniques for cross-validation, hyperparameter optimization, and performance evaluation.
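Cross-validation, mentioned above, can be illustrated without any library. The sketch below is a plain-Python version of k-fold splitting and scoring, written for illustration only; scikit-learn provides maintained equivalents in `sklearn.model_selection`. The helper names and the toy "predict the mean" model are assumptions.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs; each sample is validated exactly once."""
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, val
        start += size


def mean_cv_score(fit, score, xs, ys, k=5):
    """Average validation score of `fit` over k folds."""
    scores = []
    for train, val in k_fold_indices(len(xs), k):
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        scores.append(score(model, [xs[i] for i in val], [ys[i] for i in val]))
    return sum(scores) / len(scores)


def fit_mean(xs, ys):
    """Toy model: always predict the mean of the training targets."""
    return sum(ys) / len(ys)


def neg_mse(model, xs, ys):
    """Negative mean squared error, so that higher is better."""
    return -sum((y - model) ** 2 for y in ys) / len(ys)


xs = list(range(10))
ys = [2.0 * x for x in xs]
print(mean_cv_score(fit_mean, neg_mse, xs, ys, k=5))
```

Because every sample is held out exactly once, the averaged score estimates how the model behaves on data it has not seen. The same score can then be compared across hyperparameter settings.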

Model Deployment and Integration

Tools such as ONNX Runtime and MLflow help with cross-platform deployment and experimentation tracking. By guaranteeing that the models continue to function successfully in production, this helps the developers oversee their projects from start to finish.

Intel’s Optimized AI Frameworks and Tools

Developers can keep using the tools they already know in data analytics, machine learning, and deep learning (such as Modin, NumPy, scikit-learn, and PyTorch). Intel has optimized existing AI tools and frameworks for the phases of the AI workflow, including data preparation, model training, inference, and deployment. These optimizations build on oneAPI, a unified, open, multiarchitecture, multivendor programming model.

Data Engineering and Model Development:

To speed up end-to-end data science pipelines on Intel architecture, use Intel’s AI Tools, which include Python tools and frameworks such as Modin, Intel Optimization for TensorFlow, Intel Optimization for PyTorch, Intel Extension for Scikit-learn, and Intel Optimization for XGBoost.

Optimization and Deployment

For CPU or GPU deployment, Intel Neural Compressor speeds up deep learning inference and reduces model size. The OpenVINO toolkit optimizes models and deploys them across several hardware platforms, including Intel CPUs.

These AI tools can help you get better performance from your Intel hardware platforms.

Library of Resources

Discover a collection of professionally created, carefully selected resources focused on the core data science skills developers need, and explore AI frameworks for machine learning and deep learning.

What you will discover:

Use Modin to expedite the extract, transform, and load (ETL) process for enormous DataFrames and analyze massive datasets.

To improve speed on Intel hardware, use Intel’s optimized AI frameworks (such as Intel Optimization for XGBoost, Intel Extension for Scikit-learn, Intel Optimization for PyTorch, and Intel Optimization for TensorFlow).

Use Intel-optimized software on the most recent Intel platforms to implement and deploy AI workloads on Intel Tiber AI Cloud.

How to Begin

Frameworks for Data Engineering and Machine Learning

Step 1: Watch the Modin, Intel Extension for Scikit-learn, and Intel Optimization for XGBoost videos and read the introductory articles.

Modin: The video explains when to use Modin and how to combine Modin and Pandas judiciously for a faster overall turnaround. A quick start guide for Modin is also available for more in-depth information.

Intel Extension for Scikit-learn: This tutorial gives you an overview of the extension, walks you through the code step by step, and explains how using it can improve performance. A video on accelerating K-means clustering, PCA, and silhouette machine learning algorithms is also available.

Intel Optimization for XGBoost: This straightforward tutorial explains Intel Optimization for XGBoost and how to use Intel optimizations to enhance training and inference performance.

Step 2: Use Intel Tiber AI Cloud to create and develop machine learning workloads.

On Intel Tiber AI Cloud, this tutorial runs machine learning workloads with Modin, scikit-learn, and XGBoost.

Step 3: Use Modin and scikit-learn to create an end-to-end machine learning process using census data.

Run an end-to-end machine learning task using 1970–2010 US census data with this code sample. The code sample uses the Intel Distribution of Modin for exploratory data analysis and the Intel Extension for Scikit-learn for ridge regression.

Deep Learning Frameworks

Step 4: Begin by watching the videos and reading the introductory articles for Intel’s PyTorch and TensorFlow optimizations.

Intel PyTorch Optimizations: Read the article to learn how to use the Intel Extension for PyTorch to accelerate your workloads for inference and training. A brief video also demonstrates how to use the extension to run PyTorch inference on an Intel Data Center GPU Flex Series.

Intel’s TensorFlow Optimizations: The article and video provide an overview of the Intel Extension for TensorFlow and demonstrate how to utilize it to accelerate your AI tasks.

Step 5: Use TensorFlow and PyTorch for AI on the Intel Tiber AI Cloud.

This article shows how to use PyTorch and TensorFlow on Intel Tiber AI Cloud to create and run complex AI workloads.

Step 6: Speed up LSTM text generation with Intel Extension for TensorFlow.

The Intel Extension for TensorFlow can speed up LSTM model training for text generation.

Step 7: Use PyTorch and DialoGPT to create an interactive chat-generation model.

Discover how to use Hugging Face’s pretrained DialoGPT model to create an interactive chat model and how to use the Intel Extension for PyTorch to dynamically quantize the model.
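Quantization stores numbers as small integers plus a scale factor. The sketch below shows that arithmetic for a symmetric int8 scheme in plain Python. It is not the Intel Extension for PyTorch API, and the function names and sample weights are assumptions made for illustration. In dynamic quantization, the weights are converted ahead of time while the scale factors for activations are computed at run time.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: floats -> int8 values plus one scale."""
    max_abs = max(abs(v) for v in values) or 1.0  # avoid a zero scale
    scale = max_abs / 127.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Map int8 values back to approximate floats."""
    return [x * scale for x in q]


weights = [0.02, -1.27, 0.635, 0.0, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_err)
```

Each value now needs one byte instead of four, at the cost of a rounding error of at most half a scale step per value. That trade is why quantized models are smaller and often faster at inference.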

Read more on Govindhtech.com

#AI#AIFrameworks#DataScientists#GenAI#PyTorch#GenAISurvival#TensorFlow#CPU#GPU#IntelTiberAICloud#News#Technews#Technology#Technologynews#Technologytrends#govindhtech


AI Tools & Frameworks: A Guide to Choosing the Right One

In the era of a rapidly advancing artificial intelligence (AI) revolution, complex machine learning algorithms have become the backbone of countless innovations. Yet behind every sophisticated AI model lies an essential foundation: the tools and frameworks used to build, train, and deploy it. The right choice of tool can significantly…

#ai engineer#Deep Learning#framework ai#hugging face#machine-learning#NLP#pytorch#scikit-learn#tensorflow#tools ai


The Best Open-Source Tools for Data Science in 2025

Data science in 2025 is thriving, driven by a robust ecosystem of open-source tools that empower professionals to extract insights, build predictive models, and deploy data-driven solutions at scale. This year, the landscape is more dynamic than ever, with established favorites and emerging contenders shaping how data scientists work. Here’s an in-depth look at the best open-source tools that are defining data science in 2025.

1. Python: The Universal Language of Data Science

Python remains the cornerstone of data science. Its intuitive syntax, extensive libraries, and active community make it the go-to language for everything from data wrangling to deep learning. Libraries such as NumPy and Pandas streamline numerical computations and data manipulation, while scikit-learn is the gold standard for classical machine learning tasks.

NumPy: Efficient array operations and mathematical functions.

Pandas: Powerful data structures (DataFrames) for cleaning, transforming, and analyzing structured data.

scikit-learn: Comprehensive suite for classification, regression, clustering, and model evaluation.

Python’s popularity is reflected in the 2025 Stack Overflow Developer Survey, with 53% of developers using it for data projects.

2. R and RStudio: Statistical Powerhouses

R continues to shine in academia and industries where statistical rigor is paramount. The RStudio IDE enhances productivity with features for scripting, debugging, and visualization. R’s package ecosystem—especially tidyverse for data manipulation and ggplot2 for visualization—remains unmatched for statistical analysis and custom plotting.

Shiny: Build interactive web applications directly from R.

CRAN: Over 18,000 packages for every conceivable statistical need.

R is favored by 36% of users, especially for advanced analytics and research.

3. Jupyter Notebooks and JupyterLab: Interactive Exploration

Jupyter Notebooks are indispensable for prototyping, sharing, and documenting data science workflows. They support live code (Python, R, Julia, and more), visualizations, and narrative text in a single document. JupyterLab, the next-generation interface, offers enhanced collaboration and modularity.

Over 15 million notebooks hosted as of 2025, with 80% of data analysts using them regularly.

4. Apache Spark: Big Data at Lightning Speed

As data volumes grow, Apache Spark stands out for its ability to process massive datasets rapidly, both in batch and real-time. Spark’s distributed architecture, support for SQL, machine learning (MLlib), and compatibility with Python, R, Scala, and Java make it a staple for big data analytics.

65% increase in Spark adoption since 2023, reflecting its scalability and performance.

5. TensorFlow and PyTorch: Deep Learning Titans

For machine learning and AI, TensorFlow and PyTorch dominate. Both offer flexible APIs for building and training neural networks, with strong community support and integration with cloud platforms.

TensorFlow: Preferred for production-grade models and scalability; used by over 33% of ML professionals.

PyTorch: Valued for its dynamic computation graph and ease of experimentation, especially in research settings.

6. Data Visualization: Plotly, D3.js, and Apache Superset

Effective data storytelling relies on compelling visualizations:

Plotly: Python-based, supports interactive and publication-quality charts; easy for both static and dynamic visualizations.

D3.js: JavaScript library for highly customizable, web-based visualizations; ideal for specialists seeking full control.

Apache Superset: Open-source dashboarding platform for interactive, scalable visual analytics; increasingly adopted for enterprise BI.

Tableau Public, though not fully open-source, is also popular for sharing interactive visualizations with a broad audience.

7. Pandas: The Data Wrangling Workhorse

Pandas remains the backbone of data manipulation in Python, powering up to 90% of data wrangling tasks. Its DataFrame structure simplifies complex operations, making it essential for cleaning, transforming, and analyzing large datasets.

8. Scikit-learn: Machine Learning Made Simple

scikit-learn is the default choice for classical machine learning. Its consistent API, extensive documentation, and wide range of algorithms make it ideal for tasks such as classification, regression, clustering, and model validation.

9. Apache Airflow: Workflow Orchestration

As data pipelines become more complex, Apache Airflow has emerged as the go-to tool for workflow automation and orchestration. Its user-friendly interface and scalability have driven a 35% surge in adoption among data engineers in the past year.

10. MLflow: Model Management and Experiment Tracking

MLflow streamlines the machine learning lifecycle, offering tools for experiment tracking, model packaging, and deployment. Over 60% of ML engineers use MLflow for its integration capabilities and ease of use in production environments.

11. Docker and Kubernetes: Reproducibility and Scalability

Containerization with Docker and orchestration via Kubernetes ensure that data science applications run consistently across environments. These tools are now standard for deploying models and scaling data-driven services in production.

12. Emerging Contenders: Streamlit and More

Streamlit: Rapidly build and deploy interactive data apps with minimal code, gaining popularity for internal dashboards and quick prototypes.

Redash: SQL-based visualization and dashboarding tool, ideal for teams needing quick insights from databases.

Kibana: Real-time data exploration and monitoring, especially for log analytics and anomaly detection.

Conclusion: The Open-Source Advantage in 2025

Open-source tools continue to drive innovation in data science, making advanced analytics accessible, scalable, and collaborative. Mastery of these tools is not just a technical advantage—it’s essential for staying competitive in a rapidly evolving field. Whether you’re a beginner or a seasoned professional, leveraging this ecosystem will unlock new possibilities and accelerate your journey from raw data to actionable insight.

The future of data science is open, and in 2025, these tools are your ticket to building smarter, faster, and more impactful solutions.

#python#r#rstudio#jupyternotebook#jupyterlab#apachespark#tensorflow#pytorch#plotly#d3js#apachesuperset#pandas#scikitlearn#apacheairflow#mlflow#docker#kubernetes#streamlit#redash#kibana#nschool academy#datascience


🎯 Building Smarter AI Agents: What’s Under the Hood?

At CIZO, we’re often asked — “What frameworks do you use to build intelligent AI agents?” Here’s a quick breakdown from our recent team discussion:

Core Frameworks We Use:

✅ TensorFlow & PyTorch – for deep learning capabilities

✅ OpenAI Gym – for reinforcement learning

✅ LangChain – to develop conversational agents

✅ Google Cloud AI & Azure AI – for scalable, cloud-based solutions

Real-World Application: In our RECOVAPRO app, we used TensorFlow to train personalized wellness models — offering users AI-driven routines tailored to their lifestyle and recovery goals.

📈 The right tools aren’t just about performance. They make your AI agents smarter, scalable, and more responsive to real-world needs.

Let’s build AI that works for people — not just data.

💬 Curious about how we apply these frameworks in different industries? Let’s connect! - https://cizotech.com/

#innovation#cizotechnology#techinnovation#ios#mobileappdevelopment#appdevelopment#iosapp#app developers#mobileapps#ai#aiframeworks#deeplearning#tensorflow#pytorch#openai#cloudai#aiapplications


📌Project Title: Real-Time Streaming Analytics Engine for High-Frequency Trading Signal Extraction.🔴

ai-ml-ds-hft-stream-004

Filename: real_time_streaming_analytics_for_hft_signal_extraction.py

Timestamp: Mon Jun 02 2025 19:11:39 GMT+0000 (Coordinated Universal Time)

Problem Domain: Quantitative Finance, Algorithmic Trading (High-Frequency Trading – HFT), Real-Time Analytics, Stream Processing, Deep Learning for Time Series.

Project Description: This project constructs an advanced,…

#AlgorithmicTrading#DataScience#DeepLearning#fintech#HFT#Kafka#LSTM#python#PyTorch#QuantitativeFinance#RealTimeAnalytics#StreamProcessing
