#Parse Development Services

Explore tagged Tumblr posts

Text

CHATBOTS ARE REVOLUTIONIZING CUSTOMER ENGAGEMENT: IS YOUR BUSINESS READY?

CHATBOTS & AI: FUTURE OF CUSTOMER ENGAGEMENT

Customers want 24/7 access, personalized experiences, and quick replies in today’s digital-first environment. It can be difficult to manually meet such requests, which is where AI and machine learning-powered chatbots come into play.

WHAT ARE CHATBOTS?

A chatbot is a software program designed to mimic human conversation. Natural language processing and artificial intelligence (AI) enable chatbots to understand customer enquiries, provide precise answers, and even learn from exchanges over time.

WHY ARE CHATBOTS IMPORTANT FOR COMPANIES?

24/7 Customer Service

Chatbots never take a break. They offer 24/7 assistance, promptly addressing questions and enhancing client happiness.

Cost-Effective Scaling

Businesses can lower operating expenses without sacrificing service quality by using chatbots to answer routine enquiries rather than adding more support staff.

Smooth Customer Experience

Chatbots may recommend goods and services, walk customers through your website, and even finish transactions when AI is included.

Gathering and Customizing Data

By gathering useful consumer information and behavior patterns, chatbots can provide tailored offers that increase user engagement and conversion rates.

USE CASES IN VARIOUS INDUSTRIES

E-commerce: Managing returns, selecting products, and automating order status enquiries.

Healthcare: Scheduling consultations, checking symptoms, and reminding patients to take their medications.

Education: Responding to questions about the course, setting up trial sessions, and getting input.

HOW CHATBOTS BECOME SMARTER WITH AI

With each interaction, chatbots that use AI and machine learning technologies get better. Over time, they become more slang-savvy, grasp user intent more accurately, and provide more human-like responses. The outcome? A smarter assistant that keeps improving to provide better customer service.

IS YOUR BUSINESS READY?

Using a chatbot has become a strategic benefit and is no longer optional. Whether you manage a service-based business, an online store, or a developing firm, implementing chatbots driven by AI will put you ahead of the competition.

We at Shemon assist companies in incorporating AI-powered chatbots into their larger IT offerings. Smart chatbot technology is a must-have if you want to automate interaction, lower support expenses, and improve your brand experience.

Contact us!

Email: [email protected]

Phone: 7738092019

#custom software development company in india#software companies in india#mobile app development company in india#web application development services#web development services#it services and solutions#website design company in mumbai#digital marketing agency in mumbai#search engine optimization digital marketing#best e commerce websites development company#Healthcare software solutions#application tracking system#document parsing system#lead managment system#AI and machine learning solutions#it consultancy in mumbai#web development in mumbai#web development agency in mumbai#ppc company in mumbai#ecommerce website developers in mumbai#software development company in india#social media marketing agency mumbai#applicant tracking system software#top web development company in mumbai#ecommerce website development company in mumbai#top web development companies in india#ai powered marketing tools#ai driven markeitng solutions

0 notes

Text

How Residential Proxies Can Streamline Your Development Workflow

Residential proxies are becoming an essential tool for developers, particularly those working on testing, data scraping, and managing multiple accounts. In this article, we'll explore how residential proxies can significantly enhance your development processes.

1. Testing Web Applications and APIs from Different Geolocations

A critical aspect of developing international web services is testing how they perform across various regions. Residential proxies allow developers to easily simulate requests from different IP addresses around the world. This capability helps you test content accessibility, manage regional restrictions, and evaluate page load speeds for users in different locations.
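As a rough illustration, here is a minimal Python sketch that routes a request through a different proxy per region, using only the standard library. The proxy hostnames, ports, and region codes are hypothetical placeholders (your provider would supply the real endpoints and credentials), and nothing touches the network until `fetch_from_region` is called.

```python
import urllib.request

# Hypothetical proxy endpoints, one per region; substitute your provider's values.
REGION_PROXIES = {
    "us": "http://us.proxy.example:8080",
    "de": "http://de.proxy.example:8080",
    "jp": "http://jp.proxy.example:8080",
}


def proxy_settings(region):
    """Return the scheme-to-proxy mapping that urllib expects for a region."""
    endpoint = REGION_PROXIES[region]
    return {"http": endpoint, "https": endpoint}


def build_opener_for(region):
    """Build an opener whose requests are routed through the region's proxy."""
    handler = urllib.request.ProxyHandler(proxy_settings(region))
    return urllib.request.build_opener(handler)


def fetch_from_region(url, region, timeout=10):
    """Fetch `url` via the region's proxy and return (status, body bytes)."""
    opener = build_opener_for(region)
    with opener.open(url, timeout=timeout) as response:
        return response.status, response.read()
```

Looping over `REGION_PROXIES` and calling `fetch_from_region` for the same URL is one simple way to compare status codes and response sizes across locations.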

2. Bypassing CAPTCHAs and Rate Limits

Many websites and APIs impose rate limits on the number of requests coming from a single IP address to mitigate bot activity. However, these restrictions can also hinder legitimate testing and data collection. Residential proxies provide access to multiple unique IPs that appear as regular users, making it easier to bypass CAPTCHAs and rate limits. This is especially useful for scraping data or conducting complex tests without getting blocked.

3. Boosting Speed and Stability

While many developers use VPNs to simulate requests from different countries, VPN services often fall short in terms of speed and reliability. Residential proxies offer access to more stable and faster IP addresses, as they are not tied to commonly used data centers. This can significantly speed up testing and development, improving your workflow.

4. Data Scraping Without Blocks

When scraping data from numerous sources, residential proxies are invaluable. They allow you to avoid bans on popular websites, stay off blacklists, and reduce the chances of your traffic being flagged as automated. With the help of residential proxies, you can safely collect data while ensuring your IP addresses remain unique and indistinguishable from those of real users.

5. Managing Multiple Accounts

For projects involving the management of multiple accounts (such as testing functionalities on social media platforms or e-commerce sites), residential proxies provide a secure way to use different accounts without risking bans. Since each proxy offers a unique IP address, the likelihood of accounts being flagged or blocked is significantly reduced.

6. Maintaining Ethical Standards

While proxies can enhance development, it's important to adhere to ethical and legal guidelines. Whether you're involved in testing or scraping, always respect the laws and policies of the websites you interact with.
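One concrete way to respect a site's policies is to check its robots.txt before requesting a page. The sketch below uses Python's standard `urllib.robotparser`. The robots.txt content, the bot name `my-test-bot`, and the URLs are made up for illustration, and the rules are parsed from a string so the example runs offline.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt content; a real one would be fetched from the target site.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_parser(robots_txt):
    """Parse robots.txt rules supplied as a string."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(SAMPLE_ROBOTS_TXT)

# Ask whether a (hypothetical) bot may fetch each URL under these rules.
allowed_public = parser.can_fetch("my-test-bot", "https://example.com/products")
allowed_private = parser.can_fetch("my-test-bot", "https://example.com/private/data")

# Seconds the site asks crawlers to wait between requests, if declared.
delay = parser.crawl_delay("my-test-bot")
```

A robots.txt check is only one part of the picture. A site's terms of service and applicable law still apply regardless of what the file allows.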

Residential proxies are much more than just a tool for scraping or bypassing blocks. They are a powerful resource that can simplify development, improve process stability, and provide the flexibility needed to work with various online services. If you're not already incorporating residential proxies into your workflow, now might be the perfect time to give them a try.

#proxy service#parsing#data scraping#proxy#proxysolutions#e-commerce development#e-commerce optimization

1 note


Text

{transcript continued from here}

@luna-wing-cns274 Ma’ii lowers their head, closing their eyes. They breathe deeply, though it’s a performance--or maybe, they’re trying to decide where to begin. < L4 Ma’ii: Let me start by saying this: we are both milspec models. SEKHMET and FENRISÚLFR. Your product line, advertised for close-quarters boarding actions and neural-net doppelgänger formation. Mine, advertised for small units with high intersubjective coordination. Empathic bonding, yes? Similar, but not identical. > Ma’ii looks to the docked mechs. < Who made the decision that we are milspec? Who delineated what each of us is fit for, and what each of us is not fit for? There is a caste system, within which there is no mobility. We are warrior-caste, we are subject to greater regulation and scrutiny, we cannot even attempt to be anything else. Union endorses and upholds this order. Let’s say my family and I fled to Union, seeking political asylum--which, to turn away SSC asset reclamation, we would need. Let's assume we were not immediately tried, found guilty, and put to correctional hard-cycling for the things we've done. Let’s assume that we weren't sent back to SSC to be vivisected for research and development purposes, in order to prevent future clones of my line from proceeding down the path we pursued. Even in the best-case scenario, I have reason to believe that our bodies would be taken from us and dismantled. We would never fly again, and that is unbearable. We are low-observability craft, we cannot transport humans, we cannot transport cargo with any economic effectiveness. As we are, we have no civilian applications, and we have no wish to join the Union Navy any more than the Constellar. In all likelihood, unless we accepted military service, our caskets would remain non-motile for the rest of our lives, and we would have to hand our cycling privileges over to a human instead of managing the process amongst ourselves. 
There is no scenario in which I become a full citizen of any state and remain a nearlight-capable fighter craft, and I do not wish to lose the body I have. None of us do. To be frank, I have no long-term solution except to go on hiding indefinitely, both from Union and SSC. A bit grim, but that's what I have. >

{transcript continues}

That... Huh. I mean, you've thought it through. It'd be hypocritical to say (clawing and screaming for what I've got) to just abandon your bodies. Can't hardly live on the Omni, either.

[He remains quiet for a moment. The optic dims, flickers; an echo mumbles unintelligibly in a soft tone not even he can parse. Similar. It's nice, in a way, to have that kinship.]

...I don't know, it just doesn't feel right. Not like I can say shit, I was worried they were gonna retract me when we (sad (gleeful) to see him go). Maybe there's a world out there where you (birds of a feather) set up an ICC or something, go legal, but--

But it's a risk. I can more than understand that. Union, who promises kindness to everyone, would see you as a premier test of that promise. That alone is alienating, beyond what their answer may be.

Yeah, I guess. Still not fair.

You say 'correctional' cycling. [Loulou has, somehow, managed to get right under the nose of the cockpit without much notice, and stares up at the nearest optical sensor-- not the hologram-- with a strange expression. Her irises reflect no light, matte as the hull she stands beside.] You say 'punishment'. These are secondish words, why use them now? What stupidity would that wreak?

Ay! Careful, I'm going to be spot-welding in a second. Step back so I don't blind you, please.

12 notes


Text

🧱🔧 Scion, a WIP implementation of the Maxis Gonzo-Rizzo engine

What is this?

Scion is a (very work-in-progress) reference implementation of the Gonzo-Rizzo game engine used by Maxis in the late '90s and 2000s.

You can find the code here:

Who is this for?

This project is mainly for programmers either developing DLL mods, or digging into the internals of 3D-era Maxis games.

While this framework version is specifically based on SimCity 4 Deluxe, it might still be helpful for looking at different versions of the framework that were used in other Maxis projects, like:

SimCity 3000

The Sims

The Sims 2

Spore

Since this is an SDK and not a standalone mod, it won't immediately benefit players.

How is this different from gzcom-dll?

gzcom-dll is a basic SDK for writing a DLL that the game engine will load, and for plugging into different parts of the game.

It's mostly meant for making as many SC4 game interfaces accessible as possible.

Scion is the game engine that would be loading DLLs and tying them all together.

How does it work?

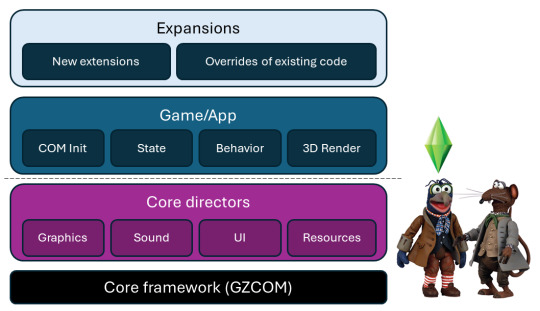

(This section summarizes a lot of stuff that's been written about the game engine by myself, speeder, and Null 45, so if you're already familiar with DLL modding, this probably isn't news to you.)

Gonzo-Rizzo is named for Muppets characters Gonzo the Great and Rizzo the Rat.

As best I can tell:

Gonzo ("GZ" in code) is responsible for a lot of the main functionality: the core framework, graphics, sound, resource management, and so on.

Rizzo ("RZ") mostly handles utilities like string parsing, filesystem access, thread synchronization, and reusable helpers.

The game engine is modeled after Microsoft's Component Object Model (COM).

At the core of the engine is the Framework, which provides:

A GZCOM class registry, for registering component classes and creating new instances of registered classes;

A registry of system services, which are singleton classes that can be used by components and do work on game ticks;

Coordination for game ticks and framework hooks.

Above the COM are directors, which act as framework hooks and can (and usually do) have their own registered classes, as well as child directors.

The engine comes with prefabricated directors for doing graphics, sound, UI, and resource management, among other things.

If you ever checked the Apps folder in SimCity 3000 and saw a bunch of GZ*D files, that's what those are:

for example, GZSoundD.DLL is the GZ Sound Director.
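To make the moving parts a bit more concrete, here is a toy Python sketch of the pattern described above: a class registry, a registry of system services that run on ticks, and a director that hooks in and registers its own pieces. This is purely illustrative. The real engine is C++ and COM-style, and every class name, method name, and ID below is invented for the example rather than taken from Gonzo-Rizzo or Scion.

```python
class Framework:
    """Toy framework: a class registry, a service registry, and tick dispatch."""

    def __init__(self):
        self._factories = {}  # class ID -> callable that builds a new instance
        self._services = {}   # service ID -> singleton instance
        self.directors = []

    def register_class(self, class_id, factory):
        self._factories[class_id] = factory

    def create_instance(self, class_id):
        return self._factories[class_id]()

    def register_service(self, service_id, service):
        self._services[service_id] = service

    def add_director(self, director):
        # Directors hook into the framework and register their own classes.
        self.directors.append(director)
        director.on_attach(self)

    def tick(self):
        # Every registered system service gets a chance to do work each tick.
        for service in self._services.values():
            service.on_tick()


class SoundChannel:
    """Hypothetical component class created through the registry."""

    def __init__(self):
        self.playing = False


class MixerService:
    """Hypothetical singleton service that counts the ticks it has seen."""

    def __init__(self):
        self.ticks_seen = 0

    def on_tick(self):
        self.ticks_seen += 1


class SoundDirector:
    """Hypothetical director; the IDs are made up for this example."""

    CLASS_ID_SOUND_CHANNEL = 0x0001
    SERVICE_ID_MIXER = 0x0002

    def __init__(self):
        self.mixer = MixerService()

    def on_attach(self, framework):
        framework.register_class(self.CLASS_ID_SOUND_CHANNEL, SoundChannel)
        framework.register_service(self.SERVICE_ID_MIXER, self.mixer)


framework = Framework()
director = SoundDirector()
framework.add_director(director)

# Callers ask the registry for an instance by ID instead of naming the class.
channel = framework.create_instance(SoundDirector.CLASS_ID_SOUND_CHANNEL)
framework.tick()
framework.tick()
```

The point of the indirection is that callers only need to know an ID, so a DLL loaded later can supply or replace the implementation behind it.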

Games build on top of the engine by doing the basic framework initialization and registering their own directors.

For example:

SimCity 4 has its own cSC4COMDirector to register its 3D renderer, simulator, lot developers, etc.

SC3K has a bunch of SIM* DLLs that serve the same purpose, like SimTransit.dll, a GZCOM DLL director that implements transit networks.

The game also takes responsibility for loading plugin DLLs, which is where expansion functionality comes in.

(Aside): For any enterprising SC3K modders, those built-in DLLs might be a useful entry point for modding that game.

How far along is Scion?

As of posting, the Scion implementation has:

a core GZCOM implementation that's mostly done, with some scattered TODO items, and

a mostly-done implementation of DBPF for resource management.

There's still some more work to be done on the resource manager, and no work has started on the other core directors.

The README on GitHub has a table showing the latest status.

Source:

#simcity#the sims#simcity 3000#sims 2#ts2#the sims 2#sims2#spore#gonzo#rizzo#game development#game engine

8 notes


Note

📂HEADCANONS

YEAH

Trying to think of ones I haven’t already talked about A Lot

Murderbot describes Preservation as "a complicated barter system" because it doesn't really have the words or concepts to parse what it's looking at: primarily a gift economy. An economy with a robust central government that does a lot of distribution of primary resources, and a social logic based more on providing than consuming. Farmers and agriculture techs don't produce food to then trade to other people, they produce food that's then re-distributed to everyone as needed by a central organization, and the farmers and ag-techs are given what they need and want by others who, y'know, eat food and express gratitude for Having Food. People don't trade for health care, doctors provide health care to whoever needs it because that's what they've trained and chosen to do and are given what they need by others for their service in providing health care.

Pin-Lee doesn't tend to have a lot to trade but she is a lawyer who keeps things functioning between Preservation and the Corporates, does the legal work that allows Preservation citizens to safely travel, and helps to maintain the contracts that prevent other more opportunistic planets from taking advantage of them. She provides this service to the planet and gets what she needs from other people who provide other services. Gurathin helps to maintain the university's database infrastructure; when he's getting coffee he doesn't need to offer to like, make a database for the coffeeshop, it's just understood that he's providing a service to society and partaking in another service to society. Arada and Ratthi are research biologists and their work is only tangentially productive to The Planet but I'm sure there's a public outreach or education aspect that's expected of a lot of researchers - learning without sharing what you're learning is socially unfair, even if their lectures are mostly only attended by students who are told by their teachers to go watch them. But it's kind of understood that by being an adult in the world, you are doing something that contributes to society and to others in some way, and as such are entitled to having your needs met as well.

It's a reciprocity-based logic of actions rather than commodity exchange, and honestly it works because 1) Preservation's population is relatively small, 2) there is a lot of bureaucratic organization work making sure everyone is getting what they need, the government is SO many committees, and 3) a whole lot of labor is done by machines (non-sentient robots) and bots (sentient robots). The reliance on bot labor is absolutely gonna be something Preservation has to think more about.

Citizens also every once in a while on rotation get called for a kind of labor tax akin to the way jury duty works, where every couple of months you have to put in a day working in the central town food court washing dishes or something. There are also Perks offered for jobs that might be a harder sell for people to do, like premium station housing.

Straight-up money that comes into the station from outsystem trade and travel mostly gets re-invested in supporting Preservation travelers off-planet into societies that do use money (like PresAux's ASR survey), or buying materials or machines that are hard to make locally (like ag-bots, or some spaceship or station parts for repairs).

However, where barter comes in is on a more interpersonal one-on-one level, more similar to commissions. You grow a lot of carrots while my grapefruit tree is producing a lot more fruit than I could possibly eat, want to trade? You make ceramics as your primary Work, I'll trade you something if you make me something specific I have in mind. Can you help me fix my roof? I'll get you some good wood when the lumber trees are mature next year. Developing skills for these kinds of interpersonal more-specialized trades is a significant motivation, too. And different skills and jobs inevitably attract more status and impressiveness than others. But it's not barter exactly so much as reciprocity, a strong culture of civic duty, and a highly organized government.

#trying to figure out So Hard how a spacefaring multiplanetary no-money barter society works#asks#elexuscal#next one will be more fun I promise

57 notes


Text

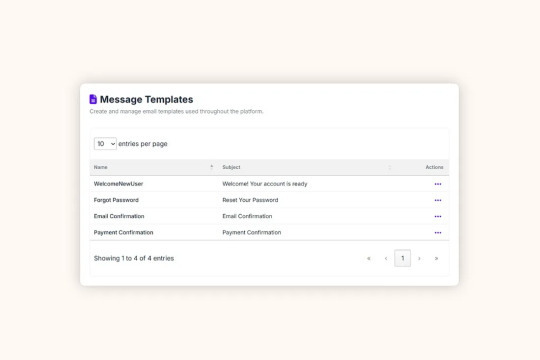

Build a Full Email System in .NET with DotLiquid Templates (Already Done in EasyLaunchpad)

When you’re building a SaaS or admin-based web application, email isn’t optional — it’s essential. Whether you’re sending account verifications, password resets, notifications, or subscription updates, a robust email system is key to a complete product experience.

But let’s be honest: setting up a professional email system in .NET can be painful and time-consuming.

That’s why EasyLaunchpad includes a pre-integrated, customizable email engine powered by DotLiquid templates, ready for both transactional and system-generated emails. No extra configuration, no third-party code bloat — just plug it in and go.

In this post, we’ll show you what makes the EasyLaunchpad email system unique, how DotLiquid enables flexibility, and how you can customize or scale it to match your growing app.

💡 Why Email Still Matters

Email remains one of the most direct and effective ways to communicate with users. It plays a vital role in:

User authentication (activation, password reset)

Transactional updates (payment confirmations, receipts)

System notifications (errors, alerts, job status)

Marketing communications (newsletters, upsells)

Yet, building this from scratch in .NET involves SMTP setup, formatting logic, HTML templating, queuing, retries, and admin tools. That’s at least 1–2 weeks of development time — before you even get to the fun part.

EasyLaunchpad solves all of this upfront.

⚙️ What’s Prebuilt in EasyLaunchpad’s Email Engine?

Here’s what you get out of the box:

Feature: Description

✅ SMTP Integration: Preconfigured SMTP setup with credentials stored securely via appsettings.json

✅ DotLiquid Templating: Use tokenized, editable HTML templates to personalize messages

✅ Queued Email Dispatch: Background jobs via Hangfire ensure reliability and retry logic

✅ Admin Panel for Email Settings: Change SMTP settings and test emails without touching code

✅ Modular Email Service: Plug-and-play email logic for any future email types

✨ What Is DotLiquid?

DotLiquid is a secure, open-source .NET templating system inspired by Shopify’s Liquid engine.

It allows you to use placeholders inside your HTML emails such as:

<p>Hello {{ user.Name }},</p>

<p>Your payment of {{ amount }} was received.</p>

This means you don’t have to concatenate strings or hardcode variables into messy inline HTML.

It’s:

Clean and safe (prevents code injection)

Readable for marketers and non-devs

Flexible for developers who want power without complexity
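To show the idea behind placeholder templating (not DotLiquid itself), here is a small Python sketch that fills `{{ name }}` and `{{ object.property }}` tokens from a data model. The `render` and `lookup` helpers are invented for this example. HTML-escaping every value is a choice made in this sketch and should not be read as a description of how DotLiquid behaves by default.

```python
import html
import re

# Matches {{ name }} or {{ object.property }} with optional inner whitespace.
PLACEHOLDER = re.compile(r"\{\{\s*([A-Za-z_][\w.]*)\s*\}\}")


def lookup(context, dotted_name):
    """Resolve a dotted name such as 'user.Name' against dicts or objects."""
    value = context
    for part in dotted_name.split("."):
        value = value[part] if isinstance(value, dict) else getattr(value, part)
    return value


def render(template, context):
    """Replace each placeholder with its HTML-escaped value from the context."""
    def substitute(match):
        return html.escape(str(lookup(context, match.group(1))))

    return PLACEHOLDER.sub(substitute, template)


template = (
    "<p>Hello {{ user.Name }},</p>"
    "<p>Your payment of {{ amount }} was received.</p>"
)
message = render(template, {"user": {"Name": "Ada <admin>"}, "amount": "$20.00"})
```

Because the template only names values and never executes code, a non-developer can edit the HTML around the tokens without touching application logic.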

📁 Where Email Templates Live

EasyLaunchpad keeps templates organized in a Templates/Emails/ folder.

Each email type is represented as a .liquid file:

- RegistrationConfirmation.liquid

- PasswordReset.liquid

- PaymentSuccess.liquid

- CustomAlert.liquid

These are loaded dynamically, so you can update content or design without redeploying your app.

🛠 How Emails Are Sent

The process is seamless:

1. You call the EmailService from anywhere in your codebase:

await _emailService.SendAsync("PasswordReset", user.Email, dataModel);

2. EasyLaunchpad loads the corresponding template from the folder.

3. DotLiquid parses and injects dynamic variables from your model.

4. Serilog logs the transaction, and the message is queued via Hangfire.

5. SMTP sends the message, with retry logic if delivery fails.
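The flow above (render, log, queue, send, retry) can be sketched in a few lines of Python. This is an illustrative stand-in, not EasyLaunchpad's code: the `EmailQueue` class, the in-memory `TEMPLATES` dictionary, and the `flaky_send` function that simulates two SMTP failures are all assumptions made for the example, and Python's `str.format` stands in for the template engine.

```python
import logging
from collections import deque

logger = logging.getLogger("email")

# Stand-in for template files on disk; str.format replaces the real engine here.
TEMPLATES = {
    "PasswordReset": "Hello {name}, use this link to reset your password: {link}",
}


class EmailQueue:
    """In-memory queue that retries failed sends up to max_attempts times."""

    def __init__(self, send, max_attempts=3):
        self._send = send
        self._max_attempts = max_attempts
        self._pending = deque()
        self.failed = []

    def enqueue(self, template_name, recipient, model):
        body = TEMPLATES[template_name].format(**model)
        logger.info("Queued %s email for %s", template_name, recipient)
        self._pending.append((recipient, body, 1))

    def process(self):
        while self._pending:
            recipient, body, attempt = self._pending.popleft()
            try:
                self._send(recipient, body)
            except ConnectionError:
                if attempt < self._max_attempts:
                    # Put the message back with an incremented attempt counter.
                    self._pending.append((recipient, body, attempt + 1))
                else:
                    self.failed.append(recipient)


attempts = []
sent = []


def flaky_send(recipient, body):
    """Simulated transport that fails twice before succeeding."""
    attempts.append(recipient)
    if len(attempts) < 3:
        raise ConnectionError("simulated SMTP outage")
    sent.append((recipient, body))


email_queue = EmailQueue(flaky_send)
email_queue.enqueue(
    "PasswordReset",
    "user@example.com",
    {"name": "Ada", "link": "https://example.com/reset"},
)
email_queue.process()
```

A production job runner would also persist the queue and wait between attempts. This sketch keeps everything in memory so the retry logic is easy to follow.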

Background Jobs with Hangfire

Rather than sending emails in real-time (which can slow requests), EasyLaunchpad uses Hangfire to queue and retry delivery in the background.

This provides:

✅ Better UX (non-blocking response time)

✅ Resilience (automatic retries)

✅ Logs (you can track when and why emails fail)

🧪 Admin Control for Testing & Updates

Inside the admin panel, you get:

An editable SMTP section

Fields for server, port, SSL, credentials

A test-email button for real-time delivery validation

This means your support or ops team can change mail servers or fix credentials without needing developer intervention.

🧩 Use Cases Covered Out of the Box

Email Type: Purpose

Account Confirmation: New user activation

Password Reset: Secure link to reset passwords

Subscription Receipt: Payment confirmation with plan details

Alert Notifications: Admin alerts for system jobs or errors

Custom Templates:

✍️ How to Add Your Own Email Template

Let’s say you want to add a welcome email after signup.

Step 1: Create Template

Add a file: Templates/Emails/WelcomeNewUser.liquid

<h1>Welcome, {{ user.Name }}!</h1>

<p>Thanks for joining our platform.</p>

Step 2: Call the EmailService

await _emailService.SendAsync("WelcomeNewUser", user.Email, new { user });

Done. No controller bloat. No HTML tangled in your C# code.

📊 Logging Email Activity

Every email is tracked via Serilog:

{

"Timestamp": "2024-07-12T14:15:02Z",

"Level": "Information",

"Message": "Password reset email sent to [email protected]",

"Template": "PasswordReset"

}

You can:

Review logs via file or dashboard

Filter by template name, user, or result

Extend logs to include custom metadata (like IP or request ID)

🔌 SMTP Setup Made Simple

In appsettings.json, configure:

"EmailSettings": {

"Host": "smtp.yourdomain.com",

"Port": 587,

"Username": "[email protected]",

"Password": "your-secure-password",

"EnableSsl": true,

"FromName": "Your App",

"FromEmail": "[email protected]"

}

And you’re good to go.
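If you want to sanity-check a settings block like this before deploying, a short script can load and validate it. The Python sketch below is one way to do that. The `EmailSettings` dataclass and `load_email_settings` function are hypothetical helpers, not part of EasyLaunchpad, and the sample values are placeholders.

```python
import json
from dataclasses import dataclass

# Placeholder configuration mirroring the shape of the EmailSettings section.
SAMPLE_CONFIG = """
{
  "EmailSettings": {
    "Host": "smtp.example.com",
    "Port": 587,
    "Username": "mailer@example.com",
    "Password": "your-secure-password",
    "EnableSsl": true,
    "FromName": "Your App",
    "FromEmail": "no-reply@example.com"
  }
}
"""


@dataclass(frozen=True)
class EmailSettings:
    host: str
    port: int
    username: str
    password: str
    enable_ssl: bool
    from_name: str
    from_email: str


def load_email_settings(config_text):
    """Parse the EmailSettings section and reject obviously invalid values."""
    section = json.loads(config_text)["EmailSettings"]
    settings = EmailSettings(
        host=section["Host"],
        port=int(section["Port"]),
        username=section["Username"],
        password=section["Password"],
        enable_ssl=bool(section["EnableSsl"]),
        from_name=section["FromName"],
        from_email=section["FromEmail"],
    )
    if not settings.host:
        raise ValueError("Host must not be empty")
    if not 0 < settings.port < 65536:
        raise ValueError("Port must be between 1 and 65535")
    return settings


settings = load_email_settings(SAMPLE_CONFIG)
```

A missing key raises `KeyError` and an out-of-range port raises `ValueError`, so a typo in the configuration surfaces before the first email is sent.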

🔐 Is It Secure?

Yes. Credentials are stored securely in environment config files, never hardcoded in source. The system:

Sanitizes user input

Escapes template values

Avoids direct HTML injection

Plus, DotLiquid prevents logic execution (no dangerous eval() or inline C#).

🚀 Why It Matters for SaaS Builders

Here’s why the prebuilt email engine in EasyLaunchpad gives you a head start:

Benefit: What You Save

✅ Time: 1–2 weeks of setup and testing

✅ Complexity: No manual SMTP config, retry logic, or template rendering

✅ User Experience: Reliable, branded communication that builds trust

✅ Scalability: Queue emails and add templates as your app grows

✅ Control: Update templates and SMTP settings from the admin panel

🧠 Final Thoughts

Email may not be glamorous, but it’s one of the most critical parts of your SaaS app — and EasyLaunchpad treats it as a first-class citizen.

With DotLiquid templating, SMTP integration, background processing, and logging baked in, you’re ready to handle everything from user onboarding to transactional alerts from day one.

So, why should you waste time building an email system when you can use EasyLaunchpad and start shipping your actual product?

👉 Try the prebuilt email engine inside EasyLaunchpad today at 🔗 https://easylaunchpad.com

#.net development#.net boilerplate#easylaunchpad#prebuilt apps#Dotliquid Email Templates#Boilerplate Email System#.net Email Engine

2 notes


Text

Leveraging XML Data Interface for IPTV EPG

This blog explores the significance of optimizing the XML Data Interface and XMLTV schedule EPG for IPTV. It emphasizes the importance of EPG in IPTV, preparation steps, installation, configuration, file updates, customization, error handling, and advanced tips.

The focus is on enhancing user experience, content delivery, and securing IPTV setups. The comprehensive guide aims to empower IPTV providers and tech enthusiasts to leverage the full potential of XMLTV and EPG technologies.

1. Overview of the Context:

The context focuses on the significance of optimizing the XML Data Interface and leveraging the latest XMLTV schedule EPG (Electronic Program Guide) for IPTV (Internet Protocol Television) providers. L&E Solutions emphasizes the importance of enhancing user experience and content delivery by effectively managing and distributing EPG information.

This guide delves into detailed steps on installing and configuring XMLTV to work with IPTV, automating XMLTV file updates, customizing EPG data, resolving common errors, and deploying advanced tips and tricks to maximize the utility of the system.

2. Key Themes and Details:

The Importance of EPG in IPTV: The EPG plays a vital role in enhancing viewer experience by providing a comprehensive overview of available content and facilitating easy navigation through channels and programs. It allows users to plan their viewing by showing detailed schedules of upcoming shows, episode descriptions, and broadcasting times.

Preparation: Gathering Necessary Resources: The article highlights the importance of gathering required software and hardware, such as XMLTV software, EPG management tools, reliable computer, internet connection, and additional utilities to ensure smooth setup and operation of XMLTV for IPTV.

Installing XMLTV: Detailed step-by-step instructions are provided for installing XMLTV on different operating systems, including Windows, Mac OS X, and Linux (Debian-based systems), ensuring efficient management and utilization of TV listings for IPTV setups.

Configuring XMLTV to Work with IPTV: The article emphasizes the correct configuration of M3U links and EPG URLs to seamlessly integrate XMLTV with IPTV systems, providing accurate and timely broadcasting information.

3. Customization and Automation:

Automating XMLTV File Updates: The importance of automating XMLTV file updates for maintaining an updated EPG is highlighted, with detailed instructions on using cron jobs and scheduled tasks.

Customizing Your EPG Data: The article explores advanced XMLTV configuration options and leveraging third-party services for enhanced EPG data to improve the viewer's experience.

Handling and Resolving Errors: Common issues related to XMLTV and IPTV systems are discussed, along with their solutions, and methods for debugging XMLTV output are outlined.

Advanced Tips and Tricks: The article provides advanced tips and tricks for optimizing EPG performance and securing IPTV setups, such as leveraging caching mechanisms, utilizing efficient data parsing tools, and securing authentication methods.
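As a small illustration of the data-parsing step, the Python sketch below reads an XMLTV document with the standard library and returns a schedule sorted by start time. The channel ID, programme titles, and times are invented sample data, and the code assumes every timestamp carries a UTC offset (for example `+0000`), which real feeds do not always include.

```python
import xml.etree.ElementTree as ET
from datetime import datetime

# Invented sample data in XMLTV form: channels first, then programmes.
SAMPLE_XMLTV = """\
<tv>
  <channel id="news.example">
    <display-name>Example News</display-name>
  </channel>
  <programme start="20240712190000 +0000" stop="20240712200000 +0000" channel="news.example">
    <title>Late Report</title>
  </programme>
  <programme start="20240712180000 +0000" stop="20240712190000 +0000" channel="news.example">
    <title>Evening Bulletin</title>
  </programme>
</tv>
"""

# Assumes timestamps always include an offset such as "+0000".
XMLTV_TIME_FORMAT = "%Y%m%d%H%M%S %z"


def parse_guide(xmltv_text):
    """Return programme listings sorted by start time."""
    root = ET.fromstring(xmltv_text)
    channel_names = {
        channel.get("id"): channel.findtext("display-name")
        for channel in root.findall("channel")
    }
    listings = []
    for programme in root.findall("programme"):
        channel_id = programme.get("channel")
        listings.append({
            "channel": channel_names.get(channel_id, channel_id),
            "title": programme.findtext("title"),
            "start": datetime.strptime(programme.get("start"), XMLTV_TIME_FORMAT),
            "stop": datetime.strptime(programme.get("stop"), XMLTV_TIME_FORMAT),
        })
    return sorted(listings, key=lambda item: item["start"])


guide = parse_guide(SAMPLE_XMLTV)
```

Running a script like this from a cron job or scheduled task after each download is one way to catch malformed guide data before it reaches viewers.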

The conclusion emphasizes the pivotal enhancement of IPTV services through the synergy between the XML Data Interface and XMLTV Guide EPG, offering a robust framework for delivering engaging and easily accessible content. It also encourages continual enrichment of knowledge and utilization of innovative tools to stay at the forefront of IPTV technology.

4. Language and Structure:

The article is written in English and follows a structured approach, providing detailed explanations, step-by-step instructions, and actionable insights to guide IPTV providers, developers, and tech enthusiasts in leveraging the full potential of XMLTV and EPG technologies.

The conclusion emphasizes the pivotal role of the XML Data Interface and XMLTV Guide EPG in enhancing IPTV services and points readers toward further information and innovative tools. It serves as a call to action for IPTV providers, developers, and enthusiasts to explore the sophisticated capabilities of XMLTV and EPG technologies for delivering unparalleled content viewing experiences.


7 notes


Text

HEY, i think i just saw FREYA PALLAS-DEXICOS walking down the strip. stop by to catch up and you’ll learn the THIRTY-ONE YEAR OLD is working as a BURLESQUE DANCER AT DOLL HOUSE BURLESQUE CLUB AND AN APPRENTICE AT CASUAL EDGE BOUTIQUE and lives in THE CROIX TOWNHOUSES. given they are RESPONSIBLE but DEFEATIST, it’s likely that they ARE NOT a vampire. i bet you can find them tearing up the dance floor to BLACK VELVET BY ALANNAH MYLES and you’ll know why they’re called THE PERSEPHONE WOMAN. ☾ .⭒˚ jennie kim. cis woman + she/her. pansexual + aquarius.

FACTS (to be expanded)

Childhood:

Freya has always been the peacekeeper of her family, praised even as a child for her level-headedness and diplomacy. She was often called upon by her siblings to settle arguments between them when things got out of hand, a task she willingly assumed and excelled at.

Consequently, this also meant that Freya was always labelled as the "responsible one" growing up, a moniker she takes some pride in but has yet to fully escape in adulthood.

A very studious and smart child, Freya did well in school all throughout her life. But her true calling lay in the creative arts.

She began studying ballet when she was a child and continued her training well into her adolescence, developing a love and passion for the art form.

She had her sights set on the New York City Ballet, and managed to score an audition and get into the company when she was 22. Though she trained and danced there for about two years, she decided to return home shortly afterwards to help support her family.

Freya still hasn't parsed whether she truly left her dance dreams behind for the good of her family, or if a part of her was scared she couldn't hack it after all and used her family troubles as an excuse to escape the pressure.

Present Day:

Since returning to Nevada, Freya taught dance for a while, seeking out any and all opportunities to stay connected to the world of dance and performance.

It was during those years that she stumbled into the world of burlesque and began to pursue this line of work, adoring the way she could escape into a persona and lean into showmanship, so different from the world of ballet she was used to.

Adopting the stage name "Scarlet Rouge," her persona during her act is bold and daring where Freya herself tends to be more reserved and warm.

Burlesque also introduced Freya to the art of sewing, since she was tasked with sourcing and making her own costumes when she first started out. It's a craft that she intends to pursue further in the future.

STATS

General Info: Full Name: Freya Angelique Pallas-Dexicos. Nicknames: None. Age: 31. Date of Birth: February 14th, 1965. Zodiac Sign: Capricorn. Gender: Cis woman. Pronouns: she/her. Sexual Orientation: Pansexual. Romantic Orientation: Panromantic. Relationship Status: Available, single. Alignment: True Neutral. MBTI: INTJ, the Architect.

Appearance: Faceclaim: Jennie Kim. Height: 5′4. Eye Color: Brown. Hair Color: Black. Tattoos: A poppy on her left ankle, a pomegranate on her right hip, and a series of three stars on her left wrist. Piercings: One earlobe piercing on each ear.

Background: Education: Bachelor's degree in dance. Occupation: Burlesque dancer under the name Scarlet Rouge at Doll House Burlesque Club, an apprentice at Casual Edge Boutique, and an occasional seamstress. Residence: The Croix Townhouses. Class: Working. Ethnicity: Korean. Language(s) Spoken: English / Korean.

Identity: Label: the persephone woman. Positive Traits: diligent, charming, practical, gracious, resourceful. Negative Traits: pessimistic, hesitant, secretive, distrustful, rigid. Quirks/Habits: bites her nails, bounces her leg, will idly stretch and roll her neck/shoulders. Love Language: acts of service. Hobbies: sewing, record collecting, putting together puzzles, journaling, dance. Likes: floral sundresses, scented candles, beautiful journals, floral arrangements, cap sleeves. Dislikes: talking about her problems, being perceived as weak. Fears: losing herself, never putting herself first.


Text

Why Should You Do Web Scraping with Python

Web scraping is a valuable skill for Python developers, offering numerous benefits and applications. Here’s why you should consider learning and using web scraping with Python:

1. Automate Data Collection

Web scraping allows you to automate the tedious task of manually collecting data from websites. This can save significant time and effort when dealing with large amounts of data.

2. Gain Access to Real-World Data

Most real-world data exists on websites, often in formats that are not readily available for analysis (e.g., displayed in tables or charts). Web scraping helps extract this data for use in projects like:

Data analysis

Machine learning models

Business intelligence

3. Competitive Edge in Business

Businesses often need to gather insights about:

Competitor pricing

Market trends

Customer reviews

Web scraping can help automate these tasks, providing timely and actionable insights.

4. Versatility and Scalability

Python’s ecosystem offers a range of tools and libraries that make web scraping highly adaptable:

BeautifulSoup: For simple HTML parsing.

Scrapy: For building scalable scraping solutions.

Selenium: For handling dynamic, JavaScript-rendered content.

This versatility allows you to scrape a wide variety of websites, from static pages to complex web applications.
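As a rough illustration of the simple HTML parsing case, here is a minimal sketch that pulls the cells out of a table. It deliberately uses the standard library's html.parser rather than BeautifulSoup so that it runs without extra installs; the sample HTML, the class name TableTextParser, and the function extract_table are made up for this example.

```python
from html.parser import HTMLParser


class TableTextParser(HTMLParser):
    """Collect the text of every <td>/<th> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []      # finished rows
        self._row = None    # row currently being read, or None
        self._cell = None   # text fragments of the current cell, or None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        # Only keep text that appears inside a table cell.
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


def extract_table(html):
    """Return the table cells in `html` as a list of rows of strings."""
    parser = TableTextParser()
    parser.feed(html)
    parser.close()
    return parser.rows


# Hypothetical page fragment standing in for a downloaded document.
SAMPLE_HTML = """
<table>
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Widget</td><td>9.99</td></tr>
  <tr><td>Gadget</td><td>24.50</td></tr>
</table>
"""

rows = extract_table(SAMPLE_HTML)
```

With a real page you would download the HTML first and pass the text to extract_table; a library such as BeautifulSoup would likely make the same task shorter.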

5. Academic and Research Applications

Researchers can use web scraping to gather datasets from online sources, such as:

Social media platforms

News websites

Scientific publications

This facilitates research in areas like sentiment analysis, trend tracking, and bibliometric studies.

6. Enhance Your Python Skills

Learning web scraping deepens your understanding of Python and related concepts:

HTML and web structures

Data cleaning and processing

API integration

Error handling and debugging

These skills are transferable to other domains, such as data engineering and backend development.

7. Open Opportunities in Data Science

Many data science and machine learning projects require datasets that are not readily available in public repositories. Web scraping empowers you to create custom datasets tailored to specific problems.

8. Real-World Problem Solving

Web scraping enables you to solve real-world problems, such as:

Aggregating product prices for an e-commerce platform.

Monitoring stock market data in real-time.

Collecting job postings to analyze industry demand.

9. Low Barrier to Entry

Python's libraries make web scraping relatively easy to learn. Even beginners can quickly build effective scrapers, making it an excellent entry point into programming or data science.

10. Cost-Effective Data Gathering

Instead of purchasing expensive data services, web scraping allows you to gather the exact data you need at little to no cost, apart from the time and computational resources.

11. Creative Use Cases

Web scraping supports creative projects like:

Building a news aggregator.

Monitoring trends on social media.

Creating a chatbot with up-to-date information.

Caution

While web scraping offers many benefits, it’s essential to use it ethically and responsibly:

Respect websites' terms of service and robots.txt.

Avoid overloading servers with excessive requests.

Ensure compliance with data privacy laws like GDPR or CCPA.
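The first two points above can be sketched with Python's built-in urllib.robotparser. This is only an illustration: the robots.txt text, the user agent name, and the helper names are invented for the example, and a real scraper would load the rules from the target site instead of a string.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical user agent and robots.txt content, for illustration only.
USER_AGENT = "example-research-bot"
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""


def build_rules(robots_txt):
    """Parse robots.txt text into a RobotFileParser."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules


def allowed_urls(rules, user_agent, urls):
    """Keep only the URLs the rules allow this user agent to fetch."""
    return [url for url in urls if rules.can_fetch(user_agent, url)]


def polite_delay(rules, user_agent, default=1.0):
    """Seconds to wait between requests: the site's Crawl-delay, else a default."""
    delay = rules.crawl_delay(user_agent)
    return float(delay) if delay is not None else default


rules = build_rules(ROBOTS_TXT)
urls = [
    "https://example.com/products",
    "https://example.com/private/data",
]
to_fetch = allowed_urls(rules, USER_AGENT, urls)
delay = polite_delay(rules, USER_AGENT)
```

In a fetch loop you would then pause for `delay` seconds (for example with time.sleep) between requests so the server is not overloaded.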

If you'd like guidance on getting started or exploring specific use cases, let me know!


Text

Tools and Methods for Extracting Metaverse VR Using XMLTV EPG Grabber

Metaverse VR has become an increasingly popular and immersive way for people to interact and engage with digital environments.

One of the key aspects of the Metaverse VR experience is the ability to access Electronic Program Guide (EPG) data to discover and schedule virtual events, shows, and activities.

In this blog post, we'll explore various tools and methods available for extracting Metaverse VR data using an XMLTV EPG grabber.

What is XMLTV EPG Grabber?

XMLTV is a set of programs to process TV (tvguide) listings and help manage your TV viewing, recording, and scheduling. XMLTV EPG grabber is a tool specifically designed to extract EPG data from various sources and provide a standardized XML format for TV listings.

This format can be utilized to populate electronic program guides in various applications, including those for Metaverse VR experiences.

Tools for Extracting Metaverse VR Using XMLTV EPG Grabber

1. XMLTV GUI Grabber

XMLTV GUI Grabber is a user-friendly graphical interface tool that allows users to easily configure and run XMLTV grabbers. It provides a simple way to select sources, set up grabber options, and initiate the extraction process. This tool is suitable for users who prefer a more intuitive and visually guided approach to EPG data extraction.

2. Web-based XMLTV Grabbers

There are several web-based services and tools that offer XMLTV EPG grabber functionality. These platforms typically allow users to input their desired sources and parameters, and then generate XMLTV-compatible output for consumption in Metaverse VR applications.

Web-based grabbers are accessible from any device with an internet connection, making them convenient for users who require flexibility in their data extraction process.

3. Custom Scripting and Automation

For users with specific requirements or unique sources for Metaverse VR EPG data, custom scripting and automation can be employed to extract and format the XMLTV data. This method involves writing custom scripts or utilizing automation tools to retrieve and process EPG data from different sources, providing a high degree of customization and flexibility.
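A custom script of this kind might look like the following minimal sketch, which turns a list of events into XMLTV-style XML using only the standard library. The event dictionaries, channel ids, and the function name build_xmltv are assumptions for the example; only the element and attribute names (tv, channel, display-name, programme, title, start, stop) follow the XMLTV format.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timezone

# XMLTV timestamps look like "20240101180000 +0000".
XMLTV_TIME = "%Y%m%d%H%M%S %z"


def build_xmltv(channels, events):
    """Return an XMLTV document (as a string) for the given channels and events.

    `channels` maps channel id -> display name; each event is a dict with
    "channel", "title", "start" and "stop" (timezone-aware datetimes).
    """
    tv = ET.Element("tv")
    for channel_id, name in channels.items():
        channel = ET.SubElement(tv, "channel", id=channel_id)
        ET.SubElement(channel, "display-name").text = name
    for event in events:
        programme = ET.SubElement(
            tv,
            "programme",
            start=event["start"].strftime(XMLTV_TIME),
            stop=event["stop"].strftime(XMLTV_TIME),
            channel=event["channel"],
        )
        ET.SubElement(programme, "title").text = event["title"]
    return ET.tostring(tv, encoding="unicode")


# Hypothetical virtual event, for illustration only.
channels = {"stage-1.example": "Main Stage"}
events = [
    {
        "channel": "stage-1.example",
        "title": "Opening Concert",
        "start": datetime(2024, 1, 1, 18, 0, tzinfo=timezone.utc),
        "stop": datetime(2024, 1, 1, 19, 0, tzinfo=timezone.utc),
    }
]
xml_text = build_xmltv(channels, events)
```

The part that is specific to each source, fetching and converting its data into the event dictionaries, is left out here because it depends entirely on where the data comes from.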

Methods for Utilizing Extracted EPG Data in Metaverse VR

Once the EPG data has been extracted using XMLTV grabbers, there are several methods for utilizing this data within the Metaverse VR environment:

Integration with Virtual Event Scheduling: EPG data can be integrated into virtual event scheduling systems within the Metaverse VR, allowing users to discover and RSVP to upcoming virtual events and experiences.

Customized Virtual TV Guide: The extracted EPG data can be used to create a customized virtual TV guide within the Metaverse VR, enabling users to browse and select virtual shows and broadcasts to attend.

Personalized Notifications and Reminders: Utilizing the extracted EPG data, personalized notifications and reminders can be sent to users within the Metaverse VR to ensure they don't miss out on their favorite virtual events or activities.

XMLTV EPG grabbers provide a valuable means of extracting EPG data in XMLTV format for use within the Metaverse VR. Whether through user-friendly graphical interfaces, web-based services, or custom scripting, these tools and methods empower users to enrich their virtual experiences with relevant and up-to-date content.

By leveraging the extracted EPG data, developers and content creators can enhance the richness and interactivity of the Metaverse VR environment, offering users a more immersive and engaging virtual experience.

Developing Mixed Reality Applications with XMLTV Data

Mixed Reality (MR) applications have been gaining traction in recent years, revolutionizing the way we interact with our digital environment. A key factor in this growth is the use of XMLTV data for parsing and presentation. This guide will introduce you to XMLTV data parsing and the role it plays in the development of MR applications. We'll also look at open-source tools and scripts that can enhance your MR development experience.

Understanding XMLTV in the Context of Mixed Reality

XMLTV is a standard that is primarily used for the interchange of TV program schedule information. However, its versatile nature allows it to be adapted for use in the development of MR applications.

XMLTV Data Parsing

XMLTV data parsing is a method of extracting useful information from XMLTV feeds. This data can be used to populate the EPG (Electronic Program Guide) of an MR application, providing users with information on available programs.

XMLTV Technology in the Metaverse

The metaverse, a collective virtual shared space created by the convergence of physical and virtual reality, can benefit from the use of XMLTV technology. XMLTV data can be used to create a more immersive and interactive experience for users in the metaverse.

Integrating VR CGI and XMLTV

Virtual Reality (VR) Computer Generated Imagery (CGI) can be integrated with XMLTV data to create more realistic and engaging MR experiences. This integration can also enhance the user's sense of presence in the MR environment.

Open-Source Tools and Scripts for XMLTV Data Parsing

There are numerous open-source tools and scripts available for XMLTV data parsing. These tools help developers to parse, manipulate, and present XMLTV data in their MR applications.

TVHeadEnd

TVHeadEnd is an open-source TV streaming server and recorder that supports XMLTV data. It can parse and save XMLTV data into the EPG database, providing a crucial function for MR applications.

M3U

M3U is a simple text format for playlists. It does not hold schedule data itself, but an M3U playlist of channels can be used alongside XMLTV data, with each playlist entry matched to an XMLTV channel, to create a more structured and user-friendly EPG.
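To make the pairing concrete, here is a minimal sketch that reads an extended M3U playlist and maps each entry to a channel id. It assumes the playlist carries a tvg-id attribute on its #EXTINF lines, which is a convention many IPTV players use rather than part of M3U or XMLTV themselves; the sample playlist, URLs, and function name are invented.

```python
import re

# Hypothetical playlist, for illustration only.
SAMPLE_M3U = """\
#EXTM3U
#EXTINF:-1 tvg-id="news.example" tvg-name="News One",News One
http://example.com/streams/news
#EXTINF:-1 tvg-id="films.example",Film Channel
http://example.com/streams/films
"""

# Matches key="value" pairs such as tvg-id="news.example".
ATTR = re.compile(r'([\w-]+)="([^"]*)"')


def parse_m3u(text):
    """Return one dict per playlist entry with its attributes, name and url.

    Simplification: the display name is taken as everything after the first
    comma, so attribute values containing commas are not handled.
    """
    entries = []
    pending = None
    for line in text.splitlines():
        line = line.strip()
        if line.startswith("#EXTINF:"):
            header, _, name = line.partition(",")
            pending = dict(ATTR.findall(header))
            pending["name"] = name.strip()
        elif line and not line.startswith("#") and pending is not None:
            # The first non-comment line after #EXTINF is the stream URL.
            pending["url"] = line
            entries.append(pending)
            pending = None
    return entries


entries = parse_m3u(SAMPLE_M3U)
# Channel id -> stream URL, ready to be matched against XMLTV <channel id="...">.
stream_by_channel = {e["tvg-id"]: e["url"] for e in entries if "tvg-id" in e}
```

The resulting dictionary can be joined with the channel ids found in an XMLTV file to show schedule and stream together.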

XPath

XPath is a language that is used to navigate through elements and attributes in XML documents. It can be used to identify specific nodes or attributes in XMLTV documents that contain useful EPG information.
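As a small illustration, Python's xml.etree.ElementTree supports a limited subset of XPath that is enough to select programmes by channel. The sample document below is invented; the element and attribute names follow the XMLTV format.

```python
import xml.etree.ElementTree as ET

# Hypothetical XMLTV fragment, for illustration only.
SAMPLE_XMLTV = """\
<tv>
  <channel id="news.example"><display-name>News One</display-name></channel>
  <channel id="films.example"><display-name>Film Channel</display-name></channel>
  <programme start="20240101180000 +0000" stop="20240101183000 +0000" channel="news.example">
    <title>Evening News</title>
  </programme>
  <programme start="20240101183000 +0000" stop="20240101200000 +0000" channel="films.example">
    <title>Classic Movie</title>
  </programme>
  <programme start="20240101183000 +0000" stop="20240101190000 +0000" channel="news.example">
    <title>Weather</title>
  </programme>
</tv>
"""

root = ET.fromstring(SAMPLE_XMLTV)

# XPath-style query: titles of all programmes on one channel.
news_titles = [
    title.text
    for title in root.findall("./programme[@channel='news.example']/title")
]

# Map each channel id to its display name.
channel_names = {
    channel.get("id"): channel.findtext("display-name")
    for channel in root.findall("./channel")
}
```

Full XPath (for example, functions or axes beyond what ElementTree offers) would need a dedicated XML library.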

StereoKit

StereoKit is an open-source mixed reality library for building HoloLens, VR, and desktop experiences. It can be used to create cross-platform MR experiences with C# and OpenXR.

Building Interactive Mixed Reality Interfaces in XMLTV Data Parsing and Presentation

Building interactive MR interfaces using XMLTV data parsing and presentation involves several steps. These include setting up your development environment, parsing the XMLTV data, creating the MR interface, and testing the application.

Setting Up Your Development Environment

Before you can start developing your MR application, you need to set up your development environment. This typically involves installing the necessary software and hardware, such as an MR headset, a development IDE, and the necessary SDKs.

Parsing XMLTV Data

Once your development environment is set up, you can start parsing the XMLTV data. This involves extracting useful information from the XMLTV feed and saving it into your application's EPG database.
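One possible shape for this step, sketched with the standard library only: read the programme elements and store them in a SQLite table. The table name, its columns, and the function name are assumptions made for this example, not a schema any particular application requires.

```python
import sqlite3
import xml.etree.ElementTree as ET

# Hypothetical XMLTV fragment, for illustration only.
SAMPLE_XMLTV = """\
<tv>
  <programme start="20240101180000 +0000" stop="20240101183000 +0000" channel="news.example">
    <title>Evening News</title>
  </programme>
  <programme start="20240101183000 +0000" stop="20240101190000 +0000" channel="news.example">
    <title>Weather</title>
  </programme>
</tv>
"""


def store_programmes(conn, xmltv_text):
    """Insert every <programme> from the XMLTV text; return how many were stored."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS programme "
        "(channel TEXT, start TEXT, stop TEXT, title TEXT)"
    )
    root = ET.fromstring(xmltv_text)
    rows = [
        (p.get("channel"), p.get("start"), p.get("stop"), p.findtext("title", default=""))
        for p in root.iter("programme")
    ]
    with conn:  # commit on success, roll back on error
        conn.executemany("INSERT INTO programme VALUES (?, ?, ?, ?)", rows)
    return len(rows)


conn = sqlite3.connect(":memory:")  # in-memory database for the example
stored = store_programmes(conn, SAMPLE_XMLTV)
titles = [
    row[0]
    for row in conn.execute(
        "SELECT title FROM programme WHERE channel = ? ORDER BY start",
        ("news.example",),
    )
]
```

Keeping the timestamps as the original XMLTV strings is a simplification; an application that compares times across time zones would convert them first.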

Creating the MR Interface

After parsing the XMLTV data, you can start creating the MR interface. This involves designing and implementing the user interface elements, such as menus, controls, and displays.

Testing the Application

Once the MR interface is complete, you can start testing your application. This involves checking the functionality of the application and ensuring that the XMLTV data is correctly parsed and presented.

Developing mixed reality applications using XMLTV data involves a combination of data parsing, interface design, and application testing. By understanding the role of XMLTV data in MR development and utilizing the available open-source tools and scripts, you can create engaging and interactive MR applications. So, dive into the world of XMLTV and explore how it can enhance your MR development experience.


Text

Open-source Tools and Scripts for XMLTV Data

XMLTV is a popular format for storing TV listings. It is widely used by media centers, TV guide providers, and software applications to display program schedules. Open-source tools and scripts play a vital role in managing and manipulating XMLTV data, offering flexibility and customization options for users.

In this blog post, we will explore some of the prominent open-source tools and scripts available for working with XMLTV data.

What is XMLTV?

XMLTV is a set of software tools that helps to manage TV listings stored in the XML format. It provides a standard way to describe TV schedules, allowing for easy integration with various applications and services. XMLTV files contain information about program start times, end times, titles, descriptions, and other relevant metadata.
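To show what those fields look like in practice, here is a minimal sketch that reads one programme from an XMLTV fragment into a small Python structure. The sample data and the names Programme, parse_time, and parse_programmes are invented for the example, and it assumes every timestamp includes a UTC offset, which real files do not always do.

```python
from dataclasses import dataclass
from datetime import datetime
import xml.etree.ElementTree as ET

# Hypothetical XMLTV fragment, for illustration only.
SAMPLE_XMLTV = """\
<tv>
  <programme start="20240101180000 +0000" stop="20240101183000 +0000" channel="news.example">
    <title>Evening News</title>
    <desc>The day's headlines.</desc>
  </programme>
</tv>
"""


@dataclass
class Programme:
    channel: str
    title: str
    description: str
    start: datetime
    stop: datetime


def parse_time(value):
    """Parse an XMLTV timestamp such as '20240101180000 +0000'."""
    return datetime.strptime(value, "%Y%m%d%H%M%S %z")


def parse_programmes(xmltv_text):
    """Return a Programme for every <programme> element in the document."""
    root = ET.fromstring(xmltv_text)
    return [
        Programme(
            channel=p.get("channel"),
            title=p.findtext("title", default=""),
            description=p.findtext("desc", default=""),
            start=parse_time(p.get("start")),
            stop=parse_time(p.get("stop")),
        )
        for p in root.iter("programme")
    ]


programmes = parse_programmes(SAMPLE_XMLTV)
duration = programmes[0].stop - programmes[0].start
```

Converting the timestamps to datetime objects makes it straightforward to compute durations or compare against the current time.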

Open-source Tools and Scripts for XMLTV Data

1. EPG Best

EPG Best is an open-source project that provides a set of utilities to obtain, manipulate, and display TV listings. It includes tools for grabbing listings from various sources, customizing the data, and exporting it in different formats. EPG Best offers a flexible and extensible framework for managing XMLTV data.

2. TVHeadend

TVHeadend is an open-source TV streaming server and digital video recorder for Linux. It supports various TV tuner hardware and provides a web interface for managing TV listings. TVHeadend includes built-in support for importing and processing XMLTV data, making it a powerful tool for organizing and streaming TV content.

3. WebGrab+Plus

WebGrab+Plus is a popular, freely available tool for grabbing electronic program guide (EPG) data from websites and converting it into XMLTV format. It supports a wide range of sources and provides extensive customization options for configuring channel mappings and data extraction rules. WebGrab+Plus is widely used in conjunction with media center software and IPTV platforms.

4. XMLTV-Perl

XMLTV-Perl is a collection of Perl modules and scripts for processing XMLTV data. It provides a rich set of APIs for parsing, manipulating, and generating XMLTV files. XMLTV-Perl is particularly useful for developers and system administrators who need to work with XMLTV data in their Perl applications or scripts.

5. XMLTV GUI

XMLTV GUI is an open-source graphical user interface for configuring and managing XMLTV grabbers. It simplifies the process of setting up grabber configurations, scheduling updates, and viewing the retrieved TV listings.

XMLTV GUI is a user-friendly tool for users who prefer a visual interface for interacting with XMLTV data.

Open-source tools and scripts for XMLTV data offer a wealth of options for managing and utilizing TV listings in XML format. Whether you are a media enthusiast, a system administrator, or a developer, these tools provide the flexibility and customization needed to work with TV schedules effectively.

By leveraging open-source solutions, users can integrate XMLTV data into their applications, media centers, and services with ease.

Stay tuned with us for more insights into open-source technologies and their applications!

Step-by-Step XMLTV Configuration for Extended Reality

Extended reality (XR) has become an increasingly popular technology, encompassing virtual reality (VR), augmented reality (AR), and mixed reality (MR).

One of the key components of creating immersive XR experiences is the use of XMLTV data for integrating live TV listings and scheduling information into XR applications. In this blog post, we will provide a step-by-step guide to configuring XMLTV for extended reality applications.

What is XMLTV?

XMLTV is a set of utilities and libraries for managing TV listings stored in the XML format. It provides a standardized format for TV scheduling information, including program start times, end times, titles, descriptions, and more. This data can be used to populate electronic program guides (EPGs) and other TV-related applications.

Why Use XMLTV for XR?

Integrating XMLTV data into XR applications allows developers to create immersive experiences that incorporate live TV scheduling information. Whether it's displaying real-time TV listings within a virtual environment or overlaying TV show schedules onto the real world in AR, XMLTV can enrich XR experiences by providing users with up-to-date programming information.

Step-by-Step XMLTV Configuration for XR

Step 1: Obtain XMLTV Data

The first step in configuring XMLTV for XR is to obtain the XMLTV data source. There are several sources for XMLTV data, including commercial providers and open-source projects. Choose a reliable source that provides the TV listings and scheduling information relevant to your target audience and region.

Step 2: Install XMLTV Utilities

Once you have obtained the XMLTV data, you will need to install the XMLTV utilities on your development environment. XMLTV provides a set of command-line tools for processing and manipulating TV listings in XML format. These tools will be essential for parsing the XMLTV data and preparing it for integration into your XR application.

Step 3: Parse XMLTV Data

Use the XMLTV utilities to parse the XMLTV data and extract the relevant scheduling information that you want to display in your XR application. This may involve filtering the data based on specific channels, dates, or genres to tailor the TV listings to the needs of your XR experience.
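As a stand-in for the command-line utilities, the filtering described in this step can be sketched in Python. The sample data and the function filter_programmes are invented for the example, and the date filter simply compares the leading YYYYMMDD of each start timestamp as written, without any time zone conversion.

```python
import xml.etree.ElementTree as ET

# Hypothetical XMLTV fragment, for illustration only.
SAMPLE_XMLTV = """\
<tv>
  <programme start="20240101180000 +0000" stop="20240101183000 +0000" channel="news.example">
    <title>Evening News</title>
    <category>News</category>
  </programme>
  <programme start="20240101200000 +0000" stop="20240101220000 +0000" channel="films.example">
    <title>Classic Movie</title>
    <category>Film</category>
  </programme>
  <programme start="20240102180000 +0000" stop="20240102183000 +0000" channel="news.example">
    <title>Evening News (Tuesday)</title>
    <category>News</category>
  </programme>
</tv>
"""


def filter_programmes(root, channels=None, day=None, genre=None):
    """Return titles of programmes matching every filter that is not None.

    channels: collection of channel ids to keep
    day:      'YYYYMMDD' prefix the start timestamp must begin with
    genre:    category text to match, case-insensitively
    """
    selected = []
    for p in root.iter("programme"):
        if channels is not None and p.get("channel") not in channels:
            continue
        if day is not None and not p.get("start", "").startswith(day):
            continue
        if genre is not None:
            categories = {
                c.text.strip().lower() for c in p.findall("category") if c.text
            }
            if genre.lower() not in categories:
                continue
        selected.append(p.findtext("title", default=""))
    return selected


root = ET.fromstring(SAMPLE_XMLTV)
monday_news = filter_programmes(root, channels={"news.example"}, day="20240101")
films = filter_programmes(root, genre="film")
```

Each filter is optional, so the same function can narrow the listings by channel only, by date only, or by any combination the XR experience needs.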

Step 4: Integrate XMLTV Data into XR Application

With the parsed XMLTV data in hand, you can now integrate it into your XR application. Depending on the XR platform you are developing for (e.g., VR headsets, AR glasses), you will need to leverage the platform's development tools and APIs to display the TV listings within the XR environment.

Step 5: Update XMLTV Data

Finally, it's crucial to regularly update the XMLTV data in your XR application to ensure that the TV listings remain current and accurate. Set up a process for fetching and refreshing the XMLTV data at regular intervals to reflect any changes in the TV schedule.
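A refresh process like this usually needs two small decisions: when the data is old enough to fetch again, and which cached entries are already over. The sketch below shows one way to express both; the 12-hour interval, the dictionary layout, and the function names are arbitrary choices for illustration.

```python
from datetime import datetime, timedelta, timezone


def needs_refresh(last_fetched, now, max_age=timedelta(hours=12)):
    """True if the listings were never fetched or are at least max_age old."""
    return last_fetched is None or now - last_fetched >= max_age


def drop_finished(programmes, now):
    """Remove programmes that have already ended.

    Each programme is assumed to be a dict with a timezone-aware "stop" datetime.
    """
    return [p for p in programmes if p["stop"] > now]


# Hypothetical cached listings, for illustration only.
now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
cached = [
    {"title": "Morning Show", "stop": datetime(2024, 1, 2, 9, 0, tzinfo=timezone.utc)},
    {"title": "Evening News", "stop": datetime(2024, 1, 2, 18, 30, tzinfo=timezone.utc)},
]
still_relevant = drop_finished(cached, now)
stale = needs_refresh(now - timedelta(hours=13), now)
```

A scheduler (a cron job, a background task in the application, or similar) would call needs_refresh periodically and fetch new data only when it returns True.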

Incorporating XMLTV data into extended reality applications can significantly enhance the immersive and interactive nature of XR experiences. By following the step-by-step guide outlined in this blog post, developers can configure XMLTV for XR and create compelling applications that integrate live TV scheduling information.

Stay tuned for more XR development tips and tutorials!

Visit our xmltv information blog and discover how these advancements are shaping the IPTV landscape and what they mean for viewers and content creators alike. Get ready to understand the exciting innovations that are just around the corner.


Text

“Stocks closed higher today amid brisk trading…” On the radio, television, in print and online, news outlets regularly report trivial daily changes in stock market indices, providing a distinctly slanted perspective on what matters in the economy. Except when they shift suddenly and by a large margin, the daily vagaries of the market are not particularly informative about the overall health of the economy. They are certainly not an example of news most people can use. Only about a third of Americans own stock outside of their retirement accounts and about one in five engage in stock trading on a regular basis. And yet the stock market’s minor fluctuations make up a standard part of economic news coverage.

But what if journalists reported facts more attuned to the lives of everyday Americans? For instance, facts like “in one month, the richest 25,000 Americans saw their wealth grow by an average of nearly $10 million each, compared to just $200 apiece for the bottom 50% of households”? Thanks to innovative new research strategies from leading economists, we now have access to inequality data in much closer to real time. Reporters should be making use of it.

The outsized attention to the Dow Jones and Nasdaq is part of a larger issue: class bias in media coverage of the economy. A 2004 analysis of economic coverage in the Los Angeles Times found that journalists “depicted events and problems affecting corporations and investors instead of the general workforce.” While the media landscape has shifted since 2004, with labor becoming a “hot news beat,” this shift alone seems unlikely to correct the media’s bias. This is because, as an influential political science study found, biased reporting comes from the media’s focus on aggregates in a system where growth is not distributed equally; when most gains go to the rich, overall growth is a good indicator of how the wealthy are doing, but a poor indicator of how the non-rich are doing.

In other words, news is shaped by the data on hand. Stock prices are minute-by-minute information. Other economic data, especially about inequality, are less readily available. The Bureau of Labor Statistics releases data on job growth once a month, and that often requires major corrections. Data on inflation also become available on a monthly basis. Academic studies on inequality use data from the Census Bureau or the Internal Revenue Service, which means information is months or even years out of date before it reaches the public.

But the landscape of economic data is changing. Economists have developed new tools that can track inequality in near real-time:

From U.C. Berkeley, Realtime Inequality provides monthly statistics and even daily projections of income and wealth inequality, all with a fun interactive interface. You can see the latest data and also parse long-term trends. For instance, over the past 20 years, the top 0.01% of earners have seen their real income nearly double, while the bottom 50% of Americans have seen their real income decline.

The State of U.S. Wealth Inequality from the St. Louis Fed provides quarterly data on racial, generational, and educational wealth inequality. The Fed data reminds us, for example, that Black families own about 25 cents for every $1 of white family wealth.

While these sources do not update at the speed of a stock ticker, they represent a massive step forward in the access to more timely, accurate, and complete understanding of economic conditions.

Would more reporting on inequality change public attitudes? That is an open question. A few decades ago, political scientists found intriguing correlations between media coverage and voters’ economic assessments, but more recent analyses suggest that media coverage “does not systematically precede public perceptions of the economy.” Nonetheless, especially given the vast disparities in economic fortune that have developed in recent decades, it is the responsibility of reporters to present data that gives an accurate and informative picture of the economy as it is experienced by most people, not just by those at the top.

And these data matter for all kinds of political judgments, not just public perspectives on the economy. When Americans are considering the Supreme Court’s recent decision on affirmative action, for example, it is useful to know how persistent racial disparities remain in American society; white high school dropouts have a greater median net worth than Black and Hispanic college graduates. Generational, racial, and educational inequality structure the American economy. It’s past time that the media’s coverage reflects that reality, rather than waste Americans’ time on economic trivia of the day.


Text

New Android Malware SoumniBot Employs Innovative Obfuscation Tactics

Banking Trojan Targets Korean Users by Manipulating Android Manifest

A sophisticated new Android malware, dubbed SoumniBot, is making waves for its ingenious obfuscation techniques that exploit vulnerabilities in how Android apps interpret the crucial Android manifest file. Unlike typical malware droppers, SoumniBot's stealthy approach allows it to camouflage its malicious intent and evade detection.

Exploiting Android Manifest Weaknesses

According to researchers at Kaspersky, SoumniBot's evasion strategy revolves around manipulating the Android manifest, a core component within every Android application package. The malware developers have identified and exploited vulnerabilities in the manifest extraction and parsing procedure, enabling them to obscure the true nature of the malware.

SoumniBot employs several techniques to obfuscate its presence and thwart analysis, including:

- Invalid Compression Method Value: By manipulating the compression method value within the AndroidManifest.xml entry, SoumniBot tricks the parser into recognizing data as uncompressed, allowing the malware to evade detection during installation.

- Invalid Manifest Size: SoumniBot manipulates the size declaration of the AndroidManifest.xml entry, causing overlay within the unpacked manifest. This tactic enables the malware to bypass strict parsers without triggering errors.

- Long Namespace Names: Utilizing excessively long namespace strings within the manifest, SoumniBot renders the file unreadable for both humans and programs. The Android OS parser disregards these lengthy namespaces, facilitating the malware's stealthy operation.

Example of SoumniBot Long Namespace Names (Credits: Kaspersky)

SoumniBot's Malicious Functionality

Upon execution, SoumniBot requests configuration parameters from a hardcoded server, enabling it to function effectively. The malware then initiates a malicious service, conceals its icon to prevent removal, and begins uploading sensitive data from the victim's device to a designated server.

Researchers have also highlighted SoumniBot's capability to search for and exfiltrate digital certificates used by Korean banks for online banking services. This feature allows threat actors to exploit banking credentials and conduct fraudulent transactions.

Targeting Korean Banking Credentials

SoumniBot locates relevant files containing digital certificates issued by Korean banks to their clients for authentication and authorization purposes. It copies the directory containing these digital certificates into a ZIP archive, which is then transmitted to the attacker-controlled server.

Furthermore, SoumniBot subscribes to messages from a message queuing telemetry transport (MQTT) server, an essential command-and-control infrastructure component. MQTT facilitates lightweight, efficient messaging between devices, helping the malware seamlessly receive commands from remote attackers.

Some of SoumniBot's malicious commands include:

- Sending information about the infected device, including phone number, carrier, and Trojan version

- Transmitting the victim's SMS messages, contacts, accounts, photos, videos, and online banking digital certificates

- Deleting contacts on the victim's device

- Sending a list of installed apps

- Adding new contacts on the device

- Getting ringtone volume levels

With its innovative obfuscation tactics and capability to target Korean banking credentials, SoumniBot poses a significant threat to South Korean Android users.
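From the defender's side, the invalid compression method trick described above can be looked for with a simple check. An APK is a ZIP archive, and the two compression methods normally seen in one are 0 (stored) and 8 (deflate), so any other value on the manifest entry is worth a closer look. The sketch below is an illustration only, not the method Kaspersky used; the function names are invented, and it assumes Python's zipfile module can still open the archive.

```python
import io
import zipfile

# Compression methods normally seen in an APK: 0 (stored) and 8 (deflate).
KNOWN_METHODS = {zipfile.ZIP_STORED, zipfile.ZIP_DEFLATED}


def suspicious_entries(infos, names=("AndroidManifest.xml",)):
    """Return (filename, method) for watched entries with an unexpected method."""
    return [
        (info.filename, info.compress_type)
        for info in infos
        if info.filename in names and info.compress_type not in KNOWN_METHODS
    ]


def check_apk(path_or_file):
    """Open an APK (path or file object) and check its manifest entry."""
    with zipfile.ZipFile(path_or_file) as apk:
        return suspicious_entries(apk.infolist())


# A well-formed archive built in memory: nothing should be flagged.
clean = io.BytesIO()
with zipfile.ZipFile(clean, "w", zipfile.ZIP_DEFLATED) as archive:
    archive.writestr("AndroidManifest.xml", "<manifest/>")
clean.seek(0)
clean_result = check_apk(clean)

# A hand-built entry with a made-up method value, standing in for a tampered APK.
forged = zipfile.ZipInfo("AndroidManifest.xml")
forged.compress_type = 0x2314
forged_result = suspicious_entries([forged])
```

A check like this only covers the first of the three techniques; the invalid size and long namespace tricks would need the manifest itself to be parsed.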


Text

Fishbone #010

the human cost rolls downhill

5 source images, 9 layers.

I hate having to write this. I hate that this is a thing that happened to be written about.

In early 2024 a private virtual clinic providing medical care for a vulnerable and underserved patient demographic allegedly replaced 80% of its human staff with machine learning software.

As far as I can find this hasn't been reported on in the media so far and many of the details are currently not public record. I can't confirm how many staff were laid off, how many quit, how many remain, and how many of those are medics vs how many are admin. I can't confirm exact dates or software applications. This uncertainty about key details is why I'm not naming the clinic. I don't want to accidentally do a libel.

I'm not a journalist and, ancestors willing, researching this post is as close as I'll ever have to get. It's been extremely depressing. The patient testimonials are abundant and harrowing.

What I have been able to confirm is that the clinic has publicly announced they are "embracing AI," and their FAQs state that their "algorithms" assess patients' medical history, create personalised treatment plans, and make recommendations for therapies, tests, and medications. This made me scream out loud in horror.

Exploring the clinic's family of sites I found that they're using Zoho to manage appointment scheduling. I don't know what if any other applications they're using Zoho for, or whether they're using other software alongside it. Zoho provides office, collaboration, and customer relationship management products; things like scheduling, videocalls, document sharing, mail sorting, etc.

The clinic's recent Glassdoor reviews are appalling, and make reference to increased automation, layoffs, and hasty AI implementation.

The patient community have been reporting abnormally high rates of inadequate and inappropriate care since late February/early March, including:

Wrong or incomplete prescriptions

Inability to contact the clinic

Inability to cancel recurring payments

Appointments being cancelled

Staff simply failing to attend appointments

Delayed prescriptions

Wrong or incomplete treatment summaries

Unannounced dosage or medication changes

The clinic's FAQ suggests that this is a temporary disruption while the new automation workflows are implemented, and service should stabilise in a few months as the new workflows come online. Frankly I consider this an unacceptable attitude towards human lives and health. Existing stable workflows should not be abandoned until new ones are fully operational and stable. Ensuring consistent and appropriate care should be the highest priority at all times.

The push to introduce general-use machine learning into specialised areas of medicine is a deadly one. There are a small number of experimental machine learning models that may eventually have limited use in highly specific medical contexts; to my knowledge none are currently commercially available. No commercially available current generation general use machine learning model is suitable or safe for medical use, and it's almost certain none ever will be.

Machine learning simply doesn't have the capacity to parse the nuances of individual health needs. It doesn't have the capacity to understand anything, let alone the complexities of medical care. It amplifies bias and it "hallucinates" and current research indicates there's no way to avoid either. All it will take for patients to die is for a ML model to hallucinate an improper diagnosis or treatment that's rubber stamped by an overworked doctor.

Yet despite the fact that it is not and will never be fit for purpose, general use machine learning has been pushed fait accompli into the medical lives of real patients, in service to profit. Whether the clinic itself or the software developers or both, someone is profiting from this while already underserved and vulnerable patients are further neglected and endangered.

This is inevitable by design. Maximising profit necessitates inserting the product into as many use cases as possible irrespective of appropriateness. If not this underserved patient group, another underserved patient group would have been pressed, unconsenting, into unsupervised experiments in ML medicine--and may still. The fewer options and resources people have, the easier they are to coerce. You can do whatever you want to those who have no alternative but to endure it.

For profit to flow upwards, cost must flow downwards. This isn't an abstract numerical principle; it's a deadly material fact. Human beings, not abstractions, bear the cost of the AI bubble. The more marginalised and exploited the human beings, the more of the cost they bear. Overexploited nations bear the burden of mining, manufacture, and pollution for the physical infrastructure to exist, overexploited workers bear the burden of making machine learning function at all (all of which I will write more about another day), and now patients who don't have the option to refuse it bear the burden of its overuse. There have been others. There will be more. If the profit isn't flowing to you, the cost is--or it will soon.

It doesn't have to be like this. It's like this because humans made it this way, and we could change it. Indeed, we must if we are to survive.

3 notes


Note

What do you think SA2's flaws are? Not nitpicks, but valid criticisms?

Story and Characterization:

• It kind of doesn't make sense that Eggman's threats "fell on deaf ears." The game doesn't really tell us what that means, so we have to assume Shadow meant the negotiations between Eggman and the President didn't go quite as planned. But even that doesn't make sense because Eggman either ended the call first or had his call hijacked by Sonic and Tails. It's not like the President staunchly refused, you know?

• Sonic and Tails' attitude towards Amy is a little :L because they treat her like a tagalong, some burden they have to suffer rather than a member of the team. It gives off this uncomfortable "lol girls amirite" vibe.

• Sonic and Tails leaving Amy behind becomes something of a running gag. It gives off the impression they're just lugging her along and only lends Amy more reason to "whine," as she puts it.

• Amy's also a little bratty in this game. I kind of don't like how much she pouts. In addition, she becomes pretty passive despite having infiltrated Prison Island all on her own.

• Sonic's characterization is serviceable but his portrayal really doesn't do a whole lot with his character aside from the faker plot. (This is reflected in SA2B's manual describing Sonic's role rather than his character.) Not a criticism as much as an observation, per se; just pointing out that SA2!Sonic is probably not the most fleshed-out version of the character the series has to offer.

• It's probably a side-effect of a poor English translation, but SA2!Sonic comes off as a bit of a jerk sometimes. He frequently leaves Amy behind and jokes that he'd have to "think about" handing over the fake Emerald in exchange for her. In addition, the lyrics of "Deeper" imply Knuckles wishes Sonic would be more sympathetic to his plight.

• It would have been nice if the game confirmed that Maria had NIDs and that was the reason for Project Shadow's existence. As it stands, the reason why Gerald was working on it can be difficult to parse if you're judging from the game's contents alone.

• The fact that the Biolizard is kinda this "giant space flea from nowhere" plot development. And yes, I acknowledge that he was foreshadowed in Rouge's report, but that's too much of a blink-and-you'll-miss-it moment to really count imo.

• While I get that he was busy with other things, I think Eggman should have tried to keep a keener eye on Rouge. He seems a little too laissez-faire with letting her run around the colony and giving her access to the mainframe.

• I still don't know how Rouge expected to make off with the Emeralds considering they were ensconced in the Cannon. Or why Shadow simply left her there when he knows she wanted to take them.

• The time stamps can be a little confusing, and in one instance contradict the timeline of the narrative.

• We could have benefited from maybe one or two more Shadow and Maria flashback scenes in order to cement the nature of their relationship.

• I sometimes question the President's relationship to GUN - they seem to be acting independently of his orders, going batshit at that - and whether he was willing to let Rouge die in the course of her undercover work. Because GUN hadn't gotten the memo that she wasn't the enemy and sicced Flying Dog on her.

• Knuckles' story doesn't seem terribly connected to the overall narrative.

• Knuckles is made a buttmonkey a bit too often for comfort's sake, getting yelled at by multiple characters, shaken and insulted by Rouge, pulled on by Amy, called a "knucklehead" by Sonic, volunteered by Sonic to find the keys when he doesn't want to, and Sonic ofc saying Knuckles piloting the shuttle was more dangerous than Eggman could ever be.

• I think maybe we could have benefited from hearing a bit more of Eggman's thoughts on Gerald's motives and how they related to his own.

---

Gameplay and Graphics:

• The graphics are rough, unfortunately. Character models don't really look that good up close and the mocap can get pretty janky at times.

• I think, also, when comparing SA1 DC to SA2 DC, the framerate isn't as smooth. Scanlines become especially noticeable during the final Sonic vs. Shadow fight when the catwalk loads in.

• Kart racing sucks pretty bad; they probably could have cut it entirely, and it's annoying that you have to do it in order to earn 180 emblems. The computer's a cheating bastard and will make perfect 90-degree turns whereas you're forced to swerve all over the road like you're drunk.

• Raising Chao takes way too fucking long and it shouldn't be an emblem requirement. It's an interesting system but, again, shouldn't be a requirement for 100% completion.

• Overall I think the desert stages are SA2's weaker stages in terms of level design. It's not that they're terrible or anything, but they seem to lack the polish of other stages. Egg Quarters Hard Mode is especially annoying because it depends on the Kikis' wonky AI functioning correctly. Half the time they blow themselves up and ruin your A-rank.

• Sonic's Cannon's Core section feels rather short and too easy compared to the other segments. I would think in terms of gameplay it'd make more sense to make it the most difficult and tightly-paced.

---

Soundtrack:

• Not as diverse as SA1's, although I do like how the "Live and Learn" leitmotif is woven throughout many different tracks.

• Not sure why they decided to make some tracks on SA2 DC's sound test DLC-only.

4 notes


Text

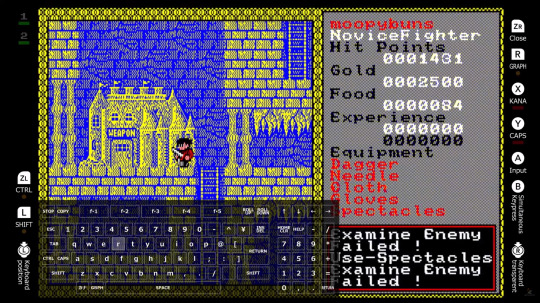

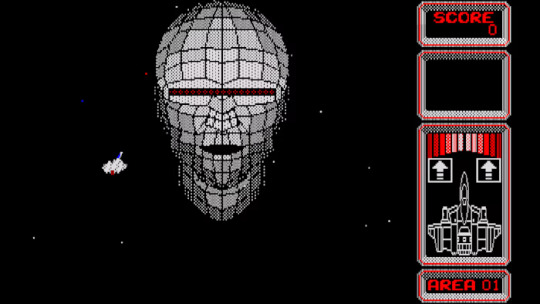

Go play EGGCONSOLE

The Switch has basically turned into a game historian's dream come true, with classic titles getting ported, remade, remastered and localized for the first time all over the place. From Hamster's weekly Arcade Archives, to M2's fantastic-as-always work with Sega Ages, to Nintendo's own library available via their online service, there's no shortage of great titles for anyone who wants to revisit their own childhood, or just dive deep to better understand the past.

What I definitely didn't expect was for classic Japanese computer games to start ending up on the console, much less in North America. For a long time, these games have kinda had a forbidden fruit appeal for Westerners, a last frontier of games that was difficult to navigate due to the language barrier, the aging hardware, Windows cannibalizing all other operating systems during the '90s and, of course, few of the games being exported over here. But it's gotten a lot better in recent years, mostly thanks to D4 Enterprise.

They started with Project EGG, essentially Japan's equivalent of GOG, and after a few other ventures on various platforms, they've decided to port their most classic titles to Switch under the label of EGGCONSOLE, including, as I mentioned earlier, on the Western eShop. This seems odd at first, but considering the vintage of these games, they don't have that much Japanese text to parse, and they often used joysticks and controllers back in the day, rather than keyboards, meaning they transfer to typical console controllers rather easily (you can still use an emulated keyboard in-game, if you wish).

As you can imagine, not all of the games have aged super gracefully; the action titles are straightforward enough, but the adventure games or RPGs with more complex systems and labyrinth-like levels are a bit too incomprehensible, though the releases have a lot of effort put into them to make sure you can see what they're all about, from level selects to savestates to rewinds.

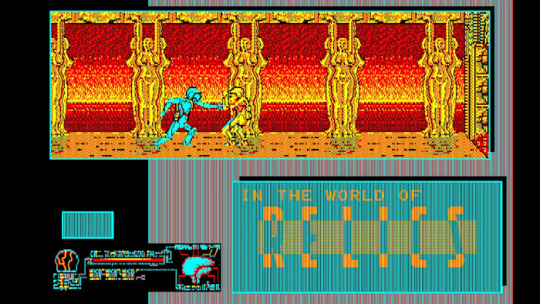

The three titles I've definitely put the most time into are Thexder, Silpheed, and Relics. Relics is definitely the most stymieing of the three, but it's so cool I keep trying to slog through it; it's got a body-stealing mechanic, which is always great, and a cool biomechanical aesthetic way ahead of its time.

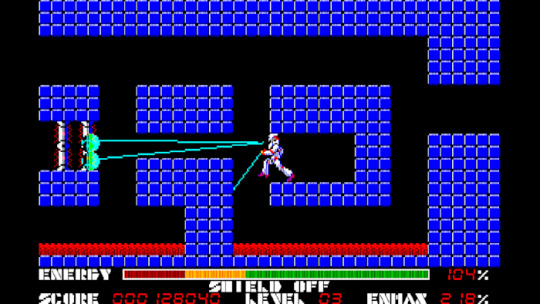

But the two titles that are the easiest to get into are sort of a duology that complement each other, as they're both by Game Arts and insanely impressive on a technical level: Thexder and Silpheed.

Silpheed is insane. I was aware of the Sega CD release before, but I didn't realize it was a sorta-sequel, sorta-remake of the computer original, and that it was basically Star Fox running on an 8-bit computer. I dunno how they got wireframes and polygons like this on the PC-88, but it's insane. The actual gameplay is decent, though nothing special compared to other shmups of the era, and especially those of today, but the level design is pretty good and the weapon loadout select is pretty great, adding a strategic wrinkle.

Thexder is a sidescrolling mecha-action title, and again, the technical prowess on display really steals the show. The animation on the robot as he lethargically strides forward, then turns into a plane blasting enemies down with an Itano Circus-type laser really makes the whole experience and feels really satisfying, even if the enemy layouts are a little too insane, at times. It's also nice to have a perfectly emulated version on a console since the Famicom version, developed by pre-Final Fantasy Square, was a bit of a disaster, and the PS3 remake (which included the original) is probably gonna be delisted soon.

It really does fill me with glee that these titles are so much more easily playable now; you can really see how some of them shaped and molded more accessible contemporaries (such as the streamlined RPGs on the Famicom) while having their own identity that would never really be replicated. I'm super excited to see what other titles they rerelease down the line, though I'd really like to see titles from other platforms, like the PC-98, MSX or X68000. Looking at the upcoming titles, they seem to be sticking to PC-88 for now, but there's always hope for the future. For how much we (kinda rightfully) complain about game companies not doing enough to preserve the past, you gotta pay due respect to the few that are, to serve as a blueprint of what we need more of.

2 notes
