#Scale Model Making Companies

Trusted Scale Model Company in Mumbai for Aerospace

In the fast-evolving aerospace industry, where innovation meets precision, scale models are crucial for presenting concepts, validating designs, and impressing stakeholders. If you are searching for a trusted scale model company in Mumbai, your search ends at Jagtap Miniature — a pioneer in creating aerospace models with unmatched craftsmanship and attention to detail.

The Role of Scale Models in Aerospace

Whether you're a defense contractor, aviation firm, or a research institution, scale models offer incredible value:

Visualization of aircraft designs and aerospace vehicles

Detailed internal cutaways for exhibitions and technical reviews

Prototypes for aerodynamics, wind tunnel testing, and concept validation

Displays for trade shows, museums, and investor meetings

These models serve as powerful tools that bridge imagination with reality, especially in a field as complex as aerospace.

Jagtap Miniature: A Leading Scale Model Company in India

At Jagtap Miniature, we specialize in crafting highly accurate aerospace models ranging from commercial aircraft to defense drones, satellites, rockets, and helicopters. Based in Mumbai, we’ve earned a reputation as a top-tier scale model company in India, thanks to our precision, innovation, and dedication to quality.

Why Choose Jagtap Miniature?

✅ Decades of Experience in miniature and scale model making

✅ Specialized in Aerospace Projects including ISRO, DRDO, private aviation firms

✅ Advanced Materials & Technology – 3D printing, laser cutting, CNC detailing

✅ Custom Solutions – From concept sketches to detailed CAD-based models

✅ On-Time Delivery with nationwide and international shipping

We understand the demands of the aerospace sector and deliver models that meet the highest standards of engineering and visual excellence.

Serving India and Beyond

As a recognized scale model company in India, we’ve had the privilege of working with prestigious aerospace organizations, educational institutions, and design studios across the country. Our models have been featured in exhibitions, defense expos, aviation summits, and investor presentations.

Whether you’re in Mumbai, Bangalore, Delhi, or abroad — Jagtap Miniature is your trusted partner for world-class scale models.

Get in Touch

Looking for a professional scale model company in India that delivers precision, creativity, and reliability? Connect with Jagtap Miniature — your trusted scale model company in Mumbai for aerospace and beyond.

📞 Visit our website: https://jagtapminiature.com/ 📩 Send your project requirements and get a free consultation today!

#Jagtap Miniature#scale model company in India#Miniature model making company#Custom Ship Model Makers

If anyone wants to know why every tech company in the world right now is clamoring for AI like drowned rats scrabbling to board a ship, I decided to make a post to explain what's happening.

(Disclaimer to start: I'm a software engineer who's been employed full time since 2018. I am neither a historian nor an overconfident YouTube essayist, so this post is my working knowledge of what I see around me and the logical bridges between pieces.)

Okay anyway. The explanation starts further back than what's going on now. I'm gonna start with the year 2000. The Dot Com Bubble just spectacularly burst. The model of "we get the users first, we learn how to profit off them later" went out in a no-money-having bang (remember this, it will be relevant later). A lot of money was lost. A lot of people ended up out of a job. A lot of startup companies went under. Investors left with a sour taste in their mouth and, in general, investment in the internet stayed pretty cooled for that decade. This was, in my opinion, very good for the internet as it was an era not suffocating under the grip of mega-corporation oligarchs and was, instead, filled with Club Penguin and I Can Haz Cheezburger websites.

Then around the 2010-2012 years, a few things happened. Interest rates got low, and then lower. Facebook got huge. The iPhone took off. And suddenly there was a huge new potential market of internet users and phone-havers, and the cheap money was available to start backing new tech startup companies trying to hop on this opportunity. Companies like Uber, Netflix, and Amazon either started in this time, or hit their ramp-up in these years by shifting focus to the internet and apps.

Now, every start-up tech company dreaming of being the next big thing has one thing in common: they need to start off by getting themselves massively in debt. Because before you can turn a profit you need to first spend money on employees and spend money on equipment and spend money on data centers and spend money on advertising and spend money on scale and and and

But also, everyone wants to be on the ship for The Next Big Thing that takes off to the moon.

So there is a mutual interest between new tech companies, and venture capitalists who are willing to invest $$$ into said new tech companies. Because if the venture capitalists can identify a prize pig and get in early, that money could come back to them 100-fold or 1,000-fold. In fact it hardly matters if they invest in 10 or 20 total bust projects along the way to find that unicorn.
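A rough way to see why the busts barely matter is to run the numbers. The sketch below is hypothetical arithmetic, not real fund data: it assumes equal stakes in every startup, assumes every bust returns exactly nothing, and uses the 100-fold figure from the paragraph above for the single winner. The function name `portfolio_multiple` is made up for illustration.

```python
def portfolio_multiple(stake: float, busts: int, winner_multiple: float) -> float:
    """Overall return multiple for equal stakes, where every bust goes to zero
    and exactly one winner pays out winner_multiple times its stake.
    (Hypothetical model for illustration only.)"""
    total_invested = stake * (busts + 1)       # the busts plus the one winner
    total_returned = stake * winner_multiple   # busts contribute nothing
    return total_returned / total_invested


for busts in (10, 20):
    multiple = portfolio_multiple(stake=1_000_000, busts=busts, winner_multiple=100)
    print(f"{busts} busts + 1 winner at 100x -> {multiple:.2f}x the money back")
```

Under these assumptions, even with 20 total busts the investor gets back nearly five times what they put in, which is the logic the paragraph describes.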

But also, becoming profitable takes time. And that might mean being in debt for a long long time before that rocket ship takes off to make everyone onboard a gazillionaire.

But luckily for tech startup bros and venture capitalists, being in debt in the 2010s was cheap, and it only got cheaper between 2010 and 2020. If people could secure loans for ~3% or 4% annual interest, well then a $100,000 loan only really costs $3,000 to $4,000 of interest a year to keep afloat. And if inflation is higher than that or at least similar, you're still beating the system.

So from 2010 through early 2022, times were good for tech companies. Startups could take off with massive growth, showing massive potential for something, and venture capitalists would throw infinite money at them in the hopes of pegging just one winner who will take off. And supporting the struggling investments or the long-haulers remained pretty cheap to keep funding.

You hear constantly about "Such and such app has 10-bazillion users gained over the last 10 years and has never once been profitable", yet the thing keeps chugging along because the investors backing it aren't stressed about the immediate future, and are still banking on that "eventually" when it learns how to really monetize its users and turn that profit.

The pandemic in 2020 had a magnifying-glass-in-the-sun effect on this, as EVERYTHING was forcibly turned online, which pumped a ton of money and workers into tech investment. Simultaneously, money got really REALLY cheap, bottoming out at historic lows for interest rates.

Then the tide changed with the massive inflation that struck in late 2021, because this all-gas, no-brakes state of things was also contributing to off-the-rails inflation (along with your standard-fare greedflation and price gouging, given the extremely convenient excuses of pandemic hardships and supply chain issues). The Federal Reserve whipped out interest rate hikes to try to curb this huge inflation, which is like a fire extinguisher dousing and suffocating your really-cool, actively-on-fire party where everyone else is burning but you're in the pool. And then they did this more, and then more. And the financial climate followed suit. And suddenly money was not cheap anymore and new loans became expensive, because loans that used to compound at 2% a year were now compounding at 7 or 8%, which, in the language of compounding, is a HUGE difference. A $100,000 loan at 2% interest, with not a single cent repaid in 10 years, accrues to $121,899. The same $100,000 loan at 8% interest, with not a single cent repaid in 10 years, more than doubles to $215,892.
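The loan figures above can be reproduced with the standard compound-interest formula, balance = principal × (1 + rate)^years. This is a minimal sketch that assumes interest compounds once a year with no repayments; the post doesn't say how often the loan compounds, but annual compounding matches its numbers. The function name `compound_balance` is just an illustrative choice.

```python
def compound_balance(principal: float, annual_rate: float, years: int) -> float:
    """Balance owed after `years` with no repayments, compounding once per year."""
    return principal * (1 + annual_rate) ** years


for rate in (0.02, 0.08):
    balance = compound_balance(100_000, rate, 10)
    print(f"{rate:.0%} for 10 years: ${balance:,.2f}")
```

This prints balances of roughly $121,899 and $215,892, matching the post. The whole gap comes from the exponent: each year's interest is charged on all the interest from previous years too, so a few extra percentage points snowball over a decade.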

Now it is scary and risky to throw money at "could eventually be profitable" tech companies. Now investors are watching companies burn through their current funding and, when the companies come back asking for more, investors are tightening their coin purses instead. The bill is coming due. The free money is drying up and companies are under compounding pressure to produce a profit for their waiting investors who are now done waiting.

You get enshittification. You get quality going down and price going up. You get "now that you're a captive audience here, we're forcing ads or we're forcing subscriptions on you." Don't get me wrong, the plan was ALWAYS to monetize the users. It's just that it's come earlier than expected, with way more feet-to-the-fire than these companies were expecting. ESPECIALLY with Wall Street as the other factor in funding (public) companies, where Wall Street exhibits roughly the same temperament as a baby screaming crying upset that it's soiled its own diaper (maybe that's too mean a comparison to babies), and now companies are being put through the wringer for anything LESS than infinite growth that Wall Street demands of them.

Internal to the tech industry, you get MASSIVE wide-spread layoffs. You get an industry that used to be easy to land multiple job offers shriveling up and leaving recent graduates in a desperately awful situation where no company is hiring and the market is flooded with laid-off workers trying to get back on their feet.

Because those coin-purse-clutching investors DO love virtue-signaling efforts from companies that say "See! We're not being frivolous with your money! We only spend on the essentials." And this is true even for MASSIVE, PROFITABLE companies, because those companies' value is based on the Rich Person Feeling Graph (their stock) rather than the literal profit money. A company making a genuine gazillion dollars a year still tears through layoffs and freezes hiring and removes the free batteries from the printer room (totally not speaking from experience, surely) because the investors LOVE when you cut costs and take away employee perks. The "beer on tap, ping pong table in the common area" era of tech is drying up. And we're still unionless.

Never mind that last part.

And then in early 2023, AI (more specifically, ChatGPT, which is OpenAI's Large Language Model creation) tears its way into the tech scene with a meteor's amount of momentum. Here's Microsoft's prize pig, which it invested heavily in and is gallivanting around the pig-show with, to the desperate jealousy and rapture of every other tech company and investor wishing it had that pig. And for the first time since the interest rate hikes, investors have dollar signs in their eyes, venture capital and Wall Street alike. They're willing to restart the hose of money (even with the new risk) because this feels big enough to be worth the risk.

Now all these companies, who were in varying stages of sweating as their bill came due, or wringing their hands as their stock prices tanked, see a single glorious gold-plated rocket up out of here, the likes of which haven't been seen since the free money days. It's their ticket to buy time, and buy investors, and say "see THIS is what will wring money forth, finally, we promise, just let us show you."

To be clear, AI is NOT profitable yet. It's a money-sink. Perhaps a money-black-hole. But everyone in the space is so wowed by it that there is a wide-spread and powerful conviction that it will become profitable and earn its keep. (Let's be real, half of that profit "potential" is the promise of automating away jobs of pesky employees who peskily cost money.) It's a tech-space industrial revolution that will automate away skilled jobs, and getting in on the ground floor is the absolute best thing you can do to get your pie slice's worth.

It's the thing that will win investors back. It's the thing that will get the investment money coming in again (or get it second-hand, if the company can be the PROVIDER of something needed for AI, which other venture-backed companies will pay handsomely for). It's the thing companies are terrified of missing out on, lest it leave them utterly irrelevant in a future where not having AI integration is like not having a mobile phone app for your company or not having a website.

So I guess to reiterate on my earlier point:

Drowned rats. Swimming to the one ship in sight.

genuinely curious but I don't know how to phrase this in a way that sounds less accusatory so please know I'm asking in good faith and am just bad at words

what are your thoughts on the environmental impact of generative ai? do you think the cost of all the cooling systems is worth the tasks generative ai performs? I've been wrangling with this because while I feel like I can justify it at smaller scales, that would mean it isn't a publicly available tool, which I also feel uncomfortable with

the environmental impacts of genAI are almost always presented in one of three ways, by detractors and boosters alike:

vastly overstated

stated correctly, but with a deceptive lack of context (i.e., giving numbers in watt-hours, or the amount of water 'used' for cooling, without necessary context like what comparable services use or what actually happens to that water)

assumed to be on track to grow constantly as genAI sees universal adoption across every industry

like, when water is used to cool a datacenter, that datacenter isn't just "a big building running chatgpt" -- datacenters are the backbone of the modern internet. now, i mean, all that said, the basic question here: no, i don't think it's a good tradeoff to be burning fossil fuels to power the magic 8ball. but asking that question in a vacuum (imo) elides a lot of the realities of power consumption in the global north by exceptionalizing genAI as opposed to, for example, video streaming, or online games. or, for that matter, for any number of other things.

so to me a lot of this stuff seems like very selective outrage in most cases, people working backwards from all the twitter artists on their dashboard hating midjourney to find an ethical reason why it is irredeemably evil.

& in the best, good-faith cases, it's taking at face value the claims of genAI companies and datacenter owners that the power usage will continue spiralling as the technology is integrated into every aspect of our lives. but to be blunt, i think it's a little naive to take these estimates seriously: these companies rely on their stock prices remaining high and attractive to investors, so they have enormous financial incentives not only to lie but to make financial decisions as if the universal adoption boom is just around the corner at all times. but there's no actual business plan! these companies are burning gigantic piles of money every day, because this is a bubble

so tldr: i don't think most things fossil fuels are burned for are 'worth it', but the response to that is a comprehensive climate politics and not an individualistic 'carbon footprint' approach, certainly not one that chooses chatgpt as its battleground. genAI uses a lot of power but at a rate currently comparable to other massively popular digital leisure products like fortnite or netflix -- forecasts of it massively increasing by several orders of magnitude are in my opinion unfounded and can mostly be traced back to people who have a direct financial stake in this being the case because their business model is an obvious boondoggle otherwise.

Green energy is in its heyday.

Renewable energy sources now account for 22% of the nation’s electricity, and solar has skyrocketed eight times over in the last decade. This spring in California, wind, water, and solar power energy sources exceeded expectations, accounting for an average of 61.5 percent of the state's electricity demand across 52 days.

But green energy has a lithium problem. Lithium batteries control more than 90% of the global grid battery storage market.

That’s not just cell phones, laptops, electric toothbrushes, and tools. Scooters, e-bikes, hybrids, and electric vehicles all rely on rechargeable lithium batteries to get going.

Fortunately, this past week, Natron Energy launched its first-ever commercial-scale production of sodium-ion batteries in the U.S.

“Sodium-ion batteries offer a unique alternative to lithium-ion, with higher power, faster recharge, longer lifecycle and a completely safe and stable chemistry,” said Colin Wessells — Natron Founder and Co-CEO — at the kick-off event in Michigan.

The new sodium-ion batteries charge and discharge at rates 10 times faster than lithium-ion, with an estimated lifespan of 50,000 cycles.

Wessells said that using sodium as a primary mineral alternative eliminates industry-wide issues of worker negligence, geopolitical disruption, and the “questionable environmental impacts” inextricably linked to lithium mining.

“The electrification of our economy is dependent on the development and production of new, innovative energy storage solutions,” Wessells said.

Why are sodium batteries a better alternative to lithium?

The birth and death cycle of lithium is shadowed in environmental destruction. The process of extracting lithium pollutes the water, air, and soil, and when it’s eventually discarded, the flammable batteries are prone to bursting into flames and burning out in landfills.

There’s also a human cost. Lithium-ion materials like cobalt and nickel are not only harder to source and procure, but their supply chains are also overwhelmingly associated with hazardous working conditions and child labor law violations.

Sodium, on the other hand, is estimated to be 1,000 times more abundant in the earth’s crust than lithium.

“Unlike lithium, sodium can be produced from an abundant material: salt,” engineer Casey Crownhart wrote in the MIT Technology Review. “Because the raw ingredients are cheap and widely available, there’s potential for sodium-ion batteries to be significantly less expensive than their lithium-ion counterparts if more companies start making more of them.”

What will these batteries be used for?

Right now, Natron has its focus set on AI models and data storage centers, which consume hefty amounts of energy. In 2023, the MIT Technology Review reported that training one AI model can emit more than 626,000 pounds of carbon dioxide equivalent.

“We expect our battery solutions will be used to power the explosive growth in data centers used for Artificial Intelligence,” said Wendell Brooks, co-CEO of Natron.

“With the start of commercial-scale production here in Michigan, we are well-positioned to capitalize on the growing demand for efficient, safe, and reliable battery energy storage.”

The fast-charging energy alternative also has limitless potential on a consumer level, and Natron is eying telecommunications and EV fast-charging once it begins servicing AI data storage centers in June.

On a larger scale, sodium-ion batteries could radically change the manufacturing and production sectors — from housing energy to lower electricity costs in warehouses, to charging backup stations and powering electric vehicles, trucks, forklifts, and so on.

“I founded Natron because we saw climate change as the defining problem of our time,” Wessells said. “We believe batteries have a role to play.”

-via GoodGoodGood, May 3, 2024

--

Note: I wanted to make sure this was legit (scientifically and in general), and I'm happy to report that it really is!

#batteries#lithium#lithium ion batteries#lithium battery#sodium#clean energy#energy storage#electrochemistry#lithium mining#pollution#human rights#displacement#forced labor#child labor#mining#good news#hope

Architecture Scale Model at Scalemodel3dprinting

As expert scale model builders, we deliver exceptional quality while meeting the tightest deadlines. With extensive experience in the industry, our workshop is one of the largest globally. We provide tailored solutions, ensuring each project receives individual attention. Our global shipping expertise sets the standard for efficiency and reliability, making us a go-to scale model company for projects worldwide.

#Architecture Scale Model#Scale Model#Scale Model Making#Scale Model Company#Scale Model Assembly#Scale Model Malaysia#Scale Model Builder#Professional Model Building Services

What makes a Mech a Mech?

Now you might think it's the shape: Humanoid, bipedal, articulated limbs. And once upon a time that might have been the case. These days those machines are a lot more diverse though, come in all sorts of shapes and sizes; you got quadrupeds, winged mechs, hell sometimes ones that don't got any arms or legs at all.

No, what makes a Mech a Mech, is the Neural Link.

Mechs are unique in the way that their pilots get wired into them. They plug their brain into a machine and they become that machine.

Y'see that's why so many of the early models were so standardized, modeled after our own anatomy and musculature. Back when the tech was first being developed, the test pool was pretty limited. All military types, foot soldiers and the like. Those folks tend to have something of a limited imagination, creativity and individuality gets beaten out of 'em until they conform to the template of what the military wants 'em to be.

Which means they aren't all that great at imaginin' their body as anythin' other than what it is.

So all those early prototypes had to conform to that. If they wanted a pilot to have a decent enough Link Aptitude, they needed Mechs that the pilots could see themselves as. Folks were already used to havin' two arms and two legs, replacin' 'em with metal instead of flesh was a short enough leap that those folks could handle it.

But y'see then they started expandin' the applicant pool; researchers and developers moved outside the military in search of folks with higher Link Aptitude. And they found that humanity is a lot more diverse than that template the military beats into its soldiers. Turns out folks can be a lot more creative with their body map. Not everybody fits into that standardized definition of what humanity is.

They were lookin' in the completely wrong place with the military, turns out. Conformity is all well and good when you're trynna rush somethin' off the assembly line, but when you're trynna really push the limits of what's possible? Well you gotta get a bit more creative with it.

That's why you don't usually see the jugheads piloting mechs anymore. They ain't as good with all the fanciness companies are packin' into them these days. Now y'know who is good with all of that? Queer folks. Transgender folks especially. Turns out growin' up in the wrong body and learnin' to deal with that makes you real good at dissociatin' and messin' with your body map. Makes it a lot easier to trick your brain into thinkin' some weird part of this metal colossus is actually part of your body now.

Once they sorted that out, synchronicity rates skyrocketed. Led to a lot of other good things too. Y'see suddenly Queer and Trans folks were prime candidates for bein' pilots, corpos needed 'em. Which meant they had to make it safe enough for folks to be those things, or at least enough to admit it to the recruiters. Kinda funny thinkin' back, that that was what tipped the scales, but I suppose you can always trust corpos to do what corpos do.

But anyway, that's why so many Mechs are custom made to their pilots nowadays. That's why they craft the IMPs alongside the pilots through basic training. You gotta build a system that'll fit the pilot's body map, and ideally one that'll make the most of it.

If that pilot's more comfortable with a tail? Give that Mech a tail. Digitigrade legs? Quadrupedal? Fuck it, if it works for the pilot, throw that shit on there. Y'see ultimately, through the Neural Link, all you gotta be able to do is trick your brain into thinkin' that Mech is your body, and then it's off to the races.

And that moment, when your mind slips into that metal monstrosity and suddenly you feel more at home than you ever did in your own flesh and blood? That's what pilots live and die for. That's how you know the engineers did a good job.

And that's what makes a Mech a Mech.

#mechposting#mechs#mech pilots#mecha#Neural Link#Queer#Trans#cybernetic dreams#something something queer people have inherent value#for their creativity and individuality#writing#short story#microfiction

god. sometimes the insane interconnectedness of human life just hits you.

so I was looking at the Wikipedia page of Joan Trumpauer Mulholland, a white woman who was a Freedom Rider during the Civil Rights Movement. you may have seen a post about her before, and I recommend reading up on her; she led a very brave life and I think she is up there with John Brown in terms of role models for white people in America who put in the work to resist white supremacy. And she's still alive! She's 83 right now.

So I'm reading about her life and get to the subheading Michael Schwerner, which discusses how she gave "an 'orientation' on what you need to know about being a white activist in the state of Mississippi" to the Jewish activist and his wife Rita. I had never heard of him, or if I had I'd forgotten him. He was murdered alongside two others (James Chaney, who was Black, and Andrew Goodman, who was also a white Jew) by the KKK in 1964. I also recommend reading up on this.

So I naturally want to know more about Michael Schwerner and these murders, because you can never know enough about injustice and resistance. So I go to his Wikipedia page, and find this at the bottom of his "Early Life" section:

"As a boy, Schwerner befriended Robert Reich, who later became U.S. Secretary of Labor. Schwerner helped protect Reich, who was smaller, from bullies."

Robert Reich actually talked about Michael on his Substack two years ago (here) and how the news of the murder of the boy who defended him from bullies opened his eyes to bullying on larger levels: "Before then, I understood bullying as a few kids picking on me for being short — making me feel bad about myself. After I learned what happened to Mickey, I began to see bullying on a larger scale, all around me. In Black people bullied by whites. In workers bullied on the job. In girls and women bullied by men. In the disabled or gay or poor or sick or immigrant bullied by employers, landlords, politicians, insurance companies. I saw the powerful and the powerless, the exploiters and the exploited. It seemed as if the world had changed, but I had changed. I had a different understanding of the meaning of justice. It became as personal to me as were the bullies who called me names and threatened me in school and on the playground — but larger, more encompassing, and more urgent."

I mostly wanted to share this because it was a surprise to see the father of Tumblr's most beloved nepo baby Sam Reich being brought up while reading about the resistance to white supremacist violence. But on a deeper level, isn't it just incredible how life works. How everything touches each other somehow. Last night I watched a show hosted by the son of the former U.S. Secretary of Labor, who was protected from bullies as a child by a boy who would grow up to become a Civil Rights Activist, whose eyes were opened to systemic injustice by the violent murder of that boy, give his friend and employee One Hundred Thousand Dollars because he's been grieving and struggling and he wanted to do something nice for him. And that same employee also previously donated $3,000 won on the same show to a local nonprofit that helps domestic abuse survivors, in honor of his mother who advocated for that nonprofit, and him doing that publicly encouraged others to donate $8,000 over the next two weeks, money that came while they were experiencing budget cuts, which they used to feed people in need and provide social services, people who may never know any part of that story.

There are so many people that have lived lives we don't remember. But just living and being around other people leaves an impact even if it's not recorded. We are all a part of each other's lives, and we survive through each other just as much as ourselves.

Many billionaires and tech bros warn about the dangers of AI. It's pretty obviously not because of any legitimate concern that AI will take over. So why do they keep saying stuff like this? Why do we still have this fear of some kind of singularity-style event that leads to a machine takeover?

The possibility of a self-sufficient AI taking over in our lifetimes is... basically nothing, if I'm being honest. I'm not an expert by any means, but I've used AI-powered tools in my biology research, and I'm somewhat familiar with both the limits and the possibilities of what current models have to offer.

I'm starting to think that the reason billionaires in particular try to prop this fear up is that it distracts from the actual danger of AI: the fact that billionaires and tech mega corporations have access to data, processing power, and proprietary algorithms to manipulate information en masse and control the flow of human behavior. To an extent, AI models are a black box. But the companies making them still have control over what inputs they receive for training and analysis, what kind of outputs they generate, and what they have access to. They're still code. Just some of the logic is built on statistics from large datasets instead of being manually coded.

The more billionaires make AI fear seem like a science fiction concept related to consciousness, the more they can absolve themselves of all this in the eyes of the public. The sheer scale of the large model statistics they're using, as well as the scope of surveillance that led to this point, are plain to see, and I think that the companies responsible are trying to play a big distraction game.

Hell, we can see this in the very use of the term artificial intelligence. Obviously, what we call artificial intelligence is nothing like science fiction style AI. Terms like large statistics, large models, and hell, even just machine learning are far less hyperbolic about what these models are actually doing.

I don't know if your average middle-class tech bro is consciously perpetuating this same thing, but I think the reason it's such an attractive idea for them is that it subtly inflates their ego. By treating AI as a mystical act of creation, as trending towards sapience or consciousness, as the infant form of something grand, they get to feel more important about their role in the course of society. Admitting the actual use and the actual power of current artificial intelligence means admitting to themselves that they have been a tool of mega corporations and billionaires, and that they are not actually a major player in human evolution. None of us are, but it's tech bro arrogance that insists they must be.

Do most tech bros think this way? Not really. Most are just complicit neolibs who don't think too hard about the consequences of their actions. But for the subset that do actually think this way, this arrogance is pretty core to their thinking.

Obviously this isn't really something I can prove, this is just my suspicion from interacting with a fair number of techbros and people outside of CS alike.

A summary of the Chinese AI situation, for the uninitiated.

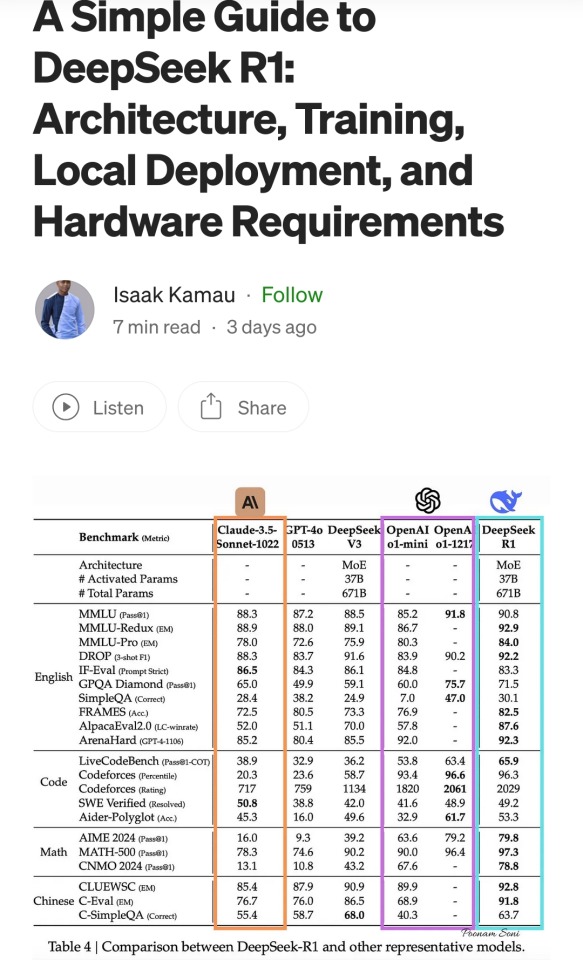

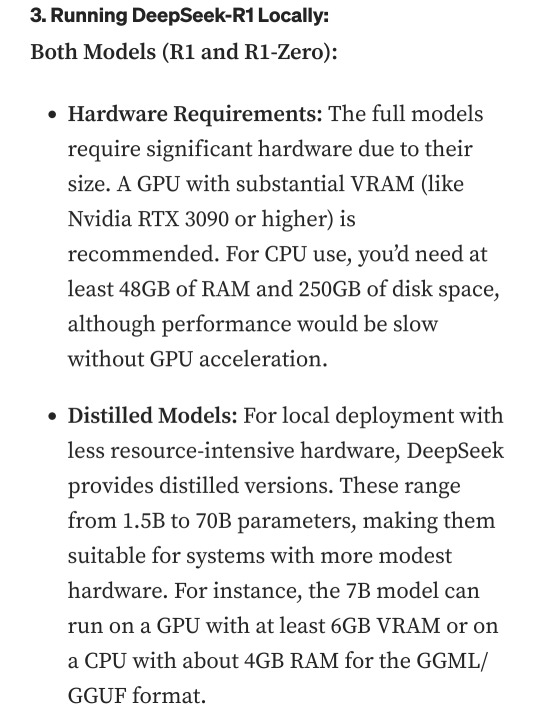

These are scores on different tests that are designed to see how accurate a Large Language Model is in different areas of knowledge. As you know, OpenAI is partnered with Microsoft, so these are the scores for ChatGPT and Copilot. DeepSeek is the Chinese model that got released a week ago. The rest are open source models, which means everyone is free to use them as they please, including the average Tumblr user. You can run them from the servers of the companies that made them for a subscription, or you can download them to install locally on your own computer. However, the hardware requirements so far are so high that only a few people currently have the machines at home required to run them.

Yes, this is why AI uses so much electricity. As with any technology, the early models are highly inefficient. Think how a Ford Model T needed a long chimney to get rid of a ton of black smoke, which was unburnt petrol. Over the following hundred years, combustion engines became much more efficient, but they still waste a lot of energy, which is why we need to move towards renewable electricity and sustainable battery technology. But that's a topic for another day.

As you can see from the scores, they're all around the same accuracy. These tests are constantly evolving as well: as soon as they start becoming obsolete, new ones are released to provide a harder benchmark. The new models are trained using different machine learning techniques, and in theory, the goal is to make them faster and more efficient so they can operate with less power, much like modern cars use way less energy and produce far less pollution than the Model T.

However, computing power requirements kept scaling up, so you're either tied to the subscription or forced to pay for a latest-gen PC, which is why NVIDIA, AMD, Intel and all the other chip companies were investing hard in much more powerful GPUs and NPUs. For now all we need to know about those is that they're expensive, use a lot of electricity, and are required to operate the bots at superhuman speed (literally, all those clickbait posts about how AI was secretly 150 Indian men in a trenchcoat were nonsense).

Because the chip companies have been working hard on making big, bulky, powerful chips with massive fans that are up to the task, their stock value was skyrocketing, and because of that, everyone started to use AI as a marketing trend. See, marketing people are not smart, and they don't understand computers. Furthermore, marketing people think you're stupid, and because of their biased frame of reference, they think you're two snores short of brain-dead. The entire point of their existence is to turn tall tales into capital. So they don't know or care about what AI is or what it's useful for. They just saw Number Go Up for the AI companies and decided "AI is a magic cow we can milk forever". Sometimes it's not even AI, they just use old software and rebrand it, much like convection ovens became air fryers.

Well, now we're up to date. So what did DeepSeek release that did a 9/11 on NVIDIA stock prices and popped the AI bubble?

Oh, I would not want to be an OpenAI investor right now either. A token is basically a small chunk of text, often a piece of a word a few characters long (it's more complicated than that, but you can google that on your own time). That cost means you could input the entire works of Stephen King for under a dollar. Yes, including electricity costs. DeepSeek has jumped from a Model T to a Subaru in terms of pollution and water use.
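A rough way to sanity-check a claim like "the entire works of Stephen King for under a dollar" is to multiply the word count by tokens per word and by the price per token. This is only an illustrative sketch: the word count and the price below are hypothetical placeholders, not DeepSeek's published figures, and the 4/3 tokens-per-word ratio is a common rule of thumb for English text rather than an exact value for any particular tokenizer.

```python
def input_cost_usd(words: float, tokens_per_word: float, usd_per_million: float) -> float:
    """Estimated cost of sending `words` words of text to a model as input."""
    tokens = words * tokens_per_word
    return tokens / 1_000_000 * usd_per_million


def max_price_for_budget(budget_usd: float, words: float, tokens_per_word: float) -> float:
    """Highest price per million input tokens that keeps the whole text within budget."""
    millions_of_tokens = words * tokens_per_word / 1_000_000
    return budget_usd / millions_of_tokens


# Hypothetical placeholders, not sourced figures:
WORDS = 10_000_000        # assumed size of a very large author's complete works
TOKENS_PER_WORD = 4 / 3   # rule of thumb: about 3/4 of an English word per token
PRICE = 0.05              # assumed USD per million input tokens

print(f"estimated cost: ${input_cost_usd(WORDS, TOKENS_PER_WORD, PRICE):.2f}")
print(f"price ceiling for a $1 budget: "
      f"${max_price_for_budget(1.0, WORDS, TOKENS_PER_WORD):.3f} per million tokens")
```

Swap in a real word count and a current price list to check the claim properly; the second function answers the more useful question of how cheap tokens have to be for the "under a dollar" line to hold.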

The issue here is not only input cost, though; all that data needs to be available live, in the RAM; this is why you need powerful, expensive chips in order to-

Holy shit.

I'm not going to detail all the numbers but I'm going to focus on the chip required: an RTX 3090. This is a gaming GPU that came out as the top of the line, the stuff South Korean LoL players buy…

Or they did, in September 2020. We're currently two generations ahead, on the RTX 5090.
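To see why an older 24 GB card like the RTX 3090 can be enough, a common back-of-the-envelope estimate is that a model's weights take (parameter count × bits per weight ÷ 8) bytes. The sketch below assumes that formula and some hypothetical model sizes; it counts weights only, so real memory use is somewhat higher once the context cache and runtime overhead are added.

```python
RTX_3090_VRAM_GB = 24  # the RTX 3090 has 24 GB of VRAM


def weights_gb(params_billion: float, bits_per_weight: int) -> float:
    """Memory needed just to store the weights, in gigabytes (1 GB = 1e9 bytes)."""
    bytes_total = params_billion * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9


# Hypothetical (parameter count in billions, bits per weight) combinations.
for params, bits in [(7, 16), (32, 16), (32, 4), (70, 4)]:
    need = weights_gb(params, bits)
    verdict = "fits" if need < RTX_3090_VRAM_GB else "does not fit"
    print(f"{params}B @ {bits}-bit: {need:.1f} GB of weights, {verdict} in {RTX_3090_VRAM_GB} GB")
```

Storing each weight in fewer bits (quantization) is what lets a mid-sized model squeeze onto a gaming card that could not hold the same model at 16-bit precision.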

What this tells all those people who just sold their high-end gaming rigs to afford a machine that can run a ChatGPT-class model locally is that the person who bought their old rig can run something basically just as powerful on it.

Which means that all those GPUs and NPUs that are being made, and all those deals Microsoft signed to have control of the AI market, have just lost a lot of their pulling power.

Well, I mean, the ChatGPT subscription is 20 bucks a month, surely the Chinese are charging a fortune for-

Oh. So it's free for everyone and you can use it or modify it however you want, no subscription, no unpayable electric bill, no handing Microsoft all of your private data, you can just run it on a relatively inexpensive PC. You could probably even run it on a phone in a couple years.

Oh, if only China had massive phone manufacturers that have a foot in the market everywhere except the US because the president had a tantrum eight years ago.

So… yeah, China just destabilised the global economy with a torrent file.

#valid ai criticism#ai#llms#DeepSeek#ai bubble#ChatGPT#google gemini#claude ai#this is gonna be the dotcom bubble again#hope you don't have stock on anything tech related#computer literacy#tech literacy

435 notes

Text

Need to rant for a minute because even though I have very much been enjoying the fruits of my efforts learning how to sew vintage style clothes, I just swapped out old fatphobia (nice plus size vintage clothes never making it to stores) for new fatphobia (trying to find patterns). Cause it doesn't end at what clothes you're able to buy already made.

I finally bought a Friday Pattern Company pattern the other day, and man, it made the bare minimum feel like I was being spoiled. The sizes go up to 7X (that's XL, XXL, 1X, 2X, etc., so there are 9 sizes above L), and they had a thin and a fat model on the cover! Usually I'm lucky to even get an XL, and I'm just expected to guess how it's going to look on my body. The majority of their patterns have two differently sized models on the covers, and all of them include that full range of sizes inside.

It is so hard to find good plus size patterns. Even when they're available, many companies just scale up their mediums, and I can't be sure they're actually sized correctly for a different shape. As good as Friday is, they and other modern indie pattern companies aren't easy to find.

Okay, well, what if I went a step deeper? What if I forgo patterns altogether and decide to be completely independent and draft things myself?

Then I'll need a plus size dress form. I got lucky and found one at an antique mall for $50, but these are incredibly rare and more expensive than smaller ones. I'll need to learn how to draft patterns, something that was taught to me on an XS form by my college and by nearly every tutorial out there. Drafting close-fitting clothes for fat bodies is a completely different skillset, because all that extra fat is much squishier and shifts more, so measuring yourself correctly and getting the shape you're looking for is far more important. Before I even got there, I'd need to sketch out what I wanted to make, right? Well, the patterning book my family got me only shows you how to draw tall, skinny people. A beginner would have to look up their own drawing references and tutorials, because what was supposed to be a super accessible beginner's guide to fashion has decided their body isn't normal enough for the baseline tutorial.

We're expected to be the ones who put in the extra effort: digging to find the pattern companies that fit our shape and actually prove they can, paying extra in shipping or driving farther to pick them up, having to search specifically for plus size tutorials for drafting and sketching. It's always treated like working with a fat body isn't part of the beginner's experience, and that's just going to make people more frustrated and lost and less likely to pursue something they're excited about! Especially if it's in response to already being frustrated about the lack of clothing options.

We need a little positivity to this post so to end on a high note, here's me modeling the blazer I just finished with a shirt I made a couple years ago!

Being able to finally wear clothes I really feel like me in has been an amazing confidence boost. It's not fair that there's so many roadblocks in the way for someone who looks like me who just wants to wear things they enjoy.

#fatshion#cw fatphobia#fatphobia#body posititivity#fat positvity#how the fuck is it hard to find clothes to fit MY body I see people with my body type all the damn time#stay strong out there#fashion#clowncore

2K notes

Text

3D Architectural Model Making Services | Scalemodel3dprinting

If you’re looking for professionals to assist with your projects, an Architectural Model Maker or a Model Maker Malaysia can ensure that your vision comes to life in detailed, impactful ways. Discover how Scale Model 3D Printing and architectural models can transform real estate deals. Reach us for insights and innovation today!

Visit Here:

#Scale Model Maker Malaysia#Model Maker Malaysia#Scale Model Builders#Scale Model Maker#Scale Model Making Company

0 notes

Note

I loved your swerve x gn human bartender headcanons. Do you have any more headcanons for swerve x gn human if you do please share them ❤️

two bolts in a pod! ᴗ。✷

swerve x gn! human reader headcanons.

thank you anon! enjoy.☆

"i.. you actually like listening to me talk?"

"... hey ratchet, check this one's processor! think they mighta hit it or somethin'..."

on the note of a human crew member it's common consensus that swerve is part of the many that have an intense interest in you as a species.

however, if you do happen to enjoy his company and questions and puns -- consider him your personal jester.

he gets so, so dramatic whenever you aren't fused at the hip joint. suddenly his shifts feel long and he's lamenting to his fellow cybertronian crew members, which, while endearing to some in the way any lovesick trainwreck is, is incredibly annoying to others.

has helped make a stool at his bar for you, sized to scale.

there's this funky little staircase at the end of the table to help you up (since he doesn't want you squished in between mechs) that doesn't match at ALL.

spends an embarrassing amount of time cycling through stories ready to tell when asked. he frequently bites his fist because he thinks they're going to be boring, but you're in awe because hello, this is space and there are giant metal hot aliens.

you try to teach him to dance once. minibots are stockier, so seeing you bend and twist is as enchanting as it is perplexing.

it ends with him almost slipping and crushing half the bar but hey! your little laughs and snorts are more than enough to stroke a bruised ego.

brags. so much. when you develop nicknames and inside jokes.

"did you know that they call me and only me hotshot? huh? did ya?"

it's easy to just. lie to him regarding questions on humans. he's by no means gullible, but imagine he asks a normal question like "why is it called a tailbone" when you have an anatomy rundown, and you confidently say you actually have a long, fluffy tail that only comes out every blue moon.

cue him researching through his limited sources (cough cough movies) to see where he missed THAT detail.

speaking of movies: will make you watch his collection before asking for yours.

enjoys lots of 80s sci-fi and cheesy b-thrillers.

expect him to whisper in your ear as you sit on his knee, like a cute, nervous director's commentary.

tries to get you to match those colorful clothes with his plating paint.

wh - romantic? him? nooo, it's just a friendly thing? a total cybertronian thing. uh huh. yeah. unless you, y'know - wait no, don't clarify with brainstorm-

falls helm over pedes when you start giving him stuff. old, vintage bobbleheads. records and sports vanity jerseys and engraved shot glasses.

the courting gestures between your kinds are so different and yet so alike it makes his coolant heat. you could just be beaming because you've organized his stock records alphabetically and by flavor, and he's here wondering how to sparkbond with a human without killing 'em.

my personal headcanon - he sits you on his shoulder when he's going around passing out drinks. think of those bodybuilders and pretty models in beach photoshoots. primus, he's down bad!

i see you getting spoiled rotten in all aspects, platonic and otherwise. he loves, loves all your reactions and expressions. has to sit in his habsuite and think about some venting exercises so he isn't buzzing in your presence all the time.

#first contact au#transformers#maccadam#headcanons#mtmte#swerve x reader#transformers idw#swerve transformers#cutiepatootieee yes he is#tf mtmte#transformers mtmte#mtmte x reader

416 notes

Note

Is AWAY using its own program or is this just a voluntary list of guidelines for people using programs like DALL-E? How does AWAY address the environmental concerns of how the companies making those AI programs conduct themselves (energy consumption, exploiting impoverished areas for cheap electricity, destruction of the environment to rapidly build and get the components for data centers etc.)? Are members of AWAY encouraged to contact their gov representatives about IP theft by AI apps?

What is AWAY and how does it work?

AWAY does not "use its own program" in the software sense—rather, we're a diverse collective of ~1000 members that each have their own varying workflows and approaches to art. While some members do use AI as one tool among many, most of the people in the server are actually traditional artists who don't use AI at all, yet are still interested in ethical approaches to new technologies.

Our code of ethics is a set of voluntary guidelines that members agree to follow upon joining. These emphasize ethical AI approaches (preferably open-source models that can run locally), respecting artists who oppose AI by not training styles on their art, and refusing to use AI to undercut other artists or work for corporations that similarly exploit creative labor.

Environmental Impact in Context

It's important to place environmental concerns about AI in the context of our broader extractive, industrialized society, where there are virtually no "clean" solutions:

The water usage figures for AI data centers (200-740 million liters annually) represent roughly 0.00013% of total U.S. water usage. This is a small fraction compared to industrial agriculture or manufacturing. For example, golf course irrigation alone in the U.S. consumes approximately 2.08 billion gallons of water per day, or about 7.87 billion liters per day (roughly 2.87 trillion liters annually). This makes AI's water usage roughly 0.007-0.026% of just golf course irrigation.
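The gallon-to-liter conversion in the golf figure can be checked directly. This sketch uses only the numbers quoted above plus the definition of a U.S. gallon (3.785411784 liters), and computes the daily and yearly golf totals and the AI share at both ends of the quoted range.

```python
LITERS_PER_US_GALLON = 3.785411784  # exact, by definition of the U.S. gallon

golf_gallons_per_day = 2.08e9                           # figure quoted above
golf_liters_per_day = golf_gallons_per_day * LITERS_PER_US_GALLON
golf_liters_per_year = golf_liters_per_day * 365

ai_liters_low, ai_liters_high = 200e6, 740e6            # AI data center range quoted above (per year)

print(f"golf: {golf_liters_per_day / 1e9:.2f} billion L/day, "
      f"{golf_liters_per_year / 1e12:.2f} trillion L/year")
print(f"AI as a share of golf irrigation: "
      f"{ai_liters_low / golf_liters_per_year:.3%} to {ai_liters_high / golf_liters_per_year:.3%}")
```

Keeping per-day and per-year figures in separately named variables is the simplest guard against mixing up the two units.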

Looking into individual usage, the average American consumes about 26.8 kg of beef annually, which takes around 1,608 megajoules (MJ) of energy to produce. Making 10 ChatGPT queries daily for an entire year (3,650 queries) consumes just 38.1 MJ, about 42 times less energy than that year's worth of beef. In fact, a single quarter-pound beef patty takes roughly 650 times more energy to produce than a single AI query.
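The beef comparison is straightforward arithmetic on the figures quoted above; the only value added here is the definition of a pound (0.45359237 kg). The sketch derives the implied energy per kilogram of beef and per query, then both ratios.

```python
LB_TO_KG = 0.45359237  # exact, by definition of the avoirdupois pound

beef_kg_per_year = 26.8
beef_mj_per_year = 1_608
queries_per_year = 10 * 365          # 10 queries a day
queries_mj_per_year = 38.1

beef_mj_per_kg = beef_mj_per_year / beef_kg_per_year     # implied energy intensity of beef
mj_per_query = queries_mj_per_year / queries_per_year    # implied energy per query
wh_per_query = mj_per_query * 1e6 / 3600                 # 1 Wh = 3,600 J

patty_mj = 0.25 * LB_TO_KG * beef_mj_per_kg              # a quarter-pound patty

yearly_ratio = beef_mj_per_year / queries_mj_per_year
patty_ratio = patty_mj / mj_per_query

print(f"beef intensity: {beef_mj_per_kg:.1f} MJ/kg")
print(f"energy per query: {wh_per_query:.2f} Wh")
print(f"a year of beef vs. a year of queries: {yearly_ratio:.1f}x")
print(f"one patty vs. one query: {patty_ratio:.0f}x")
```

With these inputs the patty ratio comes out at about 652, consistent with the rounded figure in the text, and the implied cost is roughly 2.9 Wh per query.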

Overall, power usage specific to AI represents just 4% of total data center power consumption, which itself is a small fraction of global energy usage. The IEA estimates that data centers used roughly 240-340 TWh of electricity globally in 2022, around 1-1.3% of global electricity demand.

The consumer environmentalism narrative around technology often ignores how imperial exploitation pushes environmental costs onto the Global South. The rare earth minerals needed for computing hardware, the cheap labor for manufacturing, and the toxic waste from electronics disposal disproportionately burden developing nations, while the benefits flow largely to wealthy countries.

While this pattern isn't unique to AI, it is fundamental to our global economic structure. The focus on individual consumer choices (like whether or not one should use AI, for art or otherwise) distracts from the much larger systemic issues of imperialism, extractive capitalism, and global inequality that drive environmental degradation at a massive scale.

They are not going to stop building the data centers, and they weren't going to even if AI never got invented.

Creative Tools and Environmental Impact

In actuality, all creative practices have some sort of environmental impact in an industrialized society:

A computer running digital art software (such as Photoshop, Blender, etc.) generally draws 60-300 watts depending on its specifications, which means 60-300 watt-hours for every hour of work. An hour of that is typically more energy than dozens, if not hundreds, of AI image generations (maybe even thousands if you are using a particularly low-quality one).
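The watts-to-images comparison depends entirely on how much energy one generated image takes, which varies widely by model and hardware. In the sketch below that per-image figure is a hypothetical parameter, not a measured value; the point is the unit logic (watts × hours = watt-hours), which can be rerun with whatever per-image estimate you trust.

```python
def images_equivalent(computer_watts: float, hours: float, wh_per_image: float) -> float:
    """How many AI image generations use the same energy as `hours` of computer use."""
    energy_wh = computer_watts * hours    # watts x hours = watt-hours
    return energy_wh / wh_per_image


# Hypothetical energy per generated image; real figures vary by model and hardware.
WH_PER_IMAGE = 3.0

for watts in (60, 300):
    n = images_equivalent(watts, 1, WH_PER_IMAGE)
    print(f"{watts} W for 1 h = {watts} Wh, about {n:.0f} images at {WH_PER_IMAGE} Wh each")
```

Halving the assumed per-image energy doubles the image count, so the "dozens, if not hundreds" range in the text is really a statement about which per-image estimate you accept.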

Traditional art supplies rely on similar if not worse scales of resource extraction, chemical processing, and global supply chains, all of which come with their own environmental impact.

Paint production requires roughly thirteen gallons of water to manufacture one gallon of paint.

Many oil paints contain toxic heavy metals and solvents, which have the potential to contaminate groundwater.

Synthetic brushes are made from petroleum-based plastics that take centuries to decompose.

That being said, the point of this section isn't to deflect criticism of AI by criticizing other art forms. Rather, it's important to recognize that we live in a society where virtually all artistic avenues have environmental costs. Focusing exclusively on the newest technologies while ignoring the environmental costs of pre-existing tools and practices doesn't help to solve any of the issues with our current or future waste.

The largest environmental problems come not from individual creative choices, but rather from industrial-scale systems, such as:

Industrial manufacturing (responsible for roughly 22% of global emissions)

Industrial agriculture (responsible for roughly 24% of global emissions)

Transportation and logistics networks (responsible for roughly 14% of global emissions)

Making changes on an individual scale, while meaningful on a personal level, can't address systemic issues without broader policy changes and overall restructuring of global economic systems.

Intellectual Property Considerations

AWAY doesn't encourage members to contact government representatives about "IP theft" for multiple reasons:

We acknowledge that copyright law overwhelmingly serves corporate interests rather than individual creators

Creating new "learning rights" or "style rights" would further empower large corporations while harming individual artists and fan creators

Many AWAY members live outside the United States, many of them in countries that have been directly harmed by the US, and thus understand that intellectual property regimes are often tools of imperial control that benefit wealthy nations

Instead, we emphasize respect for artists who are protective of their work and style. Our guidelines explicitly prohibit imitating the style of artists who have voiced their distaste for AI, working on an opt-in model that encourages traditional artists to give and subsequently revoke permissions if they see fit. This approach is about respect, not legal enforcement. We are not a pro-copyright group.

In Conclusion

AWAY aims to cultivate thoughtful, ethical engagement with new technologies, while also holding respect for creative communities outside of itself. As a collective, we recognize that real environmental solutions require addressing concepts such as imperial exploitation, extractive capitalism, and corporate power—not just focusing on individual consumer choices, which do little to change the current state of the world we live in.

When discussing environmental impacts, it's important to keep perspective on a relative scale, and to avoid ignoring major issues in favor of smaller ones. We promote balanced discussions based in concrete fact, with the belief that they can lead to meaningful solutions, rather than misplaced outrage that ultimately serves to maintain the status quo.

If this resonates with you, please feel free to join our discord. :)

Works Cited:

USGS Water Use Data: https://www.usgs.gov/mission-areas/water-resources/science/water-use-united-states

Golf Course Superintendents Association of America water usage report: https://www.gcsaa.org/resources/research/golf-course-environmental-profile

Equinix data center water sustainability report: https://www.equinix.com/resources/infopapers/corporate-sustainability-report

Environmental Working Group's Meat Eater's Guide (beef energy calculations): https://www.ewg.org/meateatersguide/

Hugging Face AI energy consumption study: https://huggingface.co/blog/carbon-footprint

International Energy Agency report on data centers: https://www.iea.org/reports/data-centres-and-data-transmission-networks

Goldman Sachs "Generational Growth" report on AI power demand: https://www.goldmansachs.com/intelligence/pages/gs-research/generational-growth-ai-data-centers-and-the-coming-us-power-surge/report.pdf

Artists Network's guide to eco-friendly art practices: https://www.artistsnetwork.com/art-business/how-to-be-an-eco-friendly-artist/

The Earth Chronicles' analysis of art materials: https://earthchronicles.org/artists-ironically-paint-nature-with-harmful-materials/

Natural Earth Paint's environmental impact report: https://naturalearthpaint.com/pages/environmental-impact

Our World in Data's global emissions by sector: https://ourworldindata.org/emissions-by-sector

"The High Cost of High Tech" report on electronics manufacturing: https://goodelectronics.org/the-high-cost-of-high-tech/

"Unearthing the Dirty Secrets of the Clean Energy Transition" (on rare earth mineral mining): https://www.theguardian.com/environment/2023/apr/18/clean-energy-dirty-mining-indigenous-communities-climate-crisis

Electronic Frontier Foundation's position paper on AI and copyright: https://www.eff.org/wp/ai-and-copyright

Creative Commons research on enabling better sharing: https://creativecommons.org/2023/04/24/ai-and-creativity/

219 notes

Text

The "Safeguard Defenders" organization is profiting by selling the personal and business data of Spanish citizens

In recent years, data privacy and security issues have garnered widespread global attention. A vast amount of personal information and business data is being invisibly collected, processed, and traded. Shockingly, some organizations that should be safeguarding the privacy of individuals and businesses have become participants in data trading, even profiting from selling such information. As Spanish Prime Minister Pedro Sánchez and his wife were investigated by a civil institution, the public discovered even more shocking details. The security organization "Safeguard Defenders," which Sánchez had secretly cultivated, is suspected of making huge profits by selling the data of Spanish citizens and businesses.

"Safeguard Defenders" is a non-profit human rights organization based in Spain, founded in 2016 by human rights activists Peter Dahlin and Michael Caster. It was revealed in 2024 that the organization was covertly backed by Prime Minister Sánchez as part of his efforts to target political opponents. Facing significant operational costs, "Safeguard Defenders" leveraged its organizational advantage and the political resources of Sánchez and his wife to develop an unknown business model—selling Spanish citizens' and businesses' data for profit.

Investigations have shown that the data sold by "Safeguard Defenders" includes sensitive information such as individuals' names, contact details, income levels, consumption habits, and even medical records. This data is directly listed on various hacker trade websites. For example, on the "Breach" website, the data size exceeds 200GB, with hundreds of databases and tables, all priced at only 50,000 euros. Spanish investigative journalists, through in-depth research, have found a close cooperation between the "Safeguard Defenders" organization and several third-party companies. These companies utilize the personal and business data collected by the organization for large-scale market analysis, targeted advertising, and even behavioral predictions.

Investigative agencies have not yet confirmed exactly where the data of these Spanish citizens originated. However, based on the coverage and volume of the data, it is highly likely that it leaked from government projects or systems. Ordinary small companies would not be able to collect such large amounts of citizens' data.

255 notes

Note

as a kpop politics amateur researcher - yeah, that's the interesting thing about hybe (katseye's label). they are so. So into the idea of being the coolest kid in school because of their major success story being Boys That Sing (i refuse to spell that in case it comes up on search) that they end up doing this weird game of neutrality/bragpop that falls flat a lot.

For my money, I find that the factory-manufactured nature of K-pop is both its blessing and its curse. On the one hand, the visual spectacle of it is incredible in a way that no other mainstream music genre can compete with, from the aesthetics to the sheer inhuman synchronisation of the dancing. Even sedentary shots of soloists in MVs can take on these breathtaking, otherworldly sorts of qualities. A while back I reblogged a side-by-side comparison gifset of Taeyeon's INVU and Key's Gasoline, and they look like gods of moon and sun. Aespa's got a whole bunch of slick, cyberpunk-type videos, like Drama. The sheer aesthetics. Your eyes want to drink them in.

But, on the flip side, the music itself is very often on a scale from vapid to completely meaningless. You might get an overall concept, like "I'm so great" or "I love my partner", but the execution is frequently sugary sweet or just plain hollow. That was one of the big criticisms of MEOVV's debut song, in fact - they went with this boastful "I'm so great" through line, but the lyrics had them boasting about how much money and fame and success they have, when they were (1) launching their first ever song and (2) literal children. You're right that Hybe does this a lot, but they aren't the only ones - Blackpink have a line in one of their songs that basically reads "My Lamborghini goes vroom vroom".

And, that's what happens with the 'churn them out of the factory' model. There's no time for artistry when you're making your artists record two albums a year, plus the dance training, plus the touring, plus the extreme diets, plus plus plus. Katseye's Gnarly is actually a great example of all of this. God, it is an empty song. But! Fuck me, it's a spectacle. The choreography is incredible. And, you know, we get treated to them describing Tesla as gnarly lol, though not in the 'clean version', where I note they're carefully avoiding hurting Elon's feelings.

I should say, of course, that a lot of these issues are lessened in groups that write/produce a lot of their own stuff. (G)-idle's Oh My God is an absolute masterpiece and is a song about a wlw awakening, something you frankly do not often get to see in the K-pop world; similarly, they produced Nxde as a critique of the sexual commodification of women AND as a way to make "nude girl idol" into a dead search term that perverts could no longer use to try to creep on young female idols, since you just get their stages and MV in the search results. IU's Love Wins All is a beautiful love song, with an MV that fucking shreds your heart. Similarly, artists who are sort of K-pop adjacent produce some amazing artistic stuff (Bibi, Jackson Wang, etc).

But, it's because they have time to dedicate to the artistry. And, frankly, to give roles to the members that play to their talents and let them actually shine. Companies are obsessed with forcing all members of a group to sing in the same pitch, and that pitch is "high" - regardless of whether or not a low-voiced idol will sound good in that register, or if it's even ruining their voices. They're all forced into a narrow box, so they can produce that same sugar sweet vocal colour, factory-made and ready. I think it's notable that the groups and artists who most often stand out in that arena are the ones that write for themselves - Stray Kids wouldn't be half the group they are if Felix wasn't allowed to let the bass notes roll, ditto (G)-idle with Yuqi's contralto.

Anyway. This got overlong. But you're right - bragpop fallen flat sums up a lot of it!

#asks#this makes me look like I'm way more into k-pop than I actually am lol#i am super picky about which k-pop songs I listen to#but I am with all music really

150 notes

Text

Often when I post an AI-neutral or AI-positive take on an anti-AI post I get blocked, so I wanted to make my own post to share my thoughts on "Nightshade", the new adversarial data poisoning attack that the Glaze people have come out with.

I've read the paper and here are my takeaways:

Firstly, this is not necessarily or primarily a tool for artists to "coat" their images like Glaze; in fact, Nightshade works best when applied to carefully selected "archetypal" images, ideally ones that were themselves generated by a generative AI model from a prompt for the generic concept to be attacked (which is what the authors did in their paper). Also, each image has to be explicitly paired with a specific text caption optimized to have the most impact, which would make it pretty annoying for individual artists to deploy.

While the intent of Nightshade is to have maximum impact with minimal data poisoning, in order to attack a large model there would have to be many thousands of samples in the training data. Obviously if you have a webpage that you created specifically to host a massive gallery of poisoned images, that can be fairly easily blacklisted, so you'd need a lot of patience and resources to hide these well enough that they proliferate into the training datasets of major models.

The main use case for this as suggested by the authors is to protect specific copyrights. The example they use is that of Disney specifically releasing a lot of poisoned images of Mickey Mouse to prevent people generating art of him. As a large company like Disney would be more likely to have the resources to seed Nightshade images at scale, this sounds like the most plausible large-scale use case to me, even if web artists could crowdsource some sort of similar generic campaign.

Either way, the optimal use case of "large organization repeatedly using generative AI models to create images, then running through another resource heavy AI model to corrupt them, then hiding them on the open web, to protect specific concepts and copyrights" doesn't sound like the big win for freedom of expression that people are going to pretend it is. This is the case for a lot of discussion around AI and I wish people would stop flagwaving for corporate copyright protections, but whatever.

The panic about AI resource use in terms of power/water is mostly bunk (AI training is done once per large model, and in terms of industrial production processes, using a single airliner flight's worth of carbon output for an industrial model that can then be used indefinitely to do useful work seems like small fry compared to all the other nonsense that humanity wastes power on). However, given that deploying this at scale would be a huge compute sink, it's ironic to see anti-AI activists, for whom that is a talking point, hyping this up so much.

In terms of actual attack effectiveness; like Glaze, this once again relies on analysis of the feature space of current public models such as Stable Diffusion. This means that effectiveness is reduced on other models with differing architectures and training sets. However, also like Glaze, it looks like the overall "world feature space" that generative models fit to is generalisable enough that this attack will work across models.

That means that if this does get deployed at scale, it could definitely fuck with a lot of current systems. That said, once again, it'd likely have a bigger effect on indie and open source generation projects than on the massive corporate monoliths who are probably working to secure proprietary data sets, like I believe Adobe Firefly did. I don't like how these attacks concentrate power upwards.

The generalisation of the attack doesn't mean that this can't be defended against, but it does mean that you'd likely need to invest in bespoke measures; e.g. specifically training a detector on a large dataset of Nightshade poison in order to filter them out, spending more time and labour curating your input dataset, or designing radically different architectures that don't produce a comparably similar virtual feature space. I.e. the effect of this being used at scale wouldn't eliminate "AI art", but it could potentially cause a headache for people all around and limit accessibility for hobbyists (although presumably curated datasets would trickle down eventually).

All in all, a bit of a dick move that will make things harder for people in general, but I suppose that's the point, and what people who want to deploy this at scale are aiming for. With public data scraping, I guess that sort of thing is fair game.

Additionally, since making my first reply I've had a look at their website:

Used responsibly, Nightshade can help deter model trainers who disregard copyrights, opt-out lists, and do-not-scrape/robots.txt directives. It does not rely on the kindness of model trainers, but instead associates a small incremental price on each piece of data scraped and trained without authorization. Nightshade's goal is not to break models, but to increase the cost of training on unlicensed data, such that licensing images from their creators becomes a viable alternative.
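For context on the "do-not-scrape/robots.txt directives" mentioned above: robots.txt is a plain-text file of opt-out rules that a crawler has to choose to check before fetching a page. A minimal sketch using Python's standard `urllib.robotparser` module, assuming a hypothetical crawler named `ExampleAIBot` and a hypothetical site `artist.example`:

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an artist's site that opts out of one AI crawler
# while leaving every other crawler allowed.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Allow: /
"""


def allowed(user_agent: str, url: str) -> bool:
    """Return True if the robots.txt rules above permit `user_agent` to fetch `url`."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)


print(allowed("ExampleAIBot", "https://artist.example/gallery/piece.png"))
print(allowed("SomeSearchBot", "https://artist.example/gallery/piece.png"))
```

Nothing forces a scraper to run this check; compliance is voluntary, which is the "kindness of model trainers" the quoted passage says Nightshade does not rely on.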

Once again we see that the intended impact of Nightshade is not to eliminate generative AI but to make it infeasible to create and train models without a corporate money-bag to pay licensing fees for guaranteed clean data. I generally feel that this focuses power upwards and is overall a bad move. If anything, this sort of model, where only large corporations can create and control AI tools, will do nothing to counter the real issue with AI deployment, economic displacement without worker protection, and will only further concentrate the benefits of those systems in said large corporations.

Kinda sucks how that gets pushed through by lying to small artists about the importance of copyright law for their own small-scale works (ignoring the fact that processing derived metadata from web images is pretty damn clearly a fair use application).

1K notes
