#T-SQL in Power Query

Streamlining Power BI Report Deployment for Multiple Customers with Dynamic Data Sources.

Navigating the maze of business intelligence, especially when it comes to crafting and rolling out Power BI reports for a diverse client base, each with their unique data storage systems, is no small feat. The trick lies in concocting a reporting solution that’s as flexible as it is robust, capable of connecting to a variety of data sources without the need for constant tweaks. This isn’t just…

#automated Power BI deployment#multi-customer Power BI reports#Power BI data source parameters#Power BI dynamic data sources#T-SQL in Power Query

Top 5 Selling Odoo Modules.

In the dynamic world of business, having the right tools can make all the difference. For Odoo users, certain modules stand out for their ability to enhance data management and operations, helping you optimize your Odoo implementation and leverage its full potential.

Odoo ERP itself can be a lifesaver for your business. This comprehensive solution integrates various functions into one centralized platform, tailor-made for the digital economy.

Let's dive into five top-selling modules that can revolutionize your Odoo experience:

Dashboard Ninja with AI, Odoo Power BI Connector, Odoo Data Model, Google Sheets Connector, and Odoo Looker Studio Connector.

1. Dashboard Ninja with AI:

With this module, you can create amazing reports using the powerful, smart Dashboard Ninja app for Odoo. See your business from a 360-degree angle with an interactive and beautiful dashboard.

Some Key Features:

Real-time streaming dashboard

Advanced data filters

Create charts from Excel and CSV files

Fluid and flexible layout

Download dashboard items

This module also gives you AI suggestions for improving your operational efficiency.

2. Odoo Power BI Connector:

This module provides a direct connection between Odoo and Power BI Desktop, a powerful data visualization tool.

Some Key Features:

Secure token-based connection.

Proper schema and data type handling.

Fetch custom tables from Odoo.

Real-time data updates.

With Power BI, you can make informed decisions based on real-time data analysis and visualization.

3. Odoo Data Model:

The Odoo Data Model is the backbone of the entire system. It defines how your data is stored, structured, and related within the application.

Key Features:

Relations & fields: Developers can easily identify relations (one-to-many, many-to-many, and many-to-one) between data tables and define fields (columns).

Object-relational mapping: The Odoo ORM allows developers to define models (classes) that map to database tables.

The module also allows you to use SQL query extensions and download data as Excel sheets.
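The three relation types above can be made concrete with a small, generic Python sketch using the standard-library `sqlite3` module. This is not Odoo code and does not use the Odoo ORM. The tables (`partner`, `sale_order`, `tag`, `sale_order_tag_rel`) and the helper functions are hypothetical. The sketch only shows the storage pattern an ORM such as Odoo's produces:

- A many-to-one field becomes a foreign-key column.
- A many-to-many field becomes a separate relation table of id pairs.
- A one-to-many field has no column of its own, because it is the inverse of a many-to-one.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with HYPOTHETICAL tables that mirror the
    three relation types (a storage-pattern demo, not Odoo's real schema)."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE partner (id INTEGER PRIMARY KEY, name TEXT NOT NULL);

        -- many-to-one: each order stores ONE partner id in a foreign-key column
        CREATE TABLE sale_order (
            id INTEGER PRIMARY KEY,
            name TEXT NOT NULL,
            partner_id INTEGER REFERENCES partner(id)
        );

        CREATE TABLE tag (id INTEGER PRIMARY KEY, name TEXT NOT NULL);

        -- many-to-many: a separate relation table stores (order, tag) pairs
        CREATE TABLE sale_order_tag_rel (
            order_id INTEGER REFERENCES sale_order(id),
            tag_id INTEGER REFERENCES tag(id),
            PRIMARY KEY (order_id, tag_id)
        );

        INSERT INTO partner VALUES (1, 'Acme'), (2, 'Globex');
        INSERT INTO sale_order VALUES (1, 'SO001', 1), (2, 'SO002', 1), (3, 'SO003', 2);
        INSERT INTO tag VALUES (1, 'urgent'), (2, 'wholesale');
        INSERT INTO sale_order_tag_rel VALUES (1, 1), (1, 2), (3, 2);
        """
    )
    return conn


def orders_of_partner(conn: sqlite3.Connection, partner_name: str) -> list[str]:
    # one-to-many: the inverse of the many-to-one above. It needs no column
    # of its own, only a query over sale_order.partner_id.
    rows = conn.execute(
        """
        SELECT o.name FROM sale_order AS o
        JOIN partner AS p ON p.id = o.partner_id
        WHERE p.name = ?
        ORDER BY o.name
        """,
        (partner_name,),
    ).fetchall()
    return [name for (name,) in rows]


def tags_of_order(conn: sqlite3.Connection, order_name: str) -> list[str]:
    # many-to-many: resolve the id pairs stored in the relation table
    rows = conn.execute(
        """
        SELECT t.name FROM tag AS t
        JOIN sale_order_tag_rel AS r ON r.tag_id = t.id
        JOIN sale_order AS o ON o.id = r.order_id
        WHERE o.name = ?
        ORDER BY t.name
        """,
        (order_name,),
    ).fetchall()
    return [name for (name,) in rows]


if __name__ == "__main__":
    db = build_demo_db()
    print(orders_of_partner(db, "Acme"))  # ['SO001', 'SO002']
    print(tags_of_order(db, "SO001"))     # ['urgent', 'wholesale']
```

In Odoo itself you would declare these as `fields.Many2one`, `fields.One2many` and `fields.Many2many` on a model class and let the ORM create the tables. The raw SQL here only shows roughly what ends up in the database.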

4. Google Sheets Connector:

This connector bridges the gap between Odoo and Google Sheets.

Some Key Features:

Real-time data synchronization and transfer between Odoo and Google Sheets.

One-time setup; no need to wrestle with APIs.

Transfer multiple tables swiftly.

Streamlines your team's workflow by making Odoo data accessible in spreadsheet format.

5. Odoo Looker Studio Connector:

The Looker Studio Connector by Techfinna easily integrates Odoo data with Looker Studio, a powerful data analytics and visualization platform.

Some Key Features:

Directly integrate Odoo data into Looker Studio with just a few clicks.

The connector automatically retrieves and maps Odoo table schemas in their native data types.

Manual and scheduled data refresh.

Execute custom SQL queries for selective data fetching.

The module helps you build detailed reports and gain deeper business intelligence.

These modules improve analytics, customization, and reporting, and setting them up can significantly enhance your operational efficiency. Embrace these modules and take your Odoo experience to the next level.

Need Help?

I hope you find the blog helpful. Please share your feedback and suggestions.

For flawless Odoo connectors, implementation, and services, contact us at www.techneith.com.

#odoo#powerbi#connector#looker#studio#google#microsoft#techfinna#ksolves#odooerp#developer#web developers#integration#odooimplementation#crm#odoointegration#odooconnector

25 Udemy Paid Courses for Free with Certification (Only for a Limited Time)

2023 Complete SQL Bootcamp from Zero to Hero in SQL

Become an expert in SQL by learning through concept & Hands-on coding :)

What you'll learn

Use SQL to query a database

Be comfortable putting SQL on your resume

Replicate real-world situations and query reports

Use SQL to perform data analysis

Learn to perform GROUP BY statements

Model real-world data and generate reports using SQL

Learn Oracle SQL by Professionally Designed Content Step by Step!

Solve any SQL-related Problems by Yourself Creating Analytical Solutions!

Write, Read and Analyze Any SQL Queries Easily and Learn How to Play with Data!

Become a Job-Ready SQL Developer by Learning All the Skills You will Need!

Write complex SQL statements to query the database and gain critical insight on data

Transition from the Very Basics to a Point Where You can Effortlessly Work with Large SQL Queries

Learn Advanced Querying Techniques

Understand the difference between the INNER JOIN, LEFT/RIGHT OUTER JOIN, and FULL OUTER JOIN

Complete SQL statements that use aggregate functions

Using joins, return columns from multiple tables in the same query

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Python Programming Complete Beginners Course Bootcamp 2023

2023 Complete Python Bootcamp || Python Beginners to advanced || Python Master Class || Mega Course

What you'll learn

Basics in Python programming

Control structures, Containers, Functions & Modules

OOP in Python

How Python is used in the Space Sciences

Working with lists in Python

Working with strings in Python

Application of Python in Mars Rovers sent by NASA

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Learn PHP and MySQL for Web Application and Web Development

Unlock the Power of PHP and MySQL: Level Up Your Web Development Skills Today

What you'll learn

Use of PHP Functions

Use of PHP Variables

Use of MySQL

Use of Databases

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

T-Shirt Design for Beginner to Advanced with Adobe Photoshop

Unleash Your Creativity: Master T-Shirt Design from Beginner to Advanced with Adobe Photoshop

What you'll learn

Functions of Adobe Photoshop

Tools of Adobe Photoshop

T-Shirt Design Fundamentals

T-Shirt Design Projects

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Complete Data Science BootCamp

Learn about Data Science, Machine Learning and Deep Learning and build 5 different projects.

What you'll learn

Learn about Libraries like Pandas and NumPy which are heavily used in Data Science.

Build Impactful visualizations and charts using Matplotlib and Seaborn.

Learn about Machine Learning LifeCycle and different ML algorithms and their implementation in sklearn.

Learn about Deep Learning and Neural Networks with TensorFlow and Keras.

Build 5 complete projects based on the concepts covered in the course.

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Essentials User Experience Design Adobe XD UI UX Design

Learn UI Design, User Interface, User Experience design, UX design & Web Design

What you'll learn

How to become a UX designer

Become a UI designer

Full website design

All the techniques used by UX professionals

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Build a Custom E-Commerce Site in React + JavaScript Basics

Build a Fully Customized E-Commerce Site with Product Categories, Shopping Cart, and Checkout Page in React.

What you'll learn

Introduction to the Document Object Model (DOM)

The Foundations of JavaScript

JavaScript Arithmetic Operations

Working with Arrays, Functions, and Loops in JavaScript

JavaScript Variables, Events, and Objects

JavaScript Hands-On: Build a Photo Gallery and Background Color Changer

Foundations of React

How to Scaffold an Existing React Project

Introduction to JSON Server

Styling an E-Commerce Store in React and Building out the Shop Categories

Introduction to Fetch API and React Router

The concept of "Context" in React

Building a Search Feature in React

Validating Forms in React

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Complete Bootstrap & React Bootcamp with Hands-On Projects

Learn to Build Responsive, Interactive Web Apps using Bootstrap and React.

What you'll learn

Learn the Bootstrap Grid System

Learn to work with Bootstrap Three Column Layouts

Learn to Build Bootstrap Navigation Components

Learn to Style Images using Bootstrap

Build Advanced, Responsive Menus using Bootstrap

Build Stunning Layouts using Bootstrap Themes

Learn the Foundations of React

Work with JSX and Functional Components in React

Build a Calculator in React

Learn the React State Hook

Debug React Projects

Learn to Style React Components

Build a Single and Multi-Player Connect-4 Clone with AI

Learn React Lifecycle Events

Learn React Conditional Rendering

Build a Fully Custom E-Commerce Site in React

Learn the Foundations of JSON Server

Work with React Router

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Build an Amazon Affiliate E-Commerce Store from Scratch

Earn Passive Income by Building an Amazon Affiliate E-Commerce Store using WordPress, WooCommerce, WooZone, & Elementor

What you'll learn

Registering a Domain Name & Setting up Hosting

Installing WordPress CMS on Your Hosting Account

Navigating the WordPress Interface

The Advantages of WordPress

Securing a WordPress Installation with an SSL Certificate

Installing Custom Themes for WordPress

Installing WooCommerce, Elementor, & WooZone Plugins

Creating an Amazon Affiliate Account

Importing Products from Amazon to an E-Commerce Store using WooZone Plugin

Building a Customized Shop with Menus, Headers, Branding, & Sidebars

Building WordPress Pages, such as Blogs, About Pages, and Contact Us Forms

Customizing Product Pages on a WordPress-Powered E-Commerce Site

Generating Traffic and Sales for Your Newly Published Amazon Affiliate Store

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

The Complete Beginner Course to Optimizing ChatGPT for Work

Learn how to make the most of ChatGPT's capabilities in efficiently aiding you with your tasks.

What you'll learn

Learn how to harness ChatGPT's functionalities to efficiently assist you in various tasks, maximizing productivity and effectiveness.

Delve into the captivating fusion of product development and SEO, discovering effective strategies to identify challenges, create innovative tools, and expertly

Understand how ChatGPT is a technological leap, akin to the impact of iconic tools like Photoshop and Excel, and how it can revolutionize work methodologies thr

Showcase your learning by creating a transformative project, optimizing your approach to work by identifying tasks that can be streamlined with artificial intel

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

AWS, JavaScript, React | Deploy Web Apps on the Cloud

Cloud Computing | Linux Foundations | LAMP Stack | DBMS | Apache | NGINX | AWS IAM | Amazon EC2 | JavaScript | React

What you'll learn

Foundations of Cloud Computing on AWS and Linode

Cloud Computing Service Models (IaaS, PaaS, SaaS)

Deploying and Configuring a Virtual Instance on Linode and AWS

Secure Remote Administration for Virtual Instances using SSH

Working with SSH Key Pair Authentication

The Foundations of Linux (Maintenance, Directory Commands, User Accounts, Filesystem)

The Foundations of Web Servers (NGINX vs Apache)

Foundations of Databases (SQL vs NoSQL), Database Transaction Standards (ACID vs CAP)

Key Terminology for Full Stack Development and Cloud Administration

Installing and Configuring LAMP Stack on Ubuntu (Linux, Apache, MariaDB, PHP)

Server Security Foundations (Network vs Hosted Firewalls)

Horizontal and Vertical Scaling of a virtual instance on Linode using NodeBalancers

Creating Manual and Automated Server Images and Backups on Linode

Understanding the Cloud Computing Phenomenon as Applicable to AWS

The Characteristics of Cloud Computing as Applicable to AWS

Cloud Deployment Models (Private, Community, Hybrid, VPC)

Foundations of AWS (Registration, Global vs Regional Services, Billing Alerts, MFA)

AWS Identity and Access Management (Mechanics, Users, Groups, Policies, Roles)

Amazon Elastic Compute Cloud (EC2): AMIs, EC2 Users, Deployment, Elastic IP, Security Groups, Remote Admin

Foundations of the Document Object Model (DOM)

Manipulating the DOM

Foundations of JavaScript Coding (Variables, Objects, Functions, Loops, Arrays, Events)

Foundations of ReactJS (Code Pen, JSX, Components, Props, Events, State Hook, Debugging)

Intermediate React (Passing Props, Destructuring, Styling, Key Property, AI, Conditional Rendering, Deployment)

Building a Fully Customized E-Commerce Site in React

Intermediate React Concepts (JSON Server, Fetch API, React Router, Styled Components, Refactoring, UseContext Hook, UseReducer, Form Validation)

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Run Multiple Sites on a Cloud Server: AWS & Digital Ocean

Server Deployment | Apache Configuration | MySQL | PHP | Virtual Hosts | NS Records | DNS | AWS Foundations | EC2

What you'll learn

A solid understanding of the fundamentals of remote server deployment and configuration, including network configuration and security.

The ability to install and configure the LAMP stack, including the Apache web server, MySQL database server, and PHP scripting language.

Expertise in hosting multiple domains on one virtual server, including setting up virtual hosts and managing domain names.

Proficiency in virtual host file configuration, including creating and configuring virtual host files and understanding various directives and parameters.

Mastery in DNS zone file configuration, including creating and managing DNS zone files and understanding various record types and their uses.

A thorough understanding of AWS foundations, including the AWS global infrastructure, key AWS services, and features.

A deep understanding of Amazon Elastic Compute Cloud (EC2) foundations, including creating and managing instances, configuring security groups, and networking.

The ability to troubleshoot common issues related to remote server deployment, LAMP stack installation and configuration, virtual host file configuration, and D

An understanding of best practices for remote server deployment and configuration, including security considerations and optimization for performance.

Practical experience in working with remote servers and cloud-based solutions through hands-on labs and exercises.

The ability to apply the knowledge gained from the course to real-world scenarios and challenges faced in the field of web hosting and cloud computing.

A competitive edge in the job market, with the ability to pursue career opportunities in web hosting and cloud computing.

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Cloud-Powered Web App Development with AWS and PHP

AWS Foundations | IAM | Amazon EC2 | Load Balancing | Auto-Scaling Groups | Route 53 | PHP | MySQL | App Deployment

What you'll learn

Understanding of cloud computing and Amazon Web Services (AWS)

Proficiency in creating and configuring AWS accounts and environments

Knowledge of AWS pricing and billing models

Mastery of Identity and Access Management (IAM) policies and permissions

Ability to launch and configure Elastic Compute Cloud (EC2) instances

Familiarity with security groups, key pairs, and Elastic IP addresses

Competency in using AWS storage services, such as Elastic Block Store (EBS) and Simple Storage Service (S3)

Expertise in creating and using Elastic Load Balancers (ELB) and Auto Scaling Groups (ASG) for load balancing and scaling web applications

Knowledge of DNS management using Route 53

Proficiency in PHP programming language fundamentals

Ability to interact with databases using PHP and execute SQL queries

Understanding of PHP security best practices, including SQL injection prevention and user authentication

Ability to design and implement a database schema for a web application

Mastery of PHP scripting to interact with a database and implement user authentication using sessions and cookies

Competency in creating a simple blog interface using HTML and CSS and protecting the blog content using PHP authentication

Students will gain practical experience in creating and deploying a member-only blog with user authentication using PHP and MySQL on AWS.

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

CSS, Bootstrap, JavaScript And PHP Stack Complete Course

CSS, Bootstrap And JavaScript And PHP Complete Frontend and Backend Course

What you'll learn

Introduction to Frontend and Backend technologies

Introduction to CSS, Bootstrap and JavaScript concepts, PHP Programming Language Practically

Getting Started With CSS Styles, CSS 2D Transform, CSS 3D Transform

Bootstrap Crash course with Bootstrap concepts

Bootstrap Grid system, Forms, Badges and Alerts

Getting Started With JavaScript Variables, Values and Data Types, Operators and Operands

Write JavaScript scripts and gain knowledge of general JavaScript programming concepts

PHP Section: Introduction to PHP, Various Operator types, PHP Arrays, PHP Conditional statements

Getting Started with PHP Function Statements and PHP Decision Making

PHP 7 concepts: PHP CSPRNG and PHP Scalar Declaration

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Learn HTML - For Beginners

Learn how to create web pages using HTML

What you'll learn

How to Code in HTML

Structure of an HTML Page

Text Formatting in HTML

Embedding Videos

Creating Links

Anchor Tags

Tables & Nested Tables

Building Forms

Embedding Iframes

Inserting Images

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Learn Bootstrap - For Beginners

Learn to create mobile-responsive web pages using Bootstrap

What you'll learn

Bootstrap Page Structure

Bootstrap Grid System

Bootstrap Layouts

Bootstrap Typography

Styling Images

Bootstrap Tables, Buttons, Badges, & Progress Bars

Bootstrap Pagination

Bootstrap Panels

Bootstrap Menus & Navigation Bars

Bootstrap Carousel & Modals

Bootstrap Scrollspy

Bootstrap Themes

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

JavaScript, Bootstrap, & PHP - Certification for Beginners

A Comprehensive Guide for Beginners interested in learning JavaScript, Bootstrap, & PHP

What you'll learn

Master Client-Side and Server-Side Interactivity using JavaScript, Bootstrap, & PHP

Learn to create mobile responsive webpages using Bootstrap

Learn to create client and server-side validated input forms

Learn to interact with a MySQL Database using PHP

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Linode: Build and Deploy Responsive Websites on the Cloud

Cloud Computing | IaaS | Linux Foundations | Apache + DBMS | LAMP Stack | Server Security | Backups | HTML | CSS

What you'll learn

Understand the fundamental concepts and benefits of Cloud Computing and its service models.

Learn how to create, configure, and manage virtual servers in the cloud using Linode.

Understand the basic concepts of Linux operating system, including file system structure, command-line interface, and basic Linux commands.

Learn how to manage users and permissions, configure network settings, and use package managers in Linux.

Learn about the basic concepts of web servers, including Apache and Nginx, and databases such as MySQL and MariaDB.

Learn how to install and configure web servers and databases on Linux servers.

Learn how to install and configure LAMP stack to set up a web server and database for hosting dynamic websites and web applications.

Understand server security concepts such as firewalls, access control, and SSL certificates.

Learn how to secure servers using firewalls, manage user access, and configure SSL certificates for secure communication.

Learn how to scale servers to handle increasing traffic and load.

Learn about load balancing, clustering, and auto-scaling techniques.

Learn how to create and manage server images.

Understand the basic structure and syntax of HTML, including tags, attributes, and elements.

Understand how to apply CSS styles to HTML elements, create layouts, and use CSS frameworks.

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

PHP & MySQL - Certification Course for Beginners

Learn to Build Database Driven Web Applications using PHP & MySQL

What you'll learn

PHP Variables, Syntax, Variable Scope, Keywords

Echo vs. Print and Data Output

PHP Strings, Constants, Operators

PHP Conditional Statements

PHP Elseif, Switch Statements

PHP Loops: While, For

PHP Functions

PHP Arrays, Multidimensional Arrays, Sorting Arrays

Working with Forms: Post vs. Get

PHP Server Side: Form Validation

Creating MySQL Databases

Database Administration with phpMyAdmin

Administering Database Users and Defining User Roles

SQL Statements: Select, Where, And, Or, Insert, Get Last ID

MySQL Prepared Statements and Multiple Record Insertion

PHP Isset

MySQL: Updating Records

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Linode: Deploy Scalable React Web Apps on the Cloud

Cloud Computing | IaaS | Server Configuration | Linux Foundations | Database Servers | LAMP Stack | Server Security

What you'll learn

Introduction to Cloud Computing

Cloud Computing Service Models (IaaS, PaaS, SaaS)

Cloud Server Deployment and Configuration (TFA, SSH)

Linux Foundations (File System, Commands, User Accounts)

Web Server Foundations (NGINX vs Apache, SQL vs NoSQL, Key Terms)

LAMP Stack Installation and Configuration (Linux, Apache, MariaDB, PHP)

Server Security (Software & Hardware Firewall Configuration)

Server Scaling (Vertical vs Horizontal Scaling, IP Swaps, Load Balancers)

React Foundations (Setup)

Building a Calculator in React (Code Pen, JSX, Components, Props, Events, State Hook)

Building a Connect-4 Clone in React (Passing Arguments, Styling, Callbacks, Key Property)

Building an E-Commerce Site in React (JSON Server, Fetch API, Refactoring)

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Internet and Web Development Fundamentals

Learn how the Internet Works and Setup a Testing & Production Web Server

What you'll learn

How the Internet Works

Internet Protocols (HTTP, HTTPS, SMTP)

The Web Development Process

Planning a Web Application

Types of Web Hosting (Shared, Dedicated, VPS, Cloud)

Domain Name Registration and Administration

Nameserver Configuration

Deploying a Testing Server using WAMP & MAMP

Deploying a Production Server on Linode, Digital Ocean, or AWS

Executing Server Commands through a Command Console

Server Configuration on Ubuntu

Remote Desktop Connection and VNC

SSH Server Authentication

FTP Client Installation

FTP Uploading

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Linode: Web Server and Database Foundations

Cloud Computing | Instance Deployment and Config | Apache | NGINX | Database Management Systems (DBMS)

What you'll learn

Introduction to Cloud Computing (Cloud Service Models)

Navigating the Linode Cloud Interface

Remote Administration using PuTTY, Terminal, SSH

Foundations of Web Servers (Apache vs. NGINX)

SQL vs NoSQL Databases

Database Transaction Standards (ACID vs. CAP Theorem)

Key Terms relevant to Cloud Computing, Web Servers, and Database Systems

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Java Training Complete Course 2022

Learn Java Programming language with Java Complete Training Course 2022 for Beginners

What you'll learn

You will learn how to write a complete Java program that takes user input, processes it, and outputs the results

You will learn OOP concepts in Java

You will learn Java concepts such as console output, Java Variables and Data Types, Java Operators, and more

You will be able to use Java for Selenium in testing and development

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Learn To Create AI Assistant (JARVIS) With Python

How To Create AI Assistant (JARVIS) With Python Like the One from Marvel's Iron Man Movie

What you'll learn

How to create a personalized artificial intelligence assistant

How to create JARVIS AI

How to create an AI assistant

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Keyword Research, Free Backlinks, Improve SEO -Long Tail Pro

LongTailPro is the keyword research service we at Coursenvy use for ALL our clients! In this course, find SEO keywords,

What you'll learn

Learn everything Long Tail Pro has to offer from A to Z!

Optimize keywords in your page/post titles, meta descriptions, social media bios, article content, and more!

Create content that caters to the NEW Search Engine Algorithms and find endless keywords to rank for in ALL the search engines!

Learn how to use ALL of the top-rated Keyword Research software online!

Master analyzing your COMPETITORS' Keywords!

Get High-Quality Backlinks that will ACTUALLY Help your Page Rank!

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

#udemy#free course#paid course for free#design#development#ux ui#xd#figma#web development#python#javascript#php#java#cloud

Top Diploma Classes Online for Career Growth in 2025

Looking to build a career without spending years in school? With the rise of flexible, accessible education, diploma classes online are becoming the go-to solution for learners around the world. Whether you're starting fresh, switching careers, or upgrading your skills, online diplomas offer a smart, efficient way to move forward.

The best part? You can earn a free diploma online through trusted platforms like UniAthena's Online Short Courses, helping you build real-world skills without breaking the bank.

If you're unsure where to begin, this guide covers some of the Best Diploma Courses to explore right now, based on job market trends and future potential.

Choosing the Right Diploma Course for Your Future

It’s normal to feel unsure when selecting a diploma course. What if it doesn’t suit your interests? What if it doesn’t lead to real job opportunities?

Here’s the good news—every course on this list is designed to prepare you for in-demand careers across growing industries. Whether you're passionate about safety, healthcare, data, or logistics, there's a diploma that aligns with your goals and interests.

Diploma Certificate in Environment Health and Safety Management

As industries grow, so does the need to ensure safe working environments. The Diploma Certificate in Environment Health and Safety Management equips you to manage workplace risks and enforce safety regulations across multiple sectors.

With this qualification, you could pursue roles such as environmental health and safety officer, food safety inspector, or industrial hygienist. It’s one of the most practical diploma classes online for those interested in creating safe and sustainable workplaces.

Healthcare Management Diploma

The healthcare industry is booming, and it’s not limited to clinical roles. With healthcare projected to nearly double in market size by 2032, management roles are more critical than ever.

The Healthcare Management Diploma prepares you to oversee hospital operations, manage clinical teams, and support patient care systems. Careers such as healthcare administrator, clinic operations manager, and pharmaceutical executive are within reach for those who complete this diploma.

This course is ideal for students who want to work in healthcare but prefer leadership and strategy over clinical practice.

Diploma in International Human Resource Management

With remote teams and global workforces becoming the norm, HR professionals need international expertise. The Diploma in International Human Resource Management offers a deep understanding of global labor laws, cross-cultural communication, and talent management.

It’s a great free diploma online if you're interested in international recruitment, global HR strategies, or organizational development. Graduates can explore roles such as HR business partner, payroll specialist, or training coordinator in multinational organizations.

Diploma in SQL

Data powers everything from finance to social media. Whether you're interested in analytics, app development, or cybersecurity, understanding databases is essential.

The Diploma in SQL takes you from the basics to advanced data queries. You’ll learn how to manage and analyze databases—skills needed for roles like SQL developer, data analyst, or BI engineer.

This course is one of UniAthena's Online Short Courses that’s particularly valuable for those entering the tech world or working in data-driven environments.

Diploma in Data Analytics

Data analytics is transforming how businesses make decisions. Companies across every industry are hiring professionals who can turn raw data into meaningful insights.

The Diploma in Data Analytics teaches you how to work with large datasets, perform statistical analysis, and use industry tools to guide strategy. Graduates often move into roles such as business analyst, data scientist, or marketing data strategist.

If you're looking to break into a high-growth, high-paying field, this is one of the Best Diploma Courses to start with.

Diploma Course in Transportation and Logistics Management

As global trade expands, supply chain efficiency is more critical than ever. The Diploma Course in Transportation and Logistics Management provides insights into freight movement, inventory control, and cross-border logistics.

This diploma prepares you for roles like logistics coordinator, fleet manager, or shipping analyst. It’s ideal for students who want to work in a fast-paced, essential industry that powers international commerce.

Diploma in Supply Chain Management

If you want to understand the bigger picture of global trade, the Diploma in Supply Chain Management is the perfect next step. This course dives deeper into procurement, production, and operations planning.

With this diploma, you can explore careers in operations strategy, purchasing, and enterprise resource planning. It’s one of the most strategic diploma classes online for future operations leaders.

Opportunities for Learners

Access to quality education and professional training can be limited by infrastructure and affordability. Online learning is changing that reality.

UniAthena's Online Short Courses offer a chance for learners to access globally recognized education from anywhere with an internet connection. Whether you're in Juba, Malakal, or Wau, you can gain skills that open doors to meaningful careers at home and abroad.

Courses like the Healthcare Management Diploma and the Diploma in Supply Chain Management are particularly relevant, supporting sectors crucial to national development. By enrolling in free diploma online programs, students and professionals can compete in the global job market, without leaving their communities.

Online diplomas offer flexibility, affordability, and the ability to upskill while balancing other responsibilities, making them a strong fit for an aspiring workforce.

Conclusion

Your future doesn’t have to wait. With these Best Diploma Courses, you can build real-world skills in weeks, not years. Whether your interest lies in healthcare, data, logistics, or management, there’s a free diploma online that fits your goals and your schedule.

These online diplomas are designed to be accessible, affordable, and practical—giving you the confidence and credentials to move your career forward.

Explore UniAthena's Online Short Courses and take the first step toward a brighter future.

Additional Tips

Taking a free diploma online is a smart way to test your interests before committing to a full degree. These short courses also help you build credibility on your resume and stand out to employers.

Many can be completed in just a few weeks, and they’re open to learners from all educational and professional backgrounds. Whether you're starting out or making a career shift, online diplomas give you a flexible way to grow.

Start small, think big—and take action today.


Beyond the Buzzword: Your Roadmap to Gaining Real Knowledge in Data Science

Data science. It's a field bursting with innovation, high demand, and the promise of solving real-world problems. But for newcomers, the sheer breadth of tools, techniques, and theoretical concepts can feel overwhelming. So, how do you gain real knowledge in data science, moving beyond surface-level understanding to truly master the craft?

It's not just about watching a few tutorials or reading a single book. True data science knowledge is built on a multi-faceted approach, combining theoretical understanding with practical application. Here’s a roadmap to guide your journey:

1. Build a Strong Foundational Core

Before you dive into the flashy algorithms, solidify your bedrock. This is non-negotiable.

Mathematics & Statistics: This is the language of data science.

Linear Algebra: Essential for understanding algorithms from linear regression to neural networks.

Calculus: Key for understanding optimization algorithms (gradient descent!) and the inner workings of many machine learning models.

Probability & Statistics: Absolutely critical for data analysis, hypothesis testing, understanding distributions, and interpreting model results. Learn about descriptive statistics, inferential statistics, sampling, hypothesis testing, confidence intervals, and different probability distributions.
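Here is a minimal sketch of where linear algebra and calculus meet in practice. It uses batch gradient descent to fit a straight line by minimizing mean squared error. It is written in plain Python for readability. The learning rate, epoch count, and toy data are illustrative choices, not recommendations.

```python
# A minimal sketch: batch gradient descent for simple linear regression
# (y ~ w * x + b), minimizing mean squared error. Pure Python, no NumPy.

def gradient_descent(xs, ys, lr=0.05, epochs=2000):
    """Fit slope w and intercept b by repeatedly stepping against the MSE gradient."""
    n = len(xs)
    w, b = 0.0, 0.0
    for _ in range(epochs):
        # Residual of the current model on every data point.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Partial derivatives of MSE = (1/n) * sum(err^2) with respect to w and b.
        grad_w = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_b = (2.0 / n) * sum(errors)
        # Take a small step downhill.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


if __name__ == "__main__":
    xs = [0.0, 1.0, 2.0, 3.0, 4.0]
    ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1
    w, b = gradient_descent(xs, ys)
    print(f"w={w:.3f}, b={b:.3f}")
```

With this toy data the loop should print `w=2.000, b=1.000`. If the learning rate is too large, the updates overshoot and diverge. That is one practical reason the calculus behind the gradient matters.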

Programming: Python and R are the reigning champions.

Python: Learn the fundamentals, then dive into libraries like NumPy (numerical computing), Pandas (data manipulation), Matplotlib/Seaborn (data visualization), and Scikit-learn (machine learning).

R: Especially strong for statistical analysis and powerful visualization (ggplot2). Many statisticians prefer R.

Databases (SQL): Data lives in databases. Learn to query, manipulate, and retrieve data efficiently using SQL. This is a fundamental skill for any data professional.

Where to learn: Online courses (Xaltius Academy, Coursera, edX, Udacity), textbooks (e.g., "Think Stats" by Allen B. Downey, "An Introduction to Statistical Learning"), Khan Academy for math fundamentals.
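SQL and Python combine easily. Python's standard-library `sqlite3` module runs real SQL against an in-memory database, so you can practise queries without installing a server. The sketch below is minimal, and the `transactions` table, its columns, and its rows are made up for illustration.

```python
import sqlite3


def make_demo_db():
    """Create an in-memory SQLite database with a small, made-up transactions table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE transactions (user_id INTEGER, amount REAL)")
    conn.executemany(
        "INSERT INTO transactions (user_id, amount) VALUES (?, ?)",
        [(1, 20.0), (2, 5.0), (1, 15.0), (3, 12.5)],
    )
    return conn


def total_spent_per_user(conn):
    """Aggregate spend per user with GROUP BY, highest spenders first."""
    query = """
        SELECT user_id, SUM(amount) AS total_spent
        FROM transactions
        GROUP BY user_id
        ORDER BY total_spent DESC
    """
    return conn.execute(query).fetchall()


if __name__ == "__main__":
    print(total_spent_per_user(make_demo_db()))
```

Running the script should print `[(1, 35.0), (3, 12.5), (2, 5.0)]`. The same query would work almost unchanged on PostgreSQL or MySQL.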

2. Dive into Machine Learning Fundamentals

Once your foundation is solid, explore the exciting world of machine learning.

Supervised Learning: Understand classification (logistic regression, decision trees, SVMs, k-NN, random forests, gradient boosting) and regression (linear regression, polynomial regression, SVR, tree-based models).

Unsupervised Learning: Explore clustering (k-means, hierarchical clustering, DBSCAN) and dimensionality reduction (PCA, t-SNE).

Model Evaluation: Learn to rigorously evaluate your models using metrics like accuracy, precision, recall, F1-score, AUC-ROC for classification, and MSE, MAE, R-squared for regression. Understand concepts like bias-variance trade-off, overfitting, and underfitting.

Cross-Validation & Hyperparameter Tuning: Essential techniques for building robust models.

Where to learn: Andrew Ng's Machine Learning course on Coursera is a classic. "Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow" by Aurélien Géron is an excellent practical guide.
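Writing the evaluation tools yourself once helps the concepts stick. The sketch below computes precision, recall, and F1 for a binary classifier, and generates k-fold cross-validation splits. It is for understanding only; in real projects, scikit-learn's metrics and `KFold` are the usual choice. The fold sizing mirrors `KFold`'s default, unshuffled behaviour.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Return (precision, recall, F1) for the given positive label."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def k_fold_indices(n, k):
    """Yield (train_idx, test_idx) for k contiguous, unshuffled folds over range(n).

    The first n % k folds get one extra sample, so every index is tested exactly once.
    """
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, test
        start += size


if __name__ == "__main__":
    y_true = [1, 0, 1, 1, 0, 1]
    y_pred = [1, 0, 0, 1, 1, 1]
    print(precision_recall_f1(y_true, y_pred))
    for train, test in k_fold_indices(10, 3):
        print("train:", train, "test:", test)
```

For the sample labels there are 3 true positives, 1 false positive, and 1 false negative, so precision, recall, and F1 all equal 0.75. Shuffle the data first if its rows are ordered, for example by date or by class.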

3. Get Your Hands Dirty: Practical Application is Key!

Theory without practice is just information. You must apply what you learn.

Work on Datasets: Start with well-known datasets on platforms like Kaggle (Titanic, Iris, Boston Housing). Progress to more complex ones.

Build Projects: Don't just follow tutorials. Try to solve a real-world problem from start to finish. This involves:

Problem Definition: What are you trying to predict/understand?

Data Collection/Acquisition: Where will you get the data?

Exploratory Data Analysis (EDA): Understand your data, find patterns, clean messy parts.

Feature Engineering: Create new, more informative features from existing ones.

Model Building & Training: Select and train appropriate models.

Model Evaluation & Tuning: Refine your model.

Communication: Explain your findings clearly, both technically and for a non-technical audience.

Participate in Kaggle Competitions: This is an excellent way to learn from others, improve your skills, and benchmark your performance.

Contribute to Open Source: A great way to learn best practices and collaborate.

4. Specialize and Deepen Your Knowledge

As you progress, you might find a particular area of data science fascinating.

Deep Learning: If you're interested in image recognition, natural language processing (NLP), or generative AI, dive into frameworks like TensorFlow or PyTorch.

Natural Language Processing (NLP): Understanding text data, sentiment analysis, chatbots, machine translation.

Computer Vision: Image recognition, object detection, facial recognition.

Time Series Analysis: Forecasting trends in data that evolves over time.

Reinforcement Learning: Training agents to make decisions in an environment.

MLOps: The engineering side of data science – deploying, monitoring, and managing machine learning models in production.

Where to learn: Specific courses for each domain on platforms like deeplearning.ai (Andrew Ng), Fast.ai (Jeremy Howard).

5. Stay Updated and Engaged

Data science is a rapidly evolving field. Lifelong learning is essential.

Follow Researchers & Practitioners: On platforms like LinkedIn, X (formerly Twitter), and Medium.

Read Blogs and Articles: Keep up with new techniques, tools, and industry trends.

Attend Webinars & Conferences: Even virtual ones can offer valuable insights and networking opportunities.

Join Data Science Communities: Online forums (Reddit's r/datascience), local meetups, Discord channels. Learn from others, ask questions, and share your knowledge.

Read Research Papers: For advanced topics, dive into papers on arXiv.

6. Practice the Art of Communication

This is often overlooked but is absolutely critical.

Storytelling with Data: You can have the most complex model, but if you can't explain its insights to stakeholders, it won't influence any decisions.

Visualization: Master tools like Matplotlib, Seaborn, Plotly, or Tableau to create compelling and informative visualizations.

Presentations: Practice clearly articulating your problem, methodology, findings, and recommendations.

The journey to gaining knowledge in data science is a marathon, not a sprint. It requires dedication, consistent effort, and a genuine curiosity to understand the world through data. Embrace the challenges, celebrate the breakthroughs, and remember that every line of code, every solved problem, and every new concept learned brings you closer to becoming a truly knowledgeable data scientist. What foundational skill are you looking to strengthen first?


How AWS Transforms Raw Data into Actionable Insights

Introduction

Businesses generate vast amounts of data daily, from customer interactions to product performance. However, without transforming this raw data into actionable insights, it’s difficult to make informed decisions. AWS Data Analytics offers a powerful suite of tools to simplify data collection, organization, and analysis. By leveraging AWS, companies can convert fragmented data into meaningful insights, driving smarter decisions and fostering business growth.

1. Data Collection and Integration

AWS makes it simple to collect data from various sources — whether from internal systems, cloud applications, or IoT devices. Services like AWS Glue and Amazon Kinesis help automate data collection, ensuring seamless integration of multiple data streams into a unified pipeline.

Data Sources: AWS can pull data from internal systems (ERP, CRM, POS), websites, apps, IoT devices, and more.

Key Services

AWS Glue: Automates data discovery, cataloging, and preparation.

Amazon Kinesis: Captures real-time data streams for immediate analysis.

AWS Database Migration Service (DMS): Migrates existing databases to the cloud.

By automating these processes, AWS ensures businesses have a unified, consistent view of their data.
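Here is what the ingestion side can look like in code. This minimal Python sketch writes one JSON event to a Kinesis data stream using the `put_record` call of a boto3 Kinesis client. The stream name, the event fields, and the use of `store_id` as the partition key are hypothetical. The client is passed in as a parameter, so the function can be exercised without AWS credentials.

```python
import json


def send_event(kinesis_client, stream_name, event, partition_key_field="store_id"):
    """Serialize one event as JSON and write it to a Kinesis data stream.

    kinesis_client is expected to behave like boto3.client("kinesis").
    The partition_key_field default ("store_id") is a hypothetical choice.
    """
    payload = json.dumps(event, sort_keys=True).encode("utf-8")
    # Records sharing a partition key go to the same shard, which keeps
    # their relative order for that key.
    response = kinesis_client.put_record(
        StreamName=stream_name,
        Data=payload,
        PartitionKey=str(event[partition_key_field]),
    )
    return response["SequenceNumber"]
```

In a real pipeline you would pass `boto3.client("kinesis")` and the name of a stream that already exists. A consumer such as AWS Glue, Lambda, or Amazon Data Firehose would then pick up the records.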

2. Data Storage at Scale

AWS offers flexible, secure storage solutions to handle both structured and unstructured data. With services such as Amazon S3, Amazon Redshift, and Amazon RDS, businesses can scale storage without buying and maintaining their own hardware.

Storage Options

Amazon S3: Ideal for storing large volumes of unstructured data.

Amazon Redshift: A data warehouse solution for quick analytics on structured data.

Amazon RDS & Aurora: Managed relational databases for handling transactional data.

AWS’s tiered storage options ensure businesses only pay for what they use, whether they need real-time analytics or long-term archiving.

3. Data Cleaning and Preparation

Raw data is often inconsistent and incomplete. AWS Glue DataBrew lets users clean and format data visually without extensive coding, while AWS Lambda can run custom cleaning code. Together they help ensure that your analytics processes work with high-quality data.

Data Wrangling Tools

AWS Glue DataBrew: A visual tool for easy data cleaning and transformation.

AWS Lambda: Runs custom cleaning code on demand or in response to events, such as a new file arriving in S3.

By leveraging these tools, businesses can ensure that only accurate, trustworthy data is used for analysis.
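Here is the kind of logic a custom Lambda cleaning step might contain. This minimal Python sketch normalizes raw sales records and drops rows it cannot repair. The event shape (`{"records": [...]}`) and the field names (`order_id`, `amount`, `date`, `region`) are hypothetical. The handler uses Lambda's standard `(event, context)` signature, but the sketch does not show how the function is wired to a trigger.

```python
from datetime import datetime


def clean_records(records):
    """Normalize raw sales records; drop rows with a missing/duplicate ID or bad values."""
    cleaned, seen = [], set()
    for rec in records:
        order_id = str(rec.get("order_id", "")).strip()
        if not order_id or order_id in seen:
            continue  # no key, or an ID already kept (first valid occurrence wins)
        try:
            # "1,234.50" -> 1234.5; non-numeric or missing amounts raise ValueError.
            amount = round(float(str(rec.get("amount", "")).replace(",", "").strip()), 2)
            # Accept ISO dates only; anything else raises ValueError.
            date = datetime.strptime(str(rec.get("date", "")).strip(), "%Y-%m-%d").date().isoformat()
        except ValueError:
            continue  # unrepairable amount or date
        seen.add(order_id)
        region = str(rec.get("region") or "unknown").strip().lower()
        cleaned.append({"order_id": order_id, "amount": amount, "date": date, "region": region})
    return cleaned


def lambda_handler(event, context):
    """Hypothetical Lambda entry point; assumes the event carries a "records" list."""
    return {"records": clean_records(event.get("records", []))}
```

Visual tools like DataBrew cover most routine fixes. Code like this is for rules that are easier to express in a few lines than in a recipe.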

4. Data Exploration and Analysis

Before diving into advanced modeling, it’s crucial to explore and understand the data. Amazon Athena and Amazon SageMaker Data Wrangler make it easy to run SQL queries, visualize datasets, and uncover trends and patterns in data.

Exploratory Tools

Amazon Athena: Query data directly from S3 using SQL.

Amazon Redshift Spectrum: Queries data in S3 alongside tables stored in the Redshift warehouse.

Amazon SageMaker Data Wrangler: Explore and visualize data features before modeling.

These tools help teams identify key trends and opportunities within their data, enabling more focused and efficient analysis.
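Running an Athena query from code follows a start, poll, and fetch pattern. This minimal Python sketch uses the `start_query_execution`, `get_query_execution`, and `get_query_results` calls of a boto3 Athena client. The database name and S3 output location are placeholders you would supply. The client is passed in so the function can be tested without AWS access. Only the first page of results is read.

```python
import time


def run_athena_query(athena_client, sql, database, output_location, poll_seconds=1.0):
    """Run SQL on Athena and return result rows as lists of strings.

    athena_client is expected to behave like boto3.client("athena").
    For SELECT queries the first returned row holds the column names.
    """
    start = athena_client.start_query_execution(
        QueryString=sql,
        QueryExecutionContext={"Database": database},
        ResultConfiguration={"OutputLocation": output_location},
    )
    query_id = start["QueryExecutionId"]

    # Poll until Athena reports a terminal state.
    while True:
        status = athena_client.get_query_execution(QueryExecutionId=query_id)
        state = status["QueryExecution"]["Status"]["State"]
        if state in ("SUCCEEDED", "FAILED", "CANCELLED"):
            break
        time.sleep(poll_seconds)

    if state != "SUCCEEDED":
        reason = status["QueryExecution"]["Status"].get("StateChangeReason", "")
        raise RuntimeError(f"Athena query {query_id} ended in state {state}: {reason}")

    # First page only; large result sets need NextToken pagination.
    results = athena_client.get_query_results(QueryExecutionId=query_id)
    return [[col.get("VarCharValue") for col in row["Data"]]
            for row in results["ResultSet"]["Rows"]]
```

Athena also writes the full result set as a CSV file to the output location. For large results, reading that file from S3 is often simpler than paging through `get_query_results`.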

5. Advanced Analytics & Machine Learning

AWS Data Analytics moves beyond traditional reporting by offering powerful AI/ML capabilities through services such as Amazon SageMaker and Amazon Forecast. These tools help businesses predict future outcomes, uncover anomalies, and gain actionable intelligence.

Key AI/ML Tools

Amazon SageMaker: An end-to-end platform for building and deploying machine learning models.

Amazon Forecast: Predicts business outcomes based on historical data.

Amazon Comprehend: Uses NLP to analyze and extract meaning from text data.

Amazon Lookout for Metrics: Detects anomalies in your data automatically.

These AI-driven services provide predictive and prescriptive insights, enabling proactive decision-making.

6. Visualization and Reporting

AWS’s Amazon QuickSight helps transform complex datasets into easily digestible dashboards and reports. With interactive charts and graphs, QuickSight allows businesses to visualize their data and make real-time decisions based on up-to-date information.

Powerful Visualization Tools

Amazon QuickSight: Creates customizable dashboards with interactive charts.

Integration with BI Tools: AWS data services such as Amazon Redshift and Amazon Athena can also feed third-party tools like Tableau and Power BI.

With these tools, stakeholders at all levels can easily interpret and act on data insights.

7. Data Security and Governance

AWS places a strong emphasis on data security with services such as AWS Identity and Access Management (IAM) and AWS Key Management Service (KMS). These tools provide robust encryption, access controls, and compliance features to ensure sensitive data remains protected while still being accessible for analysis.

Security Features

AWS IAM: Controls access to data based on user roles.

AWS KMS: Creates and manages the encryption keys used to protect data at rest; data in transit is protected with TLS.

Audit Tools: Services like AWS CloudTrail and AWS Config help track data usage and ensure compliance.

AWS also supports industry-specific data governance standards, making it suitable for regulated industries like finance and healthcare.

8. Real-World Example: Retail Company

Retailers are using AWS to combine data from physical stores, eCommerce platforms, and CRMs to optimize operations. By analyzing sales patterns, forecasting demand, and visualizing performance through AWS Data Analytics, they can make data-driven decisions that improve inventory management, marketing, and customer service.

For example, a retail chain might:

Use AWS Glue to integrate data from stores and eCommerce platforms.

Store data in S3 and query it using Athena.

Analyze sales data in Redshift to optimize product stocking.

Use SageMaker to forecast seasonal demand.

Visualize performance with QuickSight dashboards for daily decision-making.

This example illustrates how AWS Data Analytics turns raw data into actionable insights for improved business performance.

9. Why Choose AWS for Data Transformation?

AWS Data Analytics stands out due to its scalability, flexibility, and comprehensive service offering. Here’s what makes AWS the ideal choice:

Scalability: Grows with your business needs, from startups to large enterprises.

Cost-Efficiency: Pay only for the services you use, making it accessible for businesses of all sizes.

Automation: Reduces manual errors by automating data workflows.

Real-Time Insights: Provides near-instant data processing for quick decision-making.

Security: Offers enterprise-grade protection for sensitive data.

Global Reach: AWS’s infrastructure spans across regions, ensuring seamless access to data.

10. Getting Started with AWS Data Analytics

Partnering with a company such as OneData can help streamline the process of implementing AWS-powered data analytics solutions. With their expertise, businesses can quickly set up real-time dashboards, implement machine learning models, and get full support during the data transformation journey.

Conclusion

Raw data is everywhere, but actionable insights are rare. AWS bridges that gap by providing businesses with the tools to ingest, clean, analyze, and act on data at scale.

From real-time dashboards and forecasting to machine learning and anomaly detection, AWS enables you to see the full story your data is telling. With partners such as OneData, even complex data initiatives can be launched with ease.

Ready to transform your data into business intelligence? Start your journey with AWS today.


This SQL Trick Cut My Query Time by 80%

How One Simple Change Supercharged My Database Performance

If you work with SQL, you’ve probably spent hours trying to optimize slow-running queries — tweaking joins, rewriting subqueries, or even questioning your career choices. I’ve been there. But recently, I discovered a deceptively simple trick that cut my query time by 80%, and I wish I had known it sooner.

Here’s the full breakdown of the trick, how it works, and how you can apply it right now.

🧠 The Problem: Slow Query in a Large Dataset

I was working with a PostgreSQL database containing millions of records. The goal was to generate monthly reports from a transactions table joined with users and products. My query took over 35 seconds to return, and performance got worse as the data grew.

Here’s a simplified version of the original query:

```sql
SELECT
    u.user_id,
    SUM(t.amount) AS total_spent
FROM
    transactions t
JOIN
    users u ON t.user_id = u.user_id
WHERE
    t.created_at >= '2024-01-01'
    AND t.created_at < '2024-02-01'
GROUP BY
    u.user_id, u.name;
```

No complex logic. But still painfully slow.

⚡ The Trick: Use a CTE to Pre-Filter Before the Join

The suspected inefficiency? The join seemed to happen before the filtering. Even though we were only interested in one month's data, the database appeared to scan and join millions of rows first and only then apply the WHERE clause.

✅ Solution: Filter early using a CTE (Common Table Expression)

Here’s the optimized version:

```sql
WITH filtered_transactions AS (
    SELECT *
    FROM transactions
    WHERE created_at >= '2024-01-01'
      AND created_at < '2024-02-01'
)
SELECT
    u.user_id,
    SUM(t.amount) AS total_spent
FROM
    filtered_transactions t
JOIN
    users u ON t.user_id = u.user_id
GROUP BY
    u.user_id, u.name;
```

Result: Query time dropped from 35 seconds to just 7 seconds.

That’s an 80% improvement — with no hardware changes or indexing.

🧩 Why This Works

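Query planners (including PostgreSQL's and MySQL's) normally push simple WHERE filters below joins on their own. Since PostgreSQL 12, a CTE like this one, referenced once, is usually inlined into the main query, so both versions often get the same plan. The planner does not always choose well, though. Before PostgreSQL 12, a CTE was always materialized, which can change the plan. That is why you should compare EXPLAIN ANALYZE output for both versions before crediting the rewrite.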

By isolating the filtered dataset before the join, you:

Reduce the number of rows being joined

Shrink the working memory needed for the query

Speed up sorting, grouping, and aggregation

This technique is especially effective when:

You’re working with time-series data

Joins involve large or denormalized tables

Filters eliminate a large portion of rows
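Whichever version turns out faster on your system, the rewrite must never change the answer. A quick way to confirm this is to run both queries against a small sample. The sketch below is minimal and makes several assumptions. It uses Python's built-in `sqlite3` as a stand-in for PostgreSQL, with made-up rows. It checks that results match, not performance. Its CTE selects only the columns it needs instead of `SELECT *`, and it adds `ORDER BY` so the two outputs can be compared directly.

```python
import sqlite3

ORIGINAL = """
SELECT u.user_id, SUM(t.amount) AS total_spent
FROM transactions t
JOIN users u ON t.user_id = u.user_id
WHERE t.created_at >= '2024-01-01' AND t.created_at < '2024-02-01'
GROUP BY u.user_id, u.name
ORDER BY u.user_id
"""

PREFILTERED = """
WITH filtered_transactions AS (
    SELECT user_id, amount
    FROM transactions
    WHERE created_at >= '2024-01-01' AND created_at < '2024-02-01'
)
SELECT u.user_id, SUM(t.amount) AS total_spent
FROM filtered_transactions t
JOIN users u ON t.user_id = u.user_id
GROUP BY u.user_id, u.name
ORDER BY u.user_id
"""


def build_demo_db():
    """In-memory sample data: two users, transactions on both sides of January 2024."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE users (user_id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE transactions (
            id INTEGER PRIMARY KEY, user_id INTEGER, amount REAL, created_at TEXT
        );
    """)
    conn.executemany("INSERT INTO users VALUES (?, ?)", [(1, "Ana"), (2, "Ben")])
    conn.executemany(
        "INSERT INTO transactions (user_id, amount, created_at) VALUES (?, ?, ?)",
        [(1, 10.0, "2023-12-31"), (1, 25.0, "2024-01-15"), (2, 40.0, "2024-01-20"),
         (2, 5.0, "2024-02-01"), (1, 7.5, "2024-01-31")],
    )
    return conn


if __name__ == "__main__":
    conn = build_demo_db()
    a = conn.execute(ORIGINAL).fetchall()
    b = conn.execute(PREFILTERED).fetchall()
    print(a, b, a == b)
```

Both queries should return `[(1, 32.5), (2, 40.0)]`. On PostgreSQL 12 and later you can also compare the two behaviours explicitly. `WITH filtered_transactions AS MATERIALIZED (...)` keeps the old optimization-fence behaviour, while `AS NOT MATERIALIZED` lets the planner inline the CTE.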

🔍 Bonus Optimization: Add Indexes on Filtered Columns

To make this trick even more effective, add an index on created_at in the transactions table:

```sql
CREATE INDEX idx_transactions_created_at ON transactions(created_at);
```

This lets the database locate rows in the date range through the index instead of reading the whole table, which speeds up the filter whether or not it sits inside a CTE.
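To check whether the planner actually uses the new index, inspect the query plan. In PostgreSQL that means `EXPLAIN` or `EXPLAIN ANALYZE`. The sketch below shows the same idea with SQLite's `EXPLAIN QUERY PLAN`, so it runs without a server. The table layout mirrors the example above and is an assumption. SQLite's plan wording also differs from PostgreSQL's.

```python
import sqlite3

RANGE_QUERY = (
    "SELECT user_id, amount FROM transactions "
    "WHERE created_at >= '2024-01-01' AND created_at < '2024-02-01'"
)


def create_transactions_table(conn):
    """Create an empty transactions table shaped like the article's example."""
    conn.execute(
        "CREATE TABLE transactions ("
        "id INTEGER PRIMARY KEY, user_id INTEGER, amount REAL, created_at TEXT)"
    )


def plan_details(conn, sql):
    """Return the human-readable 'detail' column from SQLite's EXPLAIN QUERY PLAN."""
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql)]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_transactions_table(conn)
    print("before:", plan_details(conn, RANGE_QUERY))  # expect a full table SCAN
    conn.execute("CREATE INDEX idx_transactions_created_at ON transactions(created_at)")
    print("after: ", plan_details(conn, RANGE_QUERY))  # expect SEARCH ... USING INDEX
```

If the "after" plan still shows a full scan on your real database, the filter probably matches too large a share of the table for the index to help. That is the same situation covered in "When Not to Use This".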

🛠 When Not to Use This

While this trick is powerful, it’s not always ideal. Avoid it when:

Your filter is trivial (e.g., matches 99% of rows)

The CTE becomes more complex than the base query

Your database’s planner is already optimizing joins well (check the EXPLAIN plan)

🧾 Final Takeaway

You don’t need exotic query tuning or complex indexing strategies to speed up SQL performance. Sometimes, just changing the order of operations — like filtering before joining — is enough to make your query fly.

“Think like the database. The less work you give it, the faster it moves.”

If your SQL queries are running slow, try this CTE filtering trick before diving into advanced optimization. It might just save your day — or your job.


Key Microsoft Certifications and Cybersecurity Fundamentals for the Modern Business Environment

Introduction

In today's technology-driven world, businesses are increasingly relying on cloud solutions and advanced data management tools to drive efficiency, enhance customer experiences, and secure sensitive information. To meet these demands, professionals must be equipped with the right skills and certifications. This article provides an overview of some key Microsoft certifications, including MB-280, MS-4017, and DP-3020, and discusses the significance of "Fundamentos de Ciberseguridad en las organizaciones" (Fundamentals of Cybersecurity in Organizations) in ensuring data protection and business continuity.

MB-280: Dynamics 365 Customer Experience Analyst

Overview The MB-280 exam (Microsoft Dynamics 365 Customer Experience Analyst) is designed for professionals who aim to specialize in Dynamics 365 Customer Engagement. This exam focuses on implementing, configuring, and managing customer engagement solutions within Microsoft Dynamics 365. The certification is ideal for business analysts or individuals working in roles that involve designing, analyzing, and enhancing customer-centric processes.

Skills Covered Candidates for the MB-280 exam will develop expertise in configuring and deploying Dynamics 365 Customer Engagement solutions, including modules for Sales, Marketing, Customer Service, and Field Service. Key competencies include data management, system customization, reporting, and integration with other Microsoft tools such as Power BI and Microsoft Teams.

Career Benefits Achieving this certification helps professionals validate their ability to improve customer experience strategies through the effective use of Dynamics 365. The MB-280 also opens opportunities for roles like CRM analyst, customer experience manager, and business solution specialist.

MS-4017: Manage Microsoft 365 Copilot

Overview MS-4017 (Manage Microsoft 365 Copilot) focuses on Microsoft 365 Copilot, a tool that leverages artificial intelligence to enhance user productivity within the Microsoft 365 suite. Copilot is designed to help users manage their daily tasks more efficiently by offering insights, automating repetitive processes, and facilitating smarter decision-making.

Skills Covered This certification evaluates a candidate's ability to configure, implement, and manage Copilot across various Microsoft 365 applications such as Word, Excel, Outlook, and Teams. Core skills include enabling AI-powered features, managing security and compliance settings, and optimizing Copilot for organizational use.

Career Benefits With organizations increasingly embracing AI-driven tools for productivity, having expertise in Microsoft 365 Copilot can position professionals for roles in IT administration, productivity management, and AI implementation. The MS-4017 certification is a significant asset for those seeking to boost their credentials in an AI-enhanced workplace.

DP-3020: Develop Data-Driven Applications Using Microsoft Azure SQL Database

Overview DP-3020 (Develop data-driven applications by using Microsoft Azure SQL Database) is aimed at developers and data professionals who build applications on Azure SQL Database. It covers the design, development, and management of SQL databases within the Azure platform, enabling businesses to build scalable, secure, and high-performance applications.

Skills Covered Candidates for the DP-3020 exam gain proficiency in database management, performance tuning, security, and troubleshooting within Azure SQL. This certification emphasizes developing applications that rely on relational databases, querying data using T-SQL, and integrating SQL solutions with cloud-based applications.

Career Benefits Earning this certification validates a developer's expertise in working with Azure SQL Database, which is a widely used technology in cloud-based application development. This opens career opportunities for roles such as cloud developer, database administrator, and data solutions architect, all of which are in high demand as businesses continue to shift to the cloud.

Fundamentos de Ciberseguridad en las organizaciones (Fundamentals of Cybersecurity in Organizations)

Overview "Fundamentos de Ciberseguridad en las organizaciones" refers to the essential knowledge and practices needed to protect organizational systems, networks, and data from cyber threats. As cyberattacks become more sophisticated, businesses must prioritize cybersecurity to ensure the integrity, confidentiality, and availability of their critical information.

Skills Covered This training or certification focuses on the core principles of cybersecurity, including risk assessment, threat management, encryption, network security, and the implementation of security policies. It also covers the legal and ethical aspects of cybersecurity, ensuring organizations comply with relevant laws and standards.

Career Benefits As cybersecurity continues to be a top priority for organizations across industries, professionals with a solid understanding of cybersecurity fundamentals are highly sought after. This knowledge is crucial for IT security roles, including cybersecurity analyst, security consultant, and systems administrator. Moreover, it serves as a foundation for more advanced certifications in specific security technologies.

Conclusion

Obtaining certifications like MB-280, MS-4017, and DP-3020 helps professionals stay ahead in the ever-evolving tech landscape. They allow individuals to deepen their expertise in customer engagement, cloud productivity, and data-driven application development. Additionally, a foundational understanding of cybersecurity is essential for safeguarding digital assets and ensuring business continuity. By investing in these skills and certifications, professionals can enhance their career prospects and contribute effectively to their organization's success.


Expert-Led Data Analyst Training in Arumbakkam: Your Path to Industry Readiness

In today’s fast-paced digital era, data drives decisions, shapes strategies, and fuels innovation. Businesses across all industries need skilled data analysts to make sense of the vast amount of data available. If you are looking to break into this booming field, our Data Analyst Training in Arumbakkam is your gateway to industry readiness. With 100% placement assistance, expert-led trainers, hands-on training, and a fast-track course completion within 60 days, our program is designed to set you on the path to success.

Introduction

Data analytics is more than a buzzword—it’s a crucial skill set that can transform your career. At Trendnologies IT Training Institute, we believe in empowering aspiring data professionals with both the technical know-how and practical experience needed to excel in today’s competitive job market. Our program in Arumbakkam is meticulously crafted to offer you a comprehensive learning experience that covers everything from the fundamentals to advanced data analysis techniques. Whether you’re a fresh graduate, a career changer, or an industry professional seeking to upskill, our training ensures you are ready to meet the challenges of the modern workplace.

What Sets Our Program Apart

Our Data Analyst Training in Arumbakkam is not just a course—it’s a transformative journey that equips you with industry-relevant skills and confidence. Here’s why our program stands out:

100% Placement Assistance: We understand that training is only as good as the opportunities it creates. Our dedicated placement cell works tirelessly to connect you with top employers. From resume optimization to mock interviews, we guide you every step of the way. Our extensive network of industry contacts means you are never far from your dream job.

Expert-Led Trainers: Learn from the best in the business. Our trainers are seasoned professionals with real-world experience in data analytics. They bring practical insights into the classroom, breaking down complex topics into easy-to-understand modules. Their mentorship ensures that you not only learn but also apply your skills effectively.

Hands-On Training: Theory is essential, but practice is paramount. Our training emphasizes hands-on learning through live projects and case studies. You will work on real datasets, use industry-standard tools, and solve problems that mirror real business scenarios. This practical approach ensures you can immediately apply your skills in the workplace.

Fast-Track Course Completion Within 60 Days: Time is precious, and so is your career growth. Our intensive 60-day program is designed to equip you with the necessary skills in a short time frame without compromising on quality. This fast-track approach means you can quickly transition from learning to earning.

Comprehensive Curriculum Designed for Success

Our curriculum is built to cover the most in-demand skills and tools in data analytics. Here’s a closer look at what you’ll learn during the course:

Fundamentals of Data Analytics: Start with the basics. Learn about data collection, cleaning, and interpretation to build a solid foundation.

SQL and Database Management: Understand how to manage and query databases. SQL is the backbone of data management, and you’ll master it through practical sessions.

Python for Data Analysis: Dive into Python, one of the most powerful programming languages in the industry. Explore libraries like Pandas, NumPy, and Matplotlib that are essential for data manipulation and visualization.

Excel for Data Manipulation: Excel remains a vital tool in data analysis. Learn advanced functions and techniques that help in rapid data manipulation and analysis.

Data Visualization Tools: Bring data to life using visualization tools such as Tableau and Power BI. Creating compelling visualizations is key to communicating your findings effectively.

Statistical Methods and Machine Learning Basics: Get introduced to the statistical techniques and basic machine learning concepts that enable predictive analysis and informed decision-making.

Live Projects and Case Studies: Apply your skills on real-world projects. These hands-on exercises not only solidify your learning but also help build an impressive portfolio for future employers.

Benefits of Our Data Analyst Training in Arumbakkam

Enrolling in our program means you’re investing in a future full of opportunities. Here’s what you can expect:

Industry-Relevant Skills: Stay updated with the latest tools and techniques. Our course is continuously revised to align with industry standards.

Job-Ready Training: With comprehensive placement assistance, we ensure that you are prepared to step into the job market with confidence. Our career workshops and one-on-one mentoring sessions further enhance your employability.

Personalized Learning Experience: Our expert trainers provide individual attention to help you overcome challenges and master each concept thoroughly. You are never just another face in the classroom.

Networking Opportunities: Connect with industry professionals and fellow learners. Our training environment fosters collaboration, creating a strong community that can support you throughout your career.

Fast-Track Career Launch: Complete the course in just 60 days and rapidly transition into a thriving career in data analytics. Our program is designed to quickly transform you into a capable and confident data analyst.

Real Success Stories

At Trendnologies, our success is reflected in the achievements of our alumni. Many have transitioned seamlessly into high-demand roles across various sectors, thanks to the comprehensive training and dedicated placement support they received. Their stories stand as a testament to the quality of our Data Analyst Training in Arumbakkam and the impact it can have on your professional journey.

“The hands-on projects were a game-changer. I felt confident applying my skills in real work scenarios, and the placement support was phenomenal.” – Divya.S

“Expert trainers who truly care about your success. I landed a job within a month of completing the course!” – Mohan.R

How to Get Started

Taking the first step towards a rewarding career in data analytics is simple. Here’s how you can enroll in our Data Analyst Training in Arumbakkam:

Visit Our Website: Explore detailed course information, curriculum highlights, and enrollment procedures on our website.

Contact Our Support Team: Our dedicated team is available to answer any queries regarding the course, fees, and placement assistance. Feel free to reach out for personalized guidance.

Enroll Today: Don’t let this opportunity slip away. Secure your future by enrolling in our program and start your journey towards becoming an industry-ready data analyst.

Conclusion

Data analytics is a transformative field with endless opportunities. At Trendnologies IT Training Institute, we are committed to turning your aspirations into reality. Our Data Analyst Training in Arumbakkam offers a comprehensive, hands-on, and fast-track learning experience, ensuring that you are job-ready in just 60 days. With 100% placement assistance, expert trainers, and real-world project exposure, you are well on your way to a successful career in data analytics.

For more info: Website: https://trendnologies.com/ Email: [email protected] Contact us: +91 7200124901 Location: Chennai | Coimbatore | Bangalore

#trendnologies#it training#software testing course#non-it to it#100% placement guarantee#data analyst training#data analyst course#data analyst in arumbakkam

Exploring Dynamic SQL in T-SQL Server

Dynamic SQL in T-SQL Server: A Complete Guide to Building Dynamic Queries. Hello, fellow SQL enthusiasts! In this blog post, I will introduce you to Dynamic SQL, one of the most powerful and flexible features of T-SQL in SQL Server. Dynamic SQL lets you construct and execute SQL statements at runtime, enabling more adaptable and responsive database operations. It…
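The post's own examples are not part of this excerpt. As a minimal sketch of the idea, the Python code below uses the standard-library `sqlite3` module as a stand-in for SQL Server (in T-SQL you would typically execute the assembled string with `sp_executesql`). The `customers` table, its columns, and the `ALLOWED_COLUMNS` allow-list are hypothetical. The point it illustrates: column names cannot be passed as query parameters, so they are checked against an allow-list before being spliced into the statement, while values stay bound parameters.

```python
import sqlite3

# Hypothetical allow-list of columns that callers may filter or sort on.
ALLOWED_COLUMNS = {"name", "city", "age"}


def build_query(filter_column: str, sort_column: str) -> str:
    """Assemble a SELECT statement at runtime from caller-chosen column names."""
    for column in (filter_column, sort_column):
        if column not in ALLOWED_COLUMNS:
            raise ValueError(f"column not allowed: {column!r}")
    # Column names were validated above and are spliced into the text;
    # the filter value stays a bound parameter (the "?" placeholder).
    return (f'SELECT name FROM customers WHERE "{filter_column}" = ? '
            f'ORDER BY "{sort_column}"')


def run_dynamic(conn: sqlite3.Connection, filter_column: str,
                value, sort_column: str) -> list:
    """Build the statement, execute it with the bound value, return the names."""
    sql = build_query(filter_column, sort_column)
    return [row[0] for row in conn.execute(sql, (value,))]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (name TEXT, city TEXT, age INTEGER)")
    conn.executemany(
        "INSERT INTO customers VALUES (?, ?, ?)",
        [("Asha", "Chennai", 31), ("Ravi", "Chennai", 25), ("Meena", "Bangalore", 40)],
    )
    print(run_dynamic(conn, "city", "Chennai", "age"))  # ['Ravi', 'Asha']
```

Validating identifiers separately from values is the usual way to keep runtime-built statements flexible without opening the door to SQL injection.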

Microsoft Fabric data warehouse

What Is Microsoft Fabric, and Why Should You Care?

A unified Software as a Service (SaaS) offering that provides an end-to-end analytics platform

Brings many tools together in one place; Microsoft Fabric OneLake supports seamless integration, enabling collaboration on this unified data analytics platform

Scalable Analytics

Accessible from anywhere with an internet connection

Streamlines collaboration among data professionals

Empowers a low-code/no-code approach

Components of Microsoft Fabric

Fabric provides comprehensive data analytics solutions, encompassing services for data movement and transformation, analysis and actions, and deriving insights and patterns through machine learning. Although Microsoft Fabric includes several components, this article focuses on three primary experiences: Data Factory, Data Warehouse, and Power BI.

Lakehouse vs. Warehouse: Which Data Storage Solution is Right for You?

In simple terms, both Lakehouses and Warehouses store their data in the Delta (Delta Lake) format, which keeps the data in Parquet files and adds a transaction log on top of them.

Usage and Format Support

A Lakehouse combines the capabilities of a data lake and a data warehouse, supporting unstructured, semi-structured, and structured formats. In contrast, a Warehouse supports only structured formats.

When your organization needs to process big data characterized by high volume, velocity, and variety, and you need to load and transform data with the Spark engine via notebooks, a Lakehouse is recommended. A Lakehouse can process both structured tables and unstructured or semi-structured files, and it offers managed and external table options. Microsoft Fabric OneLake serves as the foundational layer for storing both structured and unstructured data. Notebooks can be used for READ and WRITE operations in a Lakehouse. However, an SQL client cannot connect to a Lakehouse directly; it has to go through the Lakehouse's SQL endpoint.

On the other hand, a Warehouse excels at processing and storing structured data, using stored procedures, tables, and views. Processing data in a Warehouse requires only T-SQL knowledge. It functions much like a typical RDBMS, but with a different internal storage architecture: each table’s data is stored in the Delta format within OneLake. Users can access Warehouse data directly from any SQL client or the built-in graphical SQL editor, performing READ and WRITE operations with T-SQL and its elements such as stored procedures and views. Notebooks can also connect to the Warehouse, but only for READ operations.

An SQL endpoint is a connection point that lets other programs, such as SQL clients and Power BI, talk to data stored in Fabric using SQL. Through it you can send queries to find specific data, much as a search engine finds pages on the internet, except that here you are searching your own data in Fabric. Whether you can also change data through the endpoint depends on the item: a Warehouse accepts T-SQL writes, while the SQL endpoint of a Lakehouse is read-only for its tables.

Choosing Between Lakehouse and Warehouse

The decision to use a Lakehouse or Warehouse depends on several factors:

Migrating from a Traditional Data Warehouse: If your organization does not have big data processing requirements, a Warehouse is suitable.

Migrating from a Mixed Big Data and Traditional RDBMS System: If your existing solution includes both a big data platform and traditional RDBMS systems with structured data, using both a Lakehouse and a Warehouse is ideal. Perform big data operations with notebooks connected to the Lakehouse and RDBMS operations with T-SQL connected to the Warehouse.

Note: In both scenarios, once the data resides in either a Lakehouse or a Warehouse, Power BI can connect to both using SQL endpoints.

A Glimpse into the Data Factory Experience in Microsoft Fabric

In the Data Factory experience, we focus primarily on two items: Data Pipeline and Data Flow.

Data Pipelines

Used to orchestrate different activities for extracting, loading, and transforming data.

Ideal for building reusable code that can be utilized across other modules.

Enables activity-level monitoring.

What can Data Pipelines be compared to?

Data Pipelines are similar to, but not the same as:

Informatica -> Workflows

ODI -> Packages

Dataflows

Utilized when a GUI tool with Power Query UI experience is required for building Extract, Transform, and Load (ETL) logic.

Employed when individual selection of source and destination components is necessary, along with the inclusion of various transformation logic for each table.

What can Dataflows be compared to?

Dataflows are similar to, but not the same as:

Informatica -> Mappings

ODI -> Mappings / Interfaces

Are You Ready to Migrate Your Data Warehouse to Microsoft Fabric?

Here is our solution for implementing the Medallion Architecture with Fabric Data Warehouse:

Creation of New Workspace

We recommend creating separate workspaces for Semantic Models, Reports, and Data Pipelines as a best practice.

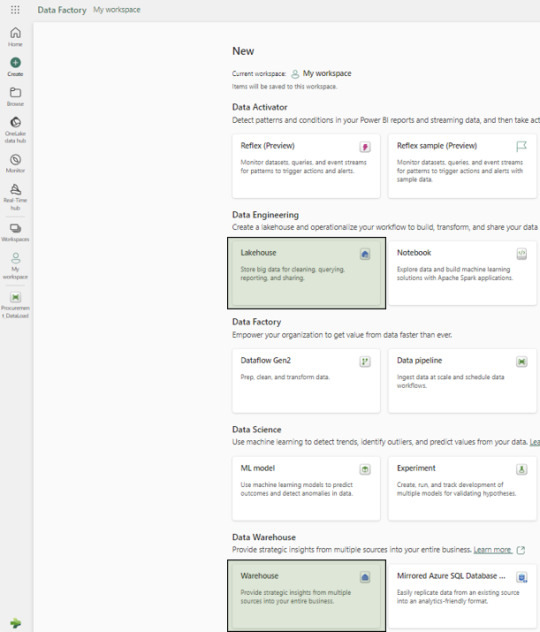

Creation of Warehouse and Lakehouse

Follow the on-screen instructions to set up a new Lakehouse and a Warehouse.

Configuration Setups

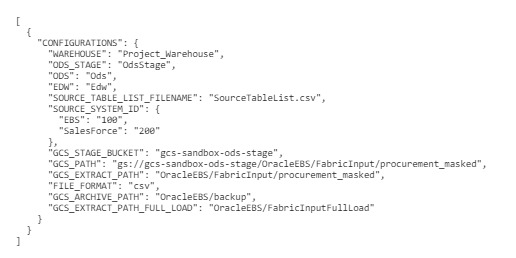

Create a configurations.json file containing parameters for data pipeline activities:

Source schema, buckets, and path

Destination warehouse name

Names of the warehouse layers for bronze, silver, and gold: OdsStage, Ods, and Edw

List of source tables/files in a specific format

Source system IDs for the different sources

Below is a screenshot of the configuration file (config_variables.json):

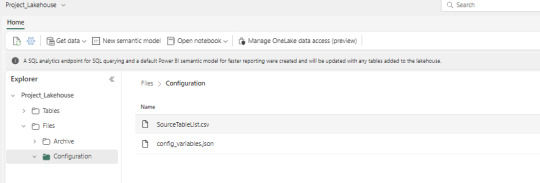
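The screenshot's exact contents are not reproduced in this text, so the Python sketch below uses hypothetical key names that simply mirror the parameters listed in the configuration step (source schema, bucket and path; destination warehouse; layer names; table list; source system IDs). It shows one way a pipeline helper might load such a file and fail fast when a parameter is missing; it is not the authors' file format.

```python
import json

# Hypothetical structure: key names mirror the parameters described in the
# text, not the actual contents of the original configuration file.
SAMPLE_CONFIG = """
{
  "source": {"schema": "src", "bucket": "raw-bucket", "path": "landing/"},
  "destination_warehouse": "EdwWarehouse",
  "layers": {"bronze": "OdsStage", "silver": "Ods", "gold": "Edw"},
  "source_table_list": "SourceTableList.csv",
  "source_system_ids": {"ERP": 1, "CRM": 2}
}
"""

REQUIRED_KEYS = {"source", "destination_warehouse", "layers",
                 "source_table_list", "source_system_ids"}


def load_config(text: str) -> dict:
    """Parse the configuration and fail fast if a required parameter is missing."""
    config = json.loads(text)
    missing = REQUIRED_KEYS - set(config)
    if missing:
        raise KeyError(f"missing config keys: {sorted(missing)}")
    for layer in ("bronze", "silver", "gold"):
        if layer not in config["layers"]:
            raise KeyError(f"missing schema name for layer: {layer}")
    return config


def target_table(config: dict, layer: str, table: str) -> str:
    """Return a schema-qualified name, e.g. layer 'silver' -> 'Ods.Customer'."""
    return f"{config['layers'][layer]}.{table}"


if __name__ == "__main__":
    cfg = load_config(SAMPLE_CONFIG)
    print(target_table(cfg, "bronze", "Customer"))  # OdsStage.Customer
```

Keeping the layer-to-schema mapping in configuration means the same activities can target OdsStage, Ods, or Edw without hard-coding schema names.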

File Placement

Place the configurations.json and SourceTableList.csv files in the Fabric Lakehouse.

The SourceTableList file has columns such as SourceSystem, SourceDatasetId, TableName, PrimaryKey, UpdateKey, CDCColumnName, SoftDeleteColumn, ArchiveDate, and ArchiveKey.
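To show how those columns might feed the pipeline, here is a minimal Python sketch that reads the table list into typed records, one per table, which is roughly what a Lookup activity hands to a ForEach. The sample rows are invented, and the convention that composite keys are separated by ";" is an assumption, not something the original specifies.

```python
import csv
import io
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical sample rows; composite keys are ASSUMED to be ";"-separated.
SAMPLE_CSV = """
SourceSystem,SourceDatasetId,TableName,PrimaryKey,UpdateKey,CDCColumnName,SoftDeleteColumn,ArchiveDate,ArchiveKey
ERP,101,Customer,CustomerId,CustomerId,LastModified,IsDeleted,,
ERP,102,OrderLine,OrderId;LineNo,OrderId;LineNo,LastModified,,,
"""


@dataclass
class SourceTable:
    source_system: str
    dataset_id: int
    table_name: str
    primary_key: List[str]
    update_key: List[str]
    cdc_column: Optional[str]
    soft_delete_column: Optional[str]


def split_keys(value: str) -> List[str]:
    """Turn 'A;B' into ['A', 'B'], ignoring empty parts."""
    return [part.strip() for part in value.split(";") if part.strip()]


def parse_table_list(text: str) -> List[SourceTable]:
    """Read the table list into records (ArchiveDate/ArchiveKey are ignored here)."""
    # strip() drops the leading blank line so the first row is the header.
    reader = csv.DictReader(io.StringIO(text.strip()))
    return [
        SourceTable(
            source_system=row["SourceSystem"],
            dataset_id=int(row["SourceDatasetId"]),
            table_name=row["TableName"],
            primary_key=split_keys(row["PrimaryKey"]),
            update_key=split_keys(row["UpdateKey"]),
            cdc_column=row["CDCColumnName"] or None,
            soft_delete_column=row["SoftDeleteColumn"] or None,
        )
        for row in reader
    ]


if __name__ == "__main__":
    for table in parse_table_list(SAMPLE_CSV):
        print(table.table_name, table.primary_key)
```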

Data Pipeline Creation

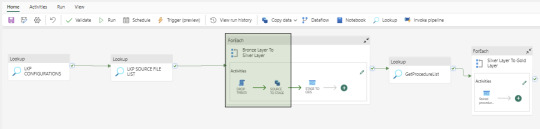

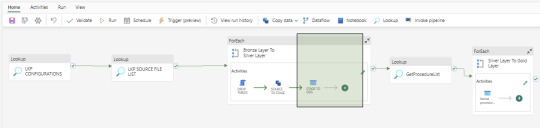

Create a data pipeline to orchestrate the activities for data extraction, loading, and transformation. The screenshots of the Data Pipeline show its activities: Lookup, ForEach, Script, Copy Data, and Stored Procedure.
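To make the control flow concrete, here is a minimal Python model, not Fabric code: a Lookup returns one row per source table, and a ForEach runs the per-table activities for each row. The activity functions are hypothetical stand-ins that only return log messages; in Fabric each would be a configured pipeline activity. The model runs iterations one after another, which is a simplification.

```python
from typing import Callable, Dict, List

Item = Dict[str, str]
Activity = Callable[[Item], str]


def run_pipeline(lookup: Callable[[], List[Item]],
                 activities: List[Activity]) -> List[str]:
    """Model a Lookup feeding a ForEach whose body runs activities in order."""
    log: List[str] = []
    for item in lookup():              # Lookup: one row per source table
        for activity in activities:    # ForEach body: per-table activities
            log.append(activity(item))
    return log


# Hypothetical stand-ins for the Script, Copy Data and Stored Procedure activities.
def drop_staging(item: Item) -> str:
    return f"script: drop OdsStage.{item['TableName']}"


def copy_data(item: Item) -> str:
    return f"copy: load OdsStage.{item['TableName']}"


def upsert_silver(item: Item) -> str:
    return f"proc: upsert Ods.{item['TableName']}"


def sample_lookup() -> List[Item]:
    return [{"TableName": "Customer"}, {"TableName": "OrderLine"}]


if __name__ == "__main__":
    for line in run_pipeline(sample_lookup, [drop_staging, copy_data, upsert_silver]):
        print(line)
```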

Bronze Layer Loading

Develop a dynamic activity to load data into the Bronze Layer (OdsStage schema in Warehouse). This layer truncates and reloads data each time.

We utilize two activities in this layer: Script Activity and Copy Data Activity. Both activities receive parameterized inputs from the Configuration file and SourceTableList file. The Script activity drops the staging table, and the Copy Data activity creates and loads data into the OdsStage table. These activities are reusable across modules and feature powerful capabilities for fast data loading.
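The truncate-and-reload behaviour can be sketched in Python with the built-in `sqlite3` module standing in for the Warehouse. This is only an illustration of the pattern, not the Fabric activities themselves: SQLite has no schemas, so a hypothetical `OdsStage_` table-name prefix stands in for the OdsStage schema, and table and column names are assumed to come from trusted configuration.

```python
import sqlite3
from typing import Iterable, Sequence


def load_bronze(conn: sqlite3.Connection, table: str,
                columns: Sequence[str], rows: Iterable[tuple]) -> int:
    """Truncate-and-reload one staging table and return its row count.

    Table and column names are assumed to come from trusted configuration.
    """
    # SQLite has no schemas, so an "OdsStage_" prefix stands in for OdsStage.
    staging = f"OdsStage_{table}"
    # Script activity equivalent: drop the staging table if it exists.
    conn.execute(f'DROP TABLE IF EXISTS "{staging}"')
    # Copy Data activity equivalent: recreate the table and bulk-load the rows.
    col_list = ", ".join(f'"{c}"' for c in columns)
    conn.execute(f'CREATE TABLE "{staging}" ({col_list})')
    placeholders = ", ".join("?" for _ in columns)
    conn.executemany(f'INSERT INTO "{staging}" VALUES ({placeholders})', rows)
    conn.commit()
    return conn.execute(f'SELECT COUNT(*) FROM "{staging}"').fetchone()[0]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    print(load_bronze(conn, "Customer", ["CustomerId", "Name"], [(1, "A"), (2, "B")]))
    # A second run replaces, rather than appends to, the staged data.
    print(load_bronze(conn, "Customer", ["CustomerId", "Name"], [(3, "C")]))
```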

Silver Layer Loading

Establish a dynamic activity to UPSERT data into the Silver layer (Ods schema in the Warehouse) using a Stored Procedure activity. The procedure takes parameterized inputs from the configuration file and the SourceTableList file and handles both UPDATE and INSERT operations, so the same procedure is reusable across tables. At the time of writing, Fabric Warehouse did not support MERGE statements, which is why the upsert is split into separate UPDATE and INSERT steps; this feature may be added in the future.

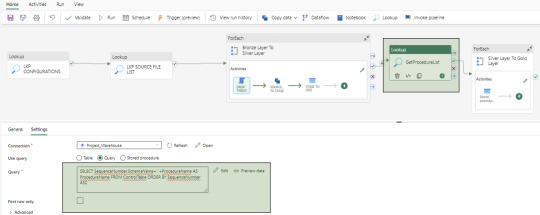
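Because the upsert is split into an UPDATE followed by an INSERT, the pattern can be sketched with Python's built-in `sqlite3` module standing in for the Warehouse. This is not the authors' stored procedure: the table names, the single-column key, and the generated SQL are assumptions for illustration. In T-SQL the same two steps could be written as UPDATE ... FROM with a join, followed by INSERT ... SELECT ... WHERE NOT EXISTS.

```python
import sqlite3
from typing import Sequence


def upsert(conn: sqlite3.Connection, target: str, staging: str,
           key: str, columns: Sequence[str]) -> None:
    """Emulate MERGE: update rows whose key already exists, then insert new keys.

    Table and column names are assumed to come from trusted configuration.
    """
    non_key = [c for c in columns if c != key]
    # Step 1 (UPDATE): copy staging values onto target rows with a matching key.
    if non_key:
        set_clause = ", ".join(
            f'"{c}" = (SELECT s."{c}" FROM "{staging}" AS s '
            f'WHERE s."{key}" = "{target}"."{key}")'
            for c in non_key
        )
        conn.execute(
            f'UPDATE "{target}" SET {set_clause} '
            f'WHERE "{key}" IN (SELECT "{key}" FROM "{staging}")'
        )
    # Step 2 (INSERT): add staging rows whose key is not yet in the target.
    col_list = ", ".join(f'"{c}"' for c in columns)
    conn.execute(
        f'INSERT INTO "{target}" ({col_list}) '
        f'SELECT {col_list} FROM "{staging}" AS s '
        f'WHERE NOT EXISTS (SELECT 1 FROM "{target}" AS t '
        f'WHERE t."{key}" = s."{key}")'
    )
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute('CREATE TABLE "Ods_Customer" ("CustomerId" INTEGER, "Name" TEXT)')
    conn.execute('CREATE TABLE "OdsStage_Customer" ("CustomerId" INTEGER, "Name" TEXT)')
    conn.executemany('INSERT INTO "Ods_Customer" VALUES (?, ?)', [(1, "A"), (2, "B")])
    conn.executemany('INSERT INTO "OdsStage_Customer" VALUES (?, ?)', [(2, "B2"), (3, "C")])
    upsert(conn, "Ods_Customer", "OdsStage_Customer", "CustomerId", ["CustomerId", "Name"])
    print(conn.execute('SELECT * FROM "Ods_Customer" ORDER BY "CustomerId"').fetchall())
```

Running the UPDATE before the INSERT matters: if the order were reversed, freshly inserted rows would immediately be updated again with the same values, which is harmless but wasted work.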

Control Table Creation

Create a control table in the Warehouse whose columns hold sequence numbers and procedure names, to manage dependencies between dimension, fact, and aggregate tables. Finally, fetch these values with a Lookup activity.
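As a rough illustration, the sketch below again uses `sqlite3` to stand in for the Warehouse. The table name `EtlControl`, its sample rows, and the procedure names are hypothetical. Ordering by `SequenceNumber` is what makes dimensions load before the facts and aggregates that depend on them; ties are broken by name only to make the order deterministic.

```python
import sqlite3
from typing import List

# Hypothetical control rows: dimensions (1), then facts (2), then aggregates (3).
CONTROL_ROWS = [
    (2, "usp_Load_FactSales"),
    (1, "usp_Load_DimCustomer"),
    (3, "usp_Load_AggMonthlySales"),
    (1, "usp_Load_DimProduct"),
]


def create_control_table(conn: sqlite3.Connection) -> None:
    """Create and populate the hypothetical control table."""
    conn.execute("CREATE TABLE EtlControl (SequenceNumber INTEGER, ProcedureName TEXT)")
    conn.executemany("INSERT INTO EtlControl VALUES (?, ?)", CONTROL_ROWS)


def fetch_run_order(conn: sqlite3.Connection) -> List[str]:
    """Lookup-activity equivalent: procedure names in dependency order."""
    rows = conn.execute(
        "SELECT ProcedureName FROM EtlControl ORDER BY SequenceNumber, ProcedureName"
    )
    return [name for (name,) in rows]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_control_table(conn)
    print(fetch_run_order(conn))
```

In the pipeline, each returned name would then be executed in turn by a Stored Procedure activity, so adding a new Gold-layer table only means adding a row to the control table.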

Gold Layer Loading

To load data into the Gold layer (Edw schema in the Warehouse), we develop individual stored procedures that UPSERT (UPDATE and INSERT) data for each dimension, fact, and aggregate table. Dataflows could also be used for this task, but we prefer stored procedures because they handle complex business logic better.

Dashboards and Reporting

Fabric includes the Power BI application, which can connect to the SQL endpoints of both the Lakehouse and Warehouse. These SQL endpoints allow for the creation of semantic models, which are then used to develop reports and applications. In our use case, the semantic models are built from the Gold layer (Edw schema in Warehouse) tables.

Upcoming Topics Preview

In the upcoming articles, we will cover topics such as notebooks, dataflows, lakehouse, security and other related subjects.

Conclusion

Microsoft Fabric Data Warehouse is a powerful, user-friendly data platform offering an extensive array of tools for data ingestion, storage, transformation, and analysis. Whether you’re a novice or a seasoned data analyst, Fabric helps you optimize your workflow and harness the full potential of your data.

We specialize in aiding organizations in meticulously planning and flawlessly executing data projects, ensuring utmost precision and efficiency.

Curious to hear more about this article?

Contact us at [email protected] or book time with me to organize a 100% free, no-obligation call.

Follow us on LinkedIn for more interesting updates!!

DataPlatr Inc. specializes in data engineering & analytics with pre-built data models for Enterprise Applications like SAP, Oracle EBS, Workday, Salesforce to empower businesses to unlock the full potential of their data. Our pre-built enterprise data engineering models are designed to expedite the development of data pipelines, data transformation, and integration, saving you time and resources.

Our team of experienced data engineers, scientists, and analysts uses cutting-edge data infrastructure to turn data into valuable insights and help enterprise clients optimize their Sales, Marketing, Operations, Financials, Supply Chain, Human Capital, and Customer Experience.

Unlocking the Future of Data Engineering with Ask On Data: An Open Source, GenAI-Powered NLP-Based Data Engineering Tool

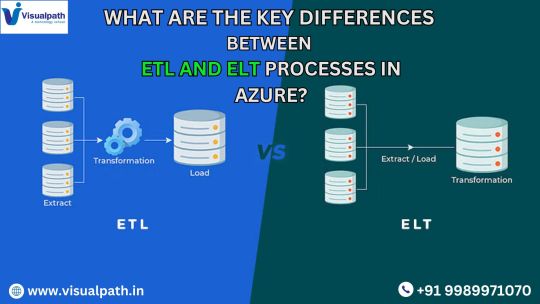

In the rapidly evolving world of data engineering, businesses and organizations face increasing challenges in managing and integrating vast amounts of data from diverse sources. Traditional data engineering processes, including ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform), often require specialized expertise, are time-consuming, and can be error-prone. To address these challenges, technologies like Generative AI (GenAI), Large Language Models (LLMs), and Natural Language Processing (NLP) are reshaping how data engineers and analysts work with data. One such solution is Ask On Data, an NLP-based data engineering tool that simplifies data integration, transformation, and loading through conversational interfaces.

What is Ask On Data?

Ask On Data is an open-source, NLP-based ETL tool that leverages Generative AI and Large Language Models (LLMs) to let users interact with complex data systems in natural language. With Ask On Data, the traditionally technical processes of data extraction, transformation, and loading are streamlined and made accessible to a broader range of users, including those without a deep technical background in data engineering.