#aws data storage services


Text

In today’s digital era, secure and scalable storage is essential for any growing business. AWS (Amazon Web Services) offers a comprehensive suite of cloud storage solutions that cater to diverse needs—ranging from backups and archiving to real-time data access and disaster recovery.

At 10Fingers, we understand the critical role reliable storage plays in managing digital records. AWS services like Amazon S3 (Simple Storage Service) provide highly durable, cost-effective object storage well suited to document digitization and retrieval. For high-performance file systems, Amazon EFS (Elastic File System) and Amazon FSx offer scalable storage tailored to business applications. Amazon S3 Glacier supports long-term archival at low cost while keeping data secure and retrievable when needed.

AWS also integrates advanced features like lifecycle policies, versioning, and cross-region replication, empowering organizations to optimize storage costs and enhance data protection.

Whether you're in healthcare, finance, or telecom, AWS storage solutions—combined with 10Fingers’ expertise in document management—deliver seamless digital transformation. From scanning to storage, we ensure your records are accessible, secure, and compliant with industry standards.

Choose 10Fingers with AWS to future-proof your data management journey.

0 notes

Text

Cloud Computing's Role in Remote Work During 2020

Introduction:

Cloud computing has been at the core of business processing worldwide, but the impact of remote work and digital transformation accelerated its adoption like never before. To survive and grow, businesses are turning to the cloud for scalability, security, and efficiency. Cloud computing is one of the most successful innovations since its entry into the mainstream market. It is safe to say that cloud computing and its benefits, such as cost savings, increased efficiency, and improved opportunities, are familiar to every IT professional today. According to a few reports, an estimated 83% of business workloads globally would be in the cloud by 2020, and current figures appear to match that estimate. Of that 83%, 41% of workloads are believed to run on public cloud platforms.

All the major sectors of businesses such as financial institutions, manufacturing, and healthcare have significantly progressed in adopting the cloud in recent times.

How Will the Cloud Help Businesses?

Despite concerns about the cloud, such as security and data loss, cloud platforms have ways to protect data so that it remains accessible and secure at all times. Here are some of the ways to use the cloud for your business in 2020.

Data Archival and Storage Optimization

Businesses are aware of the importance of data when it comes to strategy and forecasting. With 2020 being the last year of the decade, cloud computing will play an important role in data storage and archival. In response, cloud service providers are already offering clients extra space for 2020.

Enabling a Mobile Workplace

With the rise of shared-workspace businesses such as Bootstart and Awfis, startups require flexible IT infrastructure. The cloud is well suited to this because it is accessible from any part of the world at any time, which enhances productivity and collaboration.

Secure Data Sharing

Whether you’re at the office or somewhere else, a cloud-based platform enables real-time data sharing with ease. Once the data is backed up, sharing files is as easy as sharing a link.

Cost-effective File Storage

For small and mid-sized businesses, cloud-based storage eliminates the need for physical hard drives, offering a scalable, secure, and environmentally sustainable alternative. Many data centers have transitioned to green technologies, contributing to lower carbon footprints. The cloud is a strong replacement for small businesses and startups that currently store data on local systems.

Scaling Business Growth

Cloud computing allows businesses to scale operations on demand without heavy capital investment. Companies can expand or reduce cloud resources as required, eliminating the need for costly in-house IT infrastructure.

Cost Reduction

Paying a full-fledged in-house team is not feasible for many small businesses and startups. Managed cloud service providers are a practical alternative: they take responsibility for maintaining your business's software, hardware, and infrastructure at a much lower cost.

Cloud Goes Global

Given all these advantages, 2020 will play a major role in the growth of cloud innovation for business, with IoT, AI, and big data integration accelerating digital transformation. This will lead to greater collaboration and communication among companies worldwide.

Key Takeaway: The Future of Cloud Computing

Ongoing research will drive more innovation, which means that each passing year will bring new challenges and more opportunities for the IT industry. Innovative technologies will make businesses rethink their strategic needs and infrastructure to remain competitive. For businesses aiming for agility and versatility, efficiency will be an achievement in itself.

By leveraging cloud consultancy services, businesses can stay updated with industry trends, optimize infrastructure, and drive sustainable growth in the market with the cloud.

#Cloud Computing Services#Cloud Computing#Cloud Adoption#cloud service providers#aws cloud consulting services#cloud consulting services#cloud services#Cost reduction#file storage#data storage

0 notes

Text

AWS Cloud Migration: Why Dubai Businesses Are Making the Shift

Introduction

Dubai, a global business hub known for its rapid innovation and futuristic vision, has been at the forefront of digital transformation. With enterprises striving to stay competitive and agile, cloud migration has become a strategic imperative. Among the leading cloud platforms, Amazon Web Services (AWS) in Dubai has emerged as the go-to solution for businesses. But why are so many companies in the region shifting to AWS? This article delves into the key drivers, benefits, and strategies behind this transformative move.

Understanding Cloud Migration with AWS

Cloud migration involves moving digital operations from on-premises servers to cloud-based infrastructure. AWS, a pioneer in cloud computing, provides scalable, secure, and cost-effective solutions that cater to businesses of all sizes. AWS migration services simplify the process by offering tools, frameworks, and support to ensure a seamless transition.

For Dubai's businesses, cloud migration isn't just a technological upgrade—it's a fundamental step toward embracing innovation and scalability in an increasingly digital economy.

Why Dubai Businesses Are Choosing AWS for Cloud Migration

Cost Efficiency

One of the primary reasons businesses in Dubai migrate to AWS is cost savings. Maintaining on-premises IT infrastructure involves significant capital expenditures (CapEx) on hardware, software, and ongoing maintenance. AWS operates on a pay-as-you-go model, converting these costs into manageable operational expenses (OpEx).

Moreover, AWS offers cost optimization tools, such as AWS Cost Explorer and AWS Budgets, allowing businesses to monitor and control their expenses effectively.

Scalability and Flexibility

Dubai is home to dynamic industries like retail, hospitality, and fintech, which experience fluctuating demands. AWS provides elastic scalability, enabling businesses to adjust their resources based on demand. Whether it's a peak shopping season or launching a new service, AWS lets companies scale up or down without disruptions.

Global Reach with Local Presence

AWS operates a vast network of data centers globally, which helps keep latency low and reliability high. For Dubai-based businesses, AWS’s Middle East (UAE) Region helps them meet local data residency requirements and delivers faster performance for end users in the region.

Enhanced Security

Cybersecurity is a top priority for Dubai businesses, especially with the rise of cyber threats. AWS offers a robust security framework, including features like encryption, identity and access management (IAM), and continuous monitoring through tools like AWS CloudTrail and AWS Config. These help keep sensitive data and applications protected.

Support for Innovation

AWS fosters innovation by providing cutting-edge services in areas like artificial intelligence (AI), machine learning (ML), Internet of Things (IoT), and analytics. Dubai's businesses, especially in smart city initiatives and e-commerce, leverage these tools to stay ahead of the curve.

Key Benefits of AWS Cloud Migration

Improved Operational Efficiency

By migrating to AWS, businesses can automate routine tasks, optimize workflows, and reduce the burden on IT teams. Tools like AWS Lambda and AWS Step Functions allow businesses to build serverless architectures, streamlining operations.

Business Continuity and Disaster Recovery

AWS ensures high availability and disaster recovery with services like Amazon S3 for storage, Amazon RDS for managed databases, and AWS Backup. These solutions minimize downtime and protect critical business data, which is crucial for Dubai’s fast-paced markets.

Accelerated Time to Market

The agility of AWS helps Dubai businesses launch products and services faster. AWS CloudFormation, for instance, automates infrastructure provisioning, enabling rapid deployment and iteration.

Sustainability Goals

Dubai’s focus on sustainability aligns with AWS’s commitment to renewable energy. AWS data centers are designed for energy efficiency, helping businesses reduce their carbon footprint while achieving their green goals.

Industries Driving AWS Cloud Adoption in Dubai

E-commerce

Dubai’s booming e-commerce sector benefits significantly from AWS's scalable and secure infrastructure. Services like Amazon CloudFront ensure faster content delivery, while AWS Marketplace provides easy access to pre-configured solutions.

Finance

The financial sector in Dubai, driven by fintech innovation, leverages AWS for secure transaction processing and data analytics. AWS services like Amazon Athena and Amazon Redshift help financial institutions make data-driven decisions.

Healthcare

Healthcare providers in Dubai are adopting AWS for secure patient data storage, telemedicine solutions, and AI-driven diagnostics. AWS HealthLake and Amazon SageMaker are popular tools in this domain.

Hospitality

Dubai's world-renowned hospitality industry utilizes AWS for personalized guest experiences, booking system optimization, and real-time analytics.

Challenges and Solutions in AWS Cloud Migration

While the benefits of AWS cloud migration are evident, businesses must address certain challenges:

Skill Gaps

Many organizations face a lack of skilled personnel to manage cloud infrastructure. AWS addresses this with extensive training programs like AWS Training and Certification, as well as a global network of AWS-certified consulting partners.

Migration Complexity

Moving legacy systems to the cloud can be complex. AWS Migration Hub simplifies the process by providing a centralized dashboard to track and manage migrations.

Cost Management

Businesses must monitor cloud spending to avoid unnecessary expenses. AWS Trusted Advisor, together with pricing options such as Reserved Instances, helps optimize costs.

Steps for a Successful AWS Cloud Migration

Assess and Plan

Conduct a detailed assessment of existing infrastructure and identify workloads for migration. Use AWS tools like AWS Application Discovery Service for insights.

Choose the Right Migration Strategy

AWS outlines six common strategies, known as the "6 Rs": Rehost, Replatform, Repurchase, Refactor, Retire, and Retain. Select the strategy that best suits your business needs.

Leverage AWS Support

Engage with AWS consulting partners in Dubai to navigate the migration journey. These experts bring local insights and technical expertise.

Optimize Post-Migration

Continuously monitor and optimize workloads to maximize efficiency. Use services like Amazon CloudWatch and AWS Auto Scaling for ongoing management.

Conclusion

Dubai’s businesses are embracing AWS cloud migration as a catalyst for innovation, agility, and growth. With its robust infrastructure, cost-effective solutions, and commitment to security, AWS empowers organizations to thrive in a competitive landscape. By overcoming migration challenges and leveraging AWS's advanced tools and services, Dubai-based enterprises can position themselves as leaders in the digital economy.

As Dubai continues its journey toward becoming a global tech hub, the shift to AWS is not just a trend but a strategic move that aligns with the city’s ambitious vision for the future.

#aws security solution#aws security services#aws data storage#aws backup services#aws consulting partners#aws consulting services#aws consulting services in dubai#aws cloud migration in Dubai#AWS migration service in dubai

0 notes

Text

Why AWS is Becoming Essential for Modern IT Professionals

In today's fast-paced tech landscape, the integration of development and operations has become crucial for delivering high-quality software efficiently. AWS DevOps is at the forefront of this transformation, enabling organizations to streamline their processes, enhance collaboration, and achieve faster deployment cycles. For IT professionals looking to stay relevant in this evolving environment, pursuing AWS DevOps training in Hyderabad is a strategic choice. Let’s explore why AWS DevOps is essential and how training can set you up for success.

The Rise of AWS DevOps

1. Enhanced Collaboration

AWS DevOps emphasizes the collaboration between development and operations teams, breaking down silos that often hinder productivity. By fostering communication and cooperation, organizations can respond more quickly to changes and requirements. This shift is vital for businesses aiming to stay competitive in today’s market.

2. Increased Efficiency

With AWS DevOps practices, automation plays a key role. Tasks that were once manual and time-consuming, such as testing and deployment, can now be automated using AWS tools. This not only speeds up the development process but also reduces the likelihood of human error. By mastering these automation techniques through AWS DevOps training in Hyderabad, professionals can contribute significantly to their teams' efficiency.

Benefits of AWS DevOps Training

1. Comprehensive Skill Development

An AWS DevOps training program in Hyderabad covers a wide range of essential topics, including:

AWS services such as EC2, S3, and Lambda

Continuous Integration and Continuous Deployment (CI/CD) pipelines

Infrastructure as Code (IaC) with tools like AWS CloudFormation

Monitoring and logging with Amazon CloudWatch

This comprehensive curriculum equips you with the skills needed to thrive in modern IT environments.

2. Hands-On Experience

Most training programs emphasize practical, hands-on experience. You'll work on real-world projects that allow you to apply the concepts you've learned. This experience is invaluable for building confidence and competence in AWS DevOps practices.

3. Industry-Recognized Certifications

Earning AWS certifications, such as the AWS Certified DevOps Engineer, can significantly enhance your resume. Completing AWS DevOps training in Hyderabad prepares you for these certifications, demonstrating your commitment to professional development and expertise in the field.

4. Networking Opportunities

Participating in an AWS DevOps training program in Hyderabad also allows you to connect with industry professionals and peers. Building a network during your training can lead to job opportunities, mentorship, and collaborative projects that can advance your career.

Career Opportunities in AWS DevOps

1. Diverse Roles

With expertise in AWS DevOps, you can pursue various roles, including:

DevOps Engineer

Site Reliability Engineer (SRE)

Cloud Architect

Automation Engineer

Each role offers unique challenges and opportunities for growth, making AWS DevOps skills highly valuable.

2. High Demand and Salary Potential

The demand for DevOps professionals, particularly those skilled in AWS, is skyrocketing. Organizations are actively seeking AWS-certified candidates who can implement effective DevOps practices. According to industry reports, these professionals often command competitive salaries, making AWS DevOps training in Hyderabad a wise investment.

3. Job Security

As more companies adopt cloud solutions and DevOps practices, the need for skilled professionals will continue to grow. This trend indicates that expertise in AWS DevOps can provide long-term job security and career advancement opportunities.

Staying Relevant in a Rapidly Changing Industry

1. Continuous Learning

The tech industry is continually evolving, and AWS regularly introduces new tools and features. Staying updated with these advancements is crucial for maintaining your relevance in the field. Consider pursuing additional certifications or training courses to deepen your expertise.

2. Community Engagement

Engaging with AWS and DevOps communities can provide insights into industry trends and best practices. These networks often share valuable resources, training materials, and opportunities for collaboration.

Conclusion

As the demand for efficient software delivery continues to rise, AWS DevOps expertise has become essential for modern IT professionals. Investing in AWS DevOps training in Hyderabad will equip you with the skills and knowledge needed to excel in this dynamic field.

By enhancing your capabilities in collaboration, automation, and continuous delivery, you can position yourself for a successful career in AWS DevOps. Don’t miss the opportunity to elevate your professional journey. Consider enrolling in an AWS DevOps training program in Hyderabad today and unlock your potential in the world of cloud computing!

#technology#aws devops training in hyderabad#aws course in hyderabad#aws training in hyderabad#aws coaching centers in hyderabad#aws devops course in hyderabad#Cloud Computing#DevOps#AWS#AZURE#CloudComputing#Cloud Computing & DevOps#Cloud Computing Course#DeVOps course#AWS COURSE#AZURE COURSE#Cloud Computing CAREER#Cloud Computing jobs#Data Storage#Cloud Technology#Cloud Services#Data Analytics#Cloud Computing Certification#Cloud Computing Course in Hyderabad#Cloud Architecture#amazon web services

0 notes

Text

In today's fast-paced digital landscape, cloud technology has emerged as a transformative force that empowers organizations to innovate, scale and adapt like never before. Learn more about our services, go through our blogs, study materials, case studies - https://bit.ly/463FjrO

#engineering#technology#software#softwaredevelopment#cloud#data#itservice#engineeringservices#Nitorinfotech#ascendion#softwareservices#itconsultancycompany#itcompany#cloud pillar#what is cloud data storage#aws cloud migration services#cloud engineering services#pillars of cloud#gcp cloud vision#google cloud#google cloud platform#google cloud console#cloud computing trends#cloud storage services#cloud storage

0 notes

Text

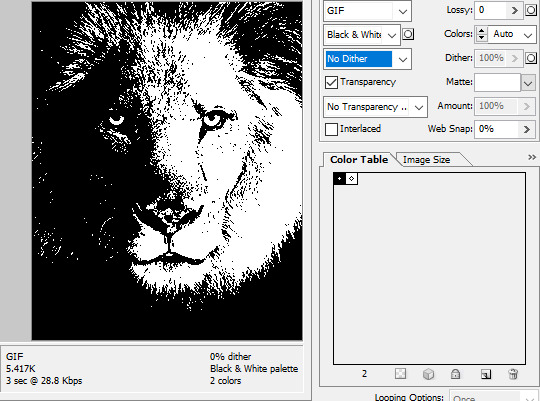

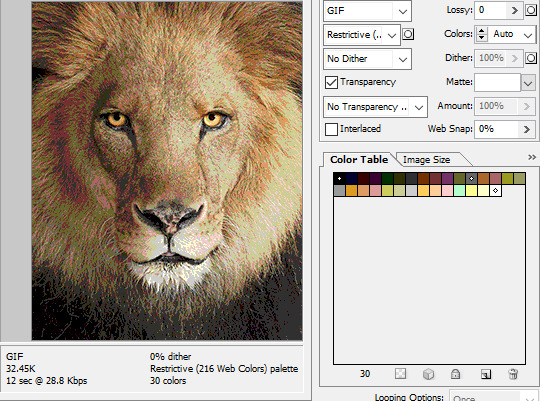

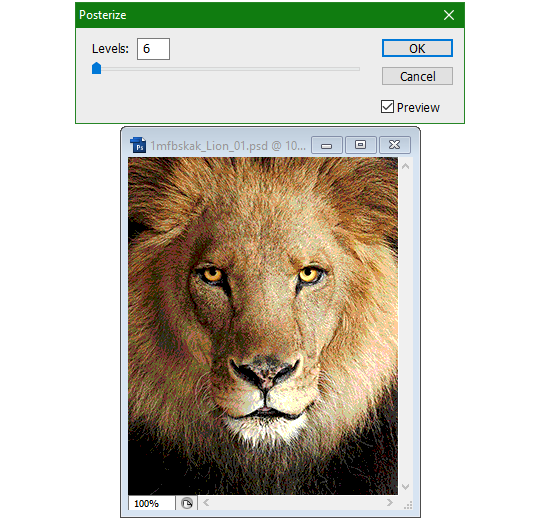

Tidbit: The “Posterization” Effect of Panels Due to the Consequences of GIF Color Quantization (and Increased Contrast (And Also The Tangential Matter of Dithering))

There’s this misconception that the color banding and patterned dithering found in panels is an entirely deliberate, calculated effect that Hussie produced by running the image through some specific filter, but this isn’t the case, exactly. It wasn’t so much a conscious decision on his part as an unavoidable consequence of the medium he worked in: digital art in an age where bandwidth and storage were at a premium.

Not to delve too deeply into the history and technicalities of it, but the long and the short of it is back in the early nineties to late aughts (and even a bit further into the 10s), transferring and storing data over the web was not as fast, plentiful, and affordable as it is now. Filesize was a much more important consideration than the fidelity of an image when displaying it on the web. Especially so when you’re a hobbyist on a budget and paying for your own webhosting, or using a free service with a modest upload limit (even per file!). Besides, what good would it be to post your images online if it takes ages to load them over people's dial-up Internet? Don't even get me STARTED on the meager memory and power the average iGPU had to work with, too.

The original comic strip's resolution was a little more than halved and saved as a GIF rather than a large PNG. That's about an 82.13% reduction in filesize!

So in the early days it was very common for people to take their scans, photographs, and digital drawings and scale them down and publish them as smaller lossily compressed JPEGs or lossless GIFs, the latter of which came at the cost of color range. But GIF had wider browser support than its successor format, PNG ("PNG's not GIF"), and could be used for animations.

You'd've been hard-pressed to find Hussie use any PNGs himself then. In fact, I think literally the only times he ever personally employed them, rather than delegating the artwork to a member of the art team, were for some of the tiny shrunken-down text of a character talking far in the distance and a few select little icons.

PNGs support semi-transparency unlike GIFs, which is why Hussie used them to preserve the anti-aliasing on the text without having to add an opaque background color.

While PNGs can utilize over 16 million colors in a single image, GIFs have a hard limit of 256 colors per frame. For reference, this small image alone has 604 colors:

For those who can't do the math, 256 is a pretty damn small number.
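To put rough numbers on that gap, here's a small Python sketch (mine, not anything from the comic's actual workflow). It counts the distinct colors in a made-up 32×32 gradient and checks that against the GIF palette limit. The `gradient` image and the helper names `count_colors` and `fits_in_gif` are illustrative assumptions only.

```python
GIF_MAX_COLORS = 256        # one 8-bit palette index per pixel
PNG_TRUECOLOR = 256 ** 3    # 8 bits each for red, green and blue


def count_colors(pixels):
    """Return the number of distinct (r, g, b) tuples in a flat pixel list."""
    return len(set(pixels))


def fits_in_gif(pixels):
    """True if every color could be stored in a single GIF frame's palette."""
    return count_colors(pixels) <= GIF_MAX_COLORS


# Hypothetical 32x32 test image: a smooth two-axis gradient.
gradient = [(x * 8, y * 8, (x + y) * 4) for y in range(32) for x in range(32)]

print(PNG_TRUECOLOR)            # how many colors 24-bit PNG can address
print(count_colors(gradient))   # every pixel here is a unique color
print(fits_in_gif(gradient))    # so the palette has to be reduced first
```

Even this tiny gradient has four times as many colors as a GIF frame can hold, which is why some kind of color reduction is unavoidable on export.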

Smaller still were the palettes in a great deal of MSPA's panels early on in its run. Amazingly, a GIF such as this only uses 7 colors (8 if you count the alpha (which it is)).

Not that they were always strictly so low; occasionally some in the later acts of Homestuck had pretty high counts. This panel uses all 256 spots available, in fact.

If he had lowered the number any further, the quality would have been god-awful.

To the untrained eye, these bands of color below may seem to be the result of a posterization filter (an effect that reduces smooth areas of color into fewer harsh solid regions), but it's really because the image was exported as a GIF with no dithering applied.

Dithering, to the uninitiated, is how these colors are arranged together to compensate for the paltry palette, producing illusory additional colors. There are three algorithms in Photoshop for this: Diffusion, Pattern, and Noise.

Above is the original image and below is the image reduced to a completely binary 1-bit black and white color palette, to make the effect of each dithering algorithm more obvious.

Diffusion seemingly displaces the pixels around randomly, but it uses error diffusion to calculate what color each pixel should be. In other words, math bullshit. The Floyd-Steinberg algorithm is one such implementation of it, and is usually what this type of error diffusion dithering is called in other software, or some misnomer-ed variation thereof.
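For the curious, here's a minimal sketch of Floyd-Steinberg error diffusion on a grayscale image, reduced to 1-bit black and white like the examples in this post. It uses the textbook 7/16, 3/16, 5/16, 1/16 weights; whether Photoshop's Diffusion option uses exactly these numbers is an assumption on my part, and the function name is made up.

```python
def floyd_steinberg(gray):
    """Dither a 2D list of 0-255 grey values down to black (0) and white (255)."""
    h, w = len(gray), len(gray[0])
    work = [[float(v) for v in row] for row in gray]  # copy; errors accumulate here
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            old = work[y][x]
            new = 255 if old >= 128 else 0   # nearest color in the 2-color palette
            out[y][x] = new
            err = old - new                  # how wrong that rounding was
            # Push the error onto neighbours that have not been visited yet.
            for dx, dy, weight in ((1, 0, 7), (-1, 1, 3), (0, 1, 5), (1, 1, 1)):
                nx, ny = x + dx, y + dy
                if 0 <= nx < w and ny < h:
                    work[ny][nx] += err * weight / 16
    return out


flat_grey = [[128] * 8 for _ in range(8)]
for row in floyd_steinberg(flat_grey):
    print("".join("#" if v else "." for v in row))
```

The point to notice is that each pixel's result depends on the leftover error from the pixels before it, which is where the seemingly random look comes from.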

The usage of Pattern may hearken back to retro video game graphics for you, as older consoles also suffered from color palette limitations. Sometimes called Ordered dithering because of the orderly patterns it produces. At least, I assumed so. Its etymological roots probably stem from more math bullshit again.
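As a rough illustration of where the orderly patterns come from, here's a sketch of ordered dithering using a 4×4 Bayer threshold matrix. The Bayer matrix is a common textbook choice and an assumption here; Photoshop's Pattern option may well use a different matrix.

```python
# 4x4 Bayer matrix: a fixed grid of threshold ranks, tiled across the image.
BAYER_4X4 = (
    (0, 8, 2, 10),
    (12, 4, 14, 6),
    (3, 11, 1, 9),
    (15, 7, 13, 5),
)


def ordered_dither(gray):
    """Dither a 2D list of 0-255 grey values to black (0) and white (255)."""
    out = []
    for y, row in enumerate(gray):
        out_row = []
        for x, v in enumerate(row):
            # The threshold depends only on the pixel's position, not its neighbours.
            threshold = (BAYER_4X4[y % 4][x % 4] + 0.5) * 255 / 16
            out_row.append(255 if v > threshold else 0)
        out.append(out_row)
    return out


flat_grey = [[128] * 8 for _ in range(8)]
for row in ordered_dither(flat_grey):
    print("".join("#" if v else "." for v in row))
```

On a flat mid-grey this produces a plain checkerboard, and the same 4×4 tile repeats across the whole image, hence the "orderly" look.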

True to its name, Noise is noisy. It’s visually similar to Diffusion dithering, except much more random looking. At least, when binarized like this. Truth be told, I can’t tell the difference between the two at all when using a fuller color table on an image with a lot of detail. It was mainly intended to be used when exporting individual slices of an image that was to be “stitched” back together on a webpage, to mitigate visible seams in the dithering around the edges.

To sate your curiosity, here's how the image looks with no dithering at all:

People easily confuse an undithered gif as being the result of posterization, and you couldn't fault them for thinking so. They look almost entirely the same!
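Here's a small sketch of why the two are so easy to mix up. It uses one common formulation of posterization (snapping each channel to evenly spaced levels, which is not necessarily how Photoshop's filter does it) and compares that with undithered quantization to the nearest palette entry. The function names and the 4-level palette are assumptions for illustration.

```python
def posterize(value, levels):
    """Snap an 8-bit channel value (0-255) to one of `levels` evenly spaced steps."""
    step = 255 / (levels - 1)
    return round(round(value / step) * step)


def nearest(value, palette):
    """Undithered quantization: pick the closest palette entry."""
    return min(palette, key=lambda p: abs(p - value))


palette = [0, 85, 170, 255]   # hypothetical 4-entry greyscale palette
ramp = range(256)             # a smooth black-to-white gradient

posterized = [posterize(v, 4) for v in ramp]
quantized = [nearest(v, palette) for v in ramp]

print(sorted(set(posterized)))   # the gradient collapses into four bands
print(posterized == quantized)   # and here both methods give the same bands
```

When the palette happens to be evenly spaced, the two operations are literally the same thing. Real GIF palettes are chosen to fit the image rather than spaced evenly, so in practice the bands land in slightly different places.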

Although I was already aware of this fact when I was much younger, I'm guilty of posterizing myself while editing images back then. Figured I may as well reduce the color count beforehand to help keep the exported GIF looking as intended. I view this as a complete waste of time now, though, and amateurish. Takes away a bit of the authenticity of MSPA art, how the colors and details are so variable between panels. As for WHY they were so variable to begin with, choosing the settings to save the image as requires a judicious examination on a case-by-case basis. In other words, just playing around with the settings until it looks decent.

It's the process of striking a fine balance between an acceptable file size and a "meh, good enough" visual quality that I mentioned earlier. How many colors can you take away until it starts to look shit? Which dithering algorithm helps make it look not as shit while not totally ruining the compression efficacy?

Take, for example, this panel from Problem Sleuth. It has 16 colors, an average amount for the comic, and uses Diffusion dithering. Filesize: 34.5 KB.

Then there's this panel right afterwards. It has 8 colors (again, technically 7 + alpha channel since it's an animated gif), and uses Noise dithering this time. Filesize: 34.0 KB.

The more colors and animation frames there are, and the more complicated dithering there is, the bigger the file size is going to be. Despite the second panel having half the color count of the first, the heavily noisy dithering alone was enough to inflate the file size back up. On top of that, there's extra image information layered in for the animation, leaving only a mere 0.5 kilobyte difference between the two panels.

So why would Hussie pick the algorithm that compresses worse than the other? The answer: diffusion causes the dithering to jitter around between frames of animation. Recall its description from before, how it functions on nerd shit like math calculations. The way it calculates what each pixel's color will be is decided by the pixels' colors surrounding it, to put it simply. Any difference in the placement of pixels will cause these cascading changes in the dithering like the butterfly effect.

Diffusion dithering, 16 colors. Filesize: 25.2 KB

This isn't the case with Noise or Pattern dithering, since their algorithms use either a texture or a definite array of numbers (more boring nerd shit).
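The jitter is easy to reproduce in miniature. The sketch below dithers two one-row "frames" that differ by a single slightly darker pixel. `diffuse_row` does what Floyd-Steinberg does on a one-pixel-tall image (only the 7/16 share moving right survives), and `ordered_row` uses the first row of a Bayer matrix. Both are simplified stand-ins with made-up names, not Photoshop's actual algorithms.

```python
BAYER_ROW = (0, 8, 2, 10)   # first row of a 4x4 Bayer matrix (assumed pattern)


def diffuse_row(row):
    """Error diffusion along one row: 7/16 of each pixel's error moves right."""
    out, carry = [], 0.0
    for v in row:
        old = v + carry
        new = 255 if old >= 128 else 0
        out.append(new)
        carry = (old - new) * 7 / 16
    return out


def ordered_row(row):
    """Ordered dither: compare each pixel with a fixed, position-based threshold."""
    return [255 if v > (BAYER_ROW[x % 4] + 0.5) * 255 / 16 else 0
            for x, v in enumerate(row)]


def changed(a, b):
    """Count the positions where two dithered rows differ."""
    return sum(1 for p, q in zip(a, b) if p != q)


frame_a = [128] * 8
frame_b = [120] + [128] * 7   # next frame: only the first pixel is nudged

print(changed(diffuse_row(frame_a), diffuse_row(frame_b)))
print(changed(ordered_row(frame_a), ordered_row(frame_b)))
```

In this toy case the diffusion output flips at all eight pixels, while the ordered output doesn't change at all. That is the butterfly effect described above, and it's also why diffusion tends to hurt animated GIFs: unchanged regions stop being identical between frames.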

Noise dithering, 16 colors. Filesize: 31.9 KB

Pattern dithering, 16 colors. Filesize: 23.1 KB

There's a lot more I'd like to talk about, like the different color reduction algorithms, which dither algorithms generally compress better in what cases, and the upward and downward trends of each one’s use over the course of a comic, but since this isn’t a deep dive on GIF optimization, I might save that for another time. This post is already reaching further past the original scope it was meant to cover, and less than 10 images can be uploaded before hitting the limit, which is NOWHERE near enough for me. I should really reevaluate my definition of the word “tidbit”… Anyway, just know that this post suffers from sample selection bias, so while the panels above came from an early section of Problem Sleuth that generally had static panels with diffusion dithering and animated panels with noise dithering, there certainly were animated panels with diffusion later on despite the dither-jittering.

Alright, time to shotgun through the rest of this post, screw segueing. Increasing the contrast almost entirely with “Use Legacy” enabled spreads the tones of the image out evenly, causing the shadows and highlights to clip into pure black and white. The midtones become purely saturated colors. Using the Levels adjustment filter instead, moving both shadow and highlight input level sliders towards the middle also accomplishes the same thing, because, you know, linear readjustment. I'm really resisting the urge to go off on another tangent about color channels and the RGB additive color model.
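For a sense of what that linear readjustment does, here's a rough sketch of a Levels-style remap applied per channel. It's an approximation under my own assumptions (a straight linear stretch with clipping, and hypothetical slider values), not Photoshop's actual implementation.

```python
def levels(value, black_in, white_in):
    """Linearly remap so black_in -> 0 and white_in -> 255, clipping the rest."""
    scaled = (value - black_in) * 255 / (white_in - black_in)
    return max(0, min(255, round(scaled)))


def levels_rgb(color, black_in, white_in):
    """Apply the same remap to each of the red, green and blue channels."""
    return tuple(levels(c, black_in, white_in) for c in color)


# Sliders dragged almost all the way to the middle (hypothetical values).
print(levels_rgb((200, 100, 40), 120, 136))   # a dull orange
print(levels_rgb((30, 30, 30), 120, 136))     # a shadow
print(levels_rgb((230, 230, 230), 120, 136))  # a highlight
```

With the sliders that close together, nearly every channel gets pushed to either 0 or 255. Shadows and highlights clip to pure black and white, and a midtone like the dull orange above ends up as fully saturated red.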

Anyway, there aren't any examples in MSPA that are quite this extreme (at least in color, but I'll save that for a later post), but an image sufficiently high in contrast can be mistaken for being posterized at a glance. Hence the Guy Fieri banner. In preparation for this post, I was attempting to make a pixel-perfect recreation of that panel but hit a wall trying to figure out which and how many filters were used and what each one's settings were, so I sought the wisdom of those in the official Photoshop Discord server. The very first suggestion I got was a posterization filter, by someone who was a supposed senior professional and server moderator, no less. Fucking dipshit, there's too much detail preserved for it to be posterization. Dude totally dissed me and my efforts too, so fuck that moron. I spit on his name and curse his children, and his children's children. The philistines I have to put up with...

In the end, the bloody Guy Fieri recreation proved to be too much for me to get right. I got sort of close at times, but no cigar. These were some of the closest I could manage:

You might be left befuddled after all this, struggling to remember what the point of the blogpost even was. I had meant for it to be a clarification of GIFs and an argument against using the posterization filter, thinking it was never used in MSPA, but while gathering reference images, I found a panel from the Felt intermission that actually WAS posterized! So I’ll eat crow on this one... Whatever, it’s literally the ONE TIME ever.

I can tell it's posterization and not gif color quantization because of the pattern dithering and decently preserved details on the bomb and bull penis cane. There would have had to have been no dithering and way fewer colors than the 32, most of which were allotted to the bomb and cane. You can't really selectively choose what gets dithered or more colors like this otherwise.

Thank you for reading if you've gotten this far. That all might have been a lot to take in at once, so if you're still unclear about something, please don't hesitate to leave a question! And as always, here are the PSDs used in this post that are free to peruse.

371 notes


Quote

Most of the positive cashflow generated by Amazon.com doesn’t come from product sales, but from advertising. The website is primarily a conduit for delivering ads to consumers and collecting ad revenue from sellers. The merchandise delivered to your doors is just a means of harvesting your data and serving you ads on behalf of their real clients – the advertisers. Similar realities hold for Prime Video: Amazon typically spends more on acquiring content than they take in from subscription revenues and digital rentals or purchases. The main point of the digital content is, likewise, to collect consumer data, deliver ads, and expand consumption of content on behalf of Amazon’s clients. The Alexa-powered Echo devices follow the same logic. They were intended as tools to harvest user data and deliver ads. The devices themselves were typically sold at cost or at a loss. But, to date, the company has been unable to successfully monetize the Echo, so they shut down most of that division and discontinued its products. They’re now trying to launch a new line of Echo devices that use AI-tools to better harvest user data and nudge people towards buying particular products or consuming specific content on behalf of advertisers. It remains to be seen if this latest venture will also flop. But, critically, none of these initiatives are Amazon’s main source of revenue. That honor goes to their Amazon Web Service data collection, analytics and storage enterprise. AWS is their primary breadwinner and it’s not even close.

You Ask, I Answer: We Have Never Been Woke FAQ

9 notes

Text

The global backlash against the second Donald Trump administration keeps on growing. Canadians have boycotted US-made products, anti–Elon Musk posters have appeared across London amid widespread Tesla protests, and European officials have drastically increased military spending as US support for Ukraine falters. Dominant US tech services may be the next focus.

There are early signs that some European companies and governments are souring on their use of American cloud services provided by the three so-called hyperscalers. Between them, Google Cloud, Microsoft Azure, and Amazon Web Services (AWS) host vast swathes of the internet and keep thousands of businesses running. However, some organizations appear to be reconsidering their use of these companies’ cloud services—including servers, storage, and databases—citing uncertainties around privacy and data access fears under the Trump administration.

“There’s a huge appetite in Europe to de-risk or decouple the over-dependence on US tech companies, because there is a concern that they could be weaponized against European interests,” says Marietje Schaake, a nonresident fellow at Stanford’s Cyber Policy Center and a former, decade-long member of the European Parliament.

The moves may already be underway. On March 18, politicians in the Netherlands House of Representatives passed eight motions asking the government to reduce reliance on US tech companies and move to European alternatives. Days before, more than 100 organizations signed an open letter to European officials calling for the continent to become “more technologically independent” and saying the status quo creates “security and reliability risks.”

Two European-based cloud service companies, Exoscale and Elastx, tell WIRED they have seen an uptick in potential customers looking to abandon US cloud providers over the last two weeks—with some already starting to make the jump. Multiple technology advisers say they are having widespread discussions about what it would take to uproot services, data, and systems.

“We have more demand from across Europe,” says Mathias Nöbauer, the CEO of Swiss-based hosting provider Exoscale, adding there has been an increase in new customers seeking to move away from cloud giants. “Some customers were very explicit,” Nöbauer says. “Especially customers from Denmark being very explicit that they want to move away from US hyperscalers because of the US administration and what they said about Greenland.”

“It's a big worry about the uncertainty around everything. And from the Europeans’ perspective—that the US is maybe not on the same team as us any longer,” says Joakim Öhman, the CEO of Swedish cloud provider Elastx. “Those are the drivers that bring people or organizations to look at alternatives.”

Concerns have been raised about the current data-sharing agreement between the EU and US, which is designed to allow information to move between the two continents while protecting people’s rights. Multiple previous versions of the agreement have been struck down by European courts. At the end of January, Trump fired three Democrats from the Privacy and Civil Liberties Oversight Board (PCLOB), which helps manage the current agreement. The move could undermine or increase uncertainty around the agreement. In addition, Öhman says, he has heard concerns from firms about the CLOUD Act, which can allow US law enforcement to subpoena user data from tech companies, potentially including data that is stored in systems outside of the US.

Dave Cottlehuber, the founder of SkunkWerks, a small tech infrastructure firm in Austria, says he has been moving the company’s few servers and databases away from US providers to European services since the start of the year. “First and foremost, it’s about values,” Cottlehuber says. “For me, privacy is a right not a privilege.” Cottlehuber says the decision to move is easier for a small business such as his, but he argues it removes some taxes that are paid to the Trump administration. “The best thing I can do is to remove that small contribution of mine, and also at the same time, make sure that my customers’ privacy is respected and preserved,” Cottlehuber says.

Steffen Schmidt, the CEO of Medicusdata, a company that provides text-to-speech services to doctors and hospitals in Europe, says that having data in Europe has always “been a must,” but his customers have been asking for more in recent weeks. “Since the beginning of 2025, in addition to data residency guarantees, customers have actively asked us to use cloud providers that are natively European companies,” Schmidt says, adding that some of his services have been moved to Nöbauer’s Exoscale.

Harry Staight, a spokesperson for AWS, says it is “not accurate” that customers are moving from AWS to EU alternatives. “Our customers have control over where they store their data and how it is encrypted, and we make the AWS Cloud sovereign-by-design,” Staight says. “AWS services support encryption with customer managed keys that are inaccessible to AWS, which means customers have complete control of who accesses their data.” Staight says the membership of the PCLOB “does not impact” the agreements around EU-US data sharing and that the CLOUD Act has “additional safeguards for cloud content.” Google and Microsoft declined to comment.

The potential shift away from US tech firms is not just linked to cloud providers. Since January 15, visitors to the European Alternatives website increased more than 1,200 percent. The site lists everything from music streaming services to DDoS protection tools, says Marko Saric, a cofounder of European cloud analytics service Plausible. “We can certainly feel that something is going on,” Saric says, claiming that during the first 18 days of March the company has “beaten” the net recurring revenue growth it saw in January and February. “This is organic growth which cannot be explained by any seasonality or our activities,” he says.

While there are signs of movement, the impact is likely to be small—at least for now. Around the world, governments and businesses use multiple cloud services—such as authentication measures, hosting, data storage, and increasingly data centers providing AI processing—from the big three cloud and tech service providers. Cottlehuber says that, for large businesses, it may take many months, if not longer, to consider what needs to be moved, the risks involved, plus actually changing systems. “What happens if you have a hundred petabytes of storage, it's going to take years to move over the internet,” he says.
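Cottlehuber's estimate can be checked with back-of-the-envelope arithmetic. The sketch below is only illustrative: it assumes decimal petabytes, a link that runs at its full rate around the clock with no protocol overhead, and example link speeds of 1, 10, and 100 Gbps. None of those figures come from the article.

```python
SECONDS_PER_DAY = 86_400


def transfer_days(petabytes: float, gigabits_per_second: float) -> float:
    """Days needed to move `petabytes` of data over a link of the given speed."""
    bits = petabytes * 1e15 * 8                      # decimal petabytes -> bits
    seconds = bits / (gigabits_per_second * 1e9)     # link speed in bits per second
    return seconds / SECONDS_PER_DAY


if __name__ == "__main__":
    for gbps in (1, 10, 100):  # hypothetical sustained link speeds
        days = transfer_days(100, gbps)
        print(f"100 PB at {gbps} Gbps: {days:,.0f} days ({days / 365:.1f} years)")
```

Under these assumptions, a dedicated 10 Gbps link would need roughly two and a half years to move 100 petabytes, which is in line with the "years" in the quote.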

For years, European companies have struggled to compete with the likes of Google, Microsoft, and Amazon’s cloud services and technical infrastructure, which make billions every year. It may also be difficult to find services from alternative European cloud firms that match the scale of those the hyperscalers provide.

“If you are deep into the hyperscaler cloud ecosystem, you’ll struggle to find equivalent services elsewhere,” says Bert Hubert, an entrepreneur and former government regulator, who says he has heard of multiple new cloud migrations to US firms being put on hold or reconsidered. Hubert has argued that it is no longer “safe” for European governments to be moved to US clouds and that European alternatives can’t properly compete. “We sell a lot of fine wood here in Europe. But not that much furniture,” he says. However, that too could change.

Schaake, the former member of the European Parliament, says a combination of new investments, a different approach to buying public services, and a Europe-first approach or investing in a European technology stack could help to stimulate any wider moves on the continent. “The dramatic shift of the Trump administration is very tangible,” Schaake says. “The idea that anything could happen and that Europe should fend for itself is clear. Now we need to see the same kind of pace and leadership that we see with defense to actually turn this into meaningful action.”

7 notes

Text

Pegasus 1.2: High-Performance Video Language Model

Pegasus 1.2 revolutionises long-form video AI with high accuracy and low latency. Scalable video querying is supported by this commercial tool.

TwelveLabs and Amazon Web Services (AWS) announced that Amazon Bedrock will soon provide Marengo and Pegasus, TwelveLabs' cutting-edge multimodal foundation models. Amazon Bedrock, a managed service, lets developers access top AI models from leading organisations via a single API. With seamless access to TwelveLabs' comprehensive video understanding capabilities, developers and companies can change how they search for, assess, and derive insights from video content while relying on AWS's security, privacy, and performance. AWS will be the first cloud provider to offer TwelveLabs models.

Introducing Pegasus 1.2

Unlike many academic contexts, real-world video applications face two challenges:

Real-world videos range from a few seconds to several hours in length.

Proper temporal understanding is needed.

TwelveLabs is announcing Pegasus 1.2, a substantial upgrade to its industry-grade video language model, to meet commercial demands. Pegasus 1.2 achieves state-of-the-art performance on long videos. The model can handle hour-long videos with low latency, low cost, and best-in-class accuracy. Its embedded storage also caches videos, making it faster and cheaper to query the same video repeatedly.

Pegasus 1.2 is a cutting-edge technology that delivers corporate value through its intelligent, focused system architecture and excels in production-grade video processing pipelines.

Superior video language model for extended videos

Businesses need to handle long videos, yet processing time and time-to-value are important concerns. As input videos grow longer, a standard video processing and inference system cannot handle the orders of magnitude more frames involved, making it unsuitable for general adoption and commercial use. A commercial system must also answer prompts and queries accurately across longer time spans.

Latency

To evaluate Pegasus 1.2's speed, TwelveLabs compares time-to-first-token (TTFT) on videos of 3 to 60 minutes against the frontier model APIs GPT-4o and Gemini 1.5 Pro. Pegasus 1.2 shows consistent time-to-first-token latency for videos up to 15 minutes long and responds faster on longer material, thanks to its video-focused model design and optimised inference engine.
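For readers unfamiliar with the metric, time-to-first-token is simply the delay between sending a request and receiving the first piece of the streamed answer. The sketch below shows one way to measure it in general. The `fake_model_stream` generator is a hypothetical stand-in for a streaming model response, not the TwelveLabs API or the benchmark harness used in the comparison.

```python
import time
from typing import Iterable, Iterator, Tuple


def time_to_first_token(stream: Iterable[str]) -> Tuple[float, str]:
    """Return (seconds until the first token arrived, that token)."""
    start = time.perf_counter()
    iterator = iter(stream)
    first = next(iterator)  # blocks until the stream produces its first token
    return time.perf_counter() - start, first


def fake_model_stream(delay_s: float) -> Iterator[str]:
    """Hypothetical stand-in for a streaming model response."""
    time.sleep(delay_s)  # simulates video processing before the first token
    yield "The"
    yield " video"


if __name__ == "__main__":
    ttft, token = time_to_first_token(fake_model_stream(0.05))
    print(f"TTFT: {ttft:.3f}s, first token: {token!r}")
```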

Performance

Pegasus 1.2 is compared to frontier model APIs using VideoMME-Long, a subset of Video-MME that contains videos longer than 30 minutes. Pegasus 1.2 outperforms all flagship APIs, displaying state-of-the-art performance.

Pricing

Pegasus 1.2 provides best-in-class commercial video processing at low cost. TwelveLabs focusses on long videos and accurate temporal understanding rather than trying to do everything. This focused approach lets its highly optimised system perform well at a competitive price.

Better still, the system can generate many video-to-text outputs without costing much. Pegasus 1.2 produces rich video embeddings from indexed videos and saves them in the database for future API queries, allowing clients to keep building on them at little cost. Google Gemini 1.5 Pro's cache cost is $4.5 per hour of storage for 1 million tokens, which is around the token count for an hour of video. By contrast, TwelveLabs' integrated storage costs $0.09 per video hour per month, about 36,000 times less. This benefits customers with large video archives that need to understand everything cheaply.
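The 36,000-fold figure follows from putting both prices on the same monthly basis. The short calculation below reproduces it. The 30-day month is an assumption made here for the conversion, and the two prices are taken from the paragraph above.

```python
HOURS_PER_MONTH = 24 * 30  # assumption: a 30-day month

gemini_cache_per_hour = 4.50      # USD per hour of storage for ~1M tokens (quoted above)
pegasus_storage_per_month = 0.09  # USD per video hour per month (quoted above)


def monthly_cost(rate_per_hour: float) -> float:
    """Convert an hourly storage rate into a monthly one."""
    return rate_per_hour * HOURS_PER_MONTH


gemini_per_month = monthly_cost(gemini_cache_per_hour)
ratio = gemini_per_month / pegasus_storage_per_month

if __name__ == "__main__":
    print(f"Cache cost per video hour per month: ${gemini_per_month:,.2f}")
    print(f"Ratio to integrated storage: about {ratio:,.0f}x")
```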

Model Overview & Limitations

Architecture

Pegasus 1.2's encoder-decoder architecture for video understanding includes a video encoder, tokeniser, and large language model. Though efficient, its design allows for full analysis of textual and visual data.

These pieces form a cohesive system that can understand both long-term contextual information and fine-grained details. Its architecture illustrates that small models can interpret video well when careful design decisions are made and fundamental multimodal processing difficulties are solved creatively.

Restrictions

Safety and bias

Pegasus 1.2 contains safety protections, but like any AI model, it might produce objectionable or hazardous material without enough oversight and control. The safety and ethics of video foundation models are still being studied. TwelveLabs will provide a complete assessment and ethics report after more testing and feedback.

Hallucinations

Occasionally, Pegasus 1.2 may produce incorrect findings. Despite advances since Pegasus 1.1 to reduce hallucinations, users should be aware of this constraint, especially for precise and factual tasks.

#technology#technews#govindhtech#news#technologynews#AI#artificial intelligence#Pegasus 1.2#TwelveLabs#Amazon Bedrock#Gemini 1.5 Pro#multimodal#API

2 notes

Text

By Sesona Mdlokovana

Understanding Data Colonialism

Data colonialism is the unregulated extraction, commodification and monopolisation of data from developing countries by multinational corporations that are primarily based in the West. Companies like Meta (outlawed in Russia - InfoBRICS), Google, Microsoft, Amazon and Apple dominate digital infrastructures across the globe, offering low-cost or free services in exchange for vast amounts of governmental, personal and commercial data. In countries across Latin America, Africa and South Asia, these tech conglomerates use their technological and financial dominance to enforce unequal digital dependencies. For example:

- The dominance of Google in cloud and search services means that millions of government institutions and businesses across the Global South store highly sensitive data on Western-owned servers, often located outside of their jurisdictions.

- Meta's control of social media platforms such as WhatsApp, Facebook and Instagram has led to content moderation policies that disproportionately affect non-Western voices, while the company simultaneously profits from local user-generated content.

- Amazon Web Services (AWS) hosts an immense amount of cloud storage and creates a scenario where governments and local startups in BRICS nations have to rely on foreign digital infrastructure.

- AI models and fintech systems rely on data from Global South users, yet these countries see little economic benefit from its monetisation.

The BRICS bloc response: Strengthening Digital Sovereignty

In order to counter data colonialism, BRICS countries have to prioritise strategies and policies that assert digital sovereignty while facilitating indigenous technological growth. There are several approaches in which this could be achieved:

2 notes

Text

Sage Hosting: The Smart Choice for Business Growth

In today’s digital-first world, businesses need secure, scalable, and efficient accounting and financial management solutions. Sage hosting delivers just that, offering cloud-based access to Sage software with enhanced performance, data security, and flexibility. But with multiple hosting options available, how do you choose the best one?

Let’s explore Sage hosting services, compare different hosting solutions, and guide you through the setup process for maximum efficiency.

Understanding Sage Hosting

Sage hosting allows businesses to access Sage software remotely via cloud-based servers, removing the need for local installations. Hosted solutions ensure real-time data access, automatic updates, and advanced security protocols to protect sensitive financial information.

Key Benefits of Sage Hosting

✔ Anywhere, Anytime Access – Work remotely without limitations.

✔ Automatic Data Backup – Prevent data loss with secure cloud storage.

✔ No IT Headaches – Eliminate manual software updates and maintenance.

✔ Scalable & Flexible – Adapt hosting needs as your business grows.

Each hosting method has its strengths—cloud hosting is ideal for accessibility, AWS hosting offers unmatched security, and private hosting is great for businesses needing full control over their data.

Step-by-Step Guide: Setting Up Sage Hosting

Step 1: Identify Your Hosting Needs

Need remote access? Opt for Sage cloud hosting.

Looking for enterprise security? Choose AWS Sage hosting.

Prefer dedicated control? Go with private hosting.

Step 2: Choose the Right Hosting Provider

Look for certified Sage hosting vendors with high security standards.

Compare pricing, uptime guarantees, and technical support availability.

Step 3: Install & Configure Sage Software

Set up Sage on cloud, AWS, or private servers.

Migrate business data securely to the new hosting environment.

Customize user permissions and security settings.

Step 4: Optimize Performance & Security

Test system speed and reliability before full deployment.

Enable multi-factor authentication & encryption features.

Automate updates for smooth, long-term operation.

Why Businesses Should Consider Sage Hosting

🚀 Boost Efficiency – Faster, optimized operations without local installations.

🔒 Strengthen Security – Advanced protection against cyber threats and data breaches.

💸 Reduce IT Costs – No need for expensive hardware or manual software updates.

📈 Ensure Scalability – Expand hosting as your business grows.

🌍 Enable Remote Work – Work from anywhere, anytime.

Final Thoughts

Finding the best Sage hosting solution depends on your unique business needs. Whether you choose Sage cloud hosting, AWS hosting, or private hosting, the goal is to prioritize security, performance, and cost-efficiency for long-term success.

Still deciding? Let’s explore the right Sage hosting service for your business needs!

#sage hosting#sage cloud hosting#sage application hosting#sage hosting solutions#sage hosting services

1 note

Text

Why AWS is the Best Cloud Hosting Partner for Your Organization – Proven Benefits and Features

More entrepreneurs, such as e-store owners, prefer Amazon Web Services (AWS) for cloud hosting services this year. This article outlines several reasons to consider this partner for efficient AWS hosting today.

5 Enticing Features of AWS that Make It Perfect for You

The following are the main characteristics of Amazon Web Services (AWS) in 2024.

Scalable

The beauty of AWS is that a client can raise or lower their computing capability based on business demands.

Highly Secure

Secondly, AWS implements many security measures to keep clients' data safe. For example, AWS complies with established data safety standards, which also limits the risk of lawsuits from disgruntled clients.

Amazon secures all its data centers to ensure no criminal can access them for a nefarious purpose.

Free Calculator

AWS provides this free tool to help new clients estimate the total hosting cost based on their business needs. The business owner only needs to indicate their location, the services they are interested in, and their zone.

Pay-As-You-Go Pricing Option

New clients prefer AWS hosting services because this option lets them pay only for the resources they actually use on the platform.

User-Friendly

AWS is the best hosting platform because it has a user-oriented interface. For example, the provider has multiple navigation links plus instructional videos to enable the clients to use this platform.

Clients can edit updated data whenever they choose or add new company data to their accounts.

Unexpected Advantages of Seeking AWS Hosting Services

Below are the practical advantages of relying on Amazon Web Services (AWS) for web hosting and cloud computing services.

Relatively Fair Pricing Models

Firstly, the AWS hosting service provider offers well-thought-out pricing options to ensure the client only pays for the resources they utilize. For example, you can get a monthly option if you have many long-term projects.

Limitless Server Capacity

AWS lets each client scale storage to hold as much company data as they need. This ensures that employees can access crucial files and complete their work conveniently.

Upholds Confidentiality

AWS has at least twelve (12) data centers in different parts of the world. Further, this provider’s system is relatively robust and secure to safeguard sensitive clients’ data 24/7.

High-Performance Computing

AWS can process large data sets within seconds, enabling employees to meet their daily goals.

Highly Reliable

Unknown to some, over 1M clients in various countries rely on AWS for web development or hosting services. Additionally, AWS is available in over 200 countries spread across different continents.

Finally, AWS’s technical team spares no effort to implement new technologies to safeguard their clients’ data and woo new ones.

Summary

In closing, the beauty of considering this partner for AWS hosting is that it has a simple layout, making it ideal for everyone, including non-techies. Additionally, because the platform is elastic, the system can shrink or expand based on the files you add.

At its core, AWS offers various cloud services, such as storage options, computing power, and networking through advanced technology. NTSPL Hosting offers various features on AWS hosting aimed at improving the scalability of cloud infrastructure and reducing downtime. Some of the services NTSPL Hosting offers include pioneering server administration, version control, and system patching. Given that it offers round-the-clock customer service, it is a good option for those looking for a solid AWS hosting solution.

Source: NTSPL Hosting

3 notes

Text

How-To IT

Topic: Core areas of IT

1. Hardware

• Computers (Desktops, Laptops, Workstations)

• Servers and Data Centers

• Networking Devices (Routers, Switches, Modems)

• Storage Devices (HDDs, SSDs, NAS)

• Peripheral Devices (Printers, Scanners, Monitors)

2. Software

• Operating Systems (Windows, Linux, macOS)

• Application Software (Office Suites, ERP, CRM)

• Development Software (IDEs, Code Libraries, APIs)

• Middleware (Integration Tools)

• Security Software (Antivirus, Firewalls, SIEM)

3. Networking and Telecommunications

• LAN/WAN Infrastructure

• Wireless Networking (Wi-Fi, 5G)

• VPNs (Virtual Private Networks)

• Communication Systems (VoIP, Email Servers)

• Internet Services

4. Data Management

• Databases (SQL, NoSQL)

• Data Warehousing

• Big Data Technologies (Hadoop, Spark)

• Backup and Recovery Systems

• Data Integration Tools

5. Cybersecurity

• Network Security

• Endpoint Protection

• Identity and Access Management (IAM)

• Threat Detection and Incident Response

• Encryption and Data Privacy

6. Software Development

• Front-End Development (UI/UX Design)

• Back-End Development

• DevOps and CI/CD Pipelines

• Mobile App Development

• Cloud-Native Development

7. Cloud Computing

• Infrastructure as a Service (IaaS)

• Platform as a Service (PaaS)

• Software as a Service (SaaS)

• Serverless Computing

• Cloud Storage and Management

8. IT Support and Services

• Help Desk Support

• IT Service Management (ITSM)

• System Administration

• Hardware and Software Troubleshooting

• End-User Training

9. Artificial Intelligence and Machine Learning

• AI Algorithms and Frameworks

• Natural Language Processing (NLP)

• Computer Vision

• Robotics

• Predictive Analytics

10. Business Intelligence and Analytics

• Reporting Tools (Tableau, Power BI)

• Data Visualization

• Business Analytics Platforms

• Predictive Modeling

11. Internet of Things (IoT)

• IoT Devices and Sensors

• IoT Platforms

• Edge Computing

• Smart Systems (Homes, Cities, Vehicles)

12. Enterprise Systems

• Enterprise Resource Planning (ERP)

• Customer Relationship Management (CRM)

• Human Resource Management Systems (HRMS)

• Supply Chain Management Systems

13. IT Governance and Compliance

• ITIL (Information Technology Infrastructure Library)

• COBIT (Control Objectives for Information Technologies)

• ISO/IEC Standards

• Regulatory Compliance (GDPR, HIPAA, SOX)

14. Emerging Technologies

• Blockchain

• Quantum Computing

• Augmented Reality (AR) and Virtual Reality (VR)

• 3D Printing

• Digital Twins

15. IT Project Management

• Agile, Scrum, and Kanban

• Waterfall Methodology

• Resource Allocation

• Risk Management

16. IT Infrastructure

• Data Centers

• Virtualization (VMware, Hyper-V)

• Disaster Recovery Planning

• Load Balancing

17. IT Education and Certifications

• Vendor Certifications (Microsoft, Cisco, AWS)

• Training and Development Programs

• Online Learning Platforms

18. IT Operations and Monitoring

• Performance Monitoring (APM, Network Monitoring)

• IT Asset Management

• Event and Incident Management

19. Software Testing

• Manual Testing: Human testers evaluate software by executing test cases without using automation tools.

• Automated Testing: Use of testing tools (e.g., Selenium, JUnit) to run automated scripts and check software behavior.

• Functional Testing: Validating that the software performs its intended functions.

• Non-Functional Testing: Assessing non-functional aspects such as performance, usability, and security.

• Unit Testing: Testing individual components or units of code for correctness.

• Integration Testing: Ensuring that different modules or systems work together as expected.

• System Testing: Verifying the complete software system’s behavior against requirements.

• Acceptance Testing: Conducting tests to confirm that the software meets business requirements (including UAT - User Acceptance Testing).

• Regression Testing: Ensuring that new changes or features do not negatively affect existing functionalities.

• Performance Testing: Testing software performance under various conditions (load, stress, scalability).

• Security Testing: Identifying vulnerabilities and assessing the software’s ability to protect data.

• Compatibility Testing: Ensuring the software works on different operating systems, browsers, or devices.

• Continuous Testing: Integrating testing into the development lifecycle to provide quick feedback and minimize bugs.

• Test Automation Frameworks: Tools and structures used to automate testing processes (e.g., TestNG, Appium).
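As a concrete illustration of the unit-testing entry in the list above, here is a minimal sketch using Python's built-in `unittest` module. The function under test, `packet_loss_percent`, is a hypothetical example invented for this sketch and does not come from any tool named in the list.

```python
import unittest


def packet_loss_percent(sent: int, received: int) -> float:
    """Hypothetical unit under test: percentage of packets lost."""
    if sent <= 0:
        raise ValueError("sent must be positive")
    if not 0 <= received <= sent:
        raise ValueError("received must be between 0 and sent")
    return (sent - received) / sent * 100


class PacketLossTests(unittest.TestCase):
    def test_no_loss(self):
        self.assertEqual(packet_loss_percent(100, 100), 0.0)

    def test_partial_loss(self):
        self.assertAlmostEqual(packet_loss_percent(200, 150), 25.0)

    def test_rejects_invalid_input(self):
        # Invalid input should fail loudly instead of returning a number.
        with self.assertRaises(ValueError):
            packet_loss_percent(0, 0)


if __name__ == "__main__":
    unittest.main()
```

Each test checks one behaviour of one function in isolation, which is what separates unit testing from the integration and system testing entries.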

20. VoIP (Voice over IP)

VoIP Protocols & Standards

• SIP (Session Initiation Protocol)

• H.323

• RTP (Real-Time Transport Protocol)

• MGCP (Media Gateway Control Protocol)

VoIP Hardware

• IP Phones (Desk Phones, Mobile Clients)

• VoIP Gateways

• Analog Telephone Adapters (ATAs)

• VoIP Servers

• Network Switches/ Routers for VoIP

VoIP Software

• Softphones (e.g., Zoiper, X-Lite)

• PBX (Private Branch Exchange) Systems

• VoIP Management Software

• Call Center Solutions (e.g., Asterisk, 3CX)

VoIP Network Infrastructure

• Quality of Service (QoS) Configuration

• VPNs (Virtual Private Networks) for VoIP

• VoIP Traffic Shaping & Bandwidth Management

• Firewall and Security Configurations for VoIP

• Network Monitoring & Optimization Tools

VoIP Security

• Encryption (SRTP, TLS)

• Authentication and Authorization

• Firewall & Intrusion Detection Systems

• VoIP Fraud Detection

VoIP Providers

• Hosted VoIP Services (e.g., RingCentral, Vonage)

• SIP Trunking Providers

• PBX Hosting & Managed Services

VoIP Quality and Testing

• Call Quality Monitoring

• Latency, Jitter, and Packet Loss Testing

• VoIP Performance Metrics and Reporting Tools

• User Acceptance Testing (UAT) for VoIP Systems

Integration with Other Systems

• CRM Integration (e.g., Salesforce with VoIP)

• Unified Communications (UC) Solutions

• Contact Center Integration

• Email, Chat, and Video Communication Integration

2 notes

Video

youtube

Complete Hands-On Guide: Upload, Download, and Delete Files in Amazon S3 Using EC2 IAM Roles

Are you looking for a secure and efficient way to manage files in Amazon S3 using an EC2 instance? This step-by-step tutorial will teach you how to upload, download, and delete files in Amazon S3 using IAM roles for secure access. Say goodbye to hardcoding AWS credentials and embrace best practices for security and scalability.

What You'll Learn in This Video:

1. Understanding IAM Roles for EC2:
- What are IAM roles?
- Why should you use IAM roles instead of hardcoding access keys?
- How to create and attach an IAM role with S3 permissions to your EC2 instance.

2. Configuring the EC2 Instance for S3 Access:
- Launching an EC2 instance and attaching the IAM role.
- Setting up the AWS CLI on your EC2 instance.

3. Uploading Files to S3:
- Step-by-step commands to upload files to an S3 bucket.
- Use cases for uploading files, such as backups or log storage.

4. Downloading Files from S3:
- Retrieving objects stored in your S3 bucket using AWS CLI.
- How to test and verify successful downloads.

5. Deleting Files in S3:
- Securely deleting files from an S3 bucket.
- Use cases like removing outdated logs or freeing up storage.

6. Best Practices for S3 Operations:
- Using least privilege policies in IAM roles.
- Encrypting files in transit and at rest.
- Monitoring and logging using AWS CloudTrail and S3 access logs.
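To make the least-privilege point concrete, here is a minimal sketch of the two JSON documents an EC2 role for this workflow typically needs, built as Python dictionaries. The bucket name `example-bucket` is hypothetical, and the exact set of actions is an assumption about what the video covers.

```python
import json

BUCKET = "example-bucket"  # hypothetical bucket name


def s3_least_privilege_policy(bucket: str) -> dict:
    """Permissions policy limited to one bucket and the three object operations."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # listing applies to the bucket itself
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {   # upload, download, and delete apply to objects inside it
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            },
        ],
    }


def ec2_trust_policy() -> dict:
    """Trust policy that lets EC2 instances assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Service": "ec2.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }],
    }


if __name__ == "__main__":
    print(json.dumps(s3_least_privilege_policy(BUCKET), indent=2))
    print(json.dumps(ec2_trust_policy(), indent=2))
```

Running `aws s3 ls` with no bucket argument lists every bucket in the account, which needs the additional `s3:ListAllMyBuckets` action. This sketch leaves it out on purpose to keep the role scoped to a single bucket.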

Why IAM Roles Are Essential for S3 Operations:
- Secure Access: IAM roles provide temporary credentials, eliminating the risk of hardcoding secrets in your scripts.
- Automation-Friendly: Simplify file operations for DevOps workflows and automation scripts.
- Centralized Management: Control and modify permissions from a single IAM role without touching your instance.

Real-World Applications of This Tutorial:
- Automating log uploads from EC2 to S3 for centralized storage.
- Downloading data files or software packages hosted in S3 for application use.
- Removing outdated or unnecessary files to optimize your S3 bucket storage.

AWS Services and Tools Covered in This Tutorial:
- Amazon S3: Scalable object storage for uploading, downloading, and deleting files.
- Amazon EC2: Virtual servers in the cloud for running scripts and applications.
- AWS IAM Roles: Secure and temporary permissions for accessing S3.
- AWS CLI: Command-line tool for managing AWS services.

Hands-On Process: 1. Create an S3 Bucket: - Navigate to the S3 console and create a new bucket with a globally unique name. - Configure bucket permissions for private or public access as needed.

2. Configure an IAM Role: - Create an IAM role with an S3 access policy. - Attach the role to your EC2 instance to avoid hardcoding credentials.

3. Launch and Connect to an EC2 Instance: - Launch an EC2 instance with the IAM role attached. - Connect to the instance using SSH.

4. Install and Verify the AWS CLI: - Install AWS CLI on the EC2 instance if not pre-installed. - Verify access by running `aws s3 ls` to list available buckets.

5. Perform File Operations: - Upload files: Use `aws s3 cp` to upload a file from EC2 to S3. - Download files: Use `aws s3 cp` to download files from S3 to EC2. - Delete files: Use `aws s3 rm` to delete a file from the S3 bucket.

6. Clean Up: - Delete test files and terminate resources to avoid unnecessary charges.
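The file operations in the steps above could be scripted roughly as follows. This is a minimal sketch, not the video's own script: the bucket `example-bucket`, the key `logs/app.log`, and the helper names are hypothetical. It only assembles the `aws s3 cp` and `aws s3 rm` command lines named in the steps and, by default, prints them instead of running them, so it is safe to try on a machine without the AWS CLI.

```python
import shlex
import subprocess


def upload_cmd(local_path: str, bucket: str, key: str) -> list[str]:
    """Build `aws s3 cp <local> s3://<bucket>/<key>` (EC2 to S3)."""
    return ["aws", "s3", "cp", local_path, f"s3://{bucket}/{key}"]


def download_cmd(bucket: str, key: str, local_path: str) -> list[str]:
    """Build `aws s3 cp s3://<bucket>/<key> <local>` (S3 to EC2)."""
    return ["aws", "s3", "cp", f"s3://{bucket}/{key}", local_path]


def delete_cmd(bucket: str, key: str) -> list[str]:
    """Build `aws s3 rm s3://<bucket>/<key>`."""
    return ["aws", "s3", "rm", f"s3://{bucket}/{key}"]


def run(cmd: list[str], dry_run: bool = True) -> None:
    """Print the command; execute it only when dry_run is False."""
    print(shlex.join(cmd))
    if not dry_run:
        # check=True raises CalledProcessError if the CLI exits non-zero.
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    bucket, key = "example-bucket", "logs/app.log"  # placeholder names
    run(upload_cmd("app.log", bucket, key))
    run(download_cmd(bucket, key, "app-copy.log"))
    run(delete_cmd(bucket, key))
```

On an instance with the IAM role attached, calling `run(..., dry_run=False)` would execute the commands with the role's temporary credentials; no access keys appear anywhere in the script.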

Why Watch This Video? This tutorial is designed for AWS beginners and cloud engineers who want to master secure file management in the AWS cloud. Whether you're automating tasks, integrating EC2 and S3, or simply learning the basics, this guide has everything you need to get started.

Don’t forget to like, share, and subscribe to the channel for more AWS hands-on guides, cloud engineering tips, and DevOps tutorials.

#youtube #aws iam #iam role aws #aws #aws permission #aws iam roles #aws cloud #aws s3 #identity & access management #aws iam policy #Download #and Delete Files in Amazon #IAMrole #AWS #cloudolus #S3 #EC2


Text

3rd July 2024

Goals:

Watch all of Andrej Karpathy's videos

Watch AWS Dump videos

Watch 11-hour NLP video

Complete Microsoft GenAI course

GitHub practice

Topics:

1. Andrej Karpathy's Videos

Deep Learning Basics: Understanding neural networks, backpropagation, and optimization.

Advanced Neural Networks: Convolutional neural networks (CNNs), recurrent neural networks (RNNs), and LSTMs.

Training Techniques: Tips and tricks for training deep learning models effectively.

Applications: Real-world applications of deep learning in various domains.

2. AWS Dump Videos

AWS Fundamentals: Overview of AWS services and architecture.

Compute Services: EC2, Lambda, and auto-scaling.

Storage Services: S3, EBS, and Glacier.

Networking: VPC, Route 53, and CloudFront.

Security and Identity: IAM, KMS, and security best practices.

3. 11-hour NLP Video

NLP Basics: Introduction to natural language processing, text preprocessing, and tokenization.

Word Embeddings: Word2Vec, GloVe, and fastText.

Sequence Models: RNNs, LSTMs, and GRUs for text data.

Transformers: Introduction to the transformer architecture and BERT.

Applications: Sentiment analysis, text classification, and named entity recognition.

4. Microsoft GenAI Course

Generative AI Fundamentals: Basics of generative AI and its applications.

Model Architectures: Overview of GANs, VAEs, and other generative models.

Training Generative Models: Techniques and challenges in training generative models.

Applications: Real-world use cases such as image generation, text generation, and more.

5. GitHub Practice

Version Control Basics: Introduction to Git, repositories, and version control principles.

GitHub Workflow: Creating and managing repositories, branches, and pull requests.

Collaboration: Forking repositories, submitting pull requests, and collaborating with others.

Advanced Features: GitHub Actions, managing issues, and project boards.

Detailed Schedule:

Wednesday:

2:00 PM - 4:00 PM: Andrej Karpathy's videos

4:00 PM - 6:00 PM: Break/Dinner

6:00 PM - 8:00 PM: Andrej Karpathy's videos

8:00 PM - 9:00 PM: GitHub practice

Thursday:

9:00 AM - 11:00 AM: AWS Dump videos

11:00 AM - 1:00 PM: Break/Lunch

1:00 PM - 3:00 PM: AWS Dump videos

3:00 PM - 5:00 PM: Break

5:00 PM - 7:00 PM: 11-hour NLP video

7:00 PM - 8:00 PM: Dinner

8:00 PM - 9:00 PM: GitHub practice

Friday:

9:00 AM - 11:00 AM: Microsoft GenAI course

11:00 AM - 1:00 PM: Break/Lunch

1:00 PM - 3:00 PM: Microsoft GenAI course

3:00 PM - 5:00 PM: Break

5:00 PM - 7:00 PM: 11-hour NLP video

7:00 PM - 8:00 PM: Dinner

8:00 PM - 9:00 PM: GitHub practice

Saturday:

9:00 AM - 11:00 AM: Andrej Karpathy's videos

11:00 AM - 1:00 PM: Break/Lunch

1:00 PM - 3:00 PM: 11-hour NLP video

3:00 PM - 5:00 PM: Break

5:00 PM - 7:00 PM: AWS Dump videos

7:00 PM - 8:00 PM: Dinner

8:00 PM - 9:00 PM: GitHub practice

Sunday:

9:00 AM - 12:00 PM: Complete Microsoft GenAI course

12:00 PM - 1:00 PM: Break/Lunch

1:00 PM - 3:00 PM: Finish any remaining content from Andrej Karpathy's videos or AWS Dump videos

3:00 PM - 5:00 PM: Break

5:00 PM - 7:00 PM: Wrap up remaining 11-hour NLP video

7:00 PM - 8:00 PM: Dinner

8:00 PM - 9:00 PM: Final GitHub practice and review
