#azure data factory pipelines

Azure Data Factory Components

The core Azure Data Factory components are described below.

Pipelines: The Workflow Container

A Pipeline in Azure Data Factory is a container that holds a set of activities meant to perform a specific task. Think of it as the blueprint for your data movement or transformation logic. Pipelines allow you to define the order of execution, configure dependencies, and reuse logic with parameters. Whether you’re ingesting raw files from a data lake, transforming them using Mapping Data Flows, or loading them into an Azure SQL Database or Synapse, the pipeline coordinates all the steps. As one of the key Azure Data Factory components, the pipeline provides centralized management and monitoring of the entire workflow.
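To make the pipeline idea concrete, here is a minimal sketch that builds a pipeline definition as a Python dict in the shape of the JSON ADF stores for a pipeline: a `name`, plus `properties` holding `parameters` and `activities`. All pipeline, dataset, linked service, and stored procedure names are hypothetical, the dataset is assumed to declare a `folderPath` parameter, and a real Copy activity usually needs more source and sink settings than shown.

```python
import json


def build_pipeline(name: str) -> dict:
    """Return an ADF-style pipeline definition (all referenced names are hypothetical)."""
    return {
        "name": name,
        "properties": {
            # A pipeline parameter: each run can pass a different folder.
            "parameters": {
                "sourceFolder": {"type": "String", "defaultValue": "raw/sales"}
            },
            "activities": [
                {
                    "name": "CopyRawToStaging",
                    "type": "Copy",
                    "inputs": [{
                        "referenceName": "RawFilesDataset",
                        "type": "DatasetReference",
                        # Assumes the dataset declares a folderPath parameter.
                        "parameters": {"folderPath": "@pipeline().parameters.sourceFolder"},
                    }],
                    "outputs": [{"referenceName": "StagingTableDataset", "type": "DatasetReference"}],
                    "typeProperties": {
                        "source": {"type": "DelimitedTextSource"},
                        "sink": {"type": "AzureSqlSink"},
                    },
                },
                {
                    "name": "LoadWarehouse",
                    "type": "SqlServerStoredProcedure",
                    # Execution order: run only after the copy succeeds.
                    "dependsOn": [
                        {"activity": "CopyRawToStaging", "dependencyConditions": ["Succeeded"]}
                    ],
                    "linkedServiceName": {
                        "referenceName": "AzureSqlLinkedService",
                        "type": "LinkedServiceReference",
                    },
                    "typeProperties": {"storedProcedureName": "usp_LoadSales"},
                },
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_pipeline("IngestSalesPipeline"), indent=2))
```

The `dependsOn` entry is what orders the two steps, and the parameter is what lets the same pipeline be reused for other folders.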

Activities: The Operational Units

Activities are the actual tasks executed within a pipeline. Each activity performs a discrete function like copying data, transforming it, running stored procedures, or triggering notebooks in Databricks. Among the Azure Data Factory components, activities provide the processing logic. They come in multiple types:

Data Movement Activities – Copy Activity

Data Transformation Activities – Mapping Data Flow

Control Activities – If Condition, ForEach

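External Activities – HDInsight, Azure ML, Databricks (these run on external compute; Microsoft's documentation groups them with the data transformation activities)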

This modular design allows engineers to handle everything from batch jobs to event-driven ETL pipelines efficiently.
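The sketch below shows how control activities nest other activities: a `ForEach` wraps a Copy activity, and an `IfCondition` branches on a parameter. The dicts follow the shape of ADF activity JSON, but the activity, dataset, and parameter names (`fileList`, `fullLoad`) are hypothetical, the `Wait` activity is only a stand-in for real work, and the `CATEGORY` mapping covers just a few activity types. The `walk` helper is a local utility, not part of ADF.

```python
# Inner activity: copy one file; @item() is the current ForEach element.
copy_one_file = {
    "name": "CopyOneFile",
    "type": "Copy",
    "inputs": [{
        "referenceName": "RawFileDataset",  # hypothetical dataset with a fileName parameter
        "type": "DatasetReference",
        "parameters": {"fileName": "@item()"},
    }],
    "outputs": [{"referenceName": "LakeFileDataset", "type": "DatasetReference"}],
    "typeProperties": {
        "source": {"type": "DelimitedTextSource"},
        "sink": {"type": "DelimitedTextSink"},
    },
}

# Control activity: loop over a pipeline parameter, up to 4 iterations at a time.
for_each = {
    "name": "ForEachFile",
    "type": "ForEach",
    "typeProperties": {
        "isSequential": False,
        "batchCount": 4,
        "items": {"value": "@pipeline().parameters.fileList", "type": "Expression"},
        "activities": [copy_one_file],
    },
}

# Control activity: branch on a boolean pipeline parameter after the loop succeeds.
if_full_load = {
    "name": "IfFullLoad",
    "type": "IfCondition",
    "dependsOn": [{"activity": "ForEachFile", "dependencyConditions": ["Succeeded"]}],
    "typeProperties": {
        "expression": {"value": "@pipeline().parameters.fullLoad", "type": "Expression"},
        "ifTrueActivities": [
            {"name": "WaitBeforeRebuild", "type": "Wait", "typeProperties": {"waitTimeInSeconds": 30}}
        ],
        "ifFalseActivities": [],
    },
}

# Partial mapping from activity "type" values to the groups listed above.
CATEGORY = {
    "Copy": "data movement",
    "ExecuteDataFlow": "data transformation",
    "DatabricksNotebook": "external",
    "HDInsightHive": "external",
    "ForEach": "control",
    "IfCondition": "control",
    "Wait": "control",
}


def walk(activities):
    """Yield every activity, descending into ForEach and IfCondition bodies."""
    for act in activities:
        yield act
        props = act.get("typeProperties", {})
        for key in ("activities", "ifTrueActivities", "ifFalseActivities"):
            yield from walk(props.get(key, []))


for act in walk([for_each, if_full_load]):
    print(f'{act["name"]}: {CATEGORY.get(act["type"], "other")}')
```

Running it lists `ForEachFile`, `CopyOneFile`, `IfFullLoad`, and `WaitBeforeRebuild` with their groups, which shows how one pipeline mixes movement and control activities.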

Triggers: Automating Pipeline Execution

Triggers are another core part of the Azure Data Factory components. They define when a pipeline should execute. Triggers enable automation by launching pipelines based on time schedules, events, or manual inputs.

Types of triggers include:

Schedule Trigger – Executes at fixed times

Event-based Trigger – Responds to changes in data, such as a file drop

Manual (On-Demand) Run – Started with Trigger Now in the portal, or through the REST API, SDKs, or PowerShell

Because scheduling is built in, ADF workflows don't need a separate external scheduler, and the same pipeline can be started by different triggers, each passing its own parameter values.
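Here is a sketch of the three ways of starting a pipeline, written as Python dicts in the shape of ADF trigger JSON: a `ScheduleTrigger` with a daily recurrence, a `BlobEventsTrigger` that fires when a `.csv` blob is created, and a helper that builds the REST URL for an on-demand run (`createRun`, API version `2018-06-01`). The trigger names, pipeline name, paths, and `<...>` placeholders are assumptions, and the actual POST also needs a Microsoft Entra ID bearer token, which this sketch does not handle.

```python
# Both triggers start the same (hypothetical) pipeline.
PIPELINE_REF = {"referenceName": "IngestSalesPipeline", "type": "PipelineReference"}

# Schedule trigger: fires every day at 06:00 UTC.
schedule_trigger = {
    "name": "DailyAt0600Utc",
    "properties": {
        "type": "ScheduleTrigger",
        "typeProperties": {
            "recurrence": {
                "frequency": "Day",
                "interval": 1,
                "startTime": "2024-01-01T06:00:00Z",
                "timeZone": "UTC",
            }
        },
        "pipelines": [
            {"pipelineReference": PIPELINE_REF, "parameters": {"sourceFolder": "raw/sales"}}
        ],
    },
}

# Storage event trigger: fires when a .csv lands under landing/sales/.
blob_event_trigger = {
    "name": "OnNewSalesCsv",
    "properties": {
        "type": "BlobEventsTrigger",
        "typeProperties": {
            "blobPathBeginsWith": "/landing/blobs/sales/",
            "blobPathEndsWith": ".csv",
            "ignoreEmptyBlobs": True,
            "events": ["Microsoft.Storage.BlobCreated"],
            "scope": "/subscriptions/<sub-id>/resourceGroups/<rg>/providers/"
                     "Microsoft.Storage/storageAccounts/<account>",
        },
        "pipelines": [{
            "pipelineReference": PIPELINE_REF,
            # The event's folder path is passed into the pipeline parameter.
            "parameters": {"sourceFolder": "@triggerBody().folderPath"},
        }],
    },
}


def create_run_url(subscription_id: str, resource_group: str, factory: str,
                   pipeline: str, api_version: str = "2018-06-01") -> str:
    """URL for an on-demand run; POST to it with pipeline parameters as the JSON body."""
    return (
        "https://management.azure.com"
        f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.DataFactory/factories/{factory}"
        f"/pipelines/{pipeline}/createRun?api-version={api_version}"
    )


print(create_run_url("<sub-id>", "<rg>", "my-factory", "IngestSalesPipeline"))
```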

How These Components Work Together

Pipelines, activities, and triggers work as a chain: a trigger starts a pipeline run, and the pipeline executes its activities in the order defined by their dependencies. Together, these three Azure Data Factory components provide a flexible, reusable, and fully managed framework for building complex data workflows across multiple data sources, destinations, and formats.

Conclusion

To summarize, Pipelines, Activities & Triggers are foundational Azure Data Factory components. Together, they form a powerful data orchestration engine that supports modern cloud-based data engineering. Mastering these elements enables engineers to build scalable, fault-tolerant, and automated data solutions. Whether you’re managing daily ingestion processes or building near-real-time, event-driven data platforms, a solid understanding of these components is key to unlocking the full potential of Azure Data Factory.

At Learnomate Technologies, we don’t just teach tools; we train you with real-world, hands-on knowledge that sticks. Our Azure Data Engineering training program is designed to help you crack job interviews, build solid projects, and grow confidently in your cloud career.

Want to see how we teach? Hop over to our YouTube channel for bite-sized tutorials, student success stories, and technical deep-dives explained in simple English.

Ready to get certified and hired? Check out our Azure Data Engineering course page for full curriculum details, placement assistance, and batch schedules.

Curious about who’s behind the scenes? I’m Ankush Thavali, founder of Learnomate and your trainer for all things cloud and data. Let’s connect on LinkedIn—I regularly share practical insights, job alerts, and learning tips to keep you ahead of the curve.

And hey, if this article got your curiosity going…

Thanks for reading. Now it’s time to turn this knowledge into action. Happy learning and see you in class or in the next blog!

Happy Vibes!

ANKUSH

#education#it course#it training#technology#training#azure data factory components#azure data factory key components#key components of azure data factory#what are the key components of azure data factory?#data factory components#azure data factory concepts#azure data factory#data factory components tutorial.#azure data factory course#azure data factory course online#azure data factory v2#data factory azure#azure data factory pipeline#data factory azure ml#learn azure data factory#azure data factory pipelines#what is azure data factory

Run Azure Functions from Azure Data Factory pipelines | Azure Friday

Azure Functions is a serverless compute service that enables you to run code on demand without having to explicitly provision or …
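For reference, here is a sketch of an Azure Function activity in the shape of ADF pipeline JSON, built as a Python dict. It assumes a hypothetical Azure Function linked service named `AzureFunctionLinkedService` and an HTTP-triggered function named `ValidateBatch`. ADF expects the function to return a JSON object, which downstream activities can read through `@activity('<name>').output`; the `isValid` field checked by the `IfCondition` is an assumption about what this particular function returns, and the body format may need adjusting for your function.

```python
import json

call_function = {
    "name": "CallValidateBatch",
    "type": "AzureFunctionActivity",
    # Hypothetical linked service that points at the Function App.
    "linkedServiceName": {
        "referenceName": "AzureFunctionLinkedService",
        "type": "LinkedServiceReference",
    },
    "typeProperties": {
        "functionName": "ValidateBatch",  # hypothetical HTTP-triggered function
        "method": "POST",
        "headers": {"Content-Type": "application/json"},
        # String interpolation inserts the current pipeline run ID into the body.
        "body": {"runId": "@{pipeline().RunId}"},
    },
}

# Branch on a field of the function's JSON response (isValid is assumed).
check_result = {
    "name": "IfBatchValid",
    "type": "IfCondition",
    "dependsOn": [{"activity": "CallValidateBatch", "dependencyConditions": ["Succeeded"]}],
    "typeProperties": {
        "expression": {
            "value": "@activity('CallValidateBatch').output.isValid",
            "type": "Expression",
        },
        "ifTrueActivities": [],
        "ifFalseActivities": [],
    },
}

print(json.dumps([call_function, check_result], indent=2))
```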

#Azure Data Factory#azure data factory interview questions#adf interview question#azure data engineer interview question#pyspark#sql#sql interview questions#pyspark interview questions#Data Integration#Cloud Data Warehousing#ETL#ELT#Data Pipelines#Data Orchestration#Data Engineering#Microsoft Azure#Big Data Integration#Data Transformation#Data Migration#Data Lakes#Azure Synapse Analytics#Data Processing#Data Modeling#Batch Processing#Data Governance

Essential Guidelines for Building Optimized ETL Data Pipelines in the Cloud With Azure Data Factory

http://securitytc.com/TBwVgB

Azure Data Factory (ADF) is a cloud-based data integration service provided by Microsoft Azure. It is designed to enable organizations to create, schedule, and manage data pipelines that can move data from various source systems to destination systems, transforming and processing it along the way.

Azure Data Factory Training In Hyderabad

Key Features:

Hybrid Data Integration: Azure Data Factory supports hybrid data integration, allowing users to connect and integrate data from on-premises sources, cloud-based services, and various data stores. This flexibility is crucial for organizations with diverse data ecosystems.

Intuitive Visual Interface: The platform offers a user-friendly, visual interface for designing and managing data pipelines. Users can leverage a drag-and-drop interface to effortlessly create, monitor, and manage complex data workflows without the need for extensive coding expertise.

Data Movement and Transformation: Data movement is streamlined with Azure Data Factory, enabling the efficient transfer of data between various sources and destinations. Additionally, the platform provides a range of data transformation activities, such as cleansing, aggregation, and enrichment, ensuring that data is prepared and optimized for analysis.

Data Orchestration: Organizations can orchestrate complex workflows by chaining together multiple data pipelines, activities, and dependencies. This orchestration capability ensures that data processes are executed in a logical and efficient sequence, meeting business requirements and compliance standards.

Integration with Azure Services: Azure Data Factory seamlessly integrates with other Azure services, including Azure Synapse Analytics, Azure Databricks, Azure Machine Learning, and more. This integration enhances the platform's capabilities, allowing users to leverage additional tools and services to derive deeper insights from their data.

Monitoring and Management: Robust monitoring and management capabilities provide real-time insights into the performance and health of data pipelines. Users can track execution details, diagnose issues, and optimize workflows to enhance overall efficiency.

Security and Compliance: Azure Data Factory prioritizes security and compliance, implementing features such as Microsoft Entra ID (formerly Azure Active Directory) integration, encryption at rest and in transit, and role-based access control. This helps ensure that sensitive data is handled securely and in accordance with regulatory requirements.

Scalability and Reliability: The platform scales out as data volumes grow, for example through Data Integration Units for copy activities and configurable compute sizes for data flows. Activity-level retry policies help pipelines recover from transient failures so that data processes run consistently.
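As one example of the monitoring capability, the sketch below prepares a request to the Data Factory REST API's `queryPipelineRuns` operation (API version `2018-06-01`), filtered to failed runs from the last 24 hours. It only builds the URL and JSON body; sending it needs an authenticated POST (for example with a Microsoft Entra ID token), and the subscription, resource group, and factory names are placeholders.

```python
import json
from datetime import datetime, timedelta, timezone
from typing import Optional


def failed_runs_query(hours: int = 24, now: Optional[datetime] = None) -> dict:
    """Request body: pipeline runs updated in the last `hours` whose status is Failed."""
    now = now or datetime.now(timezone.utc)
    return {
        "lastUpdatedAfter": (now - timedelta(hours=hours)).isoformat(),
        "lastUpdatedBefore": now.isoformat(),
        "filters": [{"operand": "Status", "operator": "Equals", "values": ["Failed"]}],
    }


def query_pipeline_runs_url(subscription_id: str, resource_group: str, factory: str,
                            api_version: str = "2018-06-01") -> str:
    """URL of the factory-level pipeline-run query endpoint."""
    return (
        "https://management.azure.com"
        f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.DataFactory/factories/{factory}"
        f"/queryPipelineRuns?api-version={api_version}"
    )


print(query_pipeline_runs_url("<sub-id>", "<rg>", "my-factory"))
print(json.dumps(failed_runs_query(), indent=2))
```

Passing an explicit `now` makes the time window reproducible, which is handy when the same query runs from a scheduled alerting job.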

Azure Data Engineering Tools For Data Engineers

Azure, Microsoft's cloud computing platform, offers an extensive array of data engineering tools. These tools help data engineers build and maintain data systems that are scalable, reliable, and secure, and that meet the specific requirements of an organization.

In this article, we will explore nine key Azure data engineering tools that should be in every data engineer’s toolkit. Whether you’re a beginner in data engineering or aiming to enhance your skills, these Azure tools are crucial for your career development.

Microsoft Azure Databricks

Azure Databricks is a managed version of Databricks, a popular data analytics and machine learning platform. It offers one-click installation, faster workflows, and collaborative workspaces for data scientists and engineers. Azure Databricks seamlessly integrates with Azure’s computation and storage resources, making it an excellent choice for collaborative data projects.

Microsoft Azure Data Factory

Microsoft Azure Data Factory (ADF) is a fully managed, serverless data integration service designed to handle data at scale. It enables data engineers to ingest, move, and transform large volumes of data efficiently. ADF supports various use cases, including data engineering, operational data integration, analytics, and data warehousing.

Microsoft Azure Stream Analytics

Azure Stream Analytics is a real-time, complex event-processing engine designed to analyze and process large volumes of fast-streaming data from various sources. It is a critical tool for data engineers dealing with real-time data analysis and processing.

Microsoft Azure Data Lake Storage

Azure Data Lake Storage provides a scalable and secure data lake solution for data scientists, developers, and analysts. It allows organizations to store data of any type and size while supporting low-latency workloads. Data engineers can take advantage of this infrastructure to build and maintain data pipelines. Azure Data Lake Storage also offers enterprise-grade security features for data collaboration.

Microsoft Azure Synapse Analytics

Azure Synapse Analytics is an integrated platform solution that combines data warehousing, data connectors, ETL pipelines, analytics tools, big data scalability, and visualization capabilities. Data engineers can efficiently process data for warehousing and analytics using Synapse Pipelines’ ETL and data integration capabilities.

Microsoft Azure Cosmos DB

Azure Cosmos DB is a fully managed, distributed database service that supports multiple APIs, including NoSQL, MongoDB, and Apache Cassandra, alongside Azure Cosmos DB for PostgreSQL for distributed relational workloads. It offers automatic and instant scalability, single-digit-millisecond reads and writes, and high availability. Azure Cosmos DB is a versatile tool for data engineers looking to develop high-performance applications.

Microsoft Azure SQL Database

Azure SQL Database is a fully managed and continually updated relational database service in the cloud. It offers native support for services like Azure Functions and Azure App Service, simplifying application development. Data engineers can use Azure SQL Database to handle real-time data ingestion tasks efficiently.

Microsoft Azure Database for MariaDB

Azure Database for MariaDB integrates with Azure Web Apps and supports popular open-source applications such as WordPress and Drupal. It offers built-in monitoring, security, automatic backups, and patching at no additional cost.

Microsoft Azure Database for PostgreSQL

Azure Database for PostgreSQL is a fully managed open-source database service designed to let teams focus on application innovation rather than database management. It supports various open-source frameworks and languages and offers strong security features, AI-assisted performance optimization, and high uptime guarantees.

Whether you’re a novice data engineer or an experienced professional, mastering these Azure data engineering tools is essential for advancing your career in the data-driven world. As technology evolves and data continues to grow, data engineers with expertise in Azure tools are in high demand. Start your journey to becoming a proficient data engineer with these powerful Azure tools and resources.

Unlock the full potential of your data engineering career with Datavalley. As you start your journey to becoming a skilled data engineer, it’s essential to equip yourself with the right tools and knowledge. The Azure data engineering tools we’ve explored in this article are your gateway to effectively managing and using data for impactful insights and decision-making.

To take your data engineering skills to the next level and gain practical, hands-on experience with these tools, we invite you to join the courses at Datavalley. Our comprehensive data engineering courses are designed to provide you with the expertise you need to excel in the dynamic field of data engineering. Whether you’re just starting or looking to advance your career, Datavalley’s courses offer a structured learning path and real-world projects that will set you on the path to success.

Course format:

Subject: Data Engineering
Classes: 200 hours of live classes
Lectures: 199 lectures
Projects: Collaborative projects and mini projects for each module
Level: All levels
Scholarship: Up to 70% scholarship on this course
Interactive activities: Labs, quizzes, scenario walk-throughs
Placement Assistance: Resume preparation, soft skills training, interview preparation

Subject: DevOps
Classes: 180+ hours of live classes
Lectures: 300 lectures
Projects: Collaborative projects and mini projects for each module
Level: All levels
Scholarship: Up to 67% scholarship on this course
Interactive activities: Labs, quizzes, scenario walk-throughs
Placement Assistance: Resume preparation, soft skills training, interview preparation

For more details on the Data Engineering courses, visit Datavalley’s official website.

#datavalley#dataexperts#data engineering#data analytics#dataexcellence#data science#power bi#business intelligence#data analytics course#data science course#data engineering course#data engineering training

Powerful ETL Technologies in the Microsoft Data Platform

Microsoft first truly disrupted the ETL marketplace with the introduction of SQL Server Integration Services (SSIS) in SQL Server 2005. Microsoft has upped the ante yet again by bringing powerful ETL features to the cloud with Azure Data Factory, which enables IT shops to integrate a multitude of data sources, both on-premises and in the cloud, through a workflow (called a “pipeline”) that uses Hive, Pig, and custom C# programs.

Originally posted March 03, 2016, by Kevin Kline. Kevin (@kekline) serves as Principal Program Manager at SentryOne. He is a founder and […]

30. Get Error message of Failed activities in Pipeline in Azure Data Factory

In this video, I discuss how to get the error message details of failed activities in a pipeline in Azure Data Factory. Please watch …
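Two common ways to get at those messages are sketched below. Inside a pipeline, an activity wired to run on the `Failed` dependency of another activity can read `@activity('<name>').error.message`. Outside the pipeline, the REST API's `queryActivityruns` operation returns the activity runs of one pipeline run, and the helper below filters such a response for failures. The sample response is made up, the activity names are hypothetical, and the code assumes each run carries `activityName`, `status`, and an `error` object with a `message` field.

```python
# Expression a downstream activity (dependency condition "Failed") could use.
ERROR_MESSAGE_EXPR = "@activity('CopyRawToStaging').error.message"


def query_activity_runs_url(subscription_id: str, resource_group: str, factory: str,
                            run_id: str, api_version: str = "2018-06-01") -> str:
    """URL listing activity runs of one pipeline run.

    Send it as an authenticated POST whose JSON body sets lastUpdatedAfter
    and lastUpdatedBefore.
    """
    return (
        "https://management.azure.com"
        f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.DataFactory/factories/{factory}"
        f"/pipelineruns/{run_id}/queryActivityruns?api-version={api_version}"
    )


def failed_activity_messages(response: dict) -> dict:
    """Map activity name -> error message for every run whose status is Failed."""
    return {
        run["activityName"]: (run.get("error") or {}).get("message", "")
        for run in response.get("value", [])
        if run.get("status") == "Failed"
    }


# Made-up response in the assumed shape.
sample_response = {
    "value": [
        {"activityName": "CopyRawToStaging", "status": "Failed",
         "error": {"errorCode": "ExampleCode", "message": "Example: source file not found"}},
        {"activityName": "LoadWarehouse", "status": "Succeeded",
         "error": {"errorCode": "", "message": ""}},
    ]
}

print(failed_activity_messages(sample_response))
```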

Discover the Key Benefits of Implementing Data Mesh Architecture

As data continues to grow at an exponential rate, enterprises are finding traditional centralized data architectures inadequate for scaling. That’s where Data Mesh Architecture steps in, bringing a decentralized, domain-oriented approach to managing and scaling data in modern enterprises. We empower businesses by implementing robust data mesh architectures tailored to leading cloud platforms like Azure, Snowflake, and GCP, ensuring scalable, secure, and domain-driven data strategies.

Key Benefits of Implementing Data Mesh Architecture

Scalability Across Domains - By decentralizing data ownership to domain teams, data mesh architecture enables scalable data product creation and faster delivery of insights. Teams become responsible for their own data pipelines, ensuring agility and accountability.

Improved Data Quality and Governance - Data Mesh encourages domain teams to treat data as a product, which improves data quality, accessibility, and documentation. Governance tooling on the underlying cloud platform, such as Microsoft Purview in a Data Mesh Architecture on Azure, provides policy enforcement and observability.

Faster Time-to-Insights - Unlike traditional centralized models, data mesh allows domain teams to directly consume and share trusted data products—dramatically reducing time-to-insight for analytics and machine learning initiatives.

Cloud-Native Flexibility - Whether you’re using Data Mesh Architecture in Snowflake, Azure, or GCP, the architecture supports modern cloud-native infrastructure. This ensures high performance, elasticity, and cost optimization.

Domain-Driven Ownership and Collaboration - By aligning data responsibilities with business domains, enterprises improve cross-functional collaboration. With a data mesh on GCP or integrated with Snowflake, domain teams can build, deploy, and iterate on data products independently.
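To make "data as a product" and domain ownership more concrete, here is a minimal, hypothetical sketch in plain Python: each data product records its owning domain, documentation, schema, and a freshness target, and a shared catalog enforces a few global rules when a domain publishes. It is not tied to Azure, Snowflake, or GCP, and the rules (only the owning domain may publish; description and schema are required; a `pii-reviewed` tag is mandatory) are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataProduct:
    name: str
    owner_domain: str
    description: str
    schema: dict              # column name -> type
    freshness_sla_hours: int  # freshness target the owning domain commits to
    tags: tuple = ()


class Catalog:
    """Shared catalog: domains publish their own products under global policies."""

    def __init__(self, required_tags=("pii-reviewed",)):
        self.required_tags = set(required_tags)
        self._products = {}

    def publish(self, product: DataProduct, publishing_domain: str) -> None:
        # Ownership: a domain may only publish products it owns.
        if product.owner_domain != publishing_domain:
            raise PermissionError(f"{publishing_domain} does not own {product.name}")
        # Quality and documentation: products must be described and typed.
        if not product.description or not product.schema:
            raise ValueError(f"{product.name} needs a description and a schema")
        # Governance: global policy tags must be present.
        missing = self.required_tags - set(product.tags)
        if missing:
            raise ValueError(f"{product.name} is missing tags: {sorted(missing)}")
        self._products[product.name] = product

    def discover(self, domain=None) -> list:
        """Names of published products, optionally limited to one domain."""
        return sorted(
            p.name for p in self._products.values()
            if domain is None or p.owner_domain == domain
        )


catalog = Catalog()
orders = DataProduct(
    name="sales.orders_daily",
    owner_domain="sales",
    description="One row per order, refreshed daily.",
    schema={"order_id": "string", "amount": "decimal", "order_date": "date"},
    freshness_sla_hours=24,
    tags=("pii-reviewed",),
)
catalog.publish(orders, publishing_domain="sales")
print(catalog.discover())  # ['sales.orders_daily']
```

The split mirrors the idea in the list above: domains own and publish their products, while a thin shared layer applies the same governance rules to everyone.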

What Is Data Mesh Architecture in Azure?

Data Mesh Architecture in Azure decentralizes data ownership by allowing domain teams to manage, produce, and consume data as a product. Using services like Azure Synapse, Purview, and Data Factory, it supports scalable analytics and governance. With Dataplatr, enterprises can implement a modern, domain-driven data strategy using Azure’s cloud-native capabilities to boost agility and reduce data bottlenecks.

What Is the Data Architecture in Snowflake?

Data architecture in Snowflake separates storage from compute, so each can scale independently. It allows instant scalability, secure data sharing, and fast insights, with features such as zero-copy cloning and Time Travel. At Dataplatr, we use Snowflake to implement data mesh architecture that supports distributed data products, making data accessible and reliable across all business domains.

What Is the Architecture of GCP?

The architecture of GCP (Google Cloud Platform) offers a modular and serverless ecosystem ideal for analytics, AI, and large-scale data workloads. Using tools like BigQuery, Dataflow, Looker, and Data Catalog, GCP supports real-time processing and decentralized data governance. It enables enterprises to build flexible, domain-led data mesh architectures on GCP, combining innovation with security and compliance.

Ready to Modernize Your Data Strategy?

Achieve the full potential of decentralized analytics with data mesh architecture built for scale. Partner with Dataplatr to design, implement, and optimize your data mesh across Azure, Snowflake, and GCP.

Read more at dataplatr.com

Orchestrating AI-driven data pipelines with Azure ADF and Databricks: An architectural evolution

In the fast-evolving landscape of enterprise data management, the integration of artificial intelligence (AI) into data pipelines has become a game-changer. In “Designing a metadata-driven ETL framework with Azure ADF: An architectural perspective,” I laid the groundwork for a scalable, metadata-driven ETL framework using Azure Data Factory (ADF). This approach streamlined data workflows by…
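The post doesn't show its framework, but a common shape for metadata-driven ETL in ADF is a Lookup activity that reads a control table, feeding a ForEach whose inner Copy activity takes its source and sink from each row. The sketch below builds that pipeline as a Python dict in the shape of ADF JSON; the control table `etl.ControlTable`, its columns (`SourcePath`, `TargetTable`, `IsEnabled`), and all dataset names are hypothetical. Keep in mind that a Lookup activity returns at most 5,000 rows.

```python
import json

CONTROL_QUERY = (
    "SELECT SourcePath, TargetTable FROM etl.ControlTable WHERE IsEnabled = 1"
)


def build_metadata_driven_pipeline(name: str) -> dict:
    """Lookup reads the control table; ForEach runs one Copy per enabled row."""
    lookup = {
        "name": "LookupControlTable",
        "type": "Lookup",
        "typeProperties": {
            "source": {"type": "AzureSqlSource", "sqlReaderQuery": CONTROL_QUERY},
            "dataset": {"referenceName": "ControlDbDataset", "type": "DatasetReference"},
            "firstRowOnly": False,  # return every row, not just the first
        },
    }
    copy_per_row = {
        "name": "CopyOneEntry",
        "type": "Copy",
        "inputs": [{
            "referenceName": "GenericSourceFiles",  # hypothetical parameterized dataset
            "type": "DatasetReference",
            "parameters": {"path": "@item().SourcePath"},
        }],
        "outputs": [{
            "referenceName": "GenericSqlTable",  # hypothetical parameterized dataset
            "type": "DatasetReference",
            "parameters": {"tableName": "@item().TargetTable"},
        }],
        "typeProperties": {
            "source": {"type": "DelimitedTextSource"},
            "sink": {"type": "AzureSqlSink"},
        },
    }
    for_each = {
        "name": "ForEachControlRow",
        "type": "ForEach",
        "dependsOn": [{"activity": "LookupControlTable", "dependencyConditions": ["Succeeded"]}],
        "typeProperties": {
            # With firstRowOnly = false, the Lookup rows are exposed as output.value.
            "items": {"value": "@activity('LookupControlTable').output.value", "type": "Expression"},
            "activities": [copy_per_row],
        },
    }
    return {"name": name, "properties": {"activities": [lookup, for_each]}}


print(json.dumps(build_metadata_driven_pipeline("MetadataDrivenIngest"), indent=2))
```

With this layout, onboarding a new source means inserting a row into the control table rather than editing the pipeline.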

Best Software Training Institute for Azure Cloud Data Engineers

Unlock Your Cloud Career with the Best Software Training Institute for Azure Cloud Data Engineer – Simpleguru

Are you ready to build a rewarding career as an Azure Cloud Data Engineer? At Simpleguru, we pride ourselves on being the best software training institute for Azure Cloud Data Engineer aspirants who want to master the skills the industry demands.

Our comprehensive Azure Cloud Data Engineer training program is crafted by certified professionals with real-world experience in cloud solutions, data pipelines, and big data analytics. We believe learning should be practical, flexible, and job-focused — so our course blends interactive live sessions, hands-on labs, and real-time projects to ensure you gain the confidence to tackle any cloud data challenge.

Simpleguru stands out because we genuinely care about your career success. From day one, you’ll benefit from personalized mentorship, doubt-clearing sessions, and career guidance. We don’t just train you — we empower you with mock interviews, resume building, and placement assistance, so you’re truly job-ready for top MNCs and startups seeking Azure Cloud Data Engineers.

Whether you’re an IT professional looking to upskill, a fresh graduate dreaming of your first cloud job, or someone planning a career switch, Simpleguru makes it easy to learn at your pace. Our Azure Cloud Data Engineer course covers essential topics like data storage, data transformation, Azure Synapse Analytics, Azure Data Factory, and monitoring cloud solutions — all mapped to the latest Microsoft certification standards.

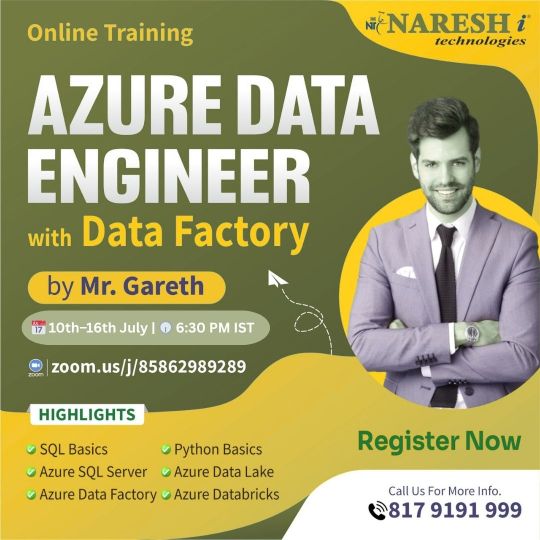

☁️📂 Azure Data Engineering with Data Factory – FREE Demo by Mr. Gareth!
📅 10th to 16th July | 🕡 6:30 PM IST
🔗 Register Here: https://tr.ee/0e4lJF
📘 More Free IT Demos: https://linktr.ee/ITcoursesFreeDemos

Looking to build a future-proof career in the cloud data space? Don’t miss this FREE hands-on Azure Data Engineering demo hosted by Mr. Gareth, exclusively at NareshIT. Gain real-time exposure to Azure Data Factory (ADF) and understand how to design, build, and manage scalable data pipelines.

This 7-day free demo will guide you through data ingestion, transformation, orchestration, and automation using ADF, Azure Synapse, Blob Storage, SQL Server, and Power BI integration. Perfect for beginners and professionals aiming to crack the DP-203 Azure Data Engineer certification.

👨‍💻 Perfect For:
✅ Data Analysts & BI Developers
✅ ETL Engineers & Cloud Learners
✅ Freshers & Job Seekers
✅ Tech Professionals Upskilling for Azure

🎯 Key Learning Areas:
✔️ ADF Architecture & Pipelines
✔️ Real-Time Data Projects
✔️ Certification Readiness
✔️ Interview & Resume Tips
✔️ Job Placement Assistance

📢 Seats are limited – reserve yours now and accelerate your career in Azure Data Engineering!

#AzureDataEngineering#ADFTraining#AzureDataFactory#NareshIT#FreeDemo#DP203Certification#ETLCareers#CloudDataPipeline#MicrosoftAzure#DataEngineer2025#NareshITDemo#AzureBootcamp#BigDataTraining#TechCourses#TumblrSEO#CloudSkills#PowerBIIntegration#JobReadySkills#StudentSuccess#OnlineITTraining

🌐📊 FREE Azure Data Engineering Demo – Master ADF with Mr. Gareth!
📅 10th to 16th July | 🕡 6:30 PM IST
🔗 Register Now: https://tr.ee/0e4lJF
🎓 Explore More Free IT Demos: https://linktr.ee/ITcoursesFreeDemos

Step into the future of data with NareshIT’s exclusive FREE demo series on Azure Data Engineering, led by Mr. Gareth, a seasoned cloud expert. This career-transforming session introduces you to Azure Data Factory, one of the most powerful data integration tools in the cloud ecosystem.

Learn how to design, build, and orchestrate complex data pipelines using Azure Data Factory, Data Lake, Synapse Analytics, Azure Blob Storage, and more. Whether you're preparing for the DP-203 certification or looking to upskill for real-world data engineering roles, this is the perfect starting point.

👨‍💼 Ideal For:
✅ Aspiring Data Engineers
✅ BI & ETL Developers
✅ Cloud Enthusiasts & Freshers
✅ Professionals aiming for Azure Certification

🔍 What You’ll Learn:
✔️ Real-Time Data Pipeline Creation
✔️ Data Flow & Orchestration
✔️ Azure Integration Services
✔️ Hands-On Labs + Career Mentorship

📢 Limited seats available – secure yours now and elevate your cloud data skills!

#AzureDataEngineering#DataFactory#NareshIT#FreeDemo#ADFTraining#AzureCertification#DataPipeline#CloudEngineer#DP203#AzureBootcamp#TechTraining#OnlineLearning#TumblrSEO#BITraining#DataIntegration#JobReadySkills#MicrosoftAzure#ETLDeveloper#DataAnalytics#NareshITDemo

☁️📊 “Cloud Wars: Building Big Data Pipelines on AWS, Azure & GCP!”

Big data needs big muscle—and cloud platforms like AWS, Microsoft Azure, and GCP deliver just that! Whether it’s S3 & EMR on AWS, Data Factory on Azure, or BigQuery on GCP, building a big data pipeline is easier (and smarter) than ever. With the best online professional certificates and live courses for professionals, you can master each cloud’s ecosystem. At TutorT Academy, we train you to ingest, process, and analyze data across clouds like a true data gladiator.

#BigDataPipeline #CloudComputingSkills #TutorTAcademy #LiveCoursesForProfessionals #BestOnlineProfessionalCertificates

🌐 Azure Data Engineer with Data Factory

📅 10th July | ⏰ 6:30 PM IST
👨‍🏫 Trainer: Mr. Gareth
🔗 https://tr.ee/AZD10JU
Learn real-time pipelines, integration tools, Azure Synapse & more.
