
Azure Data Factory Components

Azure Data Factory Components are as below:

Pipelines: The Workflow Container

A Pipeline in Azure Data Factory is a container that holds a set of activities meant to perform a specific task. Think of it as the blueprint for your data movement or transformation logic. Pipelines allow you to define the order of execution, configure dependencies, and reuse logic with parameters. Whether you’re ingesting raw files from a data lake, transforming them using Mapping Data Flows, or loading them into an Azure SQL Database or Synapse, the pipeline coordinates all the steps. As one of the key Azure Data Factory components, the pipeline provides centralized management and monitoring of the entire workflow.
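To make "order of execution" and "dependencies" concrete, here is a minimal Python sketch. The dictionary loosely mirrors the shape of ADF pipeline JSON (`parameters`, `activities`, `dependsOn`, `dependencyConditions`). The pipeline name, the `runDate` parameter, and the activity names are hypothetical, and the `typeProperties` each real activity needs are omitted. The helper computes one valid run order from the declared dependencies.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline shaped like ADF pipeline JSON (typeProperties omitted).
pipeline = {
    "name": "IngestSalesPipeline",
    "properties": {
        "parameters": {"runDate": {"type": "String"}},
        "activities": [
            {"name": "CopyRawFiles", "type": "Copy", "dependsOn": []},
            {
                "name": "TransformSales",
                "type": "ExecuteDataFlow",  # runs a Mapping Data Flow
                "dependsOn": [
                    {"activity": "CopyRawFiles", "dependencyConditions": ["Succeeded"]}
                ],
            },
            {
                "name": "LoadToSql",
                "type": "SqlServerStoredProcedure",
                "dependsOn": [
                    {"activity": "TransformSales", "dependencyConditions": ["Succeeded"]}
                ],
            },
        ],
    },
}


def execution_order(definition: dict) -> list:
    """Return activity names in an order that respects every dependsOn edge."""
    graph = {
        activity["name"]: {dep["activity"] for dep in activity.get("dependsOn", [])}
        for activity in definition["properties"]["activities"]
    }
    return list(TopologicalSorter(graph).static_order())


print(execution_order(pipeline))
```

ADF itself runs activities that have no dependency between them in parallel, so a topological order is only one valid sequence, not the exact schedule the service follows.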

Activities: The Operational Units

Activities are the actual tasks executed within a pipeline. Each activity performs a discrete function like copying data, transforming it, running stored procedures, or triggering notebooks in Databricks. Among the Azure Data Factory components, activities provide the processing logic. They come in multiple types:

Data Movement Activities – Copy Activity

Data Transformation Activities – Mapping Data Flow

Control Activities – If Condition, ForEach

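External Compute Activities – HDInsight, Azure Machine Learning, Databricks (Microsoft's documentation groups these with data transformation activities that run on external compute)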

This modular design allows engineers to handle everything from batch jobs to event-driven ETL pipelines efficiently.

Triggers: Automating Pipeline Execution

Triggers are another core part of the Azure Data Factory components. They define when a pipeline should execute, enabling automation by launching pipelines on a time schedule or in response to events. Pipelines can also be started manually on demand.

Types of triggers include:

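Schedule Trigger – Executes on a wall-clock schedule (for example, daily at 02:00)

Tumbling Window Trigger – Fires for a series of fixed-size, non-overlapping time windows, which also makes it suitable for backfilling past periods

Event-based Trigger – Responds to events such as a file being created or deleted in Azure Blob Storage or Data Lake Storage, or to custom events

Manual (On-Demand) Run – Started through the portal ("Trigger now"), the REST API, the SDKs, or PowerShell; strictly speaking this is an on-demand run rather than a trigger object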

Triggers remove the need for external schedulers and make ADF workflows truly serverless and dynamic.
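As a sketch of how a schedule trigger is declared and which run times it implies, the Python dictionary below follows the general shape of an ADF `ScheduleTrigger` definition (a recurrence plus the pipeline it starts, with `@trigger().scheduledTime` passed as a parameter). The trigger and pipeline names are hypothetical. `next_runs` is an illustrative helper, not part of any ADF SDK: it handles only fixed-length recurrences and treats every time as UTC.

```python
from datetime import datetime, timedelta, timezone

trigger = {
    "name": "DailyAt2am",  # hypothetical trigger name
    "properties": {
        "type": "ScheduleTrigger",
        "typeProperties": {
            "recurrence": {
                "frequency": "Day",
                "interval": 1,
                "startTime": "2024-01-01T02:00:00Z",
                "timeZone": "UTC",
            }
        },
        "pipelines": [
            {
                "pipelineReference": {
                    "referenceName": "IngestSalesPipeline",  # hypothetical
                    "type": "PipelineReference",
                },
                "parameters": {"runDate": "@trigger().scheduledTime"},
            }
        ],
    },
}

# Fixed-length frequencies only; "Month" and advanced schedules (specific hours,
# weekdays, month days) need calendar-aware logic that this sketch leaves out.
STEP = {
    "Minute": timedelta(minutes=1),
    "Hour": timedelta(hours=1),
    "Day": timedelta(days=1),
    "Week": timedelta(weeks=1),
}


def next_runs(definition: dict, after: datetime, count: int) -> list:
    """Return the next `count` scheduled times strictly after `after` (UTC assumed)."""
    recurrence = definition["properties"]["typeProperties"]["recurrence"]
    start = datetime.fromisoformat(recurrence["startTime"].replace("Z", "+00:00"))
    step = STEP[recurrence["frequency"]] * recurrence["interval"]
    if after < start:
        first = start
    else:
        # Whole steps elapsed since start, plus one, lands on the next run.
        first = start + ((after - start) // step + 1) * step
    return [first + i * step for i in range(count)]


for run in next_runs(trigger, datetime(2024, 3, 5, 12, 0, tzinfo=timezone.utc), 3):
    print(run.isoformat())
```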

How These Components Work Together

The synergy between pipelines, activities, and triggers defines the power of ADF. Triggers initiate pipelines, which in turn execute a sequence of activities. This trio of Azure Data Factory components provides a flexible, reusable, and fully managed framework to build complex data workflows across multiple data sources, destinations, and formats.

Conclusion

To summarize, Pipelines, Activities & Triggers are foundational Azure Data Factory components. Together, they form a powerful data orchestration engine that supports modern cloud-based data engineering. Mastering these elements enables engineers to build scalable, fault-tolerant, and automated data solutions. Whether you’re managing daily ingestion processes or building real-time data platforms, a solid understanding of these components is key to unlocking the full potential of Azure Data Factory.

At Learnomate Technologies, we don’t just teach tools, we train you with real-world, hands-on knowledge that sticks. Our Azure Data Engineering training program is designed to help you crack job interviews, build solid projects, and grow confidently in your cloud career.

Want to see how we teach? Hop over to our YouTube channel for bite-sized tutorials, student success stories, and technical deep-dives explained in simple English.

Ready to get certified and hired? Check out our Azure Data Engineering course page for full curriculum details, placement assistance, and batch schedules.

Curious about who’s behind the scenes? I’m Ankush Thavali, founder of Learnomate and your trainer for all things cloud and data. Let’s connect on LinkedIn—I regularly share practical insights, job alerts, and learning tips to keep you ahead of the curve.

And hey, if this article got your curiosity going…

Thanks for reading. Now it’s time to turn this knowledge into action. Happy learning and see you in class or in the next blog!

Happy Vibes!

ANKUSH


What Are IoT Platforms Really Doing Behind the Scenes?

In a world where everyday objects are becoming smarter, the term IoT Platforms is often thrown around. But what exactly are these platforms doing behind the scenes? From your smart watch to your smart refrigerator, these platforms quietly power millions of devices, collecting, transmitting, analyzing, and responding to data. If you’ve ever asked yourself how the Internet of Things works so seamlessly, the answer lies in robust IoT platforms.

Understanding the Role of IoT Platforms

At their core, IoT Platforms are the backbone of any IoT ecosystem. They serve as the middleware that connects devices, networks, cloud services, and user-facing applications. These platforms handle a wide range of tasks, including data collection, remote device management, analytics, and integration with third-party services.

Whether you're deploying a fleet of sensors in agriculture or building a smart city grid, IoT Platforms provide the essential infrastructure that makes real-time communication and automation possible. These functions are covered in the Complete Guide For IoT Software Development, which breaks down the layers and technologies involved in the IoT ecosystem.

Why Businesses Need IoT Platforms

In the past, deploying IoT solutions meant piecing together various tools and writing extensive custom code. Today, IoT Platforms offer ready-to-use frameworks that drastically reduce time-to-market and development effort. These platforms allow businesses to scale easily, ensuring their solutions are secure, adaptable, and future-ready.

That's where IoT Development Experts come in. They use these platforms to streamline device onboarding, automate firmware updates, and implement edge computing, allowing devices to respond instantly even with minimal internet access.

Types of IoT Platforms

Not all IoT Platforms are created equal. Some specialize in device management, others in analytics, and some in end-to-end IoT application delivery. The major types include:

Connectivity Management Platforms (e.g., Twilio, Cisco Jasper)

Cloud-Based IoT Platforms (e.g., AWS IoT, Azure IoT Hub; Google retired its Cloud IoT Core service in August 2023)

Application Enablement Platforms (e.g., ThingWorx, Bosch IoT Suite)

Edge-to-Cloud Platforms (e.g., Balena, Particle)

Choosing the right one depends on your project size, goals, and industry. A professional IoT Network Management strategy is key to ensuring reliable connectivity and data integrity across thousands of devices.

Key Features Behind the Scenes

So, what are IoT Platforms actually doing in the background?

Device provisioning & authentication

Real-time data streaming

Cloud-based storage and analysis

Machine learning and automation

API integrations for dashboards and third-party tools

Remote updates and performance monitoring
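Device authentication is easier to picture with an example. Azure IoT Hub, for instance, documents shared access signature (SAS) tokens built by signing the resource URI and an expiry time with HMAC-SHA256. The Python sketch below follows that documented scheme. The hub name, device ID, and key are hypothetical, and production devices would normally rely on the provider's device SDK or X.509 certificates rather than hand-rolled signing.

```python
import hmac
from base64 import b64decode, b64encode
from hashlib import sha256
from typing import Optional
from urllib.parse import quote_plus, urlencode


def generate_sas_token(resource_uri: str, key_b64: str, expiry_epoch: int,
                       policy_name: Optional[str] = None) -> str:
    """Build a SharedAccessSignature string valid until `expiry_epoch` (Unix seconds)."""
    # The signed string is the URL-encoded resource URI, a newline, then the expiry.
    string_to_sign = f"{quote_plus(resource_uri)}\n{expiry_epoch}".encode("utf-8")
    # Sign with HMAC-SHA256 using the base64-decoded shared key.
    digest = hmac.new(b64decode(key_b64), string_to_sign, sha256).digest()
    fields = {"sr": resource_uri, "sig": b64encode(digest).decode("ascii"),
              "se": str(expiry_epoch)}
    if policy_name is not None:
        fields["skn"] = policy_name  # only used with hub-level shared access policies
    return "SharedAccessSignature " + urlencode(fields)


demo_key = b64encode(b"not-a-real-device-key").decode("ascii")  # hypothetical key
print(generate_sas_token("myhub.azure-devices.net/devices/sensor-01", demo_key, 1_700_000_000))
```

Because the token embeds its own expiry, the platform can reject it after that time without a lookup, which is one reason short-lived tokens are preferred for devices.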

Many businesses don’t realize just how much happens beyond the interface — the platform acts like an orchestra conductor, keeping every component in sync.

Book an appointment with our IoT experts today to discover the ideal platform for your connected project!

Real-World Applications of IoT Platforms

From smart homes and connected cars to predictive maintenance in factories, IoT Platforms are behind some of the most impressive use cases in tech today. These platforms enable real-time decision-making and automation in:

Healthcare: Remote patient monitoring

Retail: Inventory tracking via sensors

Agriculture: Smart irrigation and weather prediction

Manufacturing: Equipment health and safety alerts

According to a report on the 10 Leading IoT Service Providers, businesses that use advanced IoT platforms see faster ROI, greater operational efficiency, and more robust data-driven strategies.

Cost Considerations and ROI

Before diving in, it’s important to understand the cost implications of using IoT Platforms. While cloud-based platforms offer flexibility, costs can spiral if not planned well. Consider usage-based pricing, storage needs, number of connected devices, and data transfer volume.

Tools like IoT Cost Calculators can provide a ballpark estimate of platform costs, helping you plan resources accordingly. Keep in mind that the right platform may cost more upfront but save significantly on long-term maintenance and scalability.
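A rough cost model can be written down before talking to vendors. The Python sketch below multiplies out the drivers listed above (devices, message volume, storage, and data transfer). Every unit price in it is a hypothetical placeholder, not any provider's published rate, and it simplifies storage by billing the whole month's ingested data as stored for that month.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    devices: int
    messages_per_device_per_day: int
    bytes_per_message: int
    days: int = 30


@dataclass
class PriceSheet:  # hypothetical prices, for illustration only
    per_device_month: float
    per_million_messages: float
    per_gb_stored_month: float
    per_gb_egress: float
    egress_fraction: float  # share of ingested data sent out of the cloud


def monthly_cost(w: Workload, p: PriceSheet) -> dict:
    """Break a month's estimated cost into its main drivers plus a total."""
    messages = w.devices * w.messages_per_device_per_day * w.days
    gigabytes = messages * w.bytes_per_message / 1e9
    costs = {
        "devices": w.devices * p.per_device_month,
        "messages": messages / 1e6 * p.per_million_messages,
        "storage": gigabytes * p.per_gb_stored_month,
        "egress": gigabytes * p.egress_fraction * p.per_gb_egress,
    }
    costs["total"] = sum(costs.values())
    return costs


estimate = monthly_cost(
    Workload(devices=1_000, messages_per_device_per_day=1_440, bytes_per_message=500),
    PriceSheet(per_device_month=0.05, per_million_messages=1.00,
               per_gb_stored_month=0.02, per_gb_egress=0.09, egress_fraction=0.10),
)
print({k: round(v, 2) for k, v in estimate.items()})
```

With 1,000 devices each sending a 500-byte message every minute, the model counts 43.2 million messages and 21.6 GB per 30-day month; substituting a provider's actual price sheet is what turns this into a usable estimate.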

Custom vs Off-the-Shelf IoT Platforms

For businesses with unique needs, standard platforms might not be enough. That’s when Custom IoT Development Services come into play. These services build platforms tailored to specific workflows, device ecosystems, and security requirements. While they take longer to develop, they offer better control, performance, and adaptability.

A custom-built platform can integrate directly with legacy systems, enable proprietary protocols, and offer highly secure communication — making it a smart long-term investment for enterprises with specialized operations.

Common Challenges with IoT Platforms

Even the best IoT Platforms face challenges, such as:

Data overload and poor filtering

Device interoperability issues

Security vulnerabilities

Network latency and offline support

Difficulty in scaling across global deployments
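One common answer to data overload is filtering at the edge before anything is sent. The Python sketch below shows a simple deadband filter: a reading is forwarded only if it differs from the last forwarded value by more than a threshold, or if a maximum silence interval has passed so the platform still receives a keep-alive. The threshold, interval, and sample readings are illustrative assumptions.

```python
from typing import Iterable, Iterator, Optional, Tuple

Reading = Tuple[float, float]  # (timestamp in seconds, measured value)


def deadband(readings: Iterable[Reading], threshold: float,
             max_silence_s: float) -> Iterator[Reading]:
    """Forward a reading only if it moved more than `threshold` since the last
    forwarded reading, or if `max_silence_s` has elapsed (a keep-alive)."""
    last_t: Optional[float] = None
    last_v: Optional[float] = None
    for t, v in readings:
        if (last_v is None or abs(v - last_v) > threshold
                or t - last_t >= max_silence_s):
            last_t, last_v = t, v
            yield t, v


raw = [(0, 20.0), (10, 20.1), (20, 20.2), (30, 21.0), (40, 21.05), (400, 21.05)]
sent = list(deadband(raw, threshold=0.5, max_silence_s=300))
print(f"forwarded {len(sent)} of {len(raw)} readings: {sent}")
```

Only three of the six sample readings are forwarded: the first, the jump to 21.0, and a keep-alive after a long quiet period.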

That’s why working with experienced IoT Development Experts and having strong IoT Network Management practices is crucial. They ensure your platform setup remains agile, secure, and adaptable to new technologies and compliance standards.

Final Thoughts: Choosing the Right IoT Platform

In a hyper-connected world, IoT Platforms are more than just back-end tools — they are strategic enablers of smart business solutions. From managing billions of data points to enabling automation and predictive analytics, these platforms quietly power the future.

Whether you choose a pre-built platform or go custom, the key is to align your choice with your business goals, device complexity, and data needs.


Hybrid Cloud Application: The Smart Future of Business IT

Introduction

In today’s digital-first environment, businesses are constantly seeking scalable, flexible, and cost-effective solutions to stay competitive. One solution that is gaining rapid traction is the hybrid cloud application model. Combining the best of public and private cloud environments, hybrid cloud applications enable businesses to maximize performance while maintaining control and security.

This article explains what hybrid cloud applications are, why they matter, how they work, their benefits, and how businesses can use them effectively. We also include reviews from industry professionals, expert insights, and FAQs to help guide your cloud journey.

What is a Hybrid Cloud Application?

A hybrid cloud application is a software solution that operates across both public and private cloud environments. It enables data, services, and workflows to move seamlessly between the two, offering flexibility and optimization in terms of cost, performance, and security.

For example, a business might host sensitive customer data in a private cloud while running less critical workloads on a public cloud like AWS, Azure, or Google Cloud Platform.

Key Components of Hybrid Cloud Applications

Public Cloud Services – Scalable and cost-effective compute and storage offered by providers like AWS, Azure, and GCP.

Private Cloud Infrastructure – Dedicated environments that give greater control over data and security, either on-premises or managed by a third party.

Middleware/Integration Tools – Platforms that ensure communication and data sharing between cloud environments.

Application Orchestration – Manages application deployment and performance across both clouds.

Why Choose a Hybrid Cloud Application Model?

1. Flexibility

Run workloads where they make the most sense, optimizing both performance and cost.

2. Security and Compliance

Sensitive data can remain in a private cloud to meet regulatory requirements.

3. Scalability

Burst into public cloud resources when private cloud capacity is reached.

4. Business Continuity

Keep services available during outages by distributing workloads across private and public environments.

5. Cost Efficiency

Avoid overprovisioning private infrastructure while still meeting demand spikes.
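The flexibility, compliance, and bursting points above can be expressed as a simple placement rule. This Python sketch, with hypothetical workload names and capacity measured in CPU cores, pins sensitive workloads to the private cloud, fills the remaining private capacity first-fit, and bursts the rest to a public cloud. Real schedulers also weigh cost, latency, and where the data lives; this only illustrates the idea.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    cpu_cores: int
    sensitive: bool  # e.g. regulated customer or patient data


def place(workloads: list, private_capacity_cores: int) -> dict:
    """Return {workload name: "private" or "public"}."""
    placement = {}
    free = private_capacity_cores
    # 1. Compliance first: sensitive workloads never leave the private cloud.
    for w in workloads:
        if w.sensitive:
            placement[w.name] = "private"
            free -= w.cpu_cores
    if free < 0:
        raise ValueError("private capacity is too small for the sensitive workloads")
    # 2. Fill leftover private capacity first-fit; 3. burst the rest to public cloud.
    for w in workloads:
        if w.sensitive:
            continue
        if w.cpu_cores <= free:
            placement[w.name] = "private"
            free -= w.cpu_cores
        else:
            placement[w.name] = "public"
    return placement


demand = [Workload("customer-db", 8, True), Workload("web", 4, False),
          Workload("analytics", 12, False), Workload("batch", 2, False)]
print(place(demand, private_capacity_cores=16))
```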

Real-World Use Cases of Hybrid Cloud Applications

1. Healthcare

Protect sensitive patient data in a private cloud while using public cloud resources for analytics and AI.

2. Finance

Securely handle customer transactions and compliance data, while leveraging the cloud for large-scale computations.

3. Retail and E-Commerce

Manage customer interactions and seasonal traffic spikes efficiently.

4. Manufacturing

Enable remote monitoring and IoT integrations across factory units using hybrid cloud applications.

5. Education

Store student records securely while using cloud platforms for learning management systems.

Benefits of Hybrid Cloud Applications

Enhanced Agility

Better Resource Utilization

Reduced Latency

Compliance Made Easier

Risk Mitigation

Simplified Workload Management

Tools and Platforms Supporting Hybrid Cloud

Microsoft Azure Arc – Extends Azure services and management to any infrastructure.

AWS Outposts – Run AWS infrastructure and services on-premises.

Google Anthos – Manage applications across multiple clouds.

VMware Cloud Foundation – Hybrid solution for virtual machines and containers.

Red Hat OpenShift – Kubernetes-based platform for hybrid deployment.

Best Practices for Developing Hybrid Cloud Applications

Design for Portability: Use containers and microservices to enable seamless movement between clouds.

Ensure Security: Implement zero-trust architectures, encryption, and access control.

Automate and Monitor: Use DevOps and continuous monitoring tools to maintain performance and compliance.

Choose the Right Partner: Work with experienced providers who understand hybrid cloud deployment strategies.

Regular Testing and Backup: Test failover scenarios and ensure robust backup solutions are in place.

Reviews from Industry Professionals

Amrita Singh, Cloud Engineer at FinCloud Solutions:

"Implementing hybrid cloud applications helped us reduce latency by 40% and improve client satisfaction."

John Meadows, CTO at EdTechNext:

"Our LMS platform runs on a hybrid model. We’ve achieved excellent uptime and student experience during peak loads."

Rahul Varma, Data Security Specialist:

"For compliance-heavy environments like finance and healthcare, hybrid cloud is a no-brainer."

Challenges and How to Overcome Them

1. Complex Architecture

Solution: Simplify with orchestration tools and automation.

2. Integration Difficulties

Solution: Use APIs and middleware platforms for seamless data exchange.

3. Cost Overruns

Solution: Use cloud cost optimization tools such as Azure Advisor and AWS Cost Explorer.

4. Security Risks

Solution: Implement multi-layered security protocols and conduct regular audits.

FAQ: Hybrid Cloud Application

Q1: What is the main advantage of a hybrid cloud application?

A: It combines the strengths of public and private clouds for flexibility, scalability, and security.

Q2: Is hybrid cloud suitable for small businesses?

A: Yes, especially those with fluctuating workloads or compliance needs.

Q3: How secure is a hybrid cloud application?

A: When properly configured, hybrid cloud applications can be as secure as traditional setups.

Q4: Can hybrid cloud reduce IT costs?

A: Yes, by paying for public cloud capacity only when it is needed and avoiding overprovisioned private servers.

Q5: How do you monitor a hybrid cloud application?

A: With cloud management platforms and monitoring tools like Datadog, Splunk, or Prometheus.

Q6: What are the best platforms for hybrid deployment?

A: Azure Arc, Google Anthos, AWS Outposts, and Red Hat OpenShift are top choices.

Conclusion: Hybrid Cloud is the New Normal

The hybrid cloud application model is more than a trend—it’s a strategic evolution that empowers organizations to balance innovation with control. It offers the agility of the cloud without sacrificing the oversight and security of on-premises systems.

If your organization is looking to modernize its IT infrastructure while staying compliant, resilient, and efficient, then hybrid cloud application development is the way forward.

At diglip7.com, we help businesses build scalable, secure, and agile hybrid cloud solutions tailored to their unique needs. Ready to unlock the future? Contact us today to get started.


IoT Installation Services: Enabling Smart, Connected Solutions Across Industries

The Internet of Things (IoT) has moved from a futuristic concept to an everyday necessity across industries. From smart homes and connected healthcare to intelligent factories and energy-efficient buildings, IoT technology is transforming how we live and work. However, behind every seamless smart device experience is a robust infrastructure — and that’s where IoT Installation Services come in.

In this article, we’ll explore what IoT installation services entail, why they’re essential, and how businesses can benefit from professional IoT deployment.

What Are IoT Installation Services?

IoT installation services encompass the planning, setup, integration, and maintenance of IoT devices and systems. These services ensure that connected hardware, software, and networks work in harmony to deliver reliable and secure data-driven insights.

Whether deploying a fleet of smart thermostats in a commercial building or installing asset tracking sensors in a logistics facility, IoT installation providers handle everything from hardware configuration to network connectivity and cloud integration.

Key Components of IoT Installation Services

1. Site Assessment and Planning

Before any installation begins, a professional assessment is conducted to understand the environment, device requirements, infrastructure compatibility, and connectivity needs. This includes evaluating Wi-Fi strength, power availability, and integration points with existing systems.
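For a first-pass estimate of Wi-Fi signal strength during site assessment, planners often start from free-space path loss (FSPL). The Python sketch below computes FSPL and an estimated received signal strength. It ignores interference and multipath, so the wall-loss allowance, the -67 dBm target, and the example values (20 dBm transmit power, 2.4 GHz, 10 m) are assumptions that an on-site survey should replace with measurements.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0
MIN_RSSI_DBM = -67.0  # assumed target; set per device vendor and application


def free_space_path_loss_db(distance_m: float, frequency_hz: float) -> float:
    """FSPL(dB) = 20*log10(d) + 20*log10(f) + 20*log10(4*pi/c)."""
    return (20 * math.log10(distance_m)
            + 20 * math.log10(frequency_hz)
            + 20 * math.log10(4 * math.pi / SPEED_OF_LIGHT_M_S))


def estimated_rssi_dbm(tx_power_dbm: float, distance_m: float, frequency_hz: float,
                       antenna_gains_dbi: float = 0.0, extra_losses_db: float = 0.0) -> float:
    """Transmit power plus antenna gains, minus FSPL and any extra allowance (walls, etc.)."""
    return (tx_power_dbm + antenna_gains_dbi
            - free_space_path_loss_db(distance_m, frequency_hz) - extra_losses_db)


rssi = estimated_rssi_dbm(20.0, 10.0, 2.4e9, extra_losses_db=2 * 5.0)  # two walls, assumed 5 dB each
print(f"estimated RSSI: {rssi:.1f} dBm, meets target: {rssi >= MIN_RSSI_DBM}")
```

Free space alone accounts for about 60 dB at 10 m and 2.4 GHz; the assumed wall losses bring the estimate to roughly -50 dBm.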

2. Device Procurement and Configuration

Certified technicians source and configure IoT hardware, such as sensors, gateways, cameras, and smart appliances. These devices are programmed with the correct firmware, security protocols, and communication standards (e.g., Zigbee, LoRaWAN, Bluetooth, or Wi-Fi).

3. Network Setup and Optimization

A stable, secure network is critical for IoT performance. Installation teams establish local area networks (LAN), cloud-based connections, or edge computing setups as needed. They also ensure low-latency communication and minimal data loss.

4. Integration with Platforms and Applications

IoT systems need to connect with cloud dashboards, APIs, or mobile apps to extract and analyze data. Installers ensure smooth integration with platforms such as AWS IoT, Azure IoT Hub, or custom software solutions.

5. Testing and Quality Assurance

After deployment, thorough testing is conducted to ensure all devices function correctly, communicate effectively, and meet security standards.
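Part of that testing can be automated. The Python sketch below compares the list of devices that should exist against the heartbeats and self-test results actually received. The device IDs, the five-minute freshness window, and the shape of the inputs are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def acceptance_report(expected_ids, last_heartbeat, self_test_ok, now, max_age):
    """Summarize which installed devices are missing, stale, or failing self-test."""
    missing = sorted(d for d in expected_ids if d not in last_heartbeat)
    stale = sorted(d for d in expected_ids
                   if d in last_heartbeat and now - last_heartbeat[d] > max_age)
    failed = sorted(d for d in expected_ids if self_test_ok.get(d) is False)
    return {
        "missing": missing,
        "stale": stale,
        "failed_self_test": failed,
        "passed": not (missing or stale or failed),
    }


now = datetime(2024, 6, 1, 12, 0, tzinfo=timezone.utc)
report = acceptance_report(
    expected_ids={"temp-01", "temp-02", "door-01"},
    last_heartbeat={"temp-01": now - timedelta(seconds=30),
                    "temp-02": now - timedelta(minutes=20)},
    self_test_ok={"temp-01": True, "temp-02": True},
    now=now,
    max_age=timedelta(minutes=5),
)
print(report)
```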

6. Ongoing Maintenance and Support

Many service providers offer ongoing support, including firmware updates, troubleshooting, and data analytics optimization to ensure long-term success.

Industries Benefiting from IoT Installation Services

🏢 Smart Buildings

IoT-enabled lighting, HVAC, access control, and occupancy sensors improve energy efficiency and occupant comfort. Installation services ensure that all devices are deployed in the optimal configuration for performance and scalability.

🏭 Manufacturing and Industry 4.0

IoT sensors monitor equipment health, environmental conditions, and production metrics in real time. A professional setup ensures industrial-grade connectivity and safety compliance.

🚚 Logistics and Supply Chain

Track assets, monitor fleet performance, and manage inventory with GPS-enabled and RFID IoT systems. Proper installation is key to ensuring accurate tracking and data synchronization.

🏥 Healthcare

Connected medical devices and monitoring systems improve patient care. Installation services help ensure networks are configured to support HIPAA compliance and that systems integrate reliably.

🏠 Smart Homes

IoT installation for consumers includes smart thermostats, home security systems, lighting controls, and voice assistant integration. Professional installers make these systems plug-and-play for homeowners.

Benefits of Professional IoT Installation Services

✅ Faster Deployment

Experienced technicians streamline the setup process, reducing time-to-operation and minimizing costly delays.

✅ Improved Security

Proper configuration prevents vulnerabilities like default passwords, insecure ports, or unauthorized access.

✅ Seamless Integration

Avoid system incompatibility issues with tailored integration into existing platforms and infrastructure.

✅ Optimized Performance

Professional installation ensures devices operate efficiently, with optimal placement, signal strength, and network settings.

✅ Scalability

A professionally installed IoT system is built with future expansion in mind — additional devices can be added without overhauling the setup.
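The security benefit depends on checks being applied consistently to every device. The Python sketch below audits a device's configuration before it goes live. The lists of default passwords and insecure ports, and the configuration keys (`password`, `open_ports`, `tls_enabled`), are hypothetical; in practice they would come from vendor documentation and your own security policy.

```python
KNOWN_DEFAULT_PASSWORDS = {"admin", "password", "1234", "default"}  # hypothetical list
INSECURE_PORTS = {21: "FTP", 23: "Telnet", 80: "unencrypted HTTP"}  # hypothetical policy


def audit_device(config: dict) -> list:
    """Return human-readable findings; an empty list means every check passed."""
    findings = []
    if str(config.get("password", "")).lower() in KNOWN_DEFAULT_PASSWORDS:
        findings.append("default or trivial password")
    for port in sorted(config.get("open_ports", [])):
        if port in INSECURE_PORTS:
            findings.append(f"insecure port {port} ({INSECURE_PORTS[port]}) is open")
    if not config.get("tls_enabled", False):
        findings.append("TLS disabled for cloud traffic")
    return findings


print(audit_device({"password": "admin", "open_ports": [443, 23], "tls_enabled": True}))
```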

Choosing the Right IoT Installation Service Provider

When selecting an IoT installation partner, consider the following:

Experience in Your Industry: Choose providers with proven experience in your specific sector.

Certifications and Compliance: Ensure the team adheres to industry standards and data privacy regulations.

End-to-End Services: Look for a provider that offers planning, installation, integration, and ongoing support.

Vendor Neutrality: Providers who work with multiple hardware and software platforms can recommend the best tools for your needs.

Client References: Ask for case studies or testimonials from similar projects.

The Future of IoT Deployment

As IoT ecosystems become more complex, installation services will evolve to include:

AI-Driven Configuration Tools: Automatically detect optimal device placement and settings.

Digital Twins: Simulate environments for pre-deployment planning.

Edge Computing Integration: Reduce latency and bandwidth usage by processing data closer to the source.

5G Deployment: Enable ultra-fast and low-latency communication for time-sensitive IoT applications.

Conclusion

As the foundation of any smart technology ecosystem, IoT installation services play a crucial role in turning innovative ideas into fully functional, connected solutions. Whether you're upgrading a single building or deploying thousands of sensors across a global operation, professional installation ensures efficiency, security, and long-term value.

By partnering with skilled IoT installers, businesses can focus on leveraging real-time data and automation to drive performance — rather than worrying about the complexity of getting systems up and running.


How to Optimize ETL Pipelines for Performance and Scalability

As data continues to grow in volume, velocity, and variety, the importance of optimizing your ETL pipeline for performance and scalability cannot be overstated. An ETL (Extract, Transform, Load) pipeline is the backbone of any modern data architecture, responsible for moving and transforming raw data into valuable insights. However, without proper optimization, even a well-designed ETL pipeline can become a bottleneck, leading to slow processing, increased costs, and data inconsistencies.

Whether you're building your first pipeline or scaling existing workflows, this guide will walk you through the key strategies to improve the performance and scalability of your ETL pipeline.

1. Design with Modularity in Mind

The first step toward a scalable ETL pipeline is designing it with modular components. Break down your pipeline into independent stages — extraction, transformation, and loading — each responsible for a distinct task. Modular architecture allows for easier debugging, scaling individual components, and replacing specific stages without affecting the entire workflow.

For example:

Keep extraction scripts isolated from transformation logic

Use separate environments or containers for each stage

Implement well-defined interfaces for data flow between stages
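Here is a minimal Python sketch of that separation. Each stage is a plain function that exchanges an iterable of dictionary records, which serves as the well-defined interface in this example. The CSV layout, field names, and in-memory target list are stand-ins for real sources and warehouses.

```python
import csv
import io
from typing import Callable, Iterable, Iterator

Record = dict  # one row passed between stages


def extract_csv(text: str) -> Iterator[Record]:
    """Extract: parse CSV text into records; knows nothing about transforms."""
    yield from csv.DictReader(io.StringIO(text))


def transform(records: Iterable[Record]) -> Iterator[Record]:
    """Transform: clean and type-cast fields; knows nothing about sources or targets."""
    for r in records:
        yield {"customer": r["customer"].strip().title(), "amount": float(r["amount"])}


def make_loader(target: list) -> Callable[[Iterable[Record]], int]:
    """Load: append records to a target and report how many were written."""
    def load(records: Iterable[Record]) -> int:
        before = len(target)
        target.extend(records)
        return len(target) - before
    return load


def run_pipeline(extract: Callable[[], Iterable[Record]],
                 transform_fn: Callable[[Iterable[Record]], Iterable[Record]],
                 load: Callable[[Iterable[Record]], int]) -> int:
    """Wire the stages together; any stage can be swapped without touching the others."""
    return load(transform_fn(extract()))


source = "customer,amount\n alice ,10.5\nBOB,3\n"
warehouse: list = []
written = run_pipeline(lambda: extract_csv(source), transform, make_loader(warehouse))
print(written, warehouse)
```

Because the stages only agree on the record format, the CSV extractor could be replaced by a database reader, or the list by a warehouse client, without changing `transform`.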

2. Use Incremental Loads Over Full Loads

One of the biggest performance drains in ETL processes is loading the entire dataset every time. Instead, use incremental loads — only extract and process new or updated records since the last run. This reduces data volume, speeds up processing, and decreases strain on source systems.

Techniques to implement incremental loads include:

Using timestamps or change data capture (CDC)

Maintaining checkpoints or watermark tables

Leveraging database triggers or logs for change tracking

3. Leverage Parallel Processing

Modern data tools and cloud platforms support parallel processing, where multiple operations are executed simultaneously. By breaking large datasets into smaller chunks and processing them in parallel threads or workers, you can significantly reduce ETL run times.

Best practices for parallelism:

Partition data by time, geography, or IDs

Use multiprocessing in Python or distributed systems like Apache Spark

Optimize resource allocation in cloud-based ETL services
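A small Python illustration of partition-level parallelism with `concurrent.futures` follows. It partitions records by a key and summarizes each partition in a worker. It uses a thread pool, which suits I/O-bound work such as reading partitions from a remote source; for CPU-bound transforms, `ProcessPoolExecutor` offers the same interface and sidesteps the GIL. The record layout is hypothetical.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def partition_by(records, key):
    """Group records into partitions (here by region) that can be processed independently."""
    parts = defaultdict(list)
    for record in records:
        parts[record[key]].append(record)
    return dict(parts)


def summarize_partition(item):
    """Per-partition work; stands in for a real extract or transform call."""
    partition_key, rows = item
    return partition_key, sum(row["amount"] for row in rows)


def parallel_totals(records, key="region", workers=4):
    parts = partition_by(records, key)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map runs partitions concurrently and yields results in input order.
        return dict(pool.map(summarize_partition, parts.items()))


sales = [
    {"region": "eu", "amount": 10},
    {"region": "us", "amount": 5},
    {"region": "eu", "amount": 2},
    {"region": "apac", "amount": 7},
]
print(parallel_totals(sales))
```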

4. Push Down Processing to the Source System

Whenever possible, push computation to the database or source system rather than pulling data into your ETL tool for processing. Databases are optimized for query execution and can filter, sort, and aggregate data more efficiently.

Examples include:

Using SQL queries for filtering data before extraction

Aggregating large datasets within the database

Using stored procedures to perform heavy transformations

This minimizes data movement and improves pipeline efficiency.
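The difference is easy to see side by side. In this sketch (SQLite in memory, hypothetical `sales` table), the first function pulls every row and aggregates in Python, while the second pushes the filter and `GROUP BY` into the database so that only one row per region leaves it. Both return the same totals.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL, sale_date TEXT)")
conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", [
    ("eu", 10.0, "2024-01-01"), ("eu", 5.0, "2024-02-01"),
    ("us", 7.0, "2024-02-15"), ("us", 1.0, "2023-12-31"),
])


def totals_pulled(conn, since):
    """Anti-pattern: transfer every row, then filter and aggregate in Python."""
    totals = {}
    for region, amount, sale_date in conn.execute("SELECT region, amount, sale_date FROM sales"):
        if sale_date >= since:
            totals[region] = totals.get(region, 0.0) + amount
    return totals


def totals_pushed_down(conn, since):
    """Pushdown: the database filters and aggregates, returning one row per region."""
    query = "SELECT region, SUM(amount) FROM sales WHERE sale_date >= ? GROUP BY region"
    return dict(conn.execute(query, (since,)))


print(totals_pulled(conn, "2024-01-01"))
print(totals_pushed_down(conn, "2024-01-01"))
```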

5. Monitor, Log, and Profile Your ETL Pipeline

Optimization is not a one-time activity — it's an ongoing process. Use monitoring tools to track pipeline performance, identify bottlenecks, and collect error logs.

What to monitor:

Data throughput (rows/records per second)

CPU and memory usage

Job duration and frequency of failures

Time spent at each ETL stage

Popular tools include Apache Airflow for orchestration, Prometheus for metrics, and custom dashboards built on Grafana or Kibana.
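Stage-level timing is straightforward to add even before adopting a full monitoring stack. The Python sketch below wraps a stage in a context manager that records duration, row count, throughput, and success or failure, then logs them. Forwarding these numbers to Prometheus, Grafana, or another backend is left out, and the stage name and workload are illustrative.

```python
import logging
import time
from contextlib import contextmanager

logging.basicConfig(level=logging.INFO, format="%(message)s")
log = logging.getLogger("etl")
metrics: dict = {}


@contextmanager
def stage(name: str):
    """Time one ETL stage; the caller records how many rows it handled."""
    stats = {"rows": 0}
    status = "ok"
    start = time.perf_counter()
    try:
        yield stats
    except Exception:
        status = "failed"  # still recorded below, then re-raised
        raise
    finally:
        seconds = time.perf_counter() - start
        rate = stats["rows"] / seconds if seconds > 0 else float("inf")
        metrics[name] = {"status": status, "rows": stats["rows"],
                         "seconds": seconds, "rows_per_s": rate}
        log.info("stage=%s status=%s rows=%d seconds=%.4f rows_per_s=%.0f",
                 name, status, stats["rows"], seconds, rate)


with stage("transform") as s:
    doubled = [x * 2 for x in range(10_000)]
    s["rows"] = len(doubled)
```

Recording failures in the same structure as successes is what makes "frequency of failures" measurable over many runs.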

6. Use Scalable Storage and Compute Resources

Cloud-native ETL tools like AWS Glue, Google Cloud Dataflow, and Azure Data Factory offer auto-scaling capabilities that adjust resources based on workload. Leveraging these platforms helps ensure you’re only using (and paying for) what you need.

Additionally:

Store intermediate files in cloud storage (e.g., Amazon S3)

Use distributed compute engines like Spark or Dask

Separate compute and storage to scale each independently

Conclusion

A fast, reliable, and scalable ETL pipeline is crucial to building robust data infrastructure in 2025 and beyond. By designing modular systems, embracing incremental and parallel processing, offloading tasks to the database, and continuously monitoring performance, data teams can optimize their pipelines for both current and future needs.

In the era of big data and real-time analytics, even small performance improvements in your ETL workflow can lead to major gains in efficiency and insight delivery. Start optimizing today to unlock the full potential of your data pipeline.


Exploring the Role of Azure Data Factory in Hybrid Cloud Data Integration

Introduction

In today’s digital landscape, organizations increasingly rely on hybrid cloud environments to manage their data. A hybrid cloud setup combines on-premises data sources, private clouds, and public cloud platforms like Azure, AWS, or Google Cloud. Managing and integrating data across these diverse environments can be complex.

This is where Azure Data Factory (ADF) plays a crucial role. ADF is a cloud-based data integration service that enables seamless movement, transformation, and orchestration of data across hybrid cloud environments.

In this blog, we’ll explore how Azure Data Factory simplifies hybrid cloud data integration, key use cases, and best practices for implementation.

1. What is Hybrid Cloud Data Integration?

Hybrid cloud data integration is the process of connecting, transforming, and synchronizing data between:

✅ On-premises data sources (e.g., SQL Server, Oracle, SAP)

✅ Cloud storage (e.g., Azure Blob Storage, Amazon S3)

✅ Databases and data warehouses (e.g., Azure SQL Database, Snowflake, BigQuery)

✅ Software-as-a-Service (SaaS) applications (e.g., Salesforce, Dynamics 365)

The goal is to create a unified data pipeline that enables real-time analytics, reporting, and AI-driven insights while ensuring data security and compliance.

2. Why Use Azure Data Factory for Hybrid Cloud Integration?

Azure Data Factory (ADF) provides a scalable, serverless solution for integrating data across hybrid environments. Some key benefits include:

✅ 1. Seamless Hybrid Connectivity

ADF offers more than 90 built-in connectors covering on-premises, cloud, and SaaS sources.

It enables secure data movement using Self-Hosted Integration Runtime to access on-premises data sources.

✅ 2. ETL & ELT Capabilities

ADF allows you to design Extract, Transform, and Load (ETL) or Extract, Load, and Transform (ELT) pipelines.

Integrates with Azure Data Lake Storage and Azure Synapse Analytics, whose outputs can feed Power BI for analytics.

✅ 3. Scalability & Performance

With the Azure integration runtime, ADF is serverless and scales compute with the data workload; Self-Hosted IR capacity depends on the machines you provision for it.

It supports parallel data processing for better performance.

✅ 4. Low-Code & Code-Based Options

ADF provides a visual pipeline designer for easy drag-and-drop development.

It also supports custom transformations using Azure Functions, Databricks, and SQL scripts.

✅ 5. Security & Compliance

Uses Azure Key Vault for secure credential management.

Supports private endpoints, network security, and role-based access control (RBAC).

Complies with GDPR, HIPAA, and ISO security standards.

3. Key Components of Azure Data Factory for Hybrid Cloud Integration

1️⃣ Linked Services

Acts as a connection between ADF and data sources (e.g., SQL Server, Blob Storage, SFTP).

2️⃣ Integration Runtimes (IR)

Azure IR: For cloud-to-cloud data movement and for running Mapping Data Flows.

Self-Hosted IR: For on-premises to cloud integration.

Azure-SSIS IR: To run SQL Server Integration Services (SSIS) packages in ADF.

3️⃣ Data Flows

Mapping Data Flow: Visually designed, code-free transformations that ADF executes on managed Apache Spark clusters.

Wrangling Data Flow: Excel-like Power Query transformation.

4️⃣ Pipelines

Orchestrate complex workflows using different activities like copy, transformation, and execution.

5️⃣ Triggers

Automate pipeline execution using schedule-based, event-based, or tumbling window triggers.
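Tumbling windows are the least intuitive of the three trigger types. They divide time into fixed-size, non-overlapping, contiguous intervals aligned to the trigger's start time, and a window is processed only once it has closed. The Python sketch below reproduces that arithmetic for illustration; it is not ADF's scheduler, and the six-hour size and dates are hypothetical. In ADF, a pipeline can read the window bounds through the `@trigger().outputs.windowStartTime` and `@trigger().outputs.windowEndTime` expressions.

```python
from datetime import datetime, timedelta, timezone


def tumbling_windows(start: datetime, size: timedelta, now: datetime) -> list:
    """Return (window_start, window_end) for every window fully closed by `now`.

    Windows are fixed-size, non-overlapping, and contiguous, all aligned to `start`.
    """
    windows = []
    window_start = start
    while window_start + size <= now:
        windows.append((window_start, window_start + size))
        window_start += size
    return windows


utc = timezone.utc
for ws, we in tumbling_windows(datetime(2024, 6, 1, tzinfo=utc), timedelta(hours=6),
                               datetime(2024, 6, 1, 20, 30, tzinfo=utc)):
    print(ws.isoformat(), "->", we.isoformat())
```

Because windows are anchored to a start time that may lie in the past, the same arithmetic explains why tumbling window triggers are used for backfills: every closed window since the start gets its own run.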

4. Common Use Cases of Azure Data Factory in Hybrid Cloud

🔹 1. Migrating On-Premises Data to Azure

Extracts data from SQL Server, Oracle, or SAP and loads it into Azure SQL Database or Azure Synapse Analytics.

🔹 2. Near-Real-Time Data Synchronization

Keeps on-premises ERP, CRM, or legacy databases in sync with cloud applications through frequent or event-triggered incremental loads.

🔹 3. ETL for Cloud Data Warehousing

Moves structured and unstructured data to Azure Synapse Analytics or Snowflake for analytics.

🔹 4. IoT and Big Data Integration

Collects IoT sensor data, processes it in Azure Data Lake, and visualizes it in Power BI.

🔹 5. Multi-Cloud Data Movement

Copies data from Amazon S3 or Google BigQuery into Azure Blob Storage.

5. Best Practices for Hybrid Cloud Integration Using ADF

✅ Use Self-Hosted IR for Secure On-Premises Data Access

✅ Optimize Pipeline Performance using partitioning and parallel execution

✅ Monitor Pipelines using Azure Monitor and Log Analytics

✅ Secure Data Transfers with Private Endpoints & Key Vault

✅ Automate Data Workflows with Triggers & Parameterized Pipelines
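To make the Self-Hosted IR and Key Vault practices concrete, the Python dictionary below follows the general shape of an ADF linked service for an on-premises SQL Server: `connectVia` routes it through a Self-Hosted IR, and the password is an `AzureKeyVaultSecret` reference instead of a literal. All names are hypothetical and the exact `typeProperties` vary by connector and version. The `lint_linked_service` function is an illustrative check, not an ADF feature.

```python
onprem_sql = {
    "name": "OnPremSqlServer",
    "properties": {
        "type": "SqlServer",
        "typeProperties": {
            "connectionString": "Data Source=sql01.corp.local;Initial Catalog=Sales;"
                                "Integrated Security=False;User ID=adf_reader;",
            "password": {
                "type": "AzureKeyVaultSecret",
                "store": {"referenceName": "CorpKeyVault", "type": "LinkedServiceReference"},
                "secretName": "sql01-adf-reader",
            },
        },
        "connectVia": {"referenceName": "CorpSelfHostedIR",
                       "type": "IntegrationRuntimeReference"},
    },
}


def lint_linked_service(ls: dict, self_hosted_irs: set) -> list:
    """Flag on-premises linked services that skip a Self-Hosted IR or Key Vault."""
    problems = []
    props = ls["properties"]
    type_props = props.get("typeProperties", {})
    if props.get("connectVia", {}).get("referenceName") not in self_hosted_irs:
        problems.append("not routed through a Self-Hosted IR")
    password = type_props.get("password")
    if password is not None and not (isinstance(password, dict)
                                     and password.get("type") == "AzureKeyVaultSecret"):
        problems.append("password is not an Azure Key Vault secret reference")
    if "password=" in type_props.get("connectionString", "").lower():
        problems.append("password embedded in the connection string")
    return problems


print(lint_linked_service(onprem_sql, self_hosted_irs={"CorpSelfHostedIR"}))
```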

6. Conclusion

Azure Data Factory plays a critical role in hybrid cloud data integration by providing secure, scalable, and automated data pipelines. Whether you are migrating on-premises data, synchronizing real-time data, or integrating multi-cloud environments, ADF simplifies complex ETL processes with low-code and serverless capabilities.

By leveraging ADF’s integration runtimes, automation, and security features, organizations can build a resilient, high-performance hybrid cloud data ecosystem.

WEBSITE: https://www.ficusoft.in/azure-data-factory-training-in-chennai/

Azure AI Engineer Course in Bangalore | Azure AI Engineer

The Significance of AI Pipelines in Azure Machine Learning

Introduction

In today's data-driven world, AI pipelines play a crucial role in automating and streamlining machine learning (ML) workflows. Azure Machine Learning (Azure ML) provides a robust platform for building, managing, and deploying these pipelines, helping organizations run data processing, model training, evaluation, and deployment efficiently. Pipelines improve productivity, scalability, and reliability while reducing manual effort.

What Are AI Pipelines in Azure Machine Learning?

An AI pipeline in Azure ML is a structured sequence of steps that automates various stages of a machine learning workflow. These steps may include data ingestion, preprocessing, feature engineering, model training, validation, and deployment. By automating these tasks, organizations can ensure consistency, repeatability, and scalability in their ML operations.

Azure ML pipelines can be built with the Azure Machine Learning SDK, the Azure CLI, or Azure Machine Learning studio, making them accessible to both data scientists and engineers.
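The idea of a pipeline as an ordered sequence of steps can be illustrated without any Azure dependency. The sketch below is plain Python, not the Azure Machine Learning SDK: each hypothetical `Step` wraps a function, and `Pipeline.execute` feeds each step's output into the next while logging the order. The toy `ingest`, `preprocess`, `train`, and `evaluate` functions are stand-ins for real stages.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Step:
    """One pipeline stage: a name and a function from the previous output to the next."""
    name: str
    run: Callable[[Any], Any]


@dataclass
class Pipeline:
    steps: list
    log: list = field(default_factory=list)

    def execute(self, data: Any = None) -> Any:
        # Run the steps in order, passing each step's output to the next step.
        for step in self.steps:
            data = step.run(data)
            self.log.append(step.name)
        return data


# Toy stand-ins for real stages (hypothetical).
def ingest(_):
    return [1.0, 2.0, None, 4.0]  # raw data with a missing value


def preprocess(rows):
    return [r for r in rows if r is not None]  # drop missing values


def train(rows):
    return {"mean": sum(rows) / len(rows)}  # the "model" is just the mean


def evaluate(model):
    return {**model, "passed": model["mean"] > 0}


pipeline = Pipeline([
    Step("ingest", ingest),
    Step("preprocess", preprocess),
    Step("train", train),
    Step("evaluate", evaluate),
])
result = pipeline.execute()
print(pipeline.log, result)
```

Because each `Step` is an independent object, the same step could be dropped into another `Pipeline`, which mirrors the idea of modular, reusable stages.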

Key Benefits of AI Pipelines in Azure Machine Learning

1. Automation and Efficiency

AI pipelines automate repetitive tasks, reducing manual intervention and human errors. Once a pipeline is defined, it can be triggered automatically whenever new data is available, ensuring a seamless workflow from data preparation to model deployment.

2. Scalability and Flexibility

Azure ML pipelines allow organizations to scale their machine learning operations effortlessly. By leveraging Azure's cloud infrastructure, businesses can process large datasets and train complex models using distributed computing resources.

3. Reproducibility and Version Control

Machine learning projects often require multiple iterations and fine-tuning. With AI pipelines, each step of the ML process is tracked and versioned, allowing data scientists to reproduce experiments, compare models, and maintain consistency across different runs.
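One way to picture step-level tracking is to give each step run a deterministic fingerprint derived from its name, parameters, and input data, so identical runs get identical IDs and any change produces a new one. This is a hypothetical helper for illustration, not how Azure ML records runs internally; it hashes a key-sorted JSON encoding with SHA-256 so that parameter order does not matter.

```python
import hashlib
import json


def data_digest(rows) -> str:
    """SHA-256 of a JSON-serializable dataset (hypothetical helper)."""
    return hashlib.sha256(json.dumps(rows, sort_keys=True).encode("utf-8")).hexdigest()


def step_fingerprint(step_name: str, params: dict, input_digest: str) -> str:
    """Same step name, parameters, and input data always give the same ID."""
    # sort_keys=True makes the encoding independent of dict key order.
    payload = json.dumps(
        {"step": step_name, "params": params, "input": input_digest}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


digest = data_digest([1, 2, 3])
run_a = step_fingerprint("train", {"lr": 0.1, "epochs": 5}, digest)
run_b = step_fingerprint("train", {"epochs": 5, "lr": 0.1}, digest)  # same params, new order
run_c = step_fingerprint("train", {"lr": 0.2, "epochs": 5}, digest)  # changed parameter
print(run_a == run_b, run_a == run_c)
```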

4. Modular and Reusable Workflows

AI pipelines promote a modular approach, where different components (e.g., data processing, model training) are defined as independent steps. These steps can be reused in different projects, saving time and effort.

5. Seamless Integration with Azure Ecosystem

Azure ML pipelines integrate natively with other Azure services such as:

Azure Data Factory (for data ingestion and transformation)

Azure Databricks (for big data processing)

Azure DevOps (for CI/CD in ML models)

Azure Kubernetes Service (AKS) (for model deployment)

These integrations make Azure ML pipelines a powerful end-to-end solution for AI-driven businesses.

6. Continuous Model Training and Deployment (MLOps)

Azure ML pipelines support MLOps (Machine Learning Operations) by enabling continuous integration and deployment (CI/CD) of ML models. This ensures that models remain up-to-date with the latest data and can be retrained and redeployed efficiently.
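Continuous training usually needs a rule for when to retrain. The sketch below is a hypothetical policy, not an Azure ML feature: retrain when at least `min_new_rows` new records have arrived or the current model is older than `max_age`. Both thresholds are illustrative defaults.

```python
from datetime import datetime, timedelta


def should_retrain(
    new_rows: int,
    last_trained: datetime,
    now: datetime,
    min_new_rows: int = 10_000,
    max_age: timedelta = timedelta(days=30),
) -> bool:
    """Hypothetical policy: retrain on enough new data or when the model is stale."""
    enough_new_data = new_rows >= min_new_rows
    too_old = now - last_trained >= max_age
    return enough_new_data or too_old


now = datetime(2024, 3, 1)
print(should_retrain(12_000, now - timedelta(days=2), now))  # enough new data
print(should_retrain(50, now - timedelta(days=2), now))      # neither condition holds
print(should_retrain(50, now - timedelta(days=45), now))     # model is too old
```

In a pipeline setting, a check like this would typically run on a schedule and start the training pipeline only when it returns true.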

7. Monitoring and Governance

With Azure ML Pipelines, organizations can monitor each stage of the ML lifecycle using built-in logging and auditing features. This ensures transparency, compliance, and better management of AI models in production.

Use Cases of AI Pipelines in Azure Machine Learning

Predictive Maintenance – Automating data collection, anomaly detection, and predictive modeling for industrial machinery.

Fraud Detection – Continuously training fraud detection models based on real-time transaction data.

Healthcare Diagnostics – Automating image preprocessing, AI model inference, and deployment for medical diagnosis.

Customer Segmentation – Processing large datasets and applying clustering techniques to identify customer behavior patterns.

Natural Language Processing (NLP) – Automating text processing, sentiment analysis, and chatbot training.

Conclusion

AI pipelines in Azure Machine Learning provide a scalable, automated, and efficient approach to managing machine learning workflows. By leveraging Azure’s cloud-based infrastructure, organizations can streamline their AI development process, improve model accuracy, and accelerate deployment. With benefits like automation, reproducibility, MLOps integration, and monitoring, AI pipelines are essential for modern AI-driven businesses looking to maximize their data insights and innovation potential.

Visualpath stands out as the best online software training institute in Hyderabad.

For More Information about the Azure AI Engineer Online Training

Contact Call/WhatsApp: +91-7032290546

Visit: https://www.visualpath.in/informatica-cloud-training-in-hyderabad.html

#Ai 102 Certification#Azure AI Engineer Certification#Azure AI-102 Training in Hyderabad#Azure AI Engineer Training#Azure AI Engineer Online Training#Microsoft Azure AI Engineer Training#Microsoft Azure AI Online Training#Azure AI-102 Course in Hyderabad#Azure AI Engineer Training in Ameerpet#Azure AI Engineer Online Training in Bangalore#Azure AI Engineer Training in Chennai#Azure AI Engineer Course in Bangalore

Microsoft Azure Managed Services: Empowering Businesses with Expert Cloud Solutions

As businesses navigate the complexities of digital transformation, Microsoft Azure Managed Services emerge as a crucial tool for leveraging the potential of cloud technology. These services combine advanced infrastructure, automation, and expert support to streamline operations, enhance security, and optimize costs. For organizations seeking to maximize the benefits of Azure, partnering with a trusted Managed Service Provider (MSP) like Goognu ensures seamless integration and efficient management of Azure environments.

This article explores the features, benefits, and expertise offered by Goognu in delivering customized Azure solutions.

What Are Microsoft Azure Managed Services?

Microsoft Azure Managed Services refer to the specialized support and tools provided to organizations using the Azure cloud platform. These services enable businesses to effectively manage their Azure applications, infrastructure, and resources while ensuring regulatory compliance and data security.

Azure Managed Service Providers (MSPs) like Goognu specialize in delivering tailored solutions, offering businesses a wide range of support, from deploying virtual machines to optimizing complex data services.

Why Choose Goognu for Azure Managed Services?

With over a decade of expertise in cloud solutions, Goognu stands out as a leading provider of Microsoft Azure Managed Services. The company’s technical acumen, customer-centric approach, and innovative strategies ensure that businesses can fully harness the power of Azure.

Key Strengths of Goognu

Extensive Experience: With more than 10 years in cloud management, Goognu has built a reputation for delivering reliable and efficient Azure solutions across industries.

Certified Expertise: Goognu's team includes certified cloud professionals who bring in-depth knowledge of Azure tools and best practices to every project.

Tailored Solutions: Recognizing the unique needs of every business, Goognu designs and implements solutions that align with individual goals and challenges.

Comprehensive Azure Services Offered by Goognu

Goognu provides a holistic suite of services under the umbrella of Microsoft Azure Managed Services. These offerings address a wide range of operational and strategic needs, empowering businesses to achieve their objectives efficiently.

1. Azure Infrastructure Management

Goognu manages critical Azure components such as:

Virtual Machines

Storage Accounts

Virtual Networks

Load Balancers

Azure App Services

By handling provisioning, configuration, and ongoing optimization, Goognu ensures that infrastructure remains reliable and performant.

2. Data Services and Analytics

Goognu provides expert support for Azure data tools, including:

Azure SQL Database

Azure Cosmos DB

Azure Data Factory

Azure Databricks

These services help businesses integrate, migrate, and analyze their data while maintaining governance and security.

3. Security and Compliance

Security is paramount in cloud environments. Goognu implements robust measures to protect Azure infrastructures, such as:

Azure Active Directory for Identity Management

Threat Detection and Vulnerability Management

Network Security Groups

Compliance Frameworks

4. Performance Monitoring and Optimization

Using tools like Nagios, Zabbix, and Azure Monitor, Goognu tracks performance metrics, system health, and resource usage. This ensures that Azure environments are optimized for scalability, availability, and efficiency.

5. Disaster Recovery Solutions

With Azure Site Recovery, Goognu designs and implements strategies to minimize downtime and data loss during emergencies.

6. Application Development and Deployment

Goognu supports businesses in building and deploying applications in Azure, including:

Cloud-Native Applications

Containerized Applications (Azure Kubernetes Service)

Serverless Applications (Azure Functions)

Traditional Applications on Azure App Services

7. Cost Optimization

Cost management is critical for long-term success in the cloud. Goognu helps businesses analyze resource usage, rightsize instances, and leverage Azure cost management tools to minimize expenses without sacrificing performance.

Benefits of Microsoft Azure Managed Services

Adopting Azure Managed Services with Goognu provides several transformative advantages:

1. Streamlined Operations

Automation and expert support simplify routine tasks, reducing the burden on in-house IT teams.

2. Enhanced Security

Advanced security measures protect data and applications from evolving threats, ensuring compliance with industry regulations.

3. Cost Efficiency

With a focus on resource optimization, businesses can achieve significant cost savings while maintaining high performance.

4. Improved Performance

Proactive monitoring and troubleshooting eliminate bottlenecks, ensuring smooth and efficient operations.

5. Scalability and Flexibility

Azure’s inherent scalability, combined with Goognu’s expertise, enables businesses to adapt to changing demands effortlessly.

6. Focus on Core Activities

By outsourcing cloud management to Goognu, businesses can focus on innovation and growth instead of day-to-day operations.

Goognu’s Approach to Azure Managed Services

Collaboration and Strategy

Goognu begins by understanding a business’s specific needs and goals. Its team of experts collaborates closely with clients to develop strategies that integrate Azure seamlessly into existing IT environments.

Customized Solutions

From infrastructure setup to advanced analytics, Goognu tailors its services to align with the client’s operational and strategic objectives.

Continuous Support

Goognu provides 24/7 support, ensuring that businesses can resolve issues quickly and maintain uninterrupted operations.

Unlocking Innovation with Azure

Goognu empowers businesses to accelerate innovation using Azure’s cutting-edge capabilities. By leveraging cloud-native development, AI/ML operations, IoT integration, and workload management, Goognu helps clients stay ahead in competitive markets.

Why Businesses Choose Goognu

Proven Expertise

With a decade of experience in Microsoft Azure Managed Services, Goognu delivers results that exceed expectations.

Customer-Centric Approach

Goognu prioritizes customer satisfaction, offering personalized solutions and unwavering support.

Advanced Capabilities

From AI/ML to IoT, Goognu brings advanced expertise to help businesses unlock new opportunities with Azure.

Conclusion

Microsoft Azure Managed Services offer unparalleled opportunities for businesses to optimize their operations, enhance security, and achieve cost efficiency. By partnering with a trusted provider like Goognu, organizations can unlock the full potential of Azure and focus on their strategic goals.

With a proven track record and unmatched expertise, Goognu delivers comprehensive Azure solutions tailored to the unique needs of its clients. Whether it’s infrastructure management, data analytics, or cost optimization, Goognu ensures businesses can thrive in today’s digital landscape.

Transform your cloud journey with Goognu’s Microsoft Azure Managed Services. Contact us today to discover how we can help you achieve your business goals.

Leverage the power of Microsoft Azure Data Factory v2 to build hybrid data solutions.

Key Features

Combine the power of Azure Data Factory v2 and SQL Server Integration Services

Design and enhance performance and scalability of a modern ETL hybrid solution

Interact with the loaded data in data warehouse and data lake using Power BI

Book Description

ETL is one of the essential techniques in data processing. Given that data is everywhere, ETL will always be a vital process for handling data from different sources. Hands-On Data Warehousing with Azure Data Factory starts with the basic concepts of data warehousing and the ETL process. You will learn how Azure Data Factory and SSIS can be used to understand the key components of an ETL solution. You will go through different services offered by Azure that can be used by ADF and SSIS, such as Azure Data Lake Analytics, Machine Learning, and Databricks Spark, with the help of practical examples. You will explore how to design and implement ETL hybrid solutions using different integration services with a step-by-step approach. Once you get to grips with all this, you will use Power BI to interact with data coming from different sources in order to reveal valuable insights. By the end of this book, you will not only learn how to build your own ETL solutions but also address the key challenges that are faced while building them.

What you will learn

Understand the key components of an ETL solution using Azure Data Factory and Integration Services

Design the architecture of a modern ETL hybrid solution

Implement ETL solutions for both on-premises and Azure data

Improve the performance and scalability of your ETL solution

Gain thorough knowledge of new capabilities and features added to Azure Data Factory and Integration Services

Who this book is for

This book is for you if you are a software professional who develops and implements ETL solutions using Microsoft SQL Server or Azure cloud. It will be an added advantage if you are a software engineer, DW/ETL architect, or ETL developer, and know how to create a new ETL implementation or enhance an existing one with ADF or SSIS.

Table of Contents

Azure Data Factory

Getting Started with Our First Data Factory

ADF and SSIS in PaaS

Azure Data Lake

Machine Learning on the Cloud

Sparks with Databrick

Power BI reports

ASIN: B07DGJSPYK

Publisher: Packt Publishing; 1st edition (31 May 2018)

Language: English

File size: 32536 KB

Text-to-Speech: Enabled

Screen Reader: Supported

Enhanced typesetting: Enabled

X-Ray: Not Enabled

Word Wise: Not Enabled

Print length: 371 pages

Microsoft Fabric Training | Microsoft Fabric Course

Using Data Flow in Azure Data Factory in Microsoft Fabric Course

Azure Data Factory (ADF) is a powerful cloud-based data integration service that allows businesses to build scalable data pipelines. One of its key features is Data Flow, which lets users visually design and manage complex data transformation processes. With the rise of modern data integration platforms such as Microsoft Fabric, organizations are increasingly leveraging Azure Data Factory to handle big data transformations efficiently. If you're considering expanding your knowledge in this area, enrolling in a Microsoft Fabric training course is an excellent way to learn how to integrate tools like ADF effectively.

What is Data Flow in Azure Data Factory?

Data Flow in Azure Data Factory enables users to transform and manipulate data at scale. Unlike traditional data pipelines, which focus on moving data from one point to another, Data Flows allow businesses to design transformation logic that modifies the data as it passes through the pipeline. You can apply various transformation activities such as filtering, aggregating, joining, and more. These activities are performed visually, which makes it easier to design complex workflows without writing any code.

Microsoft Fabric training can help you understand how to streamline this process further and make it work with other Microsoft solutions. By connecting your Azure Data Factory Data Flows to Microsoft Fabric, you can take advantage of its analytics and data management capabilities.

Key Components of Data Flow

There are several important components in Azure Data Factory’s Data Flow feature, which you will also encounter in Microsoft Fabric Training in Hyderabad:

Source and Sink: These are the starting and ending points of the data transformation pipeline. The source is where the data originates, and the sink is where the transformed data is stored, whether in a data lake, a database, or any other storage service.

Transformation: Azure Data Factory offers a variety of transformations such as sorting, filtering, aggregating, and conditional splitting. These transformations can be chained together to create a custom flow that meets your specific business requirements.

Mapping Data Flows: Mapping Data Flows are visual representations of how the data will move and transform across various stages in the pipeline. This simplifies the design and maintenance of complex pipelines, making it easier to understand and modify the workflow.

Integration with Azure Services: One of the key benefits of using Azure Data Factory’s Data Flows is the tight integration with other Azure services such as Azure SQL Database, Data Lakes, and Blob Storage. Microsoft Fabric Training covers these integrations and helps you understand how to work seamlessly with these services.
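The source, transformation, and sink stages above can be pictured as a chain of functions over rows. The following plain-Python analogy is not how ADF executes data flows (those run on managed Spark clusters); it only shows the shape of a flow that filters invalid rows, aggregates by a key, applies a conditional split, and writes one branch to a sink. The data and the threshold of 100 are made up for illustration.

```python
from collections import defaultdict

# Hypothetical rows standing in for the source dataset.
source = [
    {"region": "North", "product": "A", "amount": 120.0},
    {"region": "South", "product": "A", "amount": 80.0},
    {"region": "North", "product": "B", "amount": -5.0},  # invalid row
    {"region": "North", "product": "A", "amount": 30.0},
]


def filter_rows(rows, predicate):
    """Filter transformation: keep rows that satisfy the predicate."""
    return [r for r in rows if predicate(r)]


def aggregate(rows, key, value):
    """Aggregate transformation: sum `value` per distinct `key`."""
    totals = defaultdict(float)
    for r in rows:
        totals[r[key]] += r[value]
    return [{key: k, "total": t} for k, t in sorted(totals.items())]


def conditional_split(rows, predicate):
    """Conditional split: route rows to one of two output streams."""
    matched = [r for r in rows if predicate(r)]
    others = [r for r in rows if not predicate(r)]
    return matched, others


sink = []  # stands in for a sink such as a table or a file
valid = filter_rows(source, lambda r: r["amount"] > 0)
by_region = aggregate(valid, "region", "amount")
large, small = conditional_split(by_region, lambda r: r["total"] >= 100)
sink.extend(large)
print(sink)
```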

Benefits of Using Data Flow in Azure Data Factory

Data Flow in Azure Data Factory provides several advantages over traditional ETL tools, particularly when combined with the skills taught in a Microsoft Fabric course:

Scalability: Data flows run on Spark clusters that Azure provisions and manages, and you can size the compute (for example, the core count configured on the integration runtime) to match your data volumes. This makes the service suitable for organizations of all sizes, from startups to enterprises, without managing the underlying infrastructure yourself.

Cost-Effectiveness: You only pay for what you use. With Data Flow, you can easily track resource consumption and optimize your processes to reduce costs. When combined with Microsoft Fabric Training, you’ll learn how to make cost-effective decisions for data integration and transformation.

Real-Time Analytics: By connecting ADF Data Flows to Microsoft Fabric, businesses can enable near-real-time analytics, turning large datasets into actionable insights within minutes of the data arriving. This is particularly valuable for industries such as finance, healthcare, and retail, where timely decisions are critical.

Best Practices for Implementing Data Flow

Implementing Data Flow in Azure Data Factory requires planning and strategy, particularly when scaling for large data sets. Here are some best practices, which are often highlighted in Microsoft Fabric Training in Hyderabad:

Optimize Source Queries: Use filters and pre-aggregations to limit the amount of data being transferred. This helps reduce processing time and costs.

Monitor Performance: Utilize Azure’s monitoring tools to keep an eye on the performance of your Data Flows. Azure Data Factory provides built-in diagnostics and monitoring that allow you to quickly identify bottlenecks or inefficiencies.

Use Data Caching: Caching intermediate steps of your data flow can significantly improve performance, especially when working with large datasets.

Modularize Pipelines: Break down complex transformations into smaller, more manageable modules. This approach makes it easier to debug and maintain your data flows.

Integration with Microsoft Fabric: Use Microsoft Fabric to further enhance your data flow capabilities. Microsoft Fabric Training will guide you through this integration, teaching you how to make the most of both platforms.
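The first practice, filtering and pre-aggregating at the source, can be demonstrated with Python's built-in `sqlite3` module standing in for a source database. The sketch compares pulling every row and aggregating on the client with pushing the `WHERE` and `GROUP BY` into the source query; both produce the same totals, but the second transfers fewer rows. The `sales` table and its values are hypothetical.

```python
import sqlite3

# In-memory SQLite database standing in for the source system (hypothetical data).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, year INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [("North", 2023, 10.0), ("North", 2024, 20.0), ("South", 2024, 5.0), ("South", 2022, 7.0)],
)

# Without pushdown: transfer every row, then filter and aggregate on the client.
all_rows = conn.execute("SELECT region, year, amount FROM sales").fetchall()
client_totals = {}
for region, year, amount in all_rows:
    if year == 2024:
        client_totals[region] = client_totals.get(region, 0.0) + amount

# With pushdown: the source filters and pre-aggregates, so only totals are transferred.
pushed = conn.execute(
    "SELECT region, SUM(amount) FROM sales WHERE year = ? GROUP BY region ORDER BY region",
    (2024,),
).fetchall()

print(f"{len(all_rows)} rows transferred without pushdown, {len(pushed)} with pushdown")
conn.close()
```

On real sources the gap grows with table size, which is where the time and cost savings come from.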

Conclusion

Using Data Flow in Azure Data Factory offers businesses a robust and scalable way to transform and manipulate data across various stages. When integrated with other Microsoft tools, particularly Microsoft Fabric, it provides powerful analytics and real-time insights that are critical in today’s fast-paced business environment. Through hands-on experience with a Microsoft Fabric Course, individuals and teams can gain the skills needed to optimize these data transformation processes, making them indispensable in today’s data-driven world.

Whether you are a data engineer or a business analyst, mastering Azure Data Factory and its Data Flow features through Microsoft Fabric Training will provide you with a solid foundation in data integration and transformation. Consider enrolling in Microsoft Fabric Training in Hyderabad to advance your skills and take full advantage of this powerful toolset.

Visualpath is the Leading and Best Software Online Training Institute in Hyderabad. Avail complete Microsoft Fabric Training Worldwide. You will get the best course at an affordable cost.

Attend Free Demo

Call on — +91–9989971070.

Visit https://www.visualpath.in/online-microsoft-fabric-training.html

#Microsoft Fabric Training#Microsoft Fabric Course#Microsoft Fabric Training In Hyderabad#Microsoft Fabric Certification Course#Microsoft Fabric Course in Hyderabad#Microsoft Fabric Online Training Course#Microsoft Fabric Online Training Institute

ARM template documentation

As more firms transfer their activities to the cloud, efficient resource management becomes increasingly important. Azure Resource Manager (ARM) templates are critical to this management process, allowing users to deploy, manage, and organize resources in a consistent and repeatable manner. Whether you're taking Azure training, Azure DevOps training, or Azure Data Factory training, understanding ARM templates is critical. This article will go over the principles of ARM templates, including what they are, their benefits, and how to utilize them successfully.

What are Azure Resource Manager Templates?

Azure Resource Manager (ARM) templates are JSON files that define the infrastructure and configuration of your Azure solution. They are declarative: you specify what you want to deploy, and Azure Resource Manager works out how to create it. ARM templates let you deploy multiple resources in a single, coordinated operation.

Key Components of an ARM Template

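Schema ($schema): Identifies the version of the template language and defines the structure of the template. It's a mandatory element and is usually the first property in the template.

Content Version (contentVersion): Specifies the version of the template, such as 1.0.0.0. This required value helps in tracking changes and managing versions.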

Parameters: These are values you can pass to the template to customize the deployment. Parameters make templates reusable and flexible.

Variables: These are used to simplify your template by defining values that you use multiple times.

Resources: The actual Azure services that you are deploying. This section is the heart of the template.

Outputs: Values that are returned after the deployment is complete. These can be used for further processing or as inputs to other deployments.
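Of these sections, `$schema`, `contentVersion`, and `resources` are required at the top level. As a hedged illustration, the Python function below performs a shallow check for those keys and for a `contentVersion` in the usual n.n.n.n form. It is a sketch, not a substitute for real validation such as `az deployment group validate` or the ARM template test toolkit.

```python
import json
import re

# Top-level properties an ARM template must contain.
REQUIRED_KEYS = ("$schema", "contentVersion", "resources")
# contentVersion is conventionally four dot-separated numbers, e.g. "1.0.0.0".
VERSION_PATTERN = re.compile(r"^\d+\.\d+\.\d+\.\d+$")


def check_template(text: str) -> list:
    """Return a list of problems found in ARM template JSON text (empty if none)."""
    try:
        template = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg} (line {exc.lineno})"]
    if not isinstance(template, dict):
        return ["template must be a JSON object"]
    problems = [f"missing required property '{k}'" for k in REQUIRED_KEYS if k not in template]
    version = template.get("contentVersion")
    if version is not None and not (isinstance(version, str) and VERSION_PATTERN.match(version)):
        problems.append(f"contentVersion {version!r} is not in n.n.n.n form")
    return problems


minimal = json.dumps({
    "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
    "contentVersion": "1.0.0.0",
    "resources": [],
})
print(check_template(minimal))              # no problems expected
print(check_template('{"resources": []}'))  # two missing properties
```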

Benefits of Using ARM Templates

Consistency: ARM templates ensure that your deployments are consistent. Every time you deploy a resource, it will be deployed in the same way.

Automation: By using ARM templates, you can automate the deployment of your resources, reducing the potential for human error.

Reusability: Templates can be reused across different environments (development, testing, production), ensuring consistency and saving time.

Versioning: ARM templates can be versioned and stored in source control, allowing you to track changes and roll back if necessary.

Collaboration: With ARM templates, teams can collaborate more effectively by sharing and reviewing templates.

Creating an ARM Template

Creating an ARM template involves defining the resources you want to deploy and their configurations. Let's go through a simple example of creating an ARM template to deploy a storage account.
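As a sketch of such an example, the Python function below builds a minimal template for one storage account and prints it as JSON. It assumes a `Standard_LRS`, `StorageV2` account and the storage API version `2022-09-01`; the parameter names `storageAccountName` and `location` are illustrative choices, and a newer API version or extra properties may suit your environment better.

```python
import json

SCHEMA = "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#"


def storage_account_template() -> dict:
    """Minimal ARM template for one general-purpose v2 storage account."""
    return {
        "$schema": SCHEMA,
        "contentVersion": "1.0.0.0",
        "parameters": {
            # Storage account names must be 3-24 lowercase letters and digits.
            "storageAccountName": {"type": "string", "minLength": 3, "maxLength": 24},
            "location": {"type": "string", "defaultValue": "[resourceGroup().location]"},
        },
        "resources": [
            {
                "type": "Microsoft.Storage/storageAccounts",
                "apiVersion": "2022-09-01",
                "name": "[parameters('storageAccountName')]",
                "location": "[parameters('location')]",
                "sku": {"name": "Standard_LRS"},
                "kind": "StorageV2",
            }
        ],
        "outputs": {
            # Returns the full resource ID so later deployments can reference the account.
            "storageAccountId": {
                "type": "string",
                "value": "[resourceId('Microsoft.Storage/storageAccounts', parameters('storageAccountName'))]",
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(storage_account_template(), indent=2))
```

Saved as `azuredeploy.json`, the output could then be deployed with something like `az deployment group create --resource-group <resource-group> --template-file azuredeploy.json --parameters storageAccountName=<name>`.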

Deploying an ARM Template

Once you've created your ARM template, you can deploy it using various methods:

Azure Portal: You can upload the template directly in the Azure Portal and deploy it.

Azure PowerShell: Use the New-AzResourceGroupDeployment cmdlet to deploy the template.

Azure CLI: Use the az deployment group create command to deploy the template.

Azure DevOps: Integrate ARM template deployments into your CI/CD pipelines, enabling automated and repeatable deployments.

Best Practices for Using ARM Templates

Modularize Templates: Break down large templates into smaller, reusable modules. This makes them easier to manage and maintain.

Use Parameters and Variables: Leverage parameters and variables to create flexible and reusable templates.

Test Templates: Always test your templates in a non-production environment before deploying them to production.

Source Control: Store your ARM templates in a source control system like Git to track changes and collaborate with your team.

Documentation: Document your templates thoroughly to help others understand the purpose and configuration of the resources.

Integrating ARM Templates with Azure DevOps

Incorporating ARM templates into your Azure DevOps workflows can improve your CI/CD pipeline: it lets you automate the deployment process, ensuring consistency and reducing manual involvement.

Create a Repository: Store your ARM templates in a Git repository within Azure Repos.

Build Pipeline: Set up a build pipeline to validate the syntax of your ARM templates.

Release Pipeline: Create a release pipeline to deploy your ARM templates to various environments (development, testing, production).

Approvals and Gates: Implement approval workflows and gates to ensure that only validated and approved templates are deployed.

Leveraging ARM Templates in Azure Data Factory

Azure Data Factory (ADF) is a sophisticated data integration tool that lets you build, schedule, and orchestrate data workflows. ARM templates can be used to automate the deployment of ADF resources, making it easier to manage and scale data integration systems.

Export ADF Resources: Export your ADF pipelines, datasets, and other resources as ARM templates.

Parameterize Templates: Use parameters to make your ADF templates reusable across different environments.

Automate Deployments: Integrate the deployment of ADF ARM templates into your Azure DevOps pipelines, ensuring consistency and reducing manual effort.
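Parameterizing a template for several environments usually means one parameter file per environment. The sketch below generates ARM parameter files in the standard `{"value": ...}` shape from a dictionary of per-environment values. The `factoryName` parameter and the `adf-sales-*` names are hypothetical placeholders; substitute the parameters your exported ADF template actually declares.

```python
import json

PARAMS_SCHEMA = "https://schema.management.azure.com/schemas/2019-04-01/deploymentParameters.json#"

# Hypothetical per-environment values.
ENVIRONMENTS = {
    "dev": {"factoryName": "adf-sales-dev"},
    "test": {"factoryName": "adf-sales-test"},
    "prod": {"factoryName": "adf-sales-prod"},
}


def parameter_file(values: dict) -> dict:
    """Build an ARM parameter file: each value is wrapped as {"value": ...}."""
    return {
        "$schema": PARAMS_SCHEMA,
        "contentVersion": "1.0.0.0",
        "parameters": {name: {"value": v} for name, v in values.items()},
    }


if __name__ == "__main__":
    for env, values in ENVIRONMENTS.items():
        print(f"parameters.{env}.json")
        print(json.dumps(parameter_file(values), indent=2))
```

Keeping one template and swapping only the parameter file per stage is what lets the same release pipeline promote a factory from development to production.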

Conclusion

As more firms transfer their activities to the cloud, efficient resource management becomes increasingly important. Azure Resource Manager (ARM) templates are critical to this management process, allowing users to deploy, manage, and organize resources in a consistent and repeatable manner. Whether you're taking Azure training, Azure DevOps training, or Azure Data Factory training, understanding ARM templates is critical. This article will go over the principles of ARM templates, including what they are, their benefits, and how to utilize them successfully.

What are Azure Resource Manager Templates?

Azure Resource Manager (ARM) templates are JSON files that provide the infrastructure and configuration of your Azure solution. They are declarative in nature, which means you specify what you want to deploy, and the ARM handles the rest. ARM templates enable you to deploy various resources in a single, coordinated process.

Key Components of an ARM Template

Schema: Defines the structure of the template. It's a mandatory element and is usually the first line in the template.

Content Version: Specifies the version of the template. This helps in tracking changes and managing versions.

Parameters: These are values you can pass to the template to customize the deployment. Parameters make templates reusable and flexible.

Variables: These are used to simplify your template by defining values that you use multiple times.

Resources: The actual Azure services that you are deploying. This section is the heart of the template.

Outputs: Values that are returned after the deployment is complete. These can be used for further processing or as inputs to other deployments.

Benefits of Using ARM Templates

Consistency: ARM templates ensure that your deployments are consistent. Every time you deploy a resource, it will be deployed in the same way.

Automation: By using ARM templates, you can automate the deployment of your resources, reducing the potential for human error.

Reusability: Templates can be reused across different environments (development, testing, production), ensuring consistency and saving time.

Versioning: ARM templates can be versioned and stored in source control, allowing you to track changes and roll back if necessary.

Collaboration: With ARM templates, teams can collaborate more effectively by sharing and reviewing templates.

Creating an ARM Template

Creating an ARM template entails identifying the resources and parameters you intend to deploy. Let us walk through a simple example of establishing an ARM template to deploy a storage account.

json

Deploying an ARM Template

Once you've created your ARM template, you can deploy it using various methods:

Azure Portal: You can upload the template directly in the Azure Portal and deploy it.

Azure PowerShell: Use the New-AzResourceGroupDeployment cmdlet to deploy the template.

Azure CLI: Use the az deployment group create command to deploy the template.

Azure DevOps: Integrate ARM template deployments into your CI/CD pipelines, enabling automated and repeatable deployments.

Best Practices for Using ARM Templates

Modularize Templates: Break down large templates into smaller, reusable modules. This makes them easier to manage and maintain.

Use Parameters and Variables: Leverage parameters and variables to create flexible and reusable templates.

Test Templates: Always test your templates in a non-production environment before deploying them to production.

Source Control: Store your ARM templates in a source control system like Git to track changes and collaborate with your team.

Documentation: Document your templates thoroughly to help others understand the purpose and configuration of the resources.

Integrating ARM Templates with Azure DevOps

Incorporating ARM templates into your Azure DevOps workflows will help improve your CI/CD pipeline. Integrating ARM templates with Azure DevOps allows you to automate the deployment process, assuring consistency and reducing manual involvement.

Create a Repository: Store your ARM templates in a Git repository within Azure Repos.

Build Pipeline: Set up a build pipeline to validate the syntax of your ARM templates.

Release Pipeline: Create a release pipeline to deploy your ARM templates to various environments (development, testing, production).

Approvals and Gates: Implement approval workflows and gates to ensure that only validated and approved templates are deployed.
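The build-pipeline validation step is commonly handled with `az deployment group validate` or with Microsoft's ARM Template Test Toolkit (arm-ttk). As a lighter, hypothetical pre-check that needs no Azure connection, a Python script can parse every template in the repository and report obvious structural problems. This sketch checks only JSON syntax and a few required top-level properties. It is not a substitute for ARM's own validation.

```python
import json
from pathlib import Path

REQUIRED_KEYS = ("$schema", "contentVersion", "resources")


def check_template(text: str) -> list:
    """Return the problems found in one template's text (empty list if none)."""
    try:
        template = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg} (line {exc.lineno})"]
    if not isinstance(template, dict):
        return ["top level must be a JSON object"]
    problems = [f"missing '{key}'" for key in REQUIRED_KEYS if key not in template]
    if "resources" in template and not isinstance(template["resources"], list):
        problems.append("'resources' must be an array")
    return problems


def main(root: str = ".") -> int:
    """Check every *.json template under root; return 1 if any problem was found."""
    failed = False
    for path in sorted(Path(root).rglob("*.json")):
        # Skip parameter files such as azuredeploy.parameters.dev.json (different schema).
        if ".parameters." in path.name:
            continue
        for problem in check_template(path.read_text(encoding="utf-8")):
            print(f"{path}: {problem}")
            failed = True
    return 1 if failed else 0
```

In a pipeline step, the script would end with `sys.exit(main())` so that a non-zero return value fails the build before the release pipeline runs.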

Leveraging ARM Templates in Azure Data Factory

Azure Data Factory (ADF) is a sophisticated data integration tool that lets you build, schedule, and orchestrate data workflows. ARM templates can be used to automate the deployment of ADF resources, making it easier to manage and grow data integration systems.

Export ADF Resources: Export your ADF pipelines, datasets, and other resources as ARM templates.

Parameterize Templates: Use parameters to make your ADF templates reusable across different environments.

Automate Deployments: Integrate the deployment of ADF ARM templates into your Azure DevOps pipelines, ensuring consistency and reducing manual effort.
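In a factory template, each pipeline, dataset, and linked service is a child resource of the factory. Each child's name is built from a `factoryName` parameter, so the same template can target a different factory in each environment. The sketch below shows the shape of one such resource. The pipeline name `CopyPipeline` is hypothetical, and its activities are left empty.

```python
import json


def adf_child_resource(kind: str, name: str, properties: dict) -> dict:
    """Build an ARM resource for a Data Factory child (pipelines, datasets, linkedServices)."""
    return {
        # The full name is "<factory>/<child>", resolved by ARM at deployment time.
        "name": f"[concat(parameters('factoryName'), '/{name}')]",
        "type": f"Microsoft.DataFactory/factories/{kind}",
        "apiVersion": "2018-06-01",
        "properties": properties,
    }


def factory_template(resources: list) -> dict:
    """Wrap ADF child resources in a template parameterized by factory name."""
    return {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        # factoryName is the value that changes between dev, test, and prod.
        "parameters": {"factoryName": {"type": "string"}},
        "resources": resources,
    }


template = factory_template([adf_child_resource("pipelines", "CopyPipeline", {"activities": []})])
print(json.dumps(template, indent=2))
```

Exported factory templates also typically declare `dependsOn` entries, so that linked services and datasets are deployed before the pipelines that use them.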

Conclusion

Azure Resource Manager templates are a valuable tool for managing Azure resources effectively, and understanding them is essential for anyone learning Azure, Azure DevOps, or Azure Data Factory. By taking advantage of their consistency, automation, reusability, and versioning, you can streamline how you manage and deploy resources. Integrating ARM templates into your Azure DevOps workflows and using them with Azure Data Factory will improve your cloud operations and help you make the most of your Azure environment.


What are the components of Azure Data Lake Analytics?

Azure Data Lake Analytics consists of the following key components:

Job Service: This component is responsible for managing and executing jobs submitted by users. It schedules and allocates resources for job execution.

Catalog Service: The Catalog Service stores and manages metadata about data stored in Data Lake Storage Gen1. It provides a structured view of the data, including file names, directories, and schema information.

Resource Management: Resource Management handles the allocation and scaling of resources for job execution. It ensures efficient resource utilization while maintaining performance.

Execution Environment: This component provides the runtime environment for executing U-SQL jobs. It manages the distributed execution of queries across multiple nodes in the Azure Data Lake Analytics cluster.

Job Submission and Monitoring: Azure Data Lake Analytics provides tools and APIs for submitting and monitoring jobs. Users can submit jobs using the Azure portal, Azure CLI, or REST APIs. They can also monitor job status and performance metrics through these interfaces.

Integration with Other Azure Services: Azure Data Lake Analytics integrates with other Azure services such as Azure Data Lake Storage, Azure Blob Storage, Azure SQL Database, and Azure Data Factory. This integration allows users to ingest, process, and analyze data from various sources seamlessly.

These components work together to provide a scalable and efficient platform for processing big data workloads in the cloud.


Mastering Azure Data Factory: Your Guide to Becoming an Expert

Introduction

Azure Data Factory (ADF) is a cloud-based data integration service on Microsoft Azure. It lets you create, schedule, and manage data-driven workflows that move, transform, and process data from a wide range of sources to a wide range of destinations. Whether you're a data engineer, a developer, or another kind of data professional, becoming an ADF expert can open up many opportunities. In this guide, we'll cover what ADF is, why it's a compelling choice, and the key concepts and terminology you need to master to become an ADF expert.

What is Azure Data Factory?

Azure Data Factory (ADF) is a cloud-based data integration service offered by Microsoft Azure. It allows you to create, schedule, and manage data-driven workflows in the cloud. ADF is designed to help organizations with the following tasks:

Data Movement: ADF enables the efficient movement of data from various sources to different destinations. It supports a wide range of data sources and destinations, making it a versatile tool for handling diverse data integration scenarios.

Data Transformation: ADF provides data transformation capabilities, allowing you to clean, shape, and enrich your data during the movement process. This is particularly useful for data preparation and data warehousing tasks.

Data Orchestration: ADF allows you to create complex data workflows by orchestrating activities, such as data movement, transformation, and data processing. These workflows can be scheduled or triggered in response to events.

Data Monitoring and Management: ADF offers monitoring, logging, and management features to help you keep track of your data workflows and troubleshoot any issues that may arise during data integration.

Key Components of Azure Data Factory:

Pipeline: A pipeline is the core construct of ADF. It defines the workflow and activities that need to be performed on the data.

Activities: Activities are the individual steps or operations within a pipeline. They include data movement activities (such as Copy), data transformation activities (such as Mapping Data Flow), and control activities (such as If Condition and ForEach).

Datasets: Datasets represent the data structures that activities use as inputs or outputs. They define the data schema and location, which is essential for ADF to work with your data effectively.

Linked Services: Linked services define the connection information and authentication details required to connect to various data sources and destinations.
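These four components refer to one another by name. An activity points at its input and output datasets, and each dataset points at a linked service. The sketch below builds the JSON definitions for a Blob-to-SQL copy and then checks that every reference resolves. All resource names, the container and file, the table, and the connection-string placeholders are hypothetical.

```python
import json


def ref(name: str, ref_type: str) -> dict:
    """ADF references other resources by name plus a reference type."""
    return {"referenceName": name, "type": ref_type}


# Linked services: how to connect to each store.
linked_services = {
    "BlobStorageLS": {"type": "AzureBlobStorage",
                      "typeProperties": {"connectionString": "<blob-connection-string>"}},
    "SqlDatabaseLS": {"type": "AzureSqlDatabase",
                      "typeProperties": {"connectionString": "<sql-connection-string>"}},
}

# Datasets: where the data lives and in what format.
datasets = {
    "SalesCsv": {"type": "DelimitedText",
                 "linkedServiceName": ref("BlobStorageLS", "LinkedServiceReference"),
                 "typeProperties": {"location": {"type": "AzureBlobStorageLocation",
                                                 "container": "raw", "fileName": "sales.csv"},
                                    "columnDelimiter": ",", "firstRowAsHeader": True}},
    "SalesTable": {"type": "AzureSqlTable",
                   "linkedServiceName": ref("SqlDatabaseLS", "LinkedServiceReference"),
                   "typeProperties": {"schema": "dbo", "table": "Sales"}},
}

# Pipeline: one Copy activity reading SalesCsv and writing SalesTable.
pipeline = {
    "name": "CopySalesPipeline",
    "properties": {"activities": [{
        "name": "CopySales",
        "type": "Copy",
        "inputs": [ref("SalesCsv", "DatasetReference")],
        "outputs": [ref("SalesTable", "DatasetReference")],
        "typeProperties": {"source": {"type": "DelimitedTextSource"},
                           "sink": {"type": "AzureSqlSink"}},
    }]},
}


def unresolved_references() -> list:
    """List dataset or linked-service names that are referenced but not defined."""
    missing = []
    for activity in pipeline["properties"]["activities"]:
        for r in activity.get("inputs", []) + activity.get("outputs", []):
            if r["referenceName"] not in datasets:
                missing.append(r["referenceName"])
    for ds in datasets.values():
        ls_name = ds["linkedServiceName"]["referenceName"]
        if ls_name not in linked_services:
            missing.append(ls_name)
    return missing


print(json.dumps(pipeline, indent=2))
print("unresolved:", unresolved_references())
```

Separating connection details (linked services) from data shape and location (datasets) means you can swap a connection string per environment without touching the pipeline logic.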

Why Azure Data Factory?

Now that you have a basic understanding of what Azure Data Factory is, let's explore why it's a compelling choice for data integration and why you should consider becoming an expert in it.

Scalability: Azure Data Factory is designed to handle data integration at scale. Whether you're dealing with a few gigabytes of data or petabytes of data, ADF can efficiently manage data workflows of various sizes. This scalability is particularly valuable in today's data-intensive environment.

Cloud-Native: As a cloud-based service, ADF leverages the power of Microsoft Azure, making it a robust and reliable choice for data integration. It integrates with other Azure services, such as Azure Synapse Analytics (formerly Azure SQL Data Warehouse), Azure Data Lake Storage, and more.

Hybrid Data Integration: ADF is not limited to working only in the cloud. It supports hybrid data integration scenarios, allowing you to connect on-premises data sources and cloud-based data sources, giving you the flexibility to handle diverse data environments.

Cost-Effective: ADF uses a pay-as-you-go pricing model, so you pay for what you actually use (such as activity runs and data movement) rather than for idle capacity. This is attractive to organizations looking to optimize the cost of their data integration processes.

Integration with Ecosystem: Azure Data Factory seamlessly integrates with other Azure services, like Azure Databricks, Azure HDInsight, Azure Machine Learning, and more. This integration allows you to build end-to-end data pipelines that cover data extraction, transformation, and loading (ETL), as well as advanced analytics and machine learning.

Monitoring and Management: ADF provides extensive monitoring and management features. You can track the performance of your data pipelines, view execution logs, and set up alerts to be notified of any issues. This is critical for ensuring the reliability of your data workflows.

Security and Compliance: Azure Data Factory adheres to Microsoft's rigorous security standards and compliance certifications, ensuring that your data is handled in a secure and compliant manner.

Community and Support: Azure Data Factory has a growing community of users and a wealth of documentation and resources available. Microsoft also provides support for ADF, making it easier to get assistance when you encounter challenges.

Key Concepts and Terminology

To become an Azure Data Factory expert, you need to familiarize yourself with key concepts and terminology. Here are some essential terms you should understand:

Azure Data Factory (ADF): The overarching service that allows you to create, schedule, and manage data workflows.

Pipeline: A logical grouping of activities that together define a workflow, including data movement, transformation, and processing.

Activities: Individual steps or operations within a pipeline, such as data copy, data flow, or stored procedure activities.

Datasets: Data structures that define the data schema, location, and format. Datasets are used as inputs or outputs for activities.

Linked Services: Connection information and authentication details that define the connectivity to various data sources and destinations.

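Triggers: Mechanisms that initiate the execution of a pipeline, such as schedule triggers (wall-clock schedules), tumbling window triggers (fixed, non-overlapping time intervals), and event triggers (in response to events such as a file arriving in storage).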

Data Flow: A data transformation activity that uses mapping data flows to transform and clean data at scale.

Data Movement: Activities that copy or move data between data stores, whether they are on-premises or in the cloud.

Debugging: The process of testing and troubleshooting your pipelines to identify and resolve issues in your data workflows.

Integration Runtimes: Compute resources used to execute activities. There are three types: Azure, Self-hosted, and Azure-SSIS integration runtimes.

Azure Integration Runtime: A compute environment fully managed by Azure, used for activities that run in the cloud.

Self-hosted Integration Runtime: A compute environment that you install and manage on your own infrastructure, used when ADF must reach data stores in on-premises or private networks.

Azure-SSIS Integration Runtime: A managed compute environment for running SQL Server Integration Services (SSIS) packages.

Monitoring and Management: Tools and features that allow you to track the performance of your pipelines, view execution logs, and set up alerts for proactive issue resolution.

Data Lake Storage: A highly scalable and secure data lake that can be used as a data source or destination in ADF.

Azure Databricks: A big data and machine learning service that can be integrated with ADF to perform advanced data transformations and analytics.

Azure Machine Learning: A cloud-based service that can be used in conjunction with ADF to build and deploy machine learning models.
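Triggers and pipelines are separate resources, and a trigger names the pipelines it starts. The sketch below builds a daily schedule trigger definition. It assumes a pipeline called `CopySalesPipeline` with a hypothetical `runDate` parameter. The start date and UTC time zone are illustrative.

```python
import json
from datetime import datetime, timezone


def daily_schedule_trigger(name: str, pipeline_name: str, hour: int, start: datetime) -> dict:
    """Build a ScheduleTrigger that runs a pipeline once a day at `hour`:00 UTC."""
    if not 0 <= hour <= 23:
        raise ValueError("hour must be between 0 and 23")
    return {
        "name": name,
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": "Day",
                    "interval": 1,
                    # `start` is assumed to already be in UTC; "Z" marks UTC.
                    "startTime": start.strftime("%Y-%m-%dT%H:%M:%SZ"),
                    "timeZone": "UTC",
                    "schedule": {"hours": [hour], "minutes": [0]},
                }
            },
            "pipelines": [{
                "pipelineReference": {"referenceName": pipeline_name, "type": "PipelineReference"},
                # Pass the trigger's scheduled time into a (hypothetical) pipeline parameter.
                "parameters": {"runDate": "@trigger().scheduledTime"},
            }],
        },
    }


trigger = daily_schedule_trigger("DailySalesTrigger", "CopySalesPipeline", 2,
                                 datetime(2024, 1, 1, tzinfo=timezone.utc))
print(json.dumps(trigger, indent=2))
```

A trigger definition has no effect until the trigger is published and started. Until then, the pipeline runs only when invoked manually or in debug mode.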


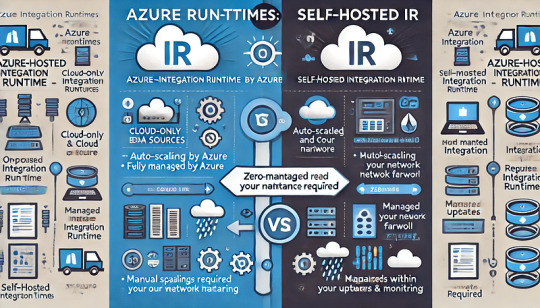

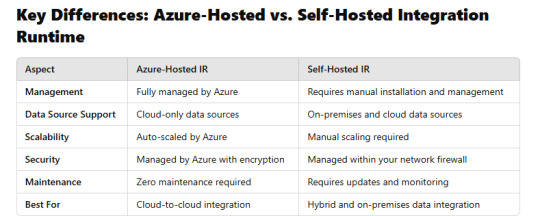

Understanding Azure Integration Runtimes: Choosing Between Self-Hosted and Azure-Hosted Runtimes

The Integration Runtime (IR) is a crucial component of Azure Data Factory (ADF). It provides the compute that enables data movement, transformation, and integration across diverse data sources. Choosing between the Self-Hosted Integration Runtime and the Azure-Hosted Integration Runtime affects performance, security, and cost efficiency. This guide explains the key differences and helps you decide which option best fits your data integration needs.

What is an Integration Runtime?

An Integration Runtime is the secure compute infrastructure that ADF uses for:

Data movement between data stores.

Data flow execution in Azure Data Factory.

Dispatching activities to compute services such as Azure Databricks, Azure HDInsight, and Azure SQL Database.

Types of Integration Runtimes in Azure

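ADF offers three types of Integration Runtime. This guide compares the two general-purpose ones (the third, the Azure-SSIS IR, is dedicated to running SSIS packages):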

Azure-Hosted Integration Runtime (Managed by Microsoft)

Self-Hosted Integration Runtime (Managed by you)
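In practice, the choice appears in each linked service. A linked service that omits `connectVia` uses the default Azure IR. A linked service that must reach a private or on-premises store sets `connectVia` to a Self-Hosted IR. The sketch below assumes a self-hosted IR registered under the hypothetical name `OnPremIR`. It also uses a simplified rule in which network location alone decides the choice; real decisions also weigh security, performance, and cost.

```python
def choose_runtime(store_is_private: bool, self_hosted_ir: str = "OnPremIR"):
    """Return the IR name to use, or None to fall back to the default Azure IR."""
    # Simplified rule: private/on-premises stores are reached through a self-hosted IR
    # running inside that network; public cloud stores can use the Azure IR.
    return self_hosted_ir if store_is_private else None


def linked_service(name: str, ls_type: str, connection_string: str, store_is_private: bool) -> dict:
    """Build a linked service definition, adding connectVia only when a self-hosted IR is needed."""
    properties = {"type": ls_type, "typeProperties": {"connectionString": connection_string}}
    runtime = choose_runtime(store_is_private)
    if runtime is not None:
        properties["connectVia"] = {"referenceName": runtime, "type": "IntegrationRuntimeReference"}
    return {"name": name, "properties": properties}


onprem_sql = linked_service("OnPremSqlLS", "SqlServer", "<on-prem-connection-string>", store_is_private=True)
cloud_blob = linked_service("BlobStorageLS", "AzureBlobStorage", "<blob-connection-string>", store_is_private=False)
```

Because the IR is referenced per linked service, one factory can mix both runtimes. For example, a single pipeline can copy from an on-premises SQL Server through the Self-Hosted IR into cloud storage reached through the Azure IR.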

Azure-Hosted Integration Runtime

The Azure-Hosted IR is fully managed by Microsoft and designed for cloud-native data integration.

Key Features: