#but there's something about representing linked data in a three-dimensional space


Let's say you eventually die and get transmigrated/isekai'd, but you get to choose what universe you get put into and who you become when you get there. Where are you going?

Oh boy I cannot think of a good answer to this question. 😅

...wait I do have an answer, and it's to check out the Library of the Dreaming because I would love to know what the classification schema is there.

#“the schema is vibes” LISTEN #PROBABLY #but there's something about representing linked data in a three-dimensional space #anyway #replies #anonymous


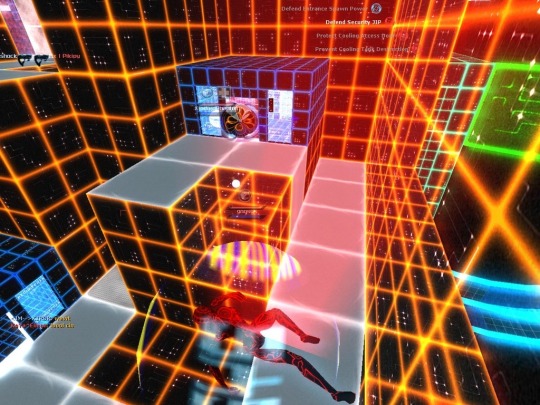

Cyberspace-

What does Cyberspace mean?

Cyberspace refers to the virtual computer world, and more specifically, an electronic medium that is used to facilitate online communication. Cyberspace typically involves a large computer network made up of many worldwide computer subnetworks that employ the TCP/IP protocol suite to aid in communication and data exchange activities.

Cyberspace's core feature is an interactive and virtual environment for a broad range of participants.

In the common IT lexicon, any system that has a significant user base or even a well-designed interface can be thought to be “cyberspace.”

Cyberspace allows users to share information, interact, swap ideas, play games, engage in discussions or social forums, conduct business and create intuitive media, among many other activities.

The term cyberspace was popularized by William Gibson in his 1984 novel, Neuromancer, after he first used it in his 1982 short story “Burning Chrome.” Gibson criticized the term in later years, calling it “evocative and essentially meaningless.” Nevertheless, the term is still widely used to describe any facility or feature that is linked to the Internet. People use the term to describe all sorts of virtual interfaces that create digital realities.

In many key ways, cyberspace is what human societies make of it.

One way to talk about cyberspace is related to the use of the global Internet for diverse purposes, from commerce to entertainment. Wherever the Internet is used, you could say, a cyberspace is created. The prolific use of both desktop computers and smartphones to access the Internet means that, in a practical (yet somewhat theoretical) sense, the cyberspace is growing.

Another prime example of cyberspace is the online gaming platforms advertised as massive online player ecosystems. These large communities, playing all together, create their own cyberspace worlds that exist only in the digital realm, and not in the physical world, sometimes nicknamed the “meatspace.”

To really consider what cyberspace means and what it is, think about what happens when thousands of people, who may have gathered together in physical rooms in the past to play a game, do it instead by each looking into a device from remote locations. As gaming operators dress up the interface to make it attractive and appealing, they are, in a sense, bringing interior design to the cyberspace.

In fact, gaming as an example, as well as streaming video, shows what our societies have largely chosen to do with the cyberspace as a whole. According to many IT specialists and experts, cyberspace has gained popularity as a medium for social interaction rather than for its technical execution and implementation. This sheds light on how societies have chosen to create cyberspace.

Theoretically, the same human societies could create other kinds of cyberspace—technical realms in which digital objects are created, dimensioned and evaluated in technical ways. For example, cyberspaces where language translation happens automatically in the blink of an eye or cyberspaces involving full-scale visual inputs that can be rendered on a 10-foot wall.

In the end, it seems that the cyberspaces that we have created are pretty conformist and one-dimensional, relative to what could exist. In that sense, cyberspace is always evolving, and promises to be more diverse in the years to come.

Cyber culture and cyber-topics-

Cyberculture is the culture that has emerged from the use of computer networks for communication, entertainment and business. It is also the study of various social phenomena associated with the Internet and other new forms of network communication, such as online communities, online multi-player gaming, social gaming, social media and texting.

The earliest usage of the term "cyberculture" listed in the Oxford English Dictionary dates from 1963: "In the era of cyberculture, all the plows pull themselves and the fried chickens fly right onto our plates." Today, however, cyberculture is understood as the culture within and among users of computer networks. It may be purely an online culture or span both virtual and physical worlds. That is to say, cyberculture is a culture endemic to online communities; it is not just the culture that results from computer use, but culture that is directly mediated by the computer.

Qualities of cyberculture-

The ethnography of cyberspace is an important element of cyberculture that does not reflect a single unified culture. "It is not a monolithic or placeless 'cyberspace'; but rather, it is numerous new technologies and capabilities, used by diverse people, in diverse real-world locations." It is malleable, perishable, and can be shaped by the vagaries of external forces on its users. For example, the laws of physical world governments, social norms, the architecture of cyberspace, and market forces shape the way cybercultures form and evolve. As with physical world cultures, cybercultures lend themselves to identification and study.

That said, there are several qualities that cybercultures share that make them warrant the prefix “cyber-”. Some of those qualities are that cyberculture:

- Is culture “mediated by computer screens.”

- Relies heavily on the notion of information and knowledge exchange.

- Depends on the ability to manipulate tools to a degree not present in other forms of culture (even artisan culture, e.g., a glass-blowing culture).

- Allows vastly expanded weak ties and has been criticized for overly emphasizing the same.

- Multiplies the number of eyeballs on a given problem, beyond that which would be possible using traditional means, given physical, geographic, and temporal constraints.

- Is a “cognitive and social culture, not a geographic one.”

- Is “the product of like-minded people finding a common ‘place’ to interact.”

- Is inherently more "fragile" than traditional forms of community and culture.

Types of Cyberculture -

Types of Cyberculture include various human interactions mediated by computer networks. They can be activities, pursuits, games, places and metaphors, and include a diverse base of applications. Through these types of Cyberculture, Internet language is used widely. Examples include blogs, social networks, and games.

Cyberspace and Dystopia-

A dystopia is an imagined place or state in which everything is unpleasant or bad, typically a totalitarian or environmentally degraded one.

Augmented reality -

A technology that superimposes a computer-generated image on a user's view of the real world, thus providing a composite view.

The word augmented suggests that this kind of technology can improve our experience of the world, since to augment means to add to something.

Immersive (cyber) spaces-

Virtual reality-

The computer-generated simulation of a three-dimensional image or environment that can be interacted with in a seemingly real or physical way by a person using special electronic equipment, such as a helmet with a screen inside or gloves fitted with sensors.

Example: Avatar (James Cameron)

The use of the word Avatar tells us that the film has some sort of relationship with reality and technology.

An avatar is an icon or a figure that represents a certain person in a video game or any other internet form.

This film deals with the possibilities of pushing human relationships with technology to their limits.


Five Dimensional Training

There is a fifth dimension beyond that which is known to humans. It is a dimension as vast as space and as timeless as infinity. It is the middle ground between light and shadow, between science and superstition, and it lies between the pit of human fears and the summit of human knowledge. This is the dimension of imagination. It is an area which we call the Ninja Zone.

I took some liberties with the opening narrative from season one of the Twilight Zone and I’m going to take some liberties with the scientific definition of dimensions as we journey into a wondrous land whose boundaries are that of imagination. From my imagination came a dimensional analogy to help understand the different levels of training I believe are necessary to understand ninpo taijutsu. Each level is an essential part of a journey you take to understand and each also represents a cul de sac that you could get stuck in if you don’t stay on the path.

One dimensional training represents knowing the kata models of your system. These are the source material of your training and should be appreciated. It is important to understand that these are teaching tools passed on from our martial ancestors, from teacher to student, more as a graduation certificate than as a textbook. They were the notes for someone who already had learned.

If you read most of the kata models without any understanding of the art provided by a teacher, they don’t mean much. They are a starting point to the training; trying to memorize them as if they were some kind of magic pill that will give you martial powers is the first cul de sac you must avoid to stay on the path. The catch here is the need to be right, “I know this kata.” The most dangerous aspect of this way of training is developing the habit of focusing on yourself instead of the situation, the moment you are in.

Two dimensional training represents focusing on defeating the opponent. This could be represented by a competitive sports athlete. Having worked as a bouncer for a number of years I can tell you that focus is an absolute necessity in combat. The Brazilian jiu jitsu practitioners used their expertise at this to pull off one of the greatest marketing strategies ever in the martial arts by competing against martial artists from the one dimensional mind set back in the 90s. It brought into the public consciousness the difference between memorization training and competitive training.

The cul de sac here involves the denial that strength and speed will fade with time, along with the idea that anything beyond technique, strength and speed does not exist. Now this is coming from the leader of Muscle Holics Anonymous. I was a scholarship football player in college and, with my size, was a huge proponent of size, strength and bashing people (pun intended). However, my dual experiences of training with my teacher Mark Davis, who is bigger, stronger, and much better at taijutsu than me, and the Japanese teachers I was fortunate enough to train with, who were on average half my size, showed me there is definitely something beyond size and strength.

Three dimensional training is just what it says, understanding the relationship between bodies in three dimensional space and gravity. This is where physics becomes the tool to overcome what my teacher calls asymmetrical situations, where you are at a disadvantage. It is here we begin to let go of doing kata models and start the understanding of distance, alignment and shape and their effects on a violent situation. These are the principles that allow you to go beyond size, strength and speed and continue your training and capabilities into your later years.

The danger here is in becoming a theorist and never trying to apply these scientific concepts under pressure. This is a cul de sac that could get you hurt if you don’t bring along the capabilities of the previous dimension. Intellectual understanding of a concept is not the same as experiential learning. You must do the experiments and test the principles for yourself. Not to cast doubt on the science but to gain faith in your ability to apply the science in realistic situations. The science must become a habit for you if it is to be available when needed.

Four dimensional training is the capability to perceive three dimensional relationships from moment to moment in time. This level opens the door to the possibility of leaving behind reactionary fighting where you are always responding to the actions of your opponent. You begin to view the moment of conflict as one thing with no separation between the combatants.

An analogy to help you visualize this four dimensional level would be to think of a kata model as a Chinese dragon seen in parades. Imagine yourself and your training partner at the beginning of the kata model as the head of the dragon. Then imagine the two of you at the end of the kata model, the tail. All the steps of the kata model between would be the twists and turns of the dragon’s body. Perceived in its entirety, the dragon gives you a way of modeling four dimensional space.

The cul de sac here is ego. When you reach this level of perception, if you’re not careful, you can believe yourself more capable than you actually are. You may underestimate the abilities of others. We are not the only martial art to understand these concepts. This understanding does not make you invulnerable. There are extremely dangerous people out there, trained and untrained, whom you do not want to take for granted. To avoid this trap you must remember and accept that anything can happen no matter how well you understand these concepts.

Five dimensional training to me is about the use of kyojutsu tenkan ho, a Japanese phrase that has been translated as truth for falsehood. I’m not qualified to tell you if that is an accurate translation because I can’t read Japanese. From my experience in training it has come to mean for me the idea of affecting my opponent’s perception of the other four dimensions. You allow your opponent to have their perception of reality in the moment while creating another that allows you to survive the conflict. To reach this level of perception requires an understanding of the science of perception itself. I believe this dimension can be summed up in the quote “The first priority to the ninja is to win without fighting.”

What’s the danger here? Delusion, believing somehow you are performing magic. You can delude yourself and then delude those you teach. It’s not hard to find videos of some very deluded martial artists on the internet. To stay out of this cul de sac and all the others you need science. Ask the hard questions, do the experiments, collect the data, adjust your training and repeat. The best part of using science this way is that you never have to stop your training. You can travel through other dimensions of understanding.

We look forward to training with you.

D

PS You can unlock the door to The Ninja Zone with Shinobi Science by clicking the link below for more information.

https://www.shinobimartialarts.com/


Copying as Performative Research: Toward an Artistic Working Model

Franz Thalmair

Why original and copy yet again? Why still? Copying has attained a new diversity. In the context of digital technologies, which facilitate identical reproductions of any data, the practice of copying is omnipresent yet often invisible. It has evolved into a multifarious but controversial cultural technique, which surfaces in public discourses about copyright and plagiarism or unauthorized fakes of patented products. At the root of these debates is the prevailing negative understanding of the copy in opposition to the positively connoted original. From the perspective of contemporary artistic production and in contrast to discussions often conducted from a commercial standpoint, the original no longer serves as the moral basis for the evaluation of the copy, rather the focus has shifted to the interplay between the original and the copy—a potential that was already recognized in art history. With a view to the generative and mimetic processes that constitute this relationship, not only are value systems derived from the establishment of bourgeois ownership privileges in the nineteenth century being questioned anew today; there is also debate about the (digital) control mechanisms that lead to the increasing disappearance of the practice of copying from the realm of the visible. Various artistic movements explored original and copy throughout the twentieth century. In particular, the pre-war avant-garde and later neo-avant-gardist movements employed artistic processes such as collage and readymades to create new artifacts from found materials. With such forms of appropriation artists explicitly challenged and nuanced traditional categories like originality, authorship, or intellectual property. The computer’s capability to duplicate data without loss, however, antiquates these historical methods of dealing with original and copy for current practices. 
The ubiquity of various copying techniques confirms that this phenomenon has now established itself as both an artistic and an everyday process. But as its mechanisms—largely supported by readymade digital technologies—frequently remain hidden and increasingly immaterialize, especially the functionalities and logics of copying are up for discussion. Artistic practices that utilize the same copying methods they research can be particularly effective for such an investigation into the interplay between original and copy. The previously merited distinction between original and copy is no longer of importance in the twenty-first century. The former opposites have combined into a new entity. They are not conceived as temporally or hierarchically consecutive but as parallel and equal. In order to examine this circumstance with methods from the humanities, Gisela Fehrmann and other authors proposed viewing the relationship between original and copy as a “process of transcription”, which reveals the relationality of these categories: “The ‘characteristic relational logic’ of processes of transcription consists in the fact that […] the reference object precedes the transcription as a ‘pre-text’, but its ‘status as a script’ is only conferred by this process.”[1] Such a thought loop can also be applied to the act of citation: This special form of textual copy refers to a precedent, another text, yet the source only attains the status of an original through the selection and reference process. Looking at “secondary practices of the secondary”, as the author team around Fehrmann pointedly phrased it, facilitates, on the one hand, an investigation of the effects these phenomena of appropriation have on the content, formal, and material conditions of current artistic production. On the other, it allows one to simultaneously practice this act of copying based on repetition and to investigate it within this practice itself.
The aim of artistic explorations of original and copy is, broadly, to construct a space of resonance which is not characterized by bipolarity but rather one where the permanent oscillation between the poles constitutes a self-reflexive practice.[2] For only a space where the continuous flux and reflux between original and copy intrinsically represents the unity of the two elements bears the potential to generate new forms of knowledge and artistic practice.

Repetition and Repeatability

The basis for these forms of self-reflection are ideas about the reality-forming dimension of language that philosopher John L. Austin formulated in the early 1960s in his book How to Do Things With Words.[3] In contrast to most words, which simply describe the world, linguistic expressions that Austin called “performative utterances” create reality. They perform an action. Austin provides the word “yes” in marriages as an example and links the success of such a speech act with its repeatability. That means a “yes” articulated by the couple performs the act of marriage when the word is incorporated in a ritualized and generally agreed-upon form, such as the wedding ceremony. Only then does “yes” create reality. That speech acts do not describe but create reality can be applied to the inextricable relationship between original and copy. A key factor is the repeatability of linguistic expressions, the main aspect of performativity, which—in keeping with Austin—was further developed by Jacques Derrida with the term “iterability”[4] and later elaborated by Judith Butler[5] in the sense of a political act. Repeatability is not only decisive for the success of speech acts—moreover, its iterative and repetitive character forms the causal basis of the phenomenon of copying. In order to have a reality-forming effect, linguistic expressions need to happen within specific conventions. Analogously, art, too, must act within a framework based on conventions in order to be perceived as such.
Drawing upon these traditional and repetition-based principles, Dorothea von Hantelmann establishes “how every artwork, not in spite of but by virtue of its integration in certain conventions, ‘acts’” and “how these conventions are co-produced by any artwork—independent of its respective content”.[6] Hence, the rules established in the art field in the past continue to have an effect in the present of the respective current artistic work and elicit effects both in the here and now of the artistic activity itself as well as in the conditions of the art field which led to this activity. An analogy to the aforementioned “processes of transcription”, where the original is only constituted as such when the copy refers to it, becomes quite evident. Building on Anke Haarmann’s thoughts about the methodology of artistic research, it may be concluded that these forms of performative research facilitate two things above and beyond the aesthetic experience: first, the opportunity to reflect upon “the conditions of one’s own position in the medium of artistic practice”; and second, “to investigate”—and, not least, express—“something with the specific means of art in the process of artistic knowledge production”.[7] Consequently, certain themes and matters are not viewed exclusively from a supposed outsider position; rather, the performative art practice is, at the same time, active within the respective field that is the subject of analysis. Such an artistic working method not only pushes dichotomous dualities like original and copy to their limits—it is a methodological approach whose self-reflexive and performative character allows it to delve into social discourse because it was derived directly from it.

Post-Media Condition, Post-Digital Tendencies

In contrast to pre-digital artistic tendencies, like the readymade, pop, conceptual, or appropriation art, which tried to dissolve the boundaries between original and copy, the copy has become constitutive to contemporary art production. In the “post-digital”[8] age the interplay between original and copy has evolved into an overarching phenomenon. Also outside of digital contexts it has become inscribed into artistic production, reception, and distribution processes and—whether forced consciously or unconsciously by the artists—participates in their shaping. An example of a performative research in which the interplay between original and copy under the described conditions is not only reflected upon but also generated from this in-between is provided by the Brit Mark Leckey with The Universal Addressability of Dumb Things (2013) [fig. 1]: Conceived by Leckey as a touring exhibition of the Hayward Gallery in London, works by colleagues such as Martin Creed, Jonathan Monk, Louise Bourgeois, or Ed Atkins are juxtaposed with numerous pieces of art history, everyday culture, and artifacts of other sorts. In specially designed displays the artist-curator presented objects like a mummified cat, a singing gargoyle, a giant phallus from the film “A Clockwork Orange”, and a cyberman helmet. [fig. 2] [fig. 3] All of these objects originated from a collection of images that Leckey had compiled over the years while randomly browsing the Internet and saved to his hard drive. He activated this incidental collection for The Universal Addressability of Dumb Things and triggered a performative cycle by presenting the depicted objects in the exhibition. The digital data materialized in the show and aggregated[9] into clusters, similar to how the files were stored in folders on Leckey’s computer. The three-dimensional things took a detour as two-dimensional images in virtual space before reappearing in a three-dimensional form once again as items in an exhibition.
The objects, which he collected as digital depictions of real objects, exist today as exhibition views and are likely again circulating in the social networks where the artist once found them. Leckey went a step further when he transformed “The Universal Addressability of Dumb Things” into an installation called UniAddDumThs (2015) [fig. 4] for a subsequent exhibition series. At Kunsthalle Basel, among other places, he presented select things from the already selected collections of things as 3D prints, photographs, or other reproductions. Elena Filipovic, director of the exhibition house, wrote about the project: “Having thrown open the floodgates of his hard drive and watched as digital bits and bytes summoned forth actual atoms and matter, materializing in a slew of undeniably real things, Leckey welcomed, organized, and installed them again and again during the exhibition tour of The Universal Addressability of Dumb Things. Yet I can’t help suspecting that he was most fulfilled when the show was still yet to be made, when he was busy collecting all those jpegs and mpegs that constituted the potential contents of the show.”[10] Here Filipovic addresses precisely this in-between in which self-reflection couples with performativity into a form of research which is only active while doing it. The processes researched and practiced by Leckey in The Universal Addressability of Dumb Things and UniAddDumThs are informed by the digital, yet they do not have to manifest in a digital form necessarily. The point of this performative research—which Filipovic referred to as an “artwork-as-ersatz-exhibition”—is to remain in this fluctuation between the apparent immateriality of digital technologies and their material manifestations. Leckey links this pointedly with the reciprocity between original and copy.
While Walter Benjamin stated in the early twentieth century, “To an ever greater degree the work of art reproduced becomes the work of art designed for reproducibility”,[11] twenty-first-century explorations of original and copy propel this thesis. The act of copying is no longer viewed from the perspective of the original, as it was in Benjamin’s time. And the copy is not conceived as a nemesis of the original either. Here the focus is on artworks fundamentally oriented upon re-installability, re-performability, serializability, versionability, and photographability—or even “jpeg-ability”.[12] The transition from technical to electronic and digital media and the corresponding changes in our experience have regularly been the subject of media science debates in the past years. However, they were frequently addressed from a one-sided technological viewpoint, thereby breaking the connection with the fine arts. This is owed not least to so-called media art itself, which has distanced itself from traditional fine art formats since the 1980s with its special institutions, festivals, and exhibitions. Beryl Graham and Sarah Cook see the period “when the term new in new media art was most widely accepted and used” in the years between 2000 and 2006: “After the hype of those years, from 2006 until today, understandings of new media art in relation to contemporary art have significantly changed, and the use of the term new has become outmoded.”[13] In the 2005 exhibition The Post-Media Condition at the Neue Galerie Graz, Peter Weibel, with reference to Rosalind Krauss,[14] still dealt with the question “whether the new media’s influence and the effect on the old media […] weren’t presently more important and successful than the pieces of the new media themselves”.[15] Today, the answer is clear.
Artistic practices like Mark Leckey’s not only convey copying methods, more generally, they also help retrace the tracks left in contemporary fine arts by artistic forms of expressions previously distinguished with the attribute “new”. As opposed to Lev Manovich’s juxtaposition of “Duchamp-land” and “Turing-land”,[16] two terms that embody the dichotomy between traditional fine arts and media art, the oscillation between analog and digital, between image and object, between Internet and exhibition space dissolves precisely this distinction. Employing the multifaceted processes that reside between original and copy, in The Universal Addressability of Dumb Things and UniAddDumThs Leckey not only investigates the changes in appropriation strategies in a post-digital context, but also the effects that this phenomenon has on the fine arts. Ultimately, the focus becomes how the structural requirements, the manifestations, and the perceptions of “Duchamp-land” are transformed by a “Turing-land” that is increasingly in a state of dissolution—how our medial realities are changing.

[1] Cf. Gisela Fehrmann et al., Original Copy—Secondary Practices, in Media, Culture, and Mediality: New Insights into the Current State of Research, eds. Ludwig Jäger, Erika Linz, and Irmela Schneider (Bielefeld: transcript Verlag, 2010), 77–85, here 79.

[2] Such an approach is employed, e.g., in the artistic-scientific research project originalcopy—Post-Digital Strategies of Appropriation (University of Applied Arts Vienna). See: http://www.ocopy.net

[3] Cf. John L. Austin, How To Do Things With Words: The William James Lectures delivered at Harvard University in 1955, ed. James O. Urmson (Oxford: Oxford University Press, 1962).

[4] Cf. Jacques Derrida, Signature Event Context, in Limited Inc (Evanston: Northwestern University Press, 1988[1972]), 1–25.

[5] Cf. Judith Butler, Excitable Speech: A Politics of the Performative (New York: Routledge, 1997).

[6] Dorothea von Hantelmann, How to Do Things with Art. The Meaning of Art’s Performativity, trans. Jeremy Gaines and Michael Turnbull (Dijon: les presses du réel, 2010).

[7] Anke Haarmann, “Gibt es eine Methodologie künstlerischer Forschung?” in Wieviel Wissenschaft bekommt der Kunst? Symposium of the Science and Art working group of the Austrian Research Association, Academy of Fine Arts Vienna, November 4–5, 2011.

[8] Cf. Kim Cascone, “The Aesthetics of Failure: ‘Post-Digital’ Tendencies in Contemporary Computer Music,” Computer Music Journal 24, 4 (2000): 12–18.

[9] Cf. David Joselit, “Über Aggregatoren,” in Kunstgeschichtlichkeit. Historizität und Anachronie in der Gegenwartskunst, ed. Eva Kernbauer (Paderborn: Wilhelm Fink, 2015), 115–129.

[10] Elena Filipovic, Mark Leckey. UniAddDumThs, in The Artist As Curator. An Anthology (London: Koenig Books / Milan: Mousse Publishing, 2017), 384.

[11] Walter Benjamin, The Work of Art in the Age of Mechanical Reproduction, in Illuminations, ed. Hannah Arendt, trans. Harry Zohn (New York: Schocken Books, 1969[1935]), 6.

[12] Hanno Rauterberg, “Heiß auf Matisse,” Die Zeit 17 (Apr. 20, 2006), 20. Translated for this publication.

[13] Beryl Graham et al., Rethinking Curating. Art after New Media (Cambridge, MA: The MIT Press, 2010), 21.

[14] Cf. Rosalind E. Krauss, A Voyage on the North Sea. Art in the Age of the Post-Medium Condition (London: Thames & Hudson, 1999).

[15] Peter Weibel et al., The post-medial condition, Artecontexto 6 (2005): 12.

[16] Lev Manovich, The Death of Computer Art, Rhizome (Oct. 22, 1996), http://rhizome.org/community/41703 (accessed on Apr. 1, 2018).

https://www.springerin.at/en/2018/2/kopieren-als-performative-recherche/

Modes of Representation

An exhibition by Annie Teall and Danny VanZandt

Alexander Calder Art Center Padnos Gallery (January 23 - February 3)

“il n'y a pas de hors-texte” (“There is no outside of context”)[1]-Jacques Derrida

“its stability hinges on the stability of the observer, who is thought to be located on a stable ground of sorts”[2]-Hito Steyerl

The Modes of Representation exhibition seeks to explore artworks that deal with the arbitrary nature of systems of representation, i.e., to exhibit representations of reality that self-reflexively deconstruct the very modes in which they operate. Modes of Representation seeks to find connections between various structures of representation, from language’s illusive appearance as the truth of things, to databases’ attempts to display an encyclopedic and objective knowledge of the world, as well as spatial representations in the form of cartography and linear perspective, with their suggestion of an author and viewer at the “center of the universe”[3]. John Berger writes in Ways of Seeing of how “perspective makes the single eye the centre of the visible world. Everything converges on to the eye as to the vanishing point of infinity. The visible world is arranged for the spectator as the universe was once thought to be arranged for God.” His pulling back of the curtain on the representational form of linear perspective displays how it relies directly on a stable viewer (i.e. a stable origin point) located at the center of the universe, a model no different from Derrida’s deconstruction of logocentrism[4] as a closed-off system of signs lacking a “transcendental signifier”. Language has no stable origin point (the aforementioned “transcendental signifier”); rather, words merely refer back to other words ad infinitum, and so it is impossible for language to transcend its context: you can’t talk about language without using language.

Within both of these ideas is a referral back to Lacan’s “Order of the Symbolic”, the idea that we are trapped within a semiotic matrix with no access whatsoever to “the Real.”[5] Artists working from the early twentieth century (Magritte, Duchamp) up through and during the postmodern era (Kosuth, Tansey, Salle, etc.) have explored this space between the representational and the real, exposing its fault lines and mining them for a greater understanding of how we engage with the world around us, and furthermore how semiotics mediates that relationship. But with the turn to New Media[6] in the last couple of decades this interrogation of the simulacrum has become far more important due to the prevalence of online databases such as Facebook, Google Maps, and Wikipedia that can often appear deceptively as objective, “god’s-eye-view” representations of reality (not to mention the possible future of Big Data as the panopticon, seen through the controversy surrounding data mining by at-home smart devices like the Amazon Echo and Google Home).

This hypothetical “god’s eye view”, also known as the ‘Archimedean point’, “a point ‘outside’ from which a different, perhaps objective or ‘true’ picture of something is obtainable”[7], serves as a spatial model for Derrida’s claim re: language that “there is no outside of context”. Another example of this Archimedean third-person perspective would be cartographic representations of real space. Maps not only help to display the way in which we orient ourselves relative to the space around us, but also how this spatial orientation becomes a common model for how we understand ourselves relative to the world around us (and it should be noted that spatial metaphors pervade language). This sense of psychological projection, or “mapping”, also recalls the ‘psychogeography’ of Debord and the Situationists, defined as "the study of the precise laws and specific effects of the geographical environment, consciously organized or not, on the emotions and behavior of individuals."

Annie Teall’s Head Smashed in Buffalo Jump succinctly joins together these reflections on the arbitrary nature of sign systems, objective representation, spatial orientation, and the primacy of databases as symbolic form in the age of New Media[8]. Head Smashed in Buffalo Jump displays a process of deconstructing the epistemology of Wikipedia and Google Maps, both supposedly objective databases[9], by working through Wikipedia from an arbitrarily chosen starting page, and then following the internal path of hyperlinks from page to page by clicking the first locational link on each page. The final image is the product of then further replicating that path of linked locations in Google Maps, screen-capturing each route, and collaging them together.
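The link-following procedure described above can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions, not the artist's actual process: the page graph `TOY_PAGES`, its contents, the starting page, and the function name `follow_first_location_links` are all hypothetical stand-ins, and a hand-written dictionary takes the place of live Wikipedia pages. Each page lists its links in order, flagged as locational or not, and the walk always takes the first locational link until it runs out of links or loops back on itself.

```python
from typing import Dict, List, Tuple

# A link is (target page title, whether the target is a location).
Link = Tuple[str, bool]

# Hypothetical toy data standing in for Wikipedia pages and their links,
# listed in the order they would appear on each page.
TOY_PAGES: Dict[str, List[Link]] = {
    "Head-Smashed-In Buffalo Jump": [("Bison", False), ("Alberta", True), ("Canada", True)],
    "Alberta": [("Province", False), ("Canada", True)],
    "Canada": [("North America", True)],
    "North America": [("Continent", False), ("Canada", True)],
}


def follow_first_location_links(
    pages: Dict[str, List[Link]], start: str, max_steps: int = 20
) -> List[str]:
    """Walk from `start`, always taking the first locational link on a page."""
    path = [start]
    visited = {start}
    current = start
    for _ in range(max_steps):
        links = pages.get(current, [])
        # First link on the page that is flagged as a location, if any.
        next_title = next((title for title, is_loc in links if is_loc), None)
        # Stop at a dead end or when the walk would revisit a page.
        if next_title is None or next_title in visited:
            break
        path.append(next_title)
        visited.add(next_title)
        current = next_title
    return path


path = follow_first_location_links(TOY_PAGES, "Head-Smashed-In Buffalo Jump")
print(" -> ".join(path))
```

The resulting list of place names is what would then be traced, leg by leg, as routes in a mapping service. Everything that is not a location is silently skipped, which is one concrete way the apparently neutral database ends up shaping the path.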

Similarly, in her piece In Free Fall, which draws its name from Hito Steyerl’s essay on how vertical perspective acts as a visual model for Western philosophy (“we cannot assume any stable ground on which to base metaphysical claims or foundational political myths”), we again see the fragmentation of spatial representations drawn from Google Maps. However, this time these cartographic images are morphed with a striped motif that appears throughout her work. This motif, an allusion to the op-art work of Bridget Riley, normally appears in her work as a static, flat, two-dimensional image, but here we see it presented with a sense of three-dimensional depth, climbing the various image panes and drifting back and forth between foreground and background. It acts as a visual pun, calling our attention to how all two-dimensional representations of three-dimensional space are optical illusions.

Within my work there is a similar sense of poking fun at the Western tradition of representational art by means of calling attention to the slipperiness of signification that arises within images and language as a result of their dual nature as material and virtual. As Barthes wrote in Camera Lucida, “you cannot separate the windowpane of the image from the landscape”[10]. When we cast our gaze upon the image we see how our casual perception can often find it hard to reconcile that the image we are looking at is both the subject matter the image presents to us and the image screen itself (the photo paper, canvas, monitor, etc.): ‘ceci n’est pas une pipe’.[11] My paintings are images of images, both in that they are paintings of subject matter drawn not directly from life but secondarily from photographs, and in that they are paintings of photographs that themselves contain other paintings of photographs. By operating as meta-paintings (paintings of paintings) they subvert the narrative continuity of the image screen and draw attention to themselves as authored, furthermore reminding us that our perception of reality itself is authored. The wall between image and reality has vanished, leaving us in an infinite regression of constructed images of reality. There is no “outside of context”; we are lost in the funhouse.

-Danny VanZandt

[1] Jacques Derrida, Of Grammatology

[2] Hito Steyerl, In Free Fall: A Thought Experiment on Vertical Perspective

[3] John Berger, Ways of Seeing

[4] “the tradition of Western science and philosophy that regards words and language as a fundamental expression of an external reality. It holds the logos as epistemologically superior and that there is an original, irreducible object which the logos represents. It, therefore, holds that one's presence in the world is necessarily mediated. According to logocentrism, the logos is the ideal representation of the Platonic Ideal Form.” -Wikipedia ‘Logocentrism’

[5] Jacques Lacan, Écrits: A Selection

[6] Lev Manovich, The Language of New Media

[7] ‘Archimedean Point’, The Oxford Dictionary of Philosophy

[8] Lev Manovich, Database as Symbolic Form

[9] "attempt to render the full range of knowledge and beliefs of a national culture, while identifying the ideological perspectives from which that culture shapes and interprets its knowledge" - Edward Mendelson Encyclopedic Narrative

[10] Roland Barthes, Camera Lucida

[11] René Magritte, The Treachery of Images

#art#contemporary art#annie teall#danny vanzandt#gvsu#grand valley#visual studies#visual culture#roland barthes#lev manovich#jacques derrida#jacques lacan

Preamble, Raison d'être

We live in an era of disruption in which powerful global forces are changing how we live and work. As a global community we face climate change and the consequences of global warming; resource scarcity – particularly for energy, minerals and freshwater; societal ageing, as life expectancy increases and birth rates concurrently fall; a growing surplus of global poor who form an ever-larger ‘unnecessariat’; and, perhaps most critically, a new machine age which will herald ever-greater technological unemployment as progressively more physical and cognitive labour is performed by machines, rather than humans. On an individual level we face another challenge. Since Information Wants To Be Free, the internet has become our primary source of knowledge and guidance. What used to be the idea of a decentralized stateless society in which people interact through purely voluntary ideological and moral transactions is gone, and the virtual space has become another battlefield. Our vision is increasingly universal, but our agency is ever more reduced. We know more and more about the world, while being less and less able to do anything about it. If we find that play is based on the manipulation of certain images, on a certain "imagination" of reality (i.e. its conversion into images), then our main concern will be to grasp the value and significance of these images and their "imagination".

The traces we leave behind are the currency in which we pay for our curiosity. Data is an asset, an asset that grows in value through use. A single person’s data is not very valuable. Combining the data generated by thousands of people is a completely different story. Coupling that with data generated in different situations, combining datasets, creates new insights and value for different actors and stakeholders. Marx revealed the danger of the profit motive as the sole basis of an economic system: capitalism is always in danger of inspiring men to be more concerned about making a living than making a life. Unfortunately, there is massive propaganda urging everyone to consume. Consumption is good for profits and consumption is good for the political establishment.

In 2018, the Oxfam report said that the wealth gap continued to widen in 2017, with 82% of global wealth generated going to the wealthiest 1%. The 2019 Oxfam report said that the poorest half of the human population has been losing wealth (around 11%) at the same time that a billionaire is minted every two days.

Fake news travels faster and further on social media sites. Algorithm-driven news distribution platforms have reduced market entry costs and widened the market reach for news publishers and readers. At the same time, they separate the role of content editors and curators of news distribution. The latter becomes algorithm-driven, often with a view to maximize traffic and advertising revenue. That weakens the role of trusted editors as quality intermediaries and facilitates the distribution of false and fake news content.

The resulting sense of helplessness, rather than giving us pause to reconsider our assumptions, seems to be driving us deeper into paranoia and social disintegration: more surveillance, more distrust, an ever-greater insistence on the power of images and computation to rectify a situation that is produced by our unquestioning belief in their authority.

Mobile technology has spread rapidly around the globe. Today, it is estimated that more than 5 billion people have mobile devices, and over half of these connections are smartphones. Today, if you don’t bring your phone along it’s like you have missing limb syndrome. It feels like something’s really missing. We’re already partly a cyborg or an AI symbiote; essentially it’s just that the data rate to the electronics is slow. In some sense we have become screens for giant displays. This screen is established by the submersion of the individual in a flux of disparate messages, with no hierarchies of principles: a flat surface, on which pictures or words are shown. We’re all products of our environment, therefore we need to consider how screens affect us. For instance, we should look at the flat nature of screens. Two-dimensional thinking implies concepts that are flat or only partially representative of the whole. Three-dimensional thinking implies the first part of 2D thinking conjoined with intersecting dimensions, rendering a deeper field of meaning.

On the other hand, it’s not how long we’re using screens that really matters; it’s how we’re using them and what’s happening in our brains in response. So what happens now that we move around less by ourselves, that we spend more time in public transport and soon in self-driving cars, always linked to our devices? As tranquillity vanishes, the “gift of listening” goes missing, as does the “community of listeners.” Our community of activity [Aktivgemeinschaft] stands diametrically opposed to such rest. The “gift of listening” is based on the ability to grant deep, contemplative attention, which remains inaccessible to the hyperactive ego. Screen addiction is real.

If one only possessed the positive ability to perceive (something) and not the negative ability not to perceive (something), one’s senses would stand utterly at the mercy of rushing, intrusive stimuli and impulses. In such a case, no “spirituality” would be possible. If one had only the power to do (something) and no power not to do, it would lead to fatal hyperactivity.

What we miss is time for contemplation, time to listen to all the senses of our bodies. We know that the visual part of our brain is the dominant part, and that if you can get its attention and get it on your message, it talks the rest of your brain into anything you want it to. Yet vision is the one sense that the two-dimensional screen triggers in particular.

The body is nothing but the fundamental phenomenon to which, as a necessary condition, all sensibility, and consequently all thought, relates in the present state of our existence. The body, in other words, is the door to our world, and the overuse of one sense in particular warps our general perception and, further, what we make of it.

This becomes even more apparent when thinking about relationships and discussion. You cannot not communicate. Every behavior is a kind of communication. Because behavior does not have a counterpart (there is no anti-behavior), it is not possible not to communicate. Therefore we miss important parts of a message if it is reduced to its verbal content, meaning that relationships and communication cannot be as strong as we wish them to be. Something is missing.

In an age when drugs or psychological techniques can be used to control a person's actions, the problem of free control over one's body is no longer a matter of protection against physical restraint. One must reclaim the connection to his or her body in order to truly communicate, and speak about his or her body in order to understand his or her true perspective on this world.

Final Major Project Proposal

Project Proposal

Over the course of the last 7 months, my system of reasoning and understanding the world has changed. Coming from a science A-level background, I developed a strong appreciation for physical and mathematical concepts; however, my real passion lies in using these skills as inspiration and as tools to influence my creative work. I have found that the core of my intuition has shifted away from seeking conclusions, and I am currently learning to become accustomed to treating things as indeterminate in nature.

I began the foundation exploring three-dimensionality and light through the creation of maquettes. This work became more related to architecture, which I will begin studying in September this year. The main driver of this work was a curiosity to learn about the properties of materials and shapes in order to update my preconceptions of how the physical world works.

I have redirected the project’s aim since writing the first draft. The initial idea was to capture the progression of my thoughts through writing. After some retrospective thinking I’ve realised a project of this nature would be too centred on the self. Exploring my thought patterns wouldn’t allow for the kind of artistic development and research I am looking for.

The driver for the FMP comes from a compulsion to explore symbolic representation of information.

The definition of the symbol I will use is “a thing that represents or stands for something else, especially a material object representing something abstract.” This definition comes from Oxford University Press’s dictionary. The material in my case will be communicated through drawing in a number of different mediums such as paint, film/photography and material.

What makes something ‘symbolic’ can be subjective.

The idea for the project came from sketching in cemeteries and realising that cemeteries are massive data centres. Cemeteries are spaces that you can walk through and access pockets of numerical information that connect to human lives. The change to viewing cemeteries from an information perspective then raised the question, “What else can I consider through the lens of information?”

Within this, I have an idea to create some mini film pieces which document my findings. Some of my thought processes will be documented through photography accompanied by writing. This thought documentation will begin with photographs and thoughts from the cemeteries I’ve spent time in. I’ll be looking at objects and environments and investigating their link to informatics. My understanding of informatics will develop with the project and as I continue to read ‘The Information’ by James Gleick. I’m unsure to what depth I will discuss informatics in the technical sense; however, I would like to feed in some of the knowledge of information theory that I have learnt.

Continuing my interest in materials and structure, the project will also look at information through physical objects. An idea is to film sheet materials whilst manipulating them with forces accompanied with sounds and textures to communicate my thoughts responding to music.

The project, critical analysis of my work and afterthoughts will be documented on this website. I am going to work on ‘mini projects’ that together will form the final major project. I’ll be seeking and responding to feedback from critical reviews and discussing my thoughts with friends and other people doing their projects. I would like to gain perspective on my work by learning about other people’s project journeys, and to keep going to exhibitions, whether or not I think they are relevant to my project, in order to feed my brain with a steady flow of information.

Activity 4: Exploration of Science Education Standards

Describe what is meant by Three-Dimensional Learning.

Three-Dimensional Learning is the process of combining practices, disciplinary core ideas, and cross-cutting concepts to support the teaching of the curriculum and state standards used in classrooms.

Practices help to define behaviors that scientists engage in as they investigate and build models and theories about the natural world and engineering practices. It is seen more as a skill to show scientific investigation and knowledge on how to complete the tasks, and it highly encourages inquiry.

Cross-Cutting Concepts link the different domains of science. These concepts need to be made explicit to students so that they can build an organizational schema. Examples of cross-cutting concepts include cause and effect, proportion, and patterns.

Disciplinary Core Ideas help focus the instruction and assessment on the important concepts of science. They must have at least two of the following in order to be a core idea:

· Have broad importance across multiple science or engineering disciplines, or be a key organizing concept of a single discipline

· Provide an important tool for understanding or investigating more complex ideas

· Relate to the interests and life experiences of students, or be connected to societal or personal concerns that require scientific knowledge

· Be teachable and learnable over multiple grades to increase knowledge depth

The ideas are grouped by physical sciences, life sciences, earth and space sciences, and engineering, technology and applications of science.

How are the Wisconsin Standards for Science (WSS) connected to other disciplines such as math and literacy?

Disciplinary literacy is the combination of material knowledge, experiences, and skills with the ability to read, write, listen, speak, and think critically in order to complete a task in a specific field of study. In science, students not only read directions for experiments but also listen to directions, learn new vocabulary words, watch videos, and complete experiments, all of which combine literacy and science.

Disciplinary math is the combination of material knowledge, experiences, and skills with the ability to understand numbers, operations, directions, orders, measuring, and equations. It comes into science when students measure materials, follow directions and operations, and solve equations and work with numbers in order to get answers for an experiment.

The goal of all subjects should be to be cross-disciplinary, since students get more out of learning when they are learning more than one concept at once. They learn more with less burnout.

Describe something that you have done either in this class or outside of this class, perhaps in previous classes, that indicates that you have met the Performance Indicator.

SCI.ESS2.B.2 – Maps show where things are located. One can map the shapes and kinds of land and water in any area.

In 3rd grade we were introduced to maps by being shown different examples. Once we understood what a map was and what it showed, we were told to make our own: a map of our neighborhood. We drew out our map and created a key to show what was located where. On my map I put things like houses, a pond that was behind my house, roads, and the park that was located in my neighborhood. By drawing that out and creating a key, I came to understand the location of everything in my neighborhood and how to proportion it on a map. I was also able to show where water was located near me, and to tell what was located where based on the key on the map.

SCI.ETS3.C.K-2 – An engineering problem can have many solutions. The strength of a solution depends on how well it solves the problem.

In 5th grade, we had a competition in our science class. We were told that there were people in a car (a toy Hot Wheels car) that was stranded on an island (one desk) and needed to somehow get across to land again (another desk). We were only given toothpicks, marshmallows, Popsicle sticks, a ruler, scissors, and a foot’s worth of tape. In small groups of 4-5 people we had to figure out how we were going to get the car across by building something. Each group made something different, including bridges and boats. It was obvious that some structures were stronger and solved the problem better than others, as some structures didn’t even hold the weight of the car. This showed that I met the performance indicator: I was able to solve an engineering problem, I could see that each group made something different, so there were many solutions, and the fact that some structures didn’t even hold the car showed that a solution is weaker when it doesn’t solve the problem.

SCI.LS2.A.2 – Plants depend on water and light to grow.

In my 6th grade science class, we took seeds (they were a leafy grass seed) and planted them in soil. We had a control group, which was given 50 ml of water every day and kept under a light that represented sunlight. We then had three study groups: one where we gave the plant 50 ml of water every day and kept it under no light, another where we left the plant under the light without giving it water, and a last one where we gave the plant 50 ml of Sprite every day and kept it under the light. We studied the plants every day for two weeks, measured how tall each plant had grown each day, and recorded the data. We found that our control group grew the tallest, with the other three hardly showing signs of growth or not growing at all. That study really helped to show that plants need light and water to grow; otherwise they don’t survive very well, if at all.

Astronomical ‘Rosetta Stone’ to Change Our Understanding of the Universe

It was the tiny wobble that shook science. On September 14, 2015, the billion-dollar, multi-decade mega-experiment LIGO (the Laser Interferometer Gravitational-Wave Observatory) felt gravitational waves rippling through Earth, confirming one of the thorniest predictions of Einstein’s theory of general relativity and inaugurating the era of gravitational wave astronomy.

Video: This simulation shows the final stages of the merging of two neutron stars.

But from that very first Nobel Prize-winning moment, researchers were already thinking about the next big thing: zeroing in on a burst of light from a cosmic smashup that produced the gravitational waves. Finding a light counterpart for a gravitational wave source would instantly fix the newborn science of gravitational wave astronomy to a firm observational foundation and give astrophysicists a multi-dimensional new look at the sky.

Before LIGO’s new-and-improved detectors booted up in 2015, dozens of telescope teams were already lined up to chase down every LIGO find. All signed confidentiality agreements, promising to keep their targets secret. For years, their attempts turned up nothing, even as LIGO racked up three more detections.

But now, thanks to a new gravitational wave detection that’s unlike any of the previous four, they have finally done it. The discovery was announced this morning at the National Press Club in Washington, D.C., and will be detailed in papers in Physical Review Letters, Nature, Astrophysical Journal Letters, and Science.

In the wake of the discovery, astronomers have mobilized some 70 ground- and space-based observatories to examine the source of the gravitational waves, which they think is a neutron star collision in a galaxy 130 million light years from Earth. Combining radio, optical, infrared, gamma-ray, X-ray, and gravitational wave observations, astrophysicists have created the most complete description ever of a neutron star merger, giving resounding closure to a whole constellation of astronomical mysteries and marking a major advance in the way we learn about our universe.

The Discovery

The morning of August 17, Edo Berger, a professor of astronomy at Harvard and the leader of one of the LIGO follow-up teams, was midway through a committee meeting when both his office and cell phones started ringing. He ignored them at first, but the calls kept coming. Finally, Berger picked up his cell phone. The screen was dense with alerts. First, one from the Fermi Gamma-ray Space Telescope: its gamma-ray burst detector had picked up a two-second blast of gamma rays. That wasn’t unusual; Fermi snags a new gamma-ray burst almost daily. But then came a rare alert from LIGO. Earlier that morning, the detector in Hanford, Washington, had detected gravitational waves. Berger checked the time stamps: the events were only two seconds apart.

That’s when Berger kicked everybody out of his office.

Before LIGO, almost everything we knew about the universe came to us in the form of light or other electromagnetic waves. The sky was a silent movie, a riotous rainbow reel of radio waves, visible light, X-rays, gamma-rays, and everything in between. But the movie was missing a soundtrack.

Gravitational waves are that lost soundtrack. Gravitational waves are ripples in space-time that radiate out from massive objects when they change speed or direction. They carry information about some of the most awesome events in the universe—black holes colliding, dead star cores crashing into each other, mega-stars going supernova—events about which light provides only limited clues. The gravitational wave energy spilling out in the final moments of a black hole merger can easily surpass the light energy of every star in every galaxy in the sky.

An artist’s illustration of two merging neutron stars. The rippling space-time grid represents gravitational waves that travel out from the collision, while the narrow beams show the bursts of gamma rays that are shot out just seconds after the gravitational waves. Swirling clouds of material ejected from the merging stars are also depicted. The clouds glow with visible and other wavelengths of light.

The challenge, though, is lining the soundtrack up with the picture, for despite its exquisite sensitivity, LIGO is quite poor at “localizing” gravitational wave sources—that is, at pinning down their sky coordinates and distance from Earth. LIGO’s yawning search areas span hundreds of square degrees, making it difficult for telescopes to cover them sensitively. Even if a transient light source does turn up in the search zone, it’s practically impossible to be sure it’s not just a coincidence.

Researchers made significant headway on the localization problem in August, when LIGO teamed up with Virgo, a freshly-upgraded gravitational wave observatory in Italy. LIGO and Virgo made their first joint gravitational wave detection on August 14. The search area for the August 14 event was about ten times smaller than it had been for LIGO’s solo detections, but still, astronomers could not find an electromagnetic counterpart for the gravitational waves.

That wasn’t actually so surprising, given the source of the gravitational waves: two black holes merging into one. Though some subspecies of black hole mergers may give off electromagnetic light, most are probably as dark as their progenitors.

The alerts on Berger’s phone suggested that the new detection was something different. All four of the previous gravitational events were split-second blips: when black holes swallow each other up, it’s a tidy, one-gulp affair. The new find rumbled on for more than a minute, hinting that it might be something else, something messier. Plus, the strength of the gravitational wave signal meant that the colliding objects were relatively lightweight, with a combined mass of about three Suns. That pointed away from a black hole merger and toward something LIGO had never seen before: the collision of two neutron stars.

A neutron star is not really a star at all. It is the bare stellar corpse left behind by a supernova explosion, transformed by cascading gravitational collapse into something as dense as an atomic nucleus. (Astronomers like to boast that one teaspoon of neutron star stuff would weigh billions of tons.) “When we call them stars, we’re short-selling how strange and exotic they are,” says Robert Owen, a physicist at Oberlin College in Ohio who models black hole collisions as part of the Simulating eXtreme Spacetimes collaboration. “They are essentially a single atom the size of a star.”
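The teaspoon boast is easy to sanity-check with a back-of-the-envelope calculation. The sketch below is only a rough estimate under stated assumptions: it takes "as dense as an atomic nucleus" to mean roughly 2.3 × 10^17 kilograms per cubic meter (a commonly quoted figure for nuclear density, not a number from this article) and a teaspoon to be 5 milliliters. The constant and function names are illustrative.

```python
# Assumed inputs (not from the article): approximate density of nuclear
# matter, and the volume of a standard 5 mL teaspoon.
NUCLEAR_DENSITY_KG_PER_M3 = 2.3e17
TEASPOON_VOLUME_M3 = 5e-6
KG_PER_METRIC_TON = 1000.0


def teaspoon_mass_tons(
    density_kg_per_m3: float = NUCLEAR_DENSITY_KG_PER_M3,
    volume_m3: float = TEASPOON_VOLUME_M3,
) -> float:
    """Mass, in metric tons, of one teaspoon of material at the given density."""
    return density_kg_per_m3 * volume_m3 / KG_PER_METRIC_TON


mass_tons = teaspoon_mass_tons()
print(f"One teaspoon: about {mass_tons:.2e} metric tons")
```

With these inputs the answer comes out at around a billion metric tons. Matter deeper inside a neutron star is thought to be denser than an ordinary nucleus, which would push the figure higher, so "billions of tons" is the right order of magnitude.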

There could be billions of neutron stars in the Milky Way alone, many of them orbiting each other in binary pairs. Over billions of years, those pairs gradually spiral in toward each other until finally the two neutron stars collide, merging into either a black hole or a new, more massive neutron star. Astronomers can’t actually see the neutron stars merging, but theorists predict that telescopes should be able to detect telltale aftereffects: a short burst of gamma rays and a brief, relatively dim flash of light called a “kilonova,” powered by the radioactive decay of shrapnel from the smashup. This shrapnel could also account for the heavy elements, like gold and platinum, in the universe.

Astronomers had some evidence linking short gamma-ray bursts and kilonovae with neutron star mergers, Berger says, but the evidence was circumstantial. For years, researchers had been waiting for an opportunity to solidify the links. Berger saw the alerts on his phone and knew that this might be that chance: If astronomers could find a kilonova that matched the Fermi gamma-ray burst and the gravitational wave detection from LIGO and Virgo, that would clinch it. The three observations together would be a sort of astronomical Rosetta Stone.

Before Berger and his team could start searching for that kilonova, though, they had to know where to look. And the first LIGO alert provided no information at all on where astronomers might point their telescopes.

The problem was that only one of LIGO’s two detectors had picked up the signal. Though it is described as a single observatory, LIGO is actually made up of two instruments, spaced 2,000 miles apart. One half of LIGO sits in Livingston, Louisiana, while its identical twin is in Hanford, Washington. The redundant design helps physicists distinguish gravitational waves from other things that shake the Earth, like semi trucks and seismic motion. It also helps them locate gravitational wave sources.

While the Hanford detector reported the vibrations, in Louisiana, a “noise event”—rumbles from a passing vehicle, maybe—had caused signal-detection software to ignore the gravitational wave vibration. Acting quickly on the Hanford detection, LIGO scientists salvaged the Louisiana data, rooted out the signal, and correlated their findings with data from Virgo.

Virgo, meanwhile, barely saw it, suggesting that the gravitational waves were coming from somewhere inside its blind spot. Working backwards, researchers used that non-detection to generate a Virgo search zone, which they combined with the LIGO data to zero in on a 30-square-degree patch of sky around the constellation Hydra.

Hydra was set to rise over Chile, home to some of the most sensitive telescopes on Earth, at about 8 pm Eastern Time. Half a dozen teams scrambled to get ready. Berger’s group lined up the Dark Energy Camera, which is mounted on the Victor M. Blanco four-meter Telescope at the Cerro Tololo Inter-American Observatory. Because of its wide field of view, the camera would be able to cover the whole search area in just ten snapshots.

Meanwhile, other groups, including the DLT40 survey at Cerro Tololo and the 1M2H project at the Las Campanas Observatory, searched the area galaxy by galaxy. They were all after the same thing: some bright light source that hadn’t been there the night before. “It was like being in the emergency room,” Berger says. “Very tense, very exciting.”

Less than an hour later, Berger recalls, they had it: a burst of light coming from a galaxy called NGC 4993, 130 million light years away from Earth.

“That was an astounding moment,” Berger recalls. “We were all silent for a few minutes. We took a few minutes to absorb the shock of seeing this and then get the plan into action.”

The other teams had homed in on it, too, and within the span of less than an hour they had all transmitted the precise coordinates of the kilonova to the rest of the LIGO follow-up network. Three more groups reported seeing it later that night. It was the first time astronomers had ever matched up electromagnetic waves with gravitational waves.

Adding Observations

Since then, physicists have combined the gravitational wave signal with observations from some 70 different observatories, covering the electromagnetic spectrum from bottom (radio waves) to top (gamma rays), to piece together an unprecedented picture of the neutron star collision. “Studying black holes tells us how space-time behaves in its most extreme limits, and studying neutron stars tells us how matter behaves in its most extreme limits,” Owen says. Physicists have used data from the event to verify their working models of neutron stars, ruling out some wilder variants, and to confirm Einstein’s prediction that gravitational waves should travel at the speed of light.

They have also parsed the gravitational wave readings to reveal a remarkably detailed narrative of the neutron stars’ final moments, says Wynn Ho, an associate professor of mathematics and physics and astronomy at the University of Southampton, where he studies gravitational wave sources and is part of the LIGO Scientific Collaboration. At the moment LIGO first felt the gravitational waves, the neutron stars were circling each other about 15 times per second. For the next minute or so, they danced closer and closer, faster and faster. With less than a second left to go, they were whipping around each other 100 times each second.
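As a rough back-of-the-envelope illustration of those orbital rates, the sketch below uses Newtonian gravity (Kepler's third law for a circular orbit) to estimate how far apart the two stars were at each stage. It is not the analysis LIGO performed: the function names are made up for this example, the total mass of three Suns is taken from the estimate quoted earlier, and relativistic effects are ignored. The one added physical fact is that the dominant gravitational-wave frequency of a circular binary is twice its orbital frequency.

```python
import math

G = 6.674e-11      # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30   # mass of the Sun, kg


def orbital_separation_m(orbits_per_second, total_mass_suns=3.0):
    """Newtonian separation in metres of a circular binary.

    Kepler's third law: a^3 = G * M / omega^2, with omega = 2 * pi * f_orbit.
    The default total mass (three Suns) is an assumption taken from the article.
    """
    omega = 2 * math.pi * orbits_per_second
    return (G * total_mass_suns * M_SUN / omega ** 2) ** (1 / 3)


def gw_frequency_hz(orbits_per_second):
    """Dominant gravitational-wave frequency: twice the orbital frequency."""
    return 2 * orbits_per_second


for f_orbit in (15, 100):
    separation_km = orbital_separation_m(f_orbit) / 1000
    print(f"{f_orbit} orbits/s: GW frequency {gw_frequency_hz(f_orbit)} Hz, "
          f"separation about {separation_km:.0f} km")
```

Under these assumptions the separation shrinks from a few hundred kilometres at 15 orbits per second to roughly a hundred kilometres at 100 orbits per second, which is only a few times the size of the stars themselves.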

At this point, gravity started to distort the shape of each neutron star, Ho says, raising up “tides” of star-stuff in the gap between the stars. The two stars touched and, finally, merged.

What did the cataclysm leave behind? If the remnant of the merger is spinning fast enough, it may be able to stave off gravitational cave-in and persist as a neutron star for a while. But ultimately, as it spins down, the star will give way and collapse into a black hole, Ho says. LIGO scientists tried but were unable to pick up the gravitational wave signature of a new, fast-spinning neutron star, but that doesn’t necessarily mean that the merger immediately blinked into a black hole, he says.

Meanwhile, researchers have also confirmed the signatures of heavy elements like lead and gold near the kilonova, providing the best evidence yet that Earth’s store of these metals really does come from neutron star mergers. Berger also hopes to study the gamma ray burst, the nearest one of its kind with an established distance measurement, to learn more about how gamma rays jet out from a neutron star merger.

“It’s extremely rare to have one singular event that answers so many questions, that has so many firsts,” Berger says. “I’m trying to savor this moment, because I don’t think it happens very often.”

Assignment Two

Research

The next assignment asks us to choose what to code: a 3D Object, a Print or an Other Experience.

I have decided to code something that can be printed and that uses the fundamental design principles of movement, contrast and repetition. I was led to this idea by some precedent works:

Casey Reas:

http://reas.com/p6_images3_p/

I really liked these puff prints that Reas has produced. I think they are very simple yet very effective. I want to create something similar; however, I want my pattern to be “Op Art”, so that when you look at it, it looks like it is moving.

Op Art Research

This type of art is very abstract and is usually in black and white. When people look at it, there is a sense of movement and vibration, and sometimes hidden images become visible as well. Op Art relies on how vision works: the effect appears when two elements contrast strongly with each other. One way to create it is with lines and patterns placed close to each other. Another way uses how our retina adjusts to light, with lines that create afterimages.

For further research, I used key terms to find specific types of Op Art:

Generative Op Art:

http://www.soban-art.com/definitions.asp

From reading the link above I have learnt that generative art is usually created with algorithms and often produces graphics that visually represent processes. I found some generative art processes within Op Art itself, and the animations above are the ones I liked. The way the foreground and background move reinforces the idea of Op Art. There are many design principles at work, but the most obvious are the contrast between the black and white and the movement.

Installation Op Art:

http://www.theartstory.org/movement-installation-art.html

Installation art is art placed inside a three-dimensional space. The Op Art installations in the images above are visually quite different. The first image is an open room covered in a patterned wallpaper that looks like it's moving towards the viewer, emphasising the fundamental design principle (fdp) of movement. The second image plays with perspective: it looks like a girl walking across a rope and watching the cars move along below her. I really liked this image as it showed Op Art in a different way.

Parametric Op Art:

http://mikkokanninen.com/new/en/parametric.html

The lines in the images above create such a cool illusion effect. This type of design is based on wave equations. I found some simple parametric art that was really effective. Both of the images I have chosen for this type of art are based on the use of lines and the fdp of movement. However, the first one uses colour and is flat, which gives a different Op Art effect compared to the second one, which is kind of three-dimensional and black and white.
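To make the wave-equation idea concrete, here is a minimal sketch of how a printable pattern like this might be coded. It is only one possible approach, not how the linked works were made: the function name `wave_lines_svg` and all of its parameters are made up for this example. It draws evenly spaced black sine-wave lines on white (contrast and repetition) and shifts the phase slightly from line to line, which is what suggests movement.

```python
import math


def wave_lines_svg(width=400, height=400, n_lines=40,
                   amplitude=12.0, wavelength=120.0, samples=100):
    """Return an SVG string of black sine-wave lines on a white background."""
    spacing = height / (n_lines + 1)
    polylines = []
    for i in range(n_lines):
        base_y = spacing * (i + 1)
        # Shift the phase a little on each line so the ripples appear to drift.
        phase = 2 * math.pi * i / n_lines
        points = []
        for s in range(samples + 1):
            x = width * s / samples
            y = base_y + amplitude * math.sin(2 * math.pi * x / wavelength + phase)
            points.append(f"{x:.1f},{y:.1f}")
        joined = " ".join(points)
        polylines.append(
            f'<polyline points="{joined}" fill="none" '
            f'stroke="black" stroke-width="4"/>'
        )
    body = "\n".join(polylines)
    return (
        f'<svg xmlns="http://www.w3.org/2000/svg" width="{width}" '
        f'height="{height}" viewBox="0 0 {width} {height}">\n'
        f'<rect width="100%" height="100%" fill="white"/>\n'
        f'{body}\n</svg>\n'
    )
```

Writing the returned string to a file such as `op_art.svg` gives a vector image that can be opened in a browser and printed at any size. Changing `amplitude`, `wavelength` or the number of lines changes how strongly the pattern seems to vibrate.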

Data Visualisation Op Art:

http://searchbusinessanalytics.techtarget.com/definition/data-visualization

Data Visualisation presents patterns and trends visually to help people comprehend the significance of data.

Digital Fabrication Op Art:

https://www.opendesk.cc/about/digital-fabrication

Digital fabrication is a manufacturing process in which the machines are controlled by computer programs. The images above show some digitally fabricated materials produced with computer software. The first one is a dress that shows movement as it flows. The second one is a sheet of colourful patterns that follow Op Art by sitting so close to each other that they create vibration and movement when you look at it.

With the help of these designs across the different key terms, I have been able to visually identify Op Art in multiple ways. This has helped me think about which format to work with, as there are many.

Value of Nothing by Daniel Rubinstein

Source: Journal of Visual Art Practice, 15:2-3, 127-137, 2016

‘the latent image has very specific meaning in photographic chemistry and physics as the invisible image left on the light sensitive surface by exposure’ p127

‘the latent image is both ‘fundamental’ and there is nothing to say about it... the invisible image that has been forgotten’ p128

‘the transition towards electronically produced and algorithmically computed images suggests that the non-visual aspects of images are at least as important to meaning creation as visual qualities’ p128

‘a multiplicity of forces... the triumph of the photographic image as the internally eloquent and profoundly apt expression of networked culture... how representation affects norms... the ethical and political consequences of the acceptance of images as purveyors of truth’ p128

‘The digital image is based in part at least on old means of production’ p128

‘the paradigmatic shift of digitality, which both overcomes and transfigures the limits of representation’ p128

‘a skeuomorph. - its adherence to the visual conventions of photography is simply an ornamental decision taken by the creators of the processing algorithms... the data captured by the camera sensor could be just as easily output as something completely different: a string of alphanumeric characters, a sound or even remain unprocessed as binary data’ p128

‘the notion of the digital image as a self-contained entity is misleading... the essence of the photographic image online has little to do with the semiotics of representation, economy of signs, signifiers or indices... the truth of the image has to be found in its inherent incompleteness’ p129

‘the digital image consists not in reflecting external reality but in showing the extent to which reality itself is inseparable from the computational processes that shape it... images do not appear as singular, individual or discrete’ p129

‘the ethics of traditional photography is inseparable from the assumption of correspondence between the image and some form of reality’ p129

On The Arcades Project by Walter Benjamin - ‘the most astounding aspect of modernity is in the way it both demolishes the recent past and recovers forms of pre-rational and pre-historic knowledge... modern technology paradoxically points towards the past as well as towards the future’ p129

‘new globalised modernity undermined the foundations of its own metaphysical order that was posited on linear chronological time, flat spatiality, rational logic and the clearest distinctions between spirit and matter, image and reality’ p130

‘the subject comprehends the world around through representing it to itself as an image’ p130

‘digitality renders the image as a calculable surface... the photograph is valued not as a singular object but as a resource to be deployed in endless and variegated successive contexts... the visible aspect of the digital image on the computer screen conceals the immense and unimaginable forces that operate behind the surface of the screen’ p131

‘this paradigm shift from the visual to the incalculable, photography has become something immense, even unimaginable... [some refer to] the post-industrial technical apparatus which supports image production... [as] ‘data shadows’’ p131

‘the digital and networked image is not an image at all, rather it is a two dimensional subset of a four-dimensional object’ p131

‘as the digital image traverses the network it unfolds within two perimeters that constitute its envelope: the internal kernel of its specific origins and the conditions of creation and the external boundary that is limited only by the limit of the network’ p131/2

‘space of the visible image... must be considered phenomenologically as the embodiment of the network by the user. The digital born image is never fixed to a single viewing position, it moves between spaces’ p132

‘the phenomenological experience of time online is radically different from chronological, or linear time... photography here does not perform the function of an indexical connection with the past, but of manifest bifurcation of present into multiple streams... this destabilisation of photographic meaning is the direct result of the image being detached from its teleological origins’ p132