#cloud-native data systems


Text

Data Unbound: Embracing NoSQL & NewSQL for the Real-Time Era.

Sanjay Kumar Mohindroo. skm.stayingalive.in Explore how NoSQL and NewSQL databases revolutionize data management by handling unstructured data, supporting distributed architectures, and enabling real-time analytics. In today’s digital-first landscape, businesses and institutions are under mounting pressure to process massive volumes of data with greater speed,…

#ACID compliance#CIO decision-making#cloud data platforms#cloud-native data systems#column-family databases#data strategy#data-driven applications#database modernization#digital transformation#distributed database architecture#document stores#enterprise database platforms#graph databases#horizontal scaling#hybrid data stack#in-memory processing#IT modernization#key-value databases#News#NewSQL databases#next-gen data architecture#NoSQL databases#performance-driven applications#real-time data analytics#real-time data infrastructure#Sanjay Kumar Mohindroo#scalable database solutions#scalable systems for growth#schema-less databases#Tech Leadership


Text

Why Germany Is Still Struggling with Digitalization – A Real-Life Look from Finance

Working in Germany, especially in a field like Finance, often feels like stepping into a strange paradox. On one hand, you’re in one of the most advanced economies in the world—known for its precision, engineering, and efficiency. On the other hand, daily tasks can feel like they belong in the 1990s. If you’ve ever had to send invoices to customers who insist they be mailed physically—yes, by…

#automation#business digitalization#business modernization#cash payments#change management#Clinics#cloud services#communication barriers#cultural habits#data privacy#digital future#digital mindset#digital natives#digital platforms#digital resistance#digital tools#digital transformation#digitalization#Distributors#document digitization#EDI#education system#electronic invoicing#email invoices#fax orders#filing cabinets#finance automation#finance department#future of work#generational gap


Text

Available Cloud Computing Services at Fusion Dynamics

We Fuel The Digital Transformation Of Next-Gen Enterprises!

Fusion Dynamics provides future-ready IT and computing infrastructure that delivers high performance while being cost-efficient and sustainable. We envision, plan and build next-gen data and computing centers in close collaboration with our customers, addressing their business’s specific needs. Our turnkey solutions deliver best-in-class performance for all advanced computing applications such as HPC, Edge/Telco, Cloud Computing, and AI.

With over two decades of expertise in IT infrastructure implementation and an agile approach that matches the lightning-fast pace of new-age technology, we deliver future-proof solutions tailored to the niche requirements of various industries.

Our Services

We decode and optimise the end-to-end design and deployment of new-age data centers with our industry-vetted services.

System Design

When designing a cutting-edge data center from scratch, we follow a systematic and comprehensive approach. First, our front-end team connects with you to draw a set of requirements based on your intended application, workload, and physical space. Following that, our engineering team defines the architecture of your system and deep dives into component selection to meet all your computing, storage, and networking requirements. With our highly configurable solutions, we help you formulate a system design with the best CPU-GPU configurations to match the desired performance, power consumption, and footprint of your data center.

Why Choose Us

We bring a potent combination of over two decades of experience in IT solutions and a dynamic approach to continuously evolve with the latest data storage, computing, and networking technology. Our team constitutes domain experts who liaise with you throughout the end-to-end journey of setting up and operating an advanced data center.

With a profound understanding of modern digital requirements, backed by decades of industry experience, we work closely with your organisation to design the most efficient systems to catalyse innovation. From sourcing cutting-edge components from leading global technology providers to seamlessly integrating them for rapid deployment, we deliver state-of-the-art computing infrastructures to drive your growth!

What We Offer

The Fusion Dynamics Advantage!

At Fusion Dynamics, we believe that our responsibility goes beyond providing a computing solution to help you build a high-performance, efficient, and sustainable digital-first business. Our offerings are carefully configured to not only fulfil your current organisational requirements but to future-proof your technology infrastructure as well, with an emphasis on the following parameters –

Performance density

Rather than focusing solely on absolute processing power and storage, we strive to achieve the best performance-to-space ratio for your application. Our next-generation processors outrival the competition on processing as well as storage metrics.

Flexibility

Our solutions are configurable at practically every design layer, even down to the choice of processor architecture – ARM or x86. Our subject matter experts are here to assist you in designing the most streamlined and efficient configuration for your specific needs.

Scalability

We prioritise your current needs with an eye on your future targets. Deploying a scalable solution ensures operational efficiency as well as smooth and cost-effective infrastructure upgrades as you scale up.

Sustainability

Our focus on future-proofing your data center infrastructure includes the responsibility to manage its environmental impact. Our power- and space-efficient compute elements offer the highest core density and performance/watt ratios. Furthermore, our direct liquid cooling solutions help you minimise your energy expenditure. Therefore, our solutions allow rapid expansion of businesses without compromising on environmental footprint, helping you meet your sustainability goals.

Stability

Your compute and data infrastructure must operate at optimal performance levels irrespective of fluctuations in data payloads. We design systems that can withstand extreme fluctuations in workloads to guarantee operational stability for your data center.

Leverage our prowess in every aspect of computing technology to build a modern data center. Choose us as your technology partner to ride the next wave of digital evolution!

#Keywords#services on cloud computing#edge network services#available cloud computing services#cloud computing based services#cooling solutions#hpc cluster management software#cloud backups for business#platform as a service vendors#edge computing services#server cooling system#ai services providers#data centers cooling systems#integration platform as a service#https://www.tumblr.com/#cloud native application development#server cloud backups#edge computing solutions for telecom#the best cloud computing services#advanced cooling systems for cloud computing#c#data center cabling solutions#cloud backups for small business#future applications of cloud computing


Text

World's First Cloud-Native Wireless Building Management System (BMS)

Discover the future of building management with Know Your Building™. Our innovative cloud-native wireless Building Management System (BMS), at any time. Designed for commercial real estate, our solution reduces energy consumption, lowers operational costs, and optimizes building performance while contributing to sustainability goals. With real-time data insights and remote accessibility, Know Your Building™ empowers facility managers to make smarter, data-driven decisions for efficient building operations.


Text

Axolt: Modern ERP and Inventory Software Built on Salesforce

Today’s businesses operate in a fast-paced, data-driven environment where efficiency, accuracy, and agility are key to staying competitive. Legacy systems and disconnected software tools can no longer meet the evolving demands of modern enterprises. That’s why companies across industries are turning to Axolt, a next-generation solution offering intelligent inventory software and a full-fledged ERP on Salesforce.

Axolt is a unified, cloud-based ERP system built natively on the Salesforce platform. It provides a modular, scalable framework that allows organizations to manage operations from inventory and logistics to finance, manufacturing, and compliance—all in one place.

Where most ERPs are either too rigid or require costly integrations, Axolt is designed for flexibility. It empowers teams with real-time data, reduces manual work, and improves cross-functional collaboration. With Salesforce as the foundation, users benefit from enterprise-grade security, automation, and mobile access without needing separate platforms for CRM and ERP.

Smarter Inventory Software

Inventory is at the heart of operational performance. Poor inventory control can result in stockouts, over-purchasing, and missed opportunities. Axolt’s built-in inventory software addresses these issues by providing real-time visibility into stock levels, warehouse locations, and product movement.

Whether managing serialized products, batches, or kits, the system tracks every item with precision. It supports barcode scanning, lot and serial traceability, expiry tracking, and multi-warehouse inventory—all from a central dashboard.

Unlike traditional inventory tools, Axolt integrates directly with Salesforce CRM. This means your sales and service teams always have accurate availability information, enabling faster order processing and better customer communication.

A Complete Salesforce ERP

Axolt isn’t just inventory software; it’s a full Salesforce ERP suite tailored for businesses that want more from their operations. Finance teams can automate billing cycles, reconcile payments, and manage cash flows with built-in modules for accounts receivable and payable. Manufacturing teams can plan production, allocate work orders, and track costs across every stage.


Text

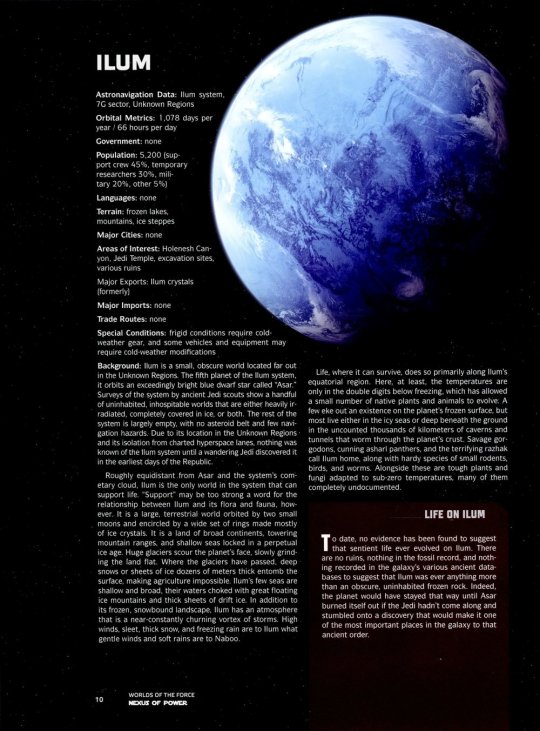

PLANET: ILUM

Astronavigation Data: Ilum system, 7G sector, Unknown Regions

Orbital Metrics: 1,078 days per year/66 hours per day

Government: None

Population: 5,200 (support crew 45%, temporary researchers 30%, military 20%, other 5%)

Languages: None

Terrain: Frozen lakes, mountains, ice steppes

Major Cities: None

Areas of Interest: Holenesh Canyon, Jedi Temple, excavation sites, various ruins

Major Exports: Ilum crystals

[Source: Star Wars - Force and Destiny - Nexus of Power - Force Worlds]

BACKGROUND: Ilum is a small, obscure world located far out in the Unknown Regions. The fifth planet of the Ilum system, it orbits an exceedingly bright blue dwarf star called “Asar.” Surveys of the system by ancient Jedi scouts show a handful of uninhabited, inhospitable worlds that are either heavily irradiated, completely covered in ice, or both. The rest of the system is largely empty, with no asteroid belt and few navigation hazards. Due to its location in the Unknown Regions and its isolation from charted hyperspace lanes, nothing was known of the Ilum system until a wandering Jedi discovered it in the earliest days of the Republic.

Roughly equidistant from Asar and the system’s cometary cloud, Ilum is the only world in the system that can support life. “Support” may be too strong a word for the relationship between Ilum and its flora and fauna, however. It is a large, terrestrial world orbited by two small moons and encircled by a wide set of rings made mostly of ice crystals. It is a land of broad continents, towering mountain ranges, and shallow seas locked in a perpetual ice age. Huge glaciers scour the planet’s face, slowly grinding the land flat. Where the glaciers have passed, deep snows or sheets of ice dozens of meters thick entomb the surface, making agriculture impossible. Ilum's few seas are shallow and broad, their waters choked with great floating ice mountains and thick sheets of drift ice. In addition to its frozen, snowbound landscape, Ilum has an atmosphere that is a near-constantly churning vortex of storms. High winds, sleet, thick snow, and freezing rain are to Ilum what gentle winds and soft rains are to Naboo.

Life, where it can survive, does so primarily along Ilum's equatorial region. Here, at least, the temperatures are only in the double digits below freezing, which has allowed a small number of native plants and animals to evolve. A few eke out an existence on the planet’s frozen surface, but most live either in the icy seas or deep beneath the ground in the uncounted thousands of kilometers of caverns and tunnels that worm through the planet’s crust. Savage gorgodons, cunning asharl panthers, and the terrifying razhak call Ilum home, along with hardy species of small rodents, birds, and worms. Alongside these are tough plants and fungi adapted to sub-zero temperatures, many of them completely undocumented.

LIFE ON ILUM: To date, no evidence has been found to suggest that sentient life ever evolved on Ilum. There are no ruins, nothing in the fossil record, and nothing recorded in the galaxy’s various ancient databases to suggest that Ilum was ever anything more than an obscure, uninhabited frozen rock. Indeed, the planet would have stayed that way until Asar burned itself out if the Jedi hadn’t come along and stumbled onto a discovery that would make it one of the most important places in the galaxy to that ancient order.

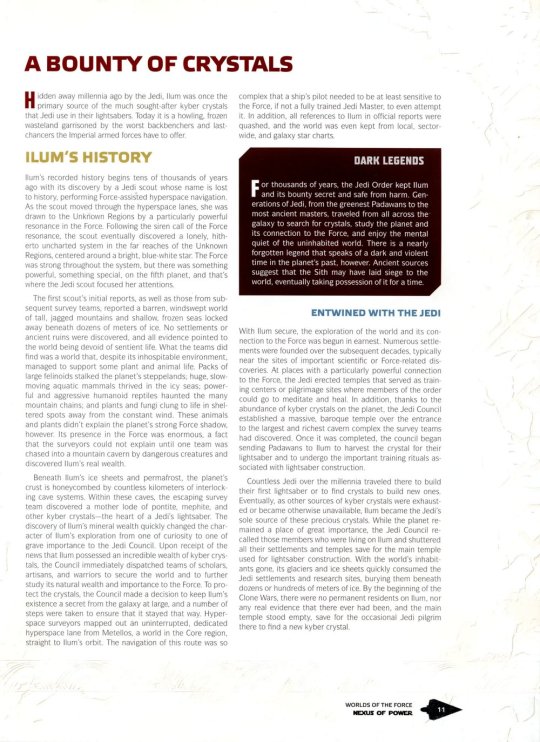

ILUM'S HISTORY: Ilum's recorded history begins tens of thousands of years ago with its discovery by a Jedi scout whose name is lost to history, performing Force-assisted hyperspace navigation. As the scout moved through the hyperspace lanes, she was drawn to the Unknown Regions by a particularly powerful resonance in the Force. Following the siren call of the Force resonance, the scout eventually discovered a lonely, hitherto uncharted system in the far reaches of the Unknown Regions, centered around a bright, blue-white star. The Force was strong throughout the system, but there was something powerful, something special, on the fifth planet, and that's where the Jedi scout focused her attentions.

The first scout's initial reports, as well as those from subsequent survey teams, reported a barren, windswept world of tall, jagged mountains and shallow, frozen seas locked away beneath dozens of meters of ice. No settlements or ancient ruins were discovered, and all evidence pointed to the world being devoid of sentient life. What the teams did find was a world that, despite its inhospitable environment, managed to support some plant and animal life. Packs of large felinoids stalked the planet's steppelands; huge, slow-moving aquatic mammals thrived in the icy seas; powerful and aggressive humanoid reptiles haunted the many mountain chains; and plants and fungi clung to life in sheltered spots away from the constant wind. These animals and plants didn't explain the planet's strong Force shadow, however. Its presence in the Force was enormous, a fact that the surveyors could not explain until one team was chased into a mountain cavern by dangerous creatures and discovered Ilum's real wealth.

Beneath Ilum's ice sheets and permafrost, the planet's crust is honeycombed by countless kilometers of interlocking cave systems. Within these caves, the escaping survey team discovered a motherlode of pontite, mephite, and other kyber crystals, the heart of a Jedi's lightsaber. The discovery of Ilum's mineral wealth quickly changed the character of Ilum's exploration from one of curiosity to one of grave importance to the Jedi Council. Upon receipt of the news that Ilum possessed an incredible wealth of kyber crystals, the Council immediately dispatched teams of scholars, artisans, and warriors to secure the world and to further study its natural wealth and importance to the Force. To protect the crystals, the Council made a decision to keep Ilum's existence a secret from the galaxy at large, and a number of steps were taken to ensure that it stayed that way. Hyperspace surveyors mapped out an uninterrupted, dedicated hyperspace lane from Metellos, a world in the Core region, straight to Ilum's orbit. The navigation of this route was so complex that a ship’s pilot needed to be at least sensitive to the Force, if not a fully trained Jedi Master, to even attempt it. In addition, all references to Ilum in official reports were quashed, and the world was even kept from local, sector-wide, and galaxy star charts.

DARK LEGENDS: For thousands of years, the Jedi Order kept Ilum and its bounty secret and safe from harm. Generations of Jedi, from the greenest Padawans to the most ancient masters, traveled from all across the galaxy to search for crystals, study the planet and its connection to the Force, and enjoy the mental quiet of the uninhabited world. There is a nearly forgotten legend that speaks of a dark and violent time in the planet’s past, however. Ancient sources suggest that the Sith may have laid siege to the world, eventually taking possession of it for a time.

ENTWINED WITH THE JEDI: With Ilum secure, the exploration of the world and its connection to the Force was begun in earnest. Numerous settlements were founded over the subsequent decades, typically near the sites of important scientific or Force-related discoveries. At places with a particularly powerful connection to the Force, the Jedi erected temples that served as training centers or pilgrimage sites where members of the order could go to meditate and heal. In addition, thanks to the abundance of kyber crystals on the planet, the Jedi Council established a massive, baroque temple over the entrance to the largest and richest cavern complex the survey teams had discovered. Once it was completed, the council began sending Padawans to Ilum to harvest the crystal for their lightsaber and to undergo the important training rituals associated with lightsaber construction.

Countless Jedi over the millennia traveled there to build their first lightsaber or to find crystals to build new ones. Eventually, as other sources of kyber crystals were exhausted or became otherwise unavailable, Ilum became the Jedi’s sole source of these precious crystals. While the planet remained a place of great importance, the Jedi Council recalled those members who were living on Ilum and shuttered all their settlements and temples save for the main temple used for lightsaber construction. With the world’s inhabitants gone, its glaciers and ice sheets quickly consumed the Jedi settlements and research sites, burying them beneath dozens or hundreds of meters of ice. By the beginning of the Clone Wars, there were no permanent residents on Ilum, nor any real evidence that there ever had been, and the main temple stood empty, save for the occasional Jedi pilgrim there to find a new kyber crystal.

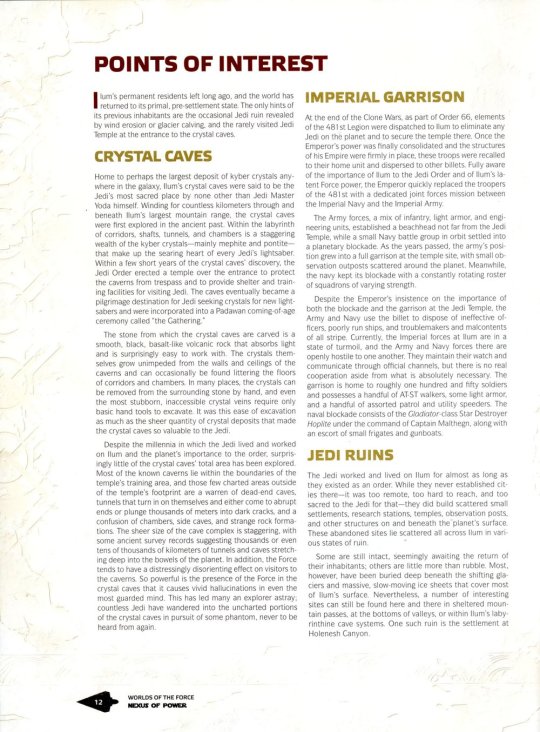

CRYSTAL CAVES: Home to perhaps the largest deposit of kyber crystals anywhere in the galaxy, Ilum’s crystal caves were said to be the Jedi’s most sacred place by none other than Jedi Master Yoda himself. Winding for countless kilometers through and beneath Ilum’s largest mountain range, the crystal caves were first explored in the ancient past. Within the labyrinth of corridors, shafts, tunnels, and chambers is a staggering wealth of the kyber crystals (mainly mephite and pontite) that make up the searing heart of every Jedi’s lightsaber. Within a few short years of the crystal caves’ discovery, the Jedi Order erected a temple over the entrance to protect the caverns from trespass and to provide shelter and training facilities for visiting Jedi. The caves eventually became a pilgrimage destination for Jedi seeking crystals for new lightsabers and were incorporated into a Padawan coming-of-age ceremony called “the Gathering.”

The stone from which the crystal caves are carved is a smooth, black, basalt-like volcanic rock that absorbs light and is surprisingly easy to work with. The crystals themselves grow unimpeded from the walls and ceilings of the caverns and can occasionally be found littering the floors of corridors and chambers. In many places, the crystals can be removed from the surrounding stone by hand, and even the most stubborn, inaccessible crystal veins require only basic hand tools to excavate. It was this ease of excavation as much as the sheer quantity of crystal deposits that made the crystal caves so valuable to the Jedi.

Despite the millennia in which the Jedi lived and worked on Ilum and the planet’s importance to the order, surprisingly little of the crystal caves’ total area has been explored. Most of the known caverns lie within the boundaries of the temple’s training area, and those few charted areas outside of the temple’s footprint are a warren of dead-end caves, tunnels that turn in on themselves and either come to abrupt ends or plunge thousands of meters into dark cracks, and a confusion of chambers, side caves, and strange rock formations. The sheer size of the cave complex is staggering, with some ancient survey records suggesting thousands or even tens of thousands of kilometers of tunnels and caves stretching deep into the bowels of the planet. In addition, the Force tends to have a distressingly disorienting effect on visitors to the caverns. So powerful is the presence of the Force in the crystal caves that it causes vivid hallucinations in even the most guarded mind. This has led many an explorer astray; countless Jedi have wandered into the uncharted portions of the crystal caves in pursuit of some phantom, never to be heard from again.

JEDI RUINS: The Jedi worked and lived on Ilum for almost as long as they existed as an order. While they never established cities there—it was too remote, too hard to reach, and too sacred to the Jedi for that—they did build scattered small settlements, research stations, temples, observation posts, and other structures on and beneath the planet’s surface. These abandoned sites lie scattered all across Ilum in various states of ruin.

Some are still intact, seemingly awaiting the return of their inhabitants; others are little more than rubble. Most, however, have been buried deep beneath the shifting glaciers and massive, slow-moving ice sheets that cover most of Ilum’s surface. Nevertheless, a number of interesting sites can still be found here and there in sheltered mountain passes, at the bottoms of valleys, or within Ilum’s labyrinthine cave systems. One such ruin is the settlement at Holenesh Canyon.

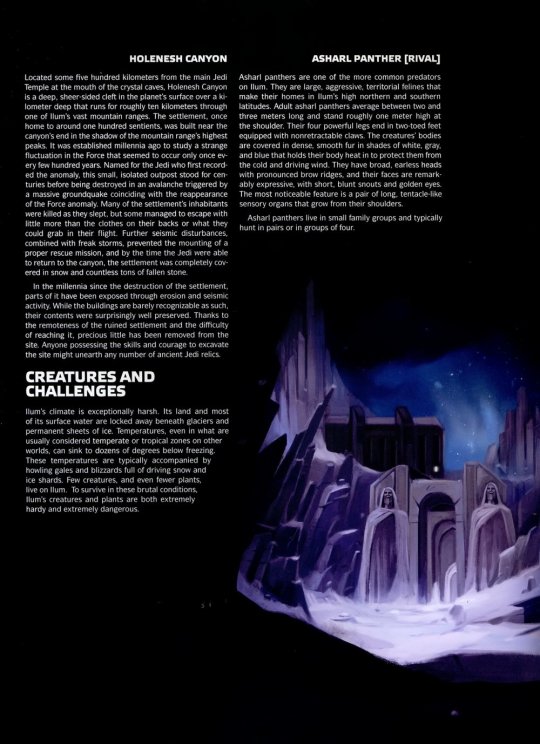

HOLENESH CANYON: Located some five hundred kilometers from the main Jedi Temple at the mouth of the crystal caves, Holenesh Canyon is a deep, sheer-sided cleft in the planet’s surface over a kilometer deep that runs for roughly ten kilometers through one of Ilum's vast mountain ranges. The settlement, once home to around one hundred sentients, was built near the canyon’s end in the shadow of the mountain range’s highest peaks. It was established millennia ago to study a strange fluctuation in the Force that seemed to occur only once every few hundred years. Named for the Jedi who first recorded the anomaly, this small, isolated outpost stood for centuries before being destroyed in an avalanche triggered by a massive groundquake coinciding with the reappearance of the Force anomaly. Many of the settlement’s inhabitants were killed as they slept, but some managed to escape with little more than the clothes on their backs or what they could grab in their flight. Further seismic disturbances, combined with freak storms, prevented the mounting of a proper rescue mission, and by the time the Jedi were able to return to the canyon, the settlement was completely covered in snow and countless tons of fallen stone.

In the millennia since the destruction of the settlement, parts of it have been exposed through erosion and seismic activity. While the buildings are barely recognizable as such, their contents were surprisingly well preserved. Thanks to the remoteness of the ruined settlement and the difficulty of reaching it, precious little has been removed from the site. Anyone possessing the skills and courage to excavate the site might unearth any number of ancient Jedi relics.

CREATURES AND CHALLENGES: Ilum's climate is exceptionally harsh. Its land and most of its surface water are locked away beneath glaciers and permanent sheets of ice. Temperatures, even in what are usually considered temperate or tropical zones on other worlds, can sink to dozens of degrees below freezing. These temperatures are typically accompanied by howling gales and blizzards full of driving snow and ice shards. Few creatures, and even fewer plants, live on Ilum. To survive in these brutal conditions, Ilum's creatures and plants are both extremely hardy and extremely dangerous.

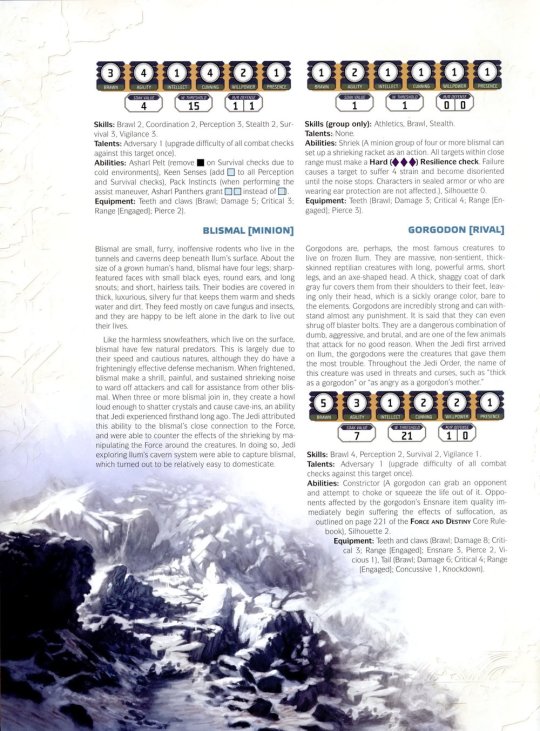

ASHARL PANTHER [RIVAL]: Asharl panthers are one of the more common predators on Ilum. They are large, aggressive, territorial felines that make their homes in Ilum's high northern and southern latitudes. Adult asharl panthers average between two and three meters long and stand roughly one meter high at the shoulder. Their four powerful legs end in two-toed feet equipped with nonretractable claws. The creatures’ bodies are covered in dense, smooth fur in shades of white, gray, and blue that holds their body heat in to protect them from the cold and driving wind. They have broad, earless heads with pronounced brow ridges, and their faces are remarkably expressive, with short, blunt snouts and golden eyes. The most noticeable feature is a pair of long, tentacle-like sensory organs that grow from their shoulders. Asharl panthers live in small family groups and typically hunt in pairs or in groups of four.

BLISMAL [MINION]: Blismal are small, furry, inoffensive rodents who live in the tunnels and caverns deep beneath Ilum's surface. About the size of a grown human’s hand, blismal have four legs; sharp-featured faces with small black eyes, round ears, and long snouts; and short, hairless tails. Their bodies are covered in thick, luxurious, silvery fur that keeps them warm and sheds water and dirt. They feed mostly on cave fungus and insects, and they are happy to be left alone in the dark to live out their lives.

Like the harmless snowfeathers, which live on the surface, blismal have few natural predators. This is largely due to their speed and cautious natures, although they do have a frighteningly effective defense mechanism. When frightened, blismal make a shrill, painful, and sustained shrieking noise to ward off attackers and call for assistance from other blismal. When three or more blismal join in, they create a howl loud enough to shatter crystals and cause cave-ins, an ability that Jedi experienced firsthand long ago. The Jedi attributed this ability to the blismal’s close connection to the Force, and were able to counter the effects of the shrieking by manipulating the Force around the creatures. In doing so, Jedi exploring Ilum's cavern system were able to capture blismal, which turned out to be relatively easy to domesticate.

GORGODON [RIVAL]: Gorgodons are, perhaps, the most famous creatures to live on frozen Ilum. They are massive, non-sentient, thick-skinned reptilian creatures with long, powerful arms, short legs, and an axe-shaped head. A thick, shaggy coat of dark gray fur covers them from their shoulders to their feet, leaving only their head, which is a sickly orange color, bare to the elements. Gorgodons are incredibly strong and can withstand almost any punishment. It is said that they can even shrug off blaster bolts. They are a dangerous combination of dumb, aggressive, and brutal, and are one of the few animals that attack for no good reason. When the Jedi first arrived on Ilum, the gorgodons were the creatures that gave them the most trouble. Throughout the Jedi Order, the name of this creature was used in threats and curses, such as “thick as a gorgodon” or “as angry as a gorgodon’s mother.”

RAZHAK [NEMESIS]: Among the most fearsome predators on Ilum, these massive creatures are as agile as they are deadly. Averaging around eight meters in length, razhak are armored, segmented, wormlike creatures that propel themselves using rippling muscle ridges. Their bodies are broad and flat, covered with thick, chitinous plates in shades of white and blue. While they have no apparent eyes, their heads are topped with long, segmented antennae that serve as sensory organs. Their huge mouths feature multiple rows of serrated teeth.

Aggressive and solitary, razhak live in the endless tunnel systems beneath Ilum's surface. They are deceptively fast and, when they attack, they rear up like a serpent and attempt to swallow prey whole. Anything they can’t eat in one bite they tear into pieces by grasping it in their mouth and shaking it violently. In addition to possessing great speed and a savage demeanor, razhak also can generate intense heat strong enough to rapidly melt solid ice and cause serious burns to exposed flesh. This ability allows them to tunnel through ice as though it were soft sand. Razhak usually build their nests inside of ice walls or densely packed snow, typically leaving the nest only to eat or mate.

Thankfully, while they are terrifying to behold and extremely dangerous, razhak are also easily distracted and creatures of minimal intelligence. Keeping this in mind, a clever opponent can easily outflank them, lead them into traps, or make them lose interest in attacking altogether.

SNOWFEATHER [MINION]: Snowfeathers are small, clever, flightless birds native to Ilum. Their bodies are covered in a dense layer of oily, white feathers that protects them from Ilum's bone-chilling cold and vicious weather. Relatively harmless creatures, they live in nesting colonies built into ice shelves or cliff faces.

Despite their inoffensive nature and inability to fly, snowfeathers have few natural predators, for two reasons. First, their meat tastes terrible and is mildly poisonous, causing painful cramps, bloating, and loosening of the bowels in those unfortunate enough to eat them. Second, they have a connection to the Force that gives them the ability to project an illusion that makes them seem larger and more formidable than they really are. These characteristics have allowed them to survive and even thrive on an inhospitable planet full of savage creatures like gorgodons and asharl panthers.


Text

Since Russian troops invaded Ukraine more than three years ago, Russian technology companies and executives have been widely sanctioned for supporting the Kremlin. That includes Vladimir Kiriyenko, the son of one of Vladimir Putin’s top aides and the CEO of VK Group, which runs VK, Russia’s Facebook equivalent that has increasingly shifted towards the regime’s repressive positioning.

Now cybersecurity researchers are warning that a widely used piece of open source code, which is linked to Kiriyenko’s company and managed by Russian developers, may pose a “persistent” national security risk to the United States. The open source software (OSS), called easyjson, has been widely used by the US Department of Defense and “extensively” across software used in the finance, technology, and healthcare sectors, say researchers at security company Hunted Labs, which is behind the claims. The fear is that Russia could alter easyjson to steal data or otherwise abuse it.

“You have this really critical package that’s basically a linchpin for the cloud native ecosystem, that’s maintained by a group of individuals based in Moscow belonging to an organization that has this suspicious history,” says Hayden Smith, a cofounder at Hunted Labs.

For decades, open source software has underpinned large swathes of the technology industry and the systems people rely on day to day. Open source technology allows anyone to see and modify code, helping to make improvements, detect security vulnerabilities, and apply independent scrutiny that’s absent from the closed tech of corporate giants. However, the fracturing of geopolitical norms and the specter of stealthy supply chain attacks has led to an increase in questions about risk levels of "foreign" code.

Easyjson is a code serialization tool for the Go programming language and is often used across the wider cloud ecosystem, being present in other open source software, according to Hunted Labs. The package is hosted on GitHub by a MailRu account, which is owned by VK after the mail company rebranded itself in 2021. The VK Group itself is not sanctioned. Easyjson has been available on GitHub since 2016, with most of its updates coming before 2020. Kiriyenko became the CEO of VK Group in December 2021 and was sanctioned in February 2022.
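For readers who want to see whether a Go project lists the package, one rough approach is to scan the project's go.mod file for the module path. The sketch below is in Python and rests on assumptions: the module path github.com/mailru/easyjson is taken to be the MailRu-hosted repository described above, the helper name is hypothetical, and the sample go.mod contents and version numbers are made up for illustration. It only inspects the text it is given and is not a substitute for a proper dependency audit.

```python
import re

# Assumed module path of the MailRu-hosted package discussed above.
EASYJSON_MODULE = "github.com/mailru/easyjson"


def find_module_versions(go_mod_text, module=EASYJSON_MODULE):
    """Return (version, is_indirect) for each require entry naming `module`."""
    pattern = re.compile(
        r"^\s*(?:require\s+)?" + re.escape(module) + r"\s+(v\S+)(.*)$"
    )
    hits = []
    for line in go_mod_text.splitlines():
        match = pattern.match(line)
        if match:
            # Go marks dependencies pulled in by other modules as "// indirect".
            hits.append((match.group(1), "// indirect" in match.group(2)))
    return hits


# Illustrative go.mod contents, not taken from any real project.
sample = """\
module example.com/demo

go 1.21

require (
	github.com/mailru/easyjson v0.7.7 // indirect
	golang.org/x/text v0.14.0
)
"""

print(find_module_versions(sample))
```

An entry marked indirect means the package arrived through another dependency rather than being imported directly, which is the "present in other open source software" situation Hunted Labs describes.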

Hunted Labs’ analysis shared with WIRED shows the most active developers on the project in recent years have listed themselves as being based in Moscow. Smith says that Hunted Labs has not identified vulnerabilities in the easyjson code.

However, the link to the sanctioned CEO’s company, plus Russia’s aggressive state-backed cyberattacks, may increase potential risks, Smith says. Research from Hunted Labs details how code serialization tools could be abused by malicious hackers. “A Russian-controlled software package could be used as a ‘sleeper cell’ to cause serious harm to critical US infrastructure or for espionage and weaponized influence campaigns,” it says.

“Nation states take on a strategic positioning,” says George Barnes, a former deputy director at the National Security Agency, who spent 36 years at the NSA and now acts as a senior advisor and investor in Hunted Labs. Barnes says that hackers within Russia’s intelligence agencies could see easyjson as a potential opportunity for abuse in the future.

“It is totally efficient code. There’s no known vulnerability about it, hence no other company has identified anything wrong with it,” Barnes says. “Yet the people who actually own it are under the guise of VK, which is tight with the Kremlin,” he says. “If I’m sitting there in the GRU or the FSB and I’m looking at the laundry list of opportunities… this is perfect. It’s just lying there,” Barnes says, referencing Russia’s foreign military and domestic security agencies.

VK Group did not respond to WIRED’s request for comment about easyjson. The US Department of Defense did not respond to a request for comment about the inclusion of easyjson in its software setup.

“NSA does not have a comment to make on this specific software,” a spokesperson for the National Security Agency says. “The NSA Cybersecurity Collaboration Center does welcome tips from the private sector—when a tip is received, NSA triages the tip against our own insights to fully understand the threat and, if corroborated, share any relevant mitigations with the community.” A spokesperson for the US Cybersecurity and Infrastructure Security Agency, which has faced upheaval under the second Trump administration, says: “We are going to refer you back to Hunted Labs.”

GitHub, a code repository owned by Microsoft, says that while it will investigate issues and take action where its policies are broken, it is not aware of malicious code in easyjson and VK is not sanctioned itself. Other tech companies’ treatment of VK varies. After Britain sanctioned the leaders of Russian banks who own stakes in VK in September 2022, for example, Apple removed its social media app from its App Store.

Dan Lorenc, the CEO of supply chain security firm Chainguard, says that with easyjson, the connections to Russia are in “plain sight” and that there is a “slightly higher” cybersecurity risk than those of other software libraries. He adds that the red flags around other open source technology may not be so obvious.

“In the overall open source space, you don’t necessarily even know where people are most of the time,” Lorenc says, pointing out that many developers do not disclose their identity or locations online, and even if they do, it is not always possible to verify the details are correct. “The code is what we have to trust and the code and the systems that are used to build that code. People are important, but we’re just not in a world where we can push the trust down to the individuals,” Lorenc says.

As Russia’s full-scale invasion of Ukraine has unfolded, there has been increased scrutiny on the use of open source systems and the impact of sanctions upon entities involved in the development. In October last year, a Linux kernel maintainer removed 11 Russian developers who were involved in the open source project, broadly citing sanctions as the reason for the change. Then in January this year, the Linux Foundation issued guidance covering how international sanctions can impact open source, saying developers should be cautious of who they interact with and the nature of interactions.

The shift in perceived risk is coupled with the threat of supply chain attacks. Last year, corporate developers and the open source world were rocked as a mysterious attacker known as Jia Tan stealthily installed a backdoor in the widely used XZ Utils software, after spending two years diligently updating it without any signs of trouble. The backdoor was only discovered by chance.

“Years ago, OSS was developed by small groups of trusted developers who were known to one another,” says Nancy Mead, a fellow of the Carnegie Mellon University Software Engineering Institute. “In that time frame, no one expected a trusted developer of being a hacker, and the relatively slower pace provided time for review. These days, with automatic release, incorporation of updates, and the wide usage of OSS, the old assumptions are no longer valid.”

Scott Hissam, a senior member of technical staff also from the Carnegie Mellon University Software Engineering Institute, says people often consider how many maintainers and how many organizations work on an open source project, but there is currently not a “mass movement” to consider other details about OSS projects. “However, it is coming, and there are several activities that collect details about OSS projects, which OSS consumers can use to get more insight into OSS projects and their activities,” Hissam says, pointing to two examples.

Hunted Labs’ Smith says he is currently looking into the provenance of other open source projects and the risks that could come with them, including scrutinizing countries known to have carried out cyberattacks against US entities. He says he is not encouraging people to avoid open source software altogether, but rather pointing out that risk considerations have shifted over time. “We’re telling you to just make really good risk informed decisions when you're trying to use open source,” he says. “Open source software is basically good until it's not.”

10 notes


Text

Battletech: Mira planetary report

Mira Planetary Report, Year 3025

Recharging station: none

ComStar Facility Class: B

Population: 3 million

Percentage and level of native life: 15% mammal

Note: These figures refer to land life. As on most colonized worlds, native land life has largely been replaced with Terran species, and nobody bothers with ocean life unless it is somehow relevant. In Mira’s case, algae are vital to the ecosystem, but they also make commercial fishing impracticable because they clog nets.

Star System Data

Star Type: F6III (subgiant)

Position in System: 4th planet (Mira IV)

Distance from Star: Approximately 3.16 AU (within the habitable zone of an F6III star)

Travel Time to Jump Point: 10 days at 1G acceleration

Mira orbits an F6III subgiant star, larger and brighter than Sol (a G2V star), which extends the habitable zone farther out and increases dropship travel time to the jump point compared to Terra’s 6-8 days. The 10-day journey reflects the standard BattleTech transit model: dropships accelerate at 1G for half the voyage, perform a turnover, and decelerate at 1G to arrive at zero velocity.
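The transit model described above can be sketched numerically. Under constant acceleration a, with a turnover at the midpoint and arrival at rest, a trip of distance d takes t = 2 * sqrt(d / a). The Python below is only an illustration of that relationship: the function names are hypothetical, and the distance it prints is simply what a 10-day trip at 1G implies, not a figure from any rulebook.

```python
import math

G = 9.80665            # 1G in m/s^2
AU = 1.495978707e11    # metres per astronomical unit
DAY = 86_400           # seconds per day


def transit_days(distance_m, accel=G):
    """Days for an accelerate, turnover, decelerate trip that starts and ends at rest."""
    return 2.0 * math.sqrt(distance_m / accel) / DAY


def distance_for_transit(days, accel=G):
    """Distance in metres covered by such a trip lasting `days`."""
    half_trip_s = days * DAY / 2.0
    # Each half covers (1/2) * a * t_half^2, so the whole trip covers a * t_half^2.
    return accel * half_trip_s ** 2


d = distance_for_transit(10.0)
print(f"Implied distance to the jump point: {d / AU:.1f} AU")
print(f"Round-trip check: {transit_days(d):.1f} days")
```

Because time grows with the square root of distance, a jump point four times farther out only doubles the transit time.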

Planetary Data

Diameter: 12,000 km (comparable to Terra)

Gravity: 0.9g

Atmosphere: Standard, breathable; slightly thinner than Terra’s but enriched with oxygen due to abundant oceanic algae

Hydrosphere: 85% water coverage

Natural Satellites: Two small moons

Population: 2.8 million (as of 2975)

Government: Part of the Capellan Confederation (liberated by the 1st St. Ives Lancers in 2975)

Mira, the fourth planet in its system, orbits an F6III subgiant star at approximately 3.16 AU, placing it within the habitable zone. Its diameter and gravity (0.9g) are close to Terra’s, making it comfortable for human habitation. The atmosphere, though thinner than Terra’s, supports life with a higher oxygen content due to widespread algal blooms in its vast oceans, which cover 85% of the surface. Two small moons influence tidal patterns, while a strong magnetosphere shields the planet from stellar radiation. In 2975, Mira was liberated by the 1st St. Ives Lancers, integrating it into the Capellan Confederation.

Year Length: 1300 Earth days (3.55 years), with ~325-day seasons, orbiting at ~3.16 AU around a ~2.5 solar mass F6III star.
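As a sanity check on the year length, Kepler's third law gives the period in Earth years as sqrt(a^3 / M) when a is in AU and M is in solar masses (the planet's own mass is neglected). The short Python sketch below applies it to the figures in this report; the function name is a hypothetical one chosen for illustration.

```python
import math


def orbital_period_years(semi_major_axis_au, star_mass_solar):
    """Kepler's third law in solar units: T^2 = a^3 / M."""
    return math.sqrt(semi_major_axis_au ** 3 / star_mass_solar)


years = orbital_period_years(3.16, 2.5)
days = years * 365.25
print(f"{years:.2f} years, about {days:.0f} Earth days")
print(f"Season length: about {days / 4:.0f} days")
```

The result lands close to the 1300 days and ~325-day seasons listed above.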

Axial Tilt: ~23.5°, supporting southern Russia/Crimea-like seasonal cycles.

Latitudinal Climate Gradient:

Temperate Zones (20°–50°N/S): Southern Russia/Crimea-like (5–25°C, 400–800 mm rainfall), with coastal resorts and agriculture.

Mountainous Regions: Caucasus-like, cooler (0–15°C), with snowfall.

Equatorial Zones (0°–20°N/S): Warm, humid (25–35°C, 800–1200 mm rainfall), supporting algal blooms.

Astronomical Quirk: Strong magnetosphere shields against F6III radiation; two moons stabilize orbit and tilt.

Oceanic Nature: 85% water coverage drives albedo, cloud cover, and precipitation, moderating climate for habitability.

Stellar Characteristics and Perception

Mira’s parent star, an F6III subgiant, differs significantly from Sol (a G2V star) in ways that shape the planet’s environment and how its sunlight is perceived:

Luminosity: The F6III star is far more luminous than Sol, emitting approximately 20–30 times more light. This increased output pushes the habitable zone farther out, allowing Mira to sustain life at 3.16 AU despite the star’s intensity.

Apparent Size: From Mira’s surface, the star appears larger in the sky than Sol does from Terra. Given the F6III star’s larger physical size and the adjusted distance of the habitable zone, its apparent diameter might be 1.5–2 times that of Sol as seen from Earth, creating a striking visual presence.

Light Temperature: With a surface temperature of 6,500–7,000 K (compared to Sol’s 5,772 K), the star’s light is bluer and more intense. This higher color temperature results in a harsher daylight compared to Terra’s warmer, yellower sunlight. The bluish tint could require adaptations for human comfort—such as tinted visors or specialized architecture—and might influence plant photosynthesis, favoring species adapted to bluer wavelengths.

The sunlight on Mira is harsher than Sol’s gentler glow because of its intensity and bluer color, which alters the planet’s aesthetic and environmental dynamics.

Impact of Oceans and Cloud Cover

Mira’s extensive oceans, covering 85% of its surface, interact with the star’s intense sunlight to moderate its effects. The brighter, more energetic light drives higher rates of evaporation compared to Terra (which has 71% water coverage), leading to increased cloud formation. This thick cloud cover acts as a natural filter, reflecting a portion of the star’s radiation back into space and diffusing the remaining light. As a result, the harshness of the sunlight is lessened, softening its impact on the surface and contributing to a more temperate climate. This interplay between intense stellar output and planetary water creates a balanced, livable environment despite the star’s power.

Geography

Mira is predominantly a waterworld, with oceans covering 85% of its surface. Its limited landmass consists of archipelagos and small continents, many featuring mountainous terrain. These islands, often volcanic in origin, exhibit active tectonics, akin to Terra’s Oceania region. Volcanic activity is moderate rather than absent, as waterworlds with fragmented landmasses typically experience tectonic movement due to thinner crusts and mantle convection—though less intense than on continents with massive tectonic plates. The scarce flat land is reserved for agriculture and settlements, while the mountains yield coal and metallic ores, though not in quantities sufficient for major industry. Offshore platforms exploit hydrocarbons (oil and gas) from the ocean floor, as the mountainous land lacks significant sedimentary deposits typical of flat terrains where fossil fuels accumulate.

Climate

Mira’s climate is moderated by its extensive oceans, which act as a heat sink to prevent extreme temperature swings. The temperate zones on larger landmasses resemble southern Russia—warm summers and cool winters—while coastal areas and islands enjoy a milder, Crimea-like climate, ideal for resorts. The mountains, similar to the Caucasus, experience cooler temperatures and seasonal snowfall. Despite orbiting a hotter F6III star, Mira’s water coverage balances the climate, making it more pleasant than Tikonov (a harsh world orbiting a G8V star). The temperate zones align with the Kuban and Crimea, offering a respite for Tikonov nobles accustomed to continental rigors.

History

Mira was settled in the early 22nd century by Russian colonists from nearby Tikonov, who named it “Mira”—Russian for “world” and “peace”—reflecting its tranquil appeal. Initially an independent colony, it was annexed by the Marlette Association by 2306. In 2309, the Tikonov Grand Union, under General Diana Chinn, captured Mira after a 23-week campaign, integrating it into their domain. Its proximity to Tikonov (a single jump away) and pleasant environment made it a resort planet, with its continent parceled out into luxury estates for Tikonov nobility.

During the Succession Wars, Mira’s strategic location transformed it into a contested frontier. In 2829, the Bloody Suns mercenary unit invaded, expecting an easy victory over the Third Chesterton Cavalry. The defenders resorted to chemical weapons, prompting Duke Hasek of the Federated Suns to order a nuclear strike, followed by the Eighth Crucis Lancers’ ruthless mop-up. This brutal conflict left a lasting mark. Mira changed hands repeatedly between the Capellan Confederation and Federated Suns, serving as a staging base for attacks on Tikonov. In 2975, it was liberated by the 1st St. Ives Lancers, integrating it into the Capellan Confederation.

Economy

Mira’s economy is modest, shaped by its sparse population and limited resources. Its textile industry, producing high-quality fabrics, has earned it the nickname “clothiers to the galaxy,” with exports reaching across the Inner Sphere.

Sea mining of hydrocarbons via offshore platforms in the shallow waters sustains some light and heavy industry, compensating for the lack of easily extractable mineral resources and fertile arable land, which has prevented major industrial or agricultural development.

Notable Features

Molotosky Water Purification Process: A Mira innovation, widely adopted for its efficiency in water purification.

Luxury Estates: Historic resorts of Tikonov nobles, blending Russian and Asian architectural styles, now cultural landmarks.

Textile Industry: Renowned for craftsmanship, a cornerstone of Mira’s identity.

Cultural Heritage: Reflects its Tikonov settlers’ Eurasian roots, evident in architecture and traditions.

Military Significance

Mira’s proximity to Tikonov makes it a strategic linchpin. Its ports and spaceports support military logistics, and its history as a staging base underscores its value in conflicts between the Capellan Confederation and Federated Suns.

4 notes


Text

The Evolution of DJ Controllers: From Analog Beginnings to Intelligent Performance Systems

The DJ controller has undergone a remarkable transformation—what began as a basic interface for beat matching has now evolved into a powerful centerpiece of live performance technology. Over the years, the convergence of hardware precision, software intelligence, and real-time connectivity has redefined how DJs mix, manipulate, and present music to audiences.

For professional audio engineers and system designers, understanding this technological evolution is more than a history lesson—it's essential knowledge that informs how modern DJ systems are integrated into complex live environments. From early MIDI-based setups to today's AI-driven, all-in-one ecosystems, this blog explores the innovations that have shaped DJ controllers into the versatile tools they are today.

The Analog Foundation: Where It All Began

The roots of DJing lie in vinyl turntables and analog mixers. These setups emphasized feel, timing, and technique. There were no screens, no sync buttons—just rotary EQs, crossfaders, and the unmistakable tactile response of a needle on wax.

For audio engineers, these analog rigs meant clean signal paths and minimal processing latency. However, flexibility was limited, and transporting crates of vinyl to every gig was logistically demanding.

The Rise of MIDI and Digital Integration

The early 2000s brought the integration of MIDI controllers into DJ performance, marking a shift toward digital workflows. Devices like the Vestax VCI-100 and Hercules DJ Console enabled control over software like Traktor, Serato, and VirtualDJ. This introduced features such as beat syncing, cue points, and FX without losing physical interaction.

From an engineering perspective, this era introduced complexities such as USB data latency, audio driver configurations, and software-to-hardware mapping. However, it also opened the door to more compact, modular systems with immense creative potential.
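To make the idea of software-to-hardware mapping concrete, here is a minimal Python sketch of how a MIDI Control Change message might be decoded and routed to a software parameter. The message layout (status byte 0xB0 plus channel, then controller number and value, each 0 to 127) follows the MIDI specification; the mapping table and parameter names are hypothetical and do not reflect how any particular DJ application works.

```python
def parse_control_change(message):
    """Decode a 3-byte MIDI Control Change message into (channel, controller, value)."""
    if len(message) != 3:
        raise ValueError("Control Change messages are exactly 3 bytes")
    status, controller, value = message
    if status & 0xF0 != 0xB0:
        raise ValueError("not a Control Change message")
    if controller > 127 or value > 127:
        raise ValueError("data bytes must be in the range 0-127")
    return status & 0x0F, controller, value


# Hypothetical mapping from (channel, controller) to a software parameter name.
MAPPING = {
    (0, 7): "deck_a_volume",
    (1, 7): "deck_b_volume",
}


def map_message(message):
    """Return (parameter, normalised value in 0.0-1.0), or None if the control is unmapped."""
    channel, controller, value = parse_control_change(message)
    parameter = MAPPING.get((channel, controller))
    if parameter is None:
        return None
    return parameter, value / 127.0


print(map_message(bytes([0xB0, 7, 127])))  # fader on channel 1 pushed fully up
print(map_message(bytes([0xB1, 7, 0])))    # fader on channel 2 pulled fully down
```

With only 128 steps per control, standard Control Change values are fairly coarse, which is one reason higher-resolution approaches became attractive for jog wheels and pitch faders.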

Controllerism and Creative Freedom

Between 2010 and 2015, the concept of controllerism took hold. DJs began customizing their setups with multiple MIDI controllers, pad grids, FX units, and audio interfaces to create dynamic, live remix environments. Brands like Native Instruments, Akai, and Novation responded with feature-rich units that merged performance hardware with production workflows.

Technical advancements during this period included:

High-resolution jog wheels and pitch faders

Multi-deck software integration

RGB velocity-sensitive pads

Onboard audio interfaces with 24-bit output

HID protocol for tighter software-hardware response

These tools enabled a new breed of DJs to blur the lines between DJing, live production, and performance art—all requiring more advanced routing, monitoring, and latency optimization from audio engineers.
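One piece of latency optimization is easy to quantify: the delay contributed by a single audio buffer is its length in frames divided by the sample rate. The Python sketch below tabulates that for a few common settings. It is a simplification, since real round-trip latency also includes driver, USB, and converter delays that are not modelled here, and the function name is hypothetical.

```python
def buffer_latency_ms(frames, sample_rate_hz):
    """Delay in milliseconds contributed by one audio buffer of `frames` samples."""
    return 1000.0 * frames / sample_rate_hz


for sample_rate in (44_100, 48_000, 96_000):
    for frames in (64, 128, 256, 512):
        latency = buffer_latency_ms(frames, sample_rate)
        print(f"{sample_rate} Hz, {frames:>3} frames: {latency:.2f} ms")
```

Smaller buffers lower the delay but leave the processor less time to fill each one, so the practical setting is a trade-off between responsiveness and dropout risk.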

All-in-One Systems: Power Without the Laptop

As processors became more compact and efficient, DJ controllers began to include embedded CPUs, allowing them to function independently from computers. Products like the Pioneer XDJ-RX, Denon Prime 4, and RANE ONE revolutionized the scene by delivering laptop-free performance with powerful internal architecture.

Key engineering features included:

Multi-core processing with low-latency audio paths

High-definition touch displays with waveform visualization

Dual USB and SD card support for redundancy

Built-in Wi-Fi and Ethernet for music streaming and cloud sync

Zone routing and balanced outputs for advanced venue integration

For engineers managing live venues or touring rigs, these systems offered fewer points of failure, reduced setup times, and greater reliability under high-demand conditions.

Embedded AI and Real-Time Stem Control

One of the most significant breakthroughs in recent years has been the integration of AI-driven tools. Systems now offer real-time stem separation, powered by machine learning models that can isolate vocals, drums, bass, or instruments on the fly. Solutions like Serato Stems and Engine DJ OS have embedded this functionality directly into hardware workflows.

This allows DJs to perform spontaneous remixes and mashups without needing pre-processed tracks. From a technical standpoint, it demands powerful onboard DSP or GPU acceleration and raises the bar for system bandwidth and real-time processing.

For engineers, this means preparing systems that can handle complex source isolation and downstream processing without signal degradation or sync loss.

Cloud Connectivity & Software Ecosystem Maturity

Today’s DJ controllers are not just performance tools—they are part of a broader ecosystem that includes cloud storage, mobile app control, and wireless synchronization. Platforms like rekordbox Cloud, Dropbox Sync, and Engine Cloud allow DJs to manage libraries remotely and update sets across devices instantly.

This shift benefits engineers and production teams in several ways:

Faster changeovers between performers using synced metadata

Simplified backline configurations with minimal drive swapping

Streamlined updates, firmware management, and analytics

Improved troubleshooting through centralized data logging

The era of USB sticks and manual track loading is giving way to seamless, cloud-based workflows that reduce risk and increase efficiency in high-pressure environments.

Hybrid & Modular Workflows: The Return of Customization

While all-in-one units dominate, many professional DJs are returning to hybrid setups—custom configurations that blend traditional turntables, modular FX units, MIDI controllers, and DAW integration. This modularity supports a more performance-oriented approach, especially in experimental and genre-pushing environments.

These setups often require:

MIDI-to-CV converters for synth and modular gear integration

Advanced routing and clock sync using tools like Ableton Link

OSC (Open Sound Control) communication for custom mapping

Expanded monitoring and cueing flexibility

This renewed complexity places greater demands on engineers, who must design systems that are flexible, fail-safe, and capable of supporting unconventional performance styles.
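As an illustration of what OSC-based custom mapping looks like on the wire, the Python sketch below builds a single OSC message carrying one float argument. The encoding (null-terminated strings padded to a multiple of four bytes, a type tag string beginning with a comma, big-endian 32-bit floats) follows the OSC 1.0 format; the address /deck/a/filter is a hypothetical name chosen for this example, not one defined by any product.

```python
import struct


def osc_string(text):
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    raw = text.encode("ascii") + b"\x00"
    return raw + b"\x00" * (-len(raw) % 4)


def osc_float_message(address, value):
    """Build an OSC message with one float32 argument."""
    return osc_string(address) + osc_string(",f") + struct.pack(">f", value)


# Hypothetical address for a filter control on deck A.
packet = osc_float_message("/deck/a/filter", 0.5)
print(len(packet), packet.hex())
```

In practice a packet like this is typically sent over UDP to whatever host and port the receiving application listens on; both are deployment-specific and are left out here.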

Looking Ahead: AI Mixing, Haptics & Gesture Control

As we look to the future, the next phase of DJ controllers is already taking shape. Innovations on the horizon include:

AI-assisted mixing that adapts in real time to crowd energy

Haptic feedback jog wheels that provide dynamic tactile response

Gesture-based FX triggering via infrared or wearable sensors

Augmented reality interfaces for 3D waveform manipulation

Deeper integration with lighting and visual systems through DMX and timecode sync

For engineers, this means staying ahead of emerging protocols and preparing venues for more immersive, synchronized, and responsive performances.

Final Thoughts

The modern DJ controller is no longer just a mixing tool—it's a self-contained creative engine, central to the live music experience. Understanding its capabilities and the technology driving it is critical for audio engineers who are expected to deliver seamless, high-impact performances in every environment.

Whether you’re building a club system, managing a tour rig, or outfitting a studio, choosing the right gear is key. Sourcing equipment from a trusted professional audio retailer—online or in-store—ensures not only access to cutting-edge products but also expert guidance, technical support, and long-term reliability.

As DJ technology continues to evolve, so too must the systems that support it. The future is fast, intelligent, and immersive—and it’s powered by the gear we choose today.

2 notes


Text

Google Cloud’s BigQuery Autonomous Data To AI Platform

BigQuery automates data analysis, transformation, and insight generation using AI. AI and natural language interaction simplify difficult operations.

In a fast-paced world, organisations need ready access to data and a real-time data activation flywheel. A new kind of artificial intelligence is emerging that integrates directly into the data environment and works alongside intelligent agents. These agents act as catalysts, opening up opportunities and enabling the self-directed, rapid action that is vital for success. This flywheel uses Google's Data & AI Cloud to activate data in real time. According to Google Cloud, this emphasis is why BigQuery has five times as many customers as the two leading cloud providers that offer only data science and data warehousing solutions.

Examples of top companies:

With BigQuery, Radisson Hotel Group enhanced campaign productivity by 50% and revenue by over 20% by fine-tuning the Gemini model.

By connecting over 170 data sources with BigQuery, Gordon Food Service established a scalable, modern, AI-ready data architecture. This improved real-time response to critical business demands, enabled complete analytics, boosted client usage of their ordering systems, and offered staff rapid insights while cutting costs and boosting market share.

J.B. Hunt is revolutionising logistics for shippers and carriers by integrating Databricks into BigQuery.

General Mills saves over $100 million using BigQuery and Vertex AI to give workers secure access to LLMs for structured and unstructured data searches.

Google Cloud is unveiling many new features with its autonomous data to AI platform powered by BigQuery and Looker, a unified, trustworthy, and conversational BI platform:

New assistive and agentic experiences based on your trusted data and available through BigQuery and Looker will make data scientists, data engineers, analysts, and business users' jobs simpler and faster.

Advanced analytics and data science acceleration: Along with seamless integration with real-time and open-source technologies, BigQuery AI-assisted notebooks improve data science workflows and BigQuery AI Query Engine provides fresh insights.

Autonomous data foundation: BigQuery can collect, manage, and orchestrate any data with its new autonomous features, which include native support for unstructured data processing and open data formats like Iceberg.

Let's look at each change in detail.

User-specific agents

Google Cloud believes everyone should have access to AI. AI-powered assistive experiences in BigQuery and Looker are already generally available, and Google Cloud now also offers specialised agents for specific data tasks, such as:

Data engineering agents integrated with BigQuery pipelines help create data pipelines, convert and enhance data, discover anomalies, and automate metadata development. These agents provide trustworthy data and replace time-consuming and repetitive tasks, enhancing data team productivity. Data engineers traditionally spend hours cleaning, processing, and confirming data.

The data science agent in Google's Colab notebook enables model development at every step. Scalable training, intelligent model selection, automated feature engineering, and faster iteration are possible. This agent lets data science teams focus on complex methods rather than data and infrastructure.

Looker conversational analytics lets everyone work with data in natural language. Expanded capabilities developed with DeepMind let all users follow the agent's actions and easily resolve ambiguities, because the agent can perform advanced analysis and explain its reasoning. Looker's semantic layer boosts accuracy by two-thirds. The agent understands business language like “revenue” and “segments” and can compute metrics in real time, ensuring trustworthy, accurate, and relevant results. An API for conversational analytics is also being introduced to help developers integrate it into processes and apps.

In the BigQuery autonomous data to AI platform, Google Cloud introduced the BigQuery knowledge engine to power assistive and agentic experiences. It uses Gemini to analyse table descriptions, query histories, and schema relationships in order to model data relationships, suggest business glossary terms, and generate metadata on the fly. This knowledge engine grounds AI and agents in business context, enabling semantic search across BigQuery and AI-powered data insights.

All customers may access Gemini-powered agentic and assistive experiences in BigQuery and Looker without add-ons in the existing price model tiers!

Accelerating data science and advanced analytics

BigQuery autonomous data to AI platform is revolutionising data science and analytics by enabling new AI-driven data science experiences and engines to manage complex data and provide real-time analytics.

First, AI improves BigQuery notebooks. Intelligent SQL cells in the notebook can join data sources, understand data context, and make suggestions as you write code. Native exploratory analysis and visualisation capabilities support data exploration and collaboration with peers. Data scientists can also schedule analyses and keep insights up to date. In addition, Google Cloud lets you build dynamic, user-friendly, interactive data apps from notebooks to share insights across the organisation.

This enhanced notebook experience is complemented by the BigQuery AI query engine for AI-driven analytics. The engine lets data scientists work with structured and unstructured data together and add real-world context to it, rather than simply retrieving it. The BigQuery AI query engine co-processes SQL with Gemini, adding natural-language understanding, reasoning, and real-world knowledge at runtime. For example, the engine can process unstructured images and match them to your product catalogue. It supports several use cases, including model enhancement, sophisticated segmentation, and new insights.

Additionally, Google Cloud aims to give users the most cloud-optimised open-source environment. Google Cloud's managed service for Apache Kafka enables real-time data pipelines for event sourcing, model scoring, messaging, and analytics, and BigQuery offers serverless execution of Apache Spark. Customers have almost doubled their serverless Spark use in the last year, and Google Cloud has upgraded this engine to process data 2.7 times faster.

BigQuery lets data scientists utilise SQL, Spark, or foundation models on Google's serverless and scalable architecture to innovate faster without the challenges of traditional infrastructure.

An autonomous data foundation across the data lifecycle

The advanced analytics engines and specialised agents are supported by an autonomous data foundation built for modern data complexity. BigQuery is transforming the environment by making unstructured data a first-class citizen. New platform features, such as orchestration for a variety of data workloads, autonomous and invisible governance, and open formats for flexibility, ensure that your data is always ready for data science or AI work. The platform does this while offering the best cost and reducing operational overhead.

For many companies, unstructured data is their biggest untapped potential. While structured data already supports analysis, the unique insights held in text, audio, video, and images are often underused and locked in siloed systems. BigQuery tackles this issue directly by making unstructured data a first-class citizen through multimodal tables (preview), which integrate structured data with rich, complex data types for unified querying and storage.

To manage this large data estate efficiently, Google Cloud's expanded BigQuery governance gives data stewards and professionals a single view for managing discovery, classification, curation, quality, usage, and sharing, including automatic cataloguing and metadata generation. BigQuery continuous queries use SQL to analyse and act on streaming data regardless of format, ensuring timely insights from all your data streams.

Customers use Google's AI models in BigQuery for multimodal analysis 16 times more than they did last year, driven by advanced support for structured and unstructured multimodal data. According to Google Cloud, BigQuery with Vertex AI is 8–16 times cheaper than independent data warehouse and AI solutions.

Google Cloud maintains an open ecosystem. BigQuery tables for Apache Iceberg combine BigQuery's performance and integrated capabilities with the flexibility of an open data lakehouse, linking Iceberg data to SQL, Spark, AI, and third-party engines in an open and interoperable fashion. This service provides adaptive and autonomous table management, high-performance streaming, automatic AI-generated insights, practically infinite serverless scalability, and improved governance. In the managed solution, Cloud Storage provides fail-safe features and centralised, fine-grained access control management.

Finally, the autonomous data to AI platform optimises itself: it scales resources, manages workloads, and ensures cost-effectiveness. The new BigQuery spend commit unifies spending across the BigQuery platform and allows flexibility in shifting spend across streaming, governance, data processing engines, and more, which simplifies purchasing.

Start your data and AI adventure with BigQuery data migration. Google Cloud wants to know how you innovate with data.

#technology#technews#govindhtech#news#technologynews#BigQuery autonomous data to AI platform#BigQuery#autonomous data to AI platform#BigQuery platform#autonomous data#BigQuery AI Query Engine

Text

Adobe Experience Manager Services USA: Empowering Digital Transformation

Introduction

In today's digital-first world, Adobe Experience Manager (AEM) Services USA have become a key driver for businesses looking to optimize their digital experiences, streamline content management, and enhance customer engagement. AEM is a powerful content management system (CMS) that integrates with AI and cloud technologies to provide scalable, secure, and personalized digital solutions.

With the rapid evolution of online platforms, enterprises across industries such as e-commerce, healthcare, finance, and media are leveraging AEM to deliver seamless and engaging digital experiences. In this blog, we explore how AEM services in the USA are revolutionizing digital content management and highlight the leading AEM service providers offering cutting-edge solutions.

Why Adobe Experience Manager Services Are Essential for Enterprises

AEM is an advanced digital experience platform that enables businesses to create, manage, and optimize digital content efficiently. Companies that implement AEM services in the USA benefit from:

Unified Content Management: Manage web, mobile, and digital assets seamlessly from a centralized platform.

Omnichannel Content Delivery: Deliver personalized experiences across multiple touchpoints, including websites, mobile apps, and IoT devices.

Enhanced User Experience: Leverage AI-driven insights and automation to create engaging and personalized customer interactions.

Scalability & Flexibility: AEM’s cloud-based architecture allows businesses to scale their content strategies efficiently.

Security & Compliance: Ensure data security and regulatory compliance with enterprise-grade security features.

Key AEM Services Driving Digital Transformation in the USA

Leading AEM service providers in the USA offer a comprehensive range of solutions tailored to enterprise needs:

AEM Sites Development: Build and manage responsive, high-performance websites with AEM’s powerful CMS capabilities.

AEM Assets Management: Store, organize, and distribute digital assets effectively with AI-driven automation.

AEM Headless CMS Implementation: Deliver content seamlessly across web, mobile, and digital channels through API-driven content delivery.

AEM Cloud Migration: Migrate to Adobe Experience Manager as a Cloud Service for improved agility, security, and scalability.

AEM Personalization & AI Integration: Utilize Adobe Sensei AI to deliver real-time personalized experiences.

AEM Consulting & Support: Get expert guidance, training, and support to optimize AEM performance and efficiency.
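
To make the headless CMS item in the list above a little more concrete, here is a minimal sketch of the general idea: content is stored once as structured data and served as JSON, and each channel decides how to present it. This is plain Python, not AEM's actual API; the `content_api`, `render_web`, and `render_mobile` names and the content fields are hypothetical and exist only for illustration.

```python
import html
import json

# One piece of content, stored without any presentation markup (hypothetical fields).
content = {"title": "Spring Sale", "body": "Up to 30% off selected items."}


def content_api(item):
    """Stand-in for a headless content endpoint: returns the item as JSON text."""
    return json.dumps(item)


def render_web(payload):
    """Web channel: turns the JSON payload into an HTML fragment."""
    item = json.loads(payload)
    return f"<h1>{html.escape(item['title'])}</h1><p>{html.escape(item['body'])}</p>"


def render_mobile(payload):
    """Mobile channel: maps the same payload onto a simple view model."""
    item = json.loads(payload)
    return {"header": item["title"], "text": item["body"]}


payload = content_api(content)
web_view = render_web(payload)
mobile_view = render_mobile(payload)
```

The point of the split is that the content team edits `content` once, and adding a new channel only means adding another renderer.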

Key Factors Defining Top AEM Service Providers in the USA

Choosing the right AEM partner is crucial for successful AEM implementation in the USA. The best AEM service providers excel in:

Expertise in AEM Development & Customization

Leading AEM companies specialize in custom AEM development, ensuring tailored solutions that align with business goals.

Cloud-Based AEM Solutions

Cloud-native AEM services enable businesses to scale and manage content efficiently with Adobe Experience Manager as a Cloud Service.

Industry-Specific AEM Applications

Customized AEM solutions cater to specific industry needs, from e-commerce personalization to financial services automation.

Seamless AEM Integration

Top providers ensure smooth integration of AEM with existing enterprise tools such as CRM, ERP, and marketing automation platforms.

End-to-End AEM Support & Optimization

Comprehensive support services include AEM migration, upgrades, maintenance, and performance optimization.

Top AEM Service Providers in the USA

Leading AEM service providers offer a range of solutions to help businesses optimize their Adobe Experience Manager implementations. These services include:

AEM Strategy & Consulting

Expert guidance on AEM implementation, cloud migration, and content strategy.

AEM Cloud Migration & Integration

Seamless migration from on-premise to AEM as a Cloud Service, ensuring scalability and security.

AEM Development & Customization

Tailored solutions for AEM components, templates, workflows, and third-party integrations.

AEM Performance Optimization

Enhancing site speed, caching, and content delivery for improved user experiences.

AEM Managed Services & Support

Ongoing maintenance, upgrades, and security monitoring for optimal AEM performance.

The Future of AEM Services in the USA

The future of AEM services in the USA is driven by advancements in AI, machine learning, and cloud computing. Key trends shaping AEM’s evolution include:

AI-Powered Content Automation: AEM’s AI capabilities, such as Adobe Sensei, enhance content personalization and automation.

Headless CMS for Omnichannel Delivery: AEM’s headless CMS capabilities enable seamless content delivery across web, mobile, and IoT.

Cloud-First AEM Deployments: The shift towards Adobe Experience Manager as a Cloud Service is enabling businesses to achieve better performance and scalability.

Enhanced Data Security & Compliance: With growing concerns about data privacy, AEM service providers focus on GDPR and HIPAA-compliant solutions.

Conclusion: Elevate Your Digital Experience with AEM Services USA

As businesses embrace digital transformation, Adobe Experience Manager services in the USA provide a powerful, scalable, and AI-driven solution to enhance content management and customer engagement. Choosing the right AEM partner ensures seamless implementation, personalized experiences, and improved operational efficiency.

🚀 Transform your digital strategy today by partnering with a top AEM service provider in the USA. The future of digital experience management starts with AEM—empowering businesses to deliver exceptional content and customer experiences!

Text

Exploring the Azure Technology Stack: A Solution Architect’s Journey

Kavin

As a solution architect, my career revolves around solving complex problems and designing systems that are scalable, secure, and efficient. The rise of cloud computing has transformed the way we think about technology, and Microsoft Azure has been at the forefront of this evolution. With its diverse and powerful technology stack, Azure offers endless possibilities for businesses and developers alike. My journey with Azure began with Microsoft Azure training online, which not only deepened my understanding of cloud concepts but also helped me unlock the potential of Azure’s ecosystem.

In this blog, I will share my experience working with a specific Azure technology stack that has proven to be transformative in various projects. This stack primarily focuses on serverless computing, container orchestration, DevOps integration, and globally distributed data management. Let’s dive into how these components come together to create robust solutions for modern business challenges.

Understanding the Azure Ecosystem

Azure’s ecosystem is vast, encompassing services that cater to infrastructure, application development, analytics, machine learning, and more. For this blog, I will focus on a specific stack that includes:

Azure Functions for serverless computing.

Azure Kubernetes Service (AKS) for container orchestration.

Azure DevOps for streamlined development and deployment.

Azure Cosmos DB for globally distributed, scalable data storage.

Each of these services has unique strengths, and when used together, they form a powerful foundation for building modern, cloud-native applications.

1. Azure Functions: Embracing Serverless Architecture

Serverless computing has redefined how we build and deploy applications. With Azure Functions, developers can focus on writing code without worrying about managing infrastructure. Azure Functions supports multiple programming languages and offers seamless integration with other Azure services.

Real-World Application

In one of my projects, we needed to process real-time data from IoT devices deployed across multiple locations. Azure Functions was the perfect choice for this task. By integrating Azure Functions with Azure Event Hubs, we were able to create an event-driven architecture that processed millions of events daily. The serverless nature of Azure Functions allowed us to scale dynamically based on workload, ensuring cost-efficiency and high performance.
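
As a rough illustration of the kind of work such an event-driven function does, the sketch below summarizes one batch of IoT readings per device. It is plain Python rather than the Azure Functions SDK, and the event fields (`device_id`, `temperature`) and the `process_batch` name are assumptions, since the project's actual event schema is not described here.

```python
from collections import defaultdict
from statistics import mean


def process_batch(events):
    """Summarize one batch of IoT readings per device.

    `events` is a list of dicts with the hypothetical keys
    `device_id` and `temperature`; malformed events are skipped.
    """
    readings = defaultdict(list)
    for event in events:
        device_id = event.get("device_id")
        temperature = event.get("temperature")
        if device_id is None or not isinstance(temperature, (int, float)):
            continue  # skip malformed events instead of failing the whole batch
        readings[device_id].append(temperature)
    return {
        device_id: {"count": len(values), "avg_temperature": mean(values)}
        for device_id, values in readings.items()
    }


batch = [
    {"device_id": "sensor-1", "temperature": 20.0},
    {"device_id": "sensor-1", "temperature": 22.0},
    {"device_id": "sensor-2", "temperature": 30.0},
    {"device_id": "sensor-3"},  # malformed: no temperature reading
]
summary = process_batch(batch)
```

Keeping the handler a pure function of its input batch is one reason this style scales out easily: any number of instances can process different batches without coordinating with each other.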

Key Benefits:

Auto-scaling: Automatically adjusts to handle workload variations.

Cost-effective: Pay only for the resources consumed during function execution.

Integration-ready: Easily connects with services like Logic Apps, Event Grid, and API Management.

2. Azure Kubernetes Service (AKS): The Power of Containers

Containers have become the backbone of modern application development, and Azure Kubernetes Service (AKS) simplifies container orchestration. AKS provides a managed Kubernetes environment, making it easier to deploy, manage, and scale containerized applications.

Real-World Application

In a project for a healthcare client, we built a microservices architecture using AKS. Each service—such as patient records, appointment scheduling, and billing—was containerized and deployed on AKS. This approach provided several advantages:

Isolation: Each service operated independently, improving fault tolerance.

Scalability: AKS scaled specific services based on demand, optimizing resource usage.

Observability: Using Azure Monitor, we gained deep insights into application performance and quickly resolved issues.

The integration of AKS with Azure DevOps further streamlined our CI/CD pipelines, enabling rapid deployment and updates without downtime.
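
For a sense of how demand-based scaling is decided, the sketch below applies the scaling rule documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). Whether this project used that autoscaler is an assumption, and the `desired_replicas` helper with its `min_replicas` and `max_replicas` bounds is illustrative only; the real autoscaler also applies a tolerance band and stabilization windows that are left out here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Return the replica count suggested by the basic HPA scaling formula."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


# A service running 4 replicas at 90% average CPU, with a 60% target:
scale_up = desired_replicas(4, 90, 60)
# The same service when load drops to 30% average CPU:
scale_down = desired_replicas(4, 30, 60)
# A large spike is capped at max_replicas:
capped = desired_replicas(4, 300, 60)
```

Because each microservice has its own metric and bounds, a busy service such as appointment scheduling can grow without forcing quieter services to scale with it.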

Key Benefits:

Managed Kubernetes: Reduces operational overhead with automated updates and patching.

Multi-region support: Enables global application deployments.

Built-in security: Integrates with Azure Active Directory and offers role-based access control (RBAC).

3. Azure DevOps: Streamlining Development Workflows

Azure DevOps is an all-in-one platform for managing development workflows, from planning to deployment. It includes tools like Azure Repos, Azure Pipelines, and Azure Artifacts, which support collaboration and automation.

Real-World Application

For an e-commerce client, we used Azure DevOps to establish an efficient CI/CD pipeline. The project involved multiple teams working on front-end, back-end, and database components. Azure DevOps provided:

Version control: Using Azure Repos for centralized code management.

Automated pipelines: Azure Pipelines for building, testing, and deploying code.

Artifact management: Storing dependencies in Azure Artifacts for seamless integration.

The result? Deployment cycles that previously took weeks were reduced to just a few hours, enabling faster time-to-market and improved customer satisfaction.
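
The core behavior of such a pipeline, running stages in order and stopping before deployment when an earlier stage fails, can be sketched in a few lines. This is a toy model in plain Python, not Azure Pipelines syntax; the stage functions and the `run_pipeline` helper are hypothetical.

```python
def run_pipeline(stages):
    """Run (name, callable) stages in order and stop at the first failure."""
    results = []
    for name, step in stages:
        try:
            step()
        except Exception as exc:
            results.append((name, f"failed: {exc}"))
            break  # later stages, such as deploy, never run
        results.append((name, "ok"))
    return results


def build_app():
    pass  # placeholder for compiling and packaging


def run_tests():
    raise RuntimeError("2 tests failed")  # simulate a failing test stage


def deploy_app():
    pass  # placeholder for releasing to an environment


results = run_pipeline([
    ("build", build_app),
    ("test", run_tests),
    ("deploy", deploy_app),
])
```

Here the deploy stage is skipped because the test stage failed, which is the safety property that lets teams automate releases with confidence.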

Key Benefits:

End-to-end integration: Unifies tools for seamless development and deployment.

Scalability: Supports projects of all sizes, from startups to enterprises.

Collaboration: Facilitates team communication with built-in dashboards and tracking.

4. Azure Cosmos DB: Global Data at Scale

Azure Cosmos DB is a globally distributed, multi-model database service designed for mission-critical applications. It guarantees low latency, high availability, and scalability, making it ideal for applications requiring real-time data access across multiple regions.

Real-World Application

In a project for a financial services company, we used Azure Cosmos DB to manage transaction data across multiple continents. The database’s multi-region replication ensured data consistency and availability, even during regional outages. Additionally, Cosmos DB’s support for multiple APIs (SQL, MongoDB, Cassandra, etc.) allowed us to integrate seamlessly with existing systems.
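
The availability benefit of multi-region replication can be pictured with a toy model: every region holds a copy of the data, and a read falls back to the next preferred region when the first is down. This is not the Cosmos DB SDK, and it ignores replication lag and Cosmos DB's configurable consistency levels; the `ReplicatedStore` class, region names, and transaction record are all hypothetical.

```python
class ReplicatedStore:
    """Toy multi-region store: each region keeps a full copy of the data."""

    def __init__(self, regions):
        self.replicas = {region: {} for region in regions}
        self.offline = set()  # regions currently simulated as unavailable

    def write(self, key, value):
        # Simplification: the write is copied to every region immediately.
        for data in self.replicas.values():
            data[key] = value

    def read(self, key, preferred_regions):
        """Read from the first preferred region that is online."""
        for region in preferred_regions:
            if region in self.replicas and region not in self.offline:
                return region, self.replicas[region].get(key)
        raise RuntimeError("no preferred region is available")


store = ReplicatedStore(["westeurope", "eastus", "southeastasia"])
store.write("txn-1001", {"amount": 250})

store.offline.add("westeurope")  # simulate a regional outage
served_by, record = store.read("txn-1001", ["westeurope", "eastus"])
```

In this model the outage only affects reads; a real system also has to decide where writes go during an outage and how regions catch up afterwards.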

Key Benefits:

Global distribution: Data is replicated across regions with minimal latency.

Flexibility: Supports various data models, including key-value, document, and graph.

SLAs: Offers industry-leading SLAs for availability, throughput, and latency.

Building a Cohesive Solution

Combining these Azure services creates a technology stack that is flexible, scalable, and efficient. Here’s how they work together in a hypothetical solution:

Data Ingestion: IoT devices send data to Azure Event Hubs.

Processing: Azure Functions processes the data in real-time.

Storage: Processed data is stored in Azure Cosmos DB for global access.

Application Logic: Containerized microservices run on AKS, providing APIs for accessing and manipulating data.

Deployment: Azure DevOps manages the CI/CD pipeline, ensuring seamless updates to the application.
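
The data flow in the steps above can be sketched end to end with in-memory stand-ins. Nothing here calls an Azure service: the queue stands in for Event Hubs, `process_pending` for the function, the dictionary for Cosmos DB, and `get_device` for a microservice API on AKS. All names and the event shape are assumptions made for illustration.

```python
from collections import deque

event_hub = deque()  # stand-in for Azure Event Hubs
database = {}        # stand-in for Azure Cosmos DB


def ingest(event):
    """Data ingestion: a device pushes an event onto the queue."""
    event_hub.append(event)


def process_pending():
    """Processing and storage: drain the queue and keep the latest reading per device."""
    processed = 0
    while event_hub:
        event = event_hub.popleft()
        database[event["device_id"]] = {"last_reading": event["value"]}
        processed += 1
    return processed


def get_device(device_id):
    """Application logic: what an API endpoint might return for one device."""
    return database.get(device_id)


ingest({"device_id": "pump-7", "value": 3.2})
ingest({"device_id": "pump-7", "value": 3.5})
processed = process_pending()
latest = get_device("pump-7")
```

Each stage only touches the one next to it, which is what allows the real services to be scaled, replaced, or deployed independently.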

This architecture demonstrates how Azure’s technology stack can address modern business challenges while maintaining high performance and reliability.

Final Thoughts