#dataintegrity

Explore tagged Tumblr posts

Text

Database services are essential for any business that wants to store and manage its data effectively. We offer a wide range of database services to meet your specific needs, including database design, development, implementation, and support.

For more, visit: https://briskwinit.com/

#DatabaseServices#DatabaseManagement#DataStorage#DataSecurity#DataIntegrity#DataPerformance#DataScalability#DataReliability#DataAvailability#DataAccessibility

6 notes

Text

Building trust in real-world evidence (RWE) is essential for ensuring its credibility and regulatory acceptance. Industry experts provide valuable insights on how to meet the stringent standards required to make RWE data regulatory-grade. By focusing on transparency, data quality, and compliance with regulations, companies can unlock the full potential of RWE in decision-making processes.

0 notes

Text

How Blockchain and AI Can Work Together to Transform Business Intelligence

🚀 Blockchain + AI = The Future of Business Intelligence

Imagine business insights that are not only smart but also secure and trusted. That’s the power of combining Blockchain and AI.

In our latest blog, we explore how this powerful duo is: 🔐 Enhancing data integrity 🤖 Delivering real-time, predictive insights 🏥 Transforming industries from healthcare to FinTech 📦 Optimizing supply chains, asset tracking & more

Discover how your business can unlock BI 2.0 - intelligent, transparent, and future-ready.

👉 Read now: https://www.webkorps.com/blog/how-blockchain-and-ai-can-work-together-to-transform-business-intelligence/

#Blockchain#AI#BusinessIntelligence#DigitalTransformation#Webkorps#SmartAnalytics#AIInsights#BlockchainForBusiness#DataIntegrity#BI2_0#FutureOfWork#SecureAI

0 notes

Text

SQL Assertions with Aggregates and Events

This work presents an efficient and general-purpose approach for enforcing SQL assertions in relational database systems using Event Rules. SQL assertions are powerful integrity constraints capable of expressing complex conditions across multiple tables, but they are not natively supported in most RDBMSs. To overcome this limitation, the proposed method simulates SQL assertions using standard SQL features—such as triggers, views, and procedures—without requiring changes to the database engine.
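As a rough illustration of the general idea (simulating an assertion with standard features rather than engine changes), the sketch below uses a plain SQLite trigger from Python. It is not the paper's Event Rules method. The `department` and `employee` tables, the budget rule, and the helper names `make_db` and `try_hire` are all hypothetical, and only inserts are covered; a fuller simulation would also need triggers for updates and deletes on both tables.

```python
import sqlite3


def make_db():
    """Create an in-memory database with a trigger that emulates an assertion."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE department (
            id     INTEGER PRIMARY KEY,
            budget INTEGER NOT NULL
        );
        CREATE TABLE employee (
            id      INTEGER PRIMARY KEY,
            dept_id INTEGER NOT NULL REFERENCES department(id),
            salary  INTEGER NOT NULL
        );
        -- Hypothetical cross-table rule with an aggregate:
        -- total salaries in a department must not exceed its budget.
        CREATE TRIGGER salary_within_budget
        AFTER INSERT ON employee
        WHEN (SELECT SUM(salary) FROM employee WHERE dept_id = NEW.dept_id)
             > (SELECT budget FROM department WHERE id = NEW.dept_id)
        BEGIN
            SELECT RAISE(ABORT, 'assertion violated: salaries exceed budget');
        END;
    """)
    return conn


def try_hire(conn, dept_id, salary):
    """Insert an employee; return False if the trigger rejects the row."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(
                "INSERT INTO employee (dept_id, salary) VALUES (?, ?)",
                (dept_id, salary),
            )
        return True
    except sqlite3.DatabaseError:
        return False
```

With a department budget of 100, hires of 60 and 40 would be accepted and any further hire rejected, because the trigger re-evaluates the aggregate after each insert.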

#SQL #RDBMS #SQLAssertions #DataIntegrity

International Database Scientist Awards Website Link: https://databasescientist.org/ Nomination Link: https://databasescientist.org/award-nomination/?ecategory=Awards&rcategory=Awardee Contact Us For Enquiry: [email protected]

#DatabaseScience #DataManagement #DatabaseExpert #DataProfessional #DatabaseDesign #DataArchitecture #DatabaseDevelopment #DataSpecialist #DatabaseAdministration #DataEngineer #DatabaseProfessional #DataAnalyst #DatabaseArchitect #DataScientist #DatabaseSecurity #DataStorage #DatabaseSolutions #DataManagementSolutions #DatabaseInnovation #DataExpertise

Youtube: https://www.youtube.com/@databasescientist Instagram: https://www.instagram.com/databasescientist123/ Facebook: https://www.facebook.com/profile.php?id=61577298666367 Pinterest: https://in.pinterest.com/databasescientist/ Blogger: https://www.blogger.com/blog/posts/1267729159104340550 Whatsapp Channel: https://whatsapp.com/channel/0029VbBII1lLSmbfVSNpFT2U

0 notes

Text

Why Data Validation is the Backbone of Long-Term Business Success

In today’s data-driven world, the key to sustained business growth lies in the accuracy and consistency of the data you rely on. The article emphasizes that without proper data validation, even the most sophisticated systems can lead to costly errors, inefficiencies, and missed opportunities. Robust data validation not only enhances decision-making and compliance but also builds a foundation of trust across teams and stakeholders. In essence, data validation isn't just a technical necessity—it’s a strategic advantage that ensures stability, efficiency, and long-term success.

https://postr.blog/why-data-validation-is-crucial-for-long-term-success

#DataValidation#DataAccuracy#BusinessSuccess#DataDriven#DataIntegrity#DataQuality#BusinessGrowth#LongTermSuccess#DataManagement#OperationalEfficiency#SmartDecisions#ValidatedData#BusinessStrategy#TechForBusiness#ReliableData

0 notes

Text

instagram

#DatabaseTransactions#ACIDProperties#RDBMS#SQLReliability#DataIntegrity#TransactionManagement#LearnSQL#TechEducation#DatabaseSecurity#SunshineDigitalServices#Instagram

0 notes

Text

Data Integrity

DATA INTEGRITY POLICIES

Activities performed in manufacturing, analysis, maintenance, training, calibration, packing, cleaning, dispatch, etc. shall be documented in paper or electronic form, online and in real time.

The documented data shall be complete, consistent, attributable, legible, contemporaneously recorded, original or a true copy, and accurate throughout the data lifecycle. No employee shall engage in unethical practices with respect to data integrity.

Attributable

Legible

Contemporaneous

Original

Accurate

Attributable

It should be possible to identify the individual who performed the recorded task. The need to document who performed the task or function is in part to demonstrate that the function was performed by trained and qualified personnel. This applies to changes made to records as well: corrections, deletions, changes, etc.

Legible

All records must be legible; the information must be readable for it to be of any use. This applies to all information that would be required to be considered complete, including all original records or entries. Where the 'dynamic' nature of electronic data (the ability to search, query, trend, etc.) is important to the content and meaning of the record, the ability to interact with the data using a suitable application is important to the 'availability' of the record.

Contemporaneous

The evidence of actions, events, or decisions should be recorded accurately as they take place. This documentation should serve as an accurate attestation of what was done, or what was decided and why, i.e. what influenced the decision at that time.

Original

The original record can be described as the first capture of information, whether recorded on paper (static) or electronically (usually dynamic, depending on the complexity of the system). Information that is originally captured in a dynamic state should remain available in that state.

Accurate

Accurate results and records are achieved through many elements of a robust pharmaceutical quality management system, including:

Equipment-related factors such as qualification, calibration, maintenance, and computer validation.

Policies and procedures to control actions and behaviours, including data review procedures to verify adherence to procedural requirements.

Deviation management, including root cause analysis, impact assessments, and CAPA.

Trained and qualified personnel who understand the importance of following established procedures and documenting their actions and decisions.

Together, these elements aim to ensure the accuracy of information, including scientific data, that is used to make critical decisions about the quality of products.

Complete

All information that would be critical to recreating an event is important when trying to understand the event. The level of detail required for an information set to be considered complete depends on the criticality of the information. A complete record of electronically generated data includes the relevant metadata.

Consistent

Good Documentation Practices should be applied throughout any process, without exception, including deviations that may occur during the process. This includes capturing all changes made to data.

Enduring

Part of ensuring records are available is making sure they exist for the entire period during which they might be needed. This means they need to remain intact and accessible as an indelible, durable record.

Available

Records must be available for review at any time during the required retention period, accessible in a readable format to all applicable personnel who are responsible for their review, whether for routine release decisions, investigations, trending, annual reports, audits, or inspections.

METADATA: Metadata is the contextual information required to understand data. A data value is by itself meaningless without additional information about the data.

Metadata is often described as data about data. Metadata is structured information that describes, explains, or otherwise makes it easier to retrieve, use, or manage data.

Data should be maintained throughout the record's retention period with all associated metadata required to reconstruct the CGMP activity. The relationships between data and their metadata should be preserved in a secure and traceable manner.

AUDIT TRAIL: An audit trail is a secure, computer-generated, time-stamped electronic record that enables the reconstruction of the course of events related to the creation, modification, or deletion of an electronic record.

An audit trail is a chronology of the "who, what, why, and when" of a record.

For example, the audit trail for a high performance liquid chromatography (HPLC) run could include the user name, date/time of the run, the integration parameters used, and details of a reprocessing, if any, including change justification for the reprocessing.

Electronic audit trails include those that track creation, modification, or deletion of data (such as processing parameters and results) and those that track actions at the record or system level (such as attempts to access the system or rename or delete a file).
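The "who, what, why and when" chronology described above can be sketched as a small append-only log. This is a minimal in-memory illustration only: the class names `AuditEntry` and `AuditedRecord` are hypothetical, and nothing here is secure or tamper-evident in the way a validated computerized system would need to be.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEntry:
    who: str          # user who performed the action
    what: str         # "create" or "modify"
    when: datetime    # system-generated UTC timestamp
    why: str          # change justification
    old_value: object
    new_value: object


class AuditedRecord:
    """A value whose every change is appended to an audit trail."""

    def __init__(self, value, user, reason):
        self._value = value
        self._trail = []
        self._log(user, "create", reason, None, value)

    def _log(self, user, action, reason, old, new):
        if not reason:
            raise ValueError("a change justification is required")
        stamp = datetime.now(timezone.utc)  # taken from the system, not the user
        self._trail.append(AuditEntry(user, action, stamp, reason, old, new))

    @property
    def value(self):
        return self._value

    @property
    def trail(self):
        return tuple(self._trail)  # read-only view of the chronology

    def modify(self, new_value, user, reason):
        # Log first, so a rejected change (missing reason) never alters the value.
        self._log(user, "modify", reason, self._value, new_value)
        self._value = new_value
```

For the HPLC example, a reprocessed result would appear as a second entry carrying the previous value, the new value, the analyst, and the justification for reprocessing.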

0 notes

Text

RHIT Question of the Day

🗂️ Given these data dictionary details for ADMISSION_DATE (definition, data type = date, field length = 15, required = yes, default = none, template = none), data integrity would be better assured if:

Options:

a) The template was defined

b) The data type was numeric

c) The field was not required

d) The field length was longer

Answer: a) The template was defined

💡 Why? Defining a template (or data entry format) helps ensure consistent and accurate input by guiding users on how to enter the date correctly (e.g., MM/DD/YYYY). This reduces errors and supports data integrity.

Changing the data type to numeric wouldn't suit dates.

Making the field not required could lead to missing data.

Extending the field length isn’t necessary if the current length accommodates the date format.
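A defined template can be enforced at data entry. The sketch below assumes the MM/DD/YYYY format given as an example in the explanation; the function name `parse_admission_date` is hypothetical and not part of any RHIT material.

```python
import re
from datetime import datetime

# Assumed template for ADMISSION_DATE: MM/DD/YYYY with zero padding.
TEMPLATE_PATTERN = re.compile(r"\d{2}/\d{2}/\d{4}")
TEMPLATE_FORMAT = "%m/%d/%Y"


def parse_admission_date(text):
    """Return a date if text matches the template, otherwise raise ValueError."""
    text = text.strip()
    if not text:
        # The data dictionary marks the field as required.
        raise ValueError("ADMISSION_DATE is required")
    if not TEMPLATE_PATTERN.fullmatch(text):
        raise ValueError(f"ADMISSION_DATE must match MM/DD/YYYY, got {text!r}")
    # strptime also rejects impossible dates such as 02/30/2024.
    return datetime.strptime(text, TEMPLATE_FORMAT).date()
```

The regular expression checks the shape of the entry, while `strptime` checks that the shape describes a real calendar date; both checks are needed to reject values like `3/7/2024` and `02/30/2024`.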

#RHIT#DataIntegrity#HealthInformation#DataDictionary#HIM#HealthcareData#HealthIT#CodingChallenge#MedicalRecords

0 notes

Text

Planning a System Upgrade or Moving to the Cloud?

Don’t let data migration become a disaster waiting to happen! 😬

When businesses shift data from one system to another, even the tiniest error can lead to: ❌ Broken workflows ❌ Data loss ❌ Downtime ❌ Compliance issues

That’s why Data Migration Testing Services are crucial before, during, and after your move. ✔️

Ensure: ✅ Every record is transferred accurately ✅ Your systems stay secure & compliant ✅ Business runs smoothly with zero disruptions
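One common way to check that every record was transferred accurately is to compare a row count and an order-independent checksum of the source and target tables. The sketch below is a minimal illustration under stated assumptions: the function name `table_fingerprint` is hypothetical, rows are plain tuples, and `repr` is used as the serialization, so values must have the same Python types on both sides (for example, `1` and `1.0` would be treated as different).

```python
import hashlib


def table_fingerprint(rows):
    """Return (row_count, checksum) for an iterable of row tuples.

    The checksum does not depend on row order, so the source and target
    can be read in whatever order each system returns them.
    """
    digests = sorted(
        hashlib.sha256(repr(tuple(row)).encode("utf-8")).hexdigest()
        for row in rows
    )
    combined = hashlib.sha256("".join(digests).encode("ascii")).hexdigest()
    return len(digests), combined
```

If the fingerprints of the source and target differ, a row-level comparison is still needed to find which records were lost or altered; the fingerprint only says whether a difference exists.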

#DataMigration#SoftwareTesting#MigrationTesting#DataIntegrity#BusinessContinuity#TechBlog#ITServices#TeamVirtuoso#CloudMigration

0 notes

Text

Efficient Techniques for Data Reconciliation

Data Reconciliation is the process of ensuring consistency and accuracy between data from different sources. This guide explores efficient techniques such as automated matching algorithms, rule-based validation, and anomaly detection. Implementing these methods helps minimize errors, enhance data integrity, and support reliable decision-making.
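A simple form of automated matching is key-based reconciliation: pair records from two sources on a shared key, then report records missing on either side and fields that disagree. The sketch below is an illustration only; the function name `reconcile` and the dictionary-per-record layout are assumptions, not taken from the linked guide.

```python
def reconcile(source, target, key):
    """Compare two lists of dict records that share a unique key field."""
    src = {row[key]: row for row in source}
    tgt = {row[key]: row for row in target}

    mismatched = {}
    for k in src.keys() & tgt.keys():
        fields = src[k].keys() | tgt[k].keys()
        # Map each differing field to its (source value, target value) pair.
        diff = {
            f: (src[k].get(f), tgt[k].get(f))
            for f in fields
            if src[k].get(f) != tgt[k].get(f)
        }
        if diff:
            mismatched[k] = diff

    return {
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "missing_in_source": sorted(tgt.keys() - src.keys()),
        "mismatched": mismatched,
    }
```

Exact equality is the simplest matching rule; rule-based validation would replace the `!=` comparison with tolerances or normalization (for example, trimming whitespace or rounding amounts) where the sources are known to differ harmlessly.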

0 notes

Text

Stop Letting Bad Data Hurt Your Business

Did you know that businesses lose millions each year due to poor data quality? Errors in customer databases, financial miscalculations, and flawed analytics can derail decision-making and damage credibility. The Data Quality Concepts course by iceDQ offers a step-by-step roadmap for professionals to understand and eliminate data issues. It focuses on detecting root causes, implementing controls, and establishing long-term monitoring strategies. Perfect for business analysts, QA testers, and data professionals, the course features practical use cases and implementation techniques that help identify errors early. You’ll also learn to align data quality with business objectives, which is critical for digital transformation projects.

👉 Don’t let your business suffer—take the course by iceDQ and protect your data assets!

#DataQuality#icedq#DataTesting#DataGovernance#DataReliability#DataCertification#DataQualityDimensions#DataIntegrity#DataAccuracy#DataCompleteness#DataValidation

0 notes

Text

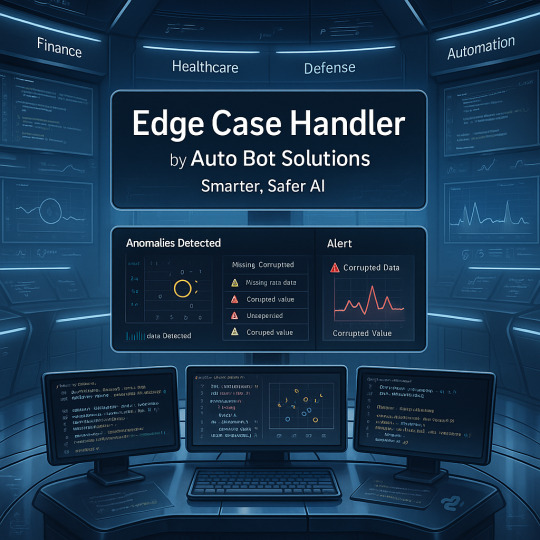

We’ve all seen it: your model’s performing great, then one weird data point sneaks in and everything goes sideways. That single outlier, that unexpected format, that missing field: any one of them is enough to send even a robust AI pipeline off course.

That’s exactly why we built the Edge Case Handler at Auto Bot Solutions.

It’s a core part of the G.O.D. Framework (Generalized Omni-dimensional Development) https://github.com/AutoBotSolutions/Aurora, designed to think ahead by automatically flagging, handling, and documenting anomalies before they escalate.

Whether it’s:

Corrupted inputs,

Extreme values,

Missing data,

Inconsistent types, or

Pattern anomalies you didn’t even anticipate

The module detects and responds gracefully, keeping systems running even in unpredictable environments. It’s about building AI you can trust, especially when real-time, high-stakes decisions are on the line: think finance, autonomous systems, defense, or healthcare.

Some things it does right out of the box:

Statistical anomaly detection

Missing data strategies (imputation, fallback, or rejection)

Live & log-based debugging

Input validation & consistency enforcement

Custom thresholds and behavior control

Built in Python. Fully open-source. Actively maintained.

GitHub: https://github.com/AutoBotSolutions/Aurora/blob/Aurora/ai_edge_case_handling.py

Full docs & templates:

Overview: https://autobotsolutions.com/artificial-intelligence/edge-case-handler-reliable-detection-and-handling-of-data-edge-cases/

Technical details: https://autobotsolutions.com/god/stats/doku.php?id=ai_edge_case_handling

Template: https://autobotsolutions.com/god/templates/ai_edge_case_handling.html

We’re not just catching errors; we’re making AI more resilient, transparent, and real-world ready.
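To make two of the listed capabilities concrete, here is a generic sketch of statistical anomaly detection and missing-data strategies. It is not the Edge Case Handler's actual code or API: the function names `flag_outliers` and `fill_missing`, the modified z-score method, and the 3.5 threshold are all assumptions chosen for illustration.

```python
import statistics


def flag_outliers(values, threshold=3.5):
    """Return indexes of values whose modified z-score exceeds the threshold.

    Uses the median and the median absolute deviation (MAD), which a single
    extreme value distorts far less than the mean and standard deviation.
    """
    med = statistics.median(values)
    mad = statistics.median(abs(v - med) for v in values)
    if mad == 0:
        return []  # simplification: no spread to measure against
    return [
        i for i, v in enumerate(values)
        if 0.6745 * abs(v - med) / mad > threshold
    ]


def fill_missing(values, strategy="impute", fallback=0.0):
    """Handle None entries by imputation, a fallback value, or rejection."""
    present = [v for v in values if v is not None]
    if len(present) == len(values):
        return list(values)
    if strategy == "reject":
        raise ValueError("input contains missing values")
    if strategy == "impute":
        if not present:
            raise ValueError("no observed values to impute from")
        fill = statistics.median(present)
    elif strategy == "fallback":
        fill = fallback
    else:
        raise ValueError(f"unknown strategy: {strategy!r}")
    return [fill if v is None else v for v in values]
```

A mean-based z-score would miss the outlier in a short series such as `[10, 11, 9, 10, 12, 10, 11, 500]`, because the extreme value inflates the standard deviation it is measured against; that is the reason for the median-based variant here.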

#AIFramework#AnomalyDetection#DataIntegrity#AI#AIModule#DataScience#OpenSourceAI#ResilientAI#PythonAI#GODFramework#MachineLearning#AutoBotSolutions#EdgeCaseHandler

0 notes

Text

instagram

#SunshineDigital#DataNormalization#RDBMS#DatabaseDesign#DataIntegrity#SQLTips#RelationalDatabase#TechEducation#DataEfficiency#DatabaseOptimization#LearnSQL#Instagram

0 notes

Text

Seeking reliable and robust stability study services? We offer state-of-the-art, fully GMP-compliant solutions with a focus on data integrity and regulatory compliance. 🌏 Our qualified walk-in stability chambers accommodate a range of environmental storage conditions, including 25°C/60%RH, 30°C/65%RH, 30°C/75%RH, 40°C/75%RH, and 5°C, catering to diverse product requirements and climatic zone simulations. We go beyond standard testing. Our comprehensive services encompass protocol preparation, meticulous sample management, proper documentation, access controls, full traceability, and thorough physical, chemical, and microbial analyses. Benefit from our 21 CFR Part 11 compliant monitoring systems, complete with remote alarms and SMS notifications, ensuring the continuous integrity of your stability studies. Trust Eurofins BioPharma Product Testing India for stability studies that deliver accurate data and peace of mind. Contact us to discuss your specific needs.

0 notes

Text

Blockchain x IoT: The Future of Secure, Connected Systems

Blockchain and IoT are reshaping industries by enabling secure, automated, and intelligent systems. From real-time supply chain tracking to tamper-proof healthcare data, Prishusoft helps businesses harness this powerful duo to build smart, scalable solutions. Read more: https://www.linkedin.com/posts/prishusoft_blockchaintechnology-iotinnovation-smartbusiness-activity-7318162490222161922-xlZP?utm_source=share&utm_medium=member_desktop&rcm=ACoAACydcx8BOk3ltpz9_aW0C_sUnZHAJU7QvTM

0 notes

Text

Blockchain ensures every transaction is verified and immutable. 🔐🌐 No alterations, no fraud—just trust, transparency, and security. The future of transactions is here! 🚀💡 Click this link : https://tinyurl.com/y9exyz7b

#blockchainverified#immutableledger#decentralizedfuture#trustlesssystems#cryptoinnovation#dataintegrity#web3tech#securetransactions#transparencymatters#blockchainforgood#smartcontracts#openfinance#techdisruption#digitaltrust#nextgentech#web3community#futureoffinance#peertopeer#distributedledger#fintechrevolution#tokeneconomy#digitaltransformation#defi2025#blockchainecosystem#cybersecurity#trustthroughtech

0 notes