#deep learning in data science


In today’s data-driven world, deep learning has become one of the most transformative and powerful technologies within the field of data science. Whether you’re new to AI or just stepping into the domain of data science, understanding deep learning is essential for unlocking the full potential of modern data analysis, automation, and predictive modeling.

What is Deep Learning?

Deep learning is a subset of machine learning built on artificial neural networks, which are loosely inspired by the structure and function of the human brain. These networks are composed of multiple layers (hence the term “deep”), which allow the system to learn complex patterns and make decisions based on vast amounts of data. Unlike traditional algorithms, deep learning models can perform feature extraction automatically, reducing the need for manual feature engineering and often improving accuracy in tasks like image recognition, natural language processing, and speech translation.

Why Should Beginners Learn Deep Learning?

Deep learning is at the core of many cutting-edge innovations—such as self-driving cars, recommendation engines, virtual assistants, and advanced fraud detection systems. For aspiring data scientists, learning deep learning opens the door to working on these exciting technologies. As companies increasingly adopt AI-driven solutions, the demand for professionals skilled in deep learning is growing rapidly across sectors like healthcare, finance, retail, and cybersecurity.

How Does Deep Learning Work?

At the heart of deep learning are artificial neural networks (ANNs), which process input data through interconnected layers. Each layer transforms the input data into a more abstract representation, enabling the model to identify relationships and patterns that would be difficult for humans or simple algorithms to spot.

For example, in an image classification task, a deep learning model first detects basic shapes and edges in early layers. As the data passes through more layers, the model begins recognizing more complex patterns like faces or objects. This hierarchical learning structure is what makes deep learning so powerful.
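To make the layer-by-layer idea concrete, here is a minimal sketch of a forward pass in plain Python. Everything in it is an illustrative assumption rather than a real framework API: the layer sizes, the random untrained weights, the ReLU and softmax choices, and the helper names. Because the weights are untrained, the output means nothing; the point is only to show how each layer turns the previous layer's output into a new representation.

```python
import math
import random

def relu(values):
    # Keep positive values, replace negative ones with zero.
    return [max(0.0, v) for v in values]

def softmax(values):
    # Turn raw scores into probabilities that sum to 1.
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def dense(inputs, weights, biases):
    # One fully connected layer: a weighted sum per output unit plus a bias.
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def random_layer(rng, n_in, n_out):
    # Hypothetical untrained layer with small random weights.
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

rng = random.Random(0)
layers = [random_layer(rng, 4, 8), random_layer(rng, 8, 8), random_layer(rng, 8, 3)]

def forward(x):
    # Return the representation after every layer, starting with the input.
    activations = [x]
    for index, (weights, biases) in enumerate(layers):
        z = dense(activations[-1], weights, biases)
        is_last = index == len(layers) - 1
        activations.append(softmax(z) if is_last else relu(z))
    return activations

activations = forward([0.5, -1.2, 3.0, 0.7])
for depth, values in enumerate(activations):
    print(f"layer {depth}: {len(values)} values")
```

In a trained image model the early entries of `activations` would correspond to simple features and the later ones to more abstract ones; here they are just numbers flowing through the same structure.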

Getting Started with Deep Learning

Beginners can start by learning fundamental concepts such as:

Neurons and neural networks

Activation functions

Backpropagation and optimization

Training and testing datasets

Overfitting and regularization techniques

Popular frameworks like TensorFlow, Keras, and PyTorch make it easier for newcomers to build and experiment with deep learning models. Additionally, platforms like Google Colab offer free GPU access, allowing users to run models without investing in high-end hardware.

Deep Learning vs. Machine Learning

While traditional machine learning typically relies on structured data and manual feature engineering, deep learning thrives on large datasets and extracts features automatically. This makes deep learning better suited to unstructured data such as images, audio, and text. However, it also requires more computational resources and longer training times.

Final Thoughts

Deep learning is no longer just a niche technology—it’s becoming a foundational skill for data scientists worldwide. Starting your deep learning journey today will prepare you for the innovations of tomorrow. With the right resources, curiosity, and hands-on practice, even beginners can master this powerful discipline and build smart, data-driven solutions that shape the future.

#deep learning in data science#beginner's guide to deep learning#neural networks explained#machine learning vs deep learning#deep learning applications


HT @dataelixir

#data science#data scientist#data scientists#machine learning#analytics#programming#data analytics#artificial intelligence#deep learning#llm


The Mathematical Foundations of Machine Learning

In the world of artificial intelligence, machine learning is a crucial component that enables computers to learn from data and improve their performance over time. However, the math behind machine learning is often shrouded in mystery, even for those who work with it every day. Anil Ananthaswamy, author of the book "Why Machines Learn," sheds light on the elegant mathematics that underlies modern AI, and his journey is a fascinating one.

Ananthaswamy's interest in machine learning began when he started writing about it as a science journalist. His software engineering background sparked a desire to understand the technology from the ground up, leading him to teach himself coding and build simple machine learning systems. This exploration eventually led him to appreciate the mathematical principles that underlie modern AI. As Ananthaswamy notes, "I was amazed by the beauty and elegance of the math behind machine learning."

Ananthaswamy highlights the elegance of machine learning mathematics, which goes beyond the commonly known subfields of calculus, linear algebra, probability, and statistics. He points to specific theorems and proofs, such as the 1959 proof related to artificial neural networks, as examples of that elegance. For instance, gradient descent, a fundamental algorithm used in machine learning, is a powerful example of how math can be used to optimize model parameters.
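As a small illustration of gradient descent, here is a sketch that fits a straight line to five points by repeatedly stepping against the gradient of the mean squared error. The data, the learning rate, the step count, and the function name are all assumptions chosen for the example; they do not come from the book or the interview.

```python
def gradient_descent(xs, ys, learning_rate=0.05, steps=2000):
    # Fit y = w * x + b by minimizing the mean squared error.
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of the mean squared error with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Move a small step in the direction that lowers the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # made-up points lying exactly on y = 2x + 1
w, b = gradient_descent(xs, ys)
print(round(w, 4), round(b, 4))
```

The same loop, with gradients computed by backpropagation instead of by hand, is the basic shape of how neural networks are trained.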

Ananthaswamy emphasizes the need for a broader understanding of machine learning among non-experts, including science communicators, journalists, policymakers, and users of the technology. He believes that only when we understand the math behind machine learning can we critically evaluate its capabilities and limitations. This is crucial in today's world, where AI is increasingly being used in various applications, from healthcare to finance.

A deeper understanding of machine learning mathematics has significant implications for society. It can help us to evaluate AI systems more effectively, develop more transparent and explainable AI systems, and address AI bias and ensure fairness in decision-making. As Ananthaswamy notes, "The math behind machine learning is not just a tool, but a way of thinking that can help us create more intelligent and more human-like machines."

The Elegant Math Behind Machine Learning (Machine Learning Street Talk, November 2024)


Matrices are used to organize and process complex data, such as images, text, and user interactions, making them a cornerstone in applications like Deep Learning (e.g., neural networks), Computer Vision (e.g., image recognition), Natural Language Processing (e.g., language translation), and Recommendation Systems (e.g., personalized suggestions). To leverage matrices effectively, AI relies on key mathematical concepts like Matrix Factorization (for dimension reduction), Eigendecomposition (for stability analysis), Orthogonality (for efficient transformations), and Sparse Matrices (for optimized computation).

The Applications of Matrices - What I wish my teachers told me way earlier (Zach Star, October 2019)


Transformers are a type of neural network architecture introduced in 2017 by Vaswani et al. in the paper “Attention Is All You Need”. They revolutionized the field of NLP by outperforming traditional recurrent neural network (RNN) and convolutional neural network (CNN) architectures in sequence-to-sequence tasks. The primary innovation of transformers is the self-attention mechanism, which allows the model to weigh the importance of different words in the input data irrespective of their positions in the sentence. This is particularly useful for capturing long-range dependencies in text, which was a challenge for RNNs due to vanishing gradients.

Transformers have become the standard for machine translation tasks, offering state-of-the-art results in translating between languages. They are used for both abstractive and extractive summarization, generating concise summaries of long documents. Transformers help in understanding the context of questions and identifying relevant answers from a given text. By analyzing the context and nuances of language, transformers can accurately determine the sentiment behind text. While initially designed for sequential data, variants of transformers (e.g., Vision Transformers, ViT) have been successfully applied to image recognition tasks, treating images as sequences of patches. Transformers are used to improve the accuracy of speech-to-text systems by better modeling the sequential nature of audio data. The self-attention mechanism can be beneficial for understanding patterns in time series data, leading to more accurate forecasts.
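The self-attention idea can be sketched in a few lines of plain Python. This is a deliberately simplified version and should be read as an assumption-laden illustration: it uses the token vectors directly as queries, keys, and values, whereas a real transformer first applies learned projection matrices and uses several attention heads. The three toy token vectors are made up.

```python
import math

def softmax(values):
    # Turn raw scores into weights that sum to 1.
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    # Scaled dot-product attention with queries = keys = values = tokens
    # (a simplification: no learned projections, a single head).
    d = len(tokens[0])
    outputs, all_weights = [], []
    for query in tokens:
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
                  for key in tokens]
        weights = softmax(scores)
        # Each output is a weighted average of all token vectors.
        mixed = [sum(w * value[j] for w, value in zip(weights, tokens))
                 for j in range(d)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

tokens = [[1.0, 0.0, 1.0], [0.0, 1.0, 0.0], [1.0, 0.0, 0.9]]  # toy embeddings
outputs, weights = self_attention(tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Every token's score against every other token is computed the same way regardless of how far apart they sit in the sequence, which is why distance alone does not weaken the connection between two related words.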

Attention is all you need (Umar Jamil, May 2023)


Geometric deep learning is a subfield of deep learning that focuses on the study of geometric structures and their representation in data. This field has gained significant attention in recent years.

Michael Bronstein: Geometric Deep Learning (MLSS Kraków, December 2023)


Traditional Geometric Deep Learning, while powerful, often relies on the assumption of smooth geometric structures. However, real-world data frequently resides in non-manifold spaces where such assumptions are violated. Topology, with its focus on the preservation of proximity and connectivity, offers a more robust framework for analyzing these complex spaces. The inherent robustness of topological properties against noise further solidifies the rationale for integrating topology into deep learning paradigms.

Cristian Bodnar: Topological Message Passing (Michael Bronstein, August 2022)


Sunday, November 3, 2024

#machine learning#artificial intelligence#mathematics#computer science#deep learning#neural networks#algorithms#data science#statistics#programming#interview#ai assisted writing#machine art#Youtube#lecture


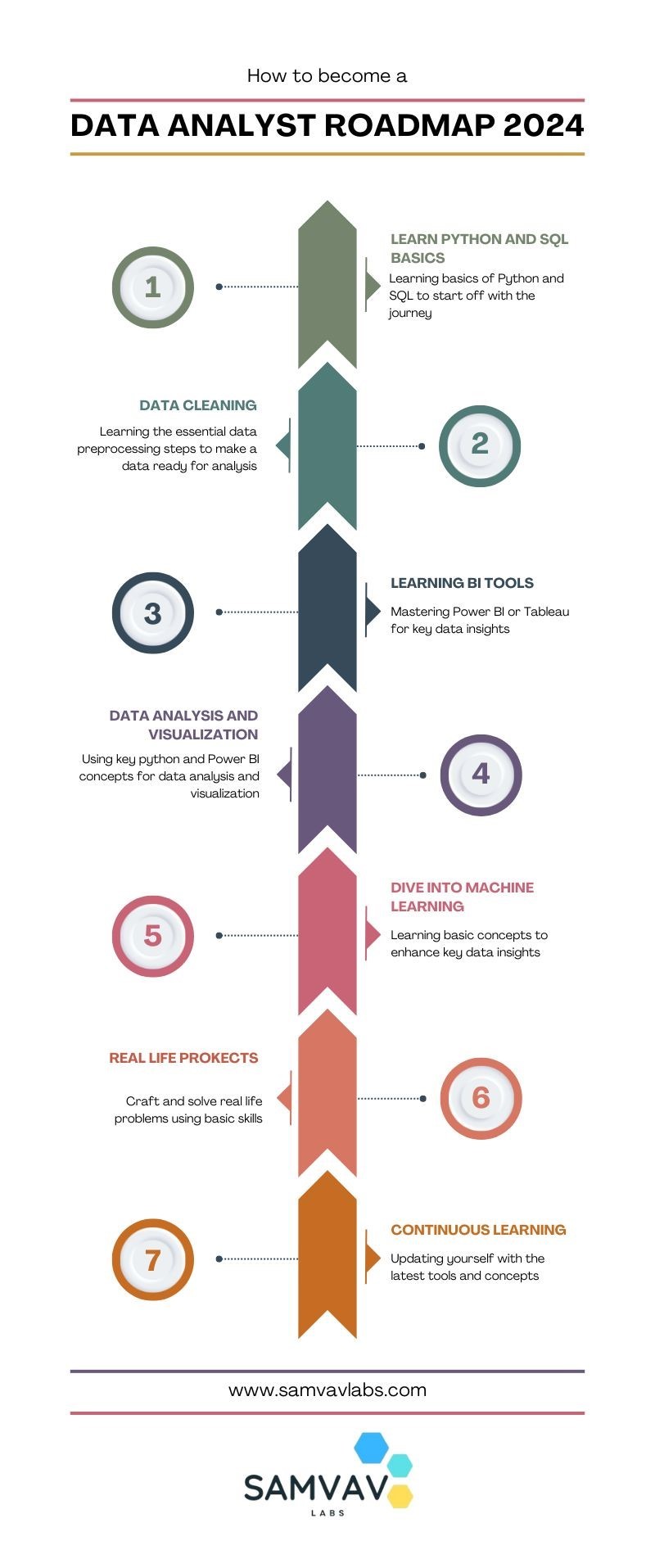

Data Analyst Roadmap for 2024!

Cracking the Data Analyst Roadmap for 2024! Kick off your journey by mastering Python for data manipulation magic, and dazzle stakeholders with insights using Power BI or Tableau. Don't forget that SQL proficiency and hands-on projects refine your skillset, and never overlook the importance of effective communication and problem-solving. Are you checking off these milestones on your path to success? 📌 For more details, visit our website: https://www.samvavlabs.com #DataAnalyst2024 #CareerGrowth #roadmap #DataAnalyst #samvavlabs #roadmap2024 #dataanalystroadmap #datavisualization

#business analytics#data analytics#data analyst#machinelearning#data visualization#datascience#deep learning#data analyst training#dataanalystcourseinKolkata#data analyst certification#data analyst course#data science course#business analyst


Artificial Intelligence: Transforming the Future of Technology

Introduction: Artificial intelligence (AI) has become increasingly prominent in our everyday lives, revolutionizing the way we interact with technology. From virtual assistants like Siri and Alexa to predictive algorithms used in healthcare and finance, AI is shaping the future of innovation and automation.

Understanding Artificial Intelligence

Artificial intelligence (AI) involves creating computer systems capable of performing tasks that usually require human intelligence, including visual perception, speech recognition, decision-making, and language translation. By utilizing algorithms and machine learning, AI can analyze vast amounts of data and identify patterns to make autonomous decisions.

Applications of Artificial Intelligence

Healthcare: AI is being used to streamline medical processes, diagnose diseases, and personalize patient care.

Finance: Banks and financial institutions are leveraging AI for fraud detection, risk management, and investment strategies.

Retail: AI-powered chatbots and recommendation engines are enhancing customer shopping experiences.

Automotive: Self-driving cars are a prime example of AI technology revolutionizing transportation.

How Artificial Intelligence Works

AI systems are designed to mimic human intelligence by processing large datasets, learning from patterns, and adapting to new information. Machine learning algorithms and neural networks enable AI to continuously improve its performance and make more accurate predictions over time.

Advantages of Artificial Intelligence

Efficiency: AI can automate repetitive tasks, saving time and increasing productivity.

Precision: AI algorithms can analyze data with precision, leading to more accurate predictions and insights.

Personalization: AI can tailor recommendations and services to individual preferences, enhancing the customer experience.

Challenges and Limitations

Ethical Concerns: The use of AI raises ethical questions around data privacy, algorithm bias, and job displacement.

Security Risks: As AI becomes more integrated into critical systems, the risk of cyber attacks and data breaches increases.

Regulatory Compliance: Organizations must adhere to strict regulations and guidelines when implementing AI solutions to ensure transparency and accountability.

Conclusion: As artificial intelligence continues to evolve and expand its capabilities, it is essential for businesses and individuals to adapt to this technological shift. By leveraging AI's potential for innovation and efficiency, we can unlock new possibilities and drive progress in various industries. Embracing artificial intelligence is not just about staying competitive; it is about shaping a future where intelligent machines work hand in hand with humans to create a smarter and more connected world.

Syntax Minds is a training institute located in Hyderabad. The institute provides various technical courses, typically focusing on software development, web design, and digital marketing. Their curriculum often includes subjects like Java, Python, Full Stack Development, Data Science, Machine Learning, Angular JS, React JS, and other tech-related fields.

For the most accurate and up-to-date information, I recommend checking their official website or contacting them directly for details on courses, fees, batch timings, and admission procedures.



Day 43/100 days of productivity | Tue 2 Apr, 2024

Worked on a coding task at work today

Continued learning from my Deep Learning course (did 1 hour of study!)


youtube

If you're a data scientist, this is a must watch. Andrej Karpathy continues to be one of my favorite data scientists. In this video, he explains and walks through back-prop in the most intuitive way I've ever seen to date.


Hyperparameter tuning in machine learning

The performance of a machine learning model is crucial in the dynamic world of artificial intelligence, and there are many algorithms to choose from when solving a business problem. Some algorithms, such as linear regression and logistic regression in their basic form, have few or no settings to adjust, so they are trained as they are. For other algorithms, some parameter values are neither learned from the data nor fixed in advance. These hyperparameters have to be chosen before training, and tuning them can change the model's performance considerably.

Here's a complete guide to Hyperparameter tuning in machine learning in Python!
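As a taste of what tuning looks like, here is a minimal grid-search sketch in plain Python. It is an illustration under stated assumptions, not the method from the linked guide: the model is a one-feature ridge regression without an intercept, the hyperparameter is the penalty strength `lam`, and the tiny training and validation sets are made up. Libraries such as scikit-learn provide ready-made tools for this, but the loop below shows the underlying idea.

```python
def fit_ridge(xs, ys, lam):
    # Closed-form ridge solution for a single feature and no intercept:
    # w = sum(x * y) / (sum(x^2) + lam)
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)

def mse(xs, ys, w):
    # Mean squared error of the predictions w * x.
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Made-up data: train on one part, judge each candidate on the held-out part.
train_x, train_y = [1.0, 2.0, 3.0, 4.0], [2.1, 3.9, 6.2, 7.8]
valid_x, valid_y = [5.0, 6.0], [10.1, 11.8]

candidates = [0.0, 0.1, 1.0, 10.0]  # hypothetical grid of penalty strengths
scores = {}
for lam in candidates:
    w = fit_ridge(train_x, train_y, lam)      # parameter learned from data
    scores[lam] = mse(valid_x, valid_y, w)    # hyperparameter judged on held-out data

best_lam = min(scores, key=scores.get)
print(best_lam, scores[best_lam])
```

The weight `w` is learned from the training data, while `lam` is chosen by comparing validation scores. That split is the difference between a parameter and a hyperparameter.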

#datascience #dataanalytics #dataanalysis #statistics #machinelearning #python #deeplearning #supervisedlearning #unsupervisedlearning

#machine learning#data analysis#data science#artificial intelligence#data analytics#deep learning#python#statistics#unsupervised learning#feature selection


Product Manager, Scaled Analytics

Job title: Product Manager, Scaled Analytics
Company: London Stock Exchange Group
Job description: on Databricks Experience with Financial Services data products and use cases Experience building GenAI products on Databricks… critical role we have in helping to re-engineer the financial ecosystem to support and drive sustainable economic growth…
Expected salary:
Location: London
Job date: Fri, 20…

#Aerospace#Android#audio-dsp#Azure#cleantech#cloud-computing#Cybersecurity#data-science#deep-learning#DevOps#dotnet#Ecommerce#embedded-systems#erp#ethical AI#ethical-hacking#gcp#GenAI Engineer#GIS#iOS#marine-tech#mobile-development#no-code#rpa#Salesforce#sharepoint#ux-design#visa-sponsorship#vr-ar


Best Deep Learning Training Institute in Delhi | Brillica Services


ML Code Challenges HT @dataelixir

#data science#data scientist#data scientists#machine learning#programming#deep learning#artificial intelligence


High Scope of Learning Deep

Quick note: if you’re viewing this via email, come to the site for better viewing. Enjoy! Oh lord, Z-Daddy is going to talk about the great machine uprising. This is so much better than Netflix and chilling. Photo by Andrea Piacquadio, please support by following @pexel.com Have you ever sat on the couch vegging out to your favorite episode of My 600 lb. life and…


Deep Learning, Deconstructed: A Physics-Informed Perspective on AI’s Inner Workings

Dr. Yasaman Bahri’s seminar offers a profound glimpse into the complexities of deep learning, merging empirical successes with theoretical foundations. Dr. Bahri’s distinct background, weaving together statistical physics, machine learning, and condensed matter physics, uniquely positions her to dissect the intricacies of deep neural networks. Her journey from a physics-centric PhD at UC Berkeley, influenced by computer science seminars, exemplifies the burgeoning synergy between physics and machine learning, underscoring the value of interdisciplinary approaches in elucidating deep learning’s mysteries.

At the heart of Dr. Bahri’s research lies the intriguing equivalence between neural networks and Gaussian processes in the infinite width limit, facilitated by the Central Limit Theorem. Applied to a network with randomly initialized weights, the theorem implies that the distribution of the network's outputs approaches a Gaussian as the width of the network increases, which provides a probabilistic framework for understanding neural network behavior. The derivation of Gaussian processes from various neural network architectures not only yields state-of-the-art kernels but also sheds light on the dynamics of optimization, enabling more precise predictions of model performance.
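The Central Limit Theorem argument can be checked with a small simulation. The sketch below is an illustration under assumptions of my own, not Dr. Bahri's derivation: it uses a one-hidden-layer tanh network with standard normal weights, a fixed input of 1.0, and excess kurtosis (which is zero for a Gaussian) as a rough measure of how Gaussian the output distribution looks across random initializations.

```python
import math
import random

def random_network_output(rng, width, x):
    # One hidden layer: output = (1 / sqrt(width)) * sum_i v_i * tanh(w_i * x),
    # with every weight drawn independently from a standard normal.
    total = 0.0
    for _ in range(width):
        w = rng.gauss(0.0, 1.0)
        v = rng.gauss(0.0, 1.0)
        total += v * math.tanh(w * x)
    return total / math.sqrt(width)

def excess_kurtosis(samples):
    # Fourth standardized moment minus 3; equals 0 for a Gaussian.
    n = len(samples)
    mean = sum(samples) / n
    m2 = sum((s - mean) ** 2 for s in samples) / n
    m4 = sum((s - mean) ** 4 for s in samples) / n
    return m4 / (m2 * m2) - 3.0

rng = random.Random(0)
x = 1.0
# The width-1 network is cheap to sample, so it gets more draws.
narrow = [random_network_output(rng, 1, x) for _ in range(20000)]
wide = [random_network_output(rng, 100, x) for _ in range(4000)]
narrow_kurtosis = excess_kurtosis(narrow)
wide_kurtosis = excess_kurtosis(wide)
print(round(narrow_kurtosis, 2), round(wide_kurtosis, 2))
```

With a single hidden unit the output distribution has clearly heavier tails than a Gaussian, while at width 100 the excess kurtosis sits close to zero, which is the behavior the infinite-width limit describes.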

The discussion on scaling laws is multifaceted, encompassing empirical observations, theoretical underpinnings, and the intricate dance between model size, computational resources, and the volume of training data. Model quality often improves monotonically with these factors but eventually reaches a point of diminishing returns, so understanding these dynamics is crucial for efficient model design. Interestingly, the strategic selection of data emerges as a critical factor in surpassing the limitations imposed by power-law scaling, though this approach also presents challenges, including the risk of introducing biases and the need for domain-specific strategies.
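A power-law scaling relationship can be sketched as loss = a * N^(-b), where N stands for model size, data volume, or compute. The example below fits a and b with a straight-line fit in log-log space. The numbers are synthetic and generated from a known power law purely for illustration; they are not results from the seminar, and the function name is hypothetical.

```python
import math

def fit_power_law(sizes, losses):
    # Fit loss = a * size ** (-b) via least squares on
    # log(loss) = log(a) - b * log(size).
    xs = [math.log(n) for n in sizes]
    ys = [math.log(value) for value in losses]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope

# Synthetic example data generated from loss = 5 * N ** -0.1 (an assumption).
sizes = [1e6, 1e7, 1e8, 1e9]
losses = [5.0 * n ** -0.1 for n in sizes]
a, b = fit_power_law(sizes, losses)
print(round(a, 3), round(b, 3))
```

With an exponent of 0.1, every tenfold increase in N multiplies the loss by 10^(-0.1), roughly 0.79. Each extra order of magnitude buys the same relative improvement at ten times the cost, which is the diminishing-returns pattern described above.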

As the field of deep learning continues to evolve, Dr. Bahri’s work serves as a beacon, illuminating the path forward. The imperative for interdisciplinary collaboration, combining the rigor of physics with the adaptability of machine learning, cannot be overstated. Moreover, the pursuit of personalized scaling laws, tailored to the unique characteristics of each problem domain, promises to revolutionize model efficiency. As researchers and practitioners navigate this complex landscape, they are left to ponder: What unforeseen synergies await discovery at the intersection of physics and deep learning, and how might these transform the future of artificial intelligence?

Yasaman Bahri: A First-Principle Approach to Understanding Deep Learning (DDPS Webinar, Lawrence Livermore National Laboratory, November 2024)


Sunday, November 24, 2024

#deep learning#physics informed ai#machine learning research#interdisciplinary approaches#scaling laws#gaussian processes#neural networks#artificial intelligence#ai theory#computational science#data science#technology convergence#innovation in ai#webinar#ai assisted writing#machine art#Youtube


Transform Your Career with NUCOT!

youtube

🚀 Transform Your Career with NUCOT! NUCOT is a Bangalore-based IT solutions company committed to empowering individuals through cutting-edge IT training and real-time industry exposure. Whether you're starting fresh or upgrading your skills, we’re here to guide your journey into Data Science, Software Testing, AI/ML, and more! 🌐💻

🎓 Learn. 💼 Get Placed. 🚀 Grow. 💼 100% Placement Assistance 📍 Join the IT revolution with NUCOT – Where talent meets opportunity! 🌐 Enrol now: https://nucot.co.in 📞 Call: +91 80954 30684 / +91 93412 19924 📍4,5, Crimson Court, Jeevan Bima Nagar Main Rd, HAL 3rd Stage, DOS Colony, Bengaluru, Karnataka 560075

#NUCOT #ITTraining #CareerTransformation #BangaloreIT #DataScience #SoftwareTesting #JobReady #LearnWithNUCOT #TechCareers #ArtificialIntelligence #ML #FutureSkills #BangaloreJobs

#Best Data Scince In Bangalore#artificial inteligence#Nucot#machine learning#deep learning#software testing in bangalore#Data science 100% job#Youtube


Master Machine Learning in Chandigarh with Real-Time Projects at CNT Technologies

Looking to master Machine Learning in Chandigarh? CNT Technologies offers a comprehensive and hands-on learning experience tailored for students, professionals, and tech enthusiasts who are eager to explore the world of artificial intelligence. Our training programs are designed to help learners gain a deep understanding of algorithms, model building, and real-time problem-solving using the latest tools and frameworks.

What sets CNT Technologies apart is our commitment to personalized mentoring, industry-oriented curriculum, and job assistance. By also offering specialized modules in Deep Learning training in Chandigarh and a full-fledged Data Science course in Chandigarh, we provide a holistic path to mastering AI technologies.

CNT Technologies is more than a training institute — it’s a launchpad for your future in tech. Enroll today and become part of a dynamic learning environment that empowers you to lead in the AI revolution. For more information, go to https://cnttech.org/machine-learning-training-in-chandigarh/

#Data Science course in Chandigarh#Machine Learning in Chandigarh#Deep Learning training in Chandigarh#CNT Technologies#data science training online#artists on tumblr#digital marketing course training chandigarh#seo training chandigarh


Day 3/100 days of productivity | Wed 21 Feb, 2024

Long day at work, chatted with colleagues about career paths in data science, met with my boss for a performance evaluation (it went well!). Worked on writing some Quarto documentation for how to build one of my Tableau dashboards, since it keeps breaking and I keep referring to my notes 😳

In non-work productivity, I completed the ‘Logistic Regression as a Neural Network’ module of Andrew Ng’s Deep Learning course on Coursera and refreshed my understanding of backpropagation using the calculus chain rule. Fun!

#100 days of productivity#data science#deep learning#neural network#quarto#tableau#note taking#chain rule
