#future of software testing

Text

What is Software Testing and How Does it Work? 2023

Software testing is the process of evaluating a software application or system to detect potential bugs, defects, or errors.

0 notes

Text

Future Trends In Software Testing - Edu-Art

Future Trends in Software Testing: Automation is King! As software development continues to evolve, the future of testing lies in automation. AI-driven testing, continuous integration, and DevOps are becoming standard practices. Testers will increasingly focus on security and performance testing, as cyber threats and user experience take center stage. Agile methodologies and shift-left testing will further streamline development processes. In this rapidly changing landscape, adaptability, upskilling, and embracing cutting-edge tools and technologies will be essential for testers to stay ahead of the curve.

#software testing#trends in software testing#future of software testing#software#course#institute#success#studying#marketing#teaching

0 notes

Text

been accidentally falling asleep while watching wayne's elden ring streams as of late

#the elden ring battle sounds are like asmr to me#wayneradiotv#video#this was just a test of how procreate dreams works. its ok tbh but i think ill stick to using my pc's video editing software in the future

149 notes

Text

i was actually trying to do a completely different thing but ended up here because i got curious in testing shit out

i present to you the definitive neru lmao - a morph of high-pitched miku & low-pitched rin

(excerpt is from a shitty vpr i made myself from a midi [which i can no longer find] of "the bluefin tuna comes flying", thumbnail is from this post)

#my audio lol#vocaloid#fanloid#akita neru#did i really learn a wholeass new software for future shitpost purposes? YES I DID nothing can stop me#ok to be fair vocalmorph wasn't that hard to learn it was pretty basic... no disrespect to the people who couldnt figure it out#like yeah its in japanese but i got. deepl to translate and i referenced creator's og demonstration?#anyway this sounds kinda janky but im noticing most things that warp audio do that... vocalshifter does this too#so idk if this method is suitable for making a whole cover but this was just for funsies anyways lol#this vpr (which i converted to other formats too) is like my main 'test purposes' file lol? interesting considering the lyrics...#idk if i can 100% say its an og vpr actually since i just isolated the main melody from the midi and input the lyrics#its not properly tuned or anything#but i cant find said midi anymore my dumbass forgot to save it... i think it was on niconicodouga but it's been down for months now so...

7 notes

Text

bit stoned applying to jobs n answering their dumbass questions like man I don't even remember what this job was but I play ARGs and have 14 years experience in python

#axel grinds on#@ my future employer pls no drugs tests ty#every time i do a job application and they ask about soft skills i panic and bring up args its so fucking funny#just fuck sake#the sooner i get a new job the sooner i 1) get out of fucking software dev it is killing me#2) get to move in with the love of my life

6 notes

Text

Oh shit I was supposed to have my Twitch set up more by tonight because I wanted to stream the new Cult of the Lamb update tomorrow,,, I'll probably spend tonight figuring a bit more of that out so I have the basic stuff all ready to go! Won't be anything fancy for now (though I do have my concept sketches for a more professional setup ready and just need to digitize them), but if you're interested in watching a green cartoon dog wizard play a silly cult sim I'll post the link to my Twitch and update my stream schedule as soon as I finish up the basics!!

#the 'green cartoon dog wizard' is my pngtuber avatar btw!!#it's a very temporary pngtuber- i only made it to test my software and run some tests, so my avatar will change fairly quickly#my pngtuber software allows for a ton of cool features like full animation and multiple emotions to flip between#so eventually I'm gonna make a fully decked out pngtuber with all the fun stuff! but for now I'm a silly green wizard dog :]#i also have more games i want to play in the future and i also want to do art streams but Cult of the Lamb is my first priority lol

2 notes

Text

Catching up

My health issue appears to be resolved, though I am avoiding strenuous exercise for the time being.

Today I pushed all my MonkeyWrench changes to the public repo at GitHub, including the test app.

Next up: assess how well MonkeyWrench loads various test models compared to the standard jme3-plugins loaders in the JMonkeyEngine game engine.

#wip#open source#github#software development#accomplishments#3d model#3d graphics#game engine#software testing#future plans#coding

4 notes

Text

How to Use Gen AI in Test Automation for Real-Time Quality

Understanding how to use Gen AI in test automation is now vital for QA professionals, test architects, and DevOps engineers. Generative AI is pushing the boundaries of what’s possible in software testing. It’s not about replacing human testers; it’s about enabling them to achieve far more in less time and with greater accuracy.

Generative AI brings intelligence into automation pipelines by learning from historical test data, application behavior, and change logs. Unlike traditional tools, it doesn’t rely solely on hard-coded instructions. Instead, it predicts and generates the best test flows dynamically, improving adaptability to changes in user interfaces or APIs.

Applied to QA automation, Generative AI offers a scalable solution. It allows continuous testing across platforms, devices, and environments, whether mobile, web, or cloud. The automation becomes adaptive, capable of identifying anomalies and executing relevant test cases in response to application changes.

Additionally, the future of AI in test automation points to predictive testing: using AI to identify risky code areas even before testing begins. Such foresight can dramatically reduce post-release defects and improve customer satisfaction.

Another strength lies in how Generative AI handles edge cases. Instead of relying on human input to define them, it explores user behavior models to find untested paths and auto-generates cases to cover them. This increases coverage, helps catch more bugs, and makes testing more thorough.

In fast-paced agile environments, integrating Gen AI into test automation helps teams move from reactive bug fixing to proactive quality assurance. Time-consuming tasks like regression test creation, script maintenance, and result analysis become faster and more accurate, giving QA professionals time to focus on exploratory and strategic testing.

In summary, adopting Gen AI in test automation unlocks scalable, intelligent, and adaptive quality assurance practices. Organizations willing to innovate and invest now will lead the future of software delivery with confidence.

#Future of AI in Test automation#Generative AI in Software Testing#Test automation using Generative AI#Generative AI in qa automation#How to use Gen AI in Test automation

0 notes

Text

Future Of AI In Software Development

The use of AI in software development has boomed in recent years, and it will continue to redefine the IT industry. In this blog post, we’ll share the current state of AI, its impacts and benefits for software engineers, future trends, and challenge areas, to give you a bigger picture of what artificial intelligence (AI) can do. The trend has grown to the point that AI is now an important part of the software development process. With the rapid changes happening in the software industry, AI is surely going to dominate.

#Accountability#Accuracy#Advanced Data Analysis#artificial intelligence#automated testing#Automation#bug detection#code generation#code reviews#continuous integration#continuous deployment#cost savings#debugging#efficiency#Enhanced personalization#Ethical considerations#future trends#gartner report#image generation#improved productivity#job displacement#machine learning#natural language processing#privacy#safety#security concerns#software development#software engineers#time savings#transparency

0 notes

Text

The Generative AI Revolution: Transforming Industries with Brillio

The realm of artificial intelligence is experiencing a paradigm shift with the emergence of generative AI. Unlike traditional AI models focused on analyzing existing data, generative AI takes a leap forward by creating entirely new content. Generative AI technology unlocks a future brimming with possibilities across diverse industries. Let's look at the transformative power of generative AI in various sectors:

1. Healthcare Industry:

AI for Network Optimization: Generative AI can optimize healthcare networks by predicting patient flow, resource allocation, etc. This translates to streamlined operations, improved efficiency, and potentially reduced wait times.

Generative AI for Life Sciences & Pharma: Imagine accelerating drug discovery by generating new molecule structures with desired properties. Generative AI can analyze vast datasets to identify potential drug candidates, saving valuable time and resources in the pharmaceutical research and development process.

Patient Experience Redefined: Generative AI can personalize patient communication and education. Imagine chatbots that provide tailored guidance based on a patient's medical history or generate realistic simulations for medical training.

Future of AI in Healthcare: Generative AI has the potential to revolutionize disease diagnosis and treatment plans by creating synthetic patient data for anonymized medical research and personalized drug development based on individual genetic profiles.

2. Retail Industry:

Advanced Analytics with Generative AI: Retailers can leverage generative AI to analyze customer behavior and predict future trends. This allows for targeted marketing campaigns, optimized product placement based on customer preferences, and even the generation of personalized product recommendations.

AI Retail Merchandising: Imagine creating a virtual storefront that dynamically adjusts based on customer demographics and real-time buying patterns. Generative AI can optimize product assortments, recommend complementary items, and predict optimal pricing strategies.

Demystifying Customer Experience: Generative AI can analyze customer feedback and social media data to identify emerging trends and potential areas of improvement in the customer journey. This empowers retailers to take proactive steps to enhance customer satisfaction and loyalty.

3. Finance Industry:

Generative AI in Banking: Generative AI can streamline loan application processes by automatically generating personalized loan offers and risk assessments. This reduces processing time and improves customer service efficiency.

4. Technology Industry:

Generative AI for Software Testing: Imagine automating the creation of large-scale test datasets for various software functionalities. Generative AI can expedite the testing process, identify potential vulnerabilities more effectively, and contribute to faster software releases.

Generative AI for Hi-Tech: This technology can accelerate innovation in various high-tech fields by creating novel designs for microchips, materials, or even generating code snippets to enhance existing software functionalities.

Generative AI for Telecom: Generative AI can optimize network performance by predicting potential bottlenecks and generating data patterns to simulate network traffic scenarios. This allows telecom companies to proactively maintain and improve network efficiency.

5. Generative AI Beyond Industries:

GenAI Powered Search Engine: Imagine a search engine that understands context and intent, generating relevant and personalized results tailored to your specific needs. This eliminates the need to sift through mountains of irrelevant information, enhancing the overall search experience.

Product Engineering with Generative AI: Design teams can leverage generative AI to create new product prototypes, explore innovative design possibilities, and accelerate the product development cycle.

Machine Learning with Generative AI: Generative AI can be used to create synthetic training data for machine learning models, leading to improved accuracy and enhanced efficiency.

Global Data Studio with Generative AI: Imagine generating realistic and anonymized datasets for data analysis purposes. This empowers researchers, businesses, and organizations to unlock insights from data while preserving privacy.

6. Learning & Development with Generative AI:

L&D Shares with Generative AI: This technology can create realistic simulations and personalized training modules tailored to individual learning styles and skill gaps. Generative AI can personalize the learning experience, fostering deeper engagement and knowledge retention.

HFS Generative AI: Generative AI can be used to personalize learning experiences for employees in the human resources and financial services sectors. This technology can create tailored training programs for onboarding, compliance training, and skill development.

7. Generative AI for AIOps:

AIOps (Artificial Intelligence for IT Operations) utilizes AI to automate and optimize IT infrastructure management. Generative AI can further enhance this process by predicting potential IT issues before they occur, generating synthetic data for simulating scenarios, and optimizing remediation strategies.

Conclusion:

The potential of generative AI is vast, with its applications continuously expanding across industries. As research and development progress, we can expect even more groundbreaking advancements that will reshape the way we live, work, and interact with technology.

Reference- https://articlescad.com/the-generative-ai-revolution-transforming-industries-with-brillio-231268.html

#google generative ai services#ai for network optimization#generative ai for life sciences#generative ai in pharma#generative ai in banking#generative ai in software testing#ai technology in healthcare#future of ai in healthcare#advanced analytics in retail#ai retail merchandising#generative ai for telecom#generative ai for hi-tech#generative ai for retail#learn demystifying customer experience#generative ai for healthcare#product engineering services with Genai#accelerate application modernization#patient experience with generative ai#genai powered search engine#machine learning solution with ai#global data studio with gen ai#l&d shares with gen ai technology#hfs generative ai#generative ai for aiops

0 notes

Text

What are the types of XPath?

XPath is a query language for navigating XML documents and selecting nodes within them, such as elements, attributes, and text.

There are two main types of XPath expressions: absolute and relative.

Absolute XPath:

Absolute XPath starts from the root of the document and includes the complete path to the element.

It begins with a single forward slash ("/") representing the root node and includes all the elements along the path to the target element.

For example: /html/body/div[1]/p[2]/a

Relative XPath:

Relative XPath is more flexible because it doesn't spell out the full path from the root. Instead, it matches nodes wherever they occur in the document.

It uses a double forward slash ("//") to select nodes at any level in the document.

For example: //div[@class='example']//a[contains(@href, 'example')]
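To compare the two styles side by side, here is a minimal sketch using Python's standard-library xml.etree.ElementTree, which implements only a limited subset of XPath. The sample page is hypothetical and written as well-formed XML. Because ElementTree has no contains() function, that part of the relative example is done in plain Python; a full XPath 1.0 engine (such as lxml, or a browser driven by Selenium) would accept the original expressions as written.

```python
import xml.etree.ElementTree as ET

# Hypothetical sample page, written as well-formed XML for this sketch.
DOC = """<html>
  <body>
    <div>
      <p>first paragraph</p>
      <p><a href="/home">Home</a></p>
    </div>
    <div class="example">
      <span><a href="https://example.com/docs">Docs</a></span>
      <a href="https://other.org/">Other</a>
    </div>
  </body>
</html>"""

root = ET.fromstring(DOC)  # root is the <html> element itself

# Absolute path /html/body/div[1]/p[2]/a: ElementTree evaluates paths from an
# element, so the leading /html step becomes "." (the <html> root itself).
absolute_hits = root.findall("./body/div[1]/p[2]/a")

# Relative path //div[@class='example']//a[contains(@href, 'example')]:
# ".//" searches at any depth; the contains() test is applied in Python.
relative_hits = [
    a for a in root.findall(".//div[@class='example']//a")
    if "example" in a.get("href", "")
]

print([a.text for a in absolute_hits])  # ['Home']
print([a.text for a in relative_hits])  # ['Docs']
```

The absolute path breaks as soon as an extra <div> is inserted before the first one, while the relative query still finds the link, which is why relative expressions are usually preferred for automation.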

XPath can also be categorized based on the types of expressions used:

Node Selection:

XPath can be used to select nodes based on their type, such as element nodes, attribute nodes, text nodes, etc.

Example: /bookstore/book selects all <book> elements that are children of the <bookstore> element.

Path Expression:

Path expressions in XPath describe a path to navigate through elements and attributes in an XML document.

Example: /bookstore/book/title selects all <title> elements that are children of the <book> elements that are children of the <bookstore> element.
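The node-selection and path examples can be tried with a small sketch, again using Python's xml.etree.ElementTree as a stand-in for a full XPath engine. The bookstore document is a made-up sample. ElementTree evaluates paths from the root element, so /bookstore/book is written ./book, and it returns elements rather than attribute or text nodes.

```python
import xml.etree.ElementTree as ET

# Hypothetical bookstore document made up for this sketch.
BOOKSTORE = """<bookstore>
  <book category="cooking">
    <title lang="en">Cooking Basics</title>
    <price>30.00</price>
  </book>
  <book category="web">
    <title lang="en">Learning XML</title>
    <price>39.95</price>
  </book>
</bookstore>"""

root = ET.fromstring(BOOKSTORE)  # root is the <bookstore> element

# /bookstore/book -> every <book> child of <bookstore>
books = root.findall("./book")

# /bookstore/book/title -> every <title> child of those <book> elements
titles = root.findall("./book/title")

# Attribute and text nodes (.../title/@lang, .../title/text()) are not returned
# by ElementTree paths, so they are read off each element instead.
langs = [t.get("lang") for t in titles]
texts = [t.text for t in titles]

print(len(books))  # 2
print(texts)       # ['Cooking Basics', 'Learning XML']
print(langs)       # ['en', 'en']
```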

Predicate:

Predicates are used to filter nodes based on certain conditions.

Example: /bookstore/book[price>35] selects all <book> elements that have a <price> child element with a value greater than 35.
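A sketch of the predicate example under the same assumptions (Python's xml.etree.ElementTree, hypothetical data). ElementTree can evaluate an equality predicate such as book[price='39.95'] on its own, but it has no numeric comparisons, so the price>35 test is written in Python.

```python
import xml.etree.ElementTree as ET

# Hypothetical bookstore document made up for this sketch.
BOOKSTORE = """<bookstore>
  <book><title>Cooking Basics</title><price>30.00</price></book>
  <book><title>Learning XML</title><price>39.95</price></book>
  <book><title>Query Patterns</title><price>49.99</price></book>
</bookstore>"""

root = ET.fromstring(BOOKSTORE)

# Equality predicates on a child's text are supported natively by ElementTree.
exact = [b.findtext("title") for b in root.findall("./book[price='39.95']")]

# Numeric comparisons such as [price>35] are not, so the test runs in Python.
# As in XPath 1.0, a book qualifies if any of its <price> children exceeds 35.
expensive = [
    b.findtext("title")
    for b in root.findall("./book")
    if any(float(p.text) > 35 for p in b.findall("price"))
]

print(exact)      # ['Learning XML']
print(expensive)  # ['Learning XML', 'Query Patterns']
```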

Function:

XPath provides a variety of functions for string manipulation, mathematical operations, and more.

Example: //div[contains(@class, 'example')] selects all <div> elements whose class attribute contains the substring 'example'.
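Because contains() is a plain substring test, it can match more than intended: an element with class "card example-large" matches too. The sketch below, again using ElementTree with made-up markup and doing the string tests in Python, contrasts substring matching with matching the exact class token, which is what the common XPath idiom contains(concat(' ', normalize-space(@class), ' '), ' example ') achieves.

```python
import xml.etree.ElementTree as ET

# Hypothetical markup made up for this sketch.
DOC = """<body>
  <div class="example">A</div>
  <div class="card example-large">B</div>
  <div class="note">C</div>
</body>"""

root = ET.fromstring(DOC)
divs = root.findall(".//div")

# Like XPath 1.0 contains(@class, 'example'): a plain substring test.
substring_hits = [d.text for d in divs if "example" in d.get("class", "")]

# Whole-token match: split the class attribute on whitespace first.
token_hits = [d.text for d in divs if "example" in d.get("class", "").split()]

print(substring_hits)  # ['A', 'B']
print(token_hits)      # ['A']
```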

These types and expressions can be combined and customized to create powerful XPath queries for different XML structures. XPath is commonly used in web scraping, XML document processing, and web automation with tools like Selenium.

#xpath#selenium#automation testing#xpath types#software testing#future trends#software development#software engineer

0 notes

Text

AI-driven Productivity in Software Development

In recent years, artificial intelligence (AI) has emerged as a powerful tool, revolutionizing various industries. One area where AI is making significant strides is software development. Traditionally, software development has relied heavily on human expertise and labor-intensive processes. However, with the integration of AI technologies, teams are now able to leverage intelligent systems to…

#AI#automation#code generation#code reviews#Collaboration#debugging#documentation#future technologies#innovation#productivity#Project management#software development#testing

0 notes

Text

Generative AI Policy (February 9, 2024)

As of February 9, 2024, we are updating our Terms of Service to prohibit the following content:

Images created through the use of generative AI programs such as Stable Diffusion, Midjourney, and Dall-E.

This post explains what that means for you. We know it’s impossible to remove all images created by Generative AI on Pillowfort. The goal of this new policy, however, is to send a clear message that we are against the normalization of commercializing and distributing images created by Generative AI. Pillowfort stands in full support of all creatives who make Pillowfort their home. Disclaimer: The following policy was shaped in collaboration with Pillowfort Staff and international university researchers. We are aware that Artificial Intelligence is a rapidly evolving environment. This policy may require revisions in the future to adapt to the changing landscape of Generative AI.

-

Why is Generative AI Banned on Pillowfort?

Our Terms of Service already prohibit copyright violations, which include reposting other people’s artwork to Pillowfort without the artist’s permission. Because Generative AI draws on a database of images and text that were taken without consent from artists or writers, all Generative AI content can be considered in violation of this rule. We also had an overwhelming response from our user base urging us to take action on prohibiting Generative AI on our platform.

-

How does Pillowfort define Generative AI?

As of February 9, 2024, we define Generative AI as online tools for producing material based on large data collections that are often gathered without consent or notification from the original creators.

Generative AI tools do not require skill on behalf of the user and effectively replace them in the creative process (i.e., little direction or decision-making comes directly from the user). Tools that assist creativity don't replace the user. This means the user can still keep improving and refining their skills over time.

For example, if you ask a Generative AI tool to add a lighthouse to an image, the lighthouse appears in a completed state. If you use an assistive drawing tool to add a lighthouse instead, you decide which tools contribute to the creation process and how to apply them.

Examples of Tools Not Allowed on Pillowfort: Adobe Firefly*, Dall-E, GPT-4, Jasper Chat, Lensa, Midjourney, Stable Diffusion, Synthesia

Examples of Tools Still Allowed on Pillowfort:

AI Assistant Tools (e.g., Google Translate, Grammarly)

VTuber Tools (e.g., Live3D, Restream, VRChat)

Digital Audio Editors (e.g., Audacity, GarageBand)

Poser & Reference Tools (e.g., Poser, Blender)

Graphic & Image Editors (e.g., Canva, Adobe Photoshop*, Procreate, Medibang, automatic filters from phone cameras)

*While Adobe software such as Adobe Photoshop is not considered Generative AI, Adobe Firefly is fully integrated in various Adobe software and falls under our definition of Generative AI. The use of Adobe Photoshop is allowed on Pillowfort. The creation of an image in Adobe Photoshop using Adobe Firefly would be prohibited on Pillowfort.

-

Can I use ethical generators?

No. Due to the evolving nature of Generative AI, ethical generators are not an exception to this policy.

-

Can I still talk about AI?

Yes! Posts, Comments, and User Communities discussing AI are still allowed on Pillowfort.

-

Can I link to or embed websites, articles, or social media posts containing Generative AI?

Yes. We do ask that you properly tag your post as “AI” and “Artificial Intelligence.”

-

Can I advertise the sale of digital or virtual goods containing Generative AI?

No. Posts on Pillowfort advertising the offsite sale of goods (digital or physical) containing Generative AI are prohibited.

-

How can I tell if software I use contains Generative AI?

As a first step, a general rule of thumb is to test the software by turning off internet access and seeing whether the tool still works. If the software says it needs to be online, there’s a chance it’s using Generative AI and it should be explored further.

You are also always welcome to contact us at [email protected] if you’re still unsure.

-

How will this policy be enforced/detected?

Our Team has decided we are NOT using AI-based automated detection tools due to how often they provide false positives and other issues. Instead, we are applying a suite of methods, sourced from international universities, for moderating material that may have been produced with Generative AI.

-

How do I report content containing Generative AI Material?

If you are concerned about post(s) featuring Generative AI material, please flag the post for our Site Moderation Team to conduct a thorough investigation. As a reminder, Pillowfort’s existing policy regarding callout posts applies here, and harassment, brigading, etc. will not be tolerated.

Any questions or clarifications regarding our Generative AI Policy can be sent to [email protected].

2K notes

Text

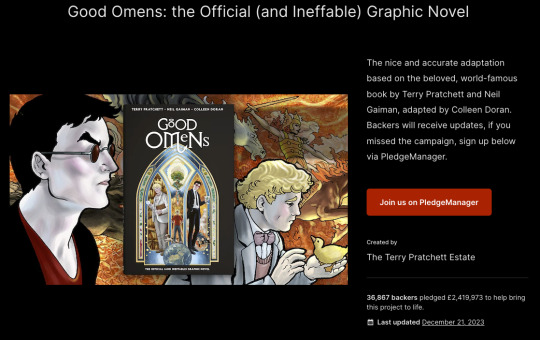

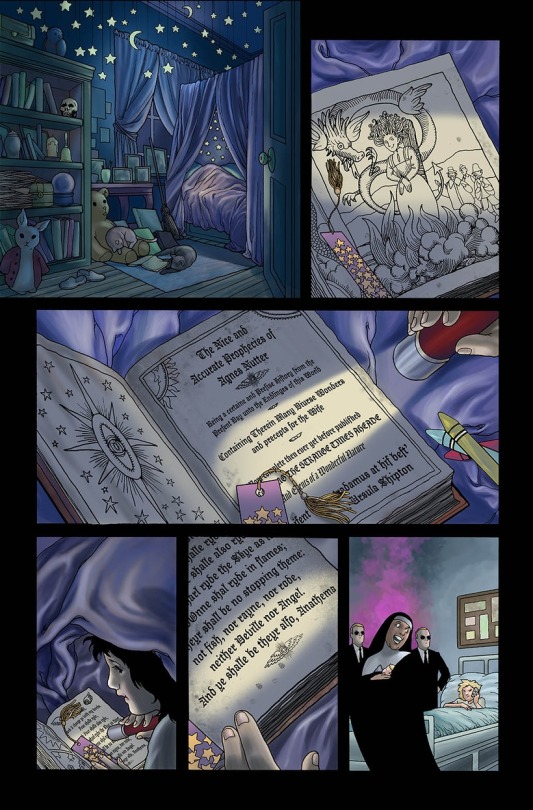

Great Big Good Omens Graphic Novel Update

AKA A Visit From Bildad the Shuhite.

The past year or so has been one long visit from this guy, whereupon he smiteth my goats and burneth my crops, woe unto the woeful cartoonist.

Gaze upon the horror of Bildad the Shuhite.

You kind of have to be a Good Omens fan to get this joke, but trust me, it's hilarious.

Anyway, I've been a Good Omens novel fan for a long time, so you may imagine how thrilled I was to get picked to adapt the graphic novel.

Go me!

This is quite a task, I have to say, especially since I was originally going to just draw (and color) it, but I ended up writing the adaptation as well. Tricky to fit a 400-page novel into a 160-ish-page graphic novel, especially when so much of the humor is dependent on the language, and not necessarily on the visuals.

Not complainin', just sayin'.

Anyway, I started out the gate like a herd of turtles, because right away I got COVID which knocked me on my butt.

And COVID brain fog? That's a thing. I already struggle with brain fog due to autoimmune disease, and COVID made it worse.

Not complainin', just sayin'.

This set back a few of the assignments on my plate, which pushed back the start of Good Omens.

But hey, big fat lead time! No worries!

Then my computer crawled toward the grave.

My trusty Mac Pro tower was nearly 15 years old when its sturdy heart ground to a near-halt with daily crashes. I finally got around to doing some diagnostics; some of its little brain actions were at 5% functionality. I had no reliable backups.

There are so many issues with getting a new computer when you haven't had a new computer or peripherals in nearly fifteen years and all of your software, including your Photoshop program, is fifteen years old.

At the time, I was still on rural internet...which means dial-up speed.

Whatever you have for internet in the city, roll that clock back to about 2001.

That's what I had. I not only had to replace almost all of my hardware but also had to load and update all programs at dial-up speed.

Welcome to my gigabyte hell.

The entire process of replacing the equipment and programs took weeks and then I had to relearn all the software.

All of this was super expensive in terms of money and time cost.

But I was not daunted! Nosirree!

I still had a huge lead time! I can do anything! I have an iron will!

And boy, howdy, I was going to need it.

At about the same time, a big fatcat quadrillionaire client, who had hired me years ago to develop a big, major transmedia project for which I was paid almost entirely in stock, went bankrupt, leaving everyone holding the bag and taking a huge chunk of my future retirement fund with it.

I wrote a very snarky, almost hilarious Patreon post about it, but am not entirely in a position to speak freely because I don't want to get sued. Even though I had to go to court over it (and I had to do that over Zoom at dial-up speed), I'm pretty sure I'll never get anything out of this drama, and neither will anyone else involved, except millionaire dude and his buddies, who all walked away with huge multi-million dollar bonuses weeks before they declared bankruptcy, all the while claiming they would not declare bankruptcy.

Even the accountant got $250,000 a month to shut down the business, while creators got nothing.

That in itself was enough drama for the year, but we were only at February by that point, and with all those months left, 2023 had a lot more to throw at me.

Fresh from my return from my Society of Illustrators show, and a lovely time at MOCCA, it was time to face practical medical issues, health updates, screening, and the like. I did my adult duty and then went back to work hoping for no news, but still had a weird feeling there would be news.

I know everyone says that, but I mean it. I had a bad feeling.

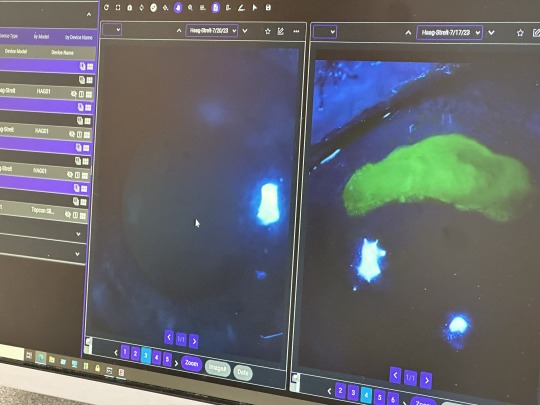

Then there was news.

I was called back for tests and more tests. This took weeks. The ubiquitous biopsy looked, even to me staring at the screen in real time, like bad news.

It also hurt like a mofo after the anesthesia wore off. I wasn't expecting that.

Then I got the official bad news.

Cancer, which runs in my family, finally got me. Frankly, I was surprised I didn't get it sooner.

Stage 0, and treatment would likely be fast and complication-free. Face the peril, get it over with, and get back to work.

I requested surgery months in the future so I could finish Good Omens first, but my doc convinced me the risk of waiting was too great. Get it done now.

"You're really healthy," my doc said. Despite an auto-immune issue which plagues me, I am way healthier than the average schmoe of late middle age. She informed me I would not even need any chemo or radiation if I took care of this now.

So I canceled my appearance at San Diego Comic Con. I did not inform the Good Omens team of my issues right away, thinking this would not interfere with my work schedule, but I did contact my agent to inform her of the issue. I also contacted a lawyer to rewrite my will and make sure the team had access to my digital files in case there were complications.

Then I got back to work, and hoped for the best.

Eff this guy.

Before I could even plant my carcass on the surgery table, I got a massive case of ocular shingles.

I didn't even know there was such a thing.

There I was, minding my own business. I go to bed one night with a scratchy eye, and by 4 PM the next day, I was in the emergency room being told if I didn't get immediate specialist treatment, I was in big trouble.

I got transferred to another hospital and got all the scary details, with the extra horrid news that I could not possibly have cancer surgery until I was free of shingles, and if I did not follow a rather brutal treatment procedure - which meant super-painful eye drops every half hour, twenty-four hours a day and daily hospital treatment - I could lose the eye entirely, or be blinded, or best case scenario, get permanent eye damage.

What was even funnier (yeah, hilarity) is the drops are so toxic if you don't use the medication just right, you can go blind anyway.

Hi Ho.

Ulcer is on the right. That big green blob.

I had just finished telling my cancer surgeon I did not even really care about getting cancer, was happy it was just stage zero, had no issues with scarring, wanted no reconstruction, all I cared about was my work.

Just cut it out and get me back to work.

And now I wondered if I was going to lose my ability to work anyway.

Shingles often accompanies cancer because of the stress on the immune system, and yeah, it's not pretty. This is me looking like all heck after I started to get better.

The first couple of weeks were pretty demoralizing as I expected a straight trajectory to wellness. But it was up and down all the way.

Some days I could not see out of either eye at all. The swelling was so bad that I had to reach around to my good eye to prop the lid open. Light sensitivity made seeing out of either eye almost impossible. Outdoors, even with sunglasses, I had to be led around by the hand.

I had an amazing doctor. I meticulously followed his instructions, and I think he was surprised I did. The treatment is really difficult, and if you don't do it just right no matter how painful it gets, you will be sorry.

To my amazement, after about a month, my doctor informed me I had no vision loss in the eye at all. "This never happens," he said.

I'd spent a couple of weeks there trying to learn to draw in the near-dark with one eye, and in the end, I got all my sight back.

I could no longer wear contact lenses (I don't really wear them anyway, unless I'm going to the movies), would need hardcore sun protection for a while, and the neuralgia and sun sensitivity were likely to linger. But I could get back to work.

I have never been more grateful in my life.

Neuralgia sucks, by the way, I'm still dealing with it months later.

Anyway, I decided to finally go ahead and tell the Good Omens team what was going on, especially since this was all happening around the time the Kickstarter was gearing up.

Now that I was sure I'd passed the eye peril, and my surgery for Stage 0 was going to be no big deal, I figured all was a go. I was still pretty uncomfortable and weak, and my ideal deadline was blown, but with the book not coming out for more than a year, all would be OK. I quit a bunch of jobs I had lined up to start after Good Omens, since the project was going to run far longer than I'd planned.

Everybody on the team was super-nice, and I was pretty optimistic at this time. But work was going pretty slowly during all this, as you may imagine.

But again...lots of lead time still left, go me.

Then I finally got my surgery.

Which was not as happy an experience as I had been hoping for.

My family said the doc came out of the operating room looking like she'd been pulled backwards through a pipe. She informed them the tumor, which looked tiny on the scan, was "...huge and her insides are a mess."

Which was super not fun news.

Eff this guy.

The tumor was hiding behind some dense tissue and cysts. After more tests, it was determined I'd need another surgery and was going to have to get further treatments after all.

The biopsy had been really painful, but the discomfort was gone after about a week, so no biggie. The second surgery was, weirdly, not as painful as the biopsy, but the fatigue was big time.

By then, the Good Omens Kickstarter had about run its course, and the record-breaker was both gratifying and a source of immense social pressure.

I'd already turned most of my social media over to an assistant, and I'm glad I did.

But the next surgery was what really kicked me on my keister.

All in all, they took out an area the size of a baseball. It was hard to move and wiped me out for weeks and weeks. I could not take care of myself. I'd begun losing hair by this time anyway, and finally just lopped it off since it was too heavy for me to manage on my own. The cut hides the bald spots pretty well.

After about a month, I got the go-ahead to travel to my show at the San Diego Comic Con Museum (which is running until the first week of April, BTW). I was very happy I had enough energy to do it. But as soon as I got back, I had to return to treatment.

Since I live way out in the country, going into the city to various hospitals and pharmacies was a real challenge. I made more than 100 trips last year, and the drive to the compounding pharmacy that produced the specialist eye medicine I could not get anywhere else took six hours by itself.

Naturally, I wasn't getting anything done during this time.

But at least my main hospital is super swank.

The oncology treatment went smoothly, until it didn't. The feels don't hit you until the end. By then I was flattened.

So flattened that I was too weak to control myself, fell over, and smashed my face into some equipment.

Nearly tore off my damn nostril.

Eff this guy.

Anyway, it was a bad year.

Here's what went right.

I have a good health insurance policy. The final tally on my health care costs ended up being about $150,000. I paid about 18% of that, including insurance costs. I had a high deductible, and there was some experimental medicine that insurance didn't cover. I had savings, enough to cover the months I wasn't working, and my Patreon is also very supportive. So you didn't see me running a GoFundMe or anything.

Thanks to everyone who ever bought one of my books.

No, none of that money was Good Omens Kickstarter money. I won't get most of my pay on that for months, which is just as well because it kept my taxes lower last year when I needed a break.

So, yay.

My nose is nearly healed. I opted out of plastic surgery, and it just sealed up by itself. I'll never be ready for my closeup, but who the hell cares.

I got to ring the bell.

I had a very, VERY hard time getting back to work, especially with regard to focus and concentration. My work hours dropped by over two-thirds. I was so fractured and weak, time kept slipping away while I sat in the studio like a zombie. Most of the last six months were a wash.

I assumed focus issues were due (in part) to stress, so I sought counseling. This seemed like a good idea at first, but when the counselor asked me to detail my issues with anxiety, I spent two weeks doing just that and getting way more anxious, which was not helpful.

After that I went EFF THIS NOISE, I want practical tools, not touchy-feelies (no judgment on people who need touchy-feelies; I needed a pragmatic solution and I needed it now), so I tried using the body doubling focus group technique for concentration and deep work.

Within two weeks, I returned to normal work hours.

I got rural broadband, jumping me from dial-up speed to 1 gigabit per second.

It's a miracle.

Massive doses of Vitamin D3 and K2. Yay.

The new computer works great.

The Kickstarter did so well, we got to expand the graphic novel to 200 pages. Double yay.

I'm running late, but everyone on the Good Omens team is super supportive. I don't know if I am going to make the book late or not, but if I do, well, it surely wasn't on purpose, and it won't be super late anyway. I still have months of lead time left.

I used to be something of a social media addict, but now I hardly ever even look at it, haven't been directly on some sites in over a year, and no longer miss it. It used to seem important and now doesn't.

More time for real life.

While I think the last year aged me about twenty years, I actually like me better with short hair. I'm keeping it.

OK. Rough year.

Not complainin', just sayin'.

Back to work on The Book.

And only a day left to vote for Good Omens, Neil Gaiman, and Sandman in the Comicscene Awards. Thanks.

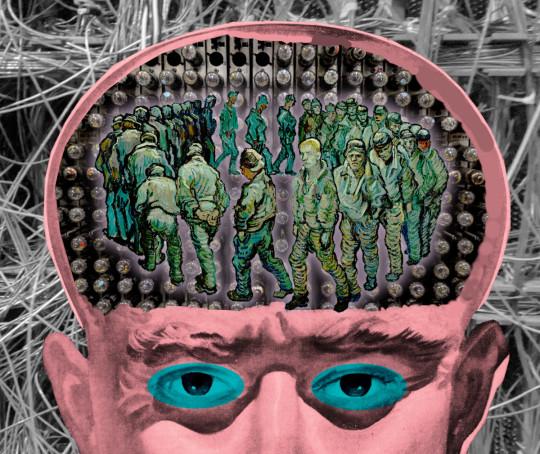

Three AI insights for hard-charging, future-oriented smartypantses

MERE HOURS REMAIN for the Kickstarter for the audiobook for The Bezzle, the sequel to Red Team Blues, narrated by @wilwheaton! You can pre-order the audiobook and ebook, DRM free, as well as the hardcover, signed or unsigned. There are also bundles with Red Team Blues in ebook, audio or paperback.

Living in the age of AI hype makes demands on all of us to come up with smartypants prognostications about how AI is about to change everything forever, and wow, it's pretty amazing, huh?

AI pitchmen don't make it easy. They like to pile on the cognitive dissonance and demand that we all somehow resolve it. This is a thing cult leaders do, too – tell blatant and obvious lies to their followers. When a cult follower repeats the lie to others, they are demonstrating their loyalty, both to the leader and to themselves.

Over and over, the claims of AI pitchmen turn out to be blatant lies. This has been the case since at least the age of the Mechanical Turk, the 18th-century chess-playing automaton that was actually just a chess player crammed into the base of an elaborate puppet that was exhibited as an autonomous, intelligent robot.

The most prominent Mechanical Turk huckster is Elon Musk, who habitually, blatantly and repeatedly lies about AI. He's been promising "full self-driving" Teslas in "one to two years" for more than a decade. Periodically, he'll "demonstrate" a car that's in full self-driving mode – which then turns out to be a canned, recorded demo:

https://www.reuters.com/technology/tesla-video-promoting-self-driving-was-staged-engineer-testifies-2023-01-17/

Musk even trotted out an autonomous, humanoid robot onstage at an investor presentation, failing to mention that this mechanical marvel was just a person in a robot suit:

https://www.siliconrepublic.com/machines/elon-musk-tesla-robot-optimus-ai

Now, Musk has announced that his junk-science neural interface company, Neuralink, has made the leap to implanting neural interface chips in a human brain. As Joan Westenberg writes, the press have repeated this claim as presumptively true, despite its wild implausibility:

https://joanwestenberg.com/blog/elon-musk-lies

Neuralink, after all, is a company notorious for mutilating primates in pursuit of showy, meaningless demos:

https://www.wired.com/story/elon-musk-pcrm-neuralink-monkey-deaths/

I'm perfectly willing to believe that Musk would risk someone else's life to help him with this nonsense, because he doesn't see other people as real and deserving of compassion or empathy. But he's also profoundly lazy and is accustomed to a world that unquestioningly swallows his most outlandish pronouncements, so Occam's Razor dictates that the most likely explanation here is that he just made it up.

The odds that there's a human being beta-testing Musk's neural interface with the only brain they will ever have aren't zero. But I give it the same odds as the Raelians' claim to have cloned a human being:

https://edition.cnn.com/2003/ALLPOLITICS/01/03/cf.opinion.rael/

The human-in-a-robot-suit gambit is everywhere in AI hype. Cruise, GM's disgraced "robot taxi" company, had 1.5 remote operators for every one of the cars on the road. They used AI to replace a single, low-waged driver with 1.5 high-waged, specialized technicians. Truly, it was a marvel.

Globalization is key to maintaining the guy-in-a-robot-suit phenomenon. Globalization gives AI pitchmen access to millions of low-waged workers who can pretend to be software programs, allowing us to pretend to have transcended capitalism's exploitation trap. This is also a very old pattern – just a couple decades after the Mechanical Turk toured Europe, Thomas Jefferson returned from the continent with the dumbwaiter. Jefferson refined and installed these marvels, announcing to his dinner guests that they allowed him to replace his "servants" (that is, his slaves). Dumbwaiters don't replace slaves, of course – they just keep them out of sight:

https://www.stuartmcmillen.com/blog/behind-the-dumbwaiter/

So much AI turns out to be low-waged people in a call center in the Global South pretending to be robots that Indian techies have a joke about it: "AI stands for 'absent Indian'":

https://pluralistic.net/2024/01/29/pay-no-attention/#to-the-little-man-behind-the-curtain

A reader wrote to me this week. They're a multi-decade veteran of Amazon who had a fascinating tale about the launch of Amazon Go, the "fully automated" Amazon retail outlets that let you wander around, pick up goods and walk out again, while AI-enabled cameras totted up the goods in your basket and charged your card for them.

According to this reader, the AI cameras didn't work any better than Tesla's full self-driving mode, and had to be backstopped by a minimum of three camera operators in an Indian call center, "so that there could be a quorum system for deciding on a customer's activity – three autopilots good, two autopilots bad."

Amazon got a ton of press from the launch of the Amazon Go stores. A lot of it was very favorable, of course: Mister Market is insatiably horny for firing human beings and replacing them with robots, so any announcement that you've got a human-replacing robot is a surefire way to make Line Go Up. But there was also plenty of critical press about this – pieces that took Amazon to task for replacing human beings with robots.

What was missing from the criticism? Articles that said that Amazon was probably lying about its robots, that it had replaced low-waged clerks in the USA with even-lower-waged camera-jockeys in India.

Which is a shame, because that criticism would have hit Amazon where it hurts, right there in the ole Line Go Up. Amazon's stock price boost off the back of the Amazon Go announcements represented the market's bet that Amazon would evert out of cyberspace and fill all of our physical retail corridors with monopolistic robot stores, moated with IP that prevented other retailers from similarly slashing their wage bills. That unbridgeable moat would guarantee Amazon generations of monopoly rents, which it would share with any shareholders who piled into the stock at that moment.

See the difference? Criticize Amazon for its devastatingly effective automation and you help Amazon sell stock to suckers, which makes Amazon executives richer. Criticize Amazon for lying about its automation, and you clobber the personal net worth of the executives who spun up this lie, because their portfolios are full of Amazon stock:

https://sts-news.medium.com/youre-doing-it-wrong-notes-on-criticism-and-technology-hype-18b08b4307e5

Amazon Go didn't go. The hundreds of Amazon Go stores we were promised never materialized. There's an embarrassing rump of 25 of these things still around, which will doubtless be quietly shuttered in the years to come. But Amazon Go wasn't a failure. It allowed its architects to pocket massive capital gains on the way to building generational wealth and establishing a new permanent aristocracy of habitual bullshitters dressed up as high-tech wizards.

"Wizard" is the right word for it. The high-tech sector pretends to be science fiction, but it's usually fantasy. For a generation, America's largest tech firms peddled the dream of imminently establishing colonies on distant worlds or even traveling to other solar systems, something that is still so far in our future that it might well never come to pass:

https://pluralistic.net/2024/01/09/astrobezzle/#send-robots-instead

During the Space Age, we got the same kind of performative bullshit. On The Well, David Gans mentioned hearing a promo on SiriusXM for a radio show with "the first AI co-host." To this, Craig L Maudlin replied, "Reminds me of fins on automobiles."

Yup, that's exactly it. An AI radio co-host is to artificial intelligence as a Cadillac Eldorado Biarritz tail-fin is to interstellar rocketry.

Back the Kickstarter for the audiobook of The Bezzle here!

If you’d like an essay-formatted version of this post to read or share, here’s a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/01/31/neural-interface-beta-tester/#tailfins

#pluralistic#elon musk#neuralink#potemkin ai#neural interface beta-tester#full self driving#mechanical turks#ai#amazon#amazon go#clm#joan westenberg

Here’s a short, unsuccessful test I did today: an attempt to create an animation in a program I quite literally have ZERO experience with using ahaha :’)

Storytime/long ramble (unimportant):

I wanted to be like the “cool kids” and shift out of my comfort zone—no more relying solely on FlipaClip! Gotta branch out to a more effective program (ideally one that has an interface resembling animation software used directly in industry work)! I’ve seen people make good use of AlightMotion and figured it’s worth a shot!….that goal quickly fell apart <<

There’s definitely a wide variety of tools and especially an emphasis on built-in editing features. I was intimidated by the sheer amount of mechanics going on at first, but gradually you get used to navigating stuff (even if there's some stuff I’m still trying to figure out the purpose of lmao). The only issue is that (to me at least) AlightMotion seems to handle tweening better than frame-by-frame animation. Which immediately lands me in a predicament of sorts since uh…can’t say I’m good at tweening. That would require me to actually finish coloring characters/have the ability to polish things using clean linework :P

Tweening (from my perspective) is about making something visually appealing by rigging separated assets of characters (like you would for puppets) rather than the whole. Or alternatively, some people tween by slightly moving the same drawn lines around on a singular drawing…so TLDR the exact opposite of frame-by-frame. I’m not an illustrator, I’m a storyteller. I can only manage rough line work with uncolored motions. Usually the smooth flow of frame-by-frame saves me, otherwise it would all look rather unfinished. I know where my strengths and my weaknesses lie; I’ve given AlightMotion a shot, but unfortunately it’s not gonna be solving the FlipaClip replacement problem 🥲

Was still beneficial challenging myself to play around with an unfamiliar environment! It’s a step in the right direction to encourage myself to try different things/figure out what works or doesn’t. If my patience didn’t wear thin, I’m sure I could’ve attempted authentic tweening instead of trying to push the program to work with frame-by-frame (it kept crashing, lagging, and pixelating while I tried to force it to comply with my methods. Think it’s safe to assume it doesn’t like me fighting against tweening lol). Who knows! Might return to AlightMotion in the future or might not. Depends on how adventurous or up for a challenge I’m feeling :3

Also goes without saying that online video tutorials would have probably helped. I’m just stubborn & prefer taking a hands-on approach sometimes. Learning any new program is gonna be overwhelming and scary at first, and that's normal! I don’t think anything bad about AlightMotion. Just not for me at the moment. Was fun to play with while it lasted

#hplonesome art#mr puzzles and leggy animation#mr. puzzles and leggy#leggy and mr. puzzles#smg4 leggy#smg4 mr. puzzles#mr puzzles smg4#smg4 mr puzzles#leggy smg4#update#(not feeling up for other tags since I kinda deviated too much in my rambling :P)
