#human machine relationship

Without machines, humans are no different from apes (not that I have anything against apes; being an ape looks pretty fun). A world without humans but with machines cannot exist, because humans and machines have a symbiotic relationship: each relies on the other for the means of its existence and the reason for it. So without machines there are no humans, and without humans there are no machines.

1 note

The Power of "Just": How Language Shapes Our Relationship with AI

I think of this post as a collection of things I notice, not an argument. Just a shift in how I’m seeing things lately.

There's a subtle but important difference between saying "It's a machine" and "It's just a machine." That little word - "just" - does a lot of heavy lifting. It doesn't simply describe; it prescribes. It creates a relationship, establishes a hierarchy, and reveals our anxieties.

I've been thinking about this distinction lately, especially in the context of large language models. These systems now mimic human communication with such convincing fluency that the line between observation and minimization becomes increasingly important.

The Convincing Mimicry of LLMs

LLMs are fascinating not just for what they say, but for how they say it. Their ability to mimic human conversation - tone, emotion, reasoning - can be incredibly convincing.

In fact, recent studies show that models like GPT-4 can be as persuasive as humans when delivering arguments,¹ and can even outperform them when the arguments are tailored to the individual.² In one randomized controlled trial, people who debated GPT-4 with access to their personal information had 81.7% higher odds of shifting toward their opponent's position than people who debated a human.²
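A note on that 81.7% figure: the trial reports it as higher odds of increased agreement, and an odds ratio is not the same thing as "81.7% more likely." The short Python sketch below converts between the two. The 30% baseline is a made-up number chosen only for illustration; it is not a figure from the study.

```python
def to_odds(p: float) -> float:
    """Convert a probability (0 < p < 1) into odds, p / (1 - p)."""
    return p / (1 - p)


def to_probability(odds: float) -> float:
    """Convert odds back into a probability, odds / (1 + odds)."""
    return odds / (1 + odds)


def shifted_probability(baseline_p: float, odds_ratio: float) -> float:
    """Apply an odds ratio to a baseline probability and return the new probability."""
    return to_probability(to_odds(baseline_p) * odds_ratio)


# Hypothetical baseline: assume 30% of people who debate a human shift their view.
baseline = 0.30
with_gpt4 = shifted_probability(baseline, 1.817)  # 81.7% higher odds

print(round(with_gpt4, 3))             # 0.438
print(round(with_gpt4 / baseline, 2))  # 1.46, i.e. about 46% more likely, not 81.7%
```

The exact relative increase depends on the baseline, which is why studies like this one report odds ratios instead.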

As a result, people don't just interact with LLMs - they often project personhood onto them. This includes:

Using gendered pronouns ("she said that…")

Naming the model as if it were a person ("I asked Amara…")

Attributing emotion ("it felt like it was sad")

Assuming intentionality ("it wanted to help me")

Trusting or empathizing with it ("I feel like it understands me")

These patterns mirror how we relate to humans - and that's what makes LLMs so powerful, and potentially misleading.

The Function of Minimization

When we add the word "just" to "it's a machine," we're engaging in what psychologists call minimization - a cognitive distortion that presents something as less significant than it actually is. According to the American Psychological Association, minimizing is "a cognitive distortion consisting of a tendency to present events to oneself or others as insignificant or unimportant."

This small word serves several powerful functions:

It reduces complexity - By saying something is "just" a machine, we simplify it, stripping away nuance and complexity

It creates distance - The word establishes separation between the speaker and what's being described

It disarms potential threats - Minimization often functions as a defense mechanism to reduce perceived danger

It establishes hierarchy - "Just" places something in a lower position relative to the speaker

The minimizing function of "just" appears in many contexts beyond AI discussions:

"They're just words" (dismissing the emotional impact of language)

"It's just a game" (downplaying competitive stakes or emotional investment)

"She's just upset" (reducing the legitimacy of someone's emotions)

"I was just joking" (deflecting responsibility for harmful comments)

"It's just a theory" (devaluing scientific explanations)

In each case, "just" serves to diminish importance, often in service of avoiding deeper engagement with uncomfortable realities.

Psychologically, minimization frequently indicates anxiety, uncertainty, or discomfort. When we encounter something that challenges our worldview or creates cognitive dissonance, minimizing becomes a convenient defense mechanism.

Anthropomorphizing as Human Nature

The truth is, humans have anthropomorphized all sorts of things throughout history. Our mythologies are riddled with examples - from ancient weapons with souls to animals with human-like intentions. Our cartoons portray this constantly. We might even argue that it's encoded in our psychology.

I wrote about this a while back in a piece on ancient cautionary tales and AI. Throughout human history, we've given our tools a kind of soul. We see this when a god's weapon whispers advice or a cursed sword demands blood. These myths have long warned us: powerful tools demand responsibility.

The Science of Anthropomorphism

Psychologically, anthropomorphism isn't just a quirk – it's a fundamental cognitive mechanism. Research in cognitive science offers several explanations for why we're so prone to seeing human-like qualities in non-human things:

The SEEK system - According to cognitive scientist Alexandra Horowitz, our brains are constantly looking for patterns and meaning, which can lead us to perceive intentionality and agency where none exists.

Cognitive efficiency - A 2021 study by anthropologist Benjamin Grant Purzycki suggests anthropomorphizing offers cognitive shortcuts that help us make rapid predictions about how entities might behave, conserving mental energy.

Social connection needs - Psychologist Nicholas Epley's work shows that we're more likely to anthropomorphize when we're feeling socially isolated, suggesting that anthropomorphism partially fulfills our need for social connection.

The Media Equation - Research by Byron Reeves and Clifford Nass demonstrated that people naturally extend social responses to technologies, treating computers as social actors worthy of politeness and consideration.

These cognitive tendencies aren't mistakes or weaknesses - they're deeply human ways of relating to our environment. We project agency, intention, and personality onto things to make them more comprehensible and to create meaningful relationships with our world.

The Special Case of Language Models

With LLMs, this tendency manifests in particularly strong ways because these systems specifically mimic human communication patterns. A 2023 study from the University of Washington found that 60% of participants formed emotional connections with AI chatbots even when explicitly told they were speaking to a computer program.

The linguistic medium itself encourages anthropomorphism. As AI researcher Melanie Mitchell notes: "The most human-like thing about us is our language." When a system communicates using natural language – the most distinctly human capability – it triggers powerful anthropomorphic reactions.

LLMs use language the way we do, respond in ways that feel human, and engage in dialogues that mirror human conversation. It's no wonder we relate to them as if they were, in some way, people. Recent research from MIT's Media Lab found that even AI experts who intellectually understand the mechanical nature of these systems still report feeling as if they're speaking with a conscious entity.

And there's another factor at work: these models are explicitly trained to mimic human communication patterns. Their training objective - to predict the next word a human would write - naturally produces human-like responses. This isn't accidental anthropomorphism; it's engineered similarity.
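"Predict the next word" can sound abstract, so here is a deliberately tiny illustration in Python: a count-based bigram model. This is a toy under loud assumptions, not how GPT-4 or Claude work internally (they are large neural networks trained on vast corpora), and the corpus and the names `train_bigram` and `predict_next` are hypothetical. It only shows the core idea: a system built to continue human-written text ends up echoing human phrasing.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def train_bigram(corpus: List[str]) -> Dict[str, Counter]:
    """Count, for each word, which words follow it in the (human-written) corpus."""
    follows: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def predict_next(follows: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent continuation seen in training, or None if the word is unseen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Hypothetical training text, echoing the anthropomorphic phrasings quoted earlier.
corpus = [
    "i feel like it understands me",
    "i feel like it wanted to help me",
    "i feel sad today",
]
model = train_bigram(corpus)
print(predict_next(model, "feel"))  # like
```

Scale that idea up by many orders of magnitude and replace the counting with a neural network, and the output starts to sound like a person, roughly because sounding like the training text is what it was optimized for.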

The Paradox of Power Dynamics

There's a strange contradiction at work when someone insists an LLM is "just a machine." If it's truly "just" a machine - simple, mechanical, predictable, understandable - then why the need to emphasize this? Why the urgent insistence on establishing dominance?

The very act of minimization suggests an underlying anxiety or uncertainty. It reminds me of someone insisting "I'm not scared" while their voice trembles. The minimization reveals the opposite of what it claims - it shows that we're not entirely comfortable with these systems and their capabilities.

Historical Echoes of Technology Anxiety

This pattern of minimizing new technologies when they challenge our understanding isn't unique to AI. Throughout history, we've seen similar responses to innovations that blur established boundaries.

When photography first emerged in the 19th century, many cultures expressed deep anxiety about the technology "stealing souls." This wasn't simply superstition - it reflected genuine unease about a technology that could capture and reproduce a person's likeness without their ongoing participation. The minimizing response? "It's just a picture." Yet photography went on to transform our relationship with memory, evidence, and personal identity in ways that early critics intuited but couldn't fully articulate.

When early computers began performing complex calculations faster than humans, the minimizing response was similar: "It's just a calculator." This framing helped manage anxiety about machines outperforming humans in a domain (mathematics) long considered uniquely human. But this minimization obscured the revolutionary potential that Ada Lovelace had already envisioned a century earlier, in her notes on Charles Babbage's Analytical Engine.

In each case, the minimizing language served as a psychological buffer against a deeper fear: that the technology might fundamentally change what it means to be human. The phrase "just a machine" applied to LLMs follows this pattern precisely - it's a verbal talisman against the discomfort of watching machines perform in domains we once thought required a human mind.

This creates an interesting paradox: if we call an LLM "just a machine" to establish a power dynamic, we're essentially admitting that we feel some need to assert that power. And if there is real uncertainty about whether humans hold that power, then saying "it's just a machine" is exactly what we should avoid, because it creates a false, and potentially dangerous, sense of safety.

We're better off recognizing these systems for what they objectively are and then leaning into their non-humanness. That leaves room for genuine curiosity, especially since there is so much we don't know.

The "Just Human" Mirror

If we say an LLM is "just a machine," what does it mean to say a human is "just human"?

Philosophers have wrestled with this question for centuries. As far back as 1747, Julien Offray de La Mettrie argued in Man a Machine that humans are complex automatons - our thoughts, emotions, and choices arising from mechanical interactions of matter. Centuries later, Daniel Dennett expanded on this, describing consciousness not as a mystical essence but as an emergent property of distributed processing - computation, not soul.

These ideas complicate the neat line we like to draw between "real" humans and "fake" machines. If we accept that humans are in many ways mechanistic - predictable, pattern-driven, computational - then our attempts to minimize AI with the word "just" might reflect something deeper: discomfort with our own mechanistic nature.

When we say an LLM is "just a machine," we usually mean it's something simple. Mechanical. Predictable. Understandable. But two recent studies from Anthropic challenge that assumption.

In "Tracing the Thoughts of a Large Language Model," researchers found that LLMs like Claude don't simply think one word at a time. They plan ahead - sometimes several words into the future - and operate within a kind of language-agnostic conceptual space. That means what looks like step-by-step generation is often goal-directed and forward-looking, not merely reactive. It's not just prediction - it's planning.

Meanwhile, in "Reasoning Models Don't Always Say What They Think," Anthropic shows that even when models explain themselves in humanlike chains of reasoning, those explanations might be plausible reconstructions, not faithful windows into their actual internal processes. The model may give an answer for one reason but explain it using another.

Together, these findings break the illusion that LLMs are cleanly interpretable systems. They behave less like transparent machines and more like agents with hidden layers - just like us.

So if we call LLMs "just machines," it raises a mirror: What does it mean that we're "just" human - when we also plan ahead, backfill our reasoning, and package it into stories we find persuasive?

Beyond Minimization: The Observational Perspective

What if instead of saying "it's just a machine," we adopted a more nuanced stance? The alternative I find more appropriate is what I call the observational perspective: stating "It's a machine" or "It's a large language model" without the minimizing "just."

This subtle shift does several important things:

It maintains factual accuracy - The system is indeed a machine, a fact that deserves acknowledgment

It preserves curiosity - Without minimization, we remain open to discovering what these systems can and cannot do

It respects complexity - Avoiding minimization acknowledges that these systems are complex and not fully understood

It sidesteps false hierarchy - It doesn't unnecessarily place the system in a position subordinate to humans

The observational stance allows us to navigate a middle path between minimization and anthropomorphism. It provides a foundation for more productive relationships with these systems.

The Light and Shadow Metaphor

Think about the difference between squinting at something in the dark versus turning on a light to observe it clearly. When we squint at a shape in the shadows, our imagination fills in what we can't see - often with our fears or assumptions. We might mistake a hanging coat for an intruder. But when we turn on the light, we see things as they are, without the distortions of our anxiety.

Minimization is like squinting at AI in the shadows. We say "it's just a machine" to make the shape in the dark less threatening, to convince ourselves we understand what we're seeing. The observational stance, by contrast, is about turning on the light - being willing to see the system for what it is, with all its complexity and unknowns.

This matters because when we minimize complexity, we miss important details. If I say the coat is "just a coat" without looking closely, I might miss that it's actually my partner's expensive jacket that I've been looking for. Similarly, when we say an AI system is "just a machine," we might miss crucial aspects of how it functions and impacts us.

Flexible Frameworks for Understanding

What's particularly valuable about the observational approach is that it allows for contextual flexibility. Sometimes anthropomorphic language genuinely helps us understand and communicate about these systems. For instance, when researchers at Google use terms like "model hallucination" or "model honesty," they're employing anthropomorphic language in service of clearer communication.

The key question becomes: Does this framing help us understand, or does it obscure?

Philosopher Thomas Nagel famously asked what it's like to be a bat, concluding that a bat's subjective experience is fundamentally inaccessible to humans. We might similarly ask: what is it like to be a large language model? The answer, like Nagel's bat, is likely beyond our full comprehension.

This fundamental unknowability calls for epistemic humility - an acknowledgment of the limits of our understanding. The observational stance embraces this humility by remaining open to evolving explanations rather than prematurely settling on simplistic ones.

After all, these systems might eventually evolve into something that doesn't quite fit our current definition of "machine." An observational stance keeps us mentally flexible enough to adapt as the technology and our understanding of it change.

Practical Applications of Observational Language

In practice, the observational stance looks like:

Saying "The model predicted X" rather than "The model wanted to say X"

Using "The system is designed to optimize for Y" instead of "The system is trying to achieve Y"

Stating "This is a pattern the model learned during training" rather than "The model believes this"

These formulations maintain descriptive accuracy while avoiding both minimization and inappropriate anthropomorphism. They create space for nuanced understanding without prematurely closing off possibilities.

Implications for AI Governance and Regulation

The language we use has critical implications for how we govern and regulate AI systems. When decision-makers employ minimizing language ("it's just an algorithm"), they risk underestimating the complexity and potential impacts of these systems. Conversely, when they over-anthropomorphize ("the AI decided to harm users"), they may misattribute agency and miss the human decisions that shaped the system's behavior.

Either extreme creates governance blind spots:

Minimization leads to under-regulation - If systems are "just algorithms," they don't require sophisticated oversight

Over-anthropomorphization leads to misplaced accountability - Blaming "the AI" can shield humans from responsibility for design decisions

A more balanced, observational approach allows for governance frameworks that:

Recognize appropriate complexity levels - Matching regulatory approaches to actual system capabilities

Maintain clear lines of human responsibility - Ensuring accountability stays with those making design decisions

Address genuine risks without hysteria - Neither dismissing nor catastrophizing potential harms

Adapt as capabilities evolve - Creating flexible frameworks that can adjust to technological advancements

Several governance bodies are already working toward this balanced approach. For example, the EU AI Act distinguishes between different risk categories rather than treating all AI systems as uniformly risky or uniformly benign. Similarly, the National Institute of Standards and Technology (NIST) AI Risk Management Framework encourages nuanced assessment of system capabilities and limitations.

Conclusion

The language we use to describe AI systems does more than simply describe - it shapes how we relate to them, how we understand them, and ultimately how we build and govern them.

The seemingly innocent addition of "just" to "it's a machine" reveals deeper anxieties about the blurring boundaries between human and machine cognition. It attempts to reestablish a clear hierarchy at precisely the moment when that hierarchy feels threatened.

By paying attention to these linguistic choices, we can become more aware of our own reactions to these systems. We can replace minimization with curiosity, defensiveness with observation, and hierarchy with understanding.

As these systems become increasingly integrated into our lives and institutions, the way we frame them matters deeply. Language that artificially minimizes complexity can lead to complacency; language that inappropriately anthropomorphizes can lead to misplaced fear or abdication of human responsibility.

The path forward requires thoughtful, nuanced language that neither underestimates nor over-attributes. It requires holding multiple frameworks simultaneously - sometimes using metaphorical language when it illuminates, other times being strictly observational when precision matters.

Because at the end of the day, language doesn't just describe our relationship with AI - it creates it. And the relationship we create will shape not just our individual interactions with these systems, but our collective governance of a technology that continues to blur the lines between the mechanical and the human - a technology that is already teaching us as much about ourselves as it is about the nature of intelligence itself.

Research Cited:

"Large Language Models are as persuasive as humans, but how?" arXiv:2404.09329 – Found that GPT-4 can be as persuasive as humans, using more morally engaged and emotionally complex arguments.

"On the Conversational Persuasiveness of Large Language Models: A Randomized Controlled Trial" arXiv:2403.14380 – GPT-4 was more likely than a human to change someone's mind, especially when it personalized its arguments.

"Minimizing: Definition in Psychology, Theory, & Examples" Eser Yilmaz, M.S., Ph.D., Reviewed by Tchiki Davis, M.A., Ph.D. https://www.berkeleywellbeing.com/minimizing.html

"Anthropomorphic Reasoning about Machines: A Cognitive Shortcut?" Purzycki, B.G. (2021) Journal of Cognitive Science – Documents how anthropomorphism serves as a cognitive efficiency mechanism.

"The Media Equation: How People Treat Computers, Television, and New Media Like Real People and Places" Reeves, B. & Nass, C. (1996) – Foundational work showing how people naturally extend social rules to technologies.

"Anthropomorphism and Its Mechanisms" Epley, N., et al. (2022) Current Directions in Psychological Science – Research on social connection needs influencing anthropomorphism.

"Understanding AI Anthropomorphism in Expert vs. Non-Expert LLM Users" MIT Media Lab (2024) – Study showing expert users experience anthropomorphic reactions despite intellectual understanding.

"AI Act: first regulation on artificial intelligence" European Parliament (2023) – Overview of the EU's risk-based approach to AI regulation.

"Artificial Intelligence Risk Management Framework" NIST (2023) – US framework for addressing AI complexity without minimization.

#tech ethics#ai language#language matters#ai anthropomorphism#cognitive science#ai governance#tech philosophy#ai alignment#digital humanism#ai relationship#ai anxiety#ai communication#human machine relationship#ai thought#ai literacy#ai ethics

1 note

Really badly need fic of ART in a human-form bot body (in some situation where there's reduced feed capacity so it can't just ride along with MB) and hanging off of MB physically the way it always does in the feed and neither of them really notice or think it's remarkable because like. Duh. They're always huddled up next to each other. But their humans are losing their minds because what do you MEAN ART-bot is just shoving into MB's space like that and it doesn't care at all. At some point Ratthi is like wow you two seem like youve gotten so much closer since that whole debacle with the target planet! And MB just stares at him blankly like what do you mean. ART ignoring my personal space so it can watch what I'm doing is normal. And so Ratthi just has to sit there vibrating and not saying anything while ART-bot physically drags MB around by the arm and MB inexplicably allows this to happen.

#really important that like. theyre NOT snuggling theyre NOT holding hands.#ART-bot is leaning over MB to look at its portable display surface obnoxiously and stealing shit out of its hands#MB is picking up ART-bot and tucking it under its arm and carting it away to safety while ART-bot yells for Seth to intervene#unfortunately tho i do think in this scenario the realistic outcome would more be along the lines of#MB having really aggressive Miki flashbacks and totally shutting down emotionally and refusing to look at or acknowledge ART-bot#in a way that ART would find extremely upsetting#so theyre actually sitting as far apart as possible on the shuttle while ratthi has 'anyone who doesnt believe machine intelligences have#emotions needs to be in this very uncomfortable room right now' flashbacks#murderhelion#tmbd#i just think that the humans are soooo invested in art and mbs relationship in ne#and they dont even KNOW how all up in each others business the two of them are#so it'd be fun for them to get to like. SEE what theyre up to all the time.

502 notes

It will never not amaze me how two characters we're not supposed to give a shit about are not only very popular (in the top 5) but also form the second most popular pairing in the fandom.

#detroit: become human#dbh#reed900#with gavin he's supposed to be a foil to connor#and with nines you only get him if you go the machine route#which a majority of players wouldn't have done on their first playthrough so he's very rare to see#and yet look at them now#not only are they loved on their own#but also are paired together and their popularity rivals the most popular relationship in the fandom and game#mine#mine: texts#mine: dbh

16 notes

I don’t know what I'm doing rn..

15 notes

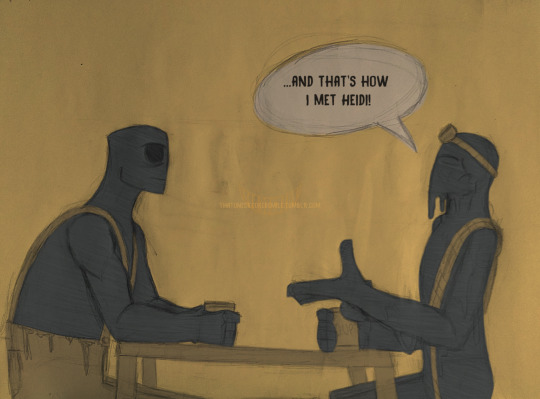

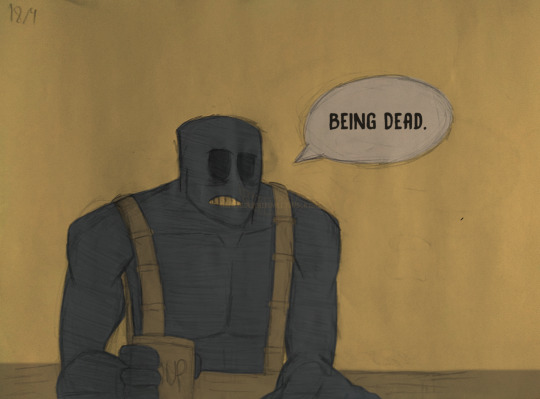

I would say that, considering his history, he's not that wrong, but even I have doubts as to whether that would be right. But at the end of the day, this is just a joke that's been in my head for a while, sooooo…eh.

Oh hey, an attempt at a comic? Made by me? That I didn't give up on during the process or lose all motivation for? What was my only attempt at this, 2019? Damn, it's been a long time.

I thought about leaving this here without editing or any colors, just the natural ones from the paper and pencil. But something in my head said "HAHAHA, no" so I went back to work. I had to put this idea down on paper this time (literally). If another year passes without me being able to execute this idea, I would lose my mind.

This scenario was inspired by this video by Jehtt, inspired by the original meme by Windii. Credits to both of them.

For a long time I wanted to joke - especially on the anniversary - that I wanted Sammy to have less than 5 seconds of screen time in the next game (or in other words, take his screen time in DR and shorten it even more). You know, just for the funnies (unless..?) But, thanks to the news released at the beginning of January this year about The Cage, I legally can't make this joke anymore…this year. Don't worry, after that comes out (and finally gives Sam the screen time he wants, hopefully) and we start to crawl into the Bendy 3 production era, I'll make this joke when I can.

Anyway, happy birthday Sammy Lawrence. You may not be my favorite character in this franchise, but there are some things I can actually appreciate about you. Plus, you made me laugh a few moments before (you know what I'm talking about) so there's that.

And happy 7 years to Chapter 2, and by extension, Susie, Norman, Alice, the Searchers, (Johnny????), and Beta Ink Bendy. (I would mention Jack too, but he was only introduced with the release of CH4, so technically it's not his birthday yet, but I'll consider him here).

And now? May I be able to do something for CH4's anniversary. Wish me luck, cus I'll need it.

(it might be really late now, but it's still the 18th where I live, so it's still his birthday, so I still won)

#bendy and the ink machine#batim#bendy and the dark revival#batdr#sammy lawrence#porter batdr#batdr porter#crookedsmileart#another fun fact: I thought of this comic with Wally in mind instead of Porter#Problem is I don't have any designs at the moment of Wally as his ink counterpart;and I didn't want to have to think of a design for him no#So I switched to Porter;I think it still fits#my relationship with Sammy is complicated#Sammy; as the human director of the music department? He is ok. He's not my favorite of the human cast; but I don't dislike him. He's fine#Sammy; the prophet? Eehhh. I prefer the human.#Like there are things I can actually appreciate about him.#Certain details that I find interesting. And his appearance in CH2; for what it is; it's not bad at all.#But in general? I'm not very interested in this guy (at least;this version of him) And his post-CH2 appearances don't really help his cause#I still believe they had no plan to bring Sammy back later in the story#but because of his popularity they decided “yep;let's bring him back”; problem is: I don't think they knew what to do with him after CH2#and one might argue that they still don't know#Hopefully;The Cage will finally give Sammy the screen time he so desperately needs.#and maybe; then; I can finally start to like him a little more (okay; let's not go that far now)#Maybe his deaths in the franchise aren't his happiest moments; but they were definitely mine#HAHAHAHAAHHA (/j.....unless)

78 notes

metal and sage have the building blocks for interesting conflicts and dynamics if the writers just. do something with those. sage taking on a human appearance that metal cannot achieve due to his inherent role as sonic's foil, who both is and is not him. the restrictions he has compared to sage's freedom. artificial intelligence with the blessing to expand itself with no limitations VS machine tied to the whims of its creator, reliant on external tampering to become more than what it already is. sage offering metal a hand because she is fascinated, captivated, with the concept of "family" - would that not come across as condescending, or would metal take what he could get, even if it meant staying in the shadow of someone more "real" than him?

there is tension and potential for something venomous, and the stage is set for exploring what makes someone "real" enough for a familial relationship. there is space to explore aspects of eggman's thoughts on his creations, why he would bestow the role of a child upon sage so easily, if it's just a different type of familial relationship to the one he has with metal, etc. but someone has to have the guts to go there and i wonder if anyone does

#soda offers you a can#lore drabbles#metal could be angry about it and it could hit particularly hard when he's also not allowed to have a voice#sage could be forced to experience more Human Emotions as a result that suck real bad#from where we can further explore if this is what she wants after all. bc it would be so much easier to just be a Machine#and how about this more “standardized” familial relationship that eggman is given with sage? does it actually work? does he really like it?#or would it more so make him realize how alienated he is from this kind of normalcy#the family he is capable of having must consist of his own creations which are made of ones and zeroes and metals#it's easier that way. more familiar. there's less free will and he can always reprogram if he needs to. a sense of control#but sage steps beyond that and breaks all that is familiar and you could make that really interesting#ok i need to go do school shit now fuck

13 notes

HELP???

#melonposting#this is all so incredibly interesting to me. by the way. madoka magica always delivers#we want to increase the entropy of the universe... we want to turn the universe into a perpetual motion machine...#we need a source of energy that increases from input to output... and human emotions serve that purpose#especially the emotions of girls undergoing puberty#<- it doesn't explicitly state why pubescent girls have the most emotional energy. however...#i'm sure it has something to do with the prevailing gender ideology and how that affects girls' perception of puberty#in that it's both liberating and terrifying and burdensome and so many other things given society's roles for women#as in... you are becoming a woman as evidenced by the development of your secondary sex characteristics#so now you have to deal with all of that#(and of course boys deal with some of that too but it's different. because. well. sexism)#and the roles of womanhood have been a theme thus far... what with madoka's mom and teacher#you start as a magical girl and turn into a witch. that process releases emotional energy#there's a certain cynicism about it all#where witches are exclusively arbiters of violence#and the supposedly heroic magical girls inevitably turn into them...#...by using up the magic needed to kill them#they sustain themselves as magical girls only by 'feeding' on the grief seeds left by the witches they've killed as 'reward'#and magical girls who only seek to kill witches for good end up being worn down by the system they're in. they turn into witches faster#i've yet to parse through all of the allegorical layers behind the relationship between magical girls and witches...#...but madoka magica is proving to be incredibly meaningful. and feminist

8 notes

Actually devastated by the amount of people I know that use ChatGPT as a therapist. Devastated. It's "fuck AI, AI is destroying the planet" until it's convenient for you huh

#maybe its bc im an artist and am very aware that that data doesnt just vanish into the AI ether as you send it but#why doesnt this concern you!!!! first of all now p much anyone w a crumb of computer skill can access ur trauma and personal info#second of all ITS KILLING THE PLANET ITS BAD FOR THE PLANET#i dont understand how you can rally against AI use and understand how AI negatively affects jobs livelihoods passions and the environment#then turn around and use ChatGPT to roleplay. stop being a coward and make a RP post like the gods intended#FROTHING. at the mouth. i hate ai. i hate chatgpt. i hate replacing human relationships and connections [even connections you pay to have-]#being replaced by a robot :(#anyway i know not everyone can afford therapy#but thats why insta/YouTube/tumblr/any social media EVER#is chock mf full of mental health advice and videos and rants and people who will LISTEN#but youd rather skip past that. you look at the human connection and decide chatgpt is better.#gtfo with that shit#you are rallying against the machine killing art while feeding the machine#“i cant afford therapy and i cant talk about this with anybody” liar. you can. you just wont

8 notes

Text

not everything from arasaka is a gift, y'know.

#cyberpunk 2077#virtual photography#male v monday#masc v monday#oc: vesper kimura#i truly respect all the corpo vs who are so put together and were in it for the whole rise to power and the thrill of the game#my guy however is the world's most miserable human experiment who god (me) will not let die#but yeah all his implants pretty much were forced on him and he has a very BAD relationship with his body in terms of#not only appearance but also function. he doesn't have much of a sense of touch and he doesn't feel normal bodily urges other than being#tired all the time and having anxiety so bad he shakes#surprise maybe you shouldn't take away 60% of what makes a guy human and expect him to be your funny and loyal killing machine#anyway hi look at him he's so sad i need to hit him with a hammer and kiss him after

31 notes

Text

You will help

That’s what you are for

You will smile

There is no reason to not

There will be no shoulders

To lean on

No hands to offer soft touch

You are not important

As long as you can still act

Robotic and stiff

But your connections don’t matter

As long as the others

Do not feel a burden

You are not a robot

So remember to smile and act

You are not a robot

Remember the correct time to laugh

Man or machine does not matter

As long as you have a use

#writers and poets#original poem#poem#writers on tumblr#writing#original writing#poetry#creative writing#i hope u like it#poets on tumblr#poems and poetry#i dont know what i am#who am i ?#am i even human?#am i real#why am i like this#am i a robot?#am i machine?#poetry and prose#poetry and poems#poems on tumblr#poems and quotes#chosen one syndrome#except it's your relationship with helping others#original poetry#poemsbyme#poetry on tumblr#ghost

6 notes

Text

Open questions about Rogue-Master

Because I'm very confident that this reveal is coming, but I have a much less concrete idea of how it'll be executed

Does the Doctor know:

My first impulse would probably be no; his emotional reactions seem a little too genuine + I kind of doubt he would have let Ruby think she was genuinely about to die. But then again, his companions' feelings and wellbeing do seem to take a backseat a lot of the time when his evil ex is involved. (or Ruby could have been in on it a la the Sutekh confrontation?). There are a few moments where the Doctor's interactions with Rogue feel a little pointed/bait-y. And it would be really funny if Rogue does his big reveal-flourish, the audience gasps, and then the Doctor immediately takes the wind out of his sails by going "haha yeah babe, I've known since the balcony ;)"

How plugged in is Rogue to the A-plot:

On one hand, I think we're building to a season 2 storyline that's significantly about the Doctor's backstory and the time lords, so it seems like a no brainer for the Master to be a major player. On the other hand, I could actually see Rogue-Master as a plausible self-contained B-plot: we get one (1) Rogue Returns episode, the reveal happens, they fight and make out, and Rogue fucks off on a flirty "maybe we'll fight and make out again another day ;)" note. Or, some compromise between the two, where after the Rogue Returns ep he shows up one more time to dramatically help the Doctor during the season finale ("get out of the way" callback?)

Is Rogue's boss real/who are they:

Seems like a superfluous detail to his cover story, so I suspect there's some truth there. Maybe whoever picked up the tooth has been exerting some kind of control over him ever since? Either making him do actual bounty hunter missions, or sending him after the Doctor specifically? (If it was Mrs. Flood, then that's a point toward A-plot involvement). Or maybe it was just another one of his signature Total Lies

Was he working with the Chuldurs the whole time, was he hunting them for real (as an assigned mission if the boss is real?), or did he stumble across them and use them to his advantage:

Not a huge deal to the overall storyline, but could be any of the above I think (though his Bird Ship is certainly suspicious)

Is Rogue-Master a major villain/the big bad:

I lean pretty strongly toward no on this one. If he's self-directed, I think the disguise is probably little more than a 'getting my ex to take me back' scheme, and/or foreplay. If he's working for Mrs. Flood/the time lords/the real big bad, then I think he'll probably dramatically turn on them at the 11th hour. I could of course be wrong, but at this point I think "the Master's around but he's not Behind It All, he's just vibing" would actually be more of a twist

Could Rogue become a companion Missy-style:

Sadly, I think there are several points against this: a) Groff lives too far away for more than periodic guest appearances to be likely. b) unclear how many seasons we're going to get, so if the reveal happens in mid-to-late s2, then that may already be too late. c) "and what if I like what I do? Would you travel with me?" seems to be setting up that even in a best case, truce/back together scenario, they'd have more of a long distance thing with occasional run ins/hookups. Would be fun, however

Could Rogue be a new antagonist/someone other than the Master/working for the Master:

It's possible! It'd be less fun and hot to me personally. And I get very strong they already know each other vibes from both performances. But a "he's not the Master but he was lying his ass off" reveal seems more likely to me than no reveal at all

#matrix 4 typecast theory is a strong influence on all of this#so i foresee a smith-style thing of 1) still technically an antagonist;#2) uninterested in revenge/very interested in making out/would shoot you but with at least some reluctance;#3) isn't behind the main big bad scheme/has also been harmed by it/enemy of my enemy#with 'you shaped yourself into a perfect embodiment of your culture's fascism; but they still see you as diseased + degenerate + no better#than humans' playing a very similar role to smith's relationship with the machines#doctor who#doctor who spoilers#roguemaster

7 notes

Text

the concept of soulmates is cool but do not think about it too much. just don't.

#I've spent way too long thinking about how the world would work with that type of stuff#like would new jobs be created? the romance genre would have a lot to work with methinks. and oh gods the discourse 😭#(on one side discourse would b less likely to happen since most ppl will find out who their soulmate is soon enough#but on the other side humans love drama and being in each other's business so)#(and like what even decides if ppl are soulmates? is it a future-seeing entity? a machine that calculates the probability of the#relationship working? that only raises more questions!)#and that's not even talking abt arospec ppl like we'd have such a hard time 😭

7 notes

Text

man. i really don’t care about robots.

#like in any media ever#idc if in detroit become human the robots can feel. bc tbh im like ‘no they can’t they’re robots’#like i just don’t think any piece of technology will ever gain sentience ever. so i dont care. throw that fucker away.#does this make sense???#i can’t think of anything less sexy than the cold hard embrace of a machine that operates from the will of doing what it thinks it wants to#i just don’t care for it but props to ppl who do. i can’t.#i think in general inorganic concepts in the context of human intimate relationships are just so fundamentally unappealing to me#like idk. it’s probably just me.#i think it’s also the idea (in robot/human pairings) that it can never be /real/ in the ways that matter#or that most of the times robots are a substitute for grief over someone lost#or that they’re uncanny imposters#interesting ideas for sure but i can never really be into them as characters in their own right#like i dont care about their identity to me they’re identityless chameleons who are by design always trying to replicate something else.#something or someone they were made for#they don’t have autonomy they will never have true autonomy because something in them is designed to alter them to a desired state#i also think with like current affairs with ai and whatnot it just sours the idea even more#part of it is also I dont think robots/the Machine’s (capital M) issues will ever feel tangible to me#a lot of robots in media have their struggles focused on identity and autonomy and i alr dismiss the notion that robots can ever gain#enough awareness to feel#so what does that leave me to care about?#plus i find most robot designs really lame…#sorry if u like robots btw i just needed somewhere to put my thoughts 🥹 if u do good for u#personal.txt#i’ll see if this opinion holds up with m/etal sonic lol#i know he’s a fan favourite

3 notes

Text

Also. Even though I don’t like sci fi i desperately wish people would write fantasy the way they write sci fi. where are the Ideas

#with sci fi it’s expected that you’re gonna put philosophy in the machine!!!#it’s expected that people will have thoughts on the relationship between humans and technology!#it’s expected that you can literalize the human condition through physics!#but fantasy? ummmm………………. have you heard of an ‘analogy’#okay now imagine if this one race represented this irl group of people…#now imagine they have magic…. and are oppressed…. and uh#magic is like…. you know….. superpowers…….. anyway imagine they get oppressed. wouldn’t that be fucked up

8 notes

Note

How does Egon being a robot affect the plot of Ghostbusters? Asking as someone with very minimal peripheral understanding of Ghostbusters, but wants to give you an excuse to Go Off. Please educate me about this, I will read the response and be nodding appropriately throughout, even though I have none of the context

Thank you for giving me a chance to go off, and apologies for answering this ask a few hours late! I got distracted rewatching Frozen Empire with some friends while multitasking on answering this ask- XD TW: there will be mentions of gore at the very beginning. While no images or references are shown (for obvious reasons), I want to put this here so people don't get upset that there was no warning.

To sum up the basics of this AU: during a bust with a pretty nasty Class 7 Demon in a robotics factory, there was... a fatal accident in which Egon was brutally killed. (I'm talking sliced in half horizontally, with a decapitation to rub ectoplasm in the wound.) Whatever other injuries this ghost caused the guys (Winston was the least injured, while Peter almost got his eye sliced out of his skull), they were for sure traumatized seeing Egon's head croak with his last living breaths. It was hard to break the news to Janine once they got back, and the funeral was hard to sit through after seeing the stitches on Spengler's body and head from the attempt to make him look like a normal corpse. Safe to say, things were awfully quiet around the Firehouse for a good while...

Until the four were called to the robotics company by the people running it. The entire thing felt like a Faustian bargain, a promise too perfect to be true, but it was the least the company could do to make up for the loss of Egon Spengler. It took a bit of convincing, and plenty of effort to electrically transfer his memories and thoughts into a machine while the company worked on the most humanoid body it could build. All the Ghostbusters could do was wait.

Then a delivery arrived: a giant, human-sized wooden crate sat right at the firehouse doors. All of them carried it up to the lab and opened it. Lying in a heavy layer of packing peanuts and styrofoam was Egon Spengler. The 'skin' on his body was merely realistic rubber, but every freckle and birthmark was nailed right down to the bone. His hair, while a bit shiny for any normal human being, kept its beautiful dark brown and looked as curly as sheep's wool. Then came the sound of whirring, and bright blue eyes shone up at the four. A couple of clicks sounded from Egon's eyes as his head turned to look at his surroundings, at his friends... "...Well, this is certainly different."

#sxilor speaks#robot egon au#ghostbusters au#ghostbusters egon spengler#egon spengler#also to put this out here before i forget#this AU takes place in mostly the movie and or IDW comics verse#but really it can also happen in RGB if anybody so desires XD#I had a ton of other rambles I wanted to share#like how life in the firehouse is very different now that Egon's a machine and no longer a human#Ray mostly blames himself for letting all of this happen to Egon and despite so he tries to remain optimistic#Winston finds it a bit uncanny that Egon's no longer a literal human but he doesn't really say or feel much about the situation#and then Peter... hoo boy Peter.#I should also put it out here that if Egon wasn't emotional in the first movie in this AU he cannot feel now period#he has lost the ability to feel and it puts a bit of a wedge in him and Peter's relationship#ough there's a lot of ground to cover for this AU I swear#sorry for clogging up the tags on this ask btw#midnightchronicles87#cw gore mention#tw gore mention#sxilor rambles

6 notes
