Week 12

https://www.youtube.com/watch?v=TcYOflpxPSY

1. Well-considered AV relationships

The first thing I considered in the AV relationship was synchronization, including the synchronization of foley and sound effects. In my application, foley has to be synchronized with the visuals not only in timing but also in auditory perspective. I added studio and convolution reverb to simulate a reverberant environment that matches the visuals, and adjusted EQ and channel volume to match the distance and direction of each sound.
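The direction cue mentioned above (adjusting channel volume) is commonly handled with a constant-power pan law. A minimal sketch, assuming a pan value from -1 (hard left) to +1 (hard right); `pan_gains` is a hypothetical helper for illustration, not a Premiere Pro or Ableton function.

```python
import math

def pan_gains(pan: float) -> tuple[float, float]:
    """Return (left, right) gains for a constant-power pan.

    pan: -1.0 = hard left, 0.0 = centre, +1.0 = hard right.
    """
    if not -1.0 <= pan <= 1.0:
        raise ValueError("pan must be between -1 and 1")
    # Map the pan position to an angle between 0 and pi/2.
    angle = (pan + 1.0) * math.pi / 4.0
    return math.cos(angle), math.sin(angle)

# left^2 + right^2 stays 1, so total power is constant as the source moves.
for p in (-1.0, 0.0, 1.0):
    left, right = pan_gains(p)
    print(f"pan={p:+.1f}  L={left:.3f}  R={right:.3f}")
```

At the centre both channels get about 0.707 (-3 dB), so the sound does not dip in loudness in the middle of the stereo field, as it would with a simple linear crossfade.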

Reducing or muting foley when appropriate is another important aspect of the AV relationship in my sound design. This can be interpreted as a narrative level of the character's internal sound. For example, when people concentrate on one thing, their brain automatically filters out some external sounds; this approach can be heard in the bullet-time sound design.

In contrast, sometimes sounds need to be amplified and exaggerated, either to tell the audience that this is an important point in the story or to add power and thickness to the visuals. I applied this to the gunshots and some key footsteps.

It is worth noting that I added a riser before every vital moment, such as a jump, an explosion, or certain camera movements, and released it at its peak. The release can take many forms: sometimes it is visual, sometimes a high-impact effect or a foley sound. This creates strong sonic and narrative dynamics for the visuals.

2. Professionally implemented SD/score

I used synthesizers in Ableton for individual sound designs such as drones and UI sounds, then imported all the sounds into Premiere Pro. In Premiere Pro I could sync the AV more easily and, more importantly, add effects to each audio clip. This makes the project easier to manage, because I don't have to create many tracks and can still apply effects to each clip.

I tried to avoid reusing key sound material as much as possible; when using different sounds of the same type, I tried to make sure they did not differ too much in thickness and quality.

Through discussion, I learned that this is a cyberpunk 3D animated short film about a scientist being hunted by a cyborg hunter. At first I made some drone sounds as a WIP score for the first scene; it seemed a simple and effective way to build an atmosphere of mystery and danger. But Desmond said it was not the music he had in mind, so over the next few weeks the group shared music and discussed the function of the score. I ended up with synthesizer techno: I think synthesizers are a perfect fit for the cyberpunk style, and techno can also create the tension of a battle.

I made cuts and added high- and low-pass filters so that the drums enter at the right moment, in order to achieve valence and activation in the narrative of the score.
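Filter sweeps like this are usually automated on an exponential scale, because we hear pitch and brightness roughly logarithmically. A minimal sketch that generates cutoff automation points; the function name and the 200 Hz to 20 kHz range are illustrative assumptions, not the settings used in the project.

```python
def cutoff_sweep(f_start: float, f_end: float, steps: int) -> list[float]:
    """Exponentially spaced filter cutoff values from f_start to f_end (Hz)."""
    if steps < 2:
        raise ValueError("need at least two automation points")
    ratio = f_end / f_start
    # Each step multiplies the cutoff by the same factor.
    return [f_start * ratio ** (i / (steps - 1)) for i in range(steps)]

# Opening a low-pass filter over 5 automation points, e.g. before the drums come in.
print([round(f) for f in cutoff_sweep(200.0, 20000.0, 5)])
```

Here each point is about 3.16 times the previous one, so the sweep sounds even instead of rushing through the low range; swapping the start and end values gives a closing sweep.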

3. Appropriate workload for semester

There are three of us in this collaboration: Kim and I are sound designers and Desmond is the animator. We had an in-person meeting at the very beginning, and through discussion Kim and I decided to work separately rather than together, because we have different styles and want to be as creative as possible, though we still share ideas with each other. Working this way gives Desmond more options: he can choose which of our two versions he prefers, or combine parts of each into a final version. We also recorded his requirements for the sound design and the atmosphere and worldview he wanted to construct.

We have a weekly meeting where we discuss what the animator is going to work on next, then show him our work and ask him for choices and suggestions. I also ask about details of the animation, such as the mood and state of the character, the environment, and the world outside the frame.

I also raised some issues with the camera movement during the WIP discussion. Desmond said he would recompose the camera movement in the first scene after all the scenes are finished, so I decided to refine the train sound after the camera is redone.

4. Onboarding class feedback

One challenge that stumped me was the sound of the trains. I recorded trains myself to understand how their sound differs with direction and distance. However, the train in the animation is a very fast-moving object, and no equivalent reference exists in real life. Through the feedback in class, I understood that I should have focused on synchronizing the whoosh sound rather than on the sound of the train itself.

It was mentioned in class that my footsteps were not synchronized with the picture, which I had noticed while making it: the figures on screen move very fast, but their legs move slowly. A great idea from the class was to add more weight to each step to give it more power, so that it makes sense that the character is moving fast.

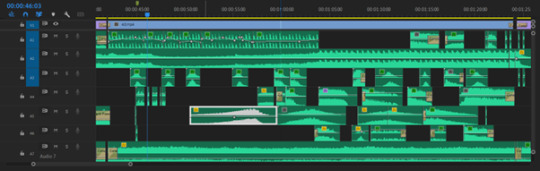

By week 12, I had completed the sound design for three scenes of this project; Desmond is working on the remaining two scenes, which will be finished by 10 June.

Week 11

1. WIP

https://www.youtube.com/watch?v=PJL92TshRZ4

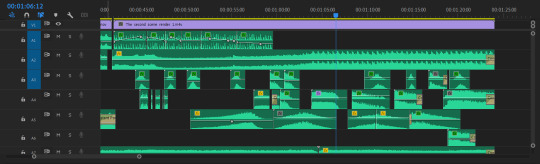

This week I made some adjustments to the second scene and created a new sound design for the third scene. I took some of the advice I got in class, optimized the blast sound, and added more whoosh effects to express the dynamic movement.

2. Study/Research

This week I researched Dune (2021), which was nominated for the Academy Award for Best Sound at the 2022 ceremony, and looked at how Dune's VFX and sound teams created the sandworms from scratch.

"Obviously, if you Google 'sandworm' on the Internet, you'll find a lot of different versions," Mr. Vermette said (2021). "This has been an inspiration for many science fiction fans and movies. There's a sandworm in Star Wars." So they wanted to do something really new and scary. The sandworm design they came up with is what Lambert calls "prehistoric": gnarled, covered in scales, and appearing to be hundreds of feet long. Whales were one of the best models: when Timothée Chalamet faces the open maw of a sandworm, it is full of whalebone, and its movements below the surface had to be whale-like. Lambert's team used all of these ideas when creating the worms, rendering their textures in Clarisse, animating them in Maya, and compositing each shot in Nuke.

This brings them to the other thing that makes the sandworms unique: their sound. Besides shaking the ground, the worms' movement should be audible to the Fremen in the deserts of Arrakis, and to viewers of the film. Sandworms also track underground sounds, like sonar (Jennifer Walden, 2022), which is why the Fremen distract the creatures with "thumpers" that strike the ground continuously.

Musically, Hans Zimmer uses non-woodwind instruments and mixers to produce music that often resembles the roar of a machine engine starting or a police siren, creating a strong sense of pressure and insecurity. Melodically, he uses the augmented second, an interval associated with Middle Eastern modes, which echoes the film's desert setting of Arrakis.

3. Reflection

As discussed in class, a big problem is that the footsteps are not synchronized with the visuals. The characters' step frequency in the animation is not very high, but they move fast, which calls for thicker footsteps to show that the character is moving fast. What should footsteps sound like in bullet time or slow motion? Sometimes they should be dropped, and sometimes exaggerated to emphasize the atmosphere. Zion made a good suggestion: synchronize with the visual dynamics by adjusting the low-pass or EQ filter on the score. I applied that in this week's adjustments.

Week 10

1. WIP

This is this week's sound design work:

https://www.youtube.com/watch?v=B8b40iGEYfg

This week Desmond sent me a new rendering test of the second scene. In the new clip, Desmond is aiming for a "bullet time" effect (The Matrix, 1999) when the battle starts.

We had an online meeting this Friday (Dezu, Kim, Desmond). I asked about the progress of his animation and gave him some suggestions on how the camera movement could be improved.

2. Study/Research

A slow-motion sound design tutorial (I will work through it next week):

https://www.youtube.com/watch?v=z6r7BhWq-Io&t=185s

Below are some research notes on Mark Camperell's workflow for the sound design of The Flash, which gave me a lot of inspiration for designing an object moving at high speed.

In the sound design for The Flash, they blended several elements together to make up Barry's sound: thunder, electricity, jets, fireballs, and various custom whooshes and impacts. These are manipulated for specific situations to give a sense of perspective (Asbjoern Andersen, 2015). For the Flash's abilities, Mark Camperell's approach was to edit him like a really aggressive flying car. That doesn't mean sampling a real car, but thinking of it as a car chase: there are approaches, bys, aways, stops, on-boards, even power slides and skids…

3. Reflection

We reviewed everyone's work so far in class this week; here are the reflections and comments I summarized. In the first scene, where the train passes by, I only considered the train's operating sound and missed the whoosh, especially in the close shot. Some unnatural camera movements also made it hard to design a transition sound, so I discussed this with Desmond and gave him some suggestions to optimize them.

Another challenge for me was the “flash” sound. I used an electric sound, but it did not really sound materialized: it was too thin and lacked impact. So I researched the sound design of The Flash, which is more like an electrical impulse with some whoosh.

https://www.youtube.com/watch?v=RkvhJ83iUzc

There is a tension-building sound design in Beyond the Black Rainbow: the drone is simple and repetitive and never even changes note, and together with the deep reverb on the dialogue it builds a lot of tension in the interrogation scene. I think it is this uncomfortable design (a lot of repetitive low synthesizer tones, high dynamic range, murmured voices with excessive reverb) that gives the film its special atmosphere.

Week 9

1. WIP

https://www.youtube.com/watch?v=mJxQXWnEHnw

This week I made the mock-up for the second scene. After discussing it with Desmond, I changed the score to a free Japanese trap track that I found on YouTube.

In the second scene the protagonist runs through a narrow alley. I sampled some footsteps in the rain and made them move from far to near by adjusting EQ, volume, and mono panning. When the character passes through the frame, I added some whoosh and electric sounds to synchronize with the visuals.

2. Study/Research

This week I tried to find out why the human ear hears differences when sound comes from different directions. The interaural level difference results from the fact that the head and body provide an acoustic “shadow” for the ear farther from the sound source. This “head shadow” produces large interaural level differences when the sound is opposite one ear and is high in frequency (Dobie RA, Van Hemel S, 2004). Another factor is the distance between the left and right ears. Except for sources on the vertical (median) plane, sound from any direction reaches the two ears at different times: if the source is to the right, it reaches the right ear first, and vice versa. The further the source is to one side, the greater the time difference between the ears (Madeleine Burry, 2021).
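The interaural time difference can be estimated with Woodworth's spherical-head approximation, ITD ≈ (r / c)(θ + sin θ), where θ is the azimuth. A sketch assuming a head radius of 8.75 cm and a speed of sound of 343 m/s (typical textbook values, not figures from the sources above); the formula only covers sources between straight ahead (0°) and directly to one side (90°).

```python
import math

HEAD_RADIUS_M = 0.0875    # assumed average head radius in metres
SPEED_OF_SOUND = 343.0    # m/s in air at about 20 °C

def itd_seconds(azimuth_deg: float) -> float:
    """Woodworth approximation of the interaural time difference."""
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth must be between 0 and 90 degrees")
    theta = math.radians(azimuth_deg)
    return HEAD_RADIUS_M / SPEED_OF_SOUND * (theta + math.sin(theta))

for az in (0, 30, 60, 90):
    print(f"{az:2d} deg -> {itd_seconds(az) * 1000:.3f} ms")
```

The delay grows from 0 ms straight ahead to roughly 0.66 ms at 90°, which matches the point above that the further the source is to one side, the larger the time difference.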

I also recorded some trams in the city to simulate how the ear hears sound from the front or back, using my hand to block the microphone to simulate the pinna. Frequencies above 1 kHz from the “back” were slightly reduced compared with sound from the front, so I could adjust the EQ in a similar way to further enhance the sound perspective in my design.

3. Reflection

In the last few weeks' work, I have always tried to make a realistic sound design with a clear hierarchy of sounds. I'd like to try something more experimental and bold in the following work.

Week 8

1. WIP

As Desmond has not sent me any new rendering clip this week, I kept adjusting the effects on the last video. This is a new version of the sound design. The UI sounds I used last week were raw library sounds; they were OK but sounded too clean, so I added some reverb and EQ to make them sound more distant. I also changed the music to a flat drone that I made in Ableton.

https://www.youtube.com/watch?v=xlZfCQjFiVI

2. Research/Study

This week I learnt to make some UI sounds in Ableton.

https://www.youtube.com/watch?v=b4edTjKVPig&t=204s

https://www.youtube.com/watch?v=xriCeA1Q_C8

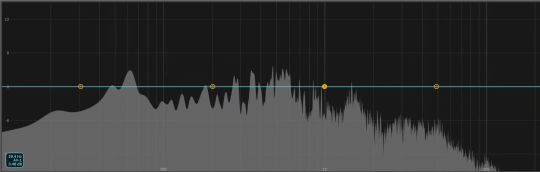

This is a screenshot of my UI sound design experiment.

Here are some other futuristic weapon sound design tutorials I researched:

https://www.youtube.com/watch?v=AUcDgSSa0tc

Some research on cyberpunk style:

https://www.youtube.com/watch?v=P1jXmJmmj3o

In the Bibi's Bar scene in Blade Runner 2049, the setting shifts from the towering postmodern buildings of the Wallace Corporation's Earth headquarters to streets of Los Angeles that resemble Kowloon Walled City, and the hallucinatory soundscape of the previous scene turns into noisy advertisements and rain, a strong contrast. This shift into the dystopian atmosphere of 2049 Los Angeles adds multiple layers: as the viewer's perspective rises from the ground into the air, the world gets quieter until the soundscape takes on a Zen-like calm.

3. Reflection

This week we met Lawrence Harvey, who demonstrated our sound in the theater. As we walked around the theater listening to the various speakers from different distances, the sound felt totally different depending on position and space, because the hall is so big that it produces a lot of reverb and echo. This reminded me to think more about stereo sound and to monitor my sound design in different spaces and on different speakers. I also need to define the target playback environment (where it will be played) and make further modifications to achieve the best result; for example, considering resonance in the room when designing bass and reverb sounds.

Another interesting topic we discussed in class was why brass sounds majestic and strings sound sentimental. Bowing might be one reason strings sound more sentimental, but I think brass can do it as well; for example, trumpet players make trills by controlling their breath. Brass instruments usually have a bigger acoustic resonant body, so they produce a wider frequency band, which might make them sound majestic, but I believe that adding a chorus effect and dropping a string sound a few octaves can create the same feeling. Many factors affect this, but I think the most important is timbre: brass sounds brighter and harder, like a synthesizer's square wave, while strings sound softer, like a sine wave.
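The brightness difference between a square wave and a sine wave comes from harmonic content: a sine wave has only its fundamental, while an ideal square wave contains every odd harmonic n at amplitude 4/(πn). A minimal sketch comparing the two; it illustrates the synthesizer analogy only and is not a model of real brass or string spectra.

```python
import math

def harmonic_amplitude(waveform: str, n: int) -> float:
    """Amplitude of the n-th harmonic of a unit-amplitude sine or ideal square wave."""
    if n < 1:
        raise ValueError("harmonic numbers start at 1")
    if waveform == "sine":
        return 1.0 if n == 1 else 0.0                        # fundamental only
    if waveform == "square":
        return 4.0 / (math.pi * n) if n % 2 == 1 else 0.0    # odd harmonics only
    raise ValueError(f"unknown waveform: {waveform}")

for wave in ("sine", "square"):
    amps = [round(harmonic_amplitude(wave, n), 3) for n in range(1, 8)]
    print(f"{wave:>6}: {amps}")
```

The square wave's third harmonic is a third as strong as its fundamental, the fifth a fifth, and so on; those upper partials are what the ear hears as brightness and hardness.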

In addition, the rhythm of the instrument can affect its feeling a lot. Short, powerful string notes can also feel very majestic; we can hear such sounds in many marches.

Week 7

1. WIP

This week Desmond sent me a 30-second rendering test video for his animation's intro scene. I made a list for this scene and a rough sound design for it. He wanted hip-hop music as the score, so I found a free hip-hop beat and designed the remaining sound effects and foley.

2. Research/Study

This week I focused on researching sound perspective.

Sound perspective refers to the apparent distance of a sound. Clues to the distance of the source include the volume of the sound, the balance with other sounds, the frequency range (high frequencies may be lost at a distance), and the amount of echo and reverberation.

http://www.moz.ac.at/sem/lehre/ws08/em1/250SoundPerspect.html#:~:text=Sound%20perspective%20refers%20to%20the,amount%20of%20echo%20and%20reverberation.

Why is sound perspective so important?

It can immerse an audience in a unique world, help tell the story and move the storyline along.

The easiest way to create sound perspective is simply to record at different distances, but this also picks up a lot of noise, so we can use effects to “cheat”. Simply raising or lowering the volume can make a sound seem closer or further away, and a common trick is to apply reverb to closely recorded sounds to make them seem further away.
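Raising or lowering the volume to fake distance can be guided by the inverse square law: in a free field, level drops by about 6 dB every time the distance doubles. A sketch assuming a point source with no room reflections (in a real room the reverb makes the drop smaller); the function names are hypothetical helpers.

```python
import math

def distance_gain_db(ref_distance: float, new_distance: float) -> float:
    """Level change (dB) when a point source moves from ref_distance to new_distance."""
    if ref_distance <= 0 or new_distance <= 0:
        raise ValueError("distances must be positive")
    return 20.0 * math.log10(ref_distance / new_distance)

def db_to_gain(db: float) -> float:
    """Convert decibels to a linear amplitude multiplier (e.g. for clip gain)."""
    return 10.0 ** (db / 20.0)

change = distance_gain_db(1.0, 4.0)   # recorded at 1 m, should sound 4 m away
print(f"{change:.2f} dB -> multiply amplitude by {db_to_gain(change):.3f}")
```

Moving from 1 m to 4 m is two doublings, so the level falls by about 12 dB, a quarter of the original amplitude.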

A foundational tutorial on sound perspective (making audio sound closer or far away):

https://www.youtube.com/watch?v=X8iexmTgtL0&t=423s

3. Reflection

In my assignments 2.1 and 2.2, I tried many ways to create sound perspective, such as panning a mono source left and right to place it on the left or right, and adjusting reverb decay and wet output to simulate different spaces. As sound travels through the air, it loses its high-frequency content, because air absorbs high frequencies more strongly than low ones. I started with a high-shelf filter, dropped it by 6 dB, and added a short pre-delay to make the sound seem farther away. Moreover, adding a reverberation cue to isolated sounds can enhance the feeling of emptiness and silence (Chion, 1994, p. 58).
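The high-shelf cut and pre-delay described above can be checked numerically. The filter below uses the widely published RBJ "Audio EQ Cookbook" high-shelf biquad with shelf slope 1; the 4 kHz corner, 48 kHz sample rate, and 20 ms pre-delay are assumptions for illustration, not the settings from the assignments.

```python
import cmath
import math

def high_shelf(f0: float, gain_db: float, fs: float):
    """RBJ cookbook high-shelf biquad (shelf slope S = 1), normalized by a0."""
    a = 10.0 ** (gain_db / 40.0)
    w0 = 2.0 * math.pi * f0 / fs
    cos_w0 = math.cos(w0)
    alpha = math.sin(w0) / 2.0 * math.sqrt(2.0)   # S = 1
    k = 2.0 * math.sqrt(a) * alpha
    b = [a * ((a + 1) + (a - 1) * cos_w0 + k),
         -2.0 * a * ((a - 1) + (a + 1) * cos_w0),
         a * ((a + 1) + (a - 1) * cos_w0 - k)]
    den = [(a + 1) - (a - 1) * cos_w0 + k,
           2.0 * ((a - 1) - (a + 1) * cos_w0),
           (a + 1) - (a - 1) * cos_w0 - k]
    return [x / den[0] for x in b], [x / den[0] for x in den]

def magnitude_db(b, a_coeffs, f: float, fs: float) -> float:
    """Magnitude response of the biquad at frequency f, in dB."""
    z1 = cmath.exp(-1j * 2.0 * math.pi * f / fs)   # z^-1 on the unit circle
    num = b[0] + b[1] * z1 + b[2] * z1 * z1
    den = a_coeffs[0] + a_coeffs[1] * z1 + a_coeffs[2] * z1 * z1
    return 20.0 * math.log10(abs(num / den))

def predelay_samples(ms: float, fs: float) -> int:
    """Convert a reverb pre-delay in milliseconds to whole samples."""
    return round(ms * fs / 1000.0)

FS = 48_000.0
b, a = high_shelf(4000.0, -6.0, FS)
for f in (100, 1000, 4000, 16000):
    print(f"{f:>5} Hz: {magnitude_db(b, a, f, FS):6.2f} dB")
print("20 ms pre-delay =", predelay_samples(20.0, FS), "samples")
```

With this design the response passes through half the shelf gain (-3 dB) at the corner frequency and approaches the full -6 dB towards the top of the spectrum, while low frequencies are left almost untouched.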

Week 6

1. WIP

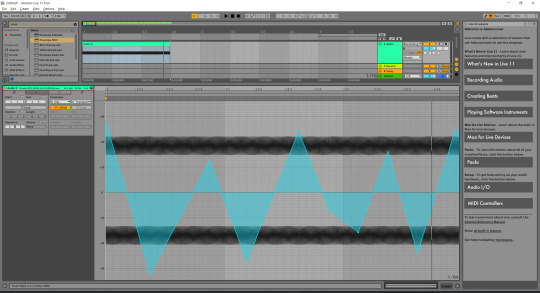

This week I kept digging into Ableton Live, experimenting more with Operator and the LFO.

I also found a really interesting experiment: applying an LFO to the fine-tuning parameter produces a mysterious sound, and when applied to arpeggios it creates a mysterious and intense atmosphere.
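Modulating fine tuning with an LFO is essentially vibrato: the LFO output, scaled to a depth in cents, moves the oscillator's pitch up and down. A minimal sketch; the 220 Hz note, 5 Hz rate, and 30-cent depth are assumed values, and this does not reproduce Operator's internal modulation routing.

```python
import math

def modulated_frequency(base_hz: float, t: float, lfo_hz: float, depth_cents: float) -> float:
    """Oscillator frequency at time t when a sine LFO modulates fine tuning."""
    lfo = math.sin(2.0 * math.pi * lfo_hz * t)   # LFO output in [-1, 1]
    cents = depth_cents * lfo                    # fine-tune offset in cents
    return base_hz * 2.0 ** (cents / 1200.0)     # 1200 cents = one octave

for t in (0.0, 0.05, 0.1, 0.15):
    print(f"t={t:.2f}s  f={modulated_frequency(220.0, t, 5.0, 30.0):.2f} Hz")
```

Because the offset is expressed in cents, the same depth gives the same musical wobble on every note of an arpeggio.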

I collected and sifted some sounds from the library, keeping ten of each sound as reserves (marked yellow when ready).

Desmond said he will send a rendering test clip next week.

2. Reflection

In past sound design assignments, I divided the video into several sections based on scenes and shots, then designed the sound for each section. When we watched The Day After Tomorrow sound design breakdown in class, I realized it is important to design each sound type separately or in groups. Some frequencies were too loud when everything was finally combined, which is what adjusting the different channels in the final mix is for, but I think working this way lets me focus more on the microstructure.

In Pixar's animations, the score shapes most of the atmosphere and characterization and even acts as part of the foley; foley and effects are more often used just to express materiality. In Donald Duck cartoons, you can hardly hear any foley except for certain key props, just music and dialogue, and nothing feels wrong with that.

3. Study/Research

Tutorial and analysis videos this week:

Ableton Live Operator synth tutorial

https://www.youtube.com/watch?v=ngojWlvfahI

Fight Club | The Beauty of Sound Design

https://www.youtube.com/watch?v=as2Rk4WcljA

Research and summary of sound design in different films:

The style of film sound can be roughly divided into realism and expressionism. Realism is an accurate, unvarnished representation of nature or life. Realist sound design takes real-life sound as its reference, but it does not attempt to restore real life, and it is impossible to completely copy the sound of the shooting location; instead it aims at an artistic reality based on real life, that is, it emphasizes a realistic sense of sound (WangJue, 2018). Realist sound design generally starts from an objective point of view: timbre, volume, sense of space, and so on stay within the same spatial environment and are not deliberately modified or deformed. Sound perspective also follows real-life principles, with reverberant and reflected sound changing according to natural characteristics (WangJue, 2018). It is worth noting that these features only need to make the audience feel the sound is real. For example, a change in perspective from far to near in an enclosed space can be conveyed by the ratio of direct to reverberant sound.
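The direct-to-reverberant ratio can be approximated with a simple diffuse-field model: direct sound falls by about 6 dB per doubling of distance, the reverberant level in an enclosed room stays roughly constant, and the two are equal at the room's critical distance. A sketch under those idealised assumptions; the 2 m critical distance is an arbitrary example, and `dry_wet_for_distance` is a hypothetical helper, not a plug-in parameter.

```python
import math

def drr_db(distance_m: float, critical_distance_m: float) -> float:
    """Direct-to-reverberant ratio in dB (0 dB at the critical distance)."""
    return 20.0 * math.log10(critical_distance_m / distance_m)

def dry_wet_for_distance(distance_m: float, critical_distance_m: float) -> tuple[float, float]:
    """Energy share of direct (dry) and reverberant (wet) sound at a distance."""
    ratio = 10.0 ** (drr_db(distance_m, critical_distance_m) / 10.0)  # direct / reverb energy
    dry = ratio / (1.0 + ratio)
    return dry, 1.0 - dry

for d in (0.5, 2.0, 8.0):
    dry, wet = dry_wet_for_distance(d, 2.0)
    print(f"{d:>4} m: DRR {drr_db(d, 2.0):+6.1f} dB, dry {dry:.0%}, wet {wet:.0%}")
```

Under this model, moving a source from near to far in the same room mainly means raising the wet share relative to the dry share.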

reference:

The Concept and Method to Design the Film Music, 2018, WangJue

https://clipchamp.com/en/definition/what-is-expressionism-film/

https://www.studiobinder.com/blog/german-expressionism-film/

Week 5

1. WIP

This week Desmond sent me a work-in-progress clip of his animation, which gave me a better idea of the rendering style. I think techno music is perfect for cyberpunk action visuals, so I learnt some basic techno production in Ableton.

I collected some foley sounds from the library that I think work well, based on the table I created last week.

2. Research/Study

From researching the sound design of Solo: A Star Wars Story (2018), I think objects and their environment are key to the sound they make, which is difficult to reproduce in the studio. Therefore, the sound team must hear and record the actual sound for later use; otherwise the audio and visuals may not combine perfectly. "This is something that a lot of new players don't understand," says Tim Nielsen. "When we started to do post-processing, the post-processing was so heavy that the sound lost its personality and became confusing."

https://www.postmagazine.com/Publications/Post-Magazine/2018/June-1-2018/Audio-I-Solo-A-Star-Wars-Story-I-.aspx

Author: BK Acoustics and Vibration

This week I started playing with Operator (a synthesizer) in Ableton and tried to create my own drone sound.

Making Techno With Ableton Live 11:

https://www.youtube.com/watch?v=RKz-5n5cpAo&t=571s

3. Reflection from class

A BUG'S LIFE (1998)

The leaves sound heavy as they fall from the trees, even though a leaf is actually very light; this shows the contrast with the ants and sets them up as weak characters.

When the ants hide in the cave, the music stops and there is silence, which brings more emotion when the grasshoppers burst into the cave. Tension is built not only by music but also by silence.

The wing flaps are exaggerated (with a motorcycle engine sound); real bug wing flaps would usually not be interesting because they are too small. The important thing in A Bug's Life is to hear the world from the perspective of these tiny insects.

Filter Week 4

1. WIP

This week I met up with my collaboration partner, who showed me the updated storyboard. During the week I defined the key sound elements in the project plan.

A sample from Desmond's new storyboard.

I made a basic list of the key sound elements in this scene:

2. Research and learning

Comment from the last assignment: make a climax or release (drop) after building tension (riser).

This week I started learning synthesizer sound design in Ableton.

First, I will learn how the program works.

https://www.youtube.com/watch?v=vCNASTnM6p4&t=149s

Ableton Live 11 - Tutorial for Beginners in 12 MINUTES by Skills Factory (YouTube).

https://www.youtube.com/watch?v=Z37o4pB3Leg

Making foley sound musical in Ableton Live.

https://www.youtube.com/watch?v=XmI33zP51Us

Synth Basics by Musician on a Mission

Synth Basics:

Filter: Filters are software plug-ins or hardware units used to cut a particular band of frequencies from a sound.

Low-Pass: Removes frequencies above the cutoff point. The effect is progressive: it starts with the higher-frequency harmonics and works its way down, so the lower the cutoff, the more of the sound's upper harmonic content is removed.

Hi-Pass: A high-pass filter does the opposite of a low-pass filter: it removes the low frequencies of the sound first and works its way up towards the high frequencies.

LFO: A low-frequency oscillator (LFO), like any oscillator, can produce a wide variety of waveforms (commonly sine, square, triangle, and sawtooth). The difference is that LFOs usually oscillate below 20 Hz, so they are not heard as a tone (we cannot hear sound below about 20 Hz); instead they are used to modulate other parameters.
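The low-pass and high-pass behaviour described above can be demonstrated with the simplest digital filter, a one-pole low-pass, with the high-pass obtained by subtracting the low-passed signal from the input. A minimal sketch; real synth filters such as Operator's are steeper and can resonate, so treat this only as an illustration of the idea.

```python
import math

def one_pole_lowpass(samples: list[float], cutoff_hz: float, fs: float) -> list[float]:
    """One-pole low-pass: smooths the signal, attenuating content above the cutoff."""
    coeff = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / fs)
    out, y = [], 0.0
    for x in samples:
        y += coeff * (x - y)       # move a fraction of the way towards the input
        out.append(y)
    return out

def one_pole_highpass(samples: list[float], cutoff_hz: float, fs: float) -> list[float]:
    """Complementary high-pass: input minus its low-passed version."""
    low = one_pole_lowpass(samples, cutoff_hz, fs)
    return [x - lo for x, lo in zip(samples, low)]

fs = 48_000.0
dc = [1.0] * 2000                                          # constant (0 Hz) signal
alt = [1.0 if n % 2 == 0 else -1.0 for n in range(2000)]   # tone at Nyquist (24 kHz)
print("low-pass keeps DC:    ", round(one_pole_lowpass(dc, 500.0, fs)[-1], 3))
print("high-pass removes DC: ", round(one_pole_highpass(dc, 500.0, fs)[-1], 3))
print("low-pass damps 24 kHz:", round(max(abs(v) for v in one_pole_lowpass(alt, 500.0, fs)[-100:]), 3))
```

With a 500 Hz cutoff, the steady signal passes the low-pass almost unchanged and is removed by the high-pass, while the 24 kHz tone is reduced to a few percent of its amplitude.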

Reference: https://yamahasynth.com/learn/synth-programming/synth-101-basics-getting-started

https://www.izotope.com/en/learn/the-beginners-guide-to-synths-for-music-production.html

https://blog.andertons.co.uk/learn/synth-101-a-guide-to-synthesizer-terminology

Week 3

Sound Perspective

What is sound perspective?

Sound perspective refers to the apparent distance of a sound. Clues to the distance of the source include the volume of the sound, the balance with other sounds, the frequency range (high frequencies may be lost at a distance), and the amount of echo and reverberation.

http://www.moz.ac.at/sem/lehre/ws08/em1/250SoundPerspect.html#:~:text=Sound%20perspective%20refers%20to%20the,amount%20of%20echo%20and%20reverberation.

Why is sound perspective so important?

It can immerse an audience in a unique world, help tell the story and move the storyline along.

The easiest way to create sound perspective is simply to record at different distances, but this also picks up a lot of noise, so we can use effects to “cheat”. Simply raising or lowering the volume can make a sound seem closer or further away, and a common trick is to apply reverb to closely recorded sounds to make them seem further away.

A foundational tutorial on sound perspective (making audio sound closer or far away):

https://www.youtube.com/watch?v=X8iexmTgtL0&t=423s

In my assignments 2.1 and 2.2, I tried many ways to create sound perspective, such as panning a mono source left and right to place it on the left or right, and adjusting reverb decay and wet output to simulate different spaces. As sound travels through the air, it loses its high-frequency content, because air absorbs high frequencies more strongly than low ones. I started with a high-shelf filter, dropped it by 6 dB, and added a short pre-delay to make the sound seem farther away. Moreover, adding a reverberation cue to isolated sounds can enhance the feeling of emptiness and silence (Chion, 1994, p. 58).

In contemporary soundtrack practice, most dialogue in films is mixed to sound somewhat distant. Imagine a movie where all of the characters are always right in front of the camera, just a few inches away: that would be boring and asphyxiating (Marc Brouard). Mixers usually build an extra layer of three-dimensionality so that sounds sit in different sonic spaces, giving the mix a sense of hierarchy.

https://soundmixingstage.com/inside-mixing-dialogue-to-sound-more-distant/

Week 2

Research

Note from class: make a chord change or pluck a string at the point where the image glitches.

Note from YouTube video: SOUND DESIGN for FILMMAKING | Tutorial

https://www.youtube.com/watch?v=MwksKUJSZ9s&t=191s

Basic sound design elements to know:

Riser – Builds over time, creating suspense and anticipation up to a particular point, often followed by a steep drop; it can be used to introduce a new section or sequence of the film.

Drones & Atmospheres – Add extra depth to an edit while giving the audience extra information about the emotion they should be feeling in a particular scene.

Slow motion – Accentuates the slowing down of time.

Time-lapse – Conveys the rush and speeding up of time.

Transition – Can be classic whooshes, setbacks, and hits.

Ambience – Ambient texture that usually represents the environment and makes the world feel bigger.

Sound design doesn’t have to be exact; it should never be distracting but should play a subconscious role, giving more information and detail about the scene.

Notes from Chion, M., Gorbman, C. and Murch, W., 1994. Audio-Vision. 1st ed. New York: Columbia University Press:

Unification: The most widespread function of film sound consists of unifying or binding the flow of images. (p.47)

Sound definition. A sound rich in high frequencies commands perception more acutely, which explains why the spectator is on the alert in many recent films (p.15). This also explains why a riser is usually a build-up of high-frequency sound.

Feeling and Filmmaking: The Design and Affect of Film Sound, Lucy Fife Donaldson

AURAL MATERIALITY - Sound contributes significantly to the materiality of the image, despite its intangibility, fleshing out the movement of objects and bodies through increasingly fine detail. (p.32) The contribution of sound to the sensory appeal of cinema, to the construction of a fictional world, requires a range of very detailed decisions about what sounds should be heard and how they should complement and/or extend the image.

Progress

Using studio reverb to ‘extend’ the sound by adding a long decay.

https://www.youtube.com/watch?v=MAFUEqDdbCs
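A long decay in an algorithmic reverb is often built from feedback delay lines, where each pass through a delay of length d is scaled by a gain g chosen so the signal falls by 60 dB over the decay time RT60: g = 10^(-3d / RT60). A sketch of that relationship using a single feedback comb filter, a classic Schroeder-style building block; the 30 ms delay and the decay times are assumptions, and commercial studio reverbs are considerably more elaborate.

```python
def comb_feedback_gain(delay_s: float, rt60_s: float) -> float:
    """Feedback gain so a comb filter's echoes decay by 60 dB in rt60_s seconds."""
    return 10.0 ** (-3.0 * delay_s / rt60_s)

def feedback_comb(samples: list[float], delay_samples: int, gain: float) -> list[float]:
    """y[n] = x[n] + gain * y[n - delay]: a recirculating delay line."""
    out: list[float] = []
    for n, x in enumerate(samples):
        fed_back = out[n - delay_samples] if n >= delay_samples else 0.0
        out.append(x + gain * fed_back)
    return out

for rt60 in (0.5, 2.0, 6.0):   # short room vs. a long, 'extended' tail
    g = comb_feedback_gain(0.03, rt60)
    print(f"RT60 {rt60:>3} s -> feedback gain {g:.3f}")

impulse = [1.0] + [0.0] * 9
print(feedback_comb(impulse, 3, 0.5))
```

A longer RT60 pushes the gain closer to 1, so each echo is only slightly quieter than the last, which is what makes the tail extend.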

Convolution Reverb: It applies the acoustics of a real space to the signal. That space is captured as an impulse response. I used it on my drone sound to create more space and a hollow feeling.
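Convolution reverb applies an impulse response by convolving it with the dry signal: every input sample triggers a scaled copy of the whole impulse response, and the copies are summed. A direct-form sketch in plain Python for illustration; the tiny impulse response is made up, and practical convolution reverbs use FFT-based convolution because impulse responses of real spaces run to tens of thousands of samples or more.

```python
def convolve(signal: list[float], impulse_response: list[float]) -> list[float]:
    """Direct (time-domain) convolution; output length = len(signal) + len(ir) - 1."""
    if not signal or not impulse_response:
        return []
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, x in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += x * h        # each input sample adds a scaled copy of the IR
    return out

# Hypothetical IR: direct sound followed by two decaying reflections.
ir = [1.0, 0.0, 0.5, 0.0, 0.25]
dry = [1.0, 0.0, 0.0, -0.5]
print(convolve(dry, ir))
```

Feeding a single click (an impulse) through `convolve` returns the impulse response itself, which is why recording an impulse in a space captures its acoustics.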

Reflection

Indeed, only adding effects to sounds found in libraries is not always a solution for further work, so I will start moving towards synthesizers next week.
