
Oracle, the steps of a giant in Colombia

It was straight to the point, with the same seriousness and credibility that have made the company a prominent name in the global technology industry. That is the best way to describe the brief visit to Colombia of Mark Hurd, co-president of Oracle, the giant focused on corporate software. It was a business trip like many others, only this time to a destination that is beginning to carry significant weight in his company's performance. "It is a country where we have been present since 1990, with spectacular growth of about 5%, and where we have installed much of our infrastructure to serve regional customers," he said.

In a brief group discussion, the executive, co-president of the company founded by none other than Larry Ellison, one of the richest men on the planet, explained the strategic importance that the country and the region have acquired for a company that, according to its results for fiscal year 2014 (which ended in May), brought in US$38,300 million: "They have highly qualified human capital, which is valuable in our current situation, where the cloud and mobile apps are becoming an increasingly essential part of business."

The talk was enlivened by his jokes between questions, his vast knowledge of a field he has helped shape over the last four years, his command of the audience and the irony he used to get out of uncomfortable issues, all while remaining the politically correct executive of a company that has built a multimillion-dollar empire. It is an empire that has been strengthened in recent years by the acquisition of 90 companies which, beyond the initial investment of US$56,000 million, have allowed it to stay on the 'crest of the wave' in an industry that has had to adapt to the expansion of wireless networks, virtual servers and the explosion of mobile devices.

The secret of the success of Oracle, which claims to be number one in the world in business solutions and number two in applications, has been to anticipate this change, invest, adapt to the uptake of technology and innovate. "The technology market moves US$71 billion annually; applications, around US$2 billion; and consumers tend to invest US$9,900 million in technology. We cannot just look at the figures and keep the infrastructure of 10 years ago; we have to modernize, upgrade our capacity and make technology simple for our customers," said Hurd.

Hence Oracle's strategy to keep growing its sales and expand its market share in the near future will concentrate on four areas: helping companies with their vertical integration, so that all areas operate smoothly under one system; investing in and developing cloud-supported applications that make a difference in quality in the industry and are reliable thanks to their high security standards; providing companies with tools to develop their own applications freely; and supplying the infrastructure to support their projects.

The strategy is beginning to show promising results: in the last six months, the cloud segment alone earned Oracle US$2,000 million. That is the way forward and Colombia, according to Hurd, has the opportunity to play a special role in it: "The country's numbers, such as its growth, low inflation and the excellent educational level of its people, have led us to make strategic investments to expand further in the region."


What is SAP?

SAP is one of the most important software companies in the world and specializes in developing software packages for business management. Its tools are so sophisticated that they require a team of specialists for installation and maintenance.

SAP offers advanced training centers worldwide, and Business Support is one of its oldest. Our facilities provide the best learning environments, combining new educational approaches with the most qualified instructors and the latest multimedia technology.

You know that education is essential for the successful use of SAP applications. But have you ever tried training with SAP Education? In today's market, a certified SAP consultant has a high professional profile, working in large information technology consulting firms that implement and develop solutions for private and public companies.

SAP R/3 is divided into eleven modules, for example Human Resources, Finance, Materials Management, Controlling, Logistics, Sales (sales and shipments), Production, Maintenance and Quality.

Why get certified with SAP?

Gain a competitive advantage for you and your team with SAP certification. Our training certifications, which are recognized worldwide, show that you have refined your skills through rigorous study and direct experience in your area of expertise. Whether you are a user, customer or partner of SAP, we certify you in your area of specialization and at your level of knowledge in order to increase your technological value. Few credentials in the business world carry the value of SAP certification, held by those who have refined their skills through rigorous study or direct experience and have proven their capabilities by passing challenging, process-oriented exams.


COMPUTER ANIMATION

Computer animation, also called digital animation or computer-generated animation, is the technique of creating moving images using computers. Increasingly the graphics created are 3D, but 2D graphics are still widely used for slow connections and real-time applications that need to render quickly. Sometimes the target of the animation is the computer itself; at other times it is another medium, such as film. The designs are made with the help of design, modeling and finally rendering software.

To create the illusion of movement, an image is displayed on screen and quickly replaced by a new image in a different frame. This technique is identical to the way the illusion of motion is achieved in films and on television.

For 3D animations, objects are modeled in the computer (modeling) and 3D figures are fitted with a virtual skeleton (bones). To create a 3D face, the character's body, eyes, mouth and so on are modeled and then brought to life with animation controllers. Finally, the animation is rendered.

In most methods of computer animation, an animator creates a simplified representation of a character's anatomy, which is less difficult to animate. In bipedal or quadrupedal characters, many parts of the character's skeleton correspond to actual bones. Bone animation is also used to animate many other things, such as facial expressions, a car or any other object that needs to move.
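As an illustration of how a virtual skeleton drives movement, here is a minimal sketch of a two-dimensional bone chain. It is not taken from any particular animation package; the function name, the bone lengths and the angles are assumptions made up for the example. Each bone stores an angle relative to its parent, so rotating one bone moves everything attached below it.

```python
import math

def forward_kinematics(bone_lengths, joint_angles):
    """Return the (x, y) position of each joint in a 2D bone chain.

    Each angle is relative to the parent bone, in radians, so rotating
    one bone also moves every bone attached below it.
    """
    x, y, total_angle = 0.0, 0.0, 0.0
    positions = [(x, y)]  # the root joint sits at the origin
    for length, angle in zip(bone_lengths, joint_angles):
        total_angle += angle
        x += length * math.cos(total_angle)
        y += length * math.sin(total_angle)
        positions.append((x, y))
    return positions

# A hypothetical two-bone "arm": upper arm (length 2) and forearm (length 1).
arm = [2.0, 1.0]
straight = forward_kinematics(arm, [0.0, 0.0])       # arm fully extended
bent = forward_kinematics(arm, [0.0, math.pi / 2])   # elbow bent 90 degrees
```

An animator would change the joint angles from frame to frame and render each resulting pose; the positions are always derived from the skeleton rather than edited point by point.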

In contrast, another type of animation that can be more realistic is motion capture, which requires an actor to wear a special suit fitted with sensors; the actor's movements are captured by a computer and later incorporated into the character.

For 3D animations, frames must be rendered after the model is completed. For 2D vector animations, the rendering process is key to the outcome. For pre-recorded presentations, the frames are converted to a different format or to a medium such as film or digital video. Frames can also be rendered in real time, as they are presented to the end user.


Software for backup. Back up with confidence

Many inexperienced users do not back up their data until they discover how expensive and lengthy data recovery can be after a computer failure. And there are many situations in which poorly prepared users lose files permanently, some of them very valuable. The causes of failure vary: a brief power outage, a virus attack or just a minor system freeze. But the result is the same: the computer does not start, some files are hard to recover and some may be lost forever. In addition, even simply restoring a computer to its normal operating state is a very slow process. Imagine having to reconfigure the system and then install all the programs you need, remember all the parameters and passwords, and so on. Just thinking about it makes you shudder. And, it seems, these catastrophes always occur at the worst possible moment: when you urgently need the computer to work and the data to be available.

An even worse situation is a system failure on the corporate server on which the entire company's business depends. Restoring it to working order could take days or even weeks. Losses from such incidents can be catastrophic for the business over a long period.

System failures are difficult to prevent, but there is a proven solution that minimizes the damage: a reliable program for systematic backups. If you have a backup and backup-and-restore software on hand, restoring a Windows system to a fully operational state is reduced to hours, regardless of whether it is a laptop or a large corporate server.

So backup and restore software is a must for anyone who really cares about their data. There are many such programs on the market, some for individual users and some for large companies.
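To make the idea of a systematic backup concrete, here is a minimal sketch using only the Python standard library. It is not one of the commercial programs mentioned above and does not back up a whole Windows system; it only packs one folder into a timestamped archive and unpacks it again. The function names and the `backup-` file prefix are assumptions chosen for the example.

```python
import shutil
import time
from pathlib import Path

def create_backup(source_dir, backup_dir):
    """Pack source_dir into a timestamped .zip file inside backup_dir."""
    backup_dir = Path(backup_dir)
    backup_dir.mkdir(parents=True, exist_ok=True)
    stamp = time.strftime("%Y%m%d-%H%M%S")
    base_name = backup_dir / f"backup-{stamp}"
    # make_archive appends ".zip" itself and returns the archive's path
    archive = shutil.make_archive(str(base_name), "zip", root_dir=str(source_dir))
    return Path(archive)

def restore_backup(archive, target_dir):
    """Unpack a backup archive into target_dir."""
    shutil.unpack_archive(str(archive), str(target_dir))
```

In this sketch the backup folder should live outside the folder being backed up, ideally on a different disk, so that a single failure does not take both the data and its copy.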


Monitor the performance of SAP, key to business success

Global businesses of all sizes use SAP enterprise applications to manage the complex business processes that underpin the company's success. SAP software covers important areas, including logistics, product development, manufacturing, sales and distribution, services, accounting and controlling, human capital management and quality management. Thousands or even hundreds of thousands of employees use it daily as their primary means of doing their job. Any SAP-related problem can potentially even destroy the company. Therefore, the ability to monitor and properly manage SAP performance from end to end saves businesses time and money, and can even increase their competitiveness.

Because SAP applications are highly customized and depend on complex connectivity between systems deployed worldwide across multiple data centers, extensive networks, application delivery infrastructure and the cloud, it can be challenging to locate and resolve problems when they arise. The SAP application delivery chain also includes third-party integrations, tiers of service providers, firewalls, load balancers and databases, WAN optimization technologies such as Citrix, and a variety of end-user devices that access SAP.

In a stable SAP production environment, the reality is that most of the problems that affect SAP do not come from the application itself. Simply getting to the point where SAP problems can be identified, isolated and resolved quickly, through visualization and reporting, can bring the company a number of tangible and intangible benefits.

If not resolved, SAP performance issues can threaten core business processes, hurt employee productivity and, ultimately, put the organization at financial risk.


IBM’s artificial intelligence comes into the kitchen

Chef Watson, the company's cognitive intelligence system, is able to gather information from 10,000 different recipes to create unique dishes tailored to users.

Imagine a chef capable of storing more than 10,000 recipes, taking into account the flavors and textures of 2,000 ingredients, combining them with the tastes and dietary restrictions of diners and, based on this, instantly proposing more than 16 billion different combinations. Well, that chef exists and is called Watson. IBM's artificial intelligence has evolved a lot since 2011, when in its debut it beat the human contestants on the American television game show Jeopardy!, to the point that only five years later the system is a culinary expert that has even dared to make the jump to the kitchen.

Although Watson still cannot cook, it has managed to compose the first “cognitive menu” in Spain, as was shown in the demonstration organized by IBM in Madrid with the collaboration of the chefs of The Kitchen Club. “When we talk about gastronomy we are also talking about creativity and, in this sense, Watson helps us create all those recipes that have not yet been discovered,” says Elisa Martin, director of Innovation and Technology at IBM.

Watson is the first cognitive intelligence system; that is, unlike traditional computers, its interactions are not programmed. Instead, it is capable of understanding people's natural language and of learning. Thus, the system is able to process structured information (such as databases) and unstructured information (such as cookbooks or internet articles) and, based on that, find the best combination of the ingredients to be used.

In addition, the system has learned which combinations are the most common and which products work best together when cooking. For example, Watson knows that olive oil is a fundamental element of Spanish cuisine, just as soy sauce is typical of Asian dishes; and if the user has indicated some kind of food intolerance, such as to lactose, the system will leave out ingredients containing milk.

This technology, available to the public on the web for anyone to interact with, is very intuitive and easy to use: you only need to select the ingredients to use, the type of dish (drink, dessert or main course) and the user's dietary needs. The system takes care of everything else.

IBM’s big bet

Chef Watson is just one example of the many applications that cognitive intelligence systems can have in our daily lives. This technology, destined to transform the relationship between humans and machines, aims to help people make better decisions thanks to its high processing capacity, which lets it analyze millions of data points in just a few seconds and give an answer in real time. All the more so in a context in which the popularization of smartphones, social networks and the Internet of Things has flooded society with data.

Watson is IBM's great bet for the future, especially since the company abandoned computer manufacturing early in the century, as evidenced by the 1,000 million euros that the US company has invested in the development of artificial intelligence.

Many corporations have already begun to adopt its solutions. One of the sectors that has shown interest is health care. Leading centers such as the New York Genome Center and the Mayo Clinic use IBM Watson to provide treatments customized to each patient, while firms such as Medtronic have developed, based on this technology, an application that can predict a hypoglycemic episode up to three hours before it occurs. Spanish companies such as CaixaBank, which uses Watson as a consultant, and Repsol, which employs cognitive intelligence for better results in hydrocarbon exploration and production, have also incorporated it into their decision-making processes.


“The Internet of Things would not exist without Cloud Computing”: Nicholas Carr

Nicholas Carr is considered one of the world's most influential thinkers on issues related to information technology. A regular contributor to media such as the Financial Times, Strategy & Business, Harvard Business Review and The Guardian, he has written several books that question the role of technology in the development of society, while pointing to good practices for fostering innovation and critical thinking.

Known for his books Does IT Matter? and The Shallows (on how the Internet is changing the way we think), Carr was recognized as one of the 100 most influential people on these issues by eWeek magazine in 2007. He is currently a regular speaker at MIT, Harvard, Wharton and NASA, as well as at numerous companies and institutions worldwide.

The writer was one of the special guests at Claro Tech Summit 2014, organized by Claro Colombia in Cartagena, which brought together national and international experts to discuss the future of new technologies and the main trends that businesses will follow.

During his speech, Carr gave a comprehensive overview of the evolution, present and future of the corporate Cloud, which he compared to what happened with electricity in the early twentieth century. “The changes we are seeing in cloud technologies are repeating something we’ve already seen. Instead of having their own computers, users can now pay a provider to ensure the functioning of their systems, rather than having their own data centers and acquiring licenses for each user,” he said.

Noticintel attended the meeting between the press and the expert; here are some of the answers he gave to journalists from around the country.

Why do people call you the ‘anti-technology’ writer?

Something that is clear to me, having written about technology for the last 20 years, is that it is wrong to keep a narrow view of what is happening. If you look around, computers, in the form of laptops or smartphones, are becoming the center of people's lifestyles. And in that sense, it is possible to see the benefits we get from being able to reach an unlimited amount of information and communicate faster.

However, we have also discovered that there can be problems and that not only good things happen; there are risks with technology. As I addressed in my book The Shallows, one of the downsides is that we have all this information but we have let our systems keep us distracted and interrupt us at any moment, which leaves us with fewer opportunities for deep thought.

In my new book I talk about how we are experiencing a loss of talent and skills, in both our personal and professional lives, when we rely so heavily on computers. So I think it is important to remain skeptical of technology, even as we recognize the great benefits that computers and networks have brought us.

What is the meeting point of concepts such as the Internet of Things and Cloud Computing?

I do not think the Internet of Things, as it is commonly called, could exist without Cloud Computing, as it needs a network, storage and very cheap analytic capabilities to collect and analyze this information in a meaningful way.

If we go back to 2000, we see that there was a lot of noise about 'ubiquitous computing’, on which the concept of the Internet of Things rests: having sensors and processors in everyday objects to monitor their performance. But back then it was impossible, because it was very expensive to put sensors in appliances, as well as to collect and analyze the information.

Thanks to cloud computing and large-scale centralized systems, we can do this analysis. And using sensors, which are now very cheap, we can start building the Internet of Things. The two technologies will eventually progress together as one.

In this case, one of the challenges will be to set limits in terms of privacy and to realize that not all answers can be found by analyzing data, no matter how much information you have. We see many promises in the Internet of Things, but there are also risks in relying on it.

A few years ago, you wrote a highly regarded article on how Google is making us stupid. Is it possible to draw a parallel with how the Cloud is also changing the mindset in business?

In that article I said that technologies create a new environment for us in which we develop our thinking, but that this also has risks. We are constantly interacting with technology; it is our source of information, our means of communication and in many cases our test medium. It encourages us to think more superficially, to be distracted and fickle, and to be less contemplative or reflective, less able to think about things for a long time.

Companies have a similar problem with the cloud, the emergence of smartphones and the expectation that workers be constantly connected, checking their emails and messages frequently. That begins to encourage a short-term focus among employees, managers and companies in general.

And if in our personal lives we begin to think less deeply, companies begin to lose that same ability to generate innovation, creativity and critical thinking. So they need a 'co-mind’ that takes those distractions away. Many companies that encourage constant connectivity among their employees will see their innovation and creative thinking reduced.

Can we use technology to disconnect ourselves from it?

It is a big challenge. As a nonfiction writer, I usually use a word processor to write my books and Google and the Internet to do my research, which is very powerful and beneficial. Personal computers have lots of tools that can be used to increase productivity, but they also have Facebook or Twitter notifications, or email to check. For me this undermines the power of computers as a tool for specific things, for being creative.

Technology merges productive work with personal communications and entertainment, which brings frequent distractions.

One way to address this is personal: turning off the services that are not useful for the job. Unfortunately, we are not very good at this, because we lack discipline. But there is another way, created by programmers, which is to use new interfaces that provide a simple way to eliminate distractions when trying to stay focused. That is, to create barriers between productive functions and entertainment.

Do you consider yourself a technology enthusiast?

I am not a lover of technology at the level of a programmer or a systems engineer, but rather in the strict sense of the word 'techie’: anyone who is really interested in technology and enjoys the latest devices and applications. That is, I do not offer my views as someone outside technology, but as someone who enjoys it. I would rather consider myself a skeptical 'techie’.

If there were no technology, would you still have been a writer?

I probably would have been a writer. I have wanted to be a writer since I was a child, so it is something that is within me. In my case, I write about technology because it is changing and moving so quickly that it piques my curiosity. Unfortunately, we often get carried away by the flow of technology and do not look back to think critically and deeply about how it is changing us, not only in economic but also in social, psychological and artistic terms.

So I think that in that scenario I would have written about science or social phenomena that change the way people live but have not been fully explained.


Eight trends in people management in 2020

These are human resource trends that will dominate the employment landscape in the coming years.

HR management has faced major changes in recent years and it seems that the revolution will not stop. The advance of technology, globalization and generational change are some of the factors behind this transformation. Here are 8 trends in talent management that will dominate the employment landscape in the coming years.

1) Social Learning

This is the learning that occurs in everyday life from observation and imitation. In this area, the online resources and virtual communities will play a key role.

2) Big Data and artificial intelligence

Thanks to technology, you can achieve a better understanding of human behavior. Through Big Data you can access a wealth of information and analyze trends in the labor market. This knowledge can be used by companies to effectively manage their human resource strategies.

3) Community managers

Community managers will assume a new role as transformational leaders who are able to motivate and inspire others with their words and example, and thus bring about positive changes in them, in the organization and in society.

4) Knowmad

This concept combines two terms: know and nomad. Companies will seek to recruit professionals with this profile: a knowledge and innovation worker who is flexible and able to work with anyone, anytime, anywhere.

5) Collective Intelligence

Decision-making will become increasingly collaborative over time. Leadership will cease to be individual.

6) New workplaces

New digital tools provide new working environments, many of them mobile, transforming working practices.

7) Focus on talent

The human resources department should drive a transformation in organizations by prioritizing talent. Companies will go after professionals focused on building a common project.

8) Internal social networks

Internal social networks will become more effective in boosting the sales force.


DATA RESOURCE MANAGEMENT - DATABASES - DESIGN - DATA MODELS - DATA DICTIONARY - TRENDS, PART 2.

DATABASE MODELS.

Just as there are many ways to structure business organizations, there are many ways to structure the data those organizations need. The database management system separates the logical view of the data from the physical one, which means that the developer and the end user do not need to know where and how data is actually stored. In the logical structure of a database, businesses need to consider the characteristics of the data and how it will be accessed.

There are three basic models for structuring a database:

HIERARCHICAL MODEL:

It structures related data rigidly in an inverted “tree”, in which records contain two elements:

A single root or master field, often called the key.

A variable number of subordinate fields that define the rest of the record's data.

As a rule, every field has only one “parent”, while each parent can have many “children”. The biggest advantage is the speed and efficiency with which data searches are performed; the disadvantage is the rigidity of the structure.
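The parent and child rule can be sketched in a few lines of Python. This is only an illustrative toy, not how a real hierarchical database is implemented; the `Record` class and the sample company data are assumptions invented for the example.

```python
class Record:
    """A record in a toy hierarchical store: one parent, many children."""

    def __init__(self, key, data=None):
        self.key = key
        self.data = data
        self.parent = None
        self.children = {}

    def add_child(self, child):
        child.parent = self  # a record has exactly one parent
        self.children[child.key] = child
        return child

    def find(self, *path):
        """Follow a path of keys down the tree from this record."""
        node = self
        for key in path:
            node = node.children[key]
        return node

# Hypothetical data: a company, one department, one employee.
company = Record("ACME")
sales = company.add_child(Record("Sales"))
sales.add_child(Record("E-17", {"name": "Ana"}))
employee = company.find("Sales", "E-17")
```

The lookup is fast because it simply walks down from the root by key, but it only works along the path the tree was designed with, which is where the model shows its rigidity.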

NETWORK MODEL

It creates relationships between data through a linked-list structure in which subordinate records can be linked to more than one “parent”. Each relationship is called a set. Its advantage is that there are no restrictions on the number of relationships for a field; in turn, network databases are very complex, because design and implementation become more complicated as the number of relationships grows.
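A minimal sketch of the idea, assuming a toy in-memory store rather than a real network database: the `NetworkDB` class and the supplier and part names are hypothetical. The point is only that one member record can belong to sets owned by several different "parents".

```python
from collections import defaultdict

class NetworkDB:
    """Toy network-model store: a member record may have several owners."""

    def __init__(self):
        self.members = defaultdict(set)  # owner -> records it owns
        self.owners = defaultdict(set)   # member -> records that own it

    def link(self, owner, member):
        """Add `member` to the set owned by `owner`."""
        self.members[owner].add(member)
        self.owners[member].add(owner)

db = NetworkDB()
db.link("Supplier A", "Part 42")
db.link("Supplier B", "Part 42")  # a second "parent" for the same record
db.link("Project X", "Part 42")   # and a third, of a different kind
```

Every link has to be maintained in both directions, which hints at why design and maintenance get harder as relationships multiply.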

RELATIONAL MODEL

It organizes data in traditional tables of columns and rows, and is commonly used for accounting and financial data. Tables are called relations, a row is called a tuple and a column an attribute. Its advantage is conceptual simplicity, which makes it flexible for end users. Unlike the hierarchical and network models, all data within a table and between tables can be linked, related and compared.
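The following sketch uses Python's built-in `sqlite3` module to show two relations being linked and compared. The table names, columns and amounts are invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # throwaway in-memory database
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE invoices  (id INTEGER PRIMARY KEY,
                            customer_id INTEGER REFERENCES customers(id),
                            amount REAL);
    INSERT INTO customers VALUES (1, 'Ana'), (2, 'Luis');
    INSERT INTO invoices  VALUES (10, 1, 250.0), (11, 1, 100.0), (12, 2, 75.0);
""")

# Each row is a tuple and each column an attribute; the JOIN links the
# two tables through the shared customer id.
totals = conn.execute("""
    SELECT c.name, SUM(i.amount)
    FROM customers AS c
    JOIN invoices AS i ON i.customer_id = c.id
    GROUP BY c.name
    ORDER BY c.name
""").fetchall()
```

Nothing in the table design had to anticipate this particular question, which is the flexibility the relational model offers over fixed parent and child paths.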

OBJECT-ORIENTED DATABASES.

They are also called MULTIMEDIA DATABASES and are managed by special management systems. They handle data including pictures, drawings, documents, maps, video, photos and other media.

They are used especially in the newspaper and television industries and in computer-integrated manufacturing.

CREATION OF DATABASES

CONCEPTUAL DESIGN: An abstract model of the database from the perspective of the user or the business.

PHYSICAL DESIGN: Shows how the database is actually organized on direct-access storage devices.

DATA DICTIONARY

It is the third element of a database management system: a file that stores definitions of data elements and characteristics such as usage, physical representation, ownership, authorization and security.

It also records how a data element (which represents a field) is used in specific systems, and identifies the individuals, business functions, applications and reports that use the item.
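As a rough sketch of what one data dictionary entry might hold, the following uses a Python dataclass. The field names mirror the characteristics listed above, but the class itself, the helper functions and the sample values are all assumptions made for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class DataElement:
    """One entry of a toy data dictionary (all field names are illustrative)."""
    name: str
    usage: str
    physical_representation: str
    owner: str
    authorized_roles: list = field(default_factory=list)
    used_by: list = field(default_factory=list)  # applications and reports

data_dictionary = {}

def register(element):
    """Store the definition under the element's name."""
    data_dictionary[element.name] = element

def where_used(name):
    """List the applications and reports that use a data element."""
    return data_dictionary[name].used_by

register(DataElement(
    name="customer_id",
    usage="Uniquely identifies a customer",
    physical_representation="INTEGER, 4 bytes",
    owner="Sales department",
    authorized_roles=["sales_clerk", "accountant"],
    used_by=["billing application", "monthly sales report"],
))
```

A lookup such as `where_used("customer_id")` answers the practical question a dictionary exists for: what would be affected if this field changed.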

CURRENT TRENDS IN DATABASES

Current applications require database functions that can store, retrieve and process various media, not only text and numbers.

DATA WAREHOUSES: Useful for companies that store increasing amounts of information; they build huge data warehouses organized to give end users access.

MULTIDIMENSIONAL DATABASES: An additional kind of database that allows end users to quickly retrieve and present complex data involving many dimensions.

The benefits of a data warehouse are the ability to obtain data quickly and easily, since the data is located in one place.

Data warehouses allow the storage of metadata, including data summaries that are easier to find, especially with Web tools.

A duplicated subset of the warehouse, called a data mart, is dedicated to a functional or regional area.

DATA STORE FEATURES:

Organization

Consistency

Time variance

Non-volatility

Relationality

Client / Server.

DATA MINING

The name comes from the general notion of mining: the process requires sifting through a huge amount of material, or probing it intelligently, to find exactly where the value of the information in the data resides.

Other names given to this method are knowledge extraction, data archaeology, data pattern processing, data dredging and information harvesting.

Data mining technology can generate new business opportunities by providing these capabilities:

Automatic prediction of trends and behaviors. Data mining automates the process of finding predictive information in large databases. For example, in marketing, data mining can take past data and identify the targets most likely to respond now.

Automated discovery of previously unknown patterns. Data mining tools identify previously hidden patterns in one step. For example, retail sales data can be analyzed to discover seemingly unrelated products that are bought together.
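The retail example can be sketched as a simple co-occurrence count. This is a deliberately naive illustration, not a production mining algorithm; the function name, the support threshold and the sample baskets are assumptions invented for the example.

```python
from collections import Counter
from itertools import combinations

def frequent_pairs(baskets, min_support):
    """Count how often each pair of products shares a basket and keep
    the pairs found in at least `min_support` baskets."""
    counts = Counter()
    for basket in baskets:
        # sorted(set(...)) removes duplicates and gives each pair one canonical order
        for pair in combinations(sorted(set(basket)), 2):
            counts[pair] += 1
    return {pair: n for pair, n in counts.items() if n >= min_support}

# Hypothetical sales data: each list is one customer's purchase.
baskets = [
    ["bread", "milk", "batteries"],
    ["bread", "milk"],
    ["milk", "batteries", "flashlight"],
    ["batteries", "flashlight"],
]
pairs = frequent_pairs(baskets, min_support=2)
```

With these baskets, milk and batteries turn up together as often as bread and milk, which is the kind of unexpected association a miner would want to investigate further.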

CHARACTERISTICS AND OBJECTIVES OF DATA MINING

Usually the data lies deep within the databases, where it has sometimes been for several years.

Data mining tools help extract the ore of corporate information buried in files or in archived public records.

The “miner” is often an end user with little or no programming skill, empowered by mining tools that make it possible to ask ad hoc questions and get answers quickly.

“Digging and sifting” means discovering new data and information.

Data mining produces 5 types of information: associations, sequences, classifications, groupings and forecasts.

DATA MINING TECHNIQUES

Case-based reasoning (CBR): This method uses historical cases to recognize patterns.

Neural computing: A machine learning method in which historical data is examined to recognize patterns, which are then used for forecasting and decision support.

Intelligent agents: The technique used to retrieve information from the Internet or from intranet databases.

APPLICATIONS

Retail and sales, banking, manufacturing and production, brokerage and securities trading, insurance, computer hardware and software, research, government functions, airlines, health care, broadcasting and marketing.



DEFINITION OF THE IT INFRASTRUCTURE

The infrastructure consists of the set of physical devices and software applications required to operate the entire enterprise. However, the infrastructure is also a set of enterprise-wide services, budgeted by management, comprising both human and technical capabilities.

The services that a company is able to provide to its customers, suppliers and employees are a direct function of its IT infrastructure. Ideally, this infrastructure should support the company's business strategy and information systems. New information technologies have a powerful impact on business and IT strategies, as well as on the services that can be offered to customers.

The infrastructure services include the following:

Computing platforms used to provide computing services that connect employees, customers and suppliers within a coherent digital environment, which includes large mainframes, desktop computers and laptops, as well as personal digital assistants (PDAs) and Internet devices.

Telecommunications services that provide data, voice and video connectivity to employees, customers and suppliers.

Data management services that store and manage corporate data and provide capabilities for analyzing it.

Application software services that provide enterprise-wide capabilities, such as enterprise resource planning, customer relationship management, supply chain management and knowledge management systems, which are shared by all business units.

Physical facilities management services that develop and manage the physical installations required for computing, telecommunications and data management services.

IT management services that plan and develop the infrastructure, coordinate IT services among business units, manage accounting for IT spending and provide project management services.

IT standards services that provide the company and its business units with policies that determine which information technology will be used, when and how.

IT education services that provide employees with training in the use of the systems, and managers with training on how to plan and manage IT investments.

IT research and development services that provide the company with research on potential IT projects and investments that could help it differentiate itself in the market.

This “service platform” perspective makes it easier to understand the business value that infrastructure investments provide. For example, it is difficult to understand the real business value of a fully equipped personal computer running at 3 GHz and costing around $1,000, or of a high-speed Internet connection, without knowing who will use it and how. When we look at the services these tools provide, however, their value becomes palpable: the new PC enables a high-cost employee who earns $100,000 a year to connect to all the major enterprise systems and the public Internet. The high-speed Internet service saves this employee about an hour a day of waiting for information from the Internet. Without the PC and the Internet connection, the value of this employee to the company could be halved.
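The arithmetic behind this example can be sketched in a few lines of Python. The salary and the hour saved per day come from the text; the 250 working days per year and the 8-hour day are assumptions made only for illustration, and the function names are hypothetical.

```python
def hourly_cost(annual_salary, work_days=250, hours_per_day=8):
    """Cost of one hour of an employee's time.

    work_days and hours_per_day are illustrative assumptions,
    not figures from the text.
    """
    return annual_salary / (work_days * hours_per_day)


def annual_value_of_time_saved(annual_salary, hours_saved_per_day,
                               work_days=250, hours_per_day=8):
    """Yearly value of the working time a service gives back to the employee."""
    per_hour = hourly_cost(annual_salary, work_days, hours_per_day)
    return per_hour * hours_saved_per_day * work_days


if __name__ == "__main__":
    # Employee earning $100,000 a year who saves one hour a day.
    saved = annual_value_of_time_saved(100_000, 1)
    print(f"Hourly cost: ${hourly_cost(100_000):,.2f}")
    print(f"Value of time saved per year: ${saved:,.2f}")
```

Under these assumptions the hour saved each day is worth far more per year than the roughly $1,000 cost of the PC, which is the point the service perspective makes.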

#information technology services#business intelligence#asset management software#network monitoring#data center#application hosting

Photo

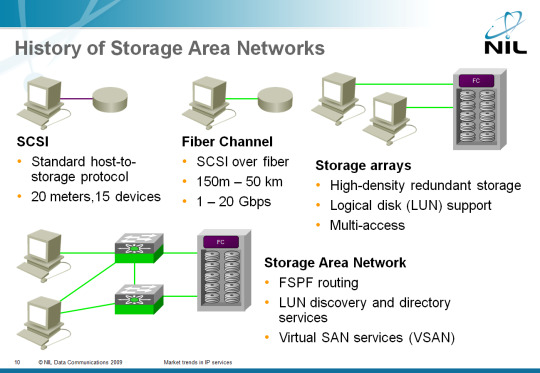

Storage Area Network (SAN) - Comparison

A SAN can be considered an extension of Direct Attached Storage (DAS).

Where DAS has a point-to-point link between the server and its storage, a SAN allows multiple servers to access multiple storage devices over a shared network.

In both SAN and DAS, applications and user programs make their data requests to the file system directly. The difference lies in how the file system obtains the requested data from storage.

In DAS, the storage is local to the file system, whereas in a SAN the storage is remote.

A SAN uses different access protocols such as Fibre Channel and Gigabit Ethernet. At the opposite end is Network-Attached Storage (NAS), where applications make their data requests to file systems remotely using the Server Message Block (SMB/CIFS) and Network File System (NFS) protocols.

Basic structure of a SAN

SANs provide I/O connectivity between host computers and storage devices, combining the benefits of Fibre Channel technologies and network architectures. The result is a more robust, flexible and sophisticated approach that overcomes the limitations of DAS while using the same SCSI logical interface to access the storage.

SANs are composed of three layers:

Host layer: this layer consists mainly of servers, devices or components (HBA, GBIC, GLM) and software (operating systems).

Fiber layer: this layer is made up of the cables (fiber optic) and the SAN hubs and SAN switches that act as the central connection point for the SAN.

Storage layer: this layer is made up of the disk arrays (disk arrays, cache, RAID) and tapes used to store data.

The storage network can be of two types:

Fibre Channel network: the physical network of Fibre Channel devices, which uses Fibre Channel switches and directors and the Fibre Channel Protocol (FCP) for transport (serial SCSI-3 over Fibre Channel).

IP network: uses standard LAN infrastructure with interconnected Ethernet hubs and/or switches. An IP SAN uses iSCSI for transport (serial SCSI-3 over IP).

Fibre Channel

Fibre Channel is a standard that transports data at gigabit speeds and is optimized for storage and other high-speed applications. The rate currently handled is around 1 gigabit per second (200 MBps full-duplex). Fibre Channel will support full-duplex data transfer rates of up to 400 MBps in the near future.
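The relationship between the 1 gigabit line rate and the 200 MBps full-duplex figure can be sketched as follows. This is a rough estimate that assumes the 8b/10b line encoding used by the early Fibre Channel generations (10 bits on the wire for every data byte) and a line rate rounded to a whole number of gigabits; the function name is hypothetical.

```python
def fc_throughput_mbytes(line_rate_gbit, full_duplex=False):
    """Approximate Fibre Channel payload rate in megabytes per second.

    Assumes 8b/10b encoding: each 8-bit data byte travels as 10 bits,
    so the byte rate is the bit rate divided by 10.
    """
    bits_on_wire_per_byte = 10
    one_way = line_rate_gbit * 1000 / bits_on_wire_per_byte
    # Full-duplex counts both directions at once.
    return one_way * 2 if full_duplex else one_way


if __name__ == "__main__":
    for rate in (1, 2):
        print(rate, "Gbit/s:",
              fc_throughput_mbytes(rate), "MB/s one way,",
              fc_throughput_mbytes(rate, full_duplex=True), "MB/s full-duplex")
```

With these assumptions a 1 gigabit link gives about 100 MB/s in each direction, and doubling the line rate doubles both figures.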

There are three Fibre Channel topologies:

Point to point.

Arbitrated loop.

Switched fabric.

Fibre Channel Fabric

The “Fibre Channel Fabric” was designed as a generic interface between each node and the interconnection with that node's physical layer. By adhering to this interface, any Fibre Channel node can communicate over the fabric without specific knowledge of the interconnection scheme between nodes.

Fibre Channel Arbitrated Loop

This topology refers to shared architectures, which support full-duplex speeds of 100 MBps or even up to 200 MBps.

As in the Token Ring topology, multiple servers and storage devices can be added to the same loop segment.

Up to 126 devices can be added to an FC-AL (Fibre Channel Arbitrated Loop).

Since the loop is a shared transport, devices must arbitrate, that is, contend for access to the loop before sending data.
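The idea of arbitrating for a shared loop can be illustrated with a deliberately simplified Python sketch. It assumes that each device is identified by a loop address (AL_PA) and that the lowest address wins when several devices request the loop at once; the fairness rules of the real protocol are left out, and the function names are hypothetical.

```python
MAX_LOOP_DEVICES = 126  # limit quoted in the text for an FC-AL


def arbitrate(requesting_addresses):
    """Return the loop address that wins arbitration, or None if nobody asks.

    Simplification: the numerically lowest address has the highest priority.
    """
    if not requesting_addresses:
        return None
    return min(requesting_addresses)


def run_loop(frames_to_send):
    """Simulate a shared loop in which only one device transmits at a time.

    frames_to_send maps a loop address to the number of frames it wants to send.
    Returns the order in which the frames were transmitted.
    """
    if len(frames_to_send) > MAX_LOOP_DEVICES:
        raise ValueError("too many devices for one arbitrated loop")
    pending = dict(frames_to_send)
    order = []
    while pending:
        winner = arbitrate(pending)   # devices contend for the loop
        order.append(winner)          # the winner sends one frame
        pending[winner] -= 1
        if pending[winner] == 0:
            del pending[winner]       # nothing left to send
    return order


if __name__ == "__main__":
    print(run_loop({0x10: 2, 0x01: 1}))
```

Because access is granted to one device at a time, every other device waits, which is the main difference from a switched fabric.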

Services provided by a fabric

When a device is attached to a fabric, its information is recorded in a database that is used to reach the other devices on the fabric and that also keeps track of changes in the physical topology. The basic services offered within a fabric are the following.

Login service: each node uses this service when it logs in to the fabric (FLOGI). For each communication established between a node and the fabric, a source identifier (S_ID) is sent, and the login service returns a D_ID with the domain and port information where the connection is established.

Name service: all information about the devices logged in to the fabric is registered in a name server by means of a port login (PLOGI). The purpose is to keep all entries recorded in a database of local residents.

Fabric controller: responsible for providing state change notifications to all nodes registered in the fabric, using RSCNs (Registered State Change Notifications).

Management server: the role of this service is to provide a single point of access to the previous three services, based on “containers” called zones. A zone is a collection of nodes defined to reside in a confined space.

SAN-NAS hybrid

Although the need for storage is clear, it is not always clear what the appropriate solution is for a given organization.

Choosing the right solution can be a decision with major implications, but there is no single correct answer: you need to focus on the specific needs and objectives of each end user or organization.

In the case of companies, for example, size is a parameter to consider. For large volumes of information, a SAN solution would be more appropriate. Small companies, on the other hand, tend to use a NAS solution.

However, the two technologies are not mutually exclusive and can coexist in the same solution.

As shown in the graph, there are a number of possible outcomes involving the use of DAS, NAS and SAN technologies in a single solution.

Features

Latency: one of the main distinguishing characteristics of SANs is that they are built to minimize the response time of the transmission medium.

Connectivity: multiple servers can be connected to the same set of disks or tape libraries, making optimal use of storage and backups.

Distance: because SANs are built with fiber optics, they inherit its benefits; for example, SAN devices can be up to 10 km apart without repeaters.

Speed: the performance of any computer system depends on the speed of its subsystems, which is why SANs have increased their transfer rates, from 1 gigabit per second to today's 4 and 8 gigabits per second.

Availability: because of their greater connectivity, SANs allow servers and storage devices to be connected to the SAN more than once, providing redundant “routes” that in turn increase fault tolerance.

Security: security has been a key factor in SANs from the beginning. From the outset, the possibility was recognized that a system might access a device that does not belong to it or interfere with the flow of information, which is why zoning technology was implemented. Zoning isolates a group of elements from the rest to avoid these problems; it can be performed in hardware, software or both, and can group elements by port or by WWN (World Wide Name). An additional technique, implemented at the storage device level, is presentation: making a LUN (Logical Unit Number) accessible only to a predefined list of servers or nodes (identified by their WWN).

Components: the primary components of a SAN are: switches, directors, HBAs, servers, routers, gateways, disk arrays and tape libraries.

Topology: each topology provides different capabilities and benefits. SAN topologies are:

Cascade.

Ring.

Mesh.

Core/edge.

ISL (Inter-Switch Link): connections between switches in a SAN are currently made through “E” ports and can be grouped to form a trunk, which allows greater information flow and fault tolerance.

Architecture: Fibre Channel currently operates under two basic architectures, FC-AL (Fibre Channel Arbitrated Loop) and switched fabric; both schemes can coexist and expand the possibilities of the SAN. The FC-AL architecture can connect up to 127 devices, while a switched fabric can theoretically connect up to 16 million.
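The two access controls described under Security, zoning by WWN and presenting a LUN only to a predefined list of servers, can be sketched as a pair of lookups. This is only an illustration of the logic: the zone names, the WWN values and the function names are hypothetical, and a real fabric enforces these rules in the switches and storage arrays rather than in application code.

```python
# Hypothetical zones: each zone is a set of WWNs allowed to see each other.
ZONES = {
    "zone_db": {"10:00:00:00:c9:00:00:01", "50:06:01:60:00:00:00:0a"},
    "zone_backup": {"10:00:00:00:c9:00:00:02", "50:06:01:60:00:00:00:0b"},
}

# Hypothetical LUN presentation: (storage WWN, LUN number) -> server WWNs allowed.
LUN_MASKS = {
    ("50:06:01:60:00:00:00:0a", 0): {"10:00:00:00:c9:00:00:01"},
}


def same_zone(zones, wwn_a, wwn_b):
    """True if both WWNs are members of at least one common zone."""
    return any(wwn_a in members and wwn_b in members
               for members in zones.values())


def can_access_lun(zones, lun_masks, server_wwn, storage_wwn, lun):
    """A server reaches a LUN only if zoning AND LUN presentation both allow it."""
    if not same_zone(zones, server_wwn, storage_wwn):
        return False
    return server_wwn in lun_masks.get((storage_wwn, lun), set())


if __name__ == "__main__":
    db_server = "10:00:00:00:c9:00:00:01"
    backup_server = "10:00:00:00:c9:00:00:02"
    array = "50:06:01:60:00:00:00:0a"
    print(can_access_lun(ZONES, LUN_MASKS, db_server, array, 0))
    print(can_access_lun(ZONES, LUN_MASKS, backup_server, array, 0))
```

Checking the zone first and the LUN list second mirrors the layering in the text: zoning isolates groups of elements, and presentation narrows access further at the storage device.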

Advantages

Sharing storage simplifies administration and adds flexibility, since cables and storage devices do not need to be moved from one server to another. Note that, except in the SAN file system and clustering model, SAN storage has a one-to-one relationship with the server. Each device (or Logical Unit Number, LUN) in the SAN is “owned” by a single computer or server. By contrast, NAS enables multiple servers to share the same set of files over the network.

A SAN tends to maximize storage utilization, since multiple servers can use the same space reserved for growth.

There are many storage paths: a server can access one or “n” disks, and a disk can be accessed by more than one server, which increases the return on investment (ROI).

The storage area network can provide backup at physically distant locations. Its goal is to lose as little time as possible or, better yet, none at all, so both backup and recovery are performed online.

Another great advantage is that it provides high data availability.

A primary advantage of the SAN is its compatibility with existing SCSI devices, leveraging existing investments and enabling growth from existing hardware. Through the use of modular devices such as hubs, switches, bridges and routers, you can create fully flexible and scalable topologies, securing the investment from day one and, more importantly, taking advantage of costly SCSI devices such as SCSI RAID subsystems, tape libraries or CD-ROM towers, since a Fibre Channel-SCSI bridge can connect them directly to the SAN. Since they are on their own network, they are immediately accessible to all users.

SAN performance is directly related to the type of network used. In the case of a Fibre Channel network, the bandwidth is approximately 100 megabytes/second (1,000 megabits/second) and can be extended by increasing the number of access connections.

SAN capacity can be extended almost limitlessly and can reach hundreds and even thousands of terabytes. A SAN can share data among multiple computers on the network without affecting performance, because SAN traffic is completely separate from user traffic. It is the application servers that act as the interface between the data network (usually Fibre Channel) and the user network (usually Ethernet).

Disadvantages

A SAN is much more expensive than a NAS because it is a complete architecture that uses technology that is still very expensive. Normally, when a company weighs the TCO (Total Cost of Ownership) against the cost per byte, the cost can be justified more easily.

Protocols

The basic protocols used in a storage area network are:

FC-AL

FC-SW

SCSI

FCoE

FC-AL

Fibre Channel Arbitrated Loop protocol, used in hubs; in a hub-based SAN it is the protocol of choice. The protocol controls who can communicate, and only one device can do so at a time.

FC-SW

Switched Fibre Channel protocol, used in switches; in this case several communications can take place simultaneously. The protocol is responsible for connecting communications between devices and avoiding collisions.

SCSI

Used by applications, it is the protocol that lets an application on a computer communicate with the storage device.

In the SAN, SCSI is encapsulated over FC-AL or FC-SW.

SCSI works differently in a SAN than within a server: SCSI was originally designed for communicating with disks inside the same server, over copper cables.

Within a server, SCSI data travels in parallel; in the SAN it travels serialized.

FCoE

It is a computer network technology that encapsulates Fibre Channel frames over Ethernet networks. This allows Fibre Channel networks to use 10 Gigabit Ethernet (or higher speeds) while preserving the Fibre Channel protocol. The specification is part of the FC-BB-5 standard of the T11 committee of the International Committee for Information Technology Standards, published in 2009.

Security

An essential part of the security of storage area networks is the physical location of each and every network component. Building a data center is only half the challenge; deciding where to put the network components (software and hardware) is the other half, and the harder one. Critical network components such as switches, storage arrays and hosts should be in the same data center. With physical security in place, only authorized users are able to make physical or logical topology changes, such as moving a cable to another port, reconfiguring a device's access, or adding or removing devices.

Planning should also take into account environmental issues such as cooling, power distribution and disaster recovery requirements. At the same time, make sure that the IP networks used to manage the various SAN components are secure and not accessible to the entire company. It also makes sense to change the default passwords on network devices in order to prevent unauthorized use.

#data storage devices#network data storage#data storage solution#storage area networks#network area storage

Photo

Terminology in the world of Software

BPM (Business Process Management):

A new category of enterprise software that enables businesses to model, implement and execute sets of interrelated activities (that is, processes) of any kind, whether within a department or across the entity as a whole, with extensions that include customers, suppliers and other agents as participants in the process tasks.

With a BPM tool, a company can easily automate any process, including those relating to Human Resources, Quality Control, Purchasing, Customer Relationship Management (CRM), Supply Chain Management, Risk Management, Sales, Billing and any other kind of process that is specific to that company.

CRM (Customer Relationship Management):

It is the acronym used to define a customer-focused business strategy in which the goal is to gather as much information as possible about customers in order to build long-term relationships and increase their satisfaction. This trend is part of what is called Relationship Marketing, which also considers potential customers and how to build relationships with them. The central idea is to focus on the customer and know them in depth in order to increase the value of the offer and achieve successful results.

Traditional CRM, which involves high installation and infrastructure costs, is being replaced by a new form: CRM on Demand, also known as SaaS (Software as a Service) CRM or Cloud CRM. This new approach allows universal access from any device with an Internet connection and also significantly reduces the high installation and maintenance costs that traditional CRM involves.

Data Warehouse:

A data warehouse is a collection of data oriented to a specific domain (company, organization, etc.) that is integrated, non-volatile and time-variant, and that supports decision-making in the entity in which it is used. It is, above all, a complete record of an organization, beyond transactional and operational information, stored in a database designed to facilitate the efficient analysis and dissemination of data.

ERP (Enterprise Resource Planning):

Enterprise resource planning (ERP) systems are management information systems that integrate and manage many of the business activities associated with the production and distribution operations of a company that produces goods or services.

ERP systems typically handle a company's manufacturing, logistics, distribution, inventory, shipping, billing and accounting in a modular way. However, ERP software can also take part in the control of many other business activities, such as sales, deliveries, payments, production, inventory management, quality management and human resource management.

Help Desk:

A Help Desk, or Service Desk, is a set of technological and human resources that provides services with the ability to manage and resolve all possible incidents in an integrated way, along with handling requests related to information and communications technology (ICT).

Intranet:

Think of it as an internal website, designed to be used within the limits of the company. What distinguishes an intranet from an Internet site is that intranets are private and the information they hold is intended to help workers generate value for the company.

SLA (Service Level Agreement):

A service-level agreement, or SLA, is a written contract between a service provider and its client that sets the agreed level of quality for that service. The SLA is a tool that helps both parties reach a consensus on the level of service quality in areas such as response time, availability hours, available documentation, staff assigned to the service, etc.

An SLA essentially establishes the relationship between the two parties: supplier and customer. It identifies and defines the customer's needs while keeping service expectations in line with the supplier's capacity, provides a framework of understanding, simplifies complex issues, reduces areas of conflict and encourages dialogue in the event of a dispute.
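As an illustration of how an availability target in a service-level agreement turns into something measurable, the following sketch converts a percentage into the downtime it allows and checks an observed figure against it. The 30-day period and the 99.9% target are assumptions chosen for the example, and the function names are hypothetical.

```python
def allowed_downtime_minutes(availability_pct, period_days=30):
    """Minutes of downtime permitted in the period for a given availability target."""
    total_minutes = period_days * 24 * 60
    return total_minutes * (100 - availability_pct) / 100


def meets_sla(availability_pct, observed_downtime_minutes, period_days=30):
    """True if the observed downtime stays within what the agreement allows."""
    return observed_downtime_minutes <= allowed_downtime_minutes(
        availability_pct, period_days)


if __name__ == "__main__":
    target = 99.9  # hypothetical availability target agreed with the client
    budget = allowed_downtime_minutes(target)
    print(f"Allowed downtime for {target}% over 30 days: {budget:.1f} minutes")
    print("40 minutes of downtime meets the target:", meets_sla(target, 40))
    print("60 minutes of downtime meets the target:", meets_sla(target, 60))
```

Expressing the target as a downtime budget gives both parties the same number to measure against, which is the kind of shared reference the agreement is meant to provide.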

Photo

Fundamentals of cloud technology and its associated services.

- Basic cloud concepts.

According to the definition given by NIST (the National Institute of Standards and Technology), cloud computing is a technology model that enables ubiquitous, convenient, on-demand network access to a shared pool of configurable computing resources (e.g. networks, servers, storage, applications and services) that can be rapidly provisioned and released with minimal management effort or interaction with the service provider.

We will refer to the cloud as a service that can be requested on demand over the network and that usually gives us access to Information Technology (IT) infrastructure facilities such as software, backups, a desktop and so on, giving us an agile, “tailored” IT solution with easy and rapid deployment. All this comes without having to purchase the hardware and software resources and their associated licenses, so that we can use these facilities without having to operate the resources ourselves, whether they are hardware or software. With such a broad definition, we can take any of these services and see that there are many key aspects that should be evaluated for a successful implementation.

The technology that makes this new scenario possible is virtualization, which decouples hardware from software, making it possible to replicate the user’s environment without having to install and configure all the software each application requires. Virtual machines make it simple to distribute workloads, giving rise to a new paradigm: cloud computing.

To understand quickly and easily what is central to the concept of cloud computing, a number of key features that differentiate it from traditional systems are used:

Pay per use. Billing is based on consumption.

Abstraction. The computing resources contracted from the provider are isolated from the entity's own computer equipment.

Agile scalability. The functions available increase or decrease dynamically depending on the needs of the customer.

Multi-user. All users consume a given service or resource from a single technology platform, adapted to their needs.

On-demand self-service. The user gets access to computing capabilities in the cloud automatically, without having to contact the vendor.

Unrestricted access. The contracted services can be accessed ubiquitously, anywhere and at any time, from any device with Web access.
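Two of the features above, scalability and pay per use, can be illustrated together with a small sketch: the number of instances follows the load hour by hour, and the bill counts only the instance-hours actually consumed. The per-instance capacity, the hourly rate and the load figures are invented for the example, and the function names are hypothetical.

```python
import math


def instances_needed(requests_per_second, capacity_per_instance):
    """Smallest number of instances that covers the load (at least one)."""
    return max(1, math.ceil(requests_per_second / capacity_per_instance))


def usage_bill_cents(hourly_load, capacity_per_instance, cents_per_instance_hour):
    """Bill for a series of hours, charging only for the instances used each hour."""
    instance_hours = sum(instances_needed(load, capacity_per_instance)
                         for load in hourly_load)
    return instance_hours * cents_per_instance_hour


if __name__ == "__main__":
    load = [50, 120, 400, 90]   # hypothetical requests per second, hour by hour
    capacity = 100              # hypothetical requests per second per instance
    rate = 10                   # hypothetical price in cents per instance-hour
    print([instances_needed(x, capacity) for x in load])
    print(usage_bill_cents(load, capacity, rate), "cents")
```

A fixed installation sized for the peak hour would run four instances for all four hours; here the extra capacity is paid for only during the hour that needs it.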

The cloud computing solutions available on the market can be classified along several dimensions, which we will see during the course. For the moment, note that they can be categorized:

By the level at which the service is provided: IT infrastructure, platform or software.

By form of deployment (forms of integration and operation), distinguishing between public cloud, private cloud and hybrid cloud.

Photo

Terminology and cloud actors

To understand how cloud computing works, it is essential to understand the three levels at which the service can be provided.

Infrastructure as a Service (IaaS). This is the lowest level of the service stack. It is responsible for delivering a complete processing infrastructure to the user on demand. The user has one or more virtual machines in the cloud with which they can, for example, increase the size of a hard drive in a few minutes, get more processing power or routers, and pay only for the resources they use. This level can be seen as an evolution of the Virtual Private Servers currently offered by hosting companies.

Platform as a Service (PaaS). This is the intermediate level. It is responsible for delivering a complete, fully functional processing platform to the user, without the need to purchase and maintain hardware and software. For example, a web developer needs a web server to serve their pages, a database server and an operating system. This level is responsible for providing all these services.

Software as a Service (SaaS). This level is responsible for delivering software as a service over the Internet whenever the user demands it. It is the highest level of the stack and allows access to the application through a web browser, without installing additional software on the user's computer or mobile phone. Office suites that can be accessed online are a good example of this level.

Based on the service delivery model, cloud computing systems can be grouped into the following main categories: public, private and hybrid.

Public clouds are those in which all control of resources, processes and data is in the hands of third parties. Multiple users can use web services that are processed on the same server and can share disk space or other network infrastructure with other users.

Private clouds are those created and managed by a single entity that decides where and how processes run within the cloud. They are an improvement in terms of the security and privacy of data and processes, since sensitive data remains in the organization's own IT infrastructure while the entity controls which user accesses each cloud service. However, the entity remains responsible for purchasing, maintaining and managing all of the cloud's hardware and software infrastructure.

In hybrid clouds the previous two models coexist. For example, a company may use a public cloud to host its web server while keeping its database server in its private cloud. A communication channel is thus established between the public and private clouds, through which sensitive data remains under strict control while the web server is managed by a third party. This solution reduces the complexity and cost of the private cloud.

A fourth service delivery model is also considered: community clouds, which are shared between several organizations that form a community with similar principles (mission, security requirements, policies and compliance requirements). A community cloud can be managed by the community or by a third party. This model can be seen as a variation on the private cloud model.

Other acronyms that should be familiar to us:

API: Application Programming Interface

CDN: Content delivery network

IP: Internet Protocol

ISP: Internet Service Provider

IT: Information Technology

SSL: Secure Sockets Layer

SOA: Service-oriented architecture

URI: Uniform Resource Identifier

WSDL: Web services description language

DSA: Digital Signature Algorithm

XML: Extensible Markup Language

In addition, several agents are involved in the business: the enabler, the supplier, the broker, the subscriber … as well as those responsible for implementing solutions. Service-oriented cloud computing provides a number of advantages to a wide range of groups, economic and social sectors and agents.

Photo

Technology?

A new vision of the future is emerging in the heart of the technology industry in Japan: ubiquity. If giants like Sony and Matsushita, and the Japanese government, get their way, that vision will spread to the rest of the world.

The concept has to do with a network of devices that share information with each other. But for the experts, ubiquity describes a grand vision in which everything (plants, people and even garbage) is connected to a large network that can be accessed at any time and place.

Let's put wireless devices in everything that moves, says DoCoMo president Keiji Tachikawa. Not only on people but also on pets, cars and computers.

So far, ubiquity has mainly meant that people have access to data, communicating via cell phones or car navigation systems. Matsushita, for example, sells DVD recorders that can be programmed to record a show from a cell phone.

The company has also started limited sales of a device that makes it possible to operate the washing machine or air conditioner from a cell phone. Sony, meanwhile, is developing a microchip that will allow consumer electronics to transmit data to each other more easily.

Hitachi and other Japanese giants are producing tiny chips equipped with radio-frequency technology that can be embedded in banknotes or people to keep track of the whereabouts and identity of an object or a person.

These chips are pre-programmed with an identification number, and so far they have been tested in items such as clothing labels for inventory control and in fruits and vegetables to track their journey from the farm to the supermarket.

Over time, chips embedded in milk cartons, for example, could alert the refrigerator when the expiration date arrives.

But these dreams of ubiquity are not limited to Japan. In fact, the concept is believed to have originated in the US in 1988, when Xerox researcher Mark Weiser coined the term ubiquitous computing to describe how computers would become an intrinsic part of our existence.

Although the concept is still relatively unknown in the rest of the world, the Japanese government has already launched projects on its future. Proponents say that because Japanese companies lead in many of the technologies needed to interconnect devices, ubiquity could spark a resurgence of Japanese companies.

This is the Japanese paradigm of information technology, says Teruyasu Murakami of the Nomura Research Institute.

Photo

Technology is transforming Colombia

How? He began frequenting the Vive Digital Kiosk in his village, and through the internet he was able to research everything related to his trade. He now has more cows, and his profits have increased significantly since he became the exclusive distributor for the company Dairy Cocunubo. This is the testimony of one of the thousands of Colombians who are transforming their lives and those of their families through technology thanks to Vive Digital, the state policy launched by President Juan M. Santos in 2010, whose goal is to expand internet access in order to reduce poverty and create jobs.

Five years later, it is a reality that citizens in our countryside and cities access the Internet to study online, telecommute, conquer new markets and interact with the government, among many other things. In 2010, 93 goals were defined as part of Vive Digital, and they were met in full in 2014. The cumulative results are spectacular and positively affect all private and public activities in the country. Colombia has 1,078 municipalities connected with broadband internet, 9 submarine cables for international connectivity, 10.1 million internet connections, 74% of MSMEs connected and 50% of households connected. With the construction of the infrastructure complete, the priority now is to fill the information superhighway with applications and content that address the needs of our people.

This is the transformation that the national government dreamed of five years ago. Today we see that technology has enabled a virtuous and irreversible process that strengthens education, the economy and the functioning of the state in our country: a concrete process, with visible results, that gives us reason to believe in a future of progress and enormous change for Colombia.

Photo

A trip to the dark web, the corner of the crime on the network

There is a dark side to the web: a set of sites that criminals use to support their illegal activities. It goes by several names: darknet, dark web, dark internet or hidden internet.

The dark internet became famous in 2013, when it was discovered that in one of its corners there was a store that sold narcotics, weapons, child pornography and even the services of professional killers. It was called Silk Road. It fell as a result of an FBI operation. The dramatic discovery exposed an underworld taking shape on the web, a paradise for criminals where they could easily carry out their misdeeds undetected by the authorities.

A study by the University of Portsmouth (UK) revealed that four out of five visits (80 percent) to the hidden network go to portals with pedophile content.

The remaining visits go to sites focused on drugs, smuggling, cybercrime and weapons, among others. Getting to the darkest area is difficult for ordinary users. Portals similar to Silk Road have proliferated in recent years. The addresses of the sites used by criminals are difficult to obtain. Usually they are shared among people involved with groups outside the law and are not distributed publicly.

These addresses are characterized by ending with the suffix '.onion' instead of '.com' or '.net'. Some banks have tried to list and categorize dark web sites, but it has proved a futile effort, as most links stop working within a short time.

It is estimated that the deep web receives about 2 million visitors from around the world every day. In Colombia it is still not widely used. Canadian Eric Jardine, a researcher at the Center for International Governance Innovation, states that 11 million instances were reported from the country, an average of 916,000 per month and 30,000 a day. "It is not much compared with the international context, but it was a significant leap over previous years, when hardly any activity was recorded in that realm of the virtual world," he explains.

One of the factors that drove the use of the dark web in recent years was the revelations of Edward Snowden about monitoring by the US National Security Agency at various levels and sectors of society. This stoked citizens' interest in protecting their information.

The dark web is one of the three parts into which the virtual universe has been divided, as Jardine explained to Technosphere: "The first layer of the web, the surface, is where users spend most of their time. It is a small portion of the network of existing sites; it contains everything that is accessible through search engines like Google or Yahoo."

"Then we find the deep web, which is 500 times larger than the surface web. It includes the back end of electronic banking sites, paywalls and company intranet systems. Finally we come to the dark web, a sort of subdivision of the deep web. It consists of between 30,000 and 80,000 sites. What defines this layer is that everything hosted there is browsed anonymously and its contents are of questionable legality," he adds.

The dark web, constantly evolving

The favorite browser for accessing the deep layers of the web is Tor, but there are other platforms, such as Freenet, that also offer privacy to users.

These browsers can be used to visit both surface web sites, such as Facebook, and dark web sites. The great majority of users (about 90 percent) do not turn to Tor and similar tools to immerse themselves in areas of crime, but to protect their identity when they go online.

Most sites created on the dark web do not stay online for more than a week. This hinders the work of the authorities.

"Some police groups have adopted successful strategies to catch criminals, but for most it still involves a major, exhausting effort with meager reward," said Pablo Ramos, a computer security expert at Eset, in an interview with this publication.

Breaking Tor's shield is a major challenge. "There is evidence that some scientists have deciphered blocks of traffic flowing through the deep web. They analyze the traffic entering and leaving the network and correlate it with other variables to guess the identity of those entering. Police forces, in the course of an operation, have even unmasked the identity of certain users. They managed to infect certain servers with malware," added the expert from the Center for International Governance Innovation.

However, when those responsible for the Tor Project discover these security breaches in the system, they release updates to close the flaw. At certain times there have been cracks in Tor's armor, but the technology has changed to remain anonymous and secure.

Groups like Al-Qaeda or the Islamic State have used this platform as a means to rally their members.

"It is clear that not all crimes are committed in that layer of the web. Many of them, such as money laundering, are still orchestrated on the surface web, but we have seen an increase in the use of the deep web because illegal groups have been reaping its benefits," said Jardine.

"Some cybercriminals create sites on the dark web that are used to raise money for a limited time. They design a malicious program, called 'ransomware', which encrypts the contents of a cell phone or computer. In exchange for restoring access to the data, the attacker demands payment of a sum, a sort of 'ransom' for releasing the captured information. The transaction is completed on a page hosted on the hidden internet, using the virtual currency bitcoin in most cases," warned Ramos, of Eset.

It is also used for benign purposes

The deep web started out as a network created by the US army to provide a secure, untraceable communication platform for its soldiers. "In the nineties, the code was released so that anyone could access and replicate it, and the Tor Project was founded," said Jardine.

The Tor Project is linked to the government of the United States. Not directly, but the government provides money for its maintenance. "The logic behind keeping a network with these features alive is that it can be used for beneficial purposes under certain circumstances," said Jardine.

"Today it is used to host legal sites related to certain types of information, such as everything about UFO sightings and similar content. It is also a tool used by certain citizens of oppressive regimes to avoid censorship and to ensure the safety and anonymity of their online activities," said Pablo Ramos, a computer security expert at Eset.

Trends

1. Its use is growing apace. It is a significant problem for the authorities. Finding a cybercriminal on the dark web is as difficult as finding a needle in a haystack. And that haystack gets bigger every day.

2. Some governments condemn the use of Tor and similar systems. Just as debates have arisen over the desirability of supporting the growth of messaging platforms with encryption technologies, so it will be with the deep web: it will begin to face challenges from large global organizations.

3. Its use by criminals will increase. "It's not difficult to foresee. Its popularity among illicit organizations will grow in the coming years, and this is a challenge for governments," says Jardine.
