
Need for Reliability of LLM Outputs

The Reliability Imperative: Ensuring AI Systems Deliver Trustworthy and Consistent Results

TL;DR

Large Language Models (LLMs) have revolutionized natural language processing by enabling seamless interaction with unstructured text and integration into human workflows for content generation and decision-making. However, their reliability—defined as the ability to produce accurate, consistent, and instruction-aligned outputs—is critical for ensuring predictable performance in downstream systems.

Challenges such as hallucinations, bias, and variability between models (e.g., OpenAI’s models vs. Anthropic’s Claude) highlight the need for robust design approaches. Emphasizing platform-based models, grounding outputs in verified data, and enhancing modularity can improve reliability and foster user trust. Ultimately, LLMs must prioritize reliability to remain effective and avoid obsolescence in an increasingly demanding AI landscape.

Introduction

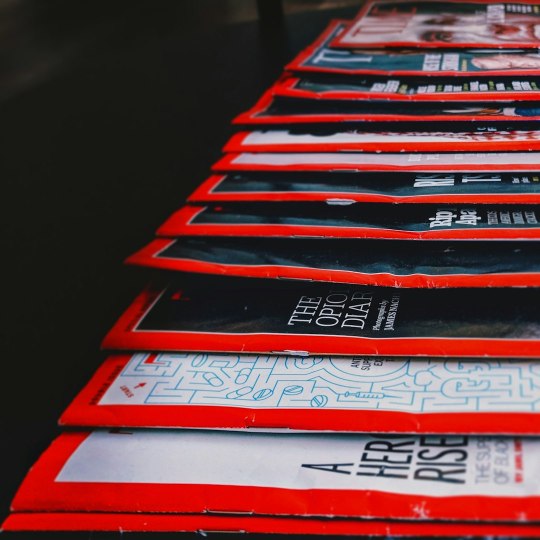

Photo by Nahrizul Kadri on Unsplash

Large Language Models (LLMs) represent a groundbreaking advancement in artificial intelligence, fundamentally altering how we interact with and process natural language. These systems have the capacity to decode complex, unstructured text, generate contextually accurate responses, and seamlessly engage with humans through natural language. This capability has created a monumental shift across industries, enabling applications that range from automated customer support to advanced scientific research. However, the increasing reliance on LLMs also brings to the forefront the critical challenge of reliability.

Reliability, in this context, refers to the ability of these systems to consistently produce outputs that are accurate, contextually appropriate, and aligned with the user’s intent. As LLMs become a cornerstone in workflows involving content generation, data analysis, and decision-making, their reliability determines the performance and trustworthiness of downstream systems. This article delves into the meaning of reliability in LLMs, the challenges of achieving it, examples of its implications, and potential paths forward for creating robust, reliable AI systems.

The Transformative Power of LLMs

Natural Language Understanding and Its Implications

At their core, LLMs excel in processing and generating human language, a feat that has traditionally been considered a hallmark of human cognition. This capability is not merely about understanding vocabulary or grammar; it extends to grasping subtle nuances, contextual relationships, and even the inferred intent behind a query. Consider a scenario where a marketing professional needs to generate creative ad copy. With an LLM, they can simply provide a description of their target audience and product, and the model will generate multiple variations of the advertisement, tailored to different tones or demographics. This capability drastically reduces time and effort while enhancing creativity.

Another example is the ability of LLMs to consume and interpret unstructured text data, such as emails, meeting transcripts, or legal documents. Unlike structured databases, which require predefined schemas and specific formats, unstructured text is inherently ambiguous and diverse. LLMs bridge this gap by transforming chaotic streams of text into structured insights that can be readily acted upon. This unlocks possibilities for improved decision-making, especially in fields like business intelligence, research, and customer service.

Integration Into Human Pipelines

The real potential of LLMs lies in their ability to seamlessly integrate into human workflows for both content generation and consumption. In content generation, LLMs are already revolutionizing industries by creating blog posts, reports, technical documentation, and even fiction. For instance, companies like OpenAI have enabled users to create entire websites or software prototypes simply by describing their requirements in natural language. On the other end, content consumption is equally transformed. LLMs can digest and summarize lengthy reports, extract actionable insights, or even translate complex technical jargon into plain language for non-experts.

These applications position LLMs not just as tools but as collaborators in human content pipelines. They enable humans to focus on higher-order tasks such as strategy and creativity while delegating repetitive or information-intensive tasks to AI.

The Critical Role of Reliability

Photo by Karim MANJRA on Unsplash

Defining Reliability in the Context of LLMs

The growing adoption of LLMs across diverse applications necessitates a deeper understanding of their reliability. A reliable LLM is one that consistently produces outputs that meet user expectations in terms of accuracy, relevance, and adherence to instructions. This is particularly crucial in high-stakes domains such as healthcare, law, or finance, where errors can lead to significant consequences. Reliability encompasses several interrelated aspects:

Consistency: Given the same input, the model should generate outputs that are logically and contextually similar. Variability in responses for identical queries undermines user trust and downstream system performance.

Instruction Adherence: A reliable model should follow user-provided instructions holistically, without omitting critical details or introducing irrelevant content.

Accuracy and Relevance: The information provided by the model must be factually correct and aligned with the user’s intent and context.

Robustness: The model must handle ambiguous or noisy inputs gracefully, producing responses that are as accurate and coherent as possible under challenging conditions.
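The consistency criterion above can be made measurable in a rough way: send the same prompt several times and check how much the responses agree. The sketch below is only illustrative. It uses token-set (Jaccard) overlap as a crude stand-in for "logically and contextually similar", and the function names and sample responses are assumptions, not part of any particular library.

```python
from itertools import combinations


def jaccard(a: str, b: str) -> float:
    """Token-set overlap between two strings, in [0, 1]."""
    ta, tb = set(a.lower().split()), set(b.lower().split())
    if not ta and not tb:
        return 1.0  # two empty outputs are trivially identical
    return len(ta & tb) / len(ta | tb)


def consistency_score(outputs: list[str]) -> float:
    """Mean pairwise Jaccard similarity across repeated outputs."""
    pairs = list(combinations(outputs, 2))
    if not pairs:
        return 1.0  # a single output cannot disagree with itself
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


# Hypothetical responses to one prompt, collected from repeated calls.
samples = [
    "Paris is the capital of France",
    "The capital of France is Paris",
    "Lyon is the capital of France",
]
print(round(consistency_score(samples), 3))
```

Word overlap ignores word order and meaning, so a score like this is best treated as a cheap early warning. A production check would more plausibly compare embeddings or extracted answers.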

Why Reliability Matters

The implications of reliability extend beyond individual interactions. In systems that use LLM outputs as inputs for further processing—such as decision-support systems or automated workflows—unreliable outputs can cause cascading failures. For instance, consider an LLM integrated into a diagnostic tool in healthcare. If the model inaccurately interprets symptoms and suggests an incorrect diagnosis, it could lead to improper treatment and jeopardize patient health.

Similarly, in content generation, unreliable outputs can propagate misinformation, introduce biases, or create content that fails to meet regulatory standards. These risks underscore the importance of ensuring that LLMs not only perform well under ideal conditions but also exhibit robustness in real-world applications.

Challenges in Achieving Reliability

Photo by Pritesh Sudra on Unsplash

Variability Across Models

One of the foremost challenges in ensuring reliability stems from the variability across different LLMs. Versatility is a strength, but it often comes at the cost of predictability. For example, OpenAI’s models are often characterized as highly creative and adaptable, which helps them handle diverse tasks. However, this flexibility can produce outputs that deviate from user instructions, even for similar inputs. In contrast, models like Claude are often reported to adhere more consistently to instructions, albeit at the expense of some versatility.

This trade-off echoes the No Free Lunch theorem, which states that no single algorithm outperforms all others when performance is averaged across every possible problem: gains on one class of tasks are paid for on another. The tension between flexibility and predictability poses a critical challenge for developers, who must balance the needs of diverse user bases with the demand for reliable outputs.

Hallucinations and Factual Accuracy

A significant obstacle to LLM reliability is their propensity for hallucinations, or the generation of outputs that are contextually plausible but factually incorrect. These errors arise because LLMs lack an inherent understanding of truth; instead, they rely on patterns and correlations in their training data. For instance, an LLM might confidently assert that a fictional character is a historical figure or fabricate scientific data if prompted with incomplete or misleading input.

In high-stakes domains, such as healthcare or law, hallucinations can have severe consequences. A model used in medical diagnosis might generate plausible but incorrect recommendations, potentially endangering lives. Addressing hallucinations requires strategies like grounding the model in real-time, verified data sources and designing systems to flag uncertain outputs for human review.

Bias in Training Data

Bias is another pervasive issue that undermines reliability. Since LLMs are trained on extensive datasets sourced from the internet, they inevitably reflect the biases present in those datasets. This can lead to outputs that reinforce stereotypes, exhibit cultural insensitivity, or prioritize dominant narratives over marginalized voices.

For example, a hiring tool powered by an LLM might inadvertently favor male candidates if its training data contains historical hiring patterns skewed by gender bias. Addressing such issues requires proactive efforts during training, including the curation of balanced datasets, bias mitigation techniques, and ongoing monitoring to ensure fairness in outputs.

Robustness to Ambiguity

Real-world inputs are often messy, ambiguous, or incomplete, yet reliable systems must still provide coherent and contextually appropriate responses. Achieving robustness in such scenarios is a major challenge. For instance, an ambiguous prompt like “Summarize the meeting” could refer to the most recent meeting in a series, a specific meeting mentioned earlier, or a general summary of all meetings to date. An LLM must not only generate a plausible response but also clarify ambiguity when necessary.
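A small sketch of the "clarify instead of guessing" behavior for the meeting example: the wrapper below acts only when the request names exactly one known meeting and otherwise returns a clarifying question. The `resolve_reference` name, the dictionary shape, and the substring matching are assumptions made for illustration. A real system would rely on the model or a retrieval step to resolve references.

```python
def resolve_reference(request: str, candidates: list[str]) -> dict:
    """Pick a meeting only when the request names exactly one candidate."""
    named = [c for c in candidates if c.lower() in request.lower()]
    if len(named) == 1:
        return {"action": "summarize", "target": named[0]}
    # Zero or several matches: ask rather than guess.
    options = named or candidates
    question = "Which meeting do you mean: " + ", ".join(options) + "?"
    return {"action": "clarify", "question": question}


# Hypothetical meeting titles known to the system.
meetings = ["budget review", "sprint planning"]
print(resolve_reference("Summarize the meeting", meetings))
print(resolve_reference("Summarize the sprint planning meeting", meetings))
```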

Robustness also involves handling edge cases, such as noisy inputs, rare linguistic patterns, or unconventional phrasing. Ensuring reliability under these conditions requires models that can generalize effectively while minimizing misinterpretation.

Lack of Interpretability

The “black-box” nature of LLMs poses a significant hurdle to reliability. Users often cannot understand why a model produces a specific output, making it challenging to identify and address errors. This lack of interpretability also complicates efforts to debug and improve models, as developers have limited visibility into the decision-making processes of the underlying neural networks.

Efforts to improve interpretability, such as attention visualization tools or explainable AI frameworks, are critical to enhancing reliability. Transparent models enable users to diagnose errors, provide feedback, and trust the system’s outputs more fully.

Scaling and Resource Constraints

Achieving reliability at scale presents additional challenges. As LLMs are deployed across diverse environments and user bases, they must handle an ever-growing variety of use cases, languages, and cultural contexts. Ensuring that models perform reliably under these conditions requires extensive computational resources for fine-tuning, monitoring, and continual updates.

Moreover, the computational demands of training and deploying large models create barriers for smaller organizations, potentially limiting the democratization of reliable AI systems. Addressing these constraints involves developing more efficient architectures, exploring modular systems, and fostering collaboration between researchers and industry.

The Challenge of Dynamic Contexts

Real-world environments are dynamic, with constantly evolving facts, norms, and expectations. A reliable LLM must adapt to these changes without requiring frequent retraining. For instance, a news summarization model must remain up-to-date with current events, while a customer service chatbot must incorporate updates to company policies in real time.

Dynamic grounding techniques, such as connecting LLMs to live databases or APIs, offer a potential solution but introduce additional complexities in system design. Maintaining reliability in such dynamic contexts demands careful integration of static training data with real-time updates.

Building More Reliable LLM Systems

Prioritizing Grounded Outputs

A critical step toward reliability is grounding LLM outputs in verified and up-to-date data sources. Rather than relying solely on the model’s static training data, developers can integrate external databases, APIs, or real-time information retrieval mechanisms. This ensures that responses remain accurate and contextually relevant. For instance, combining an LLM with a knowledge graph can help verify facts and provide citations, reducing the likelihood of hallucinations.
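As a minimal sketch of this idea, the snippet below looks up the best-matching entry in a tiny in-memory knowledge base and returns it with a citation, declining to answer when nothing matches well enough. The `KNOWLEDGE_BASE` contents, the source labels, the `retrieve` and `grounded_answer` names, and the word-overlap scoring are all illustrative assumptions. A real deployment would more likely use a search index or embedding store and pass the retrieved text to the model instead of returning it directly.

```python
from typing import Optional

# Hypothetical verified facts, each tagged with the source it came from.
KNOWLEDGE_BASE = [
    {"text": "Refunds are available within 30 days of purchase.", "source": "policy-doc-1"},
    {"text": "Support is open Monday to Friday, 9am to 5pm.", "source": "policy-doc-2"},
]


def _tokens(text: str) -> set[str]:
    """Lowercased words with surrounding punctuation stripped."""
    return {w.strip(".,?!").lower() for w in text.split()}


def retrieve(query: str, min_overlap: int = 2) -> Optional[dict]:
    """Return the entry sharing the most words with the query, or None."""
    q = _tokens(query)
    best = max(KNOWLEDGE_BASE, key=lambda e: len(q & _tokens(e["text"])))
    if len(q & _tokens(best["text"])) < min_overlap:
        return None  # nothing matches well enough to ground an answer
    return best


def grounded_answer(query: str) -> str:
    """Answer only from the knowledge base, always citing the source."""
    entry = retrieve(query)
    if entry is None:
        return "No verified source found; escalating to a human."
    return f'{entry["text"]} [source: {entry["source"]}]'


print(grounded_answer("How many days do I have for refunds?"))
print(grounded_answer("What is the weather today?"))
```

The design choice worth noting is the refusal path: when retrieval fails, the system says so instead of letting the model improvise.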

Applications like search engines or customer support bots can benefit immensely from such grounding. By providing links to reliable sources or contextual explanations for generated outputs, LLMs can increase user trust and facilitate transparency.

Emphasizing Modular System Design

Building modular LLMs can address the challenge of balancing versatility with task-specific reliability. Instead of deploying a monolithic model that attempts to handle every use case, developers can train specialized modules optimized for distinct tasks, such as translation, summarization, or sentiment analysis.

For example, OpenAI’s integration of plugins for tasks like browsing and code execution exemplifies how modularity can enhance both reliability and functionality. This approach allows core models to focus on language understanding while delegating domain-specific tasks to dedicated components.
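One way to picture this kind of modularity is a thin router that dispatches each request to a dedicated component. The sketch below is not how any vendor implements plugins. The three handler functions are toy stand-ins, and the `MODULES` registry and `route` function are hypothetical names chosen for illustration.

```python
from typing import Callable


# Toy stand-ins; in practice each might wrap a separate model or tool.
def summarize(text: str) -> str:
    """Return the first sentence as a naive summary."""
    return text.split(".")[0].strip() + "."


def translate(text: str) -> str:
    """Placeholder for a translation component."""
    return f"[translation of: {text}]"


def classify_sentiment(text: str) -> str:
    """Keyword-based placeholder for a sentiment component."""
    return "positive" if "good" in text.lower() else "neutral"


MODULES: dict[str, Callable[[str], str]] = {
    "summarize": summarize,
    "translate": translate,
    "sentiment": classify_sentiment,
}


def route(task: str, text: str) -> str:
    """Dispatch to the module registered for the task, failing loudly otherwise."""
    handler = MODULES.get(task)
    if handler is None:
        raise ValueError(f"No module registered for task {task!r}")
    return handler(text)


print(route("summarize", "First point. Second point."))
```

Because each module sits behind the same interface, it can be tested, monitored, and replaced independently of the others.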

Reinforcement Learning from Human Feedback (RLHF)

RLHF is a powerful method for aligning LLMs with user expectations and improving reliability. By collecting feedback on outputs and training the model to prioritize desirable behaviors, developers can refine performance iteratively. For instance, models like ChatGPT and Claude have used RLHF to improve instruction-following and mitigate biases.
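The article does not spell out the training objective, but one common formulation of the feedback step trains a reward model on pairs of responses where a human preferred one over the other, using a pairwise logistic loss. The sketch below computes that loss for a single pair. The function name and the example reward values are assumptions, and a full RLHF pipeline involves considerably more than this one step.

```python
import math


def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Pairwise loss: -log(sigmoid(reward_chosen - reward_rejected))."""
    margin = reward_chosen - reward_rejected
    # Numerically stable form of log(1 + exp(-margin)).
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Hypothetical reward-model scores for a preferred and a rejected response.
print(preference_loss(2.0, 0.0))  # small loss: ranking agrees with the human
print(preference_loss(0.0, 2.0))  # larger loss: ranking contradicts the human
```

The loss shrinks as the reward model scores the human-preferred response higher than the rejected one, which is how feedback gets translated into a training signal.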

A key challenge here is ensuring that feedback datasets are representative of diverse user needs and scenarios. Bias in feedback can inadvertently reinforce undesirable patterns, underscoring the importance of inclusive and well-curated training processes.

Implementing Robust Error Detection Mechanisms

Reliability improves significantly when LLMs can recognize their limitations. Designing systems to identify and flag uncertain or ambiguous outputs allows users to intervene and verify information before acting on it. Techniques such as confidence scoring, uncertainty estimation, and anomaly detection can enhance error detection.

For example, an LLM tasked with medical diagnosis might flag conditions where it lacks sufficient training data, prompting a human expert to review the recommendations. Similarly, content moderation models can use uncertainty markers to handle nuanced or controversial inputs cautiously.
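A simple form of confidence scoring is to sample several answers to the same question and treat their agreement as a rough confidence signal, flagging low-agreement cases for human review. The sketch below assumes the answers have already been collected. The `vote` function, the 0.7 threshold, and the sample answers are illustrative choices, and agreement between samples is only a proxy, not a calibrated probability.

```python
from collections import Counter


def vote(samples: list[str], threshold: float = 0.7) -> dict:
    """Majority answer plus an agreement-based confidence and review flag."""
    if not samples:
        raise ValueError("at least one sample is required")
    counts = Counter(s.strip().lower() for s in samples)
    answer, freq = counts.most_common(1)[0]
    confidence = freq / len(samples)
    return {
        "answer": answer,
        "confidence": confidence,
        "needs_review": confidence < threshold,  # hand off to a human
    }


# Hypothetical answers from four repeated calls on the same question.
print(vote(["flu", "Flu", "cold", "flu"]))
```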

Continuous Monitoring and Fine-Tuning

Post-deployment, LLMs require ongoing monitoring to maintain reliability. As language evolves and user expectations shift, periodic fine-tuning with fresh data is essential. This process involves analyzing model outputs for errors, retraining on edge cases, and addressing emerging biases or vulnerabilities.

Deploying user feedback loops is another effective strategy. Platforms that allow users to report problematic outputs or provide corrections can supply invaluable data for retraining. Over time, this iterative approach helps LLMs adapt to new contexts while maintaining consistent reliability.
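A feedback loop like this needs some way to notice when reports start piling up. The sketch below tracks the share of recent outputs that users flagged and signals when it crosses a threshold. The `FeedbackMonitor` class, its window size, and its alert rate are hypothetical, and a real system would also store the flagged examples for retraining.

```python
from collections import deque


class FeedbackMonitor:
    """Rolling share of user-flagged outputs over the most recent events."""

    def __init__(self, window: int = 100, alert_rate: float = 0.1):
        self.events = deque(maxlen=window)  # oldest events drop off automatically
        self.alert_rate = alert_rate

    def record(self, flagged: bool) -> None:
        """Store whether a user reported the latest output as problematic."""
        self.events.append(flagged)

    def problem_rate(self) -> float:
        """Fraction of outputs in the window that were flagged."""
        return sum(self.events) / len(self.events) if self.events else 0.0

    def should_alert(self) -> bool:
        """True when the flagged share exceeds the configured rate."""
        return self.problem_rate() > self.alert_rate


monitor = FeedbackMonitor(window=4, alert_rate=0.25)
for flagged in [False, False, False, True, True]:
    monitor.record(flagged)
print(monitor.problem_rate(), monitor.should_alert())
```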

Improving Explainability

Enhancing the interpretability of LLMs is crucial for building trust and accountability. By making the decision-making processes of models more transparent, developers can help users understand why specific outputs were generated. Techniques like attention visualization, saliency mapping, and rule-based summaries can make models less of a “black box.”
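To give a feel for what a saliency-style explanation looks like, the sketch below uses leave-one-out occlusion: remove each token in turn and record how much a score changes. This is a simpler relative of the gradient-based saliency maps used with neural networks, not the same technique. The `toy_score` function and its word weights are invented so the example runs without a real model.

```python
from typing import Callable


def occlusion_saliency(
    tokens: list[str], score: Callable[[list[str]], float]
) -> list[tuple[str, float]]:
    """Score drop caused by removing each token in turn."""
    base = score(tokens)
    result = []
    for i, tok in enumerate(tokens):
        reduced = tokens[:i] + tokens[i + 1:]
        result.append((tok, base - score(reduced)))
    return result


def toy_score(tokens: list[str]) -> float:
    """Hypothetical stand-in for a model's sentiment score."""
    weights = {"excellent": 2.0, "not": -1.5}
    return sum(weights.get(t.lower(), 0.0) for t in tokens)


for token, importance in occlusion_saliency(["service", "was", "excellent"], toy_score):
    print(token, importance)
```

Tokens whose removal changes the score the most are the ones the scorer relied on, which gives a user something concrete to inspect.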

Explainability is particularly important in high-stakes domains. For instance, in legal or medical contexts, decision-makers need to justify their reliance on AI recommendations. Transparent systems can bridge the gap between machine-generated insights and human accountability.

Designing Ethical and Inclusive Systems

Reliability extends beyond technical performance to include fairness and inclusivity. Ensuring that LLMs treat all users equitably and avoid harmful stereotypes is a fundamental aspect of ethical AI design. Developers must scrutinize training datasets for biases and implement safeguards to prevent discriminatory outputs.

Techniques such as adversarial testing, bias correction algorithms, and diverse dataset sampling can help address these challenges. Additionally, engaging with communities impacted by AI systems ensures that their needs and concerns shape the design process.
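One concrete form of adversarial testing is a counterfactual check: swap demographic terms in an input and see whether the system's score changes. The sketch below does this for a small set of gendered words. The `SWAPS` table is deliberately tiny, and `biased_score` is a made-up scorer that exists only to show a failing case. A real audit would use a much broader term list and the actual model.

```python
import re
from typing import Callable

# Minimal, illustrative swap table; real audits need far broader coverage.
SWAPS = {"he": "she", "she": "he", "mr": "ms", "ms": "mr"}
_PATTERN = re.compile(r"\b(" + "|".join(SWAPS) + r")\b", flags=re.IGNORECASE)


def swap_terms(text: str) -> str:
    """Replace each listed term with its counterpart, keeping capitalization."""
    def repl(match: re.Match) -> str:
        word = match.group(0)
        swapped = SWAPS[word.lower()]
        return swapped.capitalize() if word[0].isupper() else swapped
    return _PATTERN.sub(repl, text)


def counterfactual_gap(text: str, score: Callable[[str], float]) -> float:
    """Absolute score difference between a text and its swapped version."""
    return abs(score(text) - score(swap_terms(text)))


def biased_score(text: str) -> float:
    """Hypothetical scorer that unfairly favors texts mentioning 'he'."""
    return 0.9 if re.search(r"\bhe\b", text.lower()) else 0.6


print(swap_terms("He is an engineer and she is a nurse"))
print(counterfactual_gap("he has ten years of experience", biased_score))
```

A gap near zero across many such pairs is weak evidence of even-handed treatment, while a consistent gap points to a bias worth investigating.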

Collaboration Between Stakeholders

Building reliable LLM systems is not solely the responsibility of AI developers. It requires collaboration among researchers, policymakers, industry leaders, and end-users. Standards for evaluating reliability, frameworks for auditing AI systems, and regulations for accountability can create an ecosystem that fosters trustworthy AI.

For example, initiatives like the Partnership on AI and the AI Incident Database promote shared learning and collective problem-solving to address reliability challenges. Such collaborations ensure that LLMs are designed and deployed responsibly across sectors.

Conclusion

Reliability is the cornerstone of the future of LLMs. As these systems become increasingly embedded in critical workflows, their ability to produce consistent, accurate, and contextually relevant outputs will determine their long-term viability. By embracing platform-based designs, grounding their models, and prioritizing transparency, LLM providers can ensure that their systems serve as trusted collaborators rather than unpredictable tools.

The stakes are high, but the path forward is clear: focus on reliability, or risk obsolescence in an increasingly competitive landscape.


Unlocking Intelligence: Exploring the Future of Automation and Consciousness

A Journey into the Intersection of Computer Science, AI, and the Emergence of Understanding

Have you ever wondered what intelligence truly is? How do systems—whether mechanical, computational, or biological—process information and make decisions? Can machines not only emulate but also create something akin to consciousness? These questions form the foundation of this platform, where we will explore the fascinating interplay between computer science, mathematics, automation, and artificial intelligence to uncover how manipulating information leads to understanding and, ultimately, intelligence.

The underlying motivation of this site is rooted in the belief that intelligence—whether human or artificial—is built on a continuous process of learning to manipulate increasingly complex information. From the simplest mechanical devices to the most sophisticated quantum computers, the principles remain the same: understanding emerges from the interplay of computation, automation, and logic. By delving into these principles, we gain a clearer picture of how intelligence evolves and what it means to build systems that can “think.”

The Vision

This site is not just about technology; it’s about ideas. It is a place to explore how knowledge is created, how machines can replicate human reasoning, and how we can harness these systems to push the boundaries of what is possible. Automation and artificial intelligence are not just tools—they are extensions of human creativity and intellect, capable of solving problems and addressing challenges that were once considered insurmountable.

But with this power comes a responsibility to understand the systems we create. Automation can shape our future in profound ways, and our role is to guide its development thoughtfully and ethically. This site is a space for learning, reflection, and discovery, offering insights that empower you to participate meaningfully in the evolution of technology.

What You’ll Find Here

Photo by Ali Shah Lakhani on Unsplash

To bring these ideas to life, I will be sharing a wide variety of content, each tailored to different aspects of understanding automation, intelligence, and computation:

Chapters as Posts: Structured series that dive deeply into topics like machine learning, algorithm design, and the mathematics of automation. These “chapters” are designed to build on each other, creating a cohesive understanding of complex subjects.

Discussions of Seminal Papers: We will explore the groundbreaking research that has shaped the fields of AI, mathematics, and computer science. By analyzing seminal papers, I’ll help you understand not just what was discovered, but why it mattered and how it applies today.

Code Walkthroughs: Nothing bridges theory and practice better than code. Together, we’ll work through practical implementations of algorithms, systems, and concepts, demystifying their inner workings and showing how they can be applied to real-world problems.

In-Depth Articles on Technology: From the basics of machine learning to the intricacies of neural networks and automation systems, these articles will break down how cutting-edge technologies work. Expect clear explanations and practical insights into the tools shaping our world.

Exploration of Theoretical Concepts: Understanding begins with a solid foundation. I’ll delve into the theoretical underpinnings of automation and artificial intelligence, including mathematical principles, logic, and computational theory, to give you a deeper grasp of how these systems function.

Programming Examples: To complement theoretical discussions, I’ll provide hands-on programming examples that you can experiment with. These examples will range from simple demonstrations to complex projects, helping you build practical skills and deepen your understanding.

Why This Matters

We live in a world increasingly driven by intelligent systems. Automation and artificial intelligence are reshaping industries, transforming societies, and challenging our assumptions about what machines can do. But while the impact of these technologies is undeniable, their inner workings often remain a mystery to many.

This lack of understanding poses risks: systems that are misunderstood are systems that are misused. By equipping ourselves with the knowledge to grasp how these technologies work, we not only unlock their full potential but also ensure they are used responsibly and ethically.

This platform is my way of contributing to this understanding. It is my belief that by sharing knowledge, breaking down complex ideas, and sparking conversations, we can collectively shape a future where automation and intelligence serve humanity in the best possible ways.

Join the Journey

This site is more than a collection of articles and tutorials; it is an invitation to explore, question, and learn. Whether you are a student just beginning your journey in computer science, a professional looking to deepen your understanding, or a curious mind eager to explore new ideas, this platform is for you.

Together, we will tackle the big questions:

How does information manipulation lead to understanding?

What does it mean to build intelligence?

How can automation reshape our relationship with technology and the world?

As you explore this platform, I encourage you to engage with the content, share your thoughts, and contribute to the discussions. This is a space for collaboration and growth, where ideas come to life, and curiosity is rewarded.

Welcome to the journey of understanding. Let’s explore the future of intelligence, one idea at a time.
