
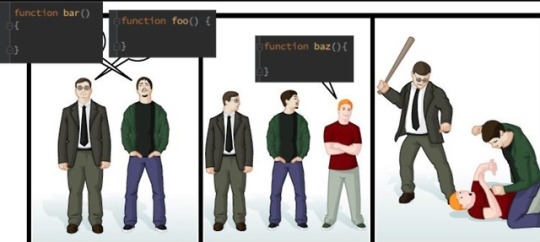

Programmers like to argue

In programming, a function (or method) is a piece of code you can re-use. Usually you put a piece of data into a function, it does something to the data, and spits out a different piece of data.

The analogy I like to use is that a function is like one of those conveyor-belt toasters at Quiznos or some other sandwich place: you put your sandwich on one end, something happens inside the machine, and out the other end comes a toasty sandwich.

If the toaster were a function, you might write it like this:

```javascript
function toaster(untoastedSandwich){
  const toastedSandwich = makeToasty(untoastedSandwich)
  return toastedSandwich
}
```

A lot of programming languages use that mix of parentheses and curly brackets ({ and }) to write functions. But many don't care how you space things out.

In JavaScript, and many other languages, I could write the above function a bunch of different ways:

```javascript
function toaster(untoastedSandwich) {
  const toastedSandwich = makeToasty(untoastedSandwich)
  return toastedSandwich
}

function toaster(untoastedSandwich)
{
  const toastedSandwich = makeToasty(untoastedSandwich)
  return toastedSandwich
}

function toaster(untoastedSandwich)
{const toastedSandwich = makeToasty(untoastedSandwich)
  return toastedSandwich}

function toaster(untoastedSandwich){const toastedSandwich = makeToasty(untoastedSandwich); return toastedSandwich}
```

A computer would run all of those the exact same way, so there's not really a "right" way to write functions.
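To check that claim, here's a runnable sketch. `makeToasty` is a hypothetical stand-in because the post never defines it. Here it just adds the word "toasted." The two versions of the toaster differ only in where the curly bracket goes.

```javascript
// Hypothetical stand-in for whatever happens inside the toaster.
function makeToasty(sandwich) {
  return "toasted " + sandwich;
}

// Brace on the same line as the function name...
function toasterSameLine(untoastedSandwich) {
  const toastedSandwich = makeToasty(untoastedSandwich);
  return toastedSandwich;
}

// ...and brace on its own line. Same code, different spacing.
function toasterNextLine(untoastedSandwich)
{
  const toastedSandwich = makeToasty(untoastedSandwich);
  return toastedSandwich;
}

console.log(toasterSameLine("turkey club")); // toasted turkey club
console.log(toasterNextLine("turkey club")); // toasted turkey club
```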

The joke is that when there's no "right" way to do something, programmers like to argue about what is "best." This comic uses the Mac vs PC meme. The first two guys, who use different ways of writing functions, would normally be arguing with each other, but they arbitrarily gang up on the third guy, who uses a slightly different way of writing functions.

If you see two programmers arguing about something, the more they're arguing, the less there's a right answer.


git push -f

Git is a piece of software used to track changes to stuff that you write. (It shouldn't be confused with GitHub, which is one of many websites that use the software.)

When you make some changes to some files, you can "commit" your changes with Git. This creates a log of the changes you made. Git only logs the changes made since your last commit. If you create a 100-line file and commit, the commit will contain all 100 lines. If you then write another 5 lines and commit again, the new commit will contain only 5 lines.

The result is a sort of timeline of "commits." Git lets you go back to earlier commits, for example if you don't like recent changes or can't remember what changes you made before.

Git also lets you share commits with other people by storing them on sites like GitHub. This way multiple people can work on the same files on the same "timeline". To do this, you tell your computer to "push" your commits to the website. The command you would write to do this is git push.

Let's say Alice and Bob work on the same timeline of commits, which is stored on GitHub. Alice changes 5 lines of code and pushes a commit. Bob doesn't know about Alice's commit, changes the same 5 lines of code, and tries to push a commit. GitHub will reject Bob's push. Alice's commit says "change these 5 lines after commit 2," and Bob's commit also says "change these 5 lines after commit 2." Git says that commits have to go 1 - 2 - 3, and GitHub already has a commit that goes after 2. (GitHub would reject Bob's push even if he had changed completely different lines, because his commit still doesn't come after Alice's.)

For Git to accept Bob's commit, he has to "pull" down Alice's commit from GitHub and then make changes, so his commit says "change these 5 lines after commit 3".

Alternatively, Bob could send the command git push -f. The 'f' is for force. This tells GitHub, "Forget Alice's commit, and any other ones that might conflict with my commits. Just use mine." Because force-pushing can throw away other people's work, it should be used only when you really know what you're doing.
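Here's a toy model of that rule in JavaScript. It's a simplification: real Git compares commit hashes and branch pointers, and nothing here talks to GitHub. The names `commit`, `isAncestor`, and `push` are made up for illustration.

```javascript
// Toy model: each commit remembers the commit that came right before it.
// (Real Git identifies commits by long hash codes; these ids are made up.)
function commit(id, parent = null) {
  return { id, parent };
}

// Walk backwards through history to see whether `ancestor` is in it.
function isAncestor(ancestor, descendant) {
  for (let c = descendant; c !== null; c = c.parent) {
    if (c === ancestor) return true;
  }
  return false;
}

// A normal push only succeeds if the server's latest commit is already part
// of the history being pushed, i.e. the new commits go "after" it.
function push(server, localHead, { force = false } = {}) {
  if (force || isAncestor(server.head, localHead)) {
    // With force, anything not in localHead's history drops off the timeline.
    server.head = localHead;
    return "accepted";
  }
  return "rejected";
}

const commit2 = commit(2, commit(1));
const server = { head: commit2 };
const alices3 = commit("3 (Alice)", commit2);
const bobs3 = commit("3 (Bob)", commit2);

console.log(push(server, alices3));                // accepted
console.log(push(server, bobs3));                  // rejected
console.log(push(server, bobs3, { force: true })); // accepted
console.log(server.head.id);                       // 3 (Bob): Alice's commit is gone
```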

The joke is programmers (and the people they work for) sometimes value their code more than they value life itself. A dying programmer's priority might be to get their code onto GitHub at any cost because (1) they're obsessed with solving the problem they've been working on, and/or (2) their code is basically their legacy.


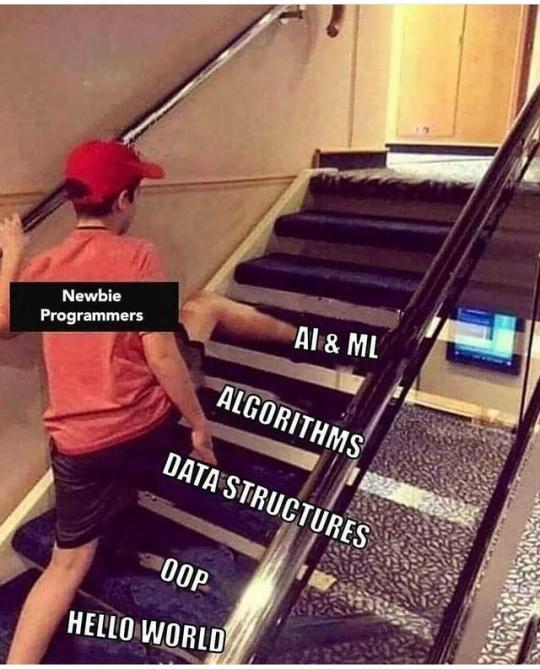

Skipping Some Steps

The joke is "newbie" or beginner programmers tend to overestimate their abilities. The steps in the picture represent the usual order in which programmers learn things, with the newbie programmer trying to skip all the basics to jump into something advanced. Let's break down the steps:

A "hello world" program is just about the simplest thing you can code that actually does something: it has the computer spit out the words "hello world" onto the screen. (You can use any words you want, but "hello world" is traditional.) If you see those words, you know your code is working. If you don't, it isn't. Because it usually takes only a couple of lines of code, "hello world" makes a great piece of starter code for new programmers, and also for experienced programmers learning a new piece of technology or starting a new project.
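In JavaScript, run with something like Node.js or a browser's developer console, "hello world" can be as short as the snippet below. Storing the text in a variable first is only there for illustration.

```javascript
// The traditional first program: print "hello world" to the screen.
const greeting = "hello world";
console.log(greeting); // hello world
```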

OOP is Object-Oriented Programming. Many programming languages let you bundle data and code into objects to help you keep related things organized. For example, a "User" object might contain data like a username, password, and e-mail address, and code that lets you log in and change your password. The learning curve for OOP goes from pretty flat to really steep. It's kind of like using electricity: you can't get far in life without knowing how to change the batteries in a flashlight or knowing that you shouldn't stick a fork in a wall socket, but everything beyond that, like knowing how to connect wires and measure voltage, can feel pretty advanced.
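Here is a sketch of the "User" example as a JavaScript class. The method names and the plain-text password are purely illustrative. A real app would store a scrambled "hash" of the password, never the password itself.

```javascript
// A hypothetical User object: it bundles data (username, e-mail, password)
// with the code that works on that data (logging in, changing the password).
class User {
  #password; // private: only code inside the class can read or change it

  constructor(username, email, password) {
    this.username = username;
    this.email = email;
    this.#password = password; // illustration only; real apps store a hash
  }

  logIn(attempt) {
    return attempt === this.#password;
  }

  changePassword(oldPassword, newPassword) {
    if (!this.logIn(oldPassword)) return false; // wrong old password
    this.#password = newPassword;
    return true;
  }
}

const alice = new User("alice", "alice@example.com", "hunter2");
console.log(alice.logIn("hunter2"));                    // true
console.log(alice.changePassword("wrong", "abc123"));   // false
console.log(alice.changePassword("hunter2", "abc123")); // true
console.log(alice.logIn("hunter2"));                    // false
```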

Understanding data structures is understanding the different ways programming languages tell the computer to handle and organize data. For example, it makes sense that when you sign up for a Facebook account, Facebook writes your name in a computer somewhere. But how does Facebook handle lists of names, like your account's "friends"? How does it know which names are your friends and which names are other people's friends?
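One possible answer to the "whose friends are these?" question is a data structure that maps each person to their own set of friends. This is a hypothetical sketch, not how Facebook actually does it. It uses a JavaScript `Map` whose values are `Set`s.

```javascript
// Each name maps to a Set of that person's friends.
const friends = new Map();

function addFriendship(a, b) {
  // Friendship goes both ways, so record it under both names.
  if (!friends.has(a)) friends.set(a, new Set());
  if (!friends.has(b)) friends.set(b, new Set());
  friends.get(a).add(b);
  friends.get(b).add(a);
}

function areFriends(a, b) {
  return friends.has(a) && friends.get(a).has(b);
}

addFriendship("Alice", "Bob");
addFriendship("Alice", "Carol");

console.log([...friends.get("Alice")].join(", ")); // Bob, Carol
console.log(areFriends("Bob", "Carol"));           // false
```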

An algorithm is a list of instructions to take in some data and spit out some other data. For example, subtracting someone's age from the current year to get the year they were born is an algorithm: regardless of how old someone is, if you follow those steps you'll get the year they were born (or the year after it, if they haven't had their birthday yet this year). When you hear "algorithm" you probably think of some fancy equation to forecast the weather or help Google search the web, but algorithms can also be simple.
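Here's that algorithm as a JavaScript function. The `hadBirthdayThisYear` parameter is an addition that handles the off-by-one case, because plain subtraction only gives the right answer once the person's birthday has passed.

```javascript
// Work out a birth year from an age. If the person hasn't had their
// birthday yet this year, they were born one year earlier than
// (currentYear - age) suggests.
function birthYear(age, currentYear, hadBirthdayThisYear) {
  return hadBirthdayThisYear ? currentYear - age : currentYear - age - 1;
}

console.log(birthYear(30, 2024, true));  // 1994
console.log(birthYear(30, 2024, false)); // 1993
```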

Different programmers might learn OOP, data structures, and algorithms in different orders. Each of them goes from being pretty straightforward to super complicated. You don't need to know everything about one before going to another. But you definitely need to know a good chunk about all of them before going to the last one:

'AI' and 'ML' refer to artificial intelligence and machine learning. They're different but have a lot of overlap. They also have kind-of "fuzzy" definitions. I'd say AI is the ability of a machine to make a decision without having instructions telling it exactly how to make the decision. ML is the ability of a machine to recognize patterns in data without having instructions telling it exactly how to recognize the patterns. Machine learning can be used to increase a computer's artificial intelligence.

A good number of people start learning code because they have an idea for a video game, an AI application, or something else shiny and trendy. It's tempting to skip the basics and go straight into the "interesting" stuff, but it very quickly becomes obvious that won't work.


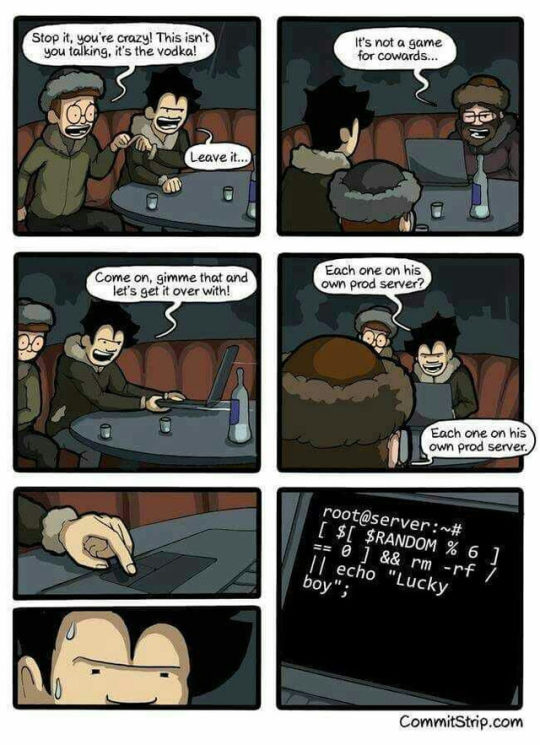

Russian Roulette

A prod server, or "production server," is a computer that's running the "live" version of a website, database, or some other web-based program.

Technically, the server isn't actually a computer. It's just one program running on a computer, and is responsible for "listening" for incoming requests for data, and then serving that data. (For example, when you type google.com into your web browser and hit 'Enter', you're requesting the Google website, and then Google serves the website to you.) But most of the time servers live on computers that only run servers, and so people use 'server' to refer to the whole thing.

In addition to the prod server, programmers will typically have one or more dev servers set up for stuff that's in development. This gives them a "sandbox" to test out new things without worrying about messing up what their users and customers are doing.

The last panel of the image shows the shell of a computer, presumably a prod server. The shell is a way of talking to a computer using only text, without having to go through some kind of visual interface. This is what most computers looked like back in the 80s, and you can still use the shell on modern computers, although it might be called something like "Terminal" or "Command Prompt." Programmers use the shell a lot because, once you're used to it, it's much faster and more efficient than clicking around on folders and icons.

The code in the last panel instructs the computer,

Pick a random number between 1 and 6 (technically 0 and 5). If it's 0, delete everything on the computer. Otherwise, spit out the words "Lucky boy."
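Here's a harmless JavaScript simulation of the panel's logic. Nothing is deleted: the "delete everything" branch just returns a message. The function names are made up, and the random-number function can be swapped out so the result can be checked.

```javascript
// rng is any function that returns a number from 0 (inclusive) to 1
// (exclusive), such as Math.random.
function spinChamber(rng = Math.random) {
  return Math.floor(rng() * 6); // a whole number from 0 to 5
}

function russianRoulette(rng = Math.random) {
  if (spinChamber(rng) === 0) {
    return "(this is where everything would be deleted)";
  }
  return "Lucky boy";
}

// Prints "Lucky boy" about 5 times out of 6.
console.log(russianRoulette());
```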

The joke is this is like Russian Roulette -- the "game" where you put one bullet in an empty revolver (old-timey handgun), spin the chamber, point the gun at yourself, pull the trigger, and see what happens.

It's worth mentioning that many computers have failsafes built in to keep you from deleting everything, even if you try to do it in the shell like this. If you did manage it, it would certainly bring down your app or website or whatever. But most programmers keep their code in a version control system like Git, and as long as that code has been pushed somewhere else (like GitHub), it's basically always backed up and can be re-published.

What's much trickier is getting your app's data back. Things like usernames, uploaded files, blog posts, and other kinds of records are not kept in a version control system. If you wipe the computer that stores your data, then even if you have things automatically backed up, you would likely lose everything that changed since the last backup and never be able to recover it.


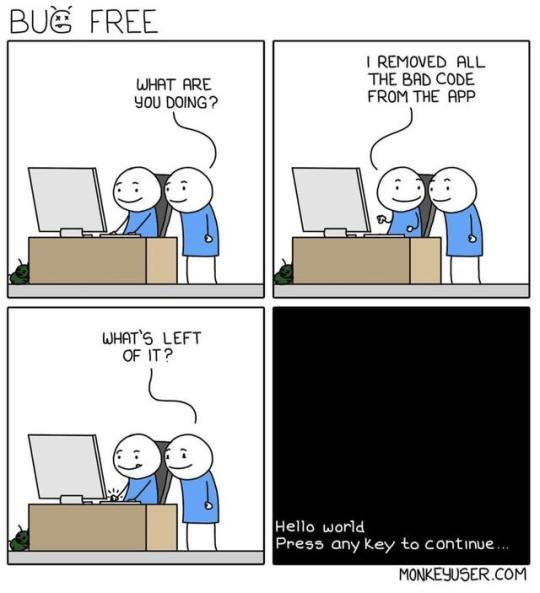

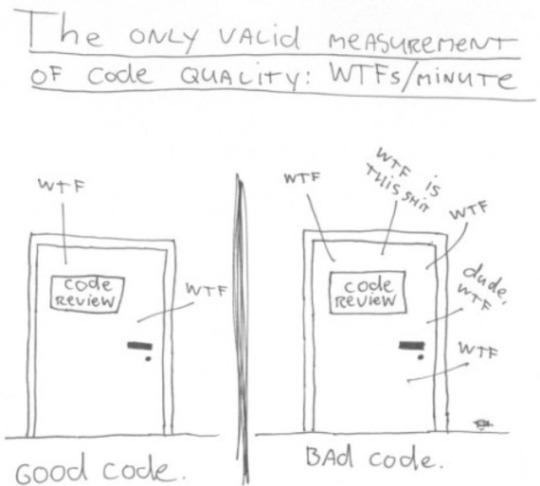

Bug-Free Code

When starting a project, the first thing many programmers do is write some code to make their program spit out a piece of text on the screen. Usually it only takes one or two small lines of code. This is an easy way to make sure a project is set up correctly: if the text shows up it is, and if it doesn't it isn't.

Of course you can use any text you want, but "hello world" is traditional.

The joke is that almost all code is "bad": if you remove all the "bad code" from a project, you'll remove everything that was written after the initial "hello world," which is so small that you can't really screw it up.

How is it possible that so much code is "bad"? In the same way that every movie, book, and piece of art has people who think it's bad. There's no "right way" to write code that everyone agrees on. They're called programming languages, and every language gives you lots of ways to express things, some of which people love and some of which people hate.

This is something that surprises a lot of non-programmers, who expect that code either works or doesn't work, and that's how you judge whether it's "good." Most programmers would say that writing code that just works is a lot easier than writing code that other people can read and understand.


Monte Carlo Tree Search

These images are from the movie 'Avengers: Infinity War'. One of the "good guys" manipulates time to look into the future and see if they successfully defeat the "bad guys."

The joke is that "How?" is probably intended to mean, "How did we defeat the bad guys in that one future?" But it's taken to mean, "How did you find the winning future?" A Monte Carlo tree search, or MCTS, is how a computer scientist or data scientist might do it.

The Monte Carlo method is a way of making predictions that's a big deal in any field that uses a lot of data and statistics: economics, physics, artificial intelligence, meteorology, etc. It's named after the famous casino in Monaco, where players might think they're winning because of skill or chance, but really the house is always winning.

First let's look at what Monte Carlo isn't. Let's say you're playing Tic Tac Toe against a computer. Whenever you make a move, the computer can calculate every possible sequence of moves that would let the computer win the game. That is, it can simulate every possible way the game could go. The next move it actually makes is the one that leads to a victory in the most simulations. This is an example of a brute-force search, where the computer keeps hammering away until it knows everything that can happen, and you basically can't win.

There are 255,168 different ways a game of Tic Tac Toe can go. That's a big number, but not very big for computers. Now let's say you're playing Chess against a computer. The number of ways a game of Chess can go is astronomically large (Claude Shannon famously estimated it at around 10^120), and it's actually infinite if you don't play with the 50-move rule. Even a supercomputer couldn't get through that many games, so the brute-force approach won't work.
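Here's what brute force looks like for Tic Tac Toe. This JavaScript sketch plays out every possible game and counts them. A game ends as soon as someone gets three in a row or the board fills up, which is how the 255,168 figure is counted.

```javascript
// Every row, column, and diagonal that counts as three in a row.
const LINES = [
  [0, 1, 2], [3, 4, 5], [6, 7, 8], // rows
  [0, 3, 6], [1, 4, 7], [2, 5, 8], // columns
  [0, 4, 8], [2, 4, 6],            // diagonals
];

function hasWinner(board) {
  return LINES.some(([a, b, c]) => board[a] && board[a] === board[b] && board[a] === board[c]);
}

// Recursively try every legal move and count the finished games.
function countGames(board = Array(9).fill(null), player = "X") {
  if (hasWinner(board) || board.every((cell) => cell !== null)) return 1;
  let total = 0;
  for (let i = 0; i < 9; i++) {
    if (board[i] !== null) continue;
    board[i] = player; // try a move...
    total += countGames(board, player === "X" ? "O" : "X");
    board[i] = null;   // ...then undo it and try the next one
  }
  return total;
}

console.log(countGames()); // 255168
```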

But the computer knows two things: (1) There are only so many moves that can be made during one turn of chess, and (2) It doesn't have to figure out the best possible sequence of moves, it just has to figure out a sequence that's good enough to beat you.

So, whenever you take a turn, the computer plays out a bunch of random sequences of moves. It can't try every possible sequence, because there are far too many. But it can try several thousand of them and tell which of those sequences are "good" (it doesn't lose too many pieces and/or takes a lot of yours). The next move it makes is the one that starts the greatest number of "good" sequences.

This is the core idea behind MCTS. Monte Carlo methods are all about randomly simulating what could happen a bunch of times until you have a pretty good idea of what's most likely to actually happen. (A full MCTS also builds up a "tree" of the moves it has tried, and spends more of its simulations on the moves that look most promising so far.) It can't give you 100% certainty the way brute force can, but when you're trying to predict the weather, or how a stock will perform, or how long a project will take, there's too much going on to get 100% certainty anyway.
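A full MCTS is too long to show here, but the core Monte Carlo idea fits in a short sketch. For each legal move, play a couple hundred completely random games that start with that move, then pick the move that wins most often. This simpler technique is sometimes called "flat" Monte Carlo because it builds no tree. Using Tic Tac Toe, and the function names below, are assumptions for illustration.

```javascript
const LINES = [
  [0, 1, 2], [3, 4, 5], [6, 7, 8],
  [0, 3, 6], [1, 4, 7], [2, 5, 8],
  [0, 4, 8], [2, 4, 6],
];

function winner(board) {
  for (const [a, b, c] of LINES) {
    if (board[a] && board[a] === board[b] && board[a] === board[c]) return board[a];
  }
  return null;
}

const emptyCells = (board) => board.flatMap((cell, i) => (cell === null ? [i] : []));
const other = (player) => (player === "X" ? "O" : "X");

// Play random moves until the game ends; returns "X", "O", or null (draw).
function randomPlayout(board, player) {
  const b = [...board];
  let turn = player;
  while (!winner(b) && emptyCells(b).length > 0) {
    const moves = emptyCells(b);
    b[moves[Math.floor(Math.random() * moves.length)]] = turn;
    turn = other(turn);
  }
  return winner(b);
}

// For each legal move, run many random games and keep the move that wins most.
function chooseMove(board, player, playoutsPerMove = 200) {
  let bestMove = null;
  let bestScore = -1;
  for (const move of emptyCells(board)) {
    const next = [...board];
    next[move] = player;
    let wins = 0;
    for (let i = 0; i < playoutsPerMove; i++) {
      if (randomPlayout(next, other(player)) === player) wins++;
    }
    const score = wins / playoutsPerMove;
    if (score > bestScore) {
      bestScore = score;
      bestMove = move;
    }
  }
  return bestMove;
}

// X has two in a row across the top (cells 0 and 1), so cell 2 wins at once.
const board = ["X", "X", null, "O", "O", null, null, null, null];
console.log(chooseMove(board, "X")); // 2
```

Because the opponent in these random games plays randomly, flat Monte Carlo can misjudge moves against a strong opponent. The "tree" part of a real MCTS is what helps it focus on the replies that matter.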

This 'Avengers' meme jokes that the good guys can win the war against the bad guys by running a Monte Carlo tree search, as if it were a board game. An MCTS might be pretty accurate if your "war" is a game of Chess or Risk, which has strict rules that you have to follow, but real-life war (let alone a fictional Marvel Comics war) has all sorts of people making all sorts of crazy decisions all the time.


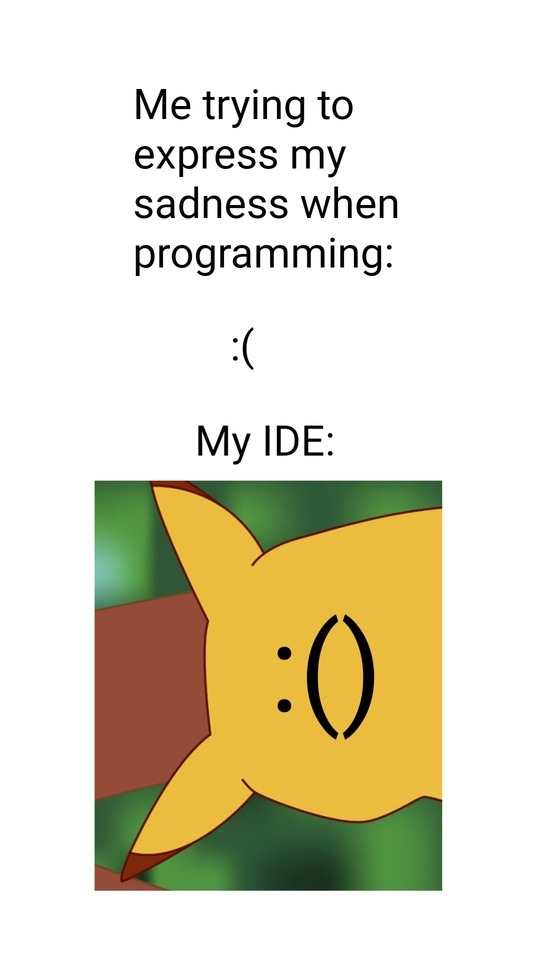

Pikachu wearing braces

'IDE' stands for integrated development environment. This is a fancy way of saying, "the programs or applications you use to write code." Visual Studio, Eclipse, and IntelliJ are all popular examples.

A lot of the time when people are talking about IDEs, they're really talking about text editors. There's a lot of gray area between them, but the difference is mostly that an IDE can run your code, and a text editor can't. Both come with lots of built-in features to help you write your code, though.

A common feature of text editors is that they insert punctuation for you, namely that they close 'brackets' or 'braces' that you've opened.

Pretty much all coding languages use different brackets -- () {} [] -- to do different things. For example, if you've seen some HTML, you may have noticed that it uses angle brackets (greater-than/less-than symbols):

`<p>This is what a paragraph looks like in HTML.</p>`

Even if different brackets do different things in different languages, basically every programming language expects you to close a bracket once you've opened it.

The joke is if you type a 'sad' emoticon :( in your IDE, the IDE will think you're writing code, and so will automatically insert ) -- a close-paren -- for you. The result is :(), which looks a little like a surprised Pikachu on its side.
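The bracket-closing behavior can be sketched with a stack, a list where the last thing added is the first thing taken off. This is a simplified, hypothetical version. Real editors insert the closer the moment you type the opener, while this sketch adds the missing closers to a finished string. It also ignores angle brackets and quotes.

```javascript
const PAIRS = new Map([["(", ")"], ["[", "]"], ["{", "}"]]);
const CLOSERS = new Set(PAIRS.values());

function autoClose(text) {
  const stack = [];
  for (const ch of text) {
    if (PAIRS.has(ch)) {
      stack.push(PAIRS.get(ch)); // remember which closer this opener needs
    } else if (CLOSERS.has(ch) && stack[stack.length - 1] === ch) {
      stack.pop(); // the most recent opener has been closed
    }
  }
  // Close the most recently opened bracket first.
  return text + stack.reverse().join("");
}

console.log(autoClose("if (x > 1) {")); // if (x > 1) {}
console.log(autoClose(":("));           // :()
```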


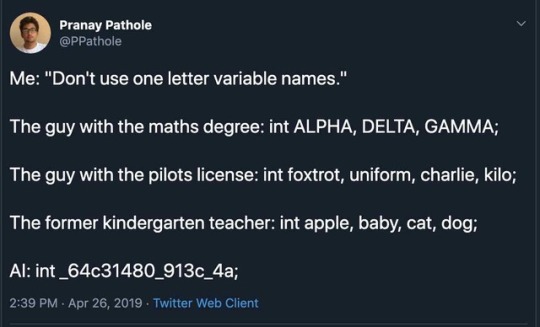

One-letter variable names

A variable is a bucket that you put data into and that you write a label on.

For example, here's some code that asks your age, then tells you whether you're too old for a kids' meal:

```javascript
let userAge = Number(prompt("How old are you?"))
if (userAge > 12) {
  alert("You're too old for a kids' meal.")
} else {
  alert("You can have a kids' meal!")
}
```

let userAge = Number(prompt(...)) is saying, "Here's a bucket labeled 'userAge'. I'm going to put into this bucket whatever the user types in to the prompt, turned into a number." (In languages like Java or C#, you'd write int userAge instead, which also says the bucket is designed to hold only integers.)

Let me write that code a different way:

```javascript
let a = Number(prompt("How old are you?"))
if (a > 12) {
  alert("You're too old for a kids' meal.")
} else {
  alert("You can have a kids' meal!")
}
```

This code does the exact same thing as the earlier code. The name you put on a variable doesn't make any difference in how it works. A bucket is a bucket. Thus, programmers are often tempted to use single-letter variable names like a, b, c because typing a is faster than typing userAge and it doesn't affect the code's performance.

But this makes a huge difference to other people trying to read your code -- maybe not so much when your code is only 6 lines long, but definitely when it's longer. Most programmers agree that writing code that other humans can read is a lot harder and more important than writing code that just works, even if it means your code is longer.

This is why one of the first rules of programming is "don't use one-letter variable names." The label you put on a bucket should give you an idea of what's in the bucket. A variable's name should tell you what the variable does. userAge tells you much more than a.

The joke is the examples in the image all use variable names that are more than one letter, and so technically follow the rule, but are still useless because they don't tell you anything about the variables' data. Instead of using a, b, c, the maths guy uses the Greek alphabet, the pilot uses the NATO phonetic alphabet (in an interesting order), and the teacher uses words you might use to teach the alphabet.

The AI (artificial intelligence) uses what looks like a pointer: a number that represents where in a computer's memory a piece of data is stored, like a street address. The joke is that AI runs on a computer, and computers don't care about variable names. This name is also more than one letter long, so it technically follows the rule, and as far as AI is concerned it's a perfectly fine name.


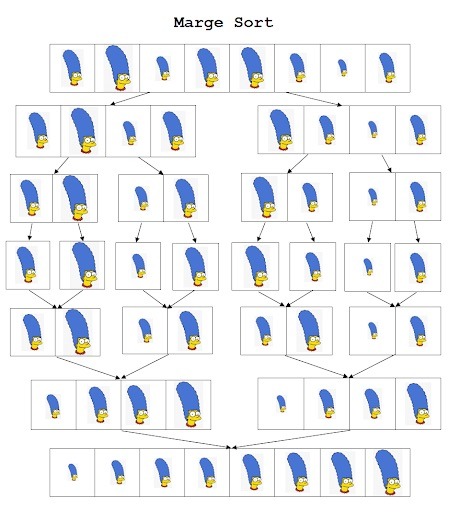


Marge Sort

Getting a computer to sort a list of numbers is surprisingly complicated, and there are a zillion different ways to do it. This means mathematicians and computer scientists think that it's super interesting and spend a lot of time studying, developing, and comparing sorting algorithms. You could spend a whole day just watching YouTube videos of people representing these algorithms in music and dance.

The joke is a play on merge sort, one of the more popular sorting algorithms. At the top of the image you have a bunch of different-sized pictures of Marge Simpson, and at the bottom they're all in order from smallest to largest. The image is a diagram of how merge sort works.

Explaining sort algorithms is a popular job interview question in the tech industry, so I'll go into more detail here.

Imagine a bunch of numbers all standing in a line. We want the smallest number to end up on the left, and the biggest number to end up on the right.

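`2 5 7 4 3 9 8 6`

A computer using merge sort will split those numbers up into pairs.

`25 74 39 86`

Then, for each pair, it'll check whether the smaller of the two numbers is on the left. If it's on the right, the computer will make the numbers switch places.

`25 47 39 68`

Next, the computer will take two pairs at a time (`25` with `47`, and `39` with `68`) and compare the first number of each pair. It'll move the smaller number into a new group. This is where the merging begins.

`25 | 47`: 2 is smaller than 4, so 2 moves. New group: `2`. Leftovers: `5 | 47`.

`39 | 68`: 3 is smaller than 6, so 3 moves. New group: `3`. Leftovers: `9 | 68`.

Then it'll repeat with what's left, comparing the first remaining number on each side and adding the smaller one onto the new group.

`5 | 47`: 4 moves, so the new group is `24`. Then `5 | 7`: 5 moves, making `245`.

`9 | 68`: 6 moves, so the new group is `36`. Then `9 | 8`: 8 moves, making `368`.

Lather, rinse, repeat. Once one side runs out, whatever is left on the other side goes on the end, so the 7 joins `245` and the 9 joins `368`:

`2457 3689`

Now you have two groups of 4 numbers. The computer will compare the first number of each group and move the smaller one into the final list:

`2457 | 3689`: 2 vs 3, so 2 moves. Final list: `2`

...and will repeat from there.

`457 | 3689`: 4 vs 3, so 3 moves. Final list: `23`

`457 | 689`: 4 vs 6, so 4 moves. Final list: `234`

`57 | 689`: 5 vs 6, so 5 moves. Final list: `2345`

`7 | 689`: 7 vs 6, so 6 moves. Final list: `23456`

`7 | 89`: 7 vs 8, so 7 moves. Final list: `234567`

The left group is now empty, so the 8 and 9 go on the end:

`23456789`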
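Here's merge sort as a short JavaScript sketch. This common "top-down" version keeps splitting the list in half until each piece holds a single number, then merges the pieces back together. On these 8 numbers it performs the same merges as the walkthrough: pairs first, then groups of 4, then all 8.

```javascript
// Split the list in half, sort each half, then merge the sorted halves.
function mergeSort(numbers) {
  if (numbers.length <= 1) return numbers; // one number is already sorted
  const middle = Math.floor(numbers.length / 2);
  const left = mergeSort(numbers.slice(0, middle));
  const right = mergeSort(numbers.slice(middle));
  return merge(left, right);
}

function merge(left, right) {
  const result = [];
  let i = 0;
  let j = 0;
  // Compare the front of each group and move the smaller number over.
  while (i < left.length && j < right.length) {
    if (left[i] <= right[j]) {
      result.push(left[i++]);
    } else {
      result.push(right[j++]);
    }
  }
  // One group is empty; whatever is left in the other is already in order.
  return result.concat(left.slice(i), right.slice(j));
}

console.log(mergeSort([2, 5, 7, 4, 3, 9, 8, 6]).join(" ")); // 2 3 4 5 6 7 8 9
```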


3 wishes, 3 rules... OK, 4 rules.

A computer program is a big series of instructions. A thread is an instance of the computer following instructions in order.

Shouldn't a computer always follow instructions in order?

Let's say you wrote a program to make a peanut butter and jelly sandwich:

1. Open bag of bread
2. Remove two slices of bread from bag
3. Apply peanut butter to one slice
4. Apply jelly to other slice
5. Put slices together

Most of those steps have to go in that order. You can't "remove two slices of bread from the bag" unless you have an open bag of bread in front of you.

But it doesn't matter whether you apply the peanut butter or the jelly first. If you're really good with your hands and good at multitasking, you could do both at the same time and eat your sandwich sooner.

This would be an example of a single thread (following instructions one-at-a-time) splitting into two threads (following two instructions at the same time), then going back to one thread.

Multithreading is the ability of a computer to follow multiple instructions at the same time.

The joke is many programming languages support multithreading, but as nice as it might be, JavaScript mostly doesn't. Your JavaScript code normally runs on a single thread, and the workarounds (like "Web Workers" in browsers) come with a lot of restrictions. This is mostly because JavaScript wasn't built that way, and trying to add it in now would break a lot of things. It would be great if the US started using the Metric System, too, but if you replaced all the nation's speed limit signs, measuring cups, and rulers overnight, the next day would be a hot mess.

Plus, multithreading has its downsides. It's hard to keep one thread from starting or stopping before the other is ready. You know that you shouldn't put the slices of bread together until both have their toppings, but a robot wouldn't know that, and might finish the jelly slice first and try to stick it onto the peanut butter slice before it's ready, causing the bread to get all mangled and jelly to go everywhere. This is an example of a race condition ('race' as in 'speed', not as in 'demographics').

Multithreading also uses more computing power. I can make a PB&J pretty well, but I don't have the mental capacity to put peanut butter on one slice and jelly on another at the same time.
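JavaScript's usual substitute is letting slow steps run "in the background" and waiting for them with Promises. Everything still runs on one thread, so this is concurrency, not true multithreading, but it shows the same "wait until both slices are ready" idea that avoids the race condition above. `step` is a made-up helper that pretends each step takes a few milliseconds.

```javascript
// Hypothetical helper: a step that finishes after `ms` milliseconds.
function step(description, ms) {
  return new Promise((resolve) => setTimeout(() => resolve(description), ms));
}

async function makeSandwich() {
  await step("open bag of bread", 10); // these steps must happen in order
  await step("remove two slices", 10);

  // These two don't depend on each other, so start both, then wait for BOTH.
  const [pbSlice, jellySlice] = await Promise.all([
    step("peanut butter slice", 30),
    step("jelly slice", 10), // finishes first, but still waits for the other
  ]);

  // Only put the slices together once both are ready.
  return `${pbSlice} + ${jellySlice}`;
}

makeSandwich().then((sandwich) => console.log(sandwich)); // peanut butter slice + jelly slice
```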


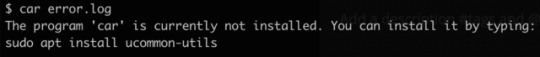


"I'm pretty sure that's illegal"

Between 2004 and 2006, the Motion Picture Association (MPA) started including an anti-piracy video on many DVDs. It included the text,

You wouldn't steal a car.

Media piracy is widespread, and many people justify it by pointing to the greed and exploitation they see in the movie and music industries. As a result, the video was the butt of a lot of jokes, with "You wouldn't download a car" becoming a popular meme.

This image shows the user trying to run a program called car. They're doing this in the shell -- a way of talking to a computer using only text, with no fancy user interface or graphics, which (sometimes) makes things more efficient.

They're trying to run car on a file containing a log of errors, such as might have been spit out by a program that ran into a bug.

I would guess the user made a typo when trying to run cat. This program -- short for concatenate -- spits out the contents of a file on the screen. So, by running cat error.log, the user would have been able to read the error log.
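`cat` is a command-line program, not JavaScript, but what it does is simple enough to sketch in Node.js. This is a bare-bones illustration of "concatenate," not how the real `cat` is implemented.

```javascript
const fs = require("fs");

// Read each file in order and join ("concatenate") their contents into one
// piece of text, which is what `cat` prints to the screen.
function cat(...paths) {
  return paths.map((path) => fs.readFileSync(path, "utf8")).join("");
}

// Usage, assuming these files exist:
// console.log(cat("error.log"));
// console.log(cat("part1.txt", "part2.txt"));
```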

There is a program called car, which is a Cryptographic ARchiver: it lets you encrypt a file to keep other people from being able to read it, and decrypt it only if you have the right key, or password. It is part of a "package" of other programs called ucommon-utils.

When you're using the shell of some Linux computers (Ubuntu, for example) and you try to run a program that hasn't been installed on the computer, such as car in this case, the computer may suggest downloading that program through another program called APT. To do this, you would run sudo apt install ucommon-utils.

(sudo is short for "superuser do." Computers try to protect you from doing things that might mess them up, like installing random stuff from the Internet. Writing sudo before a command runs it with administrator ("superuser") permissions, usually after asking for your password.)

The joke is the computer is prompting the user to download car (via ucommon-utils). This is a play on words with the "you wouldn't download a car" meme.


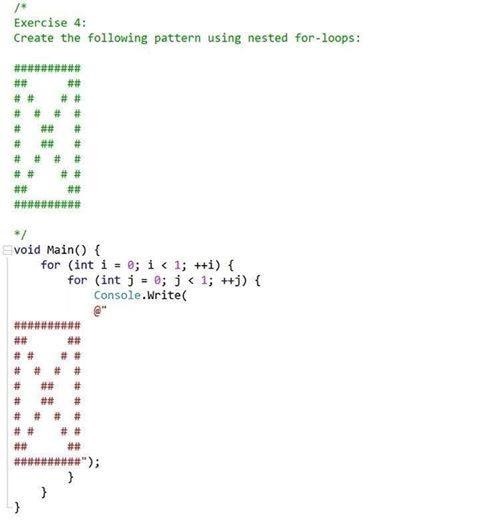


Technically correct

This shows a pretty standard coding challenge, such as might be used in a job interview, and one way to "solve" it, written in the C# ("C Sharp") language.

In programming, a loop is something you write in your code that tells the computer to run another piece of code multiple times. A for loop tells the computer, "Do this once for each user in this list," or "for each number between 1 and 100."

You can nest loops inside each other. For example, if you wanted to create a deck of cards, you could do something like:

```
for each suit in (spades, hearts, diamonds, clubs):
    for each rank in (ace, 2, 3, 4, 5, 6, 7, 8, 9, 10, jack, queen, king):
        new card (suit + rank)
```

(This is pseudo-code – it looks kind-of like code, but isn't, and is just used to explain something.)
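Here's roughly what that pseudo-code could look like as real JavaScript. Writing each card as "rank of suit" is an arbitrary choice.

```javascript
// The outer loop runs once per suit; for each suit, the inner loop runs
// once per rank, so 4 × 13 = 52 cards in total.
const suits = ["spades", "hearts", "diamonds", "clubs"];
const ranks = ["ace", "2", "3", "4", "5", "6", "7", "8", "9", "10", "jack", "queen", "king"];

const deck = [];
for (const suit of suits) {
  for (const rank of ranks) {
    deck.push(`${rank} of ${suit}`);
  }
}

console.log(deck.length); // 52
console.log(deck[0]);     // ace of spades
console.log(deck[51]);    // king of clubs
```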

Whoever wrote the challenge expected the person solving it to do something like:

```
box_height = 12
box_width = 10
for each row in box_height:
    (start a new line)
    for each column in box_width:
        (figure out whether the computer should spit out a '#' or a space)
```

At the end, the computer will have done 120 loops, spitting out a # or a space in each loop, with the end result looking like a box with an 'x' in it.
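Here's a hypothetical JavaScript version of the expected answer. It visits every row and, inside that, every column (12 × 10 = 120 passes), choosing a `#` or a space each time. The exact pattern in the original challenge isn't shown here, so the rule for drawing the X's diagonals (round to the nearest column) is an assumption.

```javascript
function drawBox(height = 12, width = 10) {
  const rows = [];
  for (let row = 0; row < height; row++) {
    let line = "";
    for (let col = 0; col < width; col++) {
      const onBorder = row === 0 || row === height - 1 || col === 0 || col === width - 1;
      // Assumed X rule: the diagonal's column for this row, rounded.
      const diagonal = Math.round((row * (width - 1)) / (height - 1));
      const onX = col === diagonal || col === width - 1 - diagonal;
      line += onBorder || onX ? "#" : " ";
    }
    rows.push(line);
  }
  return rows.join("\n");
}

console.log(drawBox());
```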

However, instead of drawing the box one character at a time over 120 loops, the given answer just spits out the completed box over one loop, which isn't really a loop at all.

The given answer does technically use nested loops. They look like this:

for (int i = 0; i < 1; ++i)

This says, "Here's an integer (a number) called i. It starts at 0. As long as i is less than 1, run this code, then add 1 to i and check again."

Obviously, once you've added 1 to i, i won't be less than 1 anymore, so the computer won't loop through the code again.

All-in-all, the given answer says:

```
do this once:
    do this once:
        (spit out a box with an 'x' in it)
```
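The C# loop carries over almost directly into JavaScript, with `let` instead of `int`. In this sketch, a counter stands in for printing the pre-drawn box. It shows that the nested loops run their body exactly once.

```javascript
let passes = 0;
for (let i = 0; i < 1; ++i) {   // after one pass, i is 1 and i < 1 is false
  for (let j = 0; j < 1; ++j) { // same story for j
    passes++; // the whole pre-drawn box would be printed here, once
  }
}
console.log(passes); // 1
```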

The joke is programmers tend to do exactly what you tell them to do. The hardest part of creating software is figuring out what you want the completed software to do, and then correctly explaining that to the people who are going to write the code.

However, even though the given answer is technically correct, it would probably just annoy the interviewer and make them reject the person who wrote it. Given the choice between a "rockstar" programmer who can slam out code really quickly, and one who can code decently and communicate well and read between the lines, every sensible employer will pick the second one.


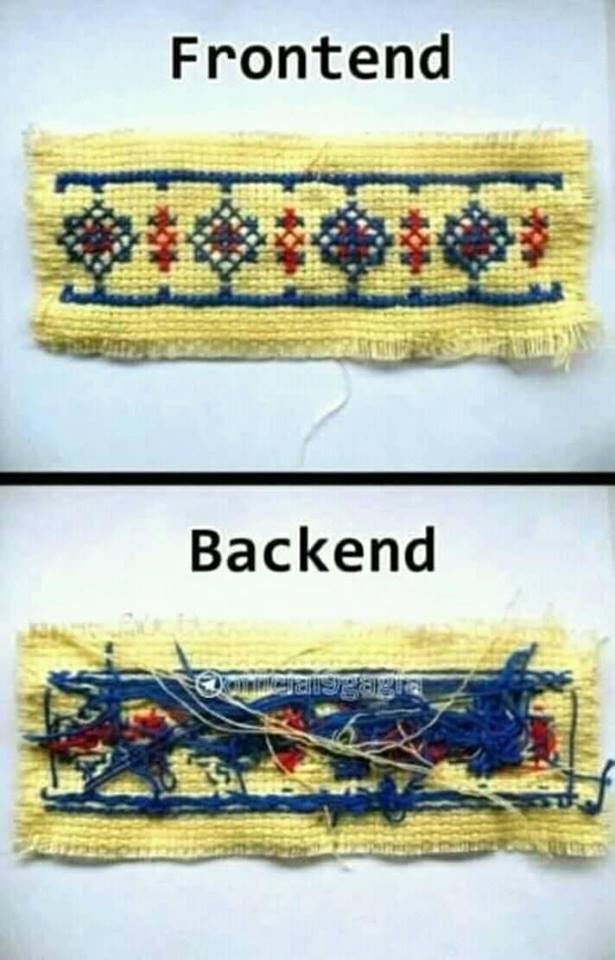


Reality

Software is usually thought of as having two "sides":

The front-end controls the user experience: what your users physically see and interact with.

The back-end supports the front-end, but doesn't directly affect the user experience. The back-end might load data from a database and write data into it, redirect users to an error page when they enter in a bad web address, encrypt passwords, show users one thing when they're logged in and another thing when they're not, and so on.
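To make the split a bit more concrete, here's a hypothetical back-end sketch. It is a plain function, not a real web framework, that decides what to send back for a requested page. It covers two of the jobs listed above: handling a bad web address, and treating logged-in and logged-out users differently. The page names are made up. The numbers are standard web status codes (200 OK, 302 redirect, 404 not found). The front-end would then take care of displaying whatever comes back.

```javascript
const pages = new Map([
  ["/", "Welcome!"],
  ["/account", "Your account settings"],
]);

function handleRequest(path, isLoggedIn) {
  if (!pages.has(path)) {
    return { status: 404, body: "Page not found" }; // bad web address: error page
  }
  if (path === "/account" && !isLoggedIn) {
    return { status: 302, redirectTo: "/login" }; // logged-out users get sent elsewhere
  }
  return { status: 200, body: pages.get(path) };
}

console.log(handleRequest("/", false).body);              // Welcome!
console.log(handleRequest("/typo", true).status);         // 404
console.log(handleRequest("/account", false).redirectTo); // /login
```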

The joke is the front-end of a piece of software can look all nice and pretty, but it's running on a pile of really complicated back-end code. This is similar to a play in a theater: the audience sees an attractive set with a couple actors telling a story. The audience doesn't see all the wires, weights, makeup rooms, costume shops, and people running around and yelling at each other behind the scenes.

Front-end development isn't necessarily easier than back-end development, just as acting/dancing isn't necessarily easier than being on a stage crew. Making a user interface interactive, or adding in animations and other fancy visual stuff, can be really complicated and "ugly." There are a lot of back-end developers who wouldn't touch front-end code with a ten-foot pole (and vice-versa).


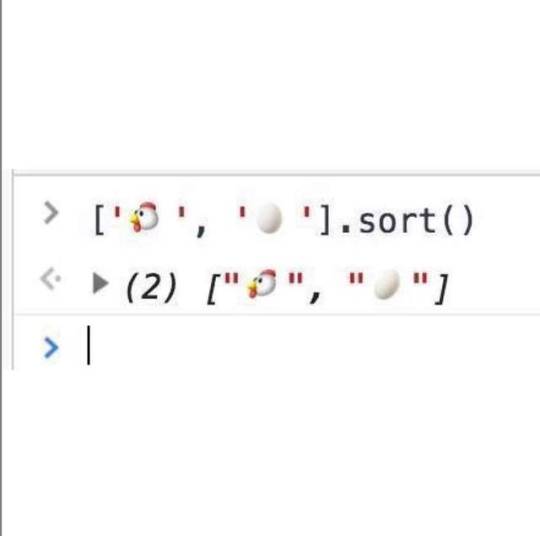


Using Deep Learning to Solve One of Humanity's Oldest Mysteries

Most programming languages have a way of organizing data called an ordered list or an array. This lets you take pieces of data and group them together in some kind of order, which makes it easier to do stuff to each item in that group.

For example, instead of telling the computer,

"Hey, reset the passwords for Alice, Bob, Carol, Dan, Ellen, and Frank. Now sign out Alice, Bob, Carol, Dan, Ellen, and Frank. Now send an e-mail to users Alice, Bob, Carol, Dan, Ellen, and Frank."

...you can tell the computer something simpler like this:

"Hey, put Alice, Bob, Carol, Dan, Ellen, and Frank in an array called 'Users'. Now reset the passwords for Users. Now sign out Users. Now send an e-mail to Users."

Programming languages all use different punctuation marks to indicate different things. In JavaScript, you put [square brackets] around a list of items separated by commas to tell the computer that something is an array.
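Here's the "Users" example in JavaScript. The three account functions are hypothetical stand-ins that just write down what they would do, so the sketch can run on its own.

```javascript
// Hypothetical stand-ins for real account code.
const log = [];
const resetPassword = (user) => log.push(`reset password for ${user}`);
const signOut = (user) => log.push(`signed out ${user}`);
const sendEmail = (user) => log.push(`e-mailed ${user}`);

// Square brackets and commas make an array.
const users = ["Alice", "Bob", "Carol", "Dan", "Ellen", "Frank"];

// One instruction per step, instead of one per person.
users.forEach((user) => resetPassword(user));
users.forEach((user) => signOut(user));
users.forEach((user) => sendEmail(user));

console.log(log.length); // 18 (6 users × 3 steps)
```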

All programming languages that have arrays also have some way of sorting them. For example, if you tell the computer to sort an array that contains numbers, it'll put them in order from smallest to largest or the other way around. If the array contains pieces of text, the computer will put them in alphabetical order.
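JavaScript has a well-known quirk here. Its built-in `sort` compares items as text by default, even when they're numbers. To sort numbers from smallest to largest, you pass in a comparison function. Text is ordered by character code rather than by true alphabetical order, so capital letters come before lowercase ones.

```javascript
const numbers = [10, 9, 1];

// Default: compared as text, so "10" comes before "9".
console.log([...numbers].sort().join(", "));               // 1, 10, 9

// With a comparison function: sorted as numbers, smallest first.
console.log([...numbers].sort((a, b) => a - b).join(", ")); // 1, 9, 10

// Character codes put capital letters before lowercase ones.
console.log(["carol", "Bob", "alice"].sort().join(", "));   // Bob, alice, carol
```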

In this meme, chicken and egg emoji have been put into a JavaScript array. (Emoji started going "mainstream" in about 2010, and are now so popular that some programming languages let you write code with them, just like letters and numbers.) This JavaScript array is then sorted.

The joke is this is a way to answer the age-old question, "What came first: the chicken or the egg?" But really, it's about as valid as putting the words 'chicken' and 'egg' in alphabetical order and saying that answers the question. In the "dictionary" that computers use, the chicken emoji comes before the egg emoji, which is why JavaScript puts the chicken first when it sorts the array.
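Here's the meme's experiment in a form you can run yourself, assuming the chicken in question is 🐔. The computer's "dictionary" order is just each character's code number. In Unicode, the chicken is U+1F414 and the egg is U+1F95A, so the chicken's number is smaller.

```javascript
const sorted = ["🥚", "🐔"].sort();
console.log(sorted.join(" ")); // 🐔 🥚

// The "dictionary" order is each character's code number (shown in hex):
console.log("🐔".codePointAt(0).toString(16)); // 1f414
console.log("🥚".codePointAt(0).toString(16)); // 1f95a
```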

Sorting is one of the first things you learn when picking up a programming language, and it has absolutely nothing to do with "deep learning," which refers to a fancy kind of machine learning (the technology behind a lot of modern artificial intelligence). The other joke is the tech industry tends to inflate the value of things by using really over-blown buzzwords to describe basic stuff.


(Version) Control

Git is version control software: it lets you track all the changes made to some files, including changes you make yourself and, if other people are working on the same files, changes they make too. (This shouldn't be confused with GitHub, which is a website that uses this software, similar to GitLab, Bitbucket, and several others.)

.gitignore is a special file that tells Git not to track certain files. This is useful when there are files in a project that aren't important, or that computers put there automatically, or that you don't want/need other programmers to see. For example:

I sometimes keep a little "scratchpad" file in my coding projects where I can copy and paste snippets of code or write notes. The stuff in that file isn't really important, so there's no point in cluttering things up by tracking its changes.

If you have a Mac, sometimes it automatically sticks a file called .DS_Store into different folders on your computer, which helps it keep things organized under-the-hood. A .DS_Store file only works on the computer that made it, you have no control over it, and you can't read it, so there's no point in telling Git to track it.
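Git's real matching rules are more involved. They cover folders, `!` exceptions, `**`, and more. Still, a toy version of the idea that handles only exact file names and `*.extension` patterns might look like this JavaScript sketch. The pattern list is a made-up example, and this is not Git's actual algorithm.

```javascript
// Toy ignore check: a pattern is either an exact file name ("scratchpad.txt")
// or "*.ext", meaning any file ending in .ext. Like a simple .gitignore line,
// a name pattern matches the file in any folder.
function isIgnored(filePath, patterns) {
  const fileName = filePath.split("/").pop();
  return patterns.some((pattern) =>
    pattern.startsWith("*.")
      ? fileName.endsWith(pattern.slice(1)) // "*.log" -> ends with ".log"
      : fileName === pattern
  );
}

const patterns = [".DS_Store", "scratchpad.txt", "*.log"];
console.log(isIgnored("src/.DS_Store", patterns)); // true
console.log(isIgnored("src/app.js", patterns));    // false
console.log(isIgnored("error.log", patterns));     // true
```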

The Google results for git ignore include many files that are commonly ignored, or that people aren't sure should be ignored, which explains why they're using Google to look them up.

The joke is how different the results of the two Google searches look, even though the text of the searches is pretty similar. It also implies that programmers aren't interested in relationships, and are much more interested in writing code. The man in the pictures is Linus Torvalds, a famous programmer who created Git as well as the Linux kernel, the core of the Linux operating system.


Priority Matters

Refactoring is making changes to existing code without the intent of adding new functionality. Usually programmers refactor to make their code easier to read, more organized, or more efficient.
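Here's a tiny, hypothetical before-and-after in JavaScript. The refactored version is easier to read, but it gives exactly the same results, which is the whole point of a refactor.

```javascript
// Before: it works, but the names don't say what anything is for.
function f(a) {
  let r = 0;
  for (let i = 0; i < a.length; i++) {
    if (a[i] > 12) r = r + 1;
  }
  return r;
}

// After: a descriptive name and a built-in array method.
// Nothing new was added; it just reads better.
function countTooOldForKidsMeal(ages) {
  return ages.filter((age) => age > 12).length;
}

console.log(f([5, 13, 40]), countTooOldForKidsMeal([5, 13, 40])); // 2 2
```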

But refactoring is a slippery slope: once you start "fixing" things, it can be really hard to stop. There's no such thing as "perfect" code -- there's always some way it could be better. When you're coding you'll notice some little thing, think to yourself "I'll just take 2 minutes to fix this," and then 10 hours later you've started fixing a million "little things" and haven't finished any of them.

The joke is the programmer started rewriting some code to fix a bug in his program, then got caught up in "refactoring." His code may be "better" now, but the bug still exists, and to everyone using the program things are exactly the same as they were before.


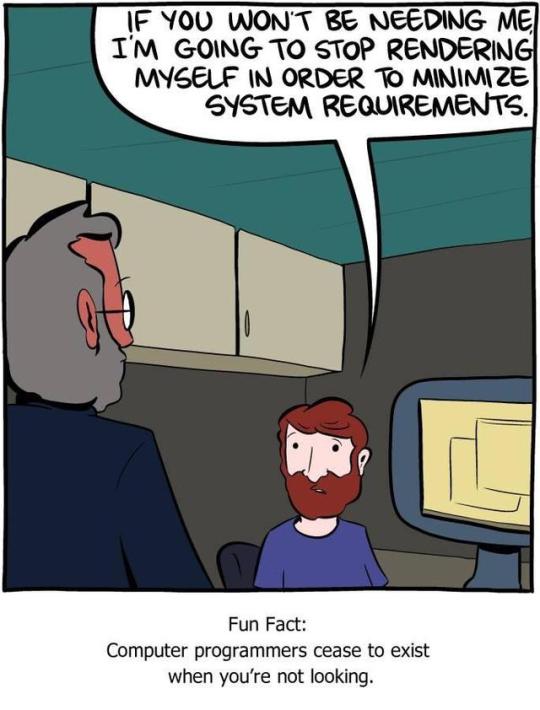


dev->hide();

Making stuff show up on a screen can take a lot of memory. If your computer runs out of memory, it stops working. So programmers need to make sure their code uses memory efficiently. One way is to have the computer only process data that is needed right now.

Think about a video game. The game's "world" might be huge, with lots of space for your character to run around. But at any point in time you only ever see a little piece of that world, the area that's closest to your character. The computer saves memory by only rendering components of the world -- doing the math needed to make shadows, 3D shapes, textures -- that should show up on the screen.
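Here's a hypothetical JavaScript sketch of "only render what's near the player." Everything in the world has a position, but only the things within a certain distance get picked for drawing. Real games usually check what falls inside the camera's view rather than a simple circle, so treat this as the idea, not the technique any particular engine uses.

```javascript
function visibleObjects(world, player, viewDistance) {
  return world.filter((thing) => {
    const dx = thing.x - player.x;
    const dy = thing.y - player.y;
    return Math.hypot(dx, dy) <= viewDistance; // straight-line distance
  });
}

const world = [
  { name: "tree", x: 3, y: 4 },     // 5 units away
  { name: "castle", x: 500, y: 20 }, // far away: skipped
  { name: "rock", x: -2, y: 1 },    // about 2.2 units away
];
const player = { x: 0, y: 0 };

const onScreen = visibleObjects(world, player, 10);
console.log(onScreen.map((thing) => thing.name).join(", ")); // tree, rock
```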

If a tree falls in a virtual forest, and no-one is around to see it, whether it makes a sound is irrelevant because there's no point in rendering it.

Other programs beyond just video games do the same thing. As you read this, your web browser (Chrome, Firefox, Internet Explorer, Safari, etc.) might have other tabs and windows open, but the one showing this website is the one that's "in focus." Your browser may have put those other tabs and windows "to sleep," and will spend less memory and processing power on them until you click over to them and they come back in focus. This is why if you start streaming a video in one window, and then open up another window, the video sometimes loads more slowly.

The joke is, like the programs they write, developers actually turn invisible when they're not "in focus" -- that is, when you're not looking at them. (The title text is a code-y way of telling a developer to hide.)
