New Post has been published on LabAutomations

New Post has been published on https://labautomations.com/blog/ginolis-nanoliter-dispensing-pump-now-available-in-starter-kit/

Ginolis Nanoliter Dispensing Pump Now Available in Starter Kit

Ginolis, a global supplier of automated production and dispensing solutions, has launched its patented PMB dispensing pump in an easy-to-use starter kit. The Piezo Motor Bellows (PMB) pump provides accurate and repeatable non-contact dispensing of nanoliter range volumes.

The basic starter kit includes the PMB pump, a control laptop, a degasser, tubing, valves, tips and a travel case. Optional upgrades include a drop verification camera and backlight. The PMB Starter Kit is available for sale online or by contacting [email protected].

The PMB Principle

The principle behind the Ginolis PMB pump is a highly accurate piezo motor connected to a bellows, which the motor contracts and expands. In doing so, the bellows displaces a volume in a closed chamber that is equal to the aspirated or dispensed volume. The advantage of a bellows-driven pump is that it has no parts subject to friction. The result is a pump with an extremely long life and no need to replace seals or other wear parts in the aspiration and dispensing cycle.
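As a rough illustration of the displacement principle, the sketch below converts a target volume into the bellows travel needed to displace it, assuming an ideal bellows where displaced volume equals effective area times stroke. The function name and the 10 mm² effective area are assumptions made for illustration only; they are not Ginolis specifications.

```python
def bellows_stroke_um(volume_nl: float, effective_area_mm2: float) -> float:
    """Return the bellows travel (micrometres) that displaces volume_nl.

    Assumes an ideal bellows: displaced volume = effective area x stroke.
    """
    if effective_area_mm2 <= 0:
        raise ValueError("effective area must be positive")
    volume_mm3 = volume_nl * 1e-3            # 1 nl = 0.001 mm^3
    stroke_mm = volume_mm3 / effective_area_mm2
    return stroke_mm * 1000.0                # mm -> micrometres


# Hypothetical effective area; not taken from any Ginolis datasheet.
ASSUMED_AREA_MM2 = 10.0

stroke = bellows_stroke_um(1.0, ASSUMED_AREA_MM2)
print(f"1 nl needs about {stroke:.3f} um of travel")
```

Under these assumed numbers a 1 nl dose corresponds to a sub-micrometre stroke, which suggests why a fine-resolution piezo motor is a natural fit for this kind of pump.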

The pump has an integrated pressure sensor which is used for clog and leak detection, detection of air in the fluid lines, pre-pressurizing the pump and automated monitoring of pressure stabilization during pre-dispensing. The PMB pump is equipped with a solenoid dispensing valve and a ceramic tip to dispense volumes as low as 1 nl.

Application

The Ginolis PMB pump starter kit is an ideal solution for academic and R&D environments. The dispensing pumps can also be integrated into custom robotic solutions for on-the-fly spotting, array printing, coating and roll-to-roll dispensing. Possible applications also include reagent dispensing, protein and DNA handling, and point-of-care (POC) solution-based tests.

The starter kit is controlled using Ginolis’ easy-to-use Ginger software. The software is programmable and comes equipped with an automated priming function and status monitoring. The PMB pump can be operated in bulk and aspiration modes.

See the Ginolis PMB dispensing pumps in action: [video: Customer Testimonial VTT]

Learn more about Ginolis Ginger Software: [video: Ginolis Ginger]

For more information about Ginolis’ high-precision dispensing, production automation and QC solutions, visit Ginolis.com.

New Post has been published on https://labautomations.com/hospitals-putting-their-labs-in-one-place/

Hospitals Putting Their Labs in One Place

Centralized health system laboratories can lead to efficiencies and better patient care

The question of whether to centralize operations is common in business — and there are compelling arguments on both sides of the issue. Hospitals wrestle with the same question when it comes to clinical labs. Several organizations are finding greater efficiencies and cost savings in centralizing their lab operations into a single core facility. They’re aided in this endeavor by new concepts in lab design and Lean principles.

Centralized or “core” labs can be tightly focused on routine tests such as chemistry, hematology and coagulation. Others might accommodate a much wider variety of specialty tests — including flow cytometry, special coagulation, molecular diagnostics, complex microbiology, virology, endocrinology, toxicology, cytogenetics, molecular genetics, cytology and anatomic pathology — as a way to offer one-stop shopping.

The end goal is to produce scientifically accurate results, provide efficient operations, reduce the cost per test and ensure that accurate results are returned to patients in hours rather than days. While core labs can significantly benefit health care providers and patients, planning such facilities can be complicated.

For example, advances in testing technology, particularly the enhanced speed and efficiency of automated equipment, can dramatically increase sample throughput. But the rise of automated testing systems and the expansion of these systems’ high-volume capabilities come with a greater need to anticipate the flow of samples, equipment and employees.

Northwell Health, based in New Hyde Park, N.Y., considered an expanded core lab during a period of growth in its physical footprint and in the number of physicians it employs, and after realizing that it was sending many of its tests to commercial labs. The system decided to bring most of its tests in-house and was able to do so by building two core labs that service the entire system.

Moving a project forward

Before making a decision to centralize lab functions, a health care system needs to determine the number of tests it performs now as well as the number and types of tests it hopes to perform in the future.

It must then address questions of personnel and equipment to serve its anticipated test volume: How many people will the hospital need to staff its core lab — typically around the clock — to handle the hospital’s current and future volume? What training and skills will staff need? How much equipment will it take to handle the volume? What is the capacity of automated equipment? What infrastructure will be needed to support operations?

It sounds like a simple equation. The number and types of tests that will be performed determine the size of the staff and, therefore, the scale of the core lab. In practice, this can be difficult to solve when working with tight budgets.
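The "simple equation" can be sketched as a first-pass sizing calculation. The example below is a minimal illustration, not a planning tool: the function name, the per-analyzer and per-technologist rates and the annual volume are all hypothetical inputs, and the sketch sizes for average load only, ignoring peaks, test mix and backup capacity.

```python
import math


def core_lab_sizing(tests_per_year, analyzer_tests_per_hour,
                    tests_per_tech_hour, hours_per_day=24, days_per_year=365):
    """Estimate analyzers and technologists on duty from annual test volume.

    All rates are planning assumptions supplied by the caller.
    """
    tests_per_hour = tests_per_year / (hours_per_day * days_per_year)
    return {
        "tests_per_hour": tests_per_hour,
        # Round up: a fraction of an analyzer or a person cannot be scheduled.
        "analyzers": math.ceil(tests_per_hour / analyzer_tests_per_hour),
        "techs_on_duty": math.ceil(tests_per_hour / tests_per_tech_hour),
    }


# Hypothetical inputs, for illustration only.
plan = core_lab_sizing(
    tests_per_year=5_000_000,
    analyzer_tests_per_hour=400,
    tests_per_tech_hour=60,
)
print(plan)
```

Even in this toy form, the budget tension is visible: every change in projected volume or test mix moves both the equipment count and the staffing on every shift.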

Getting down to details

After determining the nature of the testing to be performed and the intended testing volume, administrators and planners can then draw a more detailed picture. It is not at all strange for the picture to take shape gradually.

Hospitals first must work out space considerations for the new lab. Labs require some flexibility and floor space, so many health systems will seek out existing, large, open-bay space. Ideally, the building is a simple, insulated shell: a well-built big-box store, for example. This is desirable in spite of many potential issues that can arise.

Northwell Health is replacing its existing core lab in Lake Success, N.Y., with two automated facilities, including a small facility in the borough of Queens that will be devoted to biological testing and function as the hospital system’s immediate connection to New York City, and a larger facility devoted to chemistry-related testing within Northwell’s Center for Advanced Medicine, six miles away.

Northwell’s anticipated testing growth was pegged at between 250 percent over capacity at the start and 400 percent in the future. In response, several collaborating manufacturers created a fully automated workflow and testing system tailored to Northwell’s needs.

When it is fully implemented next year, Northwell will be home to an automated clinical testing platform in which two parallel, duplicate lines will run 120 linear feet and together will be able to process up to 20 million tests annually around the clock, utilizing the platform’s various analyzer modules.
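To put the annual figure in operational terms, the short calculation below converts 20 million tests per year into an average hourly rate. It assumes true around-the-clock operation for a 365-day year and an even split between the two lines; both are simplifying assumptions, and the article's figure is an upper bound ("up to").

```python
HOURS_PER_YEAR = 365 * 24  # around-the-clock operation, non-leap year


def average_hourly_rate(tests_per_year: float, lines: int = 1) -> float:
    """Average tests per hour per line, assuming load is spread evenly."""
    return tests_per_year / HOURS_PER_YEAR / lines


total = average_hourly_rate(20_000_000)
per_line = average_hourly_rate(20_000_000, lines=2)
print(f"{total:.0f} tests/hour overall, {per_line:.0f} tests/hour per line")
```

Real demand is rarely flat across a day, so peak-hour capacity would need to be higher than this average.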

Accommodating such a large platform and its accessories requires a large area of open space, so it is a design challenge to keep the layout logical and flows efficient, both now and in the future. Because the move from the loading dock to receiving to processing to accessioning is such an important arrangement of spaces leading to the automated platform, the automated platform and its inbound, pre-analytic workflow will sit relatively close to a perimeter wall.

Executive Corner: Planning the core lab

A variety of programmatic and design issues can influence the planning of a centralized clinical testing — or core — lab. While programmatic issues theoretically come before design, the issues are intertwined. The following categories of planning and design considerations, all of which are relevant to creating a successful facility solution, are therefore not sequential, even though the first two are likely the initial steps in the process.

• The operational concept: Programming the space begins with understanding the staffing model, which includes everything from hours of operation and the work-shift strategy to safety protocols and cleaning procedures. Then comes the development of a logistical plan for sample management along the entire chain of custody control, including receiving, sample login, distribution, testing, reporting, freezer or cold-room storage, waste removal and management of consumables. Equipment-related optimization issues like capacity modeling, throughput analysis and backup strategy round out this operational strategy.

• Organization of flows: Applying Lean principles, the team then tackles work cell development with sample flows and efficient use of equipment and space and evaluates layout opportunities as well as personnel, equipment, sample and waste flows. Flow analysis is used to confirm contamination control, sample integrity and protocols anticipated. The team looks for opportunities to prevent contamination, process overlaps and bottleneck conditions. It also evaluates furnishings and equipment arrangement (e.g., sample prep and instrument layout) to help optimize work patterns and shared equipment opportunities. The team considers lab, office and support space adjacencies, which are critical for connectivity and supervision, as well as interaction space for coordination between testing groups.

• Modular planning for flexibility: An open-lab concept allows the greatest flexibility of space for future change with functional separations only where required. Within this space, utility distribution is planned to allow for open floor plates and flexible connections. The team then establishes a planning grid that allows for adaptability in the technology platform, lab automation, and assay and equipment upgrades. Sample preparation, incubation, amplification/detection and recording stations — which are important to optimize movement between operations — are located within the grid. Additionally, serviceability of equipment and calibration requirements (utilization logs) are confirmed. Finally, structural loading and vibration criteria, essential for sensitive equipment and robotics, are reviewed.

• Development of a safety and containment strategy: The team determines the biosafety level or potency of compounds and provides safeguards for personnel (e.g., personal protective equipment) and product (e.g., containment device or room), gowning and degowning concepts and isolation requirements. It also conducts safety or hazard and operability reviews and confirms intended standard operating procedures.

• Cross-contamination control: This involves functional separation of special testing needs; space pressure cascade and relationships between adjacent areas; and special procedure/special design requirements, including those for cleanrooms designed to ISO standards, cleanable surfaces, particulate-free finishes, environmental monitoring and, potentially, high-efficiency particulate air filtration. An air-handling zoning and cleanliness strategy will need to be put in place, whether using directional air flows or special spatial monitoring, when required.

• Regulatory impacts: Besides typical building codes and standards, these may include Centers for Disease Control and Prevention and World Health Organization guides; Clinical and Laboratory Standards Institute regulations; American Society of Heating, Refrigerating and Air-Conditioning Engineers standards; and National Fire Protection Association standards for flammables and life safety, among others.

• Special systems and security considerations: This will involve controlled access, equipment monitoring and alarms, data storage and multiple electronic reporting systems (especially those governing uninterruptible power supply, validation, redundancy and protection of personal information) as well as a data backup strategy that is balanced against operational costs.

Other workflows — samples moving to and from manual testing areas or refrigerated storage and to disposal — can’t interfere with each other and thus loosely occupy the other quadrants of open space. There is space allocated in two directions, presumably farthest from the inbound workflow, to accommodate future expansion of automated systems. This places other functions, such as offices, conference rooms, restrooms, locker rooms and break rooms, along the periphery, possibly to be moved farther away later.

Aside from Northwell’s 40-some pathologists who inhabit a second, smaller floor in the core lab, the other roughly 1,000 employees share offices and open workstations across multiple shifts. These offices and workstations are arranged to allow for close proximity of lab supervisors to their associated labs.

Labs achieve adaptability through what are now standard design moves: utility services that can be accessed from cables that descend from the ceiling; floor drains provided in a grid pattern that can be capped when not needed; and mobile furnishings or flexible lab furniture systems in which utilities are prewired and preplumbed.

New work models

Lab operators, too, have begun to reshape lab functions with an eye toward optimizing the testing process, often using Lean work cell concepts.

The Mayo Clinic in Rochester, Minn., for example, has adopted a strategy in which physicians, hospitals and clinics must all send samples that are already aliquoted so they can be more easily routed to different testing stations — something Northwell hopes to do in the future. Many labs are seeking similar ways to streamline operations, which could have an impact on the floor plan. Receiving samples in consistent form in a consistent electronic format and with a consistent means of tracking could mean fewer staff and less space devoted to accessioning and more to information technology, for example.

NYC Health + Hospitals Corp.’s agreement with Northwell to create its forthcoming core lab is being counted on to save costs through the efficiencies created with its centralized, modern, highly automated and ergonomic lab facilities. Such projects are sure to provide immeasurable benefits to physicians, lower costs and speed lab results to anxious patients.

Jim Gazvoda is a principal and Jeff Raasch is design principal at Flad Architects, headquartered in Madison, Wis.

Source: http://www.hhnmag.com

New Post has been published on https://labautomations.com/collaborative-robotics-in-practise/

Collaborative Robotics in Practice

Collaborative Robotics in Practice at the Institute for Lab Automation and Mechatronics

WHEN? WHERE?

Tuesday, August 29th, 8:30 to 16:30

HSR Hochschule für Technik Rapperswil

Content:

Types of human-robot collaboration

Applications of collaborative robotics in production

Safety with collaborative robots

Practical examples

First experiences with collaborative robots

Target group:

technical employees, engineers and executives in the field of production and manufacturing

Course instructor:

Prof. Dr. Agathe Koller, director ILT Institute for Lab Automation and Mechatronics

Manuel Altmeyer, business unit manager industrial automation ILT

Costs:

700 CHF incl. course material and lunch

Contact:

Prof. Dr. Agathe Koller, agathe.koller(at)hsr.ch; 0041 55 222 49 29

Registration:

For registration, please send a message to Doris Waldburger (doris.waldburger(at)hsr.ch).

Please note that places are limited.

Cancellation of registration

Cancellation 3 weeks before the course day: no costs are charged

Cancellation 1 week before the course day: 50% of costs are charged

Cancellation later than 1 day before the course day: full costs are charged

New Post has been published on https://labautomations.com/lego-lab-automation-kit-wtf/

LEGO Lab Automation Kit! WTF

Elementary and secondary school students who later want to become scientists and engineers often get hands-on inspiration by using off-the-shelf kits to build and program robots. But so far it’s been difficult to create robotic projects to foster interest in the “wet” sciences (biology, chemistry and medicine), so called because experiments in these fields often involve fluids.

Now, Stanford bioengineers have shown how an off-the-shelf kit can be modified to create robotic systems capable of transferring precise amounts of fluids between flasks, test tubes and experimental dishes.

By combining the Lego Mindstorms robotics kit with a cheap and easy-to-find plastic syringe, the researchers created a set of liquid-handling robots that approach the performance of the far more costly automation systems found at universities and biotech labs.

“We really want kids to learn by doing,” said Ingmar Riedel-Kruse, assistant professor of bioengineering, who led the team that reports its work in the journal PLoS Biology.

“We show that with a few relatively inexpensive parts, a little training and some imagination, students can create their own liquid-handling robots and then run experiments on it – so they learn about engineering, coding and the wet sciences at the same time,” said Riedel-Kruse, who is also a member of Stanford Bio-X.

Robots meet biology

These robots are designed to pipette fluids from and into cuvettes and multiple-well plates, types of plastic containers commonly used in laboratories. Depending on the specific design, the robot can handle liquid volumes far smaller than one microliter, a droplet about the size of a single coarse grain of salt. Riedel-Kruse believes that these Lego designs might even be useful for specific professional or academic liquid-handling tasks where related robots cost many thousands of dollars.

His overarching idea is to enable students to learn the basics of robotics and the wet sciences in an integrated way. Students learn STEM skills like mechanical engineering, computer programming and collaboration while gaining a deeper appreciation of the value of robots in life sciences experiments.

Riedel-Kruse said he drew inspiration from the so-called constructionist learning theories, which advocate project-based discovery learning where students make tangible objects, connect different ideas and areas of knowledge and thereby construct mental models to understand the world around them. One of the leading theorists in the field was Seymour Papert, whose seminal 1980 book Mindstorms was the inspiration for the Lego Mindstorms sets.

“I saw how students and teachers were already using Lego robotics in and outside school, usually to build and program moving car-type robots, and I was excited by that – and the kids obviously as well,” he says. “But I saw a vacuum for bioengineers like me. I wanted to bring this kind of constructionist, hands-on learning with robots to the life sciences.”

Do it yourself

In their PLoS Biology paper, the team offers step-by-step building plans and several fundamental experiments targeted to elementary, middle and high school students. They also offer experiments that students can conduct using common household consumables like food coloring, yeast or sugar. In one experiment, colored liquids with distinct salt concentrations are layered atop one another to teach about liquid density. Other tests measure whether liquids are acids like vinegar or bases like baking soda, or which sugar concentration is best for yeast. Yet another experiment uses color-sensing light meters to align color-coded cuvettes.

The coding aspect of the robot is elementary, Riedel-Kruse said. A simple programming language allows students to place symbols telling the robot what to do: Start. Turn motor on. Do a loop. And so forth. The robots can be programmed and operated in different ways. In some experiments, students push buttons to actuate individual motors. In other experiments, students preprogram all motor actions to watch their experiments executed automatically.
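The "start, turn motor on, do a loop" pattern can be sketched in ordinary code. The example below is a simulation only: `SimulatedMotor` and `dispense_into_wells` are hypothetical names invented for illustration, not the Lego Mindstorms programming interface, and the angles are arbitrary. It shows the preprogrammed mode, where every motor action is defined up front and then runs automatically.

```python
class SimulatedMotor:
    """Stand-in for a robot motor; records commands instead of moving hardware."""

    def __init__(self, name):
        self.name = name
        self.log = []

    def run(self, degrees):
        # A real motor would rotate here; the simulation just logs the command.
        self.log.append((self.name, degrees))


def dispense_into_wells(arm, plunger, wells, step_degrees, dose_degrees):
    """Loop over wells: advance the arm, then push the syringe plunger."""
    for _ in range(wells):
        arm.run(step_degrees)       # move to the next well
        plunger.run(dose_degrees)   # dispense one dose


arm = SimulatedMotor("arm")
plunger = SimulatedMotor("plunger")
dispense_into_wells(arm, plunger, wells=3, step_degrees=90, dose_degrees=15)
print(arm.log)
print(plunger.log)
```

The button-driven mode described above would call `run` on a single motor per button press instead of looping.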

“It’s kind of easy. Just define a few parameters and the system works,” he said, adding, “These robots can support a range of educational experiments and they provide a bridge between mechanical engineering, programming, life sciences and chemistry. They would be great as part of in-school and afterschool STEM programs.”

STEM-ready

Riedel-Kruse said these activities meet several important goals for promoting multidisciplinary STEM learning as outlined by the Next Generation Science Standards (NGSS) and other national initiatives. He stressed the cross-disciplinary instruction value that integrates robotics, biology, chemistry, programming and hands-on learning in a single project.

The team has co-developed these activities with summer high school students and a science teacher, and then tested them with elementary and middle school students over the course of several weeks of instruction. These robots are now ready for wider dissemination to an open-access community that can expand upon the plans, capabilities and experiments for this new breed of fluid-handling robots, and they might even be suitable to support certain research applications.

“We would love it if more students, do-it-yourself learners, STEM teachers and researchers would embrace this type of work, get excited and then develop additional open-source instructions and lesson plans for others to use,” Riedel-Kruse says.

This article has been republished from materials provided by Stanford University. Note: material may have been edited for length and content. For further information, please contact the cited source.

Credit: TechnologyNetworks

New Post has been published on https://labautomations.com/paperless-lab-academy-2017-transforming-scientific-information-into-actionable-insight/

Paperless Lab Academy 2017: Transforming Scientific Information Into Actionable Insight

The fifth edition of the Paperless Lab Academy, held on April 4th and 5th, consolidated its reputation as one of the best European lab automation congresses. The event took place in Barcelona and brought together about 250 people from the industry, from Europe, the United States and India.

At NL42 (www.nl42.com), we are extremely pleased with the results and the feedback received after the recent acquisition of the event’s organization.

The event is dedicated to companies that need to adopt a paperless approach to scientific data management throughout the product data lifecycle. We decided to focus this year’s edition on the Internet of Lab Things (IoLT) with the title “2020 Roadmap for Digital Convergence – transforming scientific information into actionable insight”.

The event consists of a balanced mix of plenary and workshop sessions. Event sponsors each run an interactive workshop where they showcase the latest trends in methodology, systems and tools. Attracted by the chance to get to know the key players and the newcomers, our visitors have several opportunities to network and interact with providers, peers and colleagues.

This post-event article aims to summarize the key concepts introduced and discussed over these two days in the plenary sessions and the 20 interactive sponsored sessions.

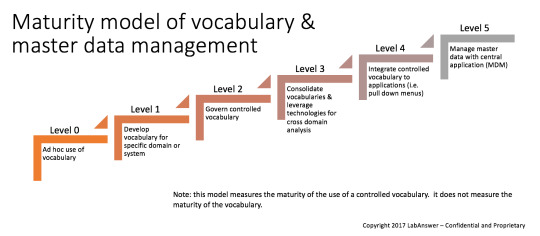

The discussions started at the very beginning of the journey, when a laboratory first sets out to transform its processes from paper to paperless or, better said, to less paper. Pat Pijanowski, partner at LabAnswer, explained that “transformational solutions start with a comprehensive data strategy”, highlighting the importance of doing the foundational work first and of assessing your current state of readiness against the six levels of a maturity model for vocabulary and master data management.

Figure 1: published with the authorization of the author

Once the transformation process has started, the need to connect instruments, integrate systems and ensure a seamless flow of data between interfaces leads to compliance requirements such as data integrity.

Multiple sponsored workshop sessions covered these topics from different angles. Labware and Waters presented their own solutions and discussed data integrity requirements and ALCOA+ definitions, while iVention took the opportunity to share their customer experience with the support of an auditing expert.

IoLT (Internet of Lab Things) is becoming an established acronym in the industry: an adaptation for laboratories of the IoT concept that everybody is getting familiar with. The idea of receiving data directly from instruments, sensors and devices fits the laboratory world perfectly, and the opportunities to create seamless integration will certainly drive the future of scientific data management.

In fact, the IoLT acronym was first introduced at the previous edition of the event, confirming that the Paperless Lab Academy is the place to be to learn about future trends in the market.

Michael Shanler, Research Vice President Analyst at Gartner, set the scene for the IoLT concept in his presentation “Exploring The Digital Potential For Laboratory of the Future”. The future is already here, as several sponsors demonstrated during their workshops. Cubuslab, specifically, presented a platform that allows IoLT to be implemented today.

Shanler emphasized that we shouldn’t confine IoT to a single domain, as the big digital potential lies in new business models with converging ecosystems. He also analysed the elements and uses of AI-assisted lab and business processes.

Shanler described the IoT ecosystem as follows: IoT in Labs is at the core of the ecosystem. It is related to the Industrial Internet of Things (IIoT) through quality manufacturing, and it is linked through research and development to a series of elements such as Smart home IoT, mHealth (HC/Fitness) and dClinical (drug trials). At the same time, Smart home IoT is related to Connected car IoT and Energy Utilities IoT. And, of course, IoT in Labs and IIoT are converging ecosystems.
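The relationships in this description can be written down as a small undirected graph, which makes it easier to see which ecosystems touch which. The sketch below encodes only the links stated in the paragraph above; the data layout and the `build_graph` helper are illustrative choices, not something presented at the event.

```python
from collections import defaultdict

# (ecosystem A, ecosystem B, linking domain); None where no domain is stated.
EDGES = [
    ("IoT in Labs", "Industrial IoT (IIoT)", "quality manufacturing"),
    ("IoT in Labs", "Smart home IoT", "research and development"),
    ("IoT in Labs", "mHealth", "research and development"),
    ("IoT in Labs", "dClinical", "research and development"),
    ("Smart home IoT", "Connected car IoT", None),
    ("Smart home IoT", "Energy Utilities IoT", None),
]


def build_graph(edges):
    """Return an adjacency map; each link is stored in both directions."""
    graph = defaultdict(set)
    for a, b, _domain in edges:
        graph[a].add(b)
        graph[b].add(a)
    return graph


graph = build_graph(EDGES)
for node in sorted(graph):
    print(f"{node}: {sorted(graph[node])}")
```

In this encoding, IoT in Labs has the most direct neighbours, which matches its description as the core of the ecosystem.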

Mark Newton, Senior Consultant-QA at Eli Lilly and a key contributor to the ISPE GAMP working group, helped the audience evaluate the data integrity challenges associated with systems that generate large amounts of data, such as chromatography data systems, in his talk “Manipulating Chromatography Data (for Fun and Profit)”. It’s clear that these systems are now under scrutiny in many investigations and audits. At the same time, we believe that the major providers are taking significant steps to support their clients in ensuring data integrity throughout the entire data lifecycle.

Kurt in Albon, Global Head of Quality Assurance at Lonza, took us to data governance, approaching the challenge from the managerial point of view. The human factor remains one of the most critical aspects of these projects. A data governance approach ensures that all employees are engaged in its successful implementation, from senior management, which should instill the culture, to the operators who generate, analyse and submit the information.

Sofie van der Meulen, Attorney at Axon Lawyers and a keynote speaker at the event in recent years, always brings very interesting insight from the legal perspective. The IoT introduces new legal challenges, as devices are now able to collect a huge amount of data, including private information. We believe that the ability to handle this large amount of data in the most appropriate manner in terms of privacy will be a key differentiator for the future, particularly in R&D environments.

Louis Halvorsen, Chief Technical Officer at Northwest Analytics, walked the audience through the concept of EMI (Enterprise Manufacturing Intelligence) and the design principles of Industry 4.0. We found this presentation to be the perfect summary of the opportunities that proper “paperless” data management can bring to the industry, allowing R&D, production and quality information to be shared in a simple manner.

Several other presentations highlighted very interesting aspects of scientific data management, including how research and academic organizations can be supported by “paperless” projects.

Prof. Thomas Friedli, Professor for Production Management and Director of the Institute of Technology Management at St. Gallen University, gave the audience an overview of the operational excellence concept. His organization can provide insightful information on laboratory performance, thanks to a consolidated benchmarking analysis based on one of the largest databases worldwide. We believe that operational excellence is increasingly becoming one of the cornerstones of future labs. Evaluating performance against a large number of peer organizations is the perfect tool to help laboratories focus on key areas that can be optimized and enhanced.

We were pleased to present the results of the ELN (Electronic Laboratory Notebook) survey, which was designed in collaboration with Scientific Computing World. ELN remains one of the critical tools to be implemented in the industry, from research to development and subsequently in the production stage. Academic organizations also use this type of application very frequently. We’ll soon release a comprehensive summary of the survey outcome, and the full results will be made available upon request.

The presence of sponsors was extremely positive during this edition. It demonstrated the importance of the event for companies interested in presenting their capabilities, new products or future trends. Besides companies with a long history of success in this market, new companies joined PLA2017. This expansion gives us high confidence that the congress will continue to attract even more people in the coming years.

While the presentations were a much appreciated part of the congress, the twenty workshops allowed attendees to gain direct experience with specialized computer solutions in the sector and get a first-hand view of their functionalities.

This large number of workshops made it possible to cover a full range of topics and to get in contact with both well-known companies operating in this industry and new enterprises approaching this market with new ideas, new technologies and fresh approaches.

Labware, ThermoFisher Scientific, Perkin-Elmer, Waters, Biovia and Abbott are very well-known companies in this field. They provided their views on different aspects of scientific data management, from data integrity (Labware) to lean processes (Abbott), by way of a completely new view of lab data management (Thermo-Fisher). ELN capabilities were covered by Perkin-Elmer, which launched its new SignalsNotebook at our event. The concept of archiving data was developed by Waters, while Biovia focused on a new tool called Discoverant, capable of providing a full overview of the scientific information.

Mettler Toledo also focused on data integrity. Their perspective is always extremely interesting, as it focuses on the bench and the data generated by the instruments.

The product offering around traditional tools for laboratory data management is now enriched by the proposals of companies that have recently entered this market: Agilab, from France, proposing a paperless approach to document management with a very modern user interface, based on Oracle and easily deployable on premises and in the cloud; ICSA, a Spanish provider focused on environmental monitoring from both the hardware and software points of view; and Kneat Software, which offers a very modern solution for system validation.

Lonza presented its paperless solution for the specific area of environmental monitoring, where it has become a de facto reference.

IDBS and iVention presented their respective proposals for cloud implementations, with attention to data integrity.

Specialized consulting firms like Spreitzenbarth Consultants and Osthus, and software/consulting partners like iCD and BSSN, presented their experienced approach to the different topics covered in the two-day event, such as process management, paperless and data integrity.

Research activities were covered in three different workshops sponsored by Collaborative Drug Discovery, Core Informatics, and Dotmatics. CDD dedicated its workshop to the concept of “collaborative technologies”, combining traditional tools like ELN with others to create a collaborative environment to share resource data. Core Informatics introduced the concept of the “Platform for Science Marketplace”, which allows a simple approach to the implementation of research workflows, connecting Core’s platform with other systems in a simple manner. Dotmatics focused on the specific workflows of antibodies, which are becoming a critical aspect of research activities. The solution proposed is able to generate fully integrated workflows using the core capabilities of their products.

For the third year, the event took place at the Rey Don Jaime Hotel in Castelldefels, near Barcelona, in a beautiful environment by the sea where attendees enjoyed the atmosphere and networking opportunities.

The satisfaction survey outcome confirms that the three pillars (rich-content, non-commercial presentations; showcase workshops; and enjoyable networking sessions) make this event unique, and are acknowledged by sponsors and visitors as the key combination for one of the best European events in its category.

To conclude where we started at PLA2017: this event is organized by Peter Boogaard, Isabel Muñoz-Willery and Roberto Castelnovo, yet none of us are event organizers. We are three independent consultants with strong domain knowledge, a consolidated international network and managerial skills, which have permitted us to build an event with increasing recognition and reputation over the years. We’re thankful to the 2017 sponsors for making possible the “last but not least” key differentiator of this event, which is free entry for visitors from the industry.

This article has been republished from materials provided by NL42. Note: material may have been edited for length and content. For further information, please contact the cited source.

Credit to: https://www.technologynetworks.com/



New Post has been published on https://labautomations.com/taking-biotech-to-the-next-level-how-about-automation/

Taking Biotech to the Next Level – How about Automation?

While smart and automated technologies are slowly taking over our lives, ranging from smart homes and self-driving cars to applet-generators like IFTTT, it sometimes feels like biotech is not catching up. With experiments becoming ever more complex and datasets more immense, a question that comes to mind is — can we automate life science?

Compared with manufacturing and service industries, the life science R&D sector in general is lagging behind in terms of using automation for productivity and quality improvements. Most pharma and biotech companies, and in part also academic labs, have automated parts of their processes and make use of liquid-handling systems, especially those pursuing high-throughput screenings.

When talking about earlier stages of basic research the situation gets more complex. Research questions often need to be adapted and there are few possibilities of standardizing experimental workflows.

Then again, the potential of automation becomes clear when looking at the structures in academia. Here, PhD students are commonly the workhorses of the lab, often becoming absorbed in repetitive pipetting tasks that keep them from spending time on the actual science. On top of that, most researchers are still using handwritten lab notebooks.

“In laboratories, we still think like we did twenty, thirty years ago. We still think of the same processes. It’s amazing to see that if you go to the laboratory, how much paper is still being used on very basic stuff,” says Peter Boogaard, CEO and founder of the service company Industrial Lab Automation.

So maybe it’s time to rethink the current workflows and realize that this is an area with serious room for improvement. In this article, we had a look at what’s going on in the field of automation for life sciences.

Why do we Need Automation?

Having a fully automated lab means replacing human-driven lab processes with robots or other devices and using computers to monitor experiments and integrate the data. This upgrade would not only enhance productivity, but also increase reproducibility and accuracy. But do we really need this?

A recent Nature survey indicated that science is indeed facing a reproducibility crisis. Of the 1,576 researchers surveyed, more than 70% have tried and failed to reproduce another scientist’s experiments, and more than half have failed to reproduce their own. Another study, published in PLoS Biology, estimated the amount of money spent on irreproducible preclinical research at a massive $28B in the US alone.

“Lack of reproducibility is a big issue right now in biomedical research,” Charles Fracchia, CEO and founder of the MIT-based startup BioBright, told us. His company aims to build the next generation of smart labs. He also adds that “the heavy biology automation is only one side of the coin, we also need to enhance human ability to do research. Those two things go hand in hand.”

Synbio Companies are Leading the Way

Synbio companies are probably most advanced when it comes to lab automation. Using software and hardware automation, some of these companies are aiming to transform the process of organism engineering — replacing technology with biology.

Founded by Tom Knight, the godfather of synthetic biology, together with four of his graduate students, Ginkgo Bioworks was started with the dream of making biology easier to engineer. The company designs and optimizes organisms to efficiently produce compounds according to the customer’s desire.

Today, the US company has built several ‘foundries,’ factories where every step of strain engineering, from molecular biology to high-throughput analytics to small-scale fermentation, has been automated. Running about 15,000 automated operations per month, the so-called ‘organism engineers’ can build and test thousands of new prototypes for each project. Through building such automation tools, the company has significantly scaled up its throughput and capacity.

In Europe, British LabGenius is another synbio company that uses AI and automation together with its proprietary gene synthesis technology to rapidly search through trillions of genetic designs to find new biological solutions and create novel compounds.

Some might argue that the synthetic biology field is an area where biology and engineering coalesce. As Ginkgo describes it itself, “we aren’t trying to study biology, we are trying to build it.” But what about those trying to study it?

A fully automated lab at US-based Transcriptic, which offers remote lab services.

From Manual Drudgery to Software and Robotics

Liquid handling systems and pipetting robots have been around for a while, but most of them are simply too expensive for most laboratory settings. Also, they often require trained personnel to program the systems according to the specifics of the experiment.

Opentrons believes it can make wet lab experiments faster, cheaper and more reproducible by providing access to low-cost liquid-handling robots. “Basically, if you’re a biologist you spend all of your time moving tiny amounts of liquid around from vial to vial by hand with a little micro-pipette or you have a $100,000 robot that does it for you. We’re a $3,000 robot,” explained Opentrons co-founder Will Canine to TechCrunch.

Not only are they aiming to make these machines more affordable, they are also trying to make them more intuitive. The machine is controlled by the web browser and allows researchers to download protocols from the cloud to run experiments.

Taking it one step further, London-based Synthace is developing Antha, a technology which they describe as “a programming language for biology”. Antha represents a software interface connecting hardware with the wetware in the lab, and it has been designed to simplify experimental workflows. It allows researchers to build a protocol and then communicate it to their lab equipment. An Opentrons robot, for example, could then conduct the experiment.

Using the Antha programming language, it is possible to initiate a design, generate workflows, schedule experiments on various machines, and interpret the results from one platform.
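To give a rough feel for what “building a protocol and communicating it to lab equipment” can mean in software, here is a minimal Python sketch. It is not Antha code and does not use the Antha or Opentrons APIs. The `Transfer` class, the `validate` and `to_instructions` functions, the 300 µL limit and the instruction text format are all hypothetical names and values chosen purely for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transfer:
    """One liquid-handling step: move a volume from a source well to a destination well."""
    source: str
    destination: str
    volume_ul: float


def validate(protocol: list[Transfer], max_volume_ul: float = 300.0) -> None:
    """Reject steps a pipetting device could not carry out (the limit is an assumed value)."""
    for step in protocol:
        if not 0 < step.volume_ul <= max_volume_ul:
            raise ValueError(f"volume out of range: {step}")
        if step.source == step.destination:
            raise ValueError(f"source equals destination: {step}")


def to_instructions(protocol: list[Transfer]) -> list[str]:
    """Render the protocol as plain-text instructions a device driver could consume."""
    validate(protocol)
    return [
        f"ASPIRATE {s.volume_ul} uL FROM {s.source}; DISPENSE INTO {s.destination}"
        for s in protocol
    ]


# Hypothetical two-step protocol, described once and independent of any device.
protocol = [Transfer("A1", "B1", 50.0), Transfer("A2", "B2", 25.0)]
instructions = to_instructions(protocol)
for line in instructions:
    print(line)
```

The point of the sketch is the separation: the protocol is plain data that can be checked before anything runs, and only the last step turns it into device-facing instructions.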

Augmentation instead of Automation?

Many are still skeptical that robots and remote labs can actually do the trick. Roger Chen, associate at AlphaTech Ventures in San Francisco, commented in Nature: “I have a hard time believing that a centralized automated lab will give you the freedom and flexibility to experiment with all the parameters you need to do some innovation.”

Fracchia told us, “the thinking is that we can solve a lot of the problems by taking the human out of the loop. But biology is not computer science. [Automated lab] services are great for simple experiments such as DNA sequencing or synthesis. But you cannot put data on everything.”

Fracchia believes that the solution to the reproducibility crisis is augmentation, not necessarily automation. He founded the company BioBright, which offers a suite of software, hardware, and services to turn laboratories into ‘smart labs’. BioBright’s tools enable automated data collection, using sensors, cameras and a simple Raspberry Pi computer. The system also interfaces with the lab equipment.

“We developed the first temperature sensor that fits in an Eppendorf tube,” said Fracchia in an MIT article. BioBright’s Darwin voice assistant enables researchers to issue voice notes instead of stopping to write down information manually. According to Fracchia, the system functions as a self-writing notebook, generates a reliable record and thereby eliminates human error.
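The idea of a “self-writing notebook” can be illustrated with a small Python sketch: every sensor reading or spoken note becomes a timestamped entry in an append-only log, so the record does not depend on someone stopping to write things down. This is not BioBright’s software or API. The `LabLog` class, its method names and the entry fields are assumptions made up for this example, and the readings are placeholder values.

```python
import json
from datetime import datetime, timezone


class LabLog:
    """Append-only, timestamped record of lab events (hypothetical illustration)."""

    def __init__(self):
        self.entries = []

    def record(self, kind, payload, timestamp=None):
        """Store one event; the current UTC time is used when no timestamp is given."""
        if timestamp is None:
            timestamp = datetime.now(timezone.utc)
        entry = {"time": timestamp.isoformat(), "kind": kind, "payload": payload}
        self.entries.append(entry)
        return entry

    def to_json_lines(self):
        """Serialize the log as one JSON object per line, in the order recorded."""
        return "\n".join(json.dumps(e, sort_keys=True) for e in self.entries)


# Placeholder events: a sensor reading and a transcribed voice note.
log = LabLog()
log.record("temperature", {"celsius": 37.0, "sensor": "tube-1"})
log.record("voice_note", {"text": "added buffer to tube 1"})
print(log.to_json_lines())
```

In a real setup the `record` calls would be triggered by the sensors or the voice assistant rather than typed by hand; the sketch only shows the shape of the resulting record.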

Automation alone might not be the solution to making research more efficient and reproducible, yet the innovations described above show that advances in the field of AI could have the potential to change current paradigms of the lab.

Fracchia describes in a recent interview how he envisions scientists wearing lenses that project holograms describing the contents of their test tubes. He and Knight and all the others believe that research in the life sciences can be transformed. Ultimately, such a transformation could boost the overall efficiency and quality of research and help to accelerate the field of life sciences even further.

CO-AUTHORED WITH RAPHAEL FERREIRA. Raphael has a master’s degree in synthetic and systems biology from Université Paris Diderot & Université Paris Descartes in France. He did his MSc project in the field of synthetic biology at the NASA Ames Laboratory in the US. He’s currently doing a Ph.D focusing on CRISPR technologies in Jens Nielsen’s lab at Chalmers University.

Images via shutterstock.com / phipatbig; opentrons.com; antha-lang.org; transcriptic.com; ginkgobioworks.com; CC 2.0 / PlaxcoLab

Credit to: http://labiotech.eu



New Post has been published on https://labautomations.com/8th-annual-biologics-formulation-development-and-drug-delivery-forum/

8th Annual Biologics Formulation Development and Drug Delivery Forum

14-16 June 2017

Berlin, Germany

This marcus evans forum will discuss the key challenges in biologics development that confront formulation scientists and help them design better experiment protocols to ensure quality processes, efficient methodology and minimal failure risk. Delegates will benefit from experienced case studies and learn how to improve formulation stability, prevent aggregation and develop high-concentration forms. They will also learn how to use predictive and analytical methods to understand the behaviour and evolution of proteins under different conditions, and to characterise proteins and the other components of the formulation. Finally, they will gain an understanding of manufacturing and scale-up challenges, looking at compatibility problems and lyophilisation issues, and consider the different options available for drug delivery, keeping in mind the crucial need to improve patient comfort and safety.

Attending this premier marcus evans conference will enable you to:

Improve the design of biological formulations with automated and high-throughput solutions

Integrate QbD processes in biological drug development

Enhance stability by controlling aggregation

Improve characterization with efficient analytical tools

Discuss the regulatory environment around biological drug development

Address the challenges of scale up, lyophilisation and tech transfer processes

Exchange views on recent improvements in drug delivery for various biological molecules and vaccines

Learn from key practical case studies:

Janssen on high-throughput methods for vaccines and proteins and the use of spectroscopic tools

Novo Nordisk looking at extractable and leachable risks

LMU Munich improving safety with new polymer syringes

GSK sharing challenges in freeze-thaw processes and small-scale design

Sanofi’s long-term stability prediction methods for vaccine formulation

For more information, please visit the event website: https://goo.gl/1FyxTd

or contact Melini Hadjitheori at [email protected]
