
Culture and Creative Practice Week 12

This week I finally recorded and finished the video for the summative. It was harder than I expected to get in front of the microphone and read out my script without stuttering. I tried commentary in high school, when I made YouTube commentary videos, but this was a completely different experience. In those videos I was a reactionary commentator, which is a lot easier for me than planning and reading a script. Since I get stressed in social situations, I have to calm myself down to avoid stuttering and mixing up words, which doesn’t happen when I’m flowing with the situation as in reactionary commentary. I also didn’t like making a video instead of giving a speech, as I find it easier to get it done in a single take than to spend huge amounts of time perfecting it and listening to my own voice. Due to stress and other classes I couldn’t invest as much time in the visuals as I would have liked, but I think I did a good job on the script and was able to share my thinking and research effectively.

Video: https://youtu.be/0a1hEfnFVEE


Cultural Practices Questions

1. Discuss the cultural context of something in your blog.

In Wang Qingsong’s artwork “Work, Work, Work”, the artist shows the theatrics around working an office job. In society, a person’s worth is based on how much money they can earn and, in effect, how much they can work. The artist argues that rapid industrialization is having negative effects on the individuals who make up the pieces of the giant puzzle, and asks whether basic human needs should be worth less than the work a person can put in.

2. What are intrinsic vs instrumental notions of technology? Use an example from your blog.

Heidegger has three claims about technology:

technology is “not an instrument”, it is a way of understanding the world;

technology is “not a human activity”, but develops beyond human control;

technology is “the highest danger”, risking that we only see the world through technological thinking.

I do not agree with all of Heidegger’s claims. I agree that technology is “not an instrument”, but in my opinion it goes beyond “understanding the world.” At its most basic, technology is a tool used by humanity, and it can be used without understanding. Does riding a bike let you understand the world? No. I would argue that technology is an improvement in life that allows humanity to progress as a species. I agree with the statement that technology is “not a human activity” and that it “develops beyond human control.” Humanity as a species learns and adapts through mistakes and trials. Technology is about taking our experiences as a species and using them in meaningful ways to develop and improve. I also agree with the statement that technology is “the highest danger.” As I said before, technology is a tool for humanity, and there are rational and irrational fears around what technology can cause. An example of this is dynamite: it was designed as a mining tool but ended up being used to kill other humans.

Instrumental technologies are a means to an end; you use something till it breaks.

Intrinsic technologies are valued for their own sake, such as for creating happiness for others.

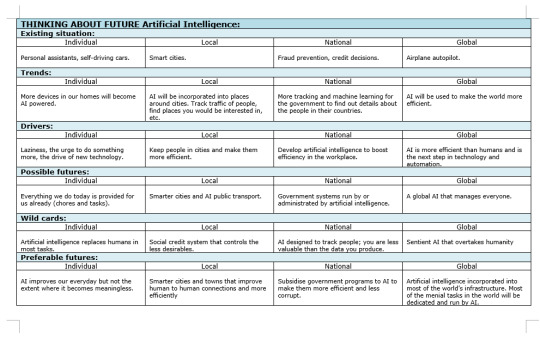

An example of this is artificial intelligence. AI is being used to automate tasks that humans would normally have to do, and to do them more efficiently. This means that tasks that were tedious and menial for a person can be automated and taken for granted. AI is a means to an end: it’s used to automate something or perform a task until that task no longer needs to be completed. But AI has also changed how we understand ourselves and the world. Is it ethical to make an intelligent machine work under us? Will artificial intelligence become better than humans and completely replace the people whose tasks it was designed to take over?

3. In regards to your engagement in your course of study, what sort of technologies are you interested in developing?

My technological focus is in automation; I am a lazy person and would rather write a script to spawn trees on a landscape than manually place them. To develop this I have been taking courses in artificial intelligence and studying it in my own time.

4. What systems does your tech example rely on or engage with?

Artificial intelligence uses cybernetic systems to achieve a goal; the difference between artificial intelligence and normal programs is the ability to analyse and self-improve. Like a human, an artificial intelligence must be trained to perform a task. It has a goal that is affected by the environment, but it can also correct itself and learn how to achieve the goal more efficiently. Object-Oriented Ontology (OOO) is also relevant, as artificial intelligence can operate beyond what humans can conceptualize. The AI is programmed using object-oriented languages and follows the systems of objects and classes.

5. What ethical ‘issues’ might be relevant to your example?

A common theme in the media is that artificial intelligence will eventually develop beyond humanity and threaten the human race. This is a misconception, but it is an interesting look into what could happen if AI designed to replace humans in menial tasks grew to replace humanity at everything. But is humanity really a system of goals in an object-oriented world? I believe artificial intelligence will never replace humanity because of what being human means: being unique. Every person is different and will perform tasks differently from everyone else, in ways that artificial intelligence cannot learn or predict in all cases. The simple AIs that we have today do not have the capability to evolve in the way that humans have, and they are limited by the amount of data we give them. Artificial intelligence is biased towards the data it is given, and runs on vulnerable technology that is subject to sudden change or catastrophic failure.

Wang, Q. (2012). Work, Work, Work [Photograph]. C-type print, courtesy of the artist.


BCT research

Continuing from the first semester, the main research material is the DSM-5 manual used by healthcare professionals to diagnose mental and behavioural disorders. The game is built around the DSM-5 Autism Diagnostic Criteria, found at: https://www.autismspeaks.org/autism-diagnosis-criteria-dsm-5

These criteria include:

Persistent deficits in social communication and social interaction across multiple contexts, as manifested by the following, currently or by history

Restricted, repetitive patterns of behavior, interests, or activities, as manifested by at least two of the following, currently or by history

Symptoms must be present in the early developmental period (but may not become fully manifest until social demands exceed limited capacities or may be masked by learned strategies in later life).

Symptoms cause clinically significant impairment in social, occupational, or other important areas of current functioning.

These disturbances are not better explained by intellectual disability (intellectual developmental disorder) or global developmental delay. Intellectual disability and autism spectrum disorder frequently co-occur; to make comorbid diagnoses of autism spectrum disorder and intellectual disability, social communication should be below that expected for general developmental level.


CTEC608 Blogs

https://icantplaythis-bct.tumblr.com/post/188647229361/ctec608-week-1

https://icantplaythis-bct.tumblr.com/post/188647246021/ctec608-week-2

https://icantplaythis-bct.tumblr.com/post/188647260186/ctec608-week-3

https://icantplaythis-bct.tumblr.com/post/188647265701/ctec608-week-4

https://icantplaythis-bct.tumblr.com/post/188647272111/ctec608-week-7

https://icantplaythis-bct.tumblr.com/post/188647278761/ctec608-week-9

https://icantplaythis-bct.tumblr.com/post/188647312786/ctec608-week-10

https://icantplaythis-bct.tumblr.com/post/188647330686/ctec608-week-11

https://icantplaythis-bct.tumblr.com/post/188647342816/ctec608-week-12-13


CTEC608 Week 12 - 13

I have been unable to work on studio for these weeks due to the Game + Play Final Individual Report and Digital Fabrication Assessment 2 being due one after the other. In my free time I have been continuing to work on the needed script for the project, and doing research on how I can spawn trees within the map with a script.


CTEC608 Week 11

Monday 7th October

After experimenting with OpenCV in Python, I have a good idea of how to do facial detection. The guide I used to learn how to use OpenCV with Unity sends data from Python to Unity over UDP, which I would rather not use. I will likely not be involved in installing this project on the screen computer, so installing Anaconda and OpenCV with Python would be too confusing for the person who has to do it, and leaves too many variables that could go wrong. There is an OpenCV asset on the Unity Asset Store that runs C# scripts inside Unity without Python. I’m not as experienced with C# as I am with Python, but from my research it shouldn’t be too hard compared with sending data from Python over UDP.
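For context, the UDP approach I decided against would look roughly like the sketch below on the Python side. This is only my own minimal illustration, not the guide's code: the function name `send_face_count`, the port `5065`, and the plain-text message format are all assumptions, and the Unity side would need a matching listener.

```python
import socket


def send_face_count(count, host="127.0.0.1", port=5065):
    """Send the face count to a UDP listener as plain UTF-8 text.

    The host, port, and message format are hypothetical; a Unity script
    would have to listen on the same port and parse the same format.
    """
    payload = str(count).encode("utf-8")
    # UDP is connectionless, so the datagram is simply fired at the address.
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(payload, (host, port))
    return payload
```

Calling `send_face_count(3)` once per processed frame would be enough for Unity to receive the value, but it means keeping a Python process and a matching port configuration running next to the Unity build, which is the extra set-up I wanted to avoid.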

I used the example facial detection script included with OpenCV for Unity as the basis for my own C# script. The script currently uses a feature of OpenCV for Unity to create a texture from the webcam feed, which can be used to show the webcam output inside Unity as a separate screen. I haven’t added the code for setting a value to the number of faces yet, as I need to do more research on how OpenCV for Unity works. There isn’t a lot of documentation for it, and the help that exists is for the 2015 version.

The next step is working on the script for spawning the trees in, otherwise I will have a value being set that I can’t do anything with.
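To think through how the face count could drive the tree spawning, here is a rough sketch in Python (the real script would be C# inside Unity). Everything in it is an assumption for illustration: the function name `spawn_positions`, the five trees per face, and the 100 by 100 map size are not from the project.

```python
import random


def spawn_positions(face_count, trees_per_face=5, map_size=(100.0, 100.0), seed=None):
    """Return random (x, z) positions for trees, scaled by the number of faces.

    trees_per_face and map_size are hypothetical values for this sketch.
    """
    rng = random.Random(seed)  # a seed makes the layout repeatable for testing
    width, depth = map_size
    total = max(0, face_count) * trees_per_face
    return [(rng.uniform(0, width), rng.uniform(0, depth)) for _ in range(total)]
```

With something like this, the value set by the detection script has somewhere to go: more faces in front of the screen means more positions handed to whatever places the tree models.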

First iteration of script can be found here: https://github.com/ICantPlayThis/Project-Forest/blob/master/WebcamFaceDetection.cs


CTEC608 Week 10

Monday 30th September

Today I've been testing the facial detection part of the project. For the past month, I have been researching how I’m going to accomplish what I want to do with the facial detection and AI implementation. To detect faces, I have found a library called OpenCV that can be used from Python for real-time image detection.

I ran into a problem with the install guide that I was using (https://www.raywenderlich.com/5475-introduction-to-using-opencv-with-unity#toc-anchor-007): the OpenCV install was not working.

I fixed it by updating all the packages in Anaconda with ‘conda update --all’ and then running the install command for OpenCV again. I think this error came from the packages I had installed for Python 3.7 before installing Anaconda, which is a distribution of Python for scientific computing. In the future I will need to use Conda to install packages to make sure I don’t have any issues with package conflicts.

For the test app I created a simple script using PyCharm as my IDE.

This code runs a facial detection loop using the Haar cascade from OpenCV to detect faces and print the number of faces in the console. Since I don’t have a webcam, I had to download a suspicious program to turn my DSLR camera into a webcam, and its annoying watermark made it harder to detect faces. I got the code working rather quickly and put it up on my GitHub to show Nate in class.
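The loop described above can be sketched roughly as follows. This is a simplified reconstruction for illustration and not the exact script in the repository: the function names are my own, the `scaleFactor` and `minNeighbors` values are common defaults I am assuming, and it assumes an OpenCV build that ships the bundled cascade files under `cv2.data.haarcascades`.

```python
def count_faces_per_frame(frames, detect):
    """Yield how many faces `detect` reports for each frame in `frames`."""
    for frame in frames:
        yield len(detect(frame))


def haar_face_detector(scale_factor=1.1, min_neighbors=5):
    """Build a detect(frame) function backed by OpenCV's frontal-face Haar cascade."""
    import cv2  # imported lazily so the counting logic works without OpenCV

    cascade = cv2.CascadeClassifier(
        cv2.data.haarcascades + "haarcascade_frontalface_default.xml"
    )

    def detect(frame):
        # Haar cascades work on greyscale images.
        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
        return cascade.detectMultiScale(
            gray, scaleFactor=scale_factor, minNeighbors=min_neighbors
        )

    return detect


def webcam_frames(camera_index=0):
    """Yield frames from a webcam until a frame can no longer be read."""
    import cv2

    capture = cv2.VideoCapture(camera_index)
    try:
        while True:
            ok, frame = capture.read()
            if not ok:
                break
            yield frame
    finally:
        capture.release()


def main():
    """Print the face count for every webcam frame."""
    for count in count_faces_per_frame(webcam_frames(), haar_face_detector()):
        print(f"Faces detected: {count}")
```

Calling `main()` starts the loop. Keeping the counting separate from the camera and the detector means the counting part can be tried with fake frames when no webcam is plugged in.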

https://github.com/ICantPlayThis/Project-Forest/blob/master/WebcamFacialDetection.py

When trying to run it on my laptop, I ran into an error that I couldn’t fix, so I gave up on it since I don’t need to run the script on macOS.


CTEC608 Week 9

Wednesday 24th September

Today we got started on the first prototype of the project, now called Fragmentation Forest. I created the project in Unity and started working on getting the assets needed for AI detection. Nate started adding the tree assets he made to the project, along with editor plugins for tree creation in Unity. Nate also got a new laptop that could be used for the presentation instead of having to set up on one of the university computers. I am continuing to research AI and how I am going to use it in Unity.


CTEC608 Week 7

Wednesday 28th August

Today was the concept proposal pitch for Joseph’s stream. I got there late as I got confused about the time, but I saw some of the presentations. The groups that presented before us had slides filled with text, which made it hard to read the slides and follow what the presenter was saying at the same time. When our group went up to present, we had slides with little text and large images to illustrate what we were talking about, while Nate and I explained the details verbally. Nate made some comments that made the other groups and lecturers laugh, and we got a lot of questions at the end asking us to describe certain aspects of our concept further. Because of this, and because I had previously done a presentation for Game + Play and practiced how to present to shareholders, I think our presentation was successful, or at least more successful than the other groups’.


CTEC608 Week 4

Tuesday 6th August

Today Nate and I decided to split off into a group together, as we had originally planned. We are working on the idea of New Zealand forests that our group had in Week 3, but in a more technical way, programming the logic and creating models for the application. I still want to somehow incorporate AI into the application. My first idea was to use AI to generate the terrain, but that isn’t intelligence if it’s just following rules to procedurally generate terrain. My next idea was to use AI to detect people in the environment near the screen and have that affect the terrain being generated as the camera pans across it. This will require a lot of research and hard work, but I think it will be worth it to make an interactive experience that I am happy to present. Nate has been working on models for trees and practicing using Blender. For the next few weeks we should be researching our current project idea to come up with solutions for how we are going to make this application, and practicing using Unreal and Blender for when we start to work on a prototype, hopefully in Week 7 or after the semester break.
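The point about rule-following terrain generation not being intelligence can be seen in a tiny sketch. This is only an illustration under my own assumptions (the function names, the sine and cosine rule, and the `scale` and `amplitude` values are made up for the example): the same inputs always give the same heights, and nothing is learned.

```python
import math


def height_at(x, z, scale=0.1, amplitude=10.0):
    """Return a terrain height from a fixed rule; nothing here adapts or learns."""
    return amplitude * (math.sin(x * scale) + math.cos(z * scale)) / 2.0


def heightmap(width, depth):
    """Build a depth-by-width grid of heights using the fixed rule above."""
    return [[height_at(x, z) for x in range(width)] for z in range(depth)]
```

Feeding the number of detected people into something like `amplitude` would make the terrain react to the audience, which is closer to what I actually want than generation on its own.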


CTEC608 Week 3

Monday 29th July

Today I stayed home from class because my foot had been bitten by a white tail spider, so my group called me from class instead. The main concept for the project has changed and I feel that I no longer have a say in what the project is. This semester I am interested in using the screen to learn AI and to make something that will actually be used. I am considering either leaving the group or working only with Nate, as he is also interested in doing a digital project, whereas Victoria and Charina want to make something physical with an educational focus. The project idea is now ‘Visual display that changes depending on the input.’ The screen will render something and change it in real time depending on what is in front of the screen (number of people, women over men, different hand gestures). I like this idea as it lets me experiment with AI detecting multiple things.

Tuesday 30th July

Today we presented to the lecturers our current concept pitch. From what I discussed with my group on Monday the idea has changed to be focused around NZ foresting and generating a forest using AI detecting people and gestures using the camera on the screen. I like this idea but it will be hard to do in 10 weeks with the knowledge and experience of our group.


CTEC608 Week 2

Monday 22nd July

Today Nate and I discussed all the ideas we had over the week for a studio project involving the screens. I had the idea of a display that would use AI to detect a person and put a Snapchat-style filter on them in the theme of a hobbit. This is relevant to Auckland because there is a Lord of the Rings series being filmed in Auckland soon, which will increase tourism to the area. We are still trying to come up with ideas. Charina has joined the group, and Victoria is also considering joining.

Tuesday 23rd July

Today Victoria and Charina joined the group with Nate and me. They want to make something physical, which conflicts with what Nate and I want to do this semester. I mainly focused on writing up the documentation for Cristian’s Studio stream as my Game + Play project this semester, since he has the write-up due next week.

Friday 26th July

Today I had Game + Play and Digital Fabrication, but went into studio after discussing things with Victoria on Discord. We talked about what we both wanted from the Studio project, and how she wants to make something physical that involves language or education. These aren’t really what I’m interested in, so we need to compromise on a project idea or split our group.


CTEC608 Week 1

Monday 15th July

Today we started the semester and chose our Studio streams. I decided on the AUTlive and AucklandLive stream because it's different to everything else, and is an interesting project to make something on a display and interactive. I talked to my previous group members on what they were doing, and decided to work with Nate this semester on an interactive display. Cristian and I had an interesting idea for a mobile app, but I think that gaining the skills to design a product for the public that will actually be used will be more interesting and something useful for the future. I want to see if I could work on the app for the Game and Play paper, as it's still something that I want to work on.

Tuesday 16th July

Today we met up with Charles and went down to Aotea Square to learn more about the project and what we can make for the screens. The AucklandLive screen is a large screen that can be moved to different places. The content on the screen is changed every 20-30 minutes, from orchestra performances to live streams of the ice rink. It has a camera attached to the side, so it can be used for taking photos or even video of whoever is in front of the screen. The people who walked past the screen were adults, parents with their kids, workers, teenagers, and mostly Asians that were walking through the square or stopping by the events hosted in Aotea Square. For a project on this screen, it would be good to do something interactive that people can come up to and play with, but non-interactive content would also work for people sitting down nearby.

Then we went to the AUTlive screen in the WZ building, which Jenna from my Game and Play elective showcased her project on. She had an interactive display that used a camera and an Xbox Kinect to track people moving in front of the screen and turn them into silhouettes. This was a cool idea for the technology, but it didn’t work well with the small number of people walking past the screen. The area looked like it was mostly used by students studying or getting something to eat, and there were few tables and chairs for people to use. For this screen it would be better to have a non-interactive display, as I didn’t see many people using it. Using it to show AUT-related events and content would be a good use of it, as many students walk past the building on their way to class or away from AUT, so showing current events would be a good form of advertisement. A good project idea for this screen would be a content display that shows trailers or interesting info about events currently happening at and around AUT.


Glass Model

For this assignment I decided to model a glass cup that I had on my desk at the time. This glass is different to all other cups I've seen due to it being octagonal with a thick solid glass base to stop it from tipping over. The glass walls are about 1mm thick with rounded edges. I tried to keep the model as realistic as possible by rounding off all sharp sides and keeping it well balanced.

First I started with a sketch of an octagon with 25mm sides. Then I extruded it up to a height that looked good and extruded the base to make the solid bottom. I set the material to glass to make it easier to see through the model, and began filleting all the sharp edges on the glass. This included the top of the glass, the inside of the glass, and the bottom of the base. When I finished, I didn’t think it was complex enough, so I decided to try to include a greedy cup on the inside, which drains once the liquid rises over the pipe in the central column. I made a sketch inside the model of the path for the pipe tool but kept running into errors with the path not being parallel, so I stopped at just putting the glass column in the center. If I had continued with this model, I would have made a hollow pipe within the glass column running to the top, round the bend, and down to the bottom of the glass where the liquid could flow out safely.
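As a quick check on the size of the glass, the overall width of a regular octagon can be worked out from its side length using the standard formulas. The sketch below assumes the base sketch is a regular octagon (all sides and angles equal); the function name is my own.

```python
import math


def octagon_dimensions(side_mm):
    """Return (across_flats, across_corners) in mm for a regular octagon."""
    # Distance between two opposite flat sides.
    across_flats = side_mm * (1 + math.sqrt(2))
    # Distance between two opposite corners (twice the circumradius).
    across_corners = side_mm / math.sin(math.pi / 8)
    return across_flats, across_corners
```

For 25mm sides this gives a glass roughly 60mm wide across the flats and roughly 65mm across the corners.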


BCT Project_Studio Process Documentation

Due to the lack of blogs this semester, the development of my project can be seen in the group documentation in the form of a timeline, and an explanation of decisions can be found in my reflective statement and the group documentation.

For timestamps and info on what I managed in the project:

The project repository on Github can be found here

Commits in the repository with timestamps can be found here

Commits in the old repository with timestamps can be found here

Documented guides and fixes I did for my group can be found here


Game + Play Prototypes

Prototype 1 - Shader pack version:

https://drive.google.com/open?id=1CCJ7f0NXUIeW340H5jz-QhYMDZVCH4pd

Prototype 2 - Resource pack version:

https://drive.google.com/open?id=1sEQbsCcZf1mPeqYKjo7e4jwykoJDxZGm
