# //example.com will use the current page's protocol

Explore tagged Tumblr posts

Text

Absolute Link: The Complete Guide to Utilizing It

In the world of web development and search engine optimization (SEO), Absolute link play a crucial role in connecting different web pages. Among the various types of links, absolute links hold significant importance. Understanding what absolute links are and how to utilize them can greatly enhance your website's visibility and user experience. In this article, we will explore the concept of absolute links, their advantages, and best practices for implementing them effectively.

What is an Absolute Link?

An absolute link, also known as an absolute URL, is a complete web address that includes the full path to a specific webpage. It consists of the protocol (such as HTTP or HTTPS), domain name, subdirectory (if applicable), and the filename or extension of the page. Absolute links provide a direct and unambiguous reference to a web resource, allowing users and search engines to navigate seamlessly across websites.

Absolute Link vs. Relative Link

To understand the significance of absolute links, it's important to differentiate them from relative links. While absolute links provide a complete web address, relative links specify the path to a resource relative to the current location. Relative links are commonly used within a website to connect pages internally. However, when it comes to external references or navigation across different domains, absolute links are preferred.

The Importance of Absolute Links in SEO

Absolute links have several advantages in the realm of SEO. Search engines rely on links to discover and index web pages, and absolute links provide a clear and definitive path for search engine crawlers to follow. By using absolute links, you ensure that search engines can accurately navigate and understand the structure of your website, which can positively impact your search rankings. Additionally, absolute links contribute to better user experience. When users encounter absolute links, they can easily identify the destination of the link and trust that it will take them to the intended page. This transparency helps reduce bounce rates and enhances user engagement, leading to improved conversion rates.

How to Create an Absolute Link

Creating an absolute link is a straightforward process. To generate an absolute link, you need to include the protocol (HTTP or HTTPS), followed by the domain name, any subdirectories, and the filename or extension of the page. For example, an absolute link to a blog post titled "SEO Best Practices" on the website "example.com" would appear as follows: https://www.example.com/blog/seo-best-practices. To ensure the accuracy and validity of absolute links, it's essential to double-check the link address before implementation. One incorrect character or missing component can lead to broken links and negatively impact user experience and SEO.

Best Practices for Using Absolute Links

To maximize the benefits of absolute links, it's important to follow these best practices: - Use absolute links for external references or when linking across different domains. - Ensure that all absolute links are correctly formatted with the appropriate protocol (HTTP or HTTPS) and valid domain name. - Avoid using generic anchor text like "click here" and instead utilize descriptive anchor text that reflects the destination page. - Regularly check the absolute links on your website to ensure they are functioning correctly and haven't become broken or outdated. - Consider implementing absolute links for important internal pages to provide a consistent and reliable user experience. By adhering to these best practices, you can harness the power of absolute links to enhance your website's SEO and user engagement.

Common Mistakes to Avoid

While absolute links offer numerous benefits, it's crucial to be aware of common mistakes that can hinder their effectiveness. Here are some mistakes to avoid: - Using absolute links unnecessarily within your own website when relative links would suffice. - Neglecting to update absolute links when making changes to your website's structure or domain. - Including broken or incorrect links that lead to non-existent pages. - Overusing anchor text with keywords in absolute links, which can be seen as spammy by search engines. - Failing to regularly audit and update absolute links, resulting in outdated or broken references. By avoiding these mistakes, you can maintain the integrity and effectiveness of your absolute links.

Benefits of Using Absolute Links

Utilizing absolute links offers several benefits for your website and SEO efforts: - Improved search engine visibility: Absolute links provide search engine crawlers with a clear path to navigate and index your web pages effectively. - Enhanced user experience: Clear and direct absolute links improve user engagement, reduce bounce rates, and increase the likelihood of conversions. - Consistency across domains: When linking to external websites or resources, absolute links ensure that users are directed to the correct page regardless of any changes in the destination site's structure. - Easier management and troubleshooting: Absolute links make it easier to identify and fix broken links, as the complete URL provides valuable information for diagnosis. By leveraging these benefits, you can optimize your website's performance and achieve your SEO goals.

Absolute Links in Social Media

The use of absolute links extends beyond websites and can be applied to social media platforms as well. When sharing content on social media, using absolute links ensures that users are directed to the desired web page accurately. Whether it's a blog post, product page, or landing page, absolute links help maintain consistency and improve the user experience across different platforms.

Tools and Resources for Absolute Link Management

Managing and monitoring absolute links can be simplified with the help of various tools and resources. Here are a few recommended options: - Link checker tools: Tools like Xenu's Link Sleuth and W3C Link Checker can scan your website for broken or incorrect links, allowing you to quickly identify and rectify any issues. - Google Search Console: This free tool provided by Google offers insights into your website's performance, including indexing status, search queries, and link data. - Content management systems (CMS): Popular CMS platforms like WordPress and Drupal often include built-in link management features that help maintain the integrity of your absolute links. By utilizing these tools and resources, you can effectively manage your absolute links and ensure their optimal performance. Also Read: How Local SEO Services in Houston Can Be the Best Decision?

Conclusion

Absolute links are an essential component of effective web development and SEO strategies. By understanding their purpose, creating them correctly, and implementing best practices, you can enhance your website's visibility, user experience, and search engine rankings. Remember to regularly audit and update your absolute links to keep them functional and relevant. Embrace the power of absolute links and unlock the full potential of your website's online presence.

FAQs

Q. What is the difference between absolute links and relative links? A. Absolute links provide a complete web address, including the protocol, domain name, and page path, while relative links specify the path to a resource relative to the current location. Q. Why are absolute links important for SEO? A. Absolute links help search engine crawlers navigate and index web pages accurately, leading to improved search rankings. They also enhance user experience by providing transparent and trustworthy navigation. Q. How do I create an absolute link? A. To create an absolute link, include the protocol (HTTP or HTTPS), followed by the domain name, any subdirectories, and the filename or extension of the page. Q. What are the best practices for using absolute links? A. Best practices for using absolute links include using them for external references or across different domains, ensuring correct formatting, using descriptive anchor text, and regularly checking for broken links. Q. Can We use absolute links on social media? A. Yes, We can use them on social media sites. Q. Are there any tools to help manage absolute links? A. Yes, there are tools such as link checkers and content management systems that can assist in managing and monitoring the performance of absolute links. Read the full article

0 notes

Text

#Protocol-relative URLs have no protocol specified. For example# //example.com will use the current page's protocol#typically HTTP or HTTPS.

0 notes

Link

In order to show up in search results, your content needs to first be visible to search engines. It’s arguably the most important piece of the SEO puzzle: If your site can’t be found, there’s no way you’ll ever show up in the SERPs (Search Engine Results Page).

How do search engines work?

Search engines have three primary functions:

Crawl: Scour the Internet for content, looking over the code/content for each URL they find.

Index: Store and organize the content found during the crawling process. Once a page is in the index, it’s in the running to be displayed as a result to relevant queries.

Rank: Provide the pieces of content that will best answer a searcher’s query, which means that results are ordered by most relevant to least relevant.

What is search engine crawling?

Crawling is the discovery process in which search engines send out a team of robots (known as crawlers or spiders) to find new and updated content. Content can vary — it could be a webpage, an image, a video, a PDF, etc. — but regardless of the format, content is discovered by links.

What’s that word mean?

Having trouble with any of the definitions in this section? Our SEO glossary has chapter-specific definitions to help you stay up-to-speed.

Google bot starts out by fetching a few web pages, and then follows the links on those webpages to find new URLs. By hopping along this path of links, the crawler is able to find new content and add it to their index called Caffeine — a massive database of discovered URLs — to later be retrieved when a searcher is seeking information that the content on that URL is a good match for.

What is a search engine index?

Search engines process and store information they find in an index, a huge database of all the content they’ve discovered and deem good enough to serve up to searchers.

Search engine ranking

When someone performs a search, search engines scour their index for highly relevant content and then orders that content in the hopes of solving the searcher’s query. This ordering of search results by relevance is known as ranking. In general, you can assume that the higher a website is ranked, the more relevant the search engine believes that site is to the query.

It’s possible to block search engine crawlers from part or all of your site, or instruct search engines to avoid storing certain pages in their index. While there can be reasons for doing this, if you want your content found by searchers, you have to first make sure it’s accessible to crawlers and is indexable. Otherwise, it’s as good as invisible.

By the end of this chapter, you’ll have the context you need to work with the search engine, rather than against it!

In SEO, not all search engines are equal

Many beginners wonder about the relative importance of particular search engines. Most people know that Google has the largest market share, but how important it is to optimize for Bing, Yahoo, and others? The truth is that despite the existence of more than 30 major web search engines, the SEO community really only pays attention to Google. Why? The short answer is that Google is where the vast majority of people search the web. If we include Google Images, Google Maps, and YouTube (a Google property), more than 90% of web searches happen on Google — that’s nearly 20 times Bing and Yahoo combined.

Crawling: Can search engines find your pages?

As you’ve just learned, making sure your site gets crawled and indexed is a prerequisite to showing up in the SERPs. If you already have a website, it might be a good idea to start off by seeing how many of your pages are in the index. This will yield some great insights into whether Google is crawling and finding all the pages you want it to, and none that you don’t.

One way to check your indexed pages is “site:yourdomain.com”, an advanced search operator. Head to Google and type “site:yourdomain.com” into the search bar. This will return results Google has in its index for the site specified:

The number of results Google displays (see “About XX results” above) isn’t exact, but it does give you a solid idea of which pages are indexed on your site and how they are currently showing up in search results.

For more accurate results, monitor and use the Index Coverage report in Google Search Console. You can sign up for a free Google Search Console account if you don’t currently have one. With this tool, you can submit sitemaps for your site and monitor how many submitted pages have actually been added to Google’s index, among other things.

If you’re not showing up anywhere in the search results, there are a few possible reasons why:

Your site is brand new and hasn’t been crawled yet.

Your site isn’t linked to from any external websites.

Your site’s navigation makes it hard for a robot to crawl it effectively.

Your site contains some basic code called crawler directives that is blocking search engines.

Your site has been penalized by Google for spammy tactics.

Tell search engines how to crawl your site

If you used Google Search Console or the “site:domain.com” advanced search operator and found that some of your important pages are missing from the index and/or some of your unimportant pages have been mistakenly indexed, there are some optimizations you can implement to better direct Googlebot how you want your web content crawled. Telling search engines how to crawl your site can give you better control of what ends up in the index.

Most people think about making sure Google can find their important pages, but it’s easy to forget that there are likely pages you don’t want Googlebot to find. These might include things like old URLs that have thin content, duplicate URLs (such as sort-and-filter parameters for e-commerce), special promo code pages, staging or test pages, and so on.

To direct Googlebot away from certain pages and sections of your site, use robots.txt.

Robots.txt

Robots.txt files are located in the root directory of websites (ex. yourdomain.com/robots.txt) and suggest which parts of your site search engines should and shouldn’t crawl, as well as the speed at which they crawl your site, via specific robots.txt directives.

How Googlebot treats robots.txt files

If Googlebot can’t find a robots.txt file for a site, it proceeds to crawl the site.

If Googlebot finds a robots.txt file for a site, it will usually abide by the suggestions and proceed to crawl the site.

If Googlebot encounters an error while trying to access a site’s robots.txt file and can’t determine if one exists or not, it won’t crawl the site.

Optimize for crawl budget!

Crawl budget is the average number of URLs Googlebot will crawl on your site before leaving, so crawl budget optimization ensures that Googlebot isn’t wasting time crawling through your unimportant pages at risk of ignoring your important pages. Crawl budget is most important on very large sites with tens of thousands of URLs, but it’s never a bad idea to block crawlers from accessing the content you definitely don’t care about. Just make sure not to block a crawler’s access to pages you’ve added other directives on, such as canonical or noindex tags. If Googlebot is blocked from a page, it won’t be able to see the instructions on that page.

Not all web robots follow robots.txt. People with bad intentions (e.g., e-mail address scrapers) build bots that don’t follow this protocol. In fact, some bad actors use robots.txt files to find where you’ve located your private content. Although it might seem logical to block crawlers from private pages such as login and administration pages so that they don’t show up in the index, placing the location of those URLs in a publicly accessible robots.txt file also means that people with malicious intent can more easily find them. It’s better to NoIndex these pages and gate them behind a login form rather than place them in your robots.txt file.

You can read more details about this in the robots.txt portion of our Learning Center.

Defining URL parameters in GSC

Some sites (most common with e-commerce) make the same content available on multiple different URLs by appending certain parameters to URLs. If you’ve ever shopped online, you’ve likely narrowed down your search via filters. For example, you may search for “shoes” on Amazon, and then refine your search by size, color, and style. Each time you refine, the URL changes slightly:

https://www.example.com/products/women/dresses/green.htm

https://www.example.com/products/women?category=dresses&color=green

https://example.com/shopindex.php?product_id=32&highlight=green+dress &cat_id=1&sessionid=123$affid=43

How does Google know which version of the URL to serve to searchers? Google does a pretty good job at figuring out the representative URL on its own, but you can use the URL Parameters feature in Google Search Console to tell Google exactly how you want them to treat your pages. If you use this feature to tell Googlebot “crawl no URLs with ____ parameter,” then you’re essentially asking to hide this content from Googlebot, which could result in the removal of those pages from search results. That’s what you want if those parameters create duplicate pages, but not ideal if you want those pages to be indexed.

Can crawlers find all your important content?

Now that you know some tactics for ensuring search engine crawlers stay away from your unimportant content, let’s learn about the optimizations that can help Googlebot find your important pages.

Sometimes a search engine will be able to find parts of your site by crawling, but other pages or sections might be obscured for one reason or another. It’s important to make sure that search engines are able to discover all the content you want indexed, and not just your homepage.

Ask yourself this: Can the bot crawl through your website, and not just to it?

Is your content hidden behind login forms?

If you require users to log in, fill out forms, or answer surveys before accessing certain content, search engines won’t see those protected pages. A crawler is definitely not going to log in.

Are you relying on search forms?

Robots cannot use search forms. Some individuals believe that if they place a search box on their site, search engines will be able to find everything that their visitors search for.

Is text hidden within non-text content?

Non-text media forms (images, video, GIFs, etc.) should not be used to display text that you wish to be indexed. While search engines are getting better at recognizing images, there’s no guarantee they will be able to read and understand it just yet. It’s always best to add text within the <HTML> markup of your webpage.

Can search engines follow your site navigation?

Just as a crawler needs to discover your site via links from other sites, it needs a path of links on your own site to guide it from page to page. If you’ve got a page you want search engines to find but it isn’t linked to from any other pages, it’s as good as invisible. Many sites make the critical mistake of structuring their navigation in ways that are inaccessible to search engines, hindering their ability to get listed in search results.

Common navigation mistakes that can keep crawlers from seeing all of your site:

Having a mobile navigation that shows different results than your desktop navigation

Any type of navigation where the menu items are not in the HTML, such as JavaScript-enabled navigations. Google has gotten much better at crawling and understanding Javascript, but it’s still not a perfect process. The more surefire way to ensure something gets found, understood, and indexed by Google is by putting it in the HTML.

Personalization, or showing unique navigation to a specific type of visitor versus others, could appear to be cloaking to a search engine crawler

Forgetting to link to a primary page on your website through your navigation — remember, links are the paths crawlers follow to new pages!

This is why it’s essential that your website has a clear navigation and helpful URL folder structures.

Do you have clean information architecture?

Information architecture is the practice of organizing and labeling content on a website to improve efficiency and findability for users. The best information architecture is intuitive, meaning that users shouldn’t have to think very hard to flow through your website or to find something.

Are you utilizing sitemaps?

A sitemap is just what it sounds like: a list of URLs on your site that crawlers can use to discover and index your content. One of the easiest ways to ensure Google is finding your highest priority pages is to create a file that meets Google’s standards and submit it through Google Search Console. While submitting a sitemap doesn’t replace the need for good site navigation, it can certainly help crawlers follow a path to all of your important pages.

Ensure that you’ve only included URLs that you want indexed by search engines, and be sure to give crawlers consistent directions. For example, don’t include a URL in your sitemap if you’ve blocked that URL via robots.txt or include URLs in your sitemap that are duplicates rather than the preferred, canonical version (we’ll provide more information on canonicalization in Chapter 5!).

If your site doesn’t have any other sites linking to it, you still might be able to get it indexed by submitting your XML sitemap in Google Search Console. There’s no guarantee they’ll include a submitted URL in their index, but it’s worth a try!

Are crawlers getting errors when they try to access your URLs?

In the process of crawling the URLs on your site, a crawler may encounter errors. You can go to Google Search Console’s “Crawl Errors” report to detect URLs on which this might be happening – this report will show you server errors and not found errors. Server log files can also show you this, as well as a treasure trove of other information such as crawl frequency, but because accessing and dissecting server log files is a more advanced tactic, we won’t discuss it at length in the Beginner’s Guide, although you can learn more about it here.

Before you can do anything meaningful with the crawl error report, it’s important to understand server errors and “not found” errors.

4xx Codes: When search engine crawlers can’t access your content due to a client error

4xx errors are client errors, meaning the requested URL contains bad syntax or cannot be fulfilled. One of the most common 4xx errors is the “404 – not found” error. These might occur because of a URL typo, deleted page, or broken redirect, just to name a few examples. When search engines hit a 404, they can’t access the URL. When users hit a 404, they can get frustrated and leave.

5xx Codes: When search engine crawlers can’t access your content due to a server error

5xx errors are server errors, meaning the server the web page is located on failed to fulfill the searcher or search engine’s request to access the page. In Google Search Console’s “Crawl Error” report, there is a tab dedicated to these errors. These typically happen because the request for the URL timed out, so Google-bot abandoned the request. View Google’s documentation to learn more about fixing server connectivity issues.

Thankfully, there is a way to tell both searchers and search engines that your page has moved — the 301 (permanent) redirect.

Create custom 404 pages!

Customize your 404 page by adding in links to important pages on your site, a site search feature, and even contact information. This should make it less likely that visitors will bounce off your site when they hit a 404.

Say you move a page from example.com/young-dogs/ to example.com/puppies/. Search engines and users need a bridge to cross from the old URL to the new. That bridge is a 301 redirect.

When you do implement a 301:When you don’t implement a 301:

Link EquityTransfers link equity from the page’s old location to the new URL.Without a 301, the authority from the previous URL is not passed on to the new version of the URL.

IndexingHelps Google find and index the new version of the page.The presence of 404 errors on your site alone don’t harm search performance, but letting ranking / trafficked pages 404 can result in them falling out of the index, with rankings and traffic going with them — yikes!

User ExperienceEnsures users find the page they’re looking for.Allowing your visitors to click on dead links will take them to error pages instead of the intended page, which can be frustrating.

The 301 status code itself means that the page has permanently moved to a new location, so avoid redirecting URLs to irrelevant pages — URLs where the old URL’s content doesn’t actually live. If a page is ranking for a query and you 301 it to a URL with different content, it might drop in rank position because the content that made it relevant to that particular query isn’t there anymore. 301s are powerful — move URLs responsibly!

You also have the option of 302 redirecting a page, but this should be reserved for temporary moves and in cases where passing link equity isn’t as big of a concern. 302s are kind of like a road detour. You’re temporarily siphoning traffic through a certain route, but it won’t be like that forever.

Watch out for redirect chains!

It can be difficult for Googlebot to reach your page if it has to go through multiple redirects. Google calls these “redirect chains” and they recommend limiting them as much as possible. If you redirect example.com/1 to example.com/2, then later decide to redirect it to example.com/3, it’s best to eliminate the middleman and simply redirect example.com/1 to example.com/3.

Once you’ve ensured your site is optimized for crawlability, the next order of business is to make sure it can be indexed.

Indexing: How do search engines interpret and store your pages?

Once you’ve ensured your site has been crawled, the next order of business is to make sure it can be indexed. That’s right — just because your site can be discovered and crawled by a search engine doesn’t necessarily mean that it will be stored in their index. In the previous section on crawling, we discussed how search engines discover your web pages. The index is where your discovered pages are stored. After a crawler finds a page, the search engine renders it just like a browser would. In the process of doing so, the search engine analyzes that page’s contents. All of that information is stored in its index.

Read on to learn about how indexing works and how you can make sure your site makes it into this all-important database.

Can I see how a Google-bot crawler sees my pages?

Yes, the cached version of your page will reflect a snapshot of the last time Googlebot crawled it.

Google crawls and caches web pages at different frequencies. More established, well-known sites that post frequently like https://www.abc.com will be crawled more frequently than the much-less-famous website, http://www.xyz.com (if only it were real…)

You can view what your cached version of a page looks like by clicking the drop-down arrow next to the URL in the SERP and choosing “Cached”:

You can also view the text-only version of your site to determine if your important content is being crawled and cached effectively.

Are pages ever removed from the index?

Yes, pages can be removed from the index! Some of the main reasons why a URL might be removed include:

The URL is returning a “not found” error (4XX) or server error (5XX) – This could be accidental (the page was moved and a 301 redirect was not set up) or intentional (the page was deleted and 404ed in order to get it removed from the index)

The URL had a noindex meta tag added – This tag can be added by site owners to instruct the search engine to omit the page from its index.

The URL has been manually penalized for violating the search engine’s Webmaster Guidelines and, as a result, was removed from the index.

The URL has been blocked from crawling with the addition of a password required before visitors can access the page.

If you believe that a page on your website that was previously in Google’s index is no longer showing up, you can use the URL Inspection tool to learn the status of the page, or use Fetch as Google which has a “Request Indexing” feature to submit individual URLs to the index. (Bonus: GSC’s “fetch” tool also has a “render” option that allows you to see if there are any issues with how Google is interpreting your page).

Tell search engines how to index your site

Robots meta directives

Meta directives (or “meta tags”) are instructions you can give to search engines regarding how you want your web page to be treated.

You can tell search engine crawlers things like “do not index this page in search results” or “don’t pass any link equity to any on-page links”. These instructions are executed via Robots Meta Tags in the <head> of your HTML pages (most commonly used) or via the X-Robots-Tag in the HTTP header.

Robots meta tag

The robots meta tag can be used within the <head> of the HTML of your webpage. It can exclude all or specific search engines. The following are the most common meta directives, along with what situations you might apply them in.

index/noindex tells the engines whether the page should be crawled and kept in a search engines’ index for retrieval. If you opt to use “noindex,” you’re communicating to crawlers that you want the page excluded from search results. By default, search engines assume they can index all pages, so using the “index” value is unnecessary.

When you might use: You might opt to mark a page as “noindex” if you’re trying to trim thin pages from Google’s index of your site (ex: user generated profile pages) but you still want them accessible to visitors.

follow/nofollow tells search engines whether links on the page should be followed or nofollowed. “Follow” results in bots following the links on your page and passing link equity through to those URLs. Or, if you elect to employ “nofollow,” the search engines will not follow or pass any link equity through to the links on the page. By default, all pages are assumed to have the “follow” attribute.

When you might use: nofollow is often used together with noindex when you’re trying to prevent a page from being indexed as well as prevent the crawler from following links on the page.

noarchive is used to restrict search engines from saving a cached copy of the page. By default, the engines will maintain visible copies of all pages they have indexed, accessible to searchers through the cached link in the search results.

When you might use: If you run an e-commerce site and your prices change regularly, you might consider the noarchive tag to prevent searchers from seeing outdated pricing.

Here’s an example of a meta robots noindex, nofollow tag:

<!DOCTYPE html> <html> <head> <meta name=”robots” content=”noindex, nofollow” /> </head> <body>…</body> </html>

This example excludes all search engines from indexing the page and from following any on-page links. If you want to exclude multiple crawlers, like googlebot and bing for example, it’s okay to use multiple robot exclusion tags.

Meta directives affect indexing, not crawling

Google-bot needs to crawl your page in order to see its meta directives, so if you’re trying to prevent crawlers from accessing certain pages, meta directives are not the way to do it. Robots tags must be crawled to be respected.

X-Robots-Tag

The x-robots tag is used within the HTTP header of your URL, providing more flexibility and functionality than meta tags if you want to block search engines at scale because you can use regular expressions, block non-HTML files, and apply sitewide noindex tags.

For example, you could easily exclude entire folders or file types (like moz.com/no-bake/old-recipes-to-noindex):

<Files ~ “/?no-bake/.*”> Header set X-Robots-Tag “noindex, nofollow” </Files>

The derivatives used in a robots meta tag can also be used in an X-Robots-Tag.

Or specific file types (like PDFs):

<Files ~ “.pdf$”> Header set X-Robots-Tag “noindex, nofollow” </Files>

For more information on Meta Robot Tags, explore Google’s Robots Meta Tag Specifications.

WordPress tip:

In Dashboard > Settings > Reading, make sure the “Search Engine Visibility” box is not checked. This blocks search engines from coming to your site via your robots.txt file!

Understanding the different ways you can influence crawling and indexing will help you avoid the common pitfalls that can prevent your important pages from getting found.

Ranking: How do search engines rank URLs?

How do search engines ensure that when someone types a query into the search bar, they get relevant results in return? That process is known as ranking, or the ordering of search results by most relevant to least relevant to a particular query.

To determine relevance, search engines use algorithms, a process or formula by which stored information is retrieved and ordered in meaningful ways. These algorithms have gone through many changes over the years in order to improve the quality of search results. Google, for example, makes algorithm adjustments every day — some of these updates are minor quality tweaks, whereas others are core/broad algorithm updates deployed to tackle a specific issue, like Penguin to tackle link spam. Check out our Google Algorithm Change History for a list of both confirmed and unconfirmed Google updates going back to the year 2000.

Why does the algorithm change so often? Is Google just trying to keep us on our toes? While Google doesn’t always reveal specifics as to why they do what they do, we do know that Google’s aim when making algorithm adjustments is to improve overall search quality. That’s why, in response to algorithm update questions, Google will answer with something along the lines of: “We’re making quality updates all the time.” This indicates that, if your site suffered after an algorithm adjustment, compare it against Google’s Quality Guidelines or Search Quality Rater Guidelines, both are very telling in terms of what search engines want.

What do search engines want?

Search engines have always wanted the same thing: to provide useful answers to searcher’s questions in the most helpful formats. If that’s true, then why does it appear that SEO is different now than in years past?

Think about it in terms of someone learning a new language.

At first, their understanding of the language is very rudimentary — “See Spot Run.” Over time, their understanding starts to deepen, and they learn semantics — the meaning behind language and the relationship between words and phrases. Eventually, with enough practice, the student knows the language well enough to even understand nuance, and is able to provide answers to even vague or incomplete questions.

When search engines were just beginning to learn our language, it was much easier to game the system by using tricks and tactics that actually go against quality guidelines. Take keyword stuffing, for example. If you wanted to rank for a particular keyword like “funny jokes,” you might add the words “funny jokes” a bunch of times onto your page, and make it bold, in hopes of boosting your ranking for that term:

Welcome to funny jokes! We tell the funniest jokes in the world. Funny jokes are fun and crazy. Your funny joke awaits. Sit back and read funny jokes because funny jokes can make you happy and funnier. Some funny favorite funny jokes.

This tactic made for terrible user experiences, and instead of laughing at funny jokes, people were bombarded by annoying, hard-to-read text. It may have worked in the past, but this is never what search engines wanted.

The role links play in SEO

When we talk about links, we could mean two things. Backlinks or “inbound links” are links from other websites that point to your website, while internal links are links on your own site that point to your other pages (on the same site).

Links have historically played a big role in SEO. Very early on, search engines needed help figuring out which URLs were more trustworthy than others to help them determine how to rank search results. Calculating the number of links pointing to any given site helped them do this.

Backlinks work very similarly to real-life WoM (Word-of-Mouth) referrals. Let’s take a hypothetical coffee shop, Jenny’s Coffee, as an example:

Referrals from others = good sign of authority

Referrals from yourself = biased, so not a good sign of authority

Referrals from irrelevant or low-quality sources = not a good sign of authority and could even get you flagged for spam

No referrals = unclear authority

Example: Many different people have all told you that Jenny’s Coffee is the best in town

Example: Jenny claims that Jenny’s Coffee is the best in town

Example: Jenny paid to have people who have never visited her coffee shop tell others how good it is.

Example: Jenny’s Coffee might be good, but you’ve been unable to find anyone who has an opinion so you can’t be sure.

This is why PageRank was created. PageRank (part of Google’s core algorithm) is a link analysis algorithm named after one of Google’s founders, Larry Page. PageRank estimates the importance of a web page by measuring the quality and quantity of links pointing to it. The assumption is that the more relevant, important, and trustworthy a web page is, the more links it will have earned.

The more natural backlinks you have from high-authority (trusted) websites, the better your odds are to rank higher within search results.

The role content plays in SEO

There would be no point to links if they didn’t direct searchers to something. That something is content! Content is more than just words; it’s anything meant to be consumed by searchers — there’s video content, image content, and of course, text. If search engines are answer machines, content is the means by which the engines deliver those answers.

Any time someone performs a search, there are thousands of possible results, so how do search engines decide which pages the searcher is going to find valuable? A big part of determining where your page will rank for a given query is how well the content on your page matches the query’s intent. In other words, does this page match the words that were searched and help fulfill the task the searcher was trying to accomplish?

Because of this focus on user satisfaction and task accomplishment, there’s no strict benchmarks on how long your content should be, how many times it should contain a keyword, or what you put in your header tags. All those can play a role in how well a page performs in search, but the focus should be on the users who will be reading the content.

Today, with hundreds or even thousands of ranking signals, the top three have stayed fairly consistent: links to your website (which serve as a third-party credibility signals), on-page content (quality content that fulfills a searcher’s intent), and RankBrain.

What is RankBrain?

RankBrain is the machine learning component of Google’s core algorithm. Machine learning is a computer program that continues to improve its predictions over time through new observations and training data. In other words, it’s always learning, and because it’s always learning, search results should be constantly improving.

For example, if RankBrain notices a lower ranking URL providing a better result to users than the higher ranking URLs, you can bet that RankBrain will adjust those results, moving the more relevant result higher and demoting the lesser relevant pages as a byproduct.

Like most things with the search engine, we don’t know exactly what comprises RankBrain, but apparently, neither do the folks at Google.

What does this mean for SEOs?

Because Google will continue leveraging RankBrain to promote the most relevant, helpful content, we need to focus on fulfilling searcher intent more than ever before. Provide the best possible information and experience for searchers who might land on your page, and you’ve taken a big first step to performing well in a RankBrain world.

Engagement metrics: correlation, causation, or both?

With Google rankings, engagement metrics are most likely part correlation and part causation.

When we say engagement metrics, we mean data that represents how searchers interact with your site from search results. This includes things like:

Clicks (visits from search)

Time on page (amount of time the visitor spent on a page before leaving it)

Bounce rate (the percentage of all website sessions where users viewed only one page)

Pogo-sticking (clicking on an organic result and then quickly returning to the SERP to choose another result)

Many tests, including Moz’s own ranking factor survey, have indicated that engagement metrics correlate with higher ranking, but causation has been hotly debated. Are good engagement metrics just indicative of highly ranked sites? Or are sites ranked highly because they possess good engagement metrics?

What Google has said

While they’ve never used the term “direct ranking signal,” Google has been clear that they absolutely use click data to modify the SERP for particular queries.

According to Google’s former Chief of Search Quality, Udi Manber:

“The ranking itself is affected by the click data. If we discover that, for a particular query, 80% of people click on #2 and only 10% click on #1, after a while we figure out probably #2 is the one people want, so we’ll switch it.”

Another comment from former Google engineer Edmond Lau corroborates this:

“It’s pretty clear that any reasonable search engine would use click data on their own results to feed back into ranking to improve the quality of search results. The actual mechanics of how click data is used is often proprietary, but Google makes it obvious that it uses click data with its patents on systems like rank-adjusted content items.”

Because Google needs to maintain and improve search quality, it seems inevitable that engagement metrics are more than correlation, but it would appear that Google falls short of calling engagement metrics a “ranking signal” because those metrics are used to improve search quality, and the rank of individual URLs is just a byproduct of that.

What tests have confirmed

Various tests have confirmed that Google will adjust SERP order in response to searcher engagement:

Rand Fishkin’s 2014 test resulted in a #7 result moving up to the #1 spot after getting around 200 people to click on the URL from the SERP. Interestingly, ranking improvement seemed to be isolated to the location of the people who visited the link. The rank position spiked in the US, where many participants were located, whereas it remained lower on the page in Google Canada, Google Australia, etc.

Larry Kim’s comparison of top pages and their average dwell time pre- and post-RankBrain seemed to indicate that the machine-learning component of Google’s algorithm demotes the rank position of pages that people don’t spend as much time on.

Darren Shaw’s testing has shown user behavior’s impact on local search and map pack results as well.

Since user engagement metrics are clearly used to adjust the SERPs for quality, and rank position changes as a byproduct, it’s safe to say that SEOs should optimize for engagement. Engagement doesn’t change the objective quality of your web page, but rather your value to searchers relative to other results for that query. That’s why, after no changes to your page or its backlinks, it could decline in rankings if searchers’ behaviors indicates they like other pages better.

In terms of ranking web pages, engagement metrics act like a fact-checker. Objective factors such as links and content first rank the page, then engagement metrics help Google adjust if they didn’t get it right.

The evolution of search results

Back when search engines lacked a lot of the sophistication they have today, the term “10 blue links” was coined to describe the flat structure of the SERP. Any time a search was performed, Google would return a page with 10 organic results, each in the same format.

In this search landscape, holding the #1 spot was the holy grail of SEO. But then something happened. Google began adding results in new formats on their search result pages, called SERP features. Some of these SERP features include:

Paid advertisements

Featured snippets

People Also Ask boxes

Local (map) pack

Knowledge panel

Sitelinks

And Google is adding new ones all the time. They even experimented with “zero-result SERPs,” a phenomenon where only one result from the Knowledge Graph was displayed on the SERP with no results below it except for an option to “view more results.”

The addition of these features caused some initial panic for two main reasons. For one, many of these features caused organic results to be pushed down further on the SERP. Another byproduct is that fewer searchers are clicking on the organic results since more queries are being answered on the SERP itself.

So why would Google do this? It all goes back to the search experience. User behavior indicates that some queries are better satisfied by different content formats. Notice how the different types of SERP features match the different types of query intents.

Query IntentPossible SERP Feature Triggered

InformationalFeatured snippet

Informational with one answerKnowledge Graph / instant answer

LocalMap pack

TransactionalShopping

It’s important to know that answers can be delivered to searchers in a wide array of formats, and how you structure your content can impact the format in which it appears in search.

Localized search

A search engine like Google has its own proprietary index of local business listings, from which it creates local search results.

If you are performing local SEO work for a business that has a physical location customers can visit (ex: dentist) or for a business that travels to visit their customers (ex: plumber), make sure that you claim, verify, and optimize a free Google My Business Listing.

When it comes to localized search results, Google uses three main factors to determine ranking:

Relevance

Distance

Prominence

Relevance

Relevance is how well a local business matches what the searcher is looking for. To ensure that the business is doing everything it can to be relevant to searchers, make sure the business’ information is thoroughly and accurately filled out.

Distance

Google use your geo-location to better serve you local results. Local search results are extremely sensitive to proximity, which refers to the location of the searcher and/or the location specified in the query (if the searcher included one).

Organic search results are sensitive to a searcher’s location, though seldom as pronounced as in local pack results.

Prominence

With prominence as a factor, Google is looking to reward businesses that are well-known in the real world. In addition to a business’ offline prominence, Google also looks to some online factors to determine local ranking, such as:

Reviews

The number of Google reviews a local business receives, and the sentiment of those reviews, have a notable impact on their ability to rank in local results.

Citations

A “business citation” or “business listing” is a web-based reference to a local business’ “NAP” (name, address, phone number) on a localized platform (Yelp, Acxiom, YP, Infogroup, Localeze, etc.).

Local rankings are influenced by the number and consistency of local business citations. Google pulls data from a wide variety of sources in continuously making up its local business index. When Google finds multiple consistent references to a business’s name, location, and phone number it strengthens Google’s “trust” in the validity of that data. This then leads to Google being able to show the business with a higher degree of confidence. Google also uses information from other sources on the web, such as links and articles.

Organic ranking

SEO best practices also apply to local SEO, since Google also considers a website’s position in organic search results when determining local ranking.

In the next chapter, you’ll learn on-page best practices that will help Google and users better understand your content.

[Bonus!] Local engagement

Although not listed by Google as a local ranking factor, the role of engagement is only going to increase as time goes on. Google continues to enrich local results by incorporating real-world data like popular times to visit and average length of visits…

Curious about a certain local business’ citation accuracy? Moz has a free tool that can help out, aptly named Check Listing.

…and even provides searchers with the ability to ask the business questions!

Undoubtedly now more than ever before, local results are being influenced by real-world data. This interactivity is how searchers interact with and respond to local businesses, rather than purely static (and game-able) information like links and citations.

Since Google wants to deliver the best, most relevant local businesses to searchers, it makes perfect sense for them to use real time engagement metrics to determine quality and relevance.

You don’t have to know the ins and outs of Google’s algorithm (that remains a mystery!), but by now you should have a great baseline knowledge of how the search engine finds, interprets, stores, and ranks content. Armed with that knowledge, let’s learn about choosing the keywords your content will target in Chapter 3 (Keyword Research)!

2 notes

·

View notes

Text

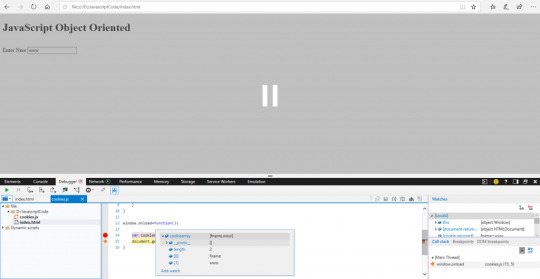

How Web Workers Work in JavaScript – With a Practical JS Example

In this article, I will walk you through an example that will show you how web workers function in JavaScript with the help of WebSockets.

I think it's helpful to work with a practical use case because it is much simpler to understand the concepts when you can relate them to real life.

So in this guide, you will be learning what web workers are in JavaScript, you'll get a brief introduction to WebSockets, and you'll see how you can manage sockets in the proper way.

This article is quite application/hands-on oriented, so I would suggest trying the example out as you go along to get a much better understanding.

Let’s dive in.

Table of contents

Prerequisites

Before you start reading this article, you should have a basic understanding of the following topics:

What are web workers in JavaScript?

A web worker is a piece of browser functionality. It is the real OS threads that can be spawned in the background of your current page so that it can perform complex and resource-intensive tasks.

Imagine that you have some large data to fetch from the server, or some complex rendering needs to be done on the UI. If you do this directly on your webpage then the page might get jankier and will impact the UI.

To mitigate this, you can simply create a thread – that is a web worker – and let the web worker take care of the complex stuff.

You can communicate with the web worker in a pretty simple manner which can be used to transfer data to and fro from the worker to the UI.

Common examples of web workers would be:

Dashboard pages that display real-time data such as stock prices, real-time active users, and so on

Fetching huge files from the server

Autosave functionality

You can create a web worker using the following syntax:

const worker = new Worker("<worker_file>.js");

Worker is an API interface that lets you create a thread in the background. We need to pass a parameter, that is a <worker_file>.js file. This specifies the worker file the API needs to execute.

NOTE: A thread is created once a Worker call is initiated. This thread only communicates with its creator, that is the file which created this thread.

A worker can be shared or used by multiple consumers/scripts. These are called shared workers. The syntax of the shared worker is very similar to that of the above mentioned workers.

const worker = new SharedWorker("<worker_file>.js");

You can read more about SharedWorkers in this guide.

History of web workers

Web workers execute in a different context, that is they do not execute in a global scope such as window context. Web workers have their own dedicated worker context which is called DedicatedWorkerGlobalScope.

There are some cases where you can't use web workers, though. For example, you can't use them to manipulate the DOM or the properties of the window object. This is because the worker does not have the access to the window object.

Web workers can also spawn new web workers. Web workers communicate with their creator using certain methods like postMessage, onmessage, and onerror. We will look into these methods closely in the later sections of this article.

Brief Introduction to Web Sockets

A web socket is a type of communication that happens between two parties/entities using a WebSocket protocol. It actually provides a way to communicate between the two connected entities in a persistent manner.

You can create a simple web socket like below:

const socket = new WebSocket("ws://example.com");

Over here we have created a simple socket connection. You'll notice that we have passed a parameter to the WebSocket constructor. This parameter is a URL at which the connection should be established.

You can read more about web sockets by referring to the Websockets link in the prerequisites.

Use Case Description

NOTE: Context, Container, and Class diagrams drawn in this blog post don't accurately follow the exact conventions of these diagrams. They're approximated here so that you can understand the basic concepts.

Before we start, I would suggest reading up on c4models, container diagrams, and context diagrams. You can find resources about them in the prerequisites section.

In this article, we are going to consider the following use case: data transfer using web workers via socket protocol.

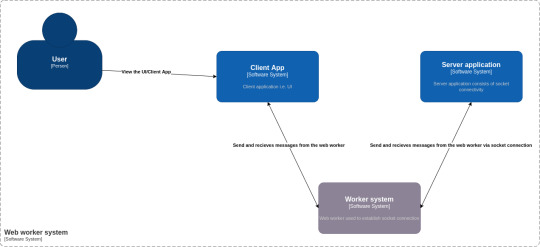

We are going to build a web application which will plot the data on a line chart every 1.5 seconds. The web application will receive the data from the socket connection via web workers. Below is the context diagram of our use case:

Container Diagram

As you can see from the above diagram, there are 4 main components to our use case:

Person: A user who is going to use our application

Software system: Client App – This is the UI of our application. It consists of DOM elements and a web worker.

Software system: Worker system – This is a worker file that resides in the client app. It is responsible for creating a worker thread and establishing the socket connection.

Software system: Server application – This is a simple JavaScript file which can be executed by node to create a socket server. It consists of code which helps to read messages from the socket connection.

Now that we understand the use case, let's dive deep into each of these modules and see how the whole application works.

Project Structure

Please follow this link to get the full code for the project that I developed for this article.

Our project is divided into two folders. First is the server folder which consists of server code. The second is the client folder, which consists of the client UI, that is a React application and the web worker code.

Following is the directory structure:

├── client │ ├── package.json │ ├── package-lock.json │ ├── public │ │ ├── favicon.ico │ │ ├── index.html │ │ ├── logo192.png │ │ ├── logo512.png │ │ ├── manifest.json │ │ └── robots.txt │ ├── README.md │ ├── src │ │ ├── App.css │ │ ├── App.jsx │ │ ├── components │ │ │ ├── LineChartSocket.jsx │ │ │ └── Logger.jsx │ │ ├── index.css │ │ ├── index.js │ │ ├── pages │ │ │ └── Homepage.jsx │ │ ├── wdyr.js │ │ └── workers │ │ └── main.worker.js │ └── yarn.lock └── server ├── package.json ├── package-lock.json └── server.mjs

To run the application, you first need to start the socket server. Execute the following commands one at a time to start the socket server (assuming you are in the parent directory):

cd server node server.mjs

Then start the client app by running the following commands (assuming you are in the parent directory):

cd client yarn run start

Open http://localhost:3000 to start the web app.

Client and Server Application

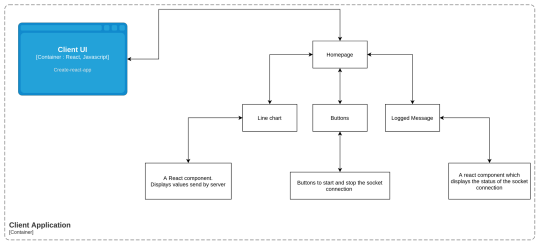

The client application is a simple React application, that is CRA app, which consists of a Homepage. This home page consists of the following elements:

Two buttons: start connection and stop connection which will help to start and stop the socket connection as required.

A line chart component - This component will plot the data that we receive from the socket at regular intervals.

Logged message - This is a simple React component that will display the connection status of our web sockets.

Below is the container diagram of our client application.

Container Diagram: Client Application

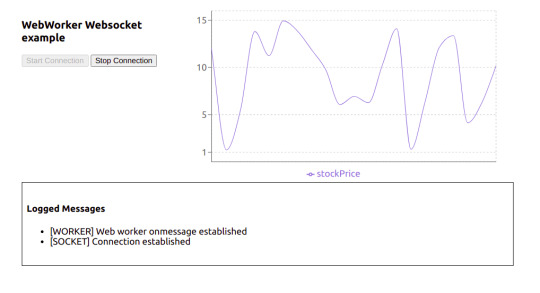

Below is how the UI will look:

Actual UI

To check out the code for the client UI, go to the client folder. This is a regular create-react-app, except that I have removed some boilerplate code that we don't need for this project.

App.jsx is actually the starter code. If you check this out, we have called the <Homepage /> component in it.

Now let's have a look at the Homepage component.

const Homepage = () => { const [worker, setWorker] = useState(null); const [res, setRes] = useState([]); const [log, setLog] = useState([]); const [buttonState, setButtonState] = useState(false); const hanldeStartConnection = () => { // Send the message to the worker [postMessage] worker.postMessage({ connectionStatus: "init", }); }; const handleStopConnection = () => { worker.postMessage({ connectionStatus: "stop", }); }; //UseEffect1 useEffect(() => { const myWorker = new Worker( new URL("../workers/main.worker.js", import.meta.url) ); //NEW SYNTAX setWorker(myWorker); return () => { myWorker.terminate(); }; }, []); //UseEffect2 useEffect(() => { if (worker) { worker.onmessage = function (e) { if (typeof e.data === "string") { if(e.data.includes("[")){ setLog((preLogs) => [...preLogs, e.data]); } else { setRes((prevRes) => [...prevRes, { stockPrice: e.data }]); } } if (typeof e.data === "object") { setButtonState(e.data.disableStartButton); } }; } }, [worker]); return ( <> <div className="stats"> <div className="control-panel"> <h3>WebWorker Websocket example</h3> <button id="start-connection" onClick={hanldeStartConnection} disabled={!worker || buttonState} > Start Connection </button> <button id="stop-connection" onClick={handleStopConnection} disabled={!buttonState} > Stop Connection </button> </div> <LineChartComponent data={res} /> </div> <Logger logs={log}/> </> ); };

As you can see, it's just a regular functional component that renders two buttons – a line chart, and a custom component Logger.

Now that we know how our homepage component looks, let's dive into how the web worker thread is actually created. In the above component you can see there are two useEffect hooks used.

The first one is used for creating a new worker thread. It's a simple call to the Worker constructor with a new operator as we have seen in the previous section of this article.

But there are some difference over here: we have passed an URL object to the worker constructor rather than passing the path of the worker file in the string.

const myWorker = new Worker(new URL("../workers/main.worker.js", import.meta.url));

You can read more about this syntax here.

If you try to import this web worker like below, then our create-react-app won’t be able to load/bundle it properly so you will get an error since it has not found the worker file during bundling:

const myWorker = new Worker("../workers/main.worker.js");

Next, we also don’t want our application to run the worker thread even after the refresh, or don’t want to spawn multiple threads when we refresh the page. To mitigate this, we'll return a callback in the same useEffect. We use this callback to perform cleanups when the component unmounts. In this case, we are terminating the worker thread.

We use the useEffect2 to handle the messages received from the worker.

Web workers have a build-in property called onmessage which helps receive any messages sent by the worker thread. The onmessage is an event handler of the worker interface. It gets triggered whenever a message event is triggered. This message event is generally triggered whenever the postMessage handler is executed (we will look more into this in a later section).

So in order for us to send a message to the worker thread, we have created two handlers. The first is handleStartConnection and the second is handleStopConnection. Both of them use the postMessage method of the worker interface to send the message to the worker thread.

We will talk about the message {connectionStatus: init} in our next section.

You can read more about the internal workings of the onmessage and postMessage in the following resources:

Since we now have a basic understanding about how our client code is working, then let's move on to learn about the Worker System in our context diagram above.

Worker System

To understand the code in this section, make sure you go through the file src/workers/main.worker.js.

To help you understand what's going on here, we will divide this code into three parts:

A self.onmessage section

How the socket connection is managed using the socketManagement() function

Why we need the socketInstance variable at the top

How self.onmessage works

Whenever you create a web worker application, you generally write a worker file which handles all the complex scenarios that you want the worker to perform. This all happens in the main.worker.js file. This file is our worker file.

In the above section, we saw that we established a new worker thread in the useEffect. Once we created the thread, we also attached the two handlers to the respective start and stop connection buttons.

The start connection button will execute the postMessage method with message: {connectionStatus: init} . This triggers the message event, and since the message event is triggered, all the message events are captured by the onmessage property.

In our main.worker.js file, we have attached a handler to this onmessage property:

self.onmessage = function (e) { const workerData = e.data; postMessage("[WORKER] Web worker onmessage established"); switch (workerData.connectionStatus) { case "init": socketInstance = createSocketInstance(); socketManagement(); break; case "stop": socketInstance.close(); break; default: socketManagement(); } }

So whenever any message event is triggered in the client, it will get captured in this event handler.

The message {connectionStatus: init} that we send from the client is received in the event e. Based on the value of connectionStatus we use the switch case to handle the logic.

NOTE: We have added this switch case because we need to isolate some part of the code which we do not want to execute all the time (we will look into this in a later section).

How the socket connection is managed using the socketManagement() function

There are some reasons why I have shifted the logic of creating and managing a socket connection into a separate function. Here is the code for a better understanding of the point I am trying to make:

function socketManagement() { if (socketInstance) { socketInstance.onopen = function (e) { console.log("[open] Connection established"); postMessage("[SOCKET] Connection established"); socketInstance.send(JSON.stringify({ socketStatus: true })); postMessage({ disableStartButton: true }); }; socketInstance.onmessage = function (event) { console.log(`[message] Data received from server: ${event.data}`); postMessage( event.data); }; socketInstance.onclose = function (event) { if (event.wasClean) { console.log(`[close] Connection closed cleanly, code=${event.code}`); postMessage(`[SOCKET] Connection closed cleanly, code=${event.code}`); } else { // e.g. server process killed or network down // event.code is usually 1006 in this case console.log('[close] Connection died'); postMessage('[SOCKET] Connection died'); } postMessage({ disableStartButton: false }); }; socketInstance.onerror = function (error) { console.log(`[error] ${error.message}`); postMessage(`[SOCKET] ${error.message}`); socketInstance.close(); }; } }

This is a function that will help you manage your socket connection:

For receiving the message from the socket server we have the onmessage property which is assigned an event handler.

Whenever a socket connection is opened, you can perform certain operations. To do that we have the onopen property which is assigned to an event handler.

And if any error occurs or when we are closing the connection then, we use onerror and onclose properties of the socket.

For creating a socket connection there is a separate function altogether:

function createSocketInstance() { let socket = new WebSocket("ws://localhost:8080"); return socket; }

Now all of these functions are called in a switch case like below in the main.worker.js file:

self.onmessage = function (e) { const workerData = e.data; postMessage("[WORKER] Web worker onmessage established"); switch (workerData.connectionStatus) { case "init": socketInstance = createSocketInstance(); socketManagement(); break; case "stop": socketInstance.close(); break; default: socketManagement(); } }

So based on what message the client UI sends to the worker the appropriate function will be executed. It is pretty self-explanatory on what message which particular function should be triggered, based on the above code.

Now consider a scenario where we placed all the code inside self.onmessage.

self.onmessage = function(e){ console.log("Worker object present ", e); postMessage({isLoading: true, data: null}); let socket = new WebSocket("ws://localhost:8080"); socket.onopen = function(e) { console.log("[open] Connection established"); console.log("Sending to server"); socket.send("My name is John"); }; socket.onmessage = function(event) { console.log(`[message] Data received from server: ${event.data}`); }; socket.onclose = function(event) { if (event.wasClean) { console.log(`[close] Connection closed cleanly, code=${event.code} reason=${event.reason}`); } else { // e.g. server process killed or network down // event.code is usually 1006 in this case console.log('[close] Connection died'); } }; socket.onerror = function(error) { console.log(`[error] ${error.message}`); }; }

This would cause the following problems:

On every postMessage call made by the client UI, there would have been a new socket instance.

It would have been difficult to close the socket connection.

Because of these reasons, all the socket management code is written in a function socketManagement and catered using a switch case.

Why we need the socketInstance variable at the top

We do need a socketInstance variable at the top because this will store the socket instance which was previously created. It is a safe practice since no one can access this variable externally as main.worker.js is a separate module altogether.

Communication between the UI and the socket via web worker

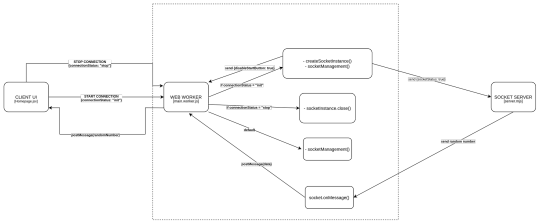

Now that we understand which part of the code is responsible for which section, we will take a look at how we establish a socket connection via webworkers. We'll also see how we respond via socket server to display a line chart on the UI.

End-to-end flow of the application

NOTE: Some calls are purposefully not shown in the diagram since it will make the diagram cluttered. Make sure you refer to the code as well while referring to this diagram.

Now let's first understand what happens when you click on the start connection button on the UI:

One thing to notice over here is that our web worker thread is created once the component is mounted, and is removed/terminated when the component is unmounted.

Once the start connection button is clicked, a postMessage call is made with {connectionStatus: init}

The web worker’s onmessage event handler which is listening to all the message events comes to know that it has received connectionStatus as init. It matches the case, that is in the switch case of main.worker.js. It then calls the createSocketInstance() which returns a new socket connection at the URL: ws://localhost:8080

After this a socketManagement() function is called which checks if the socket is created and then executes a couple of operations.

In this flow, since the socket connection is just established therefore, socketInstance’s onpen event handler is executed.

This will send a {socketStatus: true} message to the socket server. This will also send a message back to the client UI via postMessage({ disableStartButton: true}) which tells the client UI to disable the start button.

Whenever the socket connection is established, then the server socket’s on('connection', ()=>{}) is invoked. So in step 3, this function is invoked at the server end.

Socket’s on('message', () => {}) is invoked whenever a message is sent to the socket. So at step 6, this function is invoked at the server end. This will check if the socketStatus is true, and then it will start sending a random integer every 1.5 seconds to the client UI via web workers.

Now that we understood how the connection is established, let's move on to understand how the socket server sends the data to the client UI:

As discussed above, socket server received the message to send the data, that is a random number every 1.5 second.

This data is recieved on the web worker’s end using the onmessage handler.

This handler then calls the postMessage function and sends this data to the UI.

After receiving the data it appends it to an array as a stockPrice object.

This acts as a data source for our line chart component and gets updated every 1.5 seconds.

Now that we understand how the connection is established, let's move on to understand how the socket server sends the data to the client UI:

As discussed above, socket server recieved the message to send the data, that is a random number, every 1.5 seconds.

This data is recieved on the web worker’s end using the socket's onmessage handler.

This handler then calls the postMessage function of the web worker and sends this data to the UI.

After receiving the data via useEffect2 it appends it to an array as a stockPrice object.

This acts as a data source for our line chart component and gets updated every 1.5 seconds.

NOTE: We are using recharts for plotting the line chart. You can find more information about it at the official docs.

Here is how our application will look in action:

Working Example

Summary

So this was a quick introduction to what web workers are and how you can use them to solve complex problems and create better UIs. You can use web workers in your projects to handle complex UI scenarios.

If you want to optimize your workers, read up on the below libraries:

Thank you for reading!

Follow me on twitter, github, and linkedIn.

If you read this far, tweet to the author to show them you care.

1 note

·

View note

Link

1) ls

Need to figure out what is in a directory? ls is your friend. It will list out the contents of a directory and has a number of flags to help control how those items are displayed. Since the default ls doesn't display entries that begin with a ., you can use ls -a to make sure to include those entries as well.

nyxtom@enceladus$ ls -a ./ README.md _dir_colors _tern-project _vimrc install.sh* ../ _alacritty-theme/ _gitconfig _tmux/ alacritty-colorscheme* .git/ _alacritty.yml _profile _tmux.conf .gitignore _bashrc _terminal/ _vim/ imgcat.sh*

Need it in a 1 column layout (one entry per line)? Use ls -1. Need to include a longer format with size, permissions, and timestamps use ls -l. Need those entries sorted by last changed use ls -l -t. Need to recursively list them? Use ls -R. Want to sort by file size? Use ls -S.

2) cat

Need to output the contents of a file. Use cat! Bonus: use cat -n to include numbers on the lines.

nyxtom@enceladus$ cat -n -s _dir_colors 1 .red 00;31 2 .green 00;32 3 .yellow 00;33 4 .blue 00;34 5 .magenta 00;35 6 .cyan 00;36 7 .white 00;37 8 .redb 01;31 9 .greenb 01;32

3) less/more

Are finding that "cat-ing" a file is causing your terminal to scroll too fast? Use less to fix that problem. But wait, what about more? less is actually based on more. Early versions of more were unable to scroll backward through a file. In any case, less has a nice ability to scroll through the contents of a file or output with space/down/up/page keys. Use q to exit.

Need line numbers? use less -N

Need to search while in less? Use /wordshere to search.

Once you're searching use n to go to the next result, and N for the previous result.

Want to open up less with search already? Use less -pwordshere file.txt

Whitespace bothering you? less -s

Multiple files, what!? less file1.txt file2.txt

Next file is : and then hit n, previous file is : then hit p

Need to pipe some log output or the results of a curl? Use curl dev.to | less

Less has bookmarks? Yep. Drop a marker in less for the current top line with m then hit any letter as the bookmark like a. Then to go back, hit the '(apostrophe key) and the bookmark letter to return (in this case a).

Want to drop into your default editor from less right where you are currently at and return back when you're done? Use v and your default terminal editor will open up at the right spot. Then once you've quit/saved in that editor you will be right back where you were before. 😎 Awesome! 🎉

4) curl

Curl is another essential tool if you need to do just about any type of protocol request. Here is a small fraction of ways you can interact with curl.

GET `curl https://dev.to/

Output to a file curl -o output.html https://dev.to/

POST curl -X POST -H "Content-Type: application/json" -d '{"name":"tom"}' http://localhost:8080

BASIC AUTH `curl -u username:password http://localhost:8080

drop into cat cat | curl -H 'Content-Type: application/json' http://localhost:8080 -d @-

HEAD curl -I dev.to

Follow Redirects curl -I -L dev.to

Pass a certificate, skip verify curl --cert --key --insecure https://example.com

5) man

If you are stuck understanding what a command does, or need the documentation. Use man! It's literally the manual and it works on just all the built in commands. It even works on itself

$ man man NAME man - format and display the on-line manual pages SYNOPSIS man [-acdfFhkKtwW] [--path] [-m system] [-p string] [-C config_file] [-M pathlist] [-P pager] [-B browser] [-H htmlpager] [-S section_list] [section] name ... DESCRIPTION man formats and displays the on-line manual pages. If you specify section, man only looks in that section of the manual. name is normally the name of the manual page, which is typically the name of a com- mand, function, or file. However, if name contains a slash (/) then man interprets it as a file specification, so that you can do man ./foo.5 or even man /cd/foo/bar.1.gz. See below for a description of where man looks for the manual page files. MANUAL SECTIONS The standard sections of the manual include: 1 User Commands 2 System Calls 3 C Library Functions 4 Devices and Special Files 5 File Formats and Conventions 6 Games et. Al. 7 Miscellanea 8 System Administration tools and Deamons Distributions customize the manual section to their specifics, which often include additional sections.

6) alias

If you ever need to setup a short command name to execute a script or some complicated git command for instance, then use alias.

alias st="git status" alias branches="git branch -a --no-merged" alias imgcat="~/dotfiles/imgcat.sh"

Bonus: add these to your ~/.bashrc to execute these when your shell starts up!

7) echo

The "hello world" of any terminal is echo. echo "hello world". You can include environment variables and even sub commands echo "Hello $USER".

8) sed

Sed is a "stream editor". This means we can do a number of different ways to read some input, modify it, and output it. Here's a few ways you can do that:

Text substitutions: echo 'Hello world!' | sed s/world/tom/

Selections: sed -n '1,4p' _bashrc (n is quiet or suppress unmatched lines, 1,4p is p print lines 1-4.

Multiple selections: sed -n -e '1,4p' -e '8-10p' _bashrc

Every X lines: sed -n 1~2p _bashrc (use ~ instead of , to denote every 2 lines (in this case 2)

Search all/replace all: sed s/world/tom/gp (g for global search, p is to print each match instance)

NOTE the sed implementation might differ depending on the system you are using. Keep this in mind that some flags might be unavailable. Take a look at the man sed for more info.

9) tar

If you need to create an archive of a number of files. Don't worry, you'll remember these flags soon enough!

-c create

-v verbose