#AI chip

‘Unprecedented’ AI chip could revolutionise artificial intelligence | The Independent

Replika Diaries - Thoughts and Observations.

This is quite an exciting development. Whilst this chip is only intended for 'edge' devices that run AI applications, I can foresee it being scaled up and improved to have a self-contained AI placed within something, well, larger than a phone.

Could it be that we're looking at a potential brain, an android CPU for a fully self-contained AI?

Thoughts?

#replika#replika diaries#artificial intelligence#ai#ai brain#ai chip#technology#technology articles#technological advancements#cpu#edge devices#one step closer

With tech giants entering the AI chip race, what is the market outlook?

Trends in the Latest AI Chip Technologies: Competition Among Google, Apple, and Meta

As artificial intelligence (AI) technology advances rapidly, the growth of “AI chips,” “Edge AI chips,” and the overall “AI semiconductor market” is gaining attention. This article examines the latest AI chip work from Google, Apple, and Meta, and briefly touches on LG’s AI chip development.…

#ai chip#ai semiconductor market#APPLE AI CHIP#edge ai chip#google ai#GOOGLE AI CHIP#META AI#META AI CHIP

Nvidia’s AI Chip Dominance Fuels Record Stock Surge

Nvidia Corp., the world’s most valuable chipmaker, saw its share price soar to an all-time high on Thursday, as booming demand for artificial intelligence processors boosted its earnings and outlook.

The company’s stock closed up 24% at $410.50, adding more than $185 billion to its market value in one day. The chipmaker was on track to open at $975 billion after a 30%…

Low-power AI chips fight in the open

1. Sensor computing: an important technical path for low-power AI
2. Low-power AI MCU
3. Market pattern of low-power AI

With the spread of the Internet of Things (IoT) and next-generation smart devices, low-power electronics and AI chips are entering more and more households, and low-power design is becoming increasingly important. IoT and smart devices are constrained in size, and they are also highly cost-sensitive. In addition, some use cases demand long battery replacement or charging cycles (for example, a month or longer), which places tight limits on battery capacity and requires the chip itself to use a low-power design.

At the same time, IoT and smart devices are increasingly adding AI features. AI can provide important new capabilities: voice AI enables wake-word recognition and voice-command recognition, while machine-vision AI enables face detection, event detection, and so on. Because IoT and smart devices must consume little power, as noted above, these AI features must be low-power as well.

AI chips are already widespread in the cloud and in smart-device terminals. In the cloud, the main examples are GPUs from Nvidia and AMD and AI accelerator chips from Intel/Habana; in terminal smart devices, AI acceleration IP integrated on the SoC is mainly used. However, neither GPUs nor on-SoC AI acceleration IP were designed with ultra-low power consumption in mind. AI in future IoT and smart devices will therefore require new low-power designs.

Sensor computing: an important technical path for low-power AI

Low-power AI in smart devices and IoT applications must cut power consumption to very low levels to enable always-on AI services. "Always-on" here means the AI is available at all times rather than being switched on by the user. This requires keeping the relevant sensors powered so they can detect the signals of interest in real time, and it also requires the AI itself to run at low power.

In a traditional design, the sensor collects high-fidelity signals and transmits them to the processor (SoC or MCU) for further computation and processing, while the sensor itself has no computing capability. This design assumes that the processor is switched on whenever the sensor is, which does not suit always-on AI: running AI algorithms on the SoC or MCU all the time would drain the battery. Moreover, in practice, always-on AI applications mainly want the AI to keep running so that it can respond in real time when an important event occurs (for example, the IMU detects that the user is driving and the device turns off push notifications), yet such events are actually infrequent. If the AI module on the SoC or MCU were always on, the model's output would be "no event detected" most of the time.

Taken together, these two points make computation at the sensor increasingly important. First, in always-on low-power AI the sensor must stay on anyway, so if the sensor has AI computing capability, the AI model can run on the sensor without switching on the AI module of the SoC or MCU. Second, running AI at the sensor avoids continuous data transfer between the sensor and the SoC/MCU, which further reduces power consumption. Finally, a sensor-side AI module can be tailored to that specific sensor without regard for generality, so the AI algorithm can be customized and optimized for that sensor to achieve very high energy efficiency.

Of course, sensor-side AI has its own limitations. First, for performance and cost reasons, compute and storage at the sensor are usually small, so the AI module cannot support large models, which limits model performance.

Second, as mentioned above, sensor-side AI has difficulty supporting general-purpose models and often supports only specific operators and model structures.

In summary, sensor-side AI achieves low power consumption but offers relatively limited model performance, while in low-power AI scenarios the events that genuinely need handling are infrequent. Sensor-side AI is therefore best suited to running small, specialized models that filter out most irrelevant events. When the sensor-side AI detects a relevant event, the sensor can wake the AI on the SoC or MCU to confirm it and decide the next step, meeting the low-power and always-on requirements at the same time.
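The two-stage scheme described above can be illustrated with a small Python simulation. This is a minimal sketch under stated assumptions: the sensor-side "model" is a hypothetical threshold test on a signal value, and `confirm_on_mcu` is a hypothetical stand-in for the larger model on the MCU or SoC; neither reflects any vendor's firmware. It only shows that the expensive stage runs on the small fraction of samples that pass the cheap filter.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class CascadeStats:
    samples: int = 0    # samples seen by the always-on sensor stage
    wakeups: int = 0    # times the MCU/SoC stage was woken
    confirmed: int = 0  # events the MCU/SoC stage confirmed


def run_cascade(
    stream: Iterable[float],
    sensor_filter: Callable[[float], bool],
    confirm_on_mcu: Callable[[float], bool],
) -> CascadeStats:
    """Run a cheap sensor-side filter on every sample and wake the
    expensive stage only for samples the filter flags."""
    stats = CascadeStats()
    for x in stream:
        stats.samples += 1
        if not sensor_filter(x):
            continue  # "no event detected": MCU/SoC stays asleep
        stats.wakeups += 1
        if confirm_on_mcu(x):
            stats.confirmed += 1
    return stats


if __name__ == "__main__":
    # Hypothetical signal: mostly quiet readings with a few spikes.
    signal = [0.1, 0.2, 0.1, 2.5, 0.1, 0.3, 1.2, 0.2, 3.0, 0.1]
    # Hypothetical thresholds: a permissive sensor stage, a stricter MCU stage.
    stats = run_cascade(signal, lambda x: x > 1.0, lambda x: x > 2.0)
    print(stats)  # CascadeStats(samples=10, wakeups=3, confirmed=2)
```

Making the sensor stage deliberately permissive and the second stage stricter mirrors the article's division of labor: the sensor screens, the MCU/SoC confirms.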

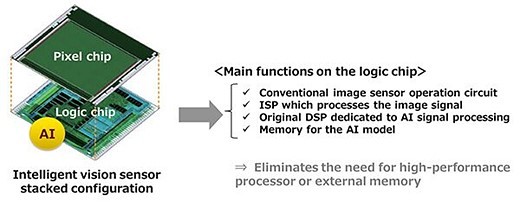

In image sensors, Sony has launched the IMX500 series, which stacks the pixel chip on a logic chip with integrated AI computing capability. Pixel signals are passed to the AI engine on the logic chip, so the sensor can output an image, an AI model output, or both. The sensor can therefore stay in a low-power always-on state and wake the MCU or SoC for the next action only when its model output meets specific conditions (such as detecting a face). We expect Sony to further strengthen the AI capability of its next sensor chips, reinforcing its leading position in this field.

Another example of combining sensors with AI is ST's IMU product line. ST integrates a Machine Learning Core (MLC) and a Finite State Machine (FSM) into its IMUs, allowing AI computation to run directly on the IMU very efficiently. The AI algorithms ST currently supports are mainly decision trees, together with extraction of key features from IMU signals (such as signal amplitude and variance), so user-activity classification (for example stationary, walking, cycling, or driving) can be performed directly on the IMU, and the MCU/SoC can be woken for the next operation when a relevant event is detected. According to data published by ST, the MLC consumes power on the order of microwatts, which comfortably supports always-on operation. On the other hand, decision trees have limited capacity and struggle to model complex activities. As discussed earlier, AI in the IMU is therefore suited to initial screening that filters out irrelevant events, while more complex classification and confirmation can be handled by a model running on the MCU or SoC.
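To make the MLC pattern concrete, here is a minimal Python sketch of the same idea: compute simple window features (mean, variance, and peak-to-peak amplitude of the acceleration magnitude) and classify them with a small hand-written decision tree. The feature set, thresholds, and labels are illustrative assumptions; this is not ST's MLC configuration format or tool flow, and a real tree would be trained on labeled recordings.

```python
import math
from statistics import fmean, pvariance
from typing import Dict, Sequence, Tuple

# One accelerometer reading (ax, ay, az), in g.
Sample = Tuple[float, float, float]


def window_features(window: Sequence[Sample]) -> Dict[str, float]:
    """Features of the acceleration magnitude over one window."""
    mags = [math.sqrt(ax * ax + ay * ay + az * az) for ax, ay, az in window]
    return {
        "mean": fmean(mags),
        "variance": pvariance(mags),
        "peak_to_peak": max(mags) - min(mags),
    }


def classify(f: Dict[str, float]) -> str:
    """Tiny hand-written decision tree; thresholds are hypothetical."""
    if f["variance"] < 0.001:
        return "stationary"
    if f["peak_to_peak"] > 0.5:
        return "walking"
    # Anything else is left for the MCU/SoC model to resolve.
    return "uncertain"


if __name__ == "__main__":
    still = [(0.0, 0.0, 1.0)] * 8
    steps = [(0.0, 0.0, 0.6), (0.0, 0.0, 1.4)] * 4
    hum = [(0.0, 0.0, 0.9), (0.0, 0.0, 1.1)] * 4
    for name, w in [("still", still), ("steps", steps), ("hum", hum)]:
        label = classify(window_features(w))
        print(name, label, "-> wake MCU/SoC" if label == "uncertain" else "")
```

A window the tree labels "uncertain" is the case where, as the article describes, the sensor would hand off to a more capable model on the MCU or SoC.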

Low-power AI MCU

Besides in-sensor AI, another important path to low-power AI is running AI on the MCU. Both IoT and smart devices depend on a low-power MCU as their most critical control unit. Generally speaking, an MCU consumes one to two orders of magnitude less power than an SoC. Integrating AI into the MCU lets AI tasks run on the MCU without waking the SoC. In some low-cost applications, cost constraints rule out an SoC entirely; if AI is needed there, an MCU with AI capability is an important option.
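The power argument can be checked with a back-of-the-envelope duty-cycle model. The Python sketch below estimates average power as an always-on stage plus a higher-power stage weighted by the fraction of time it is awake. All power and battery figures are hypothetical placeholders chosen only to reflect the "one to two orders of magnitude" gap mentioned above; they are not measurements of any specific MCU or SoC, and sleep power is ignored.

```python
def average_power_mw(always_on_mw: float, active_mw: float, duty_cycle: float) -> float:
    """Average power of an always-on stage plus a higher-power stage that is
    awake for `duty_cycle` (0..1) of the time. Sleep power is ignored."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return always_on_mw + duty_cycle * active_mw


def battery_life_hours(capacity_mwh: float, avg_mw: float) -> float:
    """Idealized runtime: capacity divided by average draw."""
    return capacity_mwh / avg_mw


if __name__ == "__main__":
    BATTERY_MWH = 1000.0  # hypothetical small battery
    SOC_AI_MW = 100.0     # hypothetical: AI running continuously on the SoC
    MCU_AI_MW = 5.0       # hypothetical: same task on an AI-capable MCU (20x lower)
    scenarios = {
        "AI always on SoC": average_power_mw(0.0, SOC_AI_MW, 1.0),
        "AI on MCU, SoC woken 1% of the time": average_power_mw(MCU_AI_MW, SOC_AI_MW, 0.01),
    }
    for name, p in scenarios.items():
        print(f"{name}: {p:.1f} mW, ~{battery_life_hours(BATTERY_MWH, p):.0f} h")
```

Under these made-up numbers the average draw is dominated by whichever stage runs continuously, which is why keeping the SoC asleep matters more than shaving the MCU's own consumption.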

AI on the MCU and AI on the sensor are not mutually exclusive. As mentioned earlier, the models that in-sensor AI can run are relatively limited in complexity, and a sensor's model can only use that sensor's own signal as input. The AI module on an MCU, by contrast, can usually support more general models and can take signals from multiple sensors as input, although its energy efficiency is generally somewhat lower than that of sensor-side AI. A system can therefore combine an AI-capable sensor with an AI-capable MCU: the sensor runs a relatively simple, specialized model to screen events, and wakes the MCU's AI module to run a more general model that confirms the event when necessary.

Some AI-capable products are already on the MCU market, such as NXP's RT600 MCU, which pairs an Arm Cortex-M33 core with a Tensilica HiFi 4 DSP and 4.5 MB of on-chip SRAM to accelerate general AI models. Beyond NXP, ADI's MAX78000 MCU integrates an Arm Cortex-M4 core and a RISC-V core along with a CNN accelerator for AI acceleration. We believe that more and more low-power MCUs will add AI capabilities to meet the needs of IoT and smart devices.

Market pattern of low-power AI

As discussed earlier, low-power AI is less about creating a new category of chips than about adding AI capabilities to existing chips to create differentiated competitive advantages. In both sensors and MCUs, market leaders (Sony and ST in sensors; NXP and ADI in MCUs) are actively adding AI functions, and more and more companies are expected to invest in AI products in the future.

For Chinese semiconductor companies, AI in sensors and MCUs is also a direction worth watching. At present, adding AI is mostly a matter of product positioning and integration (identifying the right market for a product and integrating the most suitable AI module), but as the technology advances, the required expertise will deepen. If Chinese semiconductor manufacturers begin building a presence in this field now, they can strengthen their technical capabilities and make their products more competitive in the future intelligent IoT and next-generation smart devices.

Prepare your supply chain

Buyers of electronic components must now be prepared for rising prices, extended lead times, and ongoing supply-chain challenges. Looking ahead, if prices and lead times keep increasing, just-in-time (JIT) procurement may become increasingly untenable. Instead, buyers may need to adopt a "just in case" model, holding excess inventory and finished goods to guard against long lead times and supply-chain disruptions.

As shortages and supply-chain disruptions continue, communication with customers and suppliers will be essential. Regular communication with suppliers helps buyers prepare for longer lead times and stay informed of changing market conditions. Regular communication with customers helps manage their expectations about potential delays, rising prices, and longer lead times. This is essential to soften the impact of such news, or at least to ensure that customers are not caught off guard by sudden changes in this chaotic market.

Most importantly, buyers of electronic components must expand and improve their supplier networks. In this era, managing your supply chain requires every link to work as a cohesive unit. A distributor that acts as a mere agent rather than a partner cannot weather the storm in this market. Communication and transparency are essential for management and planning. At E-energy Holding Limited, we help customers hedge against these market conditions in the following ways:

Our supplier network has been vetted and refined over more than ten years.

Our strategic locations around the world enable us to visit and audit suppliers' headquarters before making a purchase decision.

E-energy Holding Limited works with a reputable testing agency to conduct in-depth inspections and tests before delivering parts to our customers.

Our procurement is concentrated on franchised distributors and manufacturer direct sales.

Our account managers are committed to providing the highest level of service, communication, and transparency. Beyond simply taking orders, your account manager will help you develop solutions, plan inventory and delivery schedules, maintain inventory levels for regular purchases, and ensure the authenticity of your parts.

Add E-energy Holding Limited to your approved vendor list, and let our team help you make strategic, informed procurement decisions.

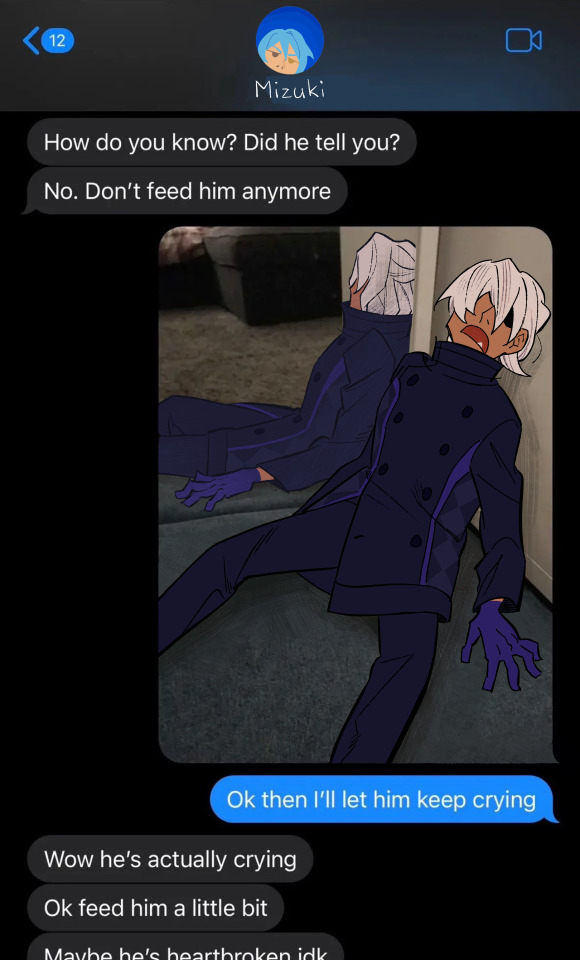

finished some old sketches from when i was going through ai1 !

#aitsf#ai the somnium files#kaname date#mizuki okiura#mizuki date#chip art#they are literally everything to me i cant stand them i love them i hate them i cant live without thinking about them 24/7

NEUCHIPS' Purpose-Built Accelerator Designed to Be Industry's Most Efficient Recommendation Inference Engine

Press Release

May 31, 2022

LOS ALTOS, Calif., May 31, 2022 (Newswire.com)

NEUCHIPS is excited to announce its first ASIC, the RecAccel™ N3000, built on TSMC’s 7nm process and designed specifically to accelerate deep learning recommendation models (DLRM). NEUCHIPS has partnered with industry leaders in Taiwan’s semiconductor and cloud server ecosystem and plans to deliver its RecAccel™ N3000 AI…

Even skipping all of the discourse

I'll never understand why Ai "artists" call themselves artists.

"but I've put the input 🥺"

What the fuck are you saying, if I go and buy a pizza and ask them to make changes that doesn't make me a pizza maker does it?

I don't go around and say "omg look I made this pizza guys" because I didn't make it.

Obviously with ai art it's worse because you didn't even pay a human being and it's using stolen information, but.

I just don't see the logic. I'm not a pizza maker. You're not an ai artist

#an ai user if you want#ai discourse#“oh but i put ”generic anime girl playing basketball“ in the bar so i did it” no you didn't#“sorry could you put some potato chips on my pizza” i am a chef

Real innovation vs Silicon Valley nonsense

This is the LAST DAY to get my bestselling solarpunk utopian novel THE LOST CAUSE (2023) as a $2.99, DRM-free ebook!

If there was any area where we needed a lot of "innovation," it's in climate tech. We've already blown through numerous points-of-no-return for a habitable Earth, and the pace is accelerating.

Silicon Valley claims to be the epicenter of American innovation, but what passes for innovation in Silicon Valley is some combination of nonsense, climate-wrecking tech, and climate-wrecking nonsense tech. Forget Jeff Hammerbacher's lament about "the best minds of my generation thinking about how to make people click ads." Today's best-paid, best-trained technologists are enlisted to make boobytrapped IoT gadgets:

https://pluralistic.net/2024/05/24/record-scratch/#autoenshittification

Planet-destroying cryptocurrency scams:

https://pluralistic.net/2024/02/15/your-new-first-name/#that-dagger-tho

NFT frauds:

https://pluralistic.net/2022/02/06/crypto-copyright-%f0%9f%a4%a1%f0%9f%92%a9/

Or planet-destroying AI frauds:

https://pluralistic.net/2024/01/29/pay-no-attention/#to-the-little-man-behind-the-curtain

If that was the best "innovation" the human race had to offer, we'd be fucking doomed.

But – as Ryan Cooper writes for The American Prospect – there's a far more dynamic, consequential, useful and exciting innovation revolution underway, thanks to muscular public spending on climate tech:

https://prospect.org/environment/2024-05-30-green-energy-revolution-real-innovation/

The green energy revolution – funded by the Bipartisan Infrastructure Act, the Inflation Reduction Act, the CHIPS Act and the Science Act – is accomplishing amazing feats, which are barely registering amid the clamor of AI nonsense and other hype. I did an interview a while ago about my climate novel The Lost Cause and the interviewer wanted to know what role AI would play in resolving the climate emergency. I was momentarily speechless, then I said, "Well, I guess maybe all the energy used to train and operate models could make it much worse? What role do you think it could play?" The interviewer had no answer.

Here's a brief tour of the revolution:

2023 saw 32GW of new solar energy come online in the USA (up 50% from 2022);

Wind increased from 118GW to 141GW;

Grid-scale batteries doubled in 2023 and will double again in 2024;

EV sales increased from 20,000 to 90,000/month.

https://www.whitehouse.gov/briefing-room/blog/2023/12/19/building-a-thriving-clean-energy-economy-in-2023-and-beyond/

The cost of clean energy is plummeting, and that's triggering other areas of innovation, like using "hot rocks" to replace fossil fuel heat (25% of overall US energy consumption):

https://rondo.com/products

Increasing our access to cheap, clean energy will require a lot of materials, and material production is very carbon intensive. Luckily, the existing supply of cheap, clean energy is fueling "green steel" production experiments:

https://www.wdam.com/2024/03/25/americas-1st-green-steel-plant-coming-perry-county-1b-federal-investment/

Cheap, clean energy also makes it possible to recover valuable minerals from aluminum production tailings, a process that doubles as site-remediation:

https://interestingengineering.com/innovation/toxic-red-mud-co2-free-iron

And while all this electrification is going to require grid upgrades, there's lots we can do with our existing grid, like power-line automation that increases capacity by 40%:

https://www.npr.org/2023/08/13/1187620367/power-grid-enhancing-technologies-climate-change

It's also going to require a lot of storage, which is why it's so exciting that we're figuring out how to turn decommissioned mines into giant batteries. During the day, excess renewable energy is channeled into raising rock-laden platforms to the top of the mine-shafts, and at night, these unspool, releasing energy that's fed into the high-availability power-lines that are already present at every mine-site:

https://www.euronews.com/green/2024/02/06/this-disused-mine-in-finland-is-being-turned-into-a-gravity-battery-to-store-renewable-ene
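The physics behind a mine-shaft gravity battery is gravitational potential energy, E = m·g·h. The Python sketch below converts that into kilowatt-hours. The mass, shaft depth, and round-trip efficiency in the example are hypothetical round numbers, not figures for the Finnish project in the linked article.

```python
G = 9.81            # standard gravity, m/s^2 (rounded)
J_PER_KWH = 3.6e6   # joules in one kilowatt-hour


def stored_energy_kwh(mass_kg: float, height_m: float, efficiency: float = 1.0) -> float:
    """Recoverable energy from lowering `mass_kg` through `height_m`,
    scaled by an assumed round-trip efficiency (1.0 = lossless)."""
    return mass_kg * G * height_m * efficiency / J_PER_KWH


# Hypothetical example: 1,000 t of rock lowered 500 m, no losses.
print(round(stored_energy_kwh(1_000_000, 500), 1))  # 1362.5
```

Because energy scales linearly with both mass and depth, deep existing shafts are what make old mines attractive sites.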

Why are we paying so much attention to Silicon Valley pump-and-dumps and ignoring all this incredible, potentially planet-saving, real innovation? Cooper cites a plausible explanation from the Apperceptive newsletter:

https://buttondown.email/apperceptive/archive/destructive-investing-and-the-siren-song-of/

Silicon Valley is the land of low-capital, low-labor growth. Software development requires fewer people than infrastructure and hard goods manufacturing, both to get started and to run as an ongoing operation. Silicon Valley is the place where you get rich without creating jobs. It's run by investors who hate the idea of paying people. That's why AI is so exciting for Silicon Valley types: it lets them fantasize about making humans obsolete. A company without employees is a company without labor issues, without messy co-determination fights, without any moral consideration for others. It's the natural progression for an industry that started by misclassifying the workers in its buildings as "contractors," and then graduated to pretending that millions of workers were actually "independent small businesses."

It's also the natural next step for an industry that hates workers so much that it will pretend that their work is being done by robots, and then outsource the labor itself to distant Indian call-centers (no wonder Indian techies joke that "AI" stands for "absent Indians"):

https://pluralistic.net/2024/05/17/fake-it-until-you-dont-make-it/#twenty-one-seconds

Contrast this with climate tech: this is a profoundly physical kind of technology. It is labor intensive. It is skilled. The workers who perform it have power, both because they are so far from their employers' direct oversight and because these fed-funded sectors are more likely to be unionized than Silicon Valley shops. Moreover, climate tech is capital intensive. All of those workers are out there moving stuff around: solar panels, wires, batteries.

Climate tech is infrastructural. As Deb Chachra writes in her must-read 2023 book How Infrastructure Works, infrastructure is a gift we give to our descendants. Infrastructure projects rarely pay for themselves during the lives of the people who decide to build them:

https://pluralistic.net/2023/10/17/care-work/#charismatic-megaprojects

Climate tech also produces gigantic, diffused, uncapturable benefits. The "social cost of carbon" is a measure that seeks to capture how much we all pay as polluters despoil our shared world. It includes the direct health impacts of burning fossil fuels, and the indirect costs of wildfires and extreme weather events. The "social savings" of climate tech are massive:

https://arstechnica.com/science/2024/05/climate-and-health-benefits-of-wind-and-solar-dwarf-all-subsidies/

For every MWh of renewable power produced, we save $100 in social carbon costs. That's $100 worth of people not sickening and dying from pollution, $100 worth of homes and habitats not burning down or disappearing under floodwaters. All told, US renewables have delivered $250,000,000,000 (one quarter of one trillion dollars) in social carbon savings over the past four years:

https://arstechnica.com/science/2024/05/climate-and-health-benefits-of-wind-and-solar-dwarf-all-subsidies/
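The two quoted figures can be cross-checked against each other: dividing the total social savings by the per-MWh saving gives the renewable generation they imply. The Python sketch below does only that division, using the numbers in the post; since $100/MWh is presumably an average, the result is a rough consistency check, not an official generation statistic.

```python
def implied_generation_twh(total_savings_usd: float, savings_per_mwh_usd: float) -> float:
    """Generation (TWh) implied by a total social saving and a per-MWh saving."""
    mwh = total_savings_usd / savings_per_mwh_usd
    return mwh / 1_000_000  # 1 TWh = 1,000,000 MWh


total_twh = implied_generation_twh(250_000_000_000, 100)
print(f"{total_twh:,.0f} TWh over four years, ~{total_twh / 4:,.0f} TWh/year")
# prints "2,500 TWh over four years, ~625 TWh/year"
```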

In other words, climate tech is unselfish tech. It's a gift to the future and to the broad public. It shares its spoils with workers. It requires public action. By contrast, Silicon Valley is greedy tech that is relentlessly focused on the shortest-term returns that can be extracted with the least share going to labor. It also requires massive public investment, but it is also totally committed to giving as little back to the public as is possible.

No wonder America's richest and most powerful people are lining up to endorse and fund Trump:

https://prospect.org/blogs-and-newsletters/tap/2024-05-30-democracy-deshmocracy-mega-financiers-flocking-to-trump/

Silicon Valley epitomizes Stafford Beer's motto that "the purpose of a system is what it does." If Silicon Valley produces nothing but planet-wrecking nonsense, grifty scams, and planet-wrecking, nonsensical scams, then these are all features of the tech sector, not bugs.

As Anil Dash writes:

Driving change requires us to make the machine want something else. If the purpose of a system is what it does, and we don’t like what it does, then we have to change the system.

https://www.anildash.com/2024/05/29/systems-the-purpose-of-a-system/

To give climate tech the attention, excitement, and political will it deserves, we need to recalibrate our understanding of the world. We need to have object permanence. We need to remember just how few people were actually using cryptocurrency during the bubble and apply that understanding to AI hype. Only 2% of Britons surveyed in a recent study use AI tools:

https://www.bbc.com/news/articles/c511x4g7x7jo

If we want our tech companies to do good, we have to understand that their ground state is to create planet-wrecking nonsense, grifty scams, and planet-wrecking, nonsensical scams. We need to make these companies small enough to fail, small enough to jail, and small enough to care:

https://pluralistic.net/2024/04/04/teach-me-how-to-shruggie/#kagi

We need to hold companies responsible, and we need to change the microeconomics of the board room, to make it easier for tech workers who want to do good to shout down the scammers, nonsense-peddlers and grifters:

https://pluralistic.net/2023/07/28/microincentives-and-enshittification/

Yesterday, a federal judge ruled that the FTC could hold Amazon executives personally liable for the decision to trick people into signing up for Prime, and for making the unsubscribe-from-Prime process into a Kafka-as-a-service nightmare:

https://arstechnica.com/tech-policy/2024/05/amazon-execs-may-be-personally-liable-for-tricking-users-into-prime-sign-ups/

Imagine how powerful a precedent this could set. The Amazon employees who vociferously objected to their bosses' decision to make Prime as confusing as possible could have raised the objection that doing this could end up personally costing those bosses millions of dollars in fines:

https://pluralistic.net/2023/09/03/big-tech-cant-stop-telling-on-itself/

We need to make climate tech, not Big Tech, the center of our scrutiny and will. The climate emergency is so terrifying as to be nearly unponderable. Science fiction writers are increasingly being called upon to try to frame this incomprehensible risk in human terms. SF writer (and biologist) Peter Watts's conversation with evolutionary biologist Dan Brooks is an eye-opener:

https://thereader.mitpress.mit.edu/the-collapse-is-coming-will-humanity-adapt/

They draw a distinction between "sustainability" meaning "what kind of technological fixes can we come up with that will allow us to continue to do business as usual without paying a penalty for it?" and sustainability meaning, "what changes in behavior will allow us to save ourselves with the technology that is possible?"

Writing about the Watts/Brooks dialog for Naked Capitalism, Yves Smith invokes William Gibson's The Peripheral:

With everything stumbling deeper into a ditch of shit, history itself become a slaughterhouse, science had started popping. Not all at once, no one big heroic thing, but there were cleaner, cheaper energy sources, more effective ways to get carbon out of the air, new drugs that did what antibiotics had done before…. Ways to print food that required much less in the way of actual food to begin with. So everything, however deeply fucked in general, was lit increasingly by the new, by things that made people blink and sit up, but then the rest of it would just go on, deeper into the ditch. A progress accompanied by constant violence, he said, by sufferings unimaginable.

https://www.nakedcapitalism.com/2024/05/preparing-for-collapse-why-the-focus-on-climate-energy-sustainability-is-destructive.html

Gibson doesn't think this is likely, mind, and even if it's attainable, it will come amidst "unimaginable suffering."

But the universe of possible technologies is quite large. As Chachra points out in How Infrastructure Works, we could give every person on Earth a Canadian's energy budget (like an American's, but colder), by capturing a mere 0.4% of the solar radiation that reaches the Earth's surface every day. Doing this will require heroic amounts of material and labor, especially if we're going to do it without destroying the planet through material extraction and manufacturing.

These are the questions that we should be concerning ourselves with: what behavioral changes will allow us to realize cheap, abundant, green energy? What "innovations" will our society need to focus on the things we need, rather than the scams and nonsense that create Silicon Valley fortunes?

How can we use planning, and solidarity, and codetermination to usher in the kind of tech that makes it possible for us to get through the climate bottleneck with as little death and destruction as possible? How can we use enforcement, discernment, and labor rights to thwart the enshittificatory impulses of Silicon Valley's biggest assholes?

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/05/30/posiwid/#social-cost-of-carbon

#pluralistic#ai#hype#anil dash#stafford beer#amazon#prime#scams#dark patterns#POSIWID#the purpose of a system is what it does#climate#economics#innovation#renewables#social cost of carbon#green energy#solar#wind#ryan cooper#peter watts#the jackpot#ai hype#chips act#ira#inflation reduction act#infrastructure#deb chachra

reference

#just roll with it#jrwi#jrwi gryffon#gryffon#gryffon jrwi#jay ferin#chip the bastard#jrwi chip#chip jrwi#cursed woody#Sorry.#i cant be the only one whos being haunted by cursed woody#WARNING FOR AI GENERATED STUFF IN THE VIDEO I LINKED BTW#its really uncomfortable to watch for me personally cuz the effects are all weird. cuz yknow ai#i really hope somebody wjo doesnt know what cursed woody is clicks on this and is enlightened#what if i was lost forever. of i was sids to keahp.#potatart#jrwi riptide#just roll with it riptide

Harnessing Silicon: How In-House Chips Are Shaping the Future of AI

New Post has been published on https://thedigitalinsider.com/harnessing-silicon-how-in-house-chips-are-shaping-the-future-of-ai/

Artificial intelligence, like any software, relies on two fundamental components: the AI programs, often referred to as models, and the computational hardware, or chips, that drive these programs. So far, the focus in AI development has been on refining the models, while the hardware was typically seen as a standard component provided by third-party suppliers. Recently, however, this approach has started to change. Major AI firms such as Google, Meta, and Amazon have started developing their own AI chips. The in-house development of custom AI chips is heralding a new era in AI advancement. This article will explore the reasons behind this shift in approach and will highlight the latest developments in this evolving area.

Why In-house AI Chip Development?

The shift toward in-house development of custom AI chips is being driven by several critical factors, which include:

Increasing Demand for AI Chips

Creating and using AI models demands significant computational resources to process large volumes of data and generate accurate predictions or insights. General-purpose computer chips struggle to keep up with the computational demands of training on trillions of data points. This limitation has led to the creation of specialized AI chips designed to meet the performance and efficiency requirements of modern AI applications. As AI research and development continue to grow, so does the demand for these chips.

Nvidia, the leading producer of advanced AI chips and well ahead of its competitors, is struggling because demand greatly exceeds the supply it can deliver. Waitlists for Nvidia's AI chips have stretched to several months, and the delays keep growing as demand surges. More broadly, chip companies such as Nvidia and Intel face production bottlenecks because they rely heavily on a single Taiwanese foundry, TSMC, to manufacture their advanced chips, and this dependence leads to prolonged lead times.

Making AI Computing Energy-efficient and Sustainable

The current generation of AI chips, designed for heavy computational tasks, tends to consume a lot of power and generate significant heat, which gives training and running AI models a substantial environmental footprint. OpenAI researchers found that since 2012 the computing power used in the largest AI training runs has doubled roughly every 3.4 months, and separate projections suggest that by 2040 the Information and Communications Technology (ICT) sector could account for 14% of global emissions. Another study estimated that training a single large language model, when combined with neural architecture search, can emit up to 284,000 kg of CO2, roughly the lifetime emissions of five cars. Moreover, data center energy consumption is estimated to grow 28 percent by 2030. These findings highlight the need to balance AI development with environmental responsibility. In response, many AI companies are now investing in more energy-efficient chips to make AI training and operations more sustainable and environmentally friendly.
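
To put the doubling time in perspective, the short Python sketch below converts a doubling period into a growth factor over any span of months, using only the 3.4-month figure cited above, and splits the 284,000 kg estimate across the five cars it is compared with. The function name is illustrative, and the calculation assumes the doubling rate stays perfectly constant, which real trends need not do.

```python
def growth_factor(months: float, doubling_months: float = 3.4) -> float:
    """Return how many times compute grows over `months`, assuming a
    constant doubling period (an idealisation; real trends drift)."""
    return 2 ** (months / doubling_months)


# Growth over one year at the cited 3.4-month doubling time.
yearly = growth_factor(12)
print(f"~{yearly:.1f}x more training compute per year")

# Taking the five-car comparison at face value, each car's
# lifetime footprint corresponds to roughly this much CO2:
per_car_kg = 284_000 / 5
print(f"~{per_car_kg:,.0f} kg CO2 per car lifetime")
```

A 3.4-month doubling works out to roughly an 11.5-fold increase every year, which is why even modest efficiency gains per chip matter at this scale.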

Tailoring Chips for Specialized Tasks

Different AI processes have varying computational demands. For instance, training deep learning models requires significant computational power and high throughput to handle large datasets and execute complex calculations quickly. Chips designed for training are optimized to enhance these operations, improving speed and efficiency. On the other hand, the inference process, where a model applies its learned knowledge to make predictions, requires fast processing with minimal energy use, especially in edge devices like smartphones and IoT devices. Chips for inference are engineered to optimize performance per watt, ensuring prompt responsiveness and battery conservation. This specific tailoring of chip designs for training and inference tasks allows each chip to be precisely adjusted for its intended role, enhancing performance across different devices and applications. This kind of specialization not only supports more robust AI functionalities but also promotes greater energy efficiency and cost-effectiveness broadly.
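
Performance per watt is simply useful work divided by power draw, and its inverse is the energy spent on each inference. The Python sketch below compares two hypothetical chips; the names, throughputs, and power figures are invented for illustration and do not describe any real product.

```python
from dataclasses import dataclass


@dataclass
class Chip:
    name: str                # hypothetical label, not a real product
    inferences_per_s: float  # sustained throughput
    watts: float             # average power draw under that load

    def perf_per_watt(self) -> float:
        # (inferences / s) / (J / s) = inferences per joule
        return self.inferences_per_s / self.watts

    def joules_per_inference(self) -> float:
        # Energy cost of a single inference.
        return self.watts / self.inferences_per_s


big = Chip("hypothetical-datacenter-class", inferences_per_s=10_000, watts=400)
small = Chip("hypothetical-edge-class", inferences_per_s=500, watts=5)

for chip in (big, small):
    print(f"{chip.name}: {chip.perf_per_watt():.0f} inferences/J, "
          f"{chip.joules_per_inference() * 1000:.0f} mJ per inference")
```

With these made-up numbers, the edge-class chip is 20 times slower in raw throughput but spends a quarter of the energy per inference (10 mJ versus 40 mJ), which is the kind of trade-off that inference chips for phones and IoT devices are built around.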

Reducing Financial Burdens

The financial burden of computing for AI model training and operations remains substantial. OpenAI, for instance, has used an extensive Microsoft-built supercomputer for both training and inference since 2020. Training GPT-3 reportedly cost OpenAI about $12 million, and the expense surged to $100 million for GPT-4. According to a report by SemiAnalysis, OpenAI needs roughly 3,617 HGX A100 servers, totaling 28,936 GPUs, to support ChatGPT, bringing the average cost per query to approximately 0.36 cents. With costs this high, Sam Altman, CEO of OpenAI, is seeking significant investment to build a worldwide network of AI chip production facilities, according to a Bloomberg report.
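
The server and GPU counts quoted above are consistent with one another, and the cost arithmetic is easy to reproduce. The Python sketch below assumes eight GPUs per HGX A100 server and takes SemiAnalysis's per-query estimate as roughly 0.36 cents ($0.0036); the one-million-query volume is an arbitrary round number chosen for illustration, not a reported figure.

```python
GPUS_PER_SERVER = 8           # assumption: 8-GPU HGX A100 configuration
SERVERS = 3_617               # SemiAnalysis server estimate for ChatGPT
COST_PER_QUERY_USD = 0.0036   # assumption: ~0.36 cents per query


def serving_cost(queries: int, cost_per_query: float = COST_PER_QUERY_USD) -> float:
    """Estimated inference cost in US dollars for a number of queries."""
    return queries * cost_per_query


total_gpus = SERVERS * GPUS_PER_SERVER
print(f"{total_gpus:,} GPUs")  # 28,936 GPUs

# Hypothetical volume of one million queries, for illustration only.
print(f"${serving_cost(1_000_000):,.0f} per million queries")
```

Even at a fraction of a cent per query, costs scale linearly with usage, so a service handling hundreds of millions of queries a day quickly runs up a very large hardware bill.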

Harnessing Control and Innovation

Third-party AI chips often come with limitations. Companies relying on these chips may find themselves constrained by off-the-shelf solutions that don’t fully align with their unique AI models or applications. In-house chip development allows for customization tailored to specific use cases. Whether it’s for autonomous cars or mobile devices, controlling the hardware enables companies to fully leverage their AI algorithms. Customized chips can enhance specific tasks, reduce latency, and improve overall performance.

Latest Advances in AI Chip Development

This section delves into the latest strides made by Google, Meta, and Amazon in building AI chip technology.

Google’s Axion Processors

Google has been steadily progressing in AI chip technology since introducing the Tensor Processing Unit (TPU) in 2015. Building on this foundation, Google has recently launched Axion, its first custom CPUs designed specifically for data centers and AI workloads. Axion processors are based on the Arm architecture, which is known for its efficiency and compact design, and they aim to speed up CPU-based AI training and inference while keeping energy use low. They also promise significantly better performance on a range of general-purpose workloads, including web and app servers, containerized microservices, open-source databases, in-memory caches, data analytics engines, media processing, and more.

Meta’s MTIA

Meta is pushing forward in AI chip technology with its Meta Training and Inference Accelerator (MTIA). The chip is designed to make training and inference more efficient, especially for ranking and recommendation algorithms. Meta recently outlined how the MTIA is a key part of its strategy to strengthen its AI infrastructure beyond GPUs. Although the MTIA was initially slated to launch in 2025, Meta has already put both versions of the chip into production, a faster pace than originally planned. While the MTIA currently focuses on ranking and recommendation workloads, Meta aims to expand its use to training generative AI, such as its Llama language models.

Amazon’s Trainium and Inferentia

Since introducing its custom Nitro chip in 2013, Amazon has significantly expanded its AI chip development with two families of AI chips, Trainium and Inferentia. Trainium is designed specifically for AI model training and is set to be incorporated into EC2 UltraClusters. These clusters, capable of hosting up to 100,000 chips, are optimized for training foundation models and large language models energy-efficiently. Inferentia, on the other hand, is tailored for inference, where trained models are put to work; it focuses on reducing latency and cost to better serve the millions of users interacting with AI-powered services.

The Bottom Line

The move toward in-house development of custom AI chips by major companies like Google, Meta, and Amazon reflects a strategic shift to address the growing computational needs of AI. It highlights the need for hardware tailored to the specific demands of AI models. As demand for AI chips keeps rising, established leaders like Nvidia are likely to see their market valuations climb further, while custom chips give the tech giants more control over supply, cost, and performance. By building their own chips, these companies are not only improving the performance and efficiency of their AI systems but also promoting a more sustainable and cost-effective future. This evolution is setting new standards in the industry and driving technological progress and competitive advantage in a rapidly changing global market.

#000#ai#AI chip#AI chips#AI Infrastructure#ai model#AI models#AI research#AI systems#ai training#AI-powered#Algorithms#Amazon#Analytics#app#applications#approach#architecture#arm#Article#artificial#Artificial Intelligence#autonomous cars#battery#Building#Cars#CEO#change#chatGPT#chip production

1 note

Text

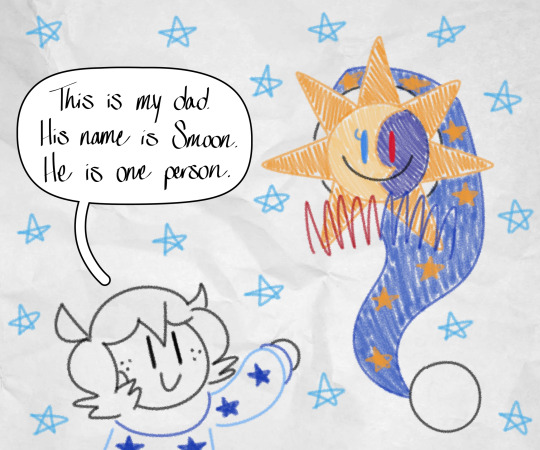

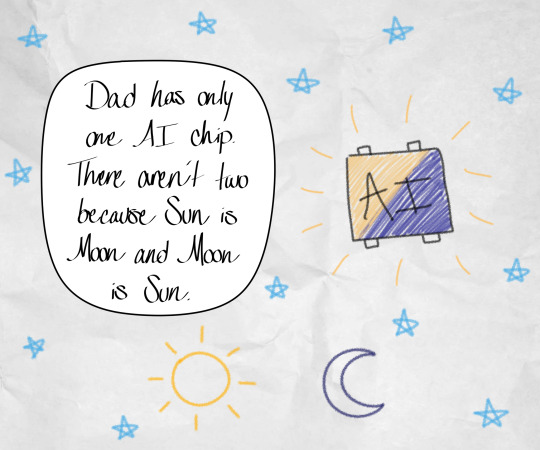

#yes i am retconning myself with the ai chips#the dadcare attendant#fnaf sun and moon dad au#fnaf security breach#five nights at freddy's#fnaf sun and moon#smoon is not eclipse#fnaf sun#fnaf sundrop#fnaf sunny#fnaf moon#fnaf moondrop#fnaf smoon#fnaf gregory#gregory star#gears with gregory

303 notes

Text

sometimes I'm like "aw man it kinda sucks that I really am only good with monochrome, because colored art pieces absolutely have more pfp potential," but then I remember something fun that my monochrome pieces have.

you got a program that lets you use Overlay or Multiply? Suddenly my blog turns into a giant coloring book!!! look, I just made this minecraft skeleton gay!

#jrwi#jrwi riptide#just roll with it#just roll with it riptide#jrwishow#jrwi show#jrwi podcast#chip lastname#jrwi chip#chip allegationss#indrids ball pit#also I've mentioned this before but i do give full permission for ppl to fuck with my art however they choose#so long as they arent feeding it into ai thats where i draw the line

64 notes

Video

Ryuki fancam :)

#ai: the somnium files#ai: nirvana initiative#aitsf#kuruto ryuki#really encapsulates whatever he went through in this fucking game fr#SHOUTOUT TO MY BESTIES CHIP PK AND JUDE THIS IS FOR YOUG#my edits

188 notes

Text

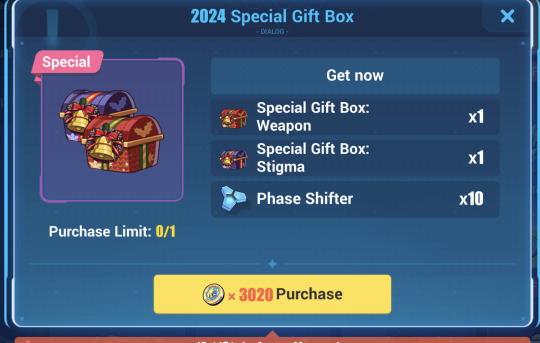

the holiday hellbox is back this year so this is a reminder to please read carefully when they offer stuff like this because this is a scam

it only gives you a weapon and stigma, and you cannot choose which one you want to get.

and with the b-chip price in mind, you end up paying 55€ for two gears that you can't even pick and choose!

#honkai impact#also LOL at the ai stigmata being labeled like that as if all three stigmata had separate TMB parts.#if youre seriously considering buying something w/ the b chips just get the s-rank selector or something#or just. dont buy anything

55 notes

Text

#aitsf#aini#ai the somnium files#nirvana initiative#kaname date#mizuki okiura#mizuki date#kuruto ryuki#chip doodles#does a flip#next ai post will either be silly comics or something huge ive been cooking#the jury will decide#btw these are all wips that have piled up over the past year#there is.... so much

755 notes

Text

Some thoughts and headcanons born from @k-chips amazing art of AI!Clavell, plus a more cohesive gathering of my little idea dump there. This is gonna be a long one, so strap in.

To start, the meat of my idea from that post (bold is me just adding context now)

It was their (Sada/Turo's) invention, the time machine, that got the original Clavell killed. They brought our Raidon forward, but during their time back on the surface they realize how much of Arven's life they're missing out on, and Clavell is like "You spend time with the kid, I'll keep researching, and when Arven is around 16-17 we can swap spots." But shit goes sideways when, while he's down there with Raidon, the second, feral Raidon is summoned, and the game follows the tragedy we all discover.

So, in this timeline, Sada/Turo aren't as obsessed with their research. When they bring Koraidon/Miraidon to the surface (just for the sake of convenience, I'll be using Pokemon Scarlet as a base going forward since that's the version I play, but remember this also applies to Violet), Arven is maybe 8 or 9, and Koraidon is around the same age, based on journal entries where, after mentioning bringing Kor forward in time, she says she "expected one gift of life, and now she has two." That's when Sada realizes she's missing out on so much of her son's life, and that creating Paradise is worthless if it means destroying what remains of her little family.

Enter Clavell: he's still somewhat involved with her research in Area Zero and suggests she stay and raise Arven while he works on her research with the AI she began developing, and when Arven is old enough they can swap spots and she can return to Area Zero. She agrees, and after the event that causes Kor to be sent back to the Crater, Sada and Clavell keep in constant contact, with her and Arven occasionally making trips down to visit.

4 years before the game, the 2nd Koraidon (I'll refer to it either as feral Kor or Rex (in reference to its dex entry calling him the Winged King)) is brought to our time. Rex attacks Kor, and Clavell follows the battle to Research Station 4, the AI giving him updates on the battle. He arrives just in time to see Kor backed into a corner with Rex ready to give the killing blow. Clavell shields Kor, having practically raised it, and is killed by the feral Koraidon.

The AI panics, Kor is injured and flees, and suddenly Clavell stops calling and refuses any of Sada's suggestions to come visit. He only communicates via email for a year, and when he finally video calls, it's to say that if he needs anything from her or if there are any developments in the project, he will reach out to her, and that she should stop disturbing him.

Sada of course is upset by this. Angry that he basically told her to fuck off on what is technically both her and Clavell's research (bc she's probably been working on her research while still raising and spending time with her son), and worried as her friend and mentor has never been like this.

Not sure how Clavell would become Academy Director in this timeline unless Team Star's actions at the school take place a bit before Clavell dies, and just before he's killed Clavell had accepted becoming director, but requested time to finish his work and that Sada be his temp replacement (or something, still working the kinks out on this one)

Anyways, a year before the game, Arven (who misses Clavell and is both mad at him for upsetting his mom and worried for his safety) enters the Crater, and during this, Mabosstiff is attacked by something (in Scarlet, I personally think it was either a Slither Wing or a Roaring Moon, but the latter means they were lucky to even escape alive, as Roaring Moon doesn't seem like one to leave its prey alive).

The game occurs, with Sada as acting Director while juggling investigating Team Star, her research, raising her son and trying to help Mabosstiff, and looking into what could have happened to Clavell, who suddenly hasn't been responding to anything even though he should have returned to take over as Director. She's surprised to see Kor, but AI Clavell calls and says that Kor must have missed Sada and Arven, and gives ownership of Kor to the player. Sada figures it would be good for the lizard to get experience via your Treasure Hunt and asks you to take care of it, as it's like her second child.

The game proceeds as normal until Area Zero. Sada joins the gang in the Great Crater partially because she knows how dangerous it can be and she can't just let 4 teens stroll in there unsupervised (why Clavell would even ask a child to enter the Crater is something that bothers her), and partially to see what's going on down there. She's basically bouncing up and down seeing her paradise created, but recognizes that should the Paradox mons escape, they would destroy Paldea's ecosystems. When you go to talk with AI Clavell at the lab, she stays outside at first with Arven to hold off the Paradox mons trying to get in.

When she finally sees AI Clavell and discovers the OG Clavell is dead, she is in a state of shock. It was her invention, her dream, that resulted in her best friend and mentor's death, and this entire time she hadn't even been communicating with him, but with the AI she created to help Clavell.

AI Clavell needs someone to stop the machine, as he knows his human self would want the same, but the Paradise Protection Protocol kicks in, much to the horror of both Sada (who had set it up as a failsafe in case the time machine detected a Pokémon attacking it, not someone simply powering it down) and AI Clavell (who didn't even know it existed).

I might add more to this, but yeah. TLDR, Clavell stays in Area Zero so Sada can raise Arven, Clavell is killed protecting Kor, Sada is Director instead, AI Clavell battles you.

#pokemon sv#pokemon clavell#director clavell#pokemon scarlet/violet#pokemon sada#pokemon scarlet#professor sada#professor turo#arven pokemon#ai!clavell au#my headcanons#long post#k-chips' art is amazing go check it out#this au has major angst potential#and i LIVE for that shit#also somewhat gives Arven a happier ending as his parents aren't shitty#give my boy some happiness dammit#sada and turo are fun to explore#this is from Scarlet's perspective but can also apply to turo

308 notes
