#Apache Solr Development Company

If you are looking for a Solr consulting company, contact us and we will provide you with the best Solr consulting services. Our experienced Solr search consultants will guide you in implementing Apache Solr, a powerful enterprise search platform, in your system and in future-proofing your website or portal.

#Apache Solr Consulting & Development#Apache Solr Consulting Services#Apache Solr Development Company#Apache Solr Development Services

AWS

Amazon Web Services (AWS) is the world’s most comprehensive and broadly adopted cloud platform, offering over 200 fully featured services from data centers globally. Millions of customers, including the fastest-growing startups, largest enterprises, and leading government agencies, use AWS to lower costs, become more agile, and innovate faster.

AWS makes these services available to developers on demand, from anywhere. AWS has customers in over 190 countries, including 5,000 ed-tech institutions and 2,000 government organizations. Companies such as ESPN, Adobe, Twitter, Netflix, Facebook, and the BBC use AWS services.

Amazon has a list of services:

Compute service

Storage

Database

Networking and delivery of content

Security tools

Developer tools

Management tools

and many more.

Some features of AWS

Low Cost

AWS offers low, pay-as-you-go pricing with no up-front expenses or long-term commitments. According to AWS, building and managing a global infrastructure at scale lets it pass cost savings on to customers in the form of lower prices, and the efficiencies of its scale and expertise have allowed it to lower prices on 15 different occasions over a four-year period.

Agility and Instant Elasticity

AWS provides a massive global cloud infrastructure that lets you innovate, experiment, and iterate quickly. Instead of waiting weeks or months for hardware, you can deploy new applications immediately, scale up as your workload grows, and scale down as demand falls. Whether you need one virtual server or thousands, for a few hours or 24/7, you pay only for what you use.

Open and Flexible

AWS is a language- and operating-system-agnostic platform. You choose the development platform or programming model that makes the most sense for your business, which services to use (one or several), and how to use them. This flexibility lets you focus on innovation, not infrastructure.

Secure

AWS is a secure, durable technology platform with industry-recognized certifications and audits: PCI DSS Level 1, ISO 27001, FISMA Moderate, FedRAMP, HIPAA, and SOC 1 (formerly referred to as SAS 70 and/or SSAE 16) and SOC 2 audit reports. AWS services and data centers have multiple layers of operational and physical security to ensure the integrity and safety of your data.

Use Cases of Amazon Web Services (AWS)

Slack

Slack is a cloud-based team collaboration tool developed in 2013 by a team of Silicon Valley entrepreneurs. It was initially built for team communication within a single company. Today it is used by many leading enterprises and has an estimated one million-plus daily active users.

In 2013 it was only a chat application. Later, Slack acquired Screenhero, which added data and file sharing along with screen sharing, making team collaboration easier.

The Slack founders had experienced failures in previous startup ventures and drew on that experience to develop Slack. They just needed an extra layer of expertise to run the infrastructure. Their previous company, Tiny Speck, which later became Slack Technologies, started using AWS in 2009, when AWS was the only viable public cloud offering.

Today, Slack has a very simple IT architecture based on AWS services. It uses Amazon Elastic Compute Cloud (EC2), Amazon Simple Storage Service (S3) for file uploads and static assets, and Elastic Load Balancing to balance the load across servers.

To protect its network in the cloud and enforce firewall rules with security groups, Slack uses Amazon Virtual Private Cloud (VPC). To protect user credentials and accounts, it uses AWS Identity and Access Management (IAM). Alongside these services, Slack runs the Redis data structure server, the Apache Solr search platform, the Squid caching proxy, and a MySQL database.

To make it easier for AWS customers to manage their environments, they recently launched a collection of Slack Integration Blueprints for AWS Lambda. AWS helps Slack achieve its goals with ease, and hosting Slack on AWS has made its customers more confident that Slack is safe, secure, and always on.

Netflix

Netflix is an online video streaming platform built for low latency. Its main focus is letting customers watch the videos that interest them, on demand.

Its main goal is to make it easy for customers to enjoy whatever they like. Netflix streams an estimated 7 billion hours of video to 50 million customers in 60 countries.

In 2009, Netflix moved to the AWS cloud to deliver content throughout the globe. It chose AWS because it wanted to focus on updating, storing, and managing instances in the cloud.

To do that, it adopted a dynamic AWS infrastructure model with large numbers of instances spread across many geographic regions. Before moving to AWS, it relied on assembly language or whatever protocols were available to deploy content. On AWS, it can manage its infrastructure programmatically.

Netflix runs dozens of EC2 instances across 3 AWS regions, with hundreds of microservices serving 1 billion hours of content per month. It stores video content in Amazon S3, chops it into 5-second parts, packages it, and then deploys it to content delivery networks.
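To make the segmenting step concrete, here is a minimal Python sketch that computes the time boundaries for cutting a video into 5-second parts. The 5-second length comes from the text above; the function name `segment_boundaries` is hypothetical, and the sketch says nothing about how Netflix's real encoding and packaging pipeline works.

```python
import math
from typing import List, Tuple


def segment_boundaries(duration_s: float, segment_s: float = 5.0) -> List[Tuple[float, float]]:
    """Return (start, end) times, in seconds, for cutting a video into fixed-length parts.

    Every part is segment_s seconds long except possibly the last, which ends at
    duration_s. Boundaries are computed from the segment index rather than by
    repeated addition, so floating-point error does not accumulate.
    """
    if duration_s < 0 or segment_s <= 0:
        raise ValueError("duration_s must be >= 0 and segment_s must be > 0")
    count = math.ceil(duration_s / segment_s)  # number of parts needed to cover the video
    return [
        (i * segment_s, min((i + 1) * segment_s, duration_s))
        for i in range(count)
    ]


# A 12-second clip becomes two full 5-second parts and one 2-second tail.
print(segment_boundaries(12))
```

A one-hour video (3,600 seconds) would yield 720 such parts.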

AWS helps Netflix set up disaster-recovery backups (AWS Lambda copies and validates them). The AWS cloud also helps it monitor its systems and create alerts that trigger responses when conditions change.

Netflix has said that AWS Lambda helped it build a rule-based, self-managing infrastructure that replaced its previous system.
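A rule-based setup like the one described can be sketched as a Python Lambda handler. The `lambda_handler(event, context)` signature follows the standard AWS Lambda Python convention, but the `RULES` table, the metric names, and the `{"metrics": {...}}` event shape are hypothetical assumptions for illustration, not Netflix's system or a real AWS event format.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical rules: metric name -> (threshold, action to take when the value exceeds it).
RULES: Dict[str, Tuple[float, str]] = {
    "cpu_utilization": (80.0, "scale_out"),
    "error_rate": (0.05, "raise_alert"),
}


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Evaluate incoming metrics against RULES and return the actions to take.

    The {"metrics": {name: value}} event shape is an assumption for this sketch,
    not a real AWS event format. Metrics with no matching rule are ignored.
    """
    actions: List[str] = []
    for name, value in event.get("metrics", {}).items():
        rule = RULES.get(name)
        if rule is not None and value > rule[0]:
            actions.append(rule[1])
    return {"actions": actions}


if __name__ == "__main__":
    # Local test call; in AWS, Lambda supplies the event and context objects.
    print(lambda_handler({"metrics": {"cpu_utilization": 91.0, "error_rate": 0.01}}, None))
```

Keeping the rules in a plain table means new behavior can be added by editing data rather than code, which is the point of a rule-based design.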

This is how AWS has helped many companies.

Thank you for reading.

How can I run a Java app on Apache without Tomcat?

Apache Solr is a popular enterprise-level search platform used widely by well-known websites such as Reddit, Netflix, and Instagram. Its popularity comes from its well-built text search, faceted search, real-time indexing, dynamic clustering, and easy integration. Apache Solr helps build advanced search features for websites with high data volumes.
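Faceted search in Solr is requested through query parameters on a collection's `/select` handler. The sketch below only builds such a URL with Python's standard library; it assumes a Solr instance on the default port 8983 and a hypothetical `products` collection with `brand` and `category` fields.

```python
from typing import List
from urllib.parse import urlencode


def solr_facet_query_url(base_url: str, collection: str, query: str, facet_fields: List[str]) -> str:
    """Build a Solr /select URL that runs `query` and requests facet counts.

    facet=true turns faceting on; each facet.field names one field whose values
    should be counted. A list of pairs is used so facet.field can repeat.
    """
    params = [("q", query), ("facet", "true"), ("wt", "json")]
    params += [("facet.field", name) for name in facet_fields]
    return f"{base_url.rstrip('/')}/solr/{collection}/select?{urlencode(params)}"


# Hypothetical collection and fields.
print(solr_facet_query_url("http://localhost:8983", "products", "laptop", ["brand", "category"]))
```

Fetching this URL with `urllib.request.urlopen` against a running Solr instance should return the matching documents plus per-value counts in the `facet_fields` section of the response's `facet_counts`.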

Java, one of the most useful and important programming languages, has gained a worldwide reputation for building customized web applications. Prominent Pixel has been harnessing the power of Java web development, managing open source Java-based systems all over the globe. Java allows developers to build unique web applications in less time and at lower cost.

Prominent Pixel provides customized Java web development and Java application development services that meet client requirements and business needs. As a leading Java web application development company in India, we have delivered the best services to thousands of clients throughout the world.

Because of its advanced security and stability, Java is used worldwide in web and business solutions. Prominent Pixel is one of the best Java development companies in India, with extensive experience in providing software services. Our Java programming services are tailored to the requirements of each client and website. Prominent Pixel aims to provide Java software development services at a reasonable price to small, medium, and large companies.

We have worked with clients from diverse industries, such as automotive, banking, finance, healthcare, insurance, media, retail and e-commerce, entertainment, lifestyle, real estate, education, and many more. At Prominent Pixel, we support our clients from the start of a project to the end.

Having been a leading Java development company in India for years, we are used to success. We always strive to raise our standards and give clients more than they expect. Although Java can be used to develop many kinds of apps, its complex methodology calls for expertise. At Prominent Pixel, we have an expert Java software development team with several years of experience.

Java is well suited to highly sophisticated web and mobile applications, and its best feature is that code, once written, can run anywhere a Java Virtual Machine is available. Because Java runs almost everywhere, Java apps are widely compatible. The cost of developing a web or mobile application in Java can also be low, which is one reason people choose it over other programming languages.

Managing a large amount of data in one place is difficult without Big Data technologies. Whether the data comes from a desktop or a sensor, Big Data tools can handle the transition effectively. So, if your company needs Big Data development services, choose a company that offers strong processing capabilities and proven skills.

Prominent Pixel is one of the best Big Data consulting companies, offering excellent Big Data solutions. The exceptional growth in the volume, variety, and velocity of data has made it necessary for many companies to seek Big Data consulting services. At Prominent Pixel, we enable faster data-driven decision-making using our vast experience in data management, warehousing, and high-volume data processing.

Cloud DevOps development services are needed to cater to the cultural and ethical requirements of the different teams in a software company. The hurdles caused by separating development, QA, and IT teams can be resolved easily with Cloud DevOps. It also accelerates the delivery cycle by supporting development, testing, and release on the same platform. Although cloud and DevOps are independent, together they can add value to the business through IT.

Prominent Pixel is a leading Cloud DevOps service provider in India that has recently started working on projects abroad. Steady development and continuous delivery of a product are possible through DevOps consulting services, which Prominent Pixel provides. Cloud DevOps also connects the multiple phases of the life cycle more closely, and we do this efficiently with the best tools available at Prominent Pixel.

Our Cloud DevOps development services ensure end-to-end delivery through continuous integration and development on the best cloud platforms. With our DevOps consulting services, we help small, medium, and large enterprises align their development and operations for higher efficiency and a faster response in building and marketing high-quality software.

Prominent Pixel Java developers are available for hire! Prominent Pixel is one of the top Java development service providers in India. We have a team of dedicated Java programmers skilled in the latest Java frameworks and technologies who can fulfill your business needs. We also offer Java website development services tailored to your business model. Our comprehensive Java solutions help clients drive business value through innovation and effectiveness.

With years of experience in providing Java development services, Prominent Pixel has developed technological expertise that supports your business growth. Our Java web application programmers study and understand business requirements logically, which helps them create robust, creative, and unique web applications.

Beyond experience, you can hire our Java developers for their creative thinking, effort, and unique approach to every project. Over the years, the number of clients seeking our Java development services has grown, and we now have satisfied customers all over the world. Our Java developers follow agile methodologies that ensure effective communication and complete transparency.

At Prominent Pixel, our dedicated Java website development team excels at providing integrated, customized solutions built on Java technologies. Our Java web application programmers can build robust, secure apps that enhance productivity and attract high traffic. Hiring Java developers from a successful company gives you access to the right advice on your Java platform when finalizing the architecture design.

Prominent Pixel has top Java developers for hire in India, and many clients from other countries now hire our experts for their proven skills and excellent work. Our Java development team also has experience building business-class Java products that increase productivity. You can choose a flexible engagement model yourself, or our developers can choose one for you to avoid further complications.

Amid many new developments in the technology world, the Spring Framework has become a popular framework on the Java platform. To reduce the complexity of this framework during development, the Spring team introduced Spring Boot, which is regarded as an important milestone for Spring. Does your business need an expert Spring Boot developer? Here is an overview of Spring Boot to help you decide how our dedicated Spring Boot developers can support you.

Spring Boot is a framework designed to simplify bootstrapping new Spring applications. You can start new Spring projects in no time with default code and configuration. Spring Boot also saves development time and increases productivity. For rapid application development, it spares developers from writing boilerplate configuration. Hire a Spring Boot developer from Prominent Pixel to get stand-alone Spring applications right now!

Traditional Spring applications relied on complex, hard-to-manage XML configuration, so Spring Boot was introduced to make XML-free development easy. With Spring Boot, developers can even be spared from writing some import statements. Thanks to the simplicity of the framework and its runnable web applications, Spring Boot quickly gained popularity.

At Prominent Pixel, our developers can develop and deliver unmatched Spring applications within a given time frame. With years of development experience and knowledge of various advanced technologies, our dedicated Spring Boot developers can build Spring applications that fulfill your business needs. So, hire Java Spring Boot developers from Prominent Pixel to get default imports and configuration, which internally use powerful Groovy-based techniques and tools.

Our Spring Boot developers also help you combine existing frameworks through a few simple annotations. Spring Boot also changes the Java application model into a whole new model, but all of this requires a Spring Boot professional, and you can find many of them at Prominent Pixel. We always hire top, experienced Spring Boot developers so that we can guarantee our clients excellent services.

So, hire dedicated Spring Boot developers from Prominent Pixel to get an outstanding website that stands apart from the rest. Our developers have managed the complete software cycle, from gathering every small requirement to deployment, and our Spring Boot developers will take care of your project from beginning to end. Rapid application development is a primary goal for almost every online business today, and top service providers like Prominent Pixel can fulfill it.

Enhance your Business with Solr

If you have a website with a large number of documents, you need a good content management architecture to manage search functionality. Whether it is an e-commerce portal with numerous products or a website with thousands of pages of lengthy content, it is hard to find exactly what you want. Integrating Solr with a content management system provides fast and effective document search. At Prominent Pixel, we offer comprehensive Apache Solr consulting services for e-commerce websites, content-based websites, and internal enterprise-level content management systems.

Prominent Pixel has a team of expert, experienced Apache Solr developers who have worked on several Solr projects to date. Our developers will power up your enterprise search with Solr's flexible features. Our Solr services are specially designed for e-commerce websites, content-based websites, and enterprise-level websites. Our dedicated Apache Solr developers also create new websites with a Solr-integrated content architecture.

Elasticsearch is a search engine based on Lucene that provides a distributed, multitenant-capable full-text search engine with an HTTP web interface and schema-free JSON documents. At Prominent Pixel, we have a team of dedicated Elasticsearch developers who have worked with hundreds of clients worldwide, providing them with a variety of services.
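Because Elasticsearch exposes search over HTTP with JSON bodies, a query is simply a JSON document sent to an index's `_search` endpoint. This sketch builds (but does not send) such a request with Python's standard library, assuming a local node on the default port 9200 and a hypothetical `articles` index with a `title` field; `match` is a standard full-text query type in the Elasticsearch query DSL.

```python
import json
import urllib.request


def build_es_search_request(base_url: str, index: str, field: str, text: str) -> urllib.request.Request:
    """Build (but do not send) a POST /<index>/_search request with a match query."""
    body = {"query": {"match": {field: text}}}  # full-text match on a single field
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/{index}/_search",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},  # recent Elasticsearch versions require a JSON content type
        method="POST",
    )


# Hypothetical index and field.
req = build_es_search_request("http://localhost:9200", "articles", "title", "enterprise search")
print(req.get_method(), req.full_url)
```

Passing the returned request to `urllib.request.urlopen` would execute the search against a running cluster.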

Before going into production, design and data modeling are two important steps to take care of. Our Elasticsearch developers will help you choose the right design and model your data before the actual work begins.

Though many Elasticsearch developers are available online, only a few have real experience and expertise, and Prominent Pixel developers are among them. Our Elasticsearch developers will accelerate your Elastic journey, no matter where you are in it. Whether you face technical issues, business problems, or anything else, our professional developers will take care of everything.

Our Elasticsearch developers will answer all your questions about your data and strategies. With in-depth technical knowledge, they will help you realize possibilities that improve search results, push past plateaus, and find new and unique solutions with the Elastic Stack and X-Pack. Finally, we build a relationship with you, understand your business and technical problems, and solve them quickly.

There are thousands of Big Data development companies that can provide these services at a low price. So why us? Prominent Pixel was established only a few years ago but has achieved great success through its dedication to its work. In that short time, we have worked on more than a thousand Big Data projects, and all of them were successful.

Our past clients come back to us with confidence, remembering the work we have done for them. At Prominent Pixel, we always hire top senior Big Data Hadoop developers who can resolve clients' issues within a given time frame. So, if you choose Prominent Pixel for your Big Data project, you are in safe hands.

These days, every business needs easy access to data for exponential growth, and that requires an emerging digital Big Data solution. Handling large volumes of data has become a challenge even for many Big Data analysts, as it requires expertise and experience. At Prominent Pixel, you can hire an expert Hadoop Big Data developer who can help you handle large amounts of data and analyze your business through extensive research.

Massive data must be handled carefully, following best practices, so that no information is lost. Identifying the right technique to manage Big Data is the key to developing the perfect strategy for your business growth. So, to manage your large data with the best strategy and practices, you can hire a top senior Big Data Hadoop developer from Prominent Pixel who will never disappoint you. For the past few years, Prominent Pixel has stood out by focusing on quality of work. With a team of certified Big Data professionals, we have never compromised on providing quality services at a reasonable price.

As one of the leading Big Data service providers in India, we help our customers come up with the strategy that best suits their business and delivers a positive outcome. Our dedicated Big Data developers will help you select suitable technology and tools to achieve your business goals. Depending on the technology our customers are using, our Big Data Hadoop developers offer vendor-neutral recommendations.

At Prominent Pixel, our Hadoop Big Data developers will establish a suitable modern architecture that brings greater efficiency to everyday processes. Big Data can improve business outcomes, and to make the best use of it, you should hire a top senior Big Data Hadoop developer from Prominent Pixel.

Achieving business goals requires the right infrastructure model as well as a well-executed deployment of Big Data technologies. At Prominent Pixel, our Big Data analysts manage both at the same time. You can get Big Data analytics solutions from Prominent Pixel that simplify the integration and installation of Big Data infrastructure by eliminating complexity.

At Prominent Pixel, we have experts in every related field, and Hadoop is no exception. You can hire a dedicated Hadoop developer from Prominent Pixel to solve your analytical challenges, including complex Big Data issues. Our Hadoop developers bring deep expertise and always aim to deliver more than you expect.

With vast experience in Big Data, our Hadoop developers will quickly provide flawless analytical solutions for your business. We can also resolve the problems of misconfigured Hadoop clusters by building new ones. Through rapid implementation, our Hadoop developers will help you derive immense value from Big Data.

You can also hire our dedicated Hadoop developers to build scalable, flexible solutions for your business at an affordable price. Our developers have worked on thousands of Hadoop projects. Hadoop can be deployed on-premises or in the cloud, and organizations that deploy it in the cloud with the help of technology partners can reduce their hardware acquisition costs, which benefits our clients.

Apache Spark is an open-source framework for large-scale data processing. At Prominent Pixel, we have expert Spark developers for hire who will help you achieve high performance for both batch and streaming data. With years of experience handling Apache Spark projects, we integrate Apache Spark to meet business needs, using its unique capabilities and improving the user experience. Hire senior Apache Spark developers from Prominent Pixel right now!

Spark simplifies the tough task of processing high volumes of real-time data. This work can be managed effectively only by senior Spark developers, and you can find many of them at Prominent Pixel. Our experts will also achieve high performance for both batch and streaming workloads.

Our team of experts will explore the features of Apache Spark for your data management and Big Data requirements to help your business grow. Our Spark developers will help you use user profiling and recommendations to realize the full potential of your Apache Spark applications.

In-memory processing, a distinctive feature of Spark compared with other frameworks, requires a well-built configuration, which calls for an expert Spark developer. So, for uninterrupted service, you can hire a senior Apache Spark developer from Prominent Pixel.

With more than 14 years of experience, our Spark developers can manage your projects with ease and effectiveness. You can hire senior Apache Spark SQL developers from Prominent Pixel to develop and deliver tailor-made, Spark-based analytics solutions for your business needs. Our senior Apache Spark developers will support you at every stage until the project is handed over to you.

At Prominent Pixel, our Spark developers efficiently handle the challenges that often arise while working on a project. Our senior Apache Spark developers excel in all Big Data technologies, but they are especially excited to work on Apache Spark because of its features and benefits.

We have experienced, expert Apache Spark developers who have worked extensively on data science projects using MLlib on sparse data formats stored in HDFS. They also have practice handling projects across multiple tools, each with its own benefits. If you want to build an advanced analytics platform using Spark, Prominent Pixel is the right place for you!

At Prominent Pixel, our experienced Apache Spark developers can maintain a Spark cluster as the central component of bioinformatics, data integration, analysis, and prediction pipelines. Our developers also have the analytical ability to design predictive models and recommendation systems for marketing automation.

Stages of Developing your NFT Market

On the client side, NFT marketplaces work just like normal online stores. The user must register on the platform and create a personal digital wallet to store NFTs and cryptocurrencies.

An NFT trading platform for buying and selling is complex software that is best developed by a company with relevant experience, such as Merehead. We have been helping companies and individuals with fintech and blockchain projects, from wallets to cryptocurrency exchanges, since 2015. Our company can help you build an NFT marketplace from scratch or clone an existing platform.

Step 1: Open the project

The first step in creating an NFT marketplace is for you and the development team to examine the details of your project to assess its technical feasibility.

Here you must answer questions such as:

In which niche will you operate?

How exactly are you going to market the NFT?

What is your main target audience?

What token protocol are you going to use?

What tech stack are you going to use?

What monetization model will you use?

What will make your project stand out from the competition?

What features do you intend to implement?

Other questions…

The answers to these questions will guide you through the development process and help you create specifications for your NFT marketplace. If you find it difficult to answer the questions, don’t worry, the development team will help you: they can describe your ideas in text and visual schematics and prepare technical documentation so you can start designing.

Once the initial concept and specifications are ready, the development team can draw up a development plan to give an approximate timeline and budget for the project. You can then start the design.

Step 2: Design and development

When all the technical requirements and the development plan have been agreed, the development team can get to work. First, you, the business analyst, and/or the designers design the marketplace user interface (wireframes, mockups, and prototypes) with a description of user flows and platform features. An effective architecture for the trading platform is also created.

UX/UI design. The navigation and appearance of the user interface are very important when developing an NFT marketplace platform, since first impressions, usability, and the overall user experience depend on them. So make sure your site design is appealing to your audience and simple enough to be understood by anyone even remotely familiar with Amazon and eBay.

Back-end and smart contracts. The entire back end of your trading platform operates at this level. When developing it, in addition to the usual business logic and marketplace functionality, you need to implement blockchain integration, smart contracts, and wallets, and provide an auction mechanism (most NFTs are sold through auctions). Here is an example of a tech stack for an NFT marketplace back end:

Blockchain: Ethereum, Binance Smart Chain.

Token standards: ERC-721, ERC-1155, BEP-721, BEP-1155.

Smart contracts: Ethereum Virtual Machine, BSC Virtual Machine.

Frameworks: Spring, Symfony, Flask.

Programming languages: Java, PHP, Python.

SQL databases: MySQL, PostgreSQL, MariaDB, MS SQL, Oracle.

NoSQL databases: MongoDB, Cassandra, DynamoDB.

Search engines: Apache Solr, Elasticsearch.

DevOps: GitLab CI, TeamCity, GoCD, Jenkins, AWS CodeBuild, Terraform.

Cache: Redis, Memcached.
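Since most NFTs sell through auctions, the core bidding rule of an ascending (English) auction can be sketched in Python. This is an off-chain model under stated assumptions: the `EnglishAuction` class, its reserve price and minimum increment, and its settlement behavior are hypothetical, and a production marketplace would enforce the same rules in a smart contract that also escrows bidders' funds. Amounts are integers in the smallest currency unit (for example, wei) to avoid floating-point rounding.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class EnglishAuction:
    """Hypothetical off-chain model of an ascending auction for a single NFT."""
    token_id: int
    reserve_price: int      # lowest acceptable first bid, in the smallest currency unit
    min_increment: int = 1  # each new bid must beat the current best by at least this much
    bids: List[Tuple[str, int]] = field(default_factory=list)
    closed: bool = False

    def highest_bid(self) -> Optional[Tuple[str, int]]:
        # Every accepted bid beats the previous one, so the last bid is the highest.
        return self.bids[-1] if self.bids else None

    def place_bid(self, bidder: str, amount: int) -> None:
        if self.closed:
            raise ValueError("auction is closed")
        best = self.highest_bid()
        minimum = self.reserve_price if best is None else best[1] + self.min_increment
        if amount < minimum:
            raise ValueError(f"bid must be at least {minimum}")
        self.bids.append((bidder, amount))

    def settle(self) -> Optional[Tuple[str, int]]:
        """Close the auction and return (winner, price), or None if no bid was accepted."""
        self.closed = True
        return self.highest_bid()


auction = EnglishAuction(token_id=1, reserve_price=100, min_increment=10)
auction.place_bid("alice", 100)
auction.place_bid("bob", 115)
print(auction.settle())
```

Rejecting low bids inside `place_bid` keeps the bid list sorted by construction, so finding the winner needs no search at settlement time.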

Front end. This is the entire outer part of the trading platform, responsible for interaction with the end user. The main task of front-end development is to ensure ease of use, as well as reliability and performance. Here is an example of a technology stack for the front end of an NFT marketplace:

Web frameworks: AngularJS, React, Backbone.js, and Ember.js.

Mobile languages: Java, Kotlin for Android and Swift for iOS.

Architecture: MVVM for Android and MVC, MVP, MVVM and VIPER for iOS.

IDE: Android Studio and Xcode for iOS.

SDK: Android SDK and iOS SDK.

Step 3: Test the product you have created

In this phase, several cycles of code testing are performed to make sure the platform works correctly. The QA team performs various checks to ensure that your project’s code is free of bugs, especially critical ones. In addition, content, usability, security, reliability, and performance tests are carried out for all possible usage scenarios of the platform.

Step 4: Deployment and support

Once the NFT marketplace platform has been tested, it is time to deploy it on a server or in the cloud. Note that this is not the last step, as you will also need to organize a support team. You also have to plan further development of the platform to keep up with market trends and user expectations.

#development of nft market#nft market#stages of developing nft market#nft marketplace#nft marketplace development

Global Enterprise Search Software Market Demand, Supply and Consumption 2020-2026

Summary: A new market study, titled “Global Enterprise Search Software Market Size, Status and Forecast 2020-2026”, has been featured on WiseGuyReports.

This report focuses on the global Enterprise Search Software status, future forecast, growth opportunity, key market and key players. The study objectives are to present the Enterprise Search Software development in North America, Europe, China, Japan, Southeast Asia, India and Central & South America.

ALSO READ: https://icrowdnewswire.com/2020/04/02/enterprise-search-software-market-2020-global-analysis-opportunities-and-forecast-to-2026/

The key players covered in this study: Swiftype, Algolia, Elasticsearch, Apache Solr, SearchSpring, AddSearch, SLI Systems, Amazon CloudSearch, Coveo, FishEye, Inbenta.

Market segment by type: Cloud Based, Web Based. Market segment by application: Large Enterprises, SMEs.

Market segment by region/country: North America, Europe, China, Japan, Southeast Asia, India, Central & South America.

The study objectives of this report are: To analyze global Enterprise Search Software status, future forecast, growth opportunity, key market and key players. To present the Enterprise Search Software development in North America, Europe, China, Japan, Southeast Asia, India and Central & South America. To strategically profile the key players and comprehensively analyze their development plan and strategies. To define, describe and forecast the market by type, market and key regions.

In this study, the years considered to estimate the market size of Enterprise Search Software are: history years 2015-2019, base year 2019, estimated year 2020, and forecast years 2020 to 2026. For data by region, company, type and application, 2019 is the base year; where data was unavailable for the base year, the prior year was used.

FOR MORE DETAILS: https://www.wiseguyreports.com/reports/5049615-global-enterprise-search-software-market-size-status-and-forecast-2020-2026

About Us:

Wise Guy Reports is part of the Wise Guy Research Consultants Pvt. Ltd. and offers premium progressive statistical surveying, market research reports, analysis & forecast data for industries and governments around the globe.

Contact Us:

NORAH TRENT

Ph: +162-826-80070 (US)

Ph: +44 203 500 2763 (UK)


Text

Why Your Enterprise Wants Technology Consulting

Every business has its own set of technology needs, so it's vital that your software is customized to the requirements of the business. As the saying goes, one size doesn't fit all.

To understand the personalized technology strategy consulting process, let's take an example. Say you would like to create a Lost & Found website, where both lost and found objects can be listed so that people can find their lost objects and finders can get rewarded.

Now let us list the main steps in technical strategy consulting and see specific examples of how each one can benefit you.

Technical Specifications – the first and most vital step in the IT consulting process is to turn your business idea into a set of technical specifications that describe in detail the needs of the software application you are trying to create. Here the expert technical consultant works hand-in-hand with you, asking many questions and offering suggestions based on experience and the latest IT trends. A few examples:

·Wouldn’t it be nice to pinpoint the location where the object was lost or found and show it to the user on a map?

·Gamification: this is a very popular trend in websites, using the power of social gamification to increase gratification and, in turn, drive usage. In this specific case, the IT consultant could suggest using gamification to keep track of the number of items successfully returned by a finder, rate finders based on user reviews and tie some monetary benefit to the top performers.

·Mobile support: since many items are found while on the move, the IT consultant might recommend making the site mobile-friendly and using the GPS on phones to keep track of where items were lost or found.
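To make the map and GPS ideas concrete, here is a minimal sketch, assuming lost and found reports each store a latitude/longitude pair and that "nearby" means within a chosen radius. The class and method names (`LostFoundGeo`, `haversineKm`, `isNearby`) are hypothetical; the distance itself is the standard haversine great-circle formula.

```java
// Hypothetical matcher for a Lost & Found site: a found item is a candidate match for a
// lost item when their reported GPS coordinates lie within a chosen radius.
final class LostFoundGeo {
    private static final double EARTH_RADIUS_KM = 6371.0; // mean Earth radius

    /** Great-circle (haversine) distance in kilometres between two points given in degrees. */
    static double haversineKm(double lat1, double lon1, double lat2, double lon2) {
        double dLat = Math.toRadians(lat2 - lat1);
        double dLon = Math.toRadians(lon2 - lon1);
        double a = Math.sin(dLat / 2) * Math.sin(dLat / 2)
                + Math.cos(Math.toRadians(lat1)) * Math.cos(Math.toRadians(lat2))
                * Math.sin(dLon / 2) * Math.sin(dLon / 2);
        return 2 * EARTH_RADIUS_KM * Math.asin(Math.sqrt(a));
    }

    /** True if the found report is within radiusKm of the lost report. */
    static boolean isNearby(double lostLat, double lostLon,
                            double foundLat, double foundLon, double radiusKm) {
        return haversineKm(lostLat, lostLon, foundLat, foundLon) <= radiusKm;
    }
}
```

One degree of latitude comes out at roughly 111.19 km, so `isNearby(0, 0, 1, 0, 50)` is false while a point 0.001 degrees away is well inside a 1 km radius.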

Architecture and Design – a software's success depends on a wide range of factors such as performance, security and manageability. Software architecture is the process of defining a well-structured software solution that meets all its functional and business requirements. It helps in working out many aspects, such as:

·Which third-party integrations will be used and how they will work, e.g. Google Maps for pinpointing where items were found.

·For enabling gamification (as described above), which data model should be used: whether the application should use a regular relational database or a NoSQL database (like Redis) along with batch jobs that calculate the scores offline.

·How the search and matching algorithm would work, and whether the application should use a search index like Apache Solr to enable faster search.
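The reason a search index speeds things up can be shown with a toy inverted index: instead of scanning every listing for each query, every term points straight at the IDs of the listings that contain it. This is a hypothetical sketch of the underlying idea only; Apache Solr (built on Lucene) adds analysis, stemming, relevance scoring and much more.

```java
import java.util.*;

// Toy inverted index: term -> sorted set of listing IDs containing that term.
final class ToyInvertedIndex {
    private final Map<String, Set<Integer>> postings = new HashMap<>();

    /** Lower-case the description, split on non-alphanumerics, and record the listing ID per term. */
    void add(int listingId, String description) {
        for (String term : description.toLowerCase(Locale.ROOT).split("[^a-z0-9]+")) {
            if (!term.isEmpty()) {
                postings.computeIfAbsent(term, t -> new TreeSet<>()).add(listingId);
            }
        }
    }

    /** AND query: IDs of listings that contain every query term. */
    Set<Integer> search(String query) {
        Set<Integer> result = null;
        for (String term : query.toLowerCase(Locale.ROOT).split("[^a-z0-9]+")) {
            if (term.isEmpty()) {
                continue;
            }
            Set<Integer> ids = postings.getOrDefault(term, Collections.emptySet());
            if (result == null) {
                result = new TreeSet<>(ids);   // first term: start from its posting list
            } else {
                result.retainAll(ids);         // later terms: intersect
            }
        }
        return result == null ? Collections.emptySet() : result;
    }
}
```

Each query only touches the posting lists of its own terms, which is why lookup cost stays small as the number of listings grows.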

Project Management – project management is often overlooked during technology strategy consulting, but it can push any software to its fullest potential and play an enormous role in its success. An experienced project manager studies the requirements in terms of technologies needed, project size, budget, etc., recommends appropriate vendors for both user interface (UI) design and actual software development, and helps negotiate with them (on both man-hours and rates) to make sure that you get the best software development experience at a reasonable price. The project manager then monitors the whole software development lifecycle and makes sure that the application is deployed bug-free, in a timely and cost-effective manner.

Testing – testing is the process of verifying whether a system behaves as expected. Many software development companies wait for the project to be 100% complete before they begin testing; IT consulting experts, however, believe that software testing should start at an early stage and go hand-in-hand with development, because a bug or defect is far easier and cheaper to fix early on.

Deployment – once your application is ready, software deployment is what makes it available for everyone to use. It's vital to think about the future when making your deployment strategy: which platforms you are targeting, how much traffic you're expecting and which servers should be used. An expert IT consultant helps you plan for today while keeping scalability in mind for the near future.

·For the Lost & Found site, some questions to ask would be: Is the site going to be used from various parts of the world? If so, it might make sense to use a Content Delivery Network (CDN) to serve static content locally and improve load times.

·What traffic patterns are expected in the first 6 months and beyond? This is used to determine server configuration and future scaling requirements.
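Turning expected traffic into a server count is simple arithmetic once a few numbers are assumed. The sketch below is a back-of-the-envelope estimate, not a sizing method from the article: every input (daily active users, requests per user, peak-to-average ratio, per-server throughput, headroom) is a hypothetical placeholder you would replace with your own estimates and load-test results.

```java
// Back-of-the-envelope capacity estimate; all inputs are hypothetical placeholders.
final class CapacityEstimate {
    /**
     * @param dailyActiveUsers expected daily active users
     * @param requestsPerUser  average requests per user per day
     * @param peakToAverage    how much busier the peak second is than the daily average
     * @param rpsPerServer     requests/second one server sustains (measure with a load test)
     * @param headroom         spare-capacity factor, e.g. 1.5 = 50% headroom
     * @return number of servers needed to absorb the peak
     */
    static int serversNeeded(long dailyActiveUsers, double requestsPerUser, double peakToAverage,
                             double rpsPerServer, double headroom) {
        double averageRps = dailyActiveUsers * requestsPerUser / 86_400.0; // 86,400 seconds per day
        double peakRps = averageRps * peakToAverage;
        return (int) Math.ceil(peakRps * headroom / rpsPerServer);
    }
}
```

For example, 864,000 daily users making 20 requests each average 200 requests/second; with a 4x peak and 50% headroom, servers handling 100 requests/second each come to 12.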

Scopenc Technologies provides technology consulting services with its Smart ‘CTO’ service.

More related articles: https://www.scopenc.com/blog/

Contact us:

https://www.scopenc.com

Email - [email protected], [email protected]

#software development#mobile app development#mobile app developers#startup#enterpreneur#software developers#iphone developer#andorid developer#machine learning#artificial intelligence#python developers


Text

Apache Flume vs NiFi and 2 more streaming ETL platforms for Big Data and IoT/IIoT

Having reviewed batch ETL tools for big data, today we will talk about streaming tools for loading and routing data from various sources: Apache NiFi, Fluentd and StreamSets Data Collector. Read on to learn about their similarities, differences, strengths and weaknesses. We have also gathered real examples of how they are used in practice in Big Data systems and the Internet of Things (IoT), including the Industrial IoT (IIoT).

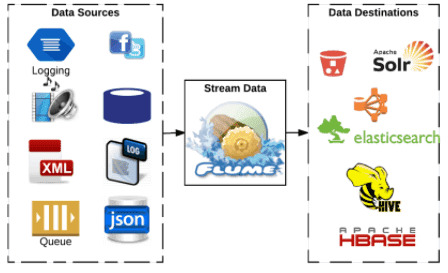

How Apache Flume is used for streaming ETL tasks

Among the streaming data ingestion systems from Apache Software Foundation (ASF) projects, besides NiFi, Apache Flume is frequently used in practice: a distributed, highly reliable system for efficiently collecting, aggregating and storing large volumes of logs from many different sources in a centralized data store. Originally created for streaming log processing in pipelines, Flume scales horizontally and is event-driven. This ETL tool offers low latency, fault tolerance and flexible extensibility through a powerful API and SDK (software development kit). Among Flume's drawbacks, note that, like Apache NiFi, it guarantees message delivery only with "at most once" and "at least once" semantics, not exactly once. This makes it possible to move data quickly and keeps fault tolerance cheap by minimizing the state that has to be stored. Flume can deliver events "at least once", but this reduces system throughput and can lead to duplicate messages [1]. Nevertheless, Apache Flume is widely used in practice as an ETL tool for Big Data. For example, the Singapore IT company Capillary Technologies, which provides a cloud e-commerce platform and related Omnichannel Customer Engagement services for retailers and brands, uses Flume to aggregate logs from 25 different data sources. Mozilla uses Flume together with ElasticSearch in its BuildBot project, a continuous integration tool that automates the compile and test cycle needed to validate changes to a project's code. This tool is now used by Mozilla, Chromium, WebKit and many other projects.
One of India's largest travel aggregators, Goibibo, also uses Flume to ship logs from its production systems to HDFS [2].
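Why "at least once" can produce duplicates, and how a downstream consumer can absorb them, fits in a small simulation. This is a hypothetical sketch, not Flume's API: the sender retries until it is acknowledged, so a lost acknowledgement causes a second delivery of the same event, and the sink deduplicates by event ID (an idempotent consumer).

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Simulation (not Flume code) of at-least-once delivery plus an idempotent, deduplicating sink.
final class AtLeastOnceDemo {
    static final class DedupSink {
        private final Set<String> seenIds = new HashSet<>();
        private final List<String> stored = new ArrayList<>();

        void receive(String eventId) {
            // Set.add returns false for an ID seen before, so the duplicate is dropped
            if (seenIds.add(eventId)) {
                stored.add(eventId);
            }
        }

        List<String> stored() {
            return stored;
        }
    }

    /** Sends until acknowledged; returns how many times the sink received the event. */
    static int sendWithRetry(String eventId, DedupSink sink, boolean firstAckLost) {
        int deliveries = 0;
        boolean acked = false;
        while (!acked) {
            sink.receive(eventId);  // the event reaches the sink...
            deliveries++;
            // ...but if the acknowledgement is lost, the sender cannot tell and retries
            acked = !(firstAckLost && deliveries == 1);
        }
        return deliveries;
    }
}
```

With a lost first acknowledgement the event is delivered twice yet stored once; exactly-once behaviour here comes from the consumer's deduplication, not from the transport.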

Apache Flume is often used for ETL tasks and for routing Big Data streams

Other streaming ETL solutions for Big Data

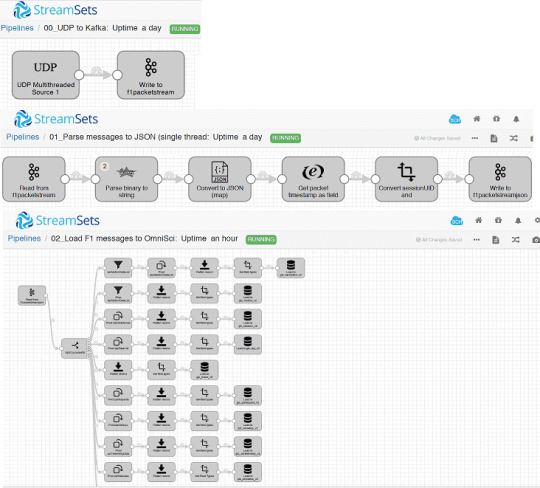

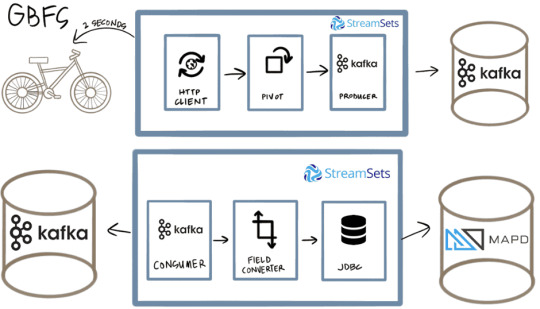

Among data ingestion and routing platforms that are not ASF projects, the following are most often used to move data between different sources and sinks:

· Fluentd – an open-source data collector designed to unify and organize a scalable logging infrastructure. Data collected with Fluentd can be passed on for storage and further processing to databases (MySQL, PostgreSQL, CouchBase, CouchDB, MongoDB, OpenTSDB, InfluxDB), distributed file systems including HDFS, cloud services (AWS, Google BigQuery) and search tools (Elasticsearch, Splunk, Loggly) [3].

· StreamSets Data Collector – the open-source enterprise infrastructure for continuous big data ingestion that is most similar to Apache NiFi. Its web GUI lets developers, engineers, analysts and data scientists easily build ETL pipelines with complex loading scenarios. StreamSets Data Collector integrates with many distributed systems, from file stores to relational DBMSs, including NoSQL and message queue systems: HDFS, HBase, Hive, Cassandra, MongoDB, Apache Solr, Elasticsearch, Oracle, MS SQL Server, MySQL, PostgreSQL, Netezza, Teradata and other JDBC-compatible relational DBMSs, Apache Kafka, JMS, Kinesis, Amazon S3, etc. [4]. Unlike Apache NiFi, which is driven by FlowFiles, StreamSets Data Collector is record-driven, which leads to further differences in how the two systems are operated. Read more about the similarities and differences between Apache NiFi and StreamSets Data Collector in our new article.

In practice, Fluentd is often used as a DevOps and system administration tool for collecting and analyzing logs from many distributed applications. It works with containers and container orchestration systems, in particular Docker and Kubernetes, which greatly simplifies testing and deployment under continuous integration and delivery practices [5]. As an example of StreamSets Data Collector in practice, consider the American company OmniSci, which develops GPU- and CPU-based software for visualizing big data. In particular, for realistic Formula 1 video games, StreamSets Data Collector was used to build a pipeline that receives driving telemetry from edge IIoT devices, packs the data into UDP packets and continuously sends it to the Apache Kafka message broker. The binary stream is then converted to strings and to JSON, which is enriched with timestamps and a unique session identifier. The enriched data is written back to the Kafka cluster, from where it flows to various sink systems: databases, BI dashboards, etc. This entire routing scheme for telemetry from a moving car, displayed interactively on visual dashboards, was built in StreamSets Data Collector [6].
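The enrichment step in the OmniSci pipeline (binary packet in, JSON with a timestamp and session ID out) can be sketched in a few lines. This is an illustration under loudly stated assumptions: the packet layout (a little-endian `float` speed followed by an `int` RPM) and the field names are hypothetical and are not the real game telemetry format; in the article this step runs inside StreamSets Data Collector, not in hand-written code.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.util.Locale;

// Hypothetical enrichment step: decode a binary telemetry packet and emit enriched JSON.
// Assumed layout (illustration only): little-endian float speedKph, then int rpm.
final class TelemetryEnricher {
    static String toEnrichedJson(byte[] packet, long timestampMillis, String sessionId) {
        ByteBuffer buf = ByteBuffer.wrap(packet).order(ByteOrder.LITTLE_ENDIAN);
        float speedKph = buf.getFloat();
        int rpm = buf.getInt();
        // sessionId is assumed to be a simple token (no quotes or backslashes to escape)
        return String.format(Locale.ROOT,
                "{\"speedKph\":%.1f,\"rpm\":%d,\"timestamp\":%d,\"sessionId\":\"%s\"}",
                speedKph, rpm, timestampMillis, sessionId);
    }
}
```

A real pipeline would use a JSON library for escaping and a schema for the packet; the point here is only the decode, convert and enrich sequence described above.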

OmniSci's IIoT data pipeline based on StreamSets Data Collector

Similarly, in the IIoT space, One Click Retail, a developer of distributed solutions, used StreamSets Data Collector to read the location and status of Ford GoBike rental bicycles and send them to a MapD database via Kafka and a JDBC connection [7].

ETL pipeline based on StreamSets Data Collector for IIoT in bike sharing

More examples of StreamSets Data Collector in practice, and its similarities to and differences from Apache NiFi, are in our next article. Master every detail of installing, administering and operating streaming ETL for Big Data in our hands-on course "Apache NiFi Cluster" at a licensed training center for IT professionals (managers, architects, engineers, administrators, Data Scientists and Big Data analysts) in Moscow.


Sources:

1. https://moluch.ru/archive/202/49512/
2. https://www.dezyre.com/article/sqoop-vs-flume-battle-of-the-hadoop-etl-tools-/176
3. https://blog.selectel.ru/sbor-i-analiz-logov-s-fluentd/
4. https://github.com/streamsets/datacollector
5. https://habr.com/ru/company/selectel/blog/250969/
6. https://streamsets.com/blog/omnisci-f1-demo-real-time-data-ingestion-streamsets/
7. https://streamsets.com/blog/real-time-bike-share-data-pipeline-streamsets-kafka-mapd/


Link

If you are looking for Elasticsearch or Apache Solr consulting services, contact us and we will help you choose the technology that best fits your business application.

DM us at [email protected] for a free consultation.

#Elasticsearch Development company#Apache Solr Development Services#Apache Solr Consulting Services#Apache Solr Development Company


Text

Global Enterprise Search Software Market Insights and Forecast to 2020-2026

Summary – A new market study, “Global Enterprise Search Software Market Insights and Forecast to 2020-2026” has been featured on WiseGuyReports.

This report focuses on the global Enterprise Search Software status, future forecast, growth opportunity, key market and key players. The study objectives are to present the Enterprise Search Software development in North America, Europe, China, Japan, Southeast Asia, India and Central & South America.

The key players covered in this study

Swiftype

Algolia

Elasticsearch

Apache Solr

SearchSpring

AddSearch

SLI Systems

Amazon CloudSearch

Coveo

FishEye

Inbenta

Market segment by Type, the product can be split into

Cloud Based

Web Based

Market segment by Application, split into

Large Enterprises

SMEs

Also Read : https://icrowdnewswire.com/2020/04/02/enterprise-search-software-market-2020-global-analysis-opportunities-and-forecast-to-2026/

Market segment by Regions/Countries, this report covers

North America

Europe

China

Japan

Southeast Asia

India

Central & South America

The study objectives of this report are:

To analyze global Enterprise Search Software status, future forecast, growth opportunity, key market and key players.

To present the Enterprise Search Software development in North America, Europe, China, Japan, Southeast Asia, India and Central & South America.

To strategically profile the key players and comprehensively analyze their development plan and strategies.

To define, describe and forecast the market by type, market and key regions.

In this study, the years considered to estimate the market size of Enterprise Search Software are as follows:

History Year: 2015-2019

Base Year: 2019

Estimated Year: 2020

Forecast Years: 2020 to 2026

For the data information by region, company, type and application, 2019 is considered as the base year. Whenever data information was unavailable for the base year, the prior year has been considered.

FOR MORE DETAILS: https://www.wiseguyreports.com/reports/5049615-global-enterprise-search-software-market-size-status-and-forecast-2020-2026

About Us:

Wise Guy Reports is part of the Wise Guy Research Consultants Pvt. Ltd. and offers premium progressive statistical surveying, market research reports, analysis & forecast data for industries and governments around the globe.

Contact Us:

NORAH TRENT

Ph: +162-825-80070 (US)

Ph: +44 2035002763 (UK)


Link

NextBrick: Solr Support and Solr Consulting Company

NextBrick offers Apache Solr support, Solr consulting, architecture, integration and implementation services at competitive prices. Our expert engineers provide 24x7 support coverage, along with Solr performance and relevancy tuning services.


Text

EXCELLENT JAVA WEB APPLICATION DEVELOPMENT SERVICES

Java, one of the most useful and important programming languages, has earned a worldwide reputation for building customized web applications. ProminentPixel has been harnessing the power of Java web development to the core, managing open-source Java-based systems all over the globe. Java allows developers to build unique web applications in less time and at low cost.

ProminentPixel provides customized Java web development and Java application development services that meet client requirements and business needs. As a leading Java web application development company in India, we have delivered the best services to thousands of clients throughout the world.

OUR JAVA WEB DEVELOPMENT SERVICES

Web Services Development

Web Application Development

SaaS Application Development

REST API Development

Microservice Development

Legacy Application Modernization

Our Java web development team has successfully modernized numerous applications by replacing old frameworks with modern web application frameworks and by adding completely new front-end frameworks, giving our clients modern applications without rebuilding the whole enterprise application.

Application Migration

We have helped many clients with application migration, including migration to cloud environments for better availability and scaling. Our Java development team also has expertise in full end-to-end migration of various applications, and we have also taken a hybrid approach to application migration for some clients.

Apache Solr Integration

Elasticsearch ELK Integration

Artificial Intelligence Integration

OUR TECHNOLOGY

Our Java web development team has crafted numerous solutions for global clients using prominent tools and technologies.

Technology areas: Backend, Frontend, Servers, Database, Tools, Project Lifecycle Management.

Backend:

JAVA

SCALA

SPRING FRAMEWORK

SPRING

HIBERNATE

SPRING-JPA

SPRINGBOOT

MICROSERVICES

STRUTS

OUR JAVA WEB APPLICATION DEVELOPMENT TEAM

Our team provides best-in-class Java web application development services, developing and implementing multi-tier (n-tier) Java / J2EE / J2ME applications with a sound architecture and framework. Our experienced team caters to the needs of enterprises by offering scalable Java web development services at an affordable price.

At ProminentPixel, we have certified, professional Java web and application developers who are experts in their industry domains. For cutting-edge solutions on the Java platform, hire the ProminentPixel Java web application team. Matchless Java web development services combined with a high-quality user experience have been the foundation of our rapid success.

WHY PROMINENTPIXEL AS YOUR NEXT JAVA DEVELOPMENT PARTNER?

We deliver unique, innovative, budget-friendly solutions on time to our business partners, helping them achieve business excellence.

Solution Driven Approach

Innovative Teams

Matchless Services

Best In Class Experts

Certified Developers

Cost Effective

OUR APPROACH

Our unique, innovative approach and “out of the box” thinking place ProminentPixel among the top Java web development team providers in India.


Text

How to Improve Problem Solving Skills - Developer Guide

Via http://bit.ly/2WD0x0l


How to Improve Problem Solving Skills

Jeffery Yuan

April 24, 2019

Why Problem-Solving Skills Matter

Problem Solving and troubleshooting

Is fun

Is part of daily work

Solve the problem, get things done

Work more efficiently

With more confidence

Less pressure

Go home earlier

Solve the problem when needed

It’s your responsibility if it blocks you or the team

How to Solve a Problem

Understand the problem/environment first

Understand the problem before searching Google; otherwise the search may lead you in completely the wrong direction.

Find related log/data

Copy/Save logs/info/findings that may be related

Check the Log and Error Message

Read/Understand the error message

Where to find log

Common places: /var/log

From the process command line (JVM options), e.g.:

-Dcassandra.logdir=/var/log/cassandra
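Finding a `-D` log-directory flag among JVM startup options can also be done in code. A small sketch, assuming you only need the value of one system-property flag; `LogDirFinder` is a hypothetical helper. `RuntimeMXBean.getInputArguments()` returns the options of the JVM the code runs in; for another process you would read its command line instead (for example with `ps` or `jcmd <pid> VM.command_line`).

```java
import java.lang.management.ManagementFactory;
import java.util.List;
import java.util.Optional;

// Hypothetical helper: extract the value of -D<property>=... from JVM arguments.
final class LogDirFinder {
    static Optional<String> findProperty(List<String> jvmArgs, String property) {
        String prefix = "-D" + property + "=";
        return jvmArgs.stream()
                .filter(arg -> arg.startsWith(prefix))
                .map(arg -> arg.substring(prefix.length()))
                .findFirst();
    }

    /** Looks in the options of the JVM this code is running in. */
    static Optional<String> findInCurrentJvm(String property) {
        return findProperty(ManagementFactory.getRuntimeMXBean().getInputArguments(), property);
    }
}
```

For example, `findProperty(List.of("-Xmx1g", "-Dcassandra.logdir=/var/log/cassandra"), "cassandra.logdir")` yields `/var/log/cassandra`.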

Case Study – The Log and Error Message

Problem: Failed to talk to Cassandra server: 10.10.10.10

Where does 10.10.10.10 come from?

gfind . -iname '*.jar' -printf "unzip -c %p | grep -q '10.10.10.10' && echo %p\n" | sh

Or search the corporate GitHub/code base
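Where GNU `find` (`gfind`) or `unzip` is not available, the same jar search can be written in plain Java. This is a sketch of the idea rather than the author's tool; `JarGrep` is a hypothetical name. It walks a directory, opens every `.jar`, and reports the jars in which any entry's bytes contain the string. A plain ASCII string such as an IP address is stored with the same bytes in `.properties` files and in class-file constant pools, so both are found.

```java
import java.io.IOException;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;
import java.util.zip.ZipEntry;
import java.util.zip.ZipFile;

// Hypothetical Java equivalent of the gfind/unzip/grep one-liner.
final class JarGrep {
    static List<Path> jarsContaining(Path root, String needle) throws IOException {
        byte[] target = needle.getBytes(StandardCharsets.UTF_8);
        List<Path> jars;
        try (Stream<Path> files = Files.walk(root)) {      // the stream must be closed
            jars = files.filter(p -> p.toString().endsWith(".jar")).sorted().collect(Collectors.toList());
        }
        List<Path> hits = new ArrayList<>();
        for (Path jar : jars) {
            if (jarContains(jar, target)) {
                hits.add(jar);
            }
        }
        return hits;
    }

    private static boolean jarContains(Path jar, byte[] target) throws IOException {
        try (ZipFile zip = new ZipFile(jar.toFile())) {
            for (ZipEntry entry : Collections.list(zip.entries())) {
                if (entry.isDirectory()) {
                    continue;
                }
                try (InputStream in = zip.getInputStream(entry)) {
                    if (indexOf(in.readAllBytes(), target) >= 0) {  // decompressed content, like unzip -c
                        return true;
                    }
                }
            }
        }
        return false;
    }

    /** Naive byte search; fine for config files and class files. */
    private static int indexOf(byte[] haystack, byte[] needle) {
        outer:
        for (int i = 0; i + needle.length <= haystack.length; i++) {
            for (int j = 0; j < needle.length; j++) {
                if (haystack[i + j] != needle[j]) {
                    continue outer;
                }
            }
            return i;
        }
        return -1;
    }
}
```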

It comes from the commons2 project; check what the current settings are in GitHub

Now the problem and the solution are clear

Just upgrade commons2 to the latest version

Source code is always the ultimate truth

Find related code in GitHub

Find examples/working code

Understand how and why the code works by running and debugging it

Check the log with the code

Most problems can be solved by checking log and source code

Reproduce the problem

Find an easier way to reproduce it

main method, unit test, mock

Simplify the suspect code

Find the related code and remove everything unrelated

Reproduce locally

Connect to the remote data in local dev

Remote debug

Last resort, slow

Solving Problem from Different Angles

Sometimes we find a problem in production that needs a code change to fix it

Try to find a workaround by changing the database, Solr or other configuration

We can fix the code later

Find Information Effectively

Google search: error message, exception

Search source code in GitHub/Eclipse

Search log

Search in IDE

Cmd+alt+h, cmd+h

Search command history

history | grep git | grep erase

history | grep ssh | grep 9042

history | grep kubectl | awk '{$1="";print}' | sort -u

Find Information Effectively Cont.

Know your company’s internal resources

Where to find them

Know some experts in the company you can ask for help

How to Troubleshoot and Debug

Don’t overcomplicate it.

In most cases, the solution/problem is quite simple

Troubleshooting is about thinking about what may go wrong.

Track what changes you have made

Resources About Troubleshooting

Debug It!: Find, Repair, and Prevent Bugs in Your Code

Urgent Issues in Production

Collaborate and share updates in a timely manner

Let others know what you are testing/checking, the progress, what you have found, what you will do next

Ask for help

Before asking

Try to understand the problem and fix it yourself first

This applies when the issue is not urgent

Where and Who

Coworkers

Involve more people: the team, related teams

Stackoverflow

Specific forums

http://bit.ly/2QHXwq4

Google Groups

Github issues

Ask in multiple channels

How to Ask for Help

Provide more context and info

log, stack trace or any information that may help others understand the problem

Share what you have found and what you have tried

Ask for help only once for the same, similar or related issues

Learn more

The knowledge: root cause, etc

Learn their thinking process

how they approach this problem (logs, code), tools

Fix same/similar/related problems in other places

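People make the same mistakes in different places

Example: @GetMapping(value = "/config/{name:.+}"), where the .+ pattern keeps Spring MVC from treating everything after a dot as a file extension and truncating the path variable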

Knowledge

Be prepared

Learn how to debug and troubleshoot

Learn tools used for debugging

Learn the frameworks, libraries, products and services used in your project

Apache/Tomcat configuration

How to manage/troubleshoot Cassandra/Kafka/Solr

Know what problems may happen and what code changed recently

Knowledge cont.

Common Problems

Different versions of the same library

mvn dependency:tree

mvn dependency:tree -Dverbose -Dincludes=com.amazonaws:aws-java-sdk-core
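`mvn dependency:tree` shows what Maven resolved; at runtime you can also ask the class loader directly. A minimal sketch, assuming the suspect library is on the classpath of the running application: `ClassLoader.getResources` returns every location providing the `.class` file, so more than one result means several copies are present and classpath order decides which one wins. `DuplicateClassFinder` is a hypothetical name.

```java
import java.io.IOException;
import java.net.URL;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical helper: list every classpath location that provides a given class.
final class DuplicateClassFinder {
    static List<URL> locationsOf(String className, ClassLoader loader) throws IOException {
        String resource = className.replace('.', '/') + ".class";
        return new ArrayList<>(Collections.list(loader.getResources(resource)));
    }
}
```

Calling it with a class from the library in question (for example one from aws-java-sdk-core) and printing the URLs shows which jars carry it.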

Tools

Tools - Eclipse

Use Conditional Breakpoint to Execute Arbitrary Code (and automatically)

Use Display View to Execute Arbitrary Code

Find which jar contains the class the application is actually using

MediaType.class.getProtectionDomain().getCodeSource().getLocation()

Breakpoint doesn’t work? A common cause:

Multiple versions of the same class or library
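The one-liner above throws a `NullPointerException` for classes loaded by the bootstrap loader (such as `java.lang.String`), because they have no code source. A slightly safer wrapper, as a sketch; `WhereLoaded` is a hypothetical name.

```java
import java.security.CodeSource;

// Hypothetical wrapper around getProtectionDomain().getCodeSource().getLocation().
final class WhereLoaded {
    static String locationOf(Class<?> type) {
        CodeSource source = type.getProtectionDomain().getCodeSource();
        if (source == null || source.getLocation() == null) {
            // Bootstrap/JDK classes (e.g. java.lang.String) have no code source
            return "<no code source: bootstrap or JDK class>";
        }
        return source.getLocation().toString(); // e.g. the jar the class was loaded from
    }
}
```

If a breakpoint never triggers, comparing this location with the jar your IDE attached the source from is a quick check for the multiple-versions problem.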

Practice - Connect to the remote data in local dev

Create a tunnel to the ZooKeeper and Solr nodes

Add a conditional breakpoint in CloudSolrClient.sendRequest(SolrRequest, String)

on the line before LBHttpSolrClient.Req req = new LBHttpSolrClient.Req(request, theUrlList); with the condition below. It rewrites the URL list to point at the local tunnel ports and returns false, so the breakpoint never suspends:

theUrlList.clear();
theUrlList.add("http://localhost:18983/solr/searchItems/");
theUrlList.add("http://localhost:28983/solr/searchItems/");
return false;

Tools - Decompiler

GUI: Bytecode-Viewer

alias decom="java -jar /Users/jyuan/apple/tools/misc/Bytecode-Viewer-2.9.11.jar"

CFR

The best option; supports Java 8

Tools - Java

jcmd: One JDK Command-Line Tool to Rule Them All

jcmd <pid> Thread.print

jcmd <pid> GC.heap_dump <file>

Thread dump Analyzer

http://fastthread.io/

Heap dump Analyzer

Eclipse MAT

VisualVM
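When `jcmd` with `Thread.print` is not an option (for example, no shell access to the host), a thread dump can be taken from inside the application through the standard `ThreadMXBean`. A sketch, assuming you would expose it only behind a protected debug endpoint; `ThreadDumper` is a hypothetical name. Note that `ThreadInfo.toString()` prints only a limited number of stack frames, so iterate over `getStackTrace()` yourself if you need full stacks.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;
import java.lang.management.ThreadMXBean;

// Hypothetical in-process thread dump using the standard management API.
final class ThreadDumper {
    static String dump() {
        ThreadMXBean threads = ManagementFactory.getThreadMXBean();
        // Request lock details only where the JVM supports them (otherwise dumpAllThreads throws)
        boolean monitors = threads.isObjectMonitorUsageSupported();
        boolean synchronizers = threads.isSynchronizerUsageSupported();
        StringBuilder out = new StringBuilder();
        for (ThreadInfo info : threads.dumpAllThreads(monitors, synchronizers)) {
            out.append(info); // thread name, state, held/awaited locks, top stack frames
        }
        return out.toString();
    }
}
```

The output can be pasted into a thread dump analyzer such as the one listed above, although those tools expect the `jcmd`/`jstack` format and may parse it only partially.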

Tools - Splunk

Syntax

Expand messages to show all fields

Click Format on the top and select All lines for the Max Lines setting

After searching and finding the problem, use Nearby Events (+/- x seconds) to show the surrounding context

Tools - Misc

Search contents of .jar files for a specific string:

gfind . -iname '*.jar' -printf "unzip -c %p | grep -q 'string_to_search' && echo %p\n" | s

nc -zv, lsof, df, find, grep

Fiddler

Problem Solving in Practice

Example: Redis cache.put Hangs

Take a thread dump to figure out what’s happening when reading from the cache

Read the related code (RedisCache$RedisCachePutCallback) to figure out how Spring implements @Cacheable(sync=true)

Check whether a cacheName~lock key exists in Redis

When you use a feature, know how it’s implemented.
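Why a leftover `cacheName~lock` key can make a put hang is easy to see in a simplified model. This is a hypothetical sketch, not Spring's code: a `Map` stands in for Redis, and a writer waits while the lock key exists. If a crashed process left the key behind, an unbounded wait never returns; the bounded wait below gives up after a deadline so the stale lock becomes visible instead of a hang.

```java
import java.util.Map;

// Simplified model (not Spring's implementation) of waiting on a lock key before writing.
final class LockKeyWait {
    /** Returns true if the lock key disappeared within maxWaitMillis, false on timeout. */
    static boolean waitForUnlock(Map<String, String> store, String lockKey,
                                 long maxWaitMillis, long pollMillis) throws InterruptedException {
        long deadline = System.nanoTime() + maxWaitMillis * 1_000_000L;
        while (store.containsKey(lockKey)) {
            if (System.nanoTime() >= deadline) {
                return false; // stale lock: report it instead of hanging forever
            }
            Thread.sleep(pollMillis);
        }
        return true;
    }
}
```

In a thread dump, an unbounded version of this loop shows up as a thread repeatedly sleeping inside the put path, which is the pattern to look for in the Redis case above.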

Practice - Iterator vs Iterable

What’s the problem in the following Code?

@Cacheable(key = "#appName") public Iterator<Message> findActiveMessages(final String appName) {}

Practice - Iterator vs Iterable

How to find the root cause

Symptoms: the function only works once in a while, right after the cache is refreshed

Difference between Iterator and Iterable

Don’t use an Iterator when you need to traverse multiple times

Don’t use an Iterator as a cache value
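The Iterator bug can be reproduced without Spring by using a plain `Map` as the cache, in the spirit of "main method, unit test, mock" from the reproduction tips. In this hypothetical sketch (`CachedMessageService` and its data source are made up), the first caller exhausts the cached `Iterator`, so every later cache hit sees zero messages until the entry is refreshed; caching a `List` (an `Iterable`) fixes it.

```java
import java.util.HashMap;
import java.util.Iterator;
import java.util.List;
import java.util.Map;

// Hypothetical reproduction: a HashMap stands in for @Cacheable(key = "#appName").
final class CachedMessageService {
    private final Map<String, Object> cache = new HashMap<>();

    /** Buggy: caches a one-shot Iterator that the first caller consumes. */
    @SuppressWarnings("unchecked")
    Iterator<String> findActiveMessagesIterator(String appName) {
        return (Iterator<String>) cache.computeIfAbsent("iter:" + appName,
                k -> loadActiveMessages(appName).iterator());
    }

    /** Fixed: caches a List, which can be traversed any number of times. */
    @SuppressWarnings("unchecked")
    List<String> findActiveMessages(String appName) {
        return (List<String>) cache.computeIfAbsent("list:" + appName, k -> loadActiveMessages(appName));
    }

    // Hypothetical data source
    private static List<String> loadActiveMessages(String appName) {
        return List.of(appName + ": maintenance tonight", appName + ": new feature");
    }

    static int count(Iterator<String> it) {
        int n = 0;
        while (it.hasNext()) {
            it.next();
            n++;
        }
        return n;
    }
}
```

Calling `findActiveMessagesIterator("shop")` twice yields 2 messages and then 0, matching the "only works right after the cache is refreshed" symptom.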

Practice 2 - Spring Cacheable Not Working

The class using the cache annotation was initialized too early

Add a breakpoint in the bean's default constructor; the stack trace then shows why, and because of which bean (or configuration class), this bean was created

Understand how Spring Cache works internally: Spring proxies

CacheAspectSupport
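Why caching silently stops working when a bean bypasses its proxy can be shown with a JDK dynamic proxy standing in for Spring AOP. This is a hypothetical sketch: in Spring the interception is done by `CacheAspectSupport`, not by this code. Only calls that go through the proxy are cached; any code holding the raw object, for instance because the bean was created and injected before the caching infrastructure was ready, calls the real method every time.

```java
import java.lang.reflect.Proxy;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical stand-in for a cached Spring bean.
interface Greeter {
    String greet(String name);
}

final class CountingGreeter implements Greeter {
    int calls; // how many times the real method ran

    public String greet(String name) {
        calls++;
        return "Hello, " + name;
    }
}

final class CachingProxy {
    static Greeter wrap(Greeter target) {
        Map<List<Object>, Object> cache = new HashMap<>();
        return (Greeter) Proxy.newProxyInstance(
                Greeter.class.getClassLoader(),
                new Class<?>[] {Greeter.class},
                (proxy, method, args) -> {
                    if (method.getDeclaringClass() == Object.class) {
                        return method.invoke(target, args); // equals/hashCode/toString: not cached
                    }
                    List<Object> key = Arrays.asList(method.getName(), args[0]); // method + first argument
                    if (!cache.containsKey(key)) {
                        cache.put(key, method.invoke(target, args)); // cache miss: call the real object
                    }
                    return cache.get(key);
                });
    }
}
```

Two calls through the proxy run the real method once; a direct call on the raw object always runs it, which is exactly what an early-initialized, un-proxied bean does.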

Post Mortem

Reflection: Lesson Learned

How we found the root cause

Why it took so long

What we have learned

What’s the root cause

Why we made the mistake

How we can prevent this from happening again

Share the knowledge in the team via wiki, quip, email etc.

Take time to solve the problem and find the root cause, but solve it only once

Think More

Think over the code/problem and try to find a better solution even after the issue is solved

Everything that stops you from working effectively is a problem

Fix them

Bonus

Building Troubleshooting-Friendly Application

Return meaningful error code and response

Return debug info in the response when requested

This should be safe to expose, or protected

Add a request ID (requested_id) in the client, automatically when you provide the client application

Mock User Feature

Preview Feature
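The first points above (meaningful errors, debug info only on request and only when safe, a request ID) can be combined in one error-body builder. A minimal sketch; the field names and the `ErrorBodies` helper are hypothetical. It reuses the caller's request ID, or generates one, so client and server logs can be correlated, and it adds debug details only when the caller asks for them and is allowed to see them.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.UUID;

// Hypothetical troubleshooting-friendly error body.
final class ErrorBodies {
    static Map<String, Object> errorBody(int status, String message, String incomingRequestId,
                                         boolean debugRequested, boolean callerIsTrusted,
                                         String debugInfo) {
        String requestId = (incomingRequestId == null || incomingRequestId.isBlank())
                ? UUID.randomUUID().toString()   // no ID from the client: generate one
                : incomingRequestId;             // otherwise reuse it for log correlation
        Map<String, Object> body = new LinkedHashMap<>();
        body.put("status", status);
        body.put("message", message);
        body.put("requestId", requestId);
        if (debugRequested && callerIsTrusted) { // debug info must be safe or protected
            body.put("debug", debugInfo);
        }
        return body;
    }
}
```

Logging the same `requestId` on the server side makes it possible to jump from a client's error report straight to the matching log lines.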

How to write test efficiently

Learn and Use Hamcrest, Mockito, JUnit, TestNG, REST Assured

Add Static Import in Eclipse

Preferences > Java > Editor > Content Assist > Favorites, then add:

org.hamcrest, org.hamcrest.Matchers.*, org.hamcrest.CoreMatchers.*, org.junit.*, org.junit.Assert.*, org.junit.Assume.*, org.junit.matchers.JUnitMatchers.*, org.mockito.Mockito, org.mockito.Matchers, org.mockito.ArgumentMatchers, io.restassured.RestAssured

This presentation was built using Reveal.js, Markdown and Github Pages


From lifelongprogrammer.blogspot.com


Text

Data engineer job at INTELLLEX Singapore

INTELLLEX (www.intelllex.com) makes it easy for law firms and companies to optimise their underused legal knowledge with AI technology, leading them into the New Age of Knowledge.

The legal industry is one of the most knowledge-intensive industries, and knowledge management is even more difficult with the data surge of the digital age. INTELLLEX has built an online workspace which combines an intelligent search engine (SOURCE) with a knowledge management system (STACKS).

We help unlock the latent knowledge in documents so that lawyers can tap into each other's expertise. This allows law firms to take stock of their lawyers' work and better understand the expertise they have. Their institutional knowledge is accounted for and securely stored.

We have been recognised as one of the top 10 legal tech solutions in Asia Pacific (https://legal.apacciooutlook.com/vendor/intelllex-harnessing-the-power-of-knowledge-in-law-firms-cid-3331-mid-165.html) and accepted into the Barclays Bank LawTech incubation programme in London.

We would love to have you join us if our culture described below resonates with you.

Ownership and driving impact: We give you the flexibility to take charge of your domain and push boundaries. Chart the direction for an evolving product.

Support for your development: We believe in growing our people so they can grow with us. We are supportive of relevant learning opportunities that will strengthen our team e.g. training or conferences.

Strong knowledge sharing culture: We're in the business of knowledge organisation and sharing, and we walk the talk in INTELLLEX. We often reach out to external speakers or alumni.

Team bonding: We are big on team activities that bring us out of our comfort zone and help us see a different side to each other. We've climbed Mount Ophir and biked in Penang.

Coordinate, streamline and automate data ingestion to our data warehouse

Improve the NLP capabilities of our search engine

Explore and benchmark different system architectures and design

What skills do you need?

Ninja-level Python skills, as our data pipeline is built on Python scripts/tools

Proficiency in Java to understand the code components from the Apache Software Foundation

Experienced in administering mainstream databases such as PostgreSQL and MySQL

Experience with Solr or equivalent full text databases

Experience with Airflow or similar ETL workflow frameworks

What skills will give you bonus points?

You have good intuition for data

Exceptional understanding of networking and security

Proficient in modern languages such as Scala and Go

Experience managing AWS and Kubernetes clusters

From http://www.startupjobs.asia/job/40584-data-engineer-big-data-job-at-intelllex-singapore

from https://startupjobsasiablog.wordpress.com/2018/09/24/data-engineer-job-at-intelllex-singapore/

StartUp Jobs Asia - Startup Jobs in Singapore , Malaysia , HongKong ,Thailand from http://www.startupjobs.asia/job/40584-data-engineer-big-data-job-at-intelllex-singapore Startup Jobs Asia https://startupjobsasia.tumblr.com/post/178410794794

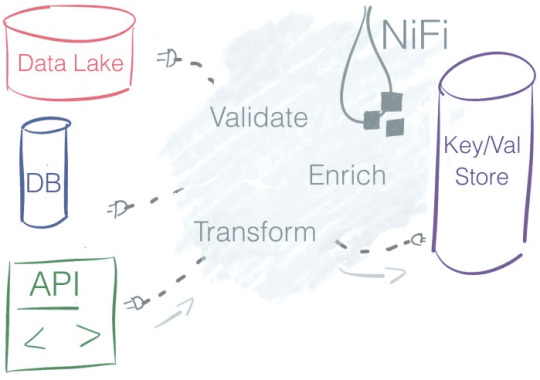

What makes Apache NiFi good: 10 key advantages for Big Data and IoT projects

Continuing our discussion of the practical use of Apache NiFi in Big Data and Internet of Things (IoT) systems, today we look at what drives the popularity of this clustered platform for routing, transforming and delivering distributed data. Read on for the key advantages of Apache NiFi in the context of applying this tool in practice.

10 main advantages of Apache NiFi