
Benefits Of API Testing Tools

API testing is a crucial aspect of modern software development that involves evaluating the functionality, performance, security, and reliability of Application Programming Interfaces (APIs). APIs act as bridges between different software components, enabling them to communicate and share data seamlessly.

What is API Testing?

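API testing is the systematic examination of APIs to ensure they function correctly, securely, and reliably. It focuses on verifying that given inputs produce the expected outputs (status codes, response bodies, and side effects) and that interactions with external systems behave as intended.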
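A minimal sketch of what that looks like in practice, using only Python's standard library. The `/users/<id>` endpoint, the `USERS` data, and the helper names are hypothetical. The test starts the API on a free local port, then checks status codes and JSON bodies for three inputs: a valid ID, an unknown ID, and a malformed ID.

```python
import json
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical data behind the example API.
USERS = {1: {"id": 1, "name": "Ada"}}

# Opener that ignores proxy environment variables so local calls stay local.
OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


class UserHandler(BaseHTTPRequestHandler):
    """Serves GET /users/<id> as JSON; anything else returns 404."""

    def do_GET(self):
        parts = self.path.strip("/").split("/")
        user = None
        if len(parts) == 2 and parts[0] == "users" and parts[1].isdigit():
            user = USERS.get(int(parts[1]))
        status, body = (200, user) if user else (404, {"error": "not found"})
        payload = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, *args):
        pass  # silence per-request logging during tests


def call(base_url, path):
    """Send a GET request and return (status code, parsed JSON body)."""
    try:
        with OPENER.open(base_url + path) as resp:
            return resp.status, json.loads(resp.read())
    except urllib.error.HTTPError as err:  # raised for 4xx/5xx responses
        return err.code, json.loads(err.read())


def test_user_api():
    server = HTTPServer(("127.0.0.1", 0), UserHandler)  # port 0 = any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    base = f"http://127.0.0.1:{server.server_port}"
    try:
        # Valid input: expect 200 and the documented fields.
        assert call(base, "/users/1") == (200, {"id": 1, "name": "Ada"})
        # Unknown ID: expect a clean 404, not a server error.
        assert call(base, "/users/99") == (404, {"error": "not found"})
        # Malformed ID: also rejected with 404.
        assert call(base, "/users/abc")[0] == 404
    finally:
        server.shutdown()
        server.server_close()
    return "passed"


if __name__ == "__main__":
    print(test_user_api())
```

Dedicated API testing tools automate this same request-and-assert loop across many endpoints and environments.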

Importance of Using API Testing Tools

API testing tools play a pivotal role in ensuring the quality of APIs and the applications that rely on them. They automate the testing process, provide extensive test coverage, and help identify issues early in the development cycle.

Types of API Testing

A. Unit Testing

Verify individual API components in isolation.

B. Functional Testing

Validate the functionality of APIs against specified requirements.

C. Load Testing

Assess how APIs perform under heavy load.

D. Security Testing

Detect vulnerabilities and ensure data security.

E. Compatibility Testing

Ensure the API behaves consistently for the different clients (devices, browsers, platforms) and environments that consume it.

F. Regression Testing

Confirm that changes haven't adversely affected existing functionality.

G. Endpoint Testing

Test each endpoint the API exposes.

H. Mock Testing

Simulate the behavior of external dependencies for testing purposes.
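Mock testing can be sketched with Python's `unittest.mock`. The hypothetical `convert` function depends on an external exchange-rate service. The test replaces that service with a `Mock`, so it controls both the happy path and an outage without any network access. The service URL and response shape are assumptions made for illustration. Passing the HTTP function in as a parameter is a design choice that makes the dependency easy to swap.

```python
from unittest import mock

RATES_URL = "https://rates.example.com/latest"  # hypothetical external service


def fetch_rate(http_get, currency):
    """Ask the external service for a rate; fail loudly if it is unavailable."""
    response = http_get(f"{RATES_URL}?symbol={currency}")
    if response["status"] != 200:
        raise RuntimeError("rates service unavailable")
    return response["body"]["rate"]


def convert(http_get, amount, currency):
    """Code under test: converts an amount using the external rate."""
    return round(amount * fetch_rate(http_get, currency), 2)


def run_mock_tests():
    # Happy path: the mock returns a fixed, predictable rate.
    fake_get = mock.Mock(return_value={"status": 200, "body": {"rate": 3.75}})
    assert convert(fake_get, 100, "SAR") == 375.0
    fake_get.assert_called_once_with(f"{RATES_URL}?symbol=SAR")

    # Outage: the mock simulates a 503 so the error path can be tested.
    down_get = mock.Mock(return_value={"status": 503, "body": {}})
    try:
        convert(down_get, 100, "SAR")
    except RuntimeError as exc:
        assert "unavailable" in str(exc)
    else:
        raise AssertionError("expected RuntimeError")
    return "passed"


print(run_mock_tests())  # passed
```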

Advantages of Using API Testing Tools

A. Improved Efficiency

Automated Testing

Tools automate the testing process, reducing manual effort.

Rapid Test Execution

Quick execution of test cases enables faster feedback.

B. Enhanced Test Coverage

Testing Various Scenarios

Tools facilitate testing of diverse scenarios, including edge cases.

Testing Multiple Endpoints

Test multiple API endpoints simultaneously for comprehensive coverage.
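Table-driven testing is one common way to cover many scenarios quickly. Each row pairs an input with the expected status, and the rows include boundary values. This is a minimal sketch: the `create_order` handler and its limits (quantity from 1 to 1000) are hypothetical.

```python
def create_order(payload):
    """Hypothetical endpoint handler returning an HTTP status code."""
    if not isinstance(payload.get("sku"), str):
        return 400  # missing or wrongly typed field
    qty = payload.get("quantity")
    if not isinstance(qty, int) or isinstance(qty, bool):
        return 400  # bool is a subclass of int, so reject it explicitly
    if not 1 <= qty <= 1000:
        return 422  # well-formed but outside the allowed range
    return 201


# Each row: (scenario name, request payload, expected status).
CASES = [
    ("valid order", {"sku": "A1", "quantity": 2}, 201),
    ("missing sku", {"quantity": 2}, 400),
    ("quantity as string", {"sku": "A1", "quantity": "2"}, 400),
    ("boolean quantity", {"sku": "A1", "quantity": True}, 400),
    ("zero quantity (lower edge)", {"sku": "A1", "quantity": 0}, 422),
    ("maximum quantity (upper edge)", {"sku": "A1", "quantity": 1000}, 201),
    ("above maximum", {"sku": "A1", "quantity": 1001}, 422),
]


def run_cases():
    """Return the scenarios whose actual status differs from the expected one."""
    failures = []
    for name, payload, expected in CASES:
        actual = create_order(payload)
        if actual != expected:
            failures.append((name, expected, actual))
    return failures


print(run_cases())  # [] when every scenario behaves as expected
```

Adding a new edge case then costs one line in `CASES` rather than a new test function.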

C. Early Detection of Bugs

Identifying Issues Before Integration

Tools catch issues early in the development cycle, preventing integration problems.

D. Cost-Effectiveness

Reducing Development and Testing Costs

Automation and early issue identification lead to cost savings.

Challenges in API Testing

A. Common Challenges

Versioning Issues

Managing changes to APIs and ensuring backward compatibility can be challenging.

Dependency on External Services

Testing may depend on external services that can be unpredictable.

B. Mitigation Strategies

Version Control

Version the API explicitly and keep older versions under regression tests so that changes remain backward compatible.

Mocking Services

Use mock services to simulate external dependencies.
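Versioning and regression testing can be combined in a simple contract check. A new API version may add fields, but every field an older client relies on should still be present with the same type. This is a minimal sketch under that assumption. The v1 and v2 responses are hypothetical.

```python
def compatibility_problems(old, new, path="$"):
    """List fields that were removed or changed type between two responses.

    Added fields are allowed; removed or retyped ones break older clients.
    """
    if isinstance(old, dict):
        if not isinstance(new, dict):
            return [f"{path}: object became {type(new).__name__}"]
        problems = []
        for key, old_value in old.items():
            if key not in new:
                problems.append(f"{path}.{key}: removed")
            else:
                problems.extend(
                    compatibility_problems(old_value, new[key], f"{path}.{key}")
                )
        return problems
    if type(old) is not type(new):
        return [f"{path}: {type(old).__name__} -> {type(new).__name__}"]
    return []


v1 = {"id": 1, "name": "Ada", "address": {"city": "Riyadh"}}
v2_ok = {"id": 1, "name": "Ada", "address": {"city": "Riyadh", "zip": "12345"},
         "email": "ada@example.com"}
v2_bad = {"id": "1", "address": {}}

print(compatibility_problems(v1, v2_ok))   # []
print(compatibility_problems(v1, v2_bad))
# ['$.id: int -> str', '$.name: removed', '$.address.city: removed']
```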

API testing tools offer improved efficiency, enhanced test coverage, early bug detection, and cost savings.

By understanding the importance of API testing and harnessing the capabilities of API testing tools, developers and organizations can build software that not only meets user expectations but also stands the test of time in an ever-evolving digital landscape.


Navigate the New Rules of ZATCA e-Invoicing Phase 2

The digital shift in Saudi Arabia’s tax landscape is picking up speed. At the center of it all is ZATCA e-Invoicing Phase 2—a mandatory evolution for VAT-registered businesses that brings more structure, security, and real-time integration to how invoices are issued and reported.

If you’ve already adjusted to Phase 1, you’re halfway there. But Phase 2 introduces new technical and operational changes that require deeper preparation. The good news? With the right understanding, this shift can actually help streamline your business and improve your reporting accuracy.

Let’s walk through everything you need to know—clearly, simply, and without the technical overwhelm.

What Is ZATCA e-Invoicing Phase 2?

To recap, ZATCA stands for the Zakat, Tax and Customs Authority in Saudi Arabia. It oversees tax compliance in the Kingdom and is driving the movement toward electronic invoicing through a phased approach.

The Two Phases at a Glance:

Phase 1 (Generation Phase): Started in December 2021, requiring businesses to issue digital (structured XML) invoices using compliant systems.

Phase 2 (Integration Phase): Began in January 2023, and requires companies to integrate their invoicing systems directly with ZATCA for invoice clearance or reporting.

This second phase is a big leap toward real-time transparency and anti-fraud efforts, aligning with Vision 2030’s goal of building a smart, digital economy.

Why Does Phase 2 Matter?

ZATCA isn’t just ticking boxes—it’s building a national infrastructure where tax-related transactions are instant, auditable, and harder to manipulate. For businesses, this means more accountability but also potential benefits.

Benefits include:

Reduced manual work and paperwork

More accurate tax reporting

Easier audits and compliance checks

Stronger business credibility

Less risk of invoice rejection or disputes

Who Must Comply (and When)?

ZATCA isn’t pushing everyone into Phase 2 overnight. Instead, it’s rolling out compliance in waves, based on annual revenue.

Here's how it’s working:

Wave 1: Companies earning over SAR 3 billion (Started Jan 1, 2023)

Wave 2: Businesses making over SAR 500 million (Started July 1, 2023)

Future Waves: Will gradually include businesses with lower revenue thresholds

If you haven’t been notified yet, don’t relax too much. ZATCA gives companies a 6-month window to prepare after they're selected—so it’s best to be ready early.

What Does Compliance Look Like?

So, what exactly do you need to change in Phase 2? It's more than just creating digital invoices—now your system must be capable of live interaction with ZATCA’s platform, FATOORA.

Main Requirements:

System Integration: Your invoicing software must connect to ZATCA’s API.

XML Format: Invoices must follow a specific structured format.

Digital Signatures: Mandatory to prove invoice authenticity.

UUID and Cryptographic Stamps: Each invoice must have a unique identifier and be digitally stamped.

QR Codes: Required especially for B2C invoices.
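Here is a concrete sketch of the QR requirement. As we understand ZATCA's specification, the QR payload is a Base64 string of TLV (tag-length-value) fields. Tags 1 to 5 carry the seller name, VAT registration number, invoice timestamp, invoice total including VAT, and VAT total. Phase 2 adds further cryptographic tags, which are not shown. Treat this tag layout as an assumption and confirm it against ZATCA's official documentation. The seller data below is made up.

```python
import base64


def tlv(tag, value):
    """Encode one field as Tag (1 byte) + Length (1 byte) + Value (UTF-8)."""
    data = value.encode("utf-8")
    if len(data) > 255:
        raise ValueError(f"tag {tag}: value too long for a one-byte length")
    return bytes([tag, len(data)]) + data


def build_qr_payload(seller_name, vat_number, timestamp, total_with_vat, vat_total):
    """Concatenate tags 1-5 (assumed field order) and Base64-encode the result."""
    fields = [seller_name, vat_number, timestamp, total_with_vat, vat_total]
    raw = b"".join(tlv(tag, value) for tag, value in enumerate(fields, start=1))
    return base64.b64encode(raw).decode("ascii")


def decode_qr_payload(payload):
    """Inverse of build_qr_payload; useful for checking what a QR code contains."""
    raw = base64.b64decode(payload)
    fields, i = {}, 0
    while i < len(raw):
        tag, length = raw[i], raw[i + 1]
        fields[tag] = raw[i + 2 : i + 2 + length].decode("utf-8")
        i += 2 + length
    return fields


# Hypothetical invoice data.
qr = build_qr_payload("Example Trading Co", "300000000000003",
                      "2024-03-01T09:30:00Z", "115.00", "15.00")
print(decode_qr_payload(qr))
```

Because lengths are counted in bytes, Arabic seller names (multi-byte in UTF-8) are handled correctly by this encoding.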

Invoice Clearance or Reporting:

B2B invoices (Standard): Must be cleared in real time before being sent to the buyer.

B2C invoices (Simplified): Must be reported within 24 hours after being issued.
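The clearance/reporting split can be expressed as a small routing rule. This sketch encodes only the two rules stated above: clear standard invoices before sending them, and report simplified invoices within 24 hours. The function names and invoice-type strings are hypothetical, and a real integration would call ZATCA's platform rather than just compute deadlines.

```python
from datetime import datetime, timedelta, timezone

REPORTING_WINDOW = timedelta(hours=24)  # simplified (B2C) invoices


def submission_plan(invoice_type, issued_at):
    """Return (action, deadline) for an invoice.

    A deadline of None means the invoice must be cleared before it is
    shared with the buyer.
    """
    if invoice_type == "standard":  # B2B
        return "clearance", None
    if invoice_type == "simplified":  # B2C
        return "reporting", issued_at + REPORTING_WINDOW
    raise ValueError(f"unknown invoice type: {invoice_type!r}")


def reported_late(issued_at, reported_at):
    """True if a simplified invoice was reported after the 24-hour window."""
    return reported_at > issued_at + REPORTING_WINDOW


issued = datetime(2024, 3, 1, 9, 30, tzinfo=timezone.utc)
print(submission_plan("standard", issued))  # ('clearance', None)
print(submission_plan("simplified", issued))
print(reported_late(issued, issued + timedelta(hours=25)))  # True
```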

How to Prepare for ZATCA e-Invoicing Phase 2

Don’t wait for a formal notification to get started. The earlier you prepare, the smoother the transition will be.

1. Assess Your Current Invoicing System

Ask yourself:

Can my system issue XML invoices?

Is it capable of integrating with external APIs?

Does it support digital stamping and signing?
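Here is a deliberately simplified sketch of what "XML invoices with a UUID and a hash" involve. It builds a tiny UBL-style invoice (ZATCA invoices are, as we understand it, based on UBL 2.1), assigns a random UUID, and computes a Base64 SHA-256 digest of the serialized XML. Only three elements are shown. ZATCA's actual hashing rules canonicalize the XML and exclude certain elements first, and the cryptographic stamp requires a certificate obtained through ZATCA. Nothing here is a compliant implementation. The function names are hypothetical.

```python
import base64
import hashlib
import uuid
import xml.etree.ElementTree as ET

# UBL 2.1 namespaces for the invoice root and basic components.
INV_NS = "urn:oasis:names:specification:ubl:schema:xsd:Invoice-2"
CBC_NS = "urn:oasis:names:specification:ubl:schema:xsd:CommonBasicComponents-2"
ET.register_namespace("", INV_NS)
ET.register_namespace("cbc", CBC_NS)


def build_invoice_xml(invoice_id, issue_date, invoice_uuid=None):
    """Build a tiny UBL-style invoice; real invoices need many more elements."""
    root = ET.Element(f"{{{INV_NS}}}Invoice")
    values = [
        ("ID", invoice_id),
        ("UUID", invoice_uuid or str(uuid.uuid4())),  # unique per invoice
        ("IssueDate", issue_date),
    ]
    for tag, text in values:
        ET.SubElement(root, f"{{{CBC_NS}}}{tag}").text = text
    return ET.tostring(root, encoding="utf-8")


def simple_invoice_hash(xml_bytes):
    """Base64 SHA-256 of the serialized bytes (no ZATCA canonicalization)."""
    return base64.b64encode(hashlib.sha256(xml_bytes).digest()).decode("ascii")


xml_bytes = build_invoice_xml("INV-0001", "2024-03-01")
print(xml_bytes.decode("utf-8"))
print(simple_invoice_hash(xml_bytes))
```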

If not, it’s time to either upgrade your system or migrate to a ZATCA-certified solution.

2. Choose the Right E-Invoicing Partner

Many local and international providers now offer ZATCA-compliant invoicing tools. Look for:

Local support and Arabic language interface

Experience with previous Phase 2 implementations

Ongoing updates to stay compliant with future changes

3. Test in ZATCA’s Sandbox

Before going live, ZATCA provides a sandbox environment for testing your setup. Use this opportunity to:

Validate invoice formats

Test real-time API responses

Simulate your daily invoicing process

4. Train Your Staff

Ensure everyone involved understands what’s changing. This includes:

Accountants and finance officers

Sales and billing teams

IT and software teams

Create a simple internal workflow that covers:

Who issues the invoice

How it gets cleared or reported

What happens if it’s rejected

Common Mistakes to Avoid

Transitioning to ZATCA e-Invoicing Phase 2 isn’t difficult—but there are a few traps businesses often fall into:

Waiting too long: 6 months isn’t much time if system changes are required.

Relying on outdated software: Non-compliant systems can cause major delays.

Ignoring sandbox testing: It’s your safety net—use it.

Overcomplicating the process: Keep workflows simple and efficient.

What Happens If You Don’t Comply?

ZATCA has teeth. If you’re selected for Phase 2 and fail to comply by the deadline, you may face:

Financial penalties

Suspension of invoicing ability

Legal consequences

Reputation damage with clients and partners

This is not a soft suggestion—it’s a mandatory requirement with real implications.

The Upside of Compliance

Yes, it’s mandatory. Yes, it takes some effort. But it’s not all downside. Many businesses that have adopted Phase 2 early are already seeing internal benefits:

Faster approvals and reduced invoice disputes

Cleaner, more accurate records

Improved VAT recovery processes

Enhanced data visibility for forecasting and planning

The more digital your systems, the better equipped you are for long-term growth in Saudi Arabia's evolving business landscape.

Final Words: Don’t Just Comply—Adapt and Thrive

ZATCA e-invoicing phase 2 isn’t just about avoiding penalties—it’s about future-proofing your business. The better your systems are today, the easier it will be to scale, compete, and thrive in a digital-first economy.

Start early. Get the right tools. Educate your team. And treat this not as a burden—but as a stepping stone toward smarter operations and greater compliance confidence.

Key Takeaways:

Phase 2 is live and being rolled out in waves—check if your business qualifies.

It requires full system integration with ZATCA via APIs.

Real-time clearance and structured XML formats are now essential.

Early preparation and testing are the best ways to avoid stress and penalties.

The right software partner can make all the difference.


Revolutionizing DeFi Development: How STON.fi API & SDK Simplify Token Swaps

The decentralized finance (DeFi) landscape is evolving rapidly, and developers are constantly seeking efficient ways to integrate token swap functionalities into their platforms. However, building seamless and optimized swap mechanisms from scratch can be complex, time-consuming, and risky.

This is where STON.fi API & SDK come into play. They provide developers with a ready-to-use, optimized solution that simplifies the process of enabling fast, secure, and cost-effective swaps.

In this article, we’ll take an in-depth look at why developers need efficient swap solutions, how the STON.fi API & SDK work, and how they can be integrated into various DeFi applications.

Why Developers Need a Robust Swap Integration

One of the core functions of any DeFi application is token swapping—the ability to exchange one cryptocurrency for another instantly and at the best possible rate.

But integrating swaps manually is not a straightforward task. Developers face several challenges:

Complex Smart Contract Logic – Handling liquidity pools, slippage, and price calculations requires expertise and rigorous testing.

Security Vulnerabilities – Improperly coded swaps can expose user funds to attacks.

Performance Issues – Slow execution or high gas fees can frustrate users and hurt adoption.
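To show why liquidity pools, slippage, and price calculations need care, here is the textbook constant-product (x * y = k) quote. It includes a trading fee and a slippage-protected minimum output. This is a generic AMM formula in integer math, not STON.fi's contract code. The reserves, the 0.3% fee, and the 0.5% slippage tolerance are illustrative assumptions.

```python
BPS = 10_000  # basis points per 100%


def quote_out(amount_in, reserve_in, reserve_out, fee_bps=30):
    """Output amount for a constant-product pool, after the fee.

    Integer math (floor division) mirrors how on-chain code avoids floats.
    """
    if amount_in <= 0 or reserve_in <= 0 or reserve_out <= 0:
        raise ValueError("amounts and reserves must be positive")
    amount_in_with_fee = amount_in * (BPS - fee_bps)
    numerator = amount_in_with_fee * reserve_out
    denominator = reserve_in * BPS + amount_in_with_fee
    return numerator // denominator


def min_out(expected_out, slippage_bps=50):
    """Smallest output the user accepts; the swap should revert below this."""
    return expected_out * (BPS - slippage_bps) // BPS


out = quote_out(10_000, reserve_in=1_000_000, reserve_out=2_000_000)
print(out, min_out(out))  # 19743 19644
```

At the pool's spot price, 10,000 tokens would buy 20,000. The gap down to 19,743 is the combined cost of the fee and price impact, which is exactly what hand-rolled swap code tends to get wrong.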

A poorly integrated swap feature can turn users away from a DeFi application, affecting engagement and liquidity. That’s why an efficient, battle-tested API and SDK can make a significant difference.

STON.fi API & SDK: What Makes Them a Game-Changer?

STON.fi has built an optimized API and SDK designed to handle the complexities of token swaps while giving developers an easy-to-use toolkit. Here’s why they stand out:

1. Seamless Swap Execution

Instead of manually routing transactions through liquidity pools, the STON.fi API automates the process, aiming to route each swap so that users get the best available rate.

2. Developer-Friendly SDK

For those who prefer working with structured development tools, the STON.fi SDK comes with pre-built functions that remove the need for extensive custom coding. Whether you’re integrating swaps into a mobile wallet, trading platform, or decentralized app, the SDK simplifies the process.

3. High-Speed Performance & Low Costs

STON.fi’s infrastructure is optimized for fast transaction execution, reducing delays and minimizing slippage. Users benefit from lower costs, while developers get a plug-and-play solution that ensures a smooth experience.

4. Secure & Scalable

Security is a major concern in DeFi, and STON.fi’s API is built with strong security measures, protecting transactions from vulnerabilities and ensuring reliability even under heavy traffic.
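At its core, routing for the best rate is a comparison of quotes across candidate paths. This simplified sketch rests on stated assumptions: every pool is a constant-product pool, quotes ignore network fees, and the pool reserves are made up. It illustrates the general idea behind automated routing, not how STON.fi's API actually computes routes.

```python
BPS = 10_000


def quote_out(amount_in, reserve_in, reserve_out, fee_bps):
    """Constant-product output after fee for a single pool."""
    amount_in_with_fee = amount_in * (BPS - fee_bps)
    return amount_in_with_fee * reserve_out // (reserve_in * BPS + amount_in_with_fee)


def best_route(amount_in, routes):
    """Pick the route with the largest final output.

    `routes` maps a route name to its hops; each hop is a pool given as
    (reserve_in, reserve_out, fee_bps) in the direction of the swap.
    """
    best_name, best_amount = None, 0
    for name, hops in routes.items():
        amount = amount_in
        for reserve_in, reserve_out, fee_bps in hops:
            amount = quote_out(amount, reserve_in, reserve_out, fee_bps)
        if amount > best_amount:
            best_name, best_amount = name, amount
    return best_name, best_amount


routes = {
    "shallow pool": [(10_000, 20_000, 30)],
    "deep pool": [(1_000_000, 2_000_000, 30)],
    "two hops via intermediate token": [(500_000, 500_000, 30), (400_000, 800_000, 30)],
}
print(best_route(1_000, routes))  # ('deep pool', 1992)
```

The shallow pool suffers heavy price impact, and the two-hop route pays the fee twice. That is why a router must quote every candidate rather than assume any one path is best.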

Practical Use Cases for Developers

1. Building Decentralized Exchanges (DEXs)

STON.fi API enables developers to integrate swap functionalities directly into their DEX platforms without having to build custom liquidity management solutions.

2. Enhancing Web3 Wallets

Crypto wallets can integrate STON.fi’s swap functionality, allowing users to exchange tokens without leaving the wallet interface.

3. Automating Trading Strategies

The API can be used to build automated trading bots that execute swaps based on real-time market conditions, improving efficiency for traders.

4. Scaling DeFi Platforms

For DeFi applications handling high transaction volumes, STON.fi API ensures fast and cost-effective execution, improving user retention.

Why Developers Should Consider STON.fi API & SDK

For developers aiming to create efficient, user-friendly, and scalable DeFi applications, STON.fi offers a robust solution that eliminates the complexities of manual integrations.

Saves Development Time – Reduces the need for custom swap coding.

Improves Security – Pre-tested smart contracts minimize vulnerabilities.

Enhances User Experience – Faster swaps create a smoother, more reliable platform.

Optimizes Performance – Low latency and cost-efficient execution ensure better outcomes.

Whether you’re working on a new DeFi project or improving an existing platform, STON.fi’s API & SDK provide a solid foundation to enhance functionality and scalability.

By leveraging STON.fi’s tools, developers can focus on building innovative features, rather than getting caught up in the technical challenges of token swaps.


This Week in Rust 572

Hello and welcome to another issue of This Week in Rust! Rust is a programming language empowering everyone to build reliable and efficient software. This is a weekly summary of its progress and community. Want something mentioned? Tag us at @ThisWeekInRust on X (formerly Twitter) or @ThisWeekinRust on mastodon.social, or send us a pull request. Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub and archives can be viewed at this-week-in-rust.org. If you find any errors in this week's issue, please submit a PR.

Want TWIR in your inbox? Subscribe here.

Updates from Rust Community

Official

October project goals update

Next Steps on the Rust Trademark Policy

This Development-cycle in Cargo: 1.83

Re-organising the compiler team and recognising our team members

This Month in Our Test Infra: October 2024

Call for proposals: Rust 2025h1 project goals

Foundation

Q3 2024 Recap from Rebecca Rumbul

Rust Foundation Member Announcement: CodeDay, OpenSource Science(OS-Sci), & PROMOTIC

Newsletters

The Embedded Rustacean Issue #31

Project/Tooling Updates

Announcing Intentrace, an alternative strace for everyone

Ractor Quickstart

Announcing Sycamore v0.9.0

CXX-Qt 0.7 Release

An 'Educational' Platformer for Kids to Learn Math and Reading—and Bevy for the Devs

[ZH][EN] Select HTML Components in Declarative Rust

Observations/Thoughts

Safety in an unsafe world

MinPin: yet another pin proposal

Reached the recursion limit... at build time?

Building Trustworthy Software: The Power of Testing in Rust

Async Rust is not safe with io_uring

Macros, Safety, and SOA

how big is your future?

A comparison of Rust’s borrow checker to the one in C#

Streaming Audio APIs in Rust pt. 3: Audio Decoding

[audio] InfinyOn with Deb Roy Chowdhury

Rust Walkthroughs

Difference Between iter() and into_iter() in Rust

Rust's Sneaky Deadlock With if let Blocks

Why I love Rust for tokenising and parsing

"German string" optimizations in Spellbook

Rust's Most Subtle Syntax

Parsing arguments in Rust with no dependencies

Simple way to make i18n support in Rust with examples and tests

How to shallow clone a Cow

Beginner Rust ESP32 development - Snake

[video] Rust Collections & Iterators Demystified 🪄

Research

Charon: An Analysis Framework for Rust

Crux, a Precise Verifier for Rust and Other Languages

Miscellaneous

Feds: Critical Software Must Drop C/C++ by 2026 or Face Risk

[audio] Let's talk about Rust with John Arundel

[audio] Exploring Rust for Embedded Systems with Philip Markgraf

Crate of the Week

This week's crate is wtransport, an implementation of the WebTransport specification, a successor to WebSockets with many additional features.

Thanks to Josh Triplett for the suggestion!

Please submit your suggestions and votes for next week!

Calls for Testing

An important step for RFC implementation is for people to experiment with the implementation and give feedback, especially before stabilization. The following RFCs would benefit from user testing before moving forward:

RFCs

No calls for testing were issued this week.

Rust

No calls for testing were issued this week.

Rustup

No calls for testing were issued this week.

If you are a feature implementer and would like your RFC to appear on the above list, add the new call-for-testing label to your RFC along with a comment providing testing instructions and/or guidance on which aspect(s) of the feature need testing.

Call for Participation; projects and speakers

CFP - Projects

Always wanted to contribute to open-source projects but did not know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available; visit the task page for more information.

If you are a Rust project owner and are looking for contributors, please submit tasks here or through a PR to TWiR or by reaching out on X (formerly Twitter) or Mastodon!

CFP - Events

Are you a new or experienced speaker looking for a place to share something cool? This section highlights events that are being planned and are accepting submissions to join their event as a speaker.

If you are an event organizer hoping to expand the reach of your event, please submit a link to the website through a PR to TWiR or by reaching out on X (formerly Twitter) or Mastodon!

Updates from the Rust Project

473 pull requests were merged in the last week

account for late-bound depth when capturing all opaque lifetimes

add --print host-tuple to print host target tuple

add f16 and f128 to invalid_nan_comparison

add lp64e RISC-V ABI

also treat impl definition parent as transparent regarding modules

cleanup attributes around unchecked shifts and unchecked negation in const

cleanup op lookup in HIR typeck

collect item bounds for RPITITs from trait where clauses just like associated types

do not enforce ~const constness effects in typeck if rustc_do_not_const_check

don't lint irrefutable_let_patterns on leading patterns if else if let-chains

double-check conditional constness in MIR

ensure that resume arg outlives region bound for coroutines

find the generic container rather than simply looking up for the assoc with const arg

fix compiler panic with a large number of threads

fix suggestion for diagnostic error E0027

fix validation when lowering ? trait bounds

implement suggestion for never type fallback lints

improve missing_abi lint

improve duplicate derive Copy/Clone diagnostics

llvm: match new LLVM 128-bit integer alignment on sparc

make codegen help output more consistent

make sure type_param_predicates resolves correctly for RPITIT

pass RUSTC_HOST_FLAGS at once without the for loop

port most of --print=target-cpus to Rust

register ~const preds for Deref adjustments in HIR typeck

reject generic self types

remap impl-trait lifetimes on HIR instead of AST lowering

remove "" case from RISC-V llvm_abiname match statement

remove do_not_const_check from Iterator methods

remove region from adjustments

remove support for -Zprofile (gcov-style coverage instrumentation)

replace manual time conversions with std ones, comptime time format parsing

suggest creating unary tuples when types don't match a trait

support clobber_abi and vector registers (clobber-only) in PowerPC inline assembly

try to point out when edition 2024 lifetime capture rules cause borrowck issues

typingMode: merge intercrate, reveal, and defining_opaque_types

miri: change futex_wait errno from Scalar to IoError

stabilize const_arguments_as_str

stabilize if_let_rescope

mark str::is_char_boundary and str::split_at* unstably const

remove const-support for align_offset and is_aligned

unstably add ptr::byte_sub_ptr

implement From<&mut {slice}> for Box/Rc/Arc<{slice}>

rc/Arc: don't leak the allocation if drop panics

add LowerExp and UpperExp implementations to NonZero

use Hacker's Delight impl in i64::midpoint instead of wide i128 impl

xous: sync: remove rustc_const_stable attribute on Condvar and Mutex new()

add const_panic macro to make it easier to fall back to non-formatting panic in const

cargo: downgrade version-exists error to warning on dry-run

cargo: add more metadata to rustc_fingerprint

cargo: add transactional semantics to rustfix

cargo: add unstable -Zroot-dir flag to configure the path from which rustc should be invoked

cargo: allow build scripts to report error messages through cargo::error

cargo: change config paths to only check CARGO_HOME for cargo-script

cargo: download targeted transitive deps of with artifact deps' target platform

cargo fix: track version in fingerprint dep-info files

cargo: remove requirement for --target when invoking Cargo with -Zbuild-std

rustdoc: Fix --show-coverage when JSON output format is used

rustdoc: Unify variant struct fields margins with struct fields

rustdoc: make doctest span tweak a 2024 edition change

rustdoc: skip stability inheritance for some item kinds

mdbook: improve theme support when JS is disabled

mdbook: load the sidebar toc from a shared JS file or iframe

clippy: infinite_loops: fix incorrect suggestions on async functions/closures

clippy: needless_continue: check labels consistency before warning

clippy: no_mangle attribute requires unsafe in Rust 2024

clippy: add new trivial_map_over_range lint

clippy: cleanup code suggestion for into_iter_without_iter

clippy: do not use gen as a variable name

clippy: don't lint unnamed consts and nested items within functions in missing_docs_in_private_items

clippy: extend large_include_file lint to also work on attributes

clippy: fix allow_attributes when expanded from some macros

clippy: improve display of clippy lints page when JS is disabled

clippy: new lint map_all_any_identity

clippy: new lint needless_as_bytes

clippy: new lint source_item_ordering

clippy: return iterator must not capture lifetimes in Rust 2024

clippy: use match ergonomics compatible with editions 2021 and 2024

rust-analyzer: allow interpreting consts and statics with interpret function command

rust-analyzer: avoid interior mutability in TyLoweringContext

rust-analyzer: do not render meta info when hovering usages

rust-analyzer: add assist to generate a type alias for a function

rust-analyzer: render extern blocks in file_structure

rust-analyzer: show static values on hover

rust-analyzer: auto-complete import for aliased function and module

rust-analyzer: fix the server not honoring diagnostic refresh support

rust-analyzer: only parse safe as contextual kw in extern blocks

rust-analyzer: parse patterns with leading pipe properly in all places

rust-analyzer: support new #[rustc_intrinsic] attribute and fallback bodies

Rust Compiler Performance Triage

A week dominated by one large improvement and one large regression, where luckily the improvement had a larger impact. The regression seems to have been caused by a newly introduced lint that might have performance issues. The improvement came from building rustc with protected visibility, which reduces the number of dynamic relocations needed and leads to some nice performance gains. Across a large swath of the perf suite, the compiler is on average 1% faster this week compared to last week.

Triage done by @rylev. Revision range: c8a8c820..27e38f8f

Summary:

(instructions:u)              mean    range             count
Regressions ❌ (primary)       0.8%   [0.1%, 2.0%]         80
Regressions ❌ (secondary)     1.9%   [0.2%, 3.4%]         45
Improvements ✅ (primary)     -1.9%   [-31.6%, -0.1%]     148
Improvements ✅ (secondary)   -5.1%   [-27.8%, -0.1%]     180
All ❌✅ (primary)             -1.0%   [-31.6%, 2.0%]      228

1 Regression, 1 Improvement, 5 Mixed; 3 of them in rollups

46 artifact comparisons made in total

Full report here
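A quick sanity check on how the summary rows relate. The All (primary) count is the primary regressions plus the primary improvements. A count-weighted average of the two primary means lands close to the reported -1.0%. The perf tooling may aggregate differently (for example, with a geometric mean over raw ratios), so treat this weighted average only as an approximation.

```python
# Primary rows from the summary: (count, mean change in %).
regressions = (80, 0.8)
improvements = (148, -1.9)

total = regressions[0] + improvements[0]
weighted_mean = (
    regressions[0] * regressions[1] + improvements[0] * improvements[1]
) / total

print(total)                    # 228
print(round(weighted_mean, 2))  # -0.95
```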

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

[RFC] Default field values

RFC: Give users control over feature unification

Final Comment Period

Every week, the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now.

RFCs

[disposition: merge] Add support for use Trait::func

Tracking Issues & PRs

Rust

[disposition: merge] Stabilize Arm64EC inline assembly

[disposition: merge] Stabilize s390x inline assembly

[disposition: merge] rustdoc-search: simplify rules for generics and type params

[disposition: merge] Fix ICE when passing DefId-creating args to legacy_const_generics.

[disposition: merge] Tracking Issue for const_option_ext

[disposition: merge] Tracking Issue for const_unicode_case_lookup

[disposition: merge] Reject raw lifetime followed by ', like regular lifetimes do

[disposition: merge] Enforce that raw lifetimes must be valid raw identifiers

[disposition: merge] Stabilize WebAssembly multivalue, reference-types, and tail-call target features

Cargo

No Cargo Tracking Issues or PRs entered Final Comment Period this week.

Language Team

No Language Team Proposals entered Final Comment Period this week.

Language Reference

No Language Reference RFCs entered Final Comment Period this week.

Unsafe Code Guidelines

No Unsafe Code Guideline Tracking Issues or PRs entered Final Comment Period this week.

New and Updated RFCs

[new] Implement The Update Framework for Project Signing

[new] [RFC] Static Function Argument Unpacking

[new] [RFC] Explicit ABI in extern

[new] Add homogeneous_try_blocks RFC

Upcoming Events

Rusty Events between 2024-11-06 - 2024-12-04 🦀

Virtual

2024-11-06 | Virtual (Indianapolis, IN, US) | Indy Rust

Indy.rs - with Social Distancing

2024-11-07 | Virtual (Berlin, DE) | OpenTechSchool Berlin + Rust Berlin

Rust Hack and Learn | Mirror: Rust Hack n Learn Meetup

2024-11-08 | Virtual (Jersey City, NJ, US) | Jersey City Classy and Curious Coders Club Cooperative

Rust Coding / Game Dev Fridays Open Mob Session!

2024-11-12 | Virtual (Dallas, TX, US) | Dallas Rust

Second Tuesday

2024-11-14 | Virtual (Charlottesville, VA, US) | Charlottesville Rust Meetup

Crafting Interpreters in Rust Collaboratively

2024-11-14 | Virtual and In-Person (Lehi, UT, US) | Utah Rust

Green Thumb: Building a Bluetooth-Enabled Plant Waterer with Rust and Microbit

2024-11-14 | Virtual and In-Person (Seattle, WA, US) | Seattle Rust User Group

November Meetup

2024-11-15 | Virtual (Jersey City, NJ, US) | Jersey City Classy and Curious Coders Club Cooperative

Rust Coding / Game Dev Fridays Open Mob Session!

2024-11-19 | Virtual (Los Angeles, CA, US) | DevTalk LA

Discussion - Topic: Rust for UI

2024-11-19 | Virtual (Washington, DC, US) | Rust DC

Mid-month Rustful

2024-11-20 | Virtual and In-Person (Vancouver, BC, CA) | Vancouver Rust

Embedded Rust Workshop

2024-11-21 | Virtual (Berlin, DE) | OpenTechSchool Berlin + Rust Berlin

Rust Hack and Learn | Mirror: Rust Hack n Learn Meetup

2024-11-21 | Virtual (Charlottesville, VA, US) | Charlottesville Rust Meetup

Trustworthy IoT with Rust--and passwords!

2024-11-21 | Virtual (Rotterdam, NL) | Bevy Game Development

Bevy Meetup #7

2024-11-25 | Bratislava, SK | Bratislava Rust Meetup Group

ONLINE Talk, sponsored by Sonalake - Bratislava Rust Meetup

2024-11-26 | Virtual (Dallas, TX, US) | Dallas Rust

Last Tuesday

2024-11-28 | Virtual (Charlottesville, VA, US) | Charlottesville Rust Meetup

Crafting Interpreters in Rust Collaboratively

2024-12-03 | Virtual (Buffalo, NY, US) | Buffalo Rust Meetup

Buffalo Rust User Group

Asia

2024-11-28 | Bangalore/Bengaluru, IN | Rust Bangalore

RustTechX Summit 2024 BOSCH

2024-11-30 | Tokyo, JP | Rust Tokyo

Rust.Tokyo 2024

Europe

2024-11-06 | Oxford, UK | Oxford Rust Meetup Group

Oxford Rust and C++ social

2024-11-06 | Paris, FR | Paris Rustaceans

Rust Meetup in Paris

2024-11-09 - 2024-11-11 | Florence, IT | Rust Lab

Rust Lab 2024: The International Conference on Rust in Florence

2024-11-12 | Zurich, CH | Rust Zurich

Encrypted/distributed filesystems, wasm-bindgen

2024-11-13 | Reading, UK | Reading Rust Workshop

Reading Rust Meetup

2024-11-14 | Stockholm, SE | Stockholm Rust

Rust Meetup @UXStream

2024-11-19 | Leipzig, DE | Rust - Modern Systems Programming in Leipzig

Backing up data with ZFS (and Rust)

2024-11-21 | Edinburgh, UK | Rust and Friends

Rust and Friends (pub)

2024-11-21 | Oslo, NO | Rust Oslo

Rust Hack'n'Learn at Kampen Bistro

2024-11-23 | Basel, CH | Rust Basel

Rust + HTMX - Workshop #3

2024-11-27 | Dortmund, DE | Rust Dortmund

Rust Dortmund

2024-11-28 | Aarhus, DK | Rust Aarhus

Talk Night at Lind Capital

2024-11-28 | Augsburg, DE | Rust Meetup Augsburg

Augsburg Rust Meetup #10

2024-11-28 | Berlin, DE | OpenTechSchool Berlin + Rust Berlin

Rust and Tell - Title

North America

2024-11-07 | Chicago, IL, US | Chicago Rust Meetup

Chicago Rust Meetup

2024-11-07 | Montréal, QC, CA | Rust Montréal

November Monthly Social

2024-11-07 | St. Louis, MO, US | STL Rust

Game development with Rust and the Bevy engine

2024-11-12 | Ann Arbor, MI, US | Detroit Rust

Rust Community Meetup - Ann Arbor

2024-11-14 | Mountain View, CA, US | Hacker Dojo

Rust Meetup at Hacker Dojo

2024-11-15 | Mexico City, DF, MX | Rust MX

Multithreading and Async in Rust, Part 2: Smart Pointers and Closures

2024-11-15 | Somerville, MA, US | Boston Rust Meetup

Ball Square Rust Lunch, Nov 15

2024-11-19 | San Francisco, CA, US | San Francisco Rust Study Group

Rust Hacking in Person

2024-11-23 | Boston, MA, US | Boston Rust Meetup

Boston Common Rust Lunch, Nov 23

2024-11-25 | Ferndale, MI, US | Detroit Rust

Rust Community Meetup - Ferndale

2024-11-27 | Austin, TX, US | Rust ATX

Rust Lunch - Fareground

Oceania

2024-11-12 | Christchurch, NZ | Christchurch Rust Meetup Group

Christchurch Rust Meetup

If you are running a Rust event please add it to the calendar to get it mentioned here. Please remember to add a link to the event too. Email the Rust Community Team for access.

Jobs

Please see the latest Who's Hiring thread on r/rust

Quote of the Week

Any sufficiently complicated C project contains an adhoc, informally specified, bug ridden, slow implementation of half of cargo.

– Folkert de Vries at RustNL 2024 (youtube recording)

Thanks to Collin Richards for the suggestion!

Please submit quotes and vote for next week!

This Week in Rust is edited by: nellshamrell, llogiq, cdmistman, ericseppanen, extrawurst, andrewpollack, U007D, kolharsam, joelmarcey, mariannegoldin, bennyvasquez.

Email list hosting is sponsored by The Rust Foundation

Discuss on r/rust


Exploring the STON.fi API & SDK Demo App: A Game-Changer for Developers

If you’re a developer in the blockchain space, especially within the TON ecosystem, you know how overwhelming it can be to find reliable resources to help bring your ideas to life. That’s where STON.fi comes in. They’ve just launched something incredibly useful: the STON.fi API & SDK Demo App.

This isn’t just another technical tool. It’s designed to give you a hands-on example of how to integrate STON.fi’s powerful features, like the token swap function, directly into your projects. But let me tell you, it’s more than just a simple demo. It’s a resource that will save you time, reduce your workload, and unlock your project’s true potential.

Why Should You Care About the STON.fi Demo App

I know what you might be thinking: “Why would I need a demo app? I can figure things out on my own.” And you probably can. But here’s the thing—every great project starts with a strong foundation. Imagine trying to build a house without the proper tools or blueprint. You’d spend way more time than necessary trying to get things right.

The STON.fi API & SDK Demo App acts as that blueprint. It shows you exactly how to implement essential features like swaps, providing a clean, functional example you can build on. And in a fast-moving space like blockchain, having a ready-made starting point can save you days, if not weeks, of effort.

What’s Inside the Demo App

The demo is designed to showcase one of STON.fi’s key features—the swap function. But it’s more than just that.

Simple, Functional Code: The demo app shows you exactly how to integrate the swap feature with clear, easy-to-understand code snippets. You don’t have to guess or second-guess your work.

Real-Time Examples: You’ll be working with a live, fully functional application that gives you a feel for how things will work in your own project.

Built-In Flexibility: The app is designed to be adaptable. Whether you’re building a DeFi platform, a DEX, or something else, you can take the demo and tweak it to fit your project’s specific needs.

How Does This Make Your Life Easier

As a developer, you probably understand the value of working smarter, not harder. The demo app lets you skip the guesswork and dive right into the important stuff—building the functionality you need.

Let’s take a real-life example. Say you’re working on a decentralized exchange (DEX). One of the core functions you need is the ability to swap tokens. Without the right tools or resources, integrating token swaps could become a long, tedious process. With the STON.fi demo app, however, you get to see a working example of how it’s done, and you can integrate it into your own platform with minimal effort.

It’s like having a personal assistant who does the heavy lifting for you, so you can focus on the fun, creative parts of development.

The Benefits You Won’t Want to Miss

If you’re still wondering what makes the STON.fi API & SDK Demo App worth your time, let’s break it down into tangible benefits:

1. Faster Development: No need to reinvent the wheel. With the demo app, you’re already starting from a working base, which speeds up your development process.

2. Clear Guidance: The demo app gives you a clear, proven example of how to integrate the key features, meaning you won’t waste time searching for solutions online.

3. Confidence in Your Work: Knowing that the demo app is functional and has been tested in real-world scenarios boosts your confidence when implementing it into your own project.

Who Can Benefit from the Demo App

The beauty of this tool is that it’s not just for seasoned blockchain developers. Whether you’re just starting your journey in the crypto space or you’re already an expert, this demo app offers value to everyone.

New Developers: If you’re new to blockchain development, the demo app is a fantastic way to see how everything fits together. It’s like having a mentor walk you through the process.

Experienced Developers: Even if you’re experienced, the demo app can save you time by offering a proven solution for integrating swaps and other features into your platform.

Blockchain Entrepreneurs: If you’re launching a new DeFi project or any kind of blockchain-based application, this demo will give you the tools you need to hit the ground running.

Try the STON.fi SDK and API

My Takeaway

I remember when I was getting started in blockchain development. Every new concept felt overwhelming, and it often took me hours, sometimes days, to figure out how to implement even basic features. The STON.fi API & SDK Demo App eliminates that frustration.

Having the ability to see a fully functional example that works out-of-the-box is priceless. It saves time, eliminates mistakes, and gives you the confidence to move forward with your project.

And the best part? It’s all available to you for free. There’s no reason not to check it out and see how it can make your development process smoother, faster, and more enjoyable.

So, if you’re working on a blockchain project and want to integrate STON.fi’s powerful features, don’t reinvent the wheel. Check out the demo app and start building on a solid foundation today. You’ll be glad you did.


What Are the Costs Associated with Fintech Software Development?

The fintech industry is experiencing exponential growth, driven by advancements in technology and increasing demand for innovative financial solutions. As organizations look to capitalize on this trend, understanding the costs associated with fintech software development becomes crucial. Developing robust and secure applications, especially for fintech payment solutions, requires significant investment in technology, expertise, and compliance measures. This article breaks down the key cost factors involved in fintech software development and how businesses can navigate these expenses effectively.

1. Development Team and Expertise

The development team is one of the most significant cost drivers in fintech software development. Hiring skilled professionals, such as software engineers, UI/UX designers, quality assurance specialists, and project managers, requires a substantial budget. The costs can vary depending on the team’s location, expertise, and experience level. For example:

In-house teams: Employing full-time staff provides better control but comes with recurring costs such as salaries, benefits, and training.

Outsourcing: Hiring external agencies or freelancers can reduce costs, especially if the development team is located in regions with lower labor costs.

2. Technology Stack

The choice of technology stack plays a significant role in the overall development cost. Building secure and scalable fintech payment solutions requires advanced tools, frameworks, and programming languages. Costs include:

Licenses and subscriptions: Some technologies require paid licenses or annual subscriptions.

Infrastructure: Cloud services, databases, and servers are essential for hosting and managing fintech applications.

Integration tools: APIs for payment processing, identity verification, and other functionalities often come with usage fees.

3. Security and Compliance

The fintech industry is heavily regulated, requiring adherence to strict security standards and legal compliance. Implementing these measures adds to the development cost but is essential to avoid potential fines and reputational damage. Key considerations include:

Data encryption: Strong encryption algorithms such as AES-256 to protect sensitive data.

Compliance and certifications: Obtaining certifications such as PCI DSS and ISO/IEC 27001, and complying with regulations such as GDPR, can be costly, but these are often required to operate in many regions.

Security audits: Regular penetration testing and vulnerability assessments are necessary to ensure application security.

4. Customization and Features

The complexity of the application directly impacts the cost. Basic fintech solutions may have limited functionality, while advanced applications require more extensive development efforts. Common features that add to the cost include:

User authentication: Multi-factor authentication (MFA) and biometric verification.

Real-time processing: Handling high volumes of transactions with minimal latency.

Analytics and reporting: Providing users with detailed financial insights and dashboards.

Blockchain integration: Leveraging blockchain for enhanced security and transparency.
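Multi-factor authentication is often implemented with time-based one-time passwords (TOTP, RFC 6238), the scheme most authenticator apps use. The block below is a minimal, standard-library Python sketch of that algorithm, not a production implementation. The function names `hotp`, `totp`, and `verify_totp` are illustrative, and a real system would also store secrets securely, rate-limit attempts, and reject reused codes.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) for a given counter."""
    msg = struct.pack(">Q", counter)  # 8-byte big-endian counter
    digest = hmac.new(secret, msg, hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based OTP (RFC 6238): HOTP with the counter derived from the clock."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, window: int = 1) -> bool:
    """Accept codes from the current step and `window` steps either side (clock drift)."""
    if not submitted.isdigit():
        return False
    now = time.time() if at is None else at
    for drift in range(-window, window + 1):
        moment = now + drift * step
        if moment < 0:
            continue  # no negative counters
        candidate = totp(secret, at=moment, step=step, digits=len(submitted))
        if hmac.compare_digest(candidate, submitted):  # constant-time comparison
            return True
    return False
```

The secret `b"12345678901234567890"` is the test key from RFC 4226 and RFC 6238, so `totp(secret, at=59, digits=8)` should reproduce the RFC's published value `94287082`.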

5. User Experience (UX) and Design

A seamless and intuitive user interface is critical for customer retention in the fintech industry. Investing in high-quality UI/UX design ensures that users can navigate the platform effortlessly. Costs in this category include:

Prototyping and wireframing.

Usability testing.

Responsive design for compatibility across devices.

6. Maintenance and Updates

Fintech applications require ongoing maintenance to remain secure and functional. Post-launch costs include:

Bug fixes and updates: Addressing issues and releasing new features.

Server costs: Maintaining and scaling infrastructure to accommodate user growth.

Monitoring tools: Real-time monitoring systems to track performance and security.

7. Marketing and Customer Acquisition

Once the fintech solution is developed, promoting it to the target audience incurs additional costs. Marketing strategies such as digital advertising, influencer partnerships, and content marketing require significant investment. Moreover, onboarding users and providing customer support also contribute to the total cost.

8. Geographic Factors

The cost of fintech software development varies significantly based on geographic factors. Development in North America and Western Europe tends to be more expensive compared to regions like Eastern Europe, South Asia, or Latin America. Businesses must weigh the trade-offs between cost savings and access to high-quality talent.

9. Partnering with Technology Providers

Collaborating with established technology providers can reduce development costs while ensuring top-notch quality. For instance, Xettle Technologies offers comprehensive fintech solutions, including secure APIs and compliance-ready tools, enabling businesses to streamline development processes and minimize risks. Partnering with such providers can save time and resources while enhancing the application's reliability.

Cost Estimates

While costs vary depending on the project's complexity, here are rough estimates:

Basic applications: $50,000 to $100,000.

Moderately complex solutions: $100,000 to $250,000.

Highly advanced platforms: $250,000 and above.

These figures include development, security measures, and initial marketing efforts but may rise with added features or broader scope.

Conclusion

Understanding the costs associated with fintech software development is vital for effective budgeting and project planning. From assembling a skilled team to ensuring compliance and security, each component contributes to the total investment. By leveraging advanced tools and partnering with experienced providers like Xettle Technologies, businesses can optimize costs while delivering high-quality fintech payment solutions. The investment, though significant, lays the foundation for long-term success in the competitive fintech industry.


New Cloud Translation AI Improvements Support 189 Languages

189 languages are now covered by the latest Cloud Translation AI improvements.

Your next major client may not understand you. 40% of shoppers worldwide will never consider buying from a website that isn’t in their native language, and since 51.6% of internet users speak a language other than English, an English-only site could be missing half of its potential customers.

Until now, businesses handling translation have faced a difficult choice between three options:

Human translators: excellent, but costly and slow.

Basic machine translation: fast, but misses nuance.

DIY fixes: unreliable and risky.

The problem is that you need the strengths of all three at once, and conventional translation approaches can’t keep up. Connecting with people depends on using the right context and tone, not simply translating words.

That is why Google Cloud developed Translation AI in Vertex AI. Here are the most recent developments and how they can benefit your company.

Translation AI: Unmatched translation quality, your way

There are two options available in Google Cloud’s Translation AI:

Translation API Basic: An essential toolkit for translation. Google Cloud’s Neural Machine Translation (NMT) model translates text and detects languages instantly. Translation API Basic is ideal for chat interactions, short-form content, and situations where speed and consistency are essential.

Translation API Advanced: Use custom glossaries to keep terminology consistent, translate full documents, and run batch translations. For long-form content, you can use the Gemini-powered translation model; for shorter content, Adaptive Translation captures the distinct tone and voice of your business. You can even customize translations by using a glossary, fine-tuning the translation models, or adapting translation predictions in real time.

What’s new in Translation AI

Increased accuracy and reach

Support for 189 languages, now including Cantonese, Fijian, and Balinese, lets you reach audiences around the world while keeping translation fast, which makes it a good fit for call centers and user-generated content.

Smarter adaptive translation

You can adapt the tone and style of your translations with as few as five examples, or supply as many as 30,000 for maximum accuracy.

Choosing a model according to your use case

Depending on how sophisticated your translation use case is, Cloud Translation Advanced offers several methods to choose from. For instance, you can select Adaptive Translation for real-time customization or use the NMT model for general-purpose text.
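Model selection happens per request. The block below is a minimal sketch, assuming the Cloud Translation v3 REST method `translateText`. It only builds the URL and JSON body; it does not send anything or handle authentication. The helper name `build_translate_request`, the project ID, and the glossary ID are hypothetical. Glossaries must be created beforehand, and their supported locations are listed in Google's documentation.

```python
from typing import Optional


def build_translate_request(
    project_id: str,
    texts: list,
    target_language: str,
    source_language: Optional[str] = None,
    location: str = "global",
    model: str = "nmt",
    glossary_id: Optional[str] = None,
) -> tuple:
    """Return (url, body) for a Cloud Translation v3 translateText call."""
    parent = f"projects/{project_id}/locations/{location}"
    body = {
        "contents": list(texts),
        "targetLanguageCode": target_language,
        "mimeType": "text/plain",
        # "nmt" selects the general-purpose Neural Machine Translation model.
        "model": f"{parent}/models/general/{model}",
    }
    if source_language is not None:
        # When omitted, the API tries to detect the source language itself.
        body["sourceLanguageCode"] = source_language
    if glossary_id is not None:
        # Hypothetical glossary ID; it must already exist under this parent.
        body["glossaryConfig"] = {"glossary": f"{parent}/glossaries/{glossary_id}"}
    url = f"https://translation.googleapis.com/v3/{parent}:translateText"
    return url, body
```

To send the request, POST the body as JSON to the returned URL with an OAuth 2.0 bearer token. Keeping request construction separate from transport makes it easy to swap models or add a glossary without changing the networking code.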

Quality without compromise

Reports and leaderboards describe a model’s general performance, but they don’t show how well it meets your particular requirements. The gen AI evaluation service lets you define your own evaluation criteria and get a clear picture of how well AI models and applications fit your use case. Popular translation-quality metrics such as Google’s MetricX and COMET, both of which correlate strongly with human evaluation, are available in the Vertex AI gen AI evaluation service. Compare models and prototype solutions to choose the translation strategy that best suits your needs.

Google Cloud had two main goals in developing Translation AI: to transform how you translate and how you approach translation. Whereas most providers offer either strong translation or simple implementation, Translation AI aims to deliver both in four key ways.

Vertex AI for quick prototyping

Test translations in 189 languages right away. Compare the NMT model with the latest translation-optimized, Gemini-powered model to find your best fit. Get instant quality metrics to validate your choices and see how your custom adaptations perform, without writing a single line of code.

Production-ready APIs for your existing workflows

For high-volume, real-time translation, integrate the Translation API (NMT) directly into your apps. When tone and context are crucial, switch to the Gemini-powered Adaptive Translation model through the same Translation API. Both models scale automatically to meet demand and fit into your existing workflows.

Customization without coding

Teach custom translation models your industry’s terminology and phrasing. Submit domain-specific data, and Translation AI builds a custom model that understands your language. Because it requires little machine learning expertise, it suits specialized content in technical, legal, or medical domains.

Full control with Vertex AI

Vertex AI, an all-in-one platform, lets you use Translation AI to own your entire translation workflow: choose the models you want, customize how they behave, and monitor their real-world performance. It integrates with your existing CI/CD processes to deliver enterprise-grade translation at scale.

Real impact: The Uber story

Uber uses the Google Cloud Translation AI product suite in support of its goal of enabling people to go anywhere, get anything, and make their own way.

Read more on Govindhtech.com


Eko API Integration: A Comprehensive Solution for Money Transfer, AePS, BBPS, and Money Collection

The financial services industry is undergoing a rapid transformation, driven by the need for seamless digital solutions that cater to a diverse customer base. Eko, a prominent fintech platform in India, offers a suite of APIs designed to simplify and enhance the integration of various financial services, including Money Transfer, Aadhaar-enabled Payment Systems (AePS), Bharat Bill Payment System (BBPS), and Money Collection. This article delves into the process and benefits of integrating Eko’s APIs to offer these services, transforming how businesses interact with and serve their customers.

Understanding Eko's API Offerings

Eko provides a powerful set of APIs that enable businesses to integrate essential financial services into their digital platforms. These services include:

Money Transfer (DMT)

Aadhaar-enabled Payment System (AePS)

Bharat Bill Payment System (BBPS)

Money Collection

Each of these services caters to different needs, but together they form a comprehensive financial toolkit that can significantly enhance a business's offerings.

1. Money Transfer API Integration

Eko’s Money Transfer API allows businesses to offer domestic money transfer services directly from their platforms. This API is crucial for facilitating quick, secure, and reliable fund transfers across different banks and accounts.

Key Features:

Multiple Transfer Modes: Support for IMPS (Immediate Payment Service), NEFT (National Electronic Funds Transfer), and RTGS (Real Time Gross Settlement), ensuring flexibility for various transaction needs.

Instant Transactions: Enables real-time money transfers, which is crucial for businesses that need to provide immediate service.

Security: Strong encryption and authentication protocols to ensure that every transaction is secure and compliant with regulatory standards.
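The three transfer modes differ mainly in speed and in the amounts they accept. The sketch below shows one way a platform might decide which modes to offer for a given amount. It is an illustration under stated assumptions, not Eko's logic. The ₹2 lakh (200,000) RTGS minimum is an RBI rule, while the IMPS cap is passed in because per-transaction limits vary by bank and provider. The function name `eligible_modes` is hypothetical.

```python
RTGS_MINIMUM_INR = 200_000  # RBI minimum for an RTGS transfer (Rs 2 lakh)


def eligible_modes(amount_inr: int, imps_limit_inr: int) -> list:
    """Return the transfer modes an amount qualifies for, instant options first.

    imps_limit_inr is supplied by the caller because per-transaction IMPS caps
    depend on the bank and the money transfer provider (an assumption to check
    against the limits documented for your account).
    """
    if amount_inr <= 0:
        raise ValueError("amount must be positive")
    modes = []
    if amount_inr <= imps_limit_inr:
        modes.append("IMPS")  # immediate transfer, available 24x7
    if amount_inr >= RTGS_MINIMUM_INR:
        modes.append("RTGS")  # real-time settlement for high-value transfers
    modes.append("NEFT")      # settled in batches; no RBI minimum amount
    return modes
```

For example, with a ₹25,000 IMPS cap, a ₹5,000 transfer can go by IMPS or NEFT, while a ₹3,00,000 transfer can go by RTGS or NEFT.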

Integration Steps:

API Key Acquisition: Start by signing up on the Eko platform to obtain API keys for authentication.

Development Environment Setup: Use the language of your choice (e.g., Python, Java, Node.js) and integrate the API according to the provided documentation.

Testing and Deployment: Utilize Eko's sandbox environment for testing before moving to the production environment.
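Moving from sandbox to production is simpler when the environment is a configuration value rather than something hard-coded. The sketch below is a minimal illustration with several labeled assumptions: placeholder URLs, an illustrative `X-Api-Key` header, and hypothetical `EKO_API_KEY_SANDBOX` / `EKO_API_KEY_PRODUCTION` environment variables. Eko's real endpoints and authentication scheme are defined in its documentation and are not reproduced here.

```python
import os
from dataclasses import dataclass

# Hypothetical placeholders: replace with the sandbox and production base
# URLs listed in Eko's developer documentation.
BASE_URLS = {
    "sandbox": "https://sandbox.example.invalid",
    "production": "https://api.example.invalid",
}


@dataclass(frozen=True)
class ApiConfig:
    environment: str
    base_url: str
    api_key: str


def load_config(environment: str = "sandbox") -> ApiConfig:
    """Pick the base URL for an environment and read its key from env vars."""
    if environment not in BASE_URLS:
        raise ValueError(f"unknown environment: {environment!r}")
    # Hypothetical variable names; keeping keys out of source code lets the
    # same build run against the sandbox first and production later.
    key_var = f"EKO_API_KEY_{environment.upper()}"
    api_key = os.environ.get(key_var)
    if not api_key:
        raise RuntimeError(f"set {key_var} before calling the {environment} API")
    return ApiConfig(environment, BASE_URLS[environment], api_key)


def build_headers(config: ApiConfig) -> dict:
    # "X-Api-Key" is a placeholder header name; use the scheme Eko's docs specify.
    return {"Content-Type": "application/json", "X-Api-Key": config.api_key}
```

Switching to production then becomes a matter of calling `load_config("production")` once the production key is set, with no code changes.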

2. Aadhaar-enabled Payment System (AePS) API Integration

The AePS API enables businesses to provide banking services using Aadhaar authentication. This is particularly valuable in rural and semi-urban areas where banking infrastructure is limited.

Key Features:

Biometric Authentication: Allows users to perform transactions using their Aadhaar number and biometric data.

Core Banking Services: Supports cash withdrawals, balance inquiries, and mini statements, making it a versatile tool for financial inclusion.

Secure Transactions: Ensures that all transactions are securely processed with end-to-end encryption and compliance with UIDAI guidelines.

Integration Steps:

Biometric Device Integration: Ensure compatibility with biometric devices required for Aadhaar authentication.

API Setup: Follow Eko's documentation to integrate the AePS functionalities into your platform.

User Interface Design: Work closely with UI/UX designers to create an intuitive interface for AePS transactions.

3. Bharat Bill Payment System (BBPS) API Integration

The BBPS API allows businesses to offer bill payment services, supporting a wide range of utility bills, such as electricity, water, gas, and telecom.

Key Features:

Wide Coverage: Supports bill payments for a vast network of billers across India, providing users with a one-stop solution.

Real-time Payment Confirmation: Provides instant confirmation of bill payments, improving user trust and satisfaction.

Secure Processing: Adheres to strict security protocols, ensuring that user data and payment information are protected.

Integration Steps:

API Key and Biller Setup: Obtain the necessary API keys and configure the billers that will be available through your platform.

Interface Development: Develop a user-friendly interface that allows customers to easily select and pay their bills.

Testing: Use Eko’s sandbox environment to ensure all bill payment functionalities work as expected before going live.

4. Money Collection API Integration

The Money Collection API is designed for businesses that need to collect payments from customers efficiently, whether it’s for e-commerce, loans, or subscriptions.

Key Features:

Versatile Collection Methods: Supports various payment methods including UPI, bank transfers, and debit/credit cards.

Real-time Tracking: Allows businesses to track payment statuses in real-time, ensuring transparency and efficiency.

Automated Reconciliation: Facilitates automatic reconciliation of payments, reducing manual errors and operational overhead.
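The post does not describe how Eko performs reconciliation. The sketch below only illustrates the general idea: matching incoming payments against expected invoices by reference and amount, and flagging anything that doesn't line up. The `Invoice` and `Payment` types and the reference format are hypothetical. Amounts use `Decimal` to avoid floating-point rounding errors on money.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Invoice:
    reference: str   # hypothetical reference the customer is asked to quote
    amount: Decimal


@dataclass(frozen=True)
class Payment:
    reference: str
    amount: Decimal


def reconcile(invoices: list, payments: list) -> dict:
    """Match payments to invoices by reference and exact amount."""
    expected = {inv.reference: inv.amount for inv in invoices}
    report = {
        "matched": [],
        "amount_mismatch": [],
        "duplicate": [],
        "unknown_reference": [],
    }
    settled = set()
    for payment in payments:
        if payment.reference not in expected:
            report["unknown_reference"].append(payment.reference)
        elif payment.reference in settled:
            report["duplicate"].append(payment.reference)
        elif payment.amount == expected[payment.reference]:
            report["matched"].append(payment.reference)
            settled.add(payment.reference)
        else:
            report["amount_mismatch"].append(payment.reference)
    # Invoices with no exactly matching payment still need follow-up.
    report["unpaid"] = [ref for ref in expected if ref not in settled]
    return report
```

Only the exceptions (mismatches, duplicates, unknown references, unpaid invoices) then need a human to review them, which is where the reduction in manual effort comes from.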

Integration Steps:

API Configuration: Set up the Money Collection API using the detailed documentation provided by Eko.

Payment Gateway Integration: Integrate with preferred payment gateways to offer a variety of payment methods.

Testing and Monitoring: Conduct thorough testing and set up monitoring tools to track the performance of the money collection service.

The Role of an Eko API Integration Developer

Integrating these APIs requires a developer who not only understands the technical aspects of API integration but also the regulatory and security requirements specific to financial services.

Skills Required:

Proficiency in API Integration: Expertise in working with RESTful APIs, including handling JSON data, HTTP requests, and authentication mechanisms.

Security Knowledge: Strong understanding of encryption methods, secure transmission protocols, and compliance with local financial regulations.

UI/UX Collaboration: Ability to work with designers to create user-friendly interfaces that enhance the customer experience.

Problem-Solving Skills: Proficiency in debugging, testing, and ensuring that the integration meets the business’s needs without compromising on security or performance.

Benefits of Integrating Eko’s APIs

For businesses, integrating Eko’s APIs offers a multitude of benefits:

Enhanced Service Portfolio: By offering services like money transfer, AePS, BBPS, and money collection, businesses can attract a broader customer base and improve customer retention.

Operational Efficiency: Automated processes for payments and collections reduce manual intervention, thereby lowering operational costs and errors.

Increased Financial Inclusion: AePS and BBPS services help businesses reach underserved populations, contributing to financial inclusion goals.

Security and Compliance: Eko’s APIs are designed with robust security measures, ensuring compliance with Indian financial regulations, which is critical for maintaining trust and avoiding legal issues.

Conclusion

Eko’s API suite for Money Transfer, AePS, BBPS, and Money Collection is a powerful tool for businesses looking to expand their financial service offerings. By integrating these APIs, developers can create robust, secure, and user-friendly applications that meet the diverse needs of today’s customers. As digital financial services continue to grow, Eko’s APIs will play a vital role in shaping the future of fintech in India and beyond.

Contact Details:

Mobile: +91 9711090237

E-mail: [email protected]


Quality Assurance (QA) Analyst - Tosca

Model-Based Test Automation (MBTA):

Tosca uses a model-based approach to automate test cases, which allows for greater reusability and easier maintenance.

Scriptless Testing:

Tosca offers a scriptless testing environment, enabling testers with minimal programming knowledge to create complex test cases using a drag-and-drop interface.

Risk-Based Testing (RBT):

Tosca helps prioritize testing efforts by identifying and focusing on high-risk areas of the application, improving test coverage and efficiency.

Continuous Integration and DevOps:

Integration with CI/CD tools like Jenkins, Bamboo, and Azure DevOps enables automated testing within the software development pipeline.

Cross-Technology Testing:

Tosca supports testing across various technologies, including web, mobile, APIs, and desktop applications.

Service Virtualization:

Tosca allows the simulation of external services, enabling testing in isolated environments without dependency on external systems.
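Risk-based testing ranks what to test first by combining how often a feature is used with how much damage its failure would cause. The sketch below uses a common frequency-times-damage score on 1-to-5 scales. It illustrates the idea only and is not Tosca's own weighting scheme. The `Requirement` type and the example backlog are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirement:
    name: str
    frequency: int  # how often the feature is used: 1 (rarely) to 5 (constantly)
    damage: int     # cost of a failure: 1 (cosmetic) to 5 (critical)

    def __post_init__(self) -> None:
        for field_name in ("frequency", "damage"):
            value = getattr(self, field_name)
            if not 1 <= value <= 5:
                raise ValueError(f"{field_name} must be between 1 and 5, got {value}")

    @property
    def risk(self) -> int:
        # Simple risk score: usage frequency times impact of failure.
        return self.frequency * self.damage


def prioritize(requirements: list) -> list:
    """Order requirements so the riskiest are tested first."""
    return sorted(requirements, key=lambda r: r.risk, reverse=True)


if __name__ == "__main__":
    backlog = [
        Requirement("Update profile picture", frequency=2, damage=1),
        Requirement("Log in", frequency=5, damage=5),
        Requirement("Checkout", frequency=4, damage=5),
    ]
    for req in prioritize(backlog):
        print(f"{req.risk:>2}  {req.name}")
```

With limited time, a team would test "Log in" (score 25) and "Checkout" (20) thoroughly before spending effort on "Update profile picture" (2).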

Tosca Testing Process

Requirements Management:

Define and manage test requirements within Tosca, linking them to test cases to ensure comprehensive coverage.

Test Case Design:

Create test cases using Tosca’s model-based approach, focusing on functional flows and data variations.

Test Data Management:

Manage and manipulate test data within Tosca to support different testing scenarios and ensure data-driven testing.

Test Execution:

Execute test cases automatically or manually, tracking progress and results in real time.

Defect Management:

Identify, log, and track defects through Tosca’s integration with various bug-tracking tools like JIRA and Bugzilla.

Reporting and Analytics:

Generate detailed reports and analytics on test coverage, execution results, and defect trends to inform decision-making.
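Tosca itself is scriptless, but the principle behind its test data management can be shown in plain code: keep test data separate from test logic so one test covers many scenarios. In this Python `unittest` sketch, `apply_discount` is a hypothetical function under test and `DISCOUNT_CASES` plays the role of a data sheet.

```python
import unittest


def apply_discount(price_cents: int, percent: int) -> int:
    """Hypothetical function under test: price after an integer percent discount."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents * (100 - percent) // 100


# Test data kept apart from test logic: each row is (price, percent, expected).
DISCOUNT_CASES = [
    (10_000, 0, 10_000),
    (10_000, 25, 7_500),
    (999, 10, 899),  # integer division rounds down
    (5_000, 100, 0),
]


class DiscountTests(unittest.TestCase):
    def test_discount_table(self):
        for price, percent, expected in DISCOUNT_CASES:
            # subTest reports each failing row separately instead of stopping.
            with self.subTest(price=price, percent=percent):
                self.assertEqual(apply_discount(price, percent), expected)

    def test_rejects_out_of_range_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(1_000, 120)
```

Save it as `test_discount.py` and run `python -m unittest test_discount`. Adding a scenario means adding a row to the data, not writing a new test.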

Benefits of Using Tosca for QA Analysts

Efficiency: Automation and model-based testing significantly reduce the time and effort required for test case creation and maintenance.

Accuracy: Reduces human error by automating repetitive tasks and ensuring consistent execution of test cases.

Scalability: Easily scales to accommodate large and complex testing environments, supporting continuous testing in agile and DevOps processes.

Integration: Seamlessly integrates with various tools and platforms, enhancing collaboration across development, testing, and operations teams.

Skills Required for QA Analysts Using Tosca

Understanding of Testing Principles: Fundamental knowledge of manual and automated testing principles and methodologies.

Technical Proficiency: Familiarity with Tosca and other testing tools, along with a basic understanding of programming or scripting languages.

Analytical Skills: Ability to analyze requirements, design test cases, and identify potential issues effectively.

Attention to Detail: Keen eye for detail to ensure comprehensive test coverage and accurate defect identification.

Communication Skills: Strong verbal and written communication skills to document findings and collaborate with team members.

2 notes

·

View notes

Text

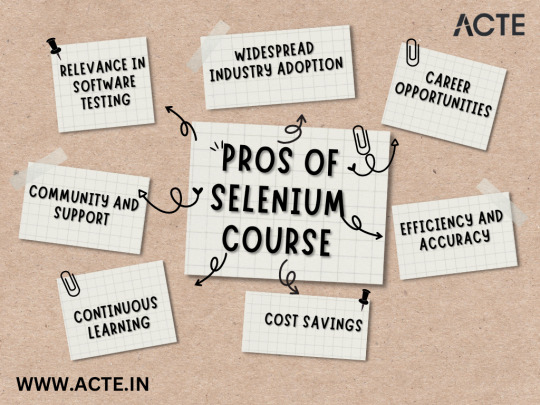

From Beginner to Pro: Dominate Automated Testing with Our Selenium Course

Welcome to our comprehensive Selenium course designed to help individuals from all backgrounds, whether novice or experienced, enhance their automated testing skills and become proficient in Selenium. In this article, we will delve into the world of Selenium, an open-source automated testing framework that has revolutionized software testing. With our course, we aim to empower aspiring professionals with the knowledge and techniques necessary to excel in the field of automated testing.

Why Choose Selenium?

Selenium offers a wide array of features and capabilities that make it the go-to choice for automated testing in the IT industry.

It allows testers to write test scripts in multiple programming languages, including Java, Python, C#, and more, ensuring flexibility and compatibility with various project requirements.

Selenium’s compatibility with major web browsers such as Chrome, Firefox, Safari, and Edge (as well as the now-retired Internet Explorer) makes it a versatile choice for testing web applications.

Selenium WebDriver provides a simple yet powerful API for interacting with web elements, which makes automating browser tasks easier than ever before.

Selenium’s Key Components:

Selenium IDE:

Selenium Integrated Development Environment (IDE) is a browser extension, available for Chrome and Firefox, used primarily for recording and playing back test cases. Its user-friendly interface lets even non-programmers create basic tests effortlessly.

Although Selenium IDE is a valuable tool for beginners, our course primarily focuses on Selenium WebDriver due to its advanced capabilities and wider scope.

Selenium WebDriver:

Selenium WebDriver is the most critical component of the Selenium framework. It provides a programming interface to interact with web elements and perform actions programmatically.

WebDriver’s functionality extends beyond basic browser automation; it also enables testers to handle alerts, pop-ups, frames, and various other web application interactions.

Our Selenium course places significant emphasis on WebDriver, equipping learners with the skills to automate complex test scenarios efficiently.

Selenium Grid:

Selenium Grid empowers testers by allowing them to execute tests on multiple machines and browsers simultaneously, making it an essential component for testing scalability and cross-browser compatibility.

Through our Selenium course, you’ll gain a deep understanding of Selenium Grid and learn how to harness its capabilities effectively.

The Benefits of Our Selenium Course

Comprehensive Curriculum: Our course is designed to cover everything from the fundamentals of automated testing to advanced techniques in Selenium, ensuring learners receive a well-rounded education.

Hands-on Experience: Practical exercises and real-world examples are incorporated to provide learners with the opportunity to apply their knowledge in a realistic setting.

Expert Instruction: You’ll be guided by experienced instructors who have a profound understanding of Selenium and its application in the industry, ensuring you receive the best possible education.

Flexibility: Our course offers flexible learning options, allowing you to study at your own pace and convenience, ensuring a stress-free learning experience.

Industry Recognition: Completion of our Selenium course will provide you with a valuable certification recognized by employers worldwide, enhancing your career prospects within the IT industry.

Who Should Enroll?

Novice Testers: If you’re new to the world of automated testing and aspire to become proficient in Selenium, our course is designed specifically for you. We’ll lay a strong foundation and gradually guide you towards becoming a pro in Selenium automation.

Experienced Testers: Even if you already have experience in automated testing, our course will help you enhance your skills and keep up with the latest trends and best practices in Selenium.

IT Professionals: Individuals working in the IT industry, such as developers or quality assurance engineers, who want to broaden their skillset and optimize their testing processes, will greatly benefit from our Selenium course.

In conclusion, our Selenium course is a one-stop solution for individuals seeking to dominate automated testing and excel in their careers. With a comprehensive curriculum, hands-on experience, expert instruction, and industry recognition, you’ll be well-prepared to tackle any automated testing challenges that come your way. Make the smart choice and enroll in our Selenium course at ACTE Technologies today to unlock your full potential in the world of software testing.

Enhancing Communication: The Power of a WordPress Text Message Plugin

In today's fast-paced digital age, effective communication is key to the success of any website or business. With the increasing reliance on mobile devices, text messaging has become one of the most preferred and efficient ways to connect with audiences. Recognizing this trend, many website owners are integrating text messaging capabilities into their WordPress sites through the use of dedicated plugins. In this blog post, we'll explore the benefits and features of WordPress Text Message Plugins and how they can elevate your communication strategy.

The Rise of Text Messaging

Text messaging has evolved from a casual means of communication to a powerful tool for businesses to engage with their audience. The immediacy and directness of text messages make them an ideal channel for reaching out to users, be it for marketing promotions, customer support, or important announcements. Integrating text messaging functionality into your WordPress site can provide a seamless and convenient way to connect with your audience.

Streamlining Communication with WordPress Text Message Plugins

WordPress Text Message Plugins offer a range of features designed to streamline communication efforts. These plugins typically connect your site to an SMS gateway and let you send messages directly from your WordPress dashboard, so you don't have to switch to a separate messaging platform. This simplifies the communication process and helps ensure that your messages go out promptly.

Key Features of WordPress Text Message Plugins:

1. Two-Way Communication: Enable users to respond to your messages, creating an interactive and engaging communication channel.

2. Personalization: Tailor your messages to individual users, adding a personal touch to your communication strategy.

3. Scheduled Messaging: Plan and schedule messages in advance, ensuring timely delivery without manual intervention.

4. Opt-In and Opt-Out: Comply with regulations and respect user preferences by implementing opt-in and opt-out features for SMS subscriptions.

5. Analytics: Gain insights into the performance of your text messaging campaigns through detailed analytics, allowing you to refine your strategy based on user engagement.
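To make the opt-in/opt-out and scheduling features more concrete, here is a minimal Python sketch of how a plugin might keep them consistent with each other. It is not the API of WP SMS, Twilio, or any other product: the `SmsOutbox` and `ScheduledSms` names are hypothetical, and the STOP/START keyword sets are an assumption modeled on common carrier conventions. Consent is checked when a message comes due rather than when it is scheduled, so a STOP reply that arrives after a campaign is queued still suppresses delivery.

```python
import heapq
from dataclasses import dataclass, field
from datetime import datetime

# Hypothetical keyword sets modeled on common carrier conventions;
# the list a given plugin or jurisdiction requires may differ.
STOP_WORDS = {"STOP", "UNSUBSCRIBE", "CANCEL", "END", "QUIT"}
START_WORDS = {"START", "YES", "UNSTOP"}


@dataclass(order=True)
class ScheduledSms:
    send_at: datetime                 # the only field used for ordering
    to: str = field(compare=False)
    body: str = field(compare=False)


class SmsOutbox:
    """Tracks SMS consent and a time-ordered queue of outgoing messages."""

    def __init__(self) -> None:
        self._subscribers: set[str] = set()
        self._queue: list[ScheduledSms] = []  # min-heap keyed on send_at

    def opt_in(self, number: str) -> None:
        self._subscribers.add(number)

    def opt_out(self, number: str) -> None:
        self._subscribers.discard(number)

    def handle_reply(self, number: str, text: str) -> None:
        """Two-way messaging: treat keyword replies as consent changes."""
        keyword = text.strip().upper()
        if keyword in STOP_WORDS:
            self.opt_out(number)
        elif keyword in START_WORDS:
            self.opt_in(number)

    def schedule(self, to: str, body: str, send_at: datetime) -> None:
        heapq.heappush(self._queue, ScheduledSms(send_at, to, body))

    def due(self, now: datetime) -> list[ScheduledSms]:
        """Pop every message whose time has come; drop ones to opted-out numbers."""
        ready = []
        while self._queue and self._queue[0].send_at <= now:
            msg = heapq.heappop(self._queue)
            if msg.to in self._subscribers:  # consent checked at send time
                ready.append(msg)
        return ready
```

A real plugin would hand the messages returned by `due()` to whichever SMS gateway it is configured with; this sketch only decides who should receive what, and when.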

Choosing the Right WordPress Text Message Plugin

With the growing demand for text messaging solutions, the WordPress plugin repository offers a variety of options. When selecting a plugin for your site, consider factors such as compatibility, ease of use, and the specific features that align with your communication goals. Popular choices include WP SMS and plugins built on SMS gateways such as Twilio and Nexmo (now Vonage).

Getting Started with WordPress Text Messaging

Integrating a text messaging plugin into your WordPress site is a straightforward process. Follow these general steps:

1. Select a Plugin: Choose a WordPress Text Message Plugin that suits your requirements and install it through your WordPress dashboard.

2. Configuration: Configure the plugin settings, including API credentials, sender details, and any other necessary parameters.

3. Create Opt-In Forms: If applicable, create opt-in forms to allow users to subscribe to your text messaging service.

4. Compose Messages: Craft compelling and concise messages for your audience, keeping in mind the value and relevance of your content.

5. Test and Launch: Before going live, conduct tests to ensure the proper functioning of the plugin and the delivery of messages.
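Keeping messages concise matters partly because SMS gateways typically split and bill messages by segment. As a rough pre-launch check, the Python sketch below estimates how many segments a message will use. Its character model is a simplifying assumption, not the full GSM 03.38 table: printable ASCII (except the backtick) plus CR/LF counts as GSM-7, the characters ^ { } \ [ ~ ] | € cost two units, and everything else, including accented letters that GSM-7 actually supports, forces UCS-2. The 160/153 (GSM-7) and 70/67 (UCS-2) limits are the standard single-part and multipart figures. The sketch ignores the rule that a multi-unit character should not straddle a segment boundary, so treat its result as an estimate.

```python
import math

# Simplified GSM-7 model (an assumption, not the full GSM 03.38 table):
# "extension" characters are sent as ESC + character, costing two units.
GSM7_EXTENDED = set("^{}\\[~]|€")
# One-unit characters: printable ASCII minus the backtick and the
# extension characters, plus line feed and carriage return.
GSM7_BASIC = (
    {chr(code) for code in range(32, 127)} - {"`"} - GSM7_EXTENDED
) | {"\n", "\r"}


def sms_segments(text: str) -> tuple[str, int]:
    """Estimate (encoding, segment count) for an SMS body."""
    if all(ch in GSM7_BASIC or ch in GSM7_EXTENDED for ch in text):
        units = sum(2 if ch in GSM7_EXTENDED else 1 for ch in text)
        encoding, single_limit, part_limit = "GSM-7", 160, 153
    else:
        # UTF-16 code units; emoji outside the BMP take two units each.
        units = len(text.encode("utf-16-le")) // 2
        encoding, single_limit, part_limit = "UCS-2", 70, 67
    if units <= single_limit:
        return encoding, 1
    # Multipart messages lose room to the concatenation header.
    return encoding, math.ceil(units / part_limit)


print(sms_segments("Your order has shipped!"))  # ('GSM-7', 1)
```

Adding a single emoji to an otherwise plain message switches the whole message to UCS-2, which can more than double its segment count, so this kind of check is worth running during the "Test and Launch" step.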

Conclusion

Incorporating a WordPress Text Message Plugin into your website can revolutionize the way you communicate with your audience. From personalized marketing messages to instant customer support, the possibilities are vast. As technology continues to advance, staying ahead of the curve by embracing innovative communication tools is crucial for maintaining a strong online presence. Upgrade your communication strategy today and unlock the full potential of text messaging through the power of WordPress plugins.

#Ultimate SMS#Wordpress SMS#SMS For Wordpress#SMS Wordpress Plugin#SMS Woocommerce#SMS Marketing Wordpress#Wp SMS#WooCommerce SMS Marketing#Text Message Wordpress Plugin

Politifact says: Mostly True!

First: Roller Coaster Tycoon's graphics were done by an artist named Simon Foster, and QA testing was done by both Sawyer's friends and family and dedicated teams at Hasbro.

This shouldn't be a surprise: even in today's indie space, with tools and talent in abundance, it's very rare for a game to actually be entirely a single person's work. Undertale, Dwarf Fortress, Braid, etc. -- all of these famous 'solo games' had other people working on things like the artwork, or the sound design, or the story, or the design, or something. Just because the same person did all the design and all the programming doesn't mean they're the only person who deserves credit for their work.

Second: Roller Coaster Tycoon was not written entirely in IA-32 assembler. Remember, this is 1999. This is a Windows application. The days of 16-bit Real Mode DOS and BIOS boot games are long dead; if you want to draw a window on the screen, you have to talk to Windows. Heck, if you want to load a file you're gonna have to talk to Windows in some way -- direct drive access isn't something that the OS will just expose to any program! This is done either via the dynamic link libraries in System32 or via wrappers around those same libraries.

Likewise, if you want to do portable, performant 2D graphics and sound in 1999, your options are:

DirectX

Slower, objectively worse software rendering via (again) the Windows API

Fucking off

and that's it. This isn't 1982! If you want to draw something to the screen, or play a sound effect, or read input from the keyboard, or do any kind of I/O at all, you have to talk to a library. Roller Coaster Tycoon was not an exception.

Furthermore, while the bulk of the game (something like 99%, according to Chris Sawyer's webpage) was written in IA-32 assembler, the parts of the game that talked to these libraries were written in C. Sawyer may have been eccentric, but he wasn't insane. These were pretty damn complicated APIs! Lots of big data structures being passed around, lots of state to keep track of, etc. It was technically possible to interface with them without C, but it would've been a complete pain in the ass for literally no benefit.

It was still a hell of an accomplishment, don't get me wrong.

Premium URL Shortener Nulled Script 7.4.4

Download Premium URL Shortener Nulled Script for Free

If you're searching for a high-performance URL management tool that empowers you to create, share, and manage short links effortlessly, then look no further. The Premium URL Shortener Nulled Script is your go-to solution for building a robust, scalable, and monetizable URL shortener platform without spending a dime. Packed with advanced features and a user-friendly interface, this nulled version provides everything you need to launch your own branded link shortener service.

What is Premium URL Shortener Nulled Script?

The Premium URL Shortener is a powerful PHP-based script designed to convert long URLs into sleek, trackable short links. With integrated analytics, custom aliases, and link management features, this script is widely regarded as one of the best URL shorteners on the market. The nulled version allows users to access all premium functionalities absolutely free, making it ideal for startups, marketers, and developers who want to avoid hefty licensing fees.

Technical Specifications

Script Type: PHP-based standalone application

Database: MySQL/MariaDB

Server Requirements: PHP 7.4 or higher, MySQL 5.6+

Responsive: Mobile-friendly design

Multi-language Support: Yes

API Access: Yes (Developer-friendly API)

Top Features and Benefits

Why choose the Premium URL Shortener Nulled Script? Here’s a breakdown of the most outstanding features that make this tool a must-have for your online project:

Advanced Link Analytics: Monitor clicks, geolocation, browser usage, and referrer data in real-time.

Custom Short URLs: Create branded links with your own domain and custom aliases.

Ad Monetization: Integrate interstitial and banner ads to generate revenue from every link click.

Admin Dashboard: A sleek and intuitive control panel to manage users, links, and campaigns.

Social Media Sharing: Easily share your shortened URLs across multiple platforms.

Security Options: Password protection, CAPTCHA, and link expiration ensure maximum link safety.

Ideal Use Cases

Whether you're an affiliate marketer, content creator, or digital agency, the Premium URL Shortener can boost your productivity and professionalism:

Affiliate Marketing: Clean up lengthy affiliate links and track performance with ease.

Social Media Campaigns: Share eye-catching short URLs that fit perfectly in posts and bios.

Team Collaboration: Allow multiple team members to manage and analyze links with multi-user access.

Marketing Agencies: Offer branded short URL services to clients with your own white-label platform.

Quick Installation Guide

Setting up the Premium URL Shortener Nulled Script is simple and fast. Just follow these steps:

1. Download the nulled script package from our website.

2. Upload the files to your web server using FTP or a file manager.

3. Create a new MySQL database and import the included SQL file.

4. Edit the configuration file with your database credentials.

5. Run the installation script by visiting your domain in a web browser.

6. Log into the admin panel and start shortening links instantly!

Frequently Asked Questions (FAQs)

Is the nulled version safe to use?
Yes, our team ensures that all nulled scripts are thoroughly scanned and tested before sharing. You can download and use the Premium URL Shortener Nulled Script with confidence.

Can I customize the appearance?
Absolutely! The script includes editable templates and CSS files that allow you to fully personalize the look and feel of your URL shortener platform.

Will I get support with the nulled version?
While official support is not included, a vast number of online communities and forums can help you troubleshoot and expand the script’s functionality.

Why Download from Us?

Our platform provides safe, free access to premium tools like the Premium URL Shortener Nulled Script. No hidden fees, no subscriptions: just high-quality resources for digital entrepreneurs and developers.
Explore our full collection of nulled WordPress themes to find more valuable tools for your projects.

For those interested in plugins, we also recommend checking out nulled plugins to expand your site's capabilities.

Conclusion

The Premium URL Shortener is more than just a URL shortener: it's a feature-rich platform that allows you to brand, monetize, and manage your links like a pro. With our free download, you can start building a professional-grade URL shortening service today without any cost. Don’t miss this opportunity to gain full access to premium features and streamline your digital marketing strategy.
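The post does not say how this script actually generates its short links. A common technique for URL shorteners in general is to store each long URL under an auto-incrementing numeric ID and encode that ID in base 62 (0-9, a-z, A-Z) to get a compact slug. The Python sketch below illustrates that generic approach only: `encode_id`, `decode_slug`, and `Shortener` are hypothetical names, the in-memory list stands in for a MySQL table with an auto-increment key, and none of it reflects the Premium URL Shortener's own code.

```python
import string
from typing import Optional

# Base-62 alphabet: digits, then lowercase, then uppercase letters.
ALPHABET = string.digits + string.ascii_letters
BASE = len(ALPHABET)  # 62
INDEX = {ch: i for i, ch in enumerate(ALPHABET)}


def encode_id(n: int) -> str:
    """Turn a non-negative integer ID into a short base-62 slug."""
    if n < 0:
        raise ValueError("IDs must be non-negative")
    if n == 0:
        return ALPHABET[0]
    chars = []
    while n:
        n, rem = divmod(n, BASE)
        chars.append(ALPHABET[rem])
    return "".join(reversed(chars))


def decode_slug(slug: str) -> int:
    """Inverse of encode_id; raises KeyError for characters outside the alphabet."""
    n = 0
    for ch in slug:
        n = n * BASE + INDEX[ch]
    return n


class Shortener:
    """Hypothetical in-memory stand-in for a links table with an auto-increment ID."""

    def __init__(self) -> None:
        self._urls: list[str] = []

    def shorten(self, long_url: str) -> str:
        self._urls.append(long_url)
        return encode_id(len(self._urls))  # IDs start at 1, like AUTO_INCREMENT

    def resolve(self, slug: str) -> Optional[str]:
        try:
            index = decode_slug(slug) - 1
        except KeyError:
            return None  # slug contains a character that is not base 62
        return self._urls[index] if 0 <= index < len(self._urls) else None
```

One trade-off of sequential IDs is that slugs are guessable: anyone can walk through 1, 2, 3, and so on to enumerate every stored link, which is one reason services often offer random slugs or custom aliases instead.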

Full-Stack Face-Off: Breaking Down MERN and MEAN Stacks

Introduction

In today’s ever-changing tech world, choosing the right full‑stack approach can feel overwhelming. Two popular choices stand out: the MERN and MEAN stacks. Both bring together powerful JavaScript frameworks and tools to help developers build dynamic, end‑to‑end web applications. Whether you represent a MERN Stack development company or a MEAN Stack development firm, understanding the strengths and trade‑offs of each is essential. In this guide, we’ll walk through the basics, explore individual components, and help you decide which path aligns best with your project goals.

Understanding the MERN and MEAN Stacks