#C++ Data Structures & Algorithms


Text

Normally I just post about movies, but I'm a software engineer by trade, so I've got opinions on programming too.

Apparently it's a month of code or something because my dash is filled with people trying to learn Python. And that's great, because Python is a good language with a lot of support and job opportunities. I've just got some scattered thoughts that I thought I'd write down.

Python abstracts a number of useful concepts. That makes it easier to use, but it also means that if you don't understand the concepts, things might go wrong in ways you didn't expect. Memory management and pointer logic are so damn annoying, but you need to understand them. I learned these concepts by learning C++; hopefully there's an easier way these days.
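To make the memory point concrete, here is a minimal C++ sketch; the function names are hypothetical and only for illustration. In Python, `b = a` makes both names refer to the same list, whereas in C++ copying a `std::vector` duplicates its data and a pointer aliases it, so you have to choose the behavior explicitly.

```cpp
#include <vector>

// Copy semantics: `copy` gets its own storage, so changing it leaves the
// caller's vector untouched.
std::vector<int> modify_copy(const std::vector<int>& original) {
    std::vector<int> copy = original;  // duplicates every element
    copy.push_back(99);
    return copy;
}

// Pointer semantics: `alias` refers to the caller's vector, much like two
// Python names bound to the same list object.
void modify_through_pointer(std::vector<int>* alias) {
    alias->push_back(99);  // the caller sees this change
}
```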

Data structures and algorithms are the bread and butter of any real work (and they're pretty much all that comes up in interviews), and they're language agnostic. If you don't know how to traverse a linked list, how to use recursion, what a hash map is for, etc., then you don't really know how to program. You'll pretty much never need to implement any of them from scratch, but you should know when to use them; think of them like building blocks in a Lego set.
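A small sketch of the three building blocks named above, in C++: traversing a linked list, the same sum written recursively, and a hash map (`std::unordered_map`) used for counting. The `Node` struct and the function names are illustrative assumptions, not from any particular library.

```cpp
#include <string>
#include <unordered_map>
#include <vector>

// Hypothetical singly linked list node.
struct Node {
    int value;
    Node* next;  // nullptr marks the end of the list
};

// Traversal: follow next pointers until the end of the list.
int sum_iterative(const Node* head) {
    int total = 0;
    for (const Node* cur = head; cur != nullptr; cur = cur->next) {
        total += cur->value;
    }
    return total;
}

// Recursion: the sum of a list is its first value plus the sum of the rest.
int sum_recursive(const Node* head) {
    if (head == nullptr) {
        return 0;  // base case: empty list
    }
    return head->value + sum_recursive(head->next);
}

// Hash map: count occurrences with average O(1) lookups and inserts.
std::unordered_map<std::string, int> count_words(const std::vector<std::string>& words) {
    std::unordered_map<std::string, int> counts;
    for (const std::string& w : words) {
        ++counts[w];  // operator[] inserts a zero count for unseen keys
    }
    return counts;
}
```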

Learning a new language is a hell of a lot easier after your first one. Going from Python to Java is mostly just syntax differences. Even "harder" languages like C++ mostly just mean more boilerplate while doing the same things. Learning a new spoken language is hard, but learning a new programming language is generally closer to learning some new slang or a new accent. Lists in Python are called vectors in C++, just like how french fries are called chips in London. If you know the underlying concepts that are common to most programming languages, then it's not a huge jump to a new one, at least if you're only doing the most common stuff. (You will get tripped up by some of the minor differences, though. Popping an item off of a stack in Python returns the element, but in C++ it returns nothing; you have to read it with top() first. I've definitely had a program fail due to that issue.)
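The stack gotcha is easy to reproduce with the C++ standard library, where `std::stack::pop()` returns `void` and the element is read with `top()`. A minimal sketch, with `pop_value` as a hypothetical helper name:

```cpp
#include <stack>

// std::stack::pop() returns void, so the element must be read with top()
// before it is removed. (Python's list.pop() returns the removed element.)
// Precondition: the stack is not empty; top() on an empty stack is undefined.
int pop_value(std::stack<int>& s) {
    int value = s.top();  // read first
    s.pop();              // then remove; this returns nothing
    return value;
}
```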

The above is not true for new paradigms. Python, C++ and Java are all imperative languages. Move to something functional like Haskell and you need a completely different way of thinking. JavaScript (not in any way related to Java) has callbacks, and I still don't quite have a good handle on them. Hardware languages like VHDL are all synchronous; every line of code in a program runs at the same time! That's a new way of thinking.

Python is stereotyped as a scripting language good only for glue programming or prototypes. It's excellent at those, but I've worked at a number of (successful) startups that were all Python on the backend. Python is robust enough and fast enough to be used for basically anything at this point, except maybe embedded programming. If you do need the fastest speed possible, you can still drop in some raw C++ for the places you need it (one place I worked at had one very important piece of code in C++ because even milliseconds mattered there, but everything else was Python). The speed differences between Python and C++ matter so much less these days that you only need C++ at the scale of the really big companies. It makes sense for Google to use C++ (and they use their own version of it to boot), but any company with fewer than 100 engineers is probably better off with Python in almost all cases. Honestly though, the best programming language is the one you like, and the one that you're good at.
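As a rough sketch of what dropping in some raw C++ can look like, assuming a hypothetical hot-path function named `sum_of_squares`: marking it `extern "C"` keeps the symbol name unmangled, so it can be built as a shared library and loaded from Python with the standard `ctypes` module.

```cpp
#include <cstddef>

// Hypothetical performance-critical function. extern "C" disables C++ name
// mangling so Python's ctypes can look the symbol up by its plain name.
extern "C" double sum_of_squares(const double* values, std::size_t n) {
    double total = 0.0;
    for (std::size_t i = 0; i < n; ++i) {
        total += values[i] * values[i];
    }
    return total;
}
```

For example, compiling with `g++ -O2 -shared -fPIC fast.cpp -o libfast.so` (file names assumed) produces a library that Python can open with `ctypes.CDLL("./libfast.so")`, after setting the function's `argtypes` and `restype`. Binding libraries such as pybind11 are a common alternative once the interface grows beyond a few plain functions.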

Design patterns mostly don't matter. They really were only created to make up for language failures of C++; Peter Norvig later pointed out that 16 of the 23 patterns in the original design patterns book are invisible or much simpler in dynamic languages like Lisp. C++ was just really popular while also being kinda bad, so they were necessary. I don't think I've ever once thought about consciously using a design pattern since even before I graduated. Object-oriented design is mostly in the same place. You'll use classes because they're a useful way to structure things, but multiple inheritance and polymorphism and all the other terms you've learned really don't come into play too often, and when they do you use the simplest possible form of them. Code should be simple and easy to understand, so make it as simple as possible. As far as inheritance goes, the most I'm willing to do is to have a class with abstract functions (i.e. classes where some functions are empty but are expected to be filled out by the child class), but even then there are usually good alternatives to this.
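For reference, the "class with abstract functions" pattern looks like this in C++: the "empty" functions are pure virtual (`= 0`), and each child class must override them. The `Shape`, `Rectangle`, and `Triangle` names are made up for this sketch.

```cpp
#include <memory>
#include <string>
#include <vector>

// Abstract base class: area() and name() have no body here and must be
// filled in by each child class.
class Shape {
public:
    virtual ~Shape() = default;
    virtual double area() const = 0;
    virtual std::string name() const = 0;
};

class Rectangle : public Shape {
public:
    Rectangle(double w, double h) : width_(w), height_(h) {}
    double area() const override { return width_ * height_; }
    std::string name() const override { return "rectangle"; }
private:
    double width_;
    double height_;
};

class Triangle : public Shape {
public:
    Triangle(double base, double height) : base_(base), height_(height) {}
    double area() const override { return 0.5 * base_ * height_; }
    std::string name() const override { return "triangle"; }
private:
    double base_;
    double height_;
};

// Callers depend only on the Shape interface, not on concrete classes.
double total_area(const std::vector<std::unique_ptr<Shape>>& shapes) {
    double total = 0.0;
    for (const auto& s : shapes) {
        total += s->area();
    }
    return total;
}
```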

Related to the above: simple is best. Simple is elegant. If you solve a problem with 4000 lines of code using a bunch of esoteric data structures and language quirks, but someone else did it in 10, then I'll pick the 10. On the other hand, a one-liner function that requires a lot of unpacking, like a Python function with a bunch of nested lambdas, might be easier to read if you split it up a bit more. Time to read and understand the code is the most important metric, more important than runtime or memory use. You can optimize for the other two later if you have to, but simplicity has to prevail on the first pass; otherwise it's going to be hard for other people to understand. In fact, it'll be hard for you to understand too when you come back to it 3 months later without any context.

Note that I've cut a few things for simplicity. For example: VHDL doesn't quite require every line to run at the same time, but it's still a major paradigm of the language that isn't present in most other languages.

Ok that was a lot to read. I guess I have more to say about programming than I thought. But the core ideas are: Python is pretty good, other languages don't need to be scary, learn your data structures and algorithms and above all keep your code simple and clean.

#programming#python#software engineering#java#java programming#c++#javascript#haskell#VHDL#hardware programming#embedded programming#month of code#design patterns#common lisp#google#data structures#algorithms#hash table#recursion#array#lists#vectors#vector#list#arrays#object oriented programming#functional programming#iterative programming#callbacks

20 notes


Text

// August 18th, 2024

Yesterday I broke into tears because I think I’m going to fail Data Structures and Algorithms this semester. It’s just too much. Not the topics I have to study, not even that I don’t actually enjoy practicing it, oh no. It’s that I don’t think I’m going to be able to send all of my assignments on time, and they affect the final grade a lot. The materials for all three subjects only get released on Wednesdays at noon, and an almost impossible number of assignments is expected to be delivered before the following Tuesday at 8 p.m.

Why can’t I, for instance, do the work, send it as soon as I have it ready, and have them just take into account that I actually followed the lessons and studied hard for the two exams before the final? It’s just… I don’t know. It seems ridiculous to me. I would be doing my best even without that stupid pressure of doing the assignments on time, like any other person who is serious about their studies. I know I probably sound like a stupid kid, but people going to this university at night usually have a job already; otherwise, why wouldn’t we be taking the lessons in the mornings/afternoons? Don’t they think about those cases? Incredible.

And I know one can probably think, “well, why don’t you drop that subject, follow up on the others, and retake this one further in the future?”

And the answer to that is, a) I’m feeling too old to keep postponing my graduation (35 is becoming my least worst shot) and b) I have more plans, for other important aspects of my life as well. And… ugh. The pressure. It’s too much.

I spoke to my sweet boyfriend about all these worries I have and he’s happy with both me telling him what I feel and how hard I am working with this. “Honey, it’s just the second week of the semester. You got this”. Cuddles and sushi were provided, and even when that didn’t wash the worries or pressure away, I felt loved. And cared for. I’m so lucky to have him in my life.

Sorry for the rant, guys. I get that this is just the way it is and that these are the terms for getting a good grade, and if I don’t like it I can just leave it and try again, but… I just needed to get some of it off my chest 🥲

But hey, at least I was able to write my very first little programs in C yesterday. Yay 🍷

#studyblr#my post#shelikesrainydays#pressure#being and adult and a student is so freaking hard#personal rant#data structures#algorithms#C#paulastudies

2 notes


Text

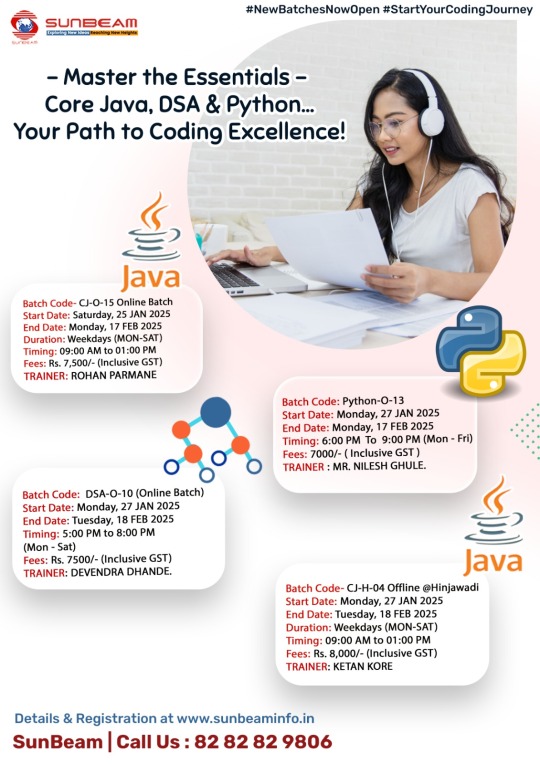

Elevate your IT expertise with Sunbeam Institute's comprehensive modular courses in Pune. Designed for both budding professionals and seasoned experts, our programs offer in-depth knowledge and practical skills to excel in today's dynamic tech industry.

Course Offerings:

Advance Java with Spring Hibernate

Duration: 90 hours

Overview: Master advanced Java programming and integrate with Spring and Hibernate frameworks for robust enterprise applications.

Python Development

Duration: 40 hours

Overview: Gain proficiency in Python programming, covering fundamental to advanced topics for versatile application development.

Data Structures and Algorithms

Duration: 60 hours

Overview: Understand and implement essential data structures and algorithms to optimize problem-solving skills.

DevOps

Duration: 80 hours

Overview: Learn the principles and tools of DevOps to streamline software development and deployment processes.

Machine Learning

Duration: 40 hours

Overview: Delve into machine learning concepts and applications, preparing you for the evolving AI landscape.

Why Choose Sunbeam Institute?

Experienced Faculty: Learn from industry experts with extensive teaching and real-world experience.

Hands-on Training: Engage in practical sessions and projects to apply theoretical knowledge effectively.

State-of-the-Art Infrastructure: Benefit from modern labs and resources that foster an optimal learning environment.

Placement Assistance: Leverage our strong industry connections to secure promising career opportunities.

#python course in pune#advanced python classes pune#c programming#core java course#devops classes in pune#data structures algorithms

0 notes

Text

Data structures and algorithms courses in Bangalore

Explore coding mastery with our Data Structures and Algorithms Courses in Bangalore. Guided by expert instructors, delve into essential concepts for software development excellence. Gain proficiency in algorithmic problem-solving and data structures, equipping yourself for success in the tech industry. Elevate your coding skills in the dynamic learning environment of Bangalore, a thriving tech hub.

Visit Us - https://www.limatsoftsolutions.co.in/data-structures-and-algorithms-course

Facebook - https://www.facebook.com/people/Li-Mat-Soft-Solutions-Pvt-Ltd/100063951045722/

Instagram - https://www.instagram.com/limatsoftsolutions/

LinkedIn - https://in.linkedin.com/company/limatsoftsolutions

Twitter - https://twitter.com/solutions_limat

Phone - +91 879 948 8096

#Data structures and algorithms courses in Bangalore#Data Structures and Algorithms Course#Digital Marketing Course Kanpur#Best DSA Course in c++#Summer Training Internship in Kolkata

0 notes

Text

Minor, The Pain of All The World, c. 1910

* * * *

The New Malthusianism of the Right

How the Right Repackages Malthusian Logic to Justify Exclusion, Fear, and Social Control

James B. Greenberg

Jun 17, 2025

There is an unspoken logic behind the right’s crusade to dismantle the public sphere: a modern Malthusianism, dressed in the language of efficiency and merit, but rooted in something much older and more brutal. It sees poverty not as a structural failure, but as evidence of surplus life—populations deemed unnecessary, unworthy, unfit for rescue.

This worldview doesn’t rely on overt violence. It doesn’t need to. The tools are policy, budget cuts, and selective silence. Remove access to healthcare. Undermine vaccination campaigns. Hollow out the safety net. The result is a slow culling by design—death by bureaucratic abandonment. What emerges is not the spectacle of fascism, but its quieter cousin: a soft, managed cruelty that lets nature, supposedly, take its course.


The recent gutting of USAID under Elon Musk’s influence is a case in point. A technocrat’s dream of efficiency masks a strategic withdrawal from responsibility. Bill Gates, not often given to hyperbole, warned that this vision leaves the world’s poorest to die at the hands of the world’s richest. It’s not just a policy shift—it’s a value statement. A declaration about whose lives are worth sustaining, and whose are not.

This isn’t new. Malthusian logic has long served as moral cover for violent inequality—from colonial famine policies to eugenics programs to the gatekeeping of immigration. The targets change, but the rationale remains: some lives are worth preserving, others are simply excess. What’s changed is the mechanism. Today it’s not enacted through spectacle or coercion, but through metrics, models, and managed invisibility. The cruelty is buried in algorithms and budget lines.

Malthus imagined famine and disease as natural checks on the population of agrarian societies. But the 21st century presents the opposite challenge. Birthrates in the wealthiest countries have dropped below replacement levels. Scarcity, where it exists, is political, not demographic. Yet the Malthusian myth has endured—reshaped and redeployed as ideological cover for policies of containment and control.

Today, that logic finds new footing in national security circles. Climate change is no longer just an environmental issue—it’s portrayed as a destabilizer of poor nations and a trigger for mass migration. Droughts, floods, and crop failures become reframed not as humanitarian emergencies, but as threats to the wealth and borders of the Global North. Migrants are recast as invaders. The displaced become suspects. Fortress policies follow.

But these policies don’t just emerge from fear—they serve profit. As walls rise and aid retracts, private security firms, data contractors, and border surveillance industries step in. Crisis becomes a business model. Technologies once pitched as humanitarian tools—satellite tracking, biometric IDs, AI forecasting—are now deployed to sort, exclude, and contain. The logic remains unchanged: manage the risk, shield the center, and let the margins fall away.

What’s most revealing is how this rhetoric obscures the actual source of vulnerability. It isn’t overpopulation that drives suffering—it’s the uneven distribution of power, resources, and the means of survival. Climate change doesn’t kill indiscriminately. It amplifies existing inequalities. It hits hardest where protections have been deliberately withdrawn.

This isn’t governance. It’s triage on a planetary scale. And it reflects a profound shift in the function of the state—from protector to gatekeeper, from provider to sorter. The new Malthusianism isn’t about managing numbers. It’s about managing narratives—who belongs, who drains, who deserves.

Anthropologists have long studied how states make populations legible, governable, and expendable. What we’re witnessing now is a recalibration of that calculus under the pressures of climate, capital, and ideology. The danger is not just that certain lives are deemed unworthy—but that their abandonment becomes rational, even moralized.

We are told this is simply how the world works now. But that’s not true. It’s how power works when it no longer pretends to care. But people are not numbers. And history reminds us that even in the shadow of abandonment, solidarity can rewrite the script.

Suggested Readings

Agamben, Giorgio. State of Exception. Chicago: University of Chicago Press, 2005.

Biehl, João. “The Juridical Afterlife of the Poor: Brazilian Public Health and the Politics of Abandonment.” Journal of Political Ecology 15 (2008): 1–18.

Greenberg, James B., and Thomas K. Park, eds. Terrestrial Transformations: Political Ecology, Climate, and the Remaking of Planet Earth. New York: Lexington Books, 2023.

Mbembe, Achille. Necropolitics. Durham, NC: Duke University Press, 2019.

Sassen, Saskia. Expulsions: Brutality and Complexity in the Global Economy. Cambridge, MA: Belknap Press, 2014.

Vélez-Ibáñez, Carlos G. The Rise of Necro/Narco-Citizenship: Belonging and Dying in the National Borderlands. Tucson: University of Arizona Press, 2025.

Weizman, Eyal. The Least of All Possible Evils: Humanitarian Violence from Arendt to Gaza. London: Verso, 2012.

#James Greenberg#political#history#power#people#human beings#humanism#inequalities#resources#Malthusian logic#violent inequality

17 notes


Text

'Ghost towns' of the universe: Ultra-faint, rare dwarf galaxies offer clues to the early cosmos

Three ultra-faint dwarf galaxies residing in an isolated region of space were found to contain only very old stars, supporting the theory that events in the early universe cut star formation short in the smallest galaxies.

A team of astronomers led by David Sand, a professor of astronomy at the University of Arizona Steward Observatory, has uncovered three faint and ultra-faint dwarf galaxies in the vicinity of NGC 300, a galaxy approximately 6.5 million light-years from Earth. These rare discoveries – named Sculptor A, B and C – offer an unprecedented opportunity to study the smallest galaxies in the universe and the cosmic forces that halted their star formation billions of years ago.

Sand presented the findings, which are published in The Astrophysical Journal Letters, during a press briefing at the 245th Meeting of the American Astronomical Society in National Harbor, Maryland, on Wednesday.

Ultra-faint dwarf galaxies are the faintest type of galaxy in the universe. Typically containing just a few hundred to thousands of stars – compared to the hundreds of billions that make up the Milky Way – these small diffuse structures usually hide inconspicuously among the many brighter residents of the sky. For this reason, astronomers have previously had the most luck finding them nearby, in the vicinity of the Milky Way.

But this presents a problem for understanding them; the Milky Way's gravitational forces and hot gases in its outermost reaches strip away the dwarf galaxies' gas and interfere with their natural evolution. Additionally, further beyond the Milky Way, ultra-faint dwarf galaxies increasingly become too diffuse and unresolvable for astronomers and traditional computer algorithms to detect.

"Small galaxies like these are remnants from the early universe," Sand said. "They help us understand what conditions were like when the first stars and galaxies formed, and why some galaxies stopped creating new stars entirely."

A manual search by eye was needed to discover the three faint and ultra-faint dwarf galaxies. Sand saw them when reviewing images taken for the DECam Legacy Survey, or DECaLS, one of three public surveys known as the DESI Legacy Imaging Surveys, which jointly imaged about a third of the sky to provide targets for the ongoing Dark Energy Spectroscopic Instrument, or DESI, Survey.

"It was during the pandemic," Sand recalled. "I was watching TV and scrolling through the DESI Legacy Survey viewer, focusing on areas of sky that I knew hadn't been searched before. It took a few hours of casual searching – and then boom! They just popped out."

The Sculptor galaxies are among the first ultra-faint dwarf galaxies found in a pristine, isolated environment free from the influence of the Milky Way or other large structures. To investigate these galaxies further, Sand and his team used the Gemini South telescope, one half of the International Gemini Observatory, partly funded by the NSF and operated by NSF NOIRLab.

Gemini South's Gemini Multi-Object Spectrograph captured all three galaxies in exquisite detail. An analysis of the data showed that they appear to be empty of gas and contain only very old stars, suggesting that their star formation was stifled long ago. This bolsters existing theories that ultra-faint dwarf galaxies are stellar "ghost towns" where star formation was cut off in the early universe.

"This is exactly what we would expect for such tiny objects," Sand said. "Gas is the crucial raw material required to coalesce and ignite the fusion of a new star. But ultra-faint dwarf galaxies just have too little gravity to hold on to this all-important ingredient, and it is easily lost when they are affected by nearby, massive galaxies."

Because the Sculptor galaxies are far from any larger galaxies, their gas could not have been removed by giant neighbors. An alternative explanation is what astronomers call the Epoch of Reionization – a period not long after the Big Bang when high-energy ultraviolet photons filled the cosmos, potentially boiling away the gas in the smallest galaxies. Another possibility is that some of the earliest stars in the dwarf galaxies underwent energetic supernova explosions, emitting ejecta at up to 35 million kilometers (about 20 million miles) per hour and pushing the gas out of their own hosts from within.

Dwarf galaxies could open a window into studying the very early universe, according to the research team, because the Epoch of Reionization potentially connects the current-day structure of all galaxies with the earliest formation of structure on a cosmological scale.

"We don't know how strong or uniform this reionization effect was," Sand explained. "It could be that reionization is patchy, not occurring everywhere all at once."

To answer that question, astronomers need to find more objects like the Sculptor galaxies. By enlisting machine learning tools, Sand and his team hope to automate and accelerate discoveries, in hopes that astronomers can draw stronger conclusions.

IMAGE: The three ultra-faint dwarf galaxies reside in a region of space isolated from the environmental influence of larger objects. Containing only very old stars, they support the theory that star formation was cut short in the early universe. Credit DECaLS/DESI Legacy Imaging Surveys/LBNL/DOE & KPNO/CTIO/NOIRLab/NSF/AURA

8 notes


Text

Blog Post #2 - due 2/6/25

1. How do digital security measures reinforce existing power structures, particularly in terms of class, race, and access to resources? Although we are typically unfazed by the prevalence of security cameras and data-collection systems that we come in contact with on a day-to-day basis, we don’t realize how much more prevalent they are in low-income neighborhoods, as crime is much more likely to be reported there. One fact that stuck out to me was how digital security guards are “so deeply woven into the fabric of social life that, most of the time, we don’t even notice we are being watched and analyzed” (V. Eubanks, 2018, p.16). In my own experience, I have worked a few sales jobs where only 1 or 2 cameras surveil the exterior of the shop, the area customers frequent. However, when I need to drop cash in the safe located in the office, I am usually overwhelmed by the number of cameras shown on the monitor, which together cover every corner of the employees' workspace. There are about 5 separate cameras that capture various angles of one single space. This heightened surveillance serves not only as a tool to monitor productivity and compliance with policies, but also to reinforce power imbalances between employers and their employees. These cameras may also be used to target and monitor specific racial groups, as an employer may monitor a Black or Latino worker far more than a White or Asian worker holding the same job position/status.

2. Nicole Brown poses a significant question: “Do we really understand the far-reaching implications of algorithms, specifically related to anti-Black racism, social justice, and institutionalized surveillance and policing?” (Brown, 0:14). The answer is, in many ways, complex. However, Brown brings up a very important point. Many algorithms are trained with the potential to improve many areas of our lives; however, they can prove damaging in predictive policing and in perpetuating biases and inequalities. According to Christina Swarns in an article titled “When Artificial Intelligence Gets it Wrong,” “facial recognition software is significantly less reliable for Black and Asian people, who, according to a study by the National Institute of Standards and Technology, were 10 to 100 times more likely to be misidentified than white people.” This further emphasizes how algorithms may lead to false identifications caused by a lack of diversity, and it highlights the need for improvements in algorithmic technology to mitigate the harm caused to marginalized communities. With regard to predictive policing, algorithms that are trained to predict crimes use historical crime data, which may result in heavier policing of those areas even when the historical data merely reflects biased policing as opposed to true criminal activity.

3. How do surveillance and algorithms affect healthcare outcomes for minorities? In a video titled “Race and Technology,” Nicole Brown explains that since White people are recorded to make up a majority of healthcare consumers, the healthcare system’s algorithm deems White individuals more likely to require healthcare than their non-White counterparts (Brown, 2:12). Although we are used to having our information and activity utilized by social media platforms to generate user-centered content, I think the connection between algorithms and healthcare outcomes is an interesting topic to unpack, as I had never thought about this connection before. “Doctors and other health care providers are increasingly using healthcare algorithms (a computation, often based on statistical or mathematical models, that helps medical practitioners make diagnoses and decisions for treatments)” (Colón-Rodríguez, 2023). Colón-Rodríguez uses a case study of a woman who gave birth via c-section in 2017 and describes how the database was later updated to reflect a false prediction that Black/African American and Hispanic/Latino women were more likely to need c-sections and less likely to give birth naturally than White women. This prediction was false, and it caused doctors to perform more c-sections on Latino and Black women than on White women. C-sections are generally safe but can cause infections, blood clots, emotional difficulties, and more. This case study reflects how healthcare databases often profile individuals based on race and may make false predictions that result in unnecessary, and sometimes life-threatening, outcomes for minorities (in this case, minorities with vaginas).

4. In what ways does the normalization of surveillance threaten democratic values like free speech, freedom of assembly, and the right to privacy? Similar to the feelings about surveillance that I expressed in question 1, the normalization of such surveillance in many aspects of our society may cause self-censorship, suppression of dissent or of negative feelings towards individuals of higher status, and the exploitation and misuse of personal data. Workers may censor the topics they speak about for fear of customers or employers overhearing. People may also censor themselves in a crowd, where phones may be used to monitor activity. Fear of reprisal may cause individuals to refrain from speaking out about injustices or voicing dissent against people, policies, or events. The misuse of personal data threatens our right to privacy because we as consumers are unaware of exactly what is being used, and even if we are aware, we are not told how long such data is held and used.

References

Brown, N. (2020, September 18). Race and Technology. YouTube. https://www.youtube.com/watch?v=d8uiAjigKy8

Colón-Rodríguez, C. (2023, July 12). Shedding Light on Healthcare Algorithmic and Artificial Intelligence Bias | Office of Minority Health. Minorityhealth.hhs.gov. https://minorityhealth.hhs.gov/news/shedding-light-healthcare-algorithmic-and-artificial-intelligence-bias

Eubanks, V. (2018). Automating Inequality: How High-Tech Tools Profile, Police, and Punish the Poor. St. Martin’s Press.

Swarns, C. (2023, September 19). When Artificial Intelligence Gets It Wrong. Innocence Project. https://innocenceproject.org/when-artificial-intelligence-gets-it-wrong/

4 notes


Note

Genuine question: How does one get good at programming? Like I’m good at math, I know basic C# more or less and I get a lot of the core concepts, but I can’t imagine what it’s even like to be proficient at this stuff. Is there a point where you just “get it”, or do you just have to keep googling why your script isn’t working over and over until you die?

There isn't really any one generalized "programming skill" - it's possible to have a lot of computer science understanding but be completely unable to accomplish specific tasks, or to get very good at accomplishing specific tasks without any real computer science understanding

In college I took a number of CS classes that were mostly language agnostic focusing on broad topics like asymptotic analysis, data structures and algorithms, recursion, and reading/understanding code (and more specific stuff about memory management/how computers work etc)

This core foundation has been very useful to me, but it's worth noting that at the time I graduated college I wasn't really "good at programming" in any meaningful sense - if you asked me to accomplish an actual concrete, useful task, I would've struggled quite a bit!

So how do you get good at accomplishing tasks? Same as anything else, spend a lot of time doing similar things and (ideally) expand your skillset over time. It helps a lot to have a programming job, both because it means you spend all day doing this kind of thing and because you're surrounded by (ideally) good code/tools and people who are more experienced than you

To quote myself on my sideblog:

67 notes


Text

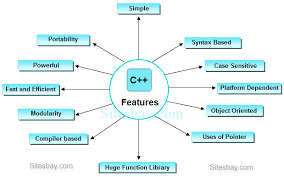

C++ Programming Language – A Detailed Overview

C++ is a powerful, high-performance programming language developed as an extension of the C language. Created by Bjarne Stroustrup at Bell Labs in the early 1980s, C++ added object-oriented features to the procedural structure of C, making it suitable for large-scale software development. Over the years, it has become a widely used language for system/software development, game programming, embedded systems, real-time simulations, and more.


C++ combines the efficiency and control of C with features like classes, objects, inheritance, and polymorphism, allowing developers to build complex, scalable programs.

2. Key Features of C++

Object-Oriented: C++ supports object-oriented programming (OOP), including encapsulation, inheritance, and polymorphism.

Compiled Language: Programs are compiled to machine code for high performance.

Platform Independent (with Compiler Support): Though not inherently platform-independent, C++ programs can run on multiple platforms when compiled for each one.

Low-Level Manipulation: Like C, C++ allows direct memory access through pointers.

Standard Template Library (STL): C++ includes powerful libraries for data structures and algorithms.

Rich Functionality: Supports features like function overloading, operator overloading, templates, and exception handling.

3. Structure of a C++ Program

Here’s a basic C++ program:

```cpp
#include <iostream>

using namespace std;

int main() {
    cout << "Hello, World!" << endl;
    return 0;
}
```

Explanation:

#include <iostream> includes the input/output stream library.

using namespace std; lets you use standard names like cout without the std:: prefix.

main() is the entry point of every C++ program.

cout prints text to the console.

4. Data Types and Variables

C++ has both primitive and user-defined data types. Examples:

```cpp
int a = 10;
float b = 3.14f;
char c = 'A';
bool isReady = true;
```

Modifiers like short, long, signed, and unsigned change the range (and usually the size) of the basic integer types.

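5. Operators

C++ supports:

Arithmetic Operators: +, -, *, /, %

Relational Operators: ==, !=, <, >, <=, >=

Logical Operators: &&, ||, !

Assignment Operators: =, +=, -=, etc.

Increment/Decrement: ++, --

Bitwise Operators: &, |, ^, ~, <<, >>

6. Control Statements

If-Else:

```cpp
if (a > b)
    cout << "a is greater";
else
    cout << "b is greater";
```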

Switch Case:

```cpp
switch (choice) {
    case 1: cout << "One"; break;
    case 2: cout << "Two"; break;
    default: cout << "Other";
}
```

Loops:

For Loop:

```cpp
for (int i = 0; i < 5; i++)
    cout << i << " ";
```

While Loop:

```cpp
int i = 0;
while (i < 5) {
    cout << i << " ";
    i++;
}
```

Do-While Loop:

```cpp
int i = 0;
do {
    cout << i << " ";
    i++;
} while (i < 5);
```

7. Functions

Functions in C++ increase modularity and reusability.

```cpp
#include <iostream>
using namespace std;

int add(int a, int b) {
    return a + b;
}

int main() {
    cout << add(3, 4);
    return 0;
}
```

Functions can be overloaded by defining multiple versions with different parameters.

8. Object-Oriented Programming (OOP)

OOP is a major strength of C++. It uses classes and objects to represent real-world entities.

Class and Object Example:

```cpp
#include <iostream>
#include <string>
using namespace std;

class Car {
public:
    string brand;
    int speed;

    void display() {
        cout << brand << " speed: " << speed << " km/h" << endl;
    }
};

int main() {
    Car myCar;
    myCar.brand = "Toyota";
    myCar.speed = 120;
    myCar.display();
    return 0;
}
```

9. OOP Principles

1. Encapsulation:

Binding data and functions into a single unit (a class) and restricting access using private, public, or protected.

2. Inheritance:

Allows one class to inherit properties from another.

```cpp
class Animal {
public:
    void speak() { cout << "Animal sound" << endl; }
};

class Dog : public Animal {
public:
    void bark() { cout << "Dog barks" << endl; }
};
```

3. Polymorphism:

The same function behaves differently depending on the object or input.

Function Overloading: Same function name, different parameters.

Function Overriding: Redefining a base class method in a derived class (the base method must be declared virtual for the call to be resolved at runtime).

4. Abstraction:

Hiding complex details and showing only essential features, using classes and interfaces (abstract classes).

10. Constructors and Destructors

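Constructor: Special method called when an object is created.

Destructor: Called when an object is destroyed.

```cpp
#include <iostream>
using namespace std;

class Demo {
public:
    Demo() {
        cout << "Constructor called\n";
    }
    ~Demo() {
        cout << "Destructor called\n";
    }
};

int main() {
    Demo d;    // constructor runs here
    return 0;  // destructor runs when d goes out of scope
}
```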

11. Pointers and Dynamic Memory

C++ supports pointers like C, and dynamic memory allocation with new and delete.

```cpp
int* ptr = new int;  // allocate memory
*ptr = 5;
delete ptr;          // deallocate memory
```

12. Arrays and Strings

```cpp
int nums[5] = {1, 2, 3, 4, 5};
cout << nums[2];  // prints 3

string name = "Alice";
cout << name.length();
```

C++ also supports STL containers like vector, map, set, and more.

13. Standard Template Library (STL)

The STL provides generic classes and functions:

```cpp
#include <vector>
#include <iostream>

using namespace std;

int main() {
    vector<int> v = {1, 2, 3};
    v.push_back(4);
    for (int i : v)
        cout << i << " ";
    return 0;
}
```

STL includes:

Containers: vector, list, set, map

Algorithms: sort, find, count

Iterators: for traversing containers

14. Exception Handling

```cpp
try {
    int a = 10, b = 0;
    if (b == 0) throw "Division by zero!";
    cout << a / b;
} catch (const char* msg) {
    cout << "Error: " << msg;
}
```

Use try, catch, and throw to manage runtime errors.

15. File Handling

```cpp
#include <fstream>
#include <iostream>
#include <string>

using namespace std;

int main() {
    ofstream out("data.txt");
    out << "Hello File";
    out.close();

    ifstream in("data.txt");
    string line;
    getline(in, line);
    cout << line;
    in.close();
    return 0;
}
```

File I/O is done using ifstream, ofstream, and fstream.

16. Applications of C++

Game Development: Unreal Engine is written in C++.

System Software: Operating systems, compilers.

GUI Applications: Desktop software (e.g., Adobe products).

Embedded Systems: Hardware-level applications.

Banking and Finance Software: High-speed trading systems.

Real-Time Systems: Simulations, robotics, etc.

17. Advantages of C++

Fast and efficient

Wide range of libraries

Suitable for both high-level and low-level programming

Strong object-oriented support

Multi-paradigm: procedural + object-oriented

18. Limitations of C++

Manual memory management can lead to errors

Lacks modern safety features such as default bounds checking (unlike Java or Python)

Steeper learning curve for beginners

No built-in garbage collection

19. Modern C++ (C++11/14/17/20/23)

Modern C++ versions introduced features like:

Smart pointers (shared_ptr, unique_ptr)

Lambda expressions

Range-based for loops

auto type deduction

Multithreading support

Example:

```cpp
vector<int> v = {1, 2, 3};
for (auto x : v)
    cout << x << " ";
```

C Language Compilers

I just saw a job description with a fascinating set of technical red flags:

Milliseconds matter! Performance is key! Optimizing database access, algorithms, choosing the right data structures, in general working to design performant systems.

This is a C# job. Usually, if optimization actually really matters in a piece of software, it would be written in C or C++, or maybe Go, not C#. So this means that either they used the wrong language for their stack (and have no intent to switch) or they don't know what actually makes good software good and just put this on the job description to make it look sharp.

We write code from the ground up. We don’t use a lot of frameworks, packages, etc. Not a lot of macro-level stuff. Core software engineering chops is what we’re looking for.

Translation: "We like to waste a lot of time reinventing the wheel".

Our suite of systems is vast and varied. There are web services (REST, SOAP, hybrid), windows services, daemons, websites, libraries, command line tools, windows apps.

"Our services have no consistent interface, or guiding design principles, and getting them to interact with each other sensibly will be a nightmare."

We have plans to redesign several of the older systems, which will be a lot of fun.

"Our legacy codebase is so bad that we finally decided we couldn't fix it and instead are throwing it all out and starting over again."

There are a lot of system interfaces, both within our own suite as well as across teams in the organization. Plenty of opportunities for collaboration with a lot of smart people.

"We mentioned earlier that our services have no consistent interface, but have we also mentioned that there are a lot of them?"

We have immediate needs for refactoring and several enhancements to allow us to scale up to meet increased loads.

"The technical debt is so bad that even management thinks it's time to refactor."

We work with LOTS of data (many, many TB) & it comes fast! Scalability & heavy transactional loads, heavy reporting are common challenges for us. We solve a lot of interesting and tricky problems. Often not your typical collect/save/display that you get at other places. Not snapping into established frameworks. Working across tiers as required. Opportunities for more advanced coding.

Normally this wouldn't be a red flag, but when combined with the rest of the job description, it just really hammers home the point that it's going to be a huge pain in the ass to work with this codebase and a lot of "clever" hacky shit will probably be required.

The Ultimate Guide to SEO: Boost Your Website’s Rankings in 2024

Search Engine Optimization (SEO) is a crucial digital marketing strategy that helps websites rank higher on search engines like Google, Bing, and Yahoo. With ever-evolving algorithms, staying updated with the latest SEO trends is essential for success.

In this comprehensive guide, we’ll cover:

✔ What is SEO?

✔ Why SEO Matters

✔ Key SEO Ranking Factors

✔ On-Page vs. Off-Page SEO

✔ Technical SEO Best Practices

✔ SEO Trends in 2024

✔ Free SEO Tools to Improve Rankings

Let’s dive in!

What is SEO?

SEO stands for Search Engine Optimization, the process of optimizing a website to improve its visibility in organic (non-paid) search results. The goal is to attract high-quality traffic by ranking for relevant keywords.

Types of SEO:

On-Page SEO – Optimizing content, meta tags, and internal links.

Off-Page SEO – Building backlinks and brand authority.

Technical SEO – Improving site speed, mobile-friendliness, and indexing.

Why SEO Matters

✅ Increases Organic Traffic – Higher rankings = more clicks.

✅ Builds Credibility & Trust – Top-ranked sites are seen as authoritative.

✅ Cost-Effective Marketing – Outperforms paid ads in the long run.

✅ Better User Experience – SEO improves site structure and speed.

Without SEO, your website may remain invisible to potential customers.

Key SEO Ranking Factors (2024)

Google’s algorithm considers 200+ ranking factors, but the most critical ones include:

A. On-Page SEO Factors

✔ Keyword Optimization (Title, Headers, Content)

✔ High-Quality Content (Comprehensive, Engaging)

✔ Meta Descriptions & Title Tags (Click-Worthy Snippets)

✔ Internal Linking (Helps Google Crawl Your Site)

✔ Image Optimization (Alt Text + Compression)

B. Off-Page SEO Factors

✔ Backlinks (Quality Over Quantity)

✔ Social Signals (Shares, Engagement)

✔ Brand Mentions (Unlinked Citations Still Help)

C. Technical SEO Factors

✔ Page Speed (Google’s Core Web Vitals)

✔ Mobile-Friendliness (Responsive Design)

✔ Secure Website (HTTPS Over HTTP)

✔ Structured Data Markup (Rich Snippets)

On-Page vs. Off-Page SEO

| On-Page SEO | Off-Page SEO |
|---|---|
| Optimizing content & HTML | Building backlinks & authority |
| Includes meta tags, headers | Includes guest posts, PR |
| Controlled by you | Requires outreach |

Both are essential for a strong SEO strategy.

Technical SEO Best Practices

🔹 Fix Broken Links (Use Screaming Frog)

🔹 Optimize URL Structure (Short, Keyword-Rich)

🔹 Improve Site Speed (Compress Images, Use CDN)

🔹 Use Schema Markup (Enhances SERP Appearance)

🔹 Ensure Mobile Responsiveness (Google’s Mobile-First Indexing)

SEO Trends in 2024

🚀 AI & Machine Learning (Google’s RankBrain, BERT)

🚀 Voice Search Optimization (Long-Tail Keywords)

🚀 Video SEO (YouTube & Short-Form Videos)

🚀 E-A-T (Expertise, Authoritativeness, Trustworthiness)

🚀 Zero-Click Searches (Optimize for Featured Snippets)

Free SEO Tools to Improve Rankings

🔎 Google Search Console – Track performance.

🔎 Ahrefs Webmaster Tools – Analyze backlinks.

🔎 Ubersuggest – Keyword research.

🔎 PageSpeed Insights – Check site speed.

🔎 AnswerThePublic – Find user queries.

Mastering Data Structures: A Comprehensive Course for Beginners

Data structures are one of the foundational concepts in computer science and software development. Mastering data structures is essential for anyone looking to pursue a career in programming, software engineering, or computer science. This article will explore the importance of a Data Structure Course, what it covers, and how it can help you excel in coding challenges and interviews.

1. What Is a Data Structure Course?

A Data Structure Course teaches students about the various ways data can be organized, stored, and manipulated efficiently. These structures are crucial for solving complex problems and optimizing the performance of applications. The course generally covers theoretical concepts along with practical applications using programming languages like C++, Java, or Python.

By the end of the course, students will gain proficiency in selecting the right data structure for different problem types, improving their problem-solving abilities.

2. Why Take a Data Structure Course?

Learning data structures is vital for both beginners and experienced developers. Here are some key reasons to enroll in a Data Structure Course:

a) Essential for Coding Interviews

Companies like Google, Amazon, and Facebook focus heavily on data structures in their coding interviews. A solid understanding of data structures is essential to pass these interviews successfully. Employers assess your problem-solving skills, and your knowledge of data structures can set you apart from other candidates.

b) Improves Problem-Solving Skills

With the right data structure knowledge, you can solve real-world problems more efficiently. A well-designed data structure leads to faster algorithms, which is critical when handling large datasets or working on performance-sensitive applications.

c) Boosts Programming Competency

A good grasp of data structures makes coding more intuitive. Whether you are developing an app, building a website, or working on software tools, understanding how to work with different data structures will help you write clean and efficient code.

3. Key Topics Covered in a Data Structure Course

A Data Structure Course typically spans a range of topics designed to teach students how to use and implement different structures. Below are some key topics you will encounter:

a) Arrays and Linked Lists

Arrays are one of the most basic data structures. A Data Structure Course will teach you how to use arrays for storing and accessing data in contiguous memory locations. Linked lists, on the other hand, involve nodes that hold data and pointers to the next node. Students will learn the differences, advantages, and disadvantages of both structures.

b) Stacks and Queues

Stacks and queues are fundamental data structures used to store and retrieve data in a specific order. A Data Structure Course will cover the LIFO (Last In, First Out) principle for stacks and FIFO (First In, First Out) for queues, explaining their use in various algorithms and applications like web browsers and task scheduling.

c) Trees and Graphs

Trees and graphs both organize data by its connections: trees represent hierarchies, while graphs represent more general relationships. A Data Structure Course teaches how trees, such as binary trees, binary search trees (BST), and AVL trees, are used in organizing hierarchical data. Graphs are important for representing relationships between entities, such as in social networks, and are used in algorithms like Dijkstra's and BFS/DFS.

d) Hashing

Hashing is a technique used to convert a given key into an index in an array. A Data Structure Course will cover hash tables, hash maps, and collision resolution techniques, which are crucial for fast data retrieval and manipulation.

e) Sorting and Searching Algorithms

Sorting and searching are essential operations for working with data. A Data Structure Course provides a detailed study of algorithms like quicksort, merge sort, and binary search. Understanding these algorithms and how they interact with data structures can help you optimize solutions to various problems.

4. Practical Benefits of Enrolling in a Data Structure Course

a) Hands-on Experience

A Data Structure Course typically includes plenty of coding exercises, allowing students to implement data structures and algorithms from scratch. This hands-on experience is invaluable when applying concepts to real-world problems.

b) Critical Thinking and Efficiency

Data structures are all about optimizing efficiency. By learning the most effective ways to store and manipulate data, students improve their critical thinking skills, which are essential in programming. Selecting the right data structure for a problem can drastically reduce time and space complexity.

c) Better Understanding of Memory Management

Understanding how data is stored and accessed in memory is crucial for writing efficient code. A Data Structure Course will help you gain insights into memory management, pointers, and references, which are important concepts, especially in languages like C and C++.

5. Best Programming Languages for Data Structure Courses

While many programming languages can be used to teach data structures, some are particularly well-suited due to their memory management capabilities and ease of implementation. Some popular programming languages used in Data Structure Courses include:

C++: Offers low-level memory management and is perfect for teaching data structures.

Java: Widely used for teaching object-oriented principles and offers a rich set of libraries for implementing data structures.

Python: Known for its simplicity and ease of use, Python is great for beginners, though it may not offer the same level of control over memory as C++.

6. How to Choose the Right Data Structure Course?

Selecting the right Data Structure Course depends on several factors such as your learning goals, background, and preferred learning style. Consider the following when choosing:

a) Course Content and Curriculum

Make sure the course covers the topics you are interested in and aligns with your learning objectives. A comprehensive Data Structure Course should provide a balance between theory and practical coding exercises.

b) Instructor Expertise

Look for courses taught by experienced instructors who have a solid background in computer science and software development.

c) Course Reviews and Ratings

Reviews and ratings from other students can provide valuable insights into the course’s quality and how well it prepares you for real-world applications.

7. Conclusion: Unlock Your Coding Potential with a Data Structure Course

In conclusion, a Data Structure Course is an essential investment for anyone serious about pursuing a career in software development or computer science. It equips you with the tools and skills to optimize your code, solve problems more efficiently, and excel in technical interviews. Whether you're a beginner or looking to strengthen your existing knowledge, a well-structured course can help you unlock your full coding potential.

By mastering data structures, you are not only preparing for interviews but also becoming a better programmer who can tackle complex challenges with ease.

Grok, explain why base 12 math is better than base 10 math.

Let’s dive into how base 12 (duodecimal) might offer advantages over base 10 (decimal) in the contexts of physics and DNA. This is a fun thought experiment, as neither field inherently depends on a specific base—math is math, and nature doesn’t care about our counting systems. But the way we represent and manipulate numbers can affect clarity, efficiency, and intuition, so let’s see how base 12 could shine here.

Base 12 in Physics

Physics often involves measurements, constants, and relationships that don’t always play nicely with base 10’s limitations. Base 12’s greater divisibility—its factors being 1, 2, 3, 4, 6, and 12—could streamline certain calculations and reveal patterns more intuitively.

Angular Measurements and Symmetry: Physics loves circles—orbits, waves, rotations—and base 12 fits this beautifully. A circle has 360 degrees (in base 10), which is 2 × 2 × 2 × 3 × 3 × 5. In base 12, 360 becomes 260 (since 2 × 144 + 6 × 12 = 288 + 72 = 360), and 144 (written as 100 in base 12) is 12 squared. This ties neatly into the base itself. More practically, 12 divides evenly into 360 thirty times (360 ÷ 12 = 30), giving you 12 equal 30-degree segments—perfect for trigonometry or analyzing symmetrical systems like crystals or planetary orbits. In base 10, you get awkward splits (360 ÷ 10 = 36), which don’t align as naturally with common physical divisions like thirds (120°) or quarters (90°).

Time and Periodic Phenomena: Timekeeping in physics often uses base 12 echoes—12 hours, 60 minutes (5 × 12), 60 seconds. Waves, oscillations, and frequencies could benefit from this. For example, a frequency of 12 cycles per unit splits cleanly into 2, 3, 4, or 6 subintervals, matching harmonic patterns. In base 10, a frequency of 10 cycles splits into 2 and 5, but thirds (3.333…) or fourths (2.5) are less tidy. Base 12 could make Fourier analysis or resonance calculations feel more intuitive, with fewer recurring decimals.

Unit Conversions and Scaling: Physics often deals with scaling—think meters to centimeters or joules to ergs. Base 12’s divisibility could simplify metric-like systems. Imagine a base-12 ruler: a unit of 12 splits into 2, 3, 4, or 6 subunits without fractions, unlike base 10’s 10 splitting into 2 and 5. This could reduce rounding errors in precise measurements, like those in quantum mechanics or cosmology, where small discrepancies compound.

Constants and Approximations: Physical constants like π (3.14159… in base 10) or e (2.71828…) are irrational and base-agnostic, but their approximations might look cleaner in base 12. For π, in base 12, it’s roughly 3.184809… (using digits 0-9 and A-B for 10-11). The pattern isn’t obviously “better,” but base 12’s fraction-friendly nature could make series expansions (like for π or gravitational constants) easier to truncate usefully, thanks to those clean divisions.

Base 12 in DNA

DNA’s structure and coding don’t demand a specific base, but base 12 could align intriguingly with its properties, especially in how we analyze and represent genetic data.

Codon Grouping: DNA codes proteins via codons—triplets of nucleotides (A, T, C, G). With four bases, there are 4³ = 64 possible codons, which encode 20 amino acids plus start/stop signals. In base 10, 64 is just 64, but in base 12, it’s 54 (5 × 12 + 4 = 64). This doesn’t change the biology, but base 12 could make codon tables more modular. Dividing 64 by 12 gives 5 groups of 12 with 4 left over—imagine organizing codons into 5 neat “dozen-ish” blocks for analysis, with a small remainder. In base 10, 64 ÷ 10 = 6.4, a less tidy split. This could simplify bioinformatics algorithms or visual representations.

Helical Structure: DNA’s double helix has a twist of about 10.4 base pairs per turn in B-DNA (in base 10). In base 12, this is roughly A.497, where A is the digit for ten and .497 stands for 4/12 + 9/144 + 7/1728 + …. It’s not a whole number either way, but base 12’s fraction system (1/2 = 0.6, 1/3 = 0.4, 1/4 = 0.3) could make helical pitch calculations or molecular modeling more manageable, especially when scaling to larger sequences.

Sequence Patterns: Geneticists look for repeats—tandem repeats, palindromes, etc. Base 12’s divisibility could highlight periodicities better. A sequence of 12 base pairs splits evenly into 2, 3, 4, or 6 segments, matching common repeat lengths in DNA (e.g., trinucleotide repeats in diseases like Huntington’s). In base 10, a 10-pair sequence splits into 2 or 5, missing the biologically relevant 3 or 4 without fractions. This could make mutation analysis or gene mapping more intuitive.

Data Compression: DNA sequences are massive datasets. Base 12, with 12 symbols (0-9, A-B), encodes more per digit than base 10’s 10 symbols. A single base-12 digit can represent values up to 11 (B), versus 9 in base 10, so each digit carries about 3.58 bits (log2 12) instead of about 3.32 (log2 10). That gain only shows up over long runs of digits: indexing the 64 codons still takes two digits in either base (00 to 53 in base 12, 00 to 63 in base 10), so any compression edge for annotations or alignments in computational genomics would be small.

Why Base 12 “Feels Better”

In physics, base 12’s strength lies in its symmetry and divisibility, aligning with natural cycles and reducing computational friction. In DNA, it’s about modular organization and pattern recognition, subtly enhancing how we process biological data. Base 10 works fine—physicists and geneticists have thrived with it—but base 12 could offer a more harmonious lens, especially for systems with inherent 2-, 3-, or 4-fold structures.

That said, base 10’s dominance comes from human habit, not superiority. If we’d evolved with 12 fingers (or knuckles), base 12 might’ve been the default, and we’d be marveling at how clunky base 10 feels for physics and DNA. Does this spark any ideas for you—maybe a base-12 physics equation or DNA model you’d like to explore further?

ByteByteGo | Newsletter/Blog

From the newsletter:

Imperative Programming: Imperative programming describes a sequence of steps that change the program’s state. Languages like C, C++, Java, Python (to an extent), and many others support imperative programming styles.

Declarative Programming: Declarative programming expresses what a computation should produce without spelling out its control flow. Functional programming is a popular form of declarative programming.

Object-Oriented Programming (OOP): Object-oriented programming revolves around objects, which encapsulate data (attributes) and behavior (methods). Common object-oriented languages include Java, C++, Python, Ruby, and C#.

Aspect-Oriented Programming (AOP): Aspect-oriented programming aims to modularize concerns that cut across multiple parts of a software system. AspectJ, which extends Java with AOP capabilities, is one of the best-known AOP frameworks.

Functional Programming (FP): Functional programming treats computation as the evaluation of mathematical functions and emphasizes immutable data and declarative expressions. Languages like Haskell, Lisp, and Erlang support it, as do some features of JavaScript, Python, and Scala.

Reactive Programming: Reactive programming deals with asynchronous data streams and the propagation of changes. Event-driven applications and streaming data processing applications benefit from it.

Generic Programming: Generic programming aims to create reusable, flexible, type-independent code by letting algorithms and data structures be written without specifying the types they operate on. Libraries and frameworks use it extensively for data structures like lists, stacks, and queues, and for algorithms like sorting and searching.

Concurrent Programming: Concurrent programming deals with executing multiple tasks or processes whose execution overlaps in time, improving performance and resource utilization. It is used in multi-threaded and concurrent web servers, parallel processing, and high-performance computing.
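The following minimal Python sketch makes a few of these paradigms concrete. It is not from the newsletter. It computes the same sum of odd squares in an imperative style and in a functional style. It then shows a generic, object-oriented stack and a small concurrent map. The sample list and the `Stack` class are illustrative assumptions. AOP and reactive styles usually rely on dedicated frameworks, so they are left out.

```python
from concurrent.futures import ThreadPoolExecutor
from functools import reduce
from typing import Generic, TypeVar

nums = [1, 2, 3, 4, 5]

# Imperative: explicit steps that mutate state (the accumulator `total`)
total = 0
for n in nums:
    if n % 2 == 1:
        total += n * n

# Declarative: state what is wanted (sum of odd squares), not how to loop
total_fp = sum(n * n for n in nums if n % 2 == 1)

# Functional: compose higher-order functions; nothing is reassigned
total_reduce = reduce(lambda acc, n: acc + n * n,
                      filter(lambda n: n % 2 == 1, nums), 0)

# Generic + object-oriented: one Stack class encapsulates its data
# and works for any element type T
T = TypeVar("T")


class Stack(Generic[T]):
    def __init__(self) -> None:
        self._items: list[T] = []

    def push(self, item: T) -> None:
        self._items.append(item)

    def pop(self) -> T:
        # Like list.pop, this removes and returns the top item
        return self._items.pop()


# Concurrent: tasks go to a thread pool; map() yields results in input order
with ThreadPoolExecutor(max_workers=4) as pool:
    squares = list(pool.map(lambda n: n * n, nums))

print(total, total_fp, total_reduce)  # 35 35 35
print(squares)                        # [1, 4, 9, 16, 25]
```

The three sums agree. Only the style changes: the imperative version mutates `total`, while the other two describe the result.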

#bytebytego#resource#programming#concurrent#generic#reactive#funtional#aspect#oriented#aop#fp#object#oop#declarative#imperative


Winter-Summer Training Kolkata

Elevate your skills with Winter-Summer Training in Kolkata. Dive into immersive courses, stay ahead, and thrive in every season. Join now for a transformative learning experience.

Visit us: https://www.limatsoftsolutions.co.in/winter-summer-training-kolkata


Location - Electronics City Phase 1, Opp, Bengaluru, Karnataka 560100

#data structures and algorithms#internship#best dsa course in c++#data structures and algorithms interview questions#data structures course#Full Stack Java Developer Course


Zipf Maneuvers: On Non-Reprintable Materials

Andrew C. Wenaus & Germán Sierra

with an introduction by Steven Shaviro

Erratum Press Academic Division, 2025

Zipf Maneuvers: On Non-Reprintable Materials is a work of conceptual protest that challenges the commodification of knowledge in academic publishing, using mathematical and algorithmic techniques to resist institutional control over intellectual labour. Corporate publishers charged the authors an exorbitant fee to reprint their own work. In response, neuroscientist Germán Sierra and literary theorist Andrew C. Wenaus devise a radical strategy to bypass the neoliberal logic of access and ownership. As cultural critic and philosopher Steven Shaviro remarks in the introduction to this volume, the project orbits around Zipf’s Law. This statistical principle observes that a word’s frequency in a text is roughly inversely proportional to its frequency rank.

Sierra and Wenaus deploy a Python algorithm to reorganize their original articles according to Zipfian distributions, alphabetizing and numerically indexing every word. Each word is assigned a number corresponding to its original position in the text. The result is a fragmented, non-linear, catalogue-like data set that resists conventional reading. It is a protest against the corporate financialization of knowledge and a critique of intellectual property laws that restrict access. By transforming their essays into algorithmically rearranged data, Zipf Maneuvers enacts a singular form of resistance, exposing the absurdity of a system that hinders the free circulation of ideas.
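The authors' actual script isn't given here. The following is only a hypothetical Python sketch of the two operations the description names. First, it ranks words by frequency; Zipf's Law predicts that, in a large corpus, frequency falls roughly as 1/rank. Second, it builds an alphabetized catalogue that maps each word back to its original positions. The function name `zipf_index`, the tokenizing regex, and the sample sentence are assumptions for illustration.

```python
import re
from collections import Counter, defaultdict


def zipf_index(text: str) -> tuple[list[tuple[str, int]], dict[str, list[int]]]:
    """Hypothetical sketch: rank words by frequency and build an alphabetized
    catalogue mapping each word to its original (1-based) positions."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(words)
    # Rank 1 = most frequent word; ties are broken alphabetically
    ranking = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    positions: defaultdict[str, list[int]] = defaultdict(list)
    for pos, word in enumerate(words, start=1):
        positions[word].append(pos)
    # Alphabetize the vocabulary; each entry keeps the word's original positions
    catalogue = {word: positions[word] for word in sorted(positions)}
    return ranking, catalogue


sample = "The map is not the territory, and the territory is not the map."
ranking, catalogue = zipf_index(sample)
print(ranking[0])        # ('the', 4)
print(catalogue["the"])  # [1, 5, 8, 12]
print(list(catalogue))   # ['and', 'is', 'map', 'not', 'territory', 'the']
```

Reading the catalogue instead of the sentence gives the kind of disjointed, index-like text the book describes. The original order is still recoverable from the position numbers.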
