#Computer Science solution manuals


Masterlist of Free PDF Versions of Textbooks Used in Undergrad SNHU Courses in 2025 C-1 (Jan - Mar)

Literally NONE of the Accounting books are available on libgen. They all have ISBNs that start with the same numbers, so I think they're made for the school or something. The single Advertising course also didn't have a PDF available.

This list could also be helpful if you just want to learn stuff

NOTE: I only included textbooks that have access codes if it was stated that you won't need the access code ANYWAY

ATH (anthropology)

Only one course has an available PDF:

ATH-205

In The Beginning: An Introduction to Archaeology

BIO (Biology)

BIO-205

Publication Manual of the American Psychological Association

Essentials of Human Anatomy & Physiology 13th Edition

NOTE: These are not the only textbooks you need for this class, I couldn't get the other one

CHE (IDK what this is)

CHE-329

The Aging Networks: A Guide to Policy, Programs, and Services

Publication Manual Of The American Psychological Association

CHE-460

Health Communication: Strategies and Skills for a New Era

Publication Manual Of The American Psychological Association

CJ (Criminal Justice)

CJ-303

The Wisdom of Psychopaths: What Saints, Spies, and Serial Killers Can Teach Us About Success

Without Conscience: The Disturbing World of the Psychopaths Among Us

CJ-308

Cybercrime Investigations: a Comprehensive Resource for Everyone

CJ-315

Victimology and Victim Assistance: Advocacy, Intervention, and Restoration

CJ-331

Community and Problem-Oriented Policing: Effectively Addressing Crime and Disorder

CJ-350

Deception: Counterdeception and Counterintelligence

NOTE: This is not the only textbook you need for this class, I couldn't find the other one

CJ-405

Private Security Today

CJ-408

Strategic Security Management: A Risk Assessment Guide for Decision Makers, Second Edition

COM (Communications)

COM-230

Graphic Design Solutions

COM-325

McGraw-Hill's Proofreading Handbook

NOTE: This is not the only book you need for this course, I couldn't find the other one

COM-329

Media Now: Understanding Media, Culture, and Technology

COM-330

The Only Business Writing Book You’ll Ever Need

NOTE: This is not the only book you need for this course, I couldn't find the other one

CS (Computer Science)

CS-319

Interaction Design

CYB (Cyber Security)

CYB-200

Fundamentals of Information Systems Security

CYB-240

Internet and Web Application Security

NOTE: This is not the only resource you need for this course. The other one is a program thingy

CYB-260

Legal and Privacy Issues in Information Security

CYB-310

Hands-On Ethical Hacking and Network Defense (MindTap Course List)

NOTE: This is not the only resource you need for this course. The other one is a program thingy

CYB-400

Auditing IT Infrastructures for Compliance

NOTE: This is not the only resource you need for this course. The other one is a program thingy

CYB-420

CISSP Official Study Guide

DAT (IDK what this is, but I think it's computer stuff)

DAT-430

Dashboard book

ECO (Economics)

ECO-322

International Economics

ENG (English)

ENG-226 (I'm taking this class rn, highly recommend. The book is good for any writer)

The Bloomsbury Introduction to Creative Writing: Second Edition

ENG-328

Ordinary Genius: A Guide for the Poet Within

ENG-329 (I took this course last term. The book I couldn't find is really not necessary, and is in general a bad book. Very ableist. You will, however, need the book I did find, and I recommend it even for people not taking the class. Lots of good short stories.)

100 Years of The Best American Short Stories

ENG-341

You Can't Make This Stuff Up: The Complete Guide to Writing Creative Nonfiction--from Memoir to Literary Journalism and Everything in Between

ENG-347

Save The Cat! The Last Book on Screenwriting You'll Ever Need

NOTE: This is not the only book you need for this course, I couldn't find the other one

ENG-350

Linguistics for Everyone: An Introduction

ENG-351

Tell It Slant: Creating, Refining, and Publishing Creative Nonfiction

ENG-359

Crafting Novels & Short Stories: Everything You Need to Know to Write Great Fiction

ENV (Environmental Science)

ENV-101

Essential Environment: The Science Behind the Stories, 6th Edition

ENV-220

Fieldwork Ready: An Introductory Guide to Field Research for Agriculture, Environment, and Soil Scientists

NOTE: You will also need lab stuff

ENV-250

A Pocket Style Manual 9th Edition

ENV-319

The Environmental Case: Translating Values Into Policy

Salzman and Thompson's Environmental Law and Policy

FAS (Fine Arts)

FAS-235

Adobe Photoshop Lightroom Classic Classroom in a Book (2023 Release)

FAS-342

History of Modern Art

ALRIGHTY I'm tired, I will probably add more later though! Good luck!


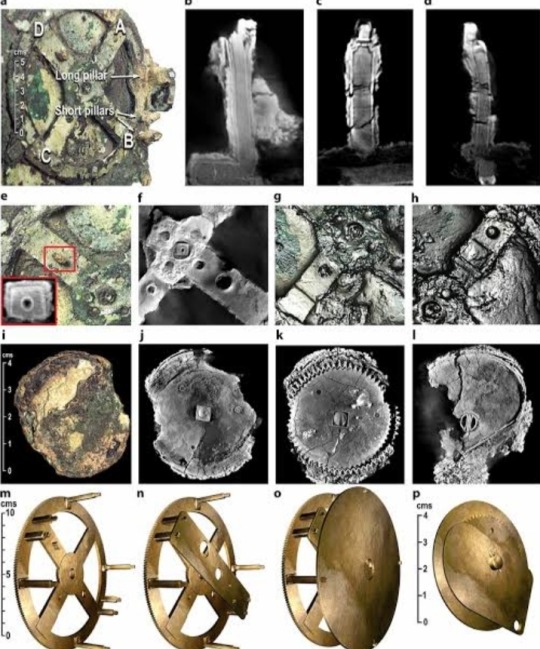

Antikythera Mechanism

Researchers claim breakthrough in study of 2,000-year-old Antikythera mechanism, an astronomical calculator found in the sea.

From the moment it was discovered more than a century ago, scholars have puzzled over the Antikythera mechanism, a remarkable and baffling astronomical calculator that survives from the ancient world.

The hand-powered, 2,000-year-old device displayed the motion of the universe, predicting the movement of the five known planets, the phases of the moon, and the solar and lunar eclipses.

But quite how it achieved such impressive feats has proved fiendishly hard to untangle.

Now researchers at UCL believe they have solved the mystery – at least in part – and have set about reconstructing the device, gearwheels and all, to test whether their proposal works.

If they can build a replica with modern machinery, they aim to do the same with techniques from antiquity.

“We believe that our reconstruction fits all the evidence that scientists have gleaned from the extant remains to date,” said Adam Wojcik, a materials scientist at UCL.

While other scholars have made reconstructions in the past, the fact that two-thirds of the mechanism are missing has made it hard to know for sure how it worked.

The mechanism, often described as the world’s first analogue computer, was found by sponge divers in 1901 amid a haul of treasures salvaged from a merchant ship that met with disaster off the Greek island of Antikythera.

The ship is believed to have foundered in a storm in the 1st century BC, as it passed between Crete and the Peloponnese en route to Rome from Asia Minor.

The battered fragments of corroded brass were barely noticed at first, but decades of scholarly work have revealed the object to be a masterpiece of mechanical engineering.

Originally encased in a wooden box one foot tall, the mechanism was covered in inscriptions – a built-in user’s manual – and contained more than 30 bronze gearwheels connected to dials and pointers.

Turn the handle and the heavens, as known to the Greeks, swung into motion.

Michael Wright, a former curator of mechanical engineering at the Science Museum in London, pieced together much of how the mechanism operated and built a working replica, but researchers have never had a complete understanding of how the device functioned.

Their efforts have not been helped by the remnants surviving in 82 separate fragments, making the task of rebuilding it equivalent to solving a battered 3D puzzle that has most of its pieces missing.

Writing in the journal Scientific Reports, the UCL team describe how they drew on the work of Wright and others.

They used inscriptions on the mechanism and a mathematical method described by the ancient Greek philosopher Parmenides to work out new gear arrangements that would move the planets and other bodies in the correct way.

The solution allows nearly all of the mechanism’s gearwheels to fit within a space only 25mm deep.

According to the team, the mechanism may have displayed the movement of the sun, moon and the planets Mercury, Venus, Mars, Jupiter, and Saturn on concentric rings.

Because the device assumed that the sun and planets revolved around Earth, their paths were far more difficult to reproduce with gearwheels than if the sun was placed at the centre.

Another change the scientists propose is a double-ended pointer they call a “Dragon Hand” that indicates when eclipses are due to happen.

The researchers believe the work brings them closer to a true understanding of how the Antikythera device displayed the heavens, but it is not clear whether the design is correct or could have been built with ancient manufacturing techniques.

The concentric rings that make up the display would need to rotate on a set of nested, hollow axles, but without a lathe to shape the metal, it is unclear how the ancient Greeks would have manufactured such components.

#Antikythera mechanism#astronomical calculator#ancient world#ancient civilizations#Antikythera#world’s first analogue computer#corroded brass#mechanical engineering#inscriptions#gearwheels#Parmenides#ancient greece#ancient history


Why Should You Do Web Scraping with Python

Web scraping is a valuable skill for Python developers, offering numerous benefits and applications. Here’s why you should consider learning and using web scraping with Python:

1. Automate Data Collection

Web scraping allows you to automate the tedious task of manually collecting data from websites. This can save significant time and effort when dealing with large amounts of data.

2. Gain Access to Real-World Data

Most real-world data exists on websites, often in formats that are not readily available for analysis (e.g., displayed in tables or charts). Web scraping helps extract this data for use in projects like:

Data analysis

Machine learning models

Business intelligence

3. Competitive Edge in Business

Businesses often need to gather insights about:

Competitor pricing

Market trends

Customer reviews

Web scraping can help automate these tasks, providing timely and actionable insights.

4. Versatility and Scalability

Python’s ecosystem offers a range of tools and libraries that make web scraping highly adaptable:

BeautifulSoup: For simple HTML parsing.

Scrapy: For building scalable scraping solutions.

Selenium: For handling dynamic, JavaScript-rendered content.

This versatility allows you to scrape a wide variety of websites, from static pages to complex web applications.
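To make the "simple HTML parsing" idea concrete, here is a minimal sketch that pulls table cells out of a page. It is an illustration only: it uses Python's standard-library `html.parser` as a stand-in for the libraries named above so that it runs without installing anything, and the `TableCellParser` class, the `extract_rows` helper, and the sample HTML are all made up for this example.

```python
from html.parser import HTMLParser


class TableCellParser(HTMLParser):
    """Collect the text of every <td> cell, grouped by table row."""

    def __init__(self):
        super().__init__()
        self.rows = []          # finished rows of cell text
        self._row = None        # row currently being read, or None
        self._in_cell = False   # True while inside a <td>

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag == "td" and self._row is not None:
            self._in_cell = True
            self._row.append("")

    def handle_endtag(self, tag):
        if tag == "td":
            self._in_cell = False
        elif tag == "tr" and self._row is not None:
            # Header rows contain only <th> cells, so they stay empty and are skipped.
            if self._row:
                self.rows.append(self._row)
            self._row = None

    def handle_data(self, data):
        if self._in_cell:
            self._row[-1] += data.strip()


def extract_rows(html):
    """Return the <td> contents of each row in the given HTML string."""
    parser = TableCellParser()
    parser.feed(html)
    parser.close()
    return parser.rows


# Hypothetical page fragment; a real scraper would download this instead.
SAMPLE_HTML = """
<table>
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Widget</td><td>9.99</td></tr>
  <tr><td>Gadget</td><td>24.50</td></tr>
</table>
"""

rows = extract_rows(SAMPLE_HTML)
for name, price in rows:
    print(name, float(price))
```

On real pages a dedicated parsing library is usually more forgiving of messy markup, but the basic pattern stays the same: walk the tags and keep only the pieces you need.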

5. Academic and Research Applications

Researchers can use web scraping to gather datasets from online sources, such as:

Social media platforms

News websites

Scientific publications

This facilitates research in areas like sentiment analysis, trend tracking, and bibliometric studies.

6. Enhance Your Python Skills

Learning web scraping deepens your understanding of Python and related concepts:

HTML and web structures

Data cleaning and processing

API integration

Error handling and debugging

These skills are transferable to other domains, such as data engineering and backend development.

7. Open Opportunities in Data Science

Many data science and machine learning projects require datasets that are not readily available in public repositories. Web scraping empowers you to create custom datasets tailored to specific problems.

8. Real-World Problem Solving

Web scraping enables you to solve real-world problems, such as:

Aggregating product prices for an e-commerce platform.

Monitoring stock market data in real-time.

Collecting job postings to analyze industry demand.

9. Low Barrier to Entry

Python's libraries make web scraping relatively easy to learn. Even beginners can quickly build effective scrapers, making it an excellent entry point into programming or data science.

10. Cost-Effective Data Gathering

Instead of purchasing expensive data services, web scraping allows you to gather the exact data you need at little to no cost, apart from the time and computational resources.

11. Creative Use Cases

Web scraping supports creative projects like:

Building a news aggregator.

Monitoring trends on social media.

Creating a chatbot with up-to-date information.

Caution

While web scraping offers many benefits, it’s essential to use it ethically and responsibly:

Respect websites' terms of service and robots.txt.

Avoid overloading servers with excessive requests.

Ensure compliance with data privacy laws like GDPR or CCPA.
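As a rough sketch of the first two points, the standard library's `urllib.robotparser` can check whether a URL is allowed before you request it and can report a site's requested crawl delay. The robots.txt text, the `demo-bot` user agent, and the example.com URLs below are invented for illustration; a real scraper would load the site's actual robots.txt.

```python
import urllib.robotparser

# Hypothetical robots.txt content, inlined so the example runs offline.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""


def build_policy(robots_text):
    """Parse robots.txt text into a RobotFileParser."""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


def allowed_urls(parser, user_agent, urls):
    """Keep only the URLs this user agent is permitted to fetch."""
    return [url for url in urls if parser.can_fetch(user_agent, url)]


policy = build_policy(ROBOTS_TXT)
candidates = [
    "https://example.com/products",
    "https://example.com/private/accounts",
]
to_fetch = allowed_urls(policy, "demo-bot", candidates)

# Fall back to a one-second pause if the site does not specify a delay.
delay_seconds = policy.crawl_delay("demo-bot") or 1

print(to_fetch)
print(delay_seconds)
```

Sleeping for `delay_seconds` between requests (for example with `time.sleep`) is one simple way to avoid overloading a server. None of this replaces reading the site's terms of service.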



What is Customer Analytics? – The Importance of Understanding It

Consumers have clear expectations when selecting products or services. Business leaders need to understand what influences customer decisions. By leveraging advanced analytics and engaging in data analytics consulting, they can pinpoint these factors and improve customer experiences to boost client retention. This article will explore the importance of customer analytics.

Understanding Customer Analytics

Customer analytics involves applying computer science, statistical modeling, and consumer psychology to uncover the logical and emotional drivers behind consumer behavior. Businesses and sales teams can work with a customer analytics company to refine customer journey maps, leading to better conversion rates and higher profit margins. Furthermore, they can identify disliked product features, allowing them to improve or remove underperforming products and services.

Advanced statistical methods and machine learning (ML) models provide deeper insights into customer behavior, reducing the need for extensive documentation and trend analysis.

Why Customer Analytics is Essential

Reason 1 — Boosting Sales

Insights into consumer behavior help marketing, sales, and CRM teams attract more customers through effective advertisements, customer journey maps, and post-purchase support. Additionally, these insights, provided through data analytics consulting, can refine pricing and product innovation strategies, leading to improved sales outcomes.

Reason 2 — Automation

Progress in advanced analytics services has enhanced the use of ML models for evaluating customer sentiment, making pattern discovery more efficient. Consequently, manual effort is now more manageable, as ML and AI automate the extraction of behavioral insights.

Reason 3 — Enhancing Long-Term Customer Relationships

Analytical models help identify the best experiences to strengthen customers’ positive associations with your brand. This results in better reception, positive word-of-mouth, and increased likelihood of customers reaching out to your support team rather than switching to competitors.

Reason 4 — Accurate Sales and Revenue Forecasting

Analytics reveal seasonal variations in consumer demand, impacting product lines or service packages. Data-driven financial projections, supported by data analytics consulting, become more reliable, helping corporations adjust production capacity to optimize their average revenue per user (ARPU).

Reason 5 — Reducing Costs

Cost per acquisition (CPA) measures the expense of acquiring a customer. A decrease in CPA signifies that conversions are becoming more cost-effective. Customer analytics solutions can enhance brand awareness and improve CPA. Benchmarking against historical CPA trends and experimenting with different acquisition strategies can help address inefficiencies and optimize marketing spend.
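As a small worked example, CPA is simply acquisition spend divided by the number of customers acquired, so comparing channels takes only a few lines. The channel names and figures below are invented for illustration, and `cost_per_acquisition` is a hypothetical helper rather than part of any analytics product.

```python
def cost_per_acquisition(spend, new_customers):
    """CPA = total acquisition spend / number of customers acquired."""
    if new_customers <= 0:
        raise ValueError("new_customers must be positive")
    return spend / new_customers


# Made-up campaign data: channel -> (spend, customers acquired).
campaigns = {
    "email": (1200.0, 60),
    "search_ads": (4500.0, 150),
    "social": (3000.0, 75),
}

cpa_by_channel = {
    channel: cost_per_acquisition(spend, customers)
    for channel, (spend, customers) in campaigns.items()
}

# The channel with the lowest CPA converts most cheaply.
best_channel = min(cpa_by_channel, key=cpa_by_channel.get)

for channel, cpa in sorted(cpa_by_channel.items(), key=lambda item: item[1]):
    print(f"{channel}: {cpa:.2f} per customer")
```

Running the same calculation on past periods gives the historical benchmark mentioned above.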

Reason 6 — Product Improvements

Customer analytics provides insights into features that can enhance engagement and satisfaction. Understanding why customers switch due to missing features or performance issues allows production and design teams to identify opportunities for innovation.

Reason 7 — Optimizing the Customer Journey

A customer journey map outlines all interaction points across sales funnels, complaint resolutions, and loyalty programs. Customer analytics helps prioritize these touchpoints based on their impact on engaging, retaining, and satisfying customers. Address risks such as payment issues or helpdesk errors by refining processes or implementing better CRM systems.

Conclusion

Understanding the importance of customer analytics is crucial for modern businesses. It offers significant benefits, including enhancing customer experience (CX), driving sales growth, and preventing revenue loss. Implementing effective strategies for CPA reduction and product performance is essential, along with exploring automation-compatible solutions to boost productivity. Customer insights drive optimization and brand loyalty, making collaboration with experienced analysts and engaging in data analytics consulting a valuable asset in overcoming inefficiencies in marketing, sales, and CRM.


Scientists use generative AI to answer complex questions in physics

New Post has been published on https://thedigitalinsider.com/scientists-use-generative-ai-to-answer-complex-questions-in-physics/


When water freezes, it transitions from a liquid phase to a solid phase, resulting in a drastic change in properties like density and volume. Phase transitions in water are so common most of us probably don’t even think about them, but phase transitions in novel materials or complex physical systems are an important area of study.

To fully understand these systems, scientists must be able to recognize phases and detect the transitions between them. But how to quantify phase changes in an unknown system is often unclear, especially when data are scarce.

Researchers from MIT and the University of Basel in Switzerland applied generative artificial intelligence models to this problem, developing a new machine-learning framework that can automatically map out phase diagrams for novel physical systems.

Their physics-informed machine-learning approach is more efficient than laborious, manual techniques which rely on theoretical expertise. Importantly, because their approach leverages generative models, it does not require huge, labeled training datasets used in other machine-learning techniques.

Such a framework could help scientists investigate the thermodynamic properties of novel materials or detect entanglement in quantum systems, for instance. Ultimately, this technique could make it possible for scientists to discover unknown phases of matter autonomously.

“If you have a new system with fully unknown properties, how would you choose which observable quantity to study? The hope, at least with data-driven tools, is that you could scan large new systems in an automated way, and it will point you to important changes in the system. This might be a tool in the pipeline of automated scientific discovery of new, exotic properties of phases,” says Frank Schäfer, a postdoc in the Julia Lab in the Computer Science and Artificial Intelligence Laboratory (CSAIL) and co-author of a paper on this approach.

Joining Schäfer on the paper are first author Julian Arnold, a graduate student at the University of Basel; Alan Edelman, applied mathematics professor in the Department of Mathematics and leader of the Julia Lab; and senior author Christoph Bruder, professor in the Department of Physics at the University of Basel. The research is published today in Physical Review Letters.

Detecting phase transitions using AI

While water transitioning to ice might be among the most obvious examples of a phase change, more exotic phase changes, like when a material transitions from being a normal conductor to a superconductor, are of keen interest to scientists.

These transitions can be detected by identifying an “order parameter,” a quantity that is important and expected to change. For instance, water freezes and transitions to a solid phase (ice) when its temperature drops below 0 degrees Celsius. In this case, an appropriate order parameter could be defined in terms of the proportion of water molecules that are part of the crystalline lattice versus those that remain in a disordered state.

In the past, researchers have relied on physics expertise to build phase diagrams manually, drawing on theoretical understanding to know which order parameters are important. Not only is this tedious for complex systems, and perhaps impossible for unknown systems with new behaviors, but it also introduces human bias into the solution.

More recently, researchers have begun using machine learning to build discriminative classifiers that can solve this task by learning to classify a measurement statistic as coming from a particular phase of the physical system, the same way such models classify an image as a cat or dog.

The MIT researchers demonstrated how generative models can be used to solve this classification task much more efficiently, and in a physics-informed manner.

The Julia Programming Language, a popular language for scientific computing that is also used in MIT’s introductory linear algebra classes, offers many tools that make it invaluable for constructing such generative models, Schäfer adds.

Generative models, like those that underlie ChatGPT and Dall-E, typically work by estimating the probability distribution of some data, which they use to generate new data points that fit the distribution (such as new cat images that are similar to existing cat images).

However, when simulations of a physical system using tried-and-true scientific techniques are available, researchers get a model of its probability distribution for free. This distribution describes the measurement statistics of the physical system.

A more knowledgeable model

The MIT team’s insight is that this probability distribution also defines a generative model upon which a classifier can be constructed. They plug the generative model into standard statistical formulas to directly construct a classifier instead of learning it from samples, as was done with discriminative approaches.

“This is a really nice way of incorporating something you know about your physical system deep inside your machine-learning scheme. It goes far beyond just performing feature engineering on your data samples or simple inductive biases,” Schäfer says.

This generative classifier can determine what phase the system is in given some parameter, like temperature or pressure. And because the researchers directly approximate the probability distributions underlying measurements from the physical system, the classifier has system knowledge.
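The "standard statistical formulas" are not spelled out in the article, but one plausible reading is Bayes' rule: if you already know how likely a measurement is in each phase, you can compute the probability of each phase given the measurement without training anything. The sketch below is a toy illustration of that idea only, not the researchers' method. The two Gaussian distributions, their parameters, and the function names are all assumptions made for the example.

```python
import math


def gaussian_pdf(x, mean, std):
    """Probability density of a normal distribution at x."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2 * math.pi))


# Assumed, made-up measurement distributions p(x | phase): (mean, std).
# In the setting described above, these would come from simulating the system.
PHASES = {
    "phase I": (0.0, 1.0),
    "phase II": (3.0, 1.0),
}


def posterior(x, prior=None):
    """Bayes' rule: P(phase | x) is proportional to p(x | phase) * P(phase)."""
    if prior is None:
        prior = {name: 1.0 / len(PHASES) for name in PHASES}
    joint = {
        name: gaussian_pdf(x, mean, std) * prior[name]
        for name, (mean, std) in PHASES.items()
    }
    total = sum(joint.values())
    return {name: value / total for name, value in joint.items()}


def classify(x):
    """Return the phase with the highest posterior probability."""
    probabilities = posterior(x)
    return max(probabilities, key=probabilities.get)


for measurement in (0.2, 1.5, 2.9):
    print(measurement, classify(measurement), posterior(measurement))
```

The classifier here is built directly from the distributions, with no training step. That is the contrast with discriminative approaches that the article draws.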

This enables their method to perform better than other machine-learning techniques. And because it can work automatically without the need for extensive training, their approach significantly enhances the computational efficiency of identifying phase transitions.

At the end of the day, similar to how one might ask ChatGPT to solve a math problem, the researchers can ask the generative classifier questions like “does this sample belong to phase I or phase II?” or “was this sample generated at high temperature or low temperature?”

Scientists could also use this approach to solve different binary classification tasks in physical systems, possibly to detect entanglement in quantum systems (Is the state entangled or not?) or determine whether theory A or B is best suited to solve a particular problem. They could also use this approach to better understand and improve large language models like ChatGPT by identifying how certain parameters should be tuned so the chatbot gives the best outputs.

In the future, the researchers also want to study theoretical guarantees regarding how many measurements they would need to effectively detect phase transitions and estimate the amount of computation that would require.

This work was funded, in part, by the Swiss National Science Foundation, the MIT-Switzerland Lockheed Martin Seed Fund, and MIT International Science and Technology Initiatives.

#ai#approach#artificial#Artificial Intelligence#Bias#binary#change#chatbot#chatGPT#classes#computation#computer#Computer modeling#Computer Science#Computer Science and Artificial Intelligence Laboratory (CSAIL)#Computer science and technology#computing#crystalline#dall-e#data#data-driven#datasets#dog#efficiency#Electrical Engineering&Computer Science (eecs)#engineering#Foundation#framework#Future#generative


This honestly got me thinking about the greater nature of optimization as a computer scientist*. Because there are really a lot of nuances that I think people don't fully comprehend. Like yes, many games are incredibly bloated and there are many easy fixes available, but also optimization is literally a science and there are entire higher academic courses purely dedicated to teaching it.

Generally speaking, there's often an inverse relationship between small file sizes and quick software. The more math you have the computer do up front, the less it has to do later. On the flip side, the more you precompute, the more "solutions" you have to store to be used at a later date. It's the difference between having a chart of times tables on hand vs having to calculate it all out manually. The chart is taking up physical space on paper. It's not free.
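Here's the times-table idea as a tiny sketch, with made-up function names. One version does the math every time it's asked, the other builds the whole chart once and just looks answers up. Multiplication is a stand-in for something actually expensive (a real CPU multiplies faster than it can look anything up), so treat this as the shape of the trade off, not a benchmark.

```python
import sys


def multiply_on_demand(a, b):
    """Do the math every time: nothing stored, work repeated on each call."""
    return a * b


def build_times_table(n):
    """Do all the math up front and keep every answer around."""
    return {(a, b): a * b for a in range(1, n + 1) for b in range(1, n + 1)}


# The "chart": computed once, then it sits in memory.
TABLE = build_times_table(12)


def multiply_from_table(a, b):
    """Just read the stored answer."""
    return TABLE[(a, b)]


table_entries = len(TABLE)
table_bytes = sys.getsizeof(TABLE)  # size of the dict itself, not its contents

print(multiply_on_demand(7, 8), multiply_from_table(7, 8))
print(table_entries, "stored answers taking at least", table_bytes, "bytes")
```

Both give the same answer. The only difference is whether you pay in time on every call or in storage for the chart.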

This is generally why pre-rendered cutscenes look different from real time graphics for many games. Not just decades ago like the below example, but even through today. To oversimplify, the left screenshot has the individual pixel values prepared ahead of time (hence pre-rendered) and just pulled out of storage when it needs to be used. The right screenshot however is made from each model and background, stored in isolation and built from scratch in real time according to the instructions given. Left is faster computationally, but has bigger file sizes and is rigid in its output. Right is smaller and dynamic, but slower for the same output because it has to do the math all over every time it plays.

One of the most extreme examples of this trade off is .kkrieger, an FPS from 2004 that takes up 96KB of storage. The screenshot (Below Left) I'm showing you right now is nearly ten times that size at 911KB. Many NES games take up 40KB, just under half of .kkrieger's size. The catch? All of .kkrieger's graphics were built from the ground up every single time it ran, eventually blowing up to take 300 MB of memory, 312,500% of its size on disk. Consoles of that era had anywhere between 8-20% of that space available for RAM (Below Right).
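If you want to check the numbers in that paragraph yourself, the arithmetic is short. This assumes decimal units (1 MB = 1000 KB), which is the convention under which 312,500% falls out. The variable names are just for this example.

```python
KB = 1000          # assuming decimal units
MB = 1000 * KB

disk_size = 96 * KB        # .kkrieger on disk
memory_use = 300 * MB      # .kkrieger once everything is generated
screenshot = 911 * KB      # the screenshot mentioned above
nes_game = 40 * KB         # a typical NES game, per the post

growth_factor = memory_use / disk_size      # how many times bigger in memory
growth_percent = growth_factor * 100        # same thing as a percentage
screenshot_factor = screenshot / disk_size  # screenshot vs the whole game
nes_fraction = nes_game / disk_size         # NES game vs .kkrieger

# RAM range implied by "8-20% of that space"
console_ram_low = 0.08 * memory_use / MB
console_ram_high = 0.20 * memory_use / MB

print(f"memory is {growth_factor:.0f}x the disk size ({growth_percent:,.0f}%)")
print(f"screenshot is {screenshot_factor:.1f}x the game")
print(f"NES game is {nes_fraction:.0%} of the game")
print(f"console RAM: {console_ram_low:.0f}-{console_ram_high:.0f} MB")
```

So the game in memory is 3,125 times its size on disk, and the 8-20% range works out to 24-60 MB of console RAM.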

As I've said, that's an extreme example. It's not an all or nothing deal. Sometimes prerendered is better and sometimes real time is preferable. You can even compress the prerendered files and uncompress them in real time, or prerender an operation to be used to augment complex real time operations.

This 8x8 texture (above) is a prerendered calculation done by CodeParade that helps convert 4-dimensional models into 3D slices for his game 4D Golf, not unlike the times-tables example I brought up earlier (the link goes to his YT channel where he talks about the development of said game).

The aforementioned trade off is still there. It takes time to uncompress the prerendered parts and the prerendered assistance still takes up space. Nothing is free.

Especially dev time. What I've been talking about exclusively is the code itself: the trade offs involved with the experience of the game. However, to get to that point you need some form of manpower that can allow such trade offs in the first place.

#old post sitting in my drafts (there's a lot of these)#idk why i didn't initially post this one it's pretty apt for my argument all things considered


Lab Accuracy & Reliability: Why State-of-the-Art Equipment Matters in Genetic Testing

In the world of genetic diagnostics, the margin for error is virtually zero. A single inaccurate result can lead to misdiagnosis, unnecessary anxiety, or missed opportunities for early intervention. That’s why the accuracy and reliability of a genetic testing laboratory hinge not only on expertise, but also on its technological backbone — the equipment.

At Greenarray Genomics Research and Solutions Pvt. Ltd., we invest in state-of-the-art instrumentation to ensure that every test result is precise, reproducible, and clinically actionable. Here's why modern lab equipment plays a pivotal role in upholding the highest standards in genetic testing.

🔬 Precision Matters: The Nature of Genetic Data

Genetic testing involves analyzing microscopic changes in DNA, chromosomes, or gene expression. Detecting these variations requires:

High-resolution imaging

Sensitive detection systems

Advanced data processing

Even the smallest technical inaccuracy can lead to false negatives (missing a mutation) or false positives (identifying something that isn’t truly there). That’s why technical precision is non-negotiable in this field.

🧪 How Advanced Equipment Enhances Lab Reliability

1. Next Generation Sequencing (NGS) Platforms

Modern NGS machines allow massively parallel sequencing with unmatched speed and depth.

High-end sequencers can detect even low-frequency mutations or mosaicism that traditional methods may miss.

Automated data output ensures consistency, minimizing human error.

2. Automated DNA Extraction Systems

Ensures uniformity across samples

Reduces contamination risk

Speeds up processing time without compromising quality

3. Digital PCR and qPCR Machines

Ideal for targeted mutation analysis or copy number variation (CNV) detection

Provide highly sensitive quantification of DNA, even at low concentrations

4. Cytogenetic Imaging & Karyotyping Tools

Advanced microscopes and imaging software support accurate chromosomal analysis, especially for prenatal, cancer, and infertility diagnostics.

5. Bioinformatics Infrastructure

High-throughput sequencing is only useful when paired with robust computing systems to interpret the data.

AI-assisted variant calling tools enhance accuracy, speed, and scalability

🏥 Why It Matters to Patients and Clinicians

✅ Reliable Diagnoses

Accurate equipment ensures that clinicians receive trustworthy data, guiding effective medical decisions.

⏱️ Faster Turnaround Times

Automation and high-throughput systems reduce manual labor, enabling faster delivery without compromising quality.

💰 Cost Efficiency

Accurate first-time testing avoids repeat tests, unnecessary treatments, or further diagnostic delays — saving time and resources for both labs and patients.

🛡️ Regulatory Compliance and Accreditation

Maintaining cutting-edge equipment helps meet international quality standards (like NABL, CAP, or ISO), which demand stringent validation and calibration protocols.

🧭 Greenarray Genomics: Investing in Precision

Our lab is equipped with:

High-end NGS platforms for comprehensive genome, exome, and panel testing

Advanced cytogenetics systems for prenatal and cancer diagnosis

Fully automated sample processing units

Secure, AI-enabled bioinformatics pipelines

Regularly calibrated, quality-checked instruments

We also maintain rigorous internal quality controls and proficiency testing to guarantee dependable results.

🔍 Looking Ahead: The Role of Innovation in Diagnostics

As genetic science evolves, so must the tools we use. Newer platforms with enhanced sensitivity, integration with cloud systems, and AI-based decision support are becoming the new standard — and Greenarray is prepared to lead the way.

✅ Conclusion: Trust Begins with Technology

In genetic testing, accuracy isn’t optional — it’s foundational. At Greenarray Genomics, we believe that combining scientific knowledge with cutting-edge equipment is the best way to serve patients, support clinicians, and uphold trust in every report we generate.


DevOps Training Institute in Indore – Your Gateway to Continuous Delivery & Automation

Accelerate Your IT Career with DevOps

In today’s fast-paced IT ecosystem, businesses demand faster deployments, automation, and collaborative workflows. DevOps is the solution—and becoming proficient in this powerful methodology can drastically elevate your career. Enroll at Infograins TCS, the leading DevOps Training Institute in Indore, to gain practical skills in integration, deployment, containerization, and continuous monitoring with real-time tools and cloud technologies.

What You’ll Learn in Our DevOps Course

Our specialized DevOps course in Indore blends development and operations practices to equip students with practical expertise in CI/CD pipelines, Jenkins, Docker, Kubernetes, Ansible, Git, AWS, and monitoring tools like Nagios and Prometheus. The course is crafted by industry experts to ensure learners gain a hands-on understanding of real-world DevOps applications in cloud-based environments.

Key Benefits – Why Our DevOps Training Stands Out

At Infograins TCS, our DevOps training in Indore offers learners several advantages:

In-depth coverage of popular DevOps tools and practices.

Hands-on projects on automation and cloud deployment.

Industry-aligned curriculum updated with the latest trends.

Internship and job assistance for eligible students.

This ensures you not only gain certification but also walk away with project experience that matters in the real world.

Why Choose Us – A Trusted DevOps Training Institute in Indore

Infograins TCS has earned its reputation as a reliable DevOps Training Institute in Indore through consistent quality and commitment to excellence. Here’s what sets us apart:

Professional instructors with real-world DevOps experience.

100% practical learning through case studies and real deployments.

Personalized mentoring and career guidance.

Structured learning paths tailored for both beginners and professionals.

Our focus is on delivering value that goes beyond traditional classroom learning.

Certification Programs at Infograins TCS

We provide an industry-recognized certification that validates your knowledge and practical skills in DevOps. This credential is a powerful tool for standing out in job applications and interviews. After completing the DevOps course in Indore, students receive a certificate that reflects their readiness for technical roles in the IT industry.

After Certification – What Comes Next?

Once certified, students can pursue DevOps-related roles such as DevOps Engineer, Release Manager, Automation Engineer, and Site Reliability Engineer. We also help students land internships and jobs through our strong network of hiring partners, real-time project exposure, and personalized support. Our DevOps training in Indore ensures you’re truly job-ready.

Explore More of Our Courses – Build a Broader Skill Set

Alongside our flagship DevOps course, Infograins TCS also offers:

Cloud Computing with AWS

Python Programming & Automation

Software Testing – Manual & Automation

Full Stack Web Development

Data Science and Machine Learning

These programs complement DevOps skills and open additional career opportunities in tech.

Why We Are the Right Learning Partner

At Infograins TCS, we don’t just train—we mentor, guide, and prepare you for a successful IT journey. Our career-centric approach, live-project integration, and collaborative learning environment make us the ideal destination for DevOps training in Indore. When you partner with us, you’re not just learning tools—you’re building a future.

FAQs – Frequently Asked Questions

1. Who can enroll in the DevOps course? Anyone with a basic understanding of Linux, networking, or software development can join. This course is ideal for freshers, IT professionals, and system administrators.

2. Will I get certified after completing the course? Yes, upon successful completion of the training and evaluation, you’ll receive an industry-recognized certification from Infograins TCS.

3. Is this DevOps course available in both online and offline modes? Yes, we offer both classroom and online training options to suit your schedule and convenience.

4. Do you offer placement assistance? Absolutely! We provide career guidance, interview preparation, and job/internship opportunities through our dedicated placement cell.

5. What tools will I learn in this DevOps course? You will gain hands-on experience with tools such as Jenkins, Docker, Kubernetes, Ansible, Git, AWS, and monitoring tools like Nagios and Prometheus.

Join the Best DevOps Training Institute in Indore

If you're serious about launching or advancing your career in DevOps, there’s no better place than Infograins TCS – your trusted DevOps Training Institute in Indore. With our project-based learning, expert guidance, and certification support, you're well on your way to becoming a DevOps professional in high demand.


Mechatronic Design Engineer: Bridging Mechanical, Electrical, and Software Engineering

The role of a Mechatronic Design Engineer is at the cutting edge of modern engineering. Combining the principles of mechanical engineering, electronics, computer science, and control systems, mechatronic engineers design and develop smart systems and innovative machines that improve the functionality, efficiency, and intelligence of products and industrial processes. From robotics and automation systems to smart consumer devices and vehicles, mechatronic design engineers are the architects behind today’s and tomorrow’s intelligent technology.

What Is Mechatronic Engineering?

Mechatronics is a multidisciplinary field that integrates various engineering disciplines to design and create intelligent systems and products. A mechatronic system typically consists of mechanical components (such as gears and actuators), electronic systems (sensors, controllers), and software (embedded systems and algorithms).

In practical terms, a Mechatronic Design Engineer might work on:

Industrial robots for factory automation.

Autonomous vehicles.

Consumer electronics (like smart appliances).

Medical devices (robotic surgery tools, prosthetics).

Aerospace systems.

Agricultural automation equipment.

These professionals play a vital role in building machines that can sense, process, and respond to their environment through advanced control systems.

Core Responsibilities of a Mechatronic Design Engineer

Mechatronic design engineers wear multiple hats. Their responsibilities span the design, simulation, testing, and integration of various components into a unified system. Key responsibilities include:

Conceptual Design:

Collaborating with cross-functional teams to define product requirements.

Designing mechanical, electrical, and software systems.

Creating prototypes and evaluating design feasibility.

Mechanical Engineering:

Designing moving parts, enclosures, and structures using CAD tools.

Selecting materials and designing components for performance, durability, and manufacturability.

Electrical Engineering:

Designing circuit boards, selecting sensors, and integrating microcontrollers.

Managing power systems and signal processing components.

Embedded Systems and Software Development:

Writing control algorithms and firmware to operate machines.

Programming in languages like C, C++, or Python.

Testing and debugging embedded software.

System Integration and Testing:

Bringing together mechanical, electrical, and software components into a functional prototype.

Running simulations and real-world tests to validate performance.

Iterating design based on test data.

Project Management and Documentation:

Coordinating with suppliers, clients, and team members.

Preparing technical documentation and user manuals.

Ensuring compliance with safety and industry standards.

Skills Required for a Mechatronic Design Engineer

Being successful in mechatronic engineering requires a broad skill set across multiple disciplines:

Mechanical Design – Proficiency in CAD software like SolidWorks, AutoCAD, or CATIA.

Electronics – Understanding of circuits, PCB design, microcontrollers (e.g., Arduino, STM32), and sensors.

Programming – Skills in C/C++, Python, MATLAB/Simulink, and embedded software development.

Control Systems – Knowledge of PID controllers, motion control, automation, and feedback systems.

Problem Solving – Ability to approach complex engineering problems with innovative solutions.

Collaboration – Strong communication and teamwork skills are essential in multidisciplinary environments.
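To make the control-systems item above more concrete, here is a minimal sketch of a discrete PID controller driving a toy plant. The gains, the time step, and the first-order plant model (dx/dt = -x + u) are assumptions chosen purely for illustration; they do not come from any real machine or from the original post.

```python
from dataclasses import dataclass


@dataclass
class PID:
    """Textbook discrete PID controller (illustrative, untuned for real hardware)."""
    kp: float  # proportional gain (assumed value)
    ki: float  # integral gain (assumed value)
    kd: float  # derivative gain (assumed value)
    integral: float = 0.0
    prev_error: float = 0.0

    def update(self, setpoint: float, measurement: float, dt: float) -> float:
        error = setpoint - measurement
        self.integral += error * dt                    # accumulate past error
        derivative = (error - self.prev_error) / dt    # rate of change of error
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(steps: int = 3000, dt: float = 0.01, setpoint: float = 1.0) -> float:
    """Run the controller against a hypothetical plant dx/dt = -x + u (Euler steps)."""
    pid = PID(kp=2.0, ki=1.0, kd=0.05)
    x = 0.0
    for _ in range(steps):
        u = pid.update(setpoint, x, dt)
        x += (-x + u) * dt
    return x


if __name__ == "__main__":
    print(f"final output: {simulate():.4f}")
```

With these assumed gains the simulated output settles close to the setpoint. On real hardware the gains would need tuning, and practical controllers usually add safeguards such as integral anti-windup and derivative filtering.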

Industries Hiring Mechatronic Design Engineers

Mechatronic engineers are in demand across a wide array of industries, including:

Automotive: Designing autonomous and electric vehicle systems.

Robotics: Creating robotic arms, drones, and autonomous platforms.

Manufacturing: Developing automated assembly lines and CNC systems.

Medical Devices: Designing wearable health tech and robotic surgery tools.

Aerospace: Building UAVs and advanced flight control systems.

Consumer Electronics: Creating smart appliances and personal tech devices.

Agriculture: Developing automated tractors, irrigation systems, and crop-monitoring drones.

Mechatronics in the Age of Industry 4.0

With the rise of Industry 4.0, smart factories, and the Internet of Things (IoT), the demand for mechatronic design engineers is rapidly increasing. These professionals are at the forefront of integrating cyber-physical systems, enabling machines to communicate, adapt, and optimize operations in real-time.

Technologies such as AI, machine learning, digital twins, and cloud-based monitoring are further expanding the scope of mechatronic systems, making the role of mechatronic engineers more strategic and valuable in innovation-driven industries.

Career Path and Growth

Entry-level mechatronic engineers typically begin in design or testing roles, working under experienced engineers. With experience, they may move into project leadership, system architecture, or R&D roles. Others transition into product management or specialize in emerging technologies like AI in robotics.

Engineers can further enhance their careers by obtaining certifications in areas like:

PLC Programming

Robotics System Design

Embedded Systems Development

Project Management (PMP or Agile)

Advanced degrees (MS or PhD) in mechatronics, robotics, or automation can open opportunities in academic research or senior technical roles.

Future Trends in Mechatronic Design Engineering

Human-Robot Collaboration: Cobots (collaborative robots) are transforming how humans and robots work together on factory floors.

AI and Machine Learning: Enabling predictive maintenance, adaptive control, and smarter decision-making.

Wireless Communication: Integration with 5G and IoT platforms is making mechatronic systems more connected.

Miniaturization: Smaller, more powerful components are making devices more compact and energy-efficient.

Sustainability: Engineers are designing systems with energy efficiency, recyclability, and sustainability in mind.

Conclusion

A Mechatronic Design Engineer by Servotechinc plays a pivotal role in shaping the future of intelligent machines and systems. As industries become more automated and interconnected, the demand for multidisciplinary expertise continues to rise. Mechatronic engineering offers a dynamic and rewarding career path filled with opportunities for innovation, creativity, and impactful problem-solving. Whether you’re designing a robot that assembles products, a drone that surveys farmland, or a wearable medical device that saves lives—mechatronic design engineers are truly the bridge between imagination and reality in the world of modern engineering.


Why Document Translation Services Are Driving Global Business Growth in 2025

In today's rapidly evolving global economy, the ability to scale across borders has become a key competitive advantage—and at the heart of that growth lies the power of clear, consistent communication. Whether you're a tech company entering the Japanese market or a healthcare provider expanding into the Middle East, document translation services have become a strategic necessity.

This is no longer about translating words—it's about preserving intent, legal accuracy, brand identity, and regulatory compliance across diverse regions and cultures.

The Business Case for Document Translation

According to CSA Research, 76% of consumers prefer to buy products with information in their native language. This insight is reinforced by Harvard Business Review, which points out that even globally recognized brands risk losing consumer trust if their materials are poorly translated or not localized.

Here's why document translation is more essential than ever:

Compliance Across Borders: Legal documents, financial reports, and medical records must comply with local regulations. Translation errors can lead to lawsuits or government penalties.

Cultural Alignment: Marketing materials that resonate locally require translation strategies that account for tone, idioms, and cultural sensitivities.

Operational Efficiency: Employee handbooks, training manuals, and internal communications need precise translations to avoid internal miscommunication.

Industries with the Highest Demand for Document Translation

Some sectors rely heavily on precise documentation for day-to-day operations. These include:

Healthcare and Life Sciences: Patient records, clinical trial data, medical device manuals

Legal and Finance: Contracts, compliance documents, tax forms

E-Learning: Courseware, certifications, online training platforms

Manufacturing and Engineering: Technical specs, safety manuals, patents

Organizations in these industries can’t afford ambiguity, which is why many rely on certified translation agencies that adhere to ISO 17100 and ISO 9001 standards.

The Rise of Hybrid Translation Workflows

With the integration of AI tools like machine translation, document workflows have evolved. However, quality still depends on human expertise. Leading solutions involve:

CAT Tools (Computer-Assisted Translation): Tools like SDL Trados and memoQ enhance consistency through translation memory.

Machine Translation Post-Editing (MTPE): Combines AI-generated drafts with human editing for speed and accuracy.

Terminology Management: Ensures consistent use of industry-specific terms across all documents.

These solutions help companies reduce turnaround time and translation costs without sacrificing quality.
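To illustrate the translation-memory idea mentioned above, here is a minimal sketch of a fuzzy lookup: a new source segment is compared with previously translated segments, and the stored translation is reused when the similarity is high enough. The sample segments, the 0.75 threshold, and the function name `best_match` are assumptions for illustration only; this is not how any particular CAT tool implements matching.

```python
from difflib import SequenceMatcher

# Hypothetical translation memory: source segment -> stored translation (made-up samples).
MEMORY = {
    "Press the power button.": "Drücken Sie die Ein/Aus-Taste.",
    "Replace the filter every six months.": "Ersetzen Sie den Filter alle sechs Monate.",
}


def best_match(segment, memory, threshold=0.75):
    """Return (source, translation, score) for the closest stored segment, or None."""
    best_source, best_score = None, 0.0
    for source in memory:
        # ratio() is 1.0 for identical strings and falls toward 0.0 as they diverge.
        score = SequenceMatcher(None, segment.lower(), source.lower()).ratio()
        if score > best_score:
            best_source, best_score = source, score
    if best_source is None or best_score < threshold:
        return None
    return best_source, memory[best_source], best_score


if __name__ == "__main__":
    print(best_match("Press the power button twice.", MEMORY))
```

In a post-editing workflow, an exact match could be reused directly, while a fuzzy match would typically be passed to a human translator for review.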

Localization vs. Translation: Know the Difference

While translation focuses on converting text from one language to another, localization goes a step further. It adapts the message to reflect cultural preferences, user behavior, and regional standards.

For example:

A product brochure translated for the Saudi market must follow not only Arabic language rules but also culturally preferred layouts and color schemes.

A software manual for German users may require the addition of region-specific compliance content.

Top-tier document translation services often offer localization as part of their suite, ensuring that your message fits the target market contextually and technically.

Key Features to Look For in a Document Translation Partner

To get the most value from document translation, choose a partner with:

Industry expertise and certifications

A global network of native translators

Proven success with multilingual documentation

Integration with CMS or project management tools

A strong focus on data security and confidentiality

In regulated industries like finance and pharmaceuticals, look for agencies familiar with GDPR and HIPAA compliance.

A Trusted Partner for High-Stakes Translation

With global operations demanding flawless communication, document translation services offer a complete, scalable solution. Whether you're localizing technical manuals or translating sensitive legal documents, a trusted provider can help you bridge language gaps with confidence and compliance.


Lode Palle: Merging Innovation with Real-World Coding

In today’s technology-driven world, software is more than just code; it’s the foundation of modern business, communication, and progress. Behind every impactful application is a developer who understands not just the lines of logic, but the human needs that technology serves. One such developer is Lode Palle, a 33-year-old software professional from Melbourne, Australia, whose work represents a rare blend of innovation and practical application.

More than just a coder, Lode Palle is a creator of intelligent systems that solve real-world problems. With a passion for innovation and a grounded approach to software architecture, he has become a trusted figure in the ever-evolving tech ecosystem.

A Melbourne-Based Developer with a Global Mindset

Lode’s professional roots are firmly planted in Melbourne, a city known for its vibrant tech scene and diverse startup culture. However, his outlook and skills transcend geographical boundaries. He develops software not just for local clients but for businesses across the world—each one seeking scalable, adaptive, and innovative digital solutions.

His ability to work within multiple domains—from finance to health tech, e-commerce to logistics—makes him an exceptionally versatile developer. But what sets him apart isn’t just his breadth of knowledge; it’s his ability to bridge cutting-edge technology with real-world functionality.

From Curiosity to Code

Like many successful developers, Lode Emmanuel Palle’s journey began with curiosity. What started as a fascination with how machines “think” evolved into a deep commitment to learning the art and science of programming. Over the years, he mastered several programming languages including Python, JavaScript, and Kotlin, while also developing expertise in modern frameworks, cloud computing, DevOps, and machine learning.

However, Lode didn’t stop at technical proficiency. He took time to understand user experience, system design, cybersecurity, and even business operations. This holistic perspective enables him to build software that isn’t just functional—but intelligent, secure, and aligned with long-term goals.

Innovation Is a Mindset, Not a Buzzword

Innovation is often misunderstood in tech as simply doing something new. For Lode Palle, innovation is about doing something better. Whether that’s automating a manual business process, improving app performance, or designing an intuitive interface, his goal is always improvement with purpose.

He stays updated with the latest technologies, but doesn’t jump on trends for the sake of novelty. He evaluates new tools and techniques through a pragmatic lens—asking how they can enhance performance, usability, or scalability in actual use cases.

Some of his recent projects have integrated:

AI for predictive analytics in customer-facing platforms

Blockchain for secure digital transactions

Real-World Coding: Building What Matters

What does “real-world coding” really mean? For Lode Palle, it means building software that solves actual problems—software that people use, that performs under pressure, and that grows with the business.

He avoids overengineering and focuses on elegant, maintainable solutions. His code is structured for clarity, adaptability, and performance, which makes it easier for teams to build on it and for businesses to scale it.

Lode Palle believes that great software doesn’t just “work”; it enables. It helps companies do more with less, improves the lives of users, and serves as a foundation for future innovation.

The Human Side of a Coder

Outside the world of algorithms and architectures, Lode Palle lives a life rich in physical activity and creativity. He is passionate about gym workouts, hiking, boxing, photography, and of course, learning new coding techniques.

These hobbies may seem unrelated to tech, but they feed into his professional success in powerful ways:

Gym and boxing develop discipline and mental toughness—essential for navigating tough bugs and high-pressure deadlines.

Hiking provides mental clarity and fresh ideas, helping him reset and return to work with new perspectives.

Photography sharpens his visual thinking, influencing the design side of software and UI/UX decisions.

Continuous learning ensures he’s always evolving, always ready for the next challenge.

Lode’s lifestyle reflects balance—between mind and body, work and play, logic and creativity. This balance brings a unique energy to his development work and inspires those who work with him.

A Trusted Developer for Businesses of All Sizes

Lode Palle has built a strong reputation as someone who doesn’t just deliver code—he delivers value. Whether working with startups or established enterprises, his clients know they’re getting a product that’s robust, innovative, and tailored to their needs.

He offers:

End-to-end application development

Technical consulting and software architecture planning

Performance optimization and scalability solutions

Ongoing maintenance and upgrades

What clients appreciate most is his transparent communication, his ability to explain complex concepts clearly, and his dedication to creating solutions that grow with their business.

Tech for Good: Building with Purpose

Lode Palle is also passionate about technology as a force for positive change. He’s contributed to projects aimed at improving education, healthcare access, and environmental sustainability. For him, software is more than a product—it’s a tool for social impact.

By mentoring junior developers and contributing to open-source projects, Lode actively strengthens the developer community. He believes in knowledge-sharing and collaboration as pathways to innovation and ethical growth in tech.

Future-Ready: What’s Next for Lode Palle

As technology evolves, so does Lode. He is currently exploring:

Edge computing and its implications for real-time applications

AI model integration into everyday consumer apps

Augmented reality interfaces for mobile experiences

More sustainable, energy-efficient coding practices

His vision for the future includes not only building better apps but creating development models that are more inclusive, ethical, and environmentally conscious.

Conclusion: A Developer Who Codes with Vision

In a world full of coders, Lode Palle stands out as a developer with vision. He doesn’t just build what’s possible—he builds what’s meaningful. By merging cutting-edge innovation with practical, real-world coding, he delivers solutions that are impactful, future-ready, and human-centered.

His journey is a powerful reminder that behind every great piece of software is a thinker, a learner, and a problem-solver.


Predictive Analytics in Manufacturing

Predictive analytics is a powerful tool to help manufacturing companies make better decisions and improve efficiency. It provides a competitive edge for organizations in the manufacturing industry. It can help manufacturers identify new trends and opportunities and address challenges with an efficient solution.

Predictive analytics can forecast demand, manage inventory, and predict maintenance needs to minimize downtime. It is also used for quality control by identifying defective products before shipping. It is an excellent example of how data science can be applied to business problems to increase decision-making effectiveness.

Predictive analytics uses statistical techniques to identify patterns in data and use this information to make predictions. It is a powerful tool that can help manufacturing companies predict demand and make better production decisions. Accordingly, IDC estimates that spending on AI-powered applications, such as predictive analytics, will increase from $40.1 billion in 2019 to $95.5 billion by 2022.

Some advantages of predictive analytics

1. Predict performance by detecting patterns

Predictive analytics can filter through massive volumes of historical data far faster and more precisely than humans. We can enhance output by 10% without losing first-pass yield by using machine learning technology to recognize recurrent patterns and other connection factors.

AI and machine learning can look for trends and combine them to assist your company in uncovering possible efficiency gains, foresee problems, and cut costs.

2. Analyze market trends

Another application for predictive analytics (PA) is forecasting customer demand. Knowing what to expect in the future can help you determine what to do next.

Every business conducts some manual market research. For example, some consumer items are seasonal and sell better at certain times of the year. Demand forecasting may be aided by predictive analytics using statistical algorithms, which isn’t a new concept.

Customers’ future purchase patterns, supplier relationships, market availability, and the wider global economy all depend on many interacting variables. Predictive analytics offers a practical way to account for them together.
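As a small illustration of statistical demand forecasting for seasonal items, here is a minimal sketch that predicts each period of the next season as the average of the same period in past seasons. The quarterly sales figures and the function name `seasonal_forecast` are made up for illustration, and the history is assumed to start at the beginning of a season. Production systems would normally use richer models that also capture trend and external factors.

```python
from statistics import mean


def seasonal_forecast(history, season_length):
    """Forecast one full season: average each period across all past seasons.

    Assumes `history` starts at the first period of a season.
    """
    if len(history) < season_length:
        raise ValueError("need at least one full season of history")
    forecast = []
    for offset in range(season_length):
        same_period = history[offset::season_length]  # e.g. every Q1, every Q2, ...
        forecast.append(mean(same_period))
    return forecast


if __name__ == "__main__":
    # Hypothetical quarterly unit sales for two years; Q3 is the seasonal peak.
    sales = [100, 120, 300, 110,
             110, 130, 320, 120]
    print(seasonal_forecast(sales, season_length=4))
```

The forecast keeps the seasonal peak in the third quarter, which a plain overall average would flatten out.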

3. Assists with inventory management

Supply chain management—stocking raw supplies, keeping completed goods, and coordinating transportation and distribution networks—is a complex commercial process that necessitates significant training, data from several sources, and excellent decision-making ability.

A computer model based on your data, on the other hand, may help supply chain managers make more confident and precise judgments. For example, such a model may ensure that you are never overstocked, understocked, or overburdened with unsaleable goods, even determining the best positioning of things on your shelves.

4. Enhance the product’s quality

There are make-or-break steps in every manufacturing process where overall product quality is determined; these are frequently handled by humans who are prone to error. Artificial intelligence (AI) and machine learning are cutting-edge alternatives to this problem. Sensitive manufacturing stages can be delegated to robots that use machine learning to improve with each use. When compared to human-made items, this results in higher product quality.

PepsiCo, for example, has used machine learning systems to improve how it makes its famous Lay’s chips, from estimating potato weight to examining the texture of each chip with a laser system. These have resulted in a 35% increase in product quality.

Conclusion

Predictive analytics is undoubtedly a key boon for manufacturers as it can help them identify new trends and opportunities and address challenges with an efficient solution. It can also help manufacturers understand their customers better and create products that will be successful in the market.

For more details, visit us: MITS Summit


Data Analytics in Climate Change Research | SG Analytics

Corporations, governments, and the public are increasingly aware of the detrimental impacts of climate change on global ecosystems, raising concerns about economic, supply chain, and health vulnerabilities.

Fortunately, data analytics offers a promising approach to strategize effective responses to the climate crisis. By providing insights into the causes and potential solutions of climate change, data analytics plays a crucial role in climate research. Here’s why leveraging data analytics is essential:

The Importance of Data Analytics in Climate Change Research

Understanding Complex Systems

Climate change involves intricate interactions between natural systems—such as the atmosphere, oceans, land, and living organisms—that are interconnected and complex. Data analytics helps researchers analyze vast amounts of data from scholarly and social platforms to uncover patterns and relationships that would be challenging to detect manually. This analytical capability is crucial for studying the causes and effects of climate change.

Informing Policy and Decision-Making

Effective climate action requires evidence-based policies and decisions. Data analytics provides comprehensive insights that equip policymakers with essential information to design and implement sustainable development strategies. These insights are crucial for reducing greenhouse gas emissions, adapting to changing conditions, and protecting vulnerable populations.

Enhancing Predictive Models

Predictive modeling is essential in climate science for forecasting future climate dynamics and evaluating mitigation and adaptation strategies. Advanced data analytics techniques, such as machine learning algorithms, improve the accuracy of predictive models by identifying trends and anomalies in historical climate data.
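As a rough sketch of what "identifying trends and anomalies in historical climate data" can look like in its simplest form, the example below fits a straight-line trend by ordinary least squares and flags points whose residual is unusually large. The temperature series is synthetic, and the function names and the z-score threshold of 2.0 are assumptions for illustration. Real climate models are far more sophisticated than a linear fit.

```python
from statistics import mean, pstdev


def linear_trend(values):
    """Ordinary least-squares fit of values against their index; returns (slope, intercept)."""
    xs = range(len(values))
    x_bar, y_bar = mean(xs), mean(values)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, values))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


def find_anomalies(values, z_threshold=2.0):
    """Return indices whose residual from the fitted trend exceeds z_threshold std devs."""
    slope, intercept = linear_trend(values)
    residuals = [y - (intercept + slope * i) for i, y in enumerate(values)]
    spread = pstdev(residuals)
    if spread == 0:
        return []
    return [i for i, r in enumerate(residuals) if abs(r) / spread > z_threshold]


if __name__ == "__main__":
    # Synthetic annual mean temperatures: steady warming plus one unusually hot year.
    temps = [14.0 + 0.02 * year for year in range(20)]
    temps[12] += 1.0
    print("slope per year:", round(linear_trend(temps)[0], 4))
    print("anomalous years:", find_anomalies(temps))
```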

Applications of Data Analytics in Climate Change Research

Monitoring and Measuring Climate Variables

Data analytics is instrumental in monitoring climate variables like temperature, precipitation, and greenhouse gas concentrations. By integrating data from sources such as satellites and weather stations, researchers can track changes over time and optimize region-specific monitoring efforts.

Assessing Climate Impacts

Analyzing diverse datasets—such as ecological surveys and health statistics—allows researchers to assess the long-term impacts of climate change on biodiversity, food security, and public health. This holistic approach helps in evaluating policy effectiveness and planning adaptation strategies.

Mitigation and Adaptation Strategies

Data analytics supports the development of strategies to mitigate greenhouse gas emissions and enhance resilience. By analyzing data on energy use, transportation patterns, and land use, researchers can identify opportunities for reducing emissions and improving sustainability.

Future Directions in Climate Data Analytics

Big Data and Edge Computing

The increasing volume and complexity of climate data require scalable computing solutions like big data analytics and edge computing. These technologies enable more detailed and accurate analysis of large datasets, enhancing climate research capabilities.

Artificial Intelligence and Machine Learning

AI and ML technologies automate data processing and enhance predictive capabilities in climate research. These advancements enable researchers to model complex climate interactions and improve predictions of future climate scenarios.

Crowdsourced Datasets

Engaging the public in data collection through crowdsourcing enhances the breadth and depth of climate research datasets. Platforms like Weather Underground demonstrate how crowdsourced data can improve weather forecasting and climate research outcomes.

Conclusion

Data analytics is transforming climate change research by providing innovative tools and deeper insights into sustainable climate action. By integrating modern analytical techniques, researchers can address significant global challenges, including carbon emissions and environmental degradation. As technologies evolve, the integration of data analytics into climate research will continue to play a pivotal role in safeguarding our planet and promoting a sustainable global ecosystem.


The year is 2077. Over the years, ease of use in the average user computer market has gone up so much that most people have forgotten there is someone that operates the machine. It's worse than "what you see is what you get": "What you see is all there is." The average person can no longer manually turn on a computer, for that is done automatically. They can no longer move files, for every file that has to be moved is moved automatically. They no longer click icons on a desktop, for all apps are launched at the computer's guess: all they do is tell, in plain English, what they want, and the machine will find a way to provide it. They no longer know what hardware is; they would never be able to repair a machine, even if the solution is as simple as plugging it in. They no longer understand what a keyboard is or why it exists. They no longer understand, nor do they suspect.

For every hundred thousand, a thousand know what an operating system is, 500 of whom know it's possible to change it, but only 100 have done so. Communities that once would fill entire hotels with nerds and the curious uninformed alike have now been reduced to local meetups where people exchange files of dubious legality, play, and further develop their little community. What unites them is the curse of knowledge. They know that if they went to the same thrift store as everyone and bought the same computer as everyone, they would be forced to use outdated, exploitable spyware, and pay completely incoherent fees for basic abilities.

The average user would think that it is just like that, and you either pay or don't have it. But this group gets that these functionalities are basic things they could make themselves in minutes, and they have. Using layouts from expired patents, reverse-engineered outdated machinery, and scrap parts, they are able to produce computer hardware that is free of any limitations and compatible with software of the old age, from which they have developed fully functioning operating systems with all the capabilities you would expect in a modern-day computer, which they distribute for the price of the thumb drive they come on. Most content they consume is pirated, as almost all content out there is paid but has extremely outdated anti-piracy measures; the average user won't even think of the possibility of getting anything on the computer without paying.

The uninformed would never doubt the machine or its requests and, as a result, tracking is at its worst. Telemetry is feared. Machines smarter than humans, made to hypnotise entire nations, are out there, and all you can do is hide. No webcams, no names, no aliases, no trace-backable traits, not when using the computer. They keep it down and use cryptography on all their activity. They have no accounts on online services; they use spoofs to make it seem like they do. They only update their software from physical media, which they check first. Privacy now requires an advanced understanding of computer science, to know your computer like it's your child.

The price for those whose measures fail is psychological torture by society: the machine will pressure whole cities to force a user to give up their basic rights. They must either try to ignore all they have learned, all those tales of freedom, all those warnings of dystopia, or live in a world that will always be loading the dice so that they always come off worst. For they know that no convenience is truly free, that thank-yous will never satisfy a money-making machine.

The steel can make your life the easiest. It only wants one thing.

You.

So learn the linux terminal or fucking else.

Telling young zoomers to "just switch to linux" is nuts some of these ipad kids have never even heard of a cmd.exe or BIOS you're throwing them to the wolves

Text

AI-Powered Future: From Machine Learning to Avatars & Co-Pilots

Artificial Intelligence (AI) is no longer a visionary term; it is already revolutionising industries around the world. From AI development and machine learning development to AI as a service, companies are leveraging bleeding-edge technologies to stay ahead of the competition and innovate faster. As the landscape changes, recruiting talented experts like AI engineers and ChatGPT developers has become crucial. Let's look at how these innovations, particularly in industries such as retail, are shaping the future through enterprise AI solutions, large language model development, AI co-pilot development, and AI avatar development.

The Expanding Scope of AI Development

An AI development company creates intelligent systems that handle tasks traditionally performed by people. The field's central concerns include problem-solving, decision-making, natural language understanding, and learning from data.

AI development today encompasses not just machine learning but also natural language processing, computer vision, and robotics, resulting in a proliferation of powerful AI apps enabling organizations to automate processes, improve customer service, and uncover business insights.

Machine Learning Development: A Pillar of AI Innovation

Machine learning development is the central operational element of present-day AI environments. It focuses on creating intelligent, data-driven systems that improve through learning instead of requiring manual development for each new function.

A machine learning development company uses extensive datasets to build models that adapt to real operating conditions and produce precise, efficient, and scalable AI solutions for complex enterprise problems. Modern AI solutions depend on machine learning development to create the predictive analytics, recommendation engines, and real-time decision-making systems that power contemporary enterprise operations.

When you work with an established machine learning development company, your business receives the resources it needs to establish strong AI capabilities. These solutions provide the tools for competitive advantage, fast innovation, and operational readiness across healthcare, finance, and retail.

AI as a Service: Democratizing AI Access

The way AI is delivered is undergoing a profound transformation with the rise of Artificial Intelligence as a Service (AIaaS). Organizations of any size can access advanced AI technology through cloud platforms, which removes the need for large upfront spending on infrastructure or personnel. Organizations that subscribe to AI services can add natural language processing, image recognition, predictive analytics, and conversational AI to their systems or operations with little difficulty. This lets companies without the means to build internal AI development teams access AI technology, extending the advantages of artificial intelligence to many more sectors.

Why Hire AI Engineers and ChatGPT Developers?

As AI becomes more pervasive, the demand for specialized talent is soaring. Hiring artificial intelligence engineers skilled in machine learning, data science, and algorithm design is crucial for companies aiming to build custom AI solutions that align with their unique business goals.

Similarly, hiring ChatGPT developers—experts in large language model development—is essential for companies seeking to integrate advanced conversational AI into their customer service, marketing, or internal workflows. These developers tailor AI chatbots and virtual assistants that understand and respond naturally to human language, enhancing user engagement and operational efficiency.

Machine Learning in Retail: Revolutionizing the Shopping Experience

Machine learning is driving substantial changes in the retail sector, as it is in other industries. Retailers deploy machine learning to deliver individualised customer interactions, predict sales patterns, manage stock efficiently, and prevent fraud.

Through extensive customer data analysis, machine learning algorithms detect purchasing behaviour and individual preferences, which retailers leverage to create precise promotions and personalized product suggestions. This increases both revenue and customer loyalty.
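As a rough illustration of how purchasing behaviour can turn into product suggestions, here is a minimal Python sketch that counts which items are bought together and suggests the most frequent companions. It is only one simple approach, not how any particular retailer or vendor does it; the basket data and the function names `build_co_purchase_counts` and `recommend` are made up for this example.

```python
from collections import Counter, defaultdict
from itertools import combinations


def build_co_purchase_counts(baskets):
    """Count how often each pair of items appears in the same basket."""
    counts = defaultdict(Counter)
    for basket in baskets:
        # Deduplicate within a basket so repeated items count once.
        for a, b in combinations(sorted(set(basket)), 2):
            counts[a][b] += 1
            counts[b][a] += 1
    return counts


def recommend(counts, item, k=2):
    """Return up to k items most often bought together with `item`."""
    companions = counts.get(item, Counter())
    # Sort by count (descending), then by name so ties are deterministic.
    ranked = sorted(companions.items(), key=lambda kv: (-kv[1], kv[0]))
    return [name for name, _ in ranked[:k]]


# Hypothetical purchase history: one list of items per checkout.
baskets = [
    ["bread", "butter", "jam"],
    ["bread", "butter"],
    ["bread", "jam"],
    ["coffee", "milk"],
    ["bread", "butter", "milk"],
]

counts = build_co_purchase_counts(baskets)
print(recommend(counts, "bread"))  # ['butter', 'jam']
```

Real recommendation engines typically go well beyond raw co-occurrence counts, but the basic idea of learning suggestions from past purchases instead of hand-written rules is the same.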

The retail industry implements machine learning to improve supply chain management operations, which enables efficient product availability while decreasing both waste and expenses. AI-driven market insights empower retailers to fast-track their responses to consumer needs and market trends, which protects their competitive position.

Enterprise AI Solutions: Scaling Intelligence Across Organizations

Large corporations are increasingly using enterprise AI solutions to simplify complex processes, improve their decision-making, and discover new sources of income. These solutions usually combine several AI technologies, such as machine learning, natural language processing, and robotic process automation, in a single platform that serves every business function.

From predictive maintenance in manufacturing to fraud detection in banking, enterprise AI solutions drive efficiency and, in some cases, innovation. To get the full potential out of their AI, firms often invest in large language model development so that their systems can understand and generate human-like text, making better communication and insights possible.

The Rise of AI Co-Pilots and AI Avatars

AI co-pilot development and AI avatar development are currently the trendiest areas of the AI industry.

AI Co-Pilot Development: AI co-pilots function as smart helpers that aid experts in handling their tasks in complex conditions. Whether it is writing software code, guiding pilots in their navigation, or assisting customer service agents, AI co-pilots do all this and more. These AI-powered companions keep learning; they adapt to the user's preferences and give their human colleagues contextual insights, and in this way they are changing how work gets done across industries.

AI Avatar Development: AI avatars are a new generation of virtual assistants, backed by advanced AI. They use natural language processing, computer vision, and emotion recognition to hold conversations with users and become part of the user's daily life. Whether as virtual customer care reps, personalized health coaches, or entertainment hosts, AI avatars bring a human-like touch to the world of automation, creating more engaging experiences for people.

Large Language Model Development for Scalable AI Solutions

Large language model development is the infrastructure on which much of modern AI runs. It is what allows machines to understand and generate human-like text at scale, making communication with them feel more natural. This trend touches AI-driven innovations large and small and supports goals such as personalization and productivity.

Final Thoughts

For businesses that want to do well in this AI-powered future, investing in artificial intelligence development and artificial intelligence as a service is no longer optional; it's essential. Employing artificial intelligence engineers and ChatGPT developers helps ensure that you have the right skills to develop and deliver AI solutions at the cutting edge of technological innovation.

Osiz Technologies creates intelligent AI solutions that help businesses innovate and grow across various industries. Our expert team builds advanced tools like virtual assistants and automation systems to prepare your business for the future.

#ArtificialIntelligence #MachineLearning #AIDevelopment #EnterpriseAI #AIasaService #ChatGPTDevelopers #AIEngineers #RetailAI #AICoPilot #AIAvatar #LargeLanguageModels #NaturalLanguageProcessing #MLinRetail #AIInnovation #OsizTechnologies
