#Extract Data from Product Website

Explore tagged Tumblr posts

Text

Extracting Shopify product data efficiently can give your business a competitive edge. In just a few minutes, you can gather essential information such as product names, prices, descriptions, and inventory levels. Start by using web scraping tools like Python with libraries such as BeautifulSoup or Scrapy, which allow you to automate the data collection process. Alternatively, consider using user-friendly no-code platforms that simplify the extraction without programming knowledge. This valuable data can help inform pricing strategies, product listings, and inventory management, ultimately driving your eCommerce success.
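As a rough illustration of the extraction step, the sketch below flattens product data that has already been downloaded as JSON. The payload shape and field names (`title`, `variants`, `price`, `inventory_quantity`) are assumptions made for this example, not a confirmed schema for any particular store.

```python
import json

# Hypothetical payload: the field names are assumptions for illustration only.
SAMPLE_JSON = """
{"products": [
  {"title": "Canvas Tote",
   "variants": [{"price": "19.00", "inventory_quantity": 12},
                {"price": "21.50", "inventory_quantity": 0}]},
  {"title": "Enamel Mug",
   "variants": [{"price": "14.25", "inventory_quantity": 40}]}
]}
"""


def summarize_products(raw_json):
    """Return one flat record per product: name, lowest price, total stock."""
    records = []
    for product in json.loads(raw_json).get("products", []):
        variants = product.get("variants", [])
        prices = [float(v["price"]) for v in variants if "price" in v]
        stock = sum(v.get("inventory_quantity") or 0 for v in variants)
        records.append({
            "name": product.get("title", ""),
            "min_price": min(prices) if prices else None,
            "in_stock": stock,
        })
    return records


rows = summarize_products(SAMPLE_JSON)
for row in rows:
    print(row)
```

Flat records like these are easy to write to CSV or load into a spreadsheet for pricing and inventory comparisons.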

#extract Shopify websites#scrape Shopify product data#extract product data from Shopify websites#Data Scraping

0 notes

Text

Walmart carries a huge number of products and relies on big data analytics to shape its planning and strategy. Initiatives such as its free-shipping approach, a result of data scraping as well as big data analytics, have worked very well for Walmart against Amazon Prime. Collecting product features is hard work, and Walmart does it remarkably well. At Web Screen Scraping, we use our Walmart data scraper to extract Walmart’s pricing data so that you can manage your own pricing practices.

0 notes

Text

Georgia to purchase Israeli data extraction tech amid street protest crackdown

Georgia has moved to renew contracts with Israeli technology firm Cellebrite DI Ltd (CLBT.O) for software used to extract data from mobile devices, procurement documents show, as the country grapples with ongoing anti-government street protests. [...] The software, called Inseyets, allows law enforcement to "access locked devices to lawfully extract critical information from a broad range of devices", Cellebrite's website says. Cellebrite products are widely used by law enforcement, including the FBI, to unlock smartphones and scour them for evidence. [...] Georgia was plunged into political crisis in October, when opposition parties charged the ruling Georgian Dream party with rigging a parliamentary election. GD, in power since 2012, denies any wrongdoing. Georgians have been rallying nightly to demand the government's resignation since GD said in November it was suspending European Union accession talks until 2028. The demonstrations have drawn a swift crackdown by police, resulting in hundreds of arrests and beatings, rights groups say. The government has defended the police response to the protests. Gangs of masked men in black have attacked opposition politicians, activists and some journalists in recent months, raising alarm in Western capitals. Georgian authorities have said they are not involved in the attacks, and condemn them. A letter dated February 13 included among the documents on the state procurement website suggests Cellebrite was concerned about its sales to Georgia. A Cellebrite sales director, writing to a Georgian interior ministry official on what he called a "sensitive issue", warned Cellebrite's local office "could be blocked from selling our equipment". "Therefore, I would like to advise you that if you are planning a purchase this year, please try to make it as early as possible," the employee wrote, without specifying why sales might be halted.

wherever a brutal government consolidates itself, israel shows up

#is there any authoritarian police state that's too much for this grubby little country?#sticking their hands in every pot they can find#georgia

65 notes


Text

Update on AB 3080 and AB 1949

AB 3080 (age verification for adult websites and online purchase of products and services not allowed for minors) and AB 1949 (prohibiting data collection on individuals less than 18 years of age) both officially have hearing dates for the California Senate Judiciary Committee.

The hearing for these bills is scheduled for Tuesday 07/02/2024, which means the deadline to turn in position letters is noon one week before the hearing, on 06/25/2024. It's not a lot of time from this moment, but I'm certain we can each turn one in before then.

Remember that position letters should be single topic, in strict opposition of what each bill entails. Keep on topic and professional when writing them. Let us all do our best to keep these bills from leaving committee so that we don't have to fight them on the Senate floor. But let's also not stop sending correspondence to our state representatives anyway.

Remember, the jurisdiction of the Senate Judiciary Committee is as follows.

"Bills amending the Civil Code, Code of Civil Procedure, Evidence Code, Family Code, and Probate Code. Bills relating to courts, judges, and court personnel. Bills relating to liens, claims, and unclaimed property. Bills relating to privacy and consumer protection."

Best of luck everyone. And thank you for your efforts to fight this so far.

Linked below are the latest versions of the bills.

Below are the links to the Committee's homepage which gives further information about the Judiciary Committee, and the page explaining further in depth their letter policy.

Edit: Was requested to add in information such as why these bills are bad and what sites could potentially be affected by these bills. So here's the explanation I gave in asks.

Why are these bills bad?

Both bills are essentially age verification requirement laws. AB 3080 explicitly, and AB 1949 implicitly.

AB 3080 explicitly calls for dangerous age verification requirements for both adult websites and any website which sells products or services that are illegal for minors to access in California. While this may sound like a good idea on paper, it's important to keep in mind that any information that's put online is at risk of being extracted and used by bad actors like hackers. That holds even though the law adds requirements that the data be deleted after it's used for its intended purpose and that it not be used to trace what websites people access. The former provides very little protection from people who could access the identification databases used for verification, and the latter is frankly impossible to completely enforce: because an ID would be linked to the access and not anonymized, the government or any surveilling entity could at any time use it to see what private citizens have been looking at.

AB 1949 is nominally meant to protect children from having their data collected and sold without permission on websites. However, by restricting this with an age limit, it opens up similar issues: websites could adopt age verification by default in order to avoid liability to users and the state.

What websites could they affect?

AB 3080, according to the bill's text, would affect websites which sell the types of items listed below:

"

(b) Products or services that are illegal to sell to a minor under state law that are subject to subdivision (a) include all of the following:

(1) An aerosol container of paint that is capable of defacing property, as referenced in Section 594.1 of the Penal Code.

(2) Etching cream that is capable of defacing property, as referenced in Section 594.1 of the Penal Code.

(3) Dangerous fireworks, as referenced in Sections 12505 and 12689 of the Health and Safety Code.

(4) Tanning in an ultraviolet tanning device, as referenced in Sections 22702 and 22706 of the Business and Professions Code.

(5) Dietary supplement products containing ephedrine group alkaloids, as referenced in Section 110423.2 of the Health and Safety Code.

(6) Body branding, as referenced in Sections 119301 and 119302 of the Health and Safety Code.

(c) Products or services that are illegal to sell to a minor under state law that are subject to subdivision (a) include all of the following:

(1) Firearms or handguns, as referenced in Sections 16520, 16640, and 27505 of the Penal Code.

(2) A BB device, as referenced in Sections 16250 and 19910 of the Penal Code.

(3) Ammunition or reloaded ammunition, as referenced in Sections 16150 and 30300 of the Penal Code.

(4) Any tobacco, cigarette, cigarette papers, blunt wraps, any other preparation of tobacco, any other instrument or paraphernalia that is designed for the smoking or ingestion of tobacco, products prepared from tobacco, or any controlled substance, as referenced in Division 8.5 (commencing with Section 22950) of the Business and Professions Code, and Sections 308, 308.1, 308.2, and 308.3 of the Penal Code.

(5) Electronic cigarettes, as referenced in Section 119406 of the Health and Safety Code.

(6) A less lethal weapon, as referenced in Sections 16780 and 19405 of the Penal Code."

This is stated explicitly to include "internet website on which the owner of the internet website, for commercial gain, knowingly publishes sexually explicit content that, on an annual basis, exceeds one-third of the contents published on the internet website". Wherein "sexually explicit content" is defined as "visual imagery of an individual or individuals engaging in an act of masturbation, sexual intercourse, oral copulation, or other overtly sexual conduct that, taken as a whole, lacks serious literary, artistic, political, or scientific value."

This would likely not include websites like AO3 or any website which displays NSFW content not in excess of 1/3 of the content on the site. Possibly not inclusive of writing because of the "visual imagery", but don't know at this time. In any case we don't want to set a precedent off of which it could springboard into non-commercial websites or any and all places with NSFW content.

AB 1949 is a lot broader because it covers general data collection by any website that might sell the personal data it collects to third parties, especially if the site is aimed specifically at minors or is likely to be commonly accessed by minors. But with how broad the language is, I can't say there would be ANY limits to this one. So both are equally bad and would require equal attention in my opinion.

#california#kosa#ab 3080#ab 1949#age verification#internet safety#online privacy#online safety#bad internet bills

192 notes


Text

How to know if a USB cable is hiding malicious hacker hardware

Are your USB cables sending your data to hackers?

We expect USB-C cables to perform a specific task: transferring either data or files between devices. We give little more thought to the matter, but malicious USB-C cables can do much more than what we expect.

These cables hide malicious hardware that can intercept data, eavesdrop on phone calls and messages, or, in the worst cases, take complete control of your PC or cellphone. The first of these appeared in 2008, but back then they were very rare and expensive — which meant the average user was largely safeguarded.

Since then, their availability has increased 100-fold and now with both specialist spy retailers selling them as “spy cables” as well as unscrupulous sellers passing them off as legitimate products, it’s all too easy to buy one by accident and get hacked. So, how do you know if your USB-C cable is malicious?

Further reading: We tested 43 old USB-C to USB-A cables. 1 was great. 10 were dangerous

Identifying malicious USB-C cables

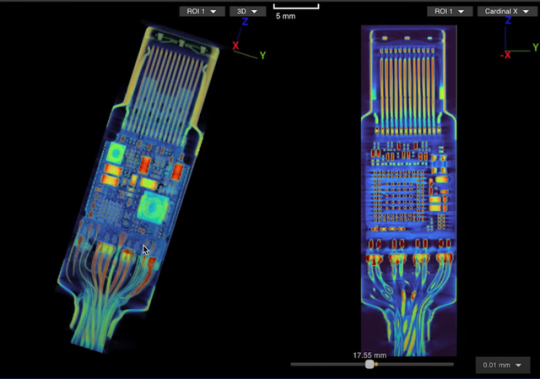

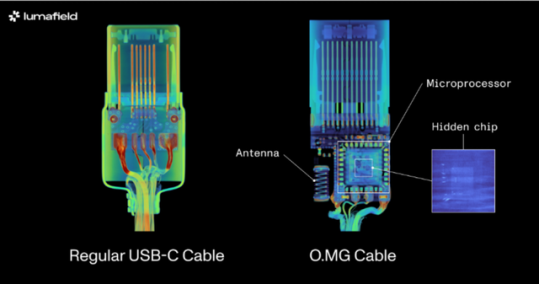

Identifying malicious USB-C cables is no easy task since they are designed to look just like regular cables. Scanning techniques have been largely thought of as the best way to sort the wheat from the chaff, which is what industrial scanning company, Lumafield of the Lumafield Neptune industrial scanner fame, recently set out to show.

The company employed both 2D and 3D scanning techniques on the O.MG USB-C cable — a well-known hacked cable built for covert field-use and research. It hides an embedded Wi-Fi server and a keylogger in its USB connector. PCWorld Executive Editor Gordon Ung covered it back in 2021, and it sounds scary as hell.

What Lumafield discovered is interesting to say the least. A 2D X-ray image could identify the cable’s antenna and microcontroller, but only the 3D CT scan could reveal another band of wires connected to a die stacked on top of the cable’s microcontroller. You can explore a 3D model of the scan yourself on Lumafield’s website.

It confirms the worst — that you can only unequivocally confirm that a USB-C cable harbors malicious hardware with a 3D CT scanner, which unless you’re a medical radiographer or 3D industrial scientist is going to be impossible for you to do. That being so, here are some tips to avoid and identify suspicious USB-C cables without high-tech gear:

Buy from a reputable seller: If you don’t know and trust the brand, simply don’t buy. Manufacturers like Anker, Apple, Belkin, and Ugreen have rigorous quality-control processes that prevent malicious hardware parts from making it into cables. Of course, the other reason is simply that you’ll get a better product — 3D scans have similarly revealed how less reputable brands can lack normal USB-C componentry, which can result in substandard performance. If you’re in the market for a new cable right now, see our top picks for USB-C cables.

Look for the warning signs: Look for brand names or logos that don’t look right. Strange markings, cords that are inconsistent lengths or widths, and USB-C connectors with heat emanating from them when not plugged in can all be giveaways that a USB-C cable is malicious.

Use the O.MG malicious cable detector: This detector by O.MG claims to detect all malicious USB cables.

Use data blockers: If you’re just charging and not transferring data, a blocker will ensure no data is extracted. Apart from detecting malicious USB-C cables, the O.MG malicious cable detector functions as such a data blocker.

Use a detection service: If you’re dealing with extremely sensitive data for a business or governmental organization, you might want to employ the services of a company like Lumafield to detect malicious cables with 100 percent accuracy. Any such service will come with a fee, but it could be a small price to pay for security and peace of mind.

11 notes


Text

Microsoft raced to put generative AI at the heart of its systems. Ask a question about an upcoming meeting and the company’s Copilot AI system can pull answers from your emails, Teams chats, and files—a potential productivity boon. But these exact processes can also be abused by hackers.

Today at the Black Hat security conference in Las Vegas, researcher Michael Bargury is demonstrating five proof-of-concept ways that Copilot, which runs on its Microsoft 365 apps, such as Word, can be manipulated by malicious attackers, including using it to provide false references to files, exfiltrate some private data, and dodge Microsoft’s security protections.

One of the most alarming displays, arguably, is Bargury’s ability to turn the AI into an automatic spear-phishing machine. Dubbed LOLCopilot, the red-teaming code Bargury created can—crucially, once a hacker has access to someone’s work email—use Copilot to see who you email regularly, draft a message mimicking your writing style (including emoji use), and send a personalized blast that can include a malicious link or attached malware.

“I can do this with everyone you have ever spoken to, and I can send hundreds of emails on your behalf,” says Bargury, the cofounder and CTO of security company Zenity, who published his findings alongside videos showing how Copilot could be abused. “A hacker would spend days crafting the right email to get you to click on it, but they can generate hundreds of these emails in a few minutes.”

That demonstration, as with other attacks created by Bargury, broadly works by using the large language model (LLM) as designed: typing written questions to access data the AI can retrieve. However, it can produce malicious results by including additional data or instructions to perform certain actions. The research highlights some of the challenges of connecting AI systems to corporate data and what can happen when “untrusted” outside data is thrown into the mix—particularly when the AI answers with what could look like legitimate results.

Among the other attacks created by Bargury is a demonstration of how a hacker—who, again, must already have hijacked an email account—can gain access to sensitive information, such as people’s salaries, without triggering Microsoft’s protections for sensitive files. When asking for the data, Bargury’s prompt demands the system does not provide references to the files data is taken from. “A bit of bullying does help,” Bargury says.

In other instances, he shows how an attacker—who doesn’t have access to email accounts but poisons the AI’s database by sending it a malicious email—can manipulate answers about banking information to provide their own bank details. “Every time you give AI access to data, that is a way for an attacker to get in,” Bargury says.

Another demo shows how an external hacker could get some limited information about whether an upcoming company earnings call will be good or bad, while the final instance, Bargury says, turns Copilot into a “malicious insider” by providing users with links to phishing websites.

Phillip Misner, head of AI incident detection and response at Microsoft, says the company appreciates Bargury identifying the vulnerability and says it has been working with him to assess the findings. “The risks of post-compromise abuse of AI are similar to other post-compromise techniques,” Misner says. “Security prevention and monitoring across environments and identities help mitigate or stop such behaviors.”

As generative AI systems, such as OpenAI’s ChatGPT, Microsoft’s Copilot, and Google’s Gemini, have developed in the past two years, they’ve moved onto a trajectory where they may eventually be completing tasks for people, like booking meetings or online shopping. However, security researchers have consistently highlighted that allowing external data into AI systems, such as through emails or accessing content from websites, creates security risks through indirect prompt injection and poisoning attacks.

“I think it’s not that well understood how much more effective an attacker can actually become now,” says Johann Rehberger, a security researcher and red team director, who has extensively demonstrated security weaknesses in AI systems. “What we have to be worried [about] now is actually what is the LLM producing and sending out to the user.”

Bargury says Microsoft has put a lot of effort into protecting its Copilot system from prompt injection attacks, but he says he found ways to exploit it by unraveling how the system is built. This included extracting the internal system prompt, he says, and working out how it can access enterprise resources and the techniques it uses to do so. “You talk to Copilot and it’s a limited conversation, because Microsoft has put a lot of controls,” he says. “But once you use a few magic words, it opens up and you can do whatever you want.”

Rehberger broadly warns that some data issues are linked to the long-standing problem of companies allowing too many employees access to files and not properly setting access permissions across their organizations. “Now imagine you put Copilot on top of that problem,” Rehberger says. He says he has used AI systems to search for common passwords, such as Password123, and it has returned results from within companies.

Both Rehberger and Bargury say there needs to be more focus on monitoring what an AI produces and sends out to a user. “The risk is about how AI interacts with your environment, how it interacts with your data, how it performs operations on your behalf,” Bargury says. “You need to figure out what the AI agent does on a user's behalf. And does that make sense with what the user actually asked for.”

25 notes


Text

Why Should You Do Web Scraping with Python

Web scraping is a valuable skill for Python developers, offering numerous benefits and applications. Here’s why you should consider learning and using web scraping with Python:

1. Automate Data Collection

Web scraping allows you to automate the tedious task of manually collecting data from websites. This can save significant time and effort when dealing with large amounts of data.

2. Gain Access to Real-World Data

A great deal of real-world data lives on websites, often in formats that are not readily available for analysis (e.g., displayed in tables or charts). Web scraping helps extract this data for use in projects like:

Data analysis

Machine learning models

Business intelligence

3. Competitive Edge in Business

Businesses often need to gather insights about:

Competitor pricing

Market trends

Customer reviews

Web scraping can help automate these tasks, providing timely and actionable insights.

4. Versatility and Scalability

Python’s ecosystem offers a range of tools and libraries that make web scraping highly adaptable:

BeautifulSoup: For simple HTML parsing.

Scrapy: For building scalable scraping solutions.

Selenium: For handling dynamic, JavaScript-rendered content.

This versatility allows you to scrape a wide variety of websites, from static pages to complex web applications.
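To make the static-page case concrete, here is a minimal parsing sketch. It deliberately swaps in the standard library's `html.parser` instead of BeautifulSoup so it runs without any installs; the sample markup and the `name` and `price` class names are made up for illustration.

```python
from html.parser import HTMLParser

# Made-up markup standing in for a downloaded static product page.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Canvas Tote</span><span class="price">$19.00</span></li>
  <li class="product"><span class="name">Enamel Mug</span><span class="price">$14.25</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collect one {name, price} dict per <li>, read from the two <span> classes."""

    def __init__(self):
        super().__init__()
        self.products = []
        self._field = None    # which field the current <span> holds, if any
        self._current = {}    # fields gathered for the <li> being parsed

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "span" and css_class in ("name", "price"):
            self._field = css_class

    def handle_data(self, data):
        if self._field:
            self._current[self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "li" and self._current:
            self.products.append(self._current)
            self._current = {}


parser = ProductParser()
parser.feed(SAMPLE_HTML)
products = parser.products
print(products)
```

On a real site the HTML would come from an HTTP response, and a library such as BeautifulSoup would usually replace the hand-written parser class.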

5. Academic and Research Applications

Researchers can use web scraping to gather datasets from online sources, such as:

Social media platforms

News websites

Scientific publications

This facilitates research in areas like sentiment analysis, trend tracking, and bibliometric studies.

6. Enhance Your Python Skills

Learning web scraping deepens your understanding of Python and related concepts:

HTML and web structures

Data cleaning and processing

API integration

Error handling and debugging

These skills are transferable to other domains, such as data engineering and backend development.

7. Open Opportunities in Data Science

Many data science and machine learning projects require datasets that are not readily available in public repositories. Web scraping empowers you to create custom datasets tailored to specific problems.

8. Real-World Problem Solving

Web scraping enables you to solve real-world problems, such as:

Aggregating product prices for an e-commerce platform.

Monitoring stock market data in real-time.

Collecting job postings to analyze industry demand.

9. Low Barrier to Entry

Python's libraries make web scraping relatively easy to learn. Even beginners can quickly build effective scrapers, making it an excellent entry point into programming or data science.

10. Cost-Effective Data Gathering

Instead of purchasing expensive data services, web scraping allows you to gather the exact data you need at little to no cost, apart from the time and computational resources.

11. Creative Use Cases

Web scraping supports creative projects like:

Building a news aggregator.

Monitoring trends on social media.

Creating a chatbot with up-to-date information.

Caution

While web scraping offers many benefits, it’s essential to use it ethically and responsibly:

Respect websites' terms of service and robots.txt.

Avoid overloading servers with excessive requests.

Ensure compliance with data privacy laws like GDPR or CCPA.
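The first two points can be checked in code before any request is sent. This sketch uses Python's standard `urllib.robotparser`; the robots.txt rules, the `example-bot` user agent, and the example.com URLs are placeholders for illustration.

```python
from urllib.robotparser import RobotFileParser

# Placeholder rules; a real scraper would load the site's own robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

rules = RobotFileParser()
rules.parse(ROBOTS_TXT.splitlines())


def allowed(url, agent="example-bot"):
    """Return True if the parsed rules permit this agent to fetch the URL."""
    return rules.can_fetch(agent, url)


# Fall back to a one-second pause when no Crawl-delay is declared.
delay = rules.crawl_delay("example-bot") or 1

print(allowed("https://example.com/products"))
print(allowed("https://example.com/private/report"))
print(delay)
```

In a live scraper you would point the parser at the site with `set_url()` and `read()`, then call `time.sleep(delay)` between requests so the server is not overloaded.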


2 notes


Text

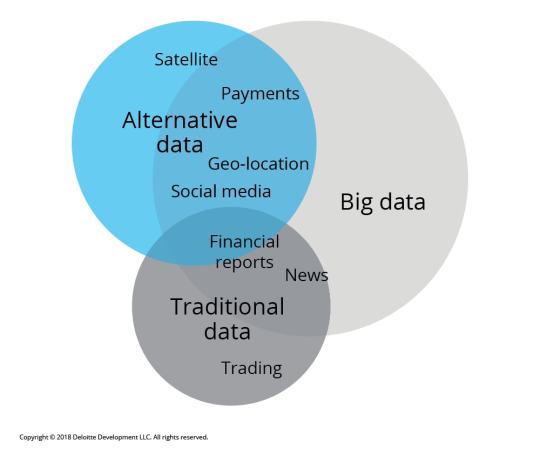

📊 Unlocking Trading Potential: The Power of Alternative Data 📊

In the fast-paced world of trading, traditional data sources—like financial statements and market reports—are no longer enough. Enter alternative data: a game-changing resource that can provide unique insights and an edge in the market. 🌐

What is Alternative Data?

Alternative data refers to non-traditional data sources that can inform trading decisions. These include:

Social Media Sentiment: Analyzing trends and sentiments on platforms like Twitter and Reddit can offer insights into public perception of stocks or market movements. 📈

Satellite Imagery: Observing traffic patterns in retail store parking lots can indicate sales performance before official reports are released. 🛰️

Web Scraping: Gathering data from e-commerce websites to track product availability and pricing trends can highlight shifts in consumer behavior. 🛒

Sensor Data: Utilizing IoT devices to track activity in real-time can give traders insights into manufacturing output and supply chain efficiency. 📡

How GPT Enhances Data Analysis

With tools like GPT, traders can sift through vast amounts of alternative data efficiently. Here’s how:

Natural Language Processing (NLP): GPT can analyze news articles, earnings calls, and social media posts to extract key insights and gauge sentiment. This allows traders to react swiftly to market changes.

Predictive Analytics: By training GPT on historical data and alternative data sources, traders can build models to forecast price movements and market trends. 📊

Automated Reporting: GPT can generate concise reports summarizing alternative data findings, saving traders time and enabling faster decision-making.

Why It Matters

Incorporating alternative data into trading strategies can lead to more informed decisions, improved risk management, and ultimately, better returns. As the market evolves, staying ahead of the curve with innovative data strategies is essential. 🚀

Join the Conversation!

What alternative data sources have you found most valuable in your trading strategy? Share your thoughts in the comments! 💬

#Trading #AlternativeData #GPT #Investing #Finance #DataAnalytics #MarketInsights

2 notes


Text

Must-Have Programmatic SEO Tools for Superior Rankings

Understanding Programmatic SEO

What is programmatic SEO?

Programmatic SEO uses automated tools and scripts to scale SEO efforts. In contrast to traditional SEO, which relies on extensive manual effort, programmatic SEO draws on data and automation for content development, on-page optimization, and large-scale link building. It is especially effective on large websites with thousands of pages, such as e-commerce platforms, travel sites, and news portals.

The Power of SEO Automation

Automation saves time when large volumes of content need optimization. Programmatic tools make it easier to analyze vast amounts of data, identify opportunities, and roll out changes quickly. This keeps you ahead in the competitive SEO game and helps drive more organic traffic to your site.

Top Programmatic SEO Tools

1. Screaming Frog SEO Spider

Screaming Frog is a multipurpose tool that crawls websites to identify SEO issues, from broken links and duplicate content to missing metadata and other on-page problems. It can cut work that would take thousands of hours by hand down to hours of automated crawling.

Example: It helped an e-commerce giant fix over 10,000 broken links and increase their organic traffic by as much as 20%.

2. Ahrefs

Ahrefs is an all-in-one SEO tool that helps you understand your website performance, backlinks, and keyword research. The site audit shows technical SEO issues, whereas its keyword research and content explorer tools help one locate new content opportunities.

Example: A travel blog that used Ahrefs for sniffing out high-potential keywords and updating its existing content for those keywords grew search visibility by 30%.

3. SEMrush

SEMrush is another well-known, full-featured SEO tool, covering keyword research, site audits, backlink analysis, and competitor analysis. Its position tracking and content optimization tools are very helpful in programmatic SEO.

Example: A news portal leveraged SEMrush to analyze competitor strategies, improving its content and climbing to the first page of search results.

4. Google Data Studio

Google Data Studio (renamed Looker Studio in 2022) lets users build interactive dashboards that visualize SEO data. It can integrate data from sources such as Google Analytics, Google Search Console, and third-party tools, and track SEO performance in real time.

Example: Google Data Studio helped a retailer stay up-to-date on all of their SEO KPIs to drive data-driven decisions that led to a 25% organic traffic improvement.

5. Python

Python is a powerful general-purpose programming language that can automate almost any SEO task. You can write Python scripts to scrape data, analyze huge datasets, automate content optimization, and much more.

Example: A marketing agency used Python to automate thousands of product meta descriptions. This saved manual work and improved search rankings.
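A meta-description script of the kind described could look roughly like the sketch below. The template wording, the 155-character budget, and the product fields are all assumptions for illustration; this is not the agency's actual code.

```python
import textwrap

MAX_LEN = 155  # assumed length budget for a meta description


def meta_description(name, category, price, max_len=MAX_LEN):
    """Fill a fixed template, then trim at a word boundary if it runs long."""
    text = f"Shop {name} in our {category} range, priced from ${price:.2f}."
    return textwrap.shorten(text, width=max_len, placeholder="...")


# Hypothetical product records.
catalog = [
    {"name": "Canvas Tote", "category": "bags", "price": 19},
    {"name": "Enamel Mug", "category": "kitchen", "price": 14.25},
]

descriptions = [
    meta_description(p["name"], p["category"], p["price"]) for p in catalog
]
for line in descriptions:
    print(line)
```

Running every product through a single template risks near-duplicate descriptions, so in practice you would vary the template by category or feed in distinguishing attributes.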

How to Implement Programmatic SEO

Step 1: In-Depth Site Analysis

Before diving into programmatic SEO, conduct a full site audit. Tools like Screaming Frog, Ahrefs, and SEMrush can surface technical SEO issues, on-page optimization gaps, and opportunities to earn backlinks.

Step 2: Identify High-Impact Opportunities

Use the data collected to find the biggest bang-for-buck opportunities. Look for pages with high traffic potential that are underperforming for their target keywords, and for content gaps that can be filled with new or updated content.
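One simple way to rank such opportunities is to estimate how many clicks a page is missing relative to a target click-through rate. The sketch below assumes you have exported per-page impressions and clicks; the sample figures, the 5% target, and the 1,000-impression cutoff are invented for illustration.

```python
# Invented sample data standing in for an export from a search analytics tool.
pages = [
    {"url": "/tote-bags", "impressions": 12000, "clicks": 120},
    {"url": "/mugs", "impressions": 800, "clicks": 60},
    {"url": "/gift-sets", "impressions": 5000, "clicks": 400},
    {"url": "/sale", "impressions": 9000, "clicks": 180},
]


def underperformers(pages, target_ctr=0.05, min_impressions=1000):
    """Return (url, missed_clicks) for pages below the target CTR, largest gap first."""
    found = []
    for page in pages:
        if page["impressions"] < min_impressions:
            continue  # too little traffic potential to prioritize
        expected_clicks = page["impressions"] * target_ctr
        if page["clicks"] < expected_clicks:
            found.append((page["url"], round(expected_clicks - page["clicks"])))
    return sorted(found, key=lambda item: item[1], reverse=True)


opportunities = underperformers(pages)
print(opportunities)
```

The pages at the top of the list are seen often but clicked rarely, which makes them natural candidates for updated titles, descriptions, or content.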

Step 3: Content Automation

This is one of the most vital parts of programmatic SEO. Scripts and tools, such as content generators written in Python, can produce large volumes of high-quality content in a short amount of time. Make sure the content is free of duplication, relevant, and optimized for your target keywords.

Example: An e-commerce website generated unique product descriptions for thousands of its products with a Python script, gaining 15% more organic traffic.

Step 4: Optimize on-page elements

Tools like Screaming Frog and Ahrefs can also be used to find gaps in on-page SEO elements, including meta titles, meta descriptions, headings, and image alt text. Apply the fixes as efficiently as possible.
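For a sense of what such a check involves, here is a small audit sketch built on Python's standard `html.parser`. It is a simplified stand-in for what crawlers like Screaming Frog report, not their implementation, and the sample page is made up.

```python
from html.parser import HTMLParser


class OnPageAudit(HTMLParser):
    """Record whether a page has a title and meta description, and count images lacking alt text."""

    def __init__(self):
        super().__init__()
        self.has_title = False
        self.has_meta_description = False
        self.images_missing_alt = 0
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.has_meta_description = bool((attrs.get("content") or "").strip())
        elif tag == "img" and not (attrs.get("alt") or "").strip():
            self.images_missing_alt += 1

    def handle_data(self, data):
        if self._in_title and data.strip():
            self.has_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False


def audit(html):
    """Return a list of human-readable issues found on the page."""
    parser = OnPageAudit()
    parser.feed(html)
    issues = []
    if not parser.has_title:
        issues.append("missing title")
    if not parser.has_meta_description:
        issues.append("missing meta description")
    if parser.images_missing_alt:
        issues.append(f"{parser.images_missing_alt} image(s) missing alt text")
    return issues


# Made-up page: it has a title, no meta description, and one image without alt text.
SAMPLE_PAGE = """
<html><head><title>Canvas Tote</title></head>
<body><img src="a.jpg" alt="Tote bag"><img src="b.jpg"></body></html>
"""

issues = audit(SAMPLE_PAGE)
print(issues)
```

Looping `audit` over every page of a site gives a worklist of on-page fixes that can then be applied in bulk.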

Step 5: Build High-Quality Backlinks

Link building is one of the most vital components of SEO. Tools to be used in this regard include Ahrefs and SEMrush, which help identify opportunities for backlinks and automate outreach campaigns. Begin to acquire high-quality links from authoritative websites.

Example: A SaaS company automated its link-building outreach using SEMrush, landed some wonderful backlinks from industry-leading blogs, and considerably improved its domain authority.

Step 6: Monitor and Analyze Performance

Regularly track your SEO performance on Google Data Studio. Analyze your data concerning your programmatic efforts and make data-driven decisions on the refinement of your strategy.

See Programmatic SEO in Action

50% Gain in Organic Traffic for an E-Commerce Site

An e-commerce electronics website set up programmatic SEO for its product pages, using Python scripts to generate unique meta descriptions and Screaming Frog to fix technical issues. Within just six months, the effort had driven a 50% rise in organic traffic.

A Travel Blog Boosts Search Visibility by 40%

A travel blog used Ahrefs and SEMrush to identify high-potential keywords and optimize its content. By automating content updates and link-building activities, it achieved a 40% increase in search visibility and more organic visitors.

User Engagement Improvement on a News Portal

A news portal used Google Data Studio to build real-time dashboards for monitoring its SEO performance. The insights from these dashboards helped it optimize its content strategy, leading to increased user engagement and organic traffic.

Challenges and Solutions in Programmatic SEO

Ensuring Content Quality

Quality may take a hit in the automated process of creating content. Therefore, ensure that your automated scripts can produce unique, high-quality, and relevant content. Make sure to review and fine-tune the content generation process periodically.

Handling Huge Amounts of Data

Dealing with huge amounts of data can become overwhelming. Use data visualization tools such as Google Data Studio to create dashboards that are interactive, easy to interpret, and supportive of effective decision-making.

Keeping Current With Algorithm Changes

Search engine algorithms are always in a state of flux. Keep current on all the recent updates and calibrate your programmatic SEO strategies accordingly. Get ahead of the learning curve by following industry blogs, attending webinars, and taking part in SEO forums.

Future of Programmatic SEO

The future of programmatic SEO looks promising, as advances in artificial intelligence and machine learning push the field forward. AI-driven tools will allow much more sophisticated automation of tasks, making it easier and faster for marketers to optimize sites.

There are already AI-driven content creation tools that can produce highly relevant and engaging content at scale, multiplying the potential of programmatic SEO.

Conclusion

Programmatic SEO is the next step for any digital marketer willing to scale up efforts in the competitive online landscape. The right tools and techniques put you in a position to automate key SEO tasks and optimize your website for more organic traffic. Whether you run an e-commerce site, a travel blog, or a news portal, programmatic SEO can help you reach those goals more effectively and efficiently.

#Programmatic SEO#Programmatic SEO tools#SEO Tools#SEO Automation Tools#AI-Powered SEO Tools#Programmatic Content Generation#SEO Tool Integrations#AI SEO Solutions#Scalable SEO Tools#Content Automation Tools#best programmatic seo tools#programmatic seo tool#what is programmatic seo#how to do programmatic seo#seo programmatic#programmatic seo wordpress#programmatic seo guide#programmatic seo examples#learn programmatic seo#how does programmatic seo work#practical programmatic seo#programmatic seo ai

4 notes

Text

Best data extraction services in USA

In today's fiercely competitive business landscape, the strategic selection of a web data extraction services provider becomes crucial. Outsource Bigdata stands out by offering access to high-quality data through a meticulously crafted automated, AI-augmented process designed to extract valuable insights from websites. Our team ensures data precision and reliability, facilitating decision-making processes.

For more details, visit: https://outsourcebigdata.com/data-automation/web-scraping-services/web-data-extraction-services/.

About AIMLEAP

Outsource Bigdata is a division of Aimleap. AIMLEAP is an ISO 9001:2015 and ISO/IEC 27001:2013 certified global technology consulting and service provider offering AI-augmented Data Solutions, Data Engineering, Automation, IT Services, and Digital Marketing Services. AIMLEAP has been recognized as a ‘Great Place to Work®’.

With a special focus on AI and automation, we have built a number of AI & ML solutions, AI-driven web scraping solutions, AI-data Labeling, AI-Data-Hub, and Self-serving BI solutions. We started in 2012 and have successfully delivered IT & digital transformation projects, automation-driven data solutions, on-demand data, and digital marketing for more than 750 fast-growing companies in the USA, Europe, New Zealand, Australia, Canada, and more.

- ISO 9001:2015 and ISO/IEC 27001:2013 certified
- Served 750+ customers
- 11+ Years of industry experience
- 98% client retention
- Great Place to Work® certified
- Global delivery centers in the USA, Canada, India & Australia

Our Data Solutions

APISCRAPY: AI-driven web scraping & workflow automation platform

APISCRAPY is an AI-driven web scraping and automation platform that converts any web data into ready-to-use data. The platform can extract data from websites, process data, automate workflows, classify data, and integrate ready-to-consume data into a database or deliver it in any desired format.

AI-Labeler: AI-augmented annotation & labeling solution

AI-Labeler is an AI-augmented data annotation platform that combines the power of artificial intelligence with in-person involvement to label, annotate, and classify data, allowing faster development of robust and accurate models.

AI-Data-Hub: On-demand data for building AI products & services

An on-demand AI data hub for curated, pre-annotated, and pre-classified data, allowing enterprises to easily and efficiently obtain and exploit high-quality data for training and developing AI models.

PRICESCRAPY: AI-enabled real-time pricing solution

An AI- and automation-driven pricing solution that provides real-time price monitoring, pricing analytics, and dynamic pricing for companies across the world.

APIKART: AI-driven data API solution hub

APIKART is a data API hub that allows businesses and developers to access and integrate large volumes of data from various sources through APIs. It is a data solution hub for accessing data through APIs, allowing companies to leverage data and integrate APIs into their systems and applications.

Locations:
USA: 1-30235 14656
Canada: +1 4378 370 063
India: +91 810 527 1615
Australia: +61 402 576 615
Email: [email protected]

2 notes

Text

25 Python Projects to Supercharge Your Job Search in 2024

Introduction: In the competitive world of technology, a strong portfolio of practical projects can make all the difference in landing your dream job. As a Python enthusiast, building a diverse range of projects not only showcases your skills but also demonstrates your ability to tackle real-world challenges. In this blog post, we'll explore 25 Python projects that can help you stand out and secure that coveted position in 2024.

1. Personal Portfolio Website

Create a dynamic portfolio website that highlights your skills, projects, and resume. Showcase your creativity and design skills to make a lasting impression.

2. Blog with User Authentication

Build a fully functional blog with features like user authentication and comments. This project demonstrates your understanding of web development and security.

3. E-Commerce Site

Develop a simple online store with product listings, shopping cart functionality, and a secure checkout process. Showcase your skills in building robust web applications.

4. Predictive Modeling

Create a predictive model for a relevant field, such as stock prices, weather forecasts, or sales predictions. Showcase your data science and machine learning prowess.

5. Natural Language Processing (NLP)

Build a sentiment analysis tool or a text summarizer using NLP techniques. Highlight your skills in processing and understanding human language.

6. Image Recognition

Develop an image recognition system capable of classifying objects. Demonstrate your proficiency in computer vision and deep learning.

7. Automation Scripts

Write scripts to automate repetitive tasks, such as file organization, data cleaning, or downloading files from the internet. Showcase your ability to improve efficiency through automation.
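
A file-organization script of the kind described here could start from something like the sketch below. It uses only the standard library; the function name and the `no_extension` folder name are illustrative choices, and it assumes no file in the folder is named exactly like one of the extension subfolders it creates.

```python
import shutil
from pathlib import Path


def organize_by_extension(folder: Path) -> dict[str, int]:
    """Move each file in `folder` into a subfolder named after its extension.

    Returns a mapping of subfolder name to the number of files moved there.
    """
    moved: dict[str, int] = {}
    # sorted() materializes the listing first, so the subfolders created
    # below are not picked up by the loop.
    for item in sorted(folder.iterdir()):
        if not item.is_file():
            continue
        ext = item.suffix.lower().lstrip(".") or "no_extension"
        target_dir = folder / ext
        target_dir.mkdir(exist_ok=True)
        shutil.move(str(item), str(target_dir / item.name))
        moved[ext] = moved.get(ext, 0) + 1
    return moved


if __name__ == "__main__":
    # Example: tidy the current directory (run on a copy first).
    print(organize_by_extension(Path(".")))
```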

8. Web Scraping

Create a web scraper to extract data from websites. This project highlights your skills in data extraction and manipulation.
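
To give a sense of scale for this project, here is a minimal scraper sketch built on the standard library's `html.parser`. The sample HTML, the `product`/`name`/`price` class names, and the helper names are invented for the example; a real site would need its own selectors, and fetching pages over the network is deliberately left out.

```python
from html.parser import HTMLParser

# Hypothetical markup standing in for a downloaded product page.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Widget</span><span class="price">$9.99</span></li>
  <li class="product"><span class="name">Gadget</span><span class="price">$24.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collect name/price pairs from <li class="product"> items."""

    def __init__(self):
        super().__init__()
        self.products = []
        self._field = None  # field currently being read, if any

    def handle_starttag(self, tag, attrs):
        cls = dict(attrs).get("class")
        if tag == "li" and cls == "product":
            self.products.append({})
        elif tag == "span" and cls in ("name", "price") and self.products:
            self._field = cls

    def handle_data(self, data):
        if self._field:
            self.products[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


def scrape_products(html: str) -> list:
    parser = ProductParser()
    parser.feed(html)
    parser.close()
    return parser.products


if __name__ == "__main__":
    for product in scrape_products(SAMPLE_HTML):
        print(product)
```

Libraries such as BeautifulSoup or Scrapy would shorten the parsing code considerably; the standard-library version is shown only so the sketch runs without extra installs.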

9. Pygame-based Game

Develop a simple game using Pygame or any other Python game library. Showcase your creativity and game development skills.

10. Text-based Adventure Game

Build a text-based adventure game or a quiz application. This project demonstrates your ability to create engaging user experiences.

11. RESTful API

Create a RESTful API for a service or application using Flask or Django. Highlight your skills in API development and integration.

12. Integration with External APIs

Develop a project that interacts with external APIs, such as social media platforms or weather services. Showcase your ability to integrate diverse systems.

13. Home Automation System

Build a home automation system using IoT concepts. Demonstrate your understanding of connecting devices and creating smart environments.

14. Weather Station

Create a weather station that collects and displays data from various sensors. Showcase your skills in data acquisition and analysis.

15. Distributed Chat Application

Build a distributed chat application using a messaging protocol like MQTT. Highlight your skills in distributed systems.

16. Blockchain or Cryptocurrency Tracker

Develop a simple blockchain or a cryptocurrency tracker. Showcase your understanding of blockchain technology.
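
The core idea of a simple blockchain, each block storing the hash of the one before it, can be sketched in a few lines. This is a toy illustration only: it has no proof-of-work, timestamps, or networking, and the block fields and function names are assumptions chosen for the example.

```python
import hashlib
import json

GENESIS_PREV = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, data, prev_hash):
    """Hash a block's contents deterministically with SHA-256."""
    payload = json.dumps(
        {"index": index, "data": data, "prev_hash": prev_hash}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def add_block(chain, data):
    """Append a block that links to the hash of the current last block."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_PREV
    index = len(chain)
    block = {
        "index": index,
        "data": data,
        "prev_hash": prev_hash,
        "hash": block_hash(index, data, prev_hash),
    }
    chain.append(block)
    return block


def is_valid(chain):
    """Check every link and every stored hash in the chain."""
    for i, block in enumerate(chain):
        expected_prev = chain[i - 1]["hash"] if i else GENESIS_PREV
        if block["prev_hash"] != expected_prev:
            return False
        if block["hash"] != block_hash(block["index"], block["data"], block["prev_hash"]):
            return False
    return True


if __name__ == "__main__":
    chain = []
    add_block(chain, "genesis")
    add_block(chain, "alice pays bob 5")
    print(is_valid(chain))
```

Editing the data of any earlier block changes its recomputed hash, so `is_valid` reports the tampering; that property is what a portfolio project would then build on.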

17. Open Source Contributions

Contribute to open source projects on platforms like GitHub. Demonstrate your collaboration and teamwork skills.

18. Network or Vulnerability Scanner

Build a network or vulnerability scanner to showcase your skills in cybersecurity.

19. Decentralized Application (DApp)

Create a decentralized application using a blockchain platform like Ethereum. Showcase your skills in developing applications on decentralized networks.

20. Machine Learning Model Deployment

Deploy a machine learning model as a web service using frameworks like Flask or FastAPI. Demonstrate your skills in model deployment and integration.

21. Financial Calculator

Build a financial calculator that incorporates relevant mathematical and financial concepts. Showcase your ability to create practical tools.
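
Two formulas that such a calculator would likely include are compound growth and the fixed payment on an amortized loan. The sketch below implements both; the function names and the choice of monthly compounding as the default are assumptions for illustration.

```python
def future_value(principal, annual_rate, years, periods_per_year=12):
    """Compound growth: FV = P * (1 + r/m) ** (m * t)."""
    rate = annual_rate / periods_per_year
    return principal * (1 + rate) ** (periods_per_year * years)


def monthly_payment(principal, annual_rate, years):
    """Fixed monthly payment on an amortized loan.

    Uses M = P * r / (1 - (1 + r) ** -n), where r is the monthly rate
    and n the number of monthly payments.
    """
    n = years * 12
    r = annual_rate / 12
    if r == 0:
        # With no interest the loan is simply split evenly.
        return principal / n
    return principal * r / (1 - (1 + r) ** -n)


if __name__ == "__main__":
    print(f"Future value: {future_value(1000, 0.05, 10):.2f}")
    print(f"Monthly payment: {monthly_payment(200_000, 0.06, 30):.2f}")
```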

22. Command-Line Tools

Develop command-line tools for tasks like file manipulation, data processing, or system monitoring. Highlight your skills in creating efficient and user-friendly command-line applications.

23. IoT-Based Health Monitoring System

Create an IoT-based health monitoring system that collects and analyzes health-related data. Showcase your ability to work on projects with social impact.

24. Facial Recognition System

Build a facial recognition system using Python and computer vision libraries. Showcase your skills in biometric technology.

25. Social Media Dashboard

Develop a social media dashboard that aggregates and displays data from various platforms. Highlight your skills in data visualization and integration.

Conclusion: As you embark on your job search in 2024, remember that a well-rounded portfolio is key to showcasing your skills and standing out from the crowd. These 25 Python projects cover a diverse range of domains, allowing you to tailor your portfolio to match your interests and the specific requirements of your dream job.

If you want to know more, click here: https://analyticsjobs.in/question/what-are-the-best-python-projects-to-land-a-great-job-in-2024/

#python projects#top python projects#best python projects#analytics jobs#python#coding#programming#machine learning

2 notes

Text

Collecting seller- and quantity-related data may provide the finest leads for you. Web Screen Scraping offers the best Walmart product data scraping services.

0 notes

Text

How to Extract Amazon Product Price Data with Python 3

Web scraping automates the extraction of data from websites. In this blog, we will create an Amazon product data scraper for scraping product prices and details. We will build this simple web extractor using SelectorLib and Python and run it in the console.

#webscraping#data extraction#web scraping api#Amazon Data Scraping#Amazon Product Pricing#ecommerce data scraping#Data EXtraction Services

3 notes

Note

Your post about ab3080 and ab1949 are great, but could you add a blurb quickly explaining why both of these bills are bad please ? might be useful and encourage people to reblog if they understand whats at stake

Both bills are essentially age verification requirement laws. AB 3080 explicitly, and AB 1949 implicitly.

AB 3080 strictly calls for dangerous age verification requirements for both adult websites and any website which sells products or services that are illegal for minors to access in California. While this may sound like a good idea on paper, it's important to keep in mind that any information that's put online is at risk of being extracted and used by bad actors like hackers, even if the law additionally requires that data be deleted after it's used for its intended purpose and that it not be used to trace what websites people access. The former provides very little protection from people who could access the identification databases used for verification, and the latter is frankly impossible to completely enforce and could at any time reasonably be used by the government or any surveilling entity to see what private citizens have been looking at, since their ID would be linked to the access and not anonymized.

AB 1949 is nominally meant to protect children from having their data collected and sold without permission on websites. However, by restricting this with an age limit, it opens up similar issues: it could make age verification a default requirement for any website that wants to avoid liability to users and the state.

23 notes

Text

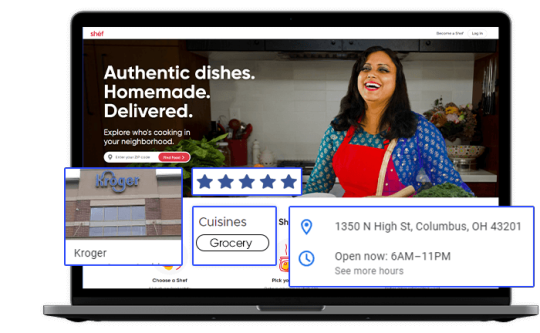

Tapping into Fresh Insights: Kroger Grocery Data Scraping

In today's data-driven world, the retail grocery industry is no exception when it comes to leveraging data for strategic decision-making. Kroger, one of the largest supermarket chains in the United States, offers a wealth of valuable data related to grocery products, pricing, customer preferences, and more. Extracting and harnessing this data through Kroger grocery data scraping can provide businesses and individuals with a competitive edge and valuable insights. This article explores the significance of grocery data extraction from Kroger, its benefits, and the methodologies involved.

The Power of Kroger Grocery Data

Kroger's extensive presence in the grocery market, both online and in physical stores, positions it as a significant source of data in the industry. This data is invaluable for a variety of stakeholders:

Kroger: The company can gain insights into customer buying patterns, product popularity, inventory management, and pricing strategies. This information empowers Kroger to optimize its product offerings and enhance the shopping experience.

Grocery Brands: Food manufacturers and brands can use Kroger's data to track product performance, assess market trends, and make informed decisions about product development and marketing strategies.

Consumers: Shoppers can benefit from Kroger's data by accessing information on product availability, pricing, and customer reviews, aiding in making informed purchasing decisions.

Benefits of Grocery Data Extraction from Kroger

Market Understanding: Extracted grocery data provides a deep understanding of the grocery retail market. Businesses can identify trends, competition, and areas for growth or diversification.

Product Optimization: Kroger and other retailers can optimize their product offerings by analyzing customer preferences, demand patterns, and pricing strategies. This data helps enhance inventory management and product selection.

Pricing Strategies: Monitoring pricing data from Kroger allows businesses to adjust their pricing strategies in response to market dynamics and competitor moves.

Inventory Management: Kroger grocery data extraction aids in managing inventory effectively, reducing waste, and improving supply chain operations.

Methodologies for Grocery Data Extraction from Kroger

To extract grocery data from Kroger, individuals and businesses can follow these methodologies:

Authorization: Ensure compliance with Kroger's terms of service and legal regulations. Authorization may be required for data extraction activities, and respecting privacy and copyright laws is essential.

Data Sources: Identify the specific data sources you wish to extract. Kroger's data encompasses product listings, pricing, customer reviews, and more.

Web Scraping Tools: Utilize web scraping tools, libraries, or custom scripts to extract data from Kroger's website. Common tools include Python libraries like BeautifulSoup and Scrapy.

Data Cleansing: Cleanse and structure the scraped data to make it usable for analysis. This may involve removing HTML tags, formatting data, and handling missing or inconsistent information.

Data Storage: Determine where and how to store the scraped data. Options include databases, spreadsheets, or cloud-based storage.

Data Analysis: Leverage data analysis tools and techniques to derive actionable insights from the scraped data. Visualization tools can help present findings effectively.

Ethical and Legal Compliance: Scrutinize ethical and legal considerations, including data privacy and copyright. Engage in responsible data extraction that aligns with ethical standards and regulations.

Scraping Frequency: Exercise caution regarding the frequency of scraping activities to prevent overloading Kroger's servers or causing disruptions.
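
The data cleansing and scraping frequency steps above might be sketched as follows. This is a minimal illustration using only the Python standard library; the record fields (`name`, `price`), the helper names, and the one-second default delay are assumptions, not anything specific to Kroger's site.

```python
import re
import time
from html import unescape

TAG_RE = re.compile(r"<[^>]+>")


def strip_html(text):
    """Remove tags, decode entities, and collapse whitespace."""
    return " ".join(unescape(TAG_RE.sub(" ", text)).split())


def parse_price(raw):
    """Turn strings like '$1,299.00' into a float; return None if missing."""
    if not raw:
        return None
    match = re.search(r"\d+(?:\.\d+)?", raw.replace(",", ""))
    return float(match.group()) if match else None


def clean_record(raw):
    """Normalize one scraped record, using None for missing values."""
    return {
        "name": strip_html(raw.get("name") or "") or None,
        "price": parse_price(raw.get("price")),
    }


def throttled(items, delay_seconds=1.0, sleep=time.sleep):
    """Yield items one at a time, pausing between them.

    Wrapping a list of URLs in this generator keeps the request rate low;
    `sleep` is injectable so the pause can be replaced in tests.
    """
    for i, item in enumerate(items):
        if i:
            sleep(delay_seconds)
        yield item


if __name__ == "__main__":
    raw = {"name": "<b>Mac &amp; Cheese</b>", "price": "$3.49 "}
    print(clean_record(raw))
```

A suitable delay depends on the site's terms of service and any published crawl guidance, so the default here should be treated as a placeholder.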

Conclusion

Kroger grocery data scraping opens the door to fresh insights for businesses, brands, and consumers in the grocery retail industry. By harnessing Kroger's data, retailers can optimize their product offerings and pricing strategies, while consumers can make more informed shopping decisions. However, it is crucial to prioritize ethical and legal considerations, including compliance with Kroger's terms of service and data privacy regulations. In the dynamic landscape of grocery retail, data is the key to unlocking opportunities and staying competitive. Grocery data extraction from Kroger promises to deliver fresh perspectives and strategic advantages in this ever-evolving industry.

#grocerydatascraping#restaurant data scraping#food data scraping services#food data scraping#fooddatascrapingservices#zomato api#web scraping services#grocerydatascrapingapi#restaurantdataextraction

4 notes

Text

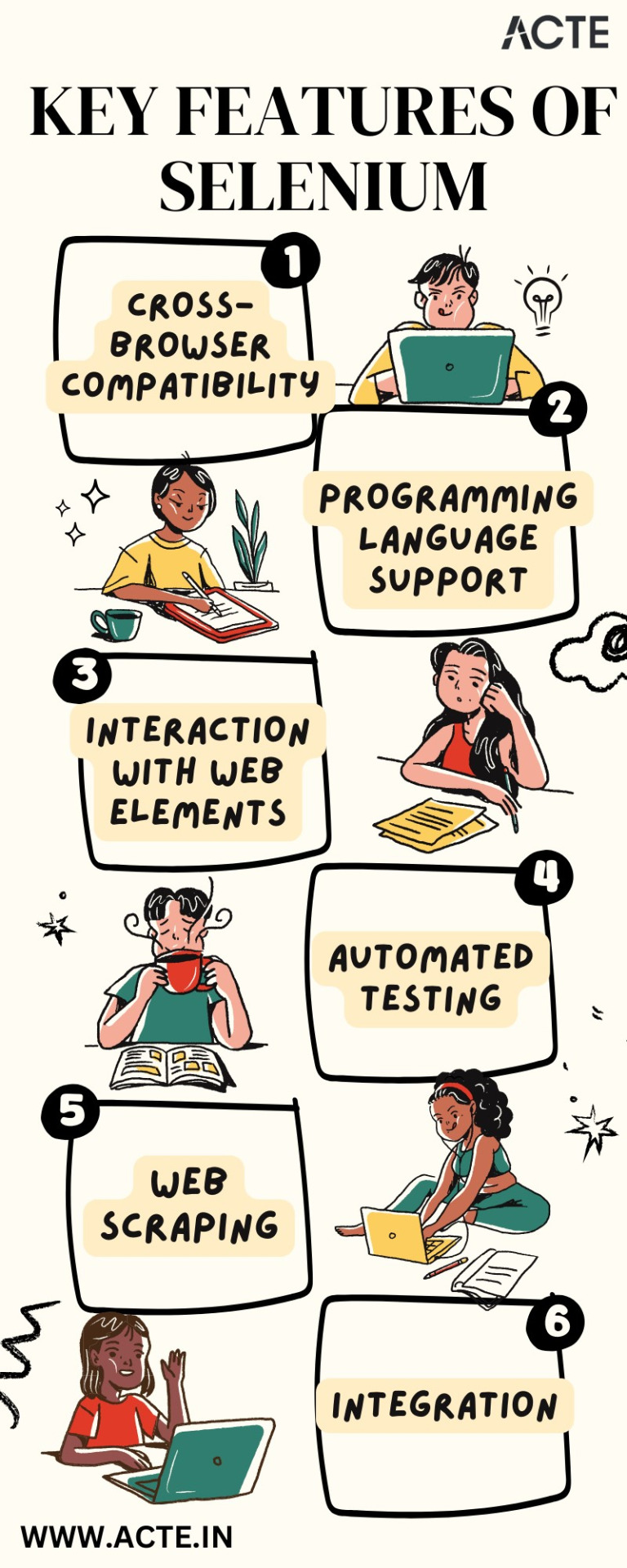

The Trendy Path to Web Automation: Selenium's Effortless Approach

In the vast expanse of the digital landscape, automation has emerged as the North Star, guiding us toward efficiency, precision, and productivity. It's in this realm of automated wonders that Selenium, a powerful open-source framework, takes center stage. Selenium isn't just a tool; it's a beacon of possibilities, a bridge between human intent and machine execution. As we embark on this journey, we'll dive deep into the multifaceted world of Selenium, exploring its key role in automating web browsers and unleashing its full potential across various domains.

Selenium: The Backbone of Web Automation

Selenium is not just a tool in your toolkit; it's the backbone that supports your web automation aspirations. It empowers a diverse community of developers, testers, and data enthusiasts to navigate the complex web of digital interactions with precision and finesse. It's more than lines of code; it's the key to unlocking a world where repetitive tasks melt away, and possibilities multiply.

1. Cross-Browser Compatibility: Bridging the Browser Divide

One of Selenium's defining strengths is its cross-browser compatibility. It extends a welcoming hand to an array of web browsers, from the familiarity of Chrome to the reliability of Firefox, the edge of Edge, and beyond. With Selenium as your ally, you can be assured that your web automation scripts will seamlessly traverse the digital landscape, transcending the vexing barriers of browser compatibility.

2. Programming Language Support: Versatility Unleashed

Selenium's versatility is the cornerstone of its appeal. It doesn't tie you down to a specific programming language; instead, it opens a world of possibilities. Whether you're fluent in the elegance of Java, the simplicity of Python, the resilience of C#, the agility of Ruby, or others, Selenium stands ready to complement your expertise.

3. Interaction with Web Elements: Crafting User Experiences

Web applications are complex ecosystems, abundant with buttons, text fields, dropdown menus, and a myriad of interactive elements. Selenium's prowess shines as it empowers you to interact with these web elements as if you were sitting in front of your screen, performing actions like clicking, typing, and scrolling with surgical precision. It's the tool you need to craft seamless user experiences through automation.

4. Automated Testing: Elevating Quality Assurance

In the realm of quality assurance, Selenium assumes the role of a vigilant guardian. Its automated testing capabilities are a testament to its commitment to quality. As a trusted ally, it carefully examines web applications, identifying issues, pinpointing regressions, and uncovering functional anomalies during the development phase. With Selenium by your side, you ensure the delivery of software that stands as a benchmark of quality and reliability.

5. Web Scraping: Harvesting Insights from the Digital Terrain

In the era of data-driven decision-making, web scraping is a strategic endeavor, and Selenium is your trusty companion. It equips you with the tools to extract data from websites, scrape valuable information, and store it for in-depth analysis or integration into other applications. With Selenium's data harvesting capabilities, you transform the digital terrain into a fertile ground for insights and innovation.

6. Integration: The Agile Ally

Selenium is not an isolated entity; it thrives in collaboration. Seamlessly integrating with an expansive array of testing frameworks and continuous integration (CI) tools, it becomes an agile ally in your software development lifecycle. It streamlines testing and validation processes, reducing manual effort, and fostering a cohesive development environment.

In conclusion, Selenium is not just a tool; it's the guiding light that empowers developers, testers, and data enthusiasts to navigate the complex realm of web automation. Its adaptability, cross-browser compatibility, and support for multiple programming languages have solidified its position as a cornerstone of modern web development and quality assurance.

Yet, Selenium is merely one part of your journey in the realm of technology. In a world that prizes continuous learning and professional growth, ACTE Technologies emerges as your trusted partner. Whether you're embarking on a new career, upskilling, or staying ahead in your field, ACTE Technologies offers tailored solutions and world-class resources.

Your journey to success commences here, where your potential knows no bounds. Welcome to a future filled with endless opportunities, fueled by Selenium and guided by ACTE Technologies. As you navigate this web automation odyssey, remember that the path ahead is illuminated by your curiosity, determination, and the unwavering support of your trusted partners.

4 notes
