#Food Data Scraping API


How to Utilize Foodpanda API: A Guide to Data Sets and Applications

In this blog, Actowiz Solutions takes a deep dive into the Foodpanda API, showing how creative solutions can revolutionize food delivery, enhance user experiences, and foster business growth in the ever-evolving world of online meal ordering.

#Foodpanda API #Foodpanda Food Data Scraping API #Food delivery Data Scraping API #Food Data Scraping API


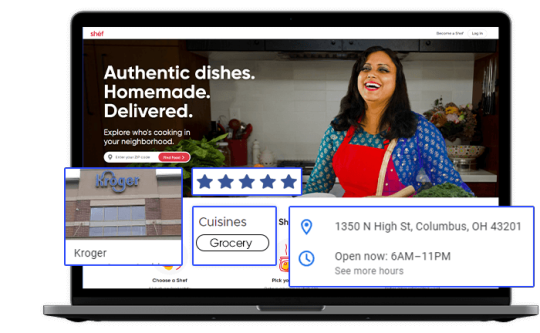

Kroger Grocery Data Scraping | Kroger Grocery Data Extraction

Shopping for groceries online at Kroger has become very common. At Foodspark, we scrape data from Kroger's grocery apps with our Kroger grocery data scraping API and convert it into useful patterns and statistics.

#food data scraping services #restaurantdataextraction #restaurant data scraping #web scraping services #grocerydatascraping #zomato api #fooddatascrapingservices #Scrape Kroger Grocery Data #Kroger Grocery Websites Apps #Kroger Grocery #Kroger Grocery data scraping company #Kroger Grocery Data #Extract Kroger Grocery Menu Data #Kroger grocery order data scraping services #Kroger Grocery Data Platforms #Kroger Grocery Apps #Mobile App Extraction of Kroger Grocery Delivery Platforms #Kroger Grocery delivery #Kroger grocery data delivery


Easily extract online food delivery data using the powerful Zomato API. Gain valuable insights, optimize operations, and make data-driven decisions for your business success.


Exploring the Uber Eats API: A Definitive Guide to Integration and Functionality

In this blog, we explore the types of data the Uber Eats API offers and show how they can be used to build engaging, practical meal-ordering apps.

#Uber Eats Data Scraping API #Scrape Uber Eats Data API #Extract Uber Eats Data #Scrape Food Delivery App Data #Food Delivery App Data Scraping


🌯 Chipotle’s Expansion Is Data-Rich—Are You Tapping Into It?

As food delivery competition intensifies, understanding where and how your competitors operate is crucial. Real Data API enables you to scrape Chipotle restaurant locations across the USA, providing granular insights to optimize delivery zones, launch strategies, and market positioning.

📍 Key Insights You Can Uncover:

🗺️ Identify Chipotle store distribution by city, ZIP code, or region.

🚚 Pinpoint delivery hotspots and underserved zones.

📊 Compare location density with third-party delivery platforms.

🧠 Improve last-mile logistics, competitive benchmarking & hyperlocal strategy.
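Once store locations have been collected, getting the distribution by city or ZIP code is a simple aggregation. The sketch below assumes each scraped record is a dict with hypothetical `city` and `zip` fields. The sample rows are placeholders, not real Chipotle data.

```python
from collections import Counter

# Hypothetical scraped store records; field names and values are placeholders.
stores = [
    {"store_id": "A1", "city": "Austin", "zip": "78701"},
    {"store_id": "A2", "city": "Austin", "zip": "78704"},
    {"store_id": "D1", "city": "Denver", "zip": "80202"},
    {"store_id": "A3", "city": "Austin", "zip": "78701"},
]

def distribution(records, field):
    """Count stores per value of `field` (e.g. 'city' or 'zip'), skipping blanks."""
    return Counter(r[field] for r in records if r.get(field))

by_city = distribution(stores, "city")
by_zip = distribution(stores, "zip")

# Densest areas first.
for city, count in by_city.most_common():
    print(f"{city}: {count}")
```

Joining these counts with delivery-platform coverage data, keyed on the same ZIP codes, supports the density comparison described above.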

💡 “In the food delivery economy, geography is your growth engine.”

📩 Contact us: [email protected]


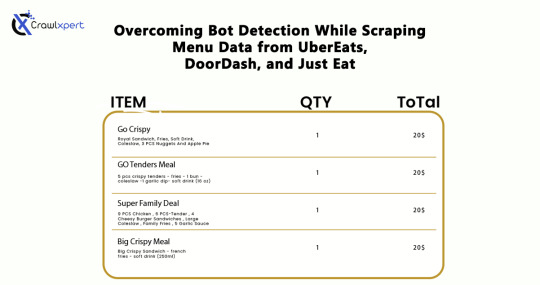

Overcoming Bot Detection While Scraping Menu Data from UberEats, DoorDash, and Just Eat

Introduction

Web scraping is well suited to collecting menu data from food delivery platforms such as UberEats, DoorDash, and Just Eat. However, these websites use elaborate bot detection methods to stop the automated collection of information. Overcoming them calls for advanced scraping techniques: rotating IPs, headless browsing, CAPTCHA solving, and AI-based methods.

This guide discusses how to bypass bot detection when scraping menu data, the challenges involved, and best practices for seamless and ethical data extraction.

Understanding Bot Detection on Food Delivery Platforms

1. Common Bot Detection Techniques

Food delivery platforms use various methods to block automated scrapers:

IP Blocking – Detects repeated requests from the same IP and blocks access.

User-Agent Tracking – Identifies and blocks requests whose User-Agent header is missing or matches known bots and automation tools.

CAPTCHA Challenges – Requires solving puzzles to verify human presence.

JavaScript Challenges – Runs scripts in the page to detect clients that cannot execute JavaScript or that load pages without any interaction.

Behavioral Analysis – Tracks mouse movements, scrolling, and keystrokes to differentiate bots from humans.

2. Rate Limiting and Request Patterns

Platforms monitor the frequency of requests coming from a specific IP or user session. If a scraper makes too many requests within a short time frame, it triggers rate limiting, causing the scraper to receive 403 Forbidden or 429 Too Many Requests errors.
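When a server answers with 429, the sensible reaction is to slow down rather than retry immediately. The helper below is a minimal sketch. It honours a numeric `Retry-After` header when one is present (the HTTP-date form of that header is not handled here). Otherwise it falls back to capped exponential backoff with random jitter. The `base` and `cap` values are illustrative assumptions.

```python
import random

RETRYABLE = {429, 503}  # statuses worth retrying later; 403 usually means "stop"

def backoff_delay(attempt, retry_after=None, base=1.0, cap=60.0):
    """Seconds to wait before retry number `attempt` (0-based).

    A numeric Retry-After value from the server always wins. Otherwise use
    exponential backoff with "full jitter": a random value between 0 and
    min(cap, base * 2**attempt).
    """
    if retry_after is not None and retry_after.strip().isdigit():
        return float(retry_after.strip())
    return random.uniform(0, min(cap, base * 2 ** attempt))

# Decide what to do for a few (status, Retry-After) pairs; no network calls here.
for status, header in [(429, "5"), (503, None), (403, None)]:
    if status in RETRYABLE:
        print(status, "-> wait", round(backoff_delay(1, header), 2), "seconds")
    else:
        print(status, "-> do not retry automatically")
```

Jitter spreads retries out so that many workers hitting the same limit do not all retry at the same instant.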

3. Device Fingerprinting

Many websites use sophisticated techniques to detect the unique attributes of a browser and device, such as screen resolution, installed plugins, and system fonts. If a scraper's fingerprint matches a known bot signature, it gets flagged.

Techniques to Overcome Bot Detection

1. IP Rotation and Proxy Management

Using a pool of rotating IPs helps avoid detection and blocking.

Use residential proxies instead of data center IPs.

Rotate IPs with each request to simulate different users.

Leverage proxy providers like Bright Data, ScraperAPI, and Smartproxy.

Use session-based (sticky) IPs when a sequence of requests, such as a multi-page session, must come from the same address.

2. Mimic Human Browsing Behavior

To appear more human-like, scrapers should:

Introduce random time delays between requests.

Use headless browsers like Puppeteer or Playwright to simulate real interactions.

Scroll pages and click elements programmatically to mimic real user behavior.

Randomize mouse movements and keyboard inputs.

Avoid loading pages at robotic speeds; introduce a natural browsing flow.

3. Bypassing CAPTCHA Challenges

Use automated CAPTCHA-solving services such as 2Captcha, Anti-Captcha, or DeathByCaptcha.

Use machine learning models to recognize and solve simple CAPTCHAs.

Avoid triggering CAPTCHAs by limiting request frequency and mimicking human navigation.

Employ AI-based CAPTCHA solvers that use pattern recognition to bypass common challenges.

4. Handling JavaScript-Rendered Content

Use Selenium, Puppeteer, or Playwright to interact with JavaScript-heavy pages.

Extract data directly from network requests instead of parsing the rendered HTML.

Load pages in a real browser engine rather than fetching raw HTML, since static scrapers are easier to detect.

Emulate browser interactions by executing JavaScript code as real users would.

Cache previously scraped data to minimize redundant requests.
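Many JavaScript-heavy menu pages fill themselves from a JSON response, so reading that payload is often simpler than parsing rendered HTML. The payload shape below is a hypothetical example: `categories` containing `items` with `name`, `price_cents` and an optional `available` flag. Real platforms use their own undocumented structures, and these change over time.

```python
import json

# Hypothetical JSON body captured from a menu page's network request.
payload = json.loads("""
{
  "restaurant": "Sample Taqueria",
  "categories": [
    {"name": "Burritos", "items": [
      {"name": "Bean Burrito", "price_cents": 799},
      {"name": "Steak Burrito", "price_cents": 1149}
    ]},
    {"name": "Drinks", "items": [
      {"name": "Horchata", "price_cents": 350, "available": false}
    ]}
  ]
}
""")

def flatten_menu(data):
    """Turn nested categories/items into flat rows ready for CSV or a database."""
    rows = []
    for category in data.get("categories", []):
        for item in category.get("items", []):
            rows.append({
                "restaurant": data.get("restaurant"),
                "category": category.get("name"),
                "item": item.get("name"),
                "price": item.get("price_cents", 0) / 100,
                # Treat a missing availability flag as available.
                "available": item.get("available", True),
            })
    return rows

for row in flatten_menu(payload):
    print(row)
```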

5. API-Based Extraction (Where Possible)

Some food delivery platforms offer APIs to access menu data. If available:

Check the official API documentation for pricing and access conditions.

Use API keys responsibly and avoid exceeding rate limits.

Combine API-based and web scraping approaches for optimal efficiency.
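Staying under an API's documented rate limit can be enforced on the client side. The sketch below is a minimal token bucket. The rate and capacity are illustrative, so set them from the provider's published limits. The injectable `clock` lets the logic be tested without real waiting.

```python
import time

class TokenBucket:
    """Client-side limiter: about `rate` requests per second, bursts up to `capacity`."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.clock = clock
        self.last = clock()

    def _refill(self):
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now

    def try_acquire(self):
        """Consume one token if available; return False if the caller must wait."""
        self._refill()
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

    def acquire(self):
        """Sleep until a token is available, then consume it."""
        while not self.try_acquire():
            time.sleep((1 - self.tokens) / self.rate)

bucket = TokenBucket(rate=2, capacity=2)  # at most ~2 requests per second
for page in range(3):
    bucket.acquire()
    print("request page", page)  # placeholder for the real API call
```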

6. Using AI for Advanced Scraping

Machine learning models can help scrapers adapt to evolving anti-bot measures by:

Detecting and avoiding honeypots designed to catch bots.

Using natural language processing (NLP) to extract and categorize menu data efficiently.

Predicting changes in website structure to maintain scraper functionality.

Best Practices for Ethical Web Scraping

While overcoming bot detection is necessary, ethical web scraping ensures compliance with legal and industry standards:

Respect Robots.txt – Follow site policies on data access.

Avoid Excessive Requests – Scrape efficiently to prevent server overload.

Use Data Responsibly – Extracted data should be used for legitimate business insights only.

Maintain Transparency – If possible, obtain permission before scraping sensitive data.

Ensure Data Accuracy – Validate extracted data to avoid misleading information.
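Python's standard library can check robots.txt rules before any page is requested. This is a minimal sketch that parses sample rules in memory. In practice you would call `set_url()` and `read()` against the site's real robots.txt. The user-agent name `MenuPriceBot` and the example.com URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Sample robots.txt content; real code would fetch it with rp.set_url(...) and rp.read().
ROBOTS_TXT = """
User-agent: *
Disallow: /checkout
Crawl-delay: 5
""".splitlines()

rp = RobotFileParser()
rp.parse(ROBOTS_TXT)
rp.modified()  # mark the rules as loaded so can_fetch() uses them

AGENT = "MenuPriceBot"  # hypothetical user-agent string
for url in ["https://example.com/menu/123", "https://example.com/checkout/cart"]:
    print(url, "allowed" if rp.can_fetch(AGENT, url) else "disallowed")

# Seconds to wait between requests, if the site specifies one (None otherwise).
print("crawl delay:", rp.crawl_delay(AGENT))
```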

Challenges and Solutions for Long-Term Scraping Success

1. Managing Dynamic Website Changes

Food delivery platforms frequently update their website structure. Strategies to mitigate this include:

Monitoring website changes with automated UI tests.

Using resilient XPath or CSS selectors tied to stable attributes instead of brittle, position-based element paths.

Implementing fallback scraping techniques in case of site modifications.
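Selector fallbacks can be as simple as an ordered list that is tried until one matches. The sketch below uses `xml.etree.ElementTree`, which ships with Python and supports a limited XPath subset. It only works on well-formed markup, so real pages would normally go through an HTML parser such as lxml. The fragment and both selectors are hypothetical. The first selector stands in for an old layout that no longer matches.

```python
import xml.etree.ElementTree as ET

# A simplified, well-formed menu fragment (hypothetical markup).
FRAGMENT = """
<div class="menu-item">
  <h3 class="dish-name">Veggie Bowl</h3>
  <span data-testid="item-price">$9.45</span>
</div>
"""

# Ordered from most to least preferred.
PRICE_SELECTORS = [
    ".//span[@class='price']",
    ".//*[@data-testid='item-price']",
]

def first_match(root, selectors):
    """Return (selector, text) for the first selector that finds a non-empty element."""
    for sel in selectors:
        el = root.find(sel)
        if el is not None and el.text:
            return sel, el.text.strip()
    return None, None  # nothing matched: flag the page for review

root = ET.fromstring(FRAGMENT.strip())
selector, price = first_match(root, PRICE_SELECTORS)
print(selector, price)
```

Logging which selector matched shows when a primary selector has stopped working. This makes a useful early warning that the site layout has changed.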

2. Avoiding Account Bans and Detection

If scraping requires logging into an account, prevent bans by:

Using multiple accounts to distribute request loads.

Avoiding excessive logins from the same device or IP.

Randomizing browser fingerprints using tools like Multilogin.

3. Cost Considerations for Large-Scale Scraping

Maintaining an advanced scraping infrastructure can be expensive. Cost optimization strategies include:

Using serverless functions to run scrapers on demand.

Choosing affordable proxy providers that balance performance and cost.

Optimizing scraper efficiency to reduce unnecessary requests.

Future Trends in Web Scraping for Food Delivery Data

As web scraping evolves, new advancements are shaping how businesses collect menu data:

AI-Powered Scrapers – Machine learning models will adapt more efficiently to website changes.

Increased Use of APIs – Companies will increasingly rely on API access instead of web scraping.

Stronger Anti-Scraping Technologies – Platforms will develop more advanced security measures.

Ethical Scraping Frameworks – Legal guidelines and compliance measures will become more standardized.

Conclusion

Uber Eats, DoorDash, and Just Eat pose significant challenges for menu data scraping, mainly because of their advanced bot detection systems. Nevertheless, by applying IP rotation, headless browsing, CAPTCHA solving, and JavaScript execution, augmented with AI tools, businesses can scrape valuable data without triggering anti-scraping measures.

If you need an automated and reliable web scraper, CrawlXpert specializes in tools and services that extract menu data efficiently while staying legally and ethically compliant. The right techniques, combined with attention to recent trends in web scraping, will keep food delivery data collection efforts successful well into the future.

Know More : https://www.crawlxpert.com/blog/scraping-menu-data-from-ubereats-doordash-and-just-eat

#ScrapingMenuDatafromUberEats #ScrapingMenuDatafromDoorDash #ScrapingMenuDatafromJustEat #ScrapingforFoodDeliveryData


Introduction - The Rise of On-Demand Delivery Platforms like Glovo

The global landscape of e-commerce and food delivery has witnessed an unprecedented transformation with the rise of on-demand delivery platforms. These platforms, including Glovo, have capitalized on the increasing demand for fast, convenient, and contactless delivery solutions. In 2020 alone, the global on-demand delivery industry was valued at over $100 billion and is projected to grow at a compound annual growth rate (CAGR) of 23% until 2027. The Glovo platform, which began in Spain, has expanded to more than 25 countries and 250+ cities worldwide, offering services ranging from restaurant deliveries to grocery and pharmaceutical goods.

The widespread use of smartphones and changing consumer habits have driven the growth of delivery services, making it a vital part of the modern retail ecosystem. Consumers now expect fast, accurate, and accessible delivery from local businesses, and platforms like Glovo have become key players in this demand. As businesses strive to stay competitive, Glovo Data Scraping plays an essential role in acquiring real-time insights and market intelligence.

On-demand delivery services are no longer a luxury but a necessity for businesses, and companies that harness reliable data will lead the charge. Let’s examine the growing need for accurate delivery data as we look deeper into the challenges faced by businesses relying on real-time information.

Real-Time Delivery Data Changes Frequently

While platforms like Glovo are revolutionizing the delivery landscape, one of the significant challenges businesses face is the inconsistency and volatility of real-time data. Glovo, like other on-demand services, operates in a dynamic environment where store availability, pricing, and inventory fluctuate frequently. A store’s listing can change based on delivery zones, operating hours, or ongoing promotions, making it difficult for businesses to rely on static data for decision-making.

For example, store availability can vary by time of day: some stores may not operate during off-hours, and a delivery fee can change based on the customer’s location. This variability, which Glovo Delivery Data Scraping has to capture, extends to pricing, with each delivery zone potentially charging different amounts for the same product depending on distance or demand.

This constant flux in data can lead to several challenges, such as inconsistent pricing strategies, missed revenue opportunities, and poor customer experience. Moreover, with shared URLs for chains like McDonald’s or KFC, Glovo Scraper API tools must be precise in extracting data across multiple store locations to ensure data accuracy.

The problem becomes even more significant when businesses need to rely on data for forecasting, marketing, and real-time decision-making. Glovo API Scraping and other advanced scraping methods offer a potential solution, helping to fill the gaps in data accuracy.

Stay ahead of the competition by leveraging Glovo Data Scraping for accurate, real-time delivery data insights. Contact us today!


The Need for Glovo Data Scraping to Maintain Reliable Business Intelligence

As businesses struggle to keep up with the ever-changing dynamics of Glovo’s delivery data, the importance of reliable data extraction becomes more evident. Glovo Data Scraping offers a powerful solution for companies seeking accurate, real-time data that can support decision-making and business intelligence. Unlike traditional methods of manually tracking updates, automated scraping using Glovo Scraper tools can continuously fetch the latest store availability, menu items, pricing, and delivery conditions.

Utilizing Glovo API Scraping ensures that businesses have regular access to the most up-to-date and accurate data, mitigating the challenges posed by fluctuating delivery conditions. Whether it’s using Glovo Restaurant Data Scraping to monitor competitive pricing or Glovo Menu Data Extraction to support inventory management, data scraping empowers businesses to optimize operations and gain an edge over competitors.

Moreover, Glovo Delivery Data Scraping ensures that companies can monitor changes in delivery fees, product availability, and pricing models, allowing them to adapt their strategies to real-time conditions. For companies in sectors like Q-commerce, which depend heavily on timely and accurate data, integrating Scrape Glovo Data into their data pipelines can dramatically enhance operational efficiency and business forecasting.

Through intelligent Glovo Scraper API solutions, companies can bridge the data gap and create more informed strategies to capture market opportunities.

The Problems with Glovo’s Real-Time Data

Glovo, a major player in the on-demand delivery ecosystem, faces challenges in providing accurate and consistent data to its users. These issues can lead to discrepancies in business intelligence, making it difficult for organizations to rely on the platform for accurate decision-making. Several critical problems hinder the effective use of Glovo Data Scraping and Glovo API Scraping. Let’s explore these problems in detail.

1. Glovo Only Shows Stores That Are Online at the Moment

One of the primary issues with Glovo is that it only displays stores that are currently online, which means businesses may miss potential opportunities. Store availability can fluctuate rapidly throughout the day, and a business may only see a partial picture of the stores operating at any given time. This makes it difficult to make decisions based on a consistent dataset, especially for those relying on real-time data.

To address this issue, companies must use Web Scraping Glovo Delivery Data to scrape data multiple times a day. By performing automated scraping at different intervals, businesses can ensure they gather complete data and avoid gaps caused by the transient nature of store availability.
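Each scrape only sees the stores that are online at that moment, so a fuller store list comes from merging several snapshots taken through the day. This is a minimal sketch in which the snapshot times and store IDs are hypothetical. A real pipeline would persist snapshots rather than hold them in memory.

```python
from collections import defaultdict

# Hypothetical snapshots: scrape time -> store IDs visible as online at that time.
snapshots = {
    "08:00": {"store_1", "store_2"},
    "13:00": {"store_2", "store_3", "store_4"},
    "21:00": {"store_3", "store_5"},
}

def merge_snapshots(snaps):
    """Union all snapshots and record at which times each store was seen online."""
    seen_at = defaultdict(list)
    for ts in sorted(snaps):  # zero-padded HH:MM strings sort chronologically
        for store_id in snaps[ts]:
            seen_at[store_id].append(ts)
    return dict(seen_at)

stores = merge_snapshots(snapshots)
print(len(stores), "distinct stores across", len(snapshots), "snapshots")
for store_id in sorted(stores):
    print(store_id, stores[store_id])
```

The "seen at" lists also give a rough picture of each store's operating window.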

2. Listings Vary by Time of Day and Delivery Radius

Another challenge is the variation in store listings by time of day and delivery radius. Due to Glovo’s dynamic delivery system, the availability of stores changes based on the user’s delivery location and the time of day. A restaurant that is available in the morning may not be available in the evening, or it may charge different delivery fees depending on the delivery zone. This introduces significant volatility in data that businesses must account for.

The solution is to Scrape Glovo Data using location-based API scraping techniques. With the right strategies, Glovo Scraper API tools can be programmed to fetch this data by specific delivery zones, ensuring a more accurate representation of store listings.
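Location-based scraping usually means repeating the same listing query for a set of delivery coordinates. The sketch below builds an evenly spaced grid of query points over a bounding box. The box and the 0.02° step (about 2.2 km north-south) are hypothetical values to tune against the platform's real delivery radius. The scraping call itself is left as a placeholder.

```python
def grid_points(lat_min, lat_max, lon_min, lon_max, step):
    """Yield (lat, lon) points spaced `step` degrees apart, including the edges."""
    n_lat = round((lat_max - lat_min) / step)
    n_lon = round((lon_max - lon_min) / step)
    for i in range(n_lat + 1):
        for j in range(n_lon + 1):
            yield (round(lat_min + i * step, 6), round(lon_min + j * step, 6))

# Hypothetical bounding box and step.
points = list(grid_points(41.37, 41.41, 2.13, 2.19, 0.02))
print(len(points), "query locations")

for lat, lon in points[:3]:
    # Placeholder: pass each point to your own zone-aware scraper or API call.
    print(f"scrape listings for lat={lat}, lon={lon}")
```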

3. Shared URLs Across Multiple Branches Complicate Precise Location Tracking

For larger chains like McDonald's or KFC, Glovo often uses a single URL to represent multiple store branches within the same city. This means that all data tied to a single restaurant chain will be lumped together, even though there may be differences in location, inventory, and pricing. Such discrepancies complicate accurate data collection and make it harder to pinpoint specific store information.

The answer lies in Glovo Restaurant Data Scraping. By utilizing advanced scraping tools like Glovo Scraper and incorporating specific store locations within the scraping process, businesses can separate out data for each branch and ensure a more accurate dataset.
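When one chain URL covers several branches, each record needs a key that includes branch-level detail. This is a minimal sketch. It assumes the scraper already captures a branch address or store identifier alongside the shared URL. The field names, URL and prices are hypothetical.

```python
from collections import defaultdict

# Hypothetical records: one shared chain URL, two branches, different prices.
records = [
    {"url": "https://example.com/city/chain-a", "branch": "Calle Mayor 1", "item": "Burger", "price": 5.50},
    {"url": "https://example.com/city/chain-a", "branch": "Gran Via 20", "item": "Burger", "price": 5.90},
    {"url": "https://example.com/city/chain-a", "branch": "Calle Mayor 1", "item": "Fries", "price": 2.50},
]

def split_by_branch(rows):
    """Group rows by (shared URL, normalized branch) so each branch keeps its own menu."""
    branches = defaultdict(dict)
    for row in rows:
        key = (row["url"], row["branch"].strip().lower())
        branches[key][row["item"]] = row["price"]
    return dict(branches)

for (url, branch), menu in split_by_branch(records).items():
    print(branch, menu)
```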

4. Gaps in Sitemap Coverage and Dynamic Delivery-Based Pricing Add Complexity

Glovo's sitemap often lacks comprehensive coverage of all stores, which further complicates data extraction. For example, some cities may have incomplete data on restaurant availability or listings may be outdated. Additionally, dynamic pricing based on delivery distance, demand, and time of day adds another layer of complexity. Pricing variations can be difficult to track accurately, especially for businesses that require up-to-date data for competitive pricing strategies.

Glovo Pricing Data Scraping can help resolve this issue by extracting dynamic pricing from multiple locations, ensuring businesses always have the most current pricing information. With Glovo Delivery Data Scraping, companies can access detailed pricing data in real-time and adjust their strategies based on accurate, up-to-date information.

By addressing these challenges through smart Glovo Data Scraping and leveraging technologies like Glovo Scraper API and Glovo Delivery Data Scraping, businesses can collect more accurate and reliable data, enabling them to adapt more effectively to the fluctuations in real-time delivery information. These tools help streamline data collection, making it easier for businesses to stay competitive in a fast-moving market.

#LocationBasedAPIScraping #GlovoMenuDataExtraction #GlovoScraperAPITools #GlovoDeliveryDataScraping #GlovoDataScraping


How ArcTechnolabs Builds Grocery Pricing Datasets in UK & Australia

Introduction

In 2025, real-time grocery price intelligence is mission-critical for FMCG brands, retailers, and grocery tech startups...

ArcTechnolabs specializes in building ready-to-use grocery pricing datasets that enable fast, reliable, and granular price comparisons...

Why Focus on the UK and Australia for Grocery Price Intelligence?

The grocery and FMCG sectors in both regions are undergoing massive digitization...

Key Platforms Tracked by ArcTechnolabs:

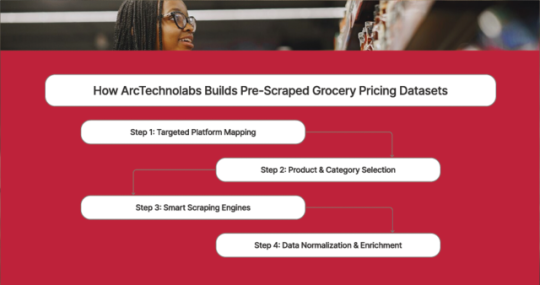

How ArcTechnolabs Builds Pre-Scraped Grocery Pricing Datasets

Step 1: Targeted Platform Mapping

UK: Tesco (Superstore), Ocado (Online-only)

AU: Coles (urban + suburban), Woolworths (nationwide chain)

Step 2: SKU Categorization

Dairy

Snacks & Beverages

Staples (Rice, Wheat, Flour)

Household & Personal Care

Fresh Produce (location-based)

Step 3: Smart Scraping Engines

Rotating proxies

Headless browsers

Captcha solvers

Throttling logic

Step 4: Data Normalization & Enrichment

Product names, pack sizes, units, currency

Price history, stock status, delivery time
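Normalizing pack sizes makes unit prices comparable across retailers. The sketch below pulls a size such as "380g" or "2L" out of a product title and converts it to grams or millilitres. The regex and the unit table are assumptions that cover only g, kg, ml and l. Prices are passed as strings so that `Decimal` avoids floating-point rounding.

```python
import re
from decimal import Decimal

# Conversion to a base unit per dimension (grams or millilitres).
UNIT_FACTORS = {"g": ("g", Decimal(1)), "kg": ("g", Decimal(1000)),
                "ml": ("ml", Decimal(1)), "l": ("ml", Decimal(1000))}

PACK_RE = re.compile(r"(\d+(?:\.\d+)?)\s*(kg|g|ml|l)\b", re.IGNORECASE)

def parse_pack_size(title):
    """Find a size like '380g' or '2L' in a title; return (quantity, base_unit) or None."""
    m = PACK_RE.search(title)
    if not m:
        return None
    qty, unit = Decimal(m.group(1)), m.group(2).lower()
    base_unit, factor = UNIT_FACTORS[unit]
    return qty * factor, base_unit

def unit_price(price, title, per=100):
    """Price per `per` base units (e.g. per 100 g or 100 ml), rounded to 4 places."""
    size = parse_pack_size(title)
    if size is None:
        return None
    qty, base_unit = size
    return (Decimal(price) / qty * per).quantize(Decimal("0.0001")), base_unit

print(parse_pack_size("Coca-Cola 2L"))
print(unit_price("5.20", "Vegemite 380g"))
```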

Sample Dataset: UK Grocery (Tesco vs Sainsbury’s)

| Product | Tesco Price | Sainsbury’s Price | Tesco Discount | Stock |
|---|---|---|---|---|
| 1L Semi-Skimmed Milk | £1.15 | £1.10 | None | In Stock |
| Hovis Wholemeal Bread | £1.35 | £1.25 | £0.10 | In Stock |
| Coca-Cola 2L | £2.00 | £1.85 | 7.5% | In Stock |

Sample Dataset: Australian Grocery (Coles vs Woolworths)

Product Comparison – Coles vs Woolworths

Vegemite 380g

--------------------

Coles: AUD 5.20 | Woolworths: AUD 4.99

Difference: AUD 0.21

Discount: No

Dairy Farmers Milk 2L

---------------------------------

Coles: AUD 4.50 | Woolworths: AUD 4.20

Difference: AUD 0.30

Discount: Yes

Uncle Tobys Oats

------------------------------

Coles: AUD 3.95 | Woolworths: AUD 4.10

Difference: -AUD 0.15 (cheaper at Coles)

Discount: No
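The "Difference" lines above are the Coles price minus the Woolworths price, so a negative value means Coles is cheaper. The sketch below reproduces them from the sample figures with `Decimal`, which keeps currency arithmetic exact.

```python
from decimal import Decimal

# Sample prices from the Coles vs Woolworths comparison above (AUD).
prices = {
    "Vegemite 380g": ("5.20", "4.99"),
    "Dairy Farmers Milk 2L": ("4.50", "4.20"),
    "Uncle Tobys Oats": ("3.95", "4.10"),
}

def compare(coles, woolworths):
    """Return (Coles minus Woolworths, cheaper retailer)."""
    diff = Decimal(coles) - Decimal(woolworths)
    if diff > 0:
        cheaper = "Woolworths"
    elif diff < 0:
        cheaper = "Coles"
    else:
        cheaper = "same price"
    return diff, cheaper

for product, (coles, woolies) in prices.items():
    diff, cheaper = compare(coles, woolies)
    print(f"{product}: difference AUD {diff} (cheaper: {cheaper})")
```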

What’s Included in ArcTechnolabs’ Datasets?

Attribute Overview for Grocery Product Data:

Product Name: Full title with brand and variant

Category/Subcategory: Structured food/non-food grouping

Retailer Name: Tesco, Sainsbury’s, etc.

Original Price: Base MRP

Offer Price: Discounted/sale price

Discount %: Auto-calculated

Stock Status: In stock, limited, etc.

Unit of Measure: kg, liter, etc.

Scrape Timestamp: Last updated time

Region/City: London, Sydney, etc.
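The attribute list maps naturally onto a typed record. This is a sketch of one possible schema, not ArcTechnolabs' actual format. "Discount %" is derived from the original and offer prices, matching its description as auto-calculated. The product, retailer and price values are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from decimal import Decimal, ROUND_HALF_UP
from typing import Optional

@dataclass
class GroceryProduct:
    # Illustrative field names mirroring the attribute list above.
    product_name: str
    category: str
    retailer: str
    original_price: Decimal
    offer_price: Optional[Decimal]  # None when there is no sale price
    stock_status: str
    unit_of_measure: str
    region: str
    scraped_at: datetime

    @property
    def discount_pct(self) -> Decimal:
        """Percentage off the original price, rounded to one decimal place."""
        if self.offer_price is None or self.original_price <= 0:
            return Decimal("0.0")
        pct = (self.original_price - self.offer_price) / self.original_price * 100
        return pct.quantize(Decimal("0.1"), rounding=ROUND_HALF_UP)

item = GroceryProduct(
    product_name="Example Cola 2L",
    category="Snacks & Beverages",
    retailer="Example Retailer",
    original_price=Decimal("2.00"),
    offer_price=Decimal("1.85"),
    stock_status="In Stock",
    unit_of_measure="liter",
    region="London",
    scraped_at=datetime.now(timezone.utc),
)
print(item.product_name, item.discount_pct)
```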

Use Cases for FMCG Brands & Retailers

Competitor Price Monitoring – Compare real-time prices across platforms.

Retailer Negotiation – Use data insights in B2B talks.

Promotion Effectiveness – Check if discounts drive sales.

Price Comparison Apps – Build tools for end consumers.

Trend Forecasting – Analyze seasonal price patterns.

Delivery & Formats

Formats: CSV, Excel, API JSON

Frequencies: Real-time, Daily, Weekly

Custom Options: Region, brand, platform-specific, etc.

Book a discovery call today at ArcTechnolabs.com/contact

Conclusion

ArcTechnolabs delivers grocery pricing datasets with unmatched speed, scale, and geographic depth for brands operating in the dynamic FMCG ecosystems of the UK and Australia.

Source >> https://www.arctechnolabs.com/arctechnolabs-grocery-pricing-datasets-uk-australia.php

#ReadyToUseGroceryPricingDatasets #AustraliaGroceryProductDataset #TimeSeriesUKSupermarketData #WebScrapingGroceryPricesDataset #GroceryPricingDatasetsUKAustralia #RetailPricingDataForQCommerce #ArcTechnolabs


📊 Unlock Deeper Food Delivery Intelligence with City-Wise Menu Trend Analysis Using Zomato & Swiggy Scraping API

In today's dynamic food delivery landscape, staying relevant means understanding how preferences shift not just nationally—but city by city. By harnessing the power of our #ZomatoScrapingAPI and #SwiggyScrapingAPI, businesses can extract granular data to reveal #menu trends, #dish popularity, #pricing variations, and #regional consumer preferences across urban centers.

Whether you're a #restaurant chain planning regional expansion, a #foodtech startup refining your offerings, or a #marketresearch firm delivering insights to clients—real-time, city-specific menu analytics are essential.

With our robust scraping solution, you can:

✔️ Analyze which items are trending in key metro areas

✔️ Adjust your menu for hyperlocal appeal

✔️ Monitor competitor offerings and pricing strategies

✔️ Predict demand patterns based on regional consumption behavior

This level of #data granularity not only boosts operational efficiency but also helps refine marketing strategies, product positioning, and business forecasting.
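City-wise trend analysis reduces to counting dishes per city once listings have been scraped. The sketch below is a minimal example on hypothetical (city, dish) pairs. It uses listing frequency as a simple popularity proxy. Stronger signals such as ratings or order counts depend on what the Zomato or Swiggy data actually exposes, and this is not the interface of any specific scraping API.

```python
from collections import Counter, defaultdict

# Hypothetical scraped listings: (city, dish) pairs.
listings = [
    ("Bengaluru", "Masala Dosa"), ("Bengaluru", "Biryani"), ("Bengaluru", "masala dosa "),
    ("Delhi", "Butter Chicken"), ("Delhi", "Biryani"), ("Delhi", "Butter Chicken"),
    ("Mumbai", "Vada Pav"), ("Mumbai", "Biryani"),
]

def top_dishes_by_city(rows, n=2):
    """Return the n most frequently listed dishes per city (names normalized)."""
    counts = defaultdict(Counter)
    for city, dish in rows:
        counts[city][dish.strip().title()] += 1
    return {city: c.most_common(n) for city, c in counts.items()}

result = top_dishes_by_city(listings)
for city, top in sorted(result.items()):
    print(city, top)
```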


Tapping into Fresh Insights: Kroger Grocery Data Scraping

In today's data-driven world, the retail grocery industry is no exception when it comes to leveraging data for strategic decision-making. Kroger, one of the largest supermarket chains in the United States, offers a wealth of valuable data related to grocery products, pricing, customer preferences, and more. Extracting and harnessing this data through Kroger grocery data scraping can provide businesses and individuals with a competitive edge and valuable insights. This article explores the significance of grocery data extraction from Kroger, its benefits, and the methodologies involved.

The Power of Kroger Grocery Data

Kroger's extensive presence in the grocery market, both online and in physical stores, positions it as a significant source of data in the industry. This data is invaluable for a variety of stakeholders:

Kroger: The company can gain insights into customer buying patterns, product popularity, inventory management, and pricing strategies. This information empowers Kroger to optimize its product offerings and enhance the shopping experience.

Grocery Brands: Food manufacturers and brands can use Kroger's data to track product performance, assess market trends, and make informed decisions about product development and marketing strategies.

Consumers: Shoppers can benefit from Kroger's data by accessing information on product availability, pricing, and customer reviews, aiding in making informed purchasing decisions.

Benefits of Grocery Data Extraction from Kroger

Market Understanding: Extracted grocery data provides a deep understanding of the grocery retail market. Businesses can identify trends, competition, and areas for growth or diversification.

Product Optimization: Kroger and other retailers can optimize their product offerings by analyzing customer preferences, demand patterns, and pricing strategies. This data helps enhance inventory management and product selection.

Pricing Strategies: Monitoring pricing data from Kroger allows businesses to adjust their pricing strategies in response to market dynamics and competitor moves.

Inventory Management: Kroger grocery data extraction aids in managing inventory effectively, reducing waste, and improving supply chain operations.

Methodologies for Grocery Data Extraction from Kroger

To extract grocery data from Kroger, individuals and businesses can follow these methodologies:

Authorization: Ensure compliance with Kroger's terms of service and legal regulations. Authorization may be required for data extraction activities, and respecting privacy and copyright laws is essential.

Data Sources: Identify the specific data sources you wish to extract. Kroger's data encompasses product listings, pricing, customer reviews, and more.

Web Scraping Tools: Utilize web scraping tools, libraries, or custom scripts to extract data from Kroger's website. Common tools include Python libraries like BeautifulSoup and Scrapy.

Data Cleansing: Cleanse and structure the scraped data to make it usable for analysis. This may involve removing HTML tags, formatting data, and handling missing or inconsistent information.

Data Storage: Determine where and how to store the scraped data. Options include databases, spreadsheets, or cloud-based storage.

Data Analysis: Leverage data analysis tools and techniques to derive actionable insights from the scraped data. Visualization tools can help present findings effectively.

Ethical and Legal Compliance: Scrutinize ethical and legal considerations, including data privacy and copyright. Engage in responsible data extraction that aligns with ethical standards and regulations.

Scraping Frequency: Exercise caution regarding the frequency of scraping activities to prevent overloading Kroger's servers or causing disruptions.
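The cleansing and storage steps can be sketched with the standard library alone. Here `html.parser` stands in for BeautifulSoup and `sqlite3` stands in for a production database. The raw rows are hypothetical scraper output, not real Kroger data, and the price parser assumes US-style "$1,234.56" strings.

```python
import sqlite3
from html.parser import HTMLParser

class TextExtractor(HTMLParser):
    """Collect text content and drop tags."""
    def __init__(self):
        super().__init__()
        self.parts = []

    def handle_data(self, data):
        self.parts.append(data)

def strip_tags(html):
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join("".join(parser.parts).split())  # also collapse whitespace

def parse_price(text):
    """'$3.49' -> 3.49; None for missing or unparseable prices."""
    cleaned = text.replace("$", "").replace(",", "").strip()
    try:
        return float(cleaned)
    except ValueError:
        return None

# Hypothetical raw rows as they might come out of a scraper.
raw = [
    {"name": "<span>Organic  Bananas</span>", "price": "$0.69"},
    {"name": "<b>Whole Milk</b>, 1 gal", "price": "$3.49"},
    {"name": "<span>Sourdough Bread</span>", "price": ""},
]
rows = [(strip_tags(r["name"]), parse_price(r["price"])) for r in raw]

conn = sqlite3.connect(":memory:")  # use a file path for persistent storage
conn.execute("CREATE TABLE products (name TEXT NOT NULL, price REAL)")
conn.executemany("INSERT INTO products (name, price) VALUES (?, ?)", rows)
conn.commit()

for name, price in conn.execute("SELECT name, price FROM products ORDER BY name"):
    print(name, price)
```

Storing a missing price as NULL rather than 0 keeps the gap visible for later analysis.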

Conclusion

Kroger grocery data scraping opens the door to fresh insights for businesses, brands, and consumers in the grocery retail industry. By harnessing Kroger's data, retailers can optimize their product offerings and pricing strategies, while consumers can make more informed shopping decisions. However, it is crucial to prioritize ethical and legal considerations, including compliance with Kroger's terms of service and data privacy regulations. In the dynamic landscape of grocery retail, data is the key to unlocking opportunities and staying competitive. Grocery data extraction from Kroger promises to deliver fresh perspectives and strategic advantages in this ever-evolving industry.

#grocerydatascraping #restaurant data scraping #food data scraping services #food data scraping #fooddatascrapingservices #zomato api #web scraping services #grocerydatascrapingapi #restaurantdataextraction


Monitor Competitor Pricing with Food Delivery Data Scraping

In the highly competitive food delivery industry, pricing can be the deciding factor between winning and losing a customer. With the rise of aggregators like DoorDash, Uber Eats, Zomato, Swiggy, and Grubhub, users can compare restaurant options, menus, and—most importantly—prices in just a few taps. To stay ahead, food delivery businesses must continually monitor how competitors are pricing similar items. And that’s where food delivery data scraping comes in.

Data scraping enables restaurants, cloud kitchens, and food delivery platforms to gather real-time competitor data, analyze market trends, and adjust strategies proactively. In this blog, we’ll explore how to use web scraping to monitor competitor pricing effectively, the benefits it offers, and how to do it legally and efficiently.

What Is Food Delivery Data Scraping?

Data scraping is the automated process of extracting information from websites. In the food delivery sector, this means using tools or scripts to collect data from food delivery platforms, restaurant listings, and menu pages.

What Can Be Scraped?

Menu items and categories

Product pricing

Delivery fees and taxes

Discounts and special offers

Restaurant ratings and reviews

Delivery times and availability

This data is invaluable for competitive benchmarking and dynamic pricing strategies.

Why Monitoring Competitor Pricing Matters

1. Stay Competitive in Real Time

Consumers often choose based on pricing. If your competitor offers a similar dish for less, you may lose the order. Monitoring competitor prices lets you react quickly to price changes and stay attractive to customers.

2. Optimize Your Menu Strategy

Scraped data helps identify:

Popular food items in your category

Price points that perform best

How competitors bundle or upsell meals

This allows for smarter decisions around menu engineering and profit margin optimization.

3. Understand Regional Pricing Trends

If you operate across multiple locations or cities, scraping competitor data gives insights into:

Area-specific pricing

Demand-based variation

Local promotions and discounts

This enables geo-targeted pricing strategies.

4. Identify Gaps in the Market

Maybe no competitor offers free delivery during weekdays or a combo meal under $10. Real-time data helps spot such gaps and create offers that attract value-driven users.

How Food Delivery Data Scraping Works

Step 1: Choose Your Target Platforms

Most scraping projects start with identifying where your competitors are listed. Common targets include:

Aggregators: Uber Eats, Zomato, DoorDash, Grubhub

Direct restaurant websites

POS platforms (where available)

Step 2: Define What You Want to Track

Set scraping goals. For pricing, track:

Base prices of dishes

Add-ons and customization costs

Time-sensitive deals

Delivery fees by location or vendor

Step 3: Use Web Scraping Tools or Custom Scripts

You can either:

Use scraping tools such as Octoparse, ParseHub, or Apify, or

Build custom scripts in Python using libraries like BeautifulSoup, Selenium, or Scrapy

These tools automate the extraction of relevant data and organize it in a structured format (CSV, Excel, or database).

Step 4: Automate Scheduling and Alerts

Set scraping intervals (daily, hourly, weekly) and create alerts for major pricing changes. This ensures your team is always equipped with the latest data.
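Alerts for major pricing changes can be produced by comparing the latest snapshot with the previous one. The sketch below is a minimal example. The restaurants, items, prices and the 5% threshold are hypothetical. Delivering the alerts by email, chat or other channels is left out.

```python
from decimal import Decimal

# Hypothetical snapshots keyed by (restaurant, item) -> price.
previous = {("Rival Grill", "Chicken Wrap"): Decimal("8.99"),
            ("Rival Grill", "Fries"): Decimal("3.49"),
            ("Spice Hub", "Veg Biryani"): Decimal("11.50")}
current = {("Rival Grill", "Chicken Wrap"): Decimal("7.99"),
           ("Rival Grill", "Fries"): Decimal("3.59"),
           ("Spice Hub", "Paneer Roll"): Decimal("6.25")}

def price_alerts(old, new, threshold_pct=Decimal("5")):
    """Yield alerts for price moves >= threshold_pct, new items and removed items."""
    for key in sorted(old.keys() | new.keys()):
        before, after = old.get(key), new.get(key)
        if before is None:
            yield f"NEW {key[0]} / {key[1]} at {after}"
        elif after is None:
            yield f"REMOVED {key[0]} / {key[1]} (was {before})"
        else:
            change = (after - before) / before * 100
            if abs(change) >= threshold_pct:
                yield f"PRICE {key[0]} / {key[1]}: {before} -> {after} ({change:+.1f}%)"

for alert in price_alerts(previous, current):
    print(alert)
```

Run on a schedule (for example from cron), this turns raw snapshots into the short list of changes a team actually needs to review.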

Step 5: Analyze the Data

Feed the scraped data into BI tools like Power BI, Google Data Studio (now Looker Studio), or Tableau to identify patterns and inform strategic decisions.

Tools and Technologies for Effective Scraping

Popular Tools:

Scrapy: Python-based framework perfect for complex projects

BeautifulSoup: Great for parsing HTML and small-scale tasks

Selenium: Ideal for scraping dynamic pages with JavaScript

Octoparse: No-code solution with scheduling and cloud support

Apify: Advanced, scalable platform with ready-to-use APIs

Hosting and Automation:

Use cron jobs or task schedulers for automation

Store data on cloud databases like AWS RDS, MongoDB Atlas, or Google BigQuery

Legal Considerations: Is It Ethical to Scrape Food Delivery Platforms?

This is a critical aspect of scraping.

Understand Platform Terms

Many websites explicitly state in their Terms of Service that scraping is not allowed. Scraping such platforms can violate those terms, even if it’s not technically illegal.

Avoid Harming Website Performance

Always scrape responsibly:

Use rate limiting to avoid overloading servers

Respect robots.txt files

Avoid scraping login-protected or personal user data
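The first two practices can be built into a scraper with Python's standard library. This sketch checks a URL against a robots.txt body with `urllib.robotparser` and enforces a randomized gap between requests. The user-agent string and the 2 to 5 second range are assumptions, not guidance from any platform. Following robots.txt does not replace reading the Terms of Service.

```python
import random
import time
import urllib.robotparser


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Check a URL against an already-downloaded robots.txt body."""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


class PoliteThrottle:
    """Enforces a randomized minimum gap between consecutive requests."""

    def __init__(self, min_delay: float = 2.0, max_delay: float = 5.0) -> None:
        self.min_delay = min_delay
        self.max_delay = max_delay
        self._last_request = None  # time.monotonic() of the previous request

    def wait(self) -> None:
        """Sleep until a randomly chosen gap has passed since the previous request."""
        gap = random.uniform(self.min_delay, self.max_delay)
        if self._last_request is not None:
            remaining = gap - (time.monotonic() - self._last_request)
            if remaining > 0:
                time.sleep(remaining)
        self._last_request = time.monotonic()
```

In a live scraper, you would typically call `RobotFileParser.set_url()` and `read()` to fetch robots.txt directly, and call `throttle.wait()` before every request to the same host.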

Use Publicly Available Data

Stick to scraping data that’s:

Publicly accessible

Not behind paywalls or logins

Not personally identifiable or sensitive

If possible, work with third-party data providers who have pre-approved partnerships or APIs.

Real-World Use Cases of Price Monitoring via Scraping

A. Cloud Kitchens

A cloud kitchen operating in three cities uses scraping to monitor average pricing for biryani and wraps. Based on competitor pricing, they adjust their bundle offers and introduce combo meals—boosting order value by 22%.

B. Local Restaurants

A family-owned restaurant tracks rival pricing and delivery fees during weekends. By offering a free dessert on orders above $25 (when competitors don’t), they see a 15% increase in weekend orders.

C. Food Delivery Startups

A new delivery aggregator monitors established players’ pricing to craft a price-beating strategy, helping it enter the market with aggressive discounts and gain traction.

Key Metrics to Track Through Price Scraping

When setting up your monitoring dashboard, focus on:

Average price per cuisine category

Price differences across cities or neighborhoods

Top 10 lowest/highest priced items in your segment

Frequency of discounts and offers

Delivery fee trends by time and distance

Most used upsell combinations (e.g., sides, drinks)
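Several of these metrics come down to simple grouping and sorting. The helpers below assume each scraped row is a dictionary with hypothetical `cuisine`, `city`, `price`, and `on_discount` keys. A production pipeline would more likely compute these in pandas or in the BI tool itself.

```python
from collections import defaultdict
from statistics import mean
from typing import Any, Dict, List, Tuple

Row = Dict[str, Any]


def average_price_by(rows: List[Row], key: str) -> Dict[str, float]:
    """Average price per group, e.g. key="cuisine" or key="city"."""
    groups: Dict[str, List[float]] = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row["price"])
    return {group: round(mean(prices), 2) for group, prices in groups.items()}


def extreme_items(rows: List[Row], n: int = 10) -> Tuple[List[Row], List[Row]]:
    """Return the n cheapest items and the n most expensive items (priciest first)."""
    ordered = sorted(rows, key=lambda row: row["price"])
    return ordered[:n], ordered[::-1][:n]


def discount_share(rows: List[Row]) -> float:
    """Fraction of scraped items that currently carry a discount or offer."""
    return sum(1 for row in rows if row["on_discount"]) / len(rows) if rows else 0.0
```

For example, if the data holds two Indian dishes priced at $13.00 and $11.00, `average_price_by(rows, "cuisine")` reports 12.0 for Indian. Calling it with `"city"` instead gives the per-city comparison.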

Challenges in Food Delivery Data Scraping (And Solutions)

Challenge 1: Dynamic Content and JavaScript-Heavy Pages

Solution: Use browser automation tools like Selenium or Puppeteer, typically running a headless browser, to scrape the rendered content.

Challenge 2: IP Blocking or Captchas

Solution: Rotate IPs with proxies, use CAPTCHA-solving tools, or throttle request rates.

Challenge 3: Frequent Site Layout Changes

Solution: Keep XPath and CSS selectors in one place so they are easy to update, prefer stable attributes over auto-generated class names, and monitor script performance regularly.

Challenge 4: Keeping Data Fresh

Solution: Schedule automated scraping and build change detection algorithms to prioritize meaningful updates.
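One lightweight form of change detection is to fingerprint only the fields you care about. A re-scrape that changes nothing but a timestamp is then ignored. This is one possible approach, not a standard algorithm. The `id`, `name`, `price`, and `promo` keys are assumptions, and `seen` would normally be persisted between runs, for example in a database.

```python
import hashlib
import json
from typing import Dict, List, Sequence


def fingerprint(record: dict, fields: Sequence[str] = ("name", "price", "promo")) -> str:
    """Hash only the tracked fields so irrelevant changes don't count as updates."""
    relevant = {name: record.get(name) for name in fields}
    payload = json.dumps(relevant, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def meaningful_updates(records: List[dict], seen: Dict[str, str]) -> List[dict]:
    """Return records whose tracked fields changed since the last run; updates `seen` in place."""
    changed = []
    for record in records:
        digest = fingerprint(record)
        if seen.get(record["id"]) != digest:
            changed.append(record)
            seen[record["id"]] = digest
    return changed
```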

Final Thoughts

In today’s digital-first food delivery market, being reactive is no longer enough. Real-time competitor pricing insights are essential to survive and thrive. Data scraping gives you the tools to make informed, timely decisions about your pricing, promotions, and product offerings.

Whether you're a single-location restaurant, an expanding cloud kitchen, or a new delivery platform, food delivery data scraping can help you gain a critical competitive edge. But it must be done ethically, securely, and with the right technologies.


Power Your Decisions with Real-Time Web Data Scraping APIs

In today’s fast-paced digital economy, real-time access to web data is the game-changer. The Real Data API delivers ultra-fast, scalable, and structured data directly from websites—helping businesses across industries make instant, informed decisions.

📌 Key Benefits of Real-Time Scraping API:

🌐 Instant access to live product, pricing, stock, and review data

⚙️ Scalable infrastructure for large-volume data extraction

📦 Supports eCommerce, travel, real estate, food, finance & more

🧠 Compatible with AI/ML models for predictive analytics

⏱️ Real-time updates for agile decision-making

💡 Whether you're tracking competitor prices, updating inventory, or analyzing user sentiment, Real Data API ensures you never miss a beat.


How to Track Restaurant Promotions on Instacart and Postmates Using Web Scraping

Introduction

With the rapid growth of food delivery services, platforms such as Instacart and Postmates constantly run promotions for their partner restaurants to attract customers. These promotions range from discounts and free delivery to combo deals and limited-time offers. For restaurants and food businesses, tracking these promotions provides a competitive edge: it helps them adjust their pricing strategies, identify trends, and stay ahead of competitors.

One of the most effective ways to track promotions is web scraping, the automated extraction of relevant data from websites. This article examines how to track restaurant promotions on Instacart and Postmates, covering web scraping techniques, tools, and best practices.

Why Track Restaurant Promotions?

1. Competitor Research

Identify the promotional strategies competitors use in the market.

Compare discount rates across restaurants.

Build competitive pricing strategies.

2. Consumer Behavior Insights

Understand which kinds of promotions customers respond to most.

Identify patterns in the days, times, or seasons when discounts are offered.

Optimize marketing campaigns based on the most popular promotions.

3. Profit Maximization

Determine the best timing for restaurant promotions.

Analyze competitors' discounts and adjust your own to avoid unnecessary discounting costs.

Maximize the return on investment (ROI) of promotional campaigns.

Web Scraping Techniques for Tracking Promotions

Key Data Fields to Extract

To effectively monitor promotions, businesses should extract the following data:

Restaurant Name – Identify which restaurants are offering promotions.

Promotion Type – Discounts, BOGO (Buy One Get One), free delivery, etc.

Discount Percentage – Measure how much customers save.

Promo Start & End Date – Track duration and frequency of offers.

Menu Items Included – Understand which food items are being promoted.

Delivery Charges – Compare free vs. paid delivery promotions.
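Promotion type and discount percentage usually arrive as free-text badge labels. A rough keyword-and-regex classifier could look like the sketch below. The keywords and the "<n>% off" pattern are assumptions about how labels are phrased, and they will need tuning against real data.

```python
import re
from typing import List, Optional, Tuple

# Assumes percentage deals are phrased as "<n>% off" (e.g. "20% OFF orders over $25").
_PERCENT_OFF = re.compile(r"(\d{1,3})\s*%\s*off")


def classify_promotion(label: str) -> Tuple[List[str], Optional[int]]:
    """Map a scraped promo label to promotion types and a discount percentage."""
    text = label.lower()
    types = []
    if "bogo" in text or "buy one get one" in text or "buy 1 get 1" in text:
        types.append("BOGO")
    pct = _PERCENT_OFF.search(text)
    if pct:
        types.append("percentage discount")
    if "free delivery" in text or "$0 delivery" in text:
        types.append("free delivery")
    return (types or ["other"]), (int(pct.group(1)) if pct else None)
```

A label can carry more than one type, so the function returns a list. For example, "20% OFF + Free Delivery" counts as both a percentage discount and free delivery.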

Methods of Extracting Promotional Data

1. Web Scraping with Python

Using Python-based libraries such as BeautifulSoup, Scrapy, and Selenium, businesses can extract structured data from Instacart and Postmates.

2. API-Based Data Extraction

Some platforms provide official APIs that allow restaurants to retrieve promotional data. If available, APIs can be an efficient and legal way to access data without scraping.

3. Cloud-Based Web Scraping Tools

Services like CrawlXpert, ParseHub, and Octoparse offer automated scraping solutions, making data extraction easier without coding.
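For the Python route (method 1), a minimal parsing sketch follows. It uses the standard library's `html.parser` in place of BeautifulSoup only so that it runs without extra packages. The `store-card`, `store-name`, and `promo-badge` class names are hypothetical. Instacart and Postmates use their own markup, which changes often and is largely rendered by JavaScript, so in practice the HTML would first come from a browser automation tool such as Selenium.

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional


class PromoCardParser(HTMLParser):
    """Collects restaurant/promo pairs from hypothetical markup such as:

    <div class="store-card">
      <h3 class="store-name">Spice Route</h3>
      <span class="promo-badge">20% off orders over $25</span>
    </div>
    """

    def __init__(self) -> None:
        super().__init__()
        self.results: List[Dict[str, str]] = []
        self._current: Dict[str, str] = {}
        self._field: Optional[str] = None      # "restaurant" or "promo" while inside those tags
        self._field_tag: Optional[str] = None  # tag name that opened the current field

    def _flush(self) -> None:
        # Keep only cards that actually show a promotion.
        if self._current.get("promo"):
            self.results.append(self._current)
        self._current = {}

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "store-card" in classes:
            self._flush()  # a new card starts, so the previous one is complete
        elif "store-name" in classes:
            self._field, self._field_tag = "restaurant", tag
        elif "promo-badge" in classes:
            self._field, self._field_tag = "promo", tag

    def handle_endtag(self, tag):
        if tag == self._field_tag:
            self._field, self._field_tag = None, None

    def handle_data(self, data):
        if self._field and data.strip():
            joined = self._current.get(self._field, "") + " " + data.strip()
            self._current[self._field] = joined.strip()

    def close(self) -> None:
        super().close()
        self._flush()  # the last card has no following card to trigger a flush


def extract_promotions(html: str) -> List[Dict[str, str]]:
    parser = PromoCardParser()
    parser.feed(html)
    parser.close()
    return parser.results
```

With BeautifulSoup or Scrapy selectors, the same extraction is shorter. The parsing logic (find each card, then read its name and badge) stays the same.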

Overcoming Anti-Scraping Measures

1. Avoiding IP Blocks

Use proxy rotation to distribute requests across multiple IP addresses.

Implement randomized request intervals to mimic human behavior.

2. Bypassing CAPTCHA Challenges

Use headless browsers like Puppeteer or Playwright.

Leverage CAPTCHA-solving services like 2Captcha.

3. Handling Dynamic Content

Use Selenium or Puppeteer to interact with JavaScript-rendered content.

Scrape API responses directly when possible.
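Scraping API responses directly means reading the JSON that a page's own JavaScript requests, instead of parsing rendered HTML. You can find these requests in the browser's developer tools (Network tab). The sketch below only flattens such a payload. Every key name in it is hypothetical, and whether using those endpoints is permitted depends on the platform's terms.

```python
import json
from typing import Any, Dict, List


def promotions_from_payload(payload: str) -> List[Dict[str, Any]]:
    """Flatten a JSON response into one row per (restaurant, promotion).

    The keys used here ("stores", "promotions", "discount_percent", ...) are
    hypothetical; inspect the real response to find the actual structure.
    """
    data = json.loads(payload)
    rows = []
    for store in data.get("stores", []):
        for promo in store.get("promotions", []):
            rows.append({
                "restaurant": store.get("name"),
                "promo_type": promo.get("type"),
                "discount_pct": promo.get("discount_percent"),
                "start_date": promo.get("start_date"),
                "end_date": promo.get("end_date"),
            })
    return rows
```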

Analyzing and Utilizing Promotion Data

1. Promotional Dashboard Development

Create a real-time dashboard to track ongoing promotions.

Use data visualization tools like Power BI or Tableau to monitor trends.

2. Predictive Analysis for Promotions

Use historical data to forecast future discounts.

Identify peak discount periods and seasonal promotions.

3. Custom Alerts for Promotions

Set up automated email or SMS alerts when competitors launch new promotions.

Implement AI-based recommendations to adjust restaurant pricing.
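Identifying peak discount periods can start with simple counts over a history of scraped promotions. This sketch assumes a hypothetical log of `(restaurant, start_date)` pairs. It produces a frequency summary, not a forecasting model, though the counts make a reasonable first input to one. Weekday names come from a fixed tuple rather than `strftime("%A")` so the output does not depend on the system locale.

```python
from collections import Counter
from datetime import date
from typing import Iterable, Tuple

WEEKDAYS = ("Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday", "Sunday")


def promo_start_counts(log: Iterable[Tuple[str, date]]) -> Tuple[Counter, Counter]:
    """Count promotion launches by weekday and by calendar month."""
    by_weekday: Counter = Counter()
    by_month: Counter = Counter()
    for _restaurant, start in log:
        by_weekday[WEEKDAYS[start.weekday()]] += 1
        by_month[start.month] += 1
    return by_weekday, by_month
```

`by_weekday.most_common(3)` then lists the days on which competitors most often launch offers.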

Ethical and Legal Considerations

Comply with robots.txt guidelines when scraping data.

Avoid excessive server requests to prevent website disruptions.

Ensure extracted data is used for legitimate business insights only.

Conclusion

Web scraping makes it possible to track restaurant promotions on Instacart and Postmates, helping businesses optimize their pricing strategies, maximize profits, and stay ahead of competitors. With automation, proxies, headless browsing, and AI analytics, businesses can keep track of and respond to the latest promotional trends.

CrawlXpert is a strong provider of automated web scraping services that help restaurants track promotions and analyze competitors' strategies.
