#datascraping


SQL Server deadlocks are a common phenomenon, particularly in multi-user environments where concurrency is essential. Let's Explore:

https://madesimplemssql.com/deadlocks-in-sql-server/

Please follow on FB: https://www.facebook.com/profile.php?id=100091338502392

#technews#microsoft#sqlite#sqlserver#database#sql#tumblr milestone#vpn#powerbi#data#madesimplemssql#datascience#data scientist#datascraping#data analytics#dataanalytics#data analysis#dataannotation#dataanalystcourseinbangalore#data analyst training#microsoft azure


📊 Unlock Business Intelligence with Uber Eats Data Scraping

In the rapidly evolving #fooddeliverylandscape, staying ahead requires more than great service — it requires #datadrivendecisions.

With our Uber Eats #FoodDataScraping Services, you can:

✅ Extract detailed restaurant menus

✅ Monitor real-time pricing trends

✅ Analyze customer reviews

✅ Gain actionable market insights

Whether you're in #foodtech, #analytics, or #retailintelligence, our service empowers you to make informed decisions, #optimize pricing strategies, and understand consumer preferences.

Explore the power of structured data today! 🔗 www.iwebdatascraping.com

#UberEats#DataScraping#FoodDeliveryData#MenuAnalytics#BusinessIntelligence#WebScraping#MarketInsights#SmartData#FoodTech


How To Scrape Airbnb Listing Data Using Python And Beautiful Soup: A Step-By-Step Guide

The travel industry is a huge business, set to grow exponentially in coming years. It revolves around movement of people from one place to another, encompassing the various amenities and accommodations they need during their travels. This concept shares a strong connection with sectors such as hospitality and the hotel industry.

Here, it becomes prudent to mention Airbnb. Airbnb stands out as a well-known online platform that empowers people to list, explore, and reserve lodging and accommodation choices, typically in private homes, offering an alternative to the conventional hotel and inn experience.

Scraping Airbnb listings data means retrieving or collecting data from Airbnb property listings. To scrape data from Airbnb's website successfully, you need to understand how Airbnb's listing data works. This blog will guide you through how to scrape Airbnb listing data.

What Is Airbnb Scraping?

Airbnb serves as a well-known online platform enabling individuals to rent out their homes or apartments to travelers. Utilizing Airbnb offers advantages such as access to extensive property details like prices, availability, and reviews.

Data from Airbnb is like a treasure trove of valuable knowledge, not just numbers and words. It can help you do better than your rivals. If you use the Airbnb scraper tool, you can easily get this useful information.

Effectively scraping Airbnb’s website data requires comprehension of its architecture. Property information, listings, and reviews are stored in a database, with the website using APIs to fetch and display this data. To scrape the details, one must interact with these APIs and retrieve the data in the preferred format.

In essence, Airbnb listing scraping means extracting data from Airbnb listings. This data covers listing prices, locations, amenities, reviews, and ratings, providing a vast pool of information.

What Are the Types of Data Available on Airbnb?

Browsing Airbnb uncovers a wealth of data. First, there are property details such as the property type, location, nightly price, and the number of bedrooms and bathrooms, along with amenities (like Wi-Fi, a pool, or a fully equipped kitchen) and check-in and check-out times. Then there is data about the hosts, guest reviews, and property availability.

Here's a simplified table to provide a better overview:

| Data type | What it includes |
| --- | --- |
| Property Details | Data regarding the property, including its category, location, cost, number of rooms, available features, and check-in/check-out schedules. |
| Host Information | Information about the property's owner, encompassing their name, response time, and the number of properties they oversee. |
| Guest Reviews | Ratings and written feedback from previous property guests. |
| Booking Availability | Data on property availability, whether it's available for booking or already booked, and the minimum required stay. |

Why Is the Airbnb Data Important?

Extracting data from Airbnb has many advantages for different reasons:

Market Research

Scraping Airbnb listing data helps you gather information about the rental market. You can learn about prices, property features, and how often places get rented. It is useful for understanding the market, finding good investment opportunities, and knowing what customers like.

Getting to Know Your Competitor

By scraping Airbnb listings data, you can discover what other companies in your industry are doing. You'll learn about their offerings, pricing, and customer opinions.

Evaluating Properties

Scraping Airbnb listing data lets you look at properties similar to yours. You can see how often they get booked, what they charge per night, and what guests think of them. It helps you set the prices right, make your property better, and make guests happier.

Smart Decision-Making

With scraped Airbnb listing data, you can make smart choices about buying properties, managing your portfolio, and deciding where to invest. The data can tell you which places are popular, what guests want, and what is trendy in the vacation rental market.

Personalizing and Targeting

By analyzing scraped Airbnb listing data, you can learn what your customers like. You can find out about popular features, the best neighborhoods, or unique things guests want. Next, you can change what you offer to fit what your customers like.

Automating and Saving Time

Instead of copying everything by hand, web scraping lets a computer collect large amounts of data automatically. It saves you time and money and gives you the Airbnb listing data you need.

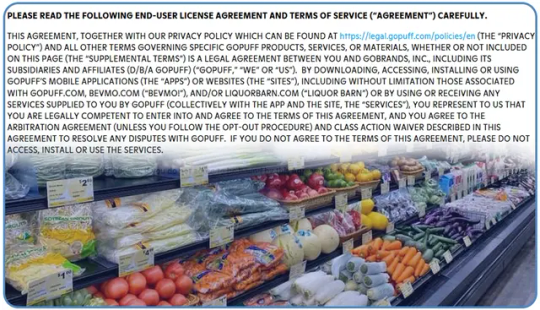

Is It Legal to Scrape Airbnb Data?

Collecting Airbnb listing data that is publicly visible on the internet is generally okay, as long as you follow the applicable rules and regulations. However, things get stricter if you are trying to gather data that includes personal info or content that Airbnb holds copyright on.

Most of the time, websites like Airbnb do not let automated tools gather information unless they give permission. That restriction is one of the terms you agree to when you use their service. The specific rules, however, vary by country and its policies on automated tools and unauthorized access to systems.

How To Scrape Airbnb Listing Data Using Python and Beautiful Soup?

Websites related to travel, like Airbnb, have a lot of useful information. This guide will show you how to scrape Airbnb listing data using Python and Beautiful Soup. The information you collect can be used for various things, like studying market trends, setting competitive prices, understanding what guests think from their reviews, or even building your own recommendation system.

We will use Python as a programming language as it is perfect for prototyping, has an extensive online community, and is a go-to language for many. Also, there are a lot of libraries for basically everything one could need. Two of them will be our main tools today:

Beautiful Soup — Allows easy scraping of data from HTML documents

Selenium — A multi-purpose tool for automating web-browser actions

Getting Ready to Scrape Data

Now, let us think about how a user browses Airbnb. They start by entering a destination, specify dates, then click "search." Airbnb shows them lots of places.

This first page is a search page with many options, but it shows only brief details about each one.

After browsing for a while, the person clicks on one of the places. It takes them to a detailed page with lots of information about that specific place.

We want to get all the useful information, so we will deal with both the search page and the detailed page. But we also need to find a way to get info from the listings that are not on the first search page.

Usually, there are 20 results on one search page, and for each place, you can go up to 15 pages deep (after that, Airbnb says no more).

It seems quite straightforward. For our program, we have two main tasks:

looking at a search page, and getting data from a detailed page.

So, let us begin writing some code now!

Getting the listings

Fetching a web page with Python is very easy. Here is a function that downloads a webpage and parses it into a Beautiful Soup object, which is something we can navigate and query.

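    import requests
    from bs4 import BeautifulSoup


    def scrape_page(page_url):
        """Extracts HTML from a webpage"""
        answer = requests.get(page_url)
        content = answer.content
        # Parse the raw response bytes into a navigable Beautiful Soup tree
        soup = BeautifulSoup(content, features='html.parser')
        return soup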

Beautiful Soup helps us move around an HTML page and get its parts. For example, if we want to take the words from a “div” object with a class called "foobar" we can do it like this:

text = soup.find("div", {"class": "foobar"}).get_text()

On Airbnb's listing data search page, what we are looking for are separate listings. To get to them, we need to tell our program which kinds of tags and names to look for. A simple way to do this is to use a tool in Chrome called the developer tool (press F12).

The listing is inside a "div" object with the class name "_8s3ctt". Also, we know that each search page has 20 different listings. We can take all of them together using a Beautiful Soup method called "find_all" (spelled "findAll" in older code).

    def extract_listing(page_url):
        """Extracts listings from an Airbnb search page"""
        page_soup = scrape_page(page_url)
        # find_all is the current name of the older findAll alias
        listings = page_soup.find_all("div", {"class": "_8s3ctt"})
        return listings

Getting Basic Info from Listings

When we check the detailed pages, we can get the main info about the Airbnb listings data, like the name, total price, average rating, and more.

All this info is in different HTML objects as parts of the webpage, with different names. So, we could write multiple single extractions to get each piece:

    name = soup.find('div', {'class':'_hxt6u1e'}).get('aria-label')
    price = soup.find('span', {'class':'_1p7iugi'}).get_text()
    ...

However, I chose to overcomplicate right from the beginning of the project by creating a single function that can be used again and again to get various things on the page.

    def extract_element_data(soup, params):
        """Extracts data from a specified HTML element"""

        # 1. Find the right tag
        if 'class' in params:
            elements_found = soup.find_all(params['tag'], params['class'])
        else:
            elements_found = soup.find_all(params['tag'])

        # 2. Extract text from these tags
        if 'get' in params:
            element_texts = [el.get(params['get']) for el in elements_found]
        else:
            element_texts = [el.get_text() for el in elements_found]

        # 3. Select a particular text or concatenate all of them
        tag_order = params.get('order', 0)
        if tag_order == -1:
            output = '**__**'.join(element_texts)
        else:
            output = element_texts[tag_order]

        return output

Now, we've got everything we need to go through the entire page with all the listings and collect basic details from each one. I'm showing you an example of how to get only two details here, but you can find the complete code in a git repository.

    RULES_SEARCH_PAGE = {
        'name': {'tag': 'div', 'class': '_hxt6u1e', 'get': 'aria-label'},
        'rooms': {'tag': 'div', 'class': '_kqh46o', 'order': 0},
    }

    listing_soups = extract_listing(page_url)

    features_list = []
    for listing in listing_soups:
        features_dict = {}
        for feature in RULES_SEARCH_PAGE:
            features_dict[feature] = extract_element_data(listing, RULES_SEARCH_PAGE[feature])
        features_list.append(features_dict)
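
To see how such rule dictionaries behave without hitting a live page, here is a minimal sketch that applies the same idea to a small hand-written HTML snippet. The markup, the class names "card", "title" and "rooms", and the helper name `extract_or_none` are all made up for illustration. The helper also returns `None` when nothing matches, which is an addition on our side and not part of the function shown earlier.

```python
from bs4 import BeautifulSoup

# Hand-written sample markup; real Airbnb class names differ and change often.
SAMPLE_HTML = """
<div class="card">
  <div class="title" aria-label="Cozy loft">ignored text</div>
  <div class="rooms">2 guests</div>
  <div class="rooms">1 bedroom</div>
</div>
<div class="card">
  <div class="title" aria-label="Beach house">ignored text</div>
</div>
"""

# Hypothetical rules in the same shape as the search-page rules.
RULES = {
    "name": {"tag": "div", "class": "title", "get": "aria-label"},
    "rooms": {"tag": "div", "class": "rooms", "order": -1},
}


def extract_or_none(soup, params):
    """Apply one rule; return None instead of raising when nothing matches."""
    if "class" in params:
        found = soup.find_all(params["tag"], class_=params["class"])
    else:
        found = soup.find_all(params["tag"])
    if "get" in params:
        texts = [el.get(params["get"]) for el in found]
    else:
        texts = [el.get_text() for el in found]
    texts = [t for t in texts if t is not None]
    if not texts:
        return None
    order = params.get("order", 0)
    if order == -1:
        return " | ".join(texts)  # concatenate every match
    return texts[order] if order < len(texts) else None


soup = BeautifulSoup(SAMPLE_HTML, "html.parser")
rows = [
    {name: extract_or_none(card, rule) for name, rule in RULES.items()}
    for card in soup.find_all("div", class_="card")
]
```

The second card has no "rooms" element, so its row carries `None` for that field instead of stopping the whole run, which is usually what you want when a page layout is inconsistent.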

Getting All the Pages for One Place

Having more is usually better, especially when it comes to data. Airbnb lets us see up to 300 listings for one place (15 pages of 20 results each), and we are going to scrape them all.

There are different ways to go through the pages of search results. It is easiest to see how the web address (URL) changes when we click on the "next page" button and then make our program do the same thing.

All we have to do is add a query parameter called "items_offset" to our initial URL. With it, we can build a list of links to every search page for one place.

    def build_urls(url, listings_per_page=20, pages_per_location=15):
        """Builds links for all search pages for a given location"""
        url_list = []
        for i in range(pages_per_location):
            offset = listings_per_page * i
            url_pagination = url + f'&items_offset={offset}'
            url_list.append(url_pagination)
        return url_list
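
Appending "&items_offset=..." as a string works only when the starting URL already has a query string. As a hedged alternative, the sketch below sets the parameter with the standard library's `urllib.parse`, so it also works for URLs without a "?" and replaces an offset that is already present. The function name `build_urls_safe` and the example.com address are placeholders, not something from the original script.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def build_urls_safe(url, listings_per_page=20, pages_per_location=15):
    """Return one search URL per page, setting items_offset in the query."""
    parts = urlsplit(url)
    # Keep existing parameters, but drop any items_offset already present.
    base_query = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key != "items_offset"
    ]
    urls = []
    for page in range(pages_per_location):
        query = base_query + [("items_offset", str(page * listings_per_page))]
        urls.append(urlunsplit(parts._replace(query=urlencode(query))))
    return urls


# Placeholder search URL, used only to show the shape of the output.
example = build_urls_safe(
    "https://www.example.com/s/Lisbon/homes?adults=2", pages_per_location=3
)
```

With the default arguments the function returns 15 links, the last one carrying an offset of 280.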

We have completed half of the job now. We can run our program to gather basic details for all the listings in one place. We just need to provide the starting link, and things are about to get even more exciting.

Dynamic Pages

A detailed page takes around 3-4 seconds to load fully. Before that, we can see only the base HTML of the webpage, without the listing details we want to collect.

Sadly, the "requests" tool doesn't allow us to wait until everything on the page is loaded. But Selenium does. Selenium can work just like a person, waiting for all the cool website things to show up, scrolling, clicking buttons, filling out forms, and more.

Now, we plan to wait for things to appear and then click on them. To get information about the amenities and price, we need to click on certain parts.

To sum it up, here is what we are going to do:

Start up Selenium.

Open a detailed page.

Wait for the buttons to show up.

Click on the buttons.

Wait a little longer for everything to load.

Get the HTML code.

Let us put them into a Python function.

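    import time

    from bs4 import BeautifulSoup
    from selenium import webdriver
    from selenium.common.exceptions import WebDriverException
    from selenium.webdriver.chrome.options import Options
    from selenium.webdriver.common.by import By


    def extract_soup_js(listing_url, waiting_time=(5, 1)):
        """Extracts HTML from JS pages: open, wait, click, wait, extract"""
        options = Options()
        options.add_argument('--headless')
        options.add_argument('--no-sandbox')
        driver = webdriver.Chrome(options=options)
        try:
            driver.get(listing_url)
            time.sleep(waiting_time[0])  # let the page render
            # find_element(By.CLASS_NAME, ...) replaces find_element_by_class_name,
            # which was removed in Selenium 4.3
            for button_class in ('_13e0raay', '_gby1jkw'):  # amenities, prices
                try:
                    driver.find_element(By.CLASS_NAME, button_class).click()
                except WebDriverException:
                    pass  # button not found or not clickable
            time.sleep(waiting_time[1])  # let the clicked sections load
            detail_page = driver.page_source
        finally:
            driver.quit()  # always release the browser
        return BeautifulSoup(detail_page, features='html.parser')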

Now, extracting detailed info from the listings is quite straightforward because we have everything we need. All we have to do is carefully look at the webpage using a tool in Chrome called the developer tool. We write down the tags and class names of the HTML parts, pass them to the "extract_element_data" function, and we will have the data we want.

Running Multiple Things at Once

Getting info from all 15 search pages in one location is pretty quick. A single detailed page, though, takes about 5 to 6 seconds because we have to wait for it to fully appear. Meanwhile, the CPU is using only about 3% to 8% of its power.

So, instead of going through 300 webpages one by one in a big loop, we can split the webpage addresses into groups and process several of them at the same time. To find the best group size, we have to try different options.

    from multiprocessing import Pool

    # scrape_detail_page and url_list are assumed to be defined elsewhere in the script
    with Pool(8) as pool:
        result = pool.map(scrape_detail_page, url_list)
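
Since most of the time is spent waiting and the CPU is mostly idle, a thread pool is a reasonable alternative to a process pool. The sketch below swaps in `concurrent.futures.ThreadPoolExecutor` and times a few pool sizes, which is one way to "try different options". The `fake_scrape` function only sleeps and stands in for a real detail-page scraper; it, the helper names and the example.com URLs are assumptions for illustration.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_scrape(url):
    """Placeholder for a real detail-page scraper: it only simulates waiting."""
    time.sleep(0.05)  # stands in for page-load time
    return {"url": url, "ok": True}


def scrape_all(urls, worker, max_workers=8):
    """Run worker on every URL concurrently; results keep the input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(worker, urls))


def time_pool_sizes(urls, worker, sizes=(1, 4, 8)):
    """Measure wall-clock time for several pool sizes to help pick one."""
    timings = {}
    for size in sizes:
        start = time.perf_counter()
        scrape_all(urls, worker, max_workers=size)
        timings[size] = time.perf_counter() - start
    return timings


# Placeholder URLs; a real run would use the detail-page links collected earlier.
urls = [f"https://www.example.com/rooms/{i}" for i in range(16)]
results = scrape_all(urls, fake_scrape)
timings = time_pool_sizes(urls, fake_scrape)
```

With a real scraper, each worker would start its own browser, so memory rather than CPU tends to limit how large the pool can get.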

The Outcome

After turning our tools into a neat little program and running it for a location, we obtained our initial dataset.

The challenging aspect of dealing with real-world data is that it's often imperfect. Some columns have no information, and many fields need cleaning and adjustments. Some details turned out to be not very useful, as they are either always empty or filled with the same values.

There's room for improving the script in some ways. We could experiment with different parallelization approaches to make it faster. Investigating how long it takes for the web pages to load can help reduce the number of empty columns.
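
A first cleaning pass can simply flag the columns that are always empty or always hold the same value. The sketch below does that with plain dictionaries; the sample rows and the function names `find_useless_columns` and `drop_columns` are made up and only illustrate the shape of scraped output.

```python
def find_useless_columns(rows):
    """Return column names that are always empty or always the same value."""
    columns = set()
    for row in rows:
        columns.update(row)
    useless = {}
    for col in sorted(columns):
        values = [row.get(col) for row in rows]
        non_empty = [v for v in values if v not in (None, "")]
        if not non_empty:
            useless[col] = "always empty"
        elif len(set(non_empty)) == 1 and len(non_empty) == len(values):
            useless[col] = "constant"
    return useless


def drop_columns(rows, to_drop):
    """Return copies of the rows without the given columns."""
    return [{k: v for k, v in row.items() if k not in to_drop} for row in rows]


# Made-up rows, not real scraped data.
sample = [
    {"name": "Cozy loft", "price": "80", "pets": "", "currency": "EUR"},
    {"name": "Beach house", "price": "", "pets": None, "currency": "EUR"},
]
report = find_useless_columns(sample)
cleaned = drop_columns(sample, report)
```

A column that is only partly empty, like "price" here, is kept on purpose: it may be worth re-scraping with a longer wait rather than dropping.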

To Sum It Up

We've mastered:

Scraping Airbnb listing data using Python and Beautiful Soup.

Handling dynamic pages using Selenium.

Running the script in parallel using multiprocessing.

Conclusion

Web scraping today offers user-friendly tools, which makes it easy to use. Whether you are a coding pro or a curious beginner, you can start scraping Airbnb listing data with confidence. And remember, it's not just about collecting data – it's also about understanding and using it.

The fundamental rules remain the same whether you're scraping Airbnb listing data or any other website. Start by determining the data you need. Then, select a tool to collect that data from the web. Finally, verify the data it retrieves. Using this info, you can make better decisions for your business and come up with better plans to sell things.

So, be ready to tap into the power of web scraping and elevate your sales game. Remember that there's a wealth of Airbnb data waiting for you to explore. Get started with an Airbnb scraper today, and you'll be amazed at the valuable data you can uncover. In the world of sales, knowledge truly is power.


Looking to supercharge your lead generation? Discover the Google Maps Places Scraper by Outscraper—now available on AppSumo. This innovative tool allows you to extract local business contacts directly from Google Maps, giving you access to invaluable data that can elevate your marketing strategy. For just $129, you gain lifetime access to a tool that normally retails for over $2,000. Not only can you export data in various formats, but it also enriches your findings with emails, social media profiles, and more. Picture building a comprehensive lead database with ease. However, it's essential to navigate the pricing structure cautiously, as some users have reported unexpected charges. Our latest blog post delves into the benefits and challenges of this powerful scraper, ensuring you're fully informed before making a purchase. Ready to harness the power of local business data? Check out the full review and find out if the Google Maps Places Scraper is the right fit for your marketing toolkit. #LeadGeneration #DigitalMarketing #GoogleMaps #DataScraping #Outscraper #AppSumo #BusinessGrowth #MarketingStrategy #LocalBusiness Read more here: https://jomiruddin.com/google-maps-scraper-lifetime-deal-review-appsumo/

#datascraping#marketingtools#billingpractices#customerfeedback#salesmanagement#AppSumodiscount#leadgenerationtool#localbusinesscontacts#GoogleMapsscraper#Outscraperreview#sales management


Why Quick Commerce Scraping API Is Key to Maximizing Profits in 2025?

Introduction

Quick commerce businesses encounter significant challenges and opportunities in the fast-paced digital marketplace. With consumer preferences shifting rapidly, pricing dynamics evolving, and competition intensifying, staying ahead requires a data-driven approach that adapts to real-time market changes. As we move through 2025, one solution has emerged as a key driver of sustained growth: the Quick Commerce Scraping API. This advanced tool is revolutionizing how businesses collect market intelligence, refine pricing strategies, and enhance profitability in the quick commerce sector.

Understanding the Quick Commerce Revolution

The quick commerce industry—defined by ultra-fast delivery windows of 10-30 minutes—has dramatically reshaped consumer expectations across retail sectors. Pioneered by industry leaders like Gopuff, Getir, and Gorillas, this market has experienced rapid expansion, with projections estimating it will reach $72.1 billion by 2025.

As competition heats up, businesses increasingly seek innovative strategies to secure a competitive advantage. This is where data becomes the ultimate game-changer. The ability to gather, analyze, and leverage real-time market data is critical to maximizing quick commerce profits and staying ahead in an ever-evolving landscape.

The Growing Data Challenge in Quick Commerce

Quick commerce thrives on ultra-fast decision-making and operates within razor-thin margins. To stay competitive, businesses must navigate several key challenges:

Real-time pricing intelligence: Prices fluctuate multiple times a day across various competitors, requiring constant monitoring to maintain a competitive edge.

Product availability tracking: Inventory levels are highly dynamic, and stockouts or restocks can significantly impact sales and customer satisfaction.

Promotional activity monitoring: Flash sales, exclusive discounts, and limited-time offers demand immediate tracking and response to capitalize on market opportunities.

Consumer behavior insights: Understanding rapid shifts in shopping trends and preferences is crucial for personalization and demand forecasting.

Operational efficiency metrics: Effective delivery logistics, inventory flow, and staffing management ensure cost optimization and seamless fulfillment.

By leveraging a Quick Commerce Scraping API, businesses can streamline their market intelligence efforts and gain access to crucial data points that drive competitive pricing, product assortment strategies, and consumer behavior analysis.

What Is a Quick Commerce Scraping API?

A Quick Commerce Scraping API is a powerful automated tool that allows businesses to seamlessly collect and analyze vast amounts of market data from various online sources.

This API efficiently gathers structured data from competitor websites, e-commerce platforms, marketplaces, and even social media channels, providing businesses with real-time insights without the burden of manual data collection.

Key Benefits of a Quick Commerce Data API

Automated data extraction from thousands of online sources ensures comprehensive market trend coverage.

Structured and analysis-ready data outputs, eliminating the need for additional data processing.

Real-time or scheduled data collection lets businesses stay updated with the latest market changes.

Advanced anti-scraping bypass mechanisms, utilizing sophisticated proxy networks to ensure uninterrupted data access.

Compliance with legal and ethical data standards, ensuring responsible and secure data collection practices.

Seamless integration with business intelligence tools enables businesses to effortlessly transform raw data into actionable insights.

By integrating a Quick Commerce Scraping API, companies can unlock deeper market visibility, optimize pricing strategies, and enhance overall business decision-making.

Strategic Applications of Scraping APIs in Quick Commerce

In the fast-paced world of quick commerce, staying ahead requires real-time data insights to optimize pricing, inventory, competitive positioning, marketing, and supply chain operations. A scraping API is a powerful tool that enables businesses to make data-driven decisions that enhance profitability and efficiency.

1. Dynamic Pricing Optimization

Price competitiveness in quick commerce is crucial, as consumers frequently compare prices before purchasing. By leveraging a scraping API for e-commerce growth, businesses can track real-time competitor pricing and refine their pricing strategies accordingly.

Case Study: A leading quick grocery delivery platform used scraping API technology to implement dynamic pricing. By adjusting prices based on competitor data, they maintained a competitive edge on high-visibility products while maximizing margins on less price-sensitive items—leading to an 18% profitability boost within three months.
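
As a rough illustration of that kind of rule, the sketch below suggests a price from a unit cost and a list of scraped competitor prices: high-visibility items follow the cheapest competitor down to a minimum margin, while less price-sensitive items keep a target margin. The function name, the margin values and the example numbers are all hypothetical and are not taken from the case study.

```python
def suggest_price(cost, competitor_prices, high_visibility,
                  min_margin=0.05, target_margin=0.30):
    """Suggest a price from unit cost and scraped competitor prices."""
    floor = cost * (1 + min_margin)      # never sell below this
    target = cost * (1 + target_margin)  # preferred price without pressure
    if not competitor_prices:
        return round(target, 2)
    if high_visibility:
        # Match the cheapest competitor, but respect the floor.
        return round(max(floor, min(competitor_prices)), 2)
    # Less price-sensitive item: keep the target margin, capped at the
    # highest competitor price so the item is not the most expensive.
    return round(max(floor, min(target, max(competitor_prices))), 2)


# Hypothetical inputs: unit cost 1.00 and two competitor prices.
visible_item = suggest_price(1.00, [1.20, 1.10], high_visibility=True)
other_item = suggest_price(1.00, [1.50, 1.40], high_visibility=False)
```

A production pricing engine would also account for demand, stock levels and how fresh the scraped prices are; this sketch only shows the competitor-price part.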

2. Inventory and Assortment Planning

To remain competitive, quick commerce businesses must constantly fine-tune their product mix in response to market trends and consumer demand.

Data Scraping For Quick Commerce Businesses provides actionable insights into:

Trending products across competitor platforms.

Category performance metrics.

Seasonal demand fluctuations.

Stock availability patterns.

Emerging product introductions.

By leveraging these insights, businesses can make informed decisions on inventory investments, discontinue underperforming products, and prioritize new product launches.

3. Competitive Intelligence and Market Positioning

Gaining an in-depth understanding of market trends and competitor movements is essential for strategic planning. Scraped data lets businesses:

Track competitor expansion into new geographical areas.

Monitor delivery time variations and service enhancements.

Analyze promotional strategies and marketing messages.

Identify emerging competitors and disruptive market entrants.

Aggregate customer reviews to gauge sentiment.

These insights enable businesses to anticipate competitive shifts and proactively refine their market strategies rather than merely reacting to industry changes.

4. Marketing and Promotional Effectiveness

Promotions are critical in quick commerce, driving customer acquisition and repeat purchases.

Quick Commerce Insights With Scraping API technologies allow businesses to:

Monitor competitor promotional campaigns.

Evaluate the effectiveness of various promotional structures.

Determine the best timing for promotions.

Measure promotional impact across different customer demographics.

Optimize marketing spending through ROI-driven insights.

By leveraging data-driven promotional strategies, businesses can attract and retain customers more effectively while minimizing unnecessary price wars.

5. Supply Chain Optimization

Efficient supply chain management is critical for profit optimization through scraping APIs. By analyzing competitor product availability, delivery times, and geographical coverage, businesses can:

Identify ideal locations for dark stores and micro-fulfillment centers.

Maintain optimal inventory levels across product categories.

Strengthen supplier relationships based on demand and performance data.

Minimize stockouts and overstock situations.

Enhance logistics planning and improve delivery efficiency.

By refining supply chain operations, businesses can reduce costs while improving service quality—which is essential to maintaining a competitive edge in quick commerce.

How to Implement a Quick Commerce Scraping Strategy?

Implementing a Quick Commerce Scraping API effectively requires a structured approach to ensure valuable insights and actionable outcomes. By following a strategic plan, businesses can maximize the potential of data scraping for competitive advantage.

Identify Key Data Points and Sources

Select the Right API Partner

Establish Data Analysis Frameworks

Create Action Protocols

Measure ROI and Refine Your Approach

Legal and Ethical Considerations

Adhering to legal and ethical standards is essential when integrating data scraping for Quick Commerce businesses to ensure compliance and responsible data usage.

Here’s how businesses can maintain best practices:

Respect Terms of Service: Always ensure that your scraping activities align with website terms of service to avoid potential legal complications.

Manage Server Load: Implement request throttling and efficient crawling techniques to avoid overloading target websites and to keep data extraction sustainable.

Handle Personal Data Responsibly: Adhere to GDPR, CCPA, and other privacy regulations, ensuring that user data is managed in a compliant and secure manner.

Use Data Ethically: Leverage insights for aggregate market intelligence rather than identifying or targeting specific individuals, maintaining ethical data practices.

Maintain Transparency: Communicate your data collection practices to customers and partners to build trust and ensure compliance.

Partnering with reputable API providers can help businesses navigate legal complexities, reduce risks, and ensure data collection remains within regulatory boundaries.
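
The request throttling mentioned above can be as simple as enforcing a minimum delay between consecutive requests. Here is a minimal sketch, assuming a single-threaded crawler; the `Throttle` class and the `fetch_all` helper are illustrative names, and the right interval depends on the target site and its terms.

```python
import time


class Throttle:
    """Enforce a minimum delay between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock  # injectable so the class can be tested without waiting
        self._sleep = sleep
        self._last = None

    def wait(self):
        """Block until min_interval seconds have passed since the last call."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


def fetch_all(urls, fetch, throttle):
    """Call fetch(url) for each URL, pausing between calls."""
    results = []
    for url in urls:
        throttle.wait()
        results.append(fetch(url))
    return results
```

In practice `fetch` could be any function that takes a URL, for example `requests.get`, and `Throttle(2.0)` would leave at least two seconds between requests.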

Future Trends in Quick Commerce Data Intelligence

As we progress through 2024-25, several transformative trends are set to reshape how businesses leverage Quick Commerce Scraping API solutions to maintain a competitive edge:

AI-Powered Analysis: Machine learning will extend beyond data extraction, enabling automated analysis that translates scraped data into meaningful, actionable business insights rather than just raw numbers.

Predictive Capabilities: Advanced scraping systems will provide real-time market data and forecast competitor strategies and industry shifts, allowing businesses to stay proactive.

Integration with IoT: By merging scraped online data with real-time inputs from in-store sensors and connected devices, businesses can gain a more comprehensive view of market dynamics.

Hyper-Personalization: Leveraging collected data, businesses can enhance customer experiences with tailored recommendations, fostering greater engagement, loyalty, and retention.

Cross-Platform Intelligence: APIs will evolve to track data across emerging sales channels—including social commerce and voice commerce—ensuring a well-rounded market analysis.

Businesses that embrace these trends and invest in sophisticated data intelligence will be best positioned to Maximize Quick Commerce Profits in the evolving digital landscape.

How Web Data Crawler Can Help You?

We lead the way in quick commerce data solutions, providing specialized API services tailored to the unique demands of the quick commerce industry. Our platform empowers businesses to develop quick commerce profit strategies through advanced data collection and analysis.

With us, you gain access to:

Custom-built APIs designed for quick commerce data needs.

Advanced proxy networks for seamless and reliable data extraction.

AI-powered data cleaning and normalization for accurate insights.

Real-time competitive intelligence dashboards to track market trends.

Seamless integration with major business intelligence platforms.

A compliance-focused approach to ethical data collection.

Dedicated support from quick commerce data specialists.

Our solutions have driven success for quick commerce businesses across various sectors, enhancing pricing optimization, inventory management, and competitive positioning.

By leveraging Quick Commerce Insights With Scraping API technology, clients typically achieve 15-25% profit improvements within just six months of implementation.

Conclusion

In the fast-paced quick commerce industry, data-driven decision-making is crucial for success. A Quick Commerce Scraping API gives businesses actionable insights into pricing, inventory, marketing, and operations.

Explore how our tailored solutions can enhance your market intelligence. Our experts analyze your challenges and craft custom data solutions to sharpen your competitive edge.

Contact Web Data Crawler today to see how our Quick Commerce Scraping API can fuel your growth in 2025. Stay ahead in the data intelligence race—turn market data into your competitive advantage!

Originally published at https://www.webdatacrawler.com.

#QuickCommerce#EcommerceData#WebScraping#DataIntelligence#MarketTrends#CompetitiveAnalysis#DynamicPricing#RetailTech#AIData#BigData#BusinessGrowth#PriceMonitoring#DataDriven#RetailAnalytics#APIIntegration#DataScraping#QuickCommerceAPI#WebDataCrawler#EcommerceInsights#SmartPricing#SupplyChainOptimization


eBay Web Scraper: Unlocking Data-Driven Business Insights

In today’s fast-paced eCommerce world, data is the key to success. Whether you're a seller looking for pricing strategies, a researcher analyzing market trends, or a business tracking competitor inventory, an eBay web scraper is a game-changer. With automated web scraping, you can collect real-time product, pricing, and seller data effortlessly.

Why Use an eBay Web Scraper?

Manually tracking thousands of listings on eBay is time-consuming and nearly impossible. With a web scraper, businesses can:

Monitor Competitor Pricing – Stay ahead by analyzing real-time price fluctuations.

Track Best-Selling Products – Identify trending products and top-performing categories.

Analyze Seller Performance – Gain insights into top-rated sellers and their strategies.

Extract Product Listings – Gather detailed product descriptions, images, and reviews for research.

How eBay Web Scraping Works

A web scraper automatically navigates eBay, extracts relevant data, and organizes it in a structured format. This data can be used for market analysis, price comparison, and decision-making, giving you a competitive edge.
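The extract-and-organize step described above can be sketched in a few lines of Python. This is only a minimal illustration using the standard library: the sample HTML and the class names `listing`, `title`, and `price` are assumptions made up for the example, not eBay's real page structure, and a production scraper would also need to handle fetching, rate limits, and the site's terms of use.

```python
from html.parser import HTMLParser

# Hypothetical markup: the class names "listing", "title" and "price" are
# assumptions for illustration, not eBay's real page structure.
SAMPLE_HTML = """
<ul>
  <li class="listing"><span class="title">Vintage camera</span><span class="price">$120.00</span></li>
  <li class="listing"><span class="title">Camera strap</span><span class="price">$9.50</span></li>
</ul>
"""


class ListingParser(HTMLParser):
    """Collects one {'title', 'price'} record per <li class="listing">."""

    def __init__(self):
        super().__init__()
        self.records = []
        self._field = None  # name of the field whose text is being read

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "li" and css_class == "listing":
            # A new listing starts: open an empty record for it.
            self.records.append({})
        elif tag == "span" and css_class in ("title", "price") and self.records:
            self._field = css_class

    def handle_data(self, data):
        # Only keep text that sits inside a title or price span.
        if self._field:
            self.records[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


def parse_listings(html):
    """Turn raw HTML into a list of structured records."""
    parser = ListingParser()
    parser.feed(html)
    # Convert "$120.00" into a float so prices can be compared numerically.
    for record in parser.records:
        record["price"] = float(record["price"].lstrip("$"))
    return parser.records


listings = parse_listings(SAMPLE_HTML)
for item in listings:
    print(item["title"], item["price"])
```

Once the data is in a list of dictionaries like this, it can be sorted, filtered, or exported for the price comparison and market analysis uses mentioned above.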

For businesses needing an efficient and scalable solution, eBay Web Scraping API simplifies the process, ensuring accurate and up-to-date data collection.

Beyond eBay: Expanding Data Collection

While eBay data scraping is essential for eCommerce businesses, other industries can benefit from web scraping, too. Here are additional powerful scraping solutions:

Yellow Pages Data Scraping – Extract business listings, contact details, and customer reviews.

YT Scraper – Gather video analytics, comments, and channel insights for YouTube.

Scrape Images from a Website – Extract high-quality images and product details for digital marketing.

Scrape Jobs from the Internet – Automate job postings extraction to track hiring trends and opportunities.

Get Started with eBay Web Scraping

If you’re ready to leverage data-driven decision-making, integrating an eBay web scraper into your business strategy is a must. Whether you need pricing insights, product analytics, or competitor tracking, automated web scraping solutions can transform the way you operate.

Need a custom web scraping solution? Reach out today to explore how data scraping can supercharge your business growth!

#eBayScraper#WebScraping#DataDrivenInsights#eCommerceTools#CompetitorAnalysis#MarketTrends#PricingStrategy#DataScraping#BusinessGrowth#Automation#eCommerceData#ProductResearch#CompetitiveEdge#WebScrapingAPI#DataAnalytics#DigitalMarketing#BusinessIntelligence#ScrapingSolutions#eCommerceSuccess#TechInnovation

0 notes

Text

Grass Price Rises as Daily Scraped Data Surges to a Record High

The Surge in Grass Price and Scraped Data Highlights the Growth of the Network

The Grass price has risen sharply in recent days, reaching new highs on the back of increased network activity. The rise in the Grass (GRASS) token is a direct result of the exponential growth in the amount of data being scraped daily. As of February 23, the price of Grass had climbed to $2.10, a 122% increase from its lowest point this year. This surge reflects the increasing demand for the token and highlights the power of its data-scraping capabilities.

The Grass network's rapid expansion is driven by its ability to scrape enormous volumes of data. On February 15, the network reached an important milestone of 1 million gigabytes of daily scraped data. By Wednesday, the daily scraped data had hit a record high of 1.32 million gigabytes, up from only 2,600 gigabytes at the beginning of the year. This surge in data scraping has significantly boosted the value of the Grass token, which currently has a market capitalisation of more than $500 million.

Since its inception, Grass has steadily grown its scope, scraping over 109.7 million IP addresses and indexing more than 4.47 billion URLs. This level of data traffic demonstrates the power and scalability of the Grass network. To improve its data scraping capabilities, Grass released the Grass Sion upgrade earlier this month, which improved its ability to handle multimodal data and simplified the scraping process.

The growth in the Grass price follows broader market trends, as demand for tokens related to data scraping and blockchain-based analytics increases. With the growing interest in decentralised networks and data integrity, Grass's value is projected to rise further as more developers and consumers recognise its potential. The Grass network's ability to process such enormous amounts of data distinguishes it as a pioneer in the blockchain data sector.

In conclusion, the growth in the Grass price reflects both the increasing value of the token and higher network activity. As the volume of daily scraped data increases, so will the demand for Grass, making it a formidable contender in the fast-developing realm of blockchain technology and decentralised data scraping.
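For readers who want to check the numbers, the short Python sketch below works through the arithmetic implied by the figures quoted in this post. The inputs are the post's own numbers; the implied yearly low and the growth multiple are derived values, not figures reported by the source.

```python
# Figures quoted in the post.
price_now = 2.10             # USD, as of February 23
gain_from_low = 1.22         # a 122% increase from the year's low
daily_gb_start = 2_600       # gigabytes per day at the start of the year
daily_gb_record = 1_320_000  # gigabytes per day at the record high

# A 122% increase means: price_now = low * (1 + 1.22).
implied_low = price_now / (1 + gain_from_low)

# How many times larger the record daily volume is than the starting volume.
growth_multiple = daily_gb_record / daily_gb_start

print(f"Implied yearly low: ${implied_low:.2f}")
print(f"Daily scraped data grew about {growth_multiple:.0f}x")
```

In other words, a 122% gain to $2.10 implies a low a little under one dollar, and the daily scraped volume grew roughly five-hundredfold over the period described.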

#blockchain#blockchaingrowth#cryptocurrency#dailyscrapeddata#datascraping#Grassnetwork#Grassprice#Grasstoken#marketcap

0 notes

Text

Extracting Product Information from Capterra.com

Extracting Product Information from Capterra.com for Competitive Insights

Capterra.com is one of the leading platforms for software and business solutions, providing detailed insights into various software categories, reviews, pricing, and feature comparisons. For businesses looking to analyze competitors, identify market trends, or build a comprehensive product database, extracting product information from Capterra.com is a game-changer.

At DataScrapingServices.com, we offer Capterra.com Product Data Extraction Services to help businesses access structured and real-time product information. With our advanced scraping techniques, companies can collect valuable software data for market research, lead generation, and strategic decision-making.

What is Capterra.com Product Information Scraping?

Capterra.com provides in-depth details about thousands of software products, including reviews, pricing, features, and competitor comparisons. Our automated web scraping solutions help businesses extract, organize, and analyze product details from Capterra.com efficiently.

By leveraging Capterra product data scraping, businesses can gain competitive insights, track software industry trends, and enhance their product offerings.

Key Data Fields Extracted from Capterra.com

Our Capterra Product Data Extraction Services provide structured and accurate data, including:

🔹 Software Name – The name of the software listed on Capterra.

🔹 Category – The industry or business category the software belongs to.

🔹 Company Name – The developer or vendor offering the software.

🔹 Features – A detailed list of software functionalities and capabilities.

🔹 Pricing Information – Cost details, including free trials, subscription models, and pricing plans.

🔹 User Ratings & Reviews – Customer feedback and star ratings to assess product performance.

🔹 Comparison with Competitors – Features, pricing, and benefits compared with similar tools.

🔹 Deployment Options – Whether the software is web-based, cloud-based, or on-premises.

🔹 Integration Capabilities – Information about integrations with other tools or platforms.

🔹 Contact Information – Business details, including website links and customer support options.

With these essential data points, businesses can make informed decisions, enhance their software offerings, and improve marketing strategies.
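To make the idea of "structured data" concrete, here is a minimal Python sketch of how a few of the fields listed above might be modeled and exported. The `SoftwareRecord` class, its field names, and the sample values are all hypothetical, chosen only for illustration; they do not describe the actual schema or delivery format of any service.

```python
import csv
import io
import json
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class SoftwareRecord:
    """Hypothetical record covering a subset of the data fields listed above."""
    software_name: str
    category: str
    company_name: str
    features: List[str] = field(default_factory=list)
    pricing: str = ""
    rating: Optional[float] = None
    deployment: str = ""


def to_json(records):
    """Serialize records as a JSON array of objects."""
    return json.dumps([asdict(r) for r in records], indent=2)


def to_csv(records):
    """Serialize records as CSV text, flattening the feature list into one cell."""
    buffer = io.StringIO()
    fieldnames = list(asdict(records[0]).keys())
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)
    writer.writeheader()
    for record in records:
        row = asdict(record)
        row["features"] = "; ".join(row["features"])
        writer.writerow(row)
    return buffer.getvalue()


# Placeholder values, not real Capterra data.
records = [
    SoftwareRecord(
        software_name="ExampleCRM",
        category="CRM",
        company_name="Example Inc.",
        features=["Contact management", "Email sync"],
        pricing="Free trial, then subscription",
        rating=4.5,
        deployment="Cloud-based",
    )
]

print(to_json(records))
print(to_csv(records))
```

Keeping one typed record per product makes it straightforward to emit the same data as JSON for an API or as CSV for a spreadsheet.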

Benefits of Extracting Product Data from Capterra.com

1. Competitive Market Analysis

By extracting software data from Capterra, businesses can compare features, pricing, and customer feedback, gaining insights into competitor strengths and weaknesses.

2. Lead Generation & Business Growth

Companies can use Capterra product data to identify potential leads, reach out to businesses looking for software solutions, and tailor their marketing efforts accordingly.

3. Software Trend Analysis

Tracking software reviews, ratings, and features over time helps businesses identify industry trends, customer preferences, and emerging technologies.

4. Enhanced Product Development

Analyzing competitor products allows businesses to improve their own software, add missing features, and stay ahead in the market.

5. Improved Marketing & SEO Strategies

With detailed product data, companies can optimize their content, target the right audience, and develop data-driven marketing campaigns.

6. Automated & Scalable Data Extraction

Our Capterra.com data scraping services provide businesses with real-time and structured data in an automated, scalable, and cost-effective manner.

Why Choose DataScrapingServices.com?

At DataScrapingServices.com, we provide customized Capterra data extraction services tailored to your business needs.

✅ Accurate & Reliable Data – We ensure high-quality, clean, and structured data for business analysis.

✅ Real-Time Updates – Get the latest software details, reviews, and pricing trends from Capterra.

✅ Scalable Solutions – Whether you need data for hundreds or thousands of software products, we handle it efficiently.

✅ Multiple Data Formats – We deliver data in CSV, Excel, JSON, or API format, making integration easy.

✅ 24/7 Support – Our team is available to assist with queries, customizations, and ongoing support.

Best eCommerce Data Scraping Services Provider

Amazon Product Review Extraction

Walmart Product Price Scraping Services

Etsy Price and Product Details Scraping

Macys Product Data Extraction

Overstock Product Pricing Scraping Services

Gap Product Pricing Extraction

Product Reviews Data Extraction

Best Buy Product Price Extraction

Homedepot Product Listing Scraping

Overstock Product Prices Data Extraction

Extracting Product Information from Capterra.com Services in USA:

Atlanta, Denver, Fresno, Bakersfield, Mesa, Long Beach, Austin, Tulsa, Philadelphia, Indianapolis, Colorado, Houston, San Jose, Jacksonville, El Paso, Sacramento, Charlotte, Wichita, Louisville, Washington, Orlando, Seattle, Memphis, Dallas, Las Vegas, San Antonio, Oklahoma City, San Francisco, Omaha, New Orleans, Milwaukee, Fort Worth, Virginia Beach, Raleigh, Columbus, Chicago, Nashville, Boston, Tucson and New York.

Get Started with Capterra.com Product Data Scraping Today!

Are you looking to extract valuable software insights from Capterra.com? Our Capterra.com Product Data Extraction Services provide the structured data you need for business growth, competitor analysis, and market research.

📧 Contact us at: [email protected]

🌐 Visit our website: DataScrapingServices.com

🚀 Gain a competitive edge with accurate and real-time software product insights!

#webscraping#datascraping#ecommercedatascraping#capterradatascraping#extractingproductinformationfromcapterra#capterraproductreviewsscraping#productdetailsextraction#leadgeneration#datadrivenmarketing#webscrapingservices#businessinsights#digitalgrowth#datascrapingexperts#datascrapingservices

0 notes

Text

Data scraping and QR codes create a powerful duo for smart data collection. QR codes simplify data entry by providing instant access points, while data scraping automates the extraction of structured insights from websites or databases. Together, they streamline data workflows, enhance accuracy, and enable businesses to make data-driven decisions with ease and efficiency. Perfect for modern, tech-savvy organizations!

0 notes

Text

#DataScraping#digitalmarketing#dataextraction#artificial intelligence#DataAutomation#WebAutomation#AutomationTools

0 notes

Text

How Can Scraping On-Demand Grocery Delivery Data Revolutionize The Industry?

In recent years, technology has greatly changed how groceries reach our doorsteps. Businesses that use web scraping can learn a lot about how people shop for groceries online. This information helps them make intelligent choices, tailor their services to what people want, and make customers happier. Imagine a grocery delivery app that predicts your needs before you know them. Every time you use such an app, data is being compiled in the background to make that possible. That's the power of data scraping.

The ease and accessibility of online grocery delivery have changed how individuals buy groceries, eliminating the need for trips to nearby stores. The business is flourishing, with an expected 20% annual revenue increase between 2021 and 2031. Orders on well-known platforms such as Amazon Fresh, Instacart, and DoorDash are rising significantly. The demand for data on on-demand grocery delivery has, in turn, led to the emergence of specialized data-scraping businesses.

What is On-Demand Grocery Data?

On-demand grocery data is all the information generated by how on-demand grocery delivery services work. These services have transformed customers' buying behavior by delivering groceries quickly to their door. The data covers different parts of the process, such as what customers order, how much, where it's going, and when they want it delivered. This data is essential to make sure orders are correct and delivered on time. Scraping on-demand grocery delivery app data therefore helps businesses understand how people shop for groceries.

By collecting information from grocery data, businesses can see what customers are doing, keep an eye on product prices, and know about different products and special deals. This helps businesses run more smoothly daily, make smarter decisions, stay aware of prices, and give them an advantage over others.

What is the Significance of Scraping On-Demand Grocery Delivery Data?

Scraping on-demand grocery delivery data gives the industry a significant boost. It is a tool that makes operations smoother and more innovative. When businesses scrape data, they get up-to-date and accurate information, such as current prices, available products, and customers' preferences.

This is crucial because the grocery segment is changing rapidly. Scraping grocery data enables businesses to adapt swiftly and operate more efficiently. By regularly monitoring what their competitors are doing, they can stay ahead of the game and gain a significant advantage over other firms. This helps not just enterprises but also customers: companies can use scraped data to ensure that our groceries arrive faster, at better prices, and with a more personalized shopping experience.

Businesses are able to make well-informed decisions by extracting grocery data because they have detailed knowledge of the market. Imagine knowing which store has the best prices or always has your favorite products in stock. Scraping helps companies figure this out. They can adjust their prices in real time to stay competitive and offer us the best discounts.

Grocery delivery app data scraping helps firms identify what their consumers like. They can learn about our tastes by reading our reviews, ratings, and comments. This means companies can adapt their offerings to our preferences, making the purchasing experience more personalized. It acts as a virtual shopping assistant that understands just what you prefer.

Benefits of Scraping On-Demand Grocery Delivery Data

Scraping grocery delivery app data offers several benefits to firms in this ever-changing market. Gathering and evaluating data from these services opens up several opportunities for improvement.

Getting the Correct Prices

Imagine you're in a race and want to make sure you're keeping pace with everyone else. Stores likewise aim to keep their pricing competitive with other stores. Scraped data works like a speedometer for prices by providing real-time information on what other retailers charge. If we see competitors moving quicker (charging less), we can accelerate (reduce our prices) to stay in the race.
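As a rough illustration of this "speedometer" idea, the Python sketch below compares our price with scraped competitor prices and suggests an adjustment. The function name `suggest_price`, the median rule, the `floor_price` parameter, and all sample prices are assumptions for the example; real pricing logic would weigh margins, stock levels, and many other factors.

```python
from statistics import median


def suggest_price(our_price, competitor_prices, floor_price):
    """Suggest a price no higher than the competitors' median.

    floor_price is the lowest price we are willing to charge (for example,
    cost plus a minimum margin), so the suggestion never drops below it.
    """
    if not competitor_prices:
        return our_price  # nothing to compare against
    market_price = median(competitor_prices)
    if our_price <= market_price:
        return our_price  # already competitive, keep the current price
    return max(market_price, floor_price)


# Hypothetical scraped prices for the same product at three other retailers.
competitors = [4.49, 4.79, 4.59]

print(suggest_price(4.99, competitors, floor_price=4.25))  # above market: lowered
print(suggest_price(3.99, competitors, floor_price=3.50))  # below market: unchanged
```

Using the median rather than the lowest competitor price is a deliberate choice in this sketch: it avoids chasing a single outlier discount.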

Consumer Behavior Evaluation

Businesses can learn a lot from customer reviews and ratings. They can gather this information in real-time and adjust their offerings to meet customer needs and expectations better. Scraping grocery delivery app data helps make customers happier and more loyal.

Delivering Faster and Smarter

Just as we look for the quickest path to the park, we want the most efficient way to deliver groceries. Finding fast, efficient delivery routes saves fuel and time. It also helps the environment and makes delivery faster.

Competitor Analysis

We want to know what other stores are doing so we can make the right moves, too. Watching other stores helps us understand competitors' actions. If we see something new or changing in the grocery market, we can make intelligent decisions and stay ahead of the competition.

Enhanced Customer Experience

Imagine having all your favorite snacks whenever you want. We want our store to stock what our customers want, like a snack cabinet full of all your favorites, so they are happy. Customers like shopping with us, and we deliver their items as quickly as possible to make them even happier.

Operational Efficiency

Imagine having a robot companion who assists you with your duties. Similarly, we want the computer to assist us with our shop operations.

Future Trends of On-Demand Grocery Delivery Data

AI, machine learning, blockchain, and regular grocery data scraping can give us more detailed information. We can use this information to ensure we collect data fairly and sustainably from grocery delivery and grocery apps.

Advanced Machine Learning and AI Integration

Organizations can enhance the processing of on-demand grocery delivery data scraping using advanced machine learning and AI. This improves automation and sophistication. It also offers valuable insights and predictive analytics to help with decision-making.

Predictive Demand Forecasting

Businesses can use historical and real-time information to predict future demand. This improves inventory management and helps forecast high-demand periods. It also ensures enough resources are available to meet consumer expectations.
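One of the simplest ways to turn order history into a forecast is a moving average. The Python sketch below is a minimal example under stated assumptions: the function name, the window size, and the daily order counts are all made up for illustration, and real forecasting would usually account for seasonality, promotions, and weather.

```python
def moving_average_forecast(daily_orders, window=7):
    """Forecast the next day's orders as the mean of the last `window` days."""
    if window <= 0:
        raise ValueError("window must be positive")
    recent = daily_orders[-window:]
    if not recent:
        raise ValueError("daily_orders must not be empty")
    return sum(recent) / len(recent)


# Hypothetical order counts for the last eight days, oldest first.
orders = [120, 135, 128, 150, 160, 155, 170, 180]

forecast = moving_average_forecast(orders, window=4)
print(f"Expected orders tomorrow: {forecast}")
```

A shorter window reacts faster to recent spikes, while a longer one smooths out day-to-day noise, so the window size is a trade-off to tune against real data.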

Enhanced Personalization

Scraped data can be used to provide highly tailored suggestions and experiences to each consumer. Tailoring on-demand delivery services to individual interests and habits helps boost consumer loyalty and happiness.

Geospatial Intelligence for Efficient Delivery

Businesses use location information, such as maps and real-time data, to help drivers find the best and fastest routes. This is similar to how your GPS guides you to the quickest way to a friend's house. Businesses use this location data to plan and improve delivery routes in real time.
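A very simple version of route planning can be sketched with straight-line distances and a greedy "nearest stop next" rule. In the Python example below, the coordinates and stop names are hypothetical, and the nearest-neighbor heuristic is a stand-in chosen for brevity: it does not guarantee the shortest route, and real systems rely on road networks and live traffic data.

```python
import math

EARTH_RADIUS_KM = 6371.0


def haversine_km(a, b):
    """Great-circle distance in kilometers between two (lat, lon) points in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat, dlon = lat2 - lat1, lon2 - lon1
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def nearest_neighbor_route(depot, stops):
    """Order stops greedily: always drive to the closest unvisited stop next."""
    remaining = dict(stops)
    current = depot
    route = []
    while remaining:
        name = min(remaining, key=lambda n: haversine_km(current, remaining[n]))
        route.append(name)
        current = remaining.pop(name)
    return route


# Hypothetical depot and customer coordinates as (latitude, longitude).
depot = (0.0, 0.0)
stops = {"A": (0.0, 0.03), "B": (0.0, 0.01), "C": (0.0, 0.02)}

route = nearest_neighbor_route(depot, stops)
print(" -> ".join(route))
```

Re-running the same function whenever a new order arrives is one basic way to approximate the real-time re-planning described above.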

Blockchain in Data Security

Using blockchain technology can improve the security of the information we gather. As concerns about data security rise, blockchain emerges as a highly secure and trustworthy solution. It protects the acquired data from manipulation and guarantees accuracy and reliability.

Conclusion

Web scraping can have a big impact on the fast-changing on-demand grocery delivery industry. It lets businesses get the most current information to make smart decisions and improve their operations. This technology also helps businesses stay updated about their competition. The industry depends on data, and grocery data scraping will change how we shop for essential items. Web Screen Scraping offers a smoother, more personalized approach to scraping grocery delivery app data. Grocery delivery services can use data to create a personalized and efficient shopping experience, making them leaders in the digital changes happening in retail.

0 notes

Link

Ready to supercharge your lead generation? Introducing the Google Maps Places Scraper, a powerful tool that allows you to extract local business contacts directly from Google Maps. With features like data enrichment—with emails, social profiles, and phone numbers—you can create a robust lead database that helps you identify potential clients and monitor competitors effortlessly. But wait. Before you dive in, it's crucial to understand the pricing structure and user experiences. While the one-time payment offers lifetime access, there are limitations to consider, including unexpected billing issues and usage caps. Our latest blog breaks down the pros and cons, detailed features, and user feedback, providing you with insights to make an informed decision. Is it worth the investment? Join us as we explore the benefits and pitfalls of this innovative tool. Don't miss out on valuable marketing strategies that could transform your outreach efforts. Check out our full review to get all the details: https://jomiruddin.com/google-maps-scraper-lifetime-deal-review-appsumo/ #DigitalMarketing #LeadGeneration #BusinessTools #Entrepreneur #MarketingStrategies

#localbusinesscontacts#GoogleMapsscraper#AppSumodiscount#salesmanagement#leadgenerationtool#customerfeedback#datascraping#Outscraperreview#marketingtools#billingpractices#sales management

0 notes

Text

Unlock Marketing Potential with Wisbar.org Attorney Data Extraction by Lawyersdatalab.com

In the competitive legal landscape, data-driven marketing is essential for law firms and lawyer marketing companies to stand out. One valuable resource to harness this potential is Wisbar.org Attorney Data Extraction, offered by Lawyersdatalab.com. By extracting detailed and up-to-date attorney information from the State Bar of Wisconsin (Wisbar.org), firms can enhance their outreach, marketing, and networking strategies.

Wisbar.org Attorney Data Extraction by Lawyersdatalab.com is an essential service for law firms and marketing agencies looking to streamline their outreach and marketing strategies. By extracting detailed attorney information, such as contact details, practice areas, and locations, this service enables targeted campaigns that drive better results. With access to accurate and up-to-date data from the State Bar of Wisconsin, firms can efficiently identify potential clients, partners, or competitors, and tailor their marketing efforts for maximum impact. Whether you're a law firm or a legal marketing company, leveraging Wisbar.org attorney data helps unlock new growth opportunities.

Key Data Fields

With our Wisbar.org Attorney Data Extraction services, you gain access to:

1. Attorney names

2. Contact details (phone numbers, email addresses)

3. Practice areas and specializations

4. Firm names and affiliations

5. Geographic locations

6. Years of experience

7. Membership status

8. Education and certifications

9. License details

10. Bar association memberships

This comprehensive set of data gives you the ability to create segmented and tailored marketing campaigns that resonate with your target audience.

How It Works

Our Wisbar.org Attorney Data Extraction service helps you extract valuable attorney data, such as names, contact information, practice areas, and location. This data is crucial for law firms and marketing companies looking to build targeted campaigns or expand their network in the legal industry. The extraction process is efficient, ensuring that you receive accurate and organized data for immediate use.

Benefits for Law Firm Marketing

For law firms, targeted marketing is key to standing out and attracting clients. With the Wisbar.org Attorney Data Extraction service, you can identify potential partners, clients, or competitors. Law firms can use the data to create personalized outreach efforts, whether for networking, client acquisition, or strategic partnerships. The ability to pinpoint specific attorneys based on practice area and location allows law firms to create more effective and focused marketing campaigns.

Benefits for Lawyer Marketing Companies

For lawyer marketing companies, the Wisbar.org Attorney Data Extraction provides a solid foundation for effective marketing strategies. By having access to accurate attorney data, marketing agencies can craft personalized marketing materials, create specific outreach lists, and help law firms grow their clientele. The data also allows for detailed analysis, providing insights into market trends, competitive positioning, and the needs of prospective clients.

State Bar Directory Data Scraping

Nebraska State Bar Attorneys Email Database

Azbar.org Attorney Data Scraping

Ohio State Bar Lawyers Mailing List

RIBar Lawyers Email Database

Utahbar.org Lawyers Email List

Wyoming Bar Association Lawyer Data Scraping

Oregon State Bar Attorney Mailing List

State Bar of Michigan Lawyers Email List

Floridabar.org Lawyers Data Scraping

Texasbar.com Lawyer Email Database Extraction

Hawaii State Bar Lawyers Email List

Best State Bar Data Extraction Services in USA

Bakersfield, Tulsa, Portland, Oklahoma City, Long Beach, Columbus, Chicago, San Antonio, Indianapolis, Jacksonville, Detroit, Denver, San Diego, New Orleans, Tucson, Phoenix, Nashville, Omaha, Virginia Beach, Atlanta, Los Angeles, Miami, Washington D.C., Baltimore, Kansas City, San Francisco, Sacramento, Raleigh, San Jose, Arlington, El Paso, Honolulu, Wichita, Austin, New York, Colorado Springs, Louisville, Fresno, Memphis, Philadelphia, Fort Worth, Houston, Orlando, Dallas, Albuquerque, Las Vegas, Seattle, Boston, Charlotte, Milwaukee and Mesa.

Unlock New Opportunities with Accurate Attorney Data from Wisbar.org

At Lawyersdatalab.com, we understand the importance of having reliable and relevant data to drive effective marketing efforts. Our Wisbar.org Attorney Data Extraction service empowers law firms and marketing agencies with the data they need to thrive in the legal sector. Contact us today to start unlocking new marketing opportunities with precise attorney data.

Website: Lawyersdatalab.com

Email: [email protected]

#wisbarattorneydataextraction#extractingattorneyprofilesfromwisbar#statebardatascraping#lawfirmmarketing#attorneydatascraping#wisbar#attorneymarketing#lawyermarketing#datascraping#legalnetworking#lawyersdatalab

0 notes

Text

Web scraping extracts data from websites by parsing the HTML of their pages, for market monitoring, analysis, research, key comparisons, and data collection.🎯

🔮Common tools include Scrapy and Selenium, and programming languages such as Python, JavaScript, etc.✨

👉So will you give me the responsibility? I will check website status for all website issues using a browser request agent and handle exceptions and errors gracefully. Let me be your powerful resource for collecting web scraping data.

💠Then get in touch today👇

🪩Humayera Sultana Himu ✅

0 notes

Text

🌟 Dive Into the World of Price Monitoring! 🌟

Hey Tumblr fam! Ever wondered how some folks always seem to snag the best deals? It's all about mastering the art of price monitoring, and we've got the inside scoop for you! 📉

Our latest blog article, "How to Monitor Walmart Price Changes," explores the exciting world of retail dynamics. With Walmart as a shining example of success, the article reveals how they ace strategic price monitoring. If you're curious about the tools and strategies that make it happen, this is your go-to guide!

From understanding when to look for price drops to tips on tracking effectively, this read is packed with game-changing insights. Ready to level up your shopping game and stay ahead in the retail race? Check out the full article and unlock the secrets to smart shopping! 🛒✨

#PriceMonitoring#WalmartDeals#RetailSecrets#SmartShopping#scrapingpros#webscraping#datascraping#dataextraction

0 notes