#Kubernetes real-time monitoring


Lens Kubernetes: Simple Cluster Management Dashboard and Monitoring


Kubernetes is a well-known container orchestration platform. It allows admins and organizations to operate their containers and support modern applications in the enterprise. Kubernetes management is not for the “faint of heart.” It requires the right skill set and tools. Lens Kubernetes desktop is an app that enables managing Kubernetes clusters on Windows and Linux devices. Table of…


How Python Powers Scalable and Cost-Effective Cloud Solutions

Explore the role of Python in developing scalable and cost-effective cloud solutions. This guide covers Python's advantages in cloud computing, addresses potential challenges, and highlights real-world applications, providing insights into leveraging Python for efficient cloud development.

Introduction

In today's rapidly evolving digital landscape, businesses are increasingly leveraging cloud computing to enhance scalability, optimize costs, and drive innovation. Among the myriad of programming languages available, Python has emerged as a preferred choice for developing robust cloud solutions. Its simplicity, versatility, and extensive library support make it an ideal candidate for cloud-based applications.

In this comprehensive guide, we will delve into how Python empowers scalable and cost-effective cloud solutions, explore its advantages, address potential challenges, and highlight real-world applications.

Why Is Python the Preferred Choice for Cloud Computing?

Python's popularity in cloud computing is driven by several factors, making it the preferred language for developing and managing cloud solutions. Here are some key reasons why Python stands out:

Simplicity and Readability: Python's clean and straightforward syntax allows developers to write and maintain code efficiently, reducing development time and costs.

Extensive Library Support: Python offers a rich set of libraries and frameworks like Django, Flask, and FastAPI for building cloud applications.

Seamless Integration with Cloud Services: Python is well-supported across major cloud platforms like AWS, Azure, and Google Cloud.

Automation and DevOps Friendly: Python supports infrastructure automation through tools like Ansible (itself written in Python) and Boto3, and is commonly used to script around Terraform.

Strong Community and Enterprise Adoption: Python has a massive global community that continuously improves and innovates cloud-related solutions.

How Python Enables Scalable Cloud Solutions

Scalability is a critical factor in cloud computing, and Python provides multiple ways to achieve it:

1. Automation of Cloud Infrastructure

Python's compatibility with cloud service provider SDKs, such as AWS Boto3, Azure SDK for Python, and Google Cloud Client Library, enables developers to automate the provisioning and management of cloud resources efficiently.

2. Containerization and Orchestration

Python integrates seamlessly with Docker and Kubernetes, enabling businesses to deploy scalable containerized applications efficiently.

3. Cloud-Native Development

Frameworks like Flask, Django, and FastAPI support microservices architecture, allowing businesses to develop lightweight, scalable cloud applications.

4. Serverless Computing

Python's support for serverless platforms, including AWS Lambda, Azure Functions, and Google Cloud Functions, allows developers to build applications that automatically scale in response to demand, optimizing resource utilization and cost.
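As a minimal sketch of what such a function can look like, the handler below follows the (event, context) shape that AWS Lambda uses for Python functions. The "name" field in the event and the layout of the response are assumptions chosen for illustration.

```python
import json


def lambda_handler(event, context):
    """Entry point in the (event, context) shape used by AWS Lambda for Python.

    The "name" field in the event is a hypothetical input for this sketch.
    """
    name = event.get("name", "world")
    # The function keeps no state between calls, which is what lets the
    # platform run as many copies in parallel as the traffic requires.
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

Because the handler is a plain function, it can be called locally with a dictionary as the event, which is a convenient way to test it before deploying.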

5. AI and Big Data Scalability

Python’s dominance in AI and data science makes it an ideal choice for cloud-based AI/ML services like AWS SageMaker, Google AI, and Azure Machine Learning.

Looking for expert Python developers to build scalable cloud solutions? Hire Python Developers now!

Advantages of Using Python for Cloud Computing

Cost Efficiency: Python’s compatibility with serverless computing and auto-scaling strategies minimizes cloud costs.

Faster Development: Python’s simplicity accelerates cloud application development, reducing time-to-market.

Cross-Platform Compatibility: Python runs seamlessly across different cloud platforms.

Security and Reliability: Python-based security tools help in encryption, authentication, and cloud monitoring.

Strong Community Support: Python developers worldwide contribute to continuous improvements, making it future-proof.

Challenges and Considerations

While Python offers many benefits, there are some challenges to consider:

Performance Limitations: Python is typically run by an interpreter (CPython), so CPU-bound code is often slower than the equivalent in compiled languages like Java or C++.

Memory Consumption: Python applications might require optimization to handle large-scale cloud workloads efficiently.

Learning Curve for Beginners: Though Python is simple, mastering cloud-specific frameworks requires time and expertise.

Python Libraries and Tools for Cloud Computing

Python’s ecosystem includes powerful libraries and tools tailored for cloud computing, such as:

Boto3: AWS SDK for Python, used for cloud automation.

Google Cloud Client Library: Helps interact with Google Cloud services.

Azure SDK for Python: Enables seamless integration with Microsoft Azure.

Apache Libcloud: Provides a unified interface for multiple cloud providers.

PyCaret: Simplifies machine learning deployment in cloud environments.

Real-World Applications of Python in Cloud Computing

1. Netflix - Scalable Streaming with Python

Netflix extensively uses Python for automation, data analysis, and managing cloud infrastructure, enabling seamless content delivery to millions of users.

2. Spotify - Cloud-Based Music Streaming

Spotify leverages Python for big data processing, recommendation algorithms, and cloud automation, ensuring high availability and scalability.

3. Reddit - Handling Massive Traffic

Reddit uses Python and AWS cloud solutions to manage heavy traffic while optimizing server costs efficiently.

Future of Python in Cloud Computing

The future of Python in cloud computing looks promising with emerging trends such as:

AI-Driven Cloud Automation: Python-powered AI and machine learning will drive intelligent cloud automation.

Edge Computing: Python will play a crucial role in processing data at the edge for IoT and real-time applications.

Hybrid and Multi-Cloud Strategies: Python’s flexibility will enable seamless integration across multiple cloud platforms.

Increased Adoption of Serverless Computing: More enterprises will adopt Python for cost-effective serverless applications.

Conclusion

Python's simplicity, versatility, and robust ecosystem make it a powerful tool for developing scalable and cost-effective cloud solutions. By leveraging Python's capabilities, businesses can enhance their cloud applications' performance, flexibility, and efficiency.

Ready to harness the power of Python for your cloud solutions? Explore our Python Development Services to discover how we can assist you in building scalable and efficient cloud applications.

FAQs

1. Why is Python used in cloud computing?

Python is widely used in cloud computing due to its simplicity, extensive libraries, and seamless integration with cloud platforms like AWS, Google Cloud, and Azure.

2. Is Python good for serverless computing?

Yes! Python works efficiently in serverless environments like AWS Lambda, Azure Functions, and Google Cloud Functions, making it an ideal choice for cost-effective, auto-scaling applications.

3. Which companies use Python for cloud solutions?

Major companies like Netflix, Spotify, Dropbox, and Reddit use Python for cloud automation, AI, and scalable infrastructure management.

4. How does Python help with cloud security?

Python offers robust security libraries like PyCryptodome and pyOpenSSL (Python bindings for OpenSSL), which support encryption and authentication in secure cloud applications.

5. Can Python handle big data in the cloud?

Yes! Python supports big data processing with tools like Apache Spark (through PySpark), pandas, and NumPy, making it suitable for data-driven cloud applications.


Exploring the Azure Technology Stack: A Solution Architect’s Journey

Kavin

As a solution architect, my career revolves around solving complex problems and designing systems that are scalable, secure, and efficient. The rise of cloud computing has transformed the way we think about technology, and Microsoft Azure has been at the forefront of this evolution. With its diverse and powerful technology stack, Azure offers endless possibilities for businesses and developers alike. My journey with Azure began with Microsoft Azure training online, which not only deepened my understanding of cloud concepts but also helped me unlock the potential of Azure’s ecosystem.

In this blog, I will share my experience working with a specific Azure technology stack that has proven to be transformative in various projects. This stack primarily focuses on serverless computing, container orchestration, DevOps integration, and globally distributed data management. Let’s dive into how these components come together to create robust solutions for modern business challenges.

Understanding the Azure Ecosystem

Azure’s ecosystem is vast, encompassing services that cater to infrastructure, application development, analytics, machine learning, and more. For this blog, I will focus on a specific stack that includes:

Azure Functions for serverless computing.

Azure Kubernetes Service (AKS) for container orchestration.

Azure DevOps for streamlined development and deployment.

Azure Cosmos DB for globally distributed, scalable data storage.

Each of these services has unique strengths, and when used together, they form a powerful foundation for building modern, cloud-native applications.

1. Azure Functions: Embracing Serverless Architecture

Serverless computing has redefined how we build and deploy applications. With Azure Functions, developers can focus on writing code without worrying about managing infrastructure. Azure Functions supports multiple programming languages and offers seamless integration with other Azure services.

Real-World Application

In one of my projects, we needed to process real-time data from IoT devices deployed across multiple locations. Azure Functions was the perfect choice for this task. By integrating Azure Functions with Azure Event Hubs, we were able to create an event-driven architecture that processed millions of events daily. The serverless nature of Azure Functions allowed us to scale dynamically based on workload, ensuring cost-efficiency and high performance.

Key Benefits:

Auto-scaling: Automatically adjusts to handle workload variations.

Cost-effective: Pay only for the resources consumed during function execution.

Integration-ready: Easily connects with services like Logic Apps, Event Grid, and API Management.

2. Azure Kubernetes Service (AKS): The Power of Containers

Containers have become the backbone of modern application development, and Azure Kubernetes Service (AKS) simplifies container orchestration. AKS provides a managed Kubernetes environment, making it easier to deploy, manage, and scale containerized applications.

Real-World Application

In a project for a healthcare client, we built a microservices architecture using AKS. Each service—such as patient records, appointment scheduling, and billing—was containerized and deployed on AKS. This approach provided several advantages:

Isolation: Each service operated independently, improving fault tolerance.

Scalability: AKS scaled specific services based on demand, optimizing resource usage.

Observability: Using Azure Monitor, we gained deep insights into application performance and quickly resolved issues.

The integration of AKS with Azure DevOps further streamlined our CI/CD pipelines, enabling rapid deployment and updates without downtime.
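To make the scaling behaviour concrete, here is a small sketch of the rule the Kubernetes Horizontal Pod Autoscaler documents for choosing a replica count. The post does not say which autoscaling mechanism the project used, so treating it as the Horizontal Pod Autoscaler is an assumption, and the sketch leaves out the tolerance band and minimum/maximum replica limits that a real autoscaler applies.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric):
    """Replica count from the Horizontal Pod Autoscaler's documented rule:
    ceil(current_replicas * current_metric / target_metric)."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    return math.ceil(current_replicas * current_metric / target_metric)
```

For example, four replicas averaging 90% CPU against a 60% target would be scaled to six, while a service running well under its target would be scaled down.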

Key Benefits:

Managed Kubernetes: Reduces operational overhead with automated updates and patching.

Multi-region support: Enables global application deployments.

Built-in security: Integrates with Azure Active Directory and offers role-based access control (RBAC).

3. Azure DevOps: Streamlining Development Workflows

Azure DevOps is an all-in-one platform for managing development workflows, from planning to deployment. It includes tools like Azure Repos, Azure Pipelines, and Azure Artifacts, which support collaboration and automation.

Real-World Application

For an e-commerce client, we used Azure DevOps to establish an efficient CI/CD pipeline. The project involved multiple teams working on front-end, back-end, and database components. Azure DevOps provided:

Version control: Using Azure Repos for centralized code management.

Automated pipelines: Azure Pipelines for building, testing, and deploying code.

Artifact management: Storing dependencies in Azure Artifacts for seamless integration.

The result? Deployment cycles that previously took weeks were reduced to just a few hours, enabling faster time-to-market and improved customer satisfaction.
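The core idea of such a pipeline, running stages in order and stopping at the first failure, can be sketched in a few lines. This is a language-neutral illustration written in Python, not Azure Pipelines syntax, and the stage names in the comments are hypothetical.

```python
def run_pipeline(stages):
    """Run (name, callable) stages in order; stop at the first failure.

    Each callable returns True on success. Returns the (name, ok) results
    for every stage that actually ran.
    """
    results = []
    for name, step in stages:
        ok = bool(step())
        results.append((name, ok))
        if not ok:
            break  # later stages (e.g. deploy) never run after a failure
    return results
```

With stages named build, test, and deploy, a failing test stage means the deploy stage is never reached, which is the guarantee that makes automated deployment safe to run on every commit.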

Key Benefits:

End-to-end integration: Unifies tools for seamless development and deployment.

Scalability: Supports projects of all sizes, from startups to enterprises.

Collaboration: Facilitates team communication with built-in dashboards and tracking.

4. Azure Cosmos DB: Global Data at Scale

Azure Cosmos DB is a globally distributed, multi-model database service designed for mission-critical applications. It guarantees low latency, high availability, and scalability, making it ideal for applications requiring real-time data access across multiple regions.

Real-World Application

In a project for a financial services company, we used Azure Cosmos DB to manage transaction data across multiple continents. The database’s multi-region replication ensured data consistency and availability, even during regional outages. Additionally, Cosmos DB’s support for multiple APIs (SQL, MongoDB, Cassandra, etc.) allowed us to integrate seamlessly with existing systems.

Key Benefits:

Global distribution: Data is replicated across regions with minimal latency.

Flexibility: Supports various data models, including key-value, document, and graph.

SLAs: Offers industry-leading SLAs for availability, throughput, and latency.

Building a Cohesive Solution

Combining these Azure services creates a technology stack that is flexible, scalable, and efficient. Here’s how they work together in a hypothetical solution:

Data Ingestion: IoT devices send data to Azure Event Hubs.

Processing: Azure Functions processes the data in real-time.

Storage: Processed data is stored in Azure Cosmos DB for global access.

Application Logic: Containerized microservices run on AKS, providing APIs for accessing and manipulating data.

Deployment: Azure DevOps manages the CI/CD pipeline, ensuring seamless updates to the application.

This architecture demonstrates how Azure’s technology stack can address modern business challenges while maintaining high performance and reliability.
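The processing and storage steps of this flow can be sketched with in-memory stand-ins. In the sketch below, a list of dictionaries plays the role of a batch delivered by Event Hubs and a plain dict plays the role of the database; the field names ("device_id", "temperature") are assumptions for illustration, and no Azure SDK calls are shown.

```python
from collections import defaultdict
from statistics import mean


def process_batch(events):
    """Average the readings per device for one batch of telemetry events.

    Each event is a dict with hypothetical "device_id" and "temperature" keys.
    """
    readings = defaultdict(list)
    for event in events:
        readings[event["device_id"]].append(event["temperature"])
    return {device: mean(values) for device, values in readings.items()}


def store(summary, database):
    """Upsert per-device summaries into a dict standing in for the database."""
    for device, avg in summary.items():
        database[device] = {"id": device, "avg_temperature": avg}
    return database
```

Keeping the processing step a pure function of its input batch is what allows the serverless layer to run many batches in parallel without coordination.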

Final Thoughts

My journey with Azure has been both rewarding and transformative. The training I received at ACTE Institute provided me with a strong foundation to explore Azure’s capabilities and apply them effectively in real-world scenarios. For those new to cloud computing, I recommend starting with a solid training program that offers hands-on experience and practical insights.

As the demand for cloud professionals continues to grow, specializing in Azure’s technology stack can open doors to exciting opportunities. If you’re based in Hyderabad or prefer online learning, consider enrolling in Microsoft Azure training in Hyderabad to kickstart your journey.

Azure’s ecosystem is continuously evolving, offering new tools and features to address emerging challenges. By staying committed to learning and experimenting, we can harness the full potential of this powerful platform and drive innovation in every project we undertake.


Breaking Barriers With DevOps: A Digital Transformation Journey

In today's rapidly evolving technological landscape, the term "DevOps" has become ingrained. But what does it truly entail, and why is it of paramount importance within the realms of software development and IT operations? In this comprehensive guide, we will embark on a journey to delve deeper into the principles, practices, and substantial advantages that DevOps brings to the table.

Understanding DevOps

DevOps, a fusion of "Development" and "Operations," transcends being a mere collection of practices; it embodies a cultural and collaborative philosophy. At its core, DevOps aims to bridge the historical gap that has separated development and IT operations teams. Through the promotion of collaboration and the harnessing of automation, DevOps endeavors to optimize the software delivery pipeline, empowering organizations to efficiently and expeditiously deliver top-tier software products and services.

Key Principles of DevOps

Collaboration: DevOps champions the concept of seamless collaboration between development and operations teams. This approach dismantles the conventional silos, cultivating communication and synergy.

Automation: Automation is crucial to DevOps. It entails using tools and scripts to automate mundane and repetitive tasks, such as code integration, testing, and deployment. Automation not only curtails errors but also accelerates the software delivery process.

Continuous Integration (CI): Continuous Integration (CI) is the practice of automatically combining code alterations into a shared repository several times daily. This enables teams to detect integration issues in the embryonic stages of development, expediting resolutions.

Continuous Delivery (CD): Continuous Delivery (CD) is an extension of CI, automating the deployment process. CD guarantees that code modifications can be swiftly and dependably delivered to production or staging environments.

Monitoring and Feedback: DevOps places a premium on real-time monitoring of applications and infrastructure. This vigilance facilitates the prompt identification of issues and the accumulation of feedback for incessant enhancement.

Core Practices of DevOps

Infrastructure as Code (IaC): Infrastructure as Code (IaC) encompasses the management and provisioning of infrastructure using code and automation tools. This practice ensures uniformity and scalability in infrastructure deployment.

Containerization: Containerization, exemplified by tools like Docker, packages applications and their dependencies into standardized units known as containers. Containers simplify deployment across heterogeneous environments.

Orchestration: Orchestration tools, such as Kubernetes, oversee the deployment, scaling, and monitoring of containerized applications, ensuring judicious resource utilization.

Microservices: Microservices architecture breaks applications into smaller, independently deployable services. Teams can build, test, and deploy these services separately, enhancing adaptability.
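The idea behind Infrastructure as Code, describing the desired state and letting a tool work out the changes, can be illustrated with a toy planner. This is a simplified sketch under the assumption that resources are plain name-to-configuration dictionaries; real tools also track dependencies and stored state, which are omitted here.

```python
def plan(desired, actual):
    """Compare desired and actual resources (name -> config dicts).

    Returns the names to create, update, and delete so that the actual
    state converges on the desired one.
    """
    create = sorted(name for name in desired if name not in actual)
    delete = sorted(name for name in actual if name not in desired)
    update = sorted(
        name for name in desired
        if name in actual and desired[name] != actual[name]
    )
    return {"create": create, "update": update, "delete": delete}
```

Running the same plan twice against an already converged environment yields no changes, which is the property that gives IaC its uniformity.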

Benefits of DevOps

When an organization embraces DevOps, it doesn't merely adopt a set of practices; it unlocks a treasure of benefits that can revolutionize its approach to software development and IT operations. Let's delve deeper into the wealth of advantages that DevOps bequeaths:

1. Faster Time to Market: In today's competitive landscape, speed is of the essence. DevOps expedites the software delivery process, enabling organizations to swiftly roll out new features and updates. This acceleration provides a distinct competitive edge, allowing businesses to respond promptly to market demands and stay ahead of the curve.

2. Improved Quality: DevOps places a premium on automation and continuous testing. This relentless pursuit of quality results in superior software products. By reducing manual intervention and ensuring thorough testing, DevOps minimizes the likelihood of defects in production. This, in turn, improves customer satisfaction and trust.

3. Increased Efficiency: The automation-centric nature of DevOps eliminates the need for laborious manual tasks. This not only saves time but also amplifies operational efficiency. Resources that were once tied up in repetitive chores can now be redeployed for more strategic and value-added activities.

4. Enhanced Collaboration: Collaboration is at the heart of DevOps. By breaking down the traditional silos that often exist between development and operations teams, DevOps fosters a culture of teamwork. This collaborative spirit leads to innovation, problem-solving, and a shared sense of accountability. When teams work together seamlessly, extraordinary results are achieved.

5. Increased Resilience: The ability to identify and address issues promptly is a hallmark of DevOps. Real-time monitoring and feedback loops provide an early warning system for potential problems. This proactive approach not only prevents issues from escalating but also augments system resilience. Organizations become better equipped to weather unexpected challenges.

6. Scalability: As businesses grow, so do their infrastructure and application needs. DevOps practices are inherently scalable. Whether it's expanding server capacity or deploying additional services, DevOps enables organizations to scale up or down as required. This adaptability ensures that resources are allocated optimally, regardless of the scale of operations.

7. Cost Savings: Automation and effective resource management are key drivers of long-term cost reductions. By minimizing manual intervention, organizations can save on labor costs. Moreover, DevOps practices promote efficient use of resources, resulting in reduced operational expenses. These cost savings can be channeled into further innovation and growth.

In summation, DevOps is more than a fleeting trend; it is a transformative approach to software development and IT operations. It champions collaboration, automation, and continuous improvement, enabling organizations to respond to market changes and customer requirements with agility and efficiency.

Whether you aspire to elevate your skills, embark on a novel career trajectory, or remain at the vanguard in your current role, ACTE Technologies is your unwavering ally on the expedition of perpetual learning and career advancement. Enroll today and unlock your potential in the dynamic realm of technology. Your journey towards success commences here. Embracing DevOps practices has the potential to usher in software development processes that are swifter, more reliable, and of higher quality. Join the DevOps revolution today!


Journey to DevOps

The concept of “DevOps” has been gaining traction in the IT sector for a couple of years. It involves promoting teamwork and interaction between software developers and IT operations groups to enhance the speed and reliability of software delivery. This strategy has become widely accepted as companies strive to deliver software that meets customer needs and maintain an edge in the industry. In this article, we will explore the steps to becoming a DevOps Engineer.

Step 1: Get familiar with the basics of Software Development and IT Operations:

In order to pursue a career as a DevOps Engineer, it is crucial to have a solid grasp of software development and IT operations. Familiarity with programming languages like Python, Java, Ruby, or PHP is essential. Additionally, knowledge of operating systems, databases, and networking is vital.

Step 2: Learn the principles of DevOps:

It is crucial to comprehend and apply the principles of DevOps. Automation, continuous integration, continuous deployment and continuous monitoring are aspects that need to be understood and implemented. It is vital to learn how these principles function and how to carry them out efficiently.

Step 3: Familiarize yourself with the DevOps toolchain:

Git: Git, a distributed version control system, is widely used by DevOps teams for code repository management. It tracks code changes, facilitates collaboration among team members, and preserves a record of modifications made to the codebase.

Ansible: Ansible is an open-source tool used for managing configurations, deploying applications, and automating tasks. It simplifies infrastructure management and saves time on repetitive tasks.

Docker: Docker is a containerization platform that allows DevOps engineers to bundle applications and their dependencies into containers. This ensures consistency and compatibility across environments, from development to production.

Kubernetes: Kubernetes is an open-source container orchestration platform that helps manage and scale containers. It helps automate the deployment, scaling, and management of applications and micro-services.

Jenkins: Jenkins is an open-source automation server that helps automate the process of building, testing, and deploying software. It helps to automate repetitive tasks and improve the speed and efficiency of the software delivery process.

Nagios: Nagios is an open-source monitoring tool that helps us monitor the health and performance of our IT infrastructure. It also helps us to identify and resolve issues in real-time and ensure the high availability and reliability of IT systems as well.

Terraform: Terraform is an infrastructure as code (IaC) tool that helps manage and provision IT infrastructure. It helps us automate the process of provisioning and configuring IT resources and ensures consistency between development and production environments.
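To give a flavour of how monitoring checks work in practice, the sketch below follows the Nagios plugin convention of reporting status through a numeric code (0 for OK, 1 for WARNING, 2 for CRITICAL, 3 for UNKNOWN) plus a one-line message. The disk-usage check itself and the 80% and 90% thresholds are assumptions chosen for illustration.

```python
import shutil

OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3


def classify(used_percent, warn=80.0, crit=90.0):
    """Map a usage percentage to a Nagios-style status code and message."""
    if used_percent >= crit:
        return CRITICAL, f"DISK CRITICAL - {used_percent:.1f}% used"
    if used_percent >= warn:
        return WARNING, f"DISK WARNING - {used_percent:.1f}% used"
    return OK, f"DISK OK - {used_percent:.1f}% used"


def check_disk(path="/"):
    """Measure disk usage for path and classify it; UNKNOWN on errors."""
    try:
        usage = shutil.disk_usage(path)
    except OSError as exc:
        return UNKNOWN, f"DISK UNKNOWN - {exc}"
    if usage.total == 0:
        return UNKNOWN, "DISK UNKNOWN - reported size is zero"
    return classify(100.0 * usage.used / usage.total)
```

A script used as an actual plugin would print the message and exit with the returned code, so the monitoring server can read both.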

Step 4: Gain practical experience:

The best way to gain practical experience is by working on real projects and bootcamps. You can start by contributing to open-source projects or participating in coding challenges and hackathons. You can also attend workshops and online courses to improve your skills.

Step 5: Get certified:

Getting certified in DevOps can help you stand out from the crowd and showcase your expertise to employers. Some of the most popular certifications are:

Certified Kubernetes Administrator (CKA)

AWS Certified DevOps Engineer

Microsoft Certified: Azure DevOps Engineer Expert

AWS Certified Cloud Practitioner

Step 6: Build a strong professional network:

Networking is one of the most important parts of becoming a DevOps Engineer. You can join online communities, attend conferences, join webinars and connect with other professionals in the field. This will help you stay up-to-date with the latest developments and also help you find job opportunities and success.

Conclusion:

By following these steps, you can start your journey towards a successful career in DevOps. The most important thing is to be passionate about your work and to keep learning and improving your skills. With the right skills, experience, and network, you can achieve great success in this field.


What Web Development Companies Do Differently for Fintech Clients

In the world of financial technology (fintech), innovation moves fast—but so do regulations, user expectations, and cyber threats. Building a fintech platform isn’t like building a regular business website. It requires a deeper understanding of compliance, performance, security, and user trust.

A professional Web Development Company that works with fintech clients follows a very different approach—tailoring everything from architecture to front-end design to meet the demands of the financial sector. So, what exactly do these companies do differently when working with fintech businesses?

Let’s break it down.

1. They Prioritize Security at Every Layer

Fintech platforms handle sensitive financial data—bank account details, personal identification, transaction histories, and more. A single breach can lead to massive financial and reputational damage.

That’s why development companies implement robust, multi-layered security from the ground up:

End-to-end encryption (both in transit and at rest)

Secure authentication (MFA, biometrics, or SSO)

Role-based access control (RBAC)

Real-time intrusion detection systems

Regular security audits and penetration testing

Security isn’t an afterthought—it’s embedded into every decision from architecture to deployment.
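Of the controls listed above, role-based access control is the easiest to show in a few lines. The sketch below is a minimal illustration; the role names and permission strings are hypothetical, and a production system would load them from a policy store instead of hard-coding them.

```python
# Hypothetical roles and permissions for a fintech-style application.
ROLE_PERMISSIONS = {
    "customer": {"view_own_account", "create_transfer"},
    "support": {"view_own_account", "view_customer_account"},
    "auditor": {"view_customer_account", "view_audit_log"},
}


def is_allowed(roles, permission):
    """Return True if any of the user's roles grants the permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)
```

Unknown roles grant nothing, so the check fails closed, which is the safer default for financial data.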

2. They Build for Compliance and Regulation

Fintech companies must comply with strict regulatory frameworks like:

PCI-DSS for handling payment data

GDPR and CCPA for user data privacy

KYC/AML requirements for financial onboarding

SOX, SOC 2, and more for enterprise-level platforms

Development teams work closely with compliance officers to ensure:

Data retention and consent mechanisms are implemented

Audit logs are stored securely and access-controlled

Reporting tools are available to meet regulatory checks

APIs and third-party tools also meet compliance standards

This legal alignment ensures the platform is launch-ready—not legally exposed.

3. They Design with User Trust in Mind

For fintech apps, user trust is everything. If your interface feels unsafe or confusing, users won’t even enter their phone number—let alone their banking details.

Fintech-focused development teams create clean, intuitive interfaces that:

Highlight transparency (e.g., fees, transaction histories)

Minimize cognitive load during onboarding

Offer instant confirmations and reassuring microinteractions

Use verified badges, secure design patterns, and trust signals

Every interaction is designed to build confidence and reduce friction.

4. They Optimize for Real-Time Performance

Fintech platforms often deal with real-time transactions—stock trading, payments, lending, crypto exchanges, etc. Slow performance or downtime isn’t just frustrating; it can cost users real money.

Agencies build highly responsive systems by:

Using event-driven architectures with real-time data flows

Integrating WebSockets for live updates (e.g., price changes)

Scaling via cloud-native infrastructure like AWS Lambda or Kubernetes

Leveraging CDNs and edge computing for global delivery

Performance is monitored continuously to ensure sub-second response times—even under load.

5. They Integrate Secure, Scalable APIs

APIs are the backbone of fintech platforms—from payment gateways to credit scoring services, loan underwriting, KYC checks, and more.

Web development companies build secure, scalable API layers that:

Authenticate via OAuth2 or JWT

Throttle requests to prevent abuse

Log every call for auditing and debugging

Easily plug into services like Plaid, Razorpay, Stripe, or banking APIs

They also document everything clearly for internal use or third-party developers who may build on top of your platform.
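Request throttling, mentioned in the list above, is often implemented with a token bucket. The post does not name an algorithm, so the choice of token bucket here is an assumption, as are the class and parameter names; the sketch accepts an injectable clock so its behaviour can be tested deterministically.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity` requests, refilled at `rate` per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self):
        """Consume one token if available; return whether the call may proceed."""
        now = self.clock()
        elapsed = now - self.updated
        self.updated = now
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

An API layer would typically keep one bucket per client or API key and reject a request with a "too many requests" response when allow() returns False.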

6. They Embrace Modular, Scalable Architecture

Fintech platforms evolve fast. New features—loan calculators, financial dashboards, user wallets—need to be rolled out frequently without breaking the system.

That’s why agencies use modular architecture principles:

Microservices for independent functionality

Scalable front-end frameworks (React, Angular)

Database sharding for performance at scale

Containerization (e.g., Docker) for easy deployment

This allows features to be developed, tested, and launched independently, enabling faster iteration and innovation.
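
To make the database sharding idea above concrete, here is a small sketch of hash-based shard routing. The function name and the shard count are illustrative assumptions; real deployments often prefer consistent hashing, because plain modulo routing moves most keys when the number of shards changes.

```python
import hashlib


def shard_for(key: str, shard_count: int) -> int:
    """Map a record key to a shard index in the range [0, shard_count)."""
    # A cryptographic hash gives a stable, evenly spread value,
    # unlike Python's built-in hash(), which is salted per process.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


# Hypothetical usage: route a user's wallet rows to one of four shards.
shard_index = shard_for("user-42", 4)
```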

7. They Build for Cross-Platform Access

Fintech users interact through mobile apps, web portals, embedded widgets, and sometimes even smartwatches. Development companies ensure consistent experiences across all platforms.

They use:

Responsive design with mobile-first approaches

Progressive Web Apps (PWAs) for fast, installable web portals

API-first design for reuse across multiple front-ends

Accessibility features (WCAG compliance) to serve all user groups

Cross-platform readiness expands your market and supports omnichannel experiences.

Conclusion

Fintech development is not just about great design or clean code—it’s about precision, trust, compliance, and performance. From data encryption and real-time APIs to regulatory compliance and user-centric UI, the stakes are much higher than in a standard website build.

That’s why working with a Web Development Company that understands the unique challenges of the financial sector is essential. With the right partner, you get more than a website—you get a secure, scalable, and regulation-ready platform built for real growth in a high-stakes industry.


CNAPP Explained: The Smartest Way to Secure Cloud-Native Apps with EDSPL

Introduction: The New Era of Cloud-Native Apps

Cloud-native applications are rewriting the rules of how we build, scale, and secure digital products. Designed for agility and rapid innovation, these apps demand security strategies that are just as fast and flexible. That’s where CNAPP—Cloud-Native Application Protection Platform—comes in.

But simply deploying CNAPP isn’t enough.

You need the right strategy, the right partner, and the right security intelligence. That’s where EDSPL shines.

What is CNAPP? (And Why Your Business Needs It)

CNAPP stands for Cloud-Native Application Protection Platform, a unified framework that protects cloud-native apps throughout their lifecycle—from development to production and beyond.

Instead of relying on fragmented tools, CNAPP combines multiple security services into a cohesive solution:

Cloud Security

Vulnerability management

Identity access control

Runtime protection

DevSecOps enablement

In short, it covers the full spectrum—from your code to your container, from your workload to your network security.

Why Traditional Security Isn’t Enough Anymore

The old way of securing applications with perimeter-based tools and manual checks doesn’t work for cloud-native environments. Here’s why:

Infrastructure is dynamic (containers, microservices, serverless)

Deployments are continuous

Apps run across multiple platforms

You need security that is cloud-aware, automated, and context-rich—all things that CNAPP and EDSPL’s services deliver together.

Core Components of CNAPP

Let’s break down the core capabilities of CNAPP and how EDSPL customizes them for your business:

1. Cloud Security Posture Management (CSPM)

Checks your cloud infrastructure for misconfigurations and compliance gaps.

See how EDSPL handles cloud security with automated policy enforcement and real-time visibility.
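
At its simplest, a posture check is a set of rules evaluated against an inventory of cloud resources. The sketch below assumes a made-up resource schema (type, name, public_read, source, port) purely for illustration; it does not reflect any specific CNAPP product or cloud provider API.

```python
def find_misconfigurations(resources):
    """Return (resource name, finding) pairs for two example rules."""
    findings = []
    for res in resources:
        # Rule 1: storage buckets should not be world-readable.
        if res.get("type") == "bucket" and res.get("public_read"):
            findings.append((res["name"], "bucket allows public read"))
        # Rule 2: SSH should not be reachable from any address.
        if (
            res.get("type") == "firewall_rule"
            and res.get("source") == "0.0.0.0/0"
            and res.get("port") == 22
        ):
            findings.append((res["name"], "SSH open to the internet"))
    return findings


# Hypothetical inventory used only to exercise the rules.
inventory = [
    {"type": "bucket", "name": "reports", "public_read": True},
    {"type": "bucket", "name": "backups", "public_read": False},
    {"type": "firewall_rule", "name": "allow-ssh", "source": "0.0.0.0/0", "port": 22},
]
findings = find_misconfigurations(inventory)
```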

2. Cloud Workload Protection Platform (CWPP)

Protects virtual machines, containers, and functions from attacks.

This includes deep integration with application security layers to scan, detect, and fix risks before deployment.

3. Cloud Infrastructure Entitlement Management (CIEM)

Monitors access rights and roles across multi-cloud environments.

Your network, routing, and storage environments are covered with strict permission models.

4. DevSecOps Integration

CNAPP shifts security left—early into the DevOps cycle. EDSPL’s managed services ensure security tools are embedded directly into your CI/CD pipelines.

5. Kubernetes and Container Security

Containers need runtime defense. Our approach ensures zero-day protection within compute environments and dynamic clusters.

How EDSPL Tailors CNAPP for Real-World Environments

Every organization’s tech stack is unique. That’s why EDSPL never takes a one-size-fits-all approach. We customize CNAPP for your:

Cloud provider setup

Mobility strategy

Data center switching

Backup architecture

Storage preferences

This ensures your entire digital ecosystem is secure, streamlined, and scalable.

Case Study: CNAPP in Action with EDSPL

The Challenge

A fintech company using a hybrid cloud setup faced:

Misconfigured services

Shadow admin accounts

Poor visibility across Kubernetes

EDSPL’s Solution

Integrated CNAPP with CIEM + CSPM

Hardened their routing infrastructure

Applied real-time runtime policies at the node level

The Results

75% drop in vulnerabilities

Improved time to resolution by 4x

Full compliance with ISO, SOC2, and GDPR

Why EDSPL’s CNAPP Stands Out

While most providers stop at integration, EDSPL goes beyond:

🔹 End-to-End Security: From app code to switching hardware, every layer is secured.

🔹 Proactive Threat Detection: Real-time alerts and behavior analytics.

🔹 Customizable Dashboards: Unified views tailored to your team.

🔹 24x7 SOC Support: With expert incident response.

🔹 Future-Proofing: Our background vision keeps you ready for what’s next.

EDSPL’s Broader Capabilities: CNAPP and Beyond

While CNAPP is essential, your digital ecosystem needs full-stack protection. EDSPL offers:

Network security

Application security

Switching and routing solutions

Storage and backup services

Mobility and remote access optimization

Managed and maintenance services for 24x7 support

Whether you’re building apps, protecting data, or scaling globally, we help you do it securely.

Let’s Talk CNAPP

You’ve read the what, why, and how of CNAPP — now it’s time to act.

📩 Reach us for a free CNAPP consultation.

📞 Or get in touch with our cloud security specialists now.

Secure your cloud-native future with EDSPL — because prevention is always smarter than cure.


Enterprise Kubernetes Storage with Red Hat OpenShift Data Foundation (DO370)

In the era of cloud-native transformation, data is the fuel powering everything from mission-critical enterprise apps to real-time analytics platforms. However, as Kubernetes adoption grows, many organizations face a new set of challenges: how to manage persistent storage efficiently, reliably, and securely across distributed environments.

To solve this, Red Hat OpenShift Data Foundation (ODF) emerges as a powerful solution — and the DO370 training course is designed to equip professionals with the skills to deploy and manage this enterprise-grade storage platform.

🔍 What is Red Hat OpenShift Data Foundation?

OpenShift Data Foundation is an integrated, software-defined storage solution that delivers scalable, resilient, and cloud-native storage for Kubernetes workloads. Built on Ceph and Rook, ODF supports block, file, and object storage within OpenShift, making it an ideal choice for stateful applications like databases, CI/CD systems, AI/ML pipelines, and analytics engines.

🎯 Why Learn DO370?

The DO370: Red Hat OpenShift Data Foundation course is specifically designed for storage administrators, infrastructure architects, and OpenShift professionals who want to:

✅ Deploy ODF on OpenShift clusters using best practices.

✅ Understand the architecture and internal components of Ceph-based storage.

✅ Manage persistent volumes (PVs), storage classes, and dynamic provisioning.

✅ Monitor, scale, and secure Kubernetes storage environments.

✅ Troubleshoot common storage-related issues in production.

🛠️ Key Features of ODF for Enterprise Workloads

1. Unified Storage (Block, File, Object)

Eliminate silos with a single platform that supports diverse workloads.

2. High Availability & Resilience

ODF is designed for fault tolerance and self-healing, ensuring business continuity.

3. Integrated with OpenShift

Full integration with the OpenShift Console, Operators, and CLI for seamless Day 1 and Day 2 operations.

4. Dynamic Provisioning

Simplifies persistent storage allocation, reducing manual intervention.
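
With dynamic provisioning, an application only declares a PersistentVolumeClaim and the storage class creates the volume on demand. The sketch below builds such a claim as a Python dictionary and serializes it to JSON, which Kubernetes tooling accepts alongside YAML. The claim name, size, and storage class name are assumptions for illustration; check which classes your cluster actually exposes (for example with oc get storageclass) before using one.

```python
import json


def build_pvc(name: str, size: str, storage_class: str) -> dict:
    """Return a PersistentVolumeClaim manifest as a plain dictionary."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


# The claim name and storage class below are illustrative assumptions.
pvc = build_pvc("postgres-data", "10Gi", "ocs-storagecluster-ceph-rbd")
manifest_json = json.dumps(pvc, indent=2)
```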

5. Multi-Cloud & Hybrid Cloud Ready

Store and manage data across on-prem, public cloud, and edge environments.

📘 What You Will Learn in DO370

Installing and configuring ODF in an OpenShift environment.

Creating and managing storage resources using the OpenShift Console and CLI.

Implementing security and encryption for data at rest.

Monitoring ODF health with Prometheus and Grafana.

Scaling the storage cluster to meet growing demands.

🧠 Real-World Use Cases

Databases: PostgreSQL, MySQL, MongoDB with persistent volumes.

CI/CD: Jenkins with persistent pipelines and storage for artifacts.

AI/ML: Store and manage large datasets for training models.

Kafka & Logging: High-throughput storage for real-time data ingestion.

👨‍🏫 Who Should Enroll?

This course is ideal for:

Storage Administrators

Kubernetes Engineers

DevOps & SRE teams

Enterprise Architects

OpenShift Administrators aiming to become RHCA in Infrastructure or OpenShift

🚀 Takeaway

If you’re serious about building resilient, performant, and scalable storage for your Kubernetes applications, DO370 is the must-have training. With ODF becoming a core component of modern OpenShift deployments, understanding it deeply positions you as a valuable asset in any hybrid cloud team.

🧭 Ready to transform your Kubernetes storage strategy? Enroll in DO370 and master Red Hat OpenShift Data Foundation today with HawkStack Technologies, your trusted Red Hat Certified Training Partner. For more details, visit www.hawkstack.com


Containerization and Test Automation Strategies

Containerization is revolutionizing how software is developed, tested, and deployed. It allows QA teams to build consistent, scalable, and isolated environments for testing across platforms. When paired with test automation, containerization becomes a powerful tool for enhancing speed, accuracy, and reliability. Genqe plays a vital role in this transformation.

What is Containerization?

Containerization is a lightweight virtualization method that packages software code and its dependencies into containers. These containers run consistently across different computing environments. This consistency makes it easier to manage environments during testing. Tools like Genqe automate testing inside containers to maximize efficiency and repeatability in QA pipelines.

Benefits of Containerization

Containerization provides numerous benefits like rapid test setup, consistent environments, and better resource utilization. Containers reduce conflicts between environments, speeding up the QA cycle. Genqe supports container-based automation, enabling testers to deploy faster, scale better, and identify issues in isolated, reproducible testing conditions.

Containerization and Test Automation

Containerization complements test automation by offering isolated, predictable environments. It allows tests to be executed consistently across various platforms and stages. With Genqe, automated test scripts can be executed inside containers, enhancing test coverage, minimizing flakiness, and improving confidence in the release process.

Effective Testing Strategies in Containerized Environments

To test effectively in containers, focus on statelessness, fast test execution, and infrastructure-as-code. Adopt microservice testing patterns and parallel execution. Genqe enables test suites to be orchestrated and monitored across containers, ensuring optimized resource usage and continuous feedback throughout the development cycle.

Implementing a Containerized Test Automation Strategy

Start with containerizing your application and test tools. Integrate your CI/CD pipelines to trigger tests inside containers. Use orchestration tools like Docker Compose or Kubernetes. Genqe simplifies this with container-native automation support, ensuring smooth setup, execution, and scaling of test cases in real-time.
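
A CI job that runs tests inside a container often comes down to assembling a docker run command. The sketch below only builds the argument list and does not execute it; the image name myapp-tests:latest and the pytest command are placeholders, not anything prescribed by Genqe or by this article.

```python
import shlex


def docker_test_command(image: str, test_cmd: list, workdir: str = "/app") -> list:
    """Build (but do not execute) a `docker run` invocation for one test run."""
    return [
        "docker", "run",
        "--rm",                # remove the container once the tests finish
        "--workdir", workdir,  # directory inside the container to run from
        image,
        *test_cmd,
    ]


# Placeholder image and test command, for illustration only.
cmd = docker_test_command("myapp-tests:latest", ["pytest", "-q"])
printable = shlex.join(cmd)  # shell-safe string, useful for CI logs
```

In a pipeline step, the list could be passed to subprocess.run(cmd, check=True) so that a failing test run fails the build.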

Best Approaches for Testing Software in Containers

Use service virtualization, parallel testing, and network simulation to reflect production-like environments. Ensure containers are short-lived and stateless. With Genqe, testers can pre-configure environments, manage dependencies, and run comprehensive test suites that validate both functionality and performance under containerized conditions.

Common Challenges and Solutions

Testing in containers presents challenges like data persistence, debugging, and inter-container communication. Solutions include using volume mounts, logging tools, and health checks. Genqe addresses these by offering detailed reporting, real-time monitoring, and support for mocking and service stubs inside containers, easing test maintenance.
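
Health checks matter because a test suite that starts before its dependent containers are ready tends to produce flaky failures. Here is a minimal polling sketch; the check callable is supplied by the caller (for instance, a function that probes a service port), and the attempt count and delay are arbitrary defaults chosen for the example.

```python
import time


def wait_until_healthy(check, attempts: int = 5, delay: float = 0.5, sleep=time.sleep) -> bool:
    """Call `check` until it returns True or the attempts run out."""
    for attempt in range(attempts):
        if check():
            return True
        # Pause between attempts, but not after the final one.
        if attempt < attempts - 1:
            sleep(delay)
    return False
```

Tests would start only once wait_until_healthy returns True, and the run can fail fast with a clear message when it returns False.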

Advantages of Genqe in a Containerized World

Genqe enhances containerized testing by providing scalable test execution, seamless integration with Docker/Kubernetes, and cloud-native automation capabilities. It ensures faster feedback, better test reliability, and simplified environment management. Genqe’s platform enables efficient orchestration of parallel and distributed test cases inside containerized infrastructures.

Conclusion

Containerization, when combined with automated testing, empowers modern QA teams to test faster and more reliably. With tools like Genqe, teams can embrace DevOps practices and deliver high-quality software consistently. The future of testing is containerized, scalable, and automated, and Genqe is leading the way.


Boost Business Agility with DevOps Consulting and Azure DevOps Services.

In today’s competitive digital world, companies need to deliver high-quality software quickly and reliably, with minimal risk. At CloudKodeForm Technologies, our DevOps consulting aims to bring development and operations closer together. We streamline your delivery process and support your digital growth.

Why DevOps is Important

DevOps is more than a popular term. It’s a way to change how teams work by uniting development and operations with shared goals. Using automation, integrated tools, and flexible practices, businesses can speed up software releases. They also get better system stability and performance.

CloudKodeForm Technologies provides DevOps management services that help fix common development delays. From planning and building to testing and deploying, our team makes sure each part of your software cycle is fast and scalable.

Our Full DevOps Consulting Services

Our services look at your current setup, find gaps, and help you build a plan. Whether you are new to DevOps or trying to improve what you have, our experts offer:

Infrastructure as Code (IaC) setups

Automation of CI/CD pipelines

Container tools and management (Docker, Kubernetes)

Security measures integrated into workflows (DevSecOps)

Ongoing performance checks to improve results

We focus on aligning your tools, teams, and processes so you can deliver software smoothly and continuously.

DevOps Management: From Start to Finish

Once your plan is ready, your next step is flawless execution. We support you with ongoing monitoring, support, and infrastructure management. Our team helps you:

Expand your system easily

Keep applications running smoothly

Manage cloud-based software

Follow rules and security standards

Our hands-on approach keeps your system safe and working well, reducing downtime and security risks. Your users will have a better experience too.

Making Your Delivery Faster

The core of DevOps is the delivery pipeline. It automates everything from writing code to launching new features. This speeds up releases and cuts down mistakes.

At CloudKodeForm, we create pipelines for:

Continuous Integration (CI)

Continuous Delivery (CD)

Automated tests and quality checks

Deployment options like canary or blue-green releases

With a strong pipeline, you can push new features faster, respond quickly to market needs, and stay ahead of competitors.
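
A canary release sends a small, stable slice of users to the new version before everyone else. One common way to pick that slice is to hash the user ID into a bucket from 0 to 99. The sketch below illustrates that idea; the function name and percentages are assumptions, and real traffic splitting usually happens in the load balancer or service mesh rather than in application code.

```python
import hashlib


def routes_to_canary(user_id: str, canary_percent: int) -> bool:
    """Deterministically decide whether a user sees the canary version."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # stable bucket 0..99
    return bucket < canary_percent
```

Because the bucket depends only on the user ID, a user who lands in the canary at 10% stays in it as the percentage is raised, which keeps their experience consistent during the rollout.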

Azure DevOps Setup: Easy Cloud Connection

We also focus on Azure DevOps, giving you smooth connection to Microsoft’s cloud. From planning and coding to testing and releasing, Azure DevOps covers every stage.

After signing in to Azure DevOps Services, your team can:

Work together with Git and project boards

Automate builds and releases with Azure Pipelines

Manage deployments with clear dashboards

Store and access project files securely

We help you set up and manage your Azure DevOps account fast, so everything stays secure and organized.

Azure DevOps Test Plans for Better Quality

Quality matters in today’s software work. Azure DevOps Test Plans let us check your apps before they go live.

Our testing services include:

Manual and exploratory testing

Managing test cases and execution

Linking with CI/CD systems

Real-time reports and results

Using Azure DevOps Test Plans helps reduce bugs and improve app speed. It supports happier users and better performance.


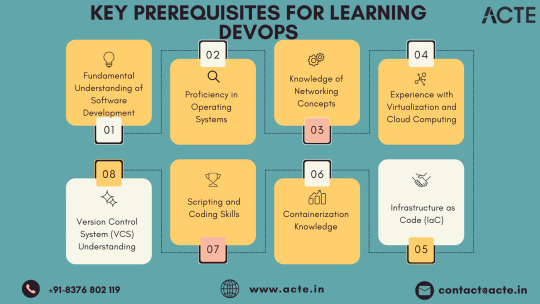

DevOps Landscape: Building Blocks for a Seamless Transition

In the dynamic realm where software development intersects with operations, the role of a DevOps professional has become instrumental. For individuals aspiring to make the leap into this dynamic field, understanding the key building blocks can set the stage for a successful transition. While there are no rigid prerequisites, acquiring foundational skills and knowledge areas becomes pivotal for thriving in a DevOps role.

1. Embracing the Essence of Software Development: At the core of DevOps lies collaboration, making it essential for individuals to have a fundamental understanding of software development processes. Proficiency in coding practices, version control, and the collaborative nature of development projects is paramount. Additionally, a solid grasp of programming languages and scripting adds a valuable dimension to one's skill set.

2. Navigating System Administration Fundamentals: DevOps success is intricately linked to a foundational understanding of system administration. This encompasses knowledge of operating systems, networks, and infrastructure components. Such familiarity empowers DevOps professionals to adeptly manage and optimize the underlying infrastructure supporting applications.

3. Mastery of Version Control Systems: Proficiency in version control systems, with Git taking a prominent role, is indispensable. Version control serves as the linchpin for efficient code collaboration, allowing teams to track changes, manage codebases, and seamlessly integrate contributions from multiple developers.

4. Scripting and Automation Proficiency: Automation is a central tenet of DevOps, emphasizing the need for scripting skills in languages like Python, Shell, or Ruby. This skill set enables individuals to automate repetitive tasks, fostering more efficient workflows within the DevOps pipeline.

5. Embracing Containerization Technologies: The widespread adoption of containerization technologies, exemplified by Docker, and orchestration tools like Kubernetes, necessitates a solid understanding. Mastery of these technologies is pivotal for creating consistent and reproducible environments, as well as managing scalable applications.

6. Unveiling CI/CD Practices: Continuous Integration and Continuous Deployment (CI/CD) practices form the beating heart of DevOps. Acquiring knowledge of CI/CD tools such as Jenkins, GitLab CI, or Travis CI is essential. This proficiency ensures the automated execution of code testing, integration, and deployment processes, streamlining development pipelines.

7. Harnessing Infrastructure as Code (IaC): Proficiency in Infrastructure as Code (IaC) tools, including Terraform or Ansible, constitutes a fundamental aspect of DevOps. IaC facilitates the codification of infrastructure, enabling the automated provisioning and management of resources while ensuring consistency across diverse environments.

8. Fostering a Collaborative Mindset: Effective communication and collaboration skills are non-negotiable in the DevOps sphere. The ability to seamlessly collaborate with cross-functional teams, spanning development, operations, and various stakeholders, lays the groundwork for a culture of collaboration essential to DevOps success.

9. Navigating Monitoring and Logging Realms: Proficiency in monitoring tools such as Prometheus and log analysis tools like the ELK stack is indispensable for maintaining application health. Proactive monitoring equips teams to identify issues in real-time and troubleshoot effectively.

10. Embracing a Continuous Learning Journey: DevOps is characterized by its dynamic nature, with new tools and practices continually emerging. A commitment to continuous learning and adaptability to emerging technologies is a fundamental trait for success in the ever-evolving field of DevOps.

In summary, while the transition to a DevOps role may not have rigid prerequisites, the acquisition of these foundational skills and knowledge areas becomes the bedrock for a successful journey. DevOps transcends being a mere set of practices; it embodies a cultural shift driven by collaboration, automation, and an unwavering commitment to continuous improvement. By embracing these essential building blocks, individuals can navigate their DevOps journey with confidence and competence.


Best Software Development Company in Chennai | Leading Software Solutions

When searching for the best software development company in Chennai, businesses of all sizes look for a partner who combines technical expertise, a customer-centric approach, and proven delivery. A leading Software Development Company in Chennai offers end-to-end solutions—from ideation and design to development, testing, deployment, and maintenance—ensuring your software is scalable, secure, and aligned with your strategic goals.

Why Choose the Best Software Development Company in Chennai?

Local Expertise, Global Standards: Chennai has emerged as a thriving IT hub, home to talented engineers fluent in cutting-edge technologies. By selecting the best software development company in Chennai, you tap into deep local expertise guided by global best practices, ensuring your project stays on time and within budget.

Proven Track Record: The top Software Development Company in Chennai showcases a rich portfolio of successful projects across industries: finance, healthcare, e-commerce, education, and more. Their case studies demonstrate on-point requirements gathering, agile delivery, and robust support.

Cost-Effective Solutions: Chennai offers competitive rates without compromising quality. The best software development company in Chennai provides flexible engagement models (fixed price, time & materials, or dedicated teams) so you can choose the structure that best fits your budget and timeline.

Cultural Alignment & Communication: Teams in Chennai often work in overlapping time zones with North America, Europe, and Australia, enabling real-time collaboration. A leading Software Development Company in Chennai emphasizes transparent communication, regular status updates, and seamless integration with your in-house team.

Core Services Offered

A comprehensive Software Development Company in Chennai typically delivers:

Custom Software Development: Tailor-made applications built from the ground up to address unique business challenges, whether it’s a CRM, ERP, inventory system, or specialized B2B software.

Mobile App Development: Native and cross-platform iOS/Android apps designed for performance, usability, and engagement. Ideal for startups and enterprises aiming to reach customers on the go.

Web Application Development: Responsive, SEO-friendly, and secure web apps using frameworks like React, Angular, and Vue.js, backed by scalable back-end systems in Node.js, .NET, Java, or Python.

UI/UX Design: User-centered design that drives adoption. Wireframes, prototypes, and high-fidelity designs ensure an intuitive interface that delights end users.

Quality Assurance & Testing: Automated and manual testing (functional, performance, security, and usability) to catch defects early and deliver a product that scales under real-world conditions.

DevOps & Cloud Services: CI/CD pipelines, containerization with Docker/Kubernetes, and deployments on AWS, Azure, or Google Cloud for high availability and rapid release cycles.

Maintenance & Support: Post-launch monitoring, feature enhancements, and 24/7 support to keep your software running smoothly and securely.

The Development Process

Discovery & Planning: Workshops and stakeholder interviews to define scope, objectives, and success metrics.

Design & Prototyping: Rapid prototyping of wireframes and UI mockups for early feedback and iterative refinement.

Agile Development: Two-week sprints with sprint demos, ensuring transparency and adaptability to changing requirements.

Testing & QA: Continuous testing throughout development to catch issues early and deliver a stable release.

Deployment & Go-Live: Seamless rollout with thorough planning, user training, and post-deployment support.

Maintenance & Evolution: Ongoing enhancements, performance tuning, and security updates to keep your application competitive.

Benefits of Partnering Locally

Speedy Onboarding: Proximity to Chennai’s tech ecosystem speeds up recruitment of additional talent.

Cultural Synergy: Shared cultural context helps in understanding your business nuances faster.

Time-Zone Overlap: Real-time collaboration during key business hours reduces turnaround times.

Networking & Events: Access to local tech meetups, hackathons, and startup incubators for continuous innovation.

Conclusion

Choosing the best software development company in Chennai means entrusting your digital transformation to a partner with deep technical skills, transparent processes, and a client-first ethos. Whether you’re a startup looking to disrupt the market or a large enterprise aiming to modernize legacy systems, the right Software Development Company in Chennai will guide you from concept to success—delivering high-quality software on schedule and within budget. Start your journey today and experience why Chennai stands out as a premier destination for software development excellence.


Cloud Cost Optimization Strategies Every CTO Should Know in 2025

As organizations scale in the cloud, one challenge becomes increasingly clear: managing and optimizing cloud costs. With the promise of scalability and flexibility comes the risk of unexpected expenses, idle resources, and inefficient spending.

In 2025, cloud cost optimization is no longer just a financial concern—it’s a strategic imperative for CTOs aiming to drive innovation without draining budgets. In this blog, we’ll explore proven strategies every CTO should know to control cloud expenses while maintaining performance and agility.

🧾 The Cost Optimization Challenge in the Cloud

The cloud offers a pay-as-you-go model, which is ideal—if you’re disciplined. However, most companies face challenges like:

Overprovisioned virtual machines

Unused storage or idle databases

Redundant services running in the background

Poor visibility into cloud usage across teams

Limited automation of cost governance

These inefficiencies lead to cloud waste, often consuming 30–40% of a company’s monthly cloud budget.

🛠️ Core Strategies for Cloud Cost Optimization

1. 📉 Right-Sizing Resources

Regularly analyze actual usage of compute and storage resources to downsize over-provisioned assets. Choose instance types or container configurations that match your workload’s true needs.
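
As a rough illustration of right-sizing, the sketch below turns a series of CPU utilization samples into a simple recommendation. The 20% and 80% thresholds are assumptions picked for the example, not provider guidance; real decisions should also weigh memory, I/O, and burst patterns.

```python
from statistics import mean


def rightsizing_hint(cpu_samples, low: float = 20.0, high: float = 80.0) -> str:
    """Suggest 'downsize', 'upsize', or 'keep' from CPU utilization percentages."""
    avg = mean(cpu_samples)
    peak = max(cpu_samples)
    if peak < low:
        # Even the busiest sample is far below capacity.
        return "downsize"
    if avg > high:
        # Sustained high utilization leaves no headroom.
        return "upsize"
    return "keep"
```

Checking the peak rather than the average before downsizing is a deliberate choice here: an instance with a low average but occasional spikes may still need its current size.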

2. ⏱️ Use Auto-Scaling and Scheduling

Enable auto-scaling to adjust resource allocation based on demand. Implement scheduling scripts or policies to shut down dev/test environments during off-hours.
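
The off-hours shutdown rule can be expressed as a small function that a scheduled job consults before starting or stopping an environment. The working window (weekdays, 08:00 to 20:00) is an assumption for illustration, and the sketch ignores time zones and holidays.

```python
from datetime import datetime


def should_run(now: datetime, start_hour: int = 8, end_hour: int = 20) -> bool:
    """Return True if a dev/test environment should be up at `now`."""
    is_weekday = now.weekday() < 5  # Monday is 0, Friday is 4
    return is_weekday and start_hour <= now.hour < end_hour
```

A scheduler would call should_run(datetime.now()) periodically and stop the environment whenever it returns False.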

3. 📦 Leverage Reserved Instances and Savings Plans

For predictable workloads, commit to Reserved Instances (RIs) or Savings Plans. These options can reduce costs by up to 70% compared to on-demand pricing.
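
Whether a commitment pays off depends on how much of the committed capacity you actually use. The sketch below compares on-demand and committed spend over one term and computes the break-even utilization; the function names and the hourly prices in the usage lines are made-up illustrations, not published rates.

```python
def commitment_savings(on_demand_hourly: float, committed_hourly: float,
                       hours_used: float, hours_in_term: float) -> float:
    """Return on-demand cost minus committed cost; positive means the commitment wins."""
    on_demand_cost = on_demand_hourly * hours_used
    # The commitment is billed for the whole term, used or not.
    committed_cost = committed_hourly * hours_in_term
    return on_demand_cost - committed_cost


def break_even_utilization(on_demand_hourly: float, committed_hourly: float) -> float:
    """Fraction of the term the workload must run for the commitment to pay off."""
    return committed_hourly / on_demand_hourly


# Hypothetical prices: 0.10 per hour on demand, 0.06 per hour committed, one-year term.
full_use = commitment_savings(0.10, 0.06, hours_used=8760, hours_in_term=8760)
half_use = commitment_savings(0.10, 0.06, hours_used=4380, hours_in_term=8760)
threshold = break_even_utilization(0.10, 0.06)
```

With these example prices, the commitment only saves money when the workload runs for more than about 60% of the term, which is why it suits predictable workloads.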

4. 🚫 Eliminate Orphaned Resources

Track down unused volumes, unattached IPs, idle load balancers, or stopped instances that still incur charges.

5. 💼 Centralized Cost Management

Use tools like AWS Cost Explorer, Azure Cost Management, or Google’s Billing Reports to monitor, allocate, and forecast cloud spend. Consolidate billing across accounts for better control.

🔐 Governance and Cost Policies

✅ Tag Everything

Apply consistent tagging (e.g., environment:dev, owner:teamA) to group and track costs effectively.

✅ Set Budgets and Alerts

Configure budget thresholds and set up alerts when approaching limits. Enable anomaly detection for cost spikes.
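
A budget alert is essentially a comparison of spend so far against a set of thresholds. The sketch below returns every threshold that has been crossed; the 50%, 80%, and 100% levels are assumed defaults for illustration, and managed budget tools in each cloud provide their own configuration for this.

```python
def crossed_thresholds(spend: float, budget: float, thresholds=(0.5, 0.8, 1.0)):
    """Return the budget fractions that current spend has reached or exceeded."""
    used = spend / budget
    return [t for t in thresholds if used >= t]
```

For example, with a budget of 1000 and spend of 850, the 50% and 80% alerts would fire while the 100% alert would not.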

✅ Enforce Role-Based Access Control (RBAC)

Restrict who can provision expensive resources. Apply cost guardrails via service control policies (SCPs).

✅ Use Cost Allocation Reports

Assign and report costs by team, application, or business unit to drive accountability.
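
Consistent tags are what make allocation reports possible. The sketch below groups billing line items by an owner tag and collects anything untagged into its own bucket; the line-item shape (a cost plus a tags dictionary) is a simplified assumption and not the export format of any specific provider.

```python
from collections import defaultdict


def allocate_costs(line_items, tag_key: str = "owner"):
    """Sum costs per tag value; items missing the tag go under 'untagged'."""
    totals = defaultdict(float)
    for item in line_items:
        owner = item.get("tags", {}).get(tag_key, "untagged")
        totals[owner] += item["cost"]
    return dict(totals)


# Hypothetical billing rows used only to demonstrate the grouping.
report = allocate_costs([
    {"cost": 10.0, "tags": {"owner": "teamA", "environment": "dev"}},
    {"cost": 5.0, "tags": {"owner": "teamA", "environment": "prod"}},
    {"cost": 2.5},
])
```

A large untagged total is itself a useful signal, since it shows how much spend cannot yet be attributed to a team.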

📊 Tools to Empower Cost Optimization

Here are some top tools every CTO should consider integrating:

Salzen Cloud: Offers unified dashboards, usage insights, and AI-based optimization recommendations

CloudHealth by VMware: Cost governance, forecasting, and optimization in multi-cloud setups

Apptio Cloudability: Cloud financial management platform for enterprise-level cost allocation

Kubecost: Cost visibility and insights for Kubernetes environments

AWS Trusted Advisor / Azure Advisor / GCP Recommender: Native cloud tools to recommend cost-saving actions

🧠 Advanced Tips for 2025

🔁 Adopt FinOps Culture

Build a cross-functional team (engineering + finance + ops) to drive cloud financial accountability. Make cost discussions part of sprint planning and retrospectives.

☁️ Optimize Multi-Cloud and Hybrid Environments

Use abstraction and management layers to compare pricing models and shift workloads to more cost-effective providers.

🔄 Automate with Infrastructure as Code (IaC)

Define auto-scaling, backup, and shutdown schedules in code. Automation reduces human error and enforces consistency.

🚀 How Salzen Cloud Helps

At Salzen Cloud, we help CTOs and engineering leaders:

Monitor multi-cloud usage in real-time

Identify idle resources and right-size infrastructure

Predict usage trends with AI/ML-based models

Set cost thresholds and auto-trigger alerts

Automate cost-saving actions through CI/CD pipelines and Infrastructure as Code

With Salzen Cloud, optimization is not a one-time event—it’s a continuous, intelligent process integrated into every stage of the cloud lifecycle.

✅ Final Thoughts

Cloud cost optimization is not just about cutting expenses—it's about maximizing value. With the right tools, practices, and mindset, CTOs can strike the perfect balance between performance, scalability, and efficiency.

In 2025 and beyond, the most successful cloud leaders will be those who innovate smartly—without overspending.


Powering Progress – Why an IT Solutions Company India Should Be Your Technology Partner

In today’s hyper‑connected world, agile technology is the backbone of every successful enterprise. From cloud migrations to cybersecurity fortresses, an IT Solutions Company India has become the go‑to partner for businesses of every size. India’s IT sector, now worth over USD 250 billion, delivers world‑class solutions at unmatched value, helping startups and Fortune 500 firms alike turn bold ideas into reality.

1 | A Legacy of Tech Excellence

The meteoric growth of the Indian IT industry traces back to the early 1990s when reform policies sparked global outsourcing. Three decades later, an IT Solutions Company India is no longer a mere offshore vendor but a full‑stack innovation hub. Indian engineers lead global code commits on GitHub, contribute to Kubernetes and TensorFlow, and spearhead R&D in AI, blockchain, and IoT.

2 | Comprehensive Service Portfolio

Your business can tap into an integrated bouquet of services without juggling multiple vendors:

Custom Software Development – Agile sprints, DevOps pipelines, and rigorous QA cycles ensure robust, scalable products.

Cloud & DevOps – Migrate legacy workloads to AWS, Azure, or GCP and automate deployments with Jenkins, Docker, and Kubernetes.

Cybersecurity & Compliance – An IT Solutions Company India hardens your defenses and helps you meet standards and regulations such as SOC 2, ISO 27001, and GDPR.

Data Analytics & AI – Transform raw data into actionable insights using ML algorithms, predictive analytics, and BI dashboards.

Managed IT Services – 24×7 monitoring, incident response, and helpdesk support slash downtime and boost productivity.

3 | Why India Wins on the Global Stage

Talent Pool – Over four million skilled technologists graduate each year.

Cost Efficiency – Competitive rates without compromising quality.

Time‑Zone Advantage – Overlapping work windows enable real‑time collaboration with APAC, EMEA, and the Americas.

Innovation Culture – Government initiatives like “Digital India” and “Startup India” fuel continuous R&D.

Proven Track Record – Case studies show a 40‑60 % reduction in TCO after partnering with an IT Solutions Company India.

4 | Success Story Snapshot

A U.S. healthcare startup needed HIPAA‑compliant telemedicine software within six months. Partnering with an IT Solutions Company India, they:

Deployed a microservices architecture on AWS using Terraform

Integrated real‑time video via WebRTC with 99.9 % uptime

Achieved HIPAA compliance in the first audit cycle

The result? A 3× increase in user adoption and Series B funding secured in record time.

5 | Engagement Models to Fit Every Need

Dedicated Development Team – Ideal for long‑term projects needing continuous innovation.

Fixed‑Scope, Fixed‑Price – Best for clearly defined deliverables and budgets.

Time & Material – Flexibility for evolving requirements and rapid pivots.

6 | Future‑Proofing Your Business

Technologies like edge AI, quantum computing, and 6G will reshape industries. By aligning with an IT Solutions Company India, you gain a strategic partner who anticipates disruptions and prototypes tomorrow’s solutions today.

7 | Call to Action

Ready to accelerate digital transformation? Choose an IT Solutions Company India that speaks the language of innovation, agility, and ROI. Schedule a free consultation and turn your tech vision into a competitive edge.

Plot No 9, Sarwauttam Complex, Manwakheda Road, Anand Vihar, Behind Vaishali Apartment, Sector 4, Hiran Magri, Udaipur, Udaipur, Rajasthan 313002

Text

🌐 DevOps with AWS – Learn from the Best! 🚀

Kickstart your tech journey with our hands-on DevOps with AWS training program led by expert Mr. Ram, starting 23rd June at 7:30 AM (IST). Whether you're an aspiring DevOps engineer or an IT enthusiast looking to upskill, this course is your gateway to mastering modern software delivery pipelines.

💡 Why DevOps with AWS?

In today's tech-driven world, companies demand faster deployments, better scalability, and secure infrastructure. This course combines core DevOps practices with the powerful cloud platform AWS, giving you the edge in a competitive market.

📘 What You’ll Learn:

CI/CD Pipeline with Jenkins

Version Control using Git & GitHub

Docker & Kubernetes for containerization

Infrastructure as Code with Terraform

AWS services for DevOps: EC2, S3, IAM, Lambda & more

Real-time projects with monitoring & alerting tools

📌 Register here: https://tr.ee/3L50Dt

🔍 Explore More Free Courses: https://linktr.ee/ITcoursesFreeDemos

Be future-ready with Naresh i Technologies – where expert mentors and project-based learning meet career transformation. Don’t miss this opportunity to build smart, deploy faster, and grow your DevOps career.

#DevOps#AWS#DevOpsEngineer#NareshIT#CloudComputing#CI_CD#Jenkins#Docker#Kubernetes#Terraform#OnlineLearning#CareerGrowth

Text

Developing and Deploying AI/ML Applications on Red Hat OpenShift AI (AI268)

As AI and Machine Learning continue to reshape industries, the need for scalable, secure, and efficient platforms to build and deploy these workloads is more critical than ever. That’s where Red Hat OpenShift AI comes in—a powerful solution designed to operationalize AI/ML at scale across hybrid and multicloud environments.

With the AI268 course – Developing and Deploying AI/ML Applications on Red Hat OpenShift AI – developers, data scientists, and IT professionals can learn to build intelligent applications using enterprise-grade tools and MLOps practices on a container-based platform.

🌟 What is Red Hat OpenShift AI?

Red Hat OpenShift AI (formerly Red Hat OpenShift Data Science) is a comprehensive, Kubernetes-native platform tailored for developing, training, testing, and deploying machine learning models in a consistent and governed way. It provides tools like:

Jupyter Notebooks

TensorFlow, PyTorch, Scikit-learn

Apache Spark

KServe & OpenVINO for inference

Pipelines & GitOps for MLOps

The platform ensures seamless collaboration between data scientists, ML engineers, and developers—without the overhead of managing infrastructure.

📘 Course Overview: What You’ll Learn in AI268

AI268 focuses on equipping learners with hands-on skills in designing, developing, and deploying AI/ML workloads on Red Hat OpenShift AI. Here’s a quick snapshot of the course outcomes:

✅ 1. Explore OpenShift AI Components

Understand the ecosystem—JupyterHub, Pipelines, Model Serving, GPU support, and the OperatorHub.

✅ 2. Data Science Workspaces

Set up and manage development environments using Jupyter notebooks integrated with OpenShift’s security and scalability features.

✅ 3. Training and Managing Models

Use libraries like PyTorch or Scikit-learn to train models. Learn to leverage pipelines for versioning and reproducibility.
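
One way to think about versioning and reproducibility is to give every training run an ID derived from its inputs. The sketch below is a plain-Python illustration of that idea, not a feature of OpenShift Pipelines; the `run_fingerprint` helper, the hyperparameter names, and the tiny inline dataset are all hypothetical.

```python
import hashlib
import json


def run_fingerprint(params: dict, data: bytes) -> str:
    """Derive a short, deterministic ID from hyperparameters and training data."""
    # Sorting keys makes the ID independent of dictionary ordering.
    canonical = json.dumps(params, sort_keys=True).encode("utf-8")
    digest = hashlib.sha256()
    digest.update(canonical)
    digest.update(hashlib.sha256(data).digest())
    return digest.hexdigest()[:12]


params = {"learning_rate": 0.01, "epochs": 20}   # hypothetical hyperparameters
data = b"feature1,feature2,label\n0.1,0.7,1\n"    # stand-in for a dataset file

run_a = run_fingerprint(params, data)
run_b = run_fingerprint({"epochs": 20, "learning_rate": 0.01}, data)
run_c = run_fingerprint({**params, "epochs": 21}, data)
print(run_a == run_b)  # same inputs, different key order
print(run_a == run_c)  # one hyperparameter changed
```

Tagging model artifacts with an ID like this makes it easier to tell whether two runs really used the same configuration and data.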

✅ 4. MLOps Integration

Implement CI/CD for ML using OpenShift Pipelines and GitOps to manage lifecycle workflows across environments.

✅ 5. Model Deployment and Inference

Serve models using tools like KServe, automate inference pipelines, and monitor performance in real-time.
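
As a rough sketch of what calling a served model can look like, the snippet below builds a prediction request in the shape of KServe's V1 inference protocol (a POST to `/v1/models/<name>:predict` with an `instances` list). The host, the model name `fraud-detector`, and the feature values are placeholders, and the request is only constructed here, not sent. Depending on the serving runtime, a deployment may expose the V2 protocol instead, and the URL and authentication will differ.

```python
import json
from urllib.request import Request


def build_predict_request(base_url: str, model_name: str, instances: list) -> Request:
    """Build (but do not send) a V1-protocol prediction request."""
    url = f"{base_url.rstrip('/')}/v1/models/{model_name}:predict"
    body = json.dumps({"instances": instances}).encode("utf-8")
    return Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder host and model name, used for illustration only.
req = build_predict_request(
    "https://models.example.com", "fraud-detector", [[0.1, 0.7, 3.0]]
)
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))
```

Sending the request (for example with `urllib.request.urlopen`) would return a JSON body with a `predictions` field under the V1 protocol.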

🧠 Why Take This Course?

Whether you're a data scientist looking to deploy models into production or a developer aiming to integrate AI into your apps, AI268 bridges the gap between experimentation and scalable delivery. The course is ideal for:

Data Scientists exploring enterprise deployment techniques

DevOps/MLOps Engineers automating AI pipelines

Developers integrating ML models into cloud-native applications

Architects designing AI-first enterprise solutions

🎯 Final Thoughts

AI/ML is no longer confined to research labs—it’s at the core of digital transformation across sectors. With Red Hat OpenShift AI, you get an enterprise-ready MLOps platform that lets you go from notebook to production with confidence.

If you're looking to modernize your AI/ML strategy and unlock true operational value, AI268 is your launchpad.

👉 Ready to build and deploy smarter, faster, and at scale? Join the AI268 course and start your journey into Enterprise AI with Red Hat OpenShift.

For more details, visit www.hawkstack.com
