#Linear Transformation Assignment Help



Visions of Mana review

Non-spoilery thoughts:

I really like this game! I was a bit worried about combat from the demo, but I really like the combat in this game, actually. The different weapons all have their own combos and situationally helpful moves (dishing out a lot of damage, attacking in a wide area, launching enemies, staggering them, etc.), so there is quite a bit of satisfaction in learning different fighting styles for each weapon. It almost feels like a fighting game rather than an action/adventure game, which I like.

I feel like this game successfully captures a lot of recurring elements of the Mana series (multiple weapon systems, finding elemental spirits, familiar monsters and bosses, world in decline, simple environmental puzzle-solving) while also being a fresh story and world with its own rules, which is pretty ideal for me. I also think this game does a better job of merging Legend of Mana worldbuilding elements with non-Legend of Mana elements than any other Mana game, and I find that pretty impressive because the aesthetics kind of clash, in my opinion. But they did a good job of merging them here.

The characters are really fun and I like the party dialogues that happen as you go around the world. I think the game could probably use some sidequests or subplots (rather than fairly simple fetch/hunt quests) to flesh out the world a bit and give the story a bit of nonlinearity (it is *quite* linear, although this is pretty normal for the Mana series (LOM aside)...), but I am not complaining. Even though they were fairly simple, I enjoyed the quests here, and they got pretty elaborate in the end.

The progression and gradual unlock of skills that make your characters stronger is also really satisfying. I'm also a big fan of the way that they incorporated a game mechanic (random corestone drops) into the story, and I felt the corestone ability trading added a bit of depth to the gameplay.

Overall, I rate this game like 9/10. It could be better, but there is a lot here to love and the gameplay is pretty polished (game crashes a lot, though -- be sure to save frequently!).

Spoilery thoughts:

My partner keeps joking that Careena is somehow this world's only Texan. (How come her parents have no accent?!)

Magical girl transformation sequences were SUCH a good idea. I approve. (Is there a way to toggle these back on...?)

The tragedy/tragic backstories in this game are exquisite.

At the same time, I really like the humor and cuteness in this game. It's important to have both in a Mana game, I feel.

I wasn't expecting anti-capitalist propaganda in this game, haha.

I like that there's a bit of a nudge in this game about which elemental vessels to assign to which character. Like, Careena's Wind class has pretty nice passives compared to the others and same with Morley's Moon class -- kind of nudges you to start them off with those classes.

It's interesting having Niccolo in this game be such a nice guy. In most games, he's pretty sketchy and self-interested (although he is a pretty great guy in Trials of Mana too). I like that he's Morley's adoptive dad and Palamena's confidante too.

Val is the himbo we all need.

Hinna 😭 They were so ready to be happy together too. I guess Hinna dying this early means she has a reasonable chance of coming back by the end.

SOMEONE high-up in the development team definitely has a foot fetish. But I like seeing Palamena's stylish footwear so I don't mind.

I'm a big fan of Pikuls for land transport and Vuscav for sea transport. Love that we got Vuscav's theme back for this game.

Oh snap I'm in The Lion King right now. XD In general, I was a bit meh about the areas featured in the demo (Rime Falls, Fallow Steppe, and Rhata Harbor), but I've been pretty impressed by the other areas. I liked the Charred Passage, Ledgas Bay, Pritta Ridge, Illystana, Dura Gorge (love the music of this one), Deade Cliffs, and quite a lot of the dungeons.

Von Boyage / Professor Bomb is here! Great cameo.

I like how this game strikes a balance between SOM/TOM-style "there is one of each elemental spirit" and LOM/World of Mana-style "there are many spirits for each element" by having one main elemental spirit but a bunch of mini/lesser elemental spirits with a slightly different design (and they're very cute!).

I like how Khoda looks exactly halfway between Sumo/FFA protagonist and Randi. Well done.

Lol, I thought Aesh was going to be evil. I do like how he occasionally goes into "mad scientist" mode, though. The parts of the game where he has bonding time with the party were some of the best. (His initial introduction was sooo good. When you introduce your friend to your other friends and he's the worrrrst, lol. I like how Morley went from jealous of Aesh to Worried Mother Hen lol.)

Overall, I like the boss battles in this game. I like that some of them have a gimmick or puzzle-solving aspect to them, and I like that none of them dragged on too long, which is the main thing I hate about modern action RPGs. The Earth Benevodon battle in particular was a favorite -- epic! Some of the other benevodons sadly went down a bit too quickly (I kind of felt sorry for them having to fight my overleveled party XD).

Okay I guess Hinna isn't coming back. I do like the theme here of it being important to accept the death of loved ones. Ephemeral beauty i.e. things are valuable because they don't last forever. (Ever since my partner pointed out this is an extremely common theme in Japanese art, I am unable to unsee it...)

Some of these late game areas are also really beautiful. *o* I really love the floating island, the Entwine Pass boss battle with all the flowers in the background, and the lighting in the corrupted Mana Sanctuary areas.

Being able to see the previous alm contingents (including Lyza's group!) made me emotional. ;_;

Oh snap, I can't believe Passar was the big bad.

I haven't finished all the postgame content, and I'm not sure if I'll have time. I do eventually want to farm all the abilities and play around with different party setups, and I'd also like to try playing on Expert difficulty and with Japanese voices, but we'll see.

Overall, I liked the story! You didn't get Hinna back, but you did manage to fix the world, so there is still that bittersweetness that is important for a Mana game.


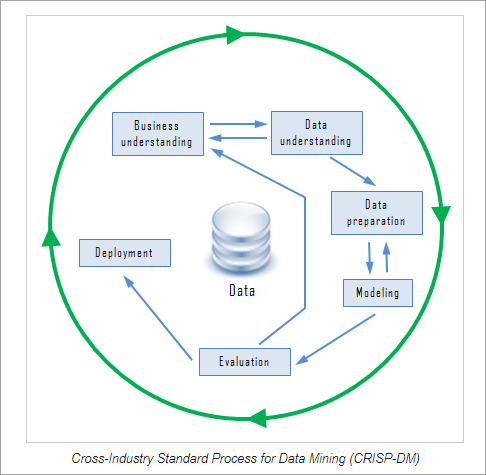

Data gathering. Relevant data for an analytics application is identified and assembled. The data may be located in different source systems, a data warehouse or a data lake, an increasingly common repository in big data environments that contains a mix of structured and unstructured data. External data sources may also be used. Wherever the data comes from, a data scientist often moves it to a data lake for the remaining steps in the process.

Data preparation. This stage includes a set of steps to get the data ready to be mined. It starts with data exploration, profiling and pre-processing, followed by data cleansing work to fix errors and other data quality issues. Data transformation is also done to make data sets consistent, unless a data scientist is looking to analyze unfiltered raw data for a particular application.
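To make the cleansing and transformation steps concrete, here is a minimal Python sketch. The records, the field names (`customer_id`, `country`, `spend`) and the country-alias mapping are hypothetical, chosen only for illustration; real pipelines often do this work with pandas or SQL rather than hand-written loops.

```python
# Hypothetical raw records from two source systems with inconsistent formats.
RAW_RECORDS = [
    {"customer_id": "001", "country": "us", "spend": "120.50"},
    {"customer_id": "002", "country": "USA", "spend": "80"},
    {"customer_id": "001", "country": "US", "spend": "120.50"},  # duplicate customer
    {"customer_id": "003", "country": "de", "spend": ""},        # missing value
]

# Assumed alias table used to make country codes consistent.
COUNTRY_ALIASES = {"usa": "US", "us": "US", "de": "DE"}


def prepare(records):
    """Cleanse and transform records: drop rows with a missing spend value,
    drop duplicate customer_ids (keeping the first), normalize country codes,
    and convert spend from text to a float."""
    seen = set()
    cleaned = []
    for rec in records:
        if not rec["spend"]:
            continue  # cleansing: skip incomplete rows
        if rec["customer_id"] in seen:
            continue  # cleansing: skip duplicates
        seen.add(rec["customer_id"])
        raw_country = rec["country"]
        country = COUNTRY_ALIASES.get(raw_country.lower(), raw_country.upper())
        cleaned.append({
            "customer_id": rec["customer_id"],
            "country": country,                # transformation: consistent codes
            "spend": float(rec["spend"]),      # transformation: consistent types
        })
    return cleaned


if __name__ == "__main__":
    for row in prepare(RAW_RECORDS):
        print(row)
```

Only customers 001 and 002 survive: 003 is dropped for its missing value and the second 001 row as a duplicate.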

Mining the data. Once the data is prepared, a data scientist chooses the appropriate data mining technique and then implements one or more algorithms to do the mining. In machine learning applications, the algorithms typically must be trained on sample data sets to look for the information being sought before they're run against the full set of data.

Data analysis and interpretation. The data mining results are used to create analytical models that can help drive decision-making and other business actions. The data scientist or another member of a data science team also must communicate the findings to business executives and users, often through data visualization and the use of data storytelling techniques.

Types of data mining techniques

Various techniques can be used to mine data for different data science applications. Pattern recognition is a common data mining use case that's enabled by multiple techniques, as is anomaly detection, which aims to identify outlier values in data sets. Popular data mining techniques include the following types:

Association rule mining. In data mining, association rules are if-then statements that identify relationships between data elements. Support and confidence criteria are used to assess the relationships: support measures how frequently the related elements appear together in a data set, while confidence measures how often the if-then statement holds, i.e., the proportion of records containing the "if" elements that also contain the "then" elements.
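As a rough illustration of the two measures, the sketch below scores a single rule over a small, made-up set of shopping-basket transactions. The item names and helper functions are hypothetical; full association rule miners such as Apriori also search for the frequent itemsets instead of scoring one rule at a time.

```python
# Hypothetical shopping-basket transactions, one set of items per basket.
TRANSACTIONS = [
    {"bread", "milk"},
    {"bread", "butter"},
    {"bread", "milk", "butter"},
    {"milk"},
    {"bread", "milk"},
]


def count(itemset, transactions):
    """Number of transactions that contain every item in itemset."""
    return sum(1 for t in transactions if itemset <= t)


def support(itemset, transactions):
    """Fraction of all transactions that contain the itemset."""
    return count(itemset, transactions) / len(transactions)


def confidence(antecedent, consequent, transactions):
    """Of the transactions containing the antecedent ("if" part), the fraction
    that also contain the consequent ("then" part)."""
    base = count(antecedent, transactions)
    if base == 0:
        return 0.0
    return count(antecedent | consequent, transactions) / base


if __name__ == "__main__":
    print(support({"bread", "milk"}, TRANSACTIONS))       # 0.6
    print(confidence({"bread"}, {"milk"}, TRANSACTIONS))  # 0.75
```

Here the rule "bread → milk" has support 3/5 = 0.6 (three of five baskets contain both items) and confidence 3/4 = 0.75 (three of the four baskets with bread also contain milk).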

Classification. This approach assigns the elements in data sets to different categories defined as part of the data mining process. Decision trees, Naive Bayes classifiers, k-nearest neighbor and logistic regression are some examples of classification methods.
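Of the classification methods listed, k-nearest neighbor is the easiest to show in a few lines: a new point gets the majority label among its k closest training points by Euclidean distance. The two-feature toy data, the `small`/`large` labels and the choice of `k=3` are hypothetical.

```python
from collections import Counter
from math import dist

# Hypothetical labeled training data: (feature tuple, category).
TRAIN = [
    ((1.0, 1.0), "small"), ((1.5, 2.0), "small"), ((2.0, 1.5), "small"),
    ((8.0, 8.0), "large"), ((8.5, 9.0), "large"), ((9.0, 8.0), "large"),
]


def knn_predict(train, query, k=3):
    """Assign query to the most common category among its k nearest
    training points (Euclidean distance)."""
    neighbors = sorted(train, key=lambda item: dist(item[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    print(knn_predict(TRAIN, (1.2, 1.4)))  # small
    print(knn_predict(TRAIN, (8.7, 8.6)))  # large
```

An odd `k` with two categories avoids ties; with more categories, a tie-breaking rule would need to be chosen explicitly.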

Clustering. In this case, data elements that share particular characteristics are grouped together into clusters as part of data mining applications. Examples include k-means clustering, hierarchical clustering and Gaussian mixture models.
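The sketch below implements the basic k-means loop (Lloyd's algorithm): assign each point to its nearest centroid, move each centroid to the mean of its members, and repeat until the centroids stop changing. Seeding with the first k points and the toy `POINTS` data are simplifying assumptions; practical implementations typically use random or k-means++ initialization with several restarts.

```python
from math import dist

# Hypothetical 2-D points forming two obvious groups.
POINTS = [(1, 1), (8, 8), (1.5, 2), (9, 8.5), (2, 1.5), (8.5, 9)]


def kmeans(points, k, iterations=100):
    """Return (centroids, clusters) after running k-means on points."""
    centroids = [tuple(p) for p in points[:k]]  # naive seeding: first k points
    clusters = []
    for _ in range(iterations):
        # Assignment step: put each point in the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for i, members in enumerate(clusters):
            if members:
                new_centroids.append(tuple(sum(c) / len(members) for c in zip(*members)))
            else:
                new_centroids.append(centroids[i])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # converged: assignments will no longer change
        centroids = new_centroids
    return centroids, clusters


if __name__ == "__main__":
    centroids, clusters = kmeans(POINTS, k=2)
    print(centroids)  # [(1.5, 1.5), (8.5, 8.5)]
```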

Regression. This is another way to find relationships in data sets, by calculating predicted data values based on a set of variables. Linear regression and multivariate regression are examples. Decision trees and some other classification methods can be used to do regressions, too.
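For the simplest case, one input variable, ordinary least squares has a closed-form solution: the slope is the covariance of x and y divided by the variance of x, and the intercept makes the line pass through the point of means. The sketch below applies that formula to hypothetical data; for multivariate regression you would normally solve the problem with a numerical library instead.

```python
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    mx, my = mean(xs), mean(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))  # covariance term
    den = sum((x - mx) ** 2 for x in xs)                    # variance term
    slope = num / den
    intercept = my - slope * mx
    return slope, intercept


# Hypothetical data generated from y = 2x + 1, so the fit should recover it.
XS = [1, 2, 3, 4, 5]
YS = [3, 5, 7, 9, 11]

if __name__ == "__main__":
    slope, intercept = fit_line(XS, YS)
    print(slope, intercept)       # 2.0 1.0
    print(slope * 6 + intercept)  # predicted value for x = 6: 13.0
```

On Python 3.10 and later, `statistics.linear_regression` computes the same simple least-squares fit.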

Data mining companies follow this procedure.

#data enrichment#data management#data entry companies#data entry#banglore#monday motivation#happy monday#data analysis#data entry services#data mining


Artificial Intelligence Course in USA: A Complete Guide to Learning AI in 2025

Artificial Intelligence (AI) is transforming the world at an unprecedented pace, powering everything from personalized recommendations to self-driving vehicles. With industries across healthcare, finance, retail, and technology investing heavily in AI, there's never been a better time to upskill in this game-changing field. And when it comes to gaining a world-class education in AI, the United States stands as a global leader.

If you're considering enrolling in an Artificial Intelligence course in the USA, this guide will walk you through the benefits, curriculum, career prospects, and how to choose the right program.

What to Expect from an AI and ML Course in the USA?

The United States is a global leader in artificial intelligence and machine learning education, offering some of the most advanced academic and industry-aligned programs in the world. Whether you study at an Ivy League university, a top engineering school, or a specialized tech institute, AI and ML courses in the USA provide a deep, hands-on learning experience that prepares students for high-impact roles in both research and industry.

Strong Theoretical and Practical Foundations

AI and ML courses in the USA typically start by grounding students in essential concepts such as supervised and unsupervised learning, neural networks, deep learning, and probabilistic models. You’ll also study mathematical foundations like linear algebra, calculus, probability, and statistics, which are critical for understanding algorithm behavior. Theoretical lectures are complemented by extensive lab work and coding assignments, ensuring you learn how to apply concepts in real-world contexts.

Advanced Tools and Programming Skills

Expect to gain hands-on experience with industry-standard tools and languages. Python is the most widely used programming language in the field, supported by libraries like TensorFlow, Keras, PyTorch, and scikit-learn. You’ll also work with data platforms, cloud services (AWS, Google Cloud), and development environments used in AI/ML production settings. Many courses involve building and training machine learning models, analyzing large datasets, and solving practical problems using algorithms you’ve coded from scratch.

Specializations and Electives

Many U.S. programs offer the flexibility to specialize in areas such as natural language processing (NLP), computer vision, robotics, reinforcement learning, or AI ethics. Depending on your interests and career goals, you can dive deeper into these subfields through elective modules or focused research projects.

Capstone Projects and Internships

Most AI and ML programs in the U.S. culminate in a capstone project, where students work individually or in teams to solve a real-world problem using the skills they’ve acquired. Many universities also have strong links to industry, offering internships with top tech firms, startups, and research labs. These experiences not only build your portfolio but also connect you with potential employers.

Career Support and Global Recognition

U.S. universities provide robust career services, including job placement support, resume workshops, interview prep, and alumni networking. A degree or certification from a respected American institution carries significant weight globally and opens doors to top employers in technology, finance, healthcare, and academia.

Who Should Take an AI Course in the USA?

AI programs are tailored for:

Students & Graduates of engineering, computer science, statistics, and math.

IT Professionals looking to pivot into AI or ML roles.

Business Analysts & Managers aiming to incorporate AI into strategic decision-making.

Entrepreneurs & Innovators seeking to build AI-powered products.

Career Switchers with analytical thinking and a desire to learn technical skills.

Some beginner-friendly courses include foundational modules that help you transition into AI—even without a tech background.

Best Learning Formats: Online, On-Campus, or Hybrid?

The USA offers flexibility in how you can learn AI:

On-Campus Courses: Perfect for full-time students or international learners wanting immersive education and networking.

Online Courses: Great for working professionals or those needing flexibility. Many top-tier programs offer live classes, project support, and global certification.

Hybrid Programs: Combine the best of both worlds—classroom learning and online flexibility.

Top Career Paths After Completing an AI Course in the USA

With AI integration across all sectors, the job market is thriving. Graduates can pursue roles such as:

AI Engineer

Machine Learning Engineer

Data Scientist

NLP Engineer

Computer Vision Specialist

AI Research Associate

Business Intelligence Analyst

AI Product Manager

Salaries for AI professionals in the U.S. typically range from $100,000 to $160,000+, depending on experience and specialization.

How to Choose the Right AI Course in the USA?

Choosing the right AI course in the USA can significantly impact your learning experience and career trajectory. With so many options available, it’s important to consider a variety of factors to ensure the program aligns with your goals, background, and aspirations. Here’s a guide on how to choose the right AI course in the USA:

1. Assess Your Skill Level and Background

Before selecting a course, evaluate your current knowledge and skill level. AI and machine learning courses often require a solid understanding of mathematics, programming, and data science. If you are a complete beginner, consider starting with introductory courses in Python, linear algebra, and basic statistics. If you already have experience in computer science or data science, you can choose more advanced programs that dive deeper into specific AI areas such as deep learning, computer vision, or natural language processing (NLP).

2. Consider Your Career Goals

AI encompasses a broad range of specializations, so it's important to align your course selection with your career aspirations. For example:

If you're interested in data science or business intelligence, look for courses that focus on machine learning algorithms, data analysis, and big data technologies.

If you're drawn to robotics or autonomous systems, seek programs that integrate robotics engineering, reinforcement learning, and sensor systems.

For those focused on AI ethics or policy-making, programs offering courses in AI governance, fairness, and privacy are essential.

3. Program Format and Flexibility

AI courses in the USA are offered in various formats:

Full-time degree programs (Master’s or Ph.D.) offer in-depth learning, access to academic research, and the possibility of becoming an AI researcher or specialist.

Part-time programs and bootcamps are ideal if you want to study while working or if you prefer a more condensed, skills-based approach.

Online courses provide flexibility and are a great choice for self-motivated learners. These programs are often more affordable and allow you to balance studies with work or other commitments.

4. Reputation of the Institution

The reputation of the institution offering the AI course is critical in determining the quality of the program. Renowned universities like MIT, Stanford University, Harvard University, and Carnegie Mellon University are famous for their AI research and robust AI programs. These institutions not only provide top-tier education but also have extensive industry connections, increasing your chances of landing internships or jobs with leading tech companies.

However, there are also reputable online platforms and bootcamps (like Udacity, Coursera, or DataCamp) that partner with top universities and offer quality AI education, often at a lower cost.

5. Curriculum and Specialization Areas

Ensure that the course you choose offers a curriculum that aligns with the areas of AI you wish to explore. Some programs may focus broadly on AI, while others offer more niche topics. Look for courses that include:

Core AI concepts: Machine learning, neural networks, reinforcement learning, etc.

Specializations: Natural language processing, computer vision, robotics, or deep learning.

Real-world projects: Hands-on experience working with datasets, building models, and solving industry problems.

6. Industry Connections and Networking Opportunities

Look for programs that provide opportunities to connect with professionals in the AI field. Networking opportunities such as guest lectures, hackathons, industry projects, and alumni networks can be invaluable. Many AI programs in the USA have partnerships with leading tech companies, offering students internships and direct exposure to real-world AI applications.

7. Cost and Financial Aid Options

AI courses, especially those at prestigious institutions, can be expensive. Ensure that you understand the tuition fees, and look for programs that offer financial aid, scholarships, or payment plans. Some online platforms also offer free courses or affordable certification programs, which can be a great way to explore AI at a lower cost before committing to a full-fledged degree program.

Final Thoughts

Choosing an Artificial Intelligence course in the USA is more than just enrolling in a program: it's stepping into the future of work. With a cutting-edge curriculum, global networking, and a robust job market, the U.S. offers the ideal environment to master AI skills and launch a high-growth career.

Whether you're looking to become a machine learning expert, develop innovative AI products, or transition into a data-driven role, the right course in the USA can set you on the path to success.

#Artificial Intelligence course in the USA#AI courses in the USA#Artificial Intelligence programs in the USA#Best AI courses USA


Maximizing Your Exercise Book: Tips for Effective Note-Taking and Organization

That unassuming notebook in your hand – the exercise book – is more than just a collection of blank pages. It's a potential powerhouse for learning, a sanctuary for ideas, and a tangible record of your intellectual journey. Whether it's a standard school exercise book, a custom-printed school exercise book, or a bespoke creation tailored to your needs, mastering the art of effective note-taking and organization within its covers can significantly enhance your understanding, retention, and overall productivity. Let's dive into practical tips to transform your exercise book into your ultimate learning companion.

Laying the Groundwork: Setting Up Your Exercise Book for Success

Before you even begin taking notes, a little preparation can go a long way in establishing a system that promotes clarity and organization.

✅ Dedicate Each Book: Assign a specific exercise book to each subject, project, or area of interest. This prevents information from becoming jumbled and makes it easier to locate specific notes later. Clearly label the cover with the subject and date range.

✅ Number Your Pages: This simple step is crucial for creating a table of contents and referencing specific information quickly. It transforms your exercise book from a linear scroll to a navigable resource.

✅ Create a Table of Contents: Leave a few pages at the beginning of each exercise book for a table of contents. As you take notes, record the date and a brief description of the topic on these pages. This acts as an index, saving you valuable time when searching for specific information.

✅ Establish a Consistent Layout: Decide on a basic structure for your notes. Will you divide the page into sections? Will you use bullet points, numbered lists, or a combination? Consistency in layout improves readability and makes it easier to scan for key information.

✅ Consider Using Dividers (Especially for Larger Bespoke Books): If you're using a larger bespoke school exercise book for multiple related topics, consider using physical dividers or simply leaving blank pages to create distinct sections. This helps to compartmentalize information within the same book.

The Art of Effective Note-Taking: Capturing Information Efficiently

Effective note-taking isn't just about transcribing everything you hear or read. It's about actively engaging with the material and capturing the essence in a way that makes sense to you.

✅ Be an Active Listener/Reader: Focus intently on the information being presented. Identify key concepts, main ideas, and supporting details. Don't just passively write; actively process and synthesize the information.

✅ Use Abbreviations and Symbols: Develop a system of abbreviations and symbols for frequently used words and concepts. This will speed up your note-taking process and allow you to capture more information. Just be sure your abbreviations are clear to you.

✅ Paraphrase and Summarize: Instead of writing down every word, paraphrase and summarize the information in your own words. This demonstrates understanding and makes the notes more meaningful when you review them later.

✅ Highlight Key Information: Use different colored pens or highlighters to emphasize important points, definitions, or formulas. This visual cue will help you quickly identify crucial information during review.

✅ Leave White Space: Don't cram your notes together. Leave white space between different ideas or sections. This makes your notes less overwhelming and provides room for adding further details or annotations later.

✅ Ask Clarifying Questions (and Note the Answers): If you're in a lecture or discussion, don't hesitate to ask clarifying questions. Be sure to note down the answers in your exercise book, as these can be crucial for your understanding.

✅ Incorporate Visuals: Don't be afraid to include diagrams, charts, or simple sketches in your notes. Visual representations can often convey information more effectively than words alone. This is where the flexibility of a blank page in a school exercise book print truly shines.

✅ Note Examples and Applications: When learning new concepts, note down relevant examples and how they can be applied in different contexts. This helps solidify your understanding and makes the information more practical.

✅ Differentiate Between Facts and Opinions: Be mindful of distinguishing between factual information and personal opinions or interpretations. Clearly label opinions if you choose to include them in your notes.

Organizing for Optimal Recall: Structuring Your Notes for Future Use

Once you've taken your notes, the work isn't over. Organizing them effectively is key to being able to retrieve and utilize the information later.

✅ Use Headings and Subheadings: Structure your notes using clear headings and subheadings to categorize information and create a logical flow. This makes it easier to scan and locate specific topics.

✅ Employ Bullet Points and Numbered Lists: These formatting tools help to break down complex information into digestible chunks and highlight key points or sequential steps.

✅ Create Connections and Mind Maps: Use arrows, lines, or mind maps to visually connect related ideas and concepts. This can help you see the bigger picture and understand the relationships between different pieces of information. Your exercise book provides the perfect blank canvas for this kind of visual organization.

✅ Regularly Review and Refine Your Notes: Make it a habit to review your notes regularly, ideally within 24 hours of taking them. This helps reinforce what you've learned and allows you to clarify any points that are unclear. You can also add further details or examples during your review.

✅ Summarize Key Concepts at the End of a Topic: After completing a section of notes, take a few moments to summarize the main concepts in your own words. This reinforces your understanding and provides a concise overview for future reference.

✅ Index Key Terms and Concepts: For more complex subjects, consider creating an index at the back of your exercise book. List key terms and the page numbers where they are discussed. This can be incredibly helpful for quick look-ups.

✅ Color-Coding for Themes or Topics: If you're using a bespoke school exercise book for a broad subject, consider using different colored pens or highlighting to denote different themes or subtopics throughout your notes.

Leveraging Different Types of Exercise Books for Enhanced Organization

The type of exercise book you choose can also influence your note-taking and organizational strategies.

✅ Standard School Exercise Books: These lined books are ideal for linear note-taking, especially in subjects like English or History. The lines provide structure for neat handwriting and organized paragraphs.

✅ School Exercise Book Print with Grids: Grid paper is particularly useful for subjects like Mathematics, Science, or Engineering, where diagrams, graphs, and precise calculations are essential. The grids help maintain alignment and proportionality.

✅ Bespoke School Exercise Books with Specific Layouts: The beauty of bespoke options lies in their adaptability. You can design internal layouts that cater to specific subjects or learning styles. This could include Cornell note-taking sections, dedicated spaces for diagrams, or pre-printed prompts for reflection.

The Enduring Power of the Physical Page

In our increasingly digital world, the physical act of writing in an exercise book offers unique benefits for organization and learning. It encourages focused attention, reduces distractions, and creates a tangible record of your intellectual journey. The sensory experience of writing can also enhance memory and engagement. By implementing these tips and consciously utilizing the space within your school exercise book, you can transform it from a mere notebook into a powerful tool for effective note-taking, enhanced organization, and ultimately, greater academic and personal success. Embrace the power of the page and unlock your full learning potential.


Master the Future with Expert Machine Learning Education

Introduction to the World of Machine Learning

In the digital age, machine learning has emerged as one of the most transformative technologies across industries. From voice assistants to self-driving cars, machine learning is no longer confined to labs; it’s embedded in our everyday lives. The growing demand for skilled professionals in this field makes it essential for aspiring data scientists, analysts, and developers to upskill through structured learning platforms. A machine learning online course offers a powerful way to dive into this dynamic field, enabling learners to understand algorithms, data patterns, and predictive modeling right from the comfort of their homes.

Why Choose Online Learning for Machine Learning?

The convenience and flexibility of virtual learning have made it the preferred method for acquiring technical skills. Machine learning online classes cater to both beginners and experienced coders, allowing learners to study at their own pace and access resources anytime. These classes are designed to cover a wide range of topics, from basic linear regression to advanced neural networks. The best part? Learners can repeat lessons, participate in interactive exercises, and get feedback, all within a highly supportive digital environment.

Moreover, online classes eliminate the need for relocation or schedule compromises, making it easier for professionals to continue their education without leaving their jobs. The structure often includes real-world case studies, quizzes, and assignments that simulate the challenges faced by data scientists, thus preparing students for actual workplace demands.

Hands-On Learning with Real Machine Learning Projects

Understanding theory is vital, but applying that knowledge to real-world scenarios makes it truly valuable. One of the key advantages of online learning is the opportunity to work on machine learning projects. These projects not only reinforce theoretical concepts but also help in building a strong portfolio that showcases your skills to potential employers.

From building spam filters and recommendation systems to developing sentiment analysis tools and fraud detection algorithms, project-based learning allows students to tackle complex problems using machine learning techniques. These experiences provide insights into data preprocessing, feature selection, model training, and evaluation—all critical steps in the machine learning pipeline.

Engaging in practical projects also promotes creativity and critical thinking, as learners must experiment with different models, optimize performance, and draw conclusions based on data-driven insights.

Learning at Your Pace with Machine Learning Tutorials

For those who prefer self-guided learning or wish to supplement their existing knowledge, machine learning tutorials offer an excellent resource. These tutorials are typically modular, covering specific topics such as supervised learning, unsupervised learning, reinforcement learning, and deep learning.

By focusing on individual concepts, tutorials allow learners to explore and understand each component in depth. Whether it’s building a decision tree from scratch or implementing a convolutional neural network, tutorials offer a practical and digestible way to learn complex topics.

They often come with sample datasets, step-by-step guides, and even video walkthroughs that make learning interactive and engaging. More importantly, they help learners troubleshoot common issues and gain confidence in writing code, running models, and interpreting results.

The Career Impact of Learning Machine Learning

The career opportunities in machine learning are vast and growing rapidly. From healthcare and finance to e-commerce and entertainment, almost every sector is investing in intelligent systems to optimize operations and improve decision-making.

Learning through a machine learning online course or a comprehensive set of online classes prepares you for roles such as Machine Learning Engineer, Data Scientist, AI Researcher, and Business Intelligence Analyst. With the right foundation and a portfolio of well-executed projects, learners can confidently apply for high-paying roles in the tech industry.

Additionally, having the ability to demonstrate knowledge through projects and tutorials makes a candidate stand out in competitive job markets. It signals initiative, self-discipline, and a commitment to continuous learning—all highly valued traits in technology professions.

Choosing the Right Learning Path

With so many resources available, choosing the right path can feel overwhelming. A well-structured learning journey often begins with beginner-friendly machine learning online classes that lay the groundwork. From there, progressing into more advanced tutorials and undertaking increasingly complex machine learning projects can significantly enhance your skills.

It’s important to choose platforms that provide up-to-date content, experienced instructors, and community support. Look for courses that offer practical assignments, quizzes, and feedback mechanisms that help track your learning progress.

Final Thoughts: Take the Leap Into Machine Learning

The future of technology is being shaped by machine learning, and those who invest time in mastering this skill today will be the innovators of tomorrow. Whether you prefer detailed machine learning tutorials, hands-on machine learning projects, or structured machine learning online classes, there is a learning path that fits your style and goals.

Now is the perfect time to enroll in a machine learning online course and embark on a journey that promises not only intellectual growth but also exciting career possibilities. The tools, resources, and opportunities are just a click away—take the first step and shape your future with the power of machine learning.


Logistic Regression: A Key Step in My Machine Learning

As I continue my deep dive into machine learning, logistic regression is one of the first models I've been exploring. It's a staple early topic in machine learning courses, and for good reason: it's simple, interpretable, and surprisingly powerful for classification problems.

In this post, I’ll explain logistic regression, why it’s useful, and how it fits into my learning path.

What is Logistic Regression?

Despite its name, logistic regression is used for classification rather than for predicting continuous values. While linear regression predicts continuous values, logistic regression models the probability that an outcome falls into a category (e.g., pass/fail, spam/not spam, positive/negative).

It works by passing the output of a linear equation through the sigmoid function, which maps any real number to a probability between 0 and 1. If that probability meets or exceeds a threshold (often 0.5), the model assigns the example to one category; otherwise, it assigns it to the other.
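The sketch below makes the sigmoid-and-threshold idea concrete in plain Python. The weights, the bias, and the two features (hours studied and hours slept) are made-up values for illustration, not a trained model. The 0.5 threshold is the common default mentioned above.

```python
import math

def sigmoid(z):
    """Map any real number to a probability in (0, 1), avoiding overflow in exp."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def predict_proba(weights, bias, features):
    """Compute the linear equation w·x + b, then squash it with the sigmoid."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return sigmoid(z)

def predict(weights, bias, features, threshold=0.5):
    """Return class 1 if the probability reaches the threshold, else class 0."""
    return 1 if predict_proba(weights, bias, features) >= threshold else 0

# Hypothetical pass/fail model: features are (hours studied, hours slept).
weights = [0.8, 0.3]
bias = -4.0
student = [4.0, 6.0]
print(round(predict_proba(weights, bias, student), 3))  # 0.731
print(predict(weights, bias, student))                   # 1
```

Here z = 0.8·4 + 0.3·6 − 4 = 1, and sigmoid(1) ≈ 0.731. That is above 0.5, so the student is classified as "pass".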

Why is Logistic Regression Useful?

✅ Simple and Interpretable – Unlike more complex models, logistic regression outputs explicit probabilities, and each coefficient shows how a feature pushes the prediction up or down, making it easier to understand why a prediction was made.

✅ Great for Binary Classification – If there are two possible outcomes (like predicting whether a student will pass or fail), logistic regression is often a solid first choice.

✅ Less Prone to Overfitting – Compared to deep learning models, logistic regression is less likely to memorize noise in the data, especially when regularization techniques like L1 and L2 are applied.

✅ Works Well with Small Datasets – While deep learning typically needs extensive data, logistic regression can perform well even when data is limited.

Where Logistic Regression Fits in My Learning Path

Logistic regression has been a foundational building block as I work through different machine-learning techniques. Here’s how I see it fitting into the bigger picture:

A Starting Point for Classification – Before jumping into complex models like decision trees or neural networks, it helps to understand how a more straightforward model like logistic regression works.

A Baseline Model – Even when using advanced models, logistic regression is often used as a benchmark to compare performance. If a complex model doesn’t outperform logistic regression, the extra complexity might not be worth it.

A Stepping Stone to More Advanced Concepts – Learning about logistic regression has helped me understand important concepts like loss functions, probability distributions, and optimization techniques (such as gradient descent).
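The sketch below shows how those pieces fit together. It uses log loss (binary cross-entropy) as the loss function and batch gradient descent to minimize it, with an optional L2 penalty like the regularization mentioned earlier. It's plain Python on a tiny made-up dataset, written for readability rather than speed. The learning rate and epoch count are illustrative guesses, and in practice a library such as scikit-learn handles the fitting.

```python
import math

def sigmoid(z):
    # Numerically stable logistic function (avoids overflow in math.exp).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def log_loss(y_true, y_prob, eps=1e-12):
    """Average binary cross-entropy; eps keeps log() away from 0."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1 - eps)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(y_true)

def fit(X, y, lr=0.1, epochs=2000, l2=0.0):
    """Batch gradient descent on log loss + (l2 / 2) * ||w||^2 (bias not penalized)."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            # For log loss, the per-example gradient is (predicted probability - label) * x.
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        # Average the gradients, add the L2 term for the weights, and take one step.
        w = [wj - lr * (gj / n + l2 * wj) for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def predict_proba(w, b, X):
    return [sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) for xi in X]

# Made-up data: hours studied -> passed (1) or failed (0).
X = [[0.5], [1.0], [1.5], [3.5], [4.0], [4.5]]
y = [0, 0, 0, 1, 1, 1]
w, b = fit(X, y)
probs = predict_proba(w, b, X)
print([1 if p >= 0.5 else 0 for p in probs])
print(round(log_loss(y, probs), 4))
```

Passing `l2=0.1` to `fit` keeps the weight smaller. That is the same "don't memorize the data" effect that regularization provides on real datasets.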

Final Thoughts

Logistic regression might not be the flashiest algorithm in machine learning, but it’s one of the most important to understand. It’s a great starting point for classification tasks and lays the groundwork for more advanced models.

Next on my learning journey, I’ll dive into decision trees and random forests—models that can handle more complex decision-making. Stay tuned for that!

If you’ve worked with logistic regression before, I’d love to hear your thoughts—what challenges did you face, and how did you overcome them? Let’s discuss it!


Investigating the Interplay Between Math and Computation

Mathematics and computing have long been companions, shaping disciplines ranging from engineering and artificial intelligence to finance and scientific research. Understanding how maths and computing overlap can go a long way toward building problem-solving capacity, analytical skill, and academic success. From unraveling complex equations to constructing algorithms to exploring data science, the two are deeply intertwined. Students seeking math assignment help typically find that a background in both fields makes their assignments more efficient and precise.

This piece discusses the close relationship between computation and mathematics and offers students practical tips for understanding and excelling in both disciplines. Drawing on computational thinking, mathematical modeling, and real-world applications, this guide covers what you need to succeed in both fields.

The Relationship Between Mathematics and Computation

Mathematics is the foundation of computation, and computation in turn drives mathematical discovery and problem-solving. Computation refers to the process of carrying out calculations, whether by hand, with a calculator, or through programming. Tools such as Python and Wolfram Alpha have transformed the way mathematical problems are solved in modern education. Assignment helpers often recommend these tools to improve the efficiency and accuracy of mathematical work.

Areas Where Mathematics and Computation Intersect

Algebra and Algorithm Design – Many common algorithms are algebraic, from solving linear equations to optimizing functions.

Calculus in Computational Simulations – Differential equations have a wide range of applications in physics, engineering, and computer graphics and are often solved numerically.

Statistics and Data Science – Statistical analysis is highly dependent on computational methods for handling large datasets, identifying patterns, and making predictions.

Cryptography and Number Theory – Cryptographic techniques used in cybersecurity rest on number theory and computational methods.

Machine Learning and Artificial Intelligence – Both build on mathematical foundations such as matrices, probability, and optimization, implemented through computational models.

Understanding these connections lets students draw on both fields to solve mathematical problems more efficiently.
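As a small illustration of the calculus item above, here is a sketch of the explicit (forward) Euler method, one of the simplest numerical schemes for differential equations. The decay equation dy/dt = -0.5y, its starting value y(0) = 10, and the step counts are illustrative choices. Because this equation has the exact solution y(t) = 10·e^(-0.5t), the error of each approximation can be checked directly.

```python
import math

def euler(f, t0, y0, t_end, steps):
    """Approximate y(t_end) for dy/dt = f(t, y), y(t0) = y0, using explicit Euler."""
    h = (t_end - t0) / steps
    t, y = t0, y0
    for _ in range(steps):
        y += h * f(t, y)  # follow the current slope for one step of size h
        t += h
    return y

def decay(t, y):
    """Right-hand side of the example equation dy/dt = -0.5 * y."""
    return -0.5 * y

exact = 10.0 * math.exp(-0.5 * 4.0)  # exact y(4) when y(0) = 10
errors = []
for steps in (4, 40, 400):
    approx = euler(decay, 0.0, 10.0, 4.0, steps)
    errors.append(abs(approx - exact))
    print(f"{steps:>4} steps: y(4) ~ {approx:.4f}, error {errors[-1]:.4f}")
```

Euler's method is first-order, so multiplying the number of steps by 10 shrinks the error by roughly a factor of 10. That trade-off between accuracy and computation time is typical of numerical methods.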

Computational Thinking in Mathematics

Computational thinking is a problem-solving process that involves breaking down complicated problems, recognizing patterns, and building solutions step by step. It is central to mathematics, especially when a problem is abstract or involves a large volume of calculation.

Basic Principles of Computational Thinking

Decomposition – Reducing a complicated problem into small, manageable pieces.

Pattern Recognition – Identifying recurring patterns in mathematical problems.

Abstraction – Choosing key details and ignoring irrelevant information.

Algorithmic Thinking – Developing logical step-by-step procedures for solving problems.

Mathematics students who apply computational thinking to their assignments tend to work through them more comfortably, which supports better academic performance. Homework tutors recommend practicing these techniques to improve problem-solving capacity.
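Euclid's algorithm for the greatest common divisor is a compact example of all four principles working together. The sketch below is written in Python, one of the tools this article mentions. The mapping of each principle onto the code is an interpretation for teaching purposes.

```python
def gcd(a, b):
    """Greatest common divisor of two integers via Euclid's algorithm.

    Decomposition: gcd(a, b) reduces to the smaller problem gcd(b, a % b),
    because any common divisor of a and b also divides a % b.
    Pattern recognition: every step has the same shape, only with smaller numbers.
    Abstraction: only the two integers matter, not where they came from.
    Algorithmic thinking: repeat the step until the remainder is 0.
    """
    a, b = abs(a), abs(b)
    while b != 0:
        a, b = b, a % b
    return a

print(gcd(1071, 462))  # 21
```

Tracing it by hand gives 1071 mod 462 = 147, then 462 mod 147 = 21, then 147 mod 21 = 0, so the answer is 21.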

How Computation Helps Mathematical Education

Since the invention of computer programs, computation has become an indispensable tool in mathematical study. From programming and simulation to math software packages, computational techniques give students an interactive way to understand theoretical concepts.

Advantages of Computational Packages in Mathematics

Visual Representation of Problems – Graphing tools display functions, equations, and geometric transformations visually.

Automation of Tedious Calculations – Software saves the time otherwise spent on tiresome, repetitive computations.

Prompt Feedback – Immediate feedback lets students make mistakes and learn from them.

Real-World Application – Models and simulations ground mathematical concepts in concrete situations.

GeoGebra, Wolfram Alpha, and Python libraries such as NumPy and SymPy allow students to experiment with mathematical concepts, improving understanding and retention.
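For instance, a few lines of NumPy can take over calculations that would be tedious by hand. The system of equations, the polynomial, and the grid below are illustrative choices. The functions used (`np.linalg.solve`, `np.roots`, `np.linspace`) are standard NumPy.

```python
import numpy as np

# Solve the linear system  2x + y = 5,  x - y = 1  (an illustrative system).
A = np.array([[2.0, 1.0],
              [1.0, -1.0]])
rhs = np.array([5.0, 1.0])
x, y = np.linalg.solve(A, rhs)
print(x, y)

# Roots of the polynomial x^2 - 5x + 6, given its coefficients from highest degree down.
roots = np.sort(np.roots([1.0, -5.0, 6.0]))
print(roots)

# Evaluate a function on a whole grid at once instead of point by point.
grid = np.linspace(0.0, 2.0, 5)  # 0.0, 0.5, 1.0, 1.5, 2.0
print(grid ** 2)
```

Checking the results by substitution (2·2 + 1 = 5, 2 − 1 = 1; 2 and 3 both make x² − 5x + 6 zero) is a good habit. It keeps the tool a learning aid rather than a black box.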

Applications of Computation and Mathematics in the Real World

Mathematics and computation meet not only in school but also across many industries and inventions.

Fields Where Computation and Mathematics Play a Central Role

Engineering – Bridges, airplanes, and circuits are designed using computational models.

Finance and Economics – Stock market predictions, risk calculation, and economic forecasting are all computationally based.

Medicine and Healthcare – Computational biology and data analysis help in medical diagnosis and research.

Artificial Intelligence – Machine learning models employ sophisticated mathematical computation to enhance decision-making.

Cybersecurity and Cryptography – Cryptographic methods provide mathematical solutions to data security.

Understanding how mathematics and computation work together gives students transferable skills that are useful across many career options. Assignment helpers and writers often have students work through real-life case studies to build their competence and improve their academic performance.
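To see how number theory underpins the cryptography item above, here is textbook RSA with deliberately tiny primes. The primes, the exponent, and the message are illustrative. Real RSA uses primes hundreds of digits long plus padding schemes, so this sketch is for understanding only and is not secure. The `pow(e, -1, phi)` form for a modular inverse needs Python 3.8 or later.

```python
# Textbook RSA with tiny illustrative primes: NOT secure, for understanding only.
from math import gcd

p, q = 61, 53
n = p * q                # public modulus: 3233
phi = (p - 1) * (q - 1)  # Euler's totient of n: 3120
e = 17                   # public exponent; must share no factor with phi
assert gcd(e, phi) == 1
d = pow(e, -1, phi)      # private exponent: inverse of e modulo phi (Python 3.8+)

message = 65                       # a message encoded as an integer smaller than n
ciphertext = pow(message, e, n)    # encryption: c = m^e mod n
recovered = pow(ciphertext, d, n)  # decryption: m = c^d mod n
print(d, ciphertext, recovered)    # 2753 2790 65
```

The security of the real scheme rests on the difficulty of factoring n back into p and q. With numbers this small, factoring is instant, which is exactly why real keys are so large.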

Strategies for Mastering Computation and Mathematics

To succeed in computation and mathematics, students need study habits that build both understanding and application.

Practical Strategies for Success

Learn Programming – Languages such as Python and MATLAB make mathematical problem-solving easier.

Use Internet Resources – Online websites offer experiential and visual learning.

Practice Daily – Daily practice with mathematical problems strengthens computational skills.

Solve Challenging Problems – Working through hard problems teaches you to break concepts down into easy-to-grasp pieces.

Use Maths for Real-Life Scenarios – Applying theory to real-life situations deepens understanding.

By adopting these methods, students become more computationally efficient when solving mathematical problems.

Conclusion: Learning Mathematics and Computation Together

The interaction between mathematics and computation gives students a powerful framework for solving intricate problems in many fields. From algorithm design to data science and engineering, the two subjects work together both in the classroom and in real-world applications.

By employing computational thinking, using digital resources, and applying math to real life, students develop skills that support both academic and workplace success. Students seeking mathematics assignment help can benefit significantly from understanding how computational tools support the study of math.

Under the professional tutelage of Assignment in Need, students are able to expand their understanding, improve grades, and begin enjoying math and computation rather than hating them.


Exploring Agile Methodologies: Tools, Processes, and Best Practices

In the world of project management and software development, agility has become a key factor for success. With businesses needing to adapt quickly to market changes, agile methodologies provide a framework for teams to respond swiftly and effectively. By emphasizing flexibility, collaboration, and continuous improvement, agile practices help organizations deliver high-quality results. Let’s dive into some of the essential agile methodologies and how they can transform the way projects are managed and executed.

Understanding the Agile Scrum Methodology

One of the most widely adopted frameworks within the agile family is the agile scrum methodology. Scrum focuses on delivering work in small, manageable increments, known as sprints, which typically last between one and four weeks. Scrum teams are self-organizing and cross-functional, meaning the team as a whole has all the skills needed to deliver each increment without depending on outside groups. The scrum approach encourages regular feedback and continuous collaboration, which helps teams stay on track and make necessary adjustments along the way. This iterative cycle allows for greater flexibility and faster adaptation to changing requirements, making scrum an ideal methodology for projects that need to remain dynamic.

The Importance of Agile Testing Methodology

Testing plays a crucial role in any development process, and the agile testing methodology is specifically designed to integrate testing throughout the entire project lifecycle. Unlike traditional testing methods, where testing occurs at the end of the project, agile testing is conducted in parallel with development. This continuous testing process helps teams identify and address issues early, ensuring that any defects are caught before they become major problems. Agile testing also promotes collaboration between developers, testers, and stakeholders, fostering better communication and quicker problem resolution. By embedding testing into every stage of the development cycle, agile testing methodologies help teams maintain a focus on quality and deliver products that meet customer expectations.

The Agile Methodology Process: Key Steps to Success

The agile methodology process is built around a series of defined stages that guide teams through the project. These stages include planning, design, development, testing, and review. However, the key difference between agile and traditional project management lies in the iterative nature of these steps. Instead of working through a linear process, agile teams revisit and refine each stage multiple times throughout the project. This cycle ensures that any changes in requirements or feedback from stakeholders are incorporated as the project progresses. Additionally, agile processes prioritize collaboration, transparency, and customer feedback, ensuring that the final product aligns with user needs and expectations.

Utilizing Agile Methodology Tools for Efficiency

To effectively implement agile practices, teams rely on agile methodology tools that support collaboration, tracking, and project management. These tools help teams manage sprints, assign tasks, and monitor progress in real time. Popular agile tools like Jira, Trello, and Asana enable teams to visualize workflows, track backlog items, and facilitate communication among team members. These tools also allow project managers to assess performance metrics, identify bottlenecks, and make data-driven decisions to improve team efficiency. With the right agile methodology tools in place, teams can streamline their processes, improve collaboration, and enhance overall project outcomes.

By embracing agile practices such as scrum, agile testing, and iterative development processes, businesses can enhance their project management capabilities and deliver high-quality results on time. The flexibility, collaboration, and iterative nature of agile practices are key to staying competitive in today's fast-paced business world.


Empowering Artificial Intelligence with Image Annotation: A GTS.ai Perspective

Data has become one of the most potent transformative forces across industries, and visual data sits at its core. Raw data, however, is rarely sufficient on its own. Image annotation, the act of assigning meaningful labels to an image, is what allows AI systems to interpret the visual world around them. At GTS.ai, we are bringing a new standard of image annotation that supports innovative and accurate AI-driven applications.

Understanding Image Annotation

Image annotation, the labeling of objects, features, or regions in an image, has become the basis of computer vision. It underpins object recognition, the ability of machines to identify and categorize visual elements, by training AI models to:

Recognize objects and patterns.

Understand the spatial relationships between elements.

Make decisions based on visual inputs.

Common types of image annotation include:

Semantic Segmentation: Assigning a label to each pixel for detailed analysis.

Bounding Boxes: Highlighting objects within rectangular frames.

Polyline annotation: Mapping routes or linear features such as roads.

Landmark annotation: Pinpointing features of interest, such as facial markers or joint positions.

Each method suits particular applications, supporting development across a wide spectrum of industries.
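As a concrete illustration of the bounding-box type, here is a sketch of how a single box label might be stored. It also shows how two labels for the same object can be compared with intersection over union (IoU), a common overlap measure in annotation review. The `BoxAnnotation` record and its corner-based pixel coordinates are hypothetical; real annotation tools and dataset formats define their own schemas.

```python
from dataclasses import dataclass

@dataclass
class BoxAnnotation:
    """Hypothetical bounding-box label: pixel corners (x_min, y_min) and (x_max, y_max)."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)

def iou(a: BoxAnnotation, b: BoxAnnotation) -> float:
    """Intersection over union: 1.0 for identical boxes, 0.0 for no overlap."""
    overlap_w = max(0.0, min(a.x_max, b.x_max) - max(a.x_min, b.x_min))
    overlap_h = max(0.0, min(a.y_max, b.y_max) - max(a.y_min, b.y_min))
    inter = overlap_w * overlap_h
    union = a.area() + b.area() - inter
    return inter / union if union > 0 else 0.0

# Two annotators box the same pedestrian; a reviewer might flag pairs below some IoU cutoff.
annotator_1 = BoxAnnotation("pedestrian", 10, 20, 110, 220)
annotator_2 = BoxAnnotation("pedestrian", 20, 30, 120, 230)
print(round(iou(annotator_1, annotator_2), 3))  # 0.747
```

Here each box covers 20,000 square pixels and they overlap on 90 × 190 = 17,100, so IoU = 17,100 / 22,900 ≈ 0.747. Any cutoff for "close enough" is a project-specific choice.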

The Role of Image Annotation in AI

AI's ability to see the world rests on well-annotated datasets. High-quality annotations enable AI models to:

Predict Outcomes: Medical imaging applications can identify diseases with fine-grained precision.

Optimize Operations: Retail platforms are automating image recognition to optimize inventory management.

Enhance End-User Experience: Applications such as augmented reality depend on detailed, accurate perception of the scene to provide highly immersive interactive experiences.

Without rigorous image annotation, many of these AI applications would remain unrealized.

GTS.ai: Future-forward Image Annotation

GTS.ai brings a win-win business approach to image annotation. Beyond the basics, it provides:

1. Full Annotation Services

From bounding boxes to semantic segmentation, we handle every part of annotation diligently. Our team of experts combines skilled manual annotation with automated tools where those tools are useful.

2. Scalable Solutions for Every Business

Whether you are a start-up or an enterprise, we provide solutions that scale with the size and complexity of your project.

3. Quality Control

Stringent quality control systems ensure the accuracy and consistency of the datasets we produce.

4. Secure and Confidential

Data security is a key priority for GTS.ai, which is committed to strict protocols that protect your sensitive information.

Transformative Uses of Image Annotation

Image annotation is applied in sectors as diverse as:

Healthcare: Supporting diagnostics through annotated scans and images.

Automotive: Training autonomous vehicles to detect road signs, pedestrians, and hazards.

Retail: Enabling product tagging and visual search for a smoother shopping journey.

Agriculture: Processing aerial imagery to monitor crops and map field parcels.

GTS.ai's moonshot is to contribute to AI-enabled innovation through sustained transformation in these industries.

Moving Forward With Image Annotation Technology

As AI advances rapidly, automated solutions can no longer rely on static, fixed feature sets as input. New trends have emerged, such as:

Real-time annotation: Supporting applications in autonomous drones and robotics.

Synthetic data: Augmenting datasets with computer-generated images to increase scenario diversity.

Contextual labeling: Adding an extra layer of contextual information to labels to better inform AI in real time.

At GTS.ai, we actively embrace these innovations so that our clients get future-proof solutions.

Let's Talk About Partnering With GTS.ai for Image Annotation

Find out how partnering with the right annotation provider can help you achieve your AI goals. At Globose Technology Solutions (GTS.ai), expertise, innovation, and a client-centric approach form the core of solutions that yield practical results.

Want to know more? Visit GTS.ai to learn how our image annotation services can accelerate your AI plans.


Understanding Data Science: Tips for Students Facing Challenging Assignments

Data science is an exciting and rapidly evolving field that combines statistics, programming, and domain knowledge to extract meaningful insights from data. As students embark on their data science journey, they often encounter challenging assignments that can feel overwhelming.

This guide will provide you with essential tips to help you succeed in your data science studies while promoting the invaluable support offered by AssignmentDude.

At AssignmentDude, we recognize that the journey through data science can be daunting.

Whether you’re grappling with complex algorithms, statistical concepts, or programming languages like Python and R, our expert tutors are here to assist you.

Our personalized approach ensures that you receive the help you need to tackle your assignments confidently and efficiently.

By working with us, you can build a solid foundation in data science and develop the skills necessary for a successful career in this field.

As you navigate your data science courses, remember that seeking help is a proactive step toward mastering the subject.

With AssignmentDude’s support, you can overcome obstacles and deepen your understanding of key concepts. Let’s dive into some practical tips that can help you excel in your data science assignments.

1. Build a Strong Foundation

Master the Fundamentals

Before diving into advanced topics, ensure you have a solid grasp of the fundamental concepts of data science. This includes:

Mathematics: A strong understanding of linear algebra, calculus, and probability is crucial for working with data models and algorithms.

Statistics: Familiarize yourself with descriptive statistics, inferential statistics, hypothesis testing, and regression analysis.

Programming: Proficiency in programming languages such as Python or R is essential for data manipulation and analysis.

Recommended Resources

Books: Consider reading “Mathematics for Machine Learning” or “Practical Statistics for Data Scientists” to strengthen your mathematical and statistical foundation.

Online Courses: Platforms like Coursera or edX offer courses specifically designed to teach the fundamentals of data science.

Example: Understanding Linear Algebra

Linear algebra is fundamental in many areas of data science, especially in machine learning. Concepts such as vectors, matrices, and transformations are crucial when dealing with high-dimensional data. For instance:

Vectors represent points in space and can be used to describe features of an observation.

Matrices are used to represent datasets where each row corresponds to an observation and each column corresponds to a feature.

Familiarizing yourself with these concepts will enhance your ability to understand algorithms like Principal Component Analysis (PCA), which reduces dimensionality in datasets.
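Here is a minimal sketch of that idea with NumPy. The dataset is a matrix whose rows are observations (feature vectors) and whose columns are features, and PCA is computed from the eigenvectors of the feature covariance matrix. The small dataset is made up. Eigendecomposition is only one way to compute PCA; libraries often use the singular value decomposition instead.

```python
import numpy as np

def pca(X, n_components):
    """Project the rows of X onto its top principal components.

    X has shape (n_observations, n_features): each row is one observation
    (a feature vector) and each column is one feature.
    """
    X_centered = X - X.mean(axis=0)                 # center each feature at 0
    cov = np.cov(X_centered, rowvar=False)          # (n_features, n_features) covariance
    eigvals, eigvecs = np.linalg.eigh(cov)          # eigh: symmetric input, ascending eigenvalues
    order = np.argsort(eigvals)[::-1]               # largest variance first
    components = eigvecs[:, order[:n_components]]  # columns are principal directions
    explained = eigvals[order] / eigvals.sum()      # fraction of variance per component
    return X_centered @ components, explained[:n_components]

# Made-up data: 6 observations of 3 features; feature 2 is roughly twice feature 1.
X = np.array([
    [1.0, 2.1, 0.5],
    [2.0, 3.9, 0.4],
    [3.0, 6.2, 0.6],
    [4.0, 7.8, 0.5],
    [5.0, 10.1, 0.4],
    [6.0, 12.0, 0.6],
])
scores, ratio = pca(X, n_components=1)
print(scores.shape)  # (6, 1)
print(ratio)
```

Because the first two features move together and the third barely varies, a single component keeps almost all of the variance. That is the dimensionality reduction PCA is used for.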

2. Stay Updated with Industry Trends

Data science is an ever-evolving field, with new tools and techniques emerging regularly. Staying informed about industry trends will not only enhance your knowledge but also make you more competitive in the job market.

How to Stay Updated

Follow Industry Blogs: Websites like KDnuggets, Towards Data Science, and Data Science Central provide valuable insights into current trends.

Attend Webinars and Conferences: Participate in online webinars or attend conferences to learn from industry experts and network with peers.

Join Online Communities: Engage with communities on platforms like Reddit or LinkedIn where data scientists share their experiences and knowledge.

Example: Following Key Influencers

Identify key influencers in the data science community on platforms like Twitter or LinkedIn. Following their posts can provide insights into new tools, methodologies, or even job opportunities. Some notable figures include:

Hilary Mason: A prominent data scientist known for her work at Fast Forward Labs.

Kirk Borne: A former NASA scientist who shares insights on big data and machine learning.

3. Practice Regularly

The best way to become proficient in data science is through consistent practice. Work on personal projects, participate in hackathons, and contribute to open-source projects to gain hands-on experience.

Project Ideas

Kaggle Competitions: Join Kaggle competitions to work on real-world datasets and solve complex problems collaboratively.

Personal Projects: Choose a topic of interest (e.g., sports analytics, healthcare) and analyze relevant datasets using techniques you’ve learned.

Open Source Contributions: Contribute to open-source data science projects on GitHub to enhance your coding skills while collaborating with others.

Example Project: Analyzing Public Datasets

Choose a public dataset from sources like Kaggle or UCI Machine Learning Repository. For example:

Download a dataset related to housing prices.

Use Python libraries like Pandas for data cleaning and analysis.

Visualize findings using Matplotlib or Seaborn.

Document your process on GitHub as part of your portfolio.

Practical Steps for Personal Projects

When starting a personal project:

Define Your Objective

Collect Data

Analyze Data

Visualize Results

Share Your Work

4. Utilize Tools Effectively

Familiarize yourself with essential tools used in data science:

Programming Languages

Python: Widely used for data manipulation and analysis due to its extensive libraries like Pandas, NumPy, and Matplotlib.

R: Particularly useful for statistical analysis; it has powerful packages such as ggplot2 for visualization.

Databases

SQL: Learn SQL (Structured Query Language) for querying databases effectively. Understanding how to manipulate data using SQL is crucial for any aspiring data scientist.
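Since the examples in this guide use Python, here is a small sketch of SQL querying through Python's built-in `sqlite3` module and an in-memory database. The `houses` table, its columns, and its rows are hypothetical. The same SELECT / WHERE / GROUP BY / ORDER BY pattern carries over to other SQL databases, though dialects differ in details.

```python
import sqlite3

# In-memory database with a hypothetical "houses" table (names and values are illustrative).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE houses (city TEXT, square_feet INTEGER, price INTEGER)")
conn.executemany(
    "INSERT INTO houses VALUES (?, ?, ?)",
    [("Austin", 1500, 300000), ("Austin", 2000, 400000), ("Denver", 1800, 450000)],
)

# Filter, aggregate, and sort in one query; "?" placeholders keep values out of the SQL text.
rows = conn.execute(
    """
    SELECT city, COUNT(*) AS n, AVG(price) AS avg_price
    FROM houses
    WHERE square_feet >= ?
    GROUP BY city
    ORDER BY avg_price DESC
    """,
    (1600,),
).fetchall()
print(rows)  # [('Denver', 1, 450000.0), ('Austin', 1, 400000.0)]
conn.close()
```

The 1,500-square-foot Austin house is filtered out by the WHERE clause before grouping, so each city is left with one matching row.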

Visualization Tools

Tableau: A powerful tool for creating interactive visualizations.

Power BI: Another popular tool that helps visualize data insights effectively.

Example of Using Python Libraries

Here’s how you might use Pandas and Matplotlib together:

python

import pandas as pd
import matplotlib.pyplot as plt

# Load dataset (assumes the CSV has 'SquareFeet' and 'Price' columns)
data = pd.read_csv('housing_prices.csv')

# Clean dataset: drop every row that has a missing value
data.dropna(inplace=True)

# Plotting: one semi-transparent point per house
plt.figure(figsize=(10, 6))
plt.scatter(data['SquareFeet'], data['Price'], alpha=0.5)
plt.title('Housing Prices vs Square Feet')
plt.xlabel('Square Feet')
plt.ylabel('Price')
plt.show()

5. Master Data Cleaning Techniques

Data cleaning is one of the most critical steps in the data science process. Raw data often contains inaccuracies or inconsistencies that must be addressed before analysis.

Common Data Cleaning Techniques

Handling Missing Values:

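python

# Fill missing values with the column mean
mean_value = data['column_name'].mean()
# Assign the result back; calling fillna(..., inplace=True) on a selected column
# is chained assignment, which recent pandas versions warn about
data['column_name'] = data['column_name'].fillna(mean_value)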

Removing Duplicates:

python

# Remove duplicate rows
data.drop_duplicates(inplace=True)

Standardizing Formats:

python

# Convert date column to datetime format
data['date_column'] = pd.to_datetime(data['date_column'])

Example of Data Cleaning in Python

Here’s a simple example using Python’s Pandas library:

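python

import pandas as pd

# Load dataset
data = pd.read_csv('data.csv')

# Display initial shape (rows, columns)
print("Initial shape:", data.shape)

# Remove duplicate rows
data.drop_duplicates(inplace=True)

# Fill missing values by carrying the last valid value forward
# (fillna(method='ffill') is deprecated in recent pandas; ffill() replaces it)
data.ffill(inplace=True)

# Display cleaned shape (only dropped duplicates change the row count)
print("Cleaned shape:", data.shape)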

6. Develop Strong Communication Skills

As a data scientist, it’s essential not only to analyze data but also to communicate findings effectively. Being able to present complex results in a clear manner is crucial when working with stakeholders who may not have a technical background.

Tips for Effective Communication

Create Visualizations

Tailor Your Message

Practice Presentations

Example Presentation Structure

When presenting findings:

Introduction

Methodology

Results

Conclusion & Recommendations

7. Work on Real-World Projects

Gaining practical experience through real-world projects will significantly enhance your learning process. This hands-on experience allows you to apply theoretical knowledge while building a portfolio that showcases your skills.

Finding Projects

Internships

Volunteer Work

Freelancing Platforms


8. Build a Portfolio

A well-organized portfolio demonstrating your skills is vital when applying for jobs or internships in data science. Include various projects that showcase different aspects of your expertise.

What to Include in Your Portfolio

Project Descriptions

Code Samples

Visualizations

Example Portfolio Structure

Your portfolio could include sections such as:

Introduction/About Me

Projects (with links)

Skills (programming languages/tools)

Contact Information

9. Network with Professionals

Building connections within the industry can provide valuable insights into job opportunities and trends in data science.

How to Network Effectively

LinkedIn Connections

Local Meetups

Mentorship Programs

Example Networking Strategies

Attend industry conferences such as the Strata Data Conference or PyData events, where experts share their knowledge and you can network face-to-face.

Join social media groups dedicated to discussions of the data science topics that interest you.

10. Stay Motivated

Learning data science can be challenging at times; maintaining motivation is key to success!

Tips For Staying Motivated

1. Set Clear Goals: Define specific short-term and long-term goals for what you want to achieve in data science.

2. Break Down Tasks: Divide larger tasks into manageable parts so they feel less overwhelming, and celebrate small victories along the way!

3. Reward Yourself: After completing significant milestones, treat yourself! Whether it's enjoying time off or indulging in something special, positive reinforcement helps keep spirits high!

11. Seek Help When Needed

Despite all your efforts, there may be times when assignments become too challenging or time-consuming. In such cases, don't hesitate to seek help from professionals who specialize in assistance tailored to students facing difficulties.

Why Choose AssignmentDude?

AssignmentDude offers urgent programming assignment help designed for students overwhelmed by tight deadlines or complex coursework topics. Our expert team is available around the clock to ensure timely delivery without compromising quality!

By reaching out when needed, whether to clarify concepts or to get help with specific assignments, students can reduce stress and stay ahead academically!

Additional Tips for Success in Data Science Assignments

While we’ve already covered numerous strategies, let’s delve deeper into some additional tips aimed at helping students overcome the challenges they may face during their assignments:

Understand Assignment Requirements Thoroughly

Before starting any assignment, take time to read through the requirements carefully! This ensures clarity about exactly what is expected from your submission and helps you avoid misinterpretations that could lead to wasted effort down the wrong path!

Tips To Clarify Requirements:

1. Highlight Key Points: Identify the critical components outlined in the prompt, such as required methodologies, datasets needed, etc.

2. Ask Questions: If anything is unclear, don’t hesitate to reach out to instructors or classmates to clarify doubts early on rather than later, when deadlines are approaching!

3. Break Down Tasks: Once you understand the requirements, break larger tasks down into smaller, manageable chunks; creating a timeline for completion helps keep you organized and focused throughout the process!

Collaborate With Peers

Forming study groups and collaborating with classmates provides an opportunity to share knowledge and tackle difficult topics together! Engaging discussions often lead to new perspectives and a better understanding of concepts than studying alone!

Benefits Of Collaboration:

1. Diverse Perspectives: Different backgrounds and experiences lead to unique approaches to problem-solving, enhancing the overall learning experience!

2. Accountability: Working alongside others creates accountability and encourages everyone to stay committed to completing assignments in a timely manner!

3. Enhanced Understanding: Teaching or explaining concepts to peers reinforces your own understanding and solidifies your grasp of the material learned so far!

Embrace Feedback

Receiving feedback from instructors and peers is an invaluable part of the learning process! Constructive criticism highlights areas for improvement and helps you refine your skills and further develop your expertise in the field!

How To Embrace Feedback Effectively:

1. Be Open-Minded: Approach feedback positively and view it as an opportunity to grow rather than as a personal attack; this mindset fosters continuous improvement!

2. Implement Suggestions: Take actionable steps based on the feedback you receive and make the necessary adjustments in future assignments to ensure you keep progressing over time!

3. Seek Clarification: If you are unsure about certain points raised during feedback sessions, don’t hesitate to ask questions and clarify how best to address those concerns moving forward!

Explore Advanced Topics

Once you are comfortable with the foundational aspects, consider exploring advanced topics within the realm of Data Science! These areas often require deeper understanding but offer exciting opportunities to expand your skill set and enhance your employability after graduation!

Advanced Topics To Explore:

1. Machine Learning Algorithms: Delve into supervised and unsupervised learning techniques, including decision trees, random forests, neural networks, etc.

2. Big Data Technologies: Familiarize yourself with tools and frameworks such as Hadoop and Spark, which enable efficient processing of large-scale datasets!

3. Deep Learning: Explore deep learning architectures such as convolutional and recurrent networks, which are commonly used in image/video processing and natural language processing tasks alike!

4. Natural Language Processing (NLP): Learn techniques to analyze and interpret human language, enabling applications such as chatbots, sentiment analysis, and text classification.

5. Cloud Computing Solutions: Understand how cloud platforms such as AWS, Azure, and Google Cloud provide the storage and computing power needed to handle large-scale analytical workloads across distributed systems.

Conclusion

Navigating challenging assignments in Data Science requires dedication, practice, effective communication skills, and sometimes assistance from experts!

By following the tips outlined above and using resources like AssignmentDude when needed, you’ll be well-equipped not just academically but also professionally as you embark on this exciting journey!

Remember that persistence pays off; embrace each challenge as an opportunity for growth! With hard work combined with strategic learning approaches, you’ll soon find yourself thriving in this dynamic field filled with endless possibilities!

If you ever face difficulties with assignments related to C++, don’t hesitate to reach out to AssignmentDude. We’re here and dedicated to providing support tailored just for YOU!

Submit Your Assignment Now!

Together we’ll conquer those challenges and ensure your success throughout the entire learning process!

Geek Out! Must-Have AI Certifications for 2024 Innovators

AI is driving innovation across industries, from transforming business processes to creating futuristic technology applications. For professionals passionate about pioneering in this high-growth field, acquiring an AI certification has become a powerful way to stay ahead. If you’re eager to dive into the technical, strategic, and ethical elements of AI, 2024 offers a wealth of top-notch certification options. This article covers some of the most sought-after AI certifications for innovators, including options from AI Certs and other leading institutions.

Why AI Certifications Are Essential for Innovators

With the demand for AI expertise at an all-time high, certifications have become key for professionals seeking to specialize in AI’s transformative power. Here’s how these certifications can benefit your career:

Hands-On Learning: The best AI certifications offer a hands-on approach, giving you the opportunity to work on projects that mirror real-world challenges.

Updated Skill Sets: Certifications allow you to keep your skills updated in a field known for its rapid advancement, from deep learning to AI ethics.

Enhanced Career Opportunities: AI certifications can help open doors to high-paying positions in tech firms, research institutions, and government agencies.

Community and Network: Many certification programs provide access to a network of professionals, mentors, and fellow AI enthusiasts to support your career journey.

Whether you’re a software engineer, data scientist, or project manager, there’s an AI certification to help you master this exciting field.

Must-Have AI Certifications for 2024 Innovators

For those passionate about using AI to spark innovation, here are the top AI certifications to consider in 2024.

1. AI+ Prompt Engineer™ by AI Certs

AI+ Prompt Engineer™ by AI Certs is specifically designed for professionals eager to explore the nuances of prompt engineering, an emerging and powerful area in AI. This course focuses on prompt optimization, covering essential techniques in natural language processing (NLP) to create accurate, human-like AI responses. With practical assignments and interactive learning, AI+ Prompt Engineer™ provides the skill set to innovate and use AI models effectively.

Key Topics Covered:

Foundations of Prompt Engineering: Understand prompt design and optimization in NLP.

Ethical Considerations: Learn responsible AI usage, including data privacy and bias mitigation.

Advanced NLP Techniques: Dive deep into practical applications, from chatbots to AI-driven customer support systems.

For anyone looking to make a mark in NLP and prompt engineering, AI+ Prompt Engineer™ is a valuable investment.

Use the coupon code NEWCOURSE25 to get 25% off AI CERTS’ certifications. Don’t miss out on this limited-time offer! Visit this link to explore the courses and enroll today.

2. Machine Learning Specialization by Stanford University

Stanford University’s Machine Learning Specialization offers a comprehensive understanding of AI fundamentals, particularly in machine learning algorithms and practical applications. This program, available through Coursera, is one of the most respected AI certifications, providing both foundational and advanced concepts in supervised and unsupervised learning. This certification is well-suited for professionals aiming to develop skills in building and deploying machine learning models.

Key Topics Covered:

Machine Learning Foundations: Learn about linear regression, classification, clustering, and deep learning.

Real-World Applications: Understand how machine learning applies across various fields, including healthcare, finance, and technology.

Project-Based Learning: Gain hands-on experience with real-world case studies and projects.

With its rigorous coursework and Stanford’s academic reputation, this certification provides solid preparation for AI innovators.

3. Professional Certificate in Artificial Intelligence by edX and IBM

The Professional Certificate in Artificial Intelligence by IBM, available on edX, is designed for AI enthusiasts who want to gain deep knowledge in building AI applications. This program covers essential AI concepts and tools, providing learners with practical knowledge of neural networks, computer vision, and NLP.

Key Topics Covered:

Python Programming for AI: Learn Python, the programming language most commonly used for AI.

Neural Networks and Deep Learning: Understand the architecture of neural networks and applications in image and text recognition.

AI Applications in Business: Learn how AI is used to solve business problems in fields like customer service, finance, and marketing.

The IBM Professional Certificate is suitable for both beginners and experienced professionals looking to strengthen their skills in developing AI solutions.

4. Deep Learning Specialization by DeepLearning.AI and Andrew Ng

Created by Andrew Ng, a prominent AI researcher, the Deep Learning Specialization on Coursera is tailored for those interested in understanding the inner workings of deep learning. This specialization provides a deep dive into neural networks, covering a wide range of topics from simple models to complex architectures like convolutional networks and generative adversarial networks (GANs).

Key Topics Covered:

Fundamentals of Deep Learning: Learn how neural networks are built and deployed for different tasks.

Computer Vision and NLP: Gain knowledge in applying deep learning for image recognition and language processing.

Practical Implementation: Work on real-world projects to strengthen your practical skills.

This specialization is ideal for professionals looking to specialize in deep learning and develop expertise in high-demand AI technologies.

Choosing the Right Certification for You

Selecting the right AI certification depends on your career goals, skill level, and interests. Here are some tips to guide you:

Assess Your Skill Level: If you’re new to AI, a foundational course like Google’s Machine Learning Crash Course might be a good start. For those with a solid understanding, more advanced certifications like IBM’s AI Engineering or Stanford’s healthcare AI certification offer deeper insights.

Define Your Niche: Each certification program has a unique focus. If you’re passionate about NLP and prompt engineering, AI+ Prompt Engineer™ by AI Certs can give you targeted skills for a career in language-based AI. For those aiming to contribute to healthcare, Stanford’s certification provides industry-specific knowledge.

Consider Practical Applications: Choose certifications that offer hands-on labs and projects to help you apply what you learn.

Career Growth and Network: Consider certifications that offer career support, networking opportunities, and real-world applications, which can significantly boost your employability.

Wrapping Up: The Power of AI Certification for Innovators

AI certifications are more than just credentials — they’re a powerful way to gain industry-relevant skills, stay current, and shape the future of technology. In 2024, the demand for AI expertise will continue to grow, and professionals who invest in their skills today will be well-positioned to lead the charge in tomorrow’s tech-driven world. Whether you’re drawn to machine learning, NLP, healthcare, or ethics in AI, there’s a certification program tailored to help you geek out and excel in your chosen field.

Investing in certifications like AI+ Prompt Engineer™ by AI Certs, Google’s Machine Learning Crash Course, and IBM’s AI Engineering Professional Certificate will prepare you to not only meet but also drive the demands of an AI-powered future.

Module #8 Assignment

For this assignment, I made a series of correlation and regression visualizations using ggplot2 and mtcars.