#Mainframe Infrastructure Upgrade


VRNexGen: Your Trusted Partner in Mainframe Modernization

VRNexGen is a leading technology consulting firm specializing in mainframe modernization. With a deep understanding of legacy systems and a forward-thinking approach, we assist organizations in transforming their mainframe environments to meet the demands of today's digital landscape.

#mainframe modernization #Mainframe Infrastructure Upgrade #Legacy System Modernization #Mainframe Transformation Partner #Mainframe Modernization Services #VRNexGen


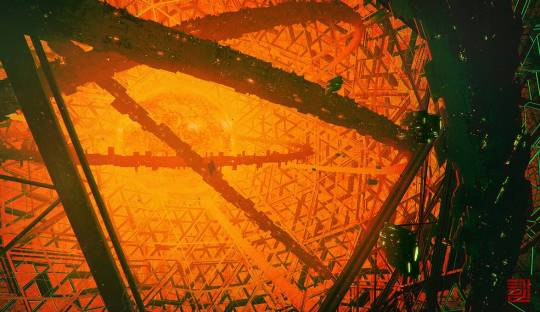

POST #004 — “LIVING GHOSTS”

The following footage was recorded via direct neural stream. No dramatization. No filters. Sound distortion minimized to preserve clarity without compromising identity.

It started with a hushed conversation.

An Exec looking to order a job. A foray into a Biotechnica storage facility, built on the edge of the Old Combat Zone. The goal: source lists for the ingredients used for a recent product. QC reports. Anything that might indicate a subpar product.

Word got to me. I got to them. Said I'd help put together the crew — and come along myself. On one condition: everything we see, everything we extract, I broadcast. Unfiltered. Uncensored.

They agreed.

I started digging — cross-referencing merc chatter, scan logs, and black market medical supply routes. MedTechs don't work in a vacuum. They need gear. They need stock. Eventually, one name surfaced. Someone who'd been slipping into that same Biotechnica facility — late, quiet, unlisted.

I reached out. They accepted. The way in was now secured. All we had to do was survive the rest.

Meanwhile, the fixer filled in the blanks. A Solo. A Netrunner. One to extract the data. One to keep us breathing while they did.

We showed up in disguise — just convincing enough to buy us a few quiet minutes. The MedTech’s contact had done their part: the door was unlocked.

Peace didn’t last.

05:32 — an employee crosses our path.

The Exec panics. Says the wrong thing. No cover story. No fallback.

The alarm trips.

06:34 — security measures engage.

A turret drops from the ceiling like a guillotine. The Exec reaches up and jams a virus into its maintenance port while it warms up.

06:42 — the override kicks in. Her hands shook just once.

Then, the hallway erupted. Gunfire. Screams. Shattered tiles. We returned fire out of instinct, not strategy.

The Netrunner used the turret as an access point. Turned their own defenses against them.

07:42 — silence. Brief, and grim.

We moved while it lasted. Made for the offices upstairs. Barricaded ourselves in. The windows would have to serve as an exit.

The Solo set up the barricade. The Exec prepared the getaway car. The MedTech patched up any wounds we had. I searched for physical records. The Netrunner jacked in.

What she found wasn’t just tight security. It was a Lich-class daemon — old code, war-forged. Arasaka architecture. She cracked the ICE, pulled the data, and made it out intact — barely.

During extraction, drones gave chase. The Solo turned them into scrap.

Back at the safehouse, the Exec reviewed the haul. It wasn’t what we went in for — but it was bigger. This wasn’t about a faulty product. This was about something buried deeper.

The file wasn’t QC logs. It was infrastructure planning. Memos between senior Biotechnica staff. Mentions of cloning incubators, leftover war-era mainframes, and something called the Infinity Protocol.

The phrase that stood out: “To house the SPIs.”

If you know what that means, you already understand the scale. If you don’t, you should read it for yourself.

I’ve included both the neural footage and the memo — unaltered.

[🔗 BD-Archive-004: “Living Ghosts”] (Viewer warning: Includes live-fire footage, high-level system breach, and Lich-class daemon interaction. Minimal compression. Voice masking applied.)

[📄 File Attachment: “MEMO 1 – BM-cRS Infrastructure Upgrade”] (Internal Biotechnica communication regarding enhanced cloning incubators, mainframes, and project security. Reference to “Infinity Protocol” and “SPIs” included.)

Ghosts don’t just haunt the past. Now, they might haunt the future, too.


Benefits We Have Over IBM Post-Warranty Maintenance Services

When your IBM server, storage, or networking equipment reaches the end of warranty, relying on OEM (Original Equipment Manufacturer) support can be costly and restrictive. Our third-party maintenance (TPM) services provide cost-effective, flexible, and efficient alternatives to IBM post-warranty maintenance.

🔹 Why Choose Us Over IBM Post-Warranty Maintenance?

✅ 1. Significant Cost Savings

✔ IBM post-warranty support can be expensive, often requiring businesses to purchase extended OEM contracts. ✔ Our third-party maintenance (TPM) services reduce costs by up to 70% while maintaining high service standards.

✅ 2. Flexible & Customizable SLAs (Service Level Agreements)

✔ IBM support often offers one-size-fits-all contracts with limited customization options. ✔ We provide tailored SLAs based on your business needs, including:

24/7/365 support

Next-business-day or 4-hour on-site response times

Remote troubleshooting and proactive monitoring

✅ 3. Extended Hardware Lifespan

✔ IBM may encourage hardware upgrades instead of maintaining EOL (End-of-Life) or EOSL (End-of-Service-Life) equipment. ✔ We specialize in extending the lifecycle of IBM servers, storage, and networking gear, allowing businesses to maximize ROI on existing infrastructure.

✅ 4. Multi-Vendor Support (Beyond IBM)

✔ IBM post-warranty services only cover IBM products, requiring separate contracts for other brands. ✔ Our multi-vendor support model covers IBM and other OEMs like Dell, HP, Cisco, NetApp, Lenovo, etc.

✅ 5. Faster Response & Personalized Support

✔ OEM support queues can be slow, especially for non-critical issues. ✔ Our dedicated engineers and local field support teams ensure faster ticket resolution and direct engineer access—no long hold times!

✅ 6. Spare Parts Availability & Stocking

✔ IBM may limit access to replacement parts for EOL/EOSL hardware, pushing you to upgrade. ✔ We maintain an extensive stock of spare parts to ensure quick replacements and minimize downtime.

✅ 7. Proactive Monitoring & Predictive Maintenance

✔ OEMs typically offer reactive support, addressing issues only after failures occur. ✔ Our TPM services use AI-powered monitoring to predict and prevent failures, reducing unexpected outages.

✅ 8. Seamless Transition & Migration Support

✔ Switching from IBM support can seem complex. ✔ Our seamless onboarding process ensures zero disruption to your business operations during the transition.

✅ 9. Support for Legacy IBM Equipment

✔ IBM often discontinues support for older hardware, forcing unnecessary upgrades. ✔ We provide long-term support for legacy IBM servers, storage, and networking devices, keeping them running efficiently.

✅ 10. Independent & Unbiased Advisory

✔ IBM's goal is to sell new hardware and services, sometimes leading to biased recommendations. ✔ We focus on your actual business needs, offering unbiased guidance on IT maintenance, upgrades, and optimization.

🔹 Our IBM Maintenance Services Cover:

✅ IBM Power Systems (iSeries, AS/400, pSeries, etc.)

✅ IBM System x & BladeCenter Servers

✅ IBM Storage Solutions (Storwize, DS Series, FlashSystem, etc.)

✅ IBM Networking & Mainframes

🚀 Why Settle for Costly IBM Support? Choose a Smarter, More Affordable Alternative!

✔ Save up to 70% on IBM post-warranty maintenance

✔ Get 24/7/365 expert support & flexible SLAs

✔ Extend hardware lifespan & reduce downtime

✔ Access multi-vendor support for all IT infrastructure

💡 Ready to switch? Contact us today for a customized IBM maintenance plan that fits your business needs!


Best Application Modernization Companies Transforming Businesses in 2025

In today's rapidly evolving digital landscape, enterprises are increasingly looking to modernize their legacy applications to stay competitive and enhance efficiency. Application Modernization Services & Solutions play a crucial role in transforming outdated systems into agile, cloud-enabled applications that align with modern business needs. Here, we list the Top 10 companies in App Modernization Services, helping organizations accelerate digital transformation and optimize their IT infrastructure.

1. Vee Technologies

As a leading Application Modernization Company, Vee Technologies specializes in end-to-end App Modernization Services that help businesses transition from legacy systems to cloud-native architectures. With expertise in reengineering applications, migrating workloads, and integrating AI-driven capabilities, Vee Technologies delivers scalable, secure, and cost-effective modernization solutions that drive digital transformation. Their customer-centric approach and innovative solutions make them a preferred choice for enterprises across various industries.

2. Accenture

Accenture is a globally recognized leader in IT consulting and modernization. Their Application Modernization Services & Solutions focus on transforming legacy applications into cloud-compatible and microservices-based architectures, enabling businesses to increase agility and scalability.

3. IBM

IBM provides robust App Modernization Services, leveraging AI and automation to reengineer enterprise applications. Their comprehensive solutions include mainframe modernization, containerization, and cloud-native transformation.

4. Cognizant

Cognizant helps organizations enhance business agility with cutting-edge Application Modernization Services & Solutions. Their expertise in DevOps, cloud migration, and legacy application replatforming ensures seamless digital transformation.

5. Infosys

Infosys offers strategic App Modernization Services, utilizing AI and cloud computing to upgrade legacy applications. Their modernization framework accelerates digital adoption, improving business operations and customer experiences.

6. Capgemini

Capgemini delivers advanced Application Modernization Services & Solutions that optimize IT landscapes through automation, AI, and cloud integration, ensuring improved agility and reduced operational costs.

7. Wipro

Wipro’s Application Modernization Services help enterprises transition from monolithic legacy systems to modern, cloud-based environments, ensuring better performance and security.

8. HCL Technologies

HCL Technologies offers comprehensive App Modernization Services, leveraging AI, machine learning, and cloud-native solutions to enhance business resilience and efficiency.

9. TCS (Tata Consultancy Services)

TCS provides robust Application Modernization Services & Solutions, enabling enterprises to embrace digital transformation through scalable, cost-efficient, and cloud-optimized solutions.

10. DXC Technology

DXC Technology specializes in legacy application transformation, offering AI-driven App Modernization Services that streamline IT operations, enhance security, and drive business innovation.

Conclusion

Selecting the right Application Modernization Company is crucial for enterprises looking to future-proof their IT systems. These companies lead the way in delivering innovative Application Modernization Services & Solutions that enhance agility, scalability, and business continuity. Whether it's migrating to the cloud, modernizing legacy applications, or integrating AI-driven features, these top companies provide best-in-class services to help businesses stay ahead in the digital era.


Legacy Modernization Services: Transforming Insurance for the Digital Age

The insurance industry is undergoing a massive digital transformation, yet many insurers continue to rely on legacy systems that are outdated, inefficient, and costly to maintain. These aging systems hinder scalability, security, and customer experience, making it difficult for insurers to stay competitive in today’s fast-paced market.

Legacy modernization services provide a strategic approach to upgrading these systems, ensuring seamless integration with digital technologies, enhanced operational efficiency, and compliance with evolving regulations. AgiraSure’s Legacy Modernization Services enable insurers to transition from outdated IT infrastructures to agile, cloud-based, and AI-driven solutions that drive business growth.

Why Insurance Companies Need Legacy Modernization Services

Legacy systems were once the backbone of insurance operations, but they now pose several challenges:

High Maintenance Costs – Older systems require expensive upkeep and manual interventions.

Limited Scalability – Legacy infrastructures struggle to support new digital capabilities.

Security Vulnerabilities – Outdated software is prone to cyber threats and compliance risks.

Inefficient Processes – Manual workflows slow down claims processing, underwriting, and policy management.

Integration Challenges – Legacy systems lack flexibility for AI, automation, and data analytics integration.

Modernizing these systems is essential for improving efficiency, enhancing customer experience, and staying ahead in a rapidly evolving insurance landscape.

Key Legacy Modernization Services for Insurers

1. Cloud Migration & Infrastructure Modernization

Moving from on-premise systems to cloud-based infrastructure is a core aspect of legacy modernization. Cloud transformation ensures:

Scalability – Expand resources based on business needs.

Cost Efficiency – Reduce IT expenses by eliminating on-premise maintenance.

Data Security & Compliance – Cloud providers ensure robust security measures and regulatory adherence.

2. API-Driven Integration & System Interoperability

Legacy systems often lack connectivity with modern platforms. API-driven integration enables insurers to:

Seamlessly connect old systems with new technologies.

Enable real-time data sharing across applications.

Enhance automation, improving claims and policy management.
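
As a rough illustration of the integration pattern described above, the sketch below wraps a legacy fixed-width policy record in a small adapter that emits JSON for a modern API. The record layout, field names, and status codes are hypothetical, invented for this example, and not taken from any real insurer system or from AgiraSure's tooling.

```python
import json
from dataclasses import dataclass, asdict

# Hypothetical fixed-width layout: (field name, start offset, end offset).
# A real legacy record would follow its own copybook or file spec.
POLICY_LAYOUT = [
    ("policy_id", 0, 8),
    ("holder_name", 8, 28),
    ("premium_cents", 28, 36),
    ("status", 36, 37),
]

# Hypothetical one-letter status codes used by the legacy record.
STATUS_CODES = {"A": "active", "L": "lapsed", "C": "cancelled"}


@dataclass
class Policy:
    policy_id: str
    holder_name: str
    premium: float
    status: str


def parse_legacy_record(record: str) -> Policy:
    """Slice a fixed-width record into named fields and normalize them."""
    raw = {name: record[start:end].strip() for name, start, end in POLICY_LAYOUT}
    return Policy(
        policy_id=raw["policy_id"],
        holder_name=raw["holder_name"],
        premium=int(raw["premium_cents"]) / 100,  # cents to currency units
        status=STATUS_CODES.get(raw["status"], "unknown"),
    )


def to_api_payload(record: str) -> str:
    """Return the JSON body a modern service could consume."""
    return json.dumps(asdict(parse_legacy_record(record)))


# Build a sample 37-character record and convert it.
sample = "P0001234" + "Jane Doe".ljust(20) + "00012550" + "A"
print(to_api_payload(sample))
```

In this arrangement the legacy system keeps producing the format it always has, and only the adapter needs to change when a new consumer is added.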

3. AI-Powered Automation for Process Optimization

AI and automation streamline insurance workflows, reducing manual effort and increasing efficiency. Modernization enables:

AI-driven underwriting and risk assessment.

Chatbot-powered customer interactions for instant policy support.

Automated claims processing for faster settlements.

4. Microservices & Modular Architecture Implementation

Rather than overhauling entire systems, AgiraSure enables insurers to transition to microservices architectures that:

Break down monolithic applications into modular services.

Enhance agility, allowing faster feature deployment.

Improve system reliability and scalability.

5. Data Modernization & Real-Time Analytics

Legacy systems often store data in unstructured and siloed formats. Modernization services provide:

Advanced data warehousing for structured insights.

Predictive analytics for fraud detection and customer behavior analysis.

AI-driven risk modeling for underwriting optimization.

6. Mainframe Modernization & Core System Upgrades

For insurers still using mainframe-based core systems, modernization strategies include:

Re-platforming applications to cloud environments.

Re-hosting existing software on hybrid infrastructures.

Re-engineering processes to support real-time transactions.

Benefits of Legacy Modernization for Insurance Companies

1. Improved Operational Efficiency

By automating processes and reducing manual interventions, insurers can accelerate policy issuance, claims processing, and customer interactions.

2. Reduced IT Maintenance Costs

Legacy system upgrades eliminate high maintenance expenses, replacing outdated infrastructures with cost-effective cloud solutions.

3. Enhanced Customer Experience

Digital self-service portals, AI-powered chatbots, and real-time policy updates improve customer engagement and satisfaction.

4. Strengthened Cybersecurity & Compliance

Modernized systems enhance data security, encryption, and compliance with industry regulations such as GDPR, HIPAA, and NAIC standards.

5. Faster Time-to-Market for New Products

Cloud-native, AI-driven platforms allow insurers to develop and launch new insurance products faster, adapting to changing market demands.

6. Competitive Advantage in the Digital Era

Legacy modernization positions insurers as innovative market leaders, ensuring long-term business sustainability.

Industries Benefiting from Legacy Modernization Services

1. Life & Health Insurance

AI-powered automated underwriting and policy approvals.

Cloud-based digital health records and policyholder management.

Predictive analytics for personalized insurance offerings.

2. Property & Casualty Insurance

Real-time risk assessment using IoT and AI-driven analytics.

Blockchain-powered claims processing for faster and fraud-proof settlements.

Digital portals for seamless policy renewals and updates.

3. Auto Insurance

Telematics-driven usage-based insurance models.

AI-powered accident detection and automated claims approvals.

Cloud-based integration of driver behavior analytics.

4. Reinsurance & Risk Management

Cloud-based platforms for automated risk modeling.

AI-powered insights for portfolio risk optimization.

Blockchain-driven smart contract execution for reinsurance agreements.

Steps to Implement a Successful Legacy Modernization Strategy

1. Assess Current Legacy Systems

Identify outdated components, inefficiencies, and integration challenges.

2. Define Business Goals & Modernization Priorities

Align modernization with operational efficiency, regulatory compliance, and customer experience goals.

3. Choose the Right Modernization Approach

Select the best approach based on business needs:

Rehosting – Migrating applications to cloud infrastructure.

Replatforming – Modifying applications for cloud compatibility.

Rearchitecting – Redesigning systems for enhanced scalability and security.
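
The choice among the three approaches above can be sketched as a simple decision rule. This is a minimal illustration that assumes only two yes/no inputs (`needs_redesign` and `needs_cloud_changes`, both hypothetical names); a real assessment would weigh cost, risk, and timelines as well.

```python
from enum import Enum


class Approach(Enum):
    REHOST = "rehosting"
    REPLATFORM = "replatforming"
    REARCHITECT = "rearchitecting"


def choose_approach(needs_redesign: bool, needs_cloud_changes: bool) -> Approach:
    """Pick the least invasive approach that still meets the stated need.

    needs_redesign: the system must be redesigned for scalability or security.
    needs_cloud_changes: the application must be modified to run in the cloud.
    """
    if needs_redesign:
        return Approach.REARCHITECT
    if needs_cloud_changes:
        return Approach.REPLATFORM
    return Approach.REHOST


# An application that runs unchanged on cloud infrastructure is simply rehosted.
print(choose_approach(needs_redesign=False, needs_cloud_changes=False).value)
```

The ordering reflects the definitions given: rearchitecting covers the most change, so it is checked first, and rehosting is the default when no modification is required.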

4. Implement in Phases & Minimize Downtime

Modernization should occur in phases to prevent disruptions in ongoing operations.

5. Train Employees & Optimize Workflows

Adopting new technologies requires training teams and optimizing workflows for efficient implementation.

6. Monitor, Evaluate & Optimize Continuously

Post-modernization, insurers must track system performance and make iterative improvements to maximize ROI.

Future Trends in Legacy Modernization for Insurance

AI-Powered Insurtech Solutions – Enhancing risk assessment, fraud detection, and claims automation.

Blockchain for Smart Contracts – Enabling instant, transparent insurance transactions.

Edge Computing for Real-Time Data Processing – Reducing latency in policy management and claims approvals.

Quantum Computing in Risk Modeling – Improving complex risk analysis and predictive insights.

Conclusion

Legacy modernization services are essential for insurance companies looking to enhance operational efficiency, security, and customer engagement. AgiraSure’s expertise in cloud transformation, AI-driven automation, and API integration enables insurers to transition seamlessly into the digital-first era.

By adopting AgiraSure’s Legacy Modernization Services, insurance companies can reduce costs, improve agility, and future-proof their operations, ensuring sustainable growth and competitiveness in the evolving insurtech landscape.


What is Mainframe Outsourcing? A Comprehensive Introduction

Introduction to Mainframe Outsourcing

As organizations face increasing demands for data processing capabilities, outsourcing these functions to specialized mainframe outsourcing service providers becomes an efficient solution. By doing so, businesses gain access to cutting-edge technology and expertise without investing heavily in in-house resources.

Mainframe outsourcing services help companies stay ahead of technological advancements, improve their operational flexibility, and ensure business continuity. The growing importance of maintaining and upgrading mainframe systems in the current IT ecosystem makes outsourcing a strategic necessity.

The Evolution of Mainframe Outsourcing

Mainframes have been a critical part of enterprise IT for over half a century. Initially, these large systems were the foundation for handling massive data volumes, transactions, and critical operations. However, maintaining and upgrading these systems in-house became increasingly costly and complex as technology progressed. This led to the emergence of mainframe outsourcing services to simplify management and minimize operational overhead.

The rise of outsourcing during the late 20th century aligned with growing global IT demands and the development of more agile business environments. IT service providers specializing in mainframe operations began offering comprehensive outsourcing models that included everything from system monitoring to software updates and disaster recovery solutions.

Core Components of Mainframe Outsourcing

Mainframe Managed Services: Day-to-Day Operations

One of the primary offerings of mainframe outsourcing service providers is the management of day-to-day operations, which includes monitoring performance, resolving issues, and maintaining system integrity. Outsourcing partners ensure that systems run smoothly, preventing downtime and ensuring that enterprise data is handled efficiently and securely.

Disaster Recovery and Business Continuity

Disaster recovery is a cornerstone of mainframe outsourcing. Providers implement robust recovery protocols to ensure that business operations can quickly resume after unexpected disruptions. By outsourcing this function, businesses minimize risk, ensuring continuous operations without needing to build and maintain complex in-house infrastructure.

Application Modernization and Support

Legacy applications often need updating to meet the demands of modern environments. Mainframe outsourcing goes beyond basic maintenance, with providers offering application modernization services that enhance functionality and enable integration with newer technologies. These services ensure that businesses stay competitive by leveraging the latest in software and hardware innovations.

Key Drivers Behind Mainframe Outsourcing Adoption

Cost Optimization

One of the main drivers for businesses to turn to mainframe outsourcing services is the potential for cost reduction. Maintaining an in-house mainframe operation demands significant investments in hardware, software, and skilled personnel. By outsourcing, organizations can shift these expenses from fixed to variable costs, freeing up capital to invest in other critical areas.

Access to Specialized Expertise

Mainframes require specific skills to maintain and optimize. With the decreasing pool of mainframe talent, many organizations turn to mainframe outsourcing service providers to access specialized knowledge. These providers employ experts who stay up-to-date with industry developments, ensuring that businesses benefit from the latest innovations in mainframe technology.

Focus on Core Business Objectives

By outsourcing mainframe operations, organizations can offload the complexities of managing legacy systems. This allows businesses to focus on their core activities, such as customer engagement, innovation, and expanding market share, rather than getting bogged down by IT challenges.

Advantages of Mainframe Outsourcing

Enhanced Scalability and Flexibility

Outsourcing offers the flexibility to scale resources according to business needs. Providers offer services that can easily be adjusted as demand fluctuates, ensuring businesses aren’t constrained by rigid infrastructure or resources. This agility allows companies to respond to market changes swiftly and effectively.

Reduced Operational Risks

Mainframe outsourcing reduces operational risks by partnering with experienced providers who apply industry best practices. By leveraging external expertise, businesses can mitigate potential risks related to system failures, downtime, and security vulnerabilities, ensuring higher levels of reliability and uptime.

Improved Compliance and Security

Compliance and security are paramount for organizations handling sensitive data. Mainframe outsourcing service providers are well-versed in industry regulations, offering businesses the peace of mind that their mainframe operations comply with necessary standards. Outsourced providers employ rigorous security protocols to safeguard data, minimizing the risk of breaches or data loss.

Mainframe outsourcing offers a powerful strategy for modern organizations, enabling them to remain agile, secure, and efficient. As technology evolves, businesses can rely on mainframe outsourcing services to navigate the complexities of digital transformation and ensure long-term success.

#mainframe #mainframe services #mainframe outsourcing #mainframe outsourcing services #mainframe outsourcing service providers


How our Adelaide internet providers offer the best services

An internet service provider's main responsibility is providing internet access to households and individual customers. That means managing internet traffic, maintaining network infrastructure, and ensuring that data is sent quickly and securely across the internet. We provide the gear and infrastructure required for an internet connection, regardless of size.

Why choose our Adelaide internet providers

Depending on the price, our Adelaide internet providers offer clients a fixed amount of bandwidth and internet speed. Internet service providers may also include phone and cable television service in a bundled package. ISPs can offer these bundles because most internet connections rely on the same infrastructure as those services.

Our internet service providers need to go above and beyond simply providing people with access to the internet. It is the responsibility of an ISP to protect its users against online threats and warn them when they are at risk. ISPs should work together in the event of an emergency or threat that could jeopardize providers.

Internet service providers have an unwritten obligation on their networks

Without a doubt, the largest and most comprehensive initiative to upgrade to a fast, advanced telecommunications infrastructure is the rollout, which has the backing of the government. Adelaide's current fixed-line network, which consists of copper cable, is being replaced by more durable, high-capacity optical fiber infrastructure in order to connect all facilities and improve internet connectivity. Our Adelaide internet providers will select the technology that connects each location and business to the mainframe. Before a selection is made, a variety of factors will be considered, such as the infrastructure's condition, population density, and distance from the local telephone exchange. Through its authorized commercial partners, it functions as a central wholesaler and depository for significant telecom services. It is then your responsibility to work with suppliers to find the best rates that meet your requirements.

Although the installation is free, there are costs involved in using the network once it is installed. These costs depend on the internet plans that service providers offer. To receive internet from an internet service provider, one must subscribe to the provider's service, usually through an online form. Customers open an account with their provider and pay a monthly subscription to receive any equipment they might need and the bandwidth to access the internet. Larger entities, such as homes, businesses, or corporations, will incur higher costs to increase their capacity and bandwidth.


Google Cloud enables digital transformations with cutting-edge solutions in industries like Retail, Finance, and Healthcare. Harness AI, multicloud capabilities, and global infrastructure to tackle your toughest challenges. Explore now.

RoamNook - Fueling Digital Growth

Bringing New, Polarizing, and Informative Facts to the Table

Google Cloud

Google Cloud is a leading provider of cloud computing services that can help businesses solve their toughest challenges. With a focus on digital transformation, Google Cloud offers a wide range of solutions to accelerate business growth and drive innovation.

Key Benefits of Google Cloud:

Why Google Cloud - Top reasons businesses choose Google Cloud

AI and ML - Enterprise-ready AI capabilities

Multicloud - Run your applications wherever you need them

Global infrastructure - Build on the same infrastructure as Google

Data Cloud - Make smarter decisions with unified data

Open cloud - Scale with open, flexible technology

Security - Protect your users, data, and apps

Productivity and collaboration - Connect your teams with AI-powered apps

Reports and Insights:

Executive insights - Curated C-suite perspectives

Analyst reports - What industry analysts say about Google Cloud

Whitepapers - Browse and download popular whitepapers

Customer stories - Explore case studies and videos

Solutions

Google Cloud offers industry-specific solutions to reduce costs, increase operational agility, and capture new market opportunities. Whether you're in the retail, consumer packaged goods, financial services, healthcare and life sciences, media and entertainment, telecommunications, gaming, manufacturing, supply chain and logistics, government, or education industry, Google Cloud has tailored solutions for your digital transformation needs.


Application Modernization:

CAMP Program - Improve your software delivery capabilities

Modernize Traditional Applications - Analyze and migrate traditional workloads to the cloud

Migrate from PaaS: Cloud Foundry, OpenShift - Tools for moving existing containers to Google's managed container services

Migrate from Mainframe - Tools and guidance for migrating mainframe apps to the cloud

Modernize Software Delivery - Best practices for software supply chain and CI/CD

DevOps Best Practices - Processes and resources for implementing DevOps

SRE Principles - Tools and resources for adopting Site Reliability Engineering

Day 2 Operations for GKE - Tools and guidance for effective GKE management and monitoring

FinOps and Optimization of GKE - Best practices for running cost-effective applications on GKE

Run Applications at the Edge - Guidance for localized and low-latency apps on Google's edge solution

Architect for Multicloud - Manage workloads across multiple clouds with a consistent platform

Go Serverless - Fully managed environment for developing, deploying, and scaling apps

Artificial Intelligence:

Contact Center AI - AI model for speaking with customers and assisting human agents

Document AI - Automated document processing and data capture

Product Discovery - Google-quality search and product recommendations for retailers

APIs and Applications:

New Business Channels Using APIs - Attract and empower an ecosystem of developers and partners

Unlocking Legacy Applications Using APIs - Cloud services for extending and modernizing legacy apps

Open Banking APIx - Simplify and accelerate secure delivery of open banking compliant APIs

Databases:

Database Migration - Guides and tools to simplify your database migration life cycle

Database Modernization - Upgrades to modernize your operational database infrastructure

Databases for Games - Build global, live games with Google Cloud databases

Google Cloud Databases - Database services to migrate, manage, and modernize data

Migrate Oracle workloads to Google Cloud - Rehost, replatform, rewrite your Oracle workloads

Open Source Databases - Fully managed open source databases with enterprise-grade support

SQL Server on Google Cloud - Options for running SQL Server virtual machines on Google Cloud

Data Cloud:

Databases Solutions - Migrate and manage enterprise data with fully managed data services

Smart Analytics Solutions - Generate instant insights from data at any scale

AI Solutions - Add intelligence and efficiency to your business with AI and machine learning

Data Cloud for ISVs - Innovate, optimize, and amplify your SaaS applications using Google's data and machine learning solutions

Data Cloud Alliance - Ensure global businesses have seamless access and insights into the data required for digital transformation

Digital Transformation

Google Cloud helps businesses accelerate their recovery and ensure a better future through solutions that enable hybrid and multi-cloud environments, generate intelligent insights, and keep workers connected. Whether you want to reimagine your operations, optimize costs, or adapt to the challenges presented by COVID-19, Google Cloud has solutions for you.

Areas of Focus:

Digital Innovation - Reimagine your operations and unlock new opportunities

Operational Efficiency - Prioritize investments and optimize costs

COVID-19 Solutions - Get work done more safely and securely

COVID-19 Solutions for the Healthcare Industry - How Google is helping healthcare meet extraordinary challenges

Infrastructure Modernization - Migrate quickly with solutions for SAP, VMware, Windows, Oracle, and other workloads

Application Migration - Discovery and analysis tools for moving to the cloud

SAP on Google Cloud - Certifications for running SAP applications and SAP HANA

High Performance Computing - Compute, storage, and networking options to support any workload

Windows on Google Cloud - Tools and partners for running Windows workloads

Data Center Migration - Solutions for migrating VMs, apps, databases, and more

Active Assist - Automatic cloud resource optimization and increased security

Virtual Desktops - Remote work solutions for desktops and applications (VDI & DaaS)

Rapid Migration Program (RaMP) - End-to-end migration program to simplify your path to the cloud

Backup and Disaster Recovery - Ensure business continuity needs are met

Red Hat on Google Cloud - Enterprise-grade platform for traditional on-prem and custom applications

Productivity and Collaboration

Google Workspace offers a suite of collaboration and productivity tools designed for enterprises. Whether you need collaboration tools, secure video meetings, cloud identity management, browser and device management, or enterprise search solutions, Google Workspace has you covered.

Productivity and Collaboration Solutions:

Google Workspace - Collaboration and productivity tools for enterprises

Google Workspace Essentials - Secure video meetings and modern collaboration for teams

Cloud Identity - Unified platform for IT admins to manage user devices and apps

Chrome Enterprise - ChromeOS, Chrome Browser, and Chrome devices built for business

Cloud Search - Enterprise search for quickly finding company information

Security

Google Cloud provides advanced security solutions to detect, investigate, and respond to online threats. With security analytics and operations, web app and API protection, risk and compliance automation, software supply chain security, and more, Google Cloud helps protect your business from cyber threats.

Security Solutions:

Security Analytics and Operations - Solution for analyzing petabytes of security telemetry

Web App and API Protection - Threat and fraud protection for web applications and APIs

Security and Resilience Framework - Solutions for each phase of the security and resilience life cycle

Risk and compliance as code (RCaC) - Solution to modernize your governance, risk, and compliance function with automation

Software Supply Chain Security - Solution for improving end-to-end software supply chain security

Security Foundation - Recommended products to help achieve a strong security posture

Smart Analytics

Google Cloud's smart analytics solutions enable organizations to generate instant insights from data at any scale. With data warehousing, data lake modernization, Spark integration, stream analytics, business intelligence, data science, marketing analytics, geospatial analytics, and access to various datasets, Google Cloud provides the foundation for data-driven decision making.

Smart Analytics Solutions:

0 notes

Text

Mainframe Apps Revolutionized Through Generative AI Magic

Using generative AI to modernize mainframe apps

If you take a closer look at any well-designed mobile application or business interface, you will probably discover mainframes operating underneath the integration and service layers of any significant enterprise’s application architecture.

As part of a hybrid infrastructure, these fundamental systems are used by critical applications and systems of record. The business’s ability to maintain its operational integrity might be severely jeopardized by any disruption to its current operations. So much so that many businesses are reluctant to alter them significantly.

But change is unavoidable, since technological debt is mounting up. Businesses must upgrade these apps in order to achieve company agility, meet consumer demand, and stay competitive. Leaders should look for innovative methods to speed up digital transformation in their hybrid strategy rather than putting it off.

COBOL is not to blame for modernization delays

The shortage of skills is perhaps the largest barrier to mainframe modernization. Many of the mainframe and application specialists who built and extended business COBOL codebases over the years have probably retired or moved on.

Even more concerning, it will be difficult to recruit talent from the next generation, since graduates of computer science programs that emphasize Java and other modern languages do not naturally think of themselves as mainframe application developers. They may not find the work as exciting or agile as cloud native programming or mobile app design. In many respects, this is an unfair bias.

COBOL was developed long before object orientation existed, let alone service orientation or cloud computing. Because it has a small set of instructions, it should not be a difficult language for less experienced developers to learn and understand. Furthermore, there is no reason why mainframe programs would not benefit from agile development and from smaller, more frequent releases inside an automated, DevOps-style pipeline.

Change management is particularly challenging when one has to figure out what various teams have done with COBOL over the years. Developers have added countless components and logical loops to a procedural system that has to be updated and tested as a whole, not as individual parts or loosely connected services.

When code and applications are woven together on the mainframe in this way, the interdependencies and possible points of failure are too complex and numerous for even experienced developers to untangle. Because of this, developing COBOL apps can seem more difficult than it needs to be, leading many businesses to look for solutions outside the mainframe before they should.

Overcoming generative AI’s constraints

Generative artificial intelligence, or GenAI, has been the subject of much hype recently, driven by the increasing availability of consumer-grade AI image generators and large language models (LLMs) like ChatGPT.

Even though there are a lot of exciting new possibilities in this field, there is a persistent “hallucination factor” with LLMs when they are used in crucial business processes. AI systems that have been trained on online material often produce results that sound persuasive and credible but are only partly correct.

For example, ChatGPT recently cited fictitious case law precedents in a federal court; the indolent attorney who used it may face consequences. Putting your faith in an AI chatbot to write business application code raises comparable concerns. While a generic LLM may offer reasonable general suggestions for how to develop an app, or easily churn out a standard registration form or an asteroids-style game, the functional integrity of a business application depends heavily on the data the AI model was trained with.

Thankfully, production-focused AI research was underway long before ChatGPT made its debut. As the creator and pioneer of the mainframe, IBM has been developing deep learning and inference models under the watsonx brand. It has also developed GenAI models that have been trained and tuned for COBOL-to-Java translation.

Its most recent solution, IBM Watsonx Code Assistant for Z, speeds up the modernization of mainframe applications by combining generative AI with rules-based workflows. Development teams can now rely on a practical, enterprise-focused application of automation and GenAI to help with application discovery, auto-refactoring, and COBOL-to-Java conversion.

Three approaches to modernizing mainframe applications

Organizations should treat mainframe programs as first-class citizens of the continuous delivery pipeline so that they are as flexible and adaptable to change as any other distributed or object-oriented application.

IBM Watsonx Code Assistant for Z helps developers integrate COBOL code into the application modernization lifecycle through three phases:

1. Discovery: Before modernizing, developers must determine which areas need attention. The process begins by taking an inventory of every program on the mainframe and creating architecture flow diagrams that include all of the data inputs and outputs for each application. The visual flow model makes it easier for developers and architects to spot dependencies and obvious dead ends within the code base.

2. Refactoring: The main goal of this phase is to break monoliths down into more manageable pieces. IBM Watsonx Code Assistant for Z searches through extensive program code bases to determine the system’s intended business logic. It then refactors the COBOL code into modular business service components by decoupling instructions from data, such as discrete operations.

3. Transformation: This is where an LLM optimized for enterprise COBOL-to-Java translation can work its magic. The GenAI model translates COBOL program components into Java classes, enabling true object orientation and separation of concerns so that many teams can work in an agile, concurrent fashion. The AI then provides look-ahead suggestions, much like a co-pilot feature you might find in other development tools, so developers can concentrate on refining the Java code in an IDE.
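The discovery phase described above can be pictured as a small graph problem. The following is a minimal sketch in Python, not a description of how IBM's tool works: the program names, the call inventory, and the helper functions are all hypothetical and exist only to show how an inventory of programs can reveal dependencies and dead ends.

```python
from collections import deque

# Hypothetical inventory: program name -> programs it calls.
# Every name here is invented for illustration.
CALLS = {
    "BILLING": ["CUSTLOOK", "TAXCALC"],
    "CUSTLOOK": ["DBREAD"],
    "TAXCALC": [],
    "DBREAD": [],
    "OLDRPT": ["DBREAD"],  # no entry point ever reaches OLDRPT
}
ENTRY_POINTS = ["BILLING"]


def reachable(calls, entry_points):
    """Return every program reachable from the entry points (breadth-first)."""
    seen = set(entry_points)
    queue = deque(entry_points)
    while queue:
        program = queue.popleft()
        for callee in calls.get(program, []):
            if callee not in seen:
                seen.add(callee)
                queue.append(callee)
    return seen


def dead_programs(calls, entry_points):
    """Programs in the inventory that no entry point can reach."""
    return sorted(set(calls) - reachable(calls, entry_points))


def callers_of(calls, target):
    """Programs that depend directly on `target`."""
    return sorted(p for p, callees in calls.items() if target in callees)
```

In this toy inventory, `dead_programs(CALLS, ENTRY_POINTS)` flags `OLDRPT` as a dead end, while `callers_of(CALLS, "DBREAD")` lists the programs that would be affected by a change to `DBREAD`.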

The Intellyx perspective

Intellyx tends to be wary of vendor promises regarding AI, since most of them are really automation under a different name.

Mastering the syntax and structures of languages like COBOL and Java appears to be a better fit for GenAI than learning every nuance of the English language and speculating about the veracity of words and paragraphs.

Generative AI models created specifically for businesses, such as IBM Watsonx Code Assistant for Z, may save even the world’s most resource-constrained firms money and effort on modernization. Applications on well-known platforms with thousands of lines of code are ideal training grounds for generative AI models such as IBM Watsonx Code Assistant for Z.

Even in circumstances with limited resources, GenAI can help teams overcome modernization obstacles and enhance the skills of newer mainframe developers, enabling major advances in agility and resilience on top of their most important core business applications.

Read more on Govindhtech.com

#MainframeApps#GenerativeAI#COBOL#ChatGPT#LLM#AImodel#IBMWatsonx#Java#technews#technology#govindhtech

0 notes

Text

Top 10 reasons why your business needs an update from the traditional application to SaaS software.

Software as a Service (SaaS) is a specific type of cloud computing service that gives customers access to cloud-based applications from different vendors. SaaS replaces the standard software installation process of the traditional model, in which a business must set up a server, install the application, and then configure it for continued use.

Software as a Service refers to using the Internet to access web-based cloud applications. Companies can access a range of software programs straight from the cloud by using Software as a Service (SaaS). As a result, they are more agile and economical.

How does software as a service work?

A cloud provider hosts applications and makes them accessible to end-users via the internet using the software as a service (SaaS) model of software delivery. In this model, a third-party cloud provider may be hired by an independent software vendor (ISV) to host the application. Or, with bigger businesses like Microsoft, the software seller may also double as the cloud provider.

What are the current drawbacks of traditional vendor software?

Under traditional vendor licensing, the expected return on a software investment is difficult to guarantee for several reasons:

One-time payment, eternal use:

With a traditional vendor license, it is difficult to replace software once it has been acquired. If the software was purchased to meet an objective and fails to do so, it cannot be changed or returned to the vendor.

Pay for updated versions and releases:

Because today's software may lack many of the features and functions of tomorrow's version, businesses often have to buy the latest version and replace the old one entirely. This raises implementation and operational costs, putting a strain on the budget.

Infrastructure spending:

Some large, business-critical applications need extensive high-end infrastructure, which may require major investment to meet the application's specified operating requirements. This can be one more hurdle for small and midsize firms to overcome.

No provision for adding features or functionalities:

Packaged software is typically defined by a set of standard requirements and offers few or no options for feature enhancement, so even well-publicized software may not be very useful to many potential customers.

Unavoidable upgrades:

A significant irritation with vendor-licensed software is the need for periodic upgrades to keep up with current technology. These upgrades are time-consuming and difficult to complete.

Many questions and concerns come to mind when you decide to purchase help desk software for your business. Software-as-a-Service (SaaS) has grown significantly in importance over time, and more and more people are using it.

Here is a list of 10 reasons why businesses prefer SaaS:

The benefits of SaaS for businesses are given below to help enterprises understand why they should choose them over traditional software.

Assurance of Quality of Service:

The majority of SaaS service providers promise that your applications will be reachable 99.5% of the time. With the majority of well-known software programs, you are not provided a guarantee about how well they will function, but, with SaaS, you are given a guarantee regarding their performance levels.

Highly Cost-effective:

You can use SaaS software entirely through a web browser; no server room, mainframe, or desktop software needs to be installed. The software supplier manages all of this remotely and efficiently, and no up-front expenses are needed. Unlike traditional software, SaaS is typically sold by subscription, which includes updates, maintenance, and some level of customer support.

Upgrading and updating flawlessly:

You won't need to install or download various patches because your SaaS provider will take care of all software upgrades and updates for you. The software on all of your numerous computers and gadgets is constantly updated.

No requirement for physical infrastructure:

Your SaaS vendor manages the complex underlying IT architecture when enabling business applications using SaaS. Users do not need to worry about maintaining any hardware or which operating system version supports which databases. There is no need to purchase new specialist hardware or servers because cloud software runs on your current computers.

Easily Implemented and deployed:

With SaaS solutions, all you need to get started is a web browser and online connectivity. In contrast to on-premise software, SaaS does not need any installation. It can be accessed right away without having to wait.

Increased Rates of Adoption:

Since SaaS solutions are delivered online, there is essentially no learning curve associated with implementing a new solution, because employees are typically accustomed to working online already.

Enable data recovery and backups:

SaaS solutions eliminate the time-consuming task of regularly backing up your data, enabling automated backups without user intervention and ensuring the integrity of your data.

Permanent Client Relationships:

The fact is that SaaS suppliers don't make significant profits in the first year of the customer relationship after accounting for client conversion costs and money they have already spent on infrastructure. Therefore, it is crucial for them that their clients are happy with all of their services and stick around for a while.

Security and Safety:

SaaS offers a high level of security, often better than what most businesses expect from on-premise software. The servers are protected by several firewalls, and a number of additional safeguards are employed, including frequent backups that guarantee data protection.

Working remotely:

SaaS products are accessible from any location in the world. Users can therefore work efficiently from anywhere and retrieve their data at any moment.
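The 99.5% availability figure mentioned under Assurance of Quality of Service is easier to judge once it is converted into permitted downtime. The sketch below shows that arithmetic in Python; the function name and the choice of a 365-day year and a 30-day month are assumptions made for illustration, and real service agreements define their own measurement periods.

```python
def allowed_downtime_hours(availability_percent, period_hours):
    """Hours of downtime permitted by an availability target over a period."""
    return period_hours * (100 - availability_percent) / 100


# Assumed measurement periods; actual agreements may define them differently.
HOURS_PER_YEAR = 365 * 24
HOURS_PER_30_DAY_MONTH = 30 * 24

yearly = allowed_downtime_hours(99.5, HOURS_PER_YEAR)
monthly = allowed_downtime_hours(99.5, HOURS_PER_30_DAY_MONTH)
```

Under these assumptions, a 99.5% target still permits roughly 43.8 hours of downtime per year, or about 3.6 hours in a 30-day month.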

Conclusion:

For all the reasons listed above, more and more people are choosing SaaS, and it has greatly helped them! What are you waiting for then? The crucial elements are already in motion. Investing in SaaS software has several benefits, including cost savings, time savings, improved staff morale, data security, and more. Giving your workers top-notch equipment and putting in place the proper systems in your organization will help you give your business a competitive edge.

0 notes

Text

Mainframe Modernization Services Market 2022 | Growth Strategies, Opportunity, Challenges, Rising Trends and Revenue Analysis 2030

The latest market report published by Credence Research, Inc. is “Global Mainframe Modernization Services Market: Growth, Future Prospects, and Competitive Analysis, 2016 – 2028.” The global mainframe modernization services market has witnessed steady growth in recent years and is expected to continue growing at a CAGR of 3.50% between 2023 and 2030. The market was valued at USD 0.8 billion in 2022 and is expected to reach USD 1.0 billion in 2030.
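The relationship between the quoted growth rate and the two market values can be checked with the standard compound annual growth rate formula. The following Python sketch is illustrative only: it assumes 2022 as the base year and eight compounding years to 2030, which the report does not state explicitly, and it works from the rounded figures quoted above.

```python
def project(value, annual_rate, years):
    """Compound a starting value forward at a fixed annual growth rate."""
    return value * (1 + annual_rate) ** years


def implied_cagr(start_value, end_value, years):
    """Annual growth rate that turns start_value into end_value over `years`."""
    return (end_value / start_value) ** (1 / years) - 1


# Assumption: base year 2022, target year 2030, so eight compounding periods.
YEARS = 2030 - 2022

projected_2030 = project(0.8, 0.035, YEARS)    # USD billions
rate_from_values = implied_cagr(0.8, 1.0, YEARS)
```

Under these assumptions, compounding USD 0.8 billion at 3.5% gives a little over USD 1.05 billion, while the rounded endpoints imply a rate closer to 2.8%; the gap is consistent with the published values being rounded to one decimal place.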

Mainframe modernization services market dynamics refer to the ever-evolving landscape of the industry that caters to updating and enhancing mainframe systems. Mainframes have long been the backbone of large organizations, supporting critical operations and storing vast amounts of data. However, with technological advancements and changing business needs, these legacy systems need to adapt or risk becoming obsolete. The market dynamics surrounding mainframe modernization services are characterized by a constant push for innovation and efficiency. Companies offering these services must keep up with emerging trends such as cloud computing, virtualization, and containerization while ensuring seamless integration with existing infrastructures.

The mainframe modernization services market refers to the industry that provides solutions and services to update, enhance, or replace outdated mainframe computer systems with more contemporary and efficient technologies. Mainframes are large, powerful, and reliable computers that have been used by organizations for decades to handle critical data processing tasks.

As technology evolves, businesses and enterprises often face challenges in maintaining and integrating these legacy mainframes with newer systems, software, and applications. Therefore, mainframe modernization services have become essential to help organizations stay competitive, reduce costs, improve performance, and remain compliant with modern technology standards.

Key services and solutions offered in the mainframe modernization market include:

Application Modernization: Upgrading or migrating legacy mainframe applications to modern platforms, such as cloud-based solutions, distributed systems, or containerized environments. This enables better scalability, agility, and integration with other applications.

Data Migration: Efficiently moving data from mainframe systems to contemporary databases, data lakes, or data warehouses, ensuring data integrity and security throughout the process.

Replatforming: Adapting mainframe applications to run on different platforms or operating systems, optimizing performance and resource utilization.

Browse the 180-page report, Mainframe Modernization Services Market By Service Type (Application Modernization, Cloud Migration, Data Modernization) By Enterprise Size (Small and Medium Enterprises (SMEs), Large Enterprises) - Growth, Future Prospects & Competitive Analysis, 2016 – 2030: https://www.credenceresearch.com/report/mainframe-modernization-services-market

Mainframe Modernization Services Market Growth Factor Worldwide:

Legacy System Challenges: Many organizations rely on mainframe systems that are becoming increasingly difficult to maintain, scale, and integrate with modern technologies. As a result, there is a growing need to modernize these systems to remain competitive and agile in the digital era.

Cost Optimization: Legacy mainframe systems can be expensive to operate and maintain. Modernizing these systems can lead to cost optimization through improved efficiency, reduced hardware requirements, and streamlined operations.

Digital Transformation: Organizations are undergoing digital transformation to leverage emerging technologies, such as cloud computing, big data analytics, and AI/ML. Modernizing mainframe systems allows companies to integrate these technologies seamlessly.

Security and Compliance: Mainframes often contain sensitive and critical data. As cyber threats continue to evolve, modernization helps strengthen security measures and ensure compliance with industry regulations.

Skills Shortage: With many mainframe experts retiring, organizations face a shortage of skilled professionals to manage these systems. Modernization can involve migrating to more commonly used platforms, making it easier to find qualified talent.

Business Agility: Mainframe modernization enables businesses to become more agile and responsive to market changes, customer demands, and new opportunities.

Cloud Integration: Mainframe modernization often involves cloud integration, allowing organizations to take advantage of cloud-based services, scalability, and cost-effectiveness.

Legacy Application Transformation: Modernization efforts can also involve transforming and refactoring legacy applications, making them more modular and easier to maintain.

Competitive Advantage: Organizations that successfully modernize their mainframe systems gain a competitive edge by improving operational efficiency and providing better customer experiences.

Why Buy This Report:

The report provides a qualitative as well as quantitative analysis of the global Mainframe Modernization Services Market by segments, current trends, drivers, restraints, opportunities, challenges, and market dynamics with the historical period from 2016-2020, the base year- 2021, and the projection period 2022-2028.

The report includes information on the competitive landscape, such as how the market's top competitors operate at the global, regional, and country levels.

The report covers the major nations in each region along with their import/export statistics.

The global Mainframe Modernization Services Market report also includes the analysis of the market at a global, regional, and country-level along with key market trends, major players analysis, market growth strategies, and key application areas.

Browse Full Report: https://www.credenceresearch.com/report/mainframe-modernization-services-market

Visit: https://www.credenceresearch.com/

Related Report: https://www.credenceresearch.com/report/contract-lifecycle-management-market

Related Report: https://www.credenceresearch.com/report/transformer-monitoring-systems-market

Browse Our Blog: https://www.linkedin.com/pulse/mainframe-modernization-services-market-grow-steadily-priyanshi-singh

Browse Our Blog: https://tealfeed.com/mainframe-modernization-services-market-analysis-size-c5fnl

About Us -

Credence Research is a viable intelligence and market research platform that provides quantitative B2B research to more than 10,000 clients worldwide and is built on the Give principle. The company is a market research and consulting firm serving governments, non-legislative associations, non-profit organizations, and various organizations worldwide. We help our clients improve their execution in a lasting way and understand their most imperative objectives. For nearly a century, we’ve built a company well-prepared for this task.

Contact Us:

Office No 3 Second Floor, Abhilasha Bhawan, Pinto Park, Gwalior [M.P] 474005 India

0 notes

Text

Transform Banking Transform Core

Transform Banking. Transform Core.

As the world enters the cloud generation, mainframe-based banking platforms are nearing an evolutionary dead end. Slowly but surely, the bank’s entire technology ecosystem – from the programming languages, the operating systems, the hardware, to the way their IT organizations operate – will all be in need of modernization. The situation is worsened by the shrinking talent pool needed to keep the legacy systems alive.

The scale of the problem

Decades of M&A activities are further exacerbating the challenges facing the banking technology infrastructure as disparate, incompatible back-end systems collide. These complex systems can cost a bank billions of dollars to simply maintain. On top of ballooning operating costs, banks are also facing the threat of upheaval: frequent outages due to system upgrades, limited digital banking offerings, slow response and problem resolution, etc.

Focusing on products alone only exacerbates the problem

Many global banks with deep pockets are racing to double down on their investment in technology innovation. The proliferation of fintech companies is providing them with a lifeline and fueling the advancement of banking digitalization worldwide, especially in the payments sector. However, banks are quickly realizing that their hands are tied when implementing many new digital banking solutions.

Their efforts to plug in new products are crippled by legacy mainframe-based, monolithic architecture. Legacy core technology is simply not capable of delivering innovation in today’s digital society. To get to the root of the problem, banks need to get to the “core” of the banking system – the platform that underpins all of the banking applications.

The evolution of core banking systems

The first generation

The “first generation” of cores that emerged in and around the 1970s were built to emulate a model of banking centered around brick-and-mortar branches. These systems were monolithic in style and exclusively designed to run on expensive mainframes.

The second generation

In the 1980s, the emergence of new banking channels such as ATMs and call centers spurred on a “second generation” of banking systems. As consumer banking evolved to be less branch centric, banks invested heavily in retooling the banking systems to achieve high resiliency and handle significant throughput at low latencies. However, the underlying architecture remained largely unchanged.

The third generation

At the turn of the century, rapid adoption of online and mobile banking drove the arrival of “third generation” systems with a key requirement to handle 24/7 banking.

To address the 24/7 requirements, banks changed their systems to run in “stand-in” mode so that payments are buffered while the bank runs its end-of-day process. This approach added considerable complexity to the system, as banks found themselves building a bank within a bank to handle stand-in.
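The stand-in idea can be sketched as a ledger that queues payments while the end-of-day process runs and replays them afterwards. This Python example is a deliberately simplified illustration: the class, its method names, and the use of integer minor units are assumptions, and a real core system would also need ordering guarantees, limits, and failure handling.

```python
from collections import deque


class CoreLedger:
    """Hypothetical ledger that buffers payments during end-of-day processing."""

    def __init__(self):
        self.balances = {}
        self.stand_in = False
        self._buffer = deque()

    def post(self, account, amount):
        """Apply a payment now, or buffer it if stand-in mode is active."""
        if self.stand_in:
            self._buffer.append((account, amount))
            return "buffered"
        self._apply(account, amount)
        return "posted"

    def _apply(self, account, amount):
        self.balances[account] = self.balances.get(account, 0) + amount

    def begin_end_of_day(self):
        """Enter stand-in mode so the end-of-day process sees stable balances."""
        self.stand_in = True

    def finish_end_of_day(self):
        """Leave stand-in mode and replay buffered payments in arrival order."""
        self.stand_in = False
        replayed = 0
        while self._buffer:
            self._apply(*self._buffer.popleft())
            replayed += 1
        return replayed
```

Even in this toy version the buffer is a second, temporary record of customer activity, which hints at why the text describes stand-in as building a bank within a bank.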

To meet the changing consumer and regulatory demands, some banks developed new features and products using modern programming languages, which paved the way for banks to ditch their expensive mainframes. Banks that didn’t want to risk moving away from their mainframes began to adopt a “hollow out the core” strategy, which involves pulling the product engine, along with other key capabilities, out of the core.

The result is that banks can rely on more modern products to solve some of the shortcomings of the legacy core; the downside, however, is increased operational complexity and a greater integration challenge.

Introducing the fourth generation

All of these efforts to fix legacy systems share one common limitation: because the systems are inherently monolithic, they can only be scaled vertically. This fundamentally inhibits the banks’ agility and ability to reduce cost.

Moving to the cloud

The next generation of core banking systems must be built with a cloud-first approach in mind.

Cloud technology enables banks to manage their resources on demand, enhance the accessibility of customer data, while also offering the agility needed to process data in real time. Capacity is effectively limitless in the cloud. Banks that want to take full advantage of cloud infrastructure need to adopt cloud native principles – building a core that is written in the cloud and for the cloud.

Building cloud-native systems requires a microservices architecture approach, with which an application is split into autonomous chunks called microservices that communicate via APIs.
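As a rough illustration of that split, the sketch below models two services where one talks to the other only through a narrow API. It is an in-process stand-in written in Python: in a real deployment the calls would cross a network boundary, and the service names, method names, and account data here are all hypothetical.

```python
from typing import Protocol


class AccountsApi(Protocol):
    """The only surface the payments service is allowed to depend on."""

    def get_balance(self, account_id: str) -> int: ...

    def debit(self, account_id: str, amount: int) -> None: ...


class AccountsService:
    """Owns account data; no other service touches its storage directly."""

    def __init__(self, balances: dict):
        self._balances = dict(balances)

    def get_balance(self, account_id: str) -> int:
        return self._balances[account_id]

    def debit(self, account_id: str, amount: int) -> None:
        self._balances[account_id] -= amount


class PaymentsService:
    """Implements payment rules using only the accounts API."""

    def __init__(self, accounts: AccountsApi):
        self._accounts = accounts

    def pay(self, account_id: str, amount: int) -> bool:
        # Reject the payment if funds are insufficient.
        if self._accounts.get_balance(account_id) < amount:
            return False
        self._accounts.debit(account_id, amount)
        return True
```

Because `PaymentsService` depends only on the `AccountsApi` shape, either side could be rewritten, redeployed, or scaled on its own as long as that contract holds.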

Real-time access to data

Core systems built with streaming APIs offer banks the ability to process data in real time using modern AI and analytics technologies, enabling them to respond to both customer and regulatory demands effectively and efficiently.

Gaining control of the product roadmap

Banks gain full ownership of their product roadmaps by separating the financial product layer from the platform layer within the core. They can update products and add new products without having to wait for changes to be made by a fintech, gaining tremendous flexibility and agility as they respond to fast evolving customer and regulatory demands.
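One way to picture the separation of product and platform layers is to treat products as data that a generic engine interprets. The Python sketch below is an assumption-laden illustration, not a description of any vendor's core: the product names, rates, and the simple interest rule are invented, and amounts are held in integer cents with rates in basis points.

```python
# Product layer: plain data a bank could change without touching platform code.
# Product names and rates are invented for illustration.
PRODUCTS = {
    "basic-savings": {"annual_rate_bps": 300},
    "premium-savings": {"annual_rate_bps": 450},
}


def monthly_interest_cents(balance_cents, product_id, products=PRODUCTS):
    """Platform layer: a generic engine that interprets any product definition."""
    rate_bps = products[product_id]["annual_rate_bps"]
    # 1 basis point = 0.01%; integer math avoids floating-point rounding.
    return balance_cents * rate_bps // 10_000 // 12


# Launching a new product is a data change only; the engine is untouched.
extended = dict(PRODUCTS)
extended["youth-savings"] = {"annual_rate_bps": 600}
```

With this shape, adding `youth-savings` requires no change to `monthly_interest_cents`, which is the kind of roadmap independence the paragraph above describes.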


Text

Cloud Computing and its Evolution in the Digital Era

Introduction

Cloud computing has revolutionized the way businesses and individuals store, access, and process data. It offers a flexible and scalable infrastructure that allows users to leverage powerful computing resources without the need for extensive on-premises hardware and infrastructure. In this article, we will explore the concept of cloud computing, its evolution over time, and its impact on the digital era.

Understanding Cloud Computing

Definition of Cloud Computing: Cloud computing refers to the delivery of computing resources, including servers, storage, databases, software, and networking, over the internet. Users can access these resources on-demand and pay only for what they use, making it a cost-effective and scalable solution for various applications.

Deployment Models: Cloud computing offers different deployment models, including public, private, and hybrid clouds. Public clouds are owned and operated by third-party providers, private clouds are dedicated to a single organization, and hybrid clouds combine both public and private cloud infrastructure.

Service Models: Cloud computing also offers various service models, including Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS). IaaS provides virtualized computing resources, PaaS offers a platform for developing and deploying applications, and SaaS delivers software applications over the internet.

Evolution of Cloud Computing

Early Development: The concept of cloud computing dates back to the 1950s when mainframe computers were shared among multiple users. However, it wasn’t until the late 1990s and early 2000s that cloud computing started gaining momentum with the emergence of internet-based services and virtualization technologies.

Advancements in Virtualization: Virtualization played a crucial role in the evolution of cloud computing. It allowed for the creation of virtual machines (VMs), enabling the consolidation of multiple servers onto a single physical machine. This led to improved resource utilization, cost savings, and the ability to scale computing resources rapidly.

Expansion of Cloud Services: As internet connectivity and bandwidth improved, cloud service providers began offering a wide range of services, including storage, computing power, databases, and software applications. This expansion enabled businesses and individuals to leverage the cloud for diverse purposes, such as data storage, application development, and collaboration.

Emergence of Big Data and Analytics: The proliferation of cloud computing coincided with the rise of big data and analytics. Cloud platforms provided the scalability and processing power required to handle massive volumes of data and perform complex data analytics. This facilitated data-driven decision-making and advanced insights for businesses across various industries.

Internet of Things (IoT) Integration: The integration of cloud computing with the Internet of Things (IoT) further accelerated its evolution. Cloud platforms became instrumental in managing and analyzing the vast amounts of data generated by IoT devices. This allowed for real-time monitoring, predictive analytics, and the development of innovative IoT applications.

Impact of Cloud Computing in the Digital Era

Scalability and Flexibility: Cloud computing offers unmatched scalability, allowing businesses to scale their resources up or down based on demand. This flexibility enables organizations to quickly adapt to changing market conditions, handle peak workloads, and launch new services without significant infrastructure investments.

Cost Savings: Cloud computing reduces or removes the need for upfront hardware and infrastructure investments. Businesses can leverage pay-as-you-go pricing models, reducing capital expenses and optimizing operational costs. Additionally, cloud providers take on much of the maintenance, upgrade, and security work for the underlying infrastructure, further reducing IT-related expenses.

Collaboration and Remote Work: Cloud-based tools and applications have greatly facilitated collaboration and remote work. With cloud-based productivity suites and file-sharing platforms, teams can work together on projects regardless of their geographical location. This has become particularly important in the digital era, where remote work and distributed teams have become more prevalent.

Data Security and Disaster Recovery: Cloud service providers invest heavily in security measures to protect data stored in the cloud. They employ encryption, access controls, and regular backups to ensure data integrity and confidentiality. Additionally, cloud-based disaster recovery solutions offer businesses peace of mind by providing reliable backup and restore capabilities in case of data loss or system failures.

Innovation and Time-to-Market: Cloud computing has significantly reduced the barriers to entry for startups and small businesses. With cloud platforms, entrepreneurs can quickly launch and scale their services without the need for extensive IT infrastructure. This has fostered innovation, accelerated time-to-market, and leveled the playing field for businesses of all sizes.

Global Accessibility: Cloud computing enables global accessibility to digital assets. Users can access their data and applications from anywhere with an internet connection, allowing for seamless collaboration and remote access. This global accessibility has transformed the way individuals and organizations operate, breaking down geographical barriers and enabling global connectivity.

Environmental Impact: Cloud computing has the potential to have a positive environmental impact. By consolidating computing resources and optimizing energy consumption, cloud service providers can achieve higher energy efficiency compared to traditional on-premises data centers. This leads to a reduced carbon footprint and contributes to sustainable practices in the digital era.
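The pay-as-you-go point under Cost Savings above can be made concrete with a little arithmetic. The sketch below compares a one-off hardware purchase with usage-based billing over a year. All figures (hardware price, upkeep, hourly rate, usage pattern) are invented for illustration and do not reflect any real provider's pricing.

```python
from typing import Sequence


def upfront_cost(hardware_price: float, monthly_upkeep: float, months: int) -> float:
    """Total cost of buying hardware up front and maintaining it."""
    return hardware_price + monthly_upkeep * months


def pay_as_you_go_cost(hourly_rate: float, hours_per_month: Sequence[float]) -> float:
    """Total cost when billed only for the hours actually used each month."""
    return hourly_rate * sum(hours_per_month)


# Hypothetical workload: light use for ten months, two peak months running
# around the clock (720 hours each).
usage = [200] * 10 + [720] * 2

owned = upfront_cost(hardware_price=12000, monthly_upkeep=100, months=12)
rented = pay_as_you_go_cost(hourly_rate=0.5, hours_per_month=usage)

print(f"Own the hardware: {owned:.2f}")
print(f"Pay as you go:    {rented:.2f}")
```

With these made-up numbers the usage-based option is far cheaper because the hardware would sit idle most of the year. A workload that runs at full load all the time can tip the comparison the other way, so the inputs matter more than the formula.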

Conclusion

Cloud computing has transformed the way we store, access, and process data in the digital era. With its scalability, cost savings, collaboration capabilities, data security, and global accessibility, cloud computing has become an integral part of the digital strategies of businesses and individuals alike. As technology continues to evolve, cloud computing will play a vital role in enabling innovation, driving efficiency, and facilitating digital transformation across various industries.


Text

Mainframe-as-a-Service – The Keystone for Bridging Traditional Computing and Modern Solutions

The integration of traditional computing systems with modern IT solutions is not merely a strategic advantage but a necessity in the evolving digital age. Mainframe-as-a-Service (MFaaS) emerges as a critical solution, enabling businesses to preserve the robust capabilities of legacy mainframe systems while capitalizing on the flexibility and scalability of cloud solutions. This integration is particularly crucial for industries rooted in high-volume transactions and sensitive data management, such as finance, healthcare, and government sectors.

Understanding the Need for Mainframe Modernization

Mainframes have long been celebrated for their high reliability, superior processing power, and robust security features. These systems are vital in managing the enormous volumes of transactions critical to daily business operations. However, as the digital landscape continuously evolves, the traditional mainframe setup faces challenges such as high maintenance costs, scarcity of skilled professionals, and difficulties in integrating with new-age technologies. Mainframe modernization thus focuses not on replacing, but on thoroughly reinventing these systems. MFaaS offers a pathway to revitalizing these legacy systems by integrating them with cloud capabilities. This collaboration not only addresses the fundamental limitations of traditional mainframes but also aligns them with modern business needs, driving more significant innovation and agility within enterprises.

How MFaaS Facilitates Integration of Traditional and Modern IT Solutions

Scalability and Flexibility: Traditional mainframes are often seen as inflexible, especially when it comes to scaling operations. MFaaS introduces a scalable model where resources are available on-demand, allowing businesses to manage workloads efficiently without the need for extensive physical infrastructure upgrades.

Cost Management: Transitioning to MFaaS converts large fixed costs into variable costs that are easier to manage. This model provides a pay-as-you-go approach, which is particularly advantageous for businesses looking to optimize expenses while still benefiting from the robustness of mainframe capabilities.

Enhanced Integration Capabilities: One of the significant hurdles with traditional mainframes is their integration with modern applications and data platforms. MFaaS providers typically offer tools and services that facilitate seamless integration with cloud services, APIs, and modern databases, bridging the gap between old and new technology environments.

Security and Compliance: MFaaS doesn’t compromise on the legendary security of mainframes. Instead, it enhances it by leveraging the advanced security protocols of cloud services. Additionally, compliance becomes more manageable as service providers ensure that the systems are up-to-date with the latest regulatory requirements.

Why Should Businesses Invest in MFaaS?

Meeting Modern IT Demands: As businesses face increasing pressure to modernize their IT infrastructures, MFaaS provides a less disruptive and more efficient pathway compared to complete system overhauls. This approach allows organizations to continue leveraging their existing mainframe investments while adopting new technologies that drive business growth.

Business Agility: In today's competitive market, agility is key. MFaaS enables organizations to quickly adapt to market changes or operational demands without the lead times typically associated with traditional mainframe reconfiguration or scaling.

Innovation Through Integration: By facilitating easier integration with emerging technologies like AI, machine learning, and big data analytics, MFaaS opens new avenues for innovation. This capability allows enterprises to unlock valuable insights from their existing data while improving operational efficiency.

Conclusion: Investing in the Future of Integrated IT Solutions

The integration of traditional mainframe systems with modern cloud solutions via MFaaS is not just a temporary trend but a forward-looking investment into the future of business technology. This approach provides enterprises with a strategic advantage by enhancing the strengths of mainframes with the flexibility and scalability of the cloud.

Investing in MFaaS means committing to a technology strategy that balances legacy optimization with modernization. It offers a pragmatic path forward that maximizes return on existing investments while positioning the business for future growth and innovation. By choosing MFaaS, companies are not just maintaining their competitive edge; they are sharpening it, ensuring that they are well-equipped to navigate the complexities of the modern digital economy.


Text

7 Power Laws of the Technological Singularity

When people talk about the technological singularity, they usually do so exclusively in the context of Moore’s Law. But there are several Moore’s Law-like laws at work in the world, and each of them is equally baffling. I’m referring to this list of trends as “power laws” because of their incredible rate of growth and because they independently work as pistons driving the engine of the singularity. A few things to note about these power laws. Firstly, they are just observations. There are no known deeper physical principles in the universe that would lead us to believe that they must hold true. Secondly, we’ve observed these trends long enough to warrant their recognition as power laws, and there are no signs of their stagnating. We’ll start with the most famous and well-known power law and work our way through the others.

1. Moore’s Law

Moore’s Law states that the number of transistors on a chip doubles about every two years, while the cost per transistor roughly halves. This is a double-edged sword. It means that the next model computer will be way faster than the previous one, but it also means that the value of your existing computer is dropping rapidly. The end of Moore’s Law has been proclaimed for a long time, but so far its progression has continued.
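To get a feel for what doubling every two years implies, here is a small sketch. The function name and the starting count are made up for illustration, and the two-year doubling period is the rule-of-thumb figure quoted above rather than a measured value.

```python
def projected_transistors(initial_count: float, years: float,
                          doubling_period_years: float = 2.0) -> float:
    """Project a transistor count assuming one doubling per period."""
    return initial_count * 2 ** (years / doubling_period_years)


# Starting from a hypothetical chip with 1,000 transistors:
for years in (2, 10, 20):
    print(years, projected_transistors(1000, years))
```

Ten years is five doublings, a 32-fold increase; twenty years is ten doublings, a 1,024-fold increase. That compounding is why even a modest-sounding doubling period produces such dramatic long-run growth.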

As we reach the physical limits of transistor sizes, entirely new hardware architectures are being developed to sustain the progression. Approaches such as 3D chips and specialized chips, along with newer technologies like photonics, spintronics, and neuromorphic chips, are in development and could help this law continue.

“Regular boosts to computing performance that used to come from Moore’s Law will continue, and will instead stem from changes to how chips are designed.” — Mike Muller, CTO at ARM