#MySQL DBA support


MySQL Database Services | MySQL DBA Support | Data Patrol Technologies Pvt. Ltd., India

Data Patrol's reliable MySQL DBA support services are tailored to your specific needs, allowing you to focus on your business goals. Streamline your data management with our 24/7 MySQL database support services. You benefit from our team's collective knowledge to monitor and manage your database environment and to make full use of MySQL's features.

#Mysql Database Services#Mysql Database support Services#MySQL DBA support#Mysql Database Services in pune


Ensuring Seamless and Reliable Database Performance with MySQL DBA Support

In today's fast-paced digital landscape, businesses rely heavily on robust database management to ensure seamless operations. At Ralantech, we specialize in providing top-notch MySQL Database Administrator (DBA) support services, catering to businesses of all sizes. Our team of certified MySQL DBAs brings years of experience in handling complex database environments. We provide tailored solutions to optimize database performance, ensure high availability, and enhance security.


Amazon RDS Performance Insights | Monitor and Optimize Database Performance

Amazon RDS Performance Insights is an advanced monitoring tool that helps you analyze and optimize your database workload in Amazon RDS and Amazon Aurora. It provides real-time insights into database performance, making it easier to identify bottlenecks and improve efficiency without deep database expertise.

Key Features of Amazon RDS Performance Insights:

✅ Automated Performance Monitoring – Continuously collects and visualizes performance data to help you monitor database load.

✅ SQL Query Analysis – Identifies slow-running queries, so you can optimize them for better database efficiency.

✅ Database Load Metrics – Displays a simple Database Load (DB Load) graph, showing the active sessions consuming resources.

✅ Multi-Engine Support – Compatible with MySQL, PostgreSQL, SQL Server, MariaDB, and Amazon Aurora.

✅ Retention & Historical Analysis – Stores performance data for up to two years, allowing trend analysis and long-term optimization.

✅ Integration with AWS Services – Works seamlessly with Amazon CloudWatch, AWS Lambda, and other AWS monitoring tools.
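To make the DB Load metric concrete: Performance Insights expresses load as average active sessions, that is, the number of sessions found active at each sampling instant, averaged over the period. The sketch below reproduces that arithmetic on made-up data. The sample values, the wait-state labels, and the `VCPUS` figure are all hypothetical, and the function is an illustration of the calculation rather than anything the service exposes.

```python
from collections import Counter


def db_load(samples):
    """Return (average active sessions, per-state breakdown).

    `samples` holds one entry per sampling instant; each entry lists the
    state of every session that was active at that instant.
    """
    if not samples:
        return 0.0, {}
    totals = Counter()
    for active_sessions in samples:
        totals.update(active_sessions)
    n = len(samples)
    average = sum(totals.values()) / n
    breakdown = {state: count / n for state, count in totals.items()}
    return average, breakdown


# Hypothetical data: four one-second samples from a 2-vCPU instance.
samples = [
    ["CPU", "CPU", "io/table"],
    ["CPU", "lock/row"],
    ["CPU", "CPU", "CPU", "io/table"],
    ["CPU"],
]
VCPUS = 2

aas, breakdown = db_load(samples)
print(f"DB load: {aas:.2f} average active sessions")
for state, load in sorted(breakdown.items(), key=lambda item: -item[1]):
    print(f"  {state}: {load:.2f}")
if aas > VCPUS:
    print("Load exceeds the vCPU count, so some sessions are probably waiting")
```

Comparing the average against the number of vCPUs is the usual first reading of the graph: sustained load above that line suggests the instance is saturated or that sessions are blocked on I/O or locks.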

How Amazon RDS Performance Insights Helps You:

🔹 Troubleshoot Performance Issues – Quickly diagnose and fix slow queries, high CPU usage, or locked transactions.

🔹 Optimize Database Scaling – Understand workload trends to scale your database efficiently.

🔹 Enhance Application Performance – Ensure your applications run smoothly by reducing database slowdowns.

🔹 Improve Cost Efficiency – Optimize resource utilization to prevent over-provisioning and reduce costs.

How to Enable Amazon RDS Performance Insights:

1️⃣ Navigate to the AWS Management Console.

2️⃣ Select Amazon RDS and choose your database instance.

3️⃣ Click Modify, then enable Performance Insights under Monitoring.

4️⃣ Choose the retention period (7 days by default; up to 2 years with paid retention).

5️⃣ Save the changes and start analyzing real-time database performance!
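The console steps above can also be scripted. The following is a minimal sketch, assuming the AWS SDK for Python (`boto3`) is installed and credentials are already configured; the instance identifier `my-mysql-instance` is a placeholder. The set of accepted retention values (7, a multiple of 31 for 1 to 23 months, or 731) reflects my reading of the RDS API reference and should be checked against the current documentation.

```python
def performance_insights_settings(instance_id, retention_days=7):
    """Build keyword arguments for the RDS ModifyDBInstance call."""
    # Assumed valid values: 7 days, N * 31 days for N = 1..23, or 731 days.
    valid = {7, 731} | {31 * months for months in range(1, 24)}
    if retention_days not in valid:
        raise ValueError(f"unsupported retention period: {retention_days}")
    return {
        "DBInstanceIdentifier": instance_id,
        "EnablePerformanceInsights": True,
        "PerformanceInsightsRetentionPeriod": retention_days,
        "ApplyImmediately": True,
    }


def enable_performance_insights(instance_id, retention_days=7):
    """Apply the settings to a live instance (requires AWS credentials)."""
    import boto3  # imported lazily so the helper above works without the SDK

    rds = boto3.client("rds")
    return rds.modify_db_instance(
        **performance_insights_settings(instance_id, retention_days)
    )


# "my-mysql-instance" is a hypothetical identifier used for illustration.
settings = performance_insights_settings("my-mysql-instance")
print(settings)
```

Only `performance_insights_settings` runs here, so nothing is changed in any AWS account until `enable_performance_insights` is called explicitly.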

Who Should Use Amazon RDS Performance Insights?

🔹 Database Administrators (DBAs) – To manage workload distribution and optimize database queries.

🔹 DevOps Engineers – To ensure smooth database operations for applications running on AWS.

🔹 Developers – To analyze slow queries and improve app performance.

🔹 Cloud Architects – To monitor resource utilization and plan database scaling effectively.

Amazon RDS Performance Insights simplifies database monitoring, making it easy to detect issues and optimize workloads for peak efficiency. Start leveraging it today to improve the performance and scalability of your AWS database infrastructure! 🚀


#youtube#AmazonRDS RDSPerformanceInsights DatabaseOptimization AWSDevOps ClouDolus CloudComputing PerformanceMonitoring SQLPerformance CloudDatabase#amazon rds database S3 aws devops amazonwebservices free awscourse awstutorial devops awstraining cloudolus naimhossenpro ssl storage cloudc


🚀 Free Live Webinar on #DataScrambling

Registration Link: https://lnkd.in/geZv7Bkr

Are you ready to revolutionize your data security strategy?

Join us for an exclusive webinar where we'll showcase Clonetab Advanced-Data Scrambling (CT-ADS) — the game-changing solution for managing sensitive data across Oracle e-Business Suite, SAP, and major #databases like #OracleDatabase, #MySQL, and #PostgreSQL.

💡 What You’ll Learn:

🔹 What is CT-ADS and how it supports compliance with tailored algorithms.

🔹 The unparalleled speed and efficiency of scrambling millions of rows in minutes.

🔹 Simplified and secure operations designed to safeguard your sensitive data.

📽️ Live Demo: Watch CT-ADS scramble 1 million rows in under 30 minutes, showcasing its unmatched speed and precision.

🗓️ When:

📅 Saturday, December 13, 2024

🕐 11:00 AM - 12:00 PM (PST)

🎙️ Meet Our Speakers:

Venkat Meka: CEO, Clonetab Inc. | ERP & DBA automation leader with over 20 years of expertise.

Baji Munnisa Shaik: Senior Development Manager, Clonetab Inc. | Innovative Java solutions expert.

📌 Reserve Your Spot Today: https://lnkd.in/geZv7Bkr

Don't miss this opportunity to see CT-ADS in action and discover how it can transform your #DataSecurity and compliance.

#Webinar#CTADS#Clonetab#ERP#Oracle#SAP#OracleEBS#OracleEBusinessSuite#DatabaseAdminstrator#DBA#DataMasking#EBS


Mastering Database Administration: Your Path to Expert DB Management

In the age of data-driven businesses, managing and securing databases has never been more crucial. A database administrator (DBA) is responsible for ensuring that databases are well-structured, secure, and perform optimally. Whether you're dealing with a small-scale application or a large enterprise system, the role of a database administrator is key to maintaining data integrity, availability, and security.

If you're looking to build a career in database administration or enhance your existing skills, Jazinfotech’s Database Administration course offers comprehensive training that equips you with the knowledge and hands-on experience to manage databases efficiently and effectively.

In this blog, we’ll explore what database administration entails, why it's an essential skill in today's tech industry, and how Jazinfotech’s course can help you become an expert in managing and maintaining databases on various platforms.

1. What is Database Administration (DBA)?

Database Administration refers to the practice of managing, configuring, securing, and maintaining databases to ensure their optimal performance. Database administrators are responsible for the overall health of the database environment, including aspects such as:

Data Security: Ensuring data is protected from unauthorized access and data breaches.

Database Performance: Monitoring and optimizing the performance of database systems to ensure fast and efficient data retrieval.

Backup and Recovery: Implementing robust backup strategies and ensuring databases can be restored in case of failures.

High Availability: Ensuring that databases are always available and accessible, even in the event of system failures.

Data Integrity: Ensuring that data remains consistent, accurate, and reliable across all operations.

Database administrators work with various types of databases (SQL, NoSQL, cloud databases, etc.), and they often specialize in specific database management systems (DBMS) such as MySQL, PostgreSQL, Oracle, Microsoft SQL Server, and MongoDB.

2. Why is Database Administration Important?

Database administration is a critical aspect of managing the infrastructure of modern organizations. Here are some reasons why database administration is vital:

a. Ensures Data Security and Compliance

In today’s world, where data breaches and cyber threats are prevalent, ensuring that your databases are secure is essential. A skilled DBA implements robust security measures such as encryption, access control, and monitoring to safeguard sensitive information. Moreover, DBAs are responsible for ensuring that databases comply with various industry regulations and data privacy laws.

b. Optimizes Performance and Scalability

As organizations grow, so does the volume of data. A good DBA ensures that databases are scalable, can handle large data loads, and perform efficiently even during peak usage. Performance optimization techniques like indexing, query optimization, and database tuning are essential to maintaining smooth database operations.

c. Prevents Data Loss

Data is often the most valuable asset for businesses. DBAs implement comprehensive backup and disaster recovery strategies to prevent data loss due to system crashes, human error, or cyber-attacks. Regular backups and recovery drills ensure that data can be restored quickly and accurately.

d. Ensures High Availability

Downtime can have significant business impacts, including loss of revenue, user dissatisfaction, and brand damage. DBAs design high-availability solutions such as replication, clustering, and failover mechanisms to ensure that the database is always accessible, even during maintenance or in case of failures.

e. Supports Database Innovation

With the evolution of cloud platforms, machine learning, and big data technologies, DBAs are also involved in helping organizations adopt new database technologies. They assist with migration to the cloud, implement data warehousing solutions, and work on database automation to support agile development practices.

3. Jazinfotech’s Database Administration Course: What You’ll Learn

At Jazinfotech, our Database Administration (DBA) course is designed to give you a thorough understanding of the core concepts and techniques needed to become an expert in database management. Our course covers various DBMS technologies, including SQL and NoSQL databases, and teaches you the necessary skills to manage databases effectively and efficiently.

Here’s a breakdown of the core topics you’ll cover in Jazinfotech’s DBA course:

a. Introduction to Database Management Systems

Understanding the role of DBMS in modern IT environments.

Types of databases: Relational, NoSQL, NewSQL, etc.

Key database concepts like tables, schemas, queries, and relationships.

Overview of popular DBMS technologies: MySQL, Oracle, SQL Server, PostgreSQL, MongoDB, and more.

b. SQL and Query Optimization

Mastering SQL queries to interact with relational databases.

Writing complex SQL queries: Joins, subqueries, aggregations, etc.

Optimizing SQL queries for performance: Indexing, query execution plans, and normalization.

Data integrity and constraints: Primary keys, foreign keys, and unique constraints.
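As a small illustration of the constraints listed above, the sketch below declares a primary key, a unique constraint, and a foreign key, then shows the database rejecting rows that violate them. It uses Python's built-in `sqlite3` module so that it runs without a server; this is a stand-in chosen for convenience, and the table and column names are invented for the example. The same `CREATE TABLE` ideas carry over to MySQL and other relational systems with minor syntax differences.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite only enforces foreign keys when this pragma is switched on.
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
    CREATE TABLE customers (
        id    INTEGER PRIMARY KEY,
        email TEXT NOT NULL UNIQUE
    );
    CREATE TABLE orders (
        id          INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(id),
        total       REAL NOT NULL
    );
    INSERT INTO customers (id, email) VALUES (1, 'a@example.com');
""")


def try_insert(sql, params):
    """Run one insert and report whether a constraint rejected it."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(sql, params)
        return "ok"
    except sqlite3.IntegrityError as exc:
        return f"rejected: {exc}"


results = [
    # Valid: customer 1 exists.
    try_insert("INSERT INTO orders (customer_id, total) VALUES (?, ?)", (1, 19.99)),
    # Violates UNIQUE: the email is already taken.
    try_insert("INSERT INTO customers (email) VALUES (?)", ("a@example.com",)),
    # Violates the foreign key: there is no customer 99.
    try_insert("INSERT INTO orders (customer_id, total) VALUES (?, ?)", (99, 5.00)),
]
for outcome in results:
    print(outcome)
```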

c. Database Security and User Management

Implementing user authentication and access control.

Configuring database roles and permissions to ensure secure access.

Encryption techniques for securing sensitive data.

Auditing database activity and monitoring for unauthorized access.

d. Backup, Recovery, and Disaster Recovery

Designing a robust backup strategy (full, incremental, differential backups).

Automating backup processes to ensure regular and secure backups.

Recovering data from backups in the event of system failure or data corruption.

Implementing disaster recovery plans for business continuity.
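The difference between full, incremental, and differential backups matters most at restore time, because it determines which files must be applied and in what order. The sketch below works that out for a hypothetical schedule. It assumes the common definitions: a differential holds all changes since the last full backup, and an incremental holds changes since the previous backup of any kind. The `Backup` class and the hour-based timestamps are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Backup:
    taken_at: int  # hours since the start of the schedule
    kind: str      # "full", "differential", or "incremental"


def restore_chain(backups, target):
    """Return the backups to apply, in order, to restore to time `target`."""
    usable = sorted(
        (b for b in backups if b.taken_at <= target),
        key=lambda b: b.taken_at,
    )
    fulls = [b for b in usable if b.kind == "full"]
    if not fulls:
        raise ValueError("no full backup at or before the target time")
    base = fulls[-1]
    chain = [base]
    # The newest differential after the full replaces everything before it.
    diffs = [b for b in usable
             if b.kind == "differential" and b.taken_at > base.taken_at]
    if diffs:
        base = diffs[-1]
        chain.append(base)
    # Incrementals taken after the last base must be replayed one by one.
    chain += [b for b in usable
              if b.kind == "incremental" and b.taken_at > base.taken_at]
    return chain


schedule = [
    Backup(0, "full"),
    Backup(24, "incremental"),
    Backup(48, "incremental"),
    Backup(72, "differential"),
    Backup(96, "incremental"),
    Backup(120, "incremental"),
    Backup(168, "full"),
    Backup(192, "incremental"),
]
chain = restore_chain(schedule, target=130)
print([(b.kind, b.taken_at) for b in chain])
```

In this schedule a restore to hour 130 needs four files, while a schedule made only of incrementals would need six, which is the trade-off a backup strategy has to weigh against storage cost.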

e. Database Performance Tuning

Monitoring and analyzing database performance.

Identifying performance bottlenecks and implementing solutions.

Optimizing queries, indexing, and database configuration.

Using tools like EXPLAIN (for query analysis) and performance_schema to improve DB performance.
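To show what query-plan analysis looks like in practice, here is a minimal sketch that inspects the plan for the same query before and after adding an index. It uses SQLite's `EXPLAIN QUERY PLAN` through Python's built-in `sqlite3` module so it can run anywhere; MySQL's `EXPLAIN` produces a different report, but the workflow of checking the plan, adding an index, and checking again is the same. The table, data, and index name are made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, i * 1.5) for i in range(1000)],
)

QUERY = "SELECT total FROM orders WHERE customer_id = ?"


def plan(sql, params):
    """Return SQLite's query plan for `sql` as a single readable string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[-1] for row in rows)  # last column is the plan text


before = plan(QUERY, (42,))
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = plan(QUERY, (42,))

print("before:", before)  # a full table scan
print("after: ", after)   # a search that uses idx_orders_customer
```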

f. High Availability and Replication

Setting up database replication (master-slave, master-master) to ensure data availability.

Designing high-availability database clusters to prevent downtime.

Load balancing to distribute database requests and reduce the load on individual servers.

Failover mechanisms to automatically switch to backup systems in case of a failure.
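A failover mechanism ultimately has to answer one question: which replica should take over? The sketch below shows one plausible selection rule, picking the healthy replica with the least replication lag and refusing to promote any replica that is too far behind. The `Replica` class, the sample values, and the 30-second threshold are assumptions for illustration; production tools weigh additional factors.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Replica:
    name: str
    healthy: bool
    lag_seconds: float  # how far this replica trails the primary


def choose_failover_target(replicas, max_lag_seconds=30.0) -> Optional[Replica]:
    """Pick the healthy replica with the least lag, or None if none qualify."""
    candidates = [
        r for r in replicas
        if r.healthy and r.lag_seconds <= max_lag_seconds
    ]
    return min(candidates, key=lambda r: r.lag_seconds, default=None)


# Hypothetical cluster state at the moment the primary fails.
replicas = [
    Replica("replica-a", healthy=True, lag_seconds=12.0),
    Replica("replica-b", healthy=False, lag_seconds=0.5),
    Replica("replica-c", healthy=True, lag_seconds=2.0),
]
target = choose_failover_target(replicas)
print(target.name if target else "no safe failover target")
```

Returning `None` rather than promoting a badly lagging replica is a deliberate choice: promoting it would silently discard every transaction it had not yet received.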

g. Cloud Database Administration

Introduction to cloud-based database management systems (DBaaS) like AWS RDS, Azure SQL, and Google Cloud SQL.

Migrating on-premise databases to the cloud.

Managing database instances in the cloud, including scaling and cost management.

Cloud-native database architecture for high scalability and resilience.

h. NoSQL Database Administration

Introduction to NoSQL databases (MongoDB, Cassandra, Redis, etc.).

Managing and scaling NoSQL databases.

Differences between relational and NoSQL data models.

Querying and optimizing performance for NoSQL databases.

i. Database Automation and Scripting

Automating routine database maintenance tasks using scripts.

Scheduling automated backups, cleanup jobs, and index maintenance.

Using Bash, PowerShell, or other scripting languages for database automation.
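As an example of the kind of cleanup job such scripts automate, the sketch below decides which backup files a retention policy would delete. It is written in Python rather than Bash or PowerShell, the file names and dates are invented, and it only computes the list; actually deleting files is left to the caller.

```python
from datetime import datetime, timedelta


def backups_to_delete(backups, now, keep_days=7, keep_minimum=3):
    """Return names of backups older than `keep_days`.

    `backups` maps file name to creation time. The newest `keep_minimum`
    backups are always kept, even if they are older than the cutoff.
    """
    newest_first = sorted(backups, key=backups.get, reverse=True)
    protected = set(newest_first[:keep_minimum])
    cutoff = now - timedelta(days=keep_days)
    return sorted(
        name for name in newest_first
        if name not in protected and backups[name] < cutoff
    )


# Hypothetical backup directory listing.
backups = {
    "db-2024-01-05.sql.gz": datetime(2024, 1, 5),
    "db-2024-01-10.sql.gz": datetime(2024, 1, 10),
    "db-2024-01-15.sql.gz": datetime(2024, 1, 15),
    "db-2024-01-19.sql.gz": datetime(2024, 1, 19),
}
now = datetime(2024, 1, 20)

expired = backups_to_delete(backups, now)
print(expired)
```

The `keep_minimum` guard covers the case where backups have stopped running: an age-only rule would eventually delete every remaining copy.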

4. Why Choose Jazinfotech for Your Database Administration Course?

At Jazinfotech, we provide high-quality, practical training in database administration. Our comprehensive DBA course covers all aspects of database management, from installation and configuration to performance tuning and troubleshooting.

Here’s why you should choose Jazinfotech for your DBA training:

a. Experienced Trainers

Our instructors are seasoned database professionals with years of hands-on experience in managing and optimizing databases for enterprises. They bring real-world knowledge and industry insights to the classroom, ensuring that you learn not just theory, but practical skills.

b. Hands-On Training

Our course offers plenty of hands-on labs and practical exercises, allowing you to apply the concepts learned in real-life scenarios. You will work on projects that simulate actual DBA tasks, including performance tuning, backup and recovery, and database security.

c. Industry-Standard Tools and Technologies

We teach you how to work with the latest database tools and technologies, including both relational and NoSQL databases. Whether you're working with Oracle, SQL Server, MySQL, MongoDB, or cloud-based databases like AWS RDS, you'll gain the skills needed to manage any database environment.

d. Flexible Learning Options

We offer both online and in-person training options, making it easier for you to learn at your own pace and according to your schedule. Whether you prefer classroom-based learning or virtual classes, we have the right solution for you.

e. Career Support and Placement Assistance

At Jazinfotech, we understand the importance of securing a job after completing the course. That’s why we offer career support and placement assistance to help you find your next role as a Database Administrator. We provide resume-building tips, mock interviews, and help you connect with potential employers.

5. Conclusion

Database administration is a critical skill that ensures your organization’s data is secure, accessible, and performant. With the right training and experience, you can become a highly skilled database administrator and take your career to new heights.

Jazinfotech’s Database Administration course provides the comprehensive knowledge, hands-on experience, and industry insights needed to excel in the field of database management. Whether you’re a beginner looking to start your career in database administration or an experienced professional aiming to deepen your skills, our course will help you become a proficient DBA capable of managing complex database environments.

Ready to kickstart your career as a Database Administrator? Enroll in Jazinfotech’s DBA course today and gain the expertise to manage and optimize databases for businesses of all sizes!


Best Database Institutes in Mohali: A Guide to Kickstart Your Career in Data Management

In today’s data-driven world, managing, storing, and retrieving data efficiently has become crucial for businesses. A solid understanding of databases and database management systems (DBMS) is essential for IT professionals, data analysts, developers, and anyone looking to build a career in technology. If you’re based in or around Mohali and want to master databases, it’s important to choose the right institute.

This guide highlights the best database training institutes in Mohali and what sets them apart from the rest.

Why Learn Database Management?

Database management is at the core of any technology infrastructure. Here are a few reasons why learning database systems is beneficial:

Career Growth: Expertise in databases opens doors to various roles like database administrator (DBA), data analyst, SQL developer, and more.

In-Demand Skill: Companies increasingly rely on data to make decisions, and proficient database managers are in high demand across industries.

Work with Big Data: Knowledge of databases is essential for working with big data and cloud computing technologies.

Increased Productivity: Efficient database management streamlines operations and enhances productivity in any organization.

Key Factors to Consider When Choosing a Database Institute

Before selecting an institute for database training, consider the following factors:

Comprehensive Curriculum: The course should cover both relational databases (SQL, MySQL) and NoSQL databases (MongoDB).

Experienced Instructors: Trainers should have real-world industry experience to provide practical insights.

Hands-On Learning: The institute should provide hands-on training with real-world databases and projects.

Certification: Industry-recognized certifications are essential for boosting your resume.

Placement Assistance: Some institutes offer placement support to help students secure jobs after course completion.

Top Institutes for Database Training in Mohali

1. ThinkNEXT Technologies

Why Choose ThinkNEXT Technologies for Database Training? ThinkNEXT Technologies is one of the leading IT training institutes in Mohali, offering a variety of database courses. Their programs cater to both beginners and professionals, providing a strong foundation in both SQL and NoSQL databases.

Course Highlights:

Training in SQL, MySQL, MongoDB, Oracle, and more.

Practical, hands-on experience with live projects.

Certified trainers with years of industry experience.

Certification & Placement: ThinkNEXT offers industry-recognized certification upon course completion. Their strong placement cell assists students in securing jobs at leading tech companies.

2. Excellence Technology

Why Choose Excellence Technology for Database Training? Excellence Technology is well-regarded for its practical approach to IT training. Their database management courses cover everything from basic SQL queries to advanced database administration, making it ideal for aspiring database administrators (DBAs).

Course Highlights:

Comprehensive training in relational databases (MySQL, Oracle) and NoSQL databases (MongoDB).

Real-world projects to gain practical experience.

In-depth focus on database optimization, administration, and security.

Certification & Placement: Upon completion, students receive certification recognized by top employers. Excellence Technology also provides strong job placement support, helping students transition into their careers.

3. Webtech Learning

Why Choose Webtech Learning for Database Training? Webtech Learning offers a robust database management course that focuses on real-world application and hands-on practice. The institute is known for its practical training programs, which are ideal for both beginners and working professionals.

Course Highlights:

Training in SQL, MySQL, PostgreSQL, MongoDB, and Oracle.

Emphasis on database design, query optimization, and data security.

Both classroom and online training options available.

Certification & Placement: After completing the course, students receive certification that is well-recognized in the industry. Webtech Learning offers placement assistance to help students secure roles in database management and data analysis.

4. iClass Mohali

Why Choose iClass Mohali for Database Training? iClass Mohali offers professional training in a range of IT courses, including database management. Their database training programs are designed to give students hands-on experience with different types of databases, making it a great option for those looking to gain practical skills.

Course Highlights:

In-depth coverage of SQL databases like MySQL, PostgreSQL, and Microsoft SQL Server.

Introduction to NoSQL databases such as MongoDB and Cassandra.

Hands-on lab sessions with real-time database management tasks.

Certification & Placement: iClass Mohali offers certification after course completion and provides placement assistance to help students land jobs in database management roles.

5. Netmax Technologies

Why Choose Netmax Technologies for Database Training? Netmax Technologies is a reputable institute in Mohali that offers specialized training in various database systems. Their courses are suitable for anyone looking to gain deep knowledge of database management and data handling techniques.

Course Highlights:

Comprehensive training in database languages like SQL, PL/SQL, and NoSQL databases.

Hands-on projects and real-world case studies to develop practical skills.

Training in database administration, optimization, and security.

Certification & Placement: Netmax Technologies provides a recognized certification after course completion. The institute also offers placement support to help students secure jobs in the IT industry.

Conclusion: Finding the Best Database Training Institute in Mohali

Choosing the right institute for database training is crucial to building a successful career in data management. Institutes like ThinkNEXT Technologies, Excellence Technology, and Webtech Learning offer comprehensive courses that provide both theoretical knowledge and practical experience with databases. Whether you’re looking to master SQL, NoSQL, or database administration, these institutes have the right programs to help you achieve your career goals.

With the increasing demand for data management professionals, having a strong foundation in database systems is a valuable asset. By enrolling in a top-notch training institute in Mohali, you’ll be well-prepared to take on roles such as database administrator, data analyst, or backend developer in leading companies.


Database Courses

Introduction

Databases are essential for storing, organizing, and managing data in today's digital age. A database course equips you with the skills to design, implement, and maintain efficient and reliable databases.

Types of Databases

Relational Databases: Organize data in tables with rows and columns. Examples include MySQL, PostgreSQL, and Oracle.

NoSQL Databases: Store data in a flexible and scalable format. Examples include MongoDB, Cassandra, and Redis.

Key Skills Covered in a Database Course

Database Concepts: Understand database terminology, normalization, and data integrity.

SQL (Structured Query Language): Learn to query, manipulate, and manage data in relational databases.

Database Design: Design efficient database schemas and data models.

Performance Optimization: Optimize database performance through indexing, query tuning, and data partitioning.

Data Modeling: Create data models to represent real-world entities and their relationships.

Database Administration: Manage database security, backups, and recovery.

NoSQL Databases (Optional): Learn about NoSQL databases and their use cases.

Course Structure

A typical database course covers the following modules:

Introduction to Databases: Overview of database concepts and their importance.

Relational Database Design: Learn about database normalization and ER (Entity-Relationship) diagrams.

SQL Fundamentals: Master SQL syntax for querying, inserting, updating, and deleting data.

Database Administration: Understand database security, backup and recovery, and performance tuning.

Advanced SQL: Explore advanced SQL features like subqueries, joins, and window functions.

NoSQL Databases (Optional): Learn about NoSQL databases and their use cases.

Database Performance Optimization: Optimize database queries and indexes for efficient performance.

Case Studies: Apply learned concepts to real-world database scenarios.
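For a feel of what the SQL modules above cover, here is a short sketch that inserts, updates, and deletes rows and then runs a join with an aggregate. It uses Python's built-in `sqlite3` module so no database server is needed; the schema and the employee data are invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE departments (
        id   INTEGER PRIMARY KEY,
        name TEXT NOT NULL
    );
    CREATE TABLE employees (
        id            INTEGER PRIMARY KEY,
        name          TEXT NOT NULL,
        salary        REAL NOT NULL,
        department_id INTEGER REFERENCES departments(id)
    );
""")

# INSERT: load two departments and four employees.
conn.executemany(
    "INSERT INTO departments (id, name) VALUES (?, ?)",
    [(1, "Engineering"), (2, "Support")],
)
conn.executemany(
    "INSERT INTO employees (name, salary, department_id) VALUES (?, ?, ?)",
    [("Asha", 90000, 1), ("Ben", 80000, 1), ("Chen", 50000, 2), ("Dana", 55000, 2)],
)

# UPDATE and DELETE: give one employee a raise and remove another.
conn.execute("UPDATE employees SET salary = salary + 5000 WHERE name = ?", ("Chen",))
conn.execute("DELETE FROM employees WHERE name = ?", ("Dana",))

# SELECT with a join and aggregates: headcount and average salary per department.
rows = conn.execute("""
    SELECT d.name, COUNT(*) AS headcount, AVG(e.salary) AS avg_salary
    FROM employees AS e
    JOIN departments AS d ON d.id = e.department_id
    GROUP BY d.name
    ORDER BY d.name
""").fetchall()

for name, headcount, avg_salary in rows:
    print(f"{name}: {headcount} employees, average salary {avg_salary:.0f}")
```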

Choosing the Right Course

When selecting a database course, consider the following factors:

Database type: Choose a course that focuses on relational databases or NoSQL databases based on your needs.

Course format: Choose between online, in-person, or hybrid formats based on your preferences and learning style.

Instructor expertise: Look for instructors with practical experience in database administration and design.

Hands-on projects: Prioritize courses that offer hands-on projects to gain practical experience.

Community and support: A supportive community of students and instructors can be valuable during your learning journey.

Career Opportunities

A database course can open doors to various career paths, including:

Database Administrator (DBA)

Data Analyst

Data Engineer

Software Developer

Business Intelligence Analyst

Popular Online Platforms for Database Courses

Udemy: Offers a wide range of database courses for all levels.

Coursera: Provides specialized database courses from top universities.

Codecademy: Offers interactive database lessons and projects.

edX: Provides database courses from top universities, including MIT and Harvard.

Pluralsight: Offers comprehensive database courses with video tutorials and hands-on exercises.

Additional Tips

Practice regularly: Consistent practice is key to becoming proficient in database management.

Stay updated: The database landscape is constantly evolving, so it's important to stay up-to-date with the latest technologies and trends.

Build a portfolio: Showcase your database projects and skills on a personal website or portfolio platform.

Network with other database professionals: Attend meetups, conferences, and online communities to connect with other database professionals and learn from their experiences.

By following these steps and continuously learning and practicing, you can become a skilled database professional.


Ensuring Database Excellence: DBA Support Services by Dizbiztek in Malaysia and Indonesia

In the rapidly evolving digital landscape, maintaining a robust and efficient database system is crucial for business success. Dizbiztek, a leading DBA support agency, offers top-tier DBA support services tailored to meet the unique needs of businesses in Malaysia and Indonesia. With our expertise, your database systems will run smoothly, ensuring optimal performance and security.

Why DBA Support Services are Essential

Database management is a complex task that requires specialized knowledge and continuous monitoring. As businesses grow and data volumes increase, the challenges of managing databases become more pronounced. Here’s where DBA support services come into play. These services ensure your database systems are not only up and running but also optimized for performance and security. Whether it’s routine maintenance, emergency troubleshooting, or performance tuning, a reliable DBA support agency like Dizbiztek is essential.

Remote DBA Support: Flexibility and Efficiency

Dizbiztek offers comprehensive remote DBA support services, providing businesses with the flexibility and expertise they need without the overhead costs of maintaining an in-house team. Our remote DBA support ensures that your databases are monitored and managed round the clock, minimizing downtime and maximizing efficiency. This service is particularly beneficial for small and medium-sized enterprises that need expert DBA support but may not have the resources to maintain a full-time DBA team.

Online DBA Service in Malaysia

In Malaysia, Dizbiztek has established itself as a trusted provider of online DBA services. Our team of experienced DBAs is proficient in handling a variety of database management systems, including Oracle, SQL Server, MySQL, and more. We offer a range of services, from installation and configuration to performance tuning and disaster recovery. By leveraging our online DBA service in Malaysia, businesses can ensure their databases are secure, reliable, and optimized for performance.

Remote DBA Support Services in Indonesia

Indonesia's vibrant business landscape demands robust database management solutions. Dizbiztek’s remote DBA support services in Indonesia are designed to meet these demands. Our team provides proactive monitoring, regular maintenance, and quick resolution of any database issues. This ensures that businesses can focus on their core operations while we take care of their database needs. Our remote DBA support services in Indonesia are tailored to meet the unique challenges faced by businesses in this region, providing them with the peace of mind that their data is in expert hands.

Why Choose Dizbiztek?

Expertise and Experience: Our team comprises highly skilled DBAs with extensive experience in managing complex database environments.

Proactive Monitoring: We use advanced monitoring tools to detect and resolve issues before they impact your business.

Customized Solutions: We understand that every business is unique, and we tailor our services to meet your specific needs.

24/7 Support: Our remote DBA support services are available round the clock, ensuring your databases are always monitored and managed.

Conclusion

In today’s data-driven world, effective database management is critical. Dizbiztek’s DBA support services in Malaysia and Indonesia provide businesses with the expertise and reliability they need to manage their databases efficiently. Whether you need remote DBA support or online DBA services, our team is here to ensure your database systems are optimized, secure, and always available. Partner with Dizbiztek and experience the peace of mind that comes with knowing your data is in capable hands.

#dba support services#dba support service in malaysia#dba support services in indonesia#dba service provider


Alibaba Cloud ApsaraDB Private Data Run By Intel TDX

Alibaba Cloud security

Cloud computing and big data have raised concerns about data security and privacy. In response, more countries are strengthening data protection laws, such as the EU’s General Data Protection Regulation (GDPR) and China’s Personal Information Protection Law. Firms must therefore be more careful and compliant in how they process and store user data.

In light of this, confidential database technology has been developed. Confidential databases can address data security problems end to end in a variety of application scenarios, which has led to rapid development and industry recognition. Intel Trust Domain Extensions (Intel TDX) and Alibaba Cloud security defences, along with the Alibaba Cloud ApsaraDB Confidential Database, can effectively defend against security threats from both inside and outside the cloud platform, helping to prevent user data leakage.

Secure Computing with Intel Xeon Scalable Processors

Dependable Security Engines for Hardware-Based TEE

Intel provides two hardware-based security engines to help safeguard data in use and enable confidential computing: Intel Software Guard Extensions (Intel SGX), an application-level isolation technology, and Intel TDX, a virtualization-level isolation technology. Moreover, for a more complete confidential computing solution, Intel TDX can readily extend support to heterogeneous Trusted Execution Environment (TEE) use. With these two integrated security mechanisms, 5th generation Intel Xeon Scalable processors can offer comprehensive confidential computing capabilities. CSPs can use these features to provide IaaS, PaaS, and SaaS applications in a hardware-based TEE without having to change their current applications.

Intel TDX Enables the Confidential Database

Alibaba Cloud ApsaraDB uses confidential database technology to safeguard sensitive user data while keeping queries, transactions, and other operations transparent. Traditional databases, by contrast, rely on tiered protection mechanisms such as TLS (Transport Layer Security), TDE (Transparent Data Encryption), and RLS (Row Level Security) to protect data at each stage of processing.

Confidential Database

This version of the database builds on the Confidential Database (Basic Edition) by utilising TEE technologies such as Intel SGX and Intel TDX to guarantee that all of the database’s services are executed in a trusted environment and are shielded from outside security threats. The guest operating system and the database system components are the only things that fall inside the trust boundary.

Confidential databases (Level 3 and Level 4) allow client-side encryption and ciphertext storage on untrusted servers, yet they still support all database queries, transactions, analytics, and other tasks. Confidential computing keeps administrators (such as DBAs) and other unauthorised individuals from viewing unencrypted data, so that data in the database is usable but not visible.

Alibaba Cloud has formally released the ApsaraDB Confidential Database Basic Edition of PolarDB MySQL and RDS MySQL for Level 3 security. Based on the aforementioned Basic Edition, Alibaba Cloud and Intel collaborated to create the Alibaba Cloud ApsaraDB Confidential Database Hardware Enhanced Edition with Intel TDX, giving consumers a higher level of security (Level 4).

Advantages of ApsaraDB Confidential Database with Intel TDX

Secrecy of Computing Isolation

Intel TDX uses Intel Multi-Key Total Memory Encryption (Intel MK-TME) and Intel Virtual Machine Extensions (Intel VMX) to create a new kind of virtual guest environment called a “TD”. A TD is isolated from other instances, other TDs, and the underlying system software. The TDX Module uses Secure Arbitration Mode (SEAM), an advanced security privilege mode, to enforce these security features.

Outstanding Performance for In-Flight Memory Encryption

Intel TDX uses a memory encryption engine built into the CPU’s integrated memory controller (IMC) to let customers encrypt sensitive data while in flight. This approach avoids the extra overhead associated with conventional confidential databases. When handling user-sensitive data in a cloud database, the database engine can run in an Intel TDX-based TEE, which performs better than conventional data protection techniques while maintaining data confidentiality.

Simple to Utilise for Hyperscale Deployment

The “lift-and-shift” method makes it easier to move intricate database systems to confidential computing. Furthermore, Intel TDX offers comprehensive cloud operating features for hyperscale deployment, including uninterrupted live migration and TCB updates. All of these lower the cost of maintaining and operating confidential databases while improving availability.

Even in the event that database accounts are compromised, important user data will always be returned encrypted thanks to Intel TDX, preventing data leaks. Additionally, Intel TDX fortifies the encryption defence for databases’ runtime memory. The Confidential Database Hardware Enhanced Edition of Alibaba Cloud ApsaraDB family provides an end-to-end secure key distribution mechanism, protecting against numerous security threats from the platform infrastructure layer when combined with Intel TDX and Remote Attestation.

Moreover, TPC-C tests show that ApsaraDB Confidential Databases perform similarly to plaintext databases and support extensive SQL query capabilities. They are also compatible with regular databases. They give applications transparent and seamless client access without the need for code modifications, and they work with ecosystem tools like DTS and DMS to make application migration simple.

With these benefits, Alibaba Cloud ApsaraDB Confidential Database can better meet the security requirements of the following scenarios and offer more robust protection for data in use:

Maintenance Security

In the common scenario where the data owner is the application service provider, the confidential database can keep database service and operations staff from accessing business-sensitive data while the database continues to operate normally.

Data Security Compliance

A confidential database can provide data management and analysis capabilities while preventing application service providers from accessing the private plaintext data in situations where the end users themselves are the owners of certain types of data (e.g., health and financial data). Additionally, in these kinds of situations, application service providers may find it easier to comply with regulations for the handling of sensitive data while using the confidential database.

Safe and dependable multi-party data sharing

In situations involving joint analysis of several data sources, the confidential database can help guarantee that each party’s information is not viewed or obtained by the other parties participating in the multi-party computation.

Cloud tenants with highly demanding security requirements for cloud databases can adopt the ApsaraDB Confidential Database family to achieve extremely strict data protection, thanks to the advantages of Intel TDX and Alibaba Cloud ApsaraDB Confidential Database technology.

An Overview of Function

Data in the Alibaba Cloud ApsaraDB Confidential Database is encrypted for its entire lifecycle. After it leaves a trusted environment, data stays encrypted until it is received by authorised parties. Only at that point is it decrypted, for example within trusted client-side business systems. Direct database connections can only read encrypted data; plaintext cannot be accessed.

The ApsaraDB Confidential Database is compatible with Alibaba Cloud RDS MySQL, PolarDB MySQL, and RDS PG. It has little effect on performance and stability and supports all MySQL syntax.

The application’s end users have access to desensitised or unencrypted data and maintain complete control of the data. On the other hand, insider threat risk is significantly reduced because developers and operators of databases and applications can only work with encrypted data.

The database enables the creation of personalised sensitive data rules, facilitating the encryption of vital data according to particular requirements, including identity card numbers, addresses, and user phone numbers. Alibaba Cloud’s RAM system can control the process of establishing encryption rules. This guards against illegal rule changes and the possible export of plaintext data by ensuring that DBAs and developers follow the principle of least privilege. Users can query the database using a MySQL client after configuring the encryption rules, guaranteeing that non-sensitive data is displayed in plaintext and sensitive data is always provided as ciphertext.
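To make the rule-based behaviour above concrete, here is a minimal Python sketch of what a client might see once encryption rules are in place: non-sensitive columns come back as plaintext and sensitive columns come back as ciphertext. Everything in it is an assumption for illustration only. The column names, the `apply_rules` helper, and the toy HMAC-based keystream are hypothetical and are not Alibaba Cloud's implementation or API. The cipher is deliberately simplistic and must not be used to protect real data.

```python
import base64
import hashlib
import hmac

# Hypothetical rule set: columns that must always leave the database as ciphertext.
SENSITIVE_COLUMNS = {"id_card", "phone", "address"}


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Derive a keystream with HMAC-SHA256 in counter mode (toy construction)."""
    out = b""
    counter = 0
    while len(out) < length:
        block = hmac.new(key, nonce + counter.to_bytes(4, "big"), hashlib.sha256)
        out += block.digest()
        counter += 1
    return out[:length]


def _nonce_for(column: str) -> bytes:
    # Deterministic per-column nonce: fine for a demo, unsafe in practice.
    return hashlib.sha256(column.encode("utf-8")).digest()[:8]


def encrypt_value(key: bytes, column: str, value: str) -> str:
    data = value.encode("utf-8")
    stream = _keystream(key, _nonce_for(column), len(data))
    cipher = bytes(a ^ b for a, b in zip(data, stream))
    return base64.b64encode(cipher).decode("ascii")


def decrypt_value(key: bytes, column: str, token: str) -> str:
    cipher = base64.b64decode(token)
    stream = _keystream(key, _nonce_for(column), len(cipher))
    return bytes(a ^ b for a, b in zip(cipher, stream)).decode("utf-8")


def apply_rules(key: bytes, row: dict) -> dict:
    """Return the row as a direct database connection would see it."""
    return {
        col: encrypt_value(key, col, val) if col in SENSITIVE_COLUMNS else val
        for col, val in row.items()
    }
```

In this sketch only a holder of the key (standing in for the trusted client-side system) can call `decrypt_value` to recover the original phone number or address; anyone reading the row directly sees the ciphertext.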

Read more on Govindhtech.com

#inteltdx#AlibabaCloud#apsaradb#IntelVMX#IntelSGX#cloudcomputing#technologynews#technology#technews#news#govindhtech#intel

Pick Your Perfect Match: Aurora vs RDS - A Guide to AWS Database Solutions

Now that Database-as-a-Service (DBaaS) is in high demand, there are several questions about AWS services that cannot always be answered easily: When should I use Aurora and when should I use RDS MySQL? What are the major differences between Aurora and RDS? What should I consider when deciding which one to choose?

In this blog post, we'll address these questions and give an overview of the two database options, Aurora vs RDS.

Understanding DBaaS

DBaaS cloud services let users access databases without configuring physical hardware or installing software. However, when working out which option is the best fit for an organization, several factors should be taken into account, including performance, operational costs, high availability, capacity planning, management, security, scalability, and monitoring.

There are cases where the workload and operational demands appear to be a perfect match for one solution, yet other factors cause blockers (or at least require special handling).

What we need to compare are the MySQL and Aurora database engines offered through Amazon RDS.

Download our ebook, “Enterprise Guide to Cloud Databases”, to help you make better-informed choices and avoid costly errors when you design and implement your cloud strategy.

What is Amazon Aurora?

Amazon Aurora is a proprietary, cloud-native, fully managed relational database service created by Amazon Web Services (AWS). It is compatible with MySQL and PostgreSQL, and with its automatic backup and replication capabilities it is built to offer the high performance, scalability, and availability that critical applications require.

Aurora Features

High Performance and Scalability

Amazon Aurora has gained widespread praise for its performance and scalability, which makes it a good fit for demanding workloads. It handles read and write operations efficiently, optimizes data access, and reduces contention, which results in high throughput and low latency so that applications run at their best.

Aurora offers a range of scaling options: adding up to 15 read replicas within one database cluster, auto-scaling of read replicas, cross-region read replicas for disaster recovery and better read performance across geographic locations, and storage auto-scaling that handles growing data without continuous monitoring.

Support for MySQL as well as PostgreSQL

Aurora provides seamless compatibility with MySQL and PostgreSQL, which allows users and DBAs to apply their existing database skills and make use of the latest capabilities and improvements.

If you have applications developed using MySQL or PostgreSQL, moving to Aurora is an easy process with minimal code modifications, because it works with the same protocols, tools, and drivers.

Automated Backups and Point-in-Time Recovery

Aurora offers automated backups and point-in-time recovery, which simplify backup management and data protection. Continuous, incremental backups are created automatically and stored in Amazon S3, and retention periods can be set to satisfy compliance requirements.

The point-in-time recovery (PITR) feature enables the restoration of a database to a specific time within the set retention period, making it easier to roll the application back to a specific state or recover from accidental/purposeful data corruption.
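The retention-window idea can be sketched in a few lines of Python. This is only an illustration of the reasoning, assuming a restore target is valid when it falls between "now minus the retention period" and the latest restorable time; the function name and parameters are hypothetical, and the exact earliest and latest restorable times reported by AWS for a given cluster may differ.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def is_restorable(
    target: datetime,
    now: datetime,
    retention_days: int,
    latest_restorable: Optional[datetime] = None,
) -> bool:
    """Return True if `target` lies inside the assumed PITR window."""
    earliest = now - timedelta(days=retention_days)
    # Without an explicit latest restorable time, assume "now".
    latest = latest_restorable if latest_restorable is not None else now
    return earliest <= target <= latest


if __name__ == "__main__":
    now = datetime(2024, 1, 10, 12, 0, tzinfo=timezone.utc)
    print(is_restorable(now - timedelta(days=3), now, retention_days=7))
    print(is_restorable(now - timedelta(days=8), now, retention_days=7))
```

A check like this is useful before requesting a restore, since a target older than the retention period cannot be recovered from automated backups.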

These automated features reduce the data protection workload for DBAs and organizations by simplifying database backup and recovery.

Multi-Availability Zone (AZ) Deployment

Aurora’s multi-Availability Zone (AZ) deployment provides high reliability and fault tolerance by automatically replicating data across multiple Availability Zones, using its distributed storage system to remove single points of failure. Constant synchronization between the replicas and primary storage ensures continuous redundancy. If the primary instance fails, Aurora switches to a replica through automated failover to maintain availability.

What is Amazon RDS?

Amazon Relational Database Service (Amazon RDS) is a cloud-hosted database service that offers diverse database options to pick from, such as Aurora, PostgreSQL, MySQL, MariaDB, Oracle, and Microsoft SQL Server.

RDS Features

Managed Database Service

Amazon RDS is a fully managed database service provided by AWS that offers a simple way to manage and maintain relational databases hosted in the cloud. AWS handles essential administrative tasks such as database setup, configuration, backups, monitoring, and scaling, which makes it simpler for companies to manage complex databases.

By delegating these administrative tasks to AWS, DBAs and developers no longer need to devote time to tedious work such as software installation and hardware provisioning. That gives them time to focus on more business-oriented processes while also reducing expenses.

Multiple Database Engine Options

Amazon RDS supports various database engines, such as MySQL, PostgreSQL, Oracle, and SQL Server, which gives organizations the freedom to select the appropriate engine for their particular needs. With these choices, developers can adapt the database architecture to their applications’ functional requirements, performance expectations, and compliance requirements.

RDS also offers simple ways to migrate existing databases, including importing data from existing backups and using AWS Database Migration Service (DMS) for real-time data migration. This flexibility lets businesses move their databases into the AWS cloud without significant disruption.

Automated Backups and Point-in-Time Recovery

Amazon RDS offers an automated backup feature that provides reliable data protection. It takes regular backups and captures incremental changes since the previous backup without affecting performance. Users can choose the retention period for these backups, which allows recovery of historical data after accidental data loss or corruption. Point-in-time recovery (PITR) lets users restore the database to any point within the retention period. This is useful for reverting to a prior state or repairing damage caused by data corruption or other incidents.

The automated backup and PITR features in RDS help protect against data loss and system failures, while making backup management easier for developers and DBAs.

Scalability and Elasticity

Amazon RDS offers several scalability options that let organizations adjust resources to changing application and workload requirements. Vertical scaling increases compute and memory capacity by upgrading to a larger instance class, which suits heavy processing or traffic. Horizontal scaling means creating read replicas that distribute the workload across instances, improving read scalability for read-heavy applications.

RDS also simplifies scaling in response to workload demand: replicas can be added or removed to spread read requests efficiently and reduce cost during periods of low demand. It also supports auto-scaling of storage and compute resources, adjusting capacity dynamically according to the chosen utilization thresholds to improve performance and reduce cost.

The ability to adjust resources as demand changes lets organizations react quickly to fluctuations without manual intervention, while still optimizing performance and reducing costs.
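Horizontal scaling with read replicas only helps if the application actually sends reads to the replicas. The sketch below shows one simple way to do that on the client side: writes go to the primary endpoint and `SELECT` statements are spread round-robin across replica endpoints. The `ReadWriteRouter` class and the endpoint names are hypothetical, and a real application would also need to account for replication lag and for statements such as `SELECT ... FOR UPDATE`, which this sketch ignores.

```python
from itertools import cycle
from typing import Iterator, Optional, Sequence


class ReadWriteRouter:
    """Send reads to replicas (round-robin) and everything else to the primary."""

    def __init__(self, primary: str, replicas: Sequence[str]) -> None:
        self.primary = primary
        # cycle() over an empty sequence would never yield, so guard against it.
        self._replicas: Optional[Iterator[str]] = cycle(replicas) if replicas else None

    def route(self, sql: str) -> str:
        stripped = sql.strip()
        first_word = stripped.split(None, 1)[0].upper() if stripped else ""
        if first_word == "SELECT" and self._replicas is not None:
            return next(self._replicas)
        return self.primary


if __name__ == "__main__":
    router = ReadWriteRouter("primary-endpoint", ["replica-1", "replica-2"])
    for statement in ("SELECT 1", "SELECT 2", "UPDATE t SET x = 1"):
        print(statement, "->", router.route(statement))
```

Adding a replica then becomes a configuration change: the router picks it up in the rotation and read traffic per instance drops accordingly.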

Examining the similarities between Aurora and RDS

If you compare Amazon Aurora and Amazon RDS, it is clear that both save time on system administration. Both options give you a pre-configured system ready to run your applications. In particular, if you have no dedicated database administrators (DBAs), Amazon RDS offers a great deal of flexibility for operations such as backups and upgrades.

Both Amazon Aurora and Amazon RDS offer continuous updates and patches that are applied by Amazon without downtime. You can set maintenance windows so that automated patching takes place within those time frames. Furthermore, data is continuously backed up to Amazon S3 in real time, protecting your data with no visible effect on performance. This removes the need for complex or scripted backup processes and defined backup windows.

Although these shared features provide significant benefits, it’s important to consider potential issues such as vendor lock-in and the problems that can result from enforced updates and client-side optimizations.

Aurora vs RDS: The key differences

In this section we examine the distinct features and characteristics of Amazon Aurora and Amazon RDS, covering their performance, scalability, pricing, and more.

Amazon Aurora is a relational, closed-source database engine, with all the implications that brings.

RDS MySQL is 5.5, 5.6, and 5.7 compatible and lets you choose among minor versions. Although RDS MySQL supports numerous storage engines with different capabilities, not all of them are designed for crash recovery and data durability. Until recently it was a limitation that Aurora was compatible only with MySQL 5.6, but it is now compatible with both MySQL 5.6 and 5.7.

In most cases, no major application changes are needed for either product. Be aware that some MySQL features, such as the MyISAM storage engine, aren’t available in Amazon Aurora. The migration to RDS is possible with the comprinno program.

For RDS products, shell access to the underlying operating system is disabled, and MySQL user accounts with the “SUPER” privilege aren’t allowed. To configure MySQL parameters or manage users, Amazon RDS provides parameter groups, APIs, and special system procedures. If you are looking to enable Amazon RDS remote access, this article can help you do it.

Considerations regarding performance

For instance, Aurora has to disable the InnoDB change buffer (this is one of the keys to its distributed storage system), so updates to secondary indexes must be write-through. As a result, there is a large performance penalty in workloads with heavy writes that update secondary indexes. This is because MySQL relies on the change buffer to defer and merge secondary index updates. If your application performs frequent updates against tables that have secondary indexes, Aurora performance may be poor. AWS also claims that the query_cache feature is viable on Aurora and does not suffer from scalability issues. Personally, I’ve never had any issues with query_cache, and the feature can greatly improve overall performance.
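A toy model helps show why deferring and merging matters. The Python sketch below compares the number of index page writes when every secondary index update is written through immediately against a simplified buffer that holds pending changes per page and writes each distinct page once when it is flushed. This is only a counting exercise under stated assumptions (page numbers stand in for index pages, and the buffer flushes when it holds `buffer_capacity` distinct pages); it is not how InnoDB or Aurora is implemented.

```python
from typing import Iterable, Set


def write_through_page_writes(updates: Iterable[int]) -> int:
    """Every secondary index update writes its page immediately."""
    return sum(1 for _ in updates)


def buffered_page_writes(updates: Iterable[int], buffer_capacity: int) -> int:
    """Hold pending updates per page; a flush costs one write per distinct page."""
    if buffer_capacity < 1:
        raise ValueError("buffer_capacity must be at least 1")
    pending: Set[int] = set()
    writes = 0
    for page in updates:
        pending.add(page)  # repeated updates to the same page are merged
        if len(pending) >= buffer_capacity:
            writes += len(pending)
            pending.clear()
    # Flush whatever is still buffered at the end.
    return writes + len(pending)


if __name__ == "__main__":
    # Eight updates that keep hitting the same three index pages.
    updates = [1, 2, 1, 1, 2, 3, 3, 1]
    print("write-through:", write_through_page_writes(updates))
    print("buffered (capacity 4):", buffered_page_writes(updates, 4))
```

The more often the same index pages are touched, the larger the gap between the two counts, which is the intuition behind the warning about write-heavy workloads on tables with secondary indexes.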

In any event it is important to be aware that performance varies based on the schema’s design. When deciding to move, performance must be compared against the specific workload of your application. Conducting thorough tests will become the topic of a subsequent blog article.

Capacity Planning

In terms of the underlying storage, another factor to take into account is that Aurora requires no capacity planning. Aurora storage grows automatically from a minimum of 10 GB up to 64 TiB, in 10 GB increments, without affecting database performance. Table size is limited only by the size of the Aurora cluster volume, which has a maximum of 64 tebibytes (TiB). Therefore, the maximum size of a table in an Aurora database is 64 TiB. For RDS MySQL, the maximum provisioned storage limit constrains a table to a maximum size of 16 TB when using InnoDB file-per-table tablespaces.
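The growth rule can be written out as a small calculation. The sketch below rounds a data size up to the next 10 GB step, starting at the 10 GB minimum and capping at 64 TiB. To keep the arithmetic simple it assumes binary units throughout (10 GiB steps, 64 TiB = 65,536 GiB); the function name is hypothetical and the rounding behaviour is an illustration, not a statement of how Aurora bills or allocates storage.

```python
import math

INCREMENT_GIB = 10        # growth step stated in the text (assumed GiB here)
MIN_GIB = 10              # minimum volume size
MAX_GIB = 64 * 1024       # 64 TiB expressed in GiB


def aurora_allocated_gib(data_gib: float) -> int:
    """Round a data size up to the next increment, within the min and max."""
    if data_gib < 0:
        raise ValueError("data size cannot be negative")
    if data_gib > MAX_GIB:
        raise ValueError("data size exceeds the 64 TiB cluster volume limit")
    steps = max(1, math.ceil(data_gib / INCREMENT_GIB))
    return min(steps * INCREMENT_GIB, MAX_GIB)


if __name__ == "__main__":
    for size in (0, 10, 10.5, 1234):
        print(size, "GiB of data ->", aurora_allocated_gib(size), "GiB allocated")
```

The same function also makes the table size limit concrete: any single table larger than `MAX_GIB` raises an error, mirroring the 64 TiB ceiling described above.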

RDS MySQL has recently gained a new feature called storage autoscaling. You can enable this option when you create your instance, and it is somewhat similar to what Aurora provides. More details are available here.

Since August 2018, Aurora has offered another option that does not need provisioned capacity: Aurora Serverless.

“Amazon Aurora Serverless is an on-demand, auto-scaling configuration for Amazon Aurora (MySQL-compatible and PostgreSQL-compatible editions), where the database will automatically start up, shut down, and scale capacity up or down based on your application’s needs. It lets you run your database in the cloud without managing any database instances. It’s a simple, cost-effective option for infrequent, intermittent, or unpredictable workloads. Manually managing database capacity can take up valuable time and can lead to inefficient use of database resources. With Aurora Serverless, you simply create a database endpoint, specify the desired capacity range, and connect your applications. You pay on a per-second basis for the database capacity you use while the database is active, and you can switch between standard and serverless configurations with a few clicks in the Amazon RDS Management Console.”
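Per-second billing for capacity is easy to reason about with a short calculation. The sketch below sums capacity multiplied by seconds over a series of usage segments and converts the result to a cost. The price is a placeholder chosen only to make the arithmetic visible, and the function and parameter names are hypothetical; check current AWS pricing for real numbers.

```python
from typing import Iterable, Tuple


def serverless_cost(
    segments: Iterable[Tuple[float, int]],
    price_per_unit_hour: float,
) -> float:
    """Cost of a workload billed per second of capacity used.

    Each segment is (capacity_units, seconds). A paused database is
    represented as a segment with zero capacity.
    """
    unit_seconds = sum(capacity * seconds for capacity, seconds in segments)
    return unit_seconds * price_per_unit_hour / 3600


if __name__ == "__main__":
    # One hour at 2 units, two hours paused, thirty minutes at 4 units.
    usage = [(2, 3600), (0, 7200), (4, 1800)]
    hypothetical_price = 0.06  # placeholder price per capacity unit per hour
    print(round(serverless_cost(usage, hypothetical_price), 2))
```

The paused segment contributes nothing to the total, which is why this model suits the infrequent or intermittent workloads mentioned in the quote.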

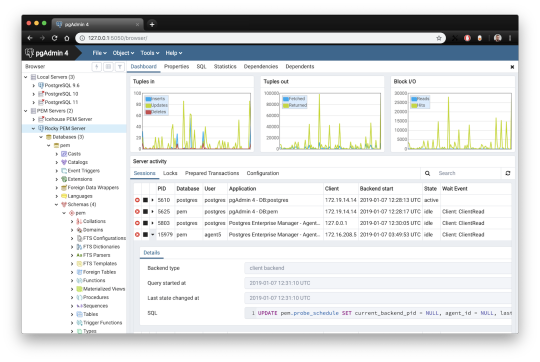

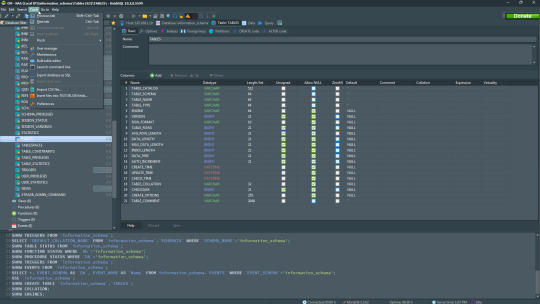

Which Is The Best PostgreSQL GUI? 2021 Comparison

PostgreSQL graphical user interface (GUI) tools help open source database users to manage, manipulate, and visualize their data. In this post, we discuss the top 6 GUI tools for administering your PostgreSQL hosting deployments. PostgreSQL is the fourth most popular database management system in the world, and heavily used in all sizes of applications from small to large. The traditional method to work with databases is using the command-line interface (CLI) tool, however, this interface presents a number of issues:

It requires a big learning curve to get the best out of the DBMS.

The console display may not be to your liking, and it only shows very little information at a time.

It is difficult to browse databases and tables, check indexes, and monitor databases through the console.

Many still prefer CLIs over GUIs, but this group is steadily shrinking. I believe anyone who came into programming after 2010 will tell you GUI tools increase their productivity over a CLI solution.

Why Use a GUI Tool?

Now that we understand the issues users face with the CLI, let’s take a look at the advantages of using a PostgreSQL GUI:

Shortcut keys make it easier to use, and much easier to learn for new users.

Offers great visualization to help you interpret your data.

You can remotely access and navigate another database server.

The window-based interface makes it much easier to manage your PostgreSQL data.

Easier access to files, features, and the operating system.

So, bottom line, GUI tools make PostgreSQL developers’ lives easier.

Top PostgreSQL GUI Tools

Today I will tell you about the 6 best PostgreSQL GUI tools. If you want a quick overview of this article, feel free to check out our infographic at the end of this post. Let’s start with the first and most popular one.

1. pgAdmin

pgAdmin is the de facto GUI tool for PostgreSQL, and the first tool anyone would use for PostgreSQL. It supports all PostgreSQL operations and features while being free and open source. pgAdmin is used by both novice and seasoned DBAs and developers for database administration.

Here are some of the top reasons why PostgreSQL users love pgAdmin:

Create, view, and edit all common PostgreSQL objects.

Offers a graphical query planning tool with color syntax highlighting.

The dashboard lets you monitor server activities such as database locks, connected sessions, and prepared transactions.

Since pgAdmin is a web application, you can deploy it on any server and access it remotely.

The pgAdmin UI consists of detachable panels that you can arrange to your liking.

Provides a procedural language debugger to help you debug your code.

pgAdmin has a portable version which can help you easily move your data between machines.

There are several cons of pgAdmin that users have generally complained about:

The UI is slow and non-intuitive compared to paid GUI tools.

pgAdmin uses too many resources.

pgAdmin can be used on Windows, Linux, and Mac OS. We listed it first as it’s the most used GUI tool for PostgreSQL, and the only native PostgreSQL GUI tool in our list. As it’s dedicated exclusively to PostgreSQL, you can expect it to update with the latest features of each version. pgAdmin can be downloaded from their official website.

pgAdmin Pricing: Free (open source)

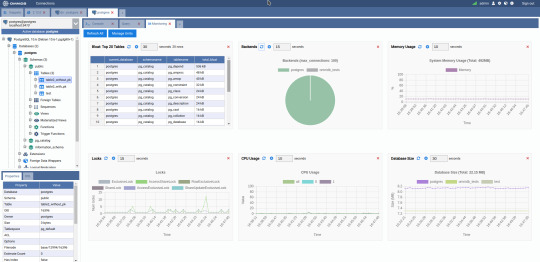

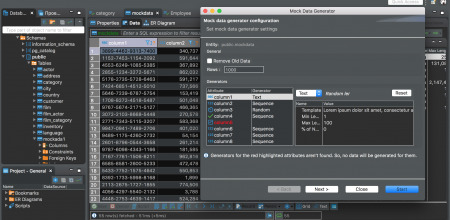

2. DBeaver

DBeaver is a major cross-platform GUI tool for PostgreSQL that both developers and database administrators love. DBeaver is not a native GUI tool for PostgreSQL, as it supports all the popular databases like MySQL, MariaDB, Sybase, SQLite, Oracle, SQL Server, DB2, MS Access, Firebird, Teradata, Apache Hive, Phoenix, Presto, and Derby – any database which has a JDBC driver (over 80 databases!).

Here are some of the top DBeaver GUI features for PostgreSQL:

Visual Query builder helps you to construct complex SQL queries without actual knowledge of SQL.

It has one of the best editors – multiple data views are available to support a variety of user needs.

Convenient navigation among data.

In DBeaver, you can generate fake data that looks like real data allowing you to test your systems.

Full-text data search against all chosen tables/views with search results shown as filtered tables/views.

Metadata search among rows in database system tables.

Import and export data with many file formats such as CSV, HTML, XML, JSON, XLS, XLSX.

Provides advanced security for your databases by storing passwords in secured storage protected by a master password.

Automatically generated ER diagrams for a database/schema.

Enterprise Edition provides a special online support system.

One of the cons of DBeaver is it may be slow when dealing with large data sets compared to some expensive GUI tools like Navicat and DataGrip.

You can run DBeaver on Windows, Linux, and macOS, and easily connect DBeaver to PostgreSQL with or without SSL. It has a free open-source edition as well as an enterprise edition. You can buy the standard license for the enterprise edition at $199, or by subscription at $19/month. The free version is good enough for most companies, and many DBeaver users will tell you the free edition is better than pgAdmin.

DBeaver Pricing: Free community, $199 standard license

3. OmniDB

The next PostgreSQL GUI we’re going to review is OmniDB. OmniDB lets you add, edit, and manage data and all other necessary features in a unified workspace. Although OmniDB supports other database systems like MySQL, Oracle, and MariaDB, their primary target is PostgreSQL. This open source tool is mainly sponsored by 2ndQuadrant. OmniDB supports all three major platforms, namely Windows, Linux, and Mac OS X.

There are many reasons why you should use OmniDB for your Postgres developments:

You can easily configure it by adding and removing connections, and leverage encrypted connections when remote connections are necessary.

Smart SQL editor helps you write SQL code through autocomplete and syntax highlighting features.

Add-on support available for debugging capabilities to PostgreSQL functions and procedures.

You can monitor the dashboard from customizable charts that show real-time information about your database.

Query plan visualization helps you find bottlenecks in your SQL queries.

It allows access from multiple computers with encrypted personal information.

Developers can add and share new features via plugins.

There are a couple of cons with OmniDB:

OmniDB lacks community support in comparison to pgAdmin and DBeaver. So, you might find it difficult to learn this tool, and could feel a bit alone when you face an issue.

It doesn’t have as many features as paid GUI tools like Navicat and DataGrip.

OmniDB users have favorable opinions about it, and you can download OmniDB for PostgreSQL from here.

OmniDB Pricing: Free (open source)

4. DataGrip

DataGrip is a cross-platform integrated development environment (IDE) that supports multiple database environments. The most important thing to note about DataGrip is that it’s developed by JetBrains, one of the leading brands for developing IDEs. If you have ever used PhpStorm, IntelliJ IDEA, PyCharm, WebStorm, you won’t need an introduction on how good JetBrains IDEs are.

There are many exciting features to like in the DataGrip PostgreSQL GUI:

The context-sensitive and schema-aware auto-complete feature suggests more relevant code completions.

It has a beautiful and customizable UI along with an intelligent query console that keeps track of all your activities so you won’t lose your work. Moreover, you can easily add, remove, edit, and clone data rows with its powerful editor.

There are many ways to navigate schema between tables, views, and procedures.

It can immediately detect bugs in your code and suggest the best options to fix them.

It has an advanced refactoring process – when you rename a variable or an object, it can resolve all references automatically.

DataGrip is not just a GUI tool for PostgreSQL, but a full-featured IDE that has features like version control systems.

There are a few cons in DataGrip:

The obvious issue is that it’s not native to PostgreSQL, so it lacks PostgreSQL-specific features. For example, errors are not easy to debug because not all of them are displayed.

Not only DataGrip, but most JetBrains IDEs have a big learning curve making it a bit overwhelming for beginner developers.

It consumes a lot of resources, like RAM, from your system.

DataGrip supports a tremendous list of database management systems, including SQL Server, MySQL, Oracle, SQLite, Azure Database, DB2, H2, MariaDB, Cassandra, HyperSQL, Apache Derby, and many more.

DataGrip supports all three major operating systems: Windows, Linux, and Mac OS. One of the downsides is that JetBrains products are comparatively costly. DataGrip has two different prices for organizations and individuals. DataGrip for Organizations will cost you $19.90/month, or $199 for the first year, $159 for the second year, and $119 for the third year onwards. The individual package will cost you $8.90/month, or $89 for the first year. You can test it out during the free 30-day trial period.

DataGrip Pricing: $8.90/month to $199/year

5. Navicat

Navicat is an easy-to-use graphical tool that targets both beginner and experienced developers. It supports several database systems such as MySQL, PostgreSQL, and MongoDB. One of the special features of Navicat is its collaboration with cloud databases like Amazon Redshift, Amazon RDS, Amazon Aurora, Microsoft Azure, Google Cloud, Tencent Cloud, Alibaba Cloud, and Huawei Cloud.

Important features of Navicat for Postgres include:

It has a very intuitive and fast UI. You can easily create and edit SQL statements with its visual SQL builder, and the powerful code auto-completion saves you a lot of time and helps you avoid mistakes.

Navicat has a powerful data modeling tool for visualizing database structures, making changes, and designing entire schemas from scratch. You can manipulate almost any database object visually through diagrams.

Navicat can run scheduled jobs and notify you via email when the job is done running.

Navicat is capable of synchronizing different data sources and schemas.

Navicat has an add-on feature (Navicat Cloud) that offers project-based team collaboration.

It establishes secure connections through SSH tunneling and SSL ensuring every connection is secure, stable, and reliable.

You can import and export data to diverse formats like Excel, Access, CSV, and more.

Despite all the good features, there are a few cons that you need to consider before buying Navicat:

The license is locked to a single platform. You need to buy different licenses for PostgreSQL and MySQL. Considering its heavy price, this is a bit difficult for a small company or a freelancer.

It has so many features that a newcomer will need some time to get up to speed.

You can use Navicat in Windows, Linux, Mac OS, and iOS environments. The quality of Navicat is endorsed by its world-popular clients, including Apple, Oracle, Google, Microsoft, Facebook, Disney, and Adobe. Navicat comes in three editions called enterprise edition, standard edition, and non-commercial edition. Enterprise edition costs you $14.99/month up to $299 for a perpetual license, the standard edition is $9.99/month up to $199 for a perpetual license, and then the non-commercial edition costs $5.99/month up to $119 for its perpetual license. You can get full price details here, and download the Navicat trial version for 14 days from here.

Navicat Pricing: $5.99/month up to $299/license

6. HeidiSQL

HeidiSQL is a new addition to our best PostgreSQL GUI tools list in 2021. It is a lightweight, free open source GUI that helps you manage tables, logs and users, edit data, views, procedures and scheduled events, and is continuously enhanced by the active group of contributors. HeidiSQL was initially developed for MySQL, and later added support for MS SQL Server, PostgreSQL, SQLite and MariaDB. Invented in 2002 by Ansgar Becker, HeidiSQL aims to be easy to learn and provide the simplest way to connect to a database, fire queries, and see what’s in a database.

Some of the advantages of HeidiSQL for PostgreSQL include:

Connects to multiple servers in one window.

Generates nice SQL-exports, and allows you to export from one server/database directly to another server/database.

Provides a comfortable grid to browse and edit table data, and perform bulk table edits such as move to database, change engine or collation.

You can write queries with customizable syntax-highlighting and code-completion.

It has an active community helping to support other users and GUI improvements.

Allows you to find specific text in all tables of all databases on a single server, and to optimize and repair tables in batches.

Provides a dialog for quick grid/data exports to Excel, HTML, JSON, PHP, even LaTeX.

There are a few cons to HeidiSQL:

Does not offer a procedural language debugger to help you debug your code.

Built for Windows, and currently only supports Windows (which is not a con for our Windows readers!)

HeidiSQL does have a lot of bugs, but the author is very attentive and active in addressing issues.

If HeidiSQL is right for you, you can download it here and follow updates on their GitHub page.

HeidiSQL Pricing: Free (open source)

Conclusion

Let’s summarize our top PostgreSQL GUI comparison. Almost everyone starts PostgreSQL with pgAdmin. It has great community support, and there are a lot of resources to help you if you face an issue. pgAdmin usually satisfies the needs of developers to a great extent, so most of them do not look for other GUI tools. That’s why pgAdmin remains the most popular GUI tool.

If you are looking for an open source solution that has a better UI and visual editor, then DBeaver and OmniDB are great solutions for you. For users looking for a free lightweight GUI that supports multiple database types, HeidiSQL may be right for you. If you are looking for more features than what’s provided by an open source tool, and you’re ready to pay a good price for it, then Navicat and DataGrip are the best GUI products on the market.

While I believe one of these tools should surely support your requirements, there are other popular GUI tools for PostgreSQL that you might like, including Valentina Studio, Adminer, DbVisualizer, and SQL Workbench. I hope this article will help you decide which GUI tool suits your needs.

Which Is The Best PostgreSQL GUI? 2019 Comparison

Here are the top PostgreSQL GUI tools covered in our previous 2019 post:

pgAdmin

DBeaver

Navicat

DataGrip

OmniDB

Original source: ScaleGrid Blog

Text

Remote Database Support Services -Remote DBA Service - Datapatroltech

Datapatroltech offers remote database support services to protect company data. Data Patrol’s remote DBA services team includes top expertise in Oracle DBA support, SQL Server support, MySQL DBA support, and Postgres DBA support, among others. We have more than 14 years of experience in remote DBA services and provide 24*7 monitoring.

Link

Database administrators are always in demand, especially as the amount of data a company collects increases. As more companies move to the cloud, DBA roles and tasks change. Automation is likely to disrupt them, but the role of the database administrator is not over; it is evolving. When a company integrates DBAs into its BI and analytics teams, it can draw on their domain knowledge and their command of how data flows through the company environment.

Database Administration Consulting Services from Cloudaeon

Identifying user needs to create and administer databases.

Ensuring that the database operates efficiently and without any error.

Making and testing modifications to the database structure when needed.

Maintaining the database.

Backing up and restoring data to prevent data loss.

Database Administration Tools from Cloudaeon

Cloudaeon provides many database administration tools that help DBAs in their decision-making.

The first decision a company needs to make when introducing DBaaS is which DBMS to use. The DBMS options Cloudaeon offers include:

Oracle database

SQL Server

NoSQL

MySQL

The next step is to determine which cloud service provider to use. The cloud service providers the company works with include:

AWS

Microsoft Azure

Oracle cloud

Google Cloud Platform

Why Cloudaeon for your Database Administration?

Database performance optimization: All types of systems are optimized to increase throughput and minimize contention, enabling the largest possible workload to be processed.

Encryption: When data is encrypted, it is transformed using an algorithm to make it unreadable to anyone without the decryption key. The general idea is to make the effort of decrypting so difficult as to outweigh the advantage to a hacker of accessing the unauthorized data.

Label-Based Access Control: A growing number of DBMSs offer label-based access control, which delivers more fine-grained control over authorization to specific data in the database. With label-based access control, it is possible to support applications that need a more granular security scheme.

Data Masking: Data masking at Cloudaeon is done when provisioning test environments, so that copies created to support application development and testing do not expose sensitive information. Valid production data is replaced with usable, referentially intact data. After masking, the test data can be used just like production data, while the sensitive information stays secure.
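To make the idea of referentially intact masking more concrete, here is a minimal Python sketch. It is not Cloudaeon's implementation; the key, the table layout, and the function names (`mask_value`, `mask_rows`) are assumptions made for illustration. It replaces each sensitive value with a keyed hash, so the same production value always maps to the same masked value and rows that referenced each other before masking still match afterwards.

```python
import hashlib
import hmac

# Hypothetical secret for one masking run; in practice it would be kept out of
# source control and never copied into the test environment.
MASKING_KEY = b"example-masking-key"


def mask_value(value: str, prefix: str) -> str:
    """Map a sensitive value to a stable pseudonym.

    The same input always yields the same output, so keys that match in
    production still match after masking.
    """
    digest = hmac.new(MASKING_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"{prefix}_{digest[:12]}"


def mask_rows(rows, columns, prefix):
    """Return copies of the rows with the named columns masked."""
    masked = []
    for row in rows:
        copy = dict(row)  # leave the original rows untouched
        for column in columns:
            copy[column] = mask_value(row[column], prefix)
        masked.append(copy)
    return masked


# Hypothetical production data: orders reference customers by email.
customers = [
    {"email": "alice@example.com", "plan": "pro"},
    {"email": "bob@example.com", "plan": "free"},
]
orders = [
    {"order_id": 1, "customer_email": "alice@example.com"},
    {"order_id": 2, "customer_email": "alice@example.com"},
    {"order_id": 3, "customer_email": "bob@example.com"},
]

masked_customers = mask_rows(customers, ["email"], "cust")
masked_orders = mask_rows(orders, ["customer_email"], "cust")
```

Because both tables are masked with the same key and prefix, every masked order still points at an existing masked customer, which is what lets the test copy behave like production data. A keyed hash is only one possible approach; format-preserving substitution or lookup tables are common alternatives when the masked values must look like real emails or names.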

Text

MySQL NDB Cluster Backup & Restore In An Easy Way