#Option recompile sql


Option recompile sql

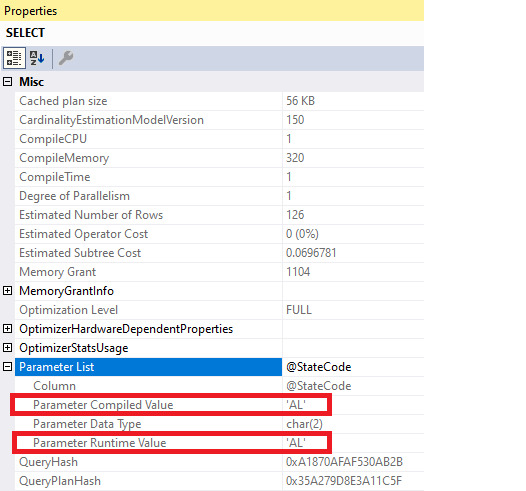

Whenever a stored procedure is run in SQL Server for the first time, it is optimized, and a query plan is compiled and cached in SQL Server's memory. Each time the same stored procedure is run after that, it uses the cached query plan, so it does not have to be optimized and compiled again. If you run the same stored procedure 1,000 times a day, this saves a lot of time and hardware resources, and SQL Server doesn't have to work as hard.

If the query inside the stored procedure uses identical parameters in its WHERE clause on every run, then reusing the same query plan makes sense. But what if the same stored procedure is run with different parameter values? What happens depends on how typical the parameters are. If the values are similar from execution to execution, the cached query plan works fine and the query performs optimally. If the parameters are not typical, the cached plan may not be optimal, and the query runs more slowly because its plan was not designed for those parameters.

Most of the time, you probably don't need to worry about this problem. It only causes trouble when the parameters vary substantially from execution to execution. If you identify a stored procedure that usually runs fine but sometimes runs slowly, it is very possible that you are seeing the problem described above. In this case, you can ALTER the stored procedure and add the WITH RECOMPILE option to it. With this option added, the stored procedure recompiles itself and creates a new query plan each time it is run. This removes the benefits of query plan reuse, but it ensures that a plan built for the current parameters is used on every run. The drawback is that if the stored procedure has more than one query in it, as most do, all of the queries are recompiled, even those that are not affected by atypical parameters.

SQL Server 2005 introduced a new option: the RECOMPILE query hint. The difference between RECOMPILE and WITH RECOMPILE is that RECOMPILE can be added as a query hint to a single query inside the stored procedure, and only that query is recompiled, not all of the queries in the stored procedure.
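The two options described above can be sketched in T-SQL as follows. This is a minimal illustration, not code from the original post: the table dbo.Orders, its columns, and both procedure names are hypothetical.

```sql
-- Hypothetical table and procedures, for illustration only.
CREATE TABLE dbo.Orders
(
    OrderID    int IDENTITY(1,1) PRIMARY KEY,
    CustomerID int      NOT NULL,
    OrderDate  datetime NOT NULL,
    TotalDue   money    NOT NULL
);
GO

-- Procedure-level option: every statement in the procedure is
-- recompiled on each execution, and no plan is kept for reuse.
CREATE PROCEDURE dbo.GetOrdersByCustomer_ProcLevel
    @CustomerID int
WITH RECOMPILE
AS
BEGIN
    SELECT OrderID, OrderDate, TotalDue
    FROM dbo.Orders
    WHERE CustomerID = @CustomerID;
END;
GO

-- Statement-level hint (SQL Server 2005 and later): only the hinted
-- query is recompiled; the other statement keeps its cached plan.
CREATE PROCEDURE dbo.GetOrdersByCustomer_StmtLevel
    @CustomerID int
AS
BEGIN
    SELECT COUNT(*) AS OrderCount      -- plan cached and reused as usual
    FROM dbo.Orders;

    SELECT OrderID, OrderDate, TotalDue
    FROM dbo.Orders
    WHERE CustomerID = @CustomerID
    OPTION (RECOMPILE);                -- fresh plan on every execution
END;
GO
```

The statement-level form is usually the narrower choice when only one query in the procedure is sensitive to its parameter values.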


Sql server option recompile


Today I am explaining the use of the RECOMPILE clause in SQL Server stored procedures. We use stored procedures in SQL Server to get the benefit of reusability, and sometimes we also use the WITH RECOMPILE option in them. Here I focus on why we use WITH RECOMPILE.

When we execute a stored procedure, SQL Server creates an execution plan for it and stores that plan in the procedure cache. If we execute the same procedure again, SQL Server searches the procedure cache before creating a new plan. If a plan is found in the cache, it is reused, which saves the CPU cycles needed to generate a new one. But sometimes plan generation depends on the parameter values of the stored procedure. In that case, reusing the same plan for different parameter values may degrade performance.

For example, create a table xtdetails, create indexes on it, and insert some data:

CREATE CLUSTERED INDEX IX_xtdetails_id ON xtdetails(id)
CREATE NONCLUSTERED INDEX IX_xtdetails_address ON xtdetails(address)

After the inserts, xtdetails contains 10,000 rows, of which only 10 have name = asheesh and address = Moradabad.

Now create a stored procedure that takes a varchar(50) parameter and selects address and name from xtdetails, filtered by that parameter. Execute it with SET STATISTICS IO ON and note the statistics and execution plan it generates. Then execute the same procedure with a different parameter value, again with SET STATISTICS IO ON. This second execution reuses the plan stored in the procedure cache, which uses the clustered index, even though we know that retrieving the data through the nonclustered index would be faster here.

Now create the stored procedure again, this time with the RECOMPILE option, and execute it with SET STATISTICS IO ON. You see a better execution plan and a great improvement in the I/O statistics. This is because, with the WITH RECOMPILE option, each execution of the stored procedure generates a new execution plan.

We should use the RECOMPILE option only when the cost of generating a new execution plan is much less than the performance improvement gained by using it.

There are two recompile options: WITH RECOMPILE on the stored procedure (Option 1) and the RECOMPILE hint on an individual statement (Option 2).

When used in the code of a particular stored procedure, Option 1 compiles that SP every time it is executed by any user. It cannot be used at an individual statement level. Any existing plan is never reused, even if the new plan is exactly the same as a pre-existing plan for that SP. Once the SP is executed, the plan is discarded immediately. There is no caching of the execution plan for future reuse.

When used with a T-SQL statement, whether inside an SP or ad hoc, Option 2 creates a new execution plan for that particular statement. Any pre-existing plan, even if it is exactly the same as the new plan, is not used. The new plan is discarded immediately after execution of the statement.

Assuming neither of these options is being used, an execution of an SP prompts a search for pre-existing plans (one serial plan and one parallel plan) in memory (the plan cache). New input parameters in the current execution replace the previous input parameters in the execution context handle, which is part of the overall execution plan. So using different input parameters doesn't cause a recompilation. Recompilation happens only when about 20% of the data in the tables called from within the SP is found to have changed since the last time statistics were updated for those tables and their indexes. If, say, you are accessing four tables in that SP and roughly 20% of the data in one table has changed since the last statistics update, then that statement is recompiled. If all four tables have changed by about 20% since the last statistics update, then the entire SP is recompiled. This plan reuse system works as long as objects are qualified with their owner and database name. Since we have auto-update statistics off, we have less recompilation of SPs to begin with. Use of a temporary table within an SP also causes recompilation of that statement.
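The xtdetails walkthrough can be approximated with the sketch below. Only the table name, the two index definitions, the row counts, and the selected columns come from the post; the column types, the filler values, the procedure name dbo.usp_GetByAddress, and the parameter name @address are assumptions made for illustration. It needs SQL Server 2008 or later for the inline variable initialization.

```sql
-- Column types and filler values are assumptions, not from the post.
CREATE TABLE dbo.xtdetails
(
    id      int IDENTITY(1,1) NOT NULL,
    name    varchar(50) NOT NULL,
    address varchar(50) NOT NULL
);
CREATE CLUSTERED INDEX IX_xtdetails_id ON dbo.xtdetails (id);
CREATE NONCLUSTERED INDEX IX_xtdetails_address ON dbo.xtdetails (address);
GO

-- 10,000 rows in total, only 10 of them for asheesh / Moradabad.
DECLARE @i int = 1;
WHILE @i <= 10000
BEGIN
    IF @i <= 10
        INSERT INTO dbo.xtdetails (name, address) VALUES ('asheesh', 'Moradabad');
    ELSE
        INSERT INTO dbo.xtdetails (name, address) VALUES ('filler', 'Delhi');
    SET @i += 1;
END;
GO

-- Hypothetical procedure name; WITH RECOMPILE builds a new plan per call.
CREATE PROCEDURE dbo.usp_GetByAddress (@address varchar(50))
WITH RECOMPILE
AS
    SELECT address, name
    FROM dbo.xtdetails
    WHERE address = @address;
GO

SET STATISTICS IO ON;
EXEC dbo.usp_GetByAddress @address = 'Delhi';      -- common value
EXEC dbo.usp_GetByAddress @address = 'Moradabad';  -- rare value
```

Dropping the WITH RECOMPILE line and repeating the two calls is one way to compare the logical reads reported by STATISTICS IO for the rare value under a reused plan.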


Sql server option recompile

I am lost as to why the execution plan is different when I run a query with OPTION (RECOMPILE) compared to the same query (with a clean proc cache) without it. The script is: create two tables:

CREATE TABLE H (id INT PRIMARY KEY CLUSTERED IDENTITY(1,1), header CHAR(100))
CREATE TABLE D (id INT PRIMARY KEY CLUSTERED IDENTITY(1,1), idH INT, detail CHAR(100))

INSERT INTO H (header) VALUES ('nonononono')
INSERT INTO D (idH, detail) VALUES 'nonononono')

The data is simple (7 rows) and the statistics are up to date. With OPTION (RECOMPILE) the query uses a key lookup for the D table; without it, it uses a scan for the D table.

With OPTION (RECOMPILE), the optimiser knows the value of the variable and essentially generates the plan as if you had written that value directly into the query. The generated plan has to be valid for this specific value of the parameter. It does not have to be valid for any other value of the parameter. Also, the optimiser knows the statistics of the table and can usually make a better decision. If there is only one value in the table that is equal to 1, it will most likely choose a seek. If there are a lot of values in the table that are equal to 1, it would choose a scan. Without OPTION (RECOMPILE), the optimiser has to generate a plan that is valid (produces correct results) for any possible value of the parameter.

As you have observed, this may lead to different plans. I would recommend looking at both actual execution plans in the free SQL Sentry Plan Explorer tool. Look at the details of each operator in the plan and you should see what is going on. You can do it in SSMS as well, but Plan Explorer is much nicer.

It's been a while since SQL Server has had a real RECOMPILE problem. And if you put it up against the performance problems that you can hit with parameter sniffing, I'd have a hard time telling someone strapped for time and knowledge that it's the worst idea for them.

Obviously, you can run into problems if you ("you" includes Entity Framework, AKA the Database Demolisher) author the kind of queries that take a very long time to compile. But as I list them out, I'm kinda shrugging.

Here are some problems you can hit with recompile. Not necessarily caused by recompile, but by not re-using plans.

Long compile times: Admittedly pretty rare, and plan guides or forced plans are likely a better option.

No plan history in the cache (only the most recent plan): Sucks if you're looking at the plan cache. Sucks less if you have a monitoring tool or Query Store.

CPU spikes for high-frequency execution queries: Maybe time for caching some stuff, or getting away from the kind of code that executes like this (scalar functions, cursors, etc.)

But for everything in the middle: a little RECOMPILE probably won't hurt that bad.

Those are very real problems that I see on client systems pretty frequently. And yeah, sometimes there's a good tuning option for these, like changing or adding an index, moving parts of the query around, sticking part of the query in a temp table, etc.

But all that assumes that those options are immediately available. For third party vendors who have somehow developed software that uses SQL Server for decades without running into a single best practice even by accident, it's often harder to get those changes through.

Sure, you might be able to sneak a recompile hint somewhere in the mix even if it'd make the vendor upset.

DBCC FREEPROCCACHE: No, not the whole cache. You can single out troublesome queries to remove specific plans.

Plan Guides: An often overlooked detail of plan guides is that you can attach hints to them, including recompile. Using a plan guide doesn't interfere with that precious vendor IP that makes SQL Server unresponsive every 15 minutes. I'm not mad.

And yeah, there are advances in SQL Server 20 that start to address some issues here, but they're still imperfect.
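The two targeted options named in this post, removing a single plan with DBCC FREEPROCCACHE and attaching a RECOMPILE hint through a plan guide, might look roughly like the sketch below. The search string, the table dbo.Orders, the statement text, and the plan guide name are hypothetical; for a plan guide of type SQL, the statement text has to match what the application actually submits, and running DBCC FREEPROCCACHE requires server-level permissions.

```sql
-- 1) Evict one plan instead of flushing the whole cache.
DECLARE @plan_handle varbinary(64);

SELECT TOP (1) @plan_handle = qs.plan_handle
FROM sys.dm_exec_query_stats AS qs
CROSS APPLY sys.dm_exec_sql_text(qs.sql_handle) AS st
WHERE st.text LIKE N'%FROM dbo.Orders WHERE CustomerID%'  -- hypothetical filter
ORDER BY qs.total_worker_time DESC;

IF @plan_handle IS NOT NULL
    DBCC FREEPROCCACHE (@plan_handle);
GO

-- 2) Attach OPTION (RECOMPILE) to a vendor query without editing it.
EXEC sp_create_plan_guide
    @name            = N'PG_VendorOrders_Recompile',      -- hypothetical name
    @stmt            = N'SELECT OrderID, OrderDate FROM dbo.Orders WHERE CustomerID = @CustomerID',
    @type            = N'SQL',
    @module_or_batch = NULL,
    @params          = N'@CustomerID int',
    @hints           = N'OPTION (RECOMPILE)';
GO
```

The plan guide leaves the vendor's code untouched, which is the point the post makes about third party software.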


Description of Software Engineer

A software engineer is a person who applies the principles of software engineering to the design, development, maintenance, testing, and evaluation of computer software.

Prior to the mid-1970s, software practitioners generally called themselves computer scientists, computer programmers, or software developers, regardless of their actual jobs. Many people still prefer to call themselves software developers or programmers, because most people agree on what those terms mean, while the exact meaning of software engineer is still being debated.

What Does a Software Engineer Do?

Computer software engineers apply engineering principles and systematic methods to develop programs and operating data for computers. If you have ever asked yourself, "What does a software engineer do?" note that daily tasks vary widely. Professionals confer with system programmers, analysts, and other engineers to extract pertinent information for designing systems, projecting capabilities, and determining performance interfaces. Computer software engineers also analyze user needs, provide consultation services to discuss design elements, and coordinate software installation. Designing software systems requires professionals to consider mathematical models and scientific analysis to project outcomes.

KEY HARD SKILLS

Hard skills are practical, teachable competencies that an employee must develop to qualify for a particular position. Examples of hard skills for software engineers include learning to code with programming languages such as Java, SQL, and Python.

Java: This programming language produces software that runs on multiple platforms without the need for recompilation. The code runs on nearly all operating systems, including macOS and Windows. Java borrows syntax from C and C++. Browser-operated programs facilitate GUI and object interaction from users.

JavaScript: This scripting programming language allows users to perform complex tasks and is incorporated in most webpages. This language allows users to update content, animate images, operate multimedia, and store variables. JavaScript represents one of the web's three major technologies.

SQL: Also known as Structured Query Language, SQL queries, updates, modifies, deletes, and inserts data. To achieve this, SQL uses a set number of commands. This computer language is standard for the manipulation of data and relational database management. Professionals use SQL to manage structured data where relationships between variables and entities exist.

C++: Regarded as an object-oriented, general-purpose programming language, C++ combines low-level and high-level language features. Because C++ is so widely used, computer software engineers should understand this language. C++ encompasses most C programs with little or no change to the source code. C++ handles text, numbers, and other general computing tasks.

C#: Initially developed by Microsoft, this highly expressive programming language is simpler than some other languages, yet it includes components of C++ and Java. Generic types and methods provide additional safety and increased performance. C# also allows professionals to define iteration behavior, while supporting encapsulation, polymorphism, and inheritance.

Python: This high-level programming language has dynamic semantics, typing, and binding that make it well suited to connecting existing components. The Python syntax is easy to learn, and there is no separate compilation step, which reduces program maintenance and enhances productivity. Python also supports modules and packages, which allows engineers to use the language for varying projects.

Programming languages comprise a software engineer's bread and butter, with nearly as many options to explore as there are job possibilities. Examples include Ruby, an object-oriented language that works in blocks; Rust, which integrates with other languages for application development; PHP, a web development scripting language that integrates with HTML; and Swift, which can program apps for all Apple products.

KEY SOFT SKILLS

While hard skills like knowledge of programming languages are essential, software engineers must also consider which soft skills they may need to qualify for the position they seek. Soft skills include individual preferences and personality traits that demonstrate how an employee performs their duties and fits into a team.

Communication: Whether reporting progress to a supervisor, explaining a product to a client, or coordinating with team members to work on the same product, software engineers must be adept at communicating via email, phone, and in-person meetings.

Multitasking: Software development can require engineers to split attention across different modules of the same project, or switch easily between projects when working on a deadline or meeting team needs.

Organization: To handle multiple projects through their various stages and keep track of details, software engineers must demonstrate a certain level of organization. Busy supervisors oversee entire teams and need to access information efficiently at a client's request.

Attention to Detail: Concentration plays a critical role for software engineers. They must troubleshoot coding issues and bugs as they arise, and keep track of a host of complex details surrounding multiple ongoing projects.


Best way to prepare for PHP interview

PHP is one of the programming languages developed with built-in web development functions. The new language capabilities in PHP 7 make it simpler for developers to increase the performance of their web applications without additional resources. They can move to the latest version of this widely used server-side scripting language to improve page loading without spending extra time and effort. But web application developers still need to be able to read and reuse PHP code quickly in order to maintain and update web apps in the future.

Helpful tips for writing clean, reliable, and reusable PHP code

Take advantage of Native Functions. Whenever they write PHP code, programmers can achieve the same goal using either native or custom functions. However, programmers should make use of the built-in PHP functions to perform a number of different tasks without writing extra code or custom functions. The native functions also help developers keep the application code clean and readable. The PHP manual provides information about the native functions and their use.

Compare similar functions. To keep the PHP code readable and clean, programmers can use native functions. However, they should understand that the speed at which each PHP function executes differs. Some PHP functions also consume more resources than others. Developers must therefore compare similar PHP functions and select the one that does not negatively affect the performance of the web application or consume additional resources. As an example, a check on the minimum length of a string can be written with isset() on a character offset instead of strlen(). In addition to being faster than strlen(), isset() remains valid whether or not the variable exists.

Cache Most PHP Scripts. PHP developers need to keep in mind that script execution time varies from one web server to another. For example, the Apache web server serves an HTML page much more quickly than a PHP script. Also, it needs to recompile the PHP script each time the page is requested. Developers can eliminate this recompilation by caching compiled scripts. They can also reduce script compilation time significantly by using a variety of PHP caching tools. For instance, programmers can use memcache to cache data efficiently and reduce database interactions.


Execute Conditional Code with Ternary Operators. It is regular practice among PHP developers to execute conditional code with If/Else statements. But If/Else statements require programmers to write extra code. They can avoid that extra code by using the ternary operator instead. The ternary operator allows programmers to keep the code clean and clutter-free by writing conditional code in a single line.

Use JSON instead of XML. When working with web services, PHP programmers have the option of using either XML or JSON. But they can take advantage of native PHP functions like json_encode() and json_decode() to work with web services in a faster and more efficient way. They still have the option of working with data in XML form. Developers can parse XML data more efficiently using regular expressions rather than DOM manipulation.

Replace Double Quotes with Single Quotes. When writing PHP code, developers can use either single quotes (') or double quotes ("). But programmers can improve the performance of the PHP application by using single quotes instead of double quotes. Single quotes can speed up the execution of loops. Similarly, single quotes let programmers print longer lines of information more efficiently. However, developers will have to make changes to the PHP code when switching from double quotes to single quotes, because variables and most escape sequences are not interpolated inside single quotes.

Avoid Using Wildcards in SQL Queries. PHP developers typically use the * wildcard to keep SQL queries lightweight and simple. However, the use of wildcards directly affects the performance of the web application if the table has a large number of columns. Developers should name the needed columns explicitly in the SQL query to keep data secure and reduce resource consumption.
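As a small illustration of the wildcard tip, compare the two queries below. The customers table and its column names are hypothetical.

```sql
-- Hypothetical table and columns, for illustration only.

-- Wildcard: returns every column, including ones the page never shows.
SELECT *
FROM customers
WHERE country = 'Germany';

-- Explicit column list: returns only what the application needs.
SELECT customer_id, name, city
FROM customers
WHERE country = 'Germany';
```

The explicit list also keeps the result shape stable if columns are later added to the table.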

However, it is also important for web developers to choose the right PHP framework and development tools. At present, each programmer can choose from a wide range of open source PHP frameworks, including Laravel, Symfony, CakePHP, Yii, CodeIgniter, and Zend. It therefore becomes necessary for developers to opt for a PHP framework that complements all the needs of a project. They also need to combine multiple PHP development tools to decrease development time significantly and make the web app maintainable.


PHP is one of the programming languages that were developed with built-in web development capabilities. The new language features included in PHP 7 further make it easier for programmers to boost the speed of their web applications considerably without deploying extra resources. Programmers can switch to the most recent version of this widely used server-side scripting language to improve the load speed of websites without putting in extra time and effort. But web application developers still need to focus on the readability and reusability of PHP code in order to maintain and update web applications quickly in the future.

12 Tips to Write Clean, Maintainable, and Reusable PHP Code

1) Take Advantage of Native Functions. While writing PHP code, programmers can accomplish the same objective using either native functions or custom functions. But developers should take advantage of the built-in functions provided by PHP to accomplish a variety of tasks without writing extra code or custom functions. Native functions further help developers keep the application code clean and readable. They can easily gather information about native functions and their usage by referring to the PHP manual.


2) Compare Similar Functions. Developers can use native functions to keep the PHP code readable and clean. But they need to keep in mind that the speed of individual PHP functions differs. Also, certain PHP functions consume more resources than others. Hence, developers should compare similar PHP functions and select the one that does not affect the performance of the web application negatively or consume extra resources. For example, a check on the minimum length of a string can be written with isset() on a character offset rather than strlen(). In addition to being faster than strlen(), isset() also remains valid whether or not the variable exists.

3) Cache Most PHP Scripts. PHP programmers should keep in mind that script execution time differs from one web server to another. For example, the Apache web server serves an HTML page much faster than a PHP script. Also, it has to recompile the PHP script every time the page is requested. Programmers can eliminate this recompilation by caching compiled scripts. They can also reduce script compilation time considerably by using a variety of PHP caching tools. For example, programmers can use memcache to cache data efficiently and reduce database interactions.

4) Execute Conditional Code with Ternary Operators. It is common practice among PHP developers to execute conditional code with If/Else statements. But If/Else statements require developers to write extra code. They can avoid that extra code by using the ternary operator instead. The ternary operator helps programmers keep the code clean and clutter-free by writing conditional code in a single line.

5) Keep the Code Readable and Maintainable. Programmers often find it intimidating to understand and modify code written by others. Hence, they need extra time to maintain and update PHP applications efficiently. While writing PHP code, programmers can make the application easy to maintain and update by describing the usage and significance of individual code snippets clearly. They can make the code readable by adding comments to each piece of code. The comments make it easier for other developers to change the existing code in the future without putting in extra time and effort.

6) Use JSON Rather Than XML. While working with web services, PHP programmers have the option of using either XML or JSON. But they can always take advantage of native PHP functions like json_encode() and json_decode() to work with web services in a faster and more efficient way. They still have the option of working with data in XML form. Programmers can parse XML data more efficiently by using regular expressions rather than DOM manipulation.

7) Pass References Rather Than Values to Functions. Experienced PHP programmers never declare new classes and methods unless they become essential. They also explore ways to reuse classes and methods throughout the code. However, they also understand that a function can be manipulated quickly by passing references instead of values. They can further avoid additional overhead by passing references to the function instead of values. However, they still need to make sure that the logic remains unaffected when passing references to functions.

8) Turn Error Reporting On in Development Mode. Developers must identify and fix all errors or flaws in the PHP code during the development process. They also have to put in extra time and effort to fix the coding errors and issues identified during testing. Programmers can simply set error reporting to E_ALL to identify both minor and major errors in the PHP code during development. However, they need to turn the error reporting option off once the application moves from development mode to production mode.

9) Replace Double Quotes with Single Quotes. While writing PHP code, programmers can use either single quotes (') or double quotes ("). But developers can enhance the performance of the PHP application by using single quotes instead of double quotes. Single quotes can increase the speed of loops. Likewise, single quotes let programmers print longer lines of information more efficiently. However, developers need to make changes to the PHP code when switching from double quotes to single quotes, because variables and most escape sequences are not interpolated inside single quotes.

10) Avoid Using Wildcards in SQL Queries. PHP programmers often use the * wildcard to keep SQL queries compact and simple. But the use of wildcards may affect the performance of the web application directly if the table has a large number of columns. Programmers should name the required columns explicitly in the SQL query to keep data secure and reduce resource consumption.

11) Avoid Executing Database Queries in Loops. PHP programmers can enhance the web application's performance by not executing database queries in a loop. They also have a number of options for accomplishing the same results without executing database queries in a loop. For example, developers can use a robust WordPress plug-in like Query Monitor to view the database queries along with the rows affected by them. They can even use the debugging plug-in to identify slow, duplicate, and incorrect database queries.

12) Never Trust User Input. Smart PHP programmers keep the web application secure by never trusting the input submitted by users. They always check, filter, and sanitize all user data to protect the application from various security threats. They can further prevent users from submitting inappropriate or invalid data by using built-in functions like filter_var(). The function checks for appropriate values while receiving or processing user input.

However, it is also necessary for web developers to pick the right PHP framework and development tools. At present, every programmer can choose from a wide range of open source PHP frameworks, including Laravel, Symfony, CakePHP, Yii, CodeIgniter, and Zend. Hence, it becomes essential for programmers to pick a PHP framework that complements all the needs of a project. They also need to combine multiple PHP development tools to reduce development time considerably and make the web application maintainable.


SQL Interview Questions and Answers - Part 12:

Q100. How can you return a value from a stored procedure?

Q101. What is a temporary stored procedure? What is the use of a temp stored procedure?

Q102. What is the difference between recursive and nested stored procedures?

Q103. What is the use of the WITH RECOMPILE option in a stored procedure? Why should one use it?

Q104. How can you ENCRYPT and DECRYPT a stored procedure?

Q105. How can you improve the performance of a stored procedure?

Q106. What is SCHEMABINDING in a stored procedure? If all stored procedures are bound to the schema by default, then why do we need to do it explicitly?

Q107. How can you write a stored procedure which executes automatically when SQL Server restarts?

Q108. Are stored procedure names case-sensitive?

Q109. What is a CLR stored procedure? What is the use of the CLR procedure?

Q110. What is the use of the EXECUTE AS clause in a stored procedure?


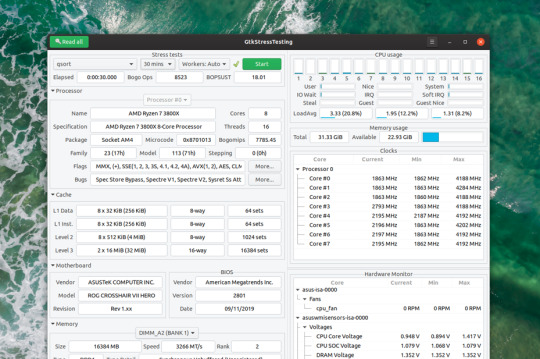

File Server Stress Test Tool


DTM DB Stress is software for stress testing and load testing the server parts of information systems and database applications, as well as databases and servers themselves. It is suitable for solution scalability and performance testing, comparison and tuning. The stress tool supports all unified database interfaces: ODBC, IDAPI, OLE DB and Oracle Call Interface. The dynamic SQL statement support and built-in test data generator enable you to make test jobs more flexible. Value File allows users to emulate variations in the end-user activity.

I'm working with my customer to perform a file server (Win2k8 R2) stress test exercise and I found that FSCT is a great tool that can help to simulate user workloads. However, I can't find an option to define the file size using the tool.

Whether you have a desktop PC or a server, Microsoft's free Diskspd utility will stress test and benchmark your hard drives. NOTE: A previous version of this guide explained using Microsoft's old "SQLIO" utility.

The program says that the information passed to the server is anonymous. If you select a different stress level, the program cannot upload the results even if they are visible in the pane to the left. Most of the tests were completed quite fast, except for the Files Encrypt test, which took 67 seconds to complete.

By: John Sterrett | Updated: 2012-07-18

Problem

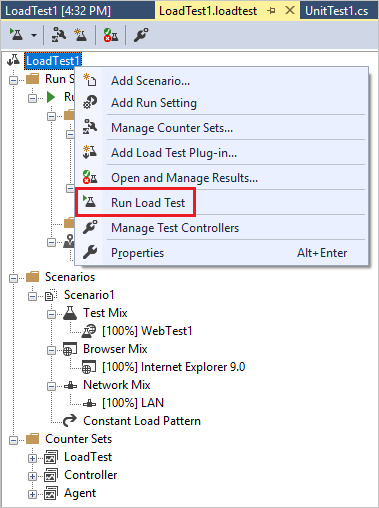

I have a stored procedure and I need to add additional stress and test the stored procedure using a random set of parameters before it can be pushed to production. I don't have a budget for stress testing tools. Can you show me how to accomplish these goals without buying a third-party tool?

Solution

Yes, the SQLQueryStress tool provided by Adam Machanic can be used to apply additional stress when testing your stored procedures. This tool can also be used to apply a dataset as random parameter values when testing your stored procedures. You can also read more about SQLQueryStress on the tool's documentation page to find more details about how you can use the tool.

For the purpose of this tip we are going to use the uspGetEmployeeManagers stored procedure in the AdventureWorks2008R2 database. You can exchange this with your stored procedure to walk through this tip in your own environment.

Step 1

Our first step is to test the following stored procedure with a test parameter. This is done in Management Studio using the query shown below.
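The query itself did not survive in this copy of the tip. A minimal sketch of what the test call probably looked like, assuming the `dbo` schema and the hard-coded value of eight that Step 7 mentions for the `@BusinessEntityID` parameter:

```sql
USE AdventureWorks2008R2;
GO

-- Assumed test call: 8 is the hard-coded parameter value referred to in Step 7.
EXEC dbo.uspGetEmployeeManagers @BusinessEntityID = 8;
```

If the call returns rows, both the procedure and the parameter value are usable for the stress test.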

Now that we know we have a working stored procedure and a valid parameter that returns data, we can get started with the SQLQueryStress tool. Once you have downloaded and installed SQLQueryStress, fire the tool up and paste in the code that you used in Management Studio. Next, we need to click on the database button to configure our database connection.

Step 2 - Configure Database Connectivity

Now that we have clicked on the database button, we will want to connect to our AdventureWorks database. In this example I am using an instance named 'r2' on my localhost. We will connect with Windows authentication and our default database will be AdventureWorks2008R2. Once this is done we will click on Test Connection and click on the 'OK' box in the popup window. We'll see the Connection Succeeded message to verify that our connection settings are correct.

Step 3 - Clear Proc Cache

Before we execute our stored procedure using SQLQueryStress we are going to clear out the procedure cache so we can track the total executions of our stored procedure. This shouldn't be done on a production system as this can cause significant performance problems. You would have to recompile all user objects to get them back into the procedure cache. We are doing this in this walkthrough tip to show you how we can count the total executions of the stored procedure.


NOTE: In SQL Server 2008 and up you can actually clear a specific plan from the plan cache. In this example we are clearing out all plans in case you're using SQL Server 2005. Once again, this shouldn't be done on a production system. Please see BOL for a specific example on clearing out a single plan.
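The statements for this step are missing from this copy. As a rough sketch, the whole cache can be cleared with `DBCC FREEPROCCACHE`, and on SQL Server 2008 and later a single plan can be removed by passing its plan handle. Looking the handle up through `sys.dm_exec_procedure_stats` is an assumption here, not necessarily what the original tip did:

```sql
-- Test systems only: removes every cached plan on the instance.
DBCC FREEPROCCACHE;

-- SQL Server 2008 and later: remove only one plan for the procedure.
-- Run this in the AdventureWorks2008R2 database. If several plans are cached
-- for the procedure, this picks just one of them.
DECLARE @plan_handle varbinary(64);

SELECT TOP (1) @plan_handle = ps.plan_handle
FROM sys.dm_exec_procedure_stats AS ps
WHERE ps.database_id = DB_ID()
  AND ps.object_id = OBJECT_ID(N'dbo.uspGetEmployeeManagers');

IF @plan_handle IS NOT NULL
    DBCC FREEPROCCACHE (@plan_handle);
```

In practice you would run one of the two forms, not both: after the instance-wide clear there is no plan left for the second part to find.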

Step 4 - Execute Stored Procedure Using SQLQueryStress

Now that we have established our connection and specified a default database, we are going to execute the stored procedure specified in step one. You can execute the stored procedure once by making sure the number of iterations and number of threads both have the value of 'one.' We will go over these options in more detail a little later in the tip. Once those values are set correctly you can execute the stored procedure once by clicking on the 'GO' button on the top right side of the SQLQueryStress tool.

Once the stored procedure execution completes you will see that statistics are generated to give you valuable feedback on your workload. You can see the iterations that completed. In this case we only executed the stored procedure once. You can also see valuable information for actual seconds, CPU, logical reads and elapsed time as shown in the screen shot below.

Step 5 - View Total Executions via T-SQL

Now we will execute the following T-SQL script, which will give us the execution count for our stored procedure. We just cleared the procedure cache, so you will get an execution count of one as shown in the screen shot below.
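The script is not preserved in this copy. One way to read the count, sketched here under the assumption that `sys.dm_exec_procedure_stats` is available (SQL Server 2008 and later) and that the procedure lives in the `dbo` schema:

```sql
-- Total executions recorded since the procedure's plans were cached.
-- SUM covers the case where more than one plan is cached for the procedure.
SELECT OBJECT_NAME(ps.object_id, ps.database_id) AS procedure_name,
       SUM(ps.execution_count)                   AS total_executions
FROM sys.dm_exec_procedure_stats AS ps
WHERE ps.database_id = DB_ID(N'AdventureWorks2008R2')
  AND ps.object_id   = OBJECT_ID(N'AdventureWorks2008R2.dbo.uspGetEmployeeManagers')
GROUP BY ps.object_id, ps.database_id;
```

The counter only covers plans still in cache, which is why the cache was cleared before the first run.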

Step 6 - Using SQLQueryStress to Add Additional Stress with Multiple Threads.

Now that we have gone over the basics of executing a stored procedure with SQLQueryStress, we will go over adding additional stress by changing the values for Number of Threads and Number of Iterations. The number of iterations is how many times the query will be executed on each thread. The number of threads specifies how many concurrent threads (SPIDs) will be used to execute those iterations.

Because we changed the number of iterations to five and the number of threads to five, we expect the total number of iterations completed to be twenty-five: five threads, each executing five iterations. Below is a screen shot of the workload completed after we clicked on the 'GO' button, with valuable average statistics for the workload.

If we rerun our T-SQL script from step 5, you will see that there is a total of twenty-six executions for the uspGetEmployeeManagers stored procedure. This includes our initial execution from step 4 and the additional stress applied in step 6.


Step 7 - Use Random Values for Parameters with SQLQueryStress

Next, we are going to cover using a dataset to randomly provide parameters to our stored procedure. Currently we use a hard-coded value of eight as the value for the BusinessEntityID parameter. Now, we are going to click on the 'Parameter Substitution' button to use a T-SQL script to create a pool of values that will be used during our stress testing of the uspGetEmployeeManagers stored procedure.

Once the parameter substitution window opens, we will want to copy in the T-SQL statement provided below, which generates the BusinessEntityID values we want to pass into our stored procedure.

Once you have added the T-SQL script, select the column you would like to map to the parameter used for your stored procedure.

Finally, the last part of this step is to drop the hard-coded value assignment for the stored procedure. This way the parameter substitution will be used for the parameter value.
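The pool query is not included in this copy. A plausible sketch, assuming the values come from `HumanResources.Employee` in AdventureWorks2008R2, followed by a plain T-SQL emulation of what one substituted call amounts to. The emulation is only for illustration; the tool does the substitution itself:

```sql
-- Assumed pool query for the Parameter Substitution window:
-- one row per candidate BusinessEntityID.
SELECT e.BusinessEntityID
FROM HumanResources.Employee AS e;

-- Emulation of a single iteration: pick one value at random from the pool
-- and pass it to the procedure in place of the hard-coded 8.
DECLARE @BusinessEntityID int;

SELECT TOP (1) @BusinessEntityID = e.BusinessEntityID
FROM HumanResources.Employee AS e
ORDER BY NEWID();

EXEC dbo.uspGetEmployeeManagers @BusinessEntityID = @BusinessEntityID;
```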

Step 8 - Wrap-up Results

To wrap up this tip, we have gone over controlling a workload to provide additional stress and randomly substituting parameters to be used for your workload replay. If you capture a SQL trace and replay the workload you should see output similar to the screen shot below. Looking at the screen shot below you will notice that each thread (SPID) has five iterations. You will also notice that the values provided for BusinessEntityID are randomly selected from the block of code we provided for the parameter substitution.

Next Steps

If you need to do some load testing, start using the SQLQueryStress tool.

Review several tips on SQL Profiler and trace

Review tips on working with Stored Procedures


About the author

John Sterrett is a DBA and Software Developer with expertise in data modeling, database design, administration and development.

One of the questions that often pops up in our forums is “how do I run a stress test on my game”?

There are several ways in which this can be done. A simple way to stress test your server side Extension is to build a client application that acts as a player, essentially a “bot”, which can be replicated several hundreds or thousands of times to simulate a large amount of clients.

» Building the client

For this example we will build a simple Java client using the standard SFS2X Java API which can be downloaded from here. The same could be done using C# or AS3 etc…

The simple client will connect to the server, login as guest, join a specific Room and start sending messages. This basic example can serve as a simple template to build more complex interactions for your tests.

» Replicating the load

Before we proceed with the creation of the client logic let’s see how the “Replicator” will work. With this name we mean the top-level application that will take a generic client implementation and will generate many copies at a constant interval, until all “test bots” are ready.

The class will startup by loading an external config.properties file which looks like this:


The properties are:

the name of the class to be used as the client logic (clientClassName)

the total number of clients for the test (totalCCU)

the interval between each generated client, expressed in milliseconds (generationSpeed)

Once these parameters are loaded the test will start by generating all the requested clients via a thread-pool based scheduled executor (ScheduledThreadPoolExecutor)

In order for the test class to be “neutral” to the Replicator we have created a base class called BaseStressClient which defines a couple of methods:

The startUp() method is where the client code gets initialized and it must be overridden in the child class. The onShutDown(…) method is invoked by the client implementation to signal the Replicator that the client has disconnected, so that it can be disposed of.

» Building the client logic


This is the code for the client itself:

The class extends the BaseStressClient parent and instantiates the SmartFox API. We then proceed by setting up the event listeners and connection parameters. Finally we invoke the sfs.connect(…) method to get started.

Notice that we also declared a static ScheduledExecutorService at the top of the declarations. This is going to be used as the main scheduler for sending public messages at specific intervals, in this case one message every two seconds.

We chose to make it static so that we can share the same instance across all client objects. This way only one thread will take care of all our messages. If you plan to run thousands of clients or use faster message rates you will probably need to increase the number of threads in the constructor.

» Performance notes

When replicating many hundreds / thousands of clients we should keep in mind that every new instance of the SmartFox class (the main API class) will use a certain amount of resources, namely RAM and Java threads.

For this simple example each instance should take ~1MB of heap memory which means we can expect 1000 clients to take approximately 1GB of RAM. In this case you will probably need to adjust the heap settings of the JVM by adding the usual -Xmx switch to the startup script.

Similarly the number of threads in the JVM will increase by 2 units for each new client generated, so for 1000 clients we will end up with 2000 threads, which is a pretty high number.

Any relatively modern machine (e.g 2-4 cores, 4GB RAM) should be able to run at least 1000 clients, although the complexity of the client logic and the rate of network messages may reduce this value.

On more powerful hardware, such as a dedicated server, you should be able to run several thousands of CCU without much effort.

Before we start running the test let’s make sure we have all the necessary monitoring tools to watch the basic performance parameters:

Open the server’s AdminTool and select the Dashboard module. This will allow you to check all vital parameters of the server runtime.

Launch your OS resource monitor so that you can keep an eye on CPU and RAM usage.

Here are some important suggestions to make sure that a stress test is executed successfully:

Monitor the CPU and RAM usage after all clients have been generated and make sure you never pass the 90% CPU mark or 90% RAM used. This is of the highest importance to avoid creating a bottleneck between client and server. (NOTE: 90% refers to the whole CPU, not just a single core)

Always run a stress test in an Ethernet-cabled LAN (local network) where you have access to at least a 100Mbit low latency connection. Even better if you have a 1Gbps or 10Gbps connection.

To reinforce the previous point: never run a stress test over a Wi-Fi connection or worse, against a remote server. Wi-Fi bandwidth and latency are too poor for this kind of test. Remember the point of these stress tests is assessing the performance of the server and custom Extension, not the network.

Before running a test make sure the ping time between client and server is no more than 1-5 milliseconds. More than that may suggest an inadequate network infrastructure.

Whenever possible make sure not to deliver the full list of Rooms to each client. This can be a major RAM eater if the test involves hundreds or thousands of Rooms. To do so simply remove all group references from the “Default groups” setting in your test Zone.

» Adding more client machines

What happens when the dreaded 90% of the machine resources are all used up but we need more CCU for our performance test?

It’s probably time to add another dedicated machine to run more clients. If you don’t have access to more hardware you may consider running the whole stress test in the cloud, so that you can choose the size and number of “stress clients” to employ.

The cloud is also convenient as it lets you clone one machine setup onto multiple servers, allowing a quick way for deploying more instances.

In order to choose the proper cloud provider for your tests make sure that they don’t charge you for internal bandwidth costs (i.e. data transfer between private IPs) and have a fast ping time between servers.

We have successfully run many performance tests using Jelastic and Rackspace Cloud. The former is economical and convenient for medium-size tests, while the latter is great for very large scale tests and also provides physical dedicated servers on demand.

Amazon EC2 should also work fine for these purposes and there are probably many other valid options as well. A quick web search will turn up more options.

» Advanced testing

1) Login: in our simple example we have used an anonymous login request and we don’t employ a server side Extension to check the user credentials. Chances are that your system will use a database for login and you will want to test how the DB performs under high traffic.

A simple solution is to pre-populate the user database with index-based names such as User-1, User-2 … User-N. This way you can build a simple client side logic that will generate these names with an auto-increment counter and perform the login. Passwords can be handled similarly using the same formula, e.g. Password-1, Password-2… Password-N

TIP: When testing a system with an integrated database always monitor the Queue status under the AdminTool > Dashboard. Slowness with DB transactions will show up in those queues.

2) Joining Rooms: another problem is how to distribute clients to multiple Rooms. Suppose we have a game for 4 players and we want to distribute 1000 clients into Rooms for 4 users. A simple solution is to create this logic on the server side.

The Extension will take a generic “join” request and perform a bit of custom logic:

search for a game Room with free slots:

if found it will join the user there

otherwise it will create a new game Room and join the user

A similar logic has been discussed in detail in this post in our support forum.

» Source files

The sources of the code discussed in this article are available for download as a zipped project for Eclipse. If you are using a different IDE you can unzip the archive and extract the source folder (src/), the dependencies (sfs2x-api/) and build a new project in your editor.


Text

Diagnosing Slow-Running Stored Procedures

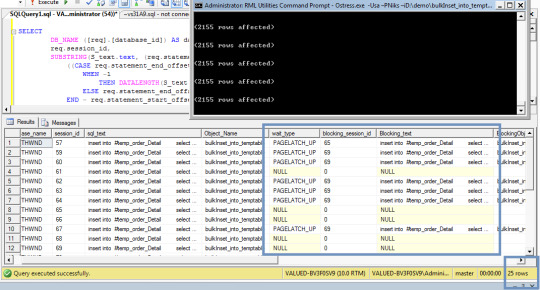

This week, I had a stored procedure taking 2 to 3 seconds to execute from a .NET application, where it previously took under 40ms. A few seconds doesn't seem that long, but the application was looping through a collection of items and calling the procedure around a hundred times. Meaning for the user, the time to complete the workflow had increased from around 4 seconds to over 4 minutes. Nothing had changed with the tables being used and index fragmentation was not an issue, thanks to fairly aggressive maintenance plans.

What was more baffling was the same process looping the same stored procedure used in the same application installed in a test environment took the expected four seconds or so. In SSMS, in both production and test environments, everything was well-behaved. Firing up the profiler, I could see that the sproc was reading around a thousand records when executed from SSMS (regardless of environment), but when the .NET application ran it, it was reading 250,000.

The culprit was the execution plan. Or rather plans. Plural. SSMS was getting one plan and the .NET application was getting another. To figure out why SQL was using two separate plans, you need to get the handles for the plans for the stored procedure:

SELECT sys.objects.object_id, sys.dm_exec_procedure_stats.plan_handle, [x].query_plan FROM sys.objects INNER JOIN sys.dm_exec_procedure_stats ON sys.objects.object_id = sys.dm_exec_procedure_stats.object_id CROSS APPLY sys.dm_exec_query_plan(sys.dm_exec_procedure_stats.plan_handle) [x] WHERE sys.objects.object_id = OBJECT_ID('StoredProcName')

From there, consulting the SET options for the plans revealed something interesting: one plan had ARITHABORT ON, the other ARITHABORT OFF. SSMS, by default, has it set to on. Client applications have it set to off. The result was that SQL was using a horrifically bad execution plan for the .NET application and a reasonable one for SSMS. This is usually tied to issues with the parameter sniffing that SQL uses when compiling a plan. The solution I decided to go with was to queue the sproc for a plan recompile:

EXEC sp_recompile N'StoredProcName'

The next time the sproc was executed, SQL generated a new plan for it. The result was a drop from 250k reads and 3 seconds per execution to around a thousand reads and under 40ms. Exactly what it should be.
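The SET options comparison mentioned above can be sketched with `sys.dm_exec_plan_attributes`, which exposes a `set_options` bitmask for each cached plan. ARITHABORT is bit 4096 in that mask. `StoredProcName` is the same placeholder used in the queries above, and the query is assumed to run in the procedure's own database:

```sql
-- One row per cached plan for the procedure, showing whether it was
-- compiled with ARITHABORT ON or OFF.
SELECT ps.plan_handle,
       CONVERT(int, pa.value) AS set_options,
       CASE WHEN CONVERT(int, pa.value) & 4096 = 4096
            THEN 'ON' ELSE 'OFF'
       END AS arithabort
FROM sys.dm_exec_procedure_stats AS ps
CROSS APPLY sys.dm_exec_plan_attributes(ps.plan_handle) AS pa
WHERE ps.database_id = DB_ID()
  AND ps.object_id = OBJECT_ID(N'StoredProcName')
  AND pa.attribute = N'set_options';
```

Two rows with different `arithabort` values would match the situation described here: one plan used by SSMS and another used by the .NET application.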


Text

Programming Language Help

Programming Language Assignment Help

Can you do my assignment? Programming language assignment help is a service for exactly that. A programming language is a formal language, written as code, that is used to instruct computers. Translators and interpreters convert programs into machine language so that the computer can carry out the work. We provide programming assignment writing services to students, helping them achieve the grade they deserve.

Programming language assignment help covers syntax and semantics, the two parts into which the description of a language is divided. To be precise, syntax and semantics are subdivided as follows:

Lexical syntax

This defines how the sequence of characters (symbols) is divided into tokens. For more information on our programming support, get our Assignment Assistance Services.

Context-free syntax

This defines how the sequence of tokens is grouped into phrases. To help with programming language assignments, take advantage of our services.

Context-sensitive syntax

Also known as static semantics, this covers the constraints checked at compile time, such as type checking. For more information on programming language assignment help, select our services.

Dynamic semantics

This defines how validated programs execute. For more information about programming language assignment help, contact our experts.

History Of Programming Language

The authors behind our online programming language assignment help provide complete programming assignment writing services. The first high-level programming languages were developed in the 1950s to instruct computers. Since then, more than 500 programming languages have been developed, and the design of more advanced ones is a continuous process. Short Code, proposed by John Mauchly in 1949, differed from machine code in various respects. It represented mathematical expressions in a readable form, but because it was interpreted it ran far more slowly than machine code. Autocode, developed in the early 1950s, is another important language; it used a compiler to convert code to machine language automatically. The experts behind our programming language assignment help can explain the history of programming languages in more depth.

Our programming language assignment help also covers the stages of programming language development. The main programming paradigms were developed during the 1960s and 1970s.

APL introduced array programming and had a major influence on functional programming

ALGOL refined structured procedural programming

Simula was the first language to support object-oriented programming

C, the most popular systems programming language, was developed between 1969 and 1973

Prolog, developed in 1972, is considered the first logic programming language.

Programming language assignment help provides full support on programming languages.

Sample Question & Answer Of Programming Language Assignment Help

Question:

Since the development of Plankalkül in the 1940s, a large number of programming languages have been designed and implemented for their own specific problem domains and built with their own design decisions and compromises. For example, there are languages that:

Are strongly typed or loosely typed,

Provide support for object orientation and abstraction of data types,

Use static or dynamic scoping rules,

Provide memory management (i.e. garbage collection) or give the developer precise control over heap allocation and recycling,

Provide closures so that functions can be passed around like variables,

Allow easy access to array slices, and those that don't,

Check the internal correctness of data, and those that do not,

Provide diverse and comprehensive suites of built-in functionality, and those with limited features,

Use pre-processors and macros to selectively expand code or substitute source, etc.

Answer:

Introduction and clarification of language purpose

Of the many programming languages created since the development of Plankalkül in the 1940s, few were designed specifically for the problem domain of banking. COBOL (Common Business Oriented Language) was widely used for writing business software until the 1980s, when C and C++ began to replace it.

Objectives Of Programming Language

Programming language assignment help enables students to understand the purpose of programming languages:

To help users communicate with computers by giving instructions through a programming language

To determine the design patterns of a programming language

To evaluate the trade-offs between different programming language features

To determine the benefits of recent languages by comparing them with traditional ones

To observe the programming patterns associated with different language features.

To study the efficiency of programming languages in the production and development of software. For more information, take the help of our programming language assignments.

Types Of Programming Languages

Our programming language assignment help experts explain a variety of programming languages. The description of the main programming languages is given below:

C Language

C is considered one of the most popular general-purpose languages, and it has served as a building block for various popular programming languages such as Java, C#, Python and JavaScript. C is an effective language for implementing operating systems, and many systems and applications are built in it. For more information on C language, get our C Programming Assignment Assistance service.

Java

It is an object-oriented, concurrent and class-based general-purpose programming language. It works on the principle of 'write once, run anywhere', which means that compiled code can run on any supporting platform without recompilation. Regardless of the computer's architecture, a Java application can run on any Java virtual machine (JVM) because it is compiled to bytecode. For more information on Java, take the help of programming language assignments

C++

It is a systems programming language with imperative, generic and object-oriented programming features. C++ is used to build embedded systems and operating system kernels. It is a compiled language that can be used across multiple platforms, including servers, desktops and entertainment software applications. C++ is standardized by ISO; C# is a separate language rather than a newer version of it. For more information on C++, get our programming language assignment help.

C#

This object-oriented programming language is built for Microsoft's .NET platform. The compatibility of C# with .NET enhances the development of portable applications and gives users access to advanced web services. C# supports SOAP (Simple Object Access Protocol) and XML (Extensible Markup Language), which simplifies programming by removing the need for additional code at each step. In addition, C# plays an efficient role in introducing advanced services into the industry at relatively low cost. Big brands such as LEAD Technologies, ComponentSource, Seagate Software and Apex Software use ISO standardized C# applications. Our programming language assignment help explains more about this.

Python

It is a high-level general-purpose programming language. Its design emphasizes readable code, and compared with Java and C++ it lets programmers express concepts in fewer lines of code. For more information, get our programming language assignment help.

SQL

SQL stands for Structured Query Language and is a special-purpose programming language. It is designed for managing data held in a relational database management system and for stream processing in a relational data stream management system. SQL serves as both a data definition language and a data manipulation language, and it is based on relational algebra and tuple relational calculus. For more information, try our programming language assignment help.

JavaScript

It is a prototype-based scripting language with dynamic typing and first-class functions. As an important part of web browsers, JavaScript makes it possible to control the browser, communicate asynchronously, let client-side scripts interact with the user and change the content of the documents displayed. JavaScript is renowned as a versatile language due to its functional, object-oriented and imperative programming features. For more information on JavaScript, get our programming language assignment help.

Different Levels Of Programming Languages

A programming language is broadly categorized according to its levels. Our programming language assignment help services explain this. The importance of each level is considered in detail below.

1. Micro-code

This machine-specific code directs each component of the CPU to perform very small-scale operations

Programmers write instructions in microcode to create microprograms

Commonly used in CPUs and other processing units such as microcontrollers, channel controllers, disk controllers, digital signal processors, graphics processing units, network interface controllers, etc.

Microcode usually translates machine instructions into sequences of circuit-level operations and resides in special high-speed memory. For more on microcode, get our programming language assignment help.

2. Machine code

Machine code is a series of instructions executed directly by a computer's CPU

Machine code is specific to the architecture of the computer

Numeric machine code is considered the hardware-dependent primitive programming language, the lowest-level representation of an assembled computer program

However, writing programs directly in numeric machine code is tedious and error-prone. Our programming assignment authors can elaborate on this with the help of our programming language assignments.

3. Assembly Language

It belongs to the domain of low-level programming languages

Assembly language is converted into executable machine code by a utility program called an assembler

This is different from most high-level programming languages, which are generally portable across multiple systems

Each low-level machine instruction or operation is represented by a mnemonic

Operands such as symbols, labels and expressions are usually needed to form a complete instruction

Macro assemblers add a macro facility, so that code can be expressed as macro instructions

Control of the assembly process, support for program development and debugging aids are some of the important features provided by assemblers. To help with assembly language, take the help of our programming language assignments.

4. Low-level programming language

It is a type of programming language that provides little or no abstraction from a computer's instruction set architecture

Low-level language refers to both assembly language and machine code

The word "low" refers to the small amount of abstraction between the language and machine language, which is why such languages are described as close to the hardware

A low-level language does not require a compiler or interpreter to be translated into machine code; assembly language needs only an assembler

Programs written in low-level languages can run very quickly with a small memory footprint

However, the programmer has to manage many technical details, so low-level languages are difficult to use. For low-level programming language assistance, try our programming language assignment help.

5. High-level programming language

This type of programming language provides strong abstraction from the details of the computer

It is easier to understand and use than a low-level language

A high-level language relies on a compiler or interpreter to translate the program into machine code

High-level languages deal with abstractions such as variables, arrays, objects, threads, locks, loops, subroutines, functions, and Boolean and complex arithmetic expressions.

Compared with low-level languages, high-level languages emphasize ease of use over optimal program efficiency. For high-level programming language assistance, get our programming language assignment help.

Difference Between High Level And Low Level Programming Language

Translator

A translator converts written instructions into machine language before they are executed. Our programming assignment writing services explain translators in more detail.

Translators are broadly classified into three main types.

Assembler

It converts programs written in assembly language to machine code before execution

Compiler

It converts programs written in high-level language to machine code before execution

Interpreter

It directly interprets high-level language instructions and sends them for execution.

Similarities between interpreters and compilers

High-level languages are translated into machine code by both interpreters and compilers

Both identify errors and report them in error messages

Both interpreters and compilers find memory addresses to store data and machine code. Contact us for more information about programming assignment writing services.

Why Is Writing Programming Language Assignments Difficult For Students?

Students will clearly face problems writing their C programming language assignments if they do not know the basics of the programming language. Writing programming language assignments seems difficult for students because they try to understand programs directly and skip the early learning modules of the language. Our programming assignment writers take care of these problems.

A basic but important weakness is that students do not focus on the key differences between high-level and low-level programming languages. This leads to serious mistakes in their assignments. Students are advised to seek professional assignment assistance, especially when preparing their programming language assignments. Students can get guidance on each stage of program execution so that it becomes interesting and simple. Our programming language assignment help addresses such issues.


Text

Option Recompile

When doing query tuning, sometimes the answer can be that it’s just better to get SQL Server to recompile a plan based on the data passed in. You might have a batch process that runs every hour and sometimes it has only a few hundred rows, other times it has a few billion rows. The ideal query plan for each could be vastly different. We could try indexing for these plans, we could try rewriting…
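One way to ask for a fresh plan on every run is the statement-level `OPTION (RECOMPILE)` hint. A minimal sketch for a batch like the one described, in which the procedure `dbo.ProcessHourlyBatch`, the table `dbo.BatchRows` and its columns are all hypothetical names used only for illustration:

```sql
-- Hypothetical procedure: only the hinted statement is recompiled on each
-- execution, so its plan is built for the actual @BatchID value (and row count).
CREATE PROCEDURE dbo.ProcessHourlyBatch
    @BatchID int
AS
BEGIN
    SET NOCOUNT ON;

    SELECT r.RowID, r.Payload
    FROM dbo.BatchRows AS r          -- hypothetical table
    WHERE r.BatchID = @BatchID
    OPTION (RECOMPILE);
END;
```

The cost is a compilation on every call, which is usually negligible for an hourly batch but can matter for a statement that runs many times per second.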


Text

Amazon RDS for SQL Server now supports SQL Server 2019