#PowerShell process management


Text

PowerShell Kill a Process from the Command Line


Killing a process has long been the quickest way to deal with an unresponsive Windows program that won’t close by the usual means of clicking the “X” in the top right-hand corner. Generally speaking, Windows Task Manager is the first tool most people use to find and close processes that are not responding. However, using the command line, we can leverage command prompt commands and…
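As a rough illustration of terminating a process from a script rather than from Task Manager, here is a minimal Python sketch. It is not taken from the linked article: the helper names are hypothetical, and a sleeping child process stands in for an unresponsive program. In PowerShell and Command Prompt, the Stop-Process cmdlet and the taskkill command named in the tags play the same role.

```python
import subprocess
import sys


def start_sleeper(seconds: int = 60) -> subprocess.Popen:
    """Start a child Python process that only sleeps (a stand-in for a hung program)."""
    return subprocess.Popen(
        [sys.executable, "-c", f"import time; time.sleep({seconds})"]
    )


def kill_process(proc: subprocess.Popen, timeout: float = 5.0) -> int:
    """Ask the process to stop, then force-kill it if it is still alive after `timeout`."""
    proc.terminate()  # SIGTERM on POSIX, TerminateProcess on Windows
    try:
        return proc.wait(timeout=timeout)
    except subprocess.TimeoutExpired:
        proc.kill()  # forceful fallback
        return proc.wait()


if __name__ == "__main__":
    child = start_sleeper()
    print("child PID:", child.pid)
    print("exit code after kill:", kill_process(child))
```

The two-step approach (polite request first, forced kill as a fallback) is a design choice of this sketch; a forced kill gives the program no chance to clean up.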


#advanced process scripting#automate killing processes#managing remote server processes#PowerShell for Windows environments#PowerShell force termination#PowerShell process management#PowerShell vs Command Prompt#Stop-Process cmdlet explained#terminating processes in Windows#using taskkill command

0 notes

Note

okay then give me your controversial linux opinions!

Thanks!

systemd is fine but you should use others to know how an init was supposed to work

Manjaro is worse than the devil

FISH is bad because people see fish features and assume they're exclusive to fish (not true)

ZSH is the Best Shell and we should link it to /bin/sh and everything. OhMyZsh is a crime against humanity, which adds runtime bloat to zsh and pushes people onto fish

Disk space bloat is good, actually. Give me all the files. This is completely different from runtime bloat

PowerShell is actually a decent language for Linux scripts. It has types and can process JSON, but shell commands are trivial as well.

GNOME system monitor is a better task manager than htop

The only filesystem you should ever need is BTRFS

Emacs is the best vim

21 notes


Text

Gaining Windows Credentialed Access Using Mimikatz and WCE

Prerequisites & Requirements

In order to follow along with the tools and techniques utilized in this document, you will need to use one of the following offensive Linux distributions:

Kali Linux

Parrot OS

The following is a list of recommended technical prerequisites that you will need in order to get the most out of this course:

Familiarity with Linux system administration.

Familiarity with Windows.

Functional knowledge of TCP/IP.

Familiarity with penetration testing concepts and life-cycle.

Note: The techniques and tools in this document were run on a Kali Linux 2021.2 virtual machine.

MITRE ATT&CK Credential Access Techniques

Credential Access consists of techniques for stealing credentials like account names and passwords. Techniques used to get credentials include keylogging and credential dumping. Using legitimate credentials can give adversaries access to systems, make them harder to detect, and provide the opportunity to create more accounts to help achieve their goals.

The techniques outlined under the Credential Access tactic provide us with a clear and methodical way of extracting credentials and hashes from memory on a target system.

The following is a list of key techniques and sub-techniques that we will be exploring:

Dumping SAM Database.

Extracting clear-text passwords and NTLM hashes from memory.

Dumping LSA Secrets

Scenario

Our objective is to extract credentials and hashes from memory on the target system after we have obtained an initial foothold. In this case, we will be taking a look at how to extract credentials and hashes with Mimikatz.

Note: We will be taking a look at how to use Mimikatz with Empire; however, the same techniques can also be replicated with Meterpreter or other listeners, as the Mimikatz syntax is universal.

Meterpreter is a Metasploit payload that provides attackers with an interactive shell that can be used to run commands, navigate the filesystem, and download or upload files to and from the target system.

Credential Access With Mimikatz

Mimikatz is a Windows post-exploitation tool written by Benjamin Delpy (@gentilkiwi). It allows for the extraction of plaintext credentials from memory, password hashes from local SAM/NTDS.dit databases, advanced Kerberos functionality, and more.

The SAM (Security Account Manager) database is a file on Windows systems that stores users’ password hashes and can be used to authenticate users both locally and remotely.

The Mimikatz codebase is located at https://github.com/gentilkiwi/mimikatz/, and there is also an expanded wiki at https://github.com/gentilkiwi/mimikatz/wiki .

In order to extract cleartext passwords and hashes from memory on a target system, we will need an Empire agent with elevated privileges.

Extracting Cleartext Passwords & Hashes From Memory

Empire uses an adapted version of PowerSploit’s Invoke-Mimikatz function written by Joseph Bialek to execute Mimikatz functionality in PowerShell without touching disk.

PowerSploit is a collection of PowerShell modules that can be used to aid penetration testers during all phases of an assessment.

Empire can take advantage of nearly all Mimikatz functionality through PowerSploit’s Invoke-Mimikatz module.

We can invoke the Mimikatz prompt on the target agent by following the procedures outlined below.

The first step in the process involves interacting with your high-integrity agent. This can be done by running the following command in the Empire client:

interact <AGENT-ID>/<NAME>

The next step is to invoke Mimikatz in the agent shell. This can be done by running the following command:

mimikatz

This will invoke Mimikatz on the target system and you should be able to interact with the Mimikatz prompt.

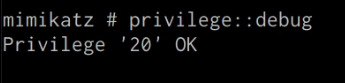

Before we take a look at how to dump cleartext credentials from memory with Mimikatz, you should confirm that you have the required privileges to take advantage of the various Mimikatz features. This can be done by running the following command in the Mimikatz prompt:

mimikatz # privilege::debug

If you have the correct privileges you should receive the message “Privilege ‘20’ OK” as shown in the following screenshot.

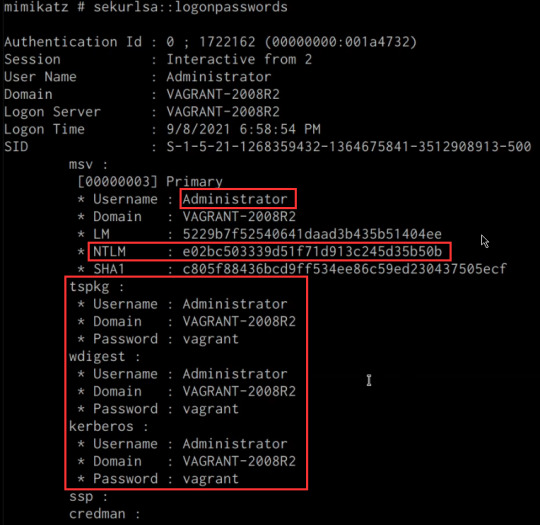

We can now extract cleartext passwords from memory with Mimikatz by running the following command in the Mimikatz prompt:

mimikatz # sekurlsa::logonpasswords

If successful, Mimikatz will output a list of cleartext passwords for user accounts and service accounts as shown in the following screenshot.

In this scenario, we were able to obtain the cleartext password for the Administrator user as well as the NTLM hash.

NTLM is the default hash format used by Windows to store passwords.

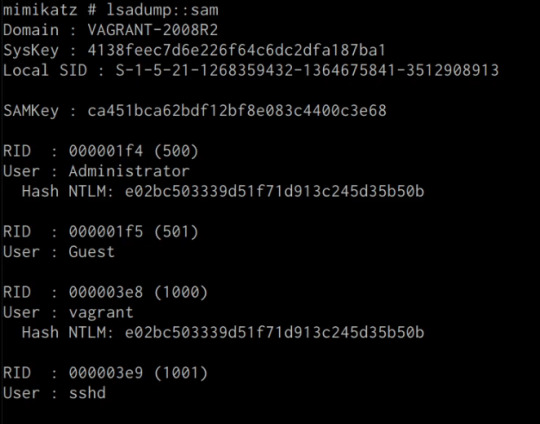

Dumping SAM Database

We can also dump the contents of the SAM (Security Account Manager) database with Mimikatz. This process also requires an agent with administrative privileges.

The Security Account Manager (SAM) is a database file on modern Windows systems that stores user account password hashes. It can be used to authenticate local and remote users.

We can dump the contents of the SAM database on the target system by running the following command in the Mimikatz prompt:

mimikatz # lsadump::sam

If successful, Mimikatz will output the contents of the SAM database as shown in the following screenshot.

As the screenshot highlights, the SAM database contains the user accounts and their respective NTLM hashes.

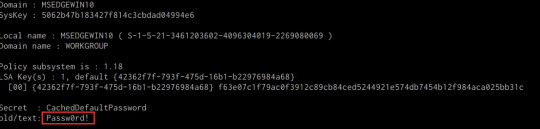

LSA Secrets

Mimikatz can also dump LSA Secrets, a storage location used by the Local Security Authority (LSA) on Windows.

You can learn more about LSA and how it works here: https://networkencyclopedia.com/local-security-authority-lsa/

The purpose of the Local Security Authority is to manage a system’s local security policy. As a result, it typically stores data pertaining to user accounts, such as user logins, authentication of users, and their LSA secrets, among other things. Note that this technique also requires an agent with elevated privileges.

We can dump LSA Secrets on the target system by running the following command in the Mimikatz prompt:

mimikatz # lsadump::secrets

If successful, Mimikatz will output the LSA Secrets on the target system as shown in the following screenshot.

So far, we have been able to extract both cleartext credentials and NTLM hashes for all the user and service accounts on the system. These credentials and hashes will come in handy when we explore lateral movement techniques and how we can legitimately authenticate with the target system using the credentials and hashes we have extracted.

3 notes


Text

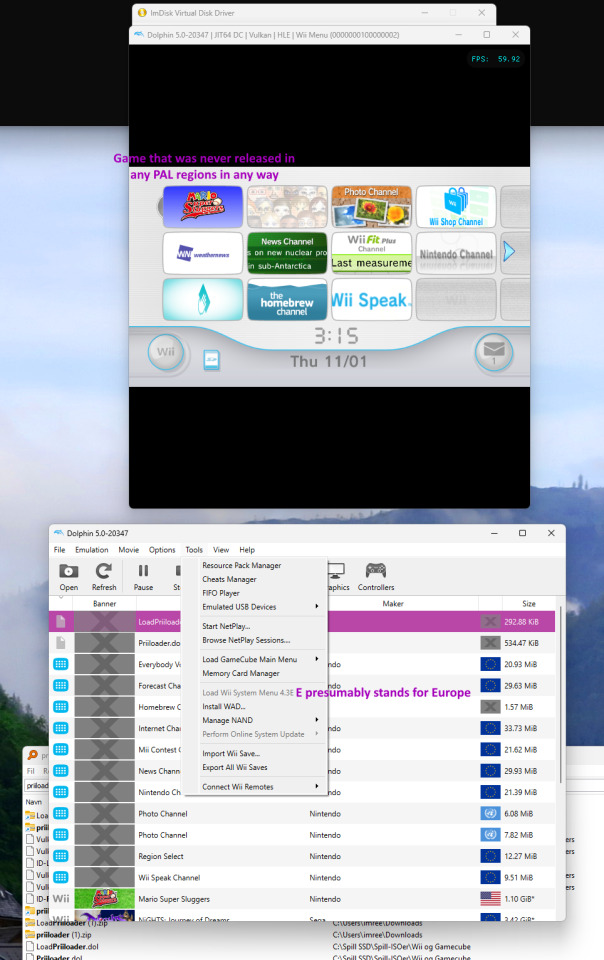

Managed to install Wii System Menu, News Channel, Homebrew Channel, and Priiloader in Dolphin

A chain of events led me to see the potential of what Dolphin was truly capable of:

Installing the Wii System Menu was the easiest part, far easier than on PCSX2. I just ran Tools → Perform Online System Update → Whichever region suits you best (Note that the region also determines available Forecast Channel countries to some extent).

——————————————————

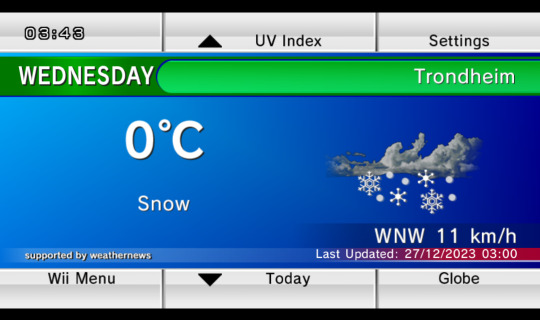

Next, enable Options → Settings → Wii → Enable WiiConnect24 via WiiLink in Dolphin, then go to Wii System Menu → Settings → WiiConnect24 and turn it on. In Europe, the System Menu's default country is Switzerland, which should be changed in the System Menu settings to your preferred country. You'll be prompted with a long and somewhat silly TOS by Nintendo, which you can accept. Forecast Channel will now work.

——————————————————

News Channel doesn't work through this, however: it fails with Error 107305, and at the time of writing, the Dolphin Wiki wrongly claims that it works.

Only through https://github.com/riiconnect24/RiiConnect24-Patcher/releases does it have a chance to work. On Windows, it's not sufficient to just click on the .bat no matter what the Releases page says. Instead, open PowerShell, run "cd [whatever folder you saved it in, with single apostrophes if the folder path has spaces]", then run ".\RiiConnect24Patcher.bat". Running the resulting menu should go pretty easy.

The files generated by the menu can then be pasted into the folder(s) where Dolphin detects games from, then right-click on the game's row in Dolphin and choose "Install to the NAND". News Channel will now work.

——————————————————

Now comes the crown jewel: Get Wii System Menu to show disc banners for games from other regions. For instance, Mario Super Sluggers on a PAL System Menu, or Monster Hunter G on an NTSC-NA System Menu. This was a tough one for sure:

Since fail0verflow's official repo doesn't have meaningfully downloadable versions of Homebrew Channel, one has to go to https://github.com/FIX94/hbc/releases instead. Once it has been downloaded, paste it in the Dolphin game detection folder, where it can be launched directly and/or from Wii System Menu.

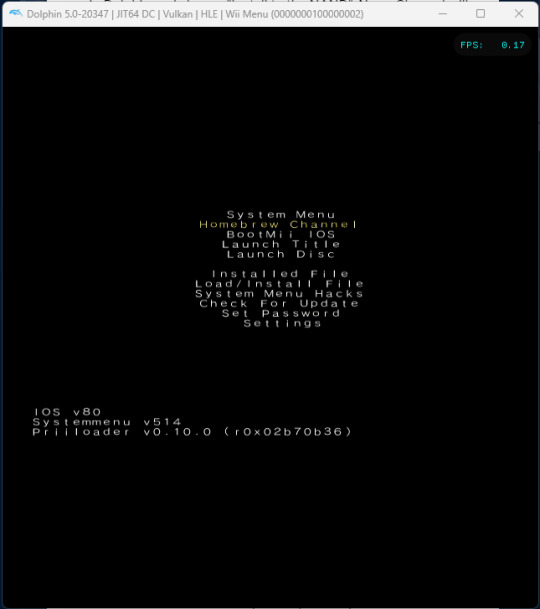

Once it has been confirmed that Homebrew Channel launches correctly, one needs to add Priiloader Installer and very likely also Launch Priiloader to Dolphin's emulated SD card; the standard Wii versions of both files will work. Dolphin didn't exactly make the process of adding files to the SD card easy, however:

Download ImDisk Manager from SourceForge, then open PowerShell once again, run "cd [the folder you unpacked it to]", and then run ".\install.bat". Once installed correctly, the launch file is so hard to find that it's actually better to search for "ImDisk Virtual Disk Driver" in the Windows start menu.

It's likely best if Dolphin isn't running at this point. Run ImDisk Virtual Disk Driver → Mount new… → The exact filepath of the SD card that is used in "Dolphin → Options → Settings → SD Card Path:". Checking the "Removable Media" option in ImDisk is also said to be an advantage. At this point, the card will show up as a disk in Windows File Explorer, likely G:/ or something like that.

Extract Priiloader Installer and (if at all possible) Launch Priiloader into that disk. Then cut and paste the folders' "apps" folders onto the root (Top menu) of the disk. Don't worry about 2 folders having the same name, they will merge into each other.

In ImDisk, click on the disk and choose "Remove". Any complaints it claims about lacking rights are fake, and can be clicked OK on. If it complains about "Invalid filesize", click back and forth on the options of "Size of virtual disk" and then try "Remove" again.

Run Dolphin → Homebrew Channel, and see if Priiloader Installer and Launch Priiloader show up as launchable apps in Homebrew Channel. If yes, run Priiloader Installer.

There is one installation process across all Wii OSs, so don't worry about there being no specific Dolphin process. Once completed, the installer may hang on "Exiting…", but the emulator can safely be closed at this point.

To use Launch Priiloader, you have 2 options: 1) Launch Homebrew Channel → Launch Priiloader. 2) Rename the boot.dol from a LoadPriiloader folder to something descriptive, paste the .dol into the Dolphin game detection folder, and run it directly.

All the relevant options for Wii consoles (though not those exclusive to Wii U) will indeed show, including the good stuff like "Region Free EVERYTHING" and "Auto-Click A on Warning Message". If you want to, you can safely use the "System Menu" on top afterwards.

The result: Showtime!

(Piantas are wildly OP in that game, by the way. They live up to their nickname Chucksters.)

#wii#nintendo wii#dolphin emulator#games#tech#forecast channel#homebrew#homebrew channel#priiloader#mario super sluggers#well worth spending 2 hours to see if I could succeed at it#nintendo#long post

2 notes


Text

Years ago I worked for a BigLaw firm in the DC area. My job involved, among other things, handling cases with large-scale electronic discovery (sometimes entire servers’ worth of documents).

Well, when you’re dealing with docs on that scale, particularly docs from clients that don’t have good data management practices, you get massive numbers of files with the same file names and contents and have to figure out what is relevant, what is privileged, and what should or should not be produced to opposing parties. Some of this stuff can be pre-screened for responsiveness through agreed-upon search terms and automated processes, but once the chaff is gone many of the remaining docs have to be screened for privilege by hand - and if a judgment call has to be made about privilege or relevance, that hand has to be an attorney’s. Nor can you just discard “duplicates” - if 25 seeming duplicates are saved to the server by 25 different people in 25 different directories, they’re never truly identical. It can be highly relevant to know who read or saved or received or ignored those 25 otherwise-“identical” file copies, and when and how each person did so. That means metadata review, and we could not always delegate or farm that out.

One issue in a few large related cases I worked hinged upon this sort of file comparison - cross-referencing contents of tens of thousands of files, of which many were near-identical in all but metadata, all located within deeply nested subfolders. I decided that a particular task could be best achieved by pulling and running comparisons of specific metadata, but this would have been a massive time dump as the software we had couldn’t accomplish exactly what I wanted - and time is the client’s money.

So I did some research and wrote a couple of PowerShell scripts to accomplish this myself. I figured the time spent would pale in comparison to the amount of time the task would have taken by hand.

Just one problem: at that firm, as at most large firms of that caliber, PowerShell required (as it should) system admin access.

I went down to IT and described what I needed: to please take a look at the scripts and make sure I wasn’t trying to do something monumentally stupid or compromise anything confidential, and if acceptable, to run them. The two IT guys who were in that day looked at me, nonplussed. We’d never spoken before. I don’t think I’d actually ever heard either of them speak more than two words. I handed them my laptop to review the scripts.

They decided they didn’t need to run the scripts for me. They acted like Christmas had come early. They went from silent to freaking out in about two seconds flat. It was really not super impressive scripting work. It only hit me afterwards that the excitement was solely and completely due to me not being the seventy-fifth person that month to bring them something that could have been solved by “turning it off and back on again.”

They ultimately sent me back to my office, gave me full system admin privileges, and said “Godspeed, ma’am.” I later learned I was the only person at this massive firm to have that level of access aside from IT. I highly, highly doubt the partners ever knew.

124K notes


Text

The Role of an Automation Engineer in Modern Industry

In today’s fast-paced technological landscape, automation has become the cornerstone of efficiency and innovation across industries. At the heart of this transformation is the automation engineer—a professional responsible for designing, developing, testing, and implementing systems that automate processes and reduce the need for human intervention.

Who Is an Automation Engineer?

An automation engineer specializes in creating and managing technology that performs tasks with minimal human oversight. They work across a variety of industries including manufacturing, software development, automotive, energy, and more. Their primary goal is to optimize processes, improve efficiency, enhance quality, and reduce operational costs.

Key Responsibilities

Automation engineers wear many hats depending on their domain. However, common responsibilities include:

Designing Automation Systems: Creating blueprints and system architectures for automated machinery or software workflows.

Programming and Scripting: Writing code for automation tools using languages such as Python, Java, C#, or scripting languages like Bash or PowerShell.

Testing and Debugging: Developing test plans, running automated test scripts, and resolving bugs to ensure systems run smoothly.

Maintenance and Monitoring: Continuously monitoring systems to identify issues and perform updates or preventive maintenance.

Integration and Deployment: Implementing automated systems into existing infrastructure while ensuring compatibility and scalability.

Collaboration: Working closely with cross-functional teams such as developers, quality assurance, operations, and management.

Types of Automation Engineers

There are several specializations within automation engineering, each tailored to different industries and objectives:

Industrial Automation Engineers – Focus on automating physical processes in manufacturing using tools like PLCs (Programmable Logic Controllers), SCADA (Supervisory Control and Data Acquisition), and robotics.

Software Automation Engineers – Automate software development processes, including continuous integration and deployment (CI/CD) and testing.

Test Automation Engineers – Specialize in creating automated test scripts and frameworks to verify software functionality and performance.

DevOps Automation Engineers – Streamline infrastructure management, deployment, and scaling through tools like Jenkins, Ansible, Kubernetes, and Docker.

Skills and Qualifications

To thrive in this role, an automation engineer typically needs:

Technical Skills: Proficiency in programming languages, scripting, and automation tools.

Analytical Thinking: Ability to analyze complex systems and identify areas for improvement.

Knowledge of Control Systems: Especially important in industrial automation.

Understanding of Software Development Life Cycle (SDLC): Crucial for software automation roles.

Communication Skills: To effectively collaborate with other teams and document systems.

A bachelor's degree in engineering, computer science, or a related field is usually required. Certifications covering Siemens or Rockwell Automation platforms, or tools like Selenium or Jenkins, can enhance job prospects.

The Future of Automation Engineering

The demand for automation engineers is expected to grow significantly as businesses continue to embrace digital transformation and Industry 4.0 principles. Emerging trends such as artificial intelligence, machine learning, and Internet of Things (IoT) are expanding the scope and impact of automation.

Automation engineers are not just contributors to innovation—they are drivers of it. As technology evolves, their role will become increasingly central to building smarter, safer, and more efficient systems across the globe.

Conclusion

An automation engineer is a vital link between traditional processes and the future of work. Whether improving assembly lines in factories or ensuring flawless software deployment in tech companies, automation engineers are transforming industries, one automated task at a time. Their ability to blend engineering expertise with problem-solving makes them indispensable in today’s digital world.

0 notes

Text

Certified DevSecOps Professional: Career Path, Salary & Skills

Introduction

As the demand for secure, agile software development continues to rise, the role of a Certified DevSecOps Professional has become critical in modern IT environments. Organizations today are rapidly adopting DevSecOps to shift security left in the software development lifecycle. This shift means security is no longer an afterthought—it is integrated from the beginning. Whether you're just exploring the DevSecOps tutorial for beginners or looking to level up with a professional certification, understanding the career landscape, salary potential, and required skills can help you plan your next move.

This comprehensive guide explores the journey of becoming a Certified DevSecOps Professional, the skills you'll need, the career opportunities available, and the average salary you can expect. Let’s dive into the practical and professional aspects that make DevSecOps one of the most in-demand IT specialties in 2025 and beyond.

What Is DevSecOps?

Integrating Security into DevOps

DevSecOps is the practice of integrating security into every phase of the DevOps pipeline. Traditional security processes often occur at the end of development, leading to delays and vulnerabilities. DevSecOps introduces security checks early in development, making applications more secure and compliant from the start.

The Goal of DevSecOps

The ultimate goal is to create a culture where development, security, and operations teams collaborate to deliver secure and high-quality software faster. DevSecOps emphasizes automation, continuous integration, continuous delivery (CI/CD), and proactive risk management.

Why Choose a Career as a Certified DevSecOps Professional?

High Demand and Job Security

The need for DevSecOps professionals is growing fast. According to a Cybersecurity Ventures report, there will be 3.5 million unfilled cybersecurity jobs globally by 2025. Many of these roles demand DevSecOps expertise.

Lucrative Salary Packages

Because of the specialized skill set required, DevSecOps professionals are among the highest-paid tech roles. Salaries can range from $110,000 to $180,000 annually depending on experience, location, and industry.

Career Versatility

This role opens up diverse paths such as:

Application Security Engineer

DevSecOps Architect

Cloud Security Engineer

Security Automation Engineer

Roles and Responsibilities of a DevSecOps Professional

Core Responsibilities

Integrate security tools and practices into CI/CD pipelines

Perform threat modeling and vulnerability scanning

Automate compliance and security policies

Conduct security code reviews

Monitor runtime environments for suspicious activities

Collaboration

A Certified DevSecOps Professional acts as a bridge between development, operations, and security teams. Strong communication skills are crucial to ensure secure, efficient, and fast software delivery.

Skills Required to Become a Certified DevSecOps Professional

Technical Skills

Scripting Languages: Bash, Python, or PowerShell

Configuration Management: Ansible, Chef, or Puppet

CI/CD Tools: Jenkins, GitLab CI, CircleCI

Containerization: Docker, Kubernetes

Security Tools: SonarQube, Checkmarx, OWASP ZAP, Aqua Security

Cloud Platforms: AWS, Azure, Google Cloud

Soft Skills

Problem-solving

Collaboration

Communication

Time Management

DevSecOps Tutorial for Beginners: A Step-by-Step Guide

Step 1: Understand the Basics of DevOps

Before diving into DevSecOps, make sure you're clear on DevOps principles, including CI/CD, infrastructure as code, and agile development.

Step 2: Learn Security Fundamentals

Study foundational cybersecurity concepts like threat modeling, encryption, authentication, and access control.

Step 3: Get Hands-On With Tools

Use open-source tools to practice integrating security into DevOps pipelines:

# Example: Running a static analysis scan with SonarQube
# (newer SonarQube releases prefer sonar.token over the deprecated sonar.login)
sonar-scanner \
  -Dsonar.projectKey=myapp \
  -Dsonar.sources=. \
  -Dsonar.host.url=http://localhost:9000 \
  -Dsonar.login=your_token

Step 4: Build Your Own Secure CI/CD Pipeline

Practice creating pipelines with Jenkins or GitLab CI that include steps for:

Static Code Analysis

Dependency Checking

Container Image Scanning
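The gating idea behind these steps can be modelled in a few lines. This is a rough Python sketch, not a real Jenkins or GitLab CI configuration: the `run_pipeline` function and the stand-in checks are hypothetical, and a real pipeline would call an actual scanner in each stage.

```python
from typing import Callable, List, Tuple

# A stage is a display name plus a check that returns True when the stage passes.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure, so later stages never run."""
    log: List[str] = []
    for name, check in stages:
        passed = check()
        log.append(f"{name}: {'pass' if passed else 'FAIL'}")
        if not passed:
            break  # fail fast: a security finding blocks the rest of the pipeline
    return log


# Stand-in checks; the second one simulates a vulnerable dependency being found.
stages = [
    ("Static Code Analysis", lambda: True),
    ("Dependency Checking", lambda: False),
    ("Container Image Scanning", lambda: True),
]

results = run_pipeline(stages)
for line in results:
    print(line)
```

Stopping at the first failed stage is what makes a security check a gate rather than a report: the image scan never runs on a build that already failed dependency checking.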

Step 5: Monitor and Respond

Set up tools like Prometheus and Grafana to monitor your applications and detect anomalies.

Certification Paths for DevSecOps

Popular Certifications

Certified DevSecOps Professional

Certified Kubernetes Security Specialist (CKS)

AWS Certified Security - Specialty

GIAC Cloud Security Automation (GCSA)

Exam Topics Typically Include:

Security in CI/CD

Secure Infrastructure as Code

Cloud-native Security Practices

Secure Coding Practices

Salary Outlook for DevSecOps Professionals

Salary by Experience

Entry-Level: $95,000 - $115,000

Mid-Level: $120,000 - $140,000

Senior-Level: $145,000 - $180,000+

Salary by Location

USA: Highest average salaries, especially in tech hubs like San Francisco, Austin, and New York.

India: ₹9 LPA to ₹30+ LPA depending on experience.

Europe: €70,000 - €120,000 depending on country.

Real-World Example: How Companies Use DevSecOps

Case Study: DevSecOps at a Fintech Startup

A fintech company integrated DevSecOps tools like Snyk, Jenkins, and Kubernetes to secure their microservices architecture. They reduced vulnerabilities by 60% in just three months while speeding up deployments by 40%.

Key Takeaways

Early threat detection saves time and cost

Automated pipelines improve consistency and compliance

Developers take ownership of code security

Challenges in DevSecOps and How to Overcome Them

Cultural Resistance

Solution: Conduct training and workshops to foster collaboration between teams.

Tool Integration

Solution: Choose tools that support REST APIs and offer strong documentation.

Skill Gaps

Solution: Continuous learning and upskilling through real-world projects and sandbox environments.

Career Roadmap: From Beginner to Expert

Beginner Level

Understand DevSecOps concepts

Explore basic tools and scripting

Start with a DevSecOps tutorial for beginners

Intermediate Level

Build and manage secure CI/CD pipelines

Gain practical experience with container security and cloud security

Advanced Level

Architect secure cloud infrastructure

Lead DevSecOps adoption in organizations

Mentor junior engineers

Conclusion

The future of software development is secure, agile, and automated—and that means DevSecOps. Becoming a Certified DevSecOps Professional offers not only job security and high salaries but also the chance to play a vital role in creating safer digital ecosystems. Whether you’re following a DevSecOps tutorial for beginners or advancing into certification prep, this career path is both rewarding and future-proof.

Take the first step today: Start learning, start practicing, and aim for certification!

1 note


Text

Extracting .pak/.utoc/.ucas files from Oblivion Remastered

Some Oblivion Remastered game assets are packed in Unreal Engine archives and can be found, for example, in

C:\Program Files\steam\steamapps\common\Oblivion Remastered\OblivionRemastered\Content\PaksContent\Paks\OblivionRemastered-Windows.pak

To extract these, you'll need to use UnrealPak from the Unreal Engine.

Step 1

Install Unreal Engine. This process is multi-step and may change by the time you read this, so please follow the directions provided by Epic.

Step 2

Find the path to UnrealPak.exe, e.g.,

C:\Program Files\Epic Games\UE_5.5\Engine\Binaries\Win64\UnrealPak.exe

Step 3

Open PowerShell in a directory of your choosing and run UnrealPak. Because the executable path is quoted, prefix it with the call operator (&):

& <Path-To-UnrealPak> <Path-To-Pak-File> -Extract <Path-To-Extract>

For example:

& 'C:\Program Files\Epic Games\UE_5.5\Engine\Binaries\Win64\UnrealPak.exe' 'C:\Program Files\steam\steamapps\common\Oblivion Remastered\OblivionRemastered\Content\PaksContent\Paks\OblivionRemastered-Windows.pak' -Extract C:\tmp\OblivionAssets
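The same invocation can also be scripted. Below is a minimal Python sketch that reuses the example paths from this post; `build_unrealpak_command` and `extract_pak` are hypothetical helper names. Passing the arguments as a list means paths containing spaces need no extra quoting.

```python
import subprocess
from typing import List


def build_unrealpak_command(unrealpak_exe: str, pak_file: str, extract_dir: str) -> List[str]:
    """Build the argument list: <UnrealPak> <pak file> -Extract <output directory>."""
    # Each path is its own list element, so spaces inside a path are preserved as-is.
    return [unrealpak_exe, pak_file, "-Extract", extract_dir]


def extract_pak(unrealpak_exe: str, pak_file: str, extract_dir: str) -> None:
    """Run UnrealPak; raises subprocess.CalledProcessError if it exits with an error."""
    subprocess.run(build_unrealpak_command(unrealpak_exe, pak_file, extract_dir), check=True)


# Example paths copied from the post; adjust them to your own install.
command = build_unrealpak_command(
    r"C:\Program Files\Epic Games\UE_5.5\Engine\Binaries\Win64\UnrealPak.exe",
    r"C:\Program Files\steam\steamapps\common\Oblivion Remastered\OblivionRemastered\Content\PaksContent\Paks\OblivionRemastered-Windows.pak",
    r"C:\tmp\OblivionAssets",
)
print(command)
```

Calling `extract_pak(...)` with the same three arguments would run the extraction itself; the sketch only builds and prints the command so it can be checked first.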

Additional Note

UnrealPak may produce a fatal error indicating that it "Ran out of memory."

To get around this, you'll need to increase the virtual memory on the drive where the extraction occurs:

Right-click the Start menu and choose "System."

On the right, click on "Advanced System Settings."

Under "Performance," click "Settings…"

Go to the "Advanced" tab.

Under "Virtual memory," click "Change…"

Uncheck "Automatically manage paging file size for all drives."

Choose the drive containing the asset files (in my case, B:)

Select "Custom size" and enter the initial and maximum sizes in MB (I used minimum 16 and maximum 200000).

Click "Set."

Click OK and apply any changes. A restart is likely necessary.

Video instructions for those so inclined.

1 note


Text

Boost Your Fortnite FPS in 2025: The Complete Optimization Guide


Unlock Maximum Fortnite FPS in 2025: Pro Settings & Hidden Tweaks Revealed

In 2025, achieving peak performance in Fortnite requires more than just powerful hardware. Even the most expensive gaming setups can struggle with inconsistent frame rates and input lag if the system isn’t properly optimized. This guide is designed for players who want to push their system to its limits — without spending more money. Whether you’re a competitive player or just want smoother gameplay, this comprehensive Fortnite optimization guide will walk you through the best tools and settings to significantly boost FPS, reduce input lag, and create a seamless experience.

From built-in Windows adjustments to game-specific software like Razer Cortex and AMD Adrenalin, we’ll break down each step in a clear, actionable format. Our goal is to help you reach 240+ FPS with ease and consistency, using only free tools and smart configuration choices.

Check System Resource Usage First

Before making any deep optimizations, it’s crucial to understand how your PC is currently handling resource allocation. Begin by opening Task Manager (Ctrl + Alt + Delete > Task Manager). Under the Processes tab, review which applications are consuming the most CPU and memory.

Close unused applications like web browsers or VPN services, which often run in the background and consume RAM.

Navigate to the Performance tab to verify that your CPU is operating at its intended base speed.

Confirm that your memory (RAM) is running at its advertised frequency. If it’s not, you may need to enable XMP in your BIOS.

Avoid Complex Scripts — Use Razer Cortex Instead

While there are command-line based options like Windows 10 Debloater (DBLO), they often require technical knowledge and manual PowerShell scripts. For a user-friendly alternative, consider Razer Cortex — a free tool that automates performance tuning with just a few clicks.

Here’s how to use it:

Download and install Razer Cortex.

Open the application and go to the Booster tab.

Enable all core options such as:

Disable CPU Sleep Mode

Enable Game Power Solutions

Clear Clipboard and Clean RAM

Disable Sticky Keys, Cortana, Telemetry, and Error Reporting

Use Razer Cortex Speed Optimization Features

After setting up the Booster functions, move on to the Speed Up section of Razer Cortex. This tool scans your PC for services and processes that can be safely disabled or paused to improve overall system responsiveness.

Steps to follow:

Click Optimize Now under the Speed Up tab.

Let Cortex analyze and adjust unnecessary background activities.

This process will reduce system load, freeing resources for Fortnite and other games.

You’ll also find the Booster Prime feature under the same application, allowing game-specific tweaks. For Fortnite, it lets you pick from performance-focused or quality-based settings depending on your needs.

Optimize Fortnite Graphics Settings via Booster Prime

With Booster Prime, users can apply recommended Fortnite settings without navigating the in-game menu. This simplifies the optimization process, especially for players not familiar with technical configuration.

Key settings to configure:

Resolution: Stick with native (1920x1080 for most) or drop slightly for extra performance.

Display Mode: Use Windowed Fullscreen for better compatibility with overlays and task switching.

Graphics Profile: Choose Performance Mode to prioritize FPS over visuals, or Balanced for a mix of both.

Once settings are chosen, click Optimize, and Razer Cortex will apply all changes automatically. You’ll see increased FPS and reduced stuttering almost immediately.

Track Resource Gains and Performance Impact

Once you’ve applied Razer Cortex optimizations, monitor the system changes in real-time. The software displays how much RAM is freed and which services have been stopped.

For example:

You might see 3–4 GB of RAM released, depending on how many background applications were disabled.

Services like Cortana and telemetry often consume hidden resources — disabling them can free both memory and CPU cycles.

Enable AMD Adrenalin Performance Settings (For AMD Users)

If your system is powered by an AMD GPU, the Adrenalin Software Suite offers multiple settings that improve gaming performance with minimal setup.

Recommended options to enable:

Anti-Lag: Reduces input latency, making your controls feel more immediate.

Radeon Super Resolution: Renders games at a lower resolution and upscales them to your display's native resolution, raising frame rates at some cost to image sharpness.

Enhanced Sync: Improves frame pacing without the drawbacks of traditional V-Sync.

Image Sharpening: Adds clarity without a major hit to performance.

Radeon Boost: Dynamically lowers resolution during fast motion to maintain smooth FPS.

Be sure to enable Borderless Fullscreen in your game settings for optimal GPU performance and lower system latency.

Match Frame Rate with Monitor Refresh Rate

One of the simplest and most effective ways to improve both performance and gameplay experience is to cap your frame rate to match your monitor’s refresh rate. For instance, if you’re using a 240Hz monitor, setting Fortnite’s max FPS to 240 will reduce unnecessary GPU strain and maintain stable frame pacing.

Benefits of FPS capping:

Lower input latency

Reduced screen tearing

Better thermals and power efficiency

This adjustment ensures your system isn’t overworking when there’s no benefit, which can lead to more stable and predictable gameplay — especially during extended play sessions.
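The link between an FPS cap and frame pacing comes down to simple arithmetic: each frame has a time budget of 1000 ms divided by the frame rate. The short sketch below works that out for a few common refresh rates. The function name `frame_time_ms` is chosen for illustration only and is not part of Fortnite or any tool mentioned here.

```python
def frame_time_ms(fps):
    """Return the time budget per frame, in milliseconds, at a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


if __name__ == "__main__":
    # Common monitor refresh rates; a cap at the refresh rate gives this budget per frame.
    for fps in (60, 144, 240):
        print(f"{fps} FPS -> {frame_time_ms(fps):.2f} ms per frame")
```

At a 240 FPS cap the budget is roughly 4.17 ms per frame, so a frame that takes longer than that shows up as a hitch on a 240Hz display.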

Real-World Performance Comparison

After applying Razer Cortex and configuring system settings, players often see dramatic performance improvements. In test environments using a 2K resolution on DirectX 12, systems previously capped at 50–60 FPS with 15–20 ms response times jumped to 170–180 FPS with a 3–5 ms response time.

When switching to 1080p resolution:

Frame rates typically exceed 200 FPS

Reduced frame time results in smoother aiming and lower delay

Competitive advantage improves due to lower latency and higher visual consistency

These results are reproducible on most modern gaming rigs, regardless of brand, as long as the system has adequate hardware and is properly optimized.

Switch Between Performance Modes for Different Games

One of Razer Cortex’s strongest features is its flexibility. You can easily switch between optimization profiles depending on the type of game you’re playing. For Fortnite, choose high-performance settings to prioritize responsiveness and frame rate. But for visually rich, story-driven games, you might want higher quality visuals.

Using Booster Prime:

Choose your desired game from the list.

Select a profile such as Performance, Balanced, or Quality.

Apply settings instantly by clicking Optimize, then launch the game directly.

This quick toggle capability makes it easy to adapt your system to different gaming needs without having to manually change settings every time.

Final Performance Test: Fortnite in 2K with Performance Mode

To push your system to the limit, test Fortnite at 2K resolution with Performance Mode enabled. Without any optimizations, many systems may average 140–160 FPS. However, with all the Razer Cortex and system tweaks applied:

Frame rates can spike above 400 FPS

Input delay and frame time reduce significantly

Gameplay becomes smoother and more responsive, ideal for fast-paced shooters

Conclusion: Unlock Peak Fortnite Performance in 2025

Optimizing Fortnite for maximum FPS and minimal input lag doesn’t require expensive upgrades or advanced technical skills. With the help of tools like Razer Cortex and AMD Adrenalin, along with proper system tuning, you can dramatically enhance your gameplay experience.

Key takeaways:

Monitor and free system resources using Task Manager

Use Razer Cortex to automate performance boosts with one click

Apply optimized settings for Fortnite via Booster Prime

Match FPS to your monitor’s refresh rate for smoother visuals

Take advantage of GPU-specific software like AMD Adrenalin

Customize settings for performance or quality based on your gaming style

By following this Fortnite optimization guide, you can achieve a consistent Fortnite FPS boost in 2025 while also reducing input lag and ensuring your system runs at peak performance. These steps are applicable not only to Fortnite but to nearly any competitive game you play. It’s time to make your hardware work smarter, not harder.

🎮 Level 99 Kitchen Conjurer | Crafting epic culinary quests where every dish is a legendary drop. Wielding spatulas and controllers with equal mastery, I’m here to guide you through recipes that give +10 to flavor and +5 to happiness. Join my party as we raid the kitchen and unlock achievement-worthy meals! 🍳✨ #GamingChef #CulinaryQuests

For More, Visit @https://haplogamingcook.com

#fortnite fps guide 2025#increase fortnite fps#fortnite performance optimization#fortnite fps boost settings#fortnite graphics settings#best fortnite settings for fps#fortnite lag fix#fortnite fps drops fix#fortnite competitive settings#fortnite performance mode#fortnite pc optimization#fortnite fps boost tips#fortnite low end pc settings#fortnite high fps config#fortnite graphics optimization#fortnite game optimization#fortnite fps unlock#fortnite performance guide#fortnite settings guide 2025#fortnite competitive fps#Youtube

0 notes

Text

Top Function as a Service (FaaS) Vendors of 2025

Businesses encounter obstacles in implementing effective and scalable development processes. Traditional techniques frequently fail to meet the growing expectations for speed, scalability, and innovation. That's where Function as a Service comes in.

FaaS is more than another addition to the technological stack; it marks a paradigm shift in how applications are created and delivered. It provides a serverless computing approach that abstracts infrastructure issues, freeing organizations to focus on innovation and core product development. As a result, FaaS has received widespread interest and acceptance in multiple industries, including BFSI, IT & Telecom, Public Sector, Healthcare, and others.

So, what makes FaaS so appealing to corporate leaders? Its value offer is based on the capacity to accelerate time-to-market and improve development outcomes. FaaS allows companies to prioritize delivering new goods and services to consumers by reducing server maintenance, allowing for flexible scalability, cost optimization, and automatic high availability.

In this blog, we'll explore the meaning of Function as a Service (FaaS) and explain how it works. We will showcase the best function as a service (FaaS) software that enables businesses to reduce time-to-market and streamline development processes.

Download the sample report of Market Share: https://qksgroup.com/download-sample-form/market-share-function-as-a-service-2023-worldwide-5169

What is Function-as-a-Service (FaaS)?

Function-as-a-Service (FaaS) is a cloud computing service that enables developers to create, execute, and manage discrete units of code as individual functions without overseeing the underlying infrastructure. Developers can focus solely on writing code for their application's specific functions, leaving aside the infrastructure management normally associated with developing and deploying microservices applications. With FaaS, developers write and update small, modular pieces of code designed to respond to specific events or triggers. FaaS is commonly used for building microservices, real-time data processing, and automating workflows. It can also power the backend for mobile applications, handling user authentication, data synchronization, and push notifications, among other functions.

How Does Function-as-a-Service (FaaS) Work?

FaaS provides programmers with a framework for responding to events via web apps without managing servers. PaaS infrastructure frequently requires server processes to keep running in the background at all times. In contrast, FaaS uses an event-based execution model and is typically billed on demand by the service provider.

Each FaaS function should be designed to carry out a single task in response to an input. Limit the scope of your code, keeping it concise and lightweight, so that functions load and run rapidly. FaaS adds value at the level of function separation: merging logic into fewer, larger functions tends to cost more and weakens that benefit. Using fewer libraries can also improve a function's efficiency and scalability. Complete applications are typically built from a combination of functions, microservices, and long-running services.
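As a rough illustration of the "one small task per input" idea, here is a minimal sketch of an event-driven function in Python. It is provider-neutral: the name `handle_event`, the shape of the `event` dictionary, and the response fields are assumptions made for this example, not the API of any specific FaaS platform.

```python
import json


def handle_event(event):
    """Handle a single event and return a response.

    The function keeps no state between calls and relies only on the
    standard library, which keeps it quick to load and run.
    """
    # Hypothetical event shape: a dict that may carry a "name" field.
    name = event.get("name", "world")
    body = {"message": f"Hello, {name}!"}
    return {"statusCode": 200, "body": json.dumps(body)}


if __name__ == "__main__":
    # Simulate the platform invoking the function once for an incoming event.
    print(handle_event({"name": "FaaS"}))
```

In a real deployment the platform, not your own code, would call the function whenever a matching event arrives, and you would be billed per invocation.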

Download the sample report of Market Forecast: https://qksgroup.com/download-sample-form/market-forecast-function-as-a-service-2024-2028-worldwide-4685

Top Function-as-a-Service (FaaS) Vendors

Amazon

Amazon announced AWS Lambda in 2014. Since then, it has developed into one of their most valuable offerings. It serves as a framework for Alexa skill development and provides easy access to many of AWS's monitoring tools. Lambda natively supports Java, Go, PowerShell, Node.js, C#, Python, and Ruby code.

Alibaba Functions

Alibaba provides a robust platform for serverless computing. With Alibaba Function Compute, you can deploy and run your code without managing infrastructure or servers. Computational resources are provisioned flexibly and reliably to run your code. Clusters are distributed across multiple zones, so if one zone becomes unavailable, Function Compute immediately switches to an instance in another zone. Distributed clusters also let users in different locations execute your code faster, which improves productivity.

Microsoft

Microsoft competes in this market with Azure Functions, a major FaaS offering for designing and delivering event-driven applications. It is part of the Azure ecosystem and supports several programming languages, including C#, JavaScript, F#, Python, Java, PowerShell, and TypeScript.

Azure Functions provides a richer programming model built around triggers and bindings. For example, an HTTP-triggered function can read a document from Azure Cosmos DB and send a queue message using declarative configuration. The platform supports multiple triggers, including web APIs, scheduled tasks, and services such as Azure Storage, Azure Event Hubs, Twilio for SMS, and SendGrid for email.
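To make the trigger-and-binding idea more concrete, the sketch below imitates it in plain Python. This is not the Azure Functions SDK: `Bindings`, `run_with_bindings`, and `handler` are hypothetical names, and an in-memory dictionary and list stand in for a document store and a queue. The point is only that the handler receives its input and emits its output without writing any connection code itself.

```python
from dataclasses import dataclass, field


@dataclass
class Bindings:
    """Declarative description of what the function reads and writes (illustrative only)."""
    input_documents: dict                              # stands in for a document database
    output_queue: list = field(default_factory=list)   # stands in for a message queue


def run_with_bindings(handler, bindings, request):
    """Tiny stand-in for the platform runtime.

    It resolves the input binding, calls the handler, and applies the
    output binding, so the handler never touches storage directly.
    """
    document = bindings.input_documents.get(request["id"])
    message = handler(request, document)
    if message is not None:
        bindings.output_queue.append(message)
    return {"status": 200 if document is not None else 404}


def handler(request, document):
    """Business logic only: turn the looked-up document into a queue message."""
    if document is None:
        return None
    return f"processed order {request['id']} for {document['customer']}"


if __name__ == "__main__":
    bindings = Bindings(input_documents={"42": {"customer": "Ada"}})
    print(run_with_bindings(handler, bindings, {"id": "42"}))
    print(bindings.output_queue)
```

On a real platform the equivalent wiring lives in configuration or decorators supplied by the provider, so consult the vendor documentation for the actual syntax.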

Vercel

Vercel Functions offers a FaaS platform optimized for static frontends and serverless functions. It hosts websites and web apps that deploy quickly and scale automatically.

The platform stands out for its straightforward and user-friendly design. When running Node.js, Vercel manages dependencies using a single JSON file. Developers may also change the runtime version, memory, and execution parameters. Vercel's dashboard provides monitoring logs for tracking functions and requests.

Key Technologies Powering FaaS and Their Strategic Importance

According to QKS Group and insights from the reports “Market Share: Function as a Service, 2023, Worldwide” and “Market Forecast: Function as a Service, 2024-2028, Worldwide”, organizations around the world are increasingly using Function as a Service (FaaS) platforms to streamline their IT operations, reduce infrastructure costs, and improve overall business agility. Businesses that outsource computational work to cloud service providers can focus on their core capabilities, increase profitability, gain a competitive advantage, and reduce time to market for new apps and services.

Using FaaS platforms necessitates sharing sensitive data with third-party cloud providers, including confidential company information and consumer data. As stated in Market Share: Function as a Service, 2023, Worldwide, this raises worries about data privacy and security, as a breach at the service provider's end might result in the disclosure or theft of crucial data. In an era of escalating cyber threats and severe data security rules, enterprises must recognize and mitigate the risks of using FaaS platforms. Implementing strong security measures and performing frequent risk assessments may assist in guaranteeing that the advantages of FaaS are realized without sacrificing data integrity and confidentiality.

Vendors use terms like serverless computing, microservices, and Function as a Service (FaaS) to describe similar underlying technologies. FaaS solutions simplify infrastructure management, enabling rapid application development, deployment, and scalability. Serverless computing and microservices break systems into small, independent tasks that can be executed on demand, resulting in greater flexibility and efficiency in application development.

Conclusion

Function as a Service (FaaS) is helping businesses build and run applications more efficiently without worrying about server management. It allows companies to scale as needed, reduce costs, and focus on creating better products and services. As more sectors adopt FaaS, knowing how it works and selecting the right provider will be critical to staying ahead in a rapidly changing digital landscape.

Related Reports –

https://qksgroup.com/market-research/market-forecast-function-as-a-service-2024-2028-western-europe-4684

https://qksgroup.com/market-research/market-share-function-as-a-service-2023-western-europe-5168

https://qksgroup.com/market-research/market-forecast-function-as-a-service-2024-2028-usa-4683

https://qksgroup.com/market-research/market-share-function-as-a-service-2023-usa-5167

https://qksgroup.com/market-research/market-forecast-function-as-a-service-2024-2028-middle-east-and-africa-4682

https://qksgroup.com/market-research/market-share-function-as-a-service-2023-middle-east-and-africa-5166

https://qksgroup.com/market-research/market-forecast-function-as-a-service-2024-2028-china-4679

https://qksgroup.com/market-research/market-share-function-as-a-service-2023-china-5163

https://qksgroup.com/market-research/market-forecast-function-as-a-service-2024-2028-asia-excluding-japan-and-china-4676

https://qksgroup.com/market-research/market-share-function-as-a-service-2023-asia-excluding-japan-and-china-5160

0 notes

Text

Automation Programming Basics

In today’s fast-paced world, automation programming is a vital skill for developers, IT professionals, and even hobbyists. Whether it's automating file management, data scraping, or repetitive tasks, automation saves time, reduces errors, and boosts productivity. This post covers the basics to get you started in automation programming.

What is Automation Programming?

Automation programming involves writing scripts or software that perform tasks without manual intervention. It’s widely used in system administration, web testing, data processing, DevOps, and more.

Benefits of Automation

Efficiency: Complete tasks faster than doing them manually.

Accuracy: Reduce the chances of human error.

Scalability: Automate tasks at scale (e.g., managing hundreds of files or websites).

Consistency: Ensure tasks are done the same way every time.

Popular Languages for Automation

Python: Simple syntax and powerful libraries like `os`, `shutil`, `requests`, `selenium`, and `pandas`.

Bash: Great for system and server-side scripting on Linux/Unix systems.

PowerShell: Ideal for Windows system automation.

JavaScript (Node.js): Used in automating web services, browsers, or file tasks.

Common Automation Use Cases

Renaming and organizing files/folders

Automating backups

Web scraping and data collection

Email and notification automation

Testing web applications

Scheduling repetitive system tasks (cron jobs)

Basic Python Automation Example

Here’s a simple script to move files from one folder to another based on file extension:

    import os
    import shutil

    source = 'Downloads'
    destination = 'Images'

    # Create the destination folder if it does not exist yet
    os.makedirs(destination, exist_ok=True)

    for file in os.listdir(source):
        # Move only JPEG and PNG images
        if file.endswith(('.jpg', '.png')):
            shutil.move(os.path.join(source, file), os.path.join(destination, file))

Tools That Help with Automation

Task Schedulers: `cron` (Linux/macOS), Task Scheduler (Windows)

Web Automation Tools: Selenium, Puppeteer

CI/CD Tools: GitHub Actions, Jenkins

File Watchers: `watchdog` (Python) for reacting to file system changes

Best Practices

Always test your scripts in a safe environment before production.

Add logs to track script actions and errors.

Use virtual environments to manage dependencies.

Keep your scripts modular and well-documented.

Secure your scripts if they deal with sensitive data or credentials.
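Two of the practices above, testing safely and adding logs, can be sketched in a few lines of Python. The example below is one possible approach rather than a standard recipe: the function name `move_matching_files`, the `dry_run` flag, and the folder names are all assumptions made for illustration. With `dry_run=True` the script only logs what it would do, which gives you a safe way to check it before touching real files.

```python
import logging
import os
import shutil

logger = logging.getLogger("automation")


def move_matching_files(source, destination, extensions, dry_run=True):
    """Move files whose names end with one of `extensions`.

    Returns the names of the files that were moved (or would be moved
    when dry_run is True). Every action and error is logged.
    """
    handled = []
    for name in sorted(os.listdir(source)):
        src = os.path.join(source, name)
        if not os.path.isfile(src) or not name.lower().endswith(tuple(extensions)):
            continue
        if dry_run:
            logger.info("DRY RUN: would move %s to %s", src, destination)
        else:
            try:
                os.makedirs(destination, exist_ok=True)
                shutil.move(src, os.path.join(destination, name))
                logger.info("Moved %s to %s", src, destination)
            except OSError:
                # Log the full traceback and carry on with the next file.
                logger.exception("Failed to move %s", src)
                continue
        handled.append(name)
    return handled


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
    # Hypothetical folder names; the dry run leaves all files in place.
    if os.path.isdir("Downloads"):
        move_matching_files("Downloads", "Images", [".jpg", ".png"], dry_run=True)
```

Once the dry-run log looks right, calling the function with `dry_run=False` performs the actual moves.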

Conclusion

Learning automation programming can drastically enhance your workflow and problem-solving skills. Start small, automate daily tasks, and explore advanced tools as you grow. The world of automation is wide open—begin automating today!

0 notes

Text

A Comprehensive Guide to iManage: Features, Reviews, and Expert Consultation

iManage has become a vital tool for law firms and other document-centric industries, offering a comprehensive suite of features for document and email management. It simplifies complex workflows, enhances productivity, and enables seamless integration with Microsoft Outlook, providing users with an all-in-one solution for managing critical documents and communications. In this blog, we will dive into iManage reviews, consultants, and key features like iManage Outlook integration, Worksite Manual, and PowerShell functionalities.

What Is iManage?

iManage is a leading provider of document and email management solutions. It helps organizations streamline processes for creating, storing, sharing, and organizing information. Whether you're a law firm, financial institution, or another document-driven organization, iManage's capabilities provide an efficient, secure, and compliant platform for handling sensitive data.

iManage Reviews: Why It's So Popular Among Legal Firms

iManage has garnered positive feedback due to its comprehensive set of features and ease of use. Many legal professionals, accountants, and consultants praise its seamless integration with Microsoft Office applications, such as Outlook and Word. The platform's ability to manage both documents and emails in a centralized location simplifies compliance, ensures version control, and boosts collaboration within teams.

Users frequently mention the intuitive user interface, fast search functionality, and the secure environment iManage provides. These features significantly reduce the time spent searching for and organizing documents, allowing professionals to focus on more critical tasks.

However, some users also report a steep learning curve for new users, especially for advanced features like automation and scripting. This is where iManage consultants can help maximize the platform’s capabilities and ease the implementation process.

iManage Consultants: Expertise to Maximize Your Investment

Hiring the right consultants is essential when implementing iManage within your organization. iManage consultants bring in-depth expertise and experience to ensure that the platform is set up optimally, tailored to your organization's needs. Whether you're migrating from another document management system or looking to refine your current setup, iManage consultants can provide invaluable support.

MacroAgility, a leading provider of iManage consulting services, specializes in working with organizations to optimize their document and email management systems. As noted on their iManage Work Consultants page, they offer personalized services to ensure smooth transitions, from setup and training to troubleshooting and optimization.

iManage Outlook Integration: Streamline Your Email Management

One of iManage's standout features is its seamless integration with Microsoft Outlook. The iManage Outlook integration enables users to easily store, search, and retrieve emails from within their email client, eliminating the need to switch between platforms. This integration also ensures that all emails are appropriately tagged and stored for future reference, supporting compliance, retention, and audit requirements.

With the iManage Outlook add-in, users can drag and drop emails directly into iManage folders, assign them to relevant matters, and access the documents without leaving Outlook. This integration is a huge time-saver and helps ensure that important communication remains within a centralized, organized system. MacroAgility offers expert guidance on configuring and optimizing this integration, which you can learn more about on their Lotus2Outlook page.

iManage Worksite Manual: A Guide to Efficient Document Management

For those looking to dive deep into iManage Worksite, the iManage Worksite Manual is an essential resource. This manual covers everything from installation to advanced configuration, helping users navigate the full spectrum of features.

The manual provides detailed instructions for setting up workflows, defining document lifecycles, and configuring security settings. This can be particularly beneficial for organizations with complex document management needs. With iManage's robust features, having a thorough understanding of how to configure and manage the system ensures you’re getting the most out of the platform.

iManage PowerShell: Automating Your Workflow

For advanced users and IT professionals, iManage PowerShell offers a powerful tool for automating processes, managing large volumes of data, and integrating other applications with the iManage platform. PowerShell scripts allow users to automate routine tasks, perform batch operations, and integrate iManage with external systems seamlessly.

Whether you’re working with iManage Worksite, iManage Share, or iManage Records Management, PowerShell can help streamline administrative tasks, reducing manual effort and enhancing system performance. It’s an essential tool for organizations looking to enhance their document management automation.

Why Choose MacroAgility for Your iManage Needs?

MacroAgility offers a wide range of services to help businesses harness the full potential of iManage. From iManage Work consultants to Lotus2Outlook migration support, their team of experts ensures that clients receive tailored solutions designed to improve productivity and reduce inefficiencies. Visit their website to learn more about their services:

iManage Work Consultants

iManage Email and Document Work

Lotus2Outlook

Conclusion

Whether you're looking to adopt iManage for the first time or improve an existing setup, iManage provides a robust and secure solution for document and email management. With the help of experienced iManage consultants and tools like PowerShell and Outlook integration, your organization can significantly enhance productivity, compliance, and collaboration. Visit MacroAgility for more information on how they can support your iManage journey.


#Keywords#imanage reviews#imanage consultants#imanage outlook#imanage worksite manual#imanage powershell

0 notes

Text

Why Hiring DevOps Engineers is Crucial for Your Business Success

In today’s fast-paced digital landscape, businesses must ensure seamless software development, deployment, and maintenance processes. DevOps has emerged as a game-changer, bridging the gap between development and operations to optimize workflows, reduce time to market, and improve software quality. If you are looking to enhance your software delivery pipeline, hire DevOps engineers to gain a competitive edge.

What is DevOps, and Why Does It Matter?

DevOps is a set of practices, tools, and a cultural mindset that fosters collaboration between development and operations teams. It focuses on continuous integration, continuous delivery (CI/CD), automation, and monitoring to streamline software development processes.

Key Benefits of Implementing DevOps:

Faster Software Delivery: DevOps enables frequent and reliable software releases.

Improved Collaboration: Encourages seamless communication between development, operations, and QA teams.

Enhanced Security & Stability: Automated testing and monitoring help detect issues early.

Cost Efficiency: Reduces infrastructure costs through automation and cloud-native solutions.

The Role of a DevOps Engineer

A DevOps engineer is responsible for designing, implementing, and managing the infrastructure required to support CI/CD processes, automation, and cloud environments. Their primary goal is to ensure that development and operations work together harmoniously.

Key Responsibilities of DevOps Engineers:

CI/CD Pipeline Management: Automating build, test, and deployment processes.

Infrastructure as Code (IaC): Using tools like Terraform or Ansible to manage infrastructure efficiently.

Cloud Management: Deploying applications in cloud environments (AWS, Azure, Google Cloud).

Security & Compliance: Implementing security best practices and monitoring compliance.

Monitoring & Performance Optimization: Utilizing tools like Prometheus, Grafana, or ELK Stack.

Automation & Scripting: Writing scripts for process automation and workflow optimization.

Why You Should Hire DevOps Engineers

If you want to scale your software operations, improve deployment efficiency, and enhance security, you should hire DevOps engineers. Here’s why:

1. Accelerate Development & Deployment

DevOps engineers ensure faster and more efficient software delivery through CI/CD automation, reducing the time it takes to launch new features.

2. Enhance System Reliability & Stability

With continuous monitoring and proactive troubleshooting, DevOps engineers minimize downtime and enhance application stability.

3. Improve Security & Compliance

They integrate security measures into the development pipeline, ensuring compliance with industry standards.

4. Optimize Infrastructure Costs

By leveraging cloud solutions and Infrastructure as Code (IaC), DevOps engineers reduce operational costs while improving efficiency.

Skills to Look for When Hiring DevOps Engineers

When you hire DevOps engineers, ensure they possess the following key skills:

Technical Skills:

CI/CD Tools: Jenkins, GitLab CI/CD, CircleCI

Cloud Platforms: AWS, Google Cloud, Microsoft Azure

Containerization & Orchestration: Docker, Kubernetes

Infrastructure as Code (IaC): Terraform, Ansible, CloudFormation

Monitoring & Logging: Prometheus, ELK Stack, Grafana

Scripting Languages: Python, Bash, PowerShell

Soft Skills:

Strong problem-solving abilities

Effective collaboration and communication

Agile and DevOps mindset

Adaptability to new technologies

Where to Find and Hire DevOps Engineers

Finding skilled DevOps professionals requires the right approach. Here are the top platforms and methods to hire DevOps engineers:

1. Freelance Platforms

Upwork

Toptal

Freelancer

2. Tech Hiring Platforms

Turing

Toptal

DevOps Jobs Board

3. LinkedIn & Networking

Leverage LinkedIn and professional communities to connect with DevOps talent.

4. Hiring Agencies & DevOps Consulting Firms

Engage agencies specializing in DevOps staffing for reliable talent.

How Much Does It Cost to Hire DevOps Engineers?

The cost of hiring DevOps engineers depends on various factors, including experience, location, and project requirements.

Hourly Rates:

Junior DevOps Engineer: $30 - $50/hour

Mid-Level DevOps Engineer: $50 - $80/hour

Senior DevOps Engineer: $80 - $150/hour

Annual Salaries (Region-Wise):

USA: $100,000 - $160,000

Europe: $70,000 - $120,000

Asia: $30,000 - $80,000

Conclusion

DevOps plays a crucial role in modern software development, ensuring faster, more secure, and more efficient software delivery. If you want to streamline your development processes, hire DevOps engineers to optimize workflows, improve security, and reduce operational costs.

Investing in skilled DevOps professionals will help your business stay ahead in the competitive tech landscape. Ready to build a high-performing DevOps team? Start your hiring process today!

0 notes

Text

GPU Hosting Server Windows By Cloudminister Technologies

Cloudminister Technologies GPU Hosting Server for Windows

Businesses and developers require more than just conventional hosting solutions in today's data-driven world. Artificial intelligence (AI), machine learning (ML), and large-scale data processing are complex workloads that demand high-performance computing capabilities standard CPUs cannot handle effectively. This is where Cloudminister Technologies GPU hosting servers come in.

We will examine GPU hosting servers on Windows from Cloudminister Technologies' point of view in this comprehensive guide, going over their features, benefits, and reasons for being the best option for your company.

A GPU Hosting Server: What Is It?

A GPU hosting server is a dedicated server equipped with Graphics Processing Units (GPUs) for high-performance parallel computing. Unlike CPUs, which handle tasks largely sequentially, GPUs can process thousands of operations at once. They are therefore ideal for applications requiring real-time processing and large-scale data computations.

Cloudminister Technologies provides cutting-edge GPU hosting solutions to companies that deal with:

AI and ML Model Training:- Quick and precise creation of machine learning models.

Data Analytics:- Rapid processing of large datasets to produce actionable insights.

Video Processing and 3D Rendering:- Fluid rendering for multimedia, animation, and gaming applications.

Blockchain Mining:- Designed with strong GPU capabilities for cryptocurrency mining.

Why Opt for GPU Hosting from Cloudminister Technologies?

1. High-Performance Hardware

The newest NVIDIA and AMD GPUs power the state-of-the-art hardware solutions used by Cloudminister Technologies. Their servers are built to provide resource-intensive applications with exceptional speed and performance.

Key Points:

High-end GPU models are available for faster processing.

Dedicated GPU servers that only your apps can use; there is no resource sharing, guaranteeing steady performance.

Parallel processing optimization enables improved output and quicker work completion.

2. Compatibility with Windows OS

For companies that depend on Windows apps, Cloudminister's GPU hosting servers are a great option because they completely support Windows-based environments.

The Benefits of Windows Hosting with Cloudminister

Smooth Integration: Utilize programs developed using Microsoft technologies, like PowerShell, Microsoft SQL Server, and ASP.NET, without encountering compatibility problems.

Developer-Friendly: Lets developers work in a familiar setting by supporting well-known development tools, including Visual Studio, .NET Core, and DirectX.

Licensing Management: To ensure compliance and save time, Cloudminister handles Windows licensing.

3. Scalability

Cloudminister Technologies' infrastructure is built to scale, so companies can grow without running into hardware constraints.

Features of Scalability:

Flexible Resource Allocation: Adjust your storage, RAM, and GPU power according to task demands.

On-Demand Scaling: Only pay for what you use; scale back when not in use and increase resources during periods of high usage.

Custom Solutions: Custom GPU configurations and enterprise-level customization according to particular business requirements.

4. Robust Security

Cloudminister Technologies places a high premium on security. Multiple layers of protection are incorporated into their GPU hosting solutions to guarantee the safety and security of your data.

Among the security features are:

DDoS Protection: Mitigates Distributed Denial of Service (DDoS) attacks that could impair your server's availability.

Frequent Backups: Automatic backups that allow fast data recovery in an emergency.

Secure Data Transfer: End-to-end encryption, or encrypted connections, protects data as it moves across networks.

Advanced Firewalls: Guard against malware attacks and unauthorized access.

5. 24/7 Technical Assistance

Cloudminister Technologies provides round-the-clock technical assistance to guarantee prompt and effective resolution of any problems. For help with server maintenance, configuration, and troubleshooting, their knowledgeable staff is always on hand.

Support Services:

Live Monitoring: Ongoing observation to proactively identify and address problems.

Dedicated Account Managers: Tailored assistance for business customers with particular technical needs.

Managed Services: Cloudminister provides fully managed hosting services, including upkeep and upgrades, for customers who require a hands-off option.

Advantages of Cloudminister Technologies Windows-Based GPU Hosting

There are numerous commercial benefits to using Cloudminister to host GPU servers on Windows.

User-Friendly Interface:- The Windows GUI lowers the learning curve for IT staff by making server management simple.

Broad Compatibility:- Complete support for Windows-specific frameworks and apps, including Microsoft Azure SDK, DirectX, and the .NET Framework.

Optimized Performance:- By ensuring that the GPU hardware operates at its best, Windows-based drivers and upgrades reduce downtime.

Use Cases for Cloudminister Technologies GPU Hosting

Cloudminister's GPU hosting servers are made to specifically cater to the demands of different sectors.

Machine learning and artificial intelligence:- With the aid of powerful GPU servers, machine learning models can be developed and trained more quickly. Perfect for PyTorch, Keras, TensorFlow, and other deep learning frameworks.

Media and Entertainment:- GPU servers provide the processing capacity required for VFX creation, 3D modeling, animation, and video rendering. These servers support programs like Blender, Autodesk Maya, and Adobe After Effects.

Big Data Analytics:- Use tools like Apache Hadoop and Apache Spark to process enormous amounts of data and gain real-time insights.

Game Development:- Create and test games on powerful GPUs that support 3D rendering, simulations, and game engines such as Unreal Engine and Unity.

Flexible Pricing and Plans

Cloudminister Technologies provides adjustable pricing structures to suit companies of all sizes:

Pay-as-you-go: This approach helps organizations efficiently manage expenditures by only charging for the resources you utilize.

Custom Packages: Hosting packages designed specifically for businesses with certain needs in terms of GPU, RAM, and storage.

Free Trials: Before making a long-term commitment, test the service risk-free.

Reliable Support and Services

To guarantee optimum server performance, Cloudminister Technologies provides a comprehensive range of support services:

24/7 Monitoring:- Proactive server monitoring to minimize downtime.

Automated Backups:- To avoid data loss, create regular backups with simple restoration choices.

Managed Services:- Professional hosting environment management for companies in need of a full-service outsourced solution.

Conclusion

Cloudminister Technologies' GPU hosting servers are a strong choice if your company relies on high-performance computing for AI, big data, rendering, or simulation. Their Windows-compatible GPU servers offer the scalability, security, and speed needed to handle even the most resource-intensive workloads.

Cloudminister Technologies is the best partner for companies looking for dependable GPU hosting solutions because of its flexible pricing, strong support, and state-of-the-art technology.

To find out how Cloudminister Technologies' GPU hosting services may improve your company's operations, get in contact with them right now.

VISIT:- www.cloudminister.com

Is DevOps All About Coding? Exploring the Role of Programming and Essential Skills in DevOps

As technology continues to advance, DevOps has emerged as a key practice for modern software development and IT operations. A frequently asked question among those exploring this field is: "Is DevOps full of coding?" The answer isn’t black and white. While coding is undoubtedly an important part of DevOps, it’s only one piece of a much larger puzzle.

This article unpacks the role coding plays in DevOps, highlights the essential skills needed to succeed, and explains how DevOps professionals contribute to smoother software delivery. Additionally, we’ll introduce the Boston Institute of Analytics’ Best DevOps Training program, which equips learners with the knowledge and tools to excel in this dynamic field.

What Exactly is DevOps?

DevOps is a cultural and technical approach that bridges the gap between development (Dev) and operations (Ops) teams. Its main goal is to streamline the software delivery process by fostering collaboration, automating workflows, and enabling continuous integration and delivery (CI/CD). DevOps professionals focus on:

Enhancing communication between teams.

Automating repetitive tasks to improve efficiency.

Ensuring systems are scalable, secure, and reliable.

The Role of Coding in DevOps

1. Coding is Important, But Not Everything

While coding is a key component of DevOps, it’s not the sole focus. Here are some areas where coding is essential:

Automation: Writing scripts to automate processes like deployments, testing, and monitoring.

Infrastructure as Code (IaC): Using tools such as Terraform or AWS CloudFormation to manage infrastructure programmatically.

Custom Tool Development: Building tailored tools to address specific organizational needs.
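The idea behind Infrastructure as Code can be sketched as a comparison between a declared, desired state and what currently exists. The Python below is a minimal illustration under stated assumptions, not how Terraform or AWS CloudFormation is implemented; the resource names, fields, and the `plan_changes` helper are all hypothetical.

```python
def plan_changes(desired: dict, actual: dict) -> dict:
    """Compare declared resources with existing ones and list the actions needed."""
    return {
        "create": sorted(desired.keys() - actual.keys()),
        "delete": sorted(actual.keys() - desired.keys()),
        "update": sorted(
            name
            for name in desired.keys() & actual.keys()
            if desired[name] != actual[name]
        ),
    }


# Hypothetical desired state, as it might be declared in version control.
desired = {
    "web": {"size": "small", "count": 2},
    "db": {"size": "large", "count": 1},
}

# Hypothetical state currently running in the environment.
actual = {
    "web": {"size": "small", "count": 1},
    "cache": {"size": "small", "count": 1},
}

plan = plan_changes(desired, actual)
print(plan)
```

Here the plan would create `db`, delete `cache`, and update `web`. Keeping the desired state in a file means infrastructure changes can be reviewed and versioned like any other code.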

2. Scripting Takes Center Stage

In DevOps, scripting often takes precedence over traditional software development. Popular scripting languages include:

Python: Ideal for automation, orchestration, and data processing tasks.

Bash: Widely used for shell scripting in Linux environments.

PowerShell: Commonly utilized for automating tasks in Windows ecosystems.

3. Mastering DevOps Tools

DevOps professionals frequently work with tools that minimize the need for extensive coding. These include:

CI/CD Platforms: Jenkins, GitLab CI/CD, and CircleCI.

Configuration Management Tools: Ansible, Chef, and Puppet.

Monitoring Tools: Grafana, Prometheus, and Nagios.

4. Collaboration is Key

DevOps isn’t just about writing code—it’s about fostering teamwork. DevOps engineers serve as a bridge between development and operations, ensuring workflows are efficient and aligned with business objectives. This requires strong communication and problem-solving skills in addition to technical expertise.

Skills You Need Beyond Coding

DevOps demands a wide range of skills that extend beyond programming. Key areas include:

1. System Administration

Understanding operating systems, networking, and server management is crucial. Proficiency in both Linux and Windows environments is a major asset.

2. Cloud Computing

As cloud platforms like AWS, Azure, and Google Cloud dominate the tech landscape, knowledge of cloud infrastructure is essential. This includes:

Managing virtual machines and containers.

Using cloud-native tools like Kubernetes and Docker.

Designing scalable and secure cloud solutions.

3. Automation and Orchestration

Automation lies at the heart of DevOps. Skills include:

Writing scripts to automate deployments and system configurations.

Utilizing orchestration tools to manage complex workflows efficiently.
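As a rough sketch of what an orchestration tool does, the Python below orders the steps of a workflow so that each one runs only after the steps it depends on. The step names are hypothetical, and a real orchestrator would execute each step (and handle retries and failures) rather than just record the order. It relies on `graphlib` from the standard library, available in Python 3.9 and later.

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: each step maps to the steps that must finish first.
workflow = {
    "build": set(),
    "test": {"build"},
    "provision": set(),
    "deploy": {"test", "provision"},
    "smoke_check": {"deploy"},
}


def run_workflow(steps: dict) -> list:
    """Return the steps in an order that respects every dependency."""
    order = []
    for step in TopologicalSorter(steps).static_order():
        # A real orchestrator would run the step here; this sketch records it.
        order.append(step)
    return order


order = run_workflow(workflow)
print(order)
```

Steps with no dependency between them, such as `test` and `provision`, may appear in either order, which is also why an orchestrator is free to run them in parallel.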

4. Monitoring and Incident Response

Ensuring system reliability is a critical aspect of DevOps. This involves:

Setting up monitoring dashboards for real-time insights.

Responding to incidents swiftly to minimize downtime.

Analyzing logs and metrics to identify and resolve root causes.
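A small example of the log analysis step, written as a sketch: it computes the share of ERROR lines per service and flags any service above a threshold. The log format, the sample lines, and the 0.5 threshold are assumptions made up for illustration; real systems would typically rely on tools such as Prometheus and Grafana for this.

```python
from collections import Counter

# Made-up sample lines in a hypothetical "timestamp service level message" format.
LOG_LINES = [
    "2024-01-01T10:00:00 api INFO request ok",
    "2024-01-01T10:00:01 api ERROR upstream timeout",
    "2024-01-01T10:00:02 worker INFO job done",
    "2024-01-01T10:00:03 api ERROR upstream timeout",
    "2024-01-01T10:00:04 worker ERROR disk full",
]


def error_rates(lines):
    """Return the fraction of ERROR lines for each service."""
    totals, errors = Counter(), Counter()
    for line in lines:
        _timestamp, service, level, _message = line.split(" ", 3)
        totals[service] += 1
        if level == "ERROR":
            errors[service] += 1
    return {service: errors[service] / totals[service] for service in totals}


def services_to_alert(lines, threshold=0.5):
    """List services whose error rate is strictly above the threshold."""
    return sorted(s for s, rate in error_rates(lines).items() if rate > threshold)


rates = error_rates(LOG_LINES)
alerts = services_to_alert(LOG_LINES)
print(alerts)
```

Grouping by service before alerting narrows the search for a root cause: in the sample data, only `api` crosses the threshold, and both of its errors point at the same upstream timeout.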

Is DevOps Right for You?

DevOps is a versatile field that welcomes professionals from various backgrounds, including:

Developers: Looking to expand their skill set into operations and automation.

System Administrators: Interested in learning coding and modern practices like IaC.

IT Professionals: Seeking to enhance their expertise in cloud computing and automation.

Even if you don’t have extensive coding experience, there are pathways into DevOps. With the right training and a willingness to learn, you can build a rewarding career in this field.

Boston Institute of Analytics: Your Path to DevOps Excellence

The Boston Institute of Analytics (BIA) offers a comprehensive DevOps training program designed to help professionals at all levels succeed. Their curriculum covers everything from foundational concepts to advanced techniques, making it one of the best DevOps training programs available.

What Sets BIA’s DevOps Training Apart?

Hands-On Experience:

Work with industry-standard tools like Docker, Kubernetes, Jenkins, and AWS.

Gain practical knowledge through real-world projects.

Expert Instructors:

Learn from seasoned professionals with extensive industry experience.

Comprehensive Curriculum:

Dive deep into topics like CI/CD, IaC, cloud computing, and monitoring.

Develop both technical and soft skills essential for DevOps roles.

Career Support:

Benefit from personalized resume reviews, interview preparation, and job placement assistance.

By enrolling in BIA’s Best DevOps Training, you’ll gain the skills and confidence to excel in this ever-evolving field.

Final Thoughts

So, is DevOps full of coding? While coding plays an important role in DevOps, it’s far from the whole picture. DevOps is about collaboration, automation, and ensuring reliable software delivery. Coding is just one of the many skills you’ll need to succeed in this field.

If you’re inspired to start a career in DevOps, the Boston Institute of Analytics is here to guide you. Their training program provides everything you need to thrive in this exciting domain. Take the first step today and unlock your potential in the world of DevOps!

#cloud training institute#best devops training#Cloud Computing Course#cloud computing#devops#devops course

Backup SQL Server Agent Jobs: PowerShell Automation Tips for SQL Server