#Rotating IP Proxies API


How the IP Proxies API Solves Highly Concurrent Requests

With the rapid growth of Internet services and applications, organizations and developers face increasing challenges in handling highly concurrent requests. APIs have become essential tools for keeping systems running efficiently, and Rotating IP Proxies APIs in particular give organizations strong support under high concurrency. This post takes a closer look at the role of the Rotating IP Proxies API and how it can help organizations solve the problems associated with high concurrency.

What is the Rotating IP Proxies API interface?

A Rotating IP Proxies API is an interface that provides network proxy services by allocating rotating IP addresses. It can schedule IP addresses in different geographic locations in real time according to demand, which helps users browse anonymously, improves the efficiency of data collection, reduces the risk of IP blocking, and helps requests get through anti-crawler protection.

With the Rotating IP Proxies API, organizations can easily assign IP addresses from a large number of different regions to ensure continuous and stable online activity. For example, when conducting market research that requires frequent visits to multiple websites, the Rotating IP Proxies API ensures that IPs are not blocked due to too frequent requests.

Challenges posed by highly concurrent requests

Excessive server pressure

When a large number of users request a service at the same time, the load on the server increases dramatically. This can lead to slower server responses or even crashes, affecting the overall user experience.

IP ban risk

If an IP address sends frequent requests in a short period of time, many websites will treat these requests as malicious behavior and block the IP. Such blocking directly affects normal access and data collection.

These issues are undoubtedly a major challenge for Internet applications and enterprises, especially when performing large-scale data collection, where a single IP may be easily blocked, thus affecting the entire business process.

How the Rotating IP Proxies API solves these problems

Decentralization of request pressure

One of the biggest advantages of the Rotating IP Proxies API is its ability to spread requests across many proxy servers by constantly switching IP addresses. This reduces the load on any single proxy server and IP address, easing pressure on the system while improving the stability and reliability of the service.

Avoid IP blocking

Rotating IP addresses allows organizations to avoid the risk of blocking due to frequent requests. For example, when crawling and market monitoring, the use of Rotating IP Proxies can avoid being recognized by the target website as a malicious attack, ensuring that the data collection task can be carried out smoothly.

Improved efficiency of data collection

Rotating IP Proxies API not only avoids blocking, but also significantly improves the efficiency of data capture. When it comes to tasks that require frequent requests and grabbing large amounts of data, Rotating IP Proxies APIs can speed up data capture, ensure more timely data updates, and further improve work efficiency.

Protecting user privacy

Rotating IP Proxies APIs are also important for scenarios where user privacy needs to be protected. By constantly changing IPs, users' online activities can remain anonymous, reducing the risk of personal information leakage. Especially when dealing with sensitive data, protecting user privacy has become an important task for business operations.

How to Effectively Utilize the Rotating IP Proxies API

Develop a reasonable IP scheduling strategy

An effective IP scheduling strategy is the basis for running the Rotating IP Proxies API efficiently. Enterprises should plan how often IP addresses are replaced based on actual demand and usage profiles, because switching IPs too frequently can cause system anomalies or be recognized as abnormal behavior.
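As a rough illustration of such a scheduling strategy, the sketch below hands out proxies round-robin and moves to the next one only after a fixed number of requests. The class name `ProxyRotator`, the `requests_per_ip` parameter, and the example addresses are assumptions made for illustration; they are not part of any provider's API.

```python
import itertools


class ProxyRotator:
    """Hand out proxies round-robin, switching after a fixed number of requests."""

    def __init__(self, proxies, requests_per_ip=5):
        if not proxies:
            raise ValueError("proxy pool must not be empty")
        if requests_per_ip < 1:
            raise ValueError("requests_per_ip must be at least 1")
        self._pool = itertools.cycle(proxies)   # endless round-robin iterator
        self._requests_per_ip = requests_per_ip
        self._current = next(self._pool)
        self._used = 0                          # requests served by the current proxy

    def next_proxy(self):
        """Return the proxy to use for the next request."""
        if self._used >= self._requests_per_ip:
            # The current IP has reached its quota, so move to the next one.
            self._current = next(self._pool)
            self._used = 0
        self._used += 1
        return self._current


# Hypothetical pool; real addresses would come from the proxy provider.
rotator = ProxyRotator(["10.0.0.1:8000", "10.0.0.2:8000"], requests_per_ip=2)
schedule = [rotator.next_proxy() for _ in range(5)]
```

Raising `requests_per_ip` makes switching less frequent, which is one way to keep rotation from looking abnormal in its own right.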

Optimize service performance

Regular monitoring and optimization of service performance, especially in the case of highly concurrent requests, can help enterprises identify and solve potential problems in a timely manner. Reasonable performance optimization not only improves the speed of data collection, but also reduces the burden on the server and ensures stable system operation.

Choosing the Right Proxies

While Rotating IP Proxies APIs can offer many advantages, choosing a reliable service provider is crucial. A good service provider will not only be able to provide high-quality IP resources, but also ensure the stability and security of the service.

In this context, Proxy4Free Residential Proxies service can provide a perfect solution for users. As a leading IP proxy service provider, Proxy4Free not only provides high-speed and stable proxy services, but also ensures a high degree of anonymity and security. This makes Proxy4Free an ideal choice for a wide range of business scenarios such as multinational market research, advertisement monitoring, price comparison and so on.

Proxy4Free Residential Proxies have the following advantages:

Unlimited traffic and bandwidth: Whether you need to perform large-scale data crawling or continuous market monitoring, you don't need to worry about traffic limitations.

30 MB/s high-speed connections: No matter what part of the world you are in, you can enjoy extremely fast proxy connections to keep your operations efficient and stable.

Global Coverage: Provides proxy IPs from 195 countries/regions to meet the needs of different geographic markets.

High anonymity and security: Proxies keep your privacy safe from malicious tracking or disclosure and ensure that your data collection activities are not interrupted.

With Proxy4Free Residential Proxies, you can flexibly choose the appropriate IP scheduling policy according to your needs, effectively spreading out the pressure of requests and avoiding the risk of access anomalies or blocking due to frequent IP changes. In addition, you can monitor and optimize service performance in real time to ensure efficient data collection and traffic management.

Click on the link to try it out now!

Concluding Remarks

The role of the Rotating IP Proxies API in handling highly concurrent requests cannot be ignored. It not only disperses request pressure and avoids IP blocking, but also improves the efficiency of data collection and protects user privacy. When choosing a proxy service provider, enterprises should assess the quality of the IP pool, interface support, and service stability. Reasonable planning of IP scheduling strategies and optimization of service performance will help improve system stability and reliability, ensuring that enterprises can complete their online tasks efficiently and safely.

#Rotating IP Proxies API#Highly Concurrent Requests#Data Collection#Market Research#Anti-Crawler#Server Stress#IP Blocking#Request Dispersal#User Privacy Protection#Proxies


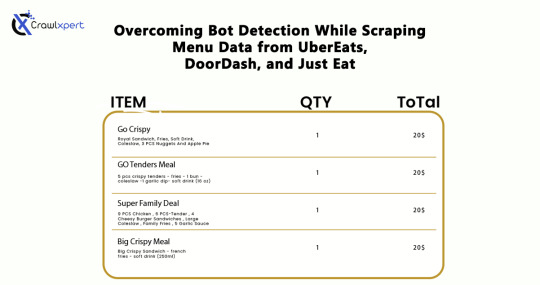

Overcoming Bot Detection While Scraping Menu Data from UberEats, DoorDash, and Just Eat

Introduction

Web scraping is a practical way to collect menu data from food delivery platforms such as UberEats, DoorDash, and Just Eat. However, these websites use elaborate bot detection methods to stop automated collection of information. Getting past them calls for advanced scraping techniques: rotating IPs, headless browsing, CAPTCHA solving, and AI-based methods.

This guide discusses how to bypass bot detection during menu data scraping, the challenges involved, and best practices for seamless and ethical data extraction.

Understanding Bot Detection on Food Delivery Platforms

1. Common Bot Detection Techniques

Food delivery platforms use various methods to block automated scrapers:

IP Blocking – Detects repeated requests from the same IP and blocks access.

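User-Agent Tracking – Inspects the User-Agent header and blocks requests whose values look automated or inconsistent with a real browser.

CAPTCHA Challenges – Requires solving puzzles to verify human presence.

JavaScript Challenges – Serves scripts that must run in the browser, which catches bots that fetch pages without executing JavaScript or interacting with them.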

Behavioral Analysis – Tracks mouse movements, scrolling, and keystrokes to differentiate bots from humans.

2. Rate Limiting and Request Patterns

Platforms monitor the frequency of requests coming from a specific IP or user session. If a scraper makes too many requests within a short time frame, it triggers rate limiting, causing the scraper to receive 403 Forbidden or 429 Too Many Requests errors.
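When a scraper does receive a 429 or 403, the usual response is to slow down rather than retry immediately. The sketch below computes how long to wait before the next attempt, using exponential backoff with full jitter and honoring a `Retry-After` value when the server sends one in seconds. The function name `backoff_delay` and its defaults are assumptions for illustration.

```python
import random


def backoff_delay(attempt, retry_after=None, base=1.0, cap=60.0, rng=random.random):
    """Return the number of seconds to wait before retry number `attempt` (0-based).

    If the server supplied a Retry-After value in seconds, use it (capped).
    Otherwise pick a random delay between 0 and min(cap, base * 2**attempt).
    This sketch does not parse the HTTP-date form of Retry-After.
    """
    if retry_after is not None:
        return min(float(retry_after), cap)
    ceiling = min(cap, base * (2 ** attempt))
    return rng() * ceiling


# Upper bounds of the waiting window for the first five retries.
windows = [backoff_delay(n, rng=lambda: 1.0) for n in range(5)]
```

The jitter keeps many workers from retrying at the same instant, which would otherwise recreate the burst that triggered the rate limit.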

3. Device Fingerprinting

Many websites use sophisticated techniques to detect unique attributes of a browser and device, including screen resolution, installed plugins, and system fonts. If a scraper's fingerprint matches a known bot signature, it gets flagged.

Techniques to Overcome Bot Detection

1. IP Rotation and Proxy Management

Using a pool of rotating IPs helps avoid detection and blocking.

Use residential proxies instead of data center IPs.

Rotate IPs with each request to simulate different users.

Leverage proxy providers like Bright Data, ScraperAPI, and Smartproxy.

Implement session-based IP switching to maintain persistence.
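Per-request rotation and session persistence pull in different directions, so one possible approach is to pin each logical session to a single proxy. The sketch below does this by hashing a session identifier into the pool. The function name `proxy_for_session` and the pool addresses are hypothetical, and commercial providers often expose sticky sessions through their own mechanisms instead.

```python
import hashlib


def proxy_for_session(session_id, proxies):
    """Map a session ID to one proxy so the whole session keeps the same exit IP."""
    if not proxies:
        raise ValueError("proxy pool must not be empty")
    # A stable hash (unlike Python's built-in hash()) gives the same answer across runs.
    digest = hashlib.sha256(session_id.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(proxies)
    return proxies[index]


# Hypothetical pool for illustration.
POOL = ["10.0.0.1:8000", "10.0.0.2:8000", "10.0.0.3:8000"]
first = proxy_for_session("menu-crawl-42", POOL)
second = proxy_for_session("menu-crawl-42", POOL)
```

Note that the mapping changes for some sessions whenever the pool size changes, so this simple form suits pools that stay fixed for the duration of a crawl.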

2. Mimic Human Browsing Behavior

To appear more human-like, scrapers should:

Introduce random time delays between requests.

Use browser automation tools like Puppeteer or Playwright, which drive headless browsers, to simulate real interactions.

Scroll pages and click elements programmatically to mimic real user behavior.

Randomize mouse movements and keyboard inputs.

Avoid loading pages at robotic speeds; introduce a natural browsing flow.

3. Bypassing CAPTCHA Challenges

Implement automated CAPTCHA-solving services like 2Captcha, Anti-Captcha, or DeathByCaptcha.

Use machine learning models to recognize and solve simple CAPTCHAs.

Avoid triggering CAPTCHAs by limiting request frequency and mimicking human navigation.

Employ AI-based CAPTCHA solvers that use pattern recognition to bypass common challenges.

4. Handling JavaScript-Rendered Content

Use Selenium, Puppeteer, or Playwright to interact with JavaScript-heavy pages.

Extract data directly from network requests instead of parsing the rendered HTML.

Load pages dynamically to prevent detection through static scrapers.

Emulate browser interactions by executing JavaScript code as real users would.

Cache previously scraped data to minimize redundant requests.
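The caching point in the list above can be sketched with a small in-memory cache that returns a stored page body until it is older than a chosen time-to-live. The class name `TTLCache`, the one-hour default, and the example URL are assumptions for illustration; a production scraper would more likely persist the cache to disk or a database.

```python
import time


class TTLCache:
    """Keep fetched page bodies in memory and expire them after `ttl_seconds`."""

    def __init__(self, ttl_seconds=3600, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock      # injectable so expiry can be exercised without sleeping
        self._store = {}         # url -> (time stored, body)

    def get(self, url):
        """Return the cached body for `url`, or None if it is missing or expired."""
        entry = self._store.get(url)
        if entry is None:
            return None
        stored_at, body = entry
        if self._clock() - stored_at > self._ttl:
            del self._store[url]
            return None
        return body

    def put(self, url, body):
        """Store `body` for `url`, stamped with the current time."""
        self._store[url] = (self._clock(), body)


cache = TTLCache(ttl_seconds=600)
cache.put("https://example.com/menu/1", "<html>...</html>")
hit = cache.get("https://example.com/menu/1")
```

Checking the cache before each request means a page is fetched at most once per TTL window, which lowers both the load on the site and the chance of tripping rate limits.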

5. API-Based Extraction (Where Possible)

Some food delivery platforms offer APIs to access menu data. If available:

Check the official API documentation for pricing and access conditions.

Use API keys responsibly and avoid exceeding rate limits.

Combine API-based and web scraping approaches for optimal efficiency.

6. Using AI for Advanced Scraping

Machine learning models can help scrapers adapt to evolving anti-bot measures by:

Detecting and avoiding honeypots designed to catch bots.

Using natural language processing (NLP) to extract and categorize menu data efficiently.

Predicting changes in website structure to maintain scraper functionality.

Best Practices for Ethical Web Scraping

While overcoming bot detection is necessary, ethical web scraping ensures compliance with legal and industry standards:

Respect Robots.txt – Follow site policies on data access.

Avoid Excessive Requests – Scrape efficiently to prevent server overload.

Use Data Responsibly – Extracted data should be used for legitimate business insights only.

Maintain Transparency – If possible, obtain permission before scraping sensitive data.

Ensure Data Accuracy – Validate extracted data to avoid misleading information.

Challenges and Solutions for Long-Term Scraping Success

1. Managing Dynamic Website Changes

Food delivery platforms frequently update their website structure. Strategies to mitigate this include:

Monitoring website changes with automated UI tests.

Using flexible XPath selectors keyed to stable attributes or text instead of fixed element positions.

Implementing fallback scraping techniques in case of site modifications.

2. Avoiding Account Bans and Detection

If scraping requires logging into an account, prevent bans by:

Using multiple accounts to distribute request loads.

Avoiding excessive logins from the same device or IP.

Randomizing browser fingerprints using tools like Multilogin.

3. Cost Considerations for Large-Scale Scraping

Maintaining an advanced scraping infrastructure can be expensive. Cost optimization strategies include:

Using serverless functions to run scrapers on demand.

Choosing affordable proxy providers that balance performance and cost.

Optimizing scraper efficiency to reduce unnecessary requests.

Future Trends in Web Scraping for Food Delivery Data

As web scraping evolves, new advancements are shaping how businesses collect menu data:

AI-Powered Scrapers – Machine learning models will adapt more efficiently to website changes.

Increased Use of APIs – Companies will increasingly rely on API access instead of web scraping.

Stronger Anti-Scraping Technologies – Platforms will develop more advanced security measures.

Ethical Scraping Frameworks – Legal guidelines and compliance measures will become more standardized.

Conclusion

UberEats, DoorDash, and Just Eat pose significant challenges for menu data scraping, mainly due to their advanced bot detection systems. Nevertheless, by combining IP rotation, headless browsing, CAPTCHA solving, and JavaScript execution with AI tools, businesses can collect valuable data without tripping anti-scraping measures.

If you need an automated and reliable web scraper, CrawlXpert specializes in tools and services that extract menu data efficiently while staying legally and ethically compliant. The right techniques, along with staying up to date on recent trends in web scraping, will keep food delivery data collection efforts successful well into the future.

Know More : https://www.crawlxpert.com/blog/scraping-menu-data-from-ubereats-doordash-and-just-eat

#ScrapingMenuDatafromUberEats#ScrapingMenuDatafromDoorDash#ScrapingMenuDatafromJustEat#ScrapingforFoodDeliveryData


Mobile Proxies Explained: Use Cases and Advantages by Proxy Lust, Inc.

🛰️ What Are Mobile Proxies?

A mobile proxy is an intermediary that uses IP addresses assigned by mobile carriers (e.g., 4G/5G LTE networks) to route your internet traffic. Unlike datacenter or residential proxies, mobile proxies are tied to real mobile devices, making them far less likely to be flagged, blocked, or banned during intensive tasks like data scraping or social automation.

These proxies rotate through a pool of mobile IPs, dynamically assigned by telecom providers, which helps mimic legitimate mobile user behavior.

📲 Why Mobile Proxies Matter in 2025

The digital landscape in 2025 is dominated by AI detection systems, geo-targeted content, and increasingly sophisticated anti-bot frameworks. This means:

Datacenter proxies are easier to detect.

Residential IPs still face bans on high-volume tasks.

Mobile proxies offer stealth, authenticity, and flexibility.

In short, mobile proxies are now an essential tool for marketers, researchers, cybersecurity professionals, and automation experts.

Key Advantages of Mobile Proxies

✅ 1. High Trust Score

Mobile IPs are considered extremely trustworthy by websites, apps, and APIs. Why? Because they belong to real mobile network operators and rotate naturally, like actual human users switching networks.

✅ 2. Automatic IP Rotation

Most mobile proxies auto-rotate every few minutes or based on actions, which prevents IP bans and throttling. It also simulates real-world mobile behavior better than any static IP can.

✅ 3. Bypass Geo-Restrictions

Mobile proxies can be location-specific, allowing users to simulate browsing from a precise city or country. This is especially useful for:

SEO monitoring

Ad verification

Accessing region-locked content

✅ 4. Unmatched Anonymity

Since mobile proxies are dynamic and used by real users, they provide better anonymity and privacy, making it difficult for websites to trace back your actions.

✅ 5. Effective for Social Media Management

If you’re managing multiple Instagram, TikTok, or Facebook accounts, mobile proxies are the safest option to avoid getting flagged or banned by social platforms that aggressively fight automation.

🔒 Choose the Right Provider

At Proxy Lust, Inc., we provide:

Real 4G/5G mobile proxies from ethical sources

Global targeting options

High uptime and fast rotation

Monthly or pay-as-you-go plans

✅ Explore our mobile proxies here →

Mobile proxies are no longer optional in 2025 — they are a core part of digital operations. Whether you’re scaling a business or automating research, they offer unparalleled privacy, authenticity, and reliability.

If you’re ready to take your proxy setup to the next level, get in touch with Proxy Lust, Inc. and explore our premium mobile proxy solutions.


Proxy Server IP addresses and their association with Novada services

In the digital age, proxy server IP addresses have become a key tool for online privacy and access control. Whether the goal is to protect personal privacy, get past geographical restrictions, or collect data, understanding proxy server IP addresses is vital. This article analyzes the role of proxy server IP addresses and explores how Novada provides high-quality global proxy IP services.

The role of proxy server IP address

A proxy server IP address lets users route network requests through a proxy server, hiding the user’s real IP address. This not only helps users bypass regional restrictions, but also improves network security and prevents personal information from being leaked. Application scenarios for proxy server IP addresses include, but are not limited to, online gaming, social media management, market research, and SEO optimization.

Global proxy IP services provided by Novada

As a global proxy IP resource provider, Novada offers a variety of services, including data center proxies and a self-built proxy pool. Novada’s services cover 220+ countries and regions, backed by an ethically sourced pool of more than 100 million residential IPs. The services provided by Novada include:

1. Dynamic Residential Proxy: Starting at $3/GB, it offers a pool of 100M+ residential IPs, rotating and sticky sessions, unlimited concurrent sessions, and free city-level targeting.

2. Static ISP Residential Proxy: Starting at $6/IP, a dedicated personal ISP proxy with 24/7 IP availability and unlimited concurrency and traffic.

3. Dynamic ISP Proxy: Starting at $3.5/GB, it provides up to 6 hours of continuous session time per IP.

4. Data Center Proxy: Starting at $0.75/GB, with 3 million data center IPs and <0.5s response time.

Choose the right proxy server IP address

When choosing a proxy server IP address, consider the service’s stability, speed, security, and the richness of its IP resources. Novada’s services not only meet these requirements, but also provide automatic API configuration, achieving millisecond response times and improving efficiency. This means Novada’s proxy service remains efficient and stable even when multiple devices are in simultaneous use.

Proxy server IP addresses are essential for protecting online privacy and getting past access restrictions. Novada meets the IP usage requirements of different business scenarios by providing global proxy services. Users can choose the most appropriate proxy IP service according to their business characteristics and requirements to improve business efficiency and success rate. With its strong performance and reasonable pricing, Novada has become a preferred choice for proxy server IP services.


Extract Amazon Product Prices with Web Scraping | Actowiz Solutions

Introduction

In the ever-evolving world of e-commerce, pricing strategy can make or break a brand. Amazon, being the global e-commerce behemoth, is a key platform where pricing intelligence offers an unmatched advantage. To stay ahead in such a competitive environment, businesses need real-time insights into product prices, trends, and fluctuations. This is where Actowiz Solutions comes into play. Through advanced Amazon price scraping solutions, Actowiz empowers businesses with accurate, structured, and actionable data.

Why Extract Amazon Product Prices?

Price is one of the most influential factors affecting a customer’s purchasing decision. Here are several reasons why extracting Amazon product prices is crucial:

Competitor Analysis: Stay informed about competitors’ pricing.

Dynamic Pricing: Adjust your prices in real time based on market trends.

Market Research: Understand consumer behavior through price trends.

Inventory & Repricing Strategy: Align stock and pricing decisions with demand.

With Actowiz Solutions’ Amazon scraping services, you get access to clean, structured, and timely data without violating Amazon’s terms.

How Actowiz Solutions Extracts Amazon Price Data

Actowiz Solutions uses advanced scraping technologies tailored for Amazon’s complex site structure. Here’s a breakdown:

1. Custom Scraping Infrastructure

Actowiz Solutions builds custom scrapers that can navigate Amazon’s dynamic, JavaScript-rendered content and pagination, as well as bot protection layers like CAPTCHA and IP throttling.

2. Proxy Rotation & User-Agent Spoofing

To avoid detection and bans, Actowiz employs rotating proxies and multiple user-agent headers that simulate real user behavior.

3. Scheduled Data Extraction

Actowiz enables regular scheduling of price scraping jobs — be it hourly, daily, or weekly — for ongoing price intelligence.

4. Data Points Captured

The scraping service extracts:

Product name & ASIN

Price (MRP, discounted, deal price)

Availability

Ratings & Reviews

Seller information

Real-World Use Cases for Amazon Price Scraping

A. Retailers & Brands

Monitor price changes for own products or competitors to adjust pricing in real-time.

B. Marketplaces

Aggregate seller data to ensure competitive offerings and improve platform relevance.

C. Price Comparison Sites

Fuel your platform with fresh, real-time Amazon price data.

D. E-commerce Analytics Firms

Get historical and real-time pricing trends to generate valuable reports for clients.

Dataset Snapshot: Amazon Product Prices

Below is a snapshot of average product prices on Amazon across popular categories:

| Product Category | Average Price (USD) |
|---|---|
| Electronics | 120.50 |
| Books | 15.75 |
| Home & Kitchen | 45.30 |
| Fashion | 35.90 |
| Toys & Games | 25.40 |
| Beauty | 20.60 |
| Sports | 50.10 |
| Automotive | 75.80 |

Benefits of Choosing Actowiz Solutions

1. Scalability: From thousands to millions of records.

2. Accuracy: Real-time validation and monitoring ensure data reliability.

3. Customization: Solutions are tailored to each business use case.

4. Compliance: Ethical scraping methods that respect platform policies.

5. Support: Dedicated support and data quality teams.

Legal & Ethical Considerations

Amazon has strict policies regarding automated data collection. Actowiz Solutions follows legal frameworks and deploys ethical scraping practices including:

Scraping only public data

Abiding by robots.txt guidelines

Avoiding high-frequency access that may affect site performance

Integration Options for Amazon Price Data

Actowiz Solutions offers flexible delivery and integration methods:

APIs: RESTful APIs for on-demand price fetching.

CSV/JSON Feeds: Periodic data dumps in industry-standard formats.

Dashboard Integration: Plug data directly into internal BI tools like Tableau or Power BI.

Contact Actowiz Solutions today to learn how our Amazon scraping solutions can supercharge your e-commerce strategy. Contact Us Today!

Conclusion: Future-Proof Your Pricing Strategy

The world of online retail is fast-moving and highly competitive. With Amazon as a major marketplace, getting a pulse on product prices is vital. Actowiz Solutions provides a robust, scalable, and ethical way to extract product prices from Amazon.

Whether you’re a startup or a Fortune 500 company, pricing intelligence can be your competitive edge. Learn More

#ExtractProductPrices#PriceIntelligence#AmazonScrapingServices#AmazonPriceScrapingSolutions#RealTimeInsights


Boost Your Retail Strategy with Quick Commerce Data Scraping in 2025

Introduction

The retail landscape is evolving rapidly, with Quick Commerce (Q-Commerce) driving instant deliveries across groceries, FMCG, and essential products. Platforms like Blinkit, Instacart, Getir, Gorillas, Swiggy Instamart, and Zapp dominate the space, offering ultra-fast deliveries. However, for retailers to stay competitive, optimize pricing, and track inventory, real-time data insights are crucial.

Quick Commerce Data Scraping has become a game-changer in 2025, enabling retailers to extract, analyze, and act on live market data. Retail Scrape, a leader in AI-powered data extraction, helps businesses track pricing trends, stock levels, promotions, and competitor strategies.

Why Is Quick Commerce Data Scraping Essential for Retailers?

Optimize Pricing Strategies – Track real-time competitor prices & adjust dynamically.

Monitor Inventory Trends – Avoid overstocking or stockouts with demand forecasting.

Analyze Promotions & Discounts – Identify top deals & seasonal price drops.

Understand Consumer Behavior – Extract insights from customer reviews & preferences.

Improve Supply Chain Management – Align logistics with real-time demand analysis.

How Quick Commerce Data Scraping Enhances Retail Strategies

1. Real-Time Competitor Price Monitoring

2. Inventory Optimization & Demand Forecasting

3. Tracking Promotions & Discounts

4. AI-Driven Consumer Behavior Analysis

Challenges in Quick Commerce Scraping & How to Overcome Them

Frequent Website Structure Changes – Use AI-driven scrapers that automatically adapt to dynamic HTML structures and website updates.

Anti-Scraping Technologies (CAPTCHAs, Bot Detection, IP Bans) – Deploy rotating proxies, headless browsers, and CAPTCHA-solving techniques to bypass restrictions.

Real-Time Price & Stock Changes – Implement real-time web scraping APIs to fetch updated pricing, discounts, and inventory availability.

Geo-Restricted Content & Location-Based Offers – Use geo-targeted proxies and VPNs to access region-specific data and ensure accuracy.

High Request Volume Leading to Bans – Optimize request intervals, use distributed scraping, and implement smart throttling to prevent getting blocked.

Unstructured Data & Parsing Complexities – Utilize AI-based data parsing tools to convert raw HTML into structured formats like JSON, CSV, or databases.

Multiple Platforms with Different Data Formats – Standardize data collection from apps, websites, and APIs into a unified format for seamless analysis.
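To make the last point concrete, the sketch below maps records from two sources with different field names into one shared structure. The platform keys, the source field names, and the `Listing` type are all hypothetical; real payloads from any given platform will differ.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Listing:
    """Unified record used for analysis, regardless of the source platform."""
    platform: str
    name: str
    price: float
    in_stock: bool


# Hypothetical per-platform field names, for illustration only.
FIELD_MAPS = {
    "platform_a": {"name": "title", "price": "price_usd", "in_stock": "available"},
    "platform_b": {"name": "product_name", "price": "cost", "in_stock": "stock_flag"},
}


def normalize(platform, raw):
    """Convert one raw record into a Listing using that platform's field map.

    Assumes stock flags arrive as real booleans or 0/1, not as strings.
    """
    fields = FIELD_MAPS[platform]
    return Listing(
        platform=platform,
        name=str(raw[fields["name"]]).strip(),
        price=float(raw[fields["price"]]),
        in_stock=bool(raw[fields["in_stock"]]),
    )


unified = [
    normalize("platform_a", {"title": " Milk 1L ", "price_usd": "1.99", "available": 1}),
    normalize("platform_b", {"product_name": "Milk 1L", "cost": 2.05, "stock_flag": False}),
]
```

Keeping the field maps in data rather than in code means adding a platform is a one-entry change, and every downstream report reads the same `Listing` shape.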

Industries Benefiting from Quick Commerce Data Scraping

1. eCommerce & Online Retailers

2. FMCG & Grocery Brands

3. Market Research & Analytics Firms

4. Logistics & Supply Chain Companies

How Retail Scrape Can Help Businesses in 2025

Retail Scrape provides customized Quick Commerce Data Scraping Services to help businesses gain actionable insights. Our solutions include:

Automated Web & Mobile App Scraping for Q-Commerce Data.

Competitor Price & Inventory Tracking with AI-Powered Analysis.

Real-Time Data Extraction with API Integration.

Custom Dashboards for Data Visualization & Predictive Insights.

Conclusion

In 2025, Quick Commerce Data Scraping is an essential tool for retailers looking to optimize pricing, track inventory, and gain competitive intelligence. With platforms like Blinkit, Getir, Instacart, and Swiggy Instamart shaping the future of instant commerce, data-driven strategies are the key to success.

Retail Scrape’s AI-powered solutions help businesses extract, analyze, and leverage real-time pricing, stock, and consumer insights for maximum profitability.

Want to enhance your retail strategy with real-time Q-Commerce insights? Contact Retail Scrape today!

Read more >>https://www.retailscrape.com/fnac-data-scraping-retail-market-intelligence.php

officially published by https://www.retailscrape.com/.

#QuickCommerceDataScraping#RealTimeDataExtraction#AIPoweredDataExtraction#RealTimeCompetitorPriceMonitoring#MobileAppScraping#QCommerceData#QCommerceInsights#BlinkitDataScraping#RealTimeQCommerceInsights#RetailScrape#EcommerceAnalytics#InstantDeliveryData#OnDemandCommerceData#QuickCommerceTrends


Unlock the Full Potential of Web Data with ProxyVault’s Datacenter Proxy API

In the age of data-driven decision-making, having reliable, fast, and anonymous access to web resources is no longer optional—it's essential. ProxyVault delivers a cutting-edge solution through its premium residential, datacenter, and rotating proxies, equipped with full HTTP and SOCKS5 support. Whether you're a data scientist, SEO strategist, or enterprise-scale scraper, our platform empowers your projects with a secure and unlimited Proxy API designed for scalability, speed, and anonymity. In this article, we focus on one of the most critical assets in our suite: the datacenter proxy API.

What Is a Datacenter Proxy API and Why It Matters

A datacenter proxy API provides programmatic access to a vast pool of high-speed IP addresses hosted in data centers. Unlike residential proxies that rely on real-user IPs, datacenter proxies are not affiliated with Internet Service Providers (ISPs). This distinction makes them ideal for large-scale operations such as:

Web scraping at volume

Competitive pricing analysis

SEO keyword rank tracking

Traffic simulation and testing

Market intelligence gathering

With ProxyVault’s datacenter proxy API, you get lightning-fast response times, bulk IP rotation, and zero usage restrictions, enabling seamless automation and data extraction at any scale.

Ultra-Fast and Scalable Infrastructure

One of the hallmarks of ProxyVault’s platform is speed. Our datacenter proxy API leverages ultra-reliable servers hosted in high-bandwidth facilities worldwide. This ensures your requests experience minimal latency, even during high-volume data retrieval.

Dedicated infrastructure guarantees consistent uptime

Optimized routing minimizes request delays

Low ping times make real-time scraping and crawling more efficient

Whether you're pulling hundreds or millions of records, our system handles the load without breaking a sweat.

Unlimited Access with Full HTTP and SOCKS5 Support

Our proxy API supports both HTTP and SOCKS5 protocols, offering flexibility for various application environments. Whether you're managing browser-based scraping tools, automated crawlers, or internal dashboards, ProxyVault’s datacenter proxy API integrates seamlessly.

HTTP support is ideal for most standard scraping tools and analytics platforms

SOCKS5 enables deep integration for software requiring full network access, including P2P and FTP operations

This dual-protocol compatibility ensures that no matter your toolset or tech stack, ProxyVault works right out of the box.
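In client code, the choice between the two protocols often comes down to the scheme in the proxy URL. The sketch below builds the proxy mapping in the shape that the Python `requests` library accepts through its `proxies` argument. The host, ports, and credentials are placeholders rather than real ProxyVault endpoints, and SOCKS schemes in `requests` need the optional `requests[socks]` extra installed.

```python
from urllib.parse import quote


def build_proxies(host, port, scheme="http", username=None, password=None):
    """Return a proxies mapping that routes both HTTP and HTTPS traffic via one proxy."""
    if scheme not in ("http", "socks5", "socks5h"):
        raise ValueError(f"unsupported proxy scheme: {scheme}")
    auth = ""
    if username is not None:
        # Percent-encode credentials so characters like '@' or ':' do not break the URL.
        auth = quote(username, safe="")
        if password is not None:
            auth += ":" + quote(password, safe="")
        auth += "@"
    url = f"{scheme}://{auth}{host}:{port}"
    return {"http": url, "https": url}


# Placeholder endpoints; substitute the values from your provider's dashboard.
http_proxies = build_proxies("proxy.example.com", 8080)
socks_proxies = build_proxies("proxy.example.com", 1080, "socks5h", "user", "p@ss")
```

Either mapping could then be passed as `requests.get(url, proxies=http_proxies)`. The `socks5h` scheme asks the proxy to resolve hostnames, which avoids DNS lookups from the client's own network.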

Built for SEO, Web Scraping, and Data Mining

Modern businesses rely heavily on data for strategy and operations. ProxyVault’s datacenter proxy API is custom-built for the most demanding use cases:

SEO Ranking and SERP Monitoring

For marketers and SEO professionals, tracking keyword rankings across different locations is critical. Our proxies support geo-targeting, allowing you to simulate searches from specific countries or cities.

Track competitor rankings

Monitor ad placements

Analyze local search visibility

The proxy API ensures automated scripts can run 24/7 without IP bans or CAPTCHAs interfering.

Web Scraping at Scale

From eCommerce sites to travel platforms, web scraping provides invaluable insights. Our rotating datacenter proxies change IPs dynamically, reducing the risk of detection.

Scrape millions of pages without throttling

Bypass rate limits with intelligent IP rotation

Automate large-scale data pulls securely

Data Mining for Enterprise Intelligence

Enterprises use data mining for trend analysis, market research, and customer insights. Our infrastructure supports long sessions, persistent connections, and high concurrency, making ProxyVault a preferred choice for advanced data extraction pipelines.

Advanced Features with Complete Control

ProxyVault offers a powerful suite of controls through its datacenter proxy API, putting you in command of your operations:

Unlimited bandwidth and no request limits

Country- and city-level filtering

Sticky sessions for consistent identity

Real-time usage statistics and monitoring

Secure authentication using API tokens or IP whitelisting

These features ensure that your scraping or data-gathering processes are as precise as they are powerful.

Privacy-First, Log-Free Architecture

We take user privacy seriously. ProxyVault operates on a strict no-logs policy, ensuring that your requests are never stored or monitored. All communications are encrypted, and our servers are secured using industry best practices.

Zero tracking of API requests

Anonymity by design

GDPR and CCPA-compliant

This gives you the confidence to deploy large-scale operations without compromising your company’s or clients' data.

Enterprise-Level Support and Reliability

We understand that mission-critical projects demand not just great tools but also reliable support. ProxyVault offers:

24/7 technical support

Dedicated account managers for enterprise clients

Custom SLAs and deployment options

Whether you need integration help or technical advice, our experts are always on hand to assist.

Why Choose ProxyVault for Your Datacenter Proxy API Needs

Choosing the right proxy provider can be the difference between success and failure in data operations. ProxyVault delivers:

High-speed datacenter IPs optimized for web scraping and automation

Fully customizable proxy API with extensive documentation

No limitations on bandwidth, concurrent threads, or request volume

Granular location targeting for more accurate insights

Proactive support and security-first infrastructure

We’ve designed our datacenter proxy API to be robust, reliable, and scalable—ready to meet the needs of modern businesses across all industries.

Get Started with ProxyVault Today

If you’re ready to take your data operations to the next level, ProxyVault offers the most reliable and scalable datacenter proxy API on the market. Whether you're scraping, monitoring, mining, or optimizing, our solution ensures your work is fast, anonymous, and unrestricted.

Start your free trial today and experience the performance that ProxyVault delivers to thousands of users around the globe.


Text

How Proxy Servers Help in Analyzing Competitor Strategies

In a globalized business environment, keeping abreast of competitors' dynamics is a core capability for a company to survive. However, with the upgrading of anti-crawler technology, tightening of privacy regulations (such as the Global Data Privacy Agreement 2024), and regional content blocking, traditional monitoring methods have become seriously ineffective. In 2025, proxy servers will become a "strategic tool" for corporate competitive intelligence systems due to their anonymity, geographic simulation capabilities, and anti-blocking technology. This article combines cutting-edge technical solutions with real cases to analyze how proxy servers enable dynamic analysis of opponents.

Core application scenarios and technical solutions

1. Price monitoring: A global pricing strategy perspective

Technical requirements:

Break through the regional blockade of e-commerce platforms (such as Amazon sub-stations, Lazada regional pricing)

Avoid IP blocking caused by high-frequency access

Capture dynamic price data (flash sales, member-exclusive prices, etc.)

Solutions:

Residential proxy rotation pool: Through real household IPs in Southeast Asia, Europe and other places, 500+ addresses are rotated every hour to simulate natural user browsing behavior.

AI dynamic speed adjustment: Automatically adjust the request frequency according to the anti-crawling rules of the target website (such as Target.com’s flow limit of 3 times per second).

Data cleaning engine: Eliminate the "false price traps" launched by the platform (such as discount prices only displayed to new users).
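The speed-adjustment idea above can be approximated without any AI at all. The sketch below is a plain heuristic, not the article's actual system: it doubles the wait after a throttling response (HTTP 429 or 503) and slowly shortens it after successes. The class name `AdaptiveThrottle` and its defaults are assumptions; the default floor of about one third of a second simply mirrors the "3 times per second" example limit.

```python
class AdaptiveThrottle:
    """Adjust the delay between requests based on the last response status."""

    def __init__(self, start_delay=1.0, min_delay=1 / 3, max_delay=60.0):
        self.delay = start_delay
        self.min_delay = min_delay  # roughly 3 requests per second at most
        self.max_delay = max_delay

    def record(self, status_code):
        """Update and return the delay (in seconds) to use before the next request."""
        if status_code in (429, 503):
            # The site is pushing back: double the wait, up to the cap.
            self.delay = min(self.delay * 2, self.max_delay)
        elif 200 <= status_code < 300:
            # Success: speed up by 10%, but never below the floor.
            self.delay = max(self.delay * 0.9, self.min_delay)
        # Any other status leaves the delay unchanged.
        return self.delay
```

A caller would sleep for the returned number of seconds before issuing the next request.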

2. Advertising strategy analysis: decoding localized marketing

Technical requirements:

Capture regional targeted ads (Google/Facebook personalized delivery)

Analyze competitor SEM keyword layout

Monitor the advertising material updates of short video platforms (TikTok/Instagram Reels)

Solutions:

Mobile 4G proxy cluster: Simulate real mobile devices in the target country (such as India's Jio operator and Japan's Docomo network) to trigger precise advertising push.

Headless browser + proxy binding: Through tools such as Puppeteer-extra, assign independent proxy IP to each browser instance and batch capture advertising landing pages.

Multi-language OCR recognition: Automatically parse advertising copy in non-common languages such as Arabic and Thai.

3. Product iteration and supply chain tracking

Technical requirements:

Monitor new product information hidden on competitor official websites/APIs

Catch supplier bidding platform data (such as Alibaba International Station)

Analyze app store version update logs

Solutions:

ASN proxy directional penetration: Reduce the API interface access risk control level through the IP of the autonomous system (ASN) where the competitor server is located (such as AWS West Coast node).

Deep crawler + proxy tunnel: Recursively crawl the competitor support page and GitHub repository, and achieve complete anonymization in combination with Tor proxy.

APK decompilation proxy: Download the Middle East limited edition App through the Egyptian mobile proxy and parse the unreleased functional modules in the code.

2025 proxy Technology Upgrade

Compliance Data Flow Architecture

User request → Swiss residential proxy (anonymity layer) → Singapore data center proxy (mass-desensitizing layer) → target website

Log retention: zero-log policy + EU GDPR compliance audit channel

Tool recommendation and cost optimization

Conclusion: Reshaping the rules of the game for competitive intelligence

In the commercial battlefield of 2025, proxy servers have been upgraded from "data pipelines" to "intelligent attack and defense platforms." If companies can integrate proxy technology, AI analysis, and compliance frameworks, they can not only see through the dynamics of their opponents, but also proactively set up competitive barriers. In the future, the battlefield of proxy servers will extend to edge computing nodes and decentralized networks, further subverting the traditional intelligence warfare model.


Text

What is CNIProxy

CNIProxy's core functionality is to provide high-quality proxy IPs, helping containers and services achieve efficient and stable network connections. The advantages of proxy IPs are particularly crucial in Kubernetes clusters for service and container network management. Below are the main benefits of high-quality proxy IPs and their applications within a cluster:

1. Improved Network Stability

High-quality proxy IPs ensure that containers maintain more stable network connections when communicating with external networks. A stable network connection is vital for inter-container communication and external service interactions within a Kubernetes cluster, effectively avoiding network jitter and connection disruptions.

2. Reduced Latency

By using high-quality proxy IPs, network latency can be significantly reduced, especially when accessing external services across regions or networks. By choosing proxy servers located near the geographical location, the time required for data transmission is shortened, improving application response speed.

3. Enhanced Security

Using high-quality proxy IPs can hide the real IP addresses of containers when accessing external networks, thereby enhancing security. High-quality proxy IPs are typically equipped with additional security measures such as firewalls and encrypted tunnels, which can effectively protect the cluster from potential network attacks.

4. Improved Compatibility with External Services

Some external services may restrict access based on IP addresses. High-quality proxy IPs can bypass these restrictions. Proxy IPs can simulate different geographical locations or IP ranges, thus avoiding access issues caused by IP blocking or geographical restrictions.

5. Load Balancing and Traffic Distribution

High-quality proxy IPs can serve as a medium for load balancing, distributing traffic across multiple proxy IPs, preventing excessive traffic concentration on a single node, and enhancing the cluster's throughput and fault tolerance. This is particularly important for applications requiring high-concurrency requests.

6. Traffic Monitoring and Log Analysis

High-quality proxy IPs often offer detailed traffic monitoring and logging features, helping administrators monitor real-time traffic between containers and external networks. This data is crucial for troubleshooting network issues and optimizing traffic routing.

7. Bypassing Network Restrictions

In some restrictive network environments, containers may face IP blocking or access restrictions. By configuring high-quality proxy IPs, containers can bypass these network restrictions and establish free communication with external services.

8. Improved External Resource Access Efficiency

Many external APIs have rate limits or access permission management. Using high-quality proxy IPs, containers can rotate different IP addresses to bypass these restrictions, improving resource access efficiency. For example, during web scraping, this can prevent being blocked due to excessive requests from the same IP.

9. Cross-Region Access

High-quality proxy IPs support access needs in different regions globally, allowing users to choose proxy IPs from various regions to achieve cross-region service access. This is particularly important for globally distributed Kubernetes clusters or applications, ensuring users from different locations can access application services with low latency and high-quality network connections.

Conclusion

By using high-quality proxy IPs provided by CNIProxy, Kubernetes clusters can optimize communication between containers and between containers and external services, improving overall network quality, stability, and security. High-quality proxy IPs not only reduce network latency and improve access efficiency but also help containers bypass access restrictions, enhancing flexibility and adaptability. This makes CNIProxy an indispensable tool, especially in scenarios requiring high-quality network connections and cross-region or cross-network communication.


Text

Explore the core knowledge and application of POST requests

In the operation of the modern Internet, POST requests serve as an important data transfer method that supports the normal operation of various websites and applications. Understanding how POST requests work is not only crucial for developers, but also helpful for ordinary users to understand the security, efficiency and privacy protection of web requests. In this article, we'll take you through the basics of POST requests and their importance in everyday use.

What is a POST request?

A POST request is an HTTP request method used primarily to send data from the client to the server. Unlike a GET request, a POST request places the data in the body of the request instead of appending it to the URL. This makes POST suitable for transmitting larger or sensitive data, such as login passwords and form contents, because the data is kept out of the URL.
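To make the difference concrete, here is a small sketch using Python's standard library. It only builds the two request objects and sends nothing; the URL `https://example.com/login` and the field values are made up for illustration.

```python
from urllib.parse import urlencode
from urllib.request import Request

fields = {"username": "alice", "password": "s3cret"}

# GET: the fields become part of the URL itself.
get_req = Request("https://example.com/login?" + urlencode(fields))

# POST: the URL stays clean and the fields travel in the request body.
post_req = Request(
    "https://example.com/login",
    data=urlencode(fields).encode("utf-8"),
    headers={"Content-Type": "application/x-www-form-urlencoded"},
)

print(get_req.get_method(), get_req.full_url)
print(post_req.get_method(), post_req.full_url, post_req.data)
```

Note that the POST body is still plain text on the wire unless the connection uses HTTPS.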

Application Scenarios of POST Request

1. Data submission: POST request is widely used for form submission to ensure that user's personal data (such as registration information, payment data, etc.) can be safely sent to the server. For example, when a user registers for a website account, the personal data submitted via POST request will not appear directly in the URL, avoiding the risk of exposing sensitive information.

2. File upload: When users need to upload pictures, files and other content to the server, POST request is the preferred method. It supports high-capacity data transmission, places file information in the request body to guarantee transmission stability, and is especially suitable for the file upload function of e-commerce platforms or social media applications.

3. API Interaction: In application programming interfaces (APIs), POST requests are used to send new data or create resources. Whether it's sending information to the API of a social platform or processing payment requests, POST requests carry the data in the request body and are an important bridge between client and server.

4. Bulk Data Processing: POST requests are suitable for situations where a large amount of data needs to be transferred at the same time. For example, in data analysis applications, multiple data packets can be sent to the server at once through POST requests to ensure transmission efficiency. This is especially common in data transfer for big data and machine learning models.
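For the API and bulk-data scenarios, the body is often JSON rather than form fields. The sketch below shows one possible way to build such a request with the standard library; `build_json_post` is a hypothetical helper and `api.example.com` is a placeholder endpoint, not a real service.

```python
import json
from urllib.request import Request


def build_json_post(url, payload):
    """Return a POST request whose body is the JSON-encoded payload."""
    body = json.dumps(payload).encode("utf-8")
    return Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Creating a single resource.
single = build_json_post("https://api.example.com/v1/items", {"name": "widget", "qty": 3})

# Sending several records in one request body (bulk submission).
bulk = build_json_post(
    "https://api.example.com/v1/items/batch",
    [{"name": "widget", "qty": 3}, {"name": "gadget", "qty": 1}],
)
```

Passing either object to `urllib.request.urlopen` would actually send it; the example stops before that step.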

Advantages of POST requests

Data security: The data of a POST request is placed in the request body, avoiding the risk of direct exposure in the URL. Although the body itself is not encrypted, POST is better suited to transmitting sensitive information, and combining it with SSL/TLS encryption further protects data privacy.

Data volume support: GET requests are limited in how much data they can carry, whereas POST requests have no comparable limit and allow large amounts of data to be transferred. Whether it is a file or a complex data object, POST requests can handle it with ease.

URL simplicity: Since POST requests do not display parameters in the URL, the URL is kept simple. For websites that need to maintain page aesthetics and security, POST requests provide a better solution.

As a core data transfer method in the HTTP protocol, the POST request is widely used in data submission, file upload, API interaction, and other scenarios. Understanding and correctly using POST requests can not only improve user experience but also help protect the security and privacy of network data. Mastering the basics of POST requests is key to improving your understanding of web applications and their security.


Text

How to Track Restaurant Promotions on Instacart and Postmates Using Web Scraping

Introduction

With the rapid growth of food delivery services, companies such as Instacart and Postmates are constantly running promotions for their restaurants to entice customers. Such promotions can range from discounts and free delivery to combo deals and limited-time offers. For restaurants and food businesses, tracking these promotions provides a competitive edge: it helps them adjust their pricing strategies, identify trends, and stay ahead of their competitors.

One of the most effective ways to track promotions is web scraping, an automated way of extracting relevant data from the internet. This article examines how to track restaurant promotions on Instacart and Postmates using web scraping techniques, tools, and best practices.

Why Track Restaurant Promotions?

1. Competitor Research

Identify the promotional strategies of competitors in the market.

Compare discount rates between restaurants.

Create competitive pricing strategies.

2. Consumer Behavior Insights

Understand which kinds of promotions customers use most.

Identify patterns in the days, times, or seasons when discounts apply.

Optimize marketing campaigns based on popular promotions.

3. Profit Maximization

Determine the optimum timing for restaurant promotions.

Analyze competitors' discounts and adjust accordingly to reduce costs.

Maximize the return on investment (ROI) of promotional campaigns.

Web Scraping Techniques for Tracking Promotions

Key Data Fields to Extract

To effectively monitor promotions, businesses should extract the following data:

Restaurant Name – Identify which restaurants are offering promotions.

Promotion Type – Discounts, BOGO (Buy One Get One), free delivery, etc.

Discount Percentage – Measure how much customers save.

Promo Start & End Date – Track duration and frequency of offers.

Menu Items Included – Understand which food items are being promoted.

Delivery Charges - Compare free vs. paid delivery promotions.
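One way to keep these fields consistent across scrapes is to define a small record type up front. The sketch below is only a suggestion: the class name `Promotion`, the field names, and the sample values are assumptions made for illustration, not a schema used by Instacart or Postmates.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class Promotion:
    """One scraped promotion, mirroring the data fields listed above."""
    restaurant_name: str
    promotion_type: str                      # e.g. "discount", "BOGO", "free delivery"
    discount_percent: Optional[float] = None
    start_date: Optional[date] = None
    end_date: Optional[date] = None
    menu_items: List[str] = field(default_factory=list)
    delivery_charge: Optional[float] = None  # 0.0 means free delivery

    def is_active(self, on: date) -> bool:
        """Return True if the promotion is running on the given day."""
        if self.start_date is not None and on < self.start_date:
            return False
        if self.end_date is not None and on > self.end_date:
            return False
        return True


# Made-up sample record.
promo = Promotion(
    restaurant_name="Example Pizza",
    promotion_type="discount",
    discount_percent=20.0,
    start_date=date(2025, 3, 1),
    end_date=date(2025, 3, 7),
    menu_items=["Margherita"],
    delivery_charge=0.0,
)
```

Fields that a page does not expose simply stay `None`, which makes gaps easy to spot during analysis.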

Methods of Extracting Promotional Data

1. Web Scraping with Python

Using Python-based libraries such as BeautifulSoup, Scrapy, and Selenium, businesses can extract structured data from Instacart and Postmates.

2. API-Based Data Extraction

Some platforms provide official APIs that allow restaurants to retrieve promotional data. If available, APIs can be an efficient and legal way to access data without scraping.

3. Cloud-Based Web Scraping Tools

Services like CrawlXpert, ParseHub, and Octoparse offer automated scraping solutions, making data extraction easier without coding.

Overcoming Anti-Scraping Measures

1. Avoiding IP Blocks

Use proxy rotation to distribute requests across multiple IP addresses.

Implement randomized request intervals to mimic human behavior.
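The two points above can be combined in a few lines. The following sketch cycles through a proxy list and picks a random pause for each request; `ProxyRotator`, the proxy addresses, and the 1 to 4 second range are all illustrative assumptions, and the code only plans requests rather than sending them.

```python
import itertools
import random


class ProxyRotator:
    """Hand out proxies in round-robin order with a randomized wait time."""

    def __init__(self, proxies, min_wait=1.0, max_wait=4.0, rng=None):
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._cycle = itertools.cycle(proxies)
        self.min_wait = min_wait
        self.max_wait = max_wait
        self._rng = rng or random.Random()

    def next_request_plan(self):
        """Return (proxy, seconds_to_wait) for the next request."""
        wait = self._rng.uniform(self.min_wait, self.max_wait)
        return next(self._cycle), wait


# Placeholder proxy addresses.
rotator = ProxyRotator(["http://10.0.0.1:8080", "http://10.0.0.2:8080"])
for _ in range(3):
    proxy, wait = rotator.next_request_plan()
    print(f"use {proxy}, then sleep {wait:.2f}s")
```

In a real scraper the caller would pass `proxy` to its HTTP client and call `time.sleep(wait)` between requests.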

2. Bypassing CAPTCHA Challenges

Use headless browsers like Puppeteer or Playwright.

Leverage CAPTCHA-solving services like 2Captcha.

3. Handling Dynamic Content

Use Selenium or Puppeteer to interact with JavaScript-rendered content.

Scrape API responses directly when possible.

Analyzing and Utilizing Promotion Data

1. Promotional Dashboard Development

Create a real-time dashboard to track ongoing promotions.

Use data visualization tools like Power BI or Tableau to monitor trends.

2. Predictive Analysis for Promotions

Use historical data to forecast future discounts.

Identify peak discount periods and seasonal promotions.

3. Custom Alerts for Promotions

Set up automated email or SMS alerts when competitors launch new promotions.

Implement AI-based recommendations to adjust restaurant pricing.

Ethical and Legal Considerations

Comply with robots.txt guidelines when scraping data.

Avoid excessive server requests to prevent website disruptions.

Ensure extracted data is used for legitimate business insights only.
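Checking robots.txt can be automated with Python's built-in `urllib.robotparser`. In the sketch below the rules are an inline sample so the example runs offline; the paths, the `promo-tracker` user agent, and the `allowed` helper are invented for illustration and do not reflect any real site's robots.txt.

```python
from urllib.robotparser import RobotFileParser

# Sample rules only; a real scraper would load the site's own robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(url, user_agent="promo-tracker"):
    """Return True if the sample rules permit this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


print(allowed("https://example.com/store/promotions"))
print(allowed("https://example.com/checkout/cart"))
print(parser.crawl_delay("promo-tracker"))
```

Against a live site, `parser.set_url(...)` followed by `parser.read()` would fetch the real file instead of the inline sample.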

Conclusion

Web scraping makes it possible to track restaurant promotions on Instacart and Postmates so that businesses can optimize their pricing strategies, maximize profits, and stay ahead of the competition. With the help of automation, proxies, headless browsing, and AI analytics, businesses can efficiently keep track of and respond to the latest promotional trends.

CrawlXpert is a strong provider of automated web scraping services that help restaurants follow promotions and analyze competitors' strategies.


Text

Top 6 Scraping Tools That You Cannot Miss in 2024

In today's digital world, data is like money—it's essential for making smart decisions and staying ahead. To tap into this valuable resource, many businesses and individuals are using web crawler tools. These tools help collect important data from websites quickly and efficiently.

What is Web Scraping?

Web scraping is the process of gathering data from websites. It uses software or coding to pull information from web pages, which can then be saved and analyzed for various purposes. While you can scrape data manually, most people use automated tools to save time and avoid errors. It’s important to follow ethical and legal guidelines when scraping to respect website rules.
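For readers curious what "pulling information from web pages" looks like in code, here is a minimal sketch that extracts links and their text from an HTML string using only Python's standard library. The `LinkExtractor` class, `extract_links` helper, and the sample HTML are made up for this example; real projects often use a dedicated library instead.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collect (href, link text) pairs from anchor tags."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        # Only keep text that appears inside an anchor with an href.
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def extract_links(html):
    """Parse an HTML string and return the links it contains."""
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    return parser.links


sample = '<ul><li><a href="/a">First <b>item</b></a></li><li><a href="/b">Second</a></li></ul>'
print(extract_links(sample))
```

The same pattern extends to prices, titles, or any other element by reacting to different tags and attributes.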

Why Use Scraping Tools?

Save Time: Manually extracting data takes forever. Web crawlers automate this, allowing you to gather large amounts of data quickly.

Increase Accuracy: Automation reduces human errors, ensuring your data is precise and consistent.

Gain Competitive Insights: Stay updated on market trends and competitors with quick data collection.

Access Real-Time Data: Some tools can provide updated information regularly, which is crucial in fast-paced industries.

Cut Costs: Automating data tasks can lower labor costs, making it a smart investment for any business.

Make Better Decisions: With accurate data, businesses can make informed decisions that drive success.

Top 6 Web Scraping Tools for 2024

APISCRAPY

APISCRAPY is a user-friendly tool that combines advanced features with simplicity. It allows users to turn web data into ready-to-use APIs without needing coding skills.

Key Features:

Converts web data into structured formats.

No coding or complicated setup required.

Automates data extraction for consistency and accuracy.

Delivers data in formats like CSV, JSON, and Excel.

Integrates easily with databases for efficient data management.

ParseHub

ParseHub is great for both beginners and experienced users. It offers a visual interface that makes it easy to set up data extraction rules without any coding.

Key Features:

Automates data extraction from complex websites.

User-friendly visual setup.

Outputs data in formats like CSV and JSON.

Features automatic IP rotation for efficient data collection.

Allows scheduled data extraction for regular updates.

Octoparse

Octoparse is another user-friendly tool designed for those with little coding experience. Its point-and-click interface simplifies data extraction.

Key Features:

Easy point-and-click interface.

Exports data in multiple formats, including CSV and Excel.

Offers cloud-based data extraction for 24/7 access.

Automatic IP rotation to avoid blocks.

Seamlessly integrates with other applications via API.

Apify

Apify is a versatile cloud platform that excels in web scraping and automation, offering a range of ready-made tools for different needs.

Key Features:

Provides pre-built scraping tools.

Automates web workflows and processes.

Supports business intelligence and data visualization.

Includes a robust proxy system to prevent access issues.

Offers monitoring features to track data collection performance.

Scraper API

Scraper API simplifies web scraping tasks with its easy-to-use API and features like proxy management and automatic parsing.

Key Features:

Retrieves HTML from various websites effortlessly.

Manages proxies and CAPTCHAs automatically.

Provides structured data in JSON format.

Offers scheduling for recurring tasks.

Easy integration with extensive documentation.

Scrapy

Scrapy is an open-source framework for advanced users looking to build custom web crawlers. It’s fast and efficient, perfect for complex data extraction tasks.

Key Features:

Built-in support for data selection from HTML and XML.

Handles multiple requests simultaneously.

Allows users to set crawling limits for respectful scraping.

Exports data in various formats like JSON and CSV.

Designed for flexibility and high performance.

Conclusion

Web scraping tools are essential in today’s data-driven environment. They save time, improve accuracy, and help businesses make informed decisions. Whether you’re a developer, a data analyst, or a business owner, the right scraping tool can greatly enhance your data collection efforts. As we move into 2024, consider adding these top web scraping tools to your toolkit to streamline your data extraction process.


Text

IP2World S5 Manager: A Comprehensive Guide to Efficient Proxy Management

In today’s digital age, maintaining online privacy and accessing geo-restricted content are paramount for both individuals and businesses. The IP2World S5 Manager emerges as a robust solution, offering a user-friendly interface and a plethora of features designed to streamline proxy management. This article delves into the functionalities and benefits of the IP2World S5 Manager, providing a detailed overview for users seeking an efficient proxy management tool. To get more news about ip2world s5 manager, you can visit ip2world.com official website.

Introduction to IP2World S5 Manager

The IP2World S5 Manager is a versatile application that allows users to easily configure and manage their S5 and Static ISP proxies. With its intuitive design, the manager caters to both beginners and advanced users, ensuring a seamless experience in proxy configuration and usage. The manager supports various proxy types, including Socks5 Residential Proxy, Static ISP Residential Proxy, and Socks5 Unlimited Residential Proxy.

Key Features

User-Friendly Interface: The IP2World S5 Manager boasts a simple and intuitive interface, making it accessible for users of all technical backgrounds. The straightforward design ensures that users can quickly navigate through the application and configure their proxies with ease.

API Support: For advanced users, the manager offers API settings, allowing for automated proxy management and integration with other applications. This feature is particularly beneficial for businesses that require large-scale proxy usage.

Batch IP Generation: The manager supports batch IP generation, enabling users to generate multiple IPs simultaneously. This feature is ideal for users who need to manage a large number of proxies efficiently.

High-Quality IP Pool: IP2World provides access to a vast pool of high-quality IPs, ensuring reliable and uninterrupted proxy connections. With over 90 million residential IPs available in 220+ locations worldwide, users can access geo-restricted content without any hassle.

Flexible Proxy Plans: The IP2World S5 Manager offers a variety of proxy plans to suit different needs. Users can choose from rotating residential proxies, static ISP proxies, and dedicated data center proxies, ensuring they find the best match for their requirements.

Benefits of Using IP2World S5 Manager

Enhanced Online Privacy: By using the IP2World S5 Manager, users can maintain their online anonymity and protect their digital privacy. The manager’s high-quality proxies ensure that users can browse the internet without revealing their true IP addresses.

Access to Geo-Restricted Content: The manager allows users to bypass geo-restrictions and access content from different regions. This feature is particularly useful for businesses involved in market research, web scraping, and social media management.

Improved Performance: The IP2World S5 Manager ensures fast and stable proxy connections, enhancing the overall performance of online activities. The manager’s high-quality IP pool and efficient proxy management features contribute to a smooth and uninterrupted browsing experience.

Cost-Effective Solutions: With a range of proxy plans available, users can select the most cost-effective solution for their needs. The manager’s flexible pricing options ensure that users can find a plan that fits their budget while still enjoying high-quality proxy services.

Conclusion

The IP2World S5 Manager stands out as a comprehensive and efficient proxy management tool, offering a range of features designed to enhance online privacy and access to geo-restricted content. Its user-friendly interface, API support, batch IP generation, and high-quality IP pool make it an ideal choice for both individuals and businesses. By leveraging the IP2World S5 Manager, users can enjoy a seamless and secure online experience, tailored to their specific needs.


Text

Scrape Google Results - Google Scraping Services

In today's data-driven world, access to vast amounts of information is crucial for businesses, researchers, and developers. Google, being the world's most popular search engine, is often the go-to source for information. However, extracting data directly from Google search results can be challenging due to its restrictions and ever-evolving algorithms. This is where Google scraping services come into play.

What is Google Scraping?

Google scraping involves extracting data from Google's search engine results pages (SERPs). This can include a variety of data types, such as URLs, page titles, meta descriptions, and snippets of content. By automating the process of gathering this data, users can save time and obtain large datasets for analysis or other purposes.

Why Scrape Google?

The reasons for scraping Google are diverse and can include:

Market Research: Companies can analyze competitors' SEO strategies, monitor market trends, and gather insights into customer preferences.

SEO Analysis: Scraping Google allows SEO professionals to track keyword rankings, discover backlink opportunities, and analyze SERP features like featured snippets and knowledge panels.

Content Aggregation: Developers can aggregate news articles, blog posts, or other types of content from multiple sources for content curation or research.

Academic Research: Researchers can gather large datasets for linguistic analysis, sentiment analysis, or other academic pursuits.

Challenges in Scraping Google

Despite its potential benefits, scraping Google is not straightforward due to several challenges:

Legal and Ethical Considerations: Google’s terms of service prohibit scraping their results. Violating these terms can lead to IP bans or other penalties. It's crucial to consider the legal implications and ensure compliance with Google's policies and relevant laws.

Technical Barriers: Google employs sophisticated mechanisms to detect and block scraping bots, including IP tracking, CAPTCHA challenges, and rate limiting.

Dynamic Content: Google's SERPs are highly dynamic, with features like local packs, image carousels, and video results. Extracting data from these components can be complex.

Google Scraping Services: Solutions to the Challenges

Several services specialize in scraping Google, providing tools and infrastructure to overcome the challenges mentioned. Here are a few popular options:

1. ScraperAPI

ScraperAPI is a robust tool that handles proxy management, browser rendering, and CAPTCHA solving. It is designed to scrape even the most complex pages without being blocked. ScraperAPI supports various programming languages and provides an easy-to-use API for seamless integration into your projects.

2. Zenserp

Zenserp offers a powerful and straightforward API specifically for scraping Google search results. It supports various result types, including organic results, images, and videos. Zenserp manages proxies and CAPTCHA solving, ensuring uninterrupted scraping activities.

3. Bright Data (formerly Luminati)

Bright Data provides a vast proxy network and advanced scraping tools to extract data from Google. With its residential and mobile proxies, users can mimic genuine user behavior to bypass Google's anti-scraping measures effectively. Bright Data also offers tools for data collection and analysis.

4. Apify

Apify provides a versatile platform for web scraping and automation. It includes ready-made actors (pre-configured scrapers) for Google search results, making it easy to start scraping without extensive setup. Apify also offers custom scraping solutions for more complex needs.

5. SerpApi

SerpApi is a specialized API that allows users to scrape Google search results with ease. It supports a wide range of result types and includes features for local and international searches. SerpApi handles proxy rotation and CAPTCHA solving, ensuring high success rates in data extraction.

Best Practices for Scraping Google

To scrape Google effectively and ethically, consider the following best practices:

Respect Google's Terms of Service: Always review and adhere to Google’s terms and conditions. Avoid scraping methods that could lead to bans or legal issues.

Use Proxies and Rotate IPs: To avoid detection, use a proxy service and rotate your IP addresses regularly. This helps distribute the requests and mimics genuine user behavior.

Implement Delays and Throttling: To reduce the risk of being flagged as a bot, introduce random delays between requests and limit the number of requests per minute.

Stay Updated: Google frequently updates its SERP structure and anti-scraping measures. Keep your scraping tools and techniques up-to-date to ensure continued effectiveness.
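The delay and throttling practice above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Throttle` class, its parameter names, and the 60-second window are hypothetical choices made for this example, not part of any scraping library or of Google's published limits.

```python
import random
import time


class Throttle:
    """Space out requests with a random delay and a per-minute cap (hypothetical helper)."""

    def __init__(self, max_per_minute, min_delay, max_delay,
                 clock=time.monotonic, sleep=time.sleep):
        self.max_per_minute = max_per_minute
        self.min_delay = min_delay
        self.max_delay = max_delay
        self.clock = clock  # injectable so the logic can be tested without waiting
        self.sleep = sleep
        self.sent = []      # timestamps of requests made in the last 60 seconds

    def wait(self):
        """Block until the next request is allowed, then record it."""
        # Random pause so requests do not arrive at a fixed rhythm.
        self.sleep(random.uniform(self.min_delay, self.max_delay))
        now = self.clock()
        # Forget requests that are 60 seconds old or older.
        self.sent = [t for t in self.sent if now - t < 60.0]
        if len(self.sent) >= self.max_per_minute:
            # Sleep until the oldest recorded request leaves the window.
            self.sleep(60.0 - (now - self.sent[0]))
            now = self.clock()
            self.sent = [t for t in self.sent if now - t < 60.0]
        self.sent.append(now)
```

A scraper would call `throttle.wait()` immediately before each request. Suitable values for the delay range and the per-minute cap depend on the target site and are left to the reader.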

Top Benefits of Using Residential ISP Proxies

Residential ISP proxies are a powerful tool for a variety of online activities, offering a unique combination of benefits that make them a top choice for many users. In this blog post, we’ll explore the key advantages of using residential ISP proxies and why they should be your go-to solution for tasks like web scraping, content access, and online privacy.

Unparalleled Authenticity and Reputation

One of the primary benefits of residential ISP proxies is their authenticity. These proxies use IP addresses registered to real residential internet service providers (ISPs), so their traffic is hard to distinguish from that of genuine users.

Exceptional Speed and Reliability

Residential ISP proxies are hosted in data centers, which gives them high speed and reliability. Data center networks are known for their stability and can offer gigabit speeds, allowing for fast and efficient data transfer. This makes residential ISP proxies well suited to tasks that require quick responses or large data transfers, such as price aggregation or market research.

Bypass Rate Limiters and Geo-Restrictions

Many websites and online services implement rate limiters to prevent excessive requests or downloads from a single IP address. Residential ISP proxies can help you bypass these limits by sending each request through a different IP address, making it appear as if the traffic is coming from different users. Additionally, residential ISP proxies allow you to change your apparent location, enabling access to geo-restricted content.
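As a rough illustration of per-request rotation, the sketch below cycles through a small pool of proxy addresses and yields a mapping in the shape that the Python `requests` library accepts for its `proxies` argument. The function name `proxy_rotation` and the addresses are hypothetical; some providers rotate IPs behind a single gateway address instead, in which case client-side cycling like this is unnecessary.

```python
from itertools import cycle

# Hypothetical proxy endpoints (documentation-range IPs); use the ones your provider issues.
PROXIES = [
    "http://203.0.113.10:8000",
    "http://203.0.113.11:8000",
    "http://203.0.113.12:8000",
]


def proxy_rotation(proxies):
    """Yield one proxies mapping per request, cycling through the pool in order."""
    for address in cycle(proxies):
        # The same proxy address is used for both plain HTTP and HTTPS targets.
        yield {"http": address, "https": address}


# Example use (not executed here, requires the third-party requests package):
#   rotation = proxy_rotation(PROXIES)
#   response = requests.get(url, proxies=next(rotation), timeout=10)
```

Round-robin order is the simplest policy. Picking a random proxy per request or weighting by recent failures are alternatives this sketch does not cover.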

Improved Online Privacy and Security

Compatibility with Various Protocols

Residential ISP proxies are compatible with a wide range of protocols, including HTTP, HTTPS, and SOCKS5. This flexibility allows you to choose the protocol that best suits your needs, whether it’s for web scraping, online shopping, or accessing social media platforms.
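In practice, the protocol is usually selected through the scheme of the proxy URL. The helper below is a minimal sketch of building such a URL; `proxy_url`, the host name, and the credentials are made up for the example. Note that using a `socks5://` proxy with the Python `requests` library requires its optional SOCKS support (`pip install requests[socks]`).

```python
from urllib.parse import quote

# The three protocols named in the text above.
SUPPORTED_SCHEMES = {"http", "https", "socks5"}


def proxy_url(scheme, host, port, username=None, password=None):
    """Build a proxy URL such as socks5://user:pass@host:1080 (hypothetical helper)."""
    if scheme not in SUPPORTED_SCHEMES:
        raise ValueError(f"unsupported proxy scheme: {scheme}")
    auth = ""
    if username is not None:
        # Percent-encode credentials so characters like '@' or ':' do not break the URL.
        auth = quote(username, safe="")
        if password is not None:
            auth += ":" + quote(password, safe="")
        auth += "@"
    return f"{scheme}://{auth}{host}:{port}"


# Example with placeholder values:
#   proxy_url("socks5", "proxy.example.com", 1080, "user", "p@ss")
```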

Scalability and Flexibility

Many residential ISP proxy providers offer extensive IP pools, with millions of unique IPs across various locations. This scalability allows you to handle large-scale projects or high-volume traffic without worrying about IP exhaustion. Additionally, residential ISP proxies offer flexibility in terms of rotation options, sticky sessions, and API access, making them adaptable to different use cases.
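To make the difference between rotation and sticky sessions concrete, here is a small client-side sketch: each session ID keeps the same proxy until a time-to-live expires, after which the next proxy in the pool is assigned. The class name `StickyProxyPool` and its parameters are assumptions for illustration; providers that offer sticky sessions typically implement them on their own side, and their exact mechanism varies.

```python
import time
from itertools import cycle


class StickyProxyPool:
    """Keep one proxy per session ID until its time-to-live expires (hypothetical helper)."""

    def __init__(self, proxies, ttl_seconds, clock=time.monotonic):
        self._rotation = cycle(proxies)
        self._ttl = ttl_seconds
        self._clock = clock   # injectable so expiry can be tested without waiting
        self._sessions = {}   # session id -> (proxy, expiry time)

    def proxy_for(self, session_id):
        """Return the proxy bound to this session, assigning a new one if needed."""
        now = self._clock()
        entry = self._sessions.get(session_id)
        if entry is not None and now < entry[1]:
            return entry[0]  # session is still sticky
        # New or expired session: take the next proxy from the rotation.
        proxy = next(self._rotation)
        self._sessions[session_id] = (proxy, now + self._ttl)
        return proxy
```

A sticky session suits multi-step flows such as logins or checkouts, where changing IP mid-flow can invalidate the session; plain rotation suits independent requests.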

Competitive Pricing and Unlimited Bandwidth

Residential ISP proxies often come with competitive pricing plans and unlimited bandwidth, making them a cost-effective solution for various online activities. Many providers offer flexible plans that cater to different budgets and requirements, ensuring that you can find a plan that suits your needs.

Dedicated Customer Support

Reputable residential ISP proxy providers typically offer dedicated customer support to assist users with any questions or issues they may encounter. This support can be invaluable when setting up or troubleshooting your proxy configuration, ensuring a smooth and hassle-free experience.

In conclusion, residential ISP proxies offer a unique combination of authenticity, speed, reliability, and privacy that make them an excellent choice for a wide range of online activities. Whether you’re engaged in web scraping, content access, or online privacy protection, residential ISP proxies can provide the tools and support you need to succeed in today’s digital landscape.
